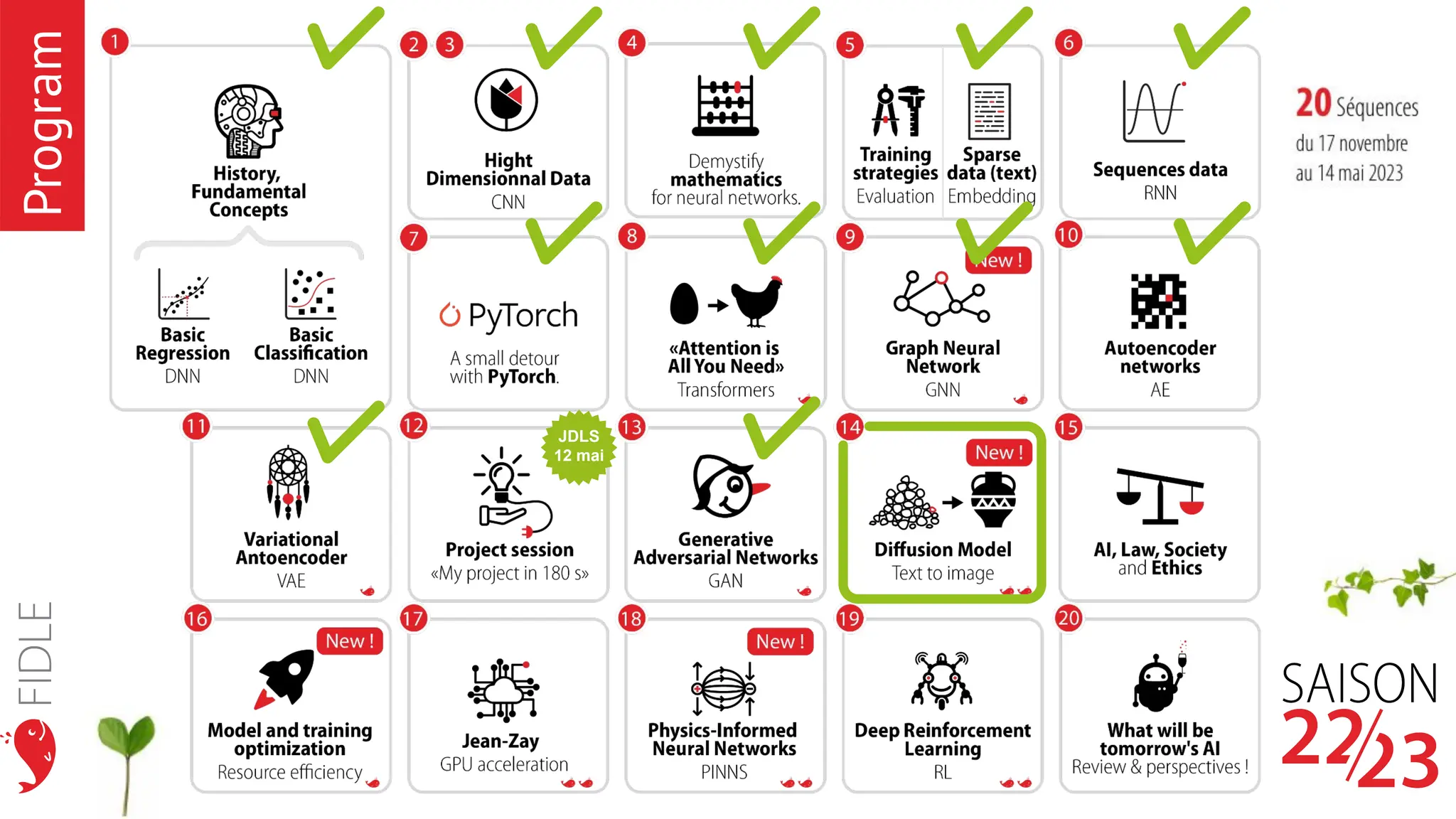

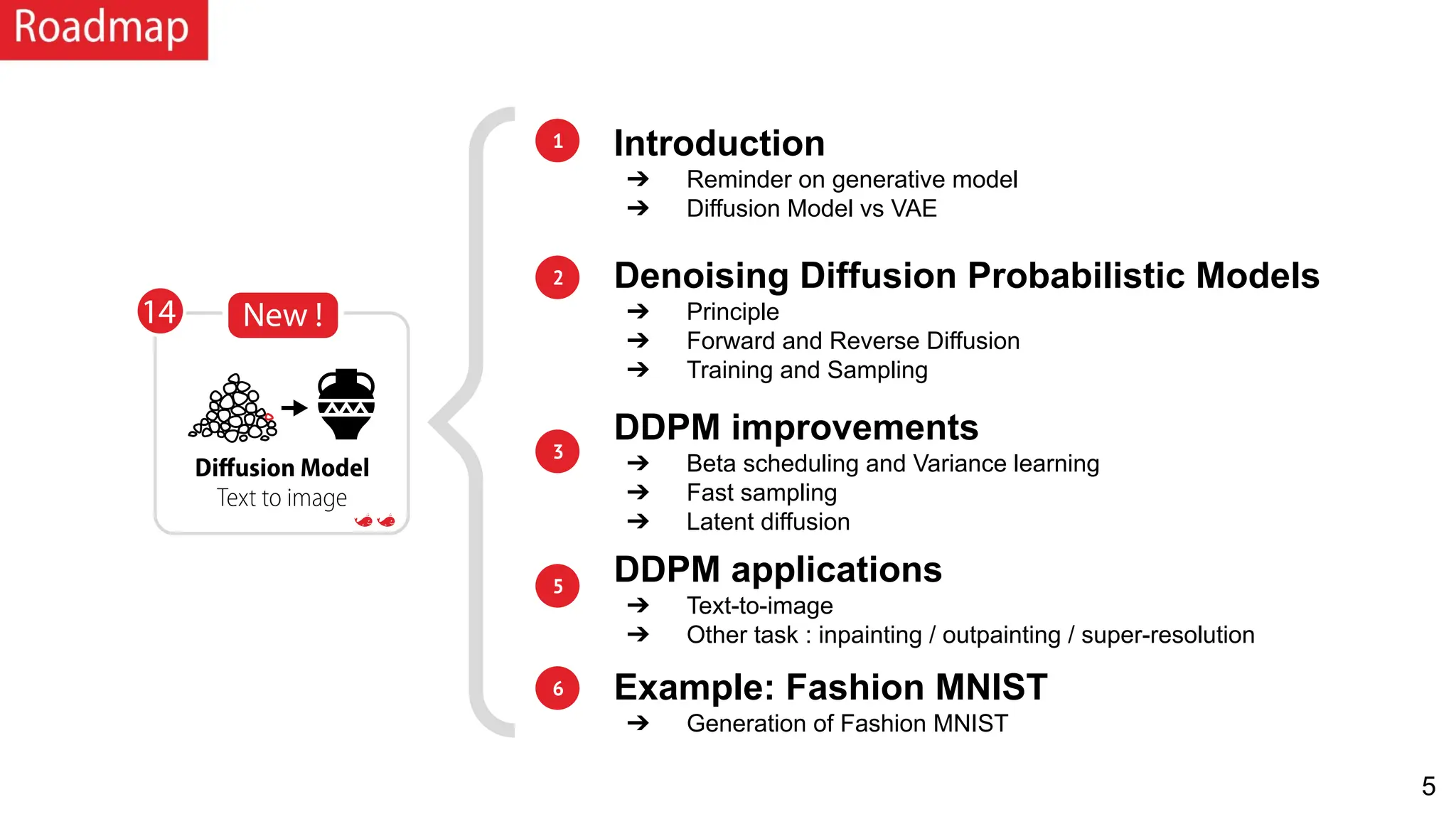

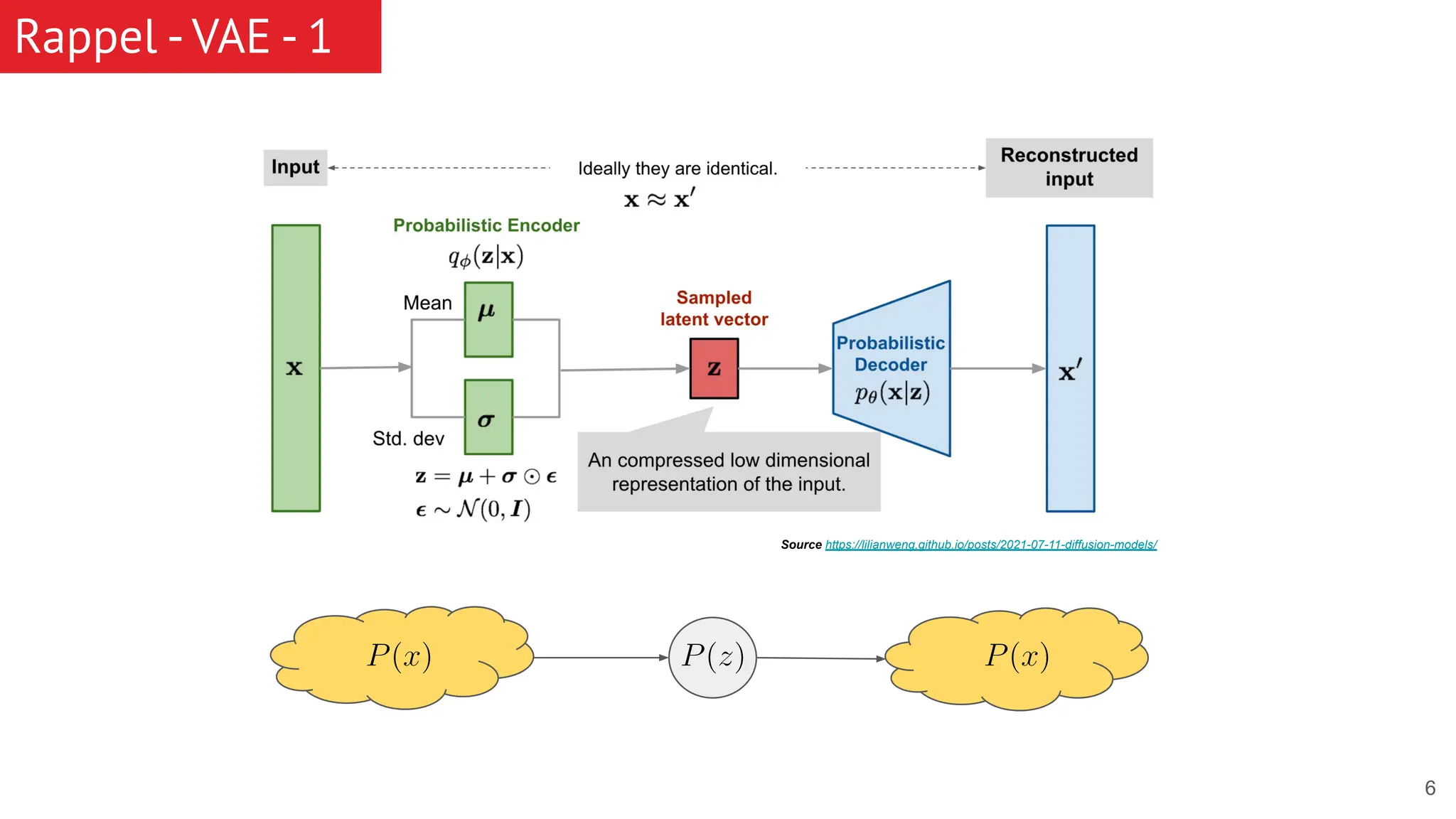

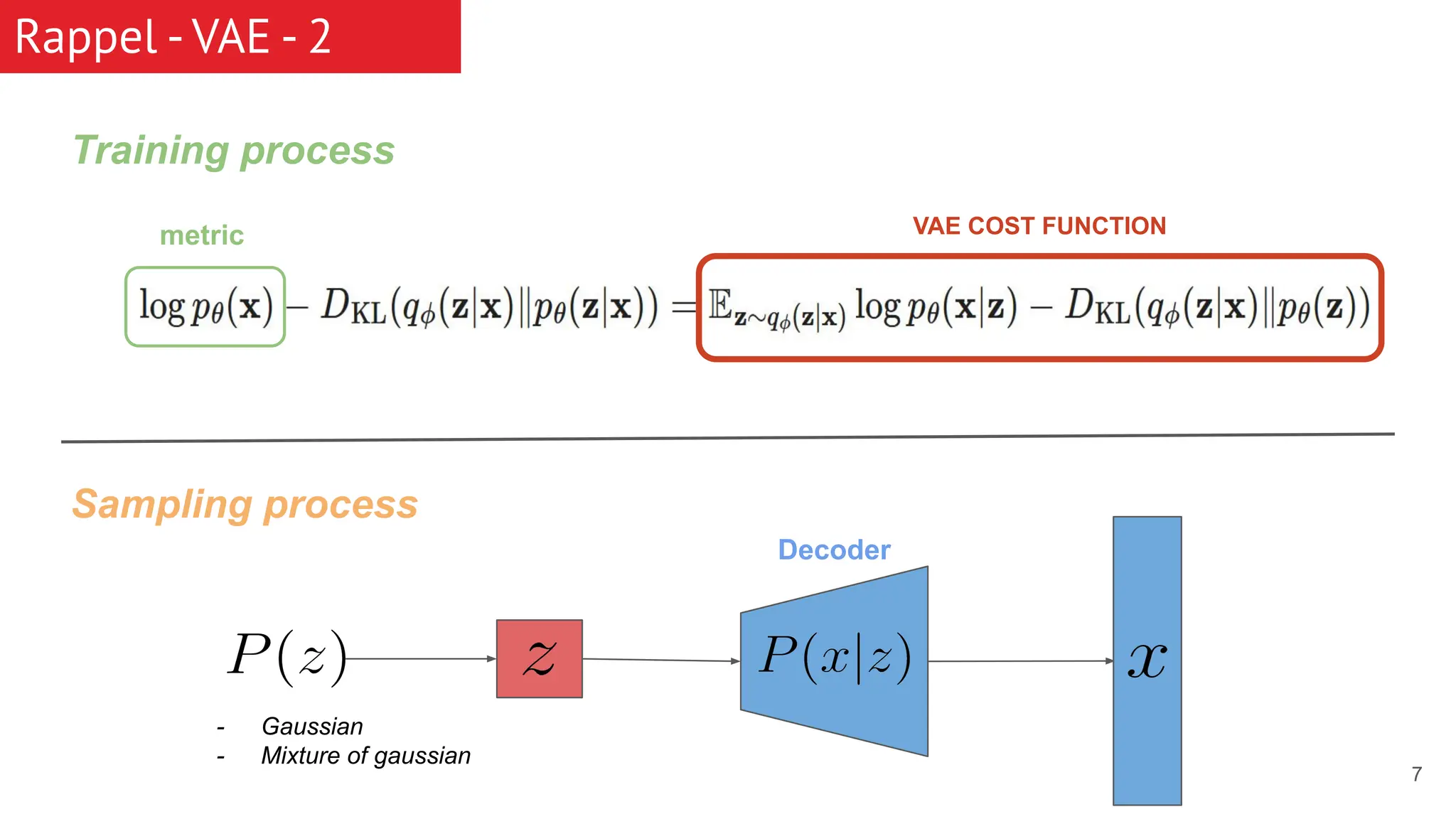

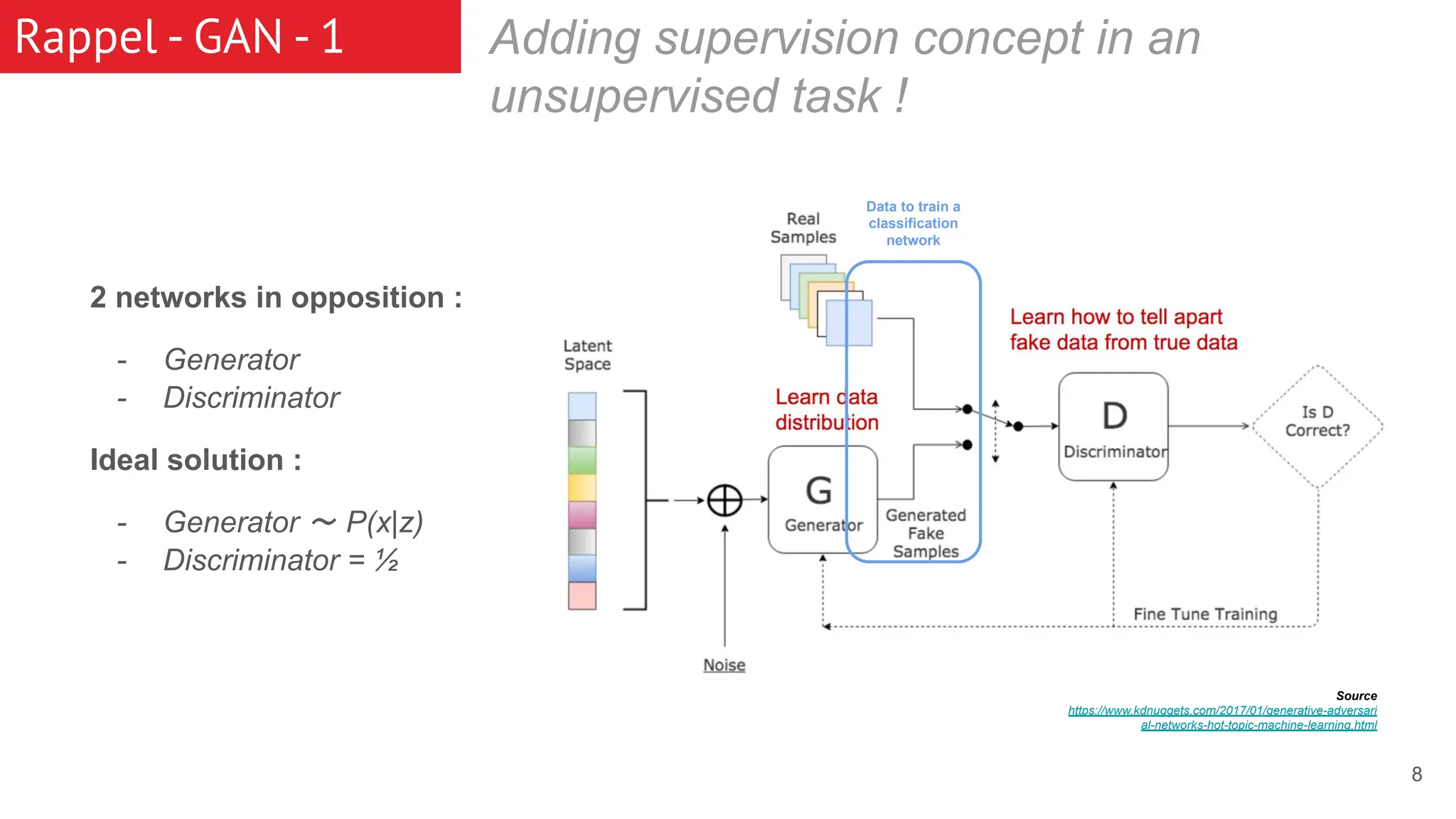

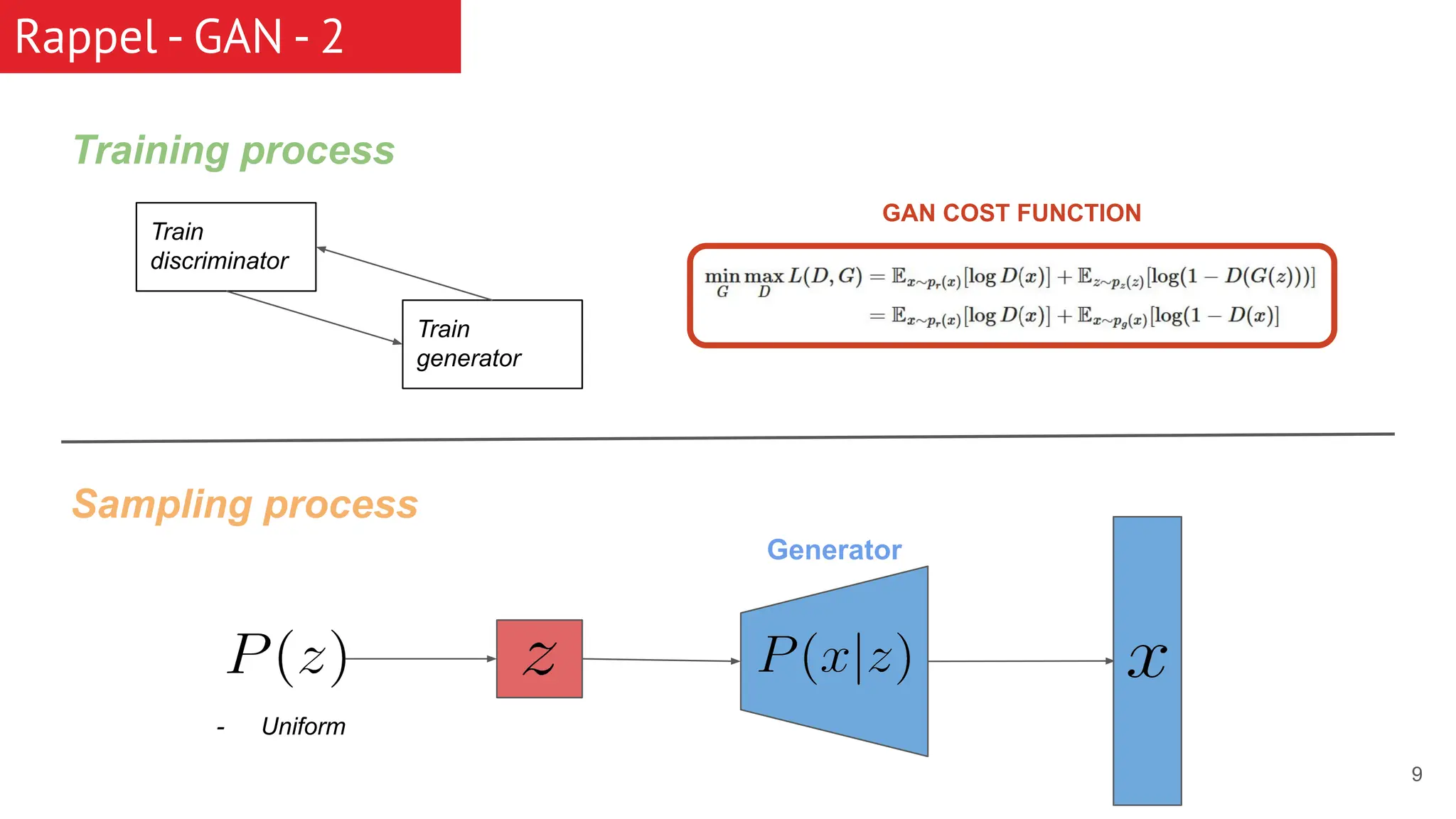

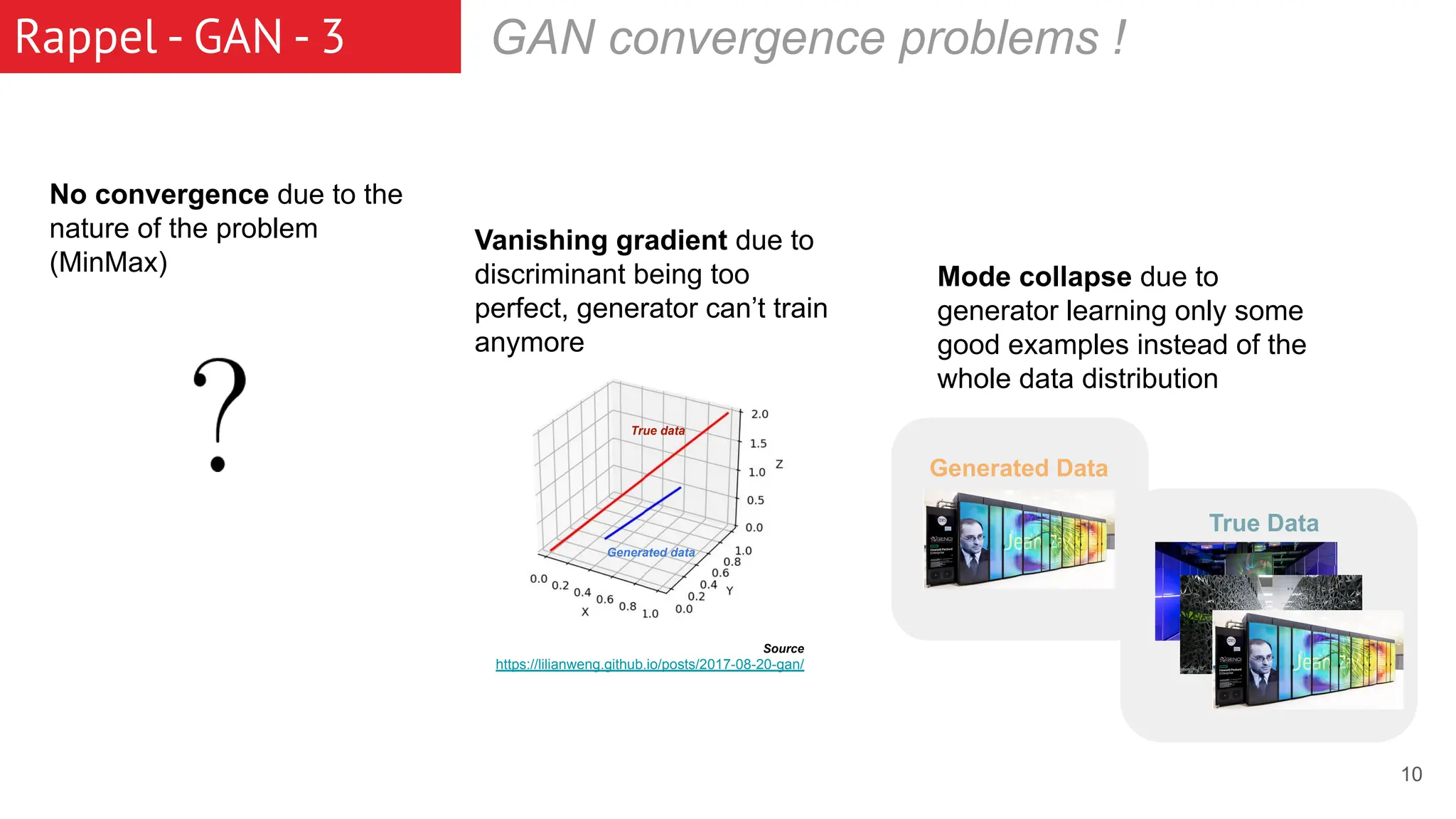

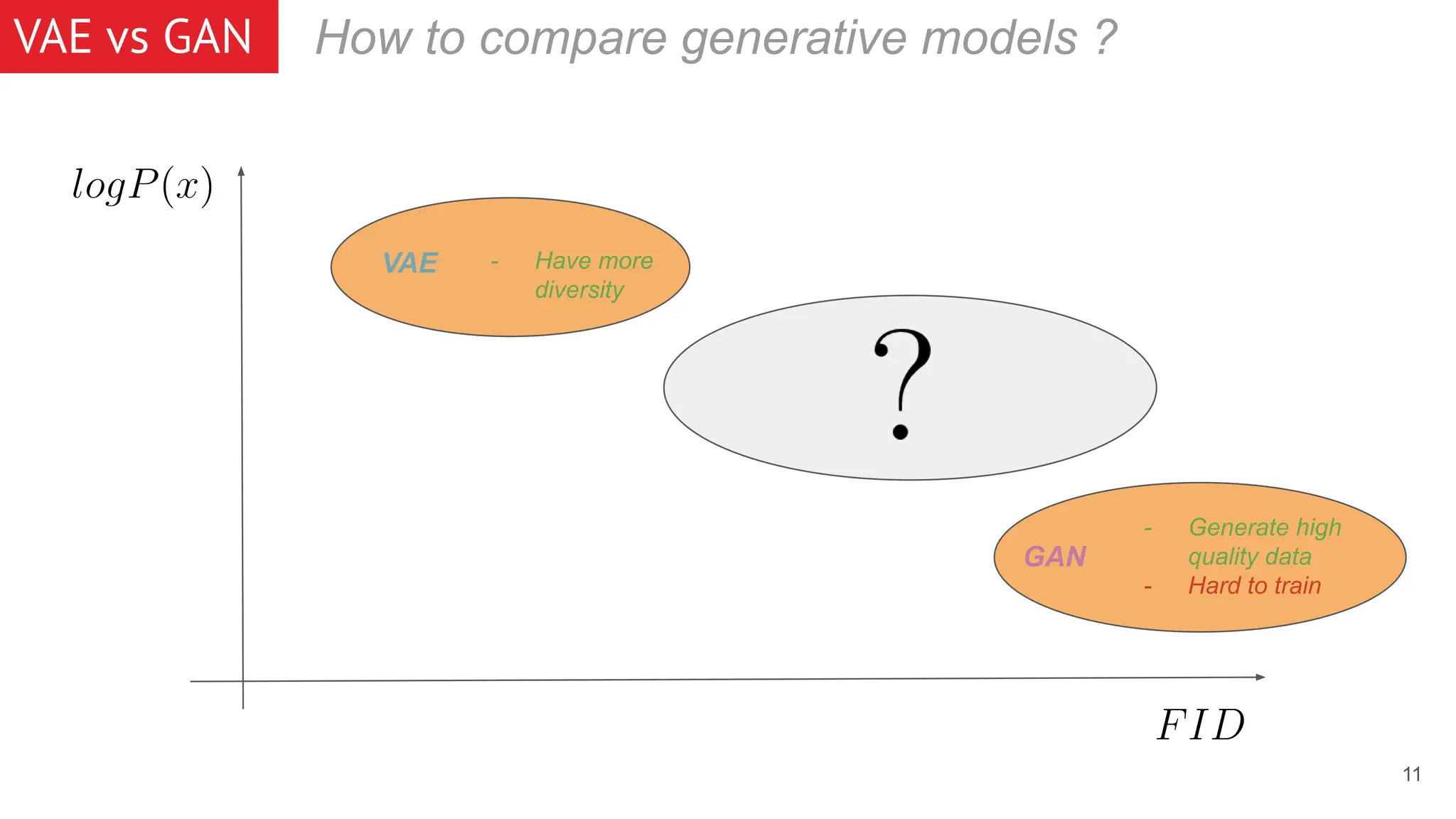

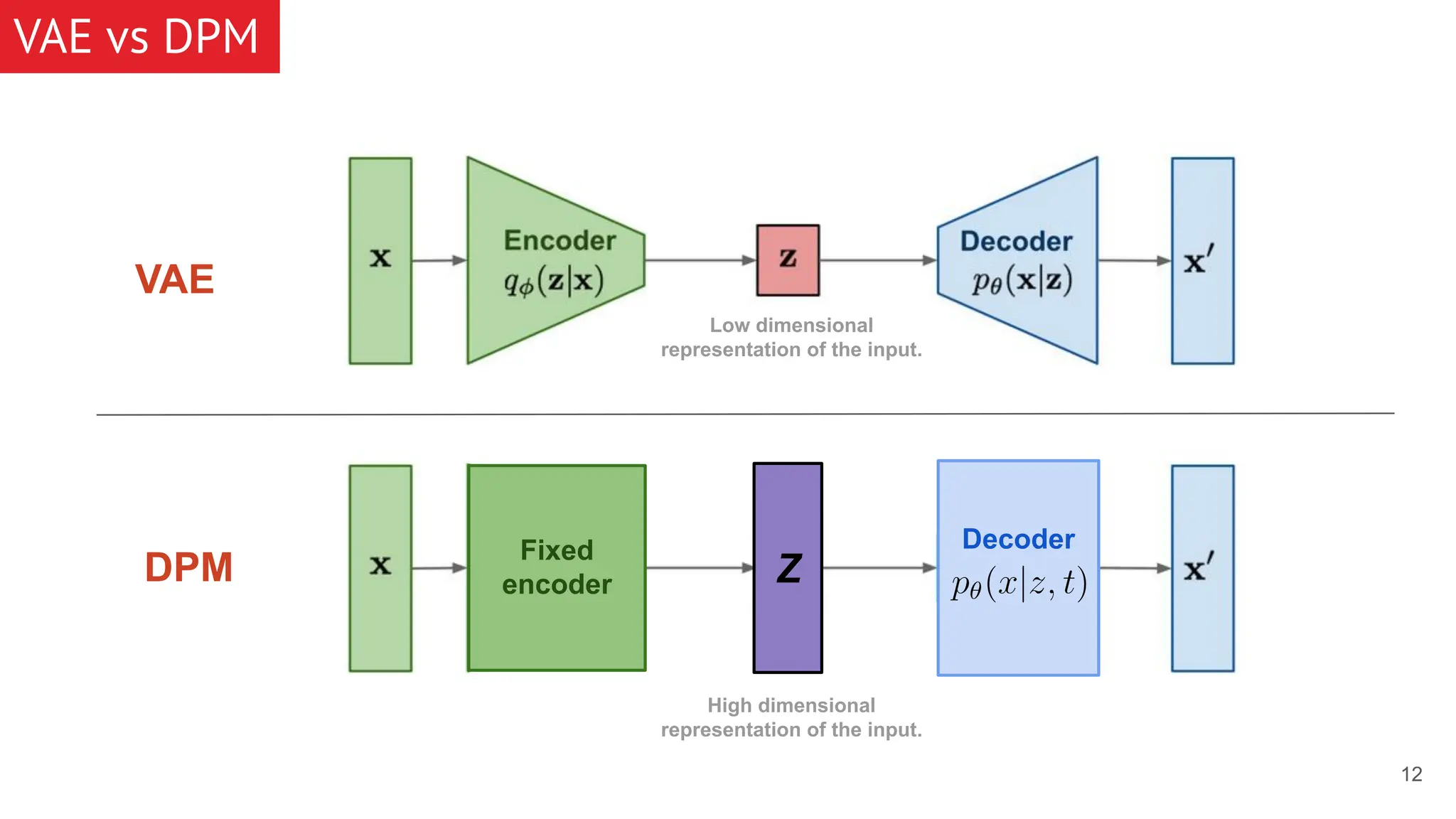

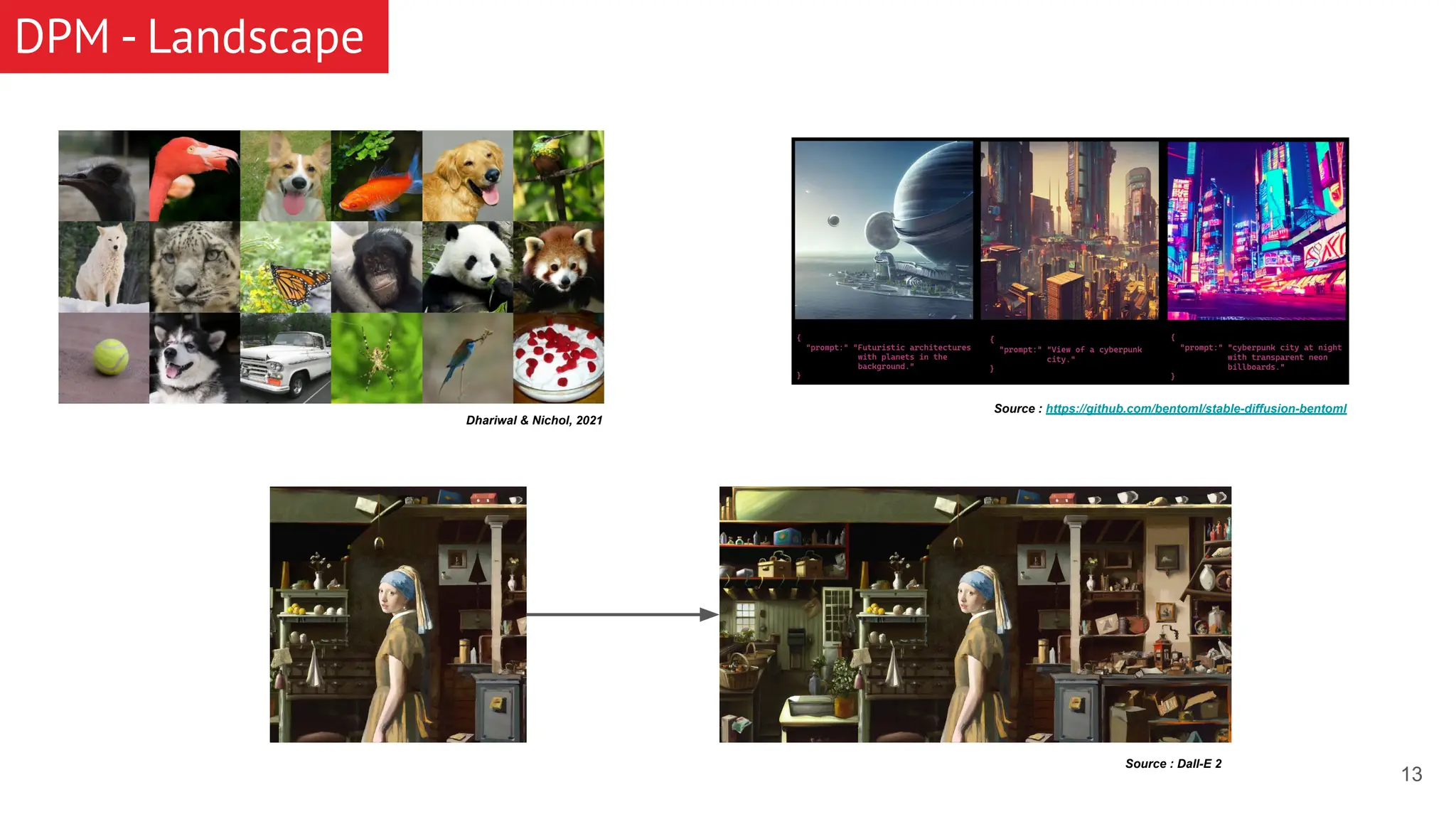

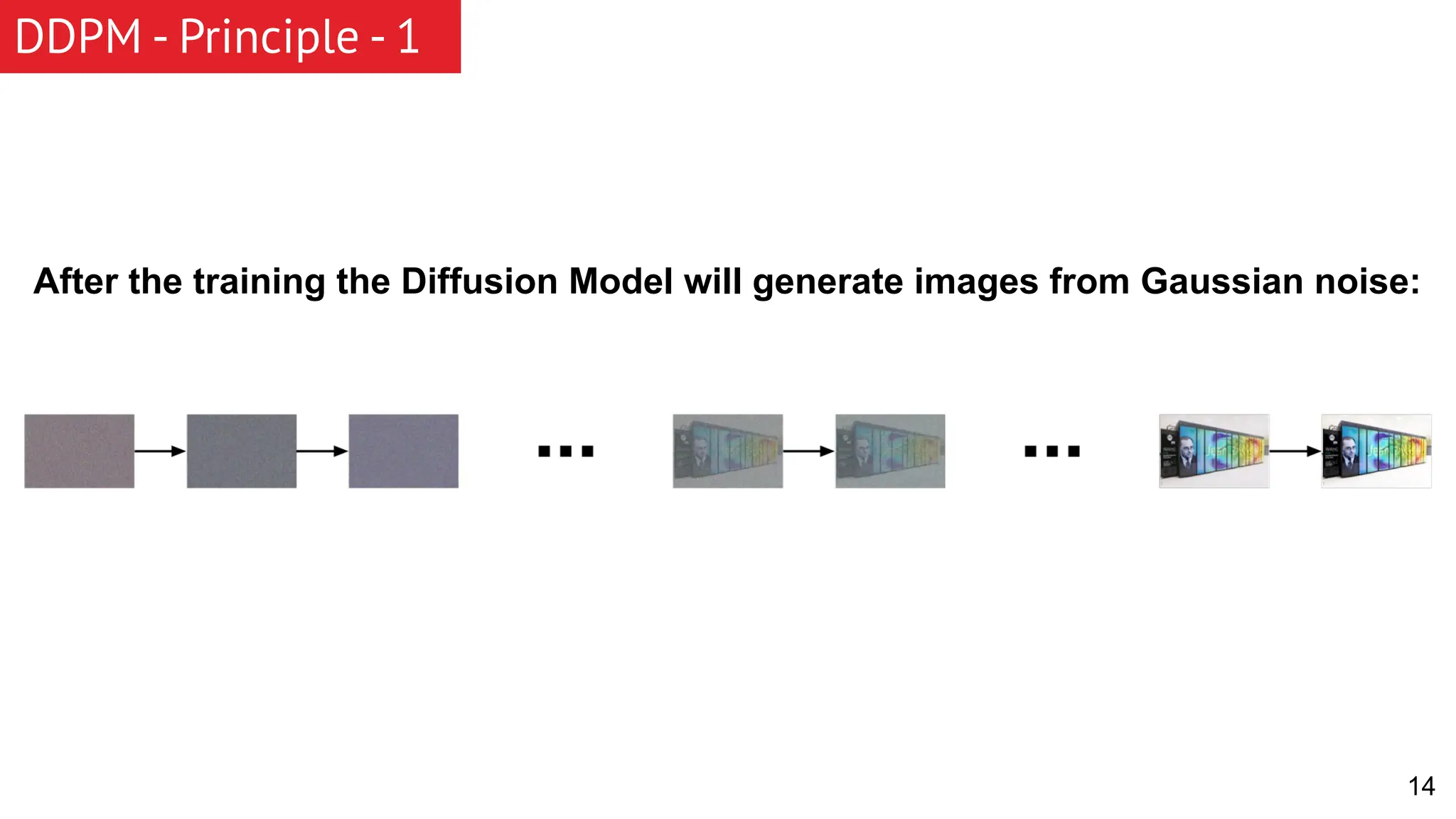

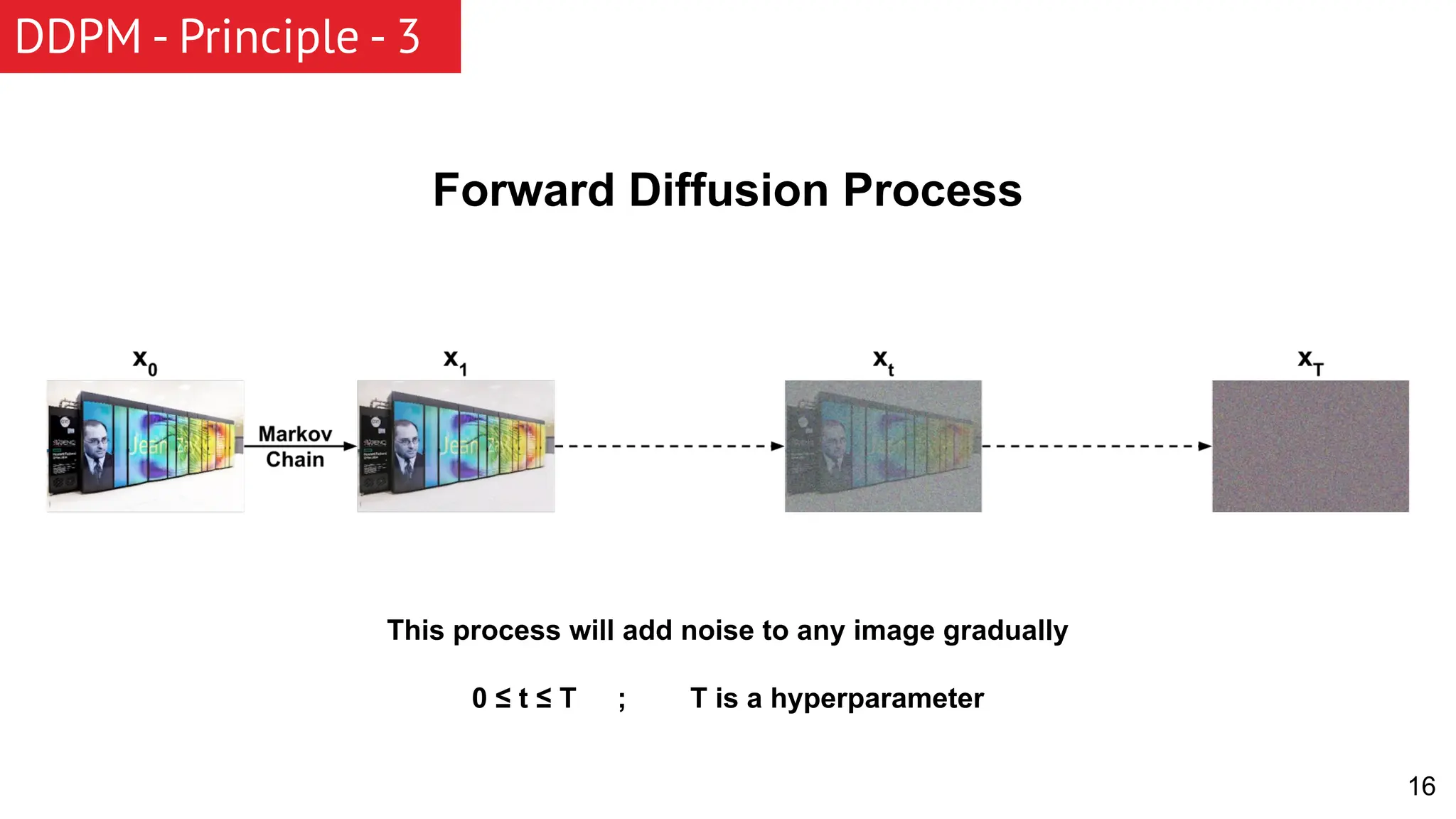

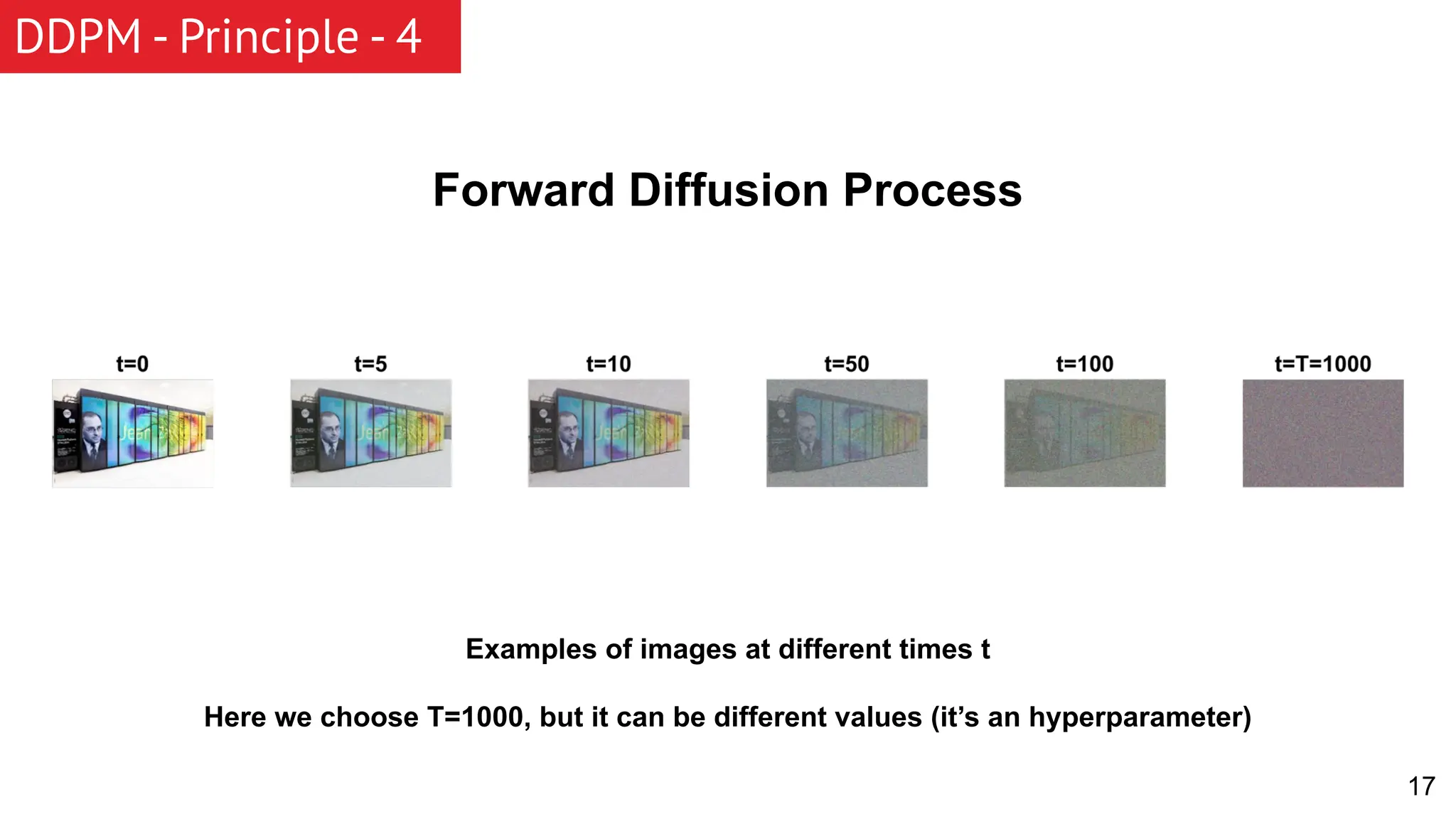

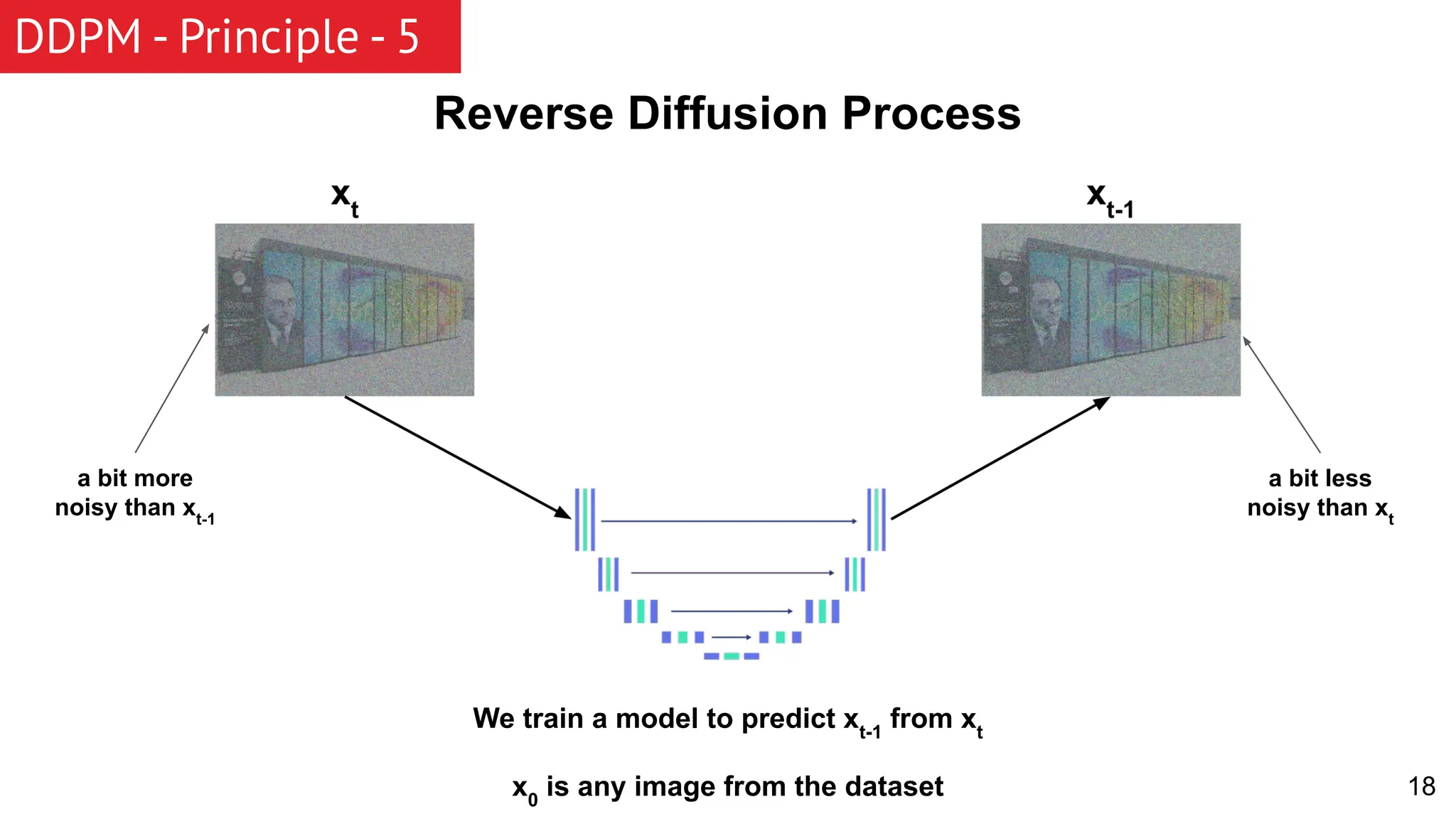

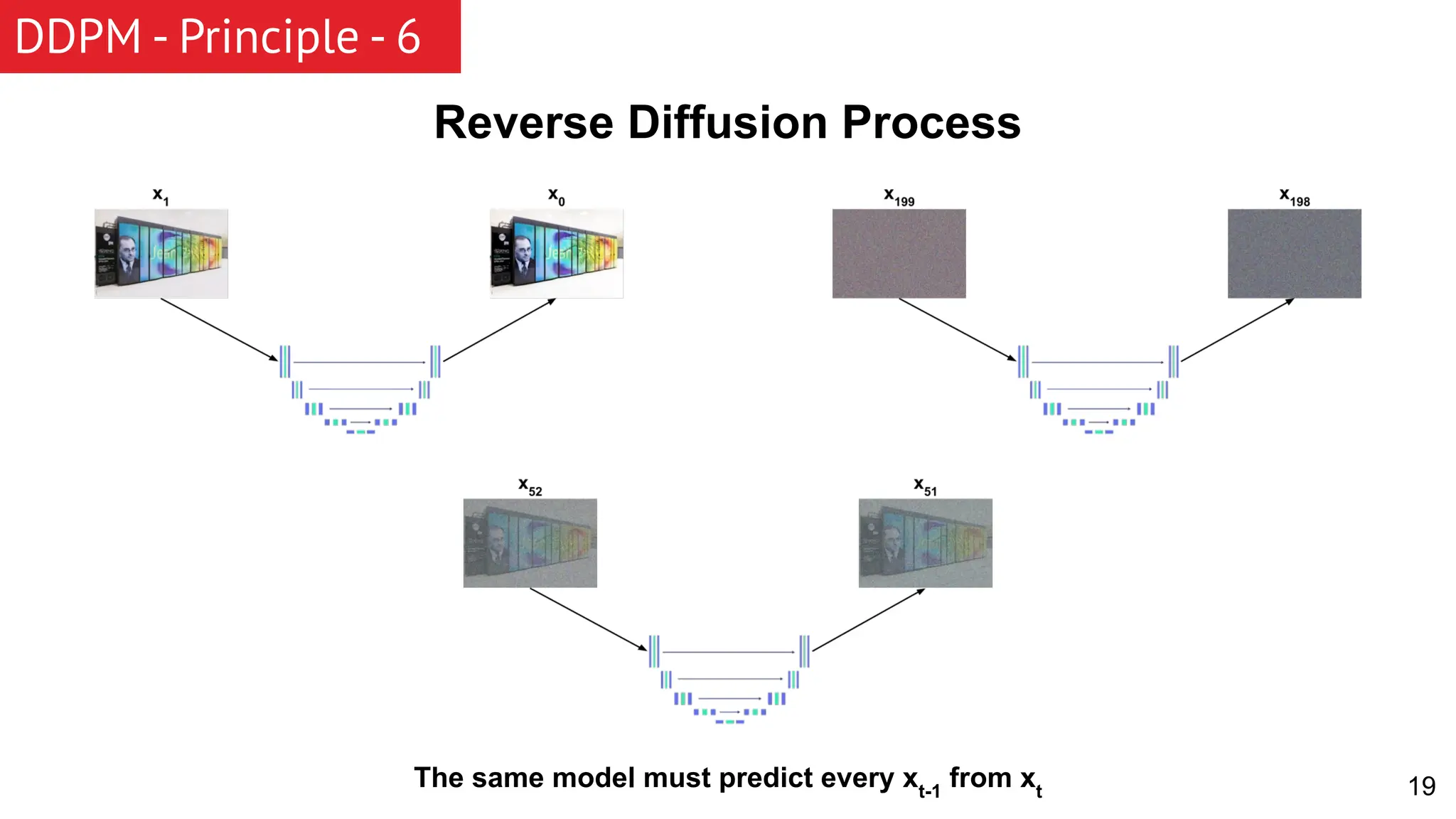

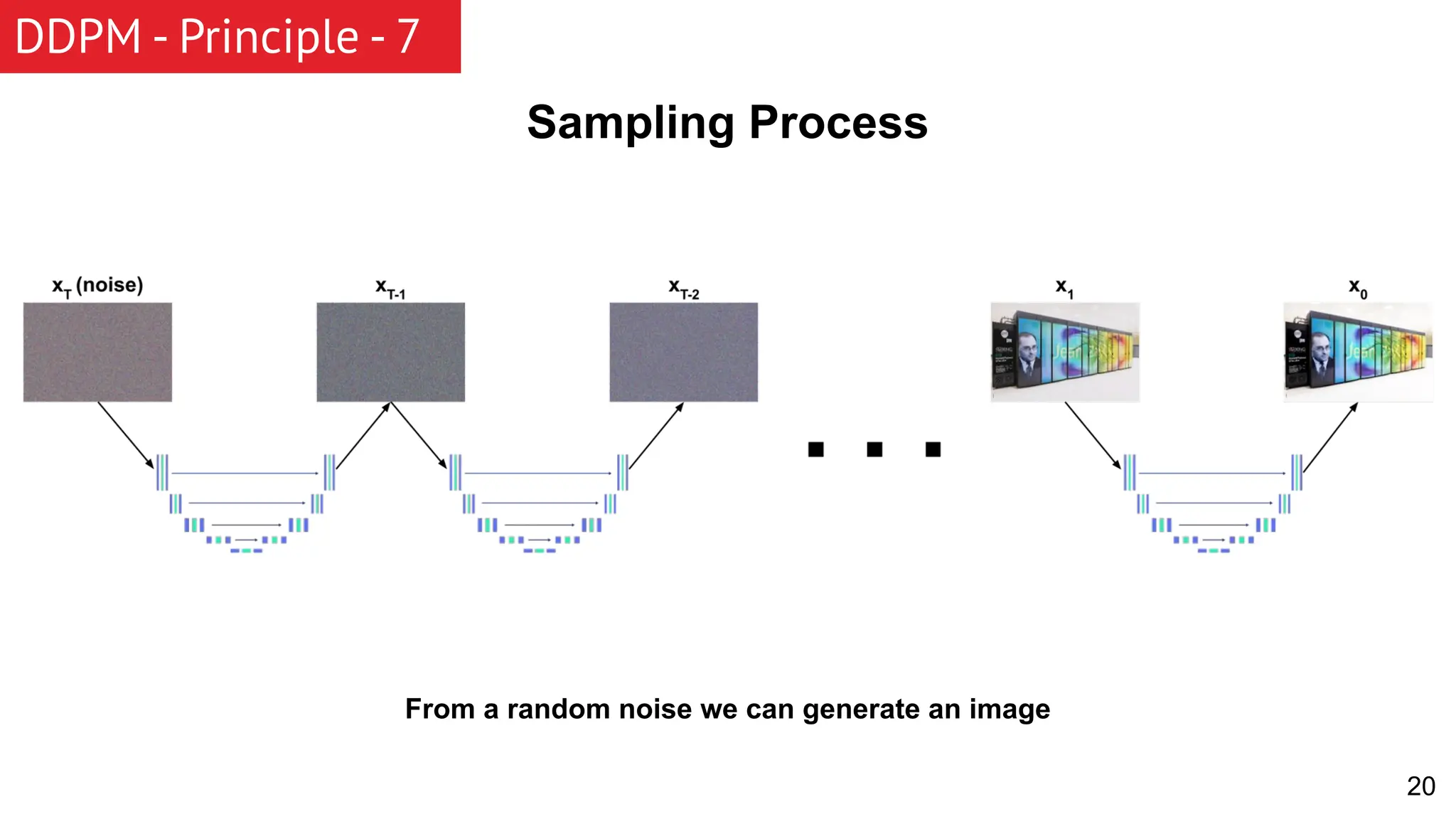

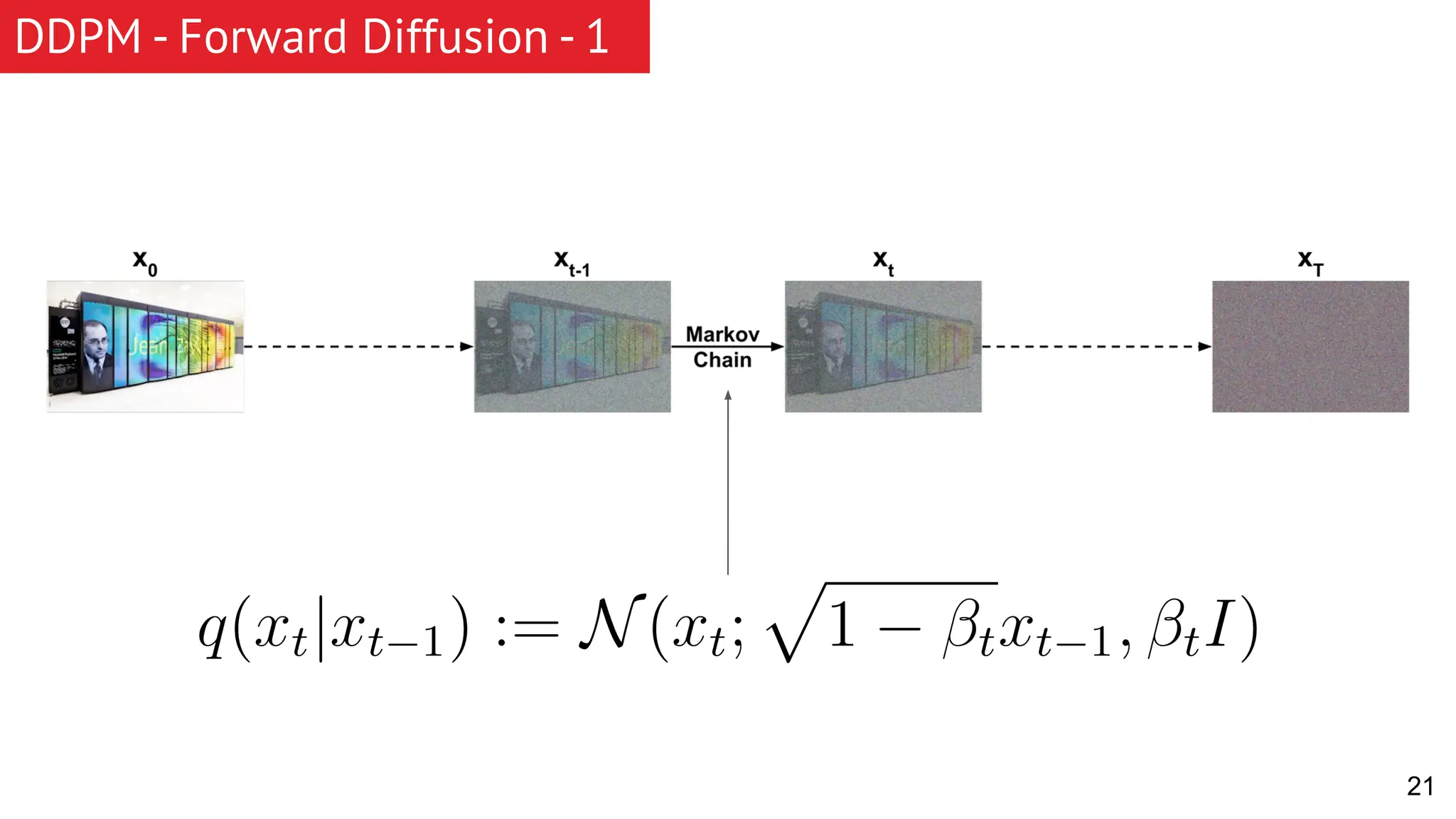

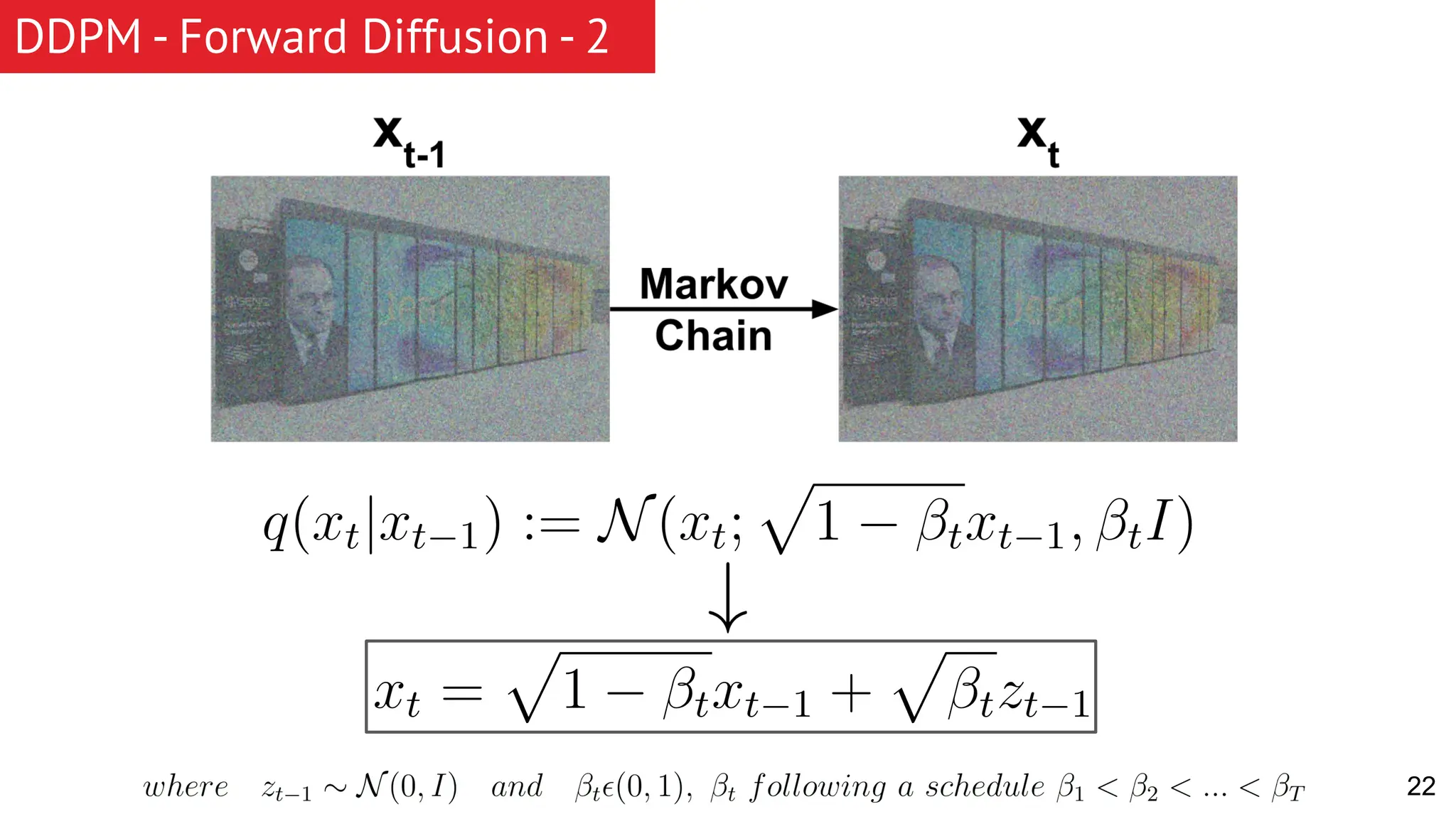

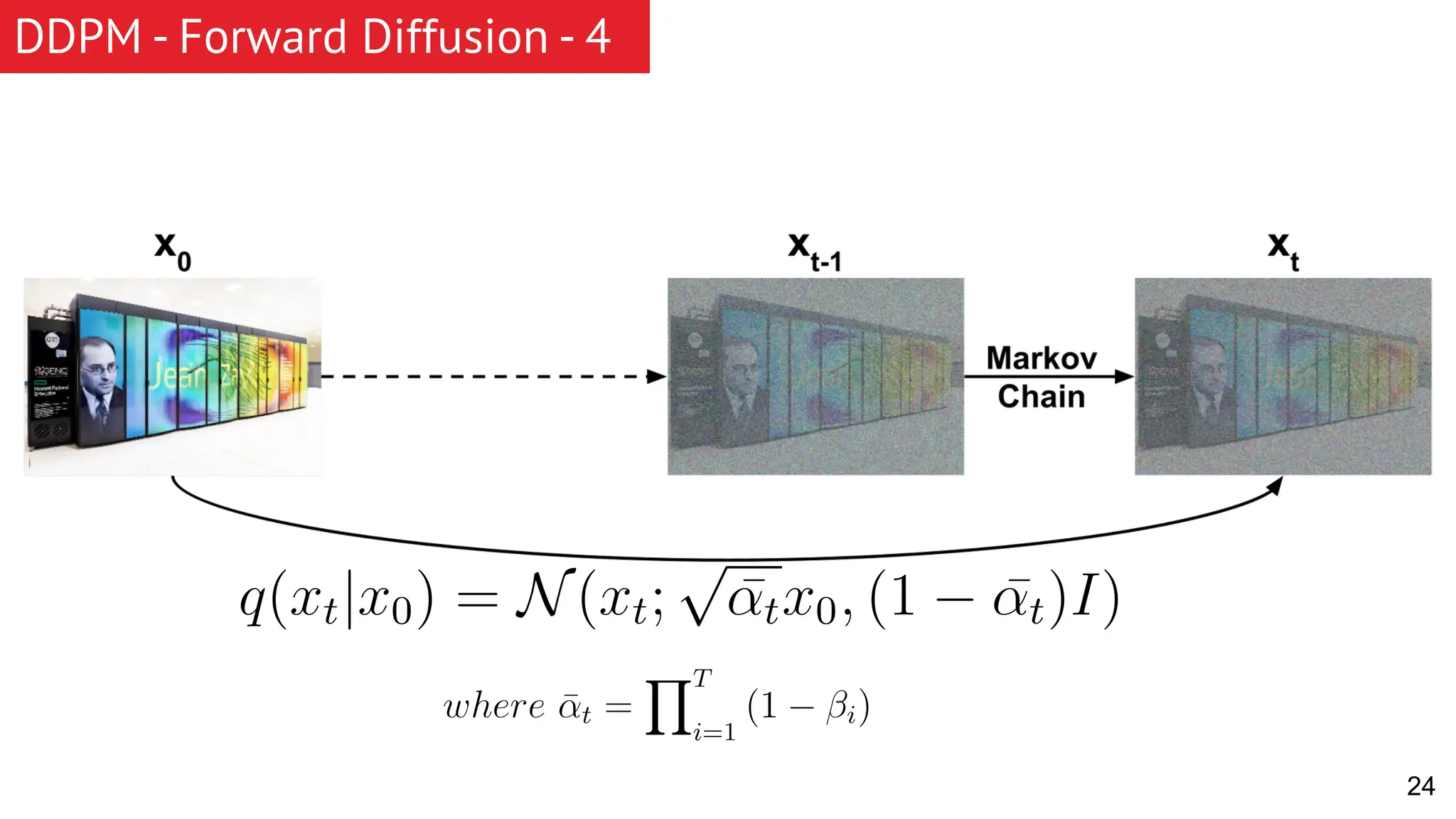

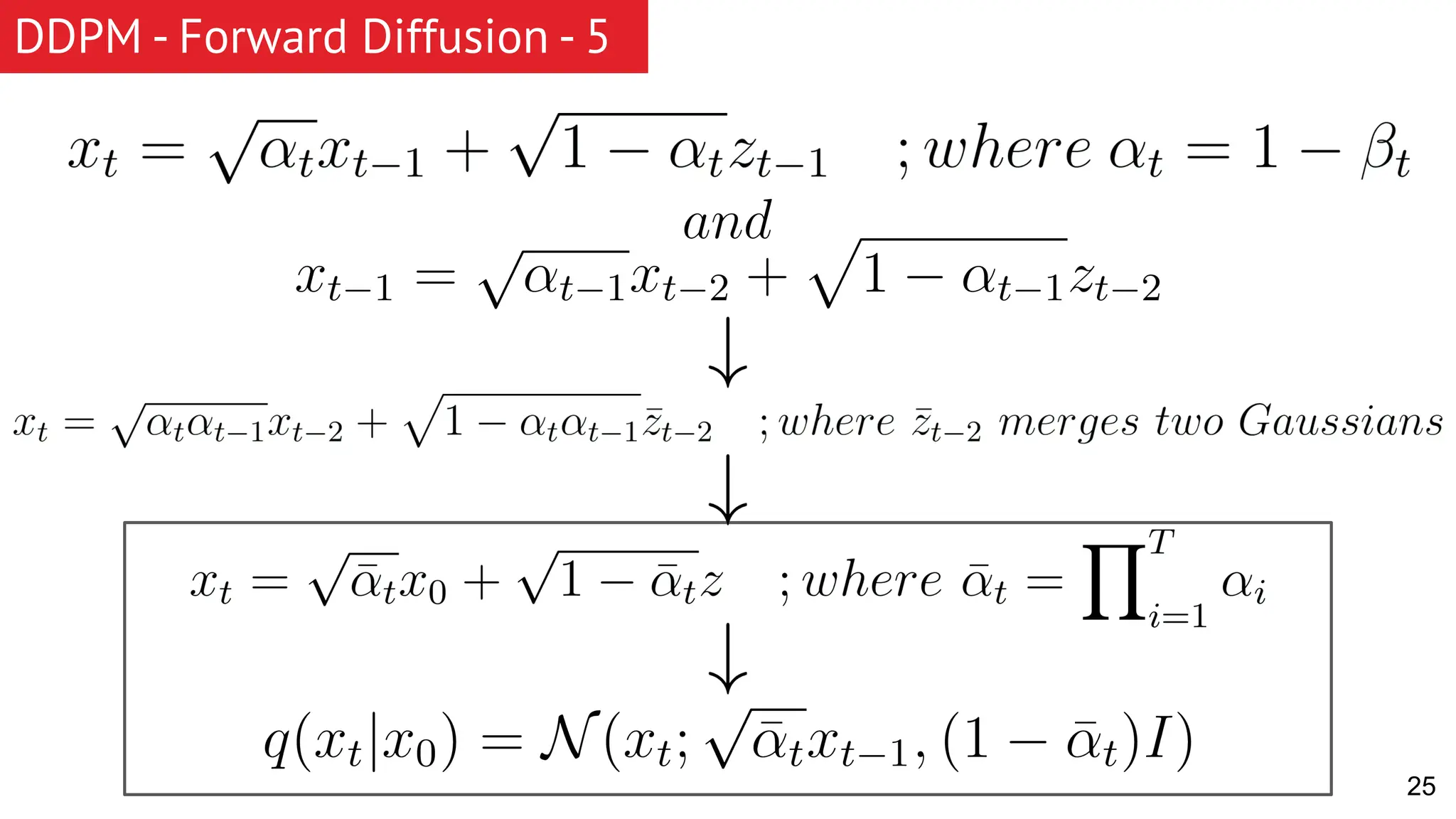

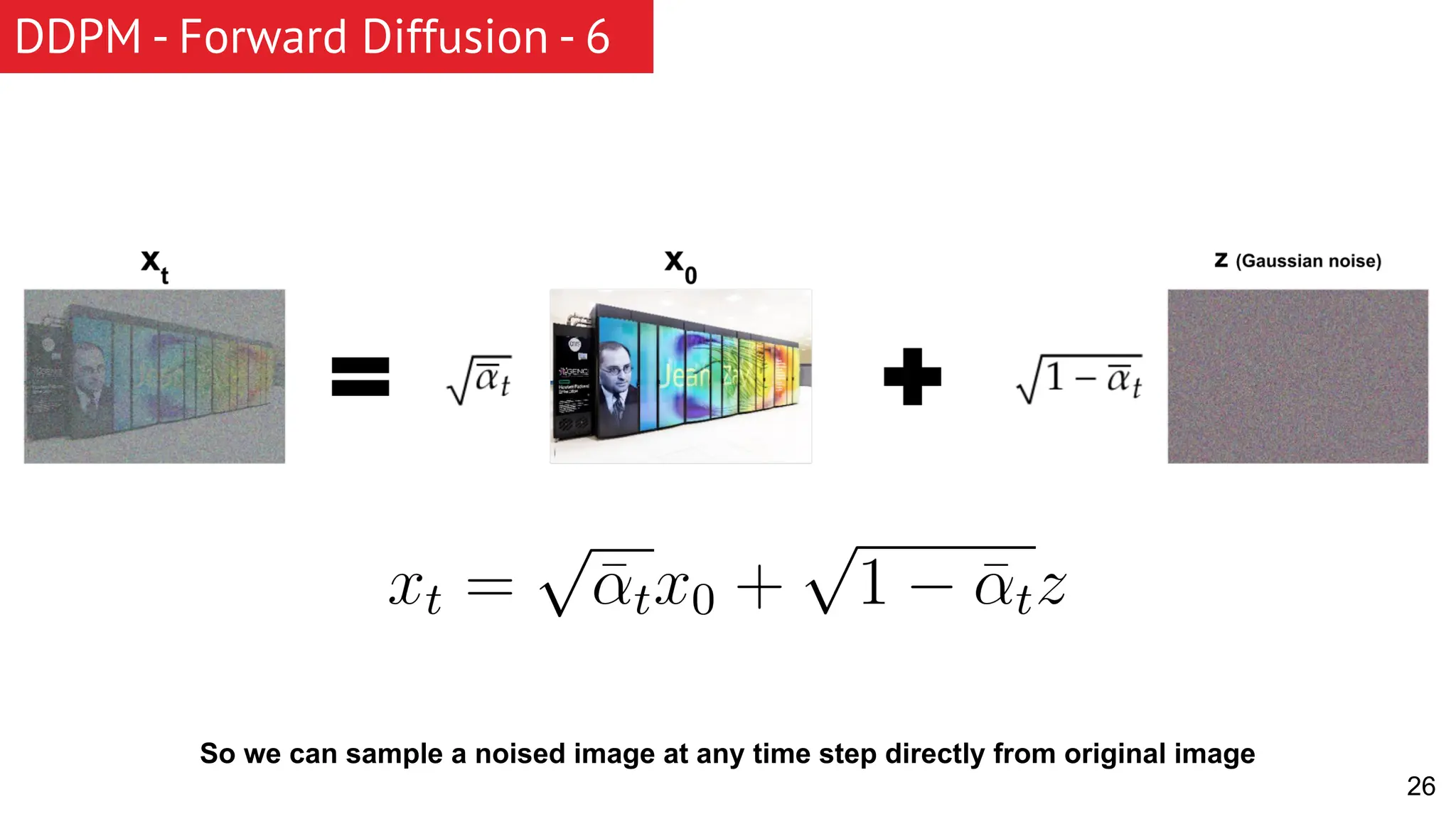

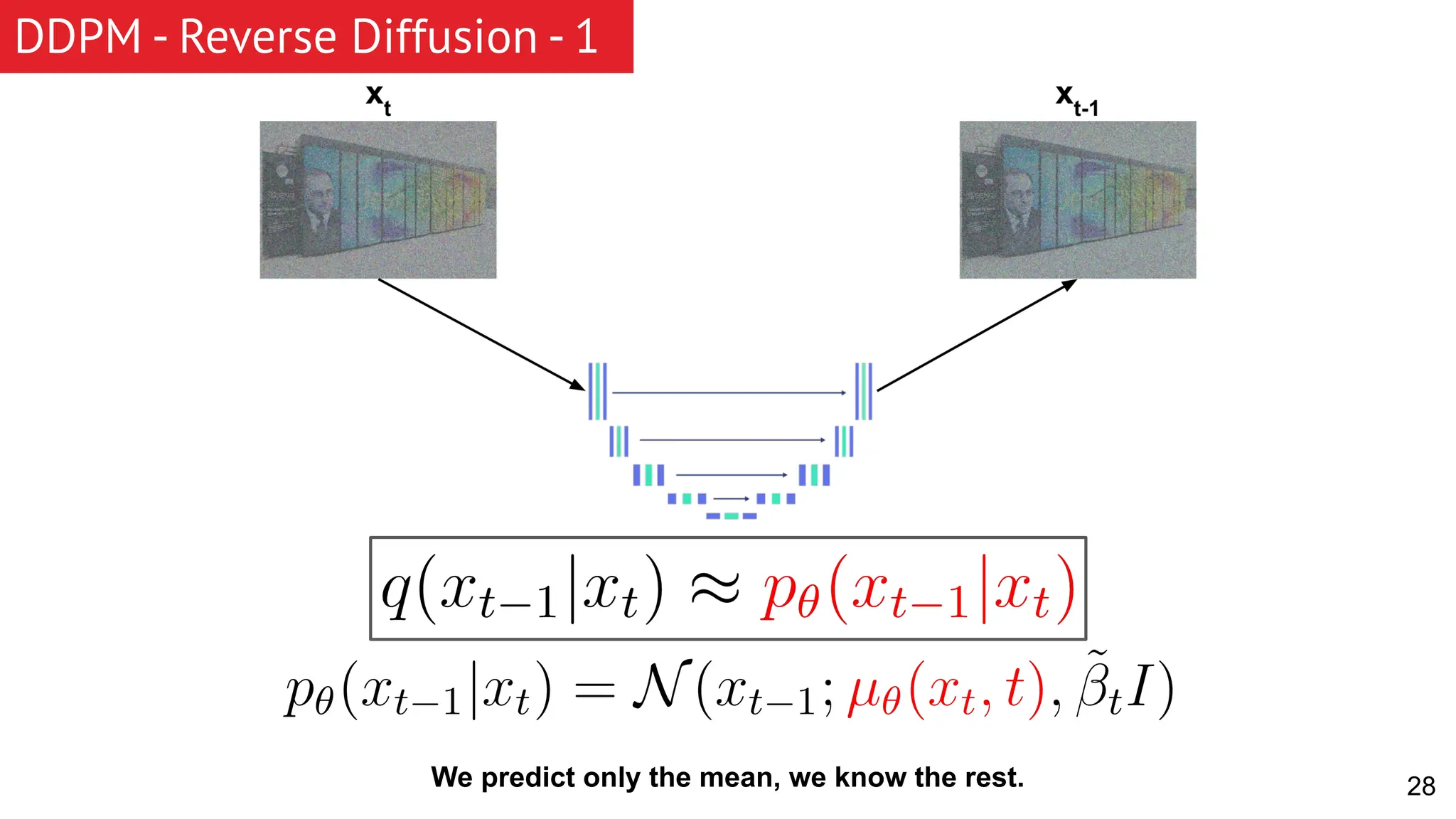

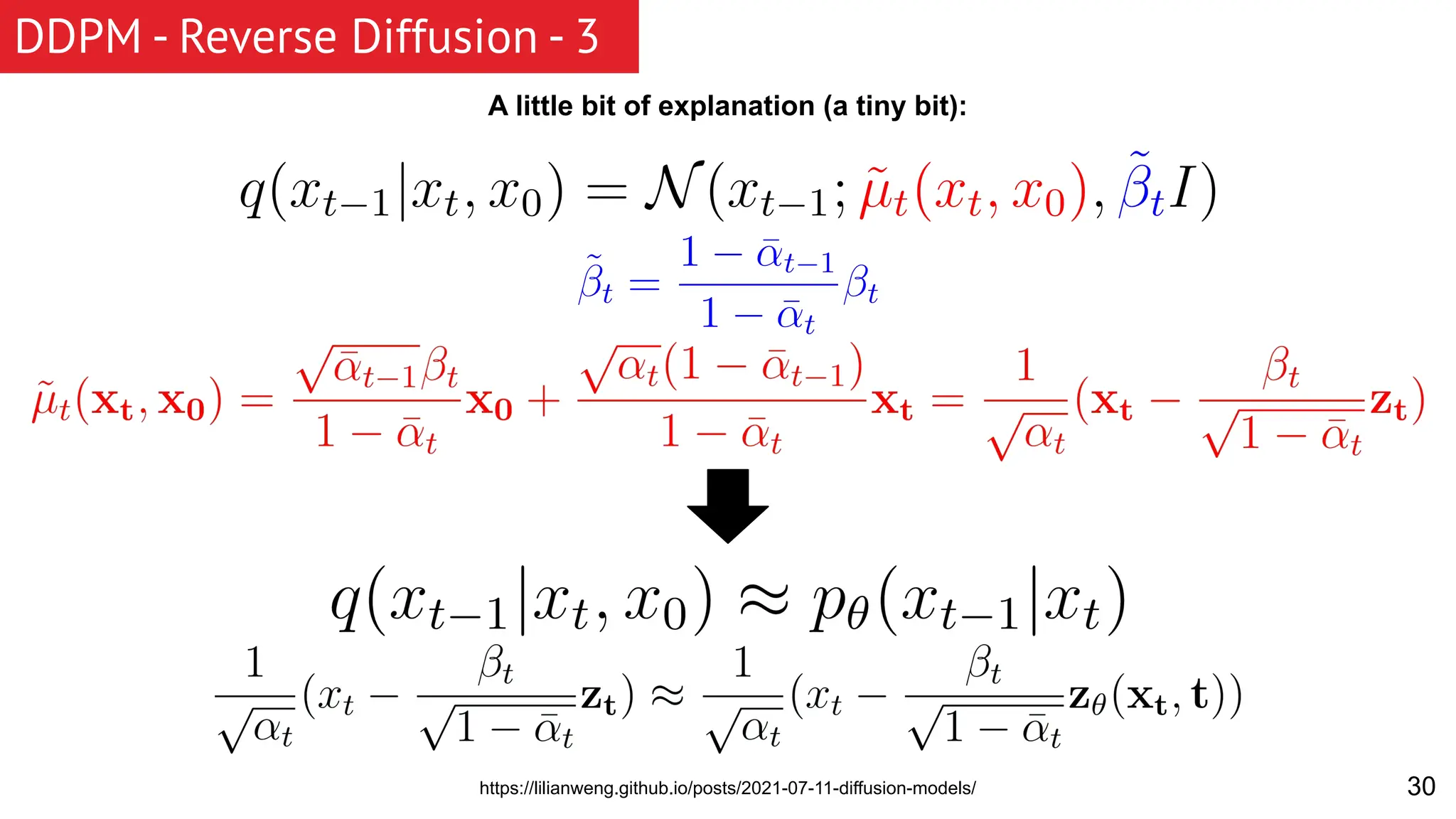

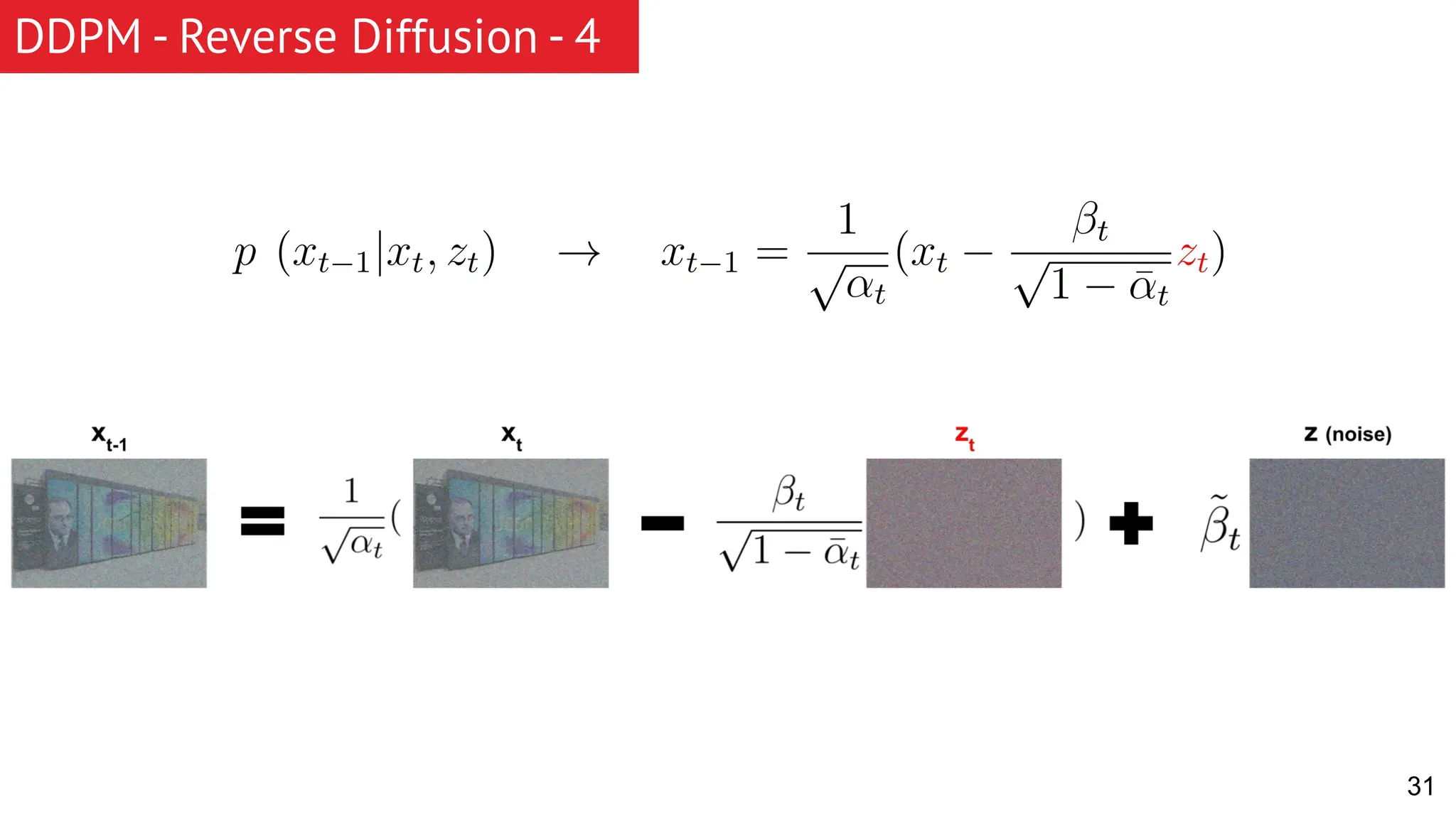

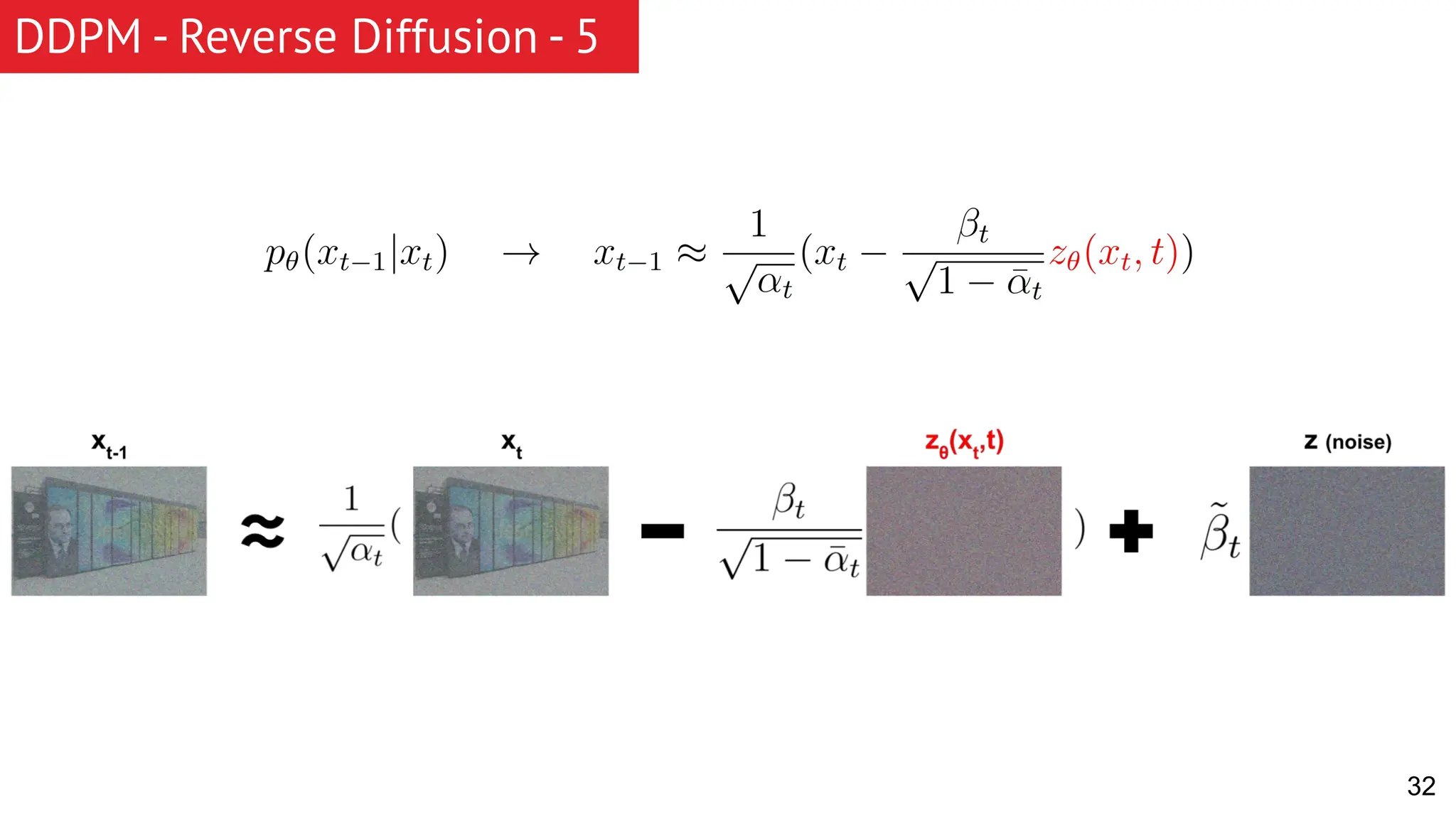

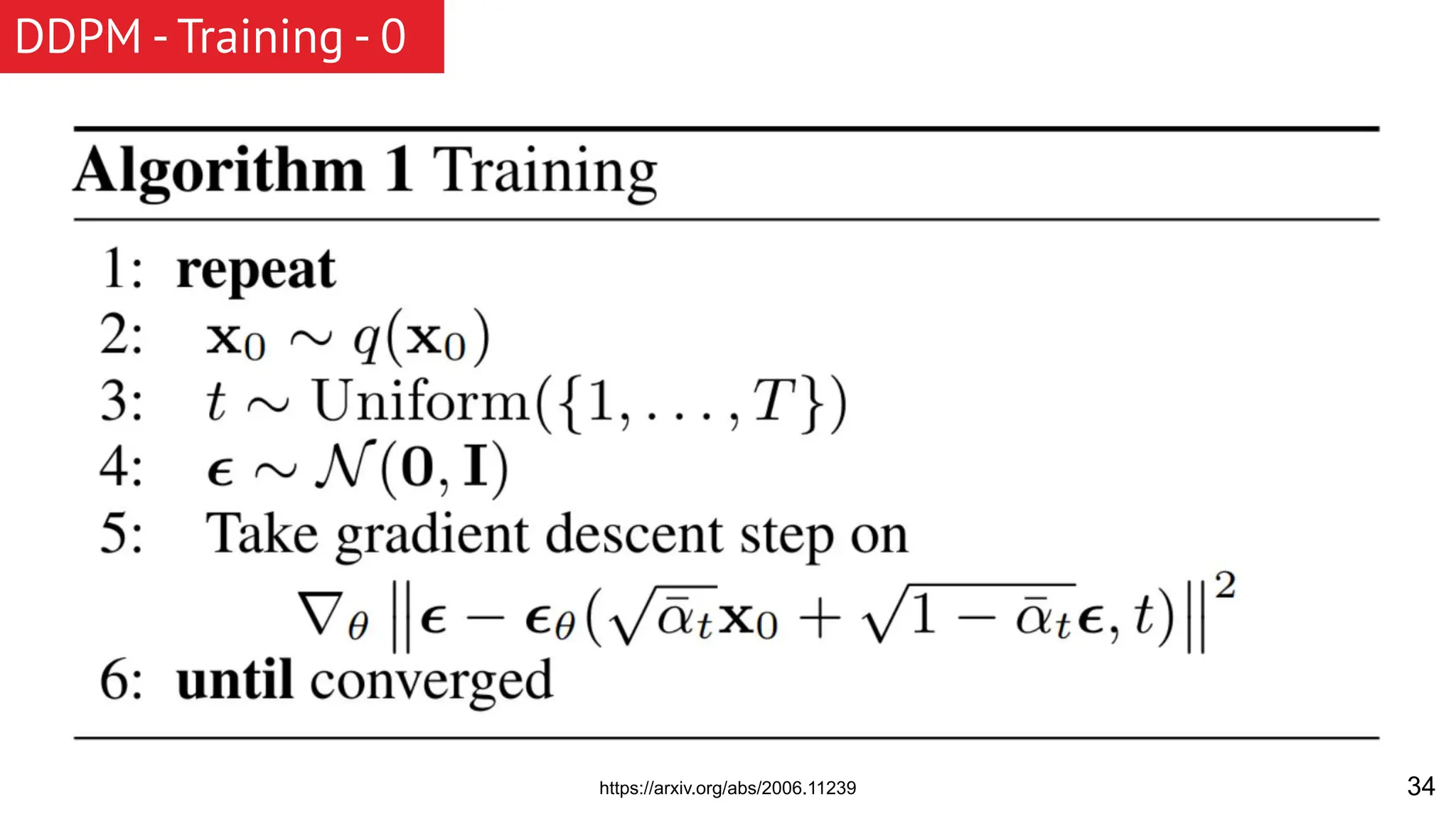

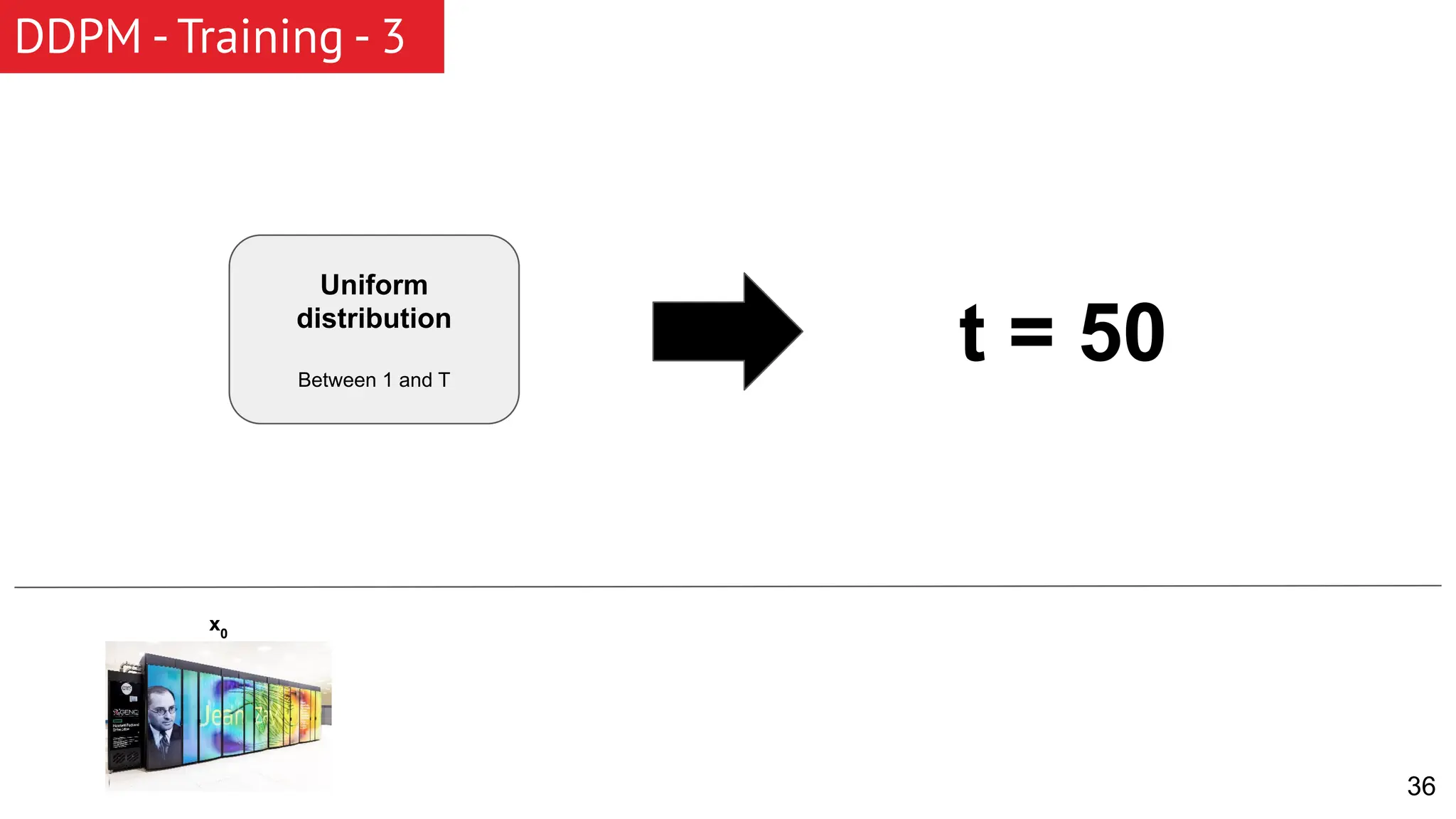

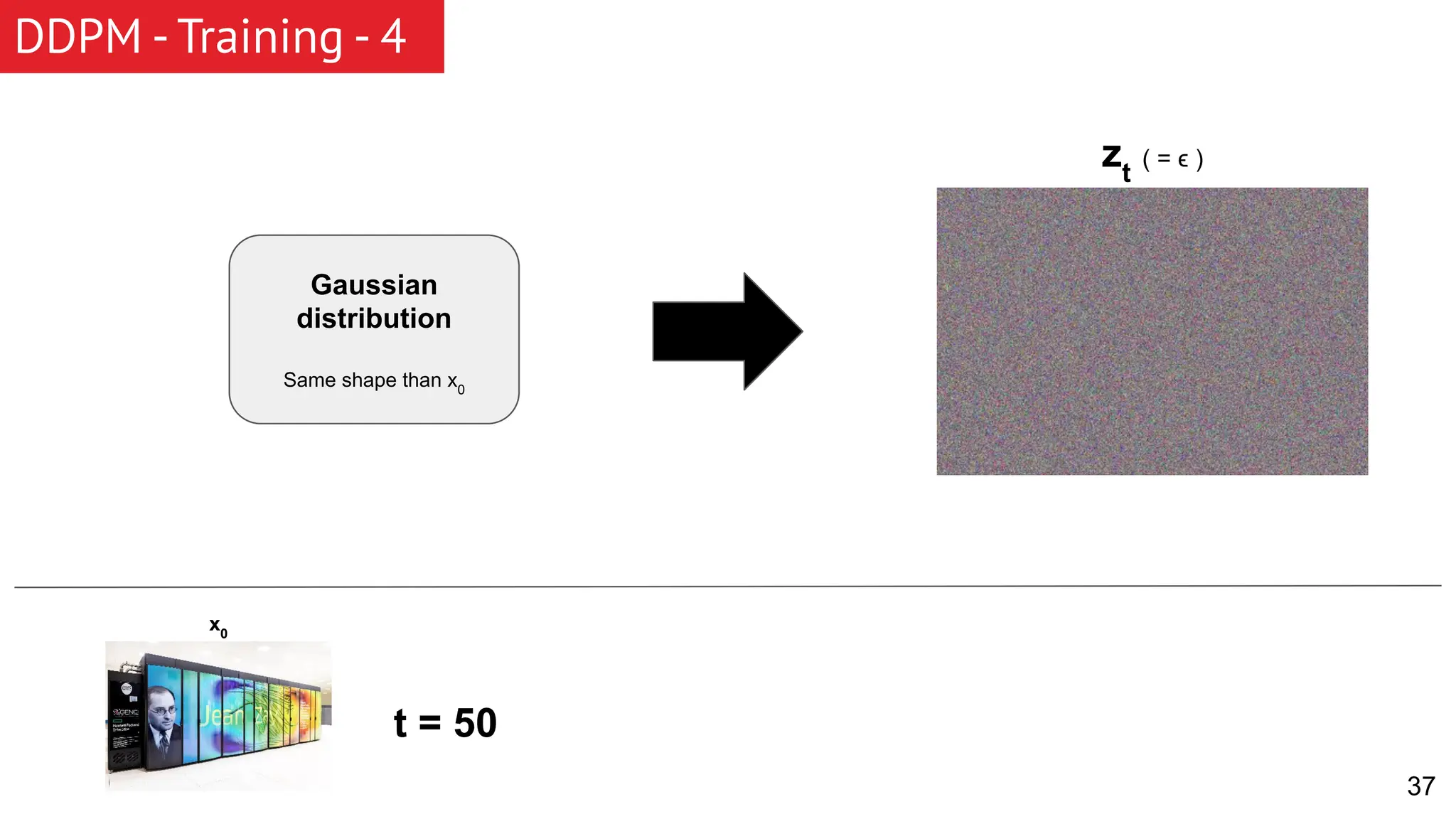

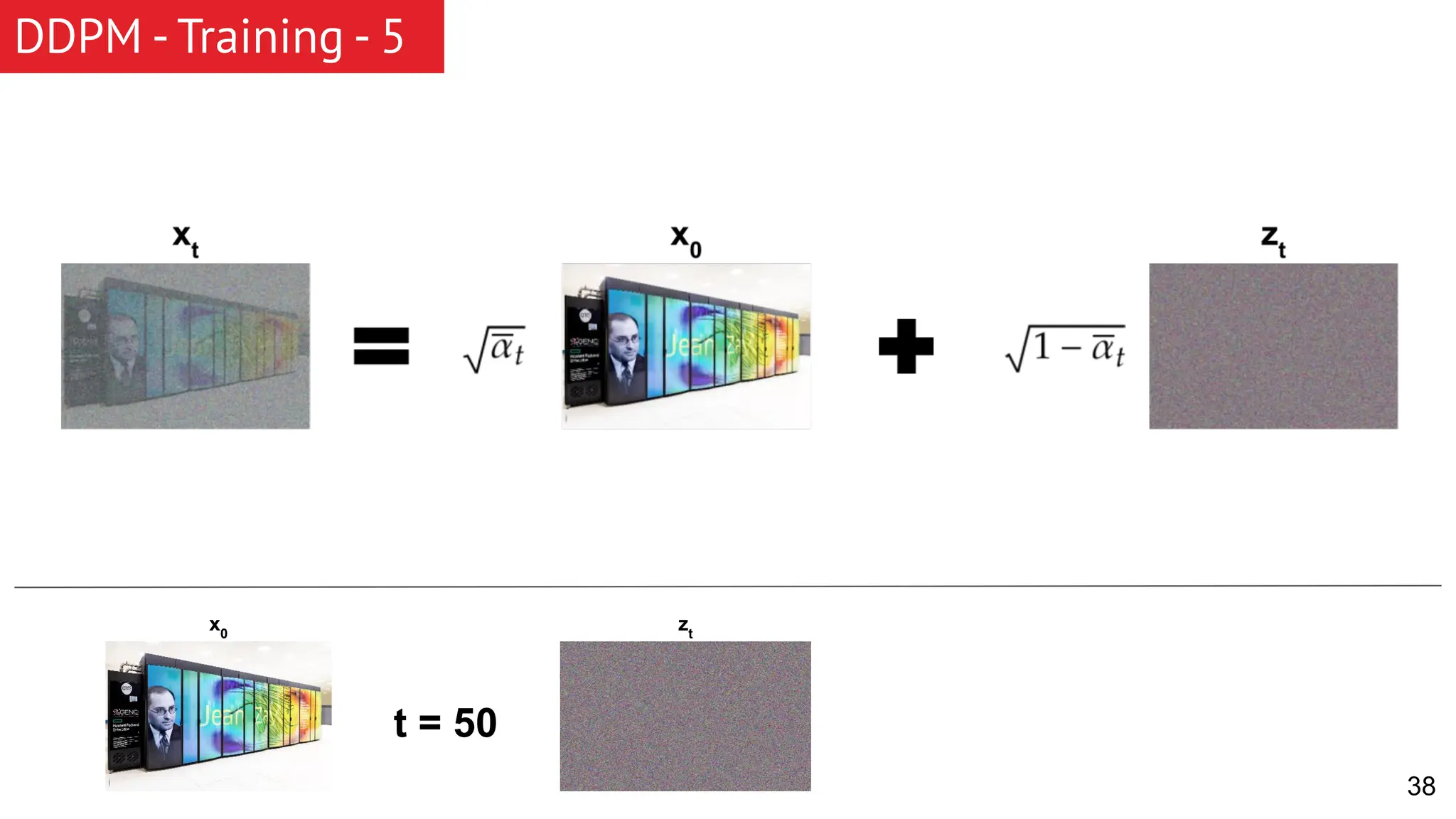

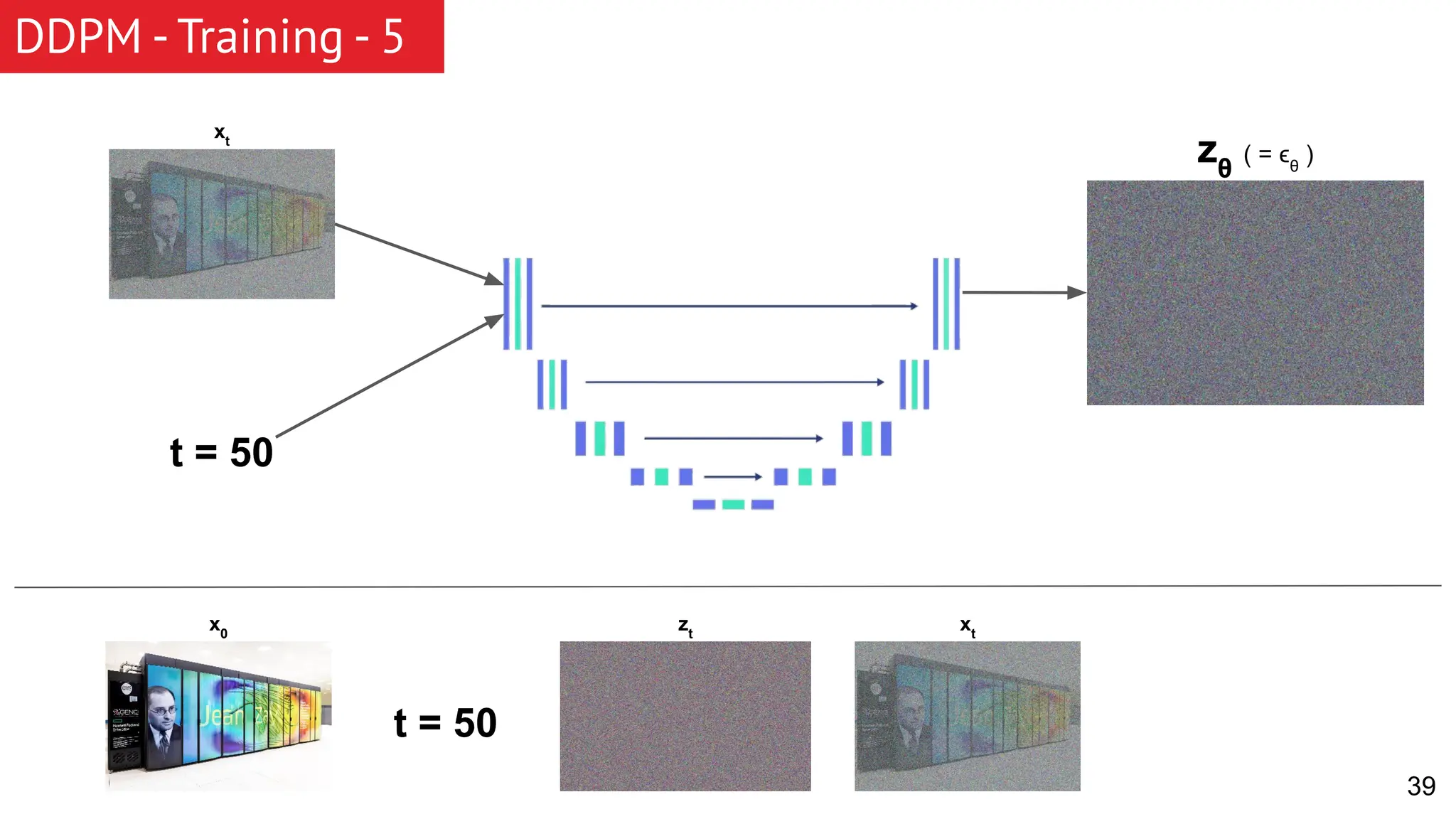

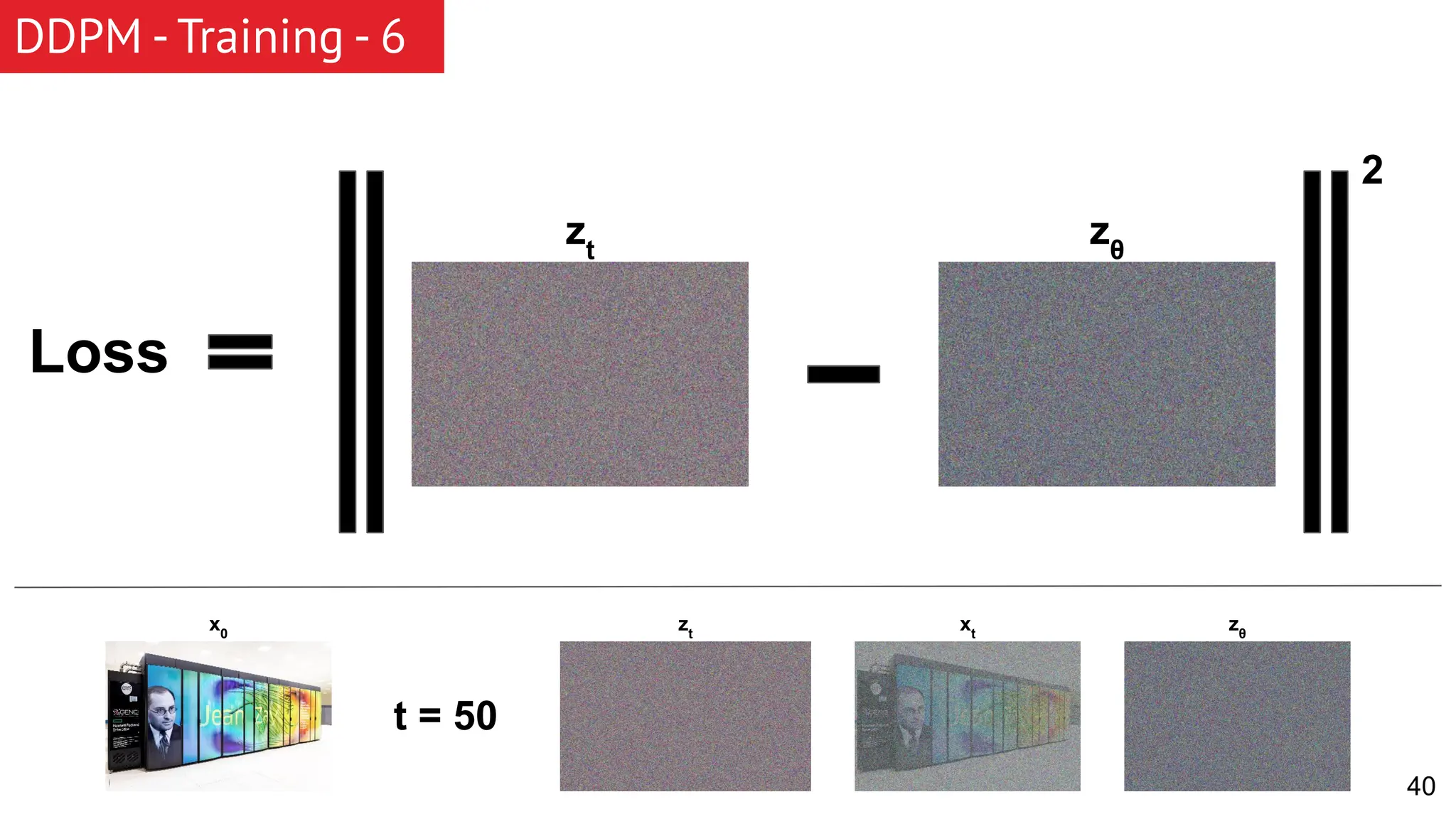

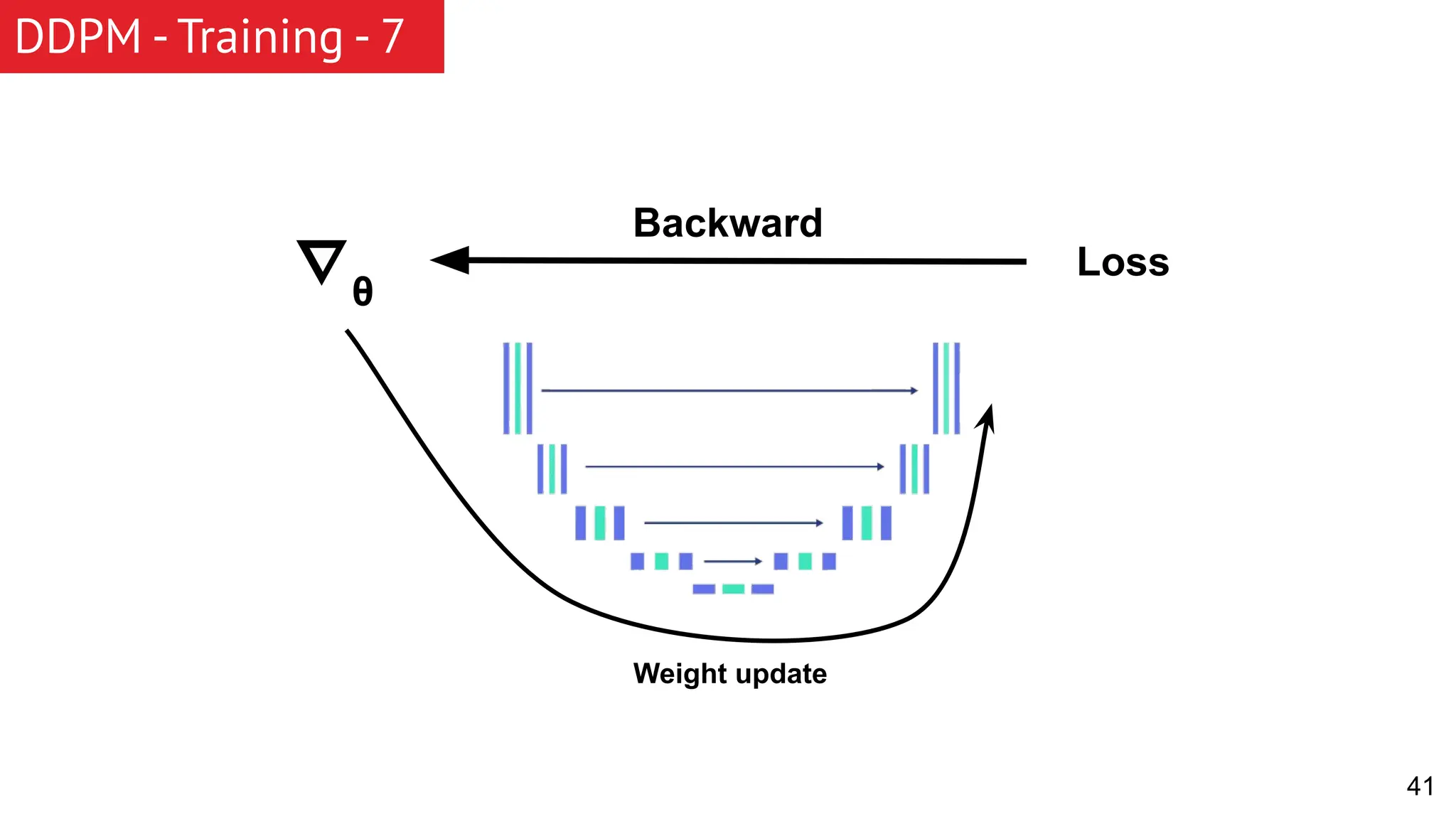

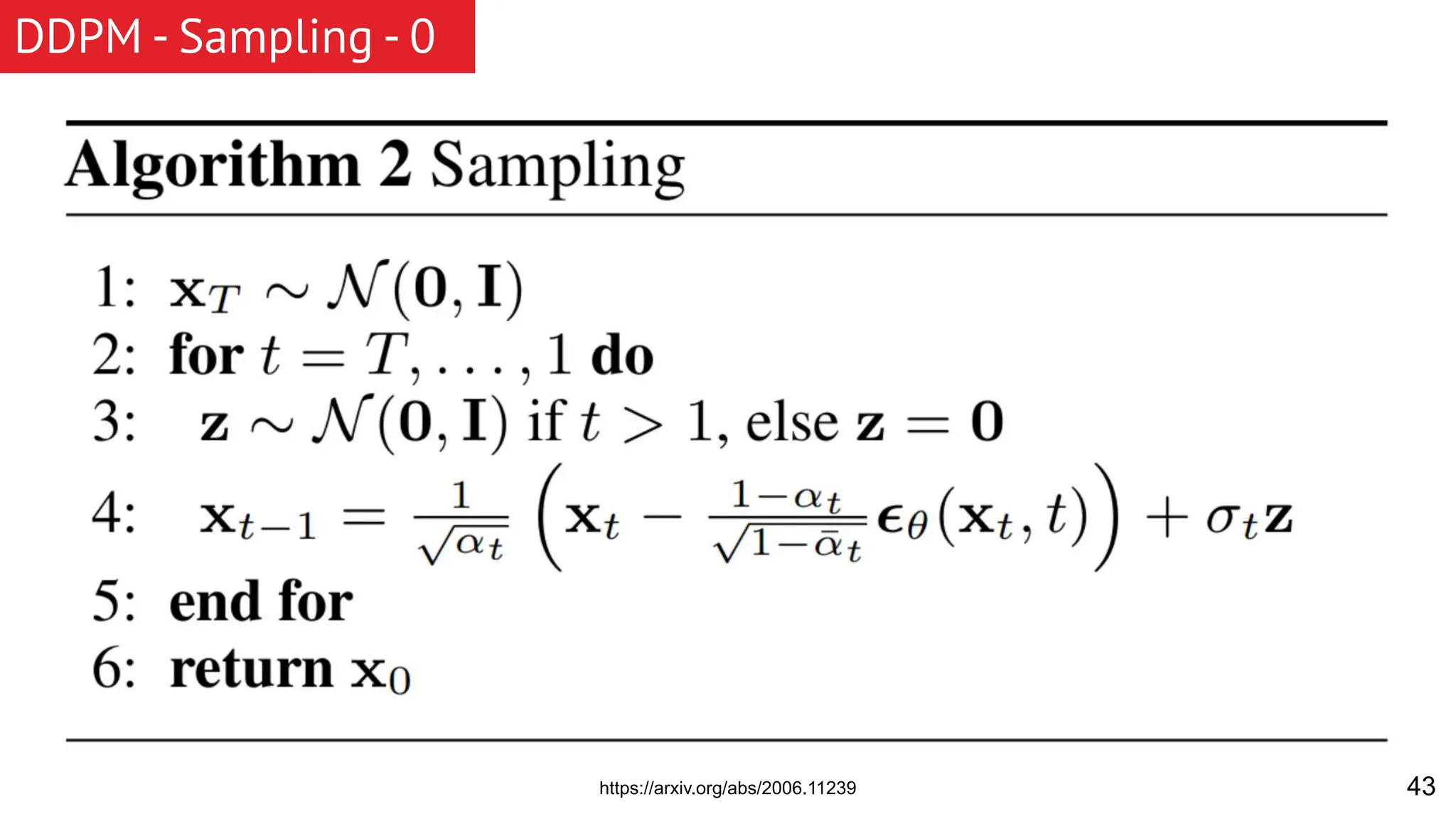

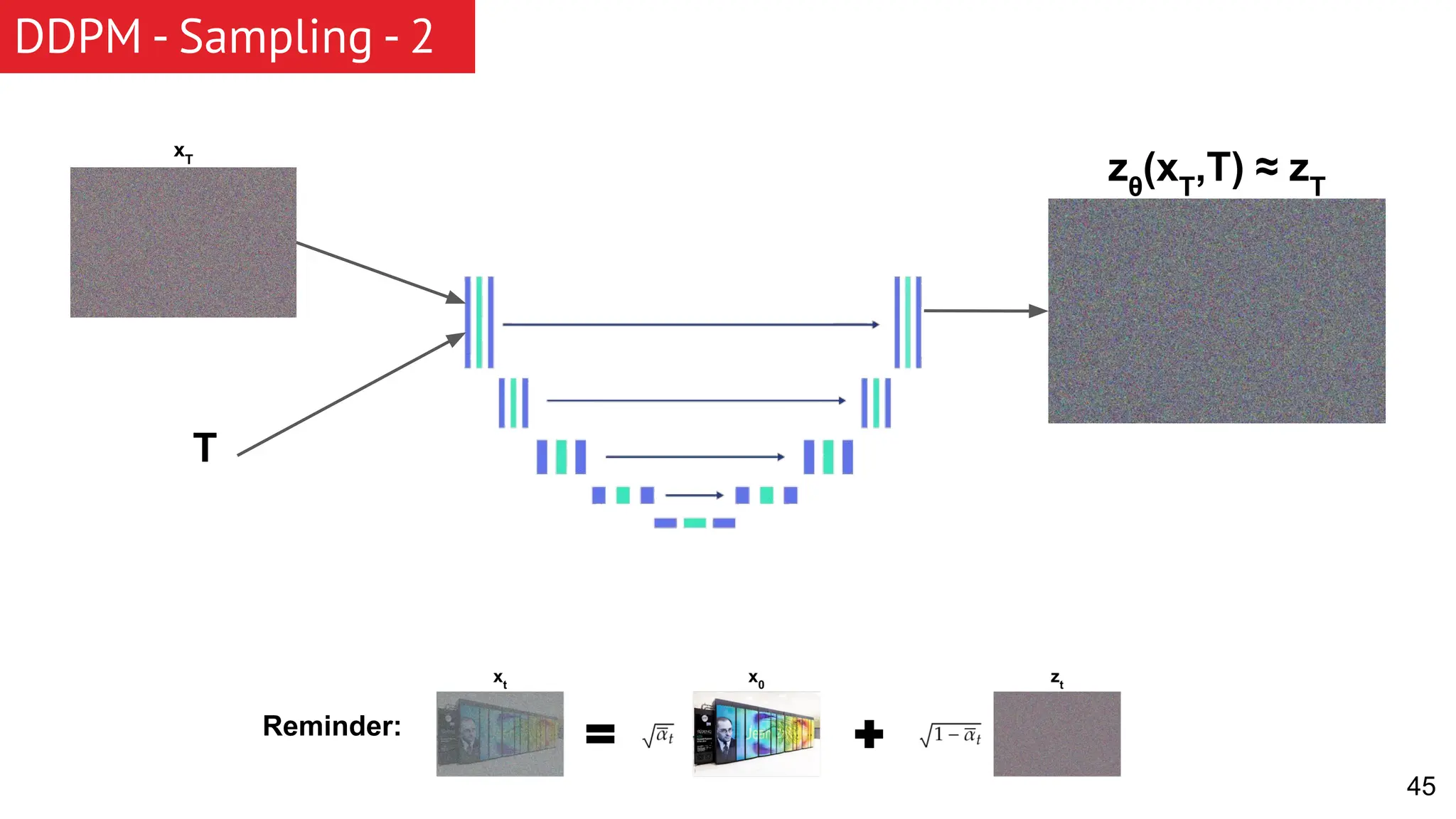

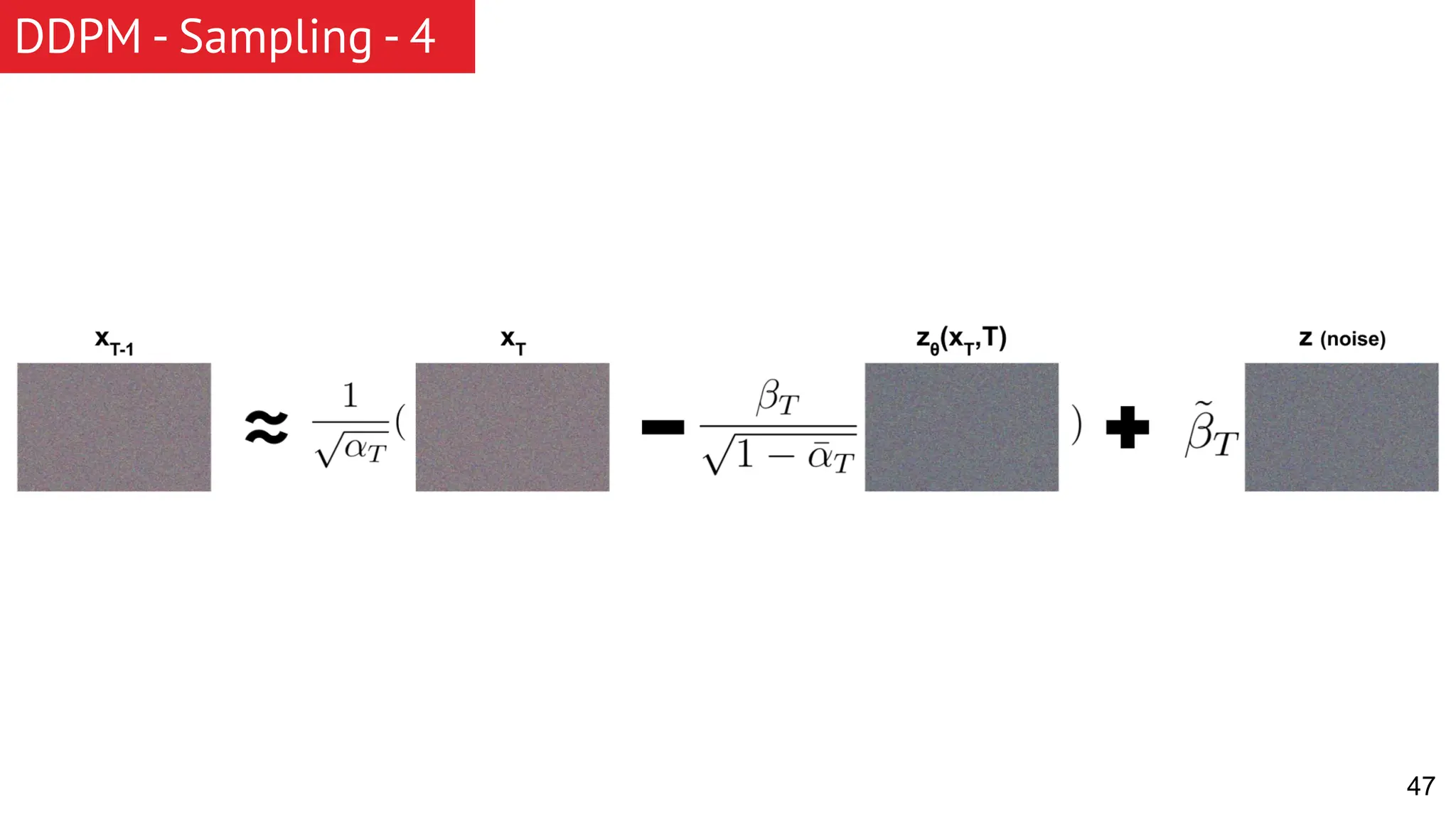

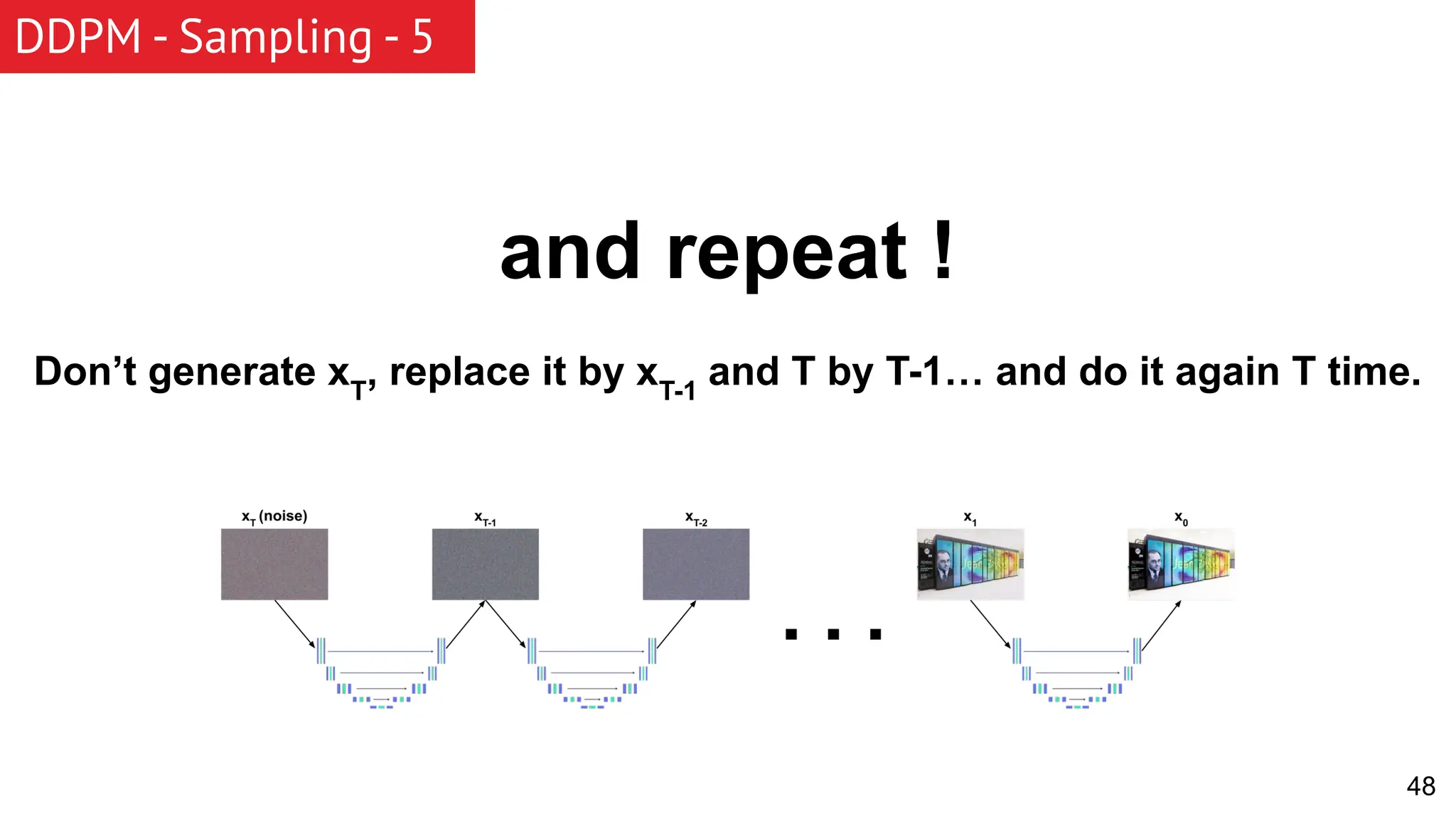

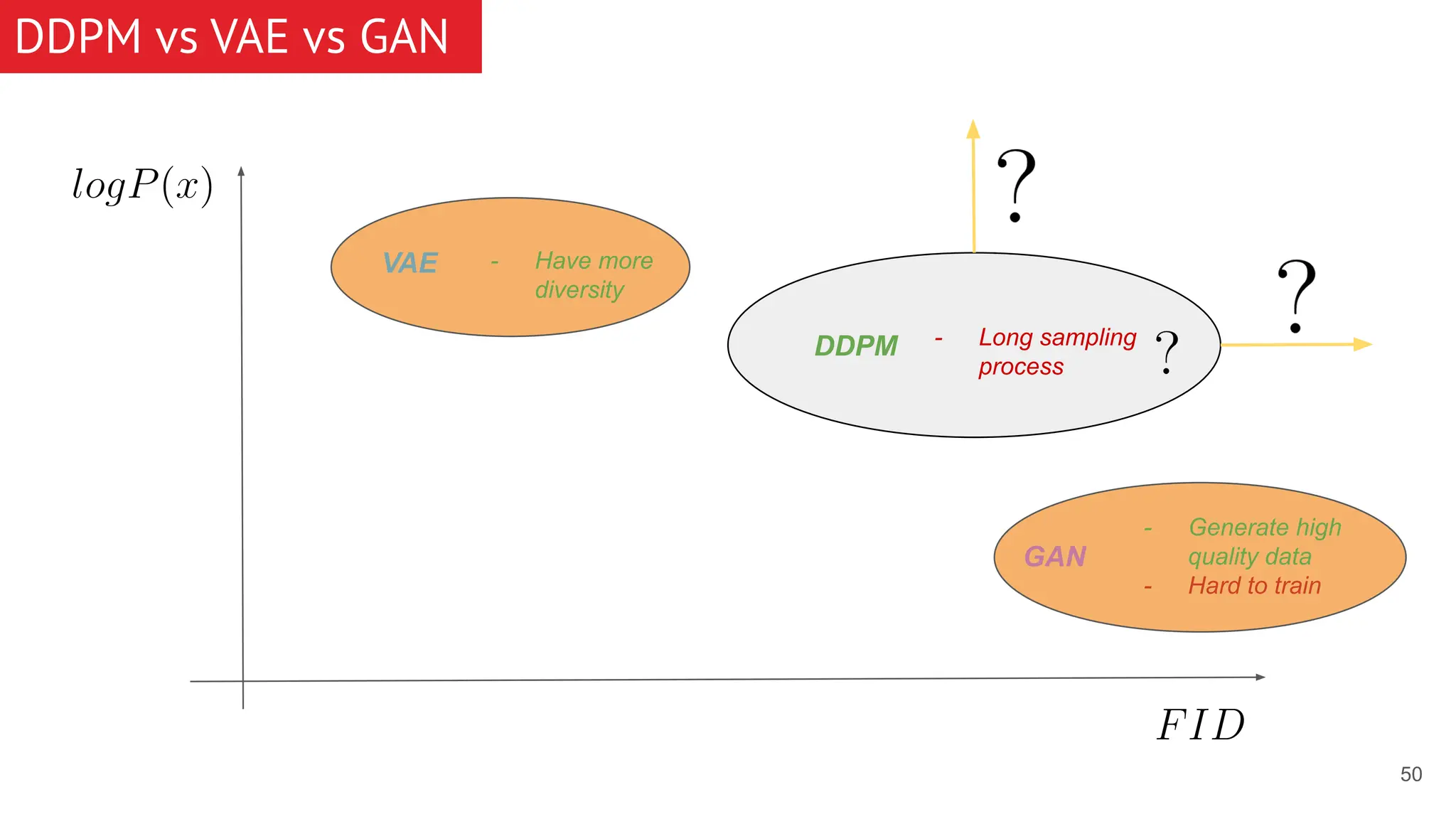

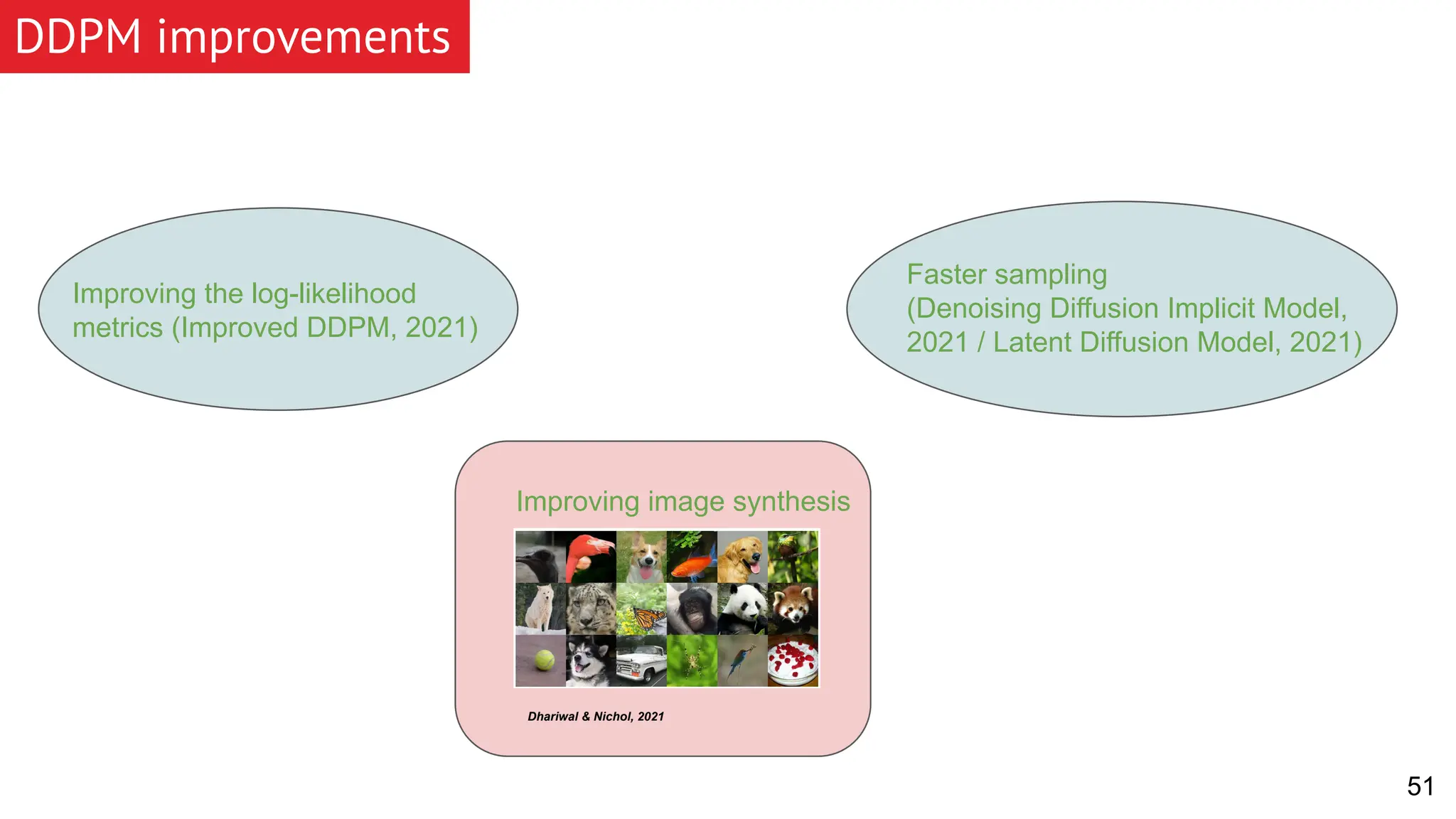

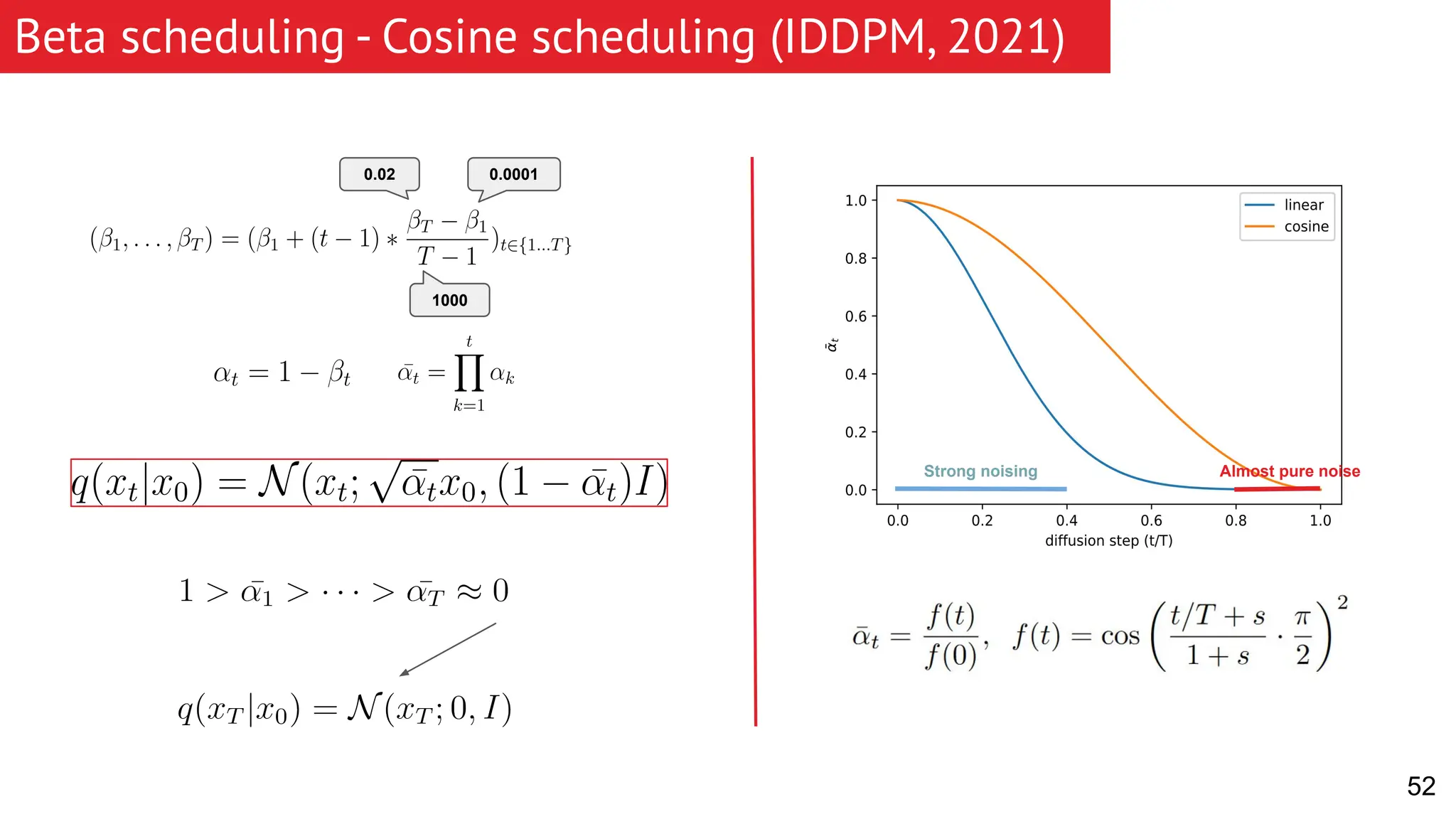

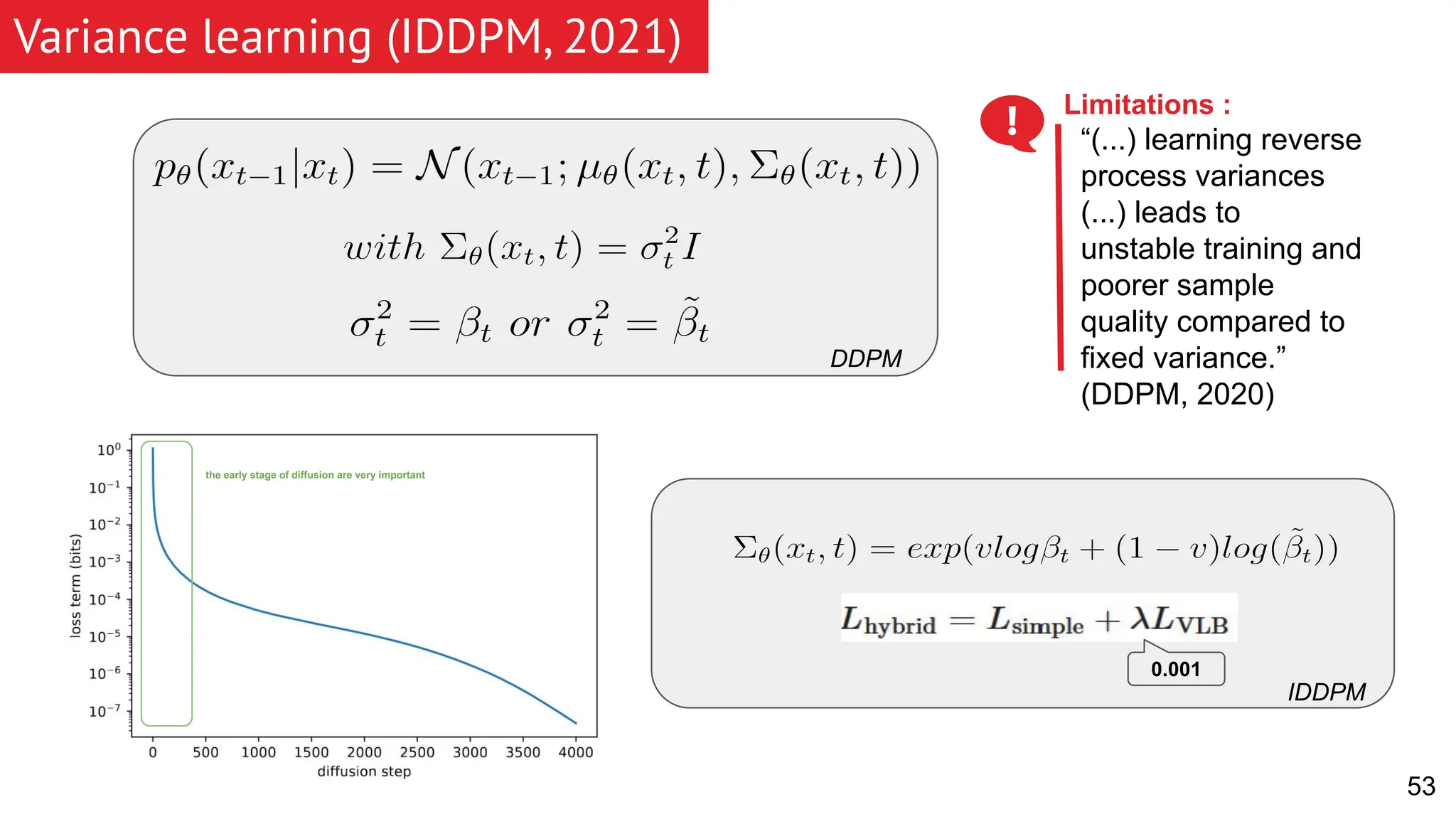

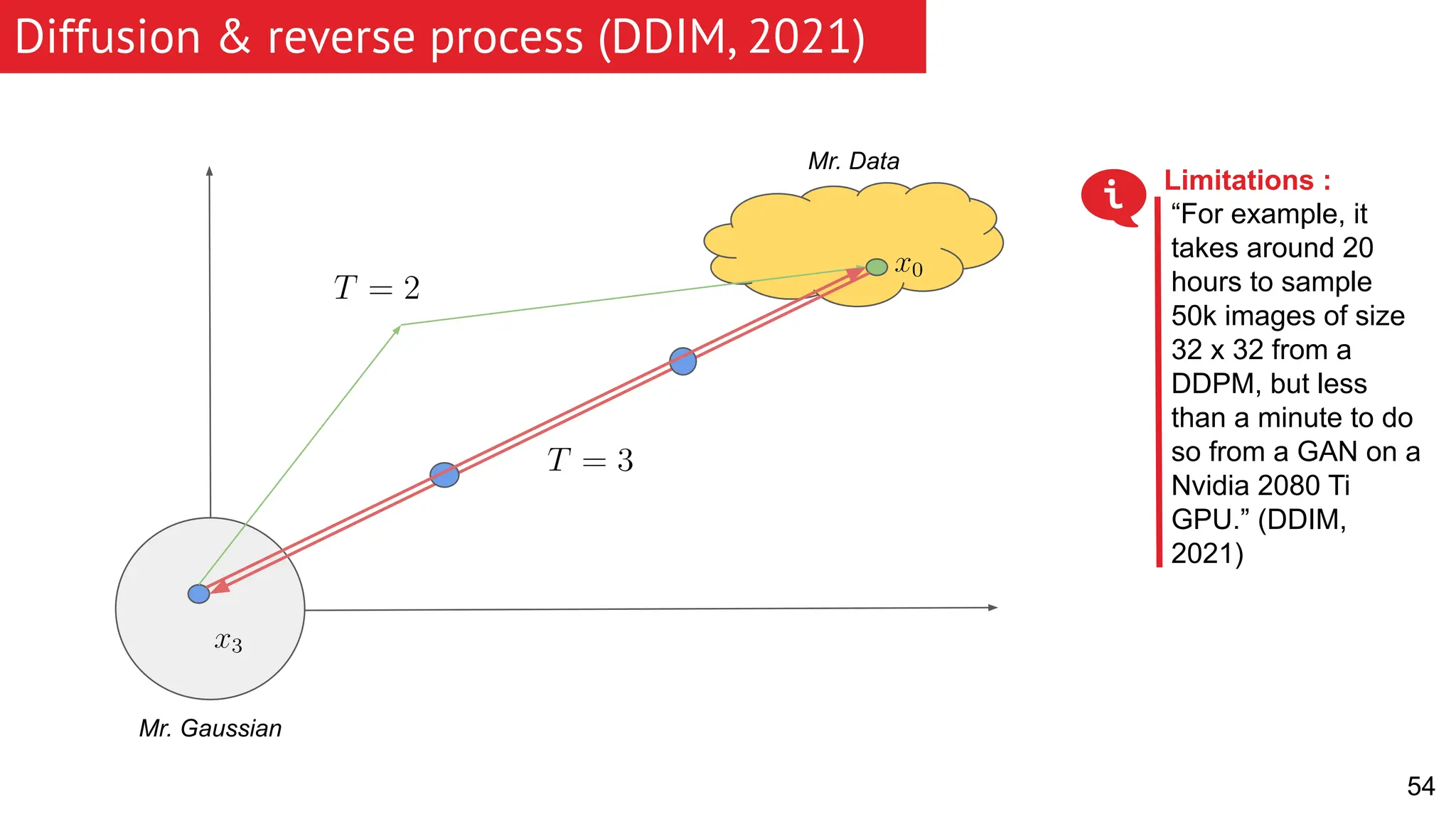

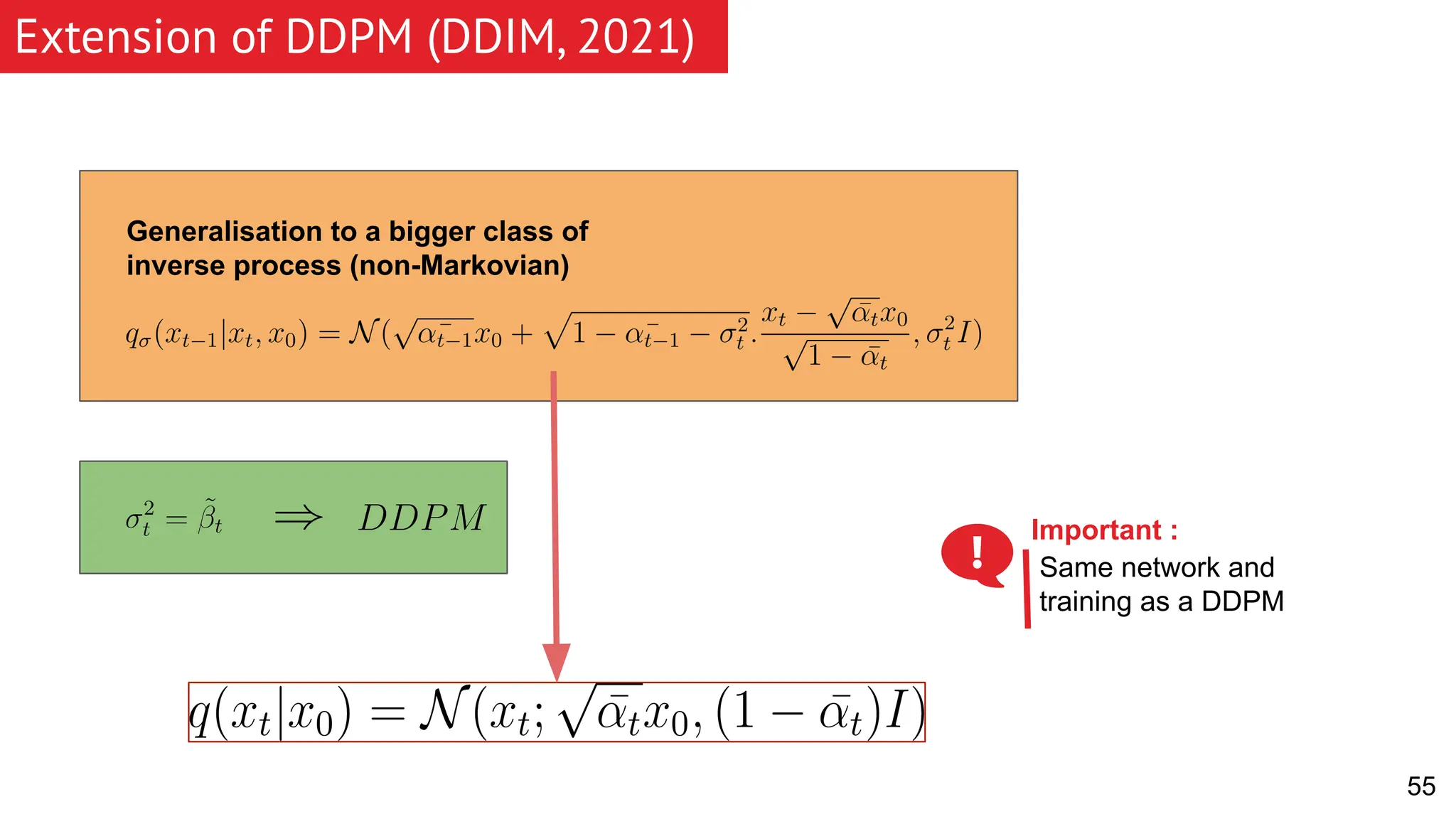

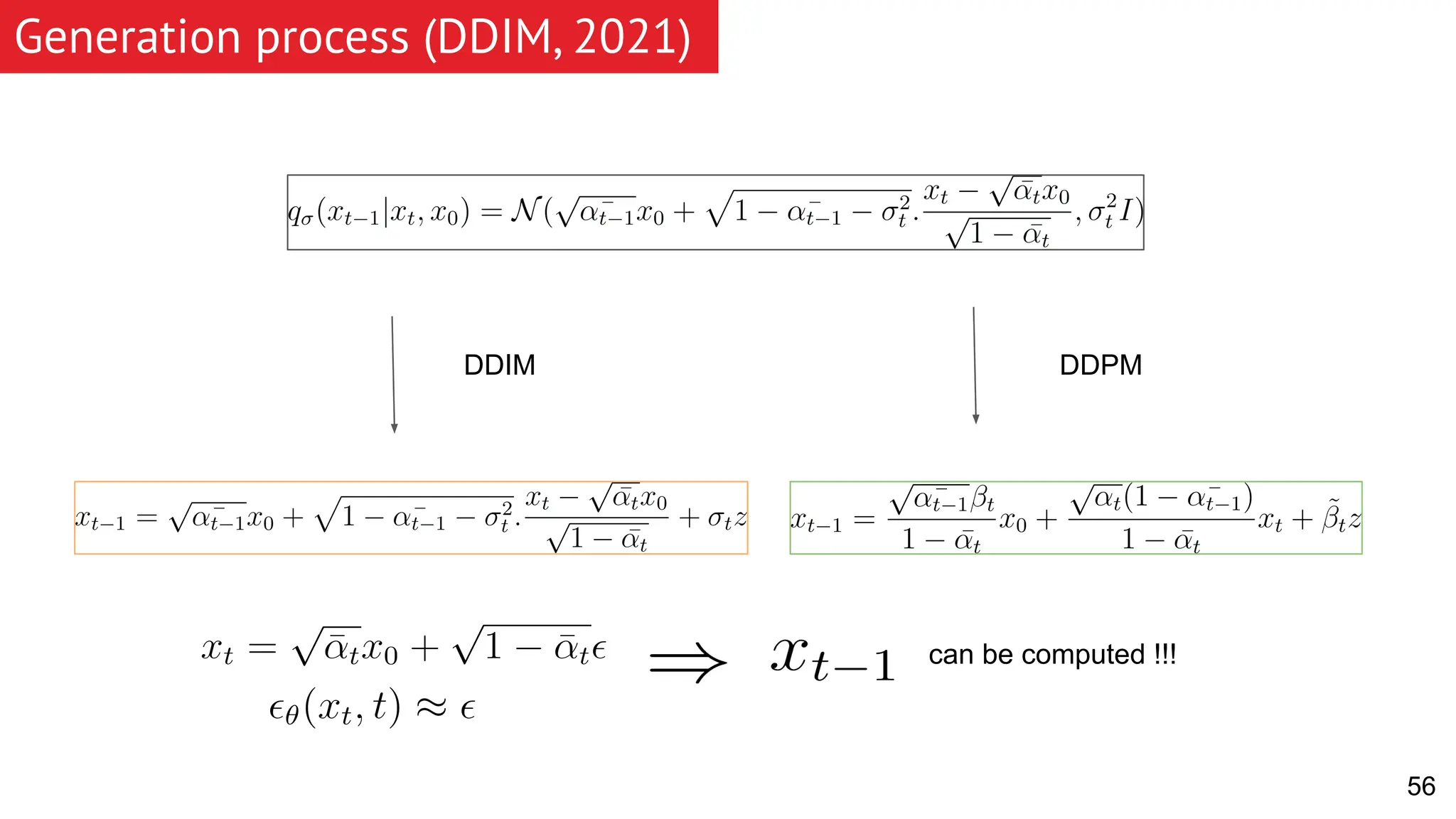

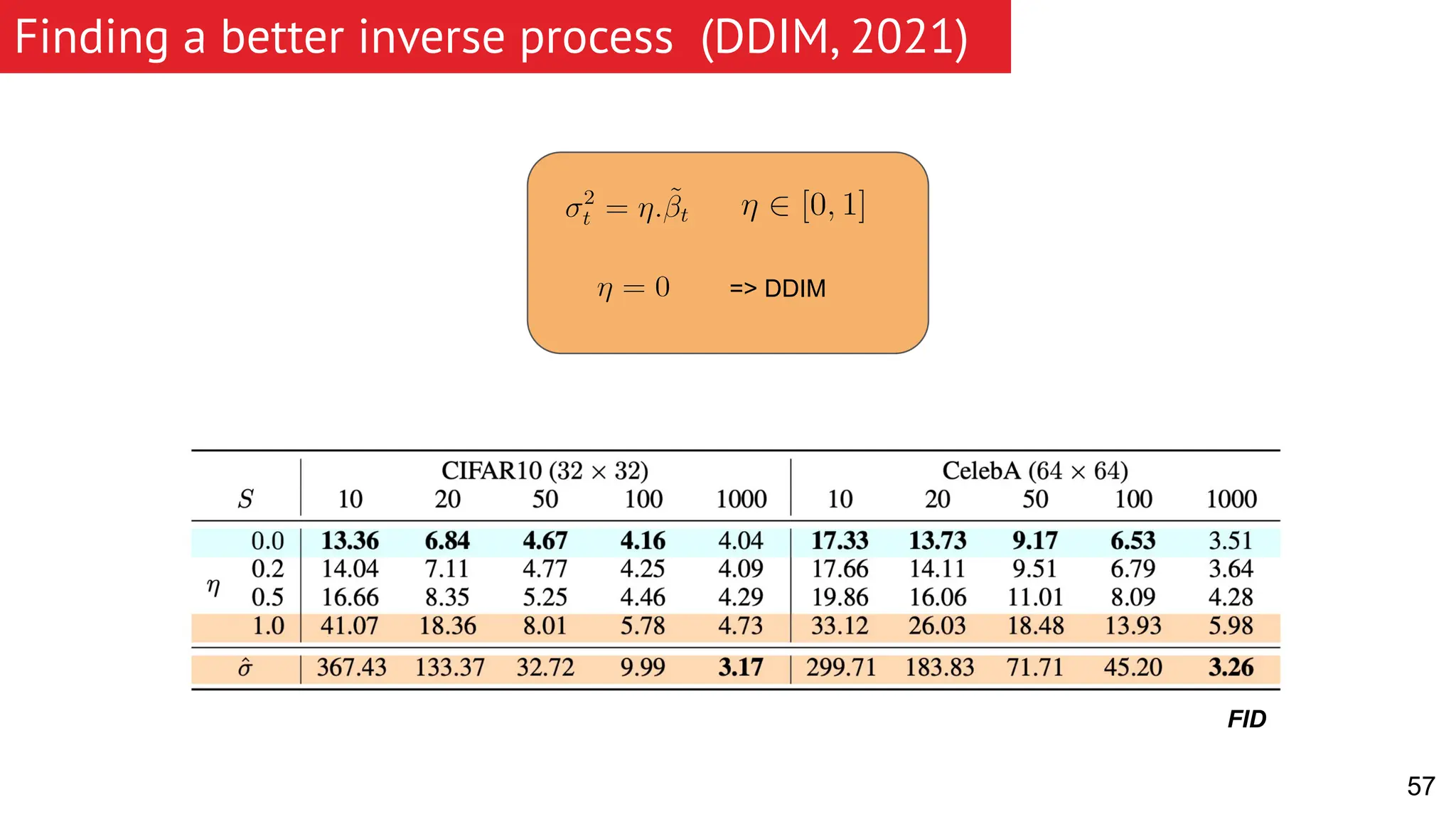

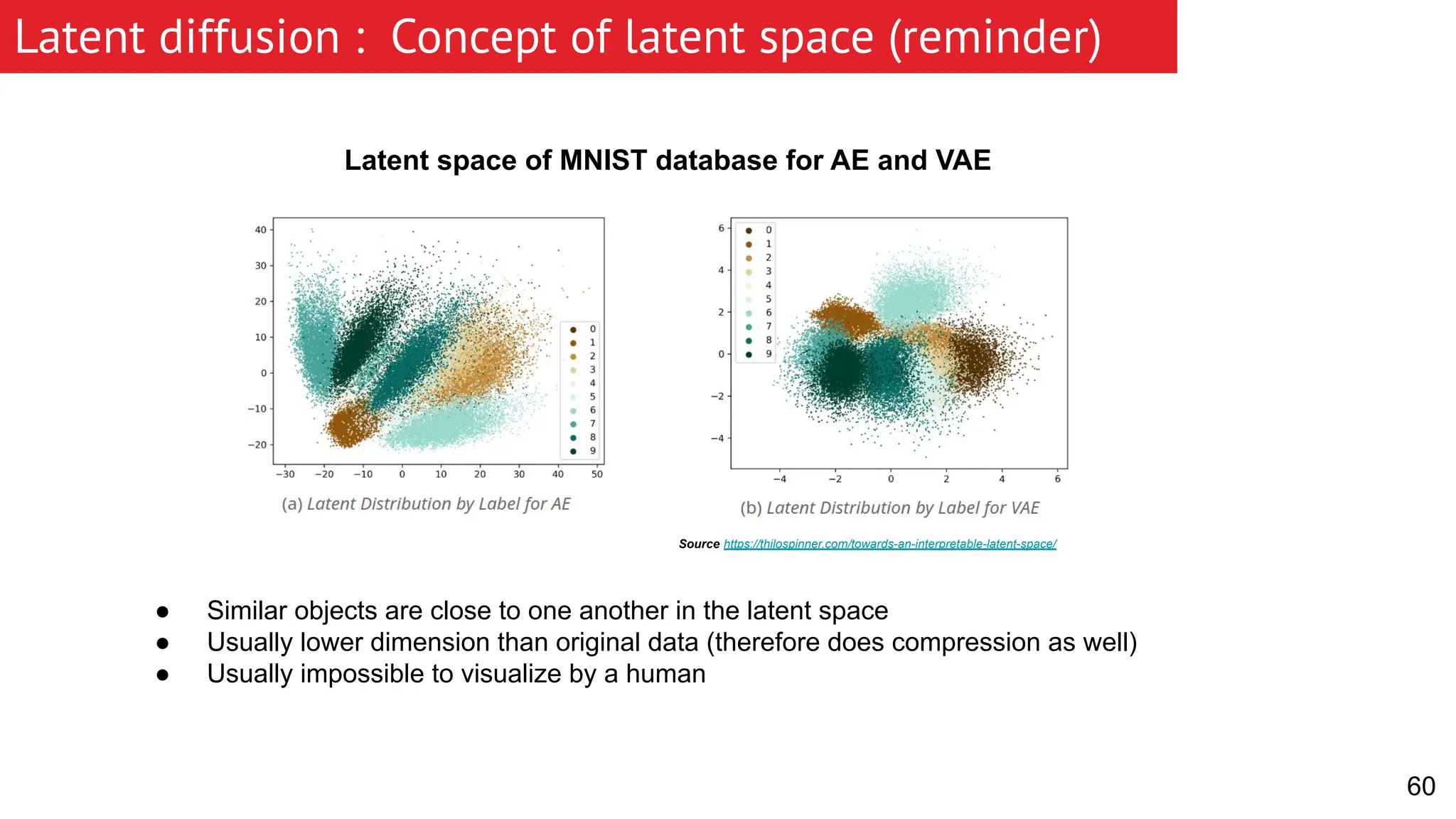

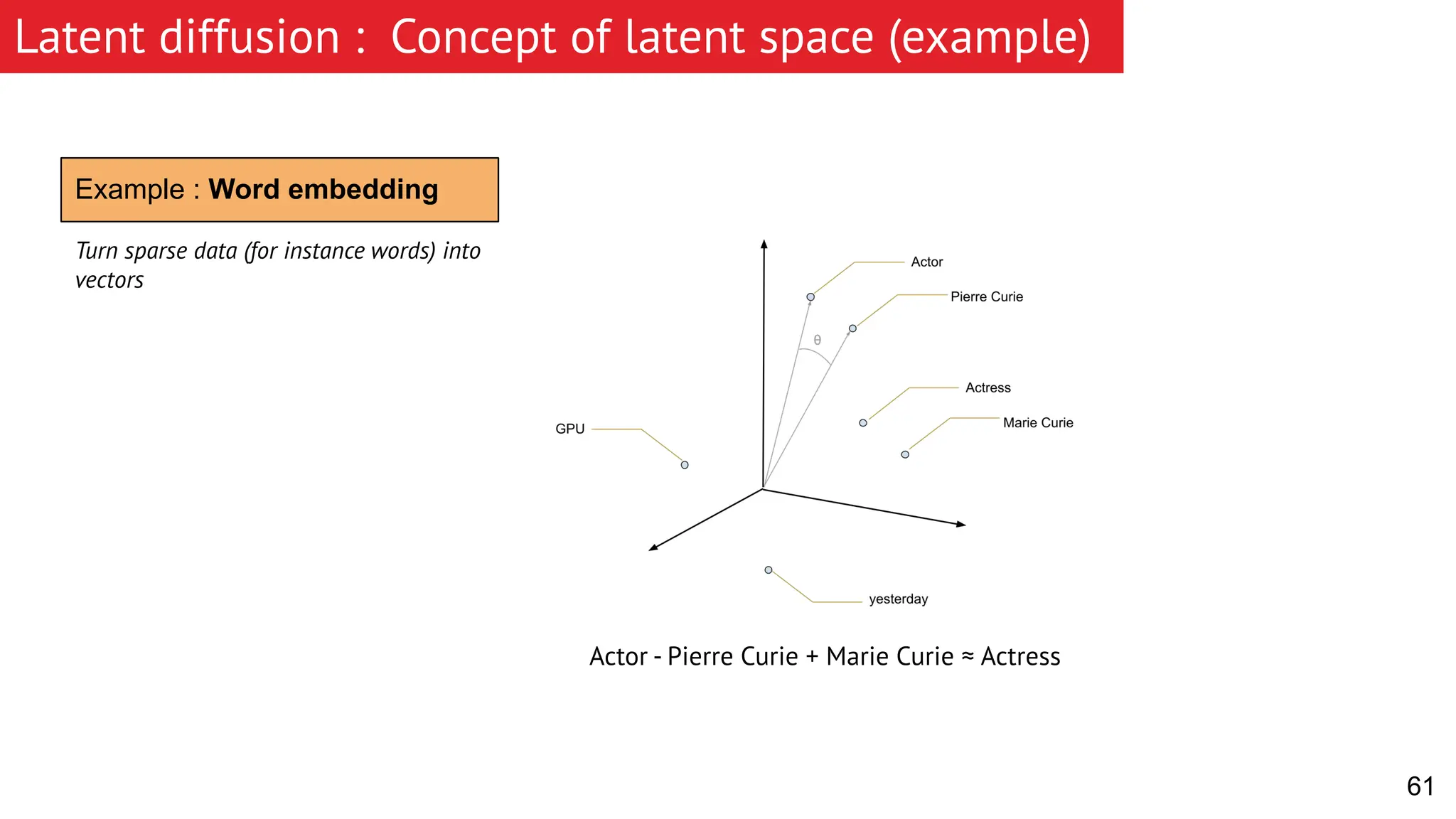

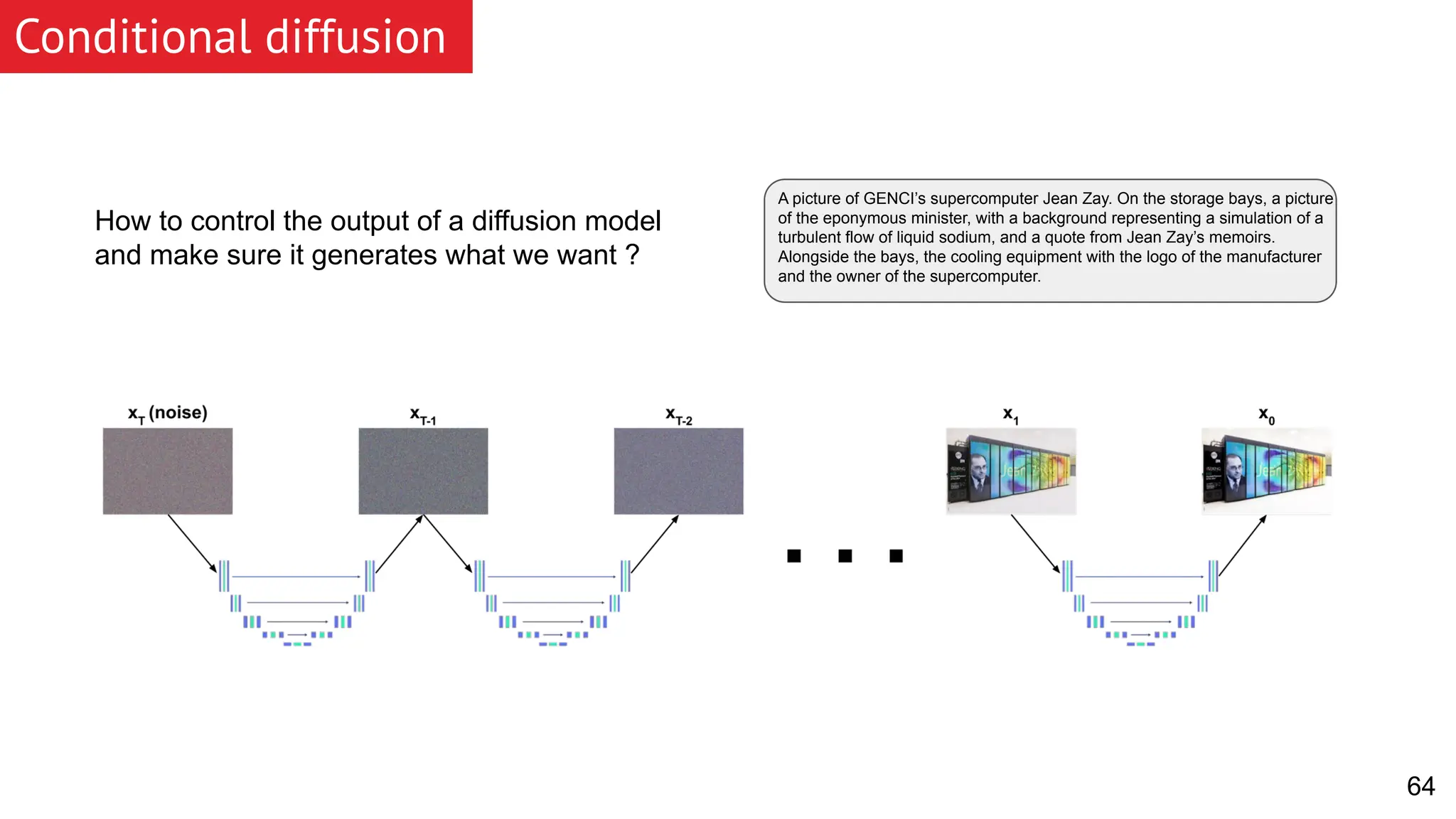

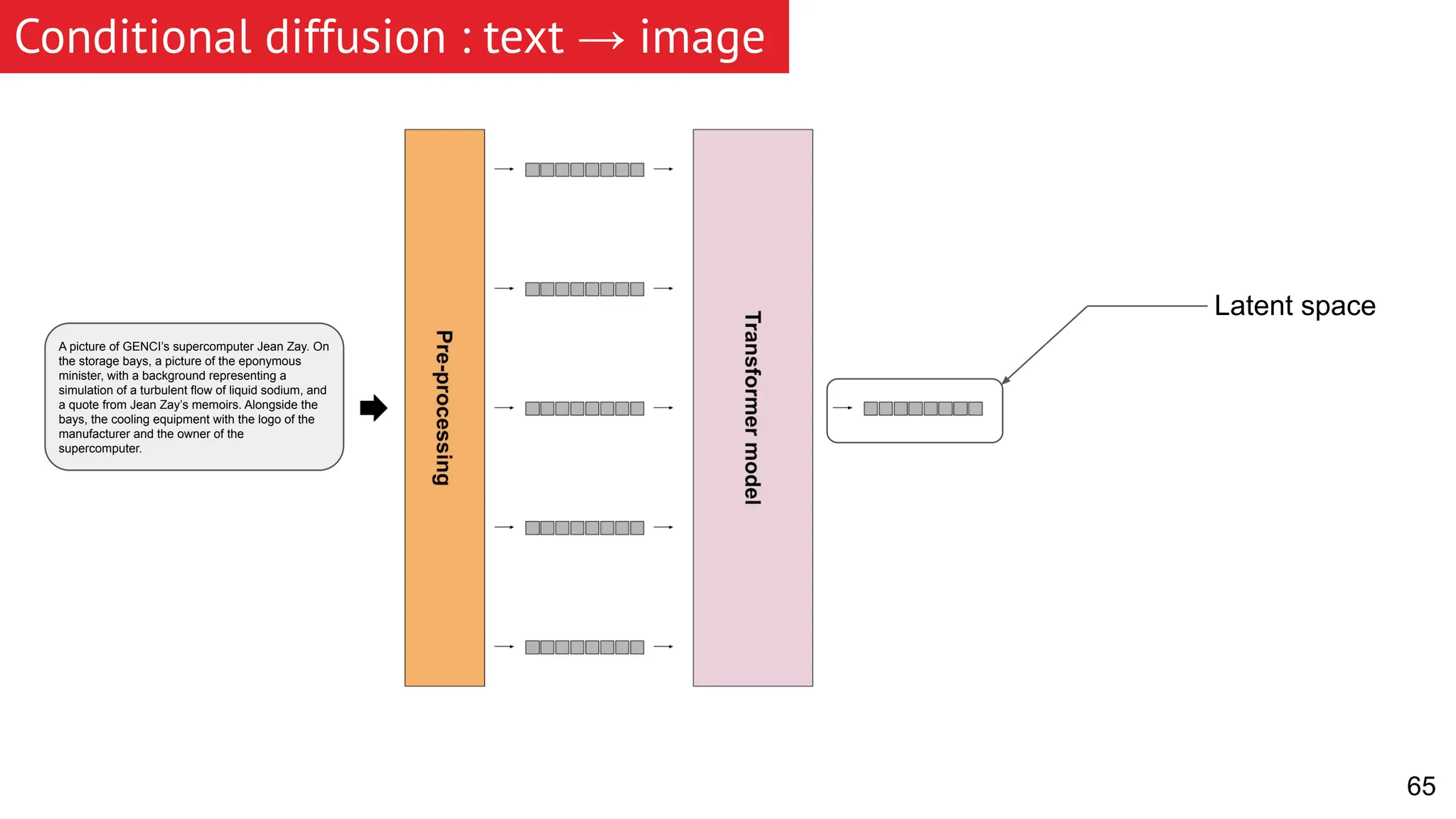

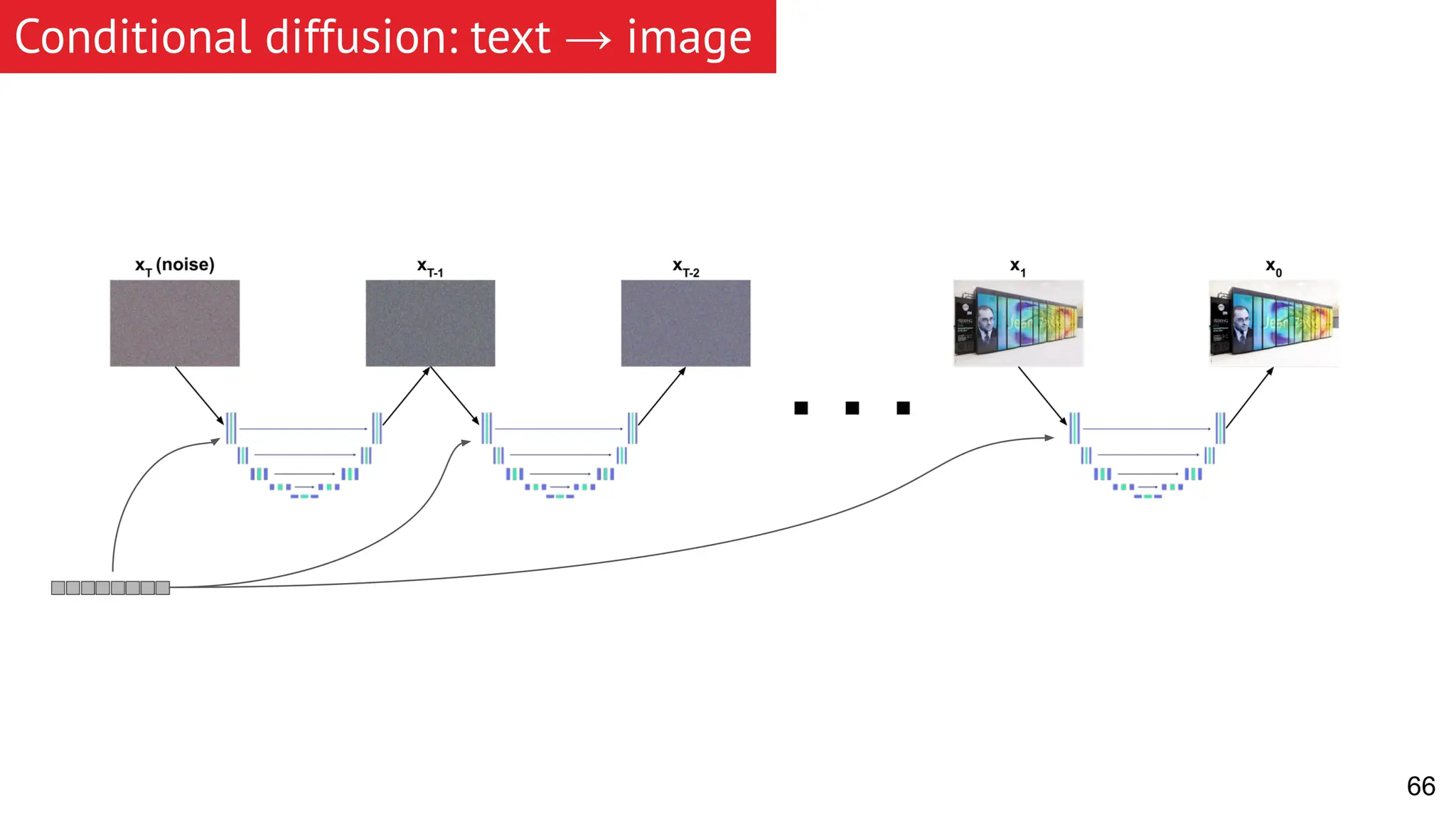

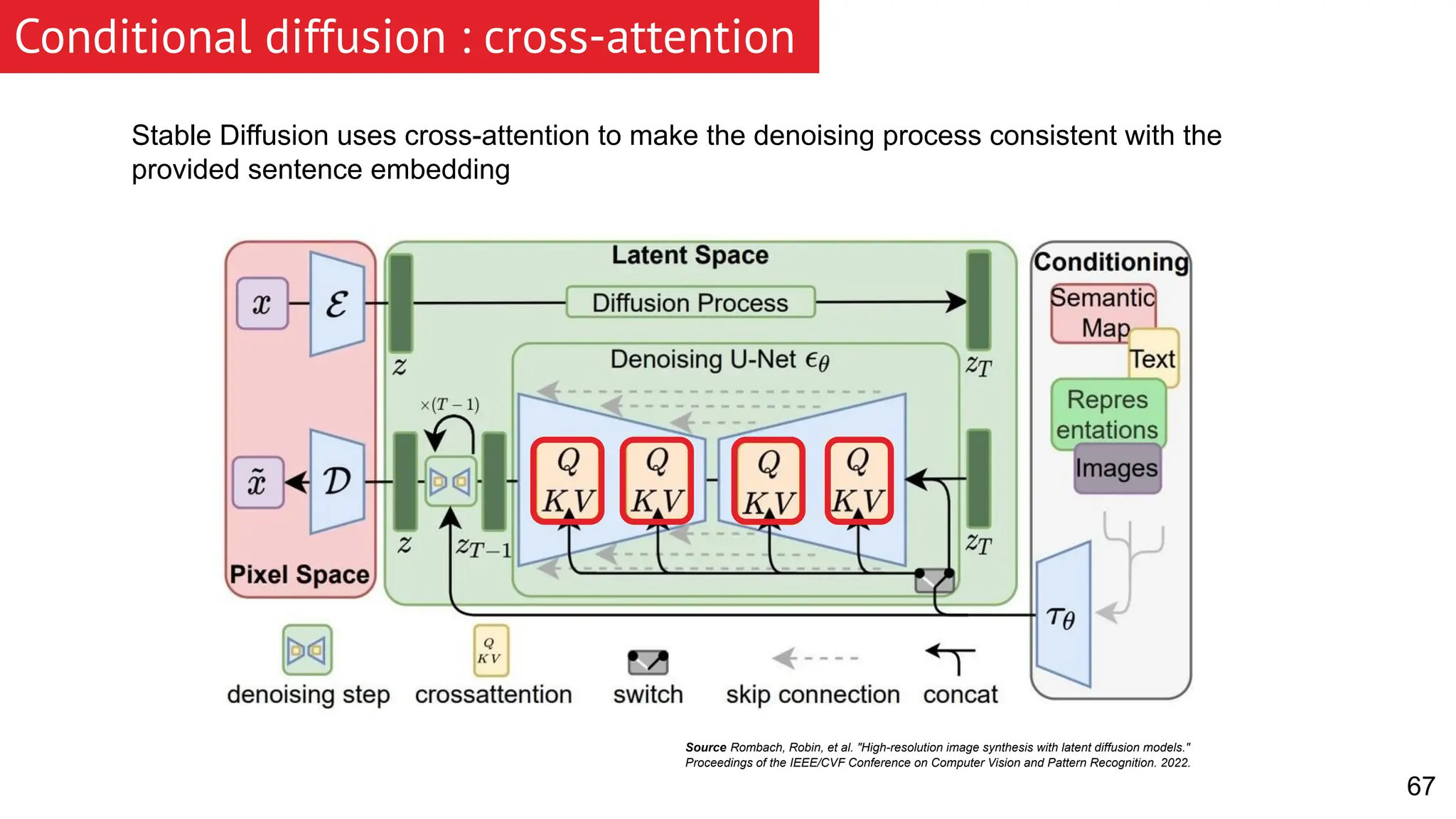

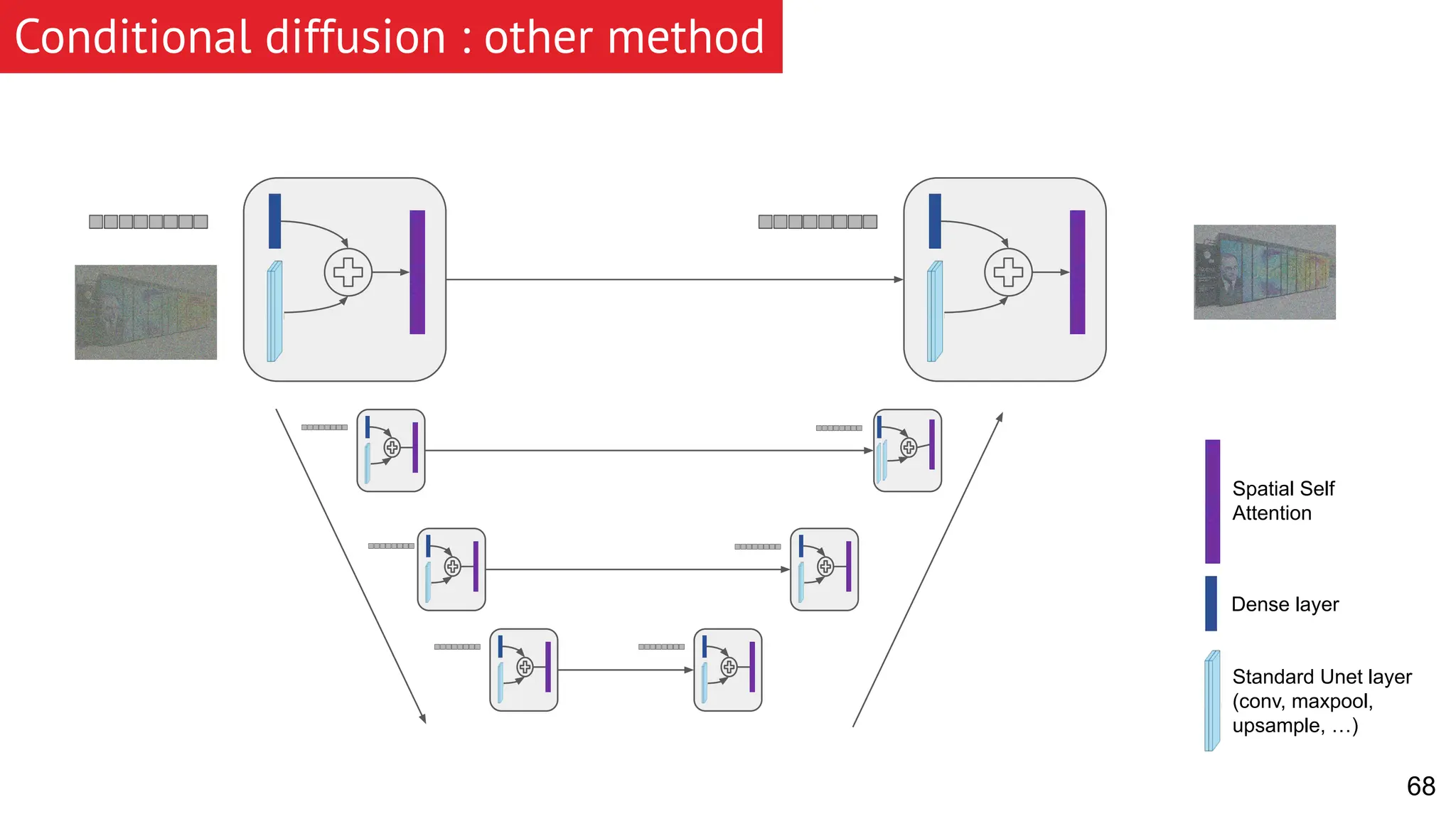

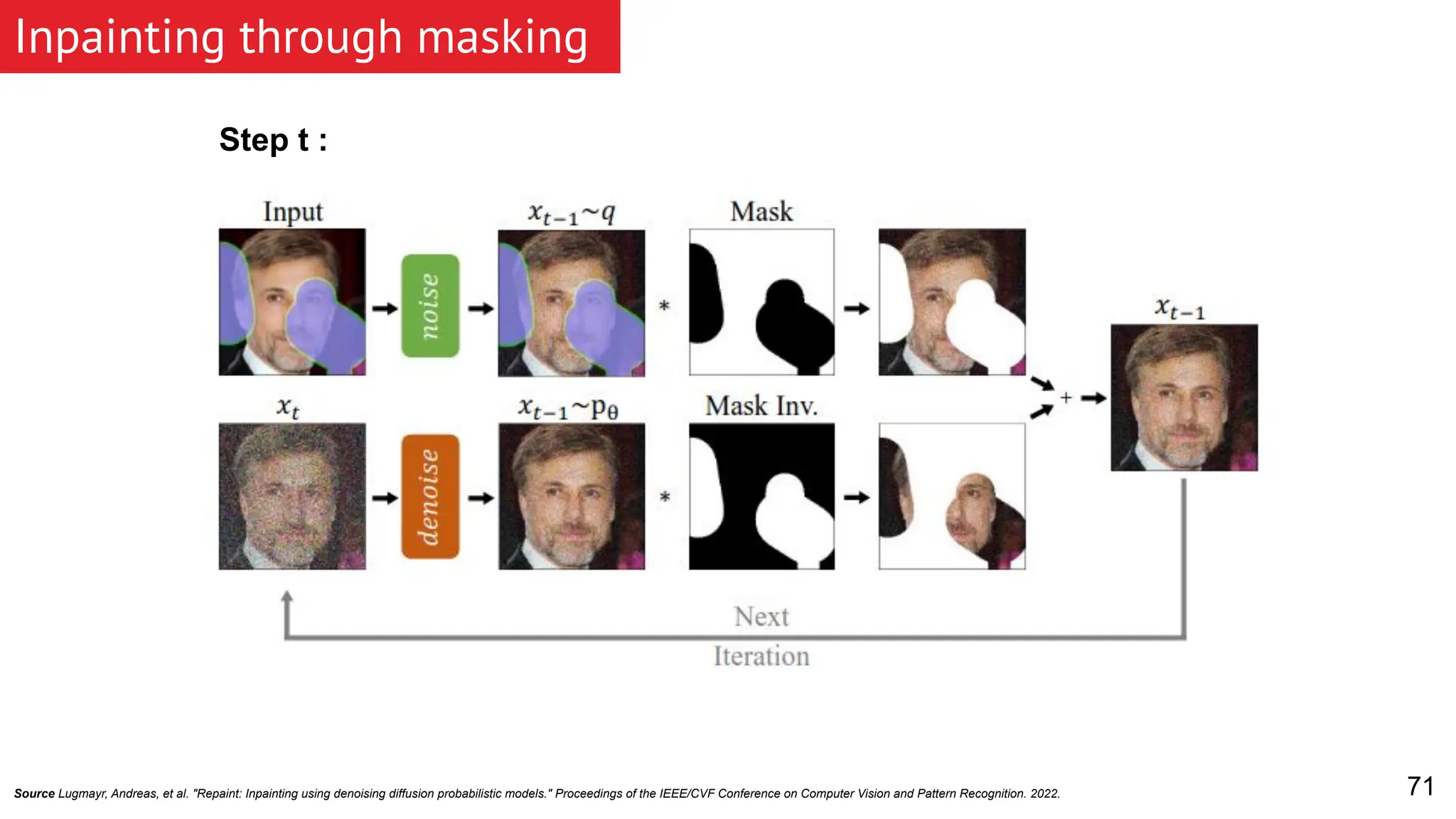

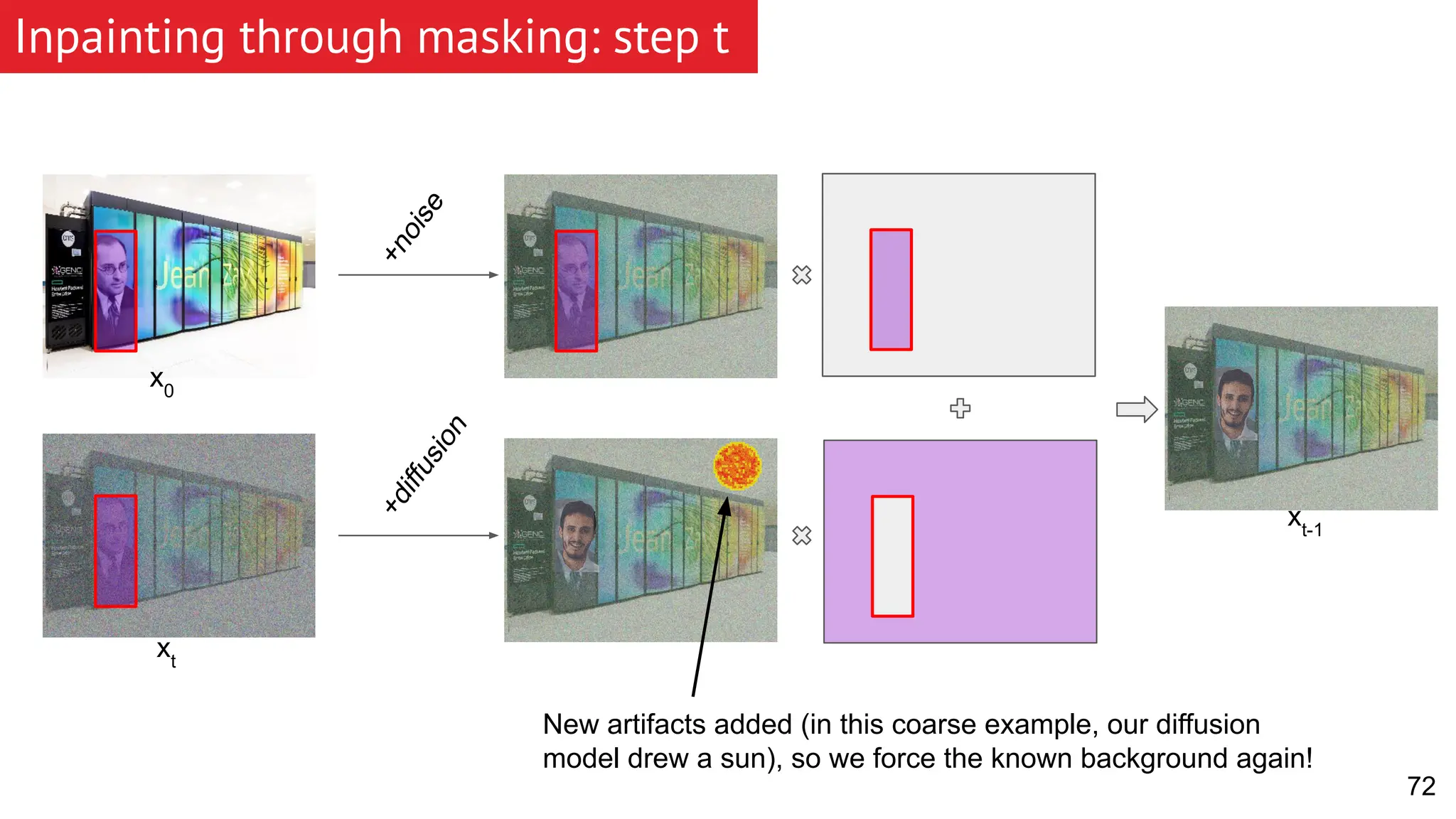

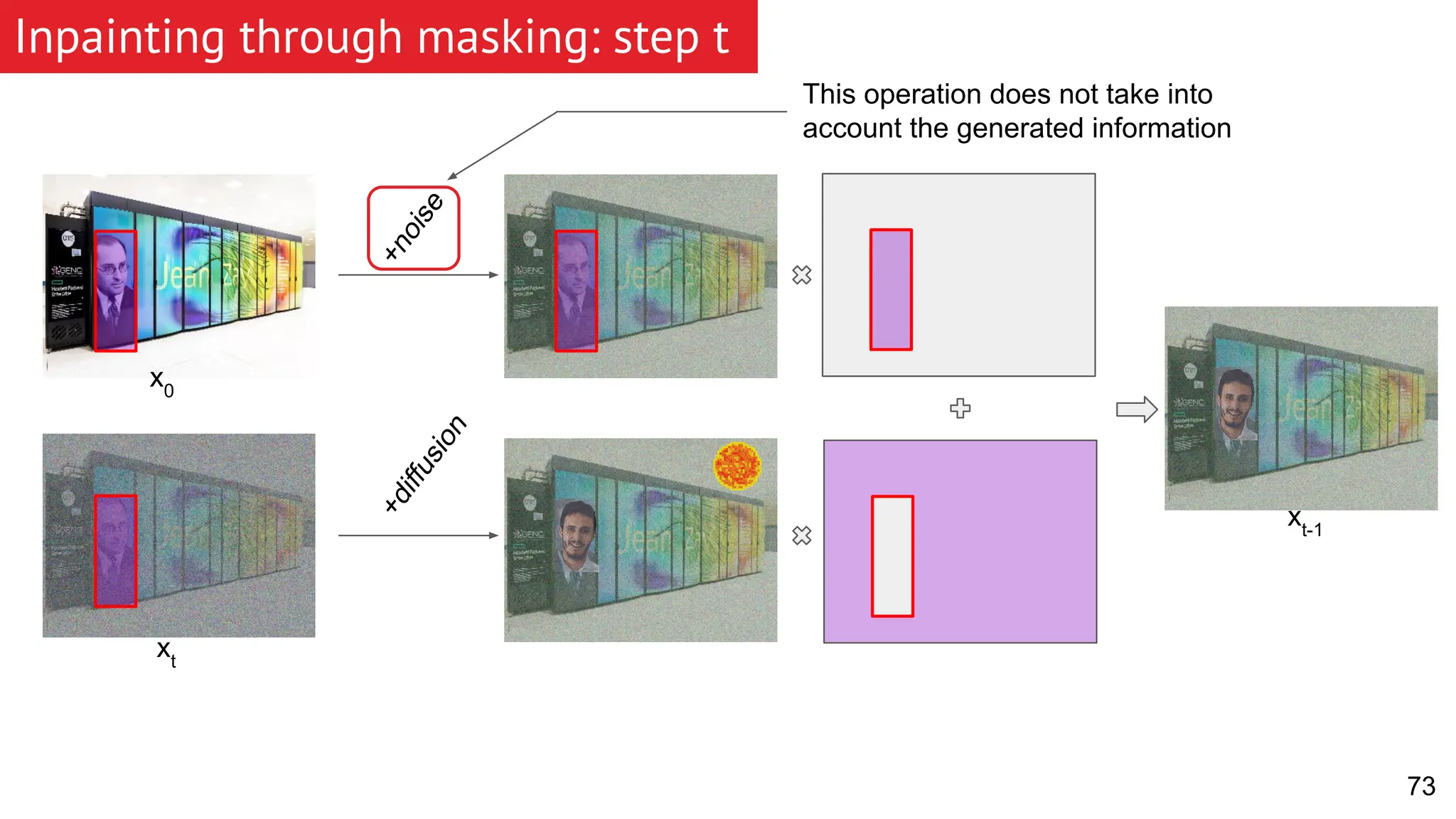

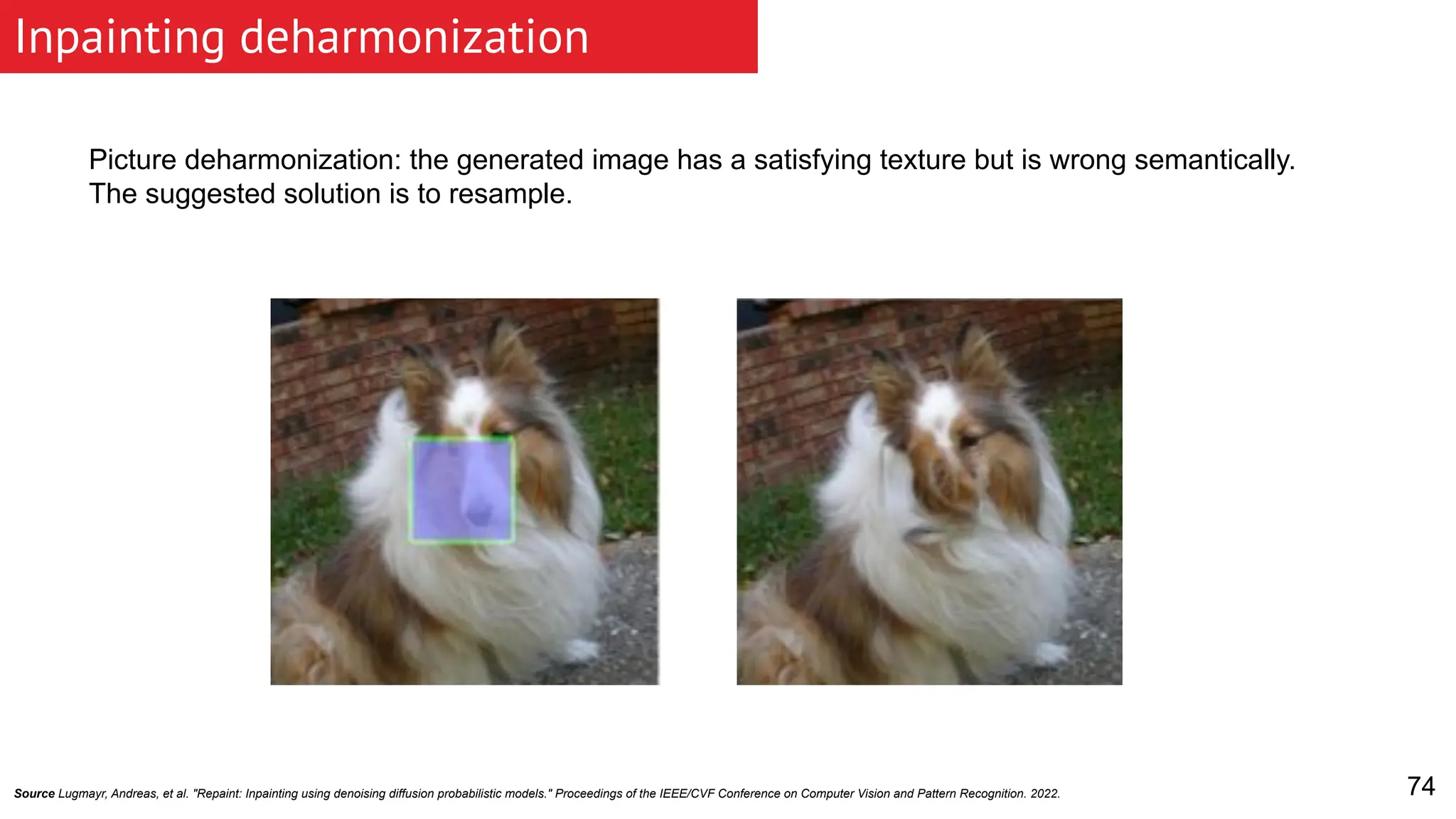

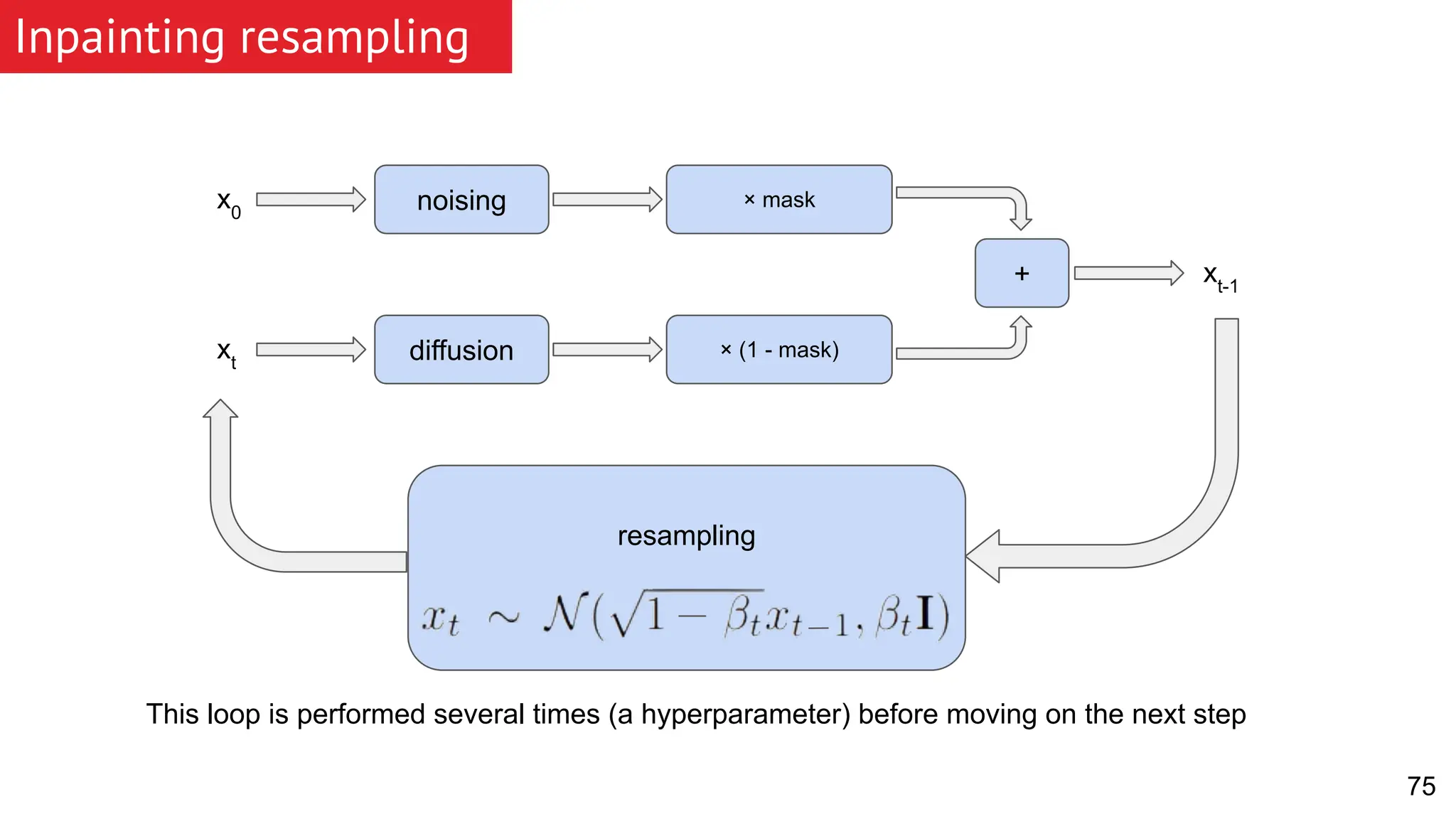

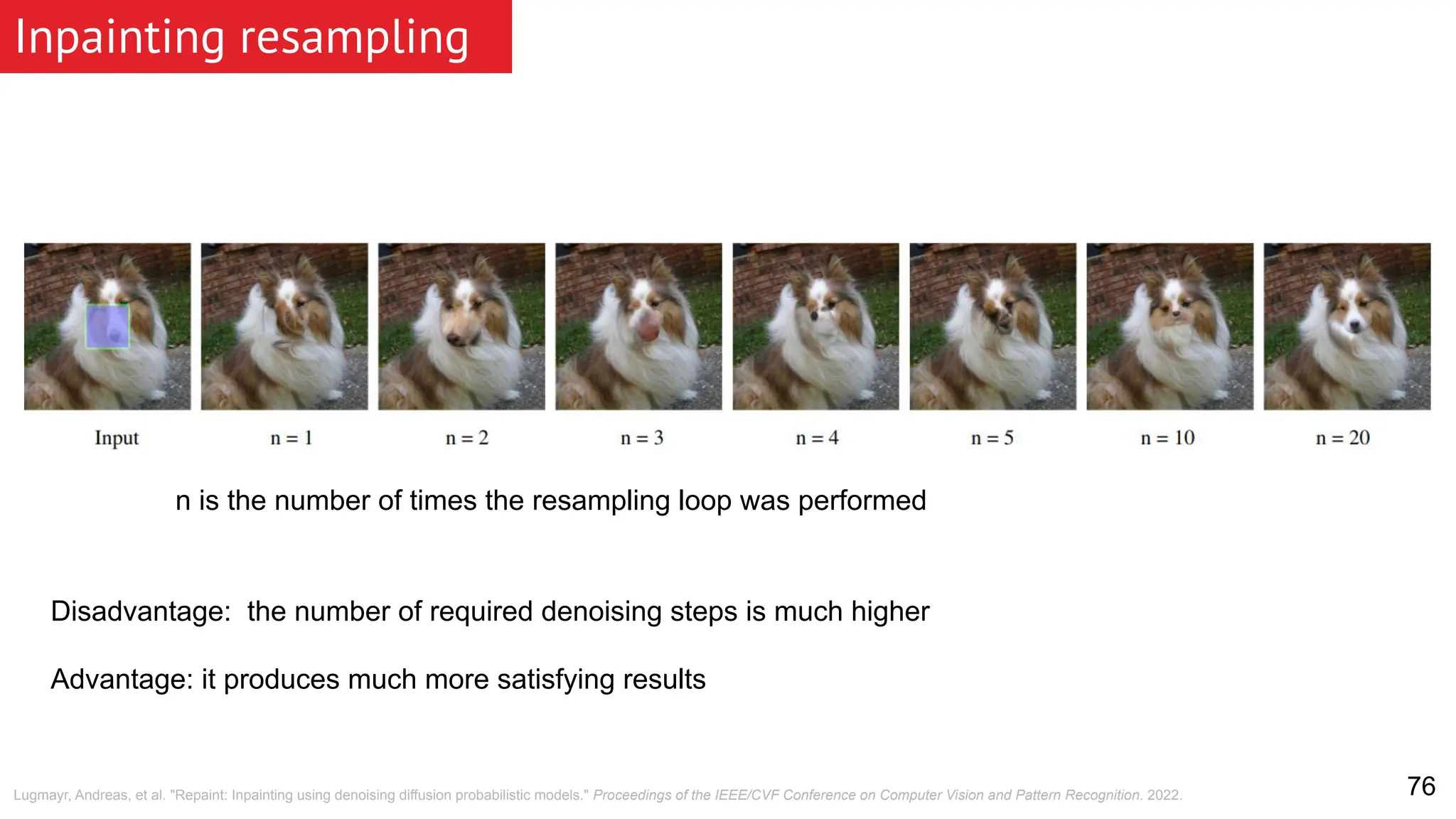

The document discusses generative models, focusing on diffusion models and their principles, including forward and reverse diffusion processes. It compares diffusion models, variational autoencoders (VAEs), and generative adversarial networks (GANs), highlighting the characteristics and applications of each. Additionally, it covers improvements in diffusion models, such as faster sampling and various tasks they can perform like inpainting and super-resolution.