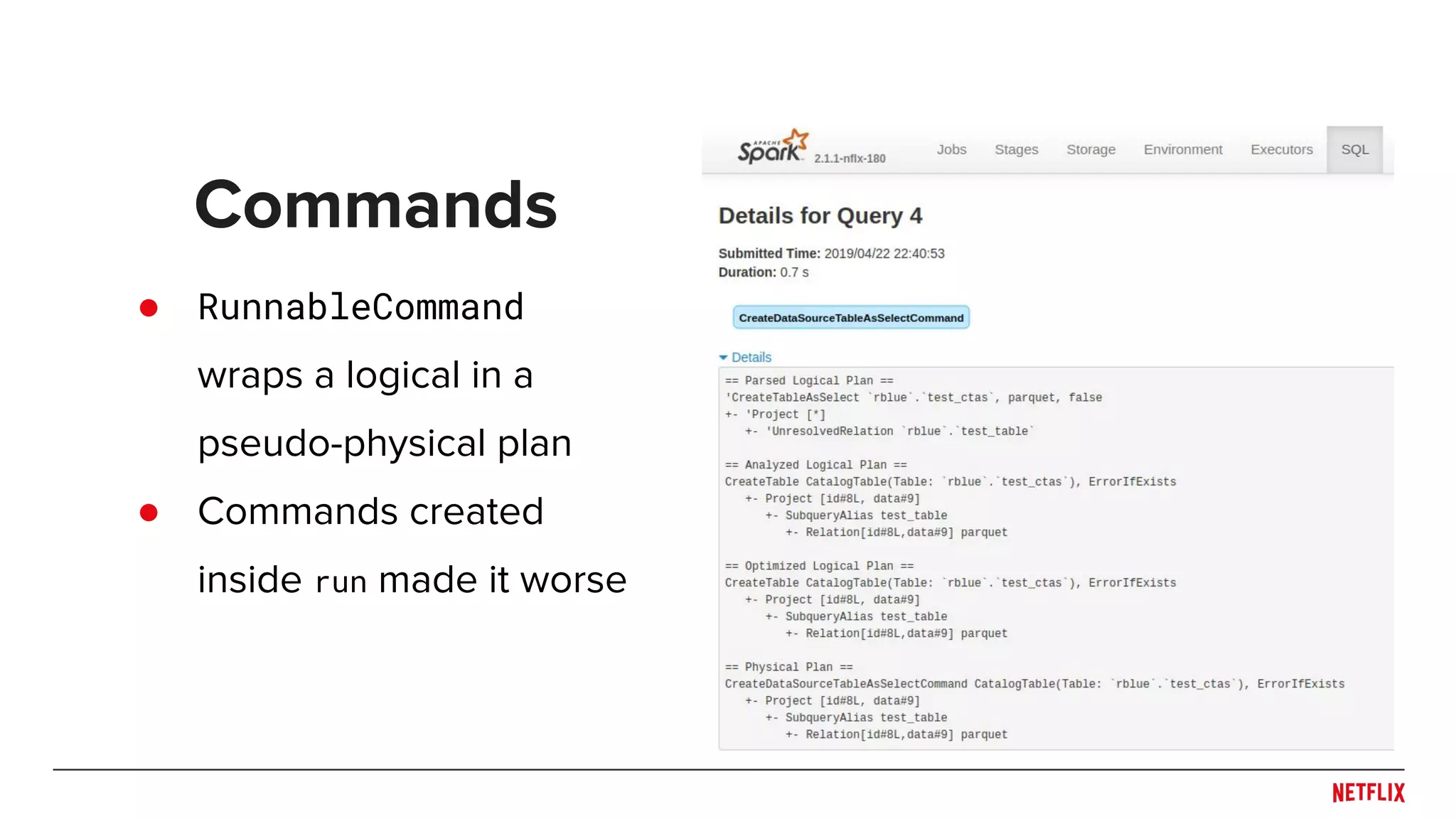

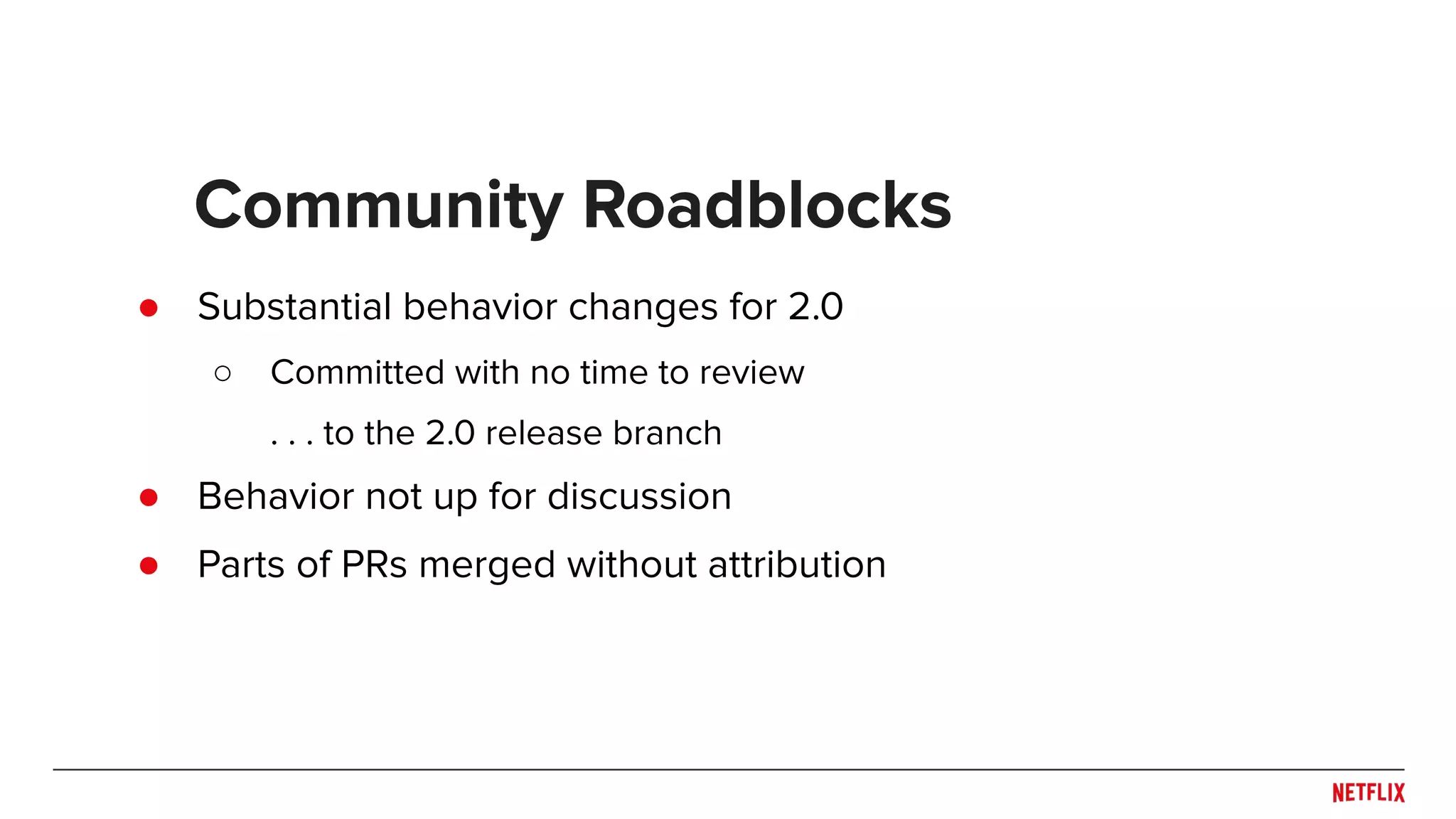

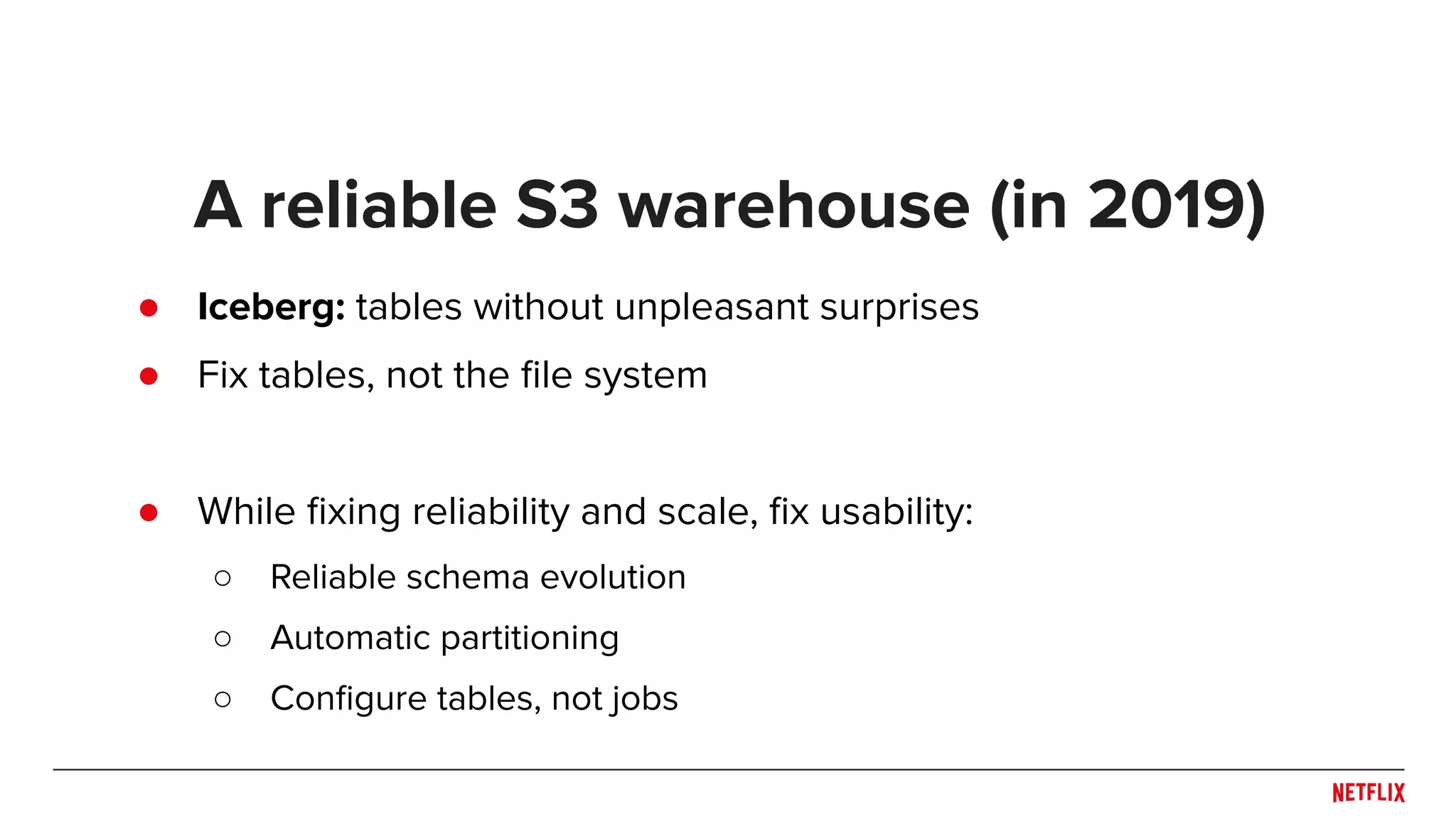

The document discusses improvements to Spark's reliability with the introduction of DataSource V2, highlighting architectural changes needed for cloud-native data warehousing at Netflix. Key topics include issues with S3 data consistency, changes in Spark versions, and the challenges of managing write paths, including the need for consistent behavior and validation across different data sources. The proposed DataSource V2 aims to standardize logical plans and improve usability by allowing clearer operations such as table creation and modification within Spark.

![“[These] should do the same thing, but as we've already published these 2 interfaces and the implementations may have different logic, we have to keep these 2 different commands.”](https://image.slidesharecdn.com/013016ryanblue-190508223636/75/Improving-Apache-Spark-s-Reliability-with-DataSourceV2-13-2048.jpg)

![“[These] should do the same thing, but as we've already published these 2 interfaces and the implementations may have different logic, we have to keep these 2 different commands.” 😕](https://image.slidesharecdn.com/013016ryanblue-190508223636/75/Improving-Apache-Spark-s-Reliability-with-DataSourceV2-14-2048.jpg)