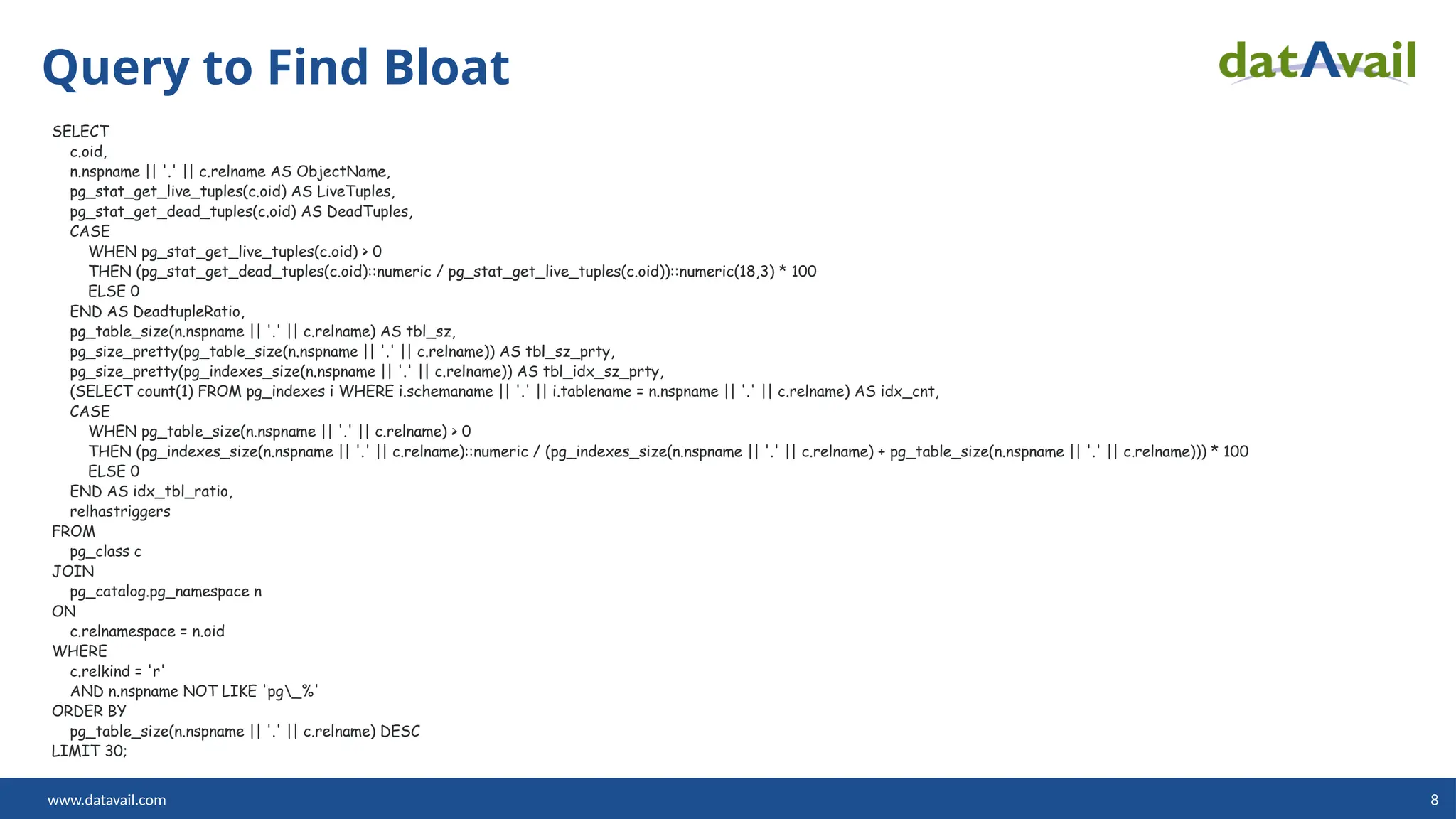

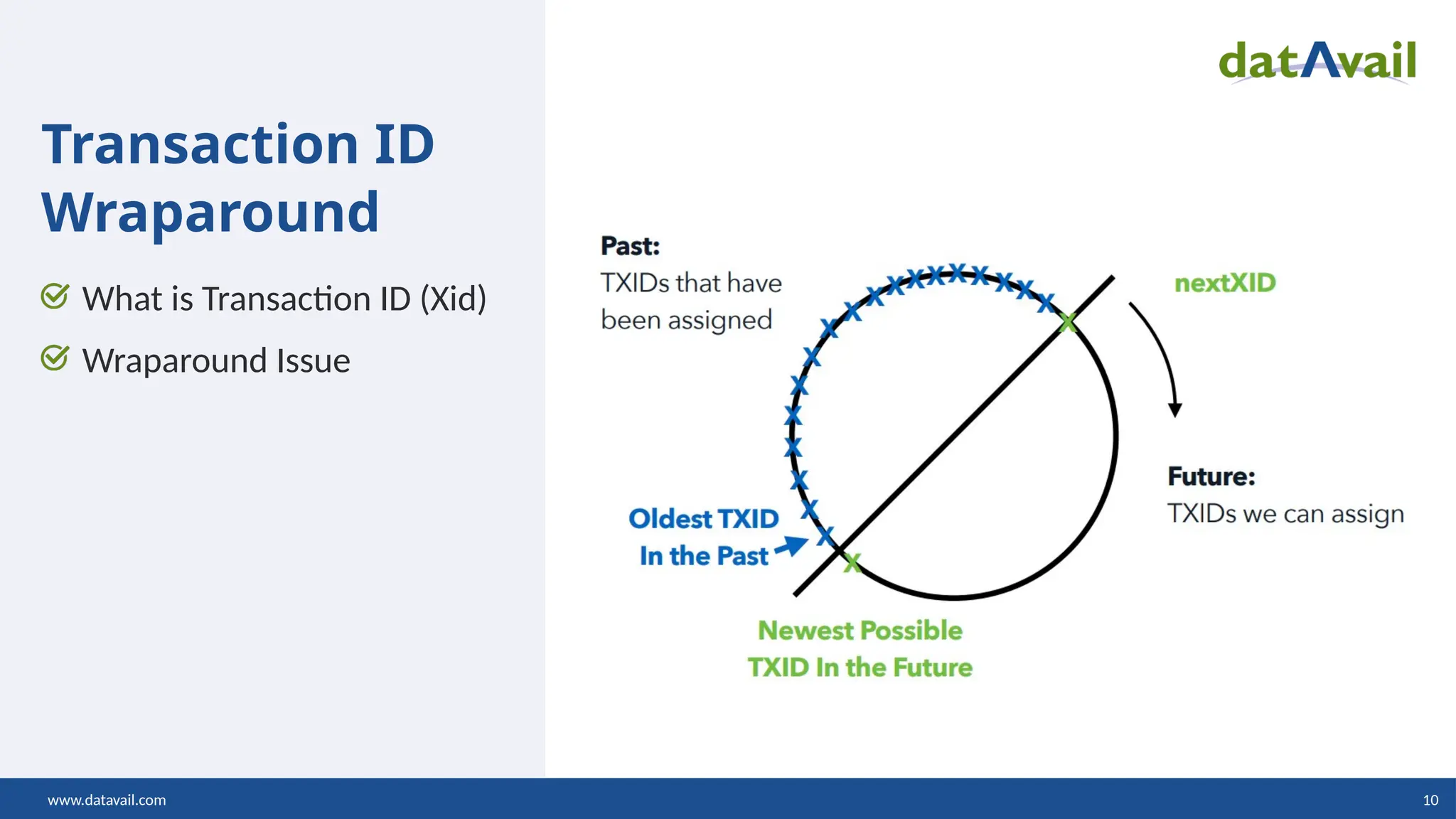

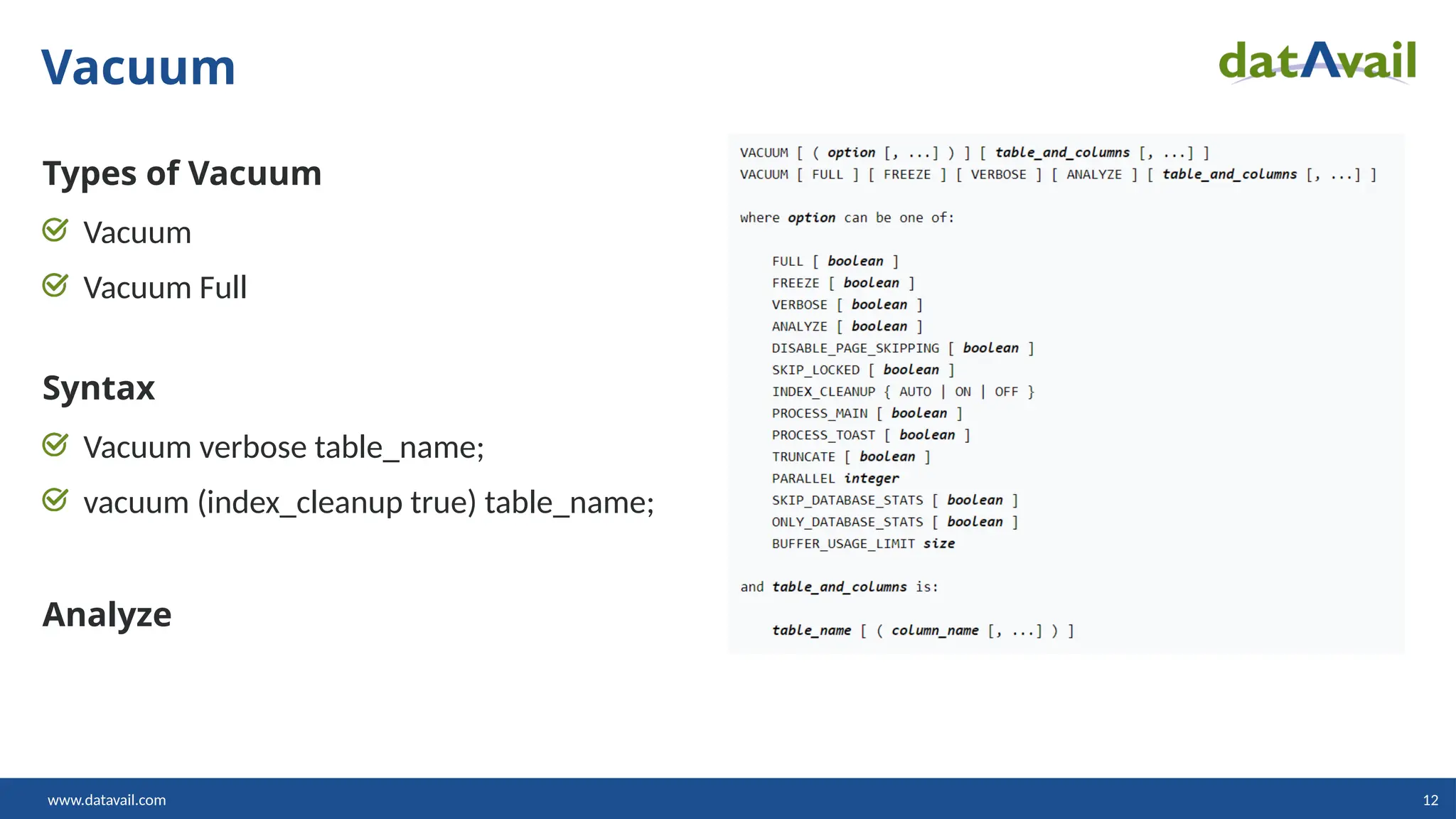

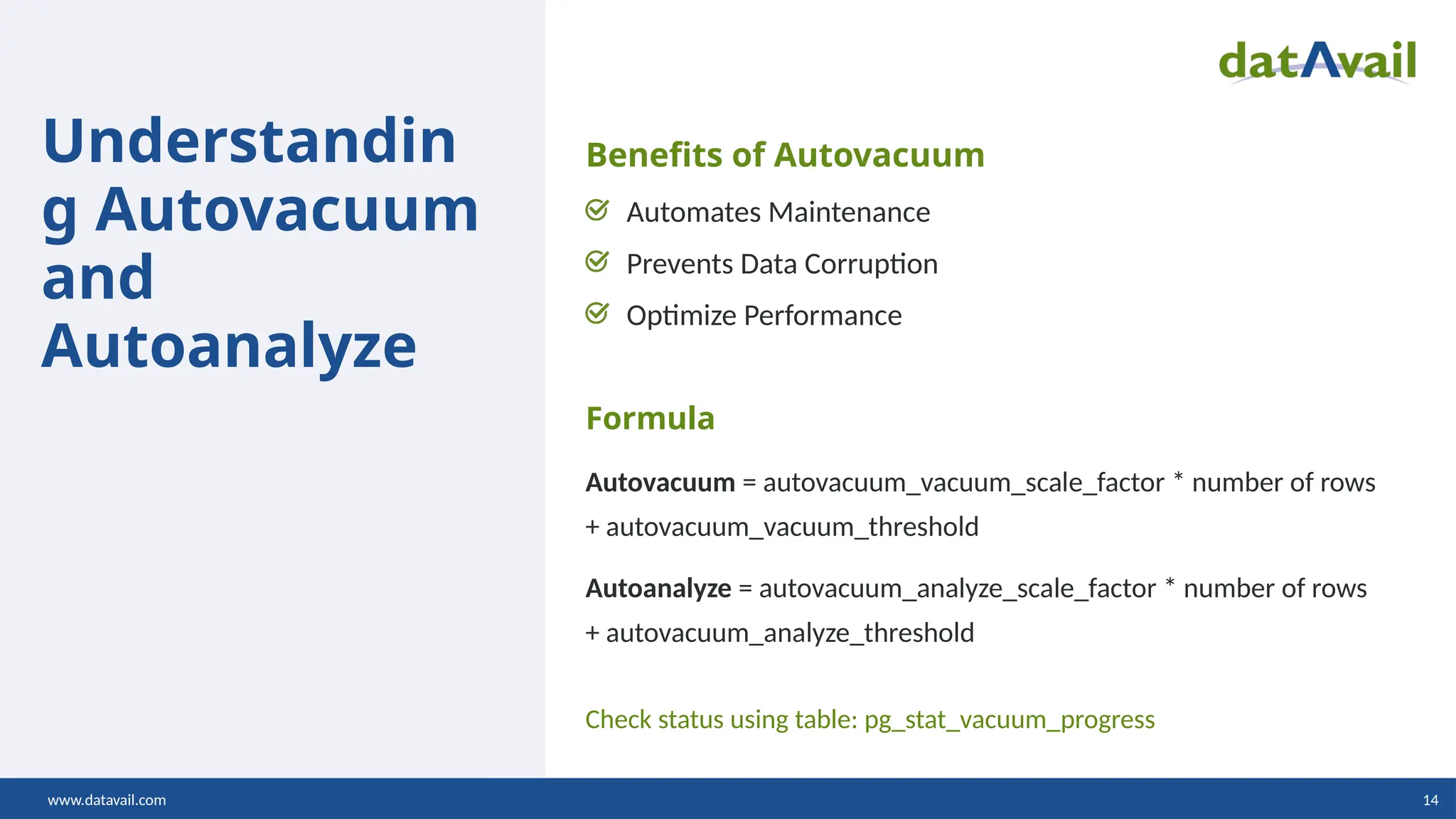

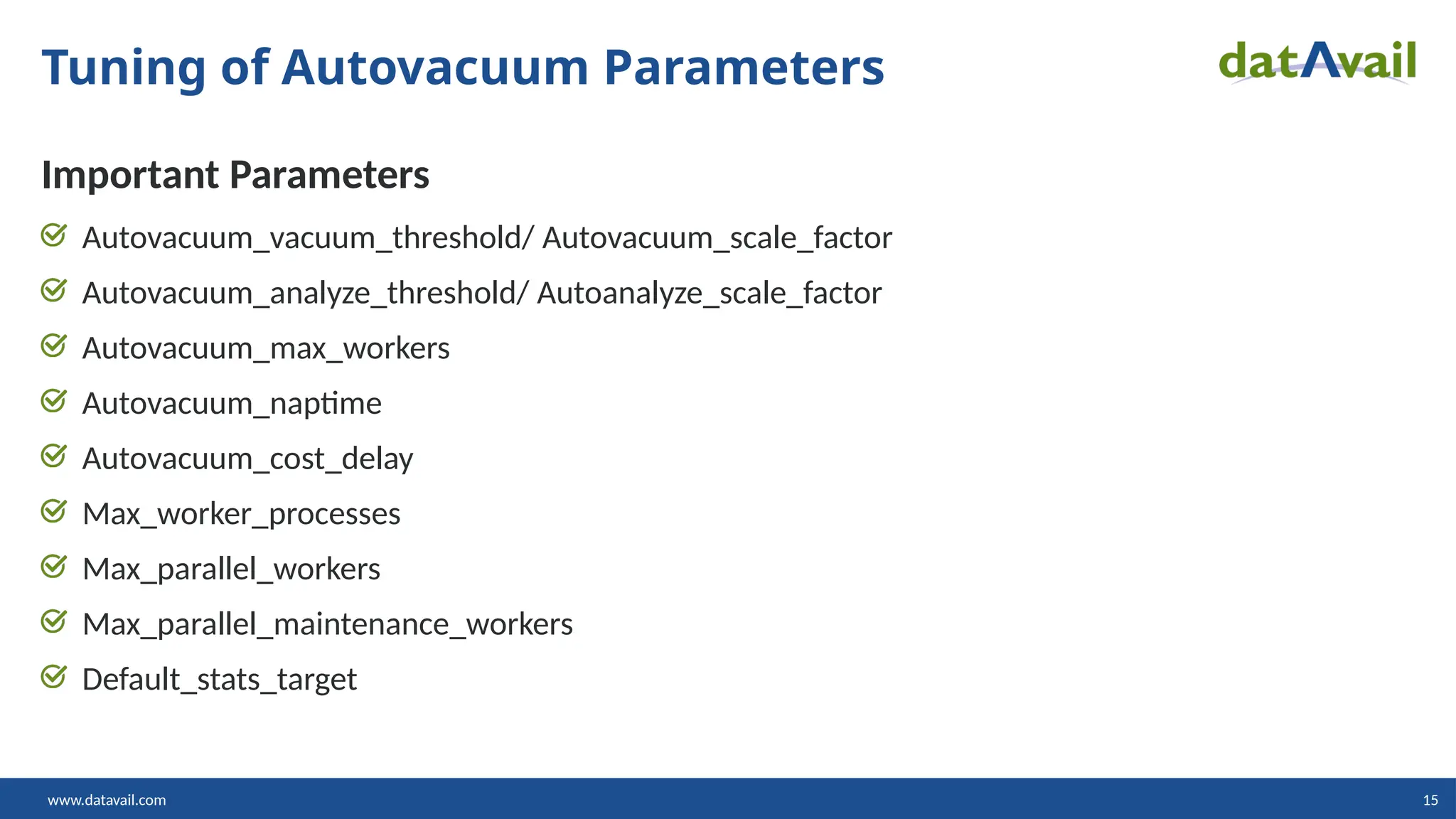

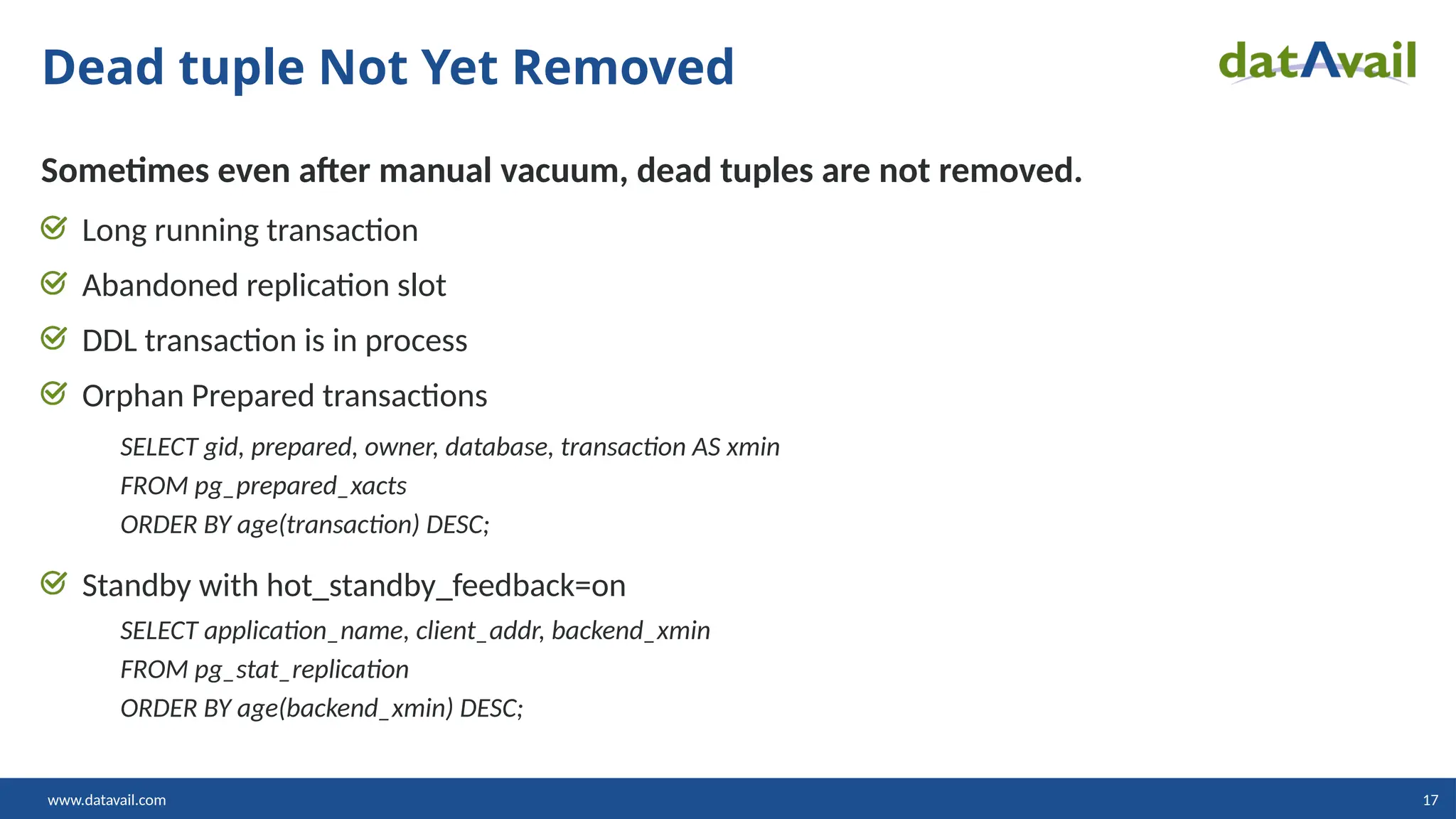

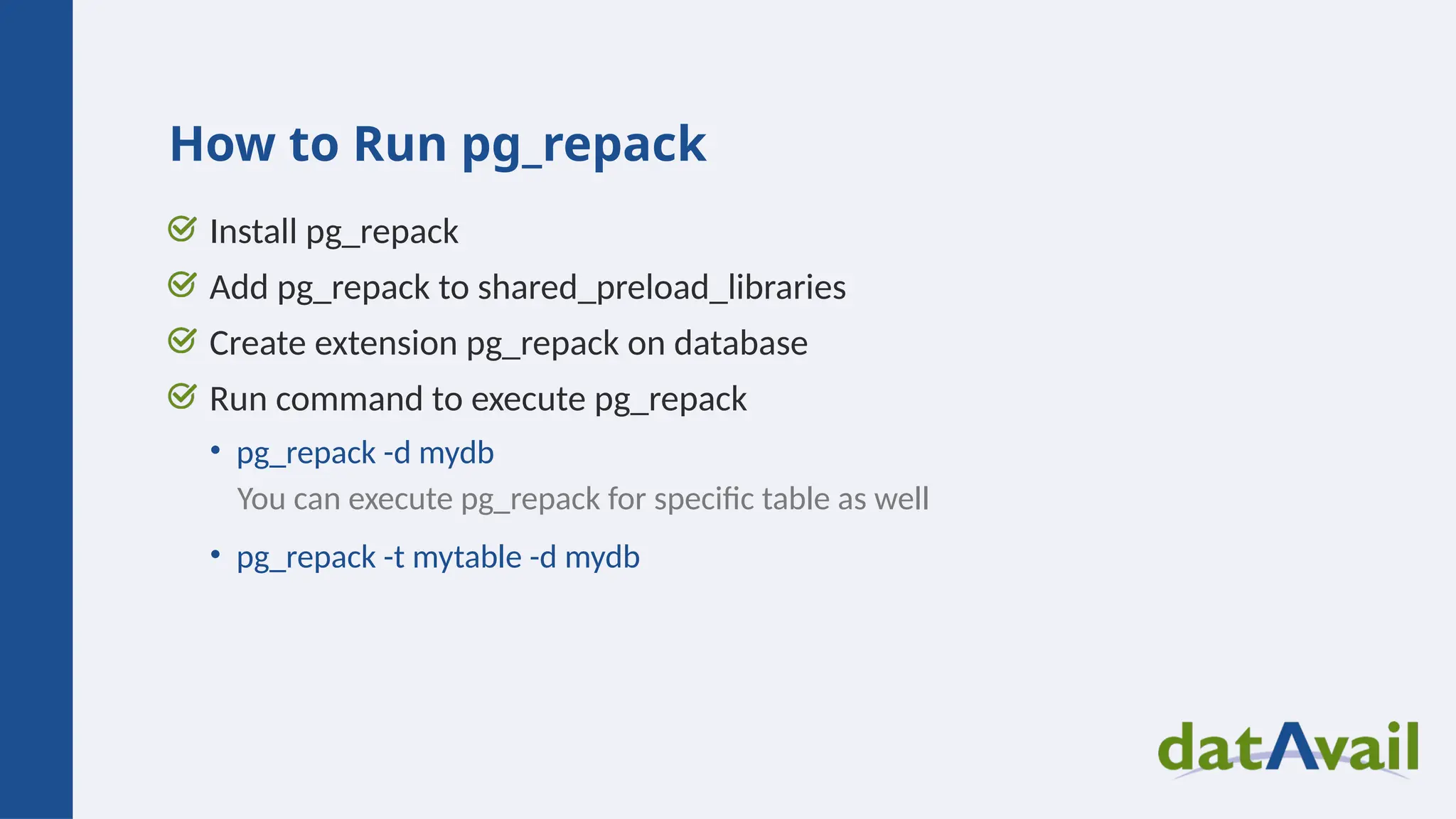

Importance of PostgreSQL Vacuum Tuning to Optimize Database Performance | Presented by Datavail

PostgreSQL is a powerful open-source database, but its performance can degrade over time without proper maintenance. One of the most critical yet often overlooked aspects of PostgreSQL performance tuning is vacuuming. In this presentation, Datavail explores how vacuum tuning, especially Autovacuum configuration, can dramatically improve query speed, reduce bloat, and maintain overall database health. Whether you're running PostgreSQL on-premises or in the cloud, understanding and optimizing vacuum processes is essential for long-term scalability and reliability.

🔗 View the full presentation here: https://www.datavail.com/resources/importance-postgresql-vacuum-tuning-optimize-database-performance/

Key Highlights

- What is Vacuuming? Learn how PostgreSQL clears out dead tuples and refreshes query planner statistics to maintain performance.
- Autovacuum Explained: Understand how PostgreSQL's built-in Autovacuum works and why tuning its parameters is crucial.
- Performance Impact: Discover how improper vacuum settings can lead to table bloat, slow queries, and inefficient indexing.
- Advanced Techniques:
  - When to use VACUUM FULL, REINDEX, and pg_repack
  - How to monitor and adjust Autovacuum thresholds
  - Real-world examples of tuning for high-volume workloads
- Best Practices: Get actionable insights from Datavail's PostgreSQL experts on maintaining optimal performance in production environments.

Why Watch This Session?

This presentation is ideal for:

- PostgreSQL DBAs and DevOps engineers
- Cloud architects managing PostgreSQL on AWS, Azure, or GCP
- Developers working with high-transaction applications
- IT leaders seeking to reduce downtime and improve database efficiency