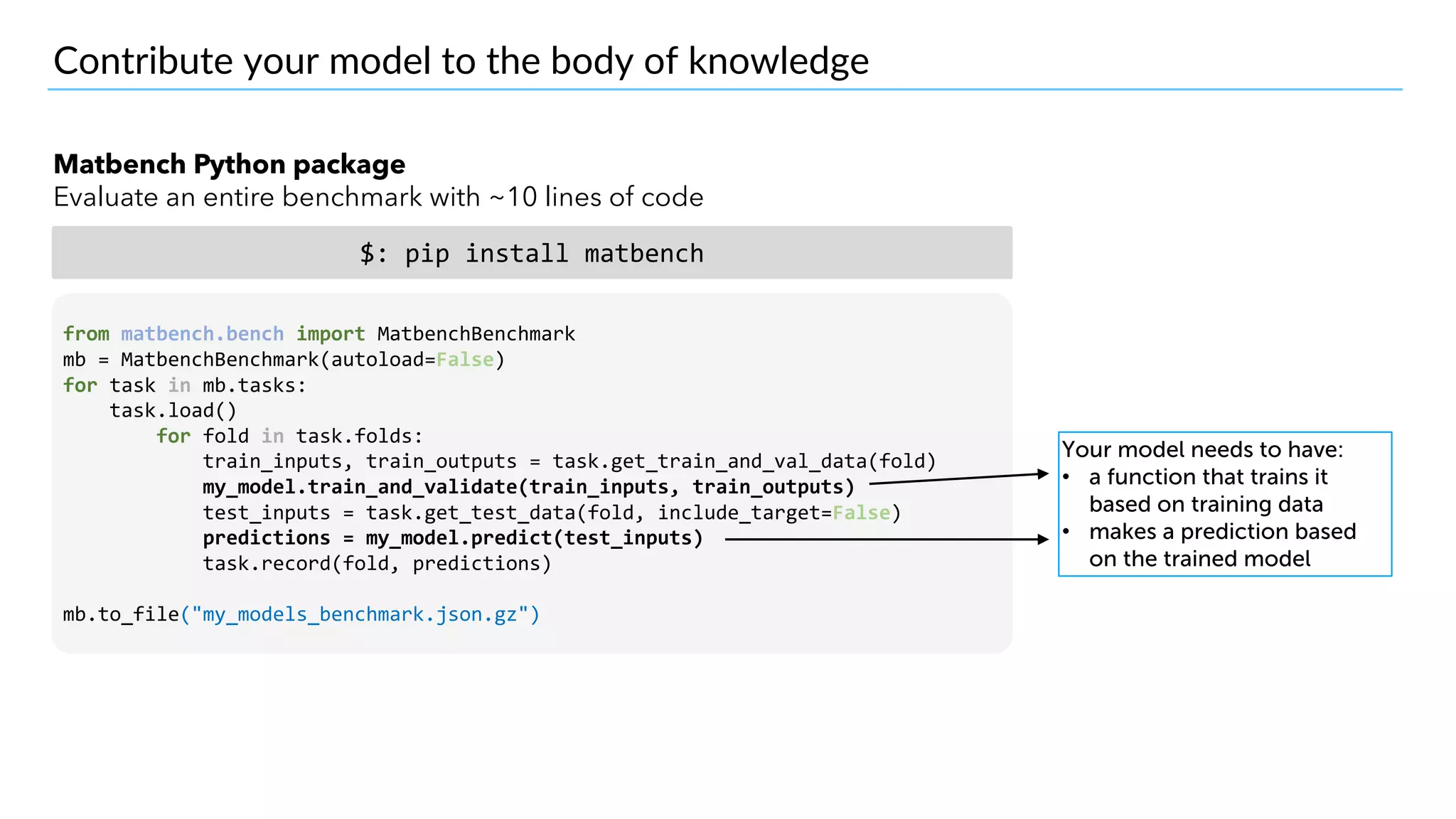

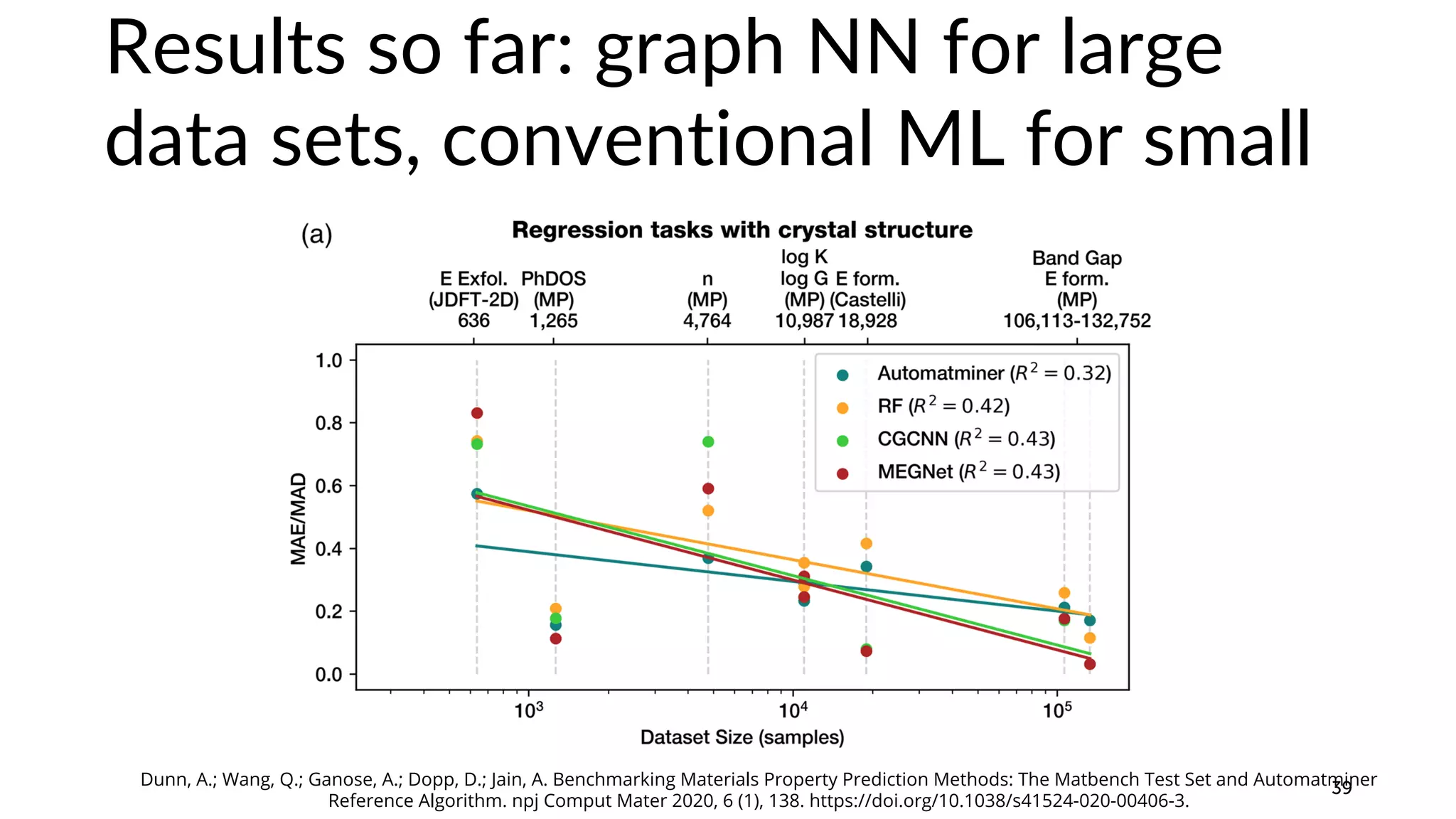

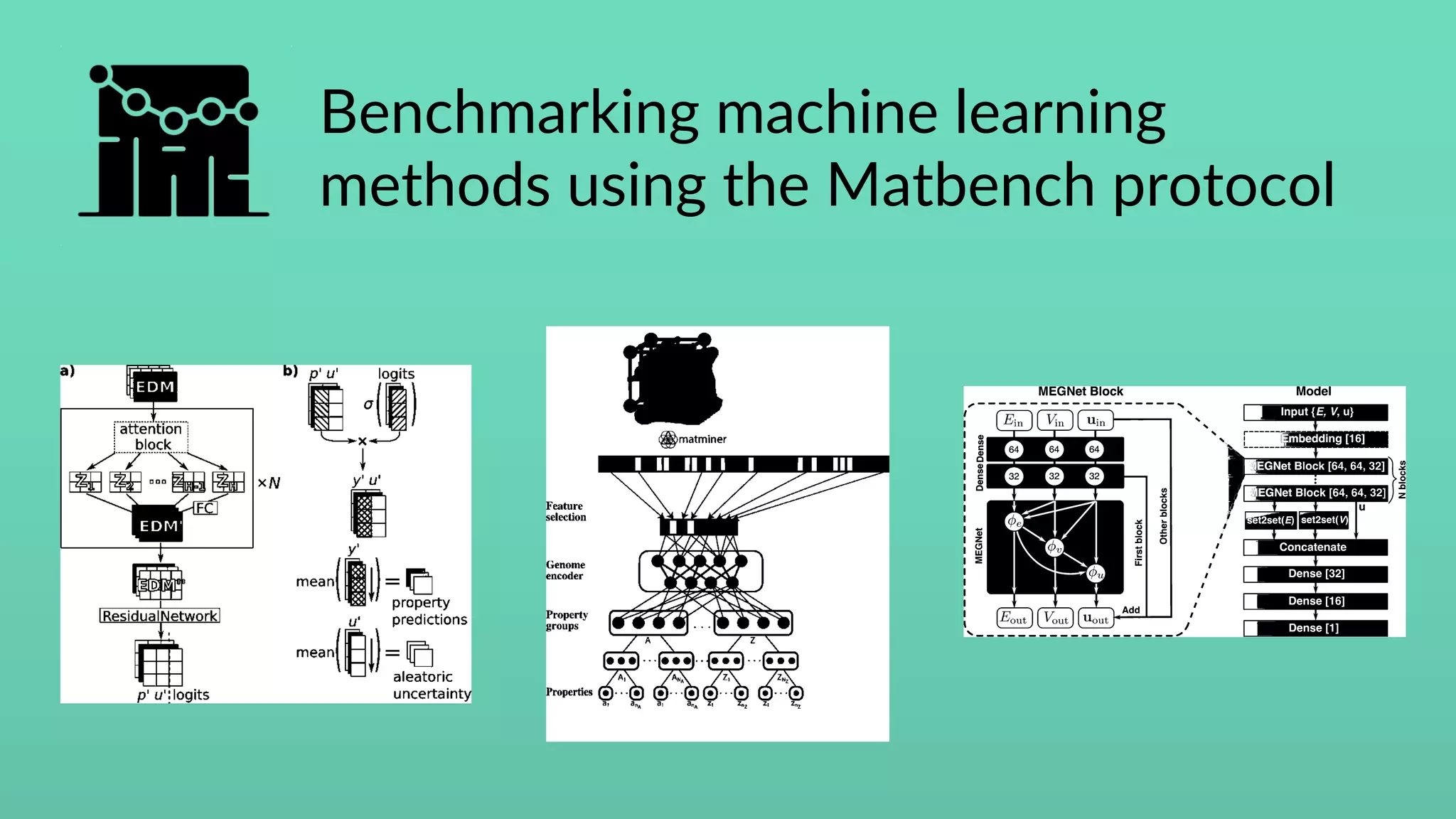

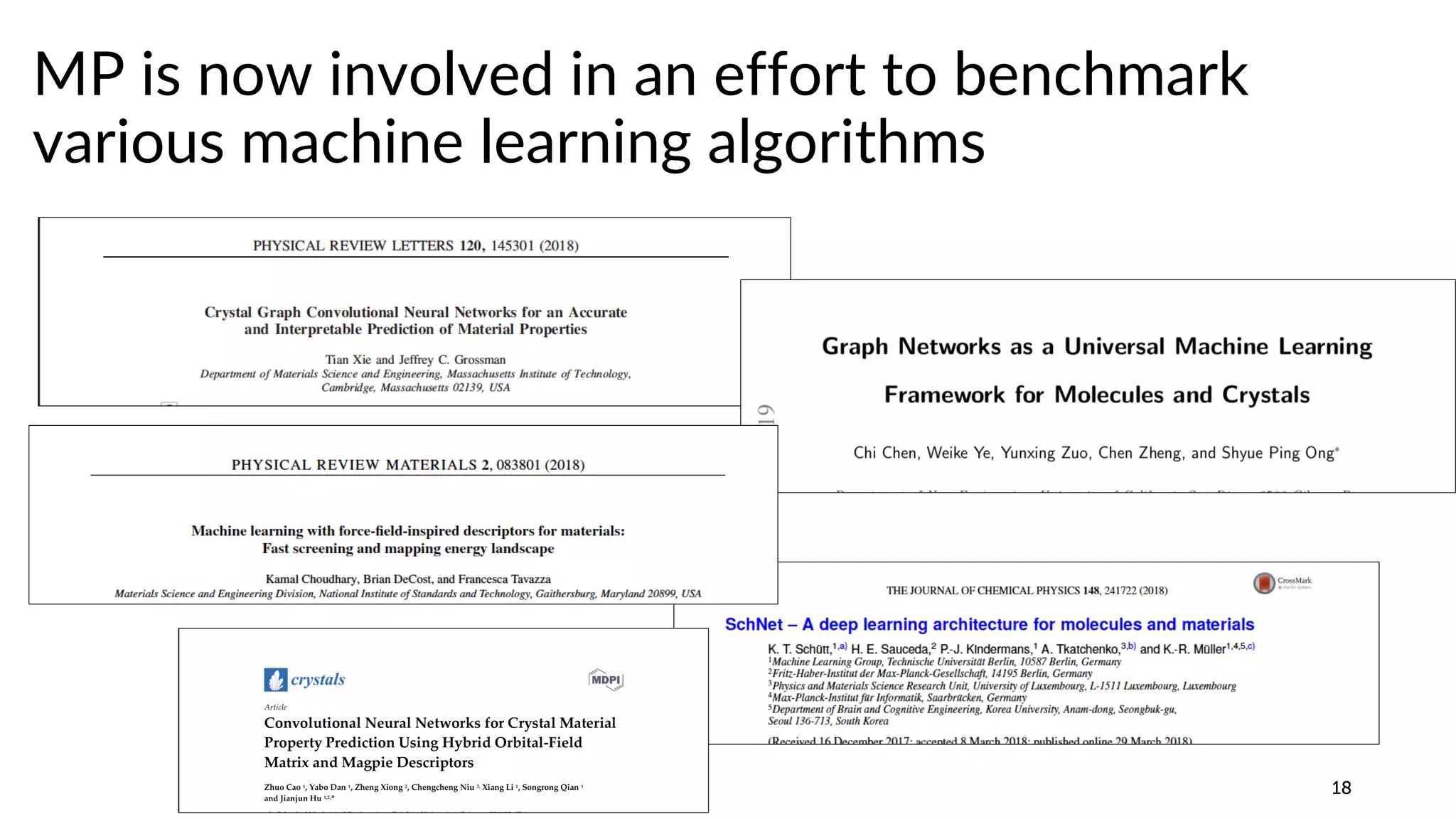

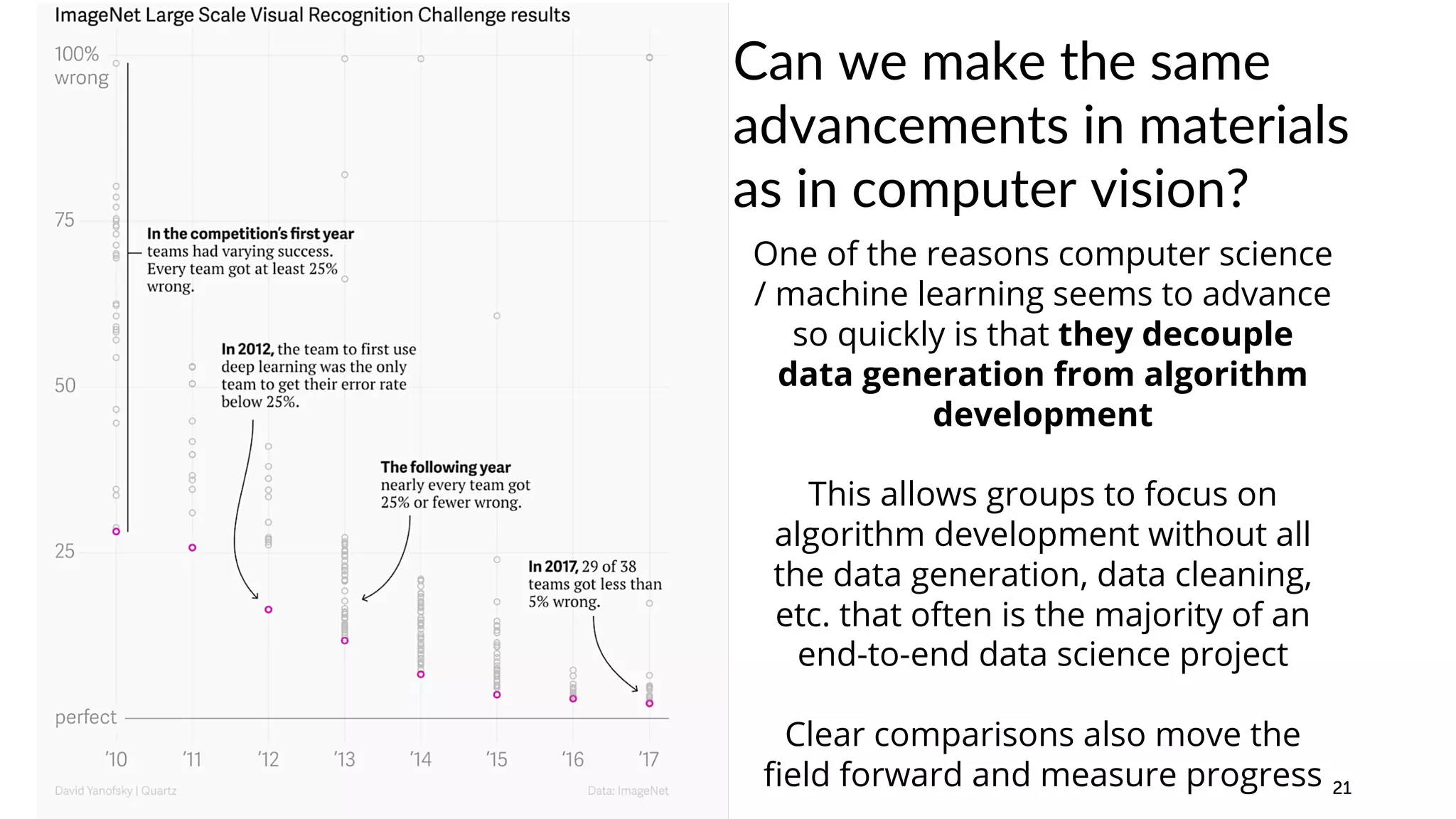

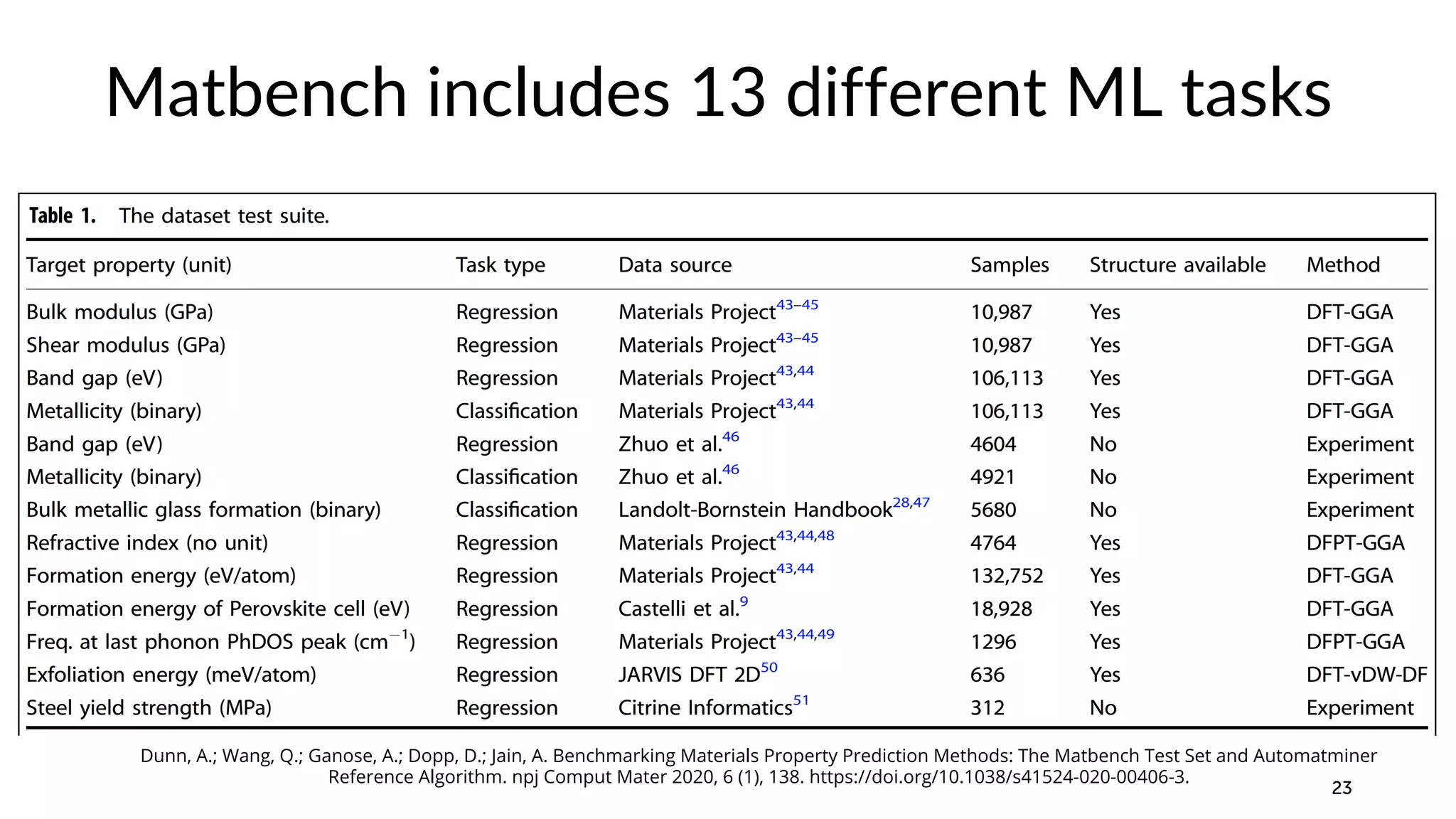

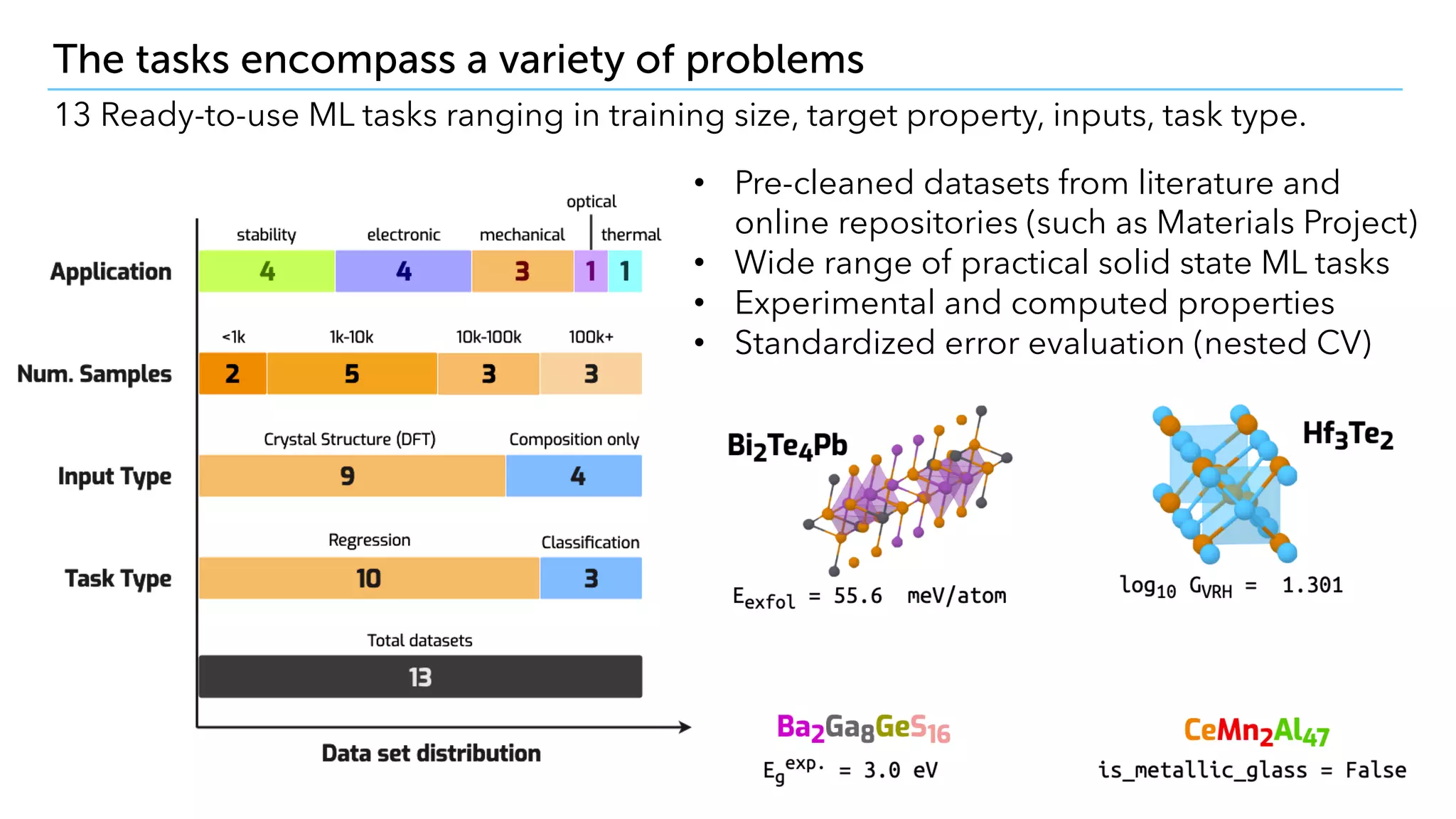

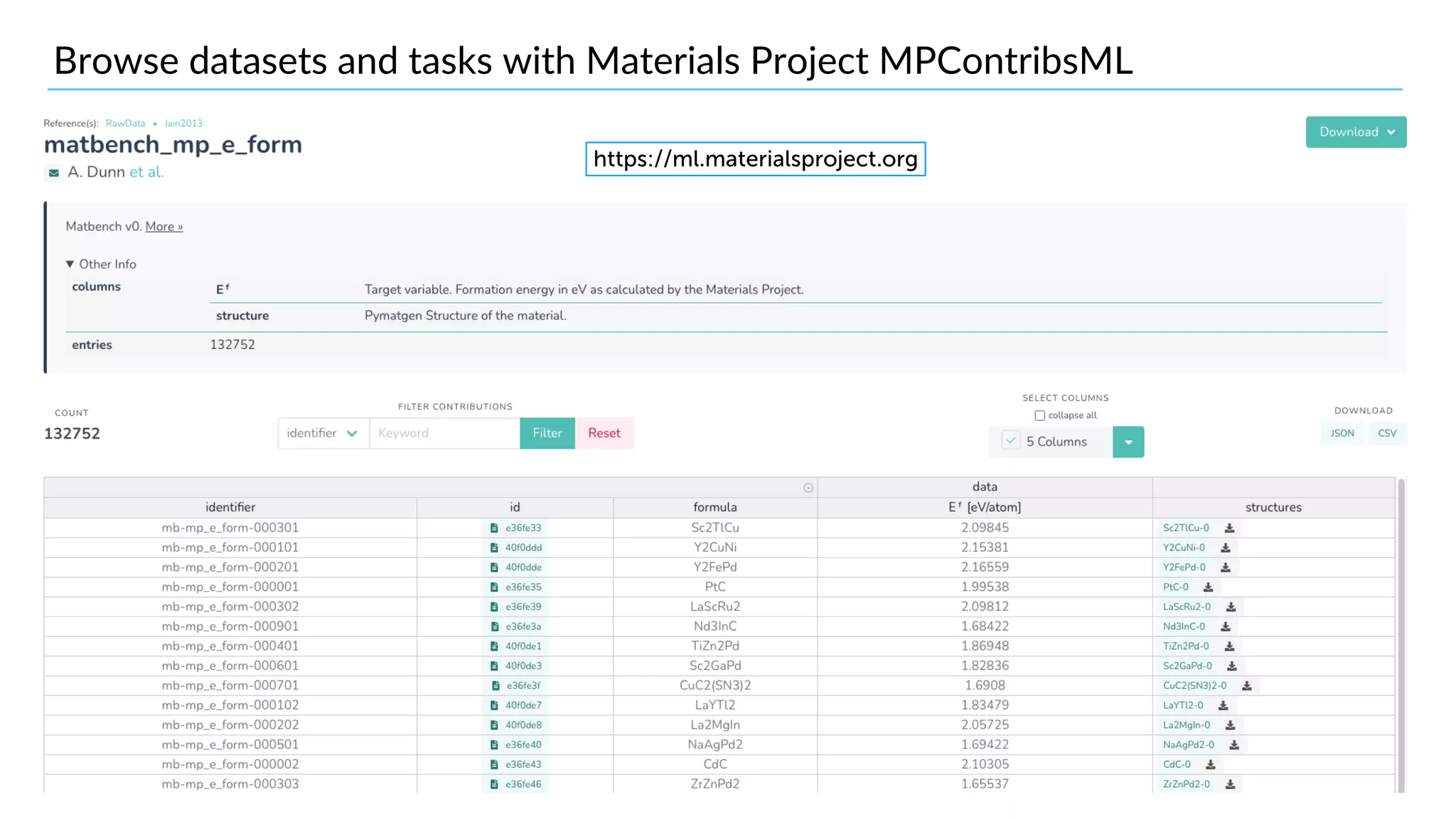

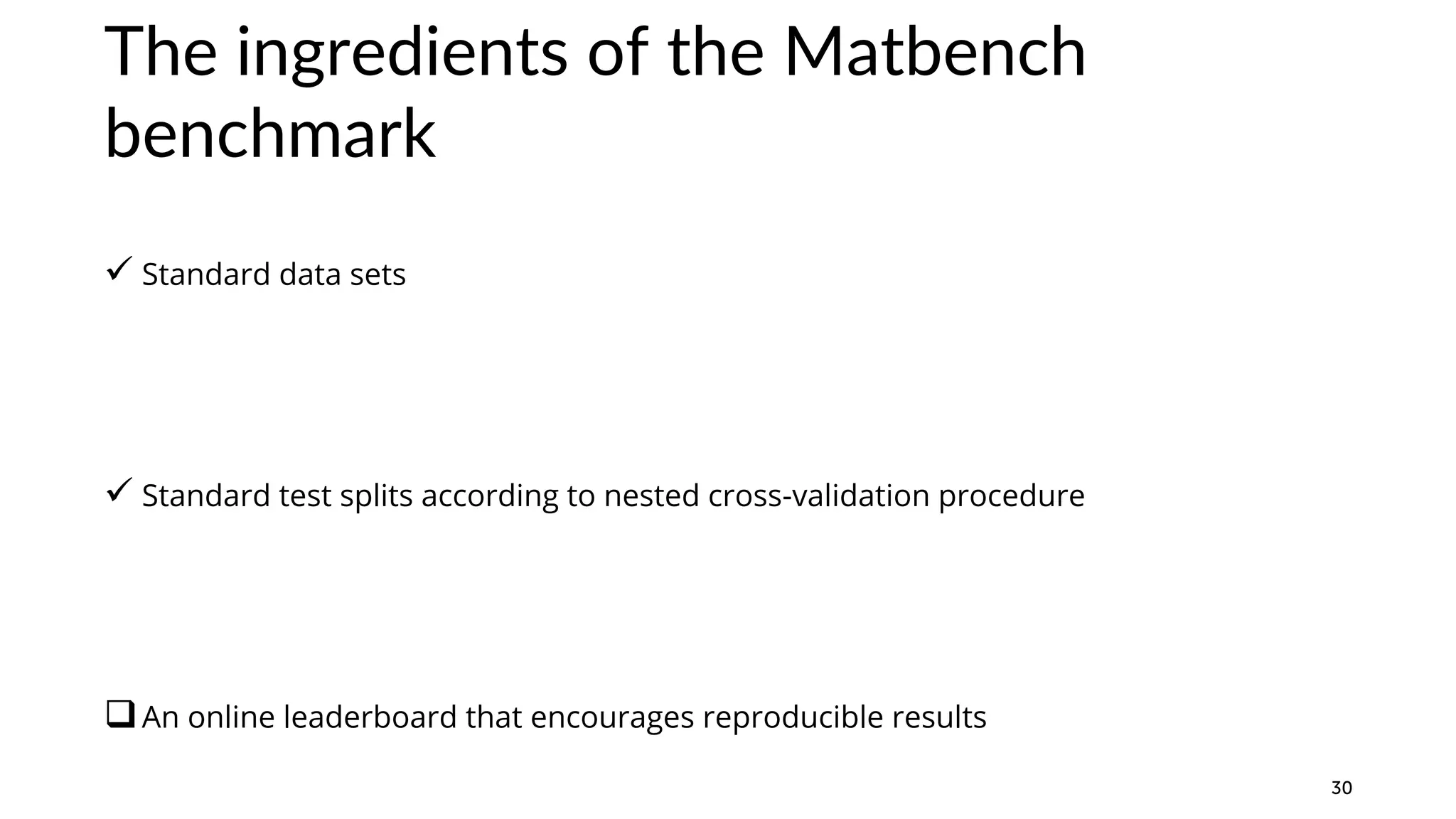

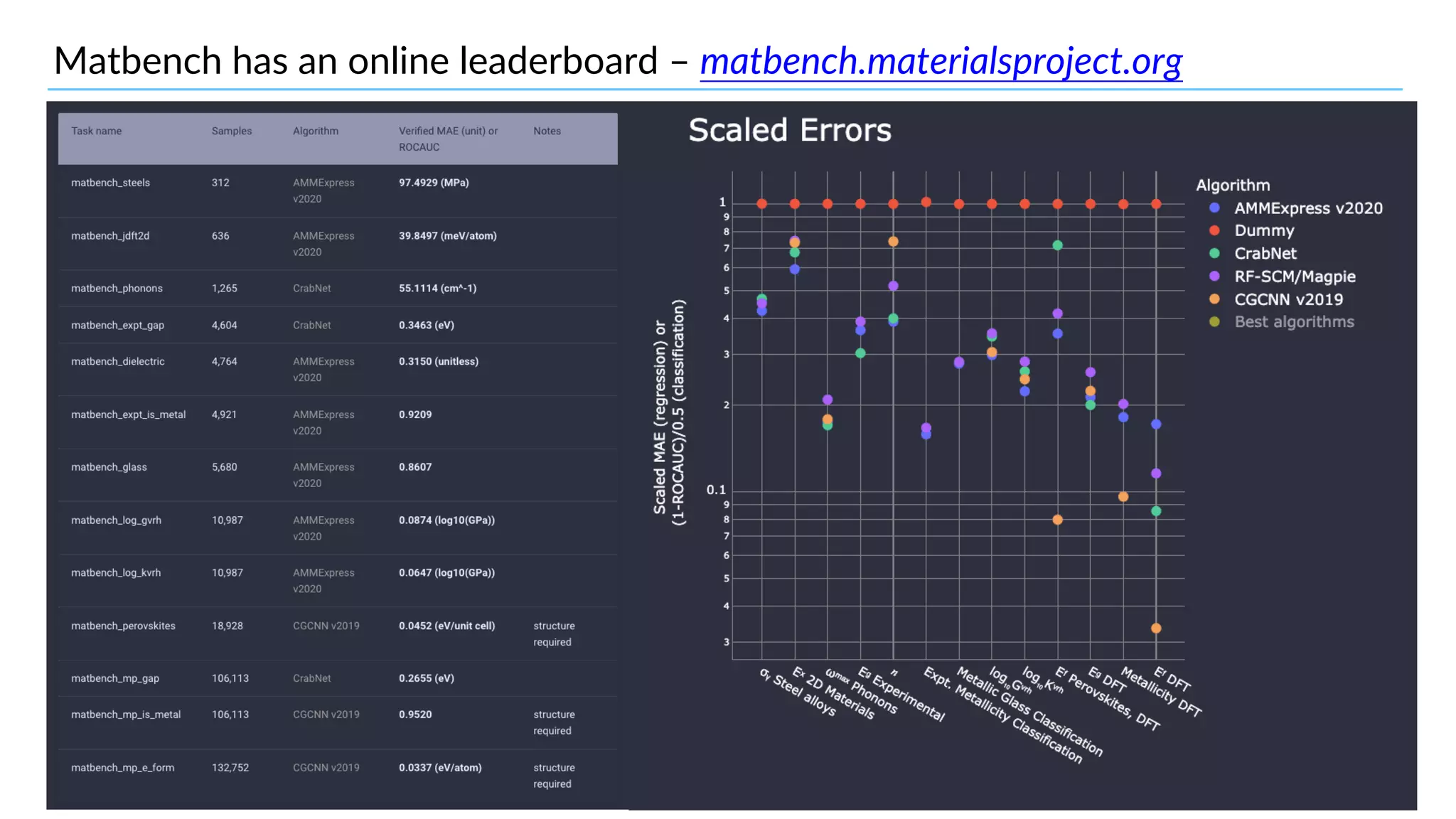

1) The document discusses evaluating machine learning algorithms for materials science using the Matbench protocol.
2) Matbench provides standardized datasets, testing procedures, and an online leaderboard to benchmark and compare machine learning performance.
3) This allows different groups to evaluate algorithms independently and identify best practices for materials science predictions.
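Matbench results are comparable because every algorithm is scored on identical, fixed train/test splits with the same error metric. Below is a minimal plain-Python sketch of that idea. It assumes 5 shuffled folds, a fixed seed, and mean absolute error (MAE) as the metric. The function names and the mean-value baseline are hypothetical illustrations, not the `matbench` package API.

```python
import random
import statistics


def kfold_indices(n_samples, n_splits=5, seed=0):
    """Yield (train_idx, test_idx) pairs from one fixed, shuffled permutation.

    Assumption for illustration: a fixed seed gives every model exactly the
    same splits, so scores from different groups can be compared directly.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // n_splits] * n_splits
    for i in range(n_samples % n_splits):
        fold_sizes[i] += 1
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def evaluate(fit_predict, X, y, n_splits=5, seed=0):
    """Score a model on every fold and summarize the per-fold MAEs."""
    fold_maes = []
    for train_idx, test_idx in kfold_indices(len(y), n_splits, seed):
        X_train = [X[i] for i in train_idx]
        y_train = [y[i] for i in train_idx]
        X_test = [X[i] for i in test_idx]
        y_test = [y[i] for i in test_idx]
        predictions = fit_predict(X_train, y_train, X_test)
        fold_maes.append(mean_absolute_error(y_test, predictions))
    return {
        "fold_maes": fold_maes,
        "mean_mae": statistics.mean(fold_maes),
        "std_mae": statistics.pstdev(fold_maes),
    }


def dummy_mean_model(X_train, y_train, X_test):
    """Hypothetical baseline: predict the training-set mean for every sample."""
    mean = statistics.mean(y_train)
    return [mean] * len(X_test)


if __name__ == "__main__":
    X = [[float(i)] for i in range(20)]
    y = [2.0 * i + 1.0 for i in range(20)]
    report = evaluate(dummy_mean_model, X, y)
    print(report["mean_mae"], report["std_mae"])
```

Because `kfold_indices` is seeded, two groups running different models on the same data get the same folds, and their `mean_mae` values measure the models rather than the luck of the split.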

![Example 2: matminer allows researchers to generate diverse feature sets for machine learning. More than 60 featurizer classes can generate thousands of potential descriptors described in the literature, for example feat = EwaldEnergy([options]) followed by y = feat.featurize([input_data]). Featurizers are compatible with scikit-learn pipelining, automatically deploy multiprocessing to parallelize over data, and include citations to methodology papers.](https://image.slidesharecdn.com/matbenchpresentation-210930130844/75/Evaluating-Machine-Learning-Algorithms-for-Materials-Science-using-the-Matbench-Protocol-9-2048.jpg)
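The slide shows the featurizer call pattern `feat.featurize(...)`. The sketch below imitates that pattern with a hypothetical `MeanMaxAtomicNumber` class and a small hard-coded atomic-number table, so it runs without matminer installed. To our understanding, the method names `fit`, `featurize`, `featurize_many`, `feature_labels`, and `citations` mirror matminer's base featurizer. The implementation itself is our own simplification, not matminer code.

```python
from multiprocessing import Pool

# Hypothetical lookup table: atomic numbers for a handful of elements.
ATOMIC_NUMBER = {"H": 1, "Li": 3, "O": 8, "Na": 11, "Cl": 17, "Fe": 26}


class MeanMaxAtomicNumber:
    """Toy featurizer for illustration only; it is not part of matminer.

    Input: a composition as a dict mapping element symbol -> amount.
    Output: [fraction-weighted mean atomic number, max atomic number].
    """

    def fit(self, X, y=None):
        # Nothing to learn; returning self keeps a scikit-learn-style interface.
        return self

    def featurize(self, composition):
        total = sum(composition.values())
        mean_z = sum(ATOMIC_NUMBER[el] * amt for el, amt in composition.items()) / total
        max_z = max(ATOMIC_NUMBER[el] for el in composition)
        return [mean_z, max_z]

    def feature_labels(self):
        return ["mean atomic number", "max atomic number"]

    def citations(self):
        # A real featurizer would return references to its methodology paper.
        return []

    def featurize_many(self, compositions, n_jobs=1):
        # Serial by default; with n_jobs > 1, spread entries over worker processes.
        if n_jobs == 1:
            return [self.featurize(c) for c in compositions]
        with Pool(n_jobs) as pool:
            return pool.map(self.featurize, compositions)

    def transform(self, X):
        # scikit-learn-style alias so the object can sit in a pipeline-like flow.
        return self.featurize_many(X)
```

For example, `MeanMaxAtomicNumber().featurize({"Na": 1, "Cl": 1})` yields `[14.0, 17]`. Keeping one `featurize` method per input is what makes it straightforward to parallelize over a dataset.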

![Evaluation of ML paradigms drives research and development. Traditional paradigms: traditional models (e.g., RF + Magpie [1] features); AutoML inside the "traditional ML" space (Automatminer). Advancements in deep neural networks: attention networks (e.g., CrabNet [2]); optimal descriptor networks (e.g., MODNet [3]); crystal graph networks (e.g., CGCNN, MEGNet [4]). References: [1] doi.org/10.1038/npjcompumats.2016.28; [2] doi.org/10.1038/s41524-021-00545-1; [3] doi.org/10.1038/s41524-021-00552-2; [4] doi.org/10.1021/acs.chemmater.9b01294](https://image.slidesharecdn.com/matbenchpresentation-210930130844/75/Evaluating-Machine-Learning-Algorithms-for-Materials-Science-using-the-Matbench-Protocol-34-2048.jpg)
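The "RF + Magpie" baseline pairs a random forest with composition-based descriptors. As we understand the Magpie approach, each elemental property is summarized across a compound's elements using fraction-weighted statistics: minimum, maximum, range, mean, average deviation from the mean, and the value for the most abundant element. The minimal sketch below computes those statistics for a single property. The small electronegativity table and the function name are illustrative assumptions. A full pipeline, such as matminer's `ElementProperty` featurizer with its `magpie` preset, covers many properties before the random forest is trained.

```python
# Pauling electronegativities for a few elements (illustrative subset; a real
# Magpie-style pipeline uses a full elemental property table).
ELECTRONEGATIVITY = {"H": 2.20, "Li": 0.98, "O": 3.44, "Na": 0.93, "Cl": 3.16, "Fe": 1.83}


def magpie_style_stats(composition, table=ELECTRONEGATIVITY):
    """Fraction-weighted statistics of one elemental property.

    composition: dict mapping element symbol -> amount, e.g. {"H": 2, "O": 1}.
    """
    total = sum(composition.values())
    fractions = {el: amt / total for el, amt in composition.items()}
    values = {el: table[el] for el in composition}
    mean = sum(fractions[el] * values[el] for el in composition)
    # Average deviation: fraction-weighted |value - mean|.
    avg_dev = sum(fractions[el] * abs(values[el] - mean) for el in composition)
    # "Mode": property value of the most abundant element (first one on ties).
    most_abundant = max(composition, key=composition.get)
    return {
        "minimum": min(values.values()),
        "maximum": max(values.values()),
        "range": max(values.values()) - min(values.values()),
        "mean": mean,
        "avg_dev": avg_dev,
        "mode": values[most_abundant],
    }
```

Each composition becomes a fixed-length vector regardless of how many elements it has, which is what lets a standard random forest consume it.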

![Matbench compares these ML model paradigms. Traditional paradigms: traditional models (e.g., RF + Magpie [1] features); AutoML inside the "traditional ML" space (Automatminer). Advancements in deep neural networks: attention networks (e.g., CrabNet [2]); optimal descriptor networks (e.g., MODNet [3]); crystal graph networks (e.g., CGCNN, MEGNet [4]). Status marks on the slide: ✓ in Matbench; ✓ in Matbench; ✓ in Matbench; ✓ CGCNN in Matbench; ✓ MEGNet in progress; ✓ PR in review. References: [1] doi.org/10.1038/npjcompumats.2016.28; [2] doi.org/10.1038/s41524-021-00545-1; [3] doi.org/10.1038/s41524-021-00552-2; [4] doi.org/10.1021/acs.chemmater.9b01294](https://image.slidesharecdn.com/matbenchpresentation-210930130844/75/Evaluating-Machine-Learning-Algorithms-for-Materials-Science-using-the-Matbench-Protocol-35-2048.jpg)