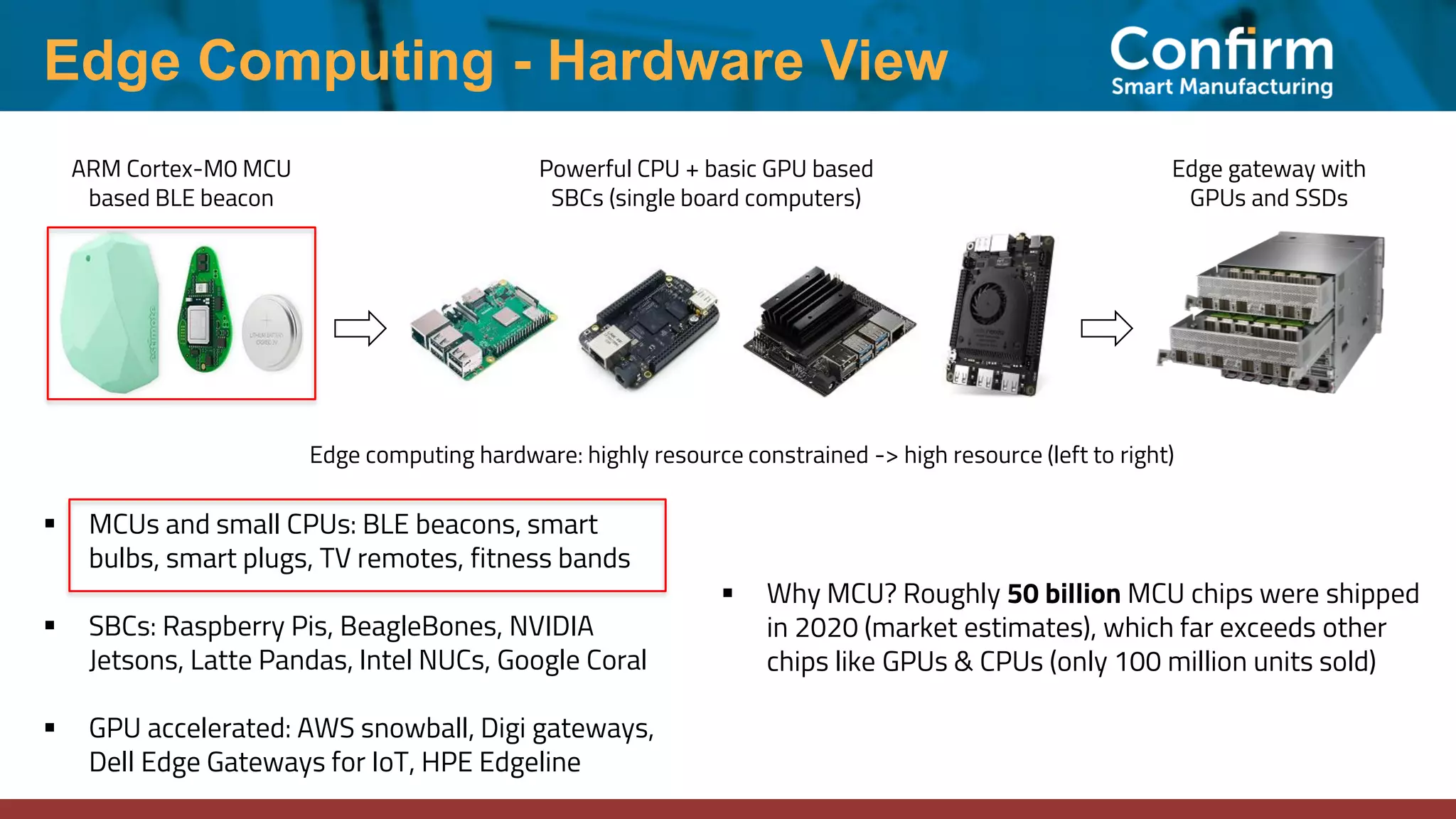

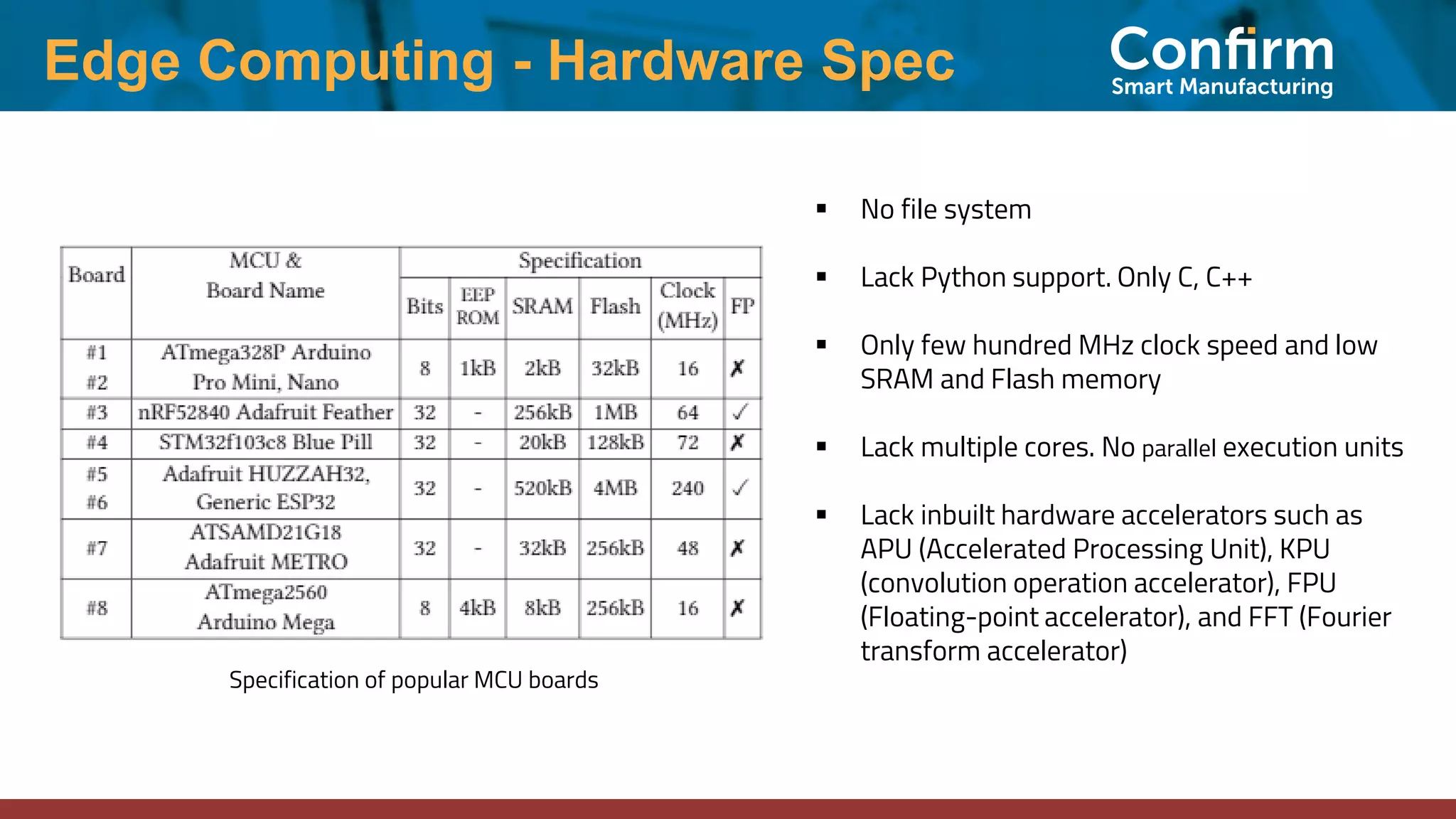

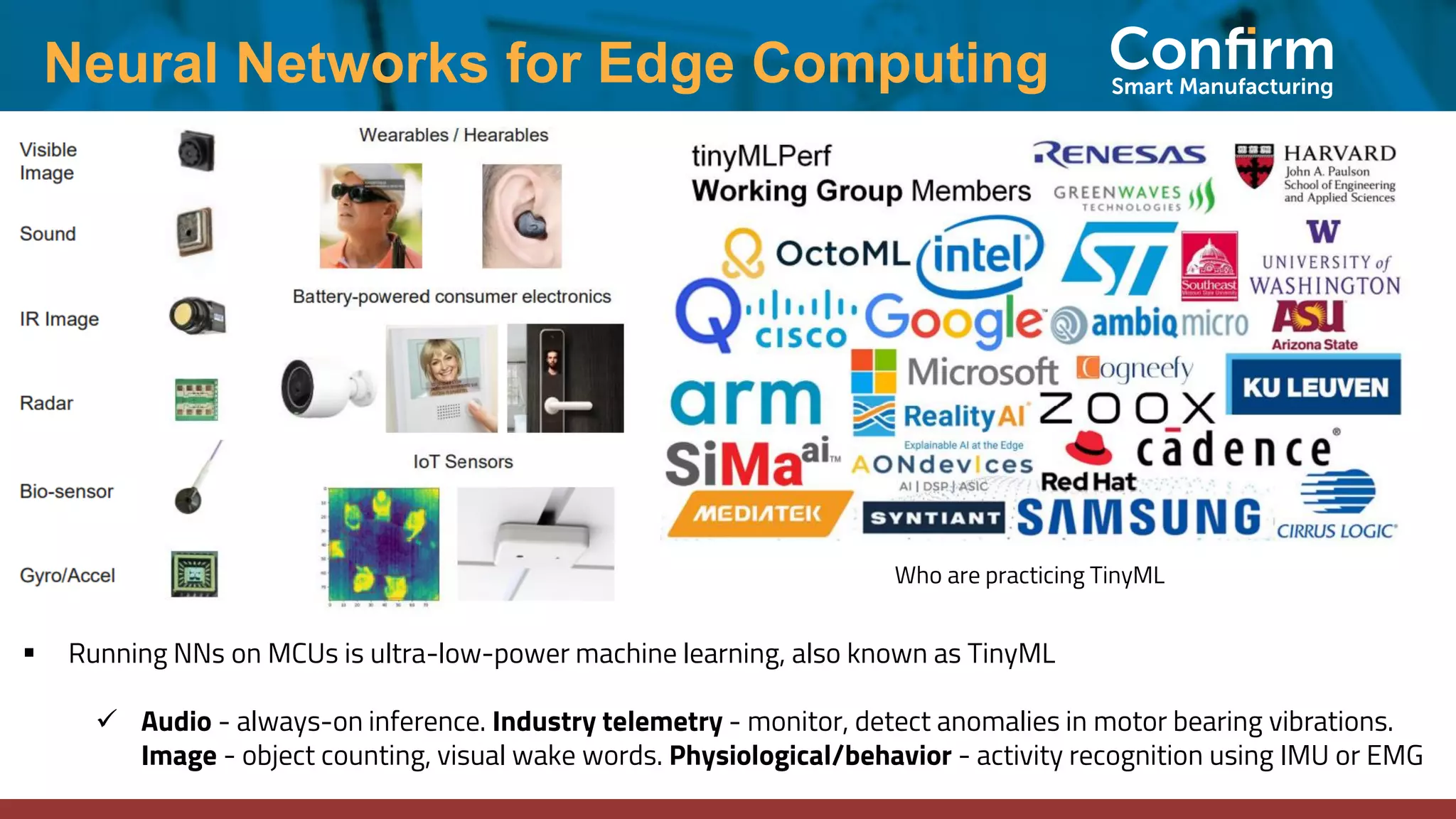

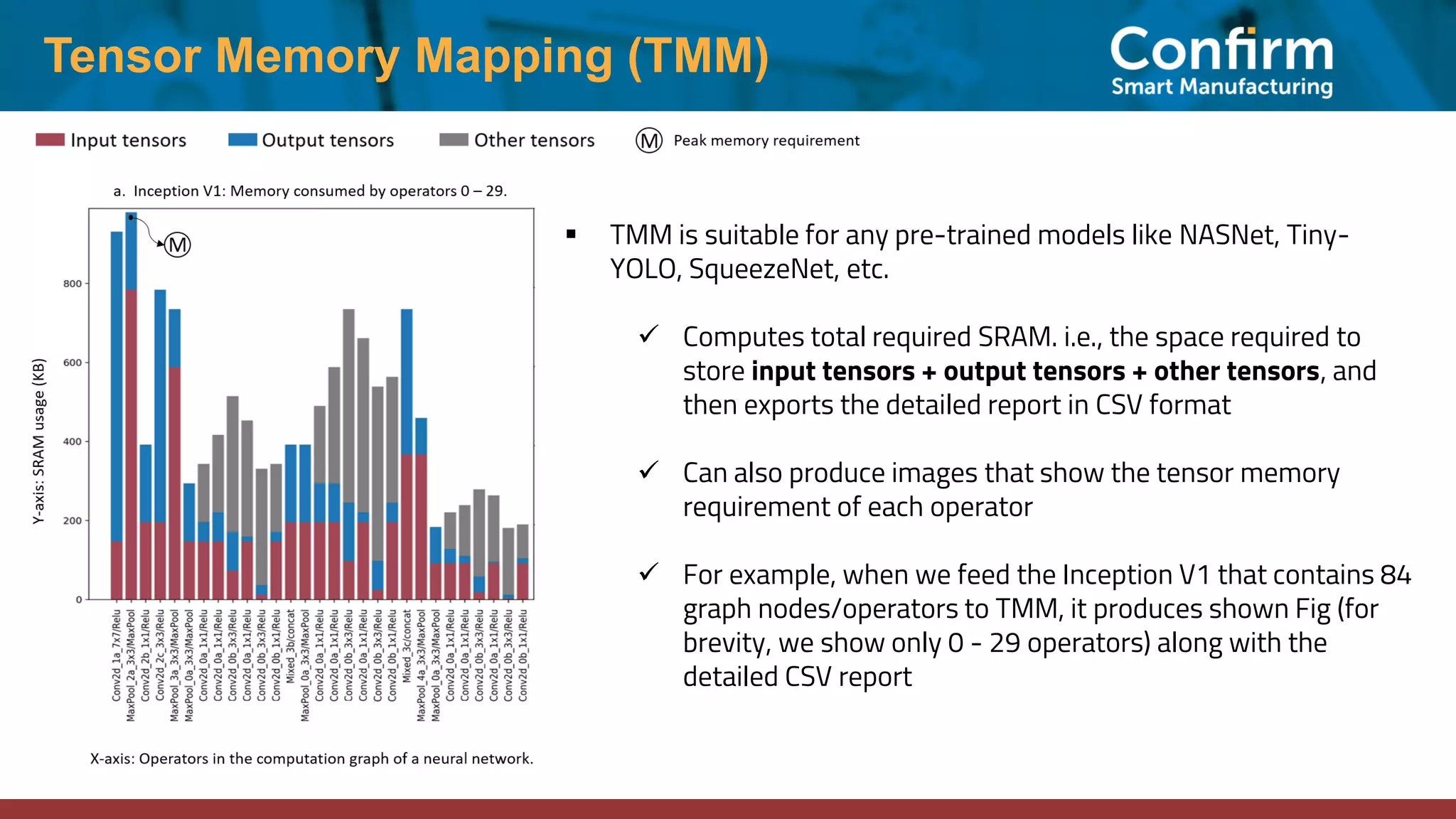

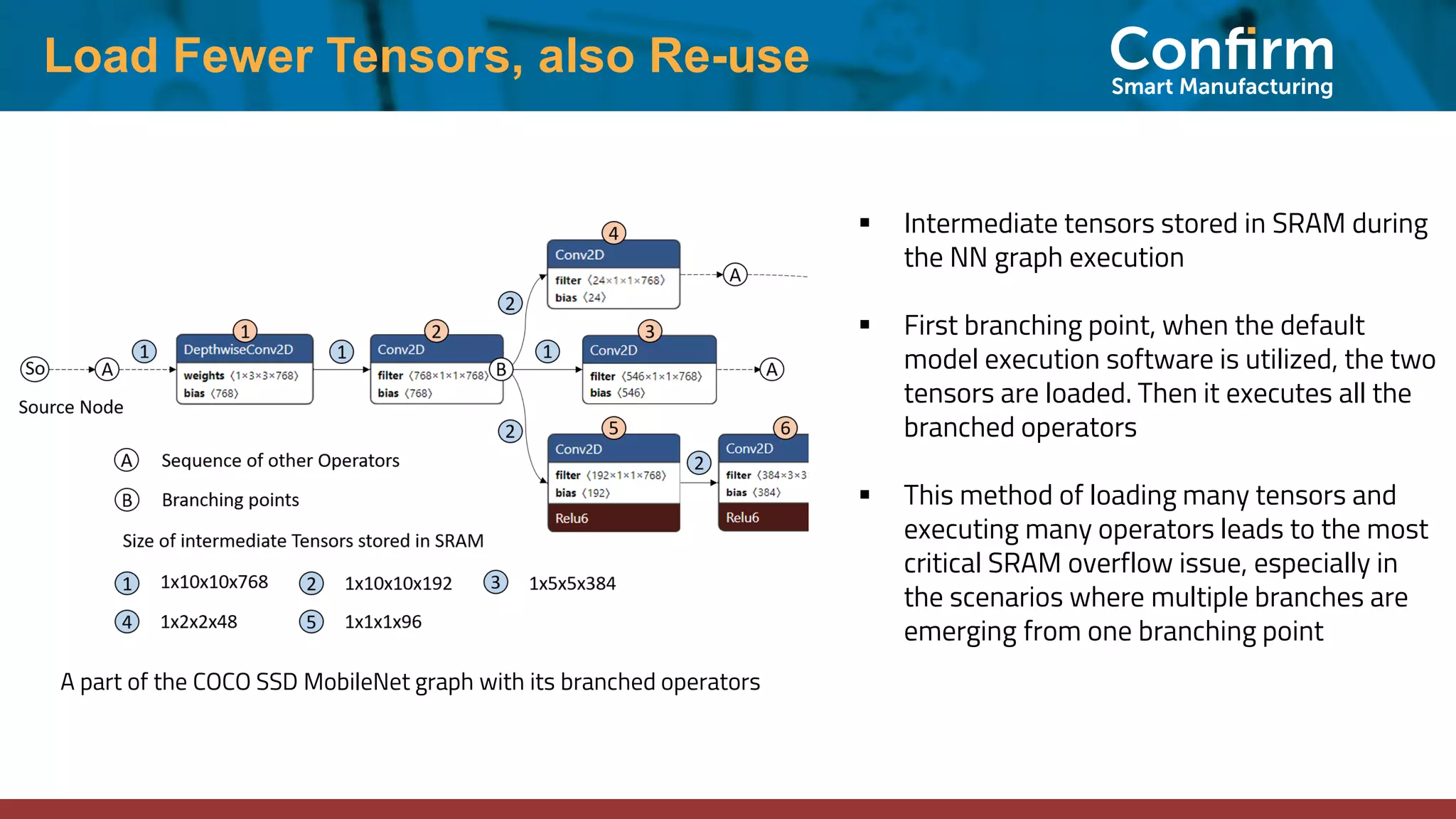

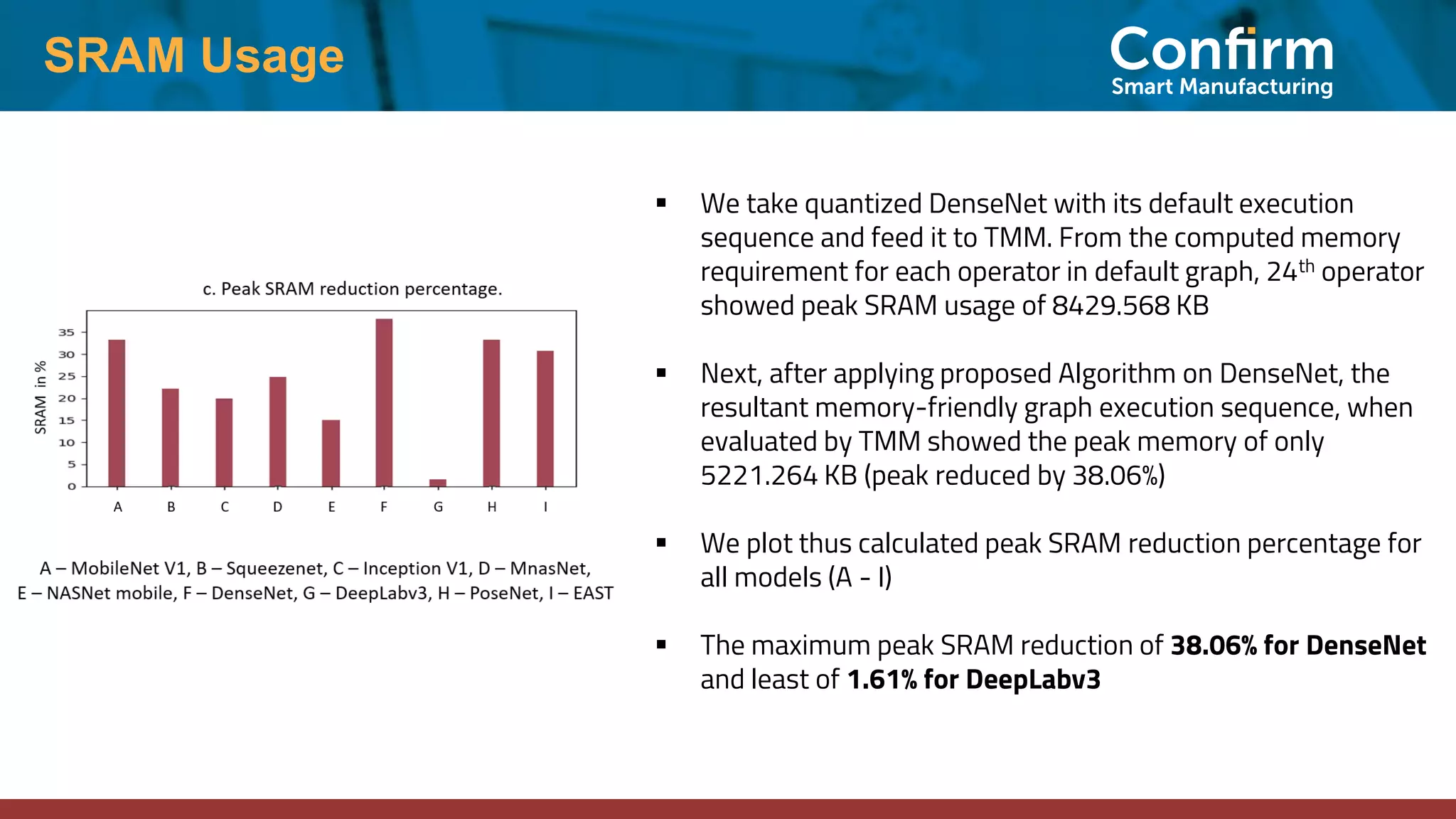

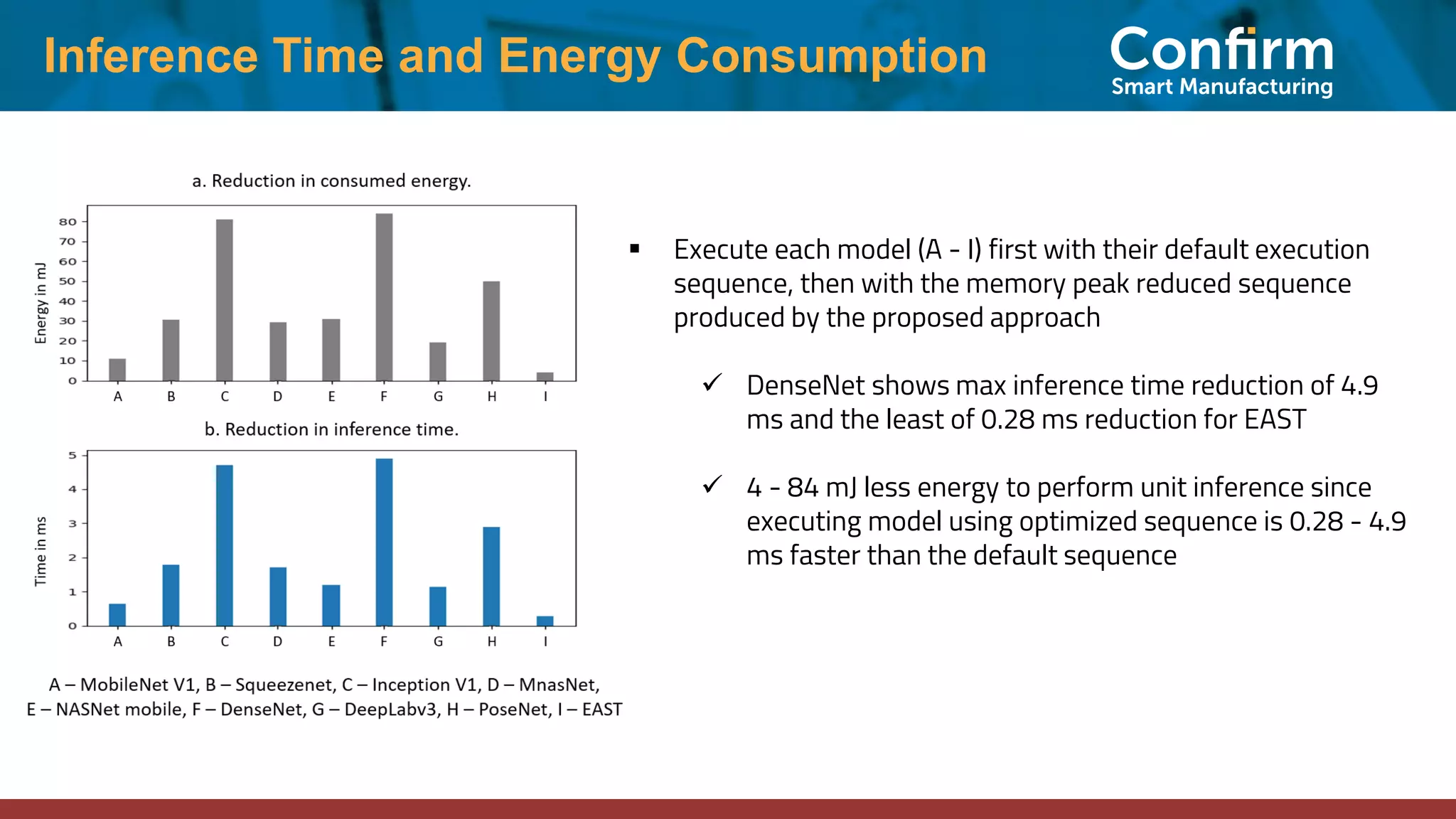

The document discusses an approach to enable machine learning on resource-constrained edge devices by employing SRAM-conserving techniques for neural networks execution. By utilizing tensor memory mapping, loading fewer tensors, and optimizing execution sequences, the method aims to reduce SRAM usage and enhance inference efficiency. The proposed strategy allows for the deployment of large, high-quality models on IoT devices without the need for hardware upgrades or model retraining.