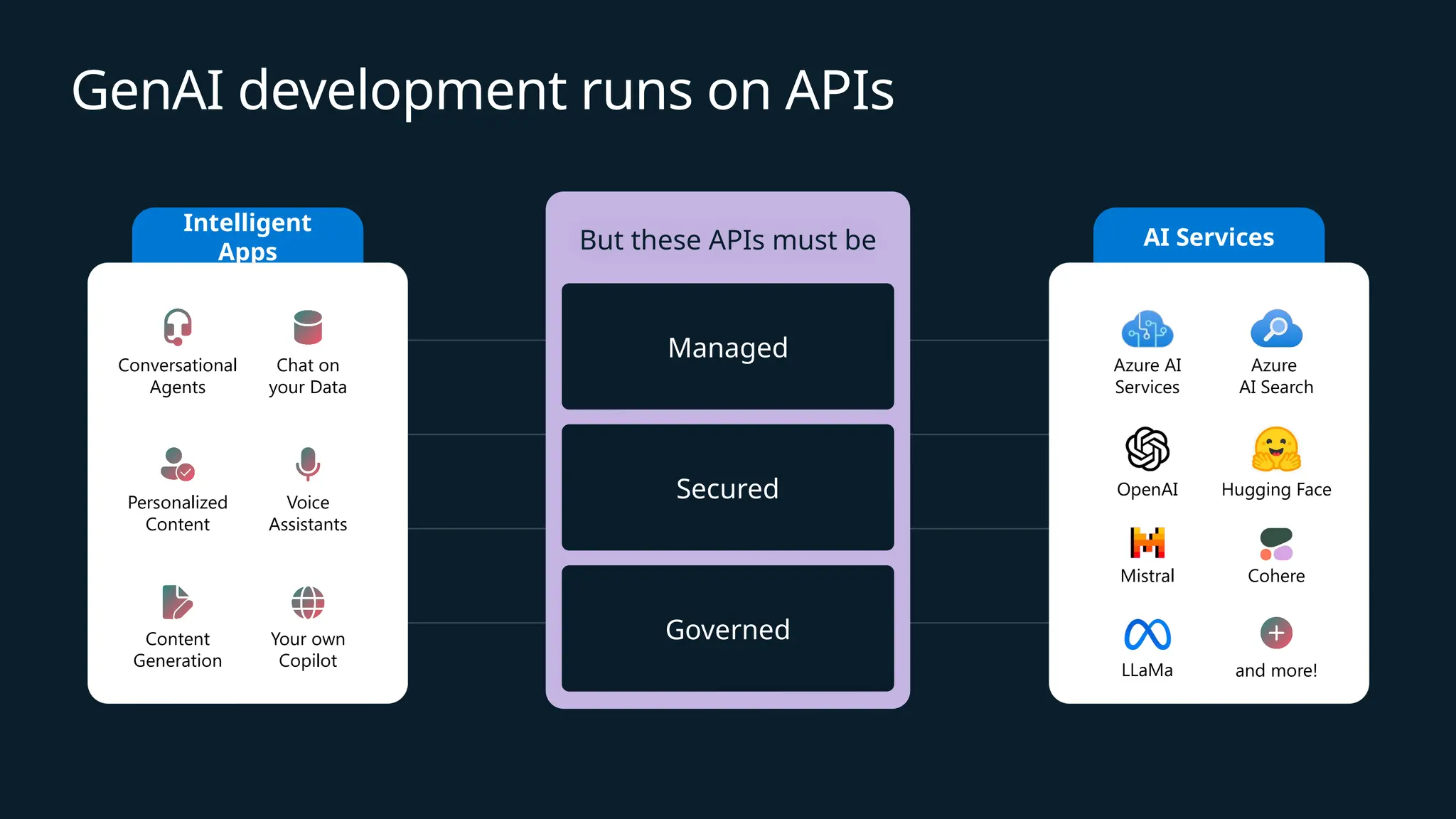

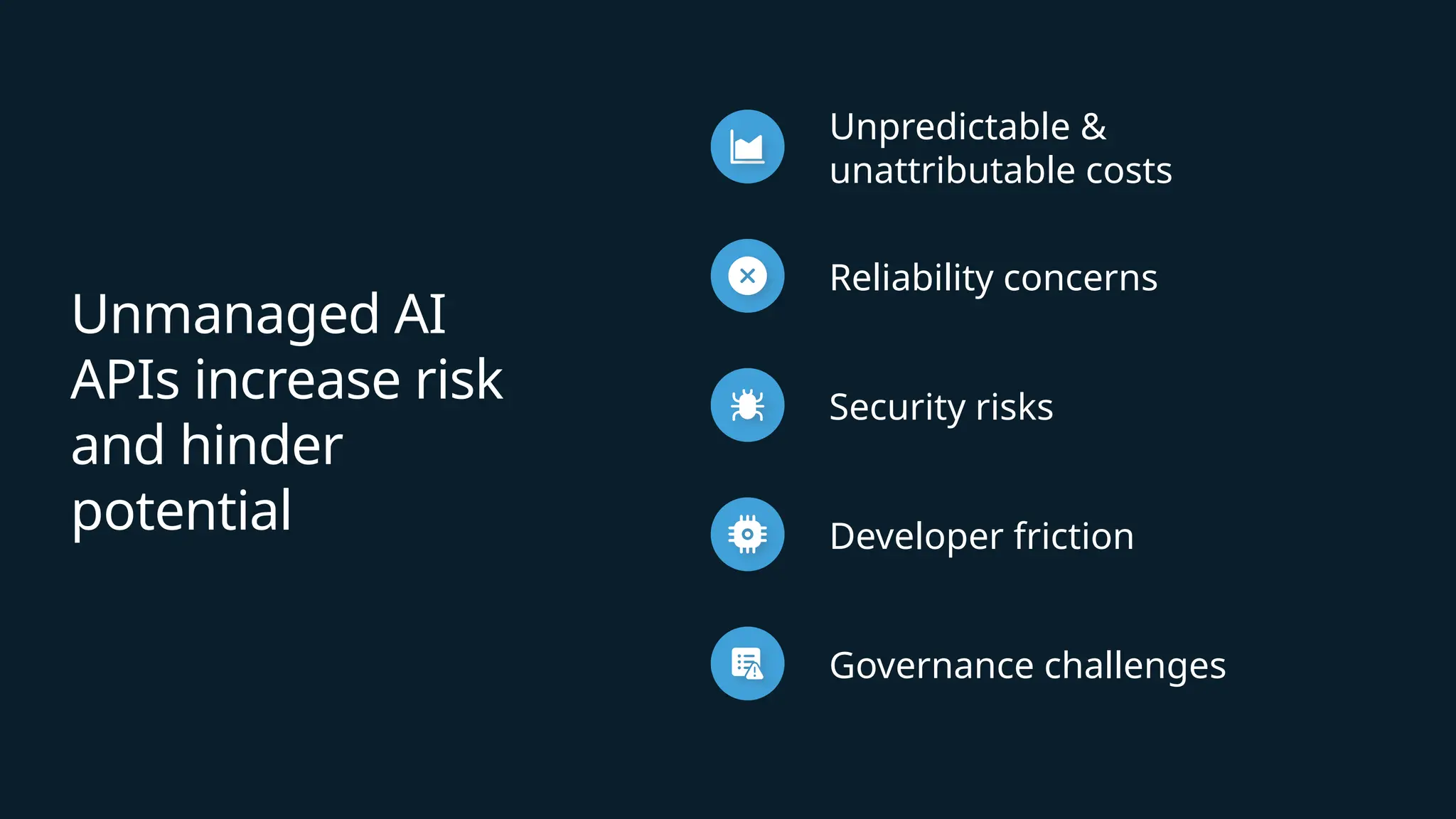

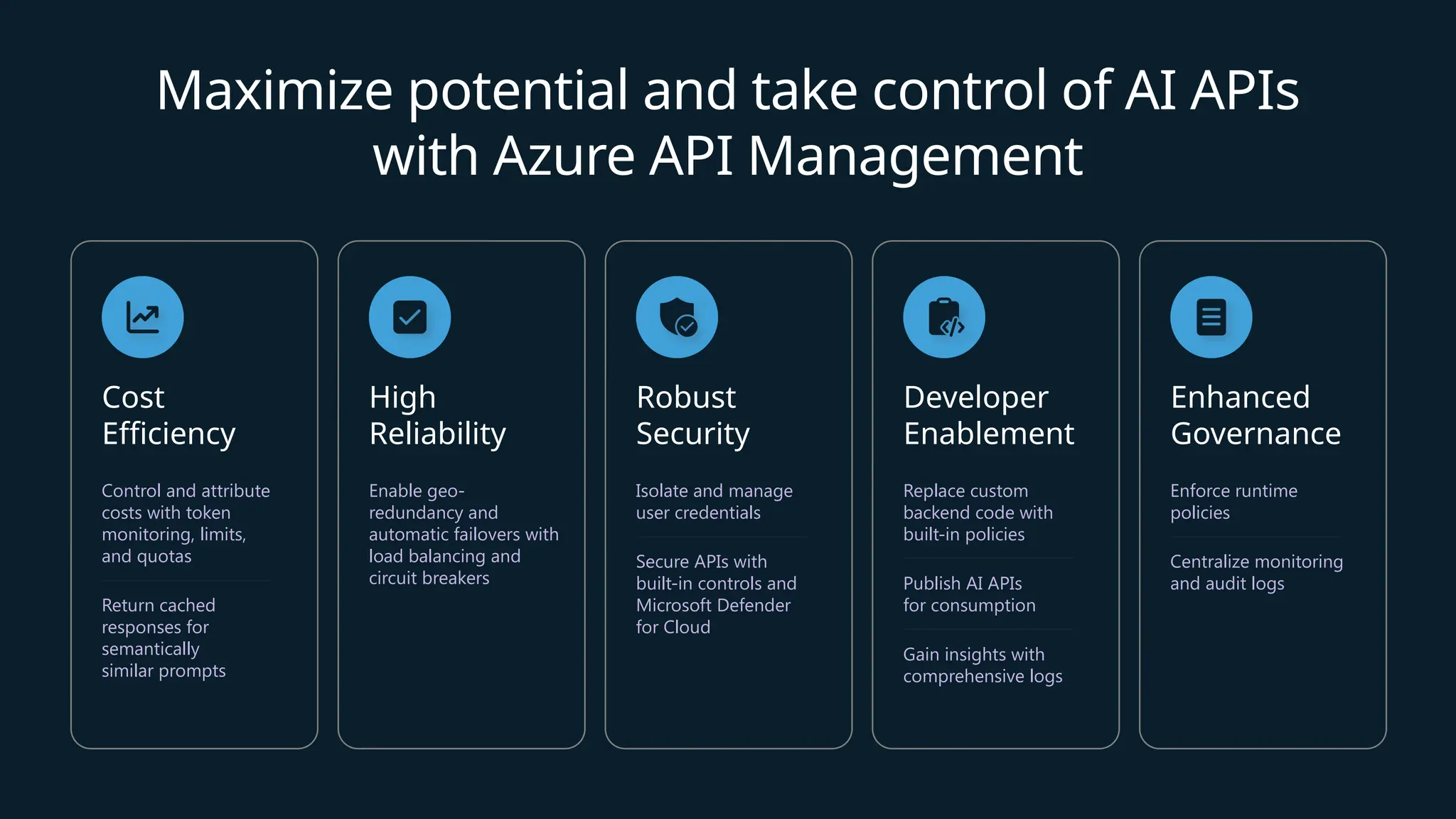

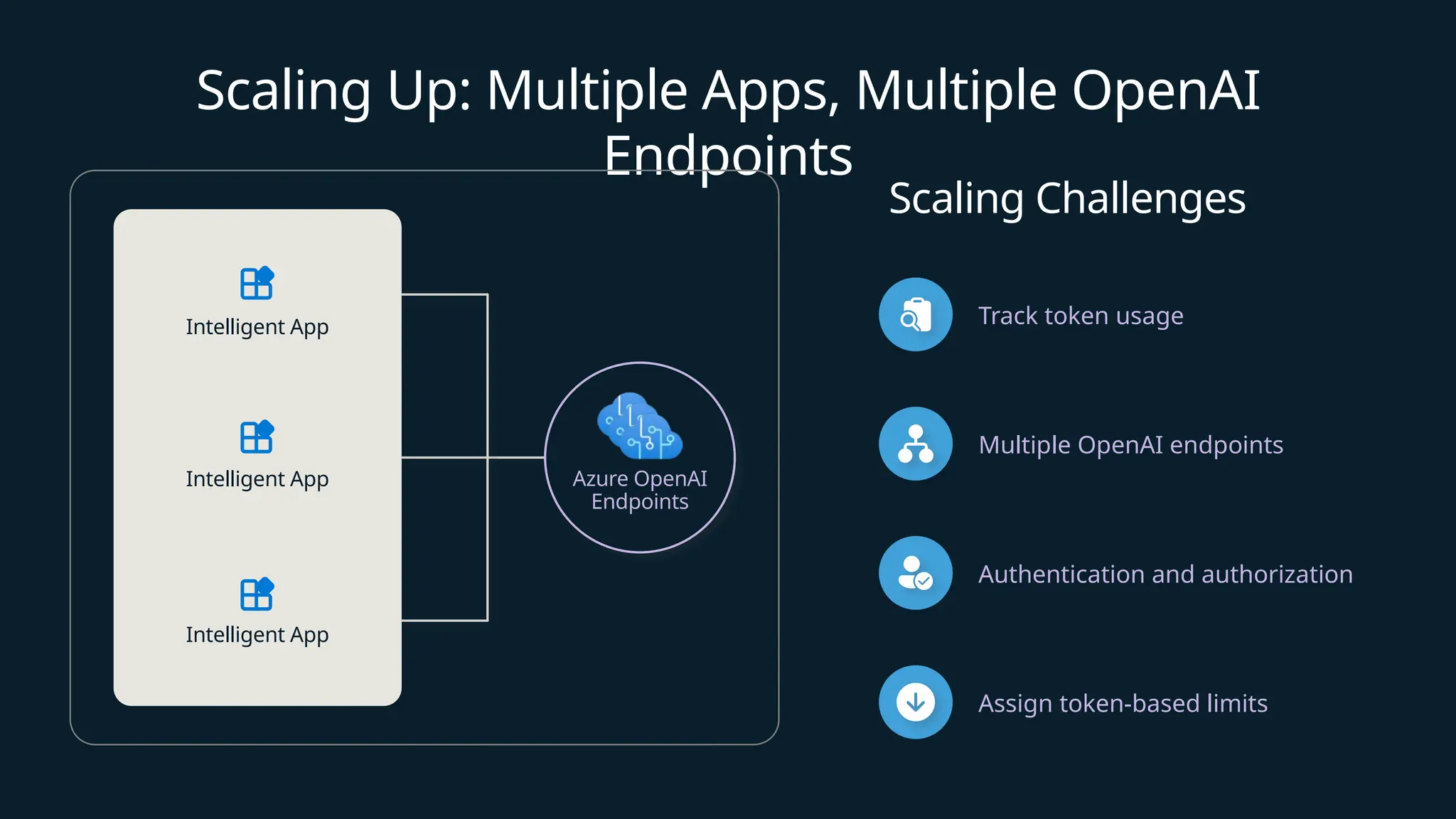

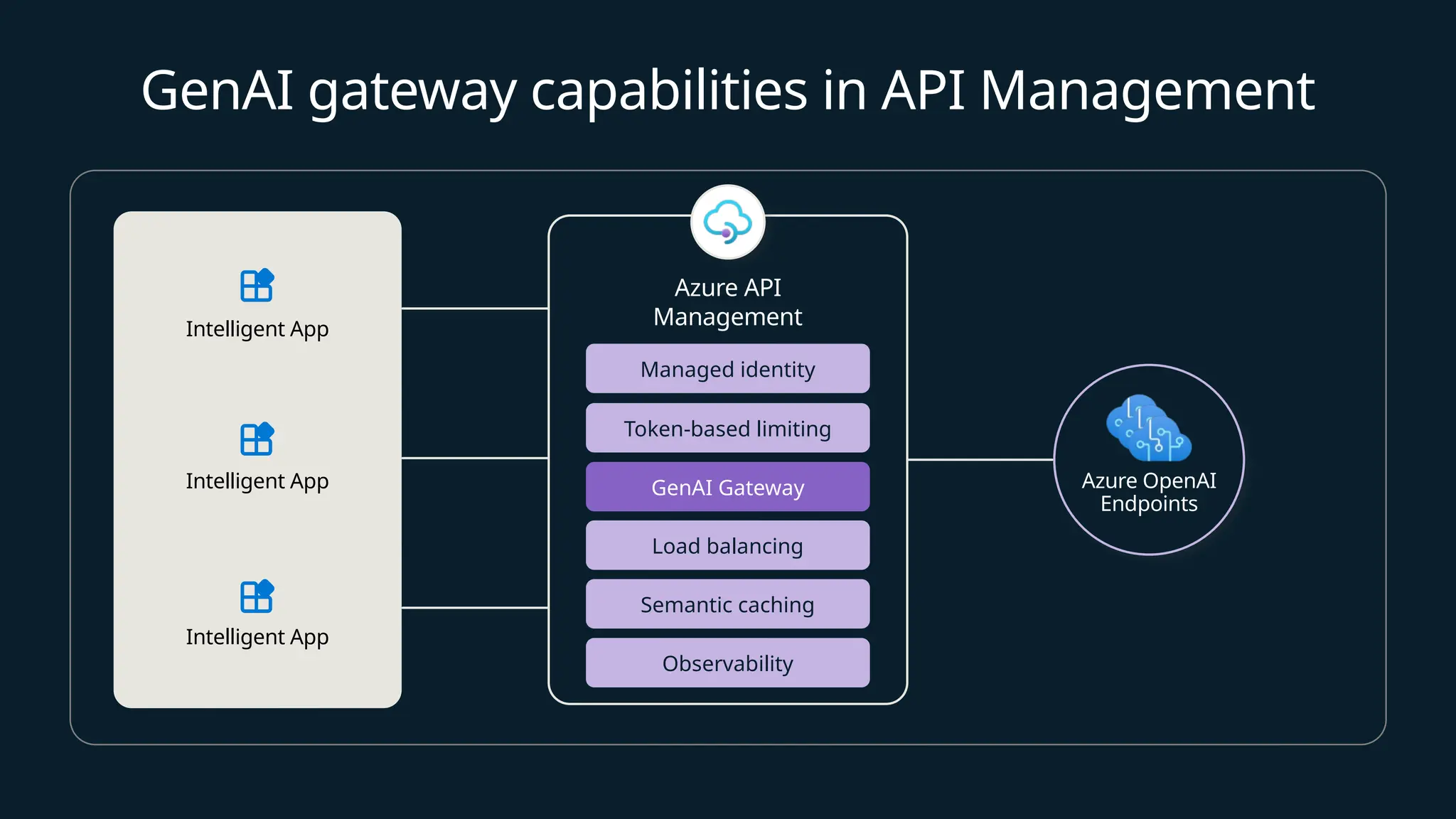

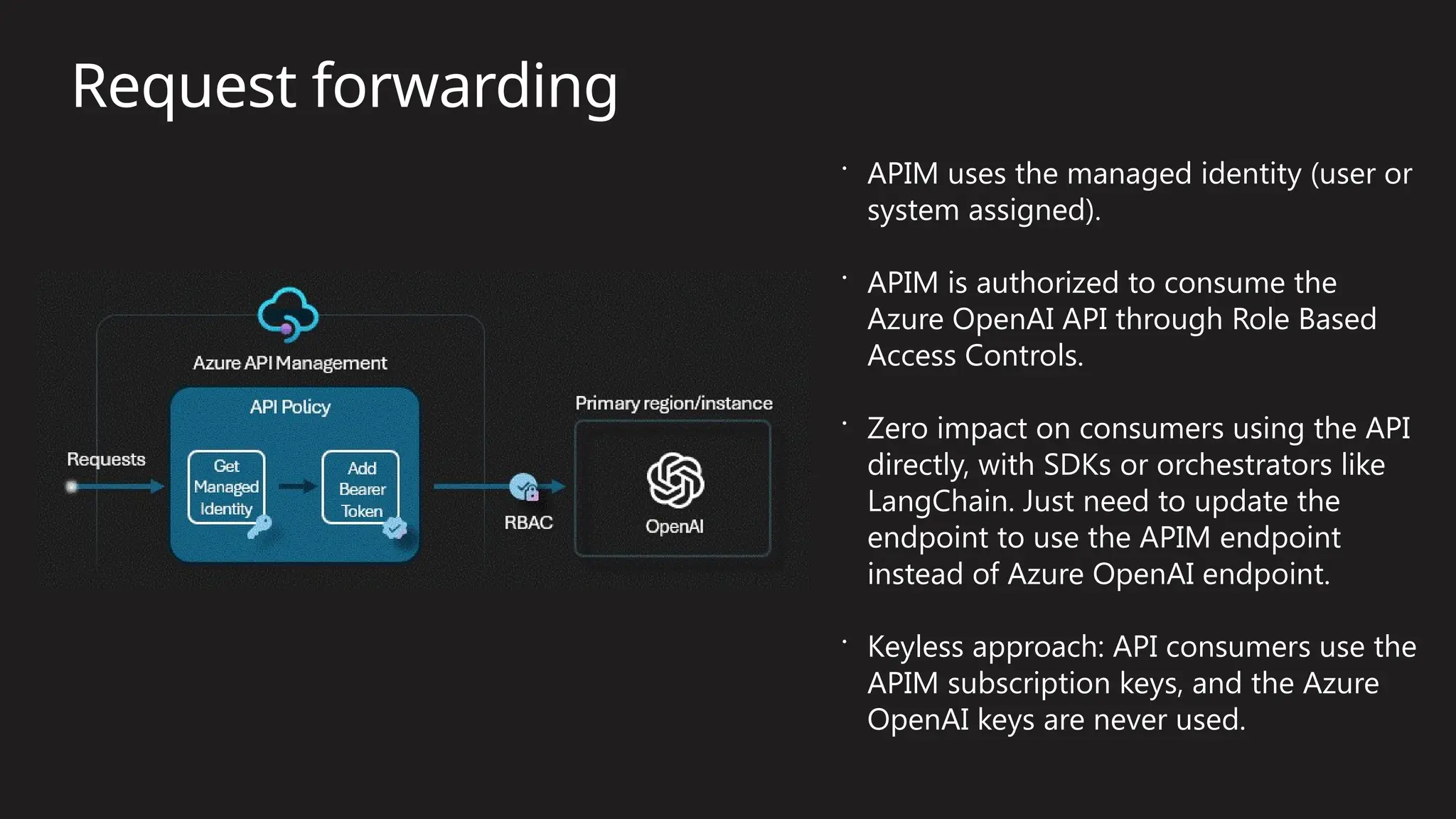

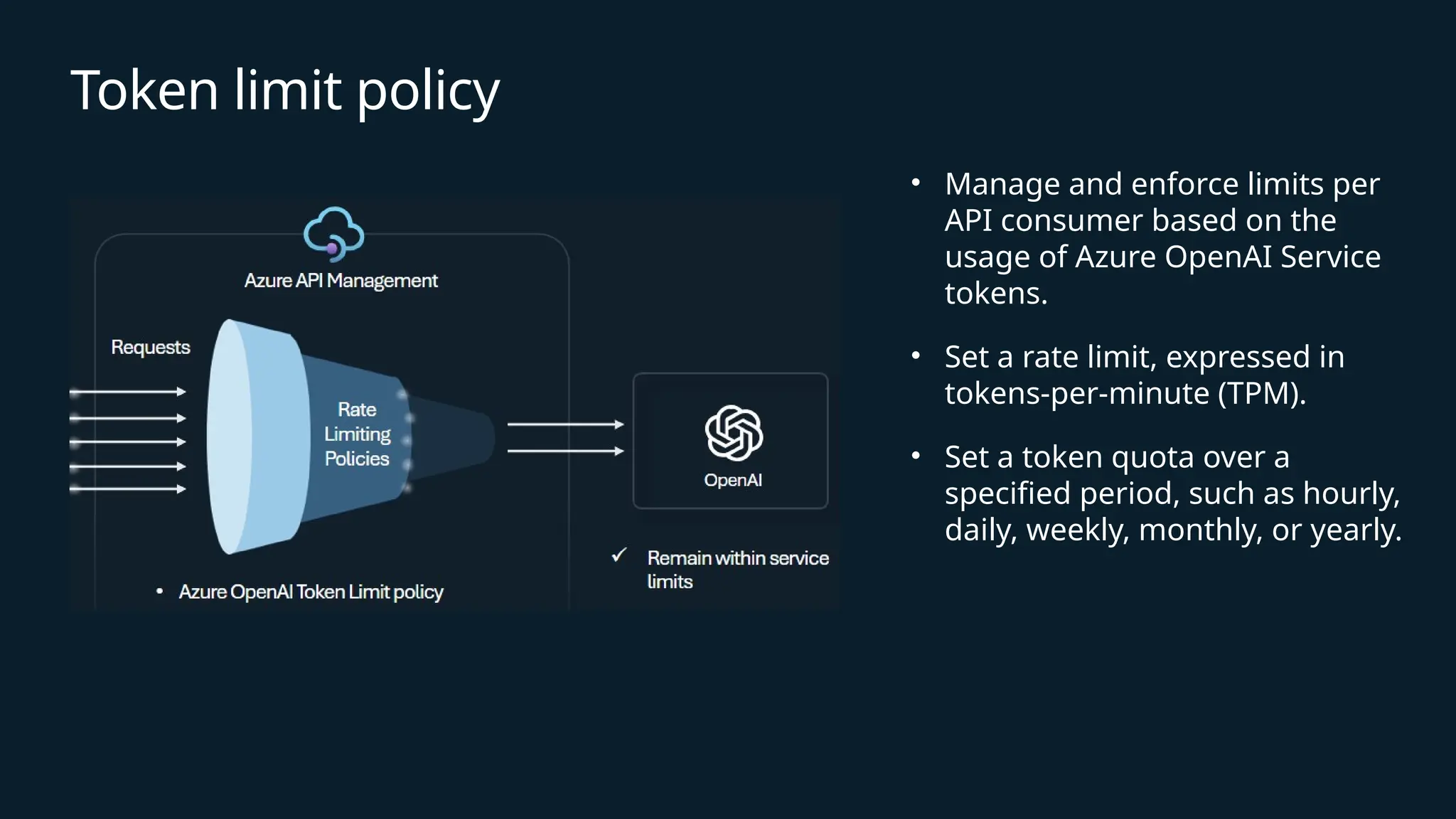

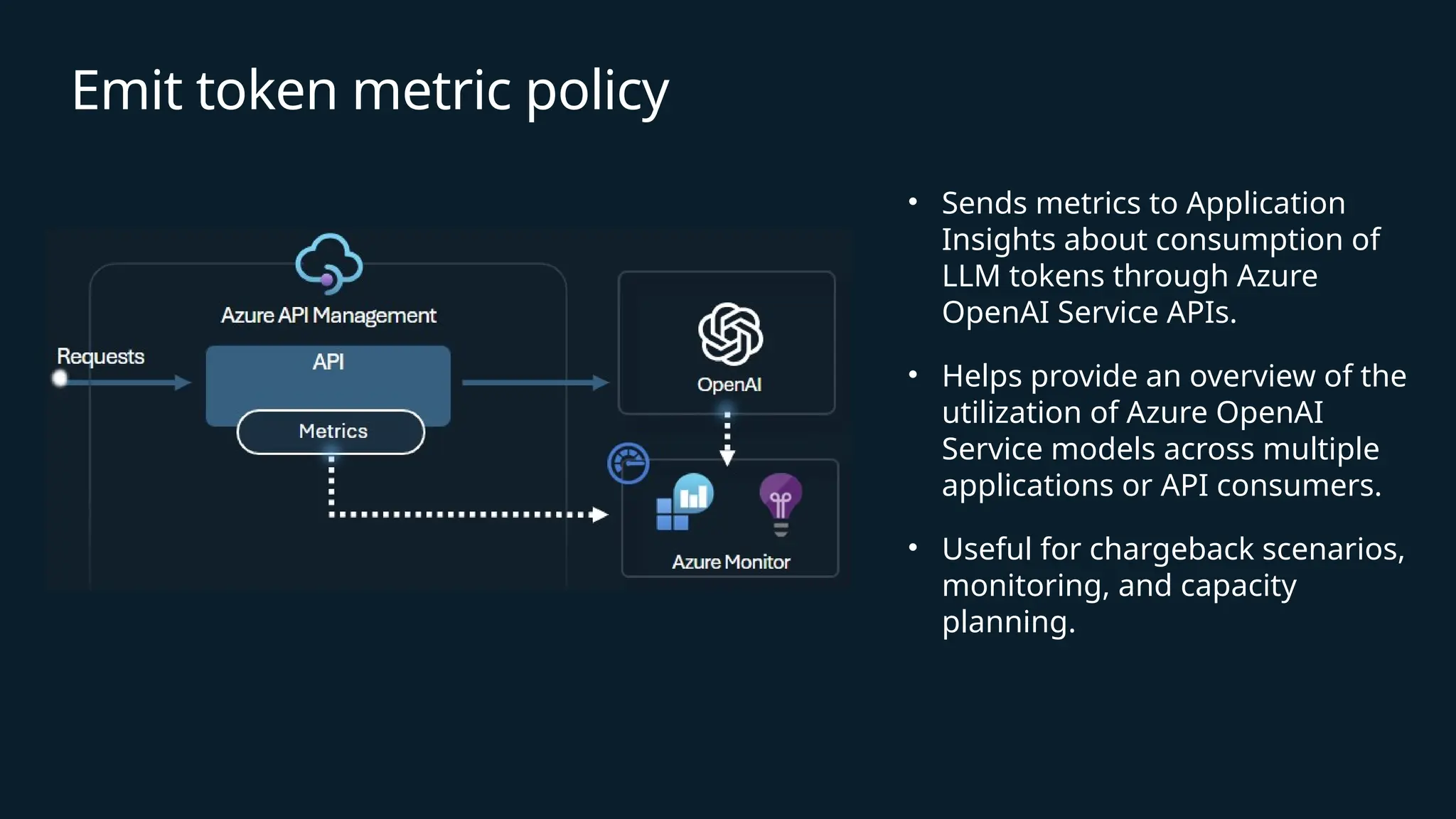

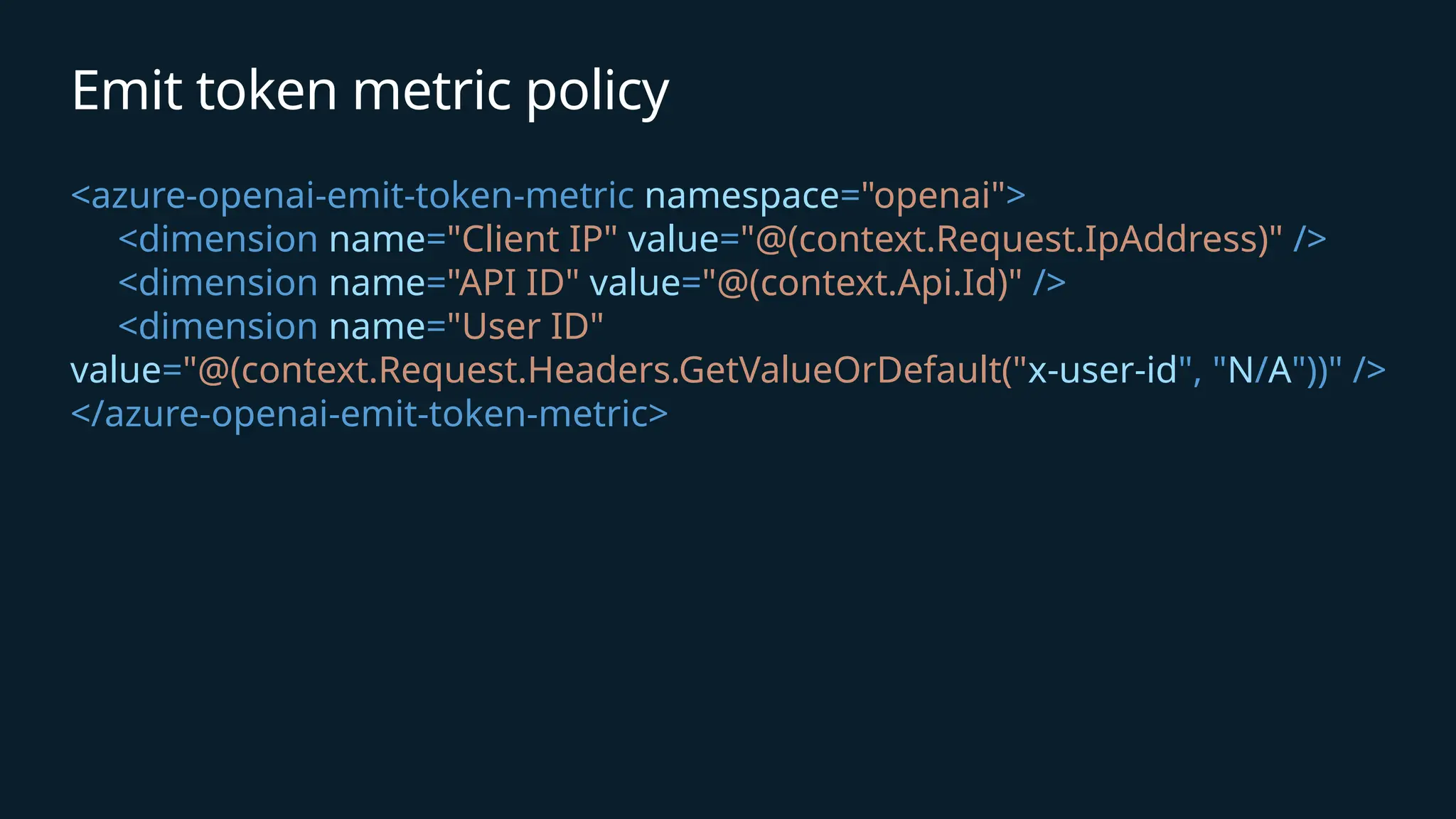

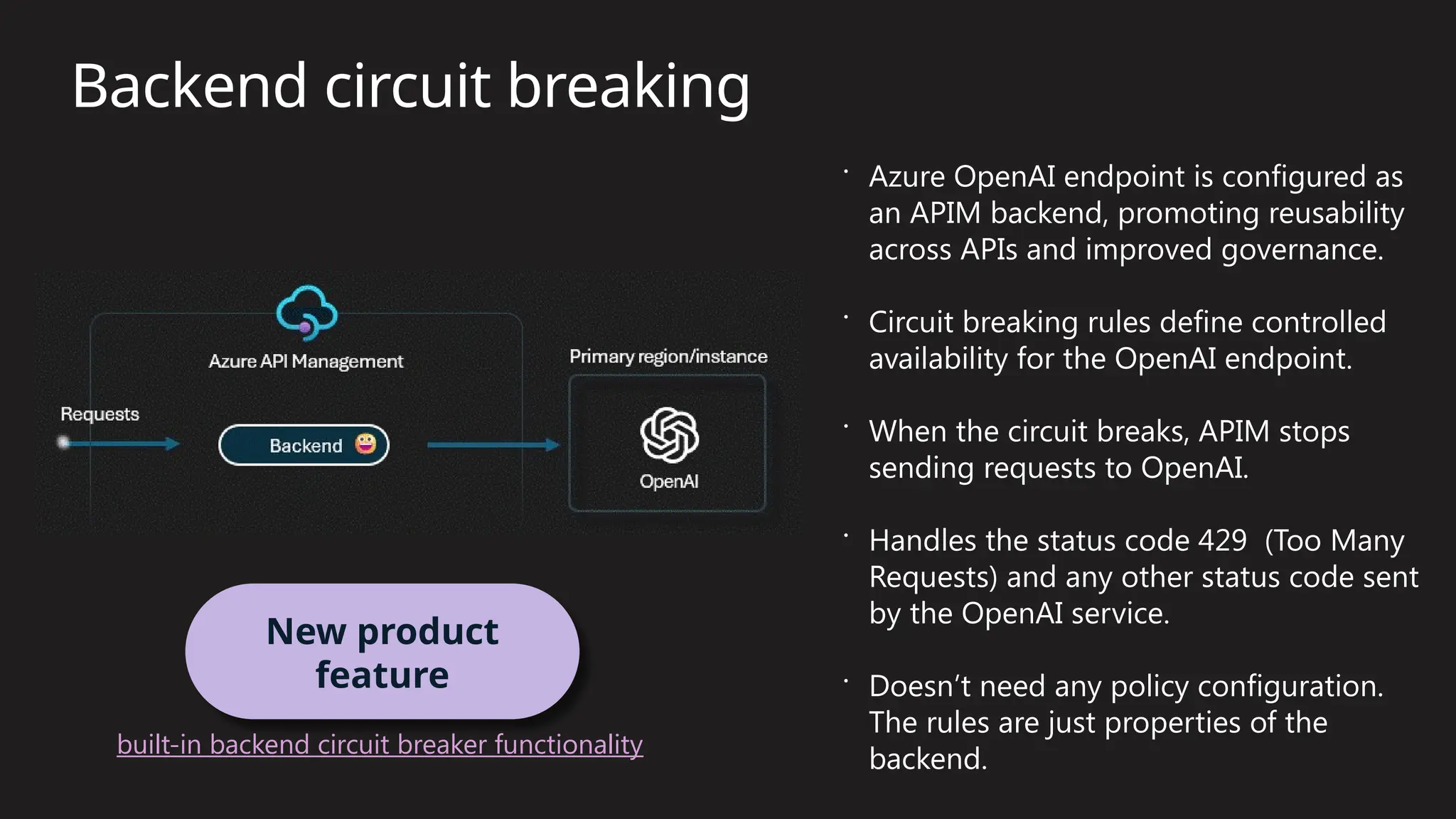

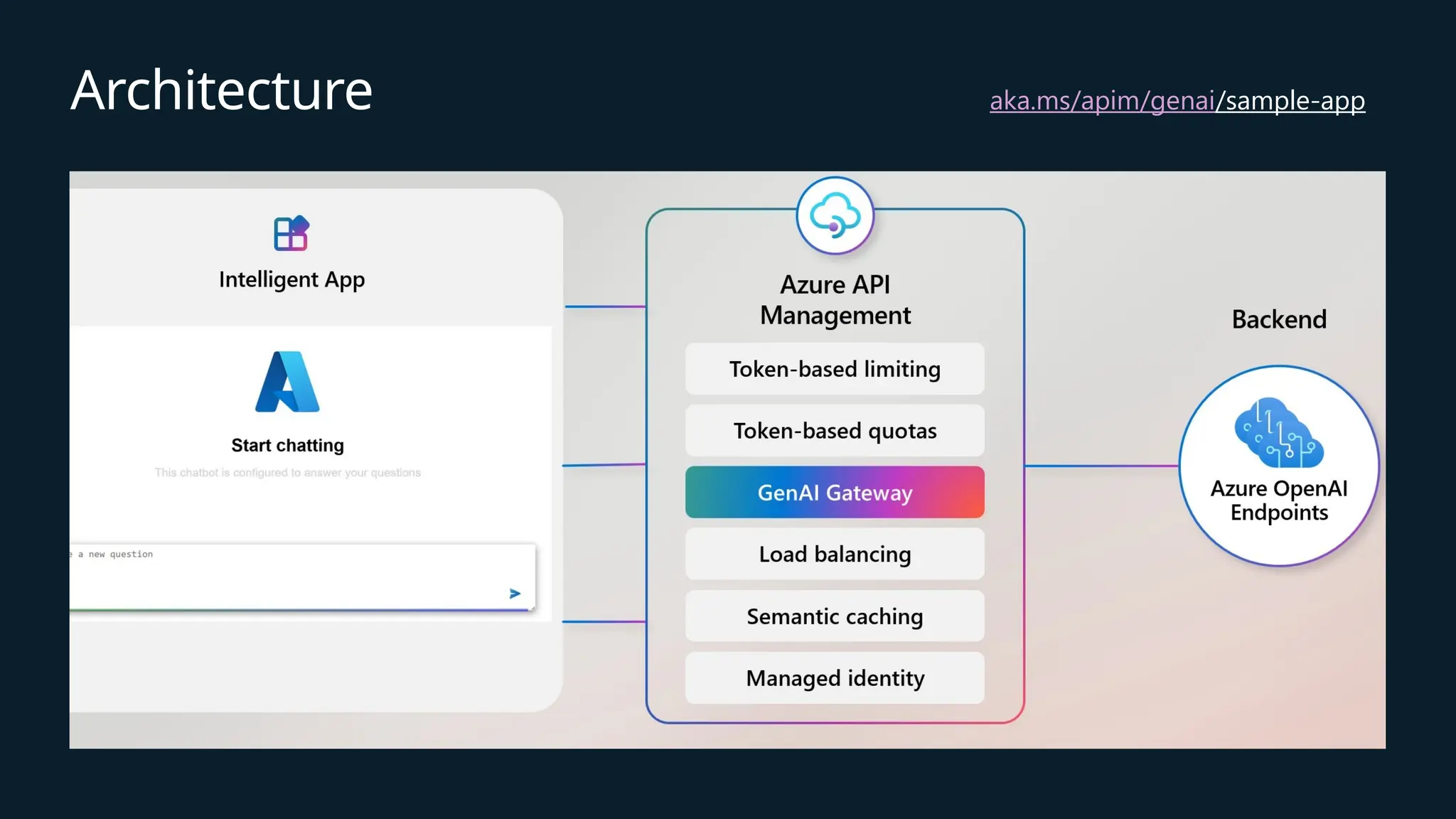

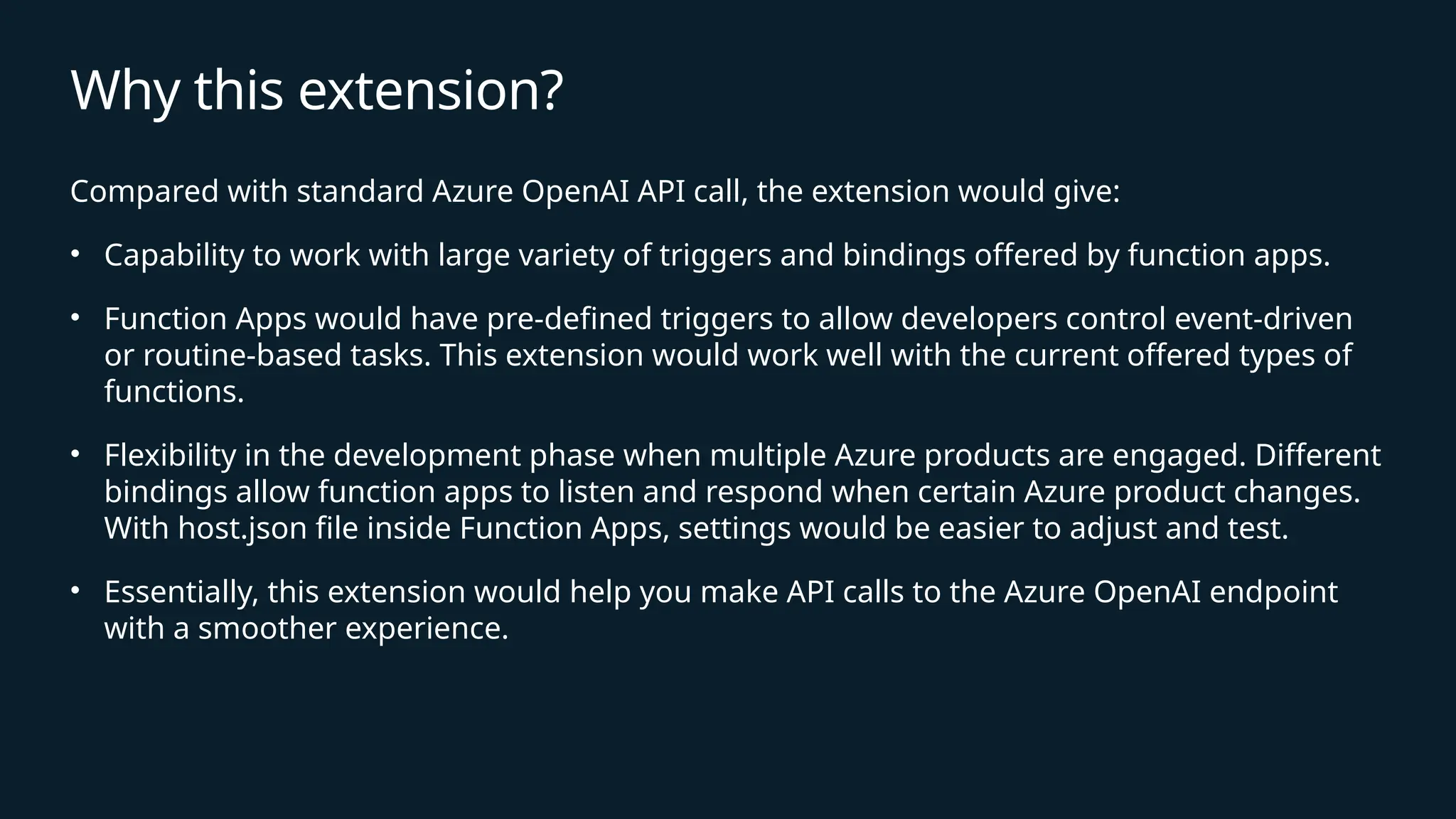

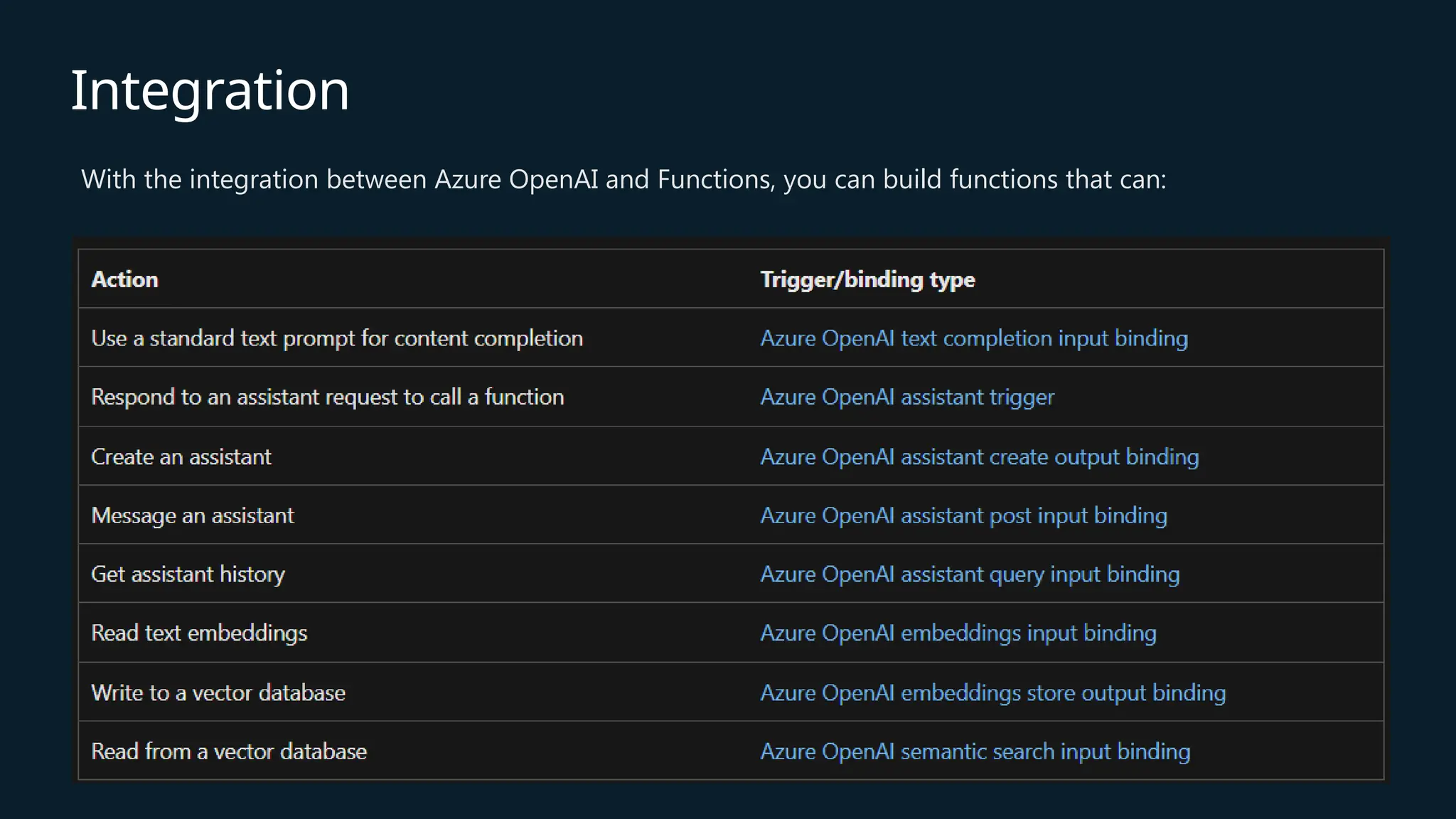

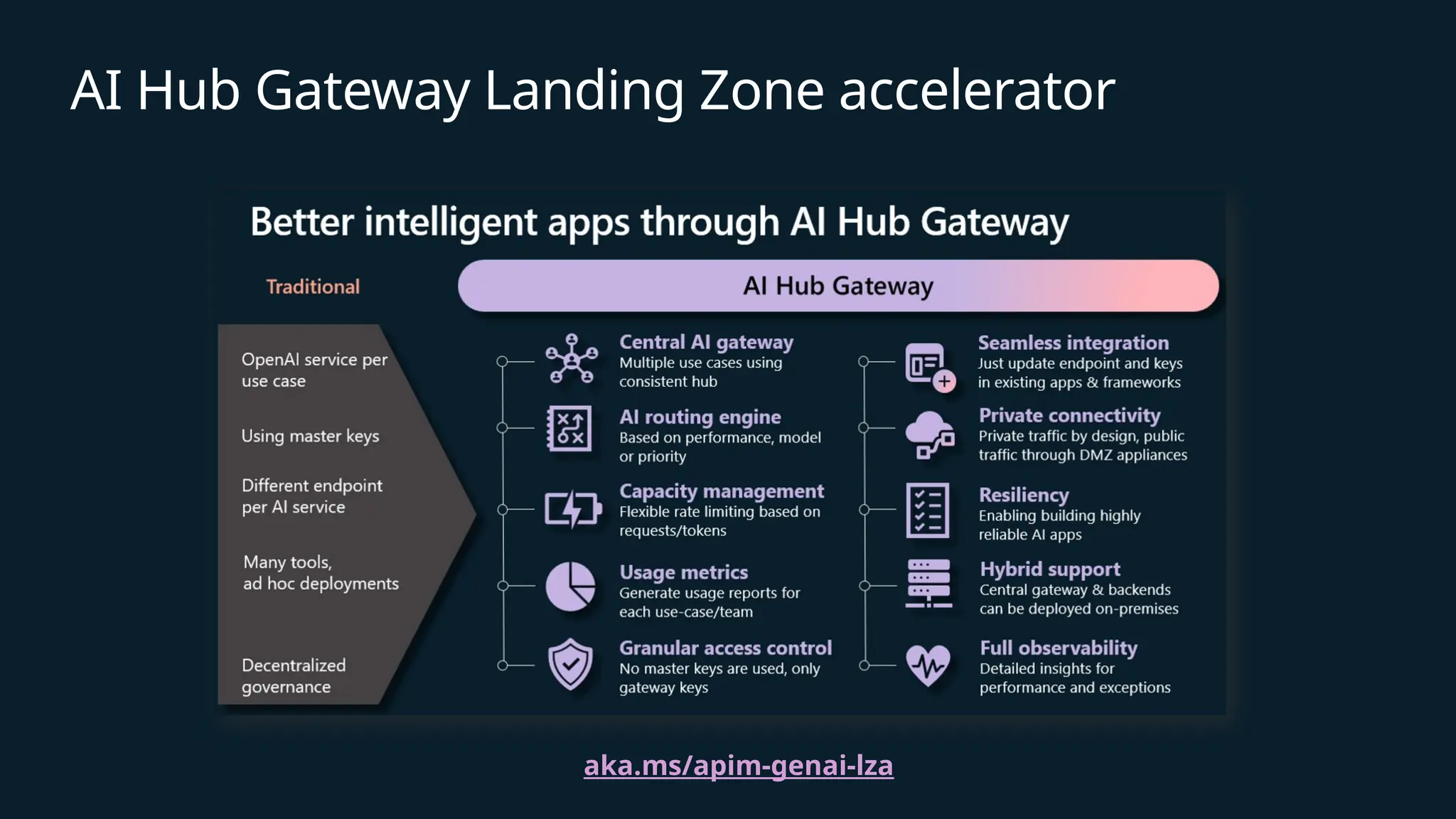

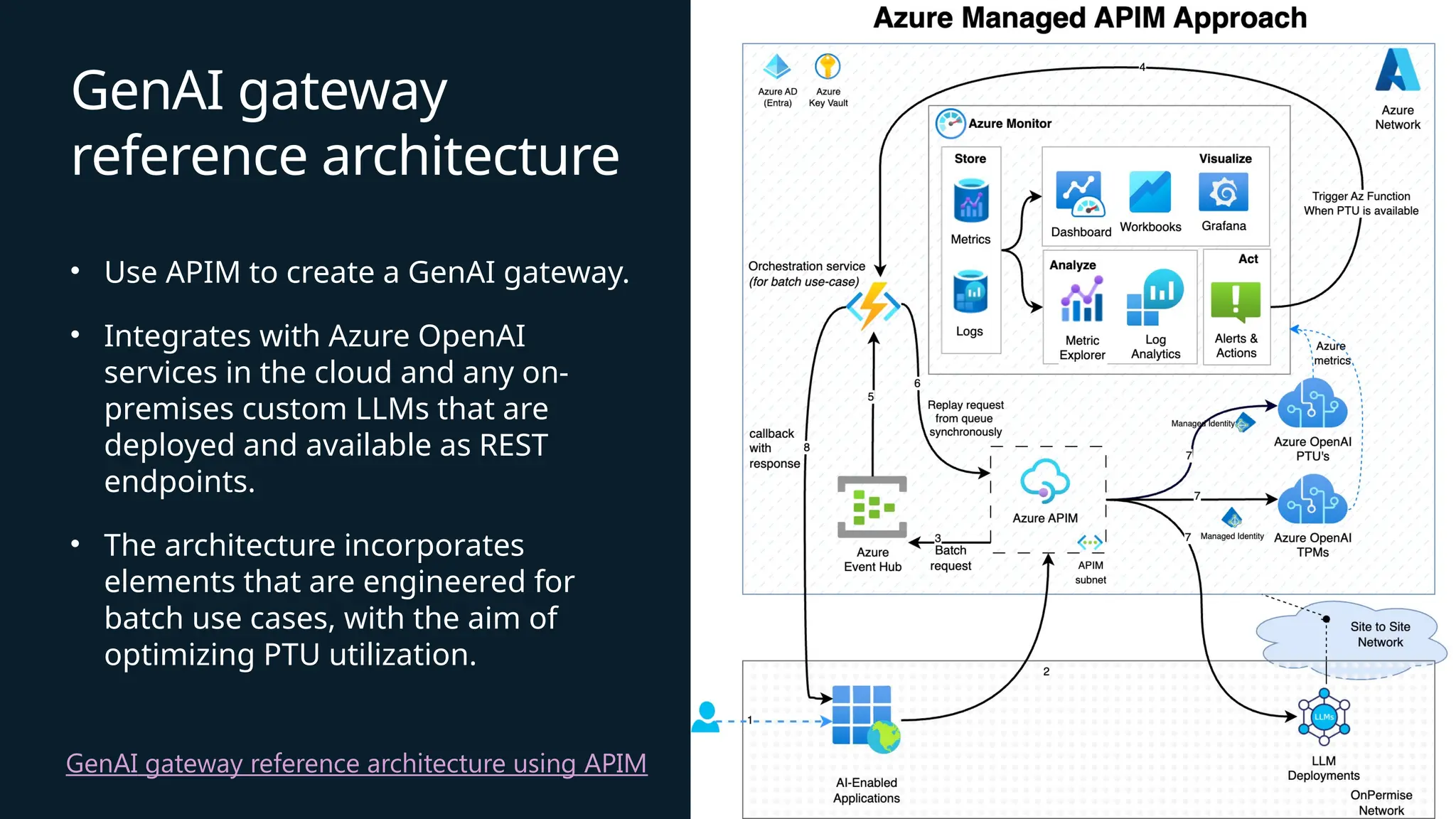

Explore how Azure API Management and Azure Functions integrate with OpenAI to build intelligent, scalable, and secure applications. This presentation covers how API Management adds control, security, and monitoring to AI API calls, while Azure Functions provides event-driven processing and efficient handling of data flows. I will demonstrate practical scenarios where this combination improves AI-driven solutions, including chatbot development and data processing automation. Attendees will learn best practices for setting up API Management policies, writing Azure Functions, and using OpenAI's capabilities. Join me to get the most out of Azure services in your AI projects.