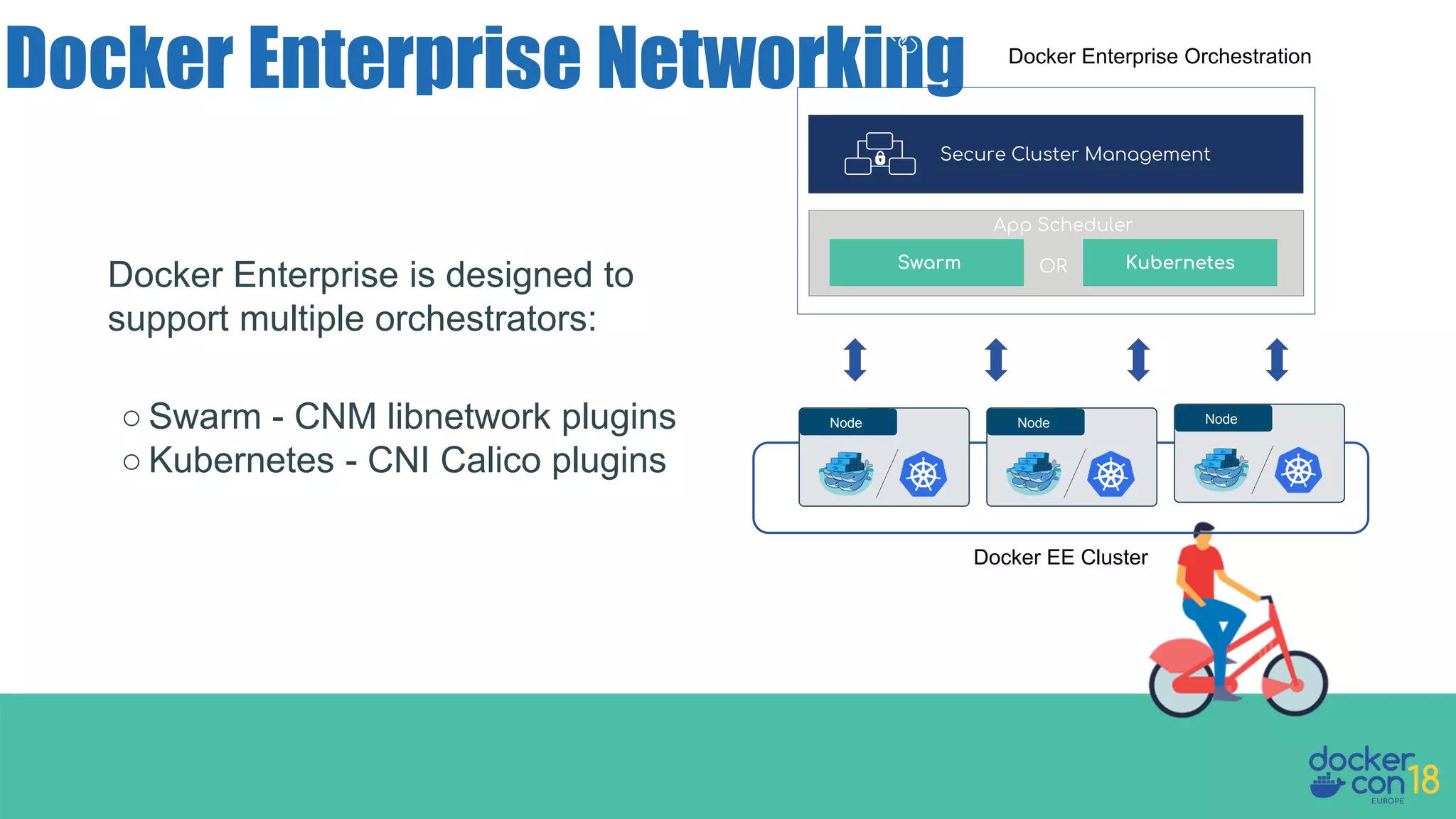

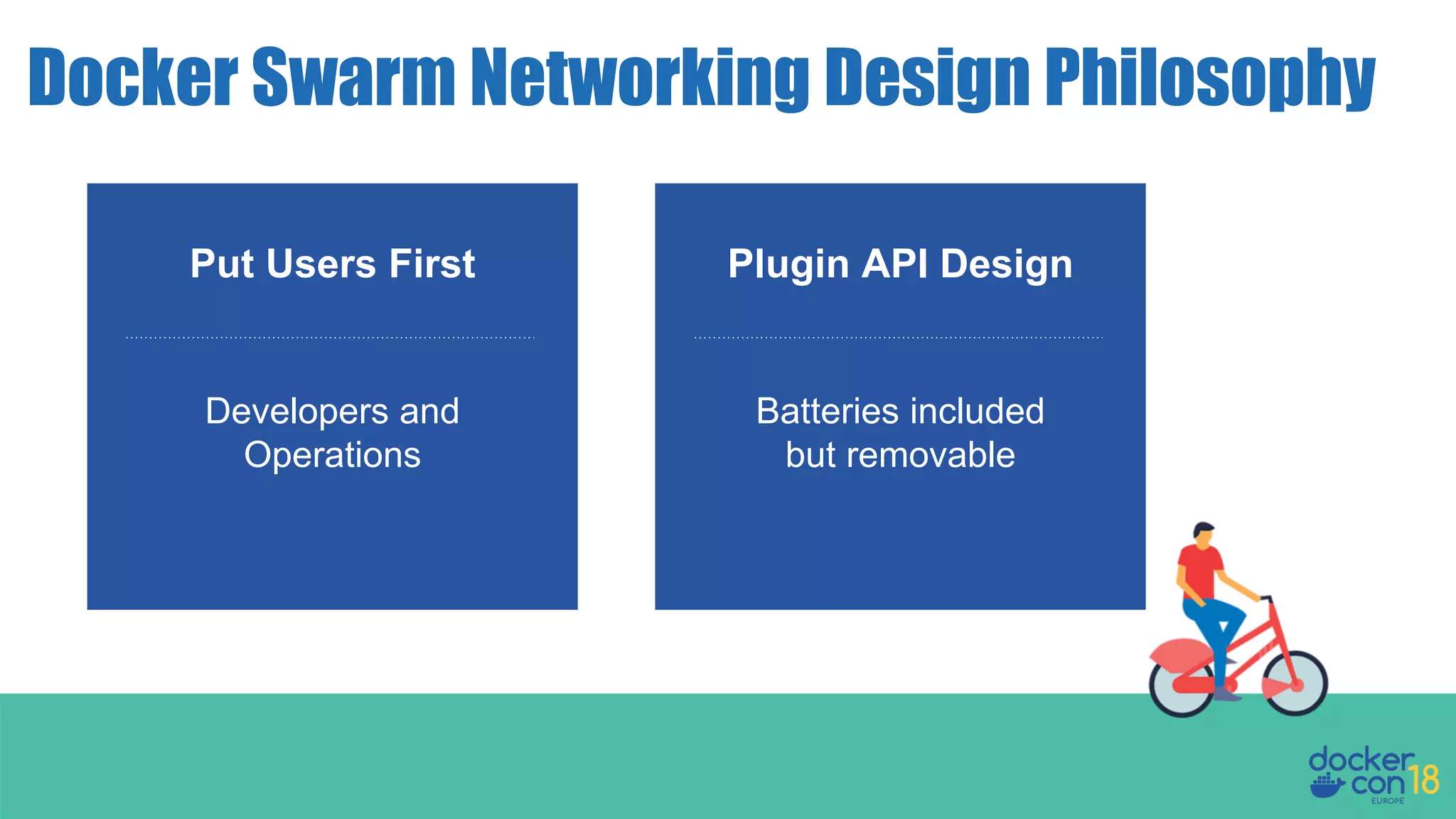

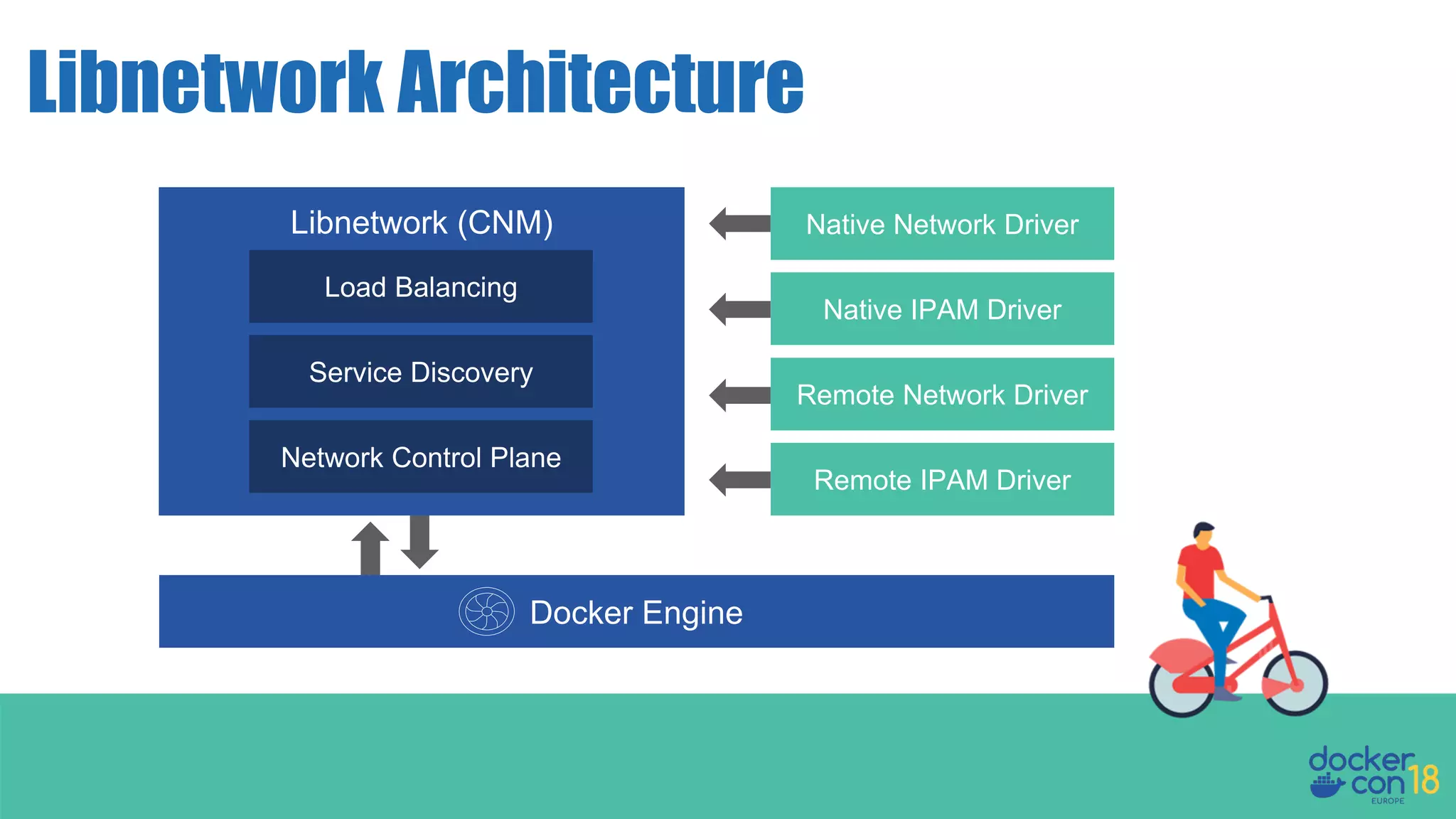

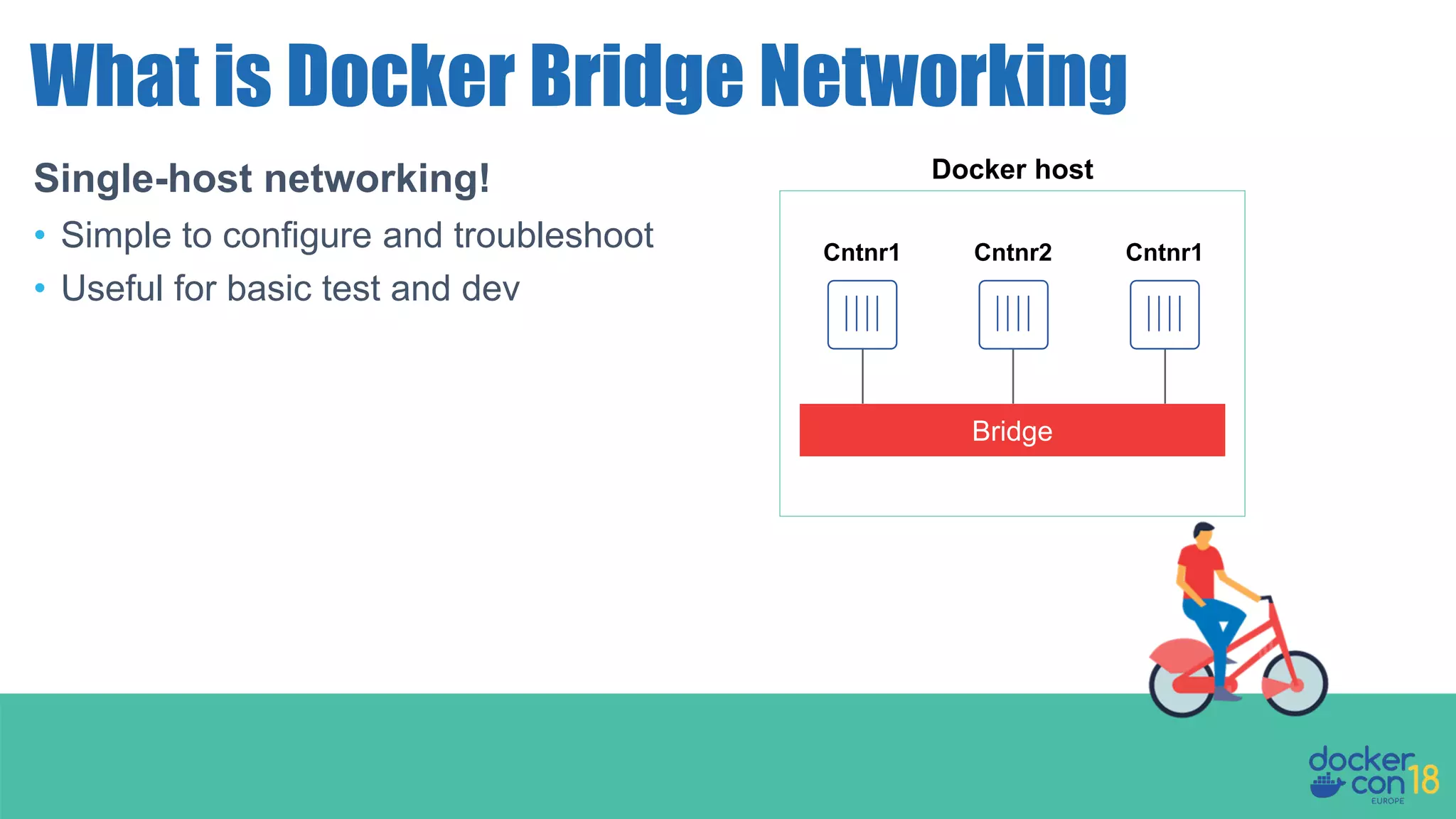

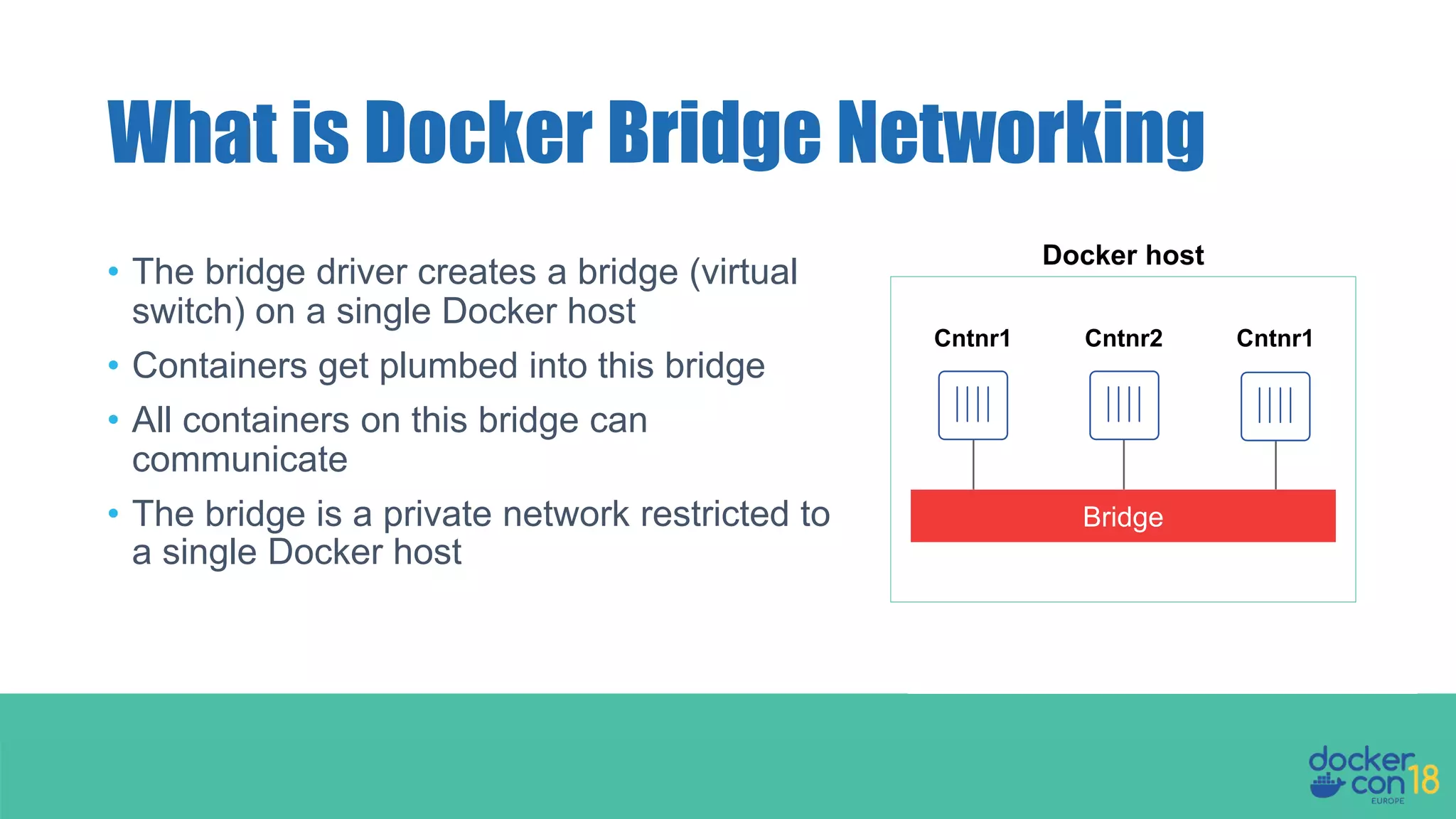

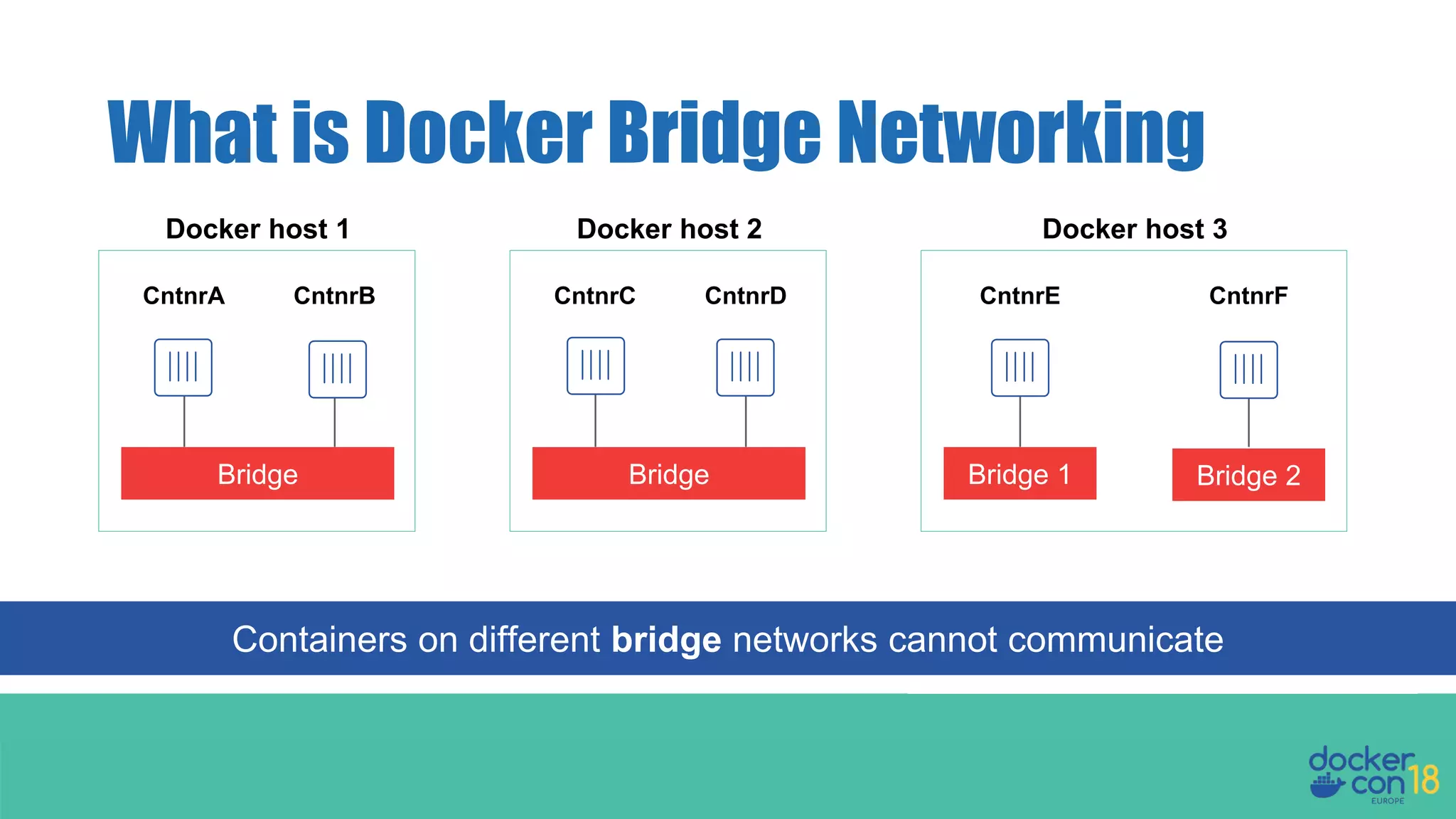

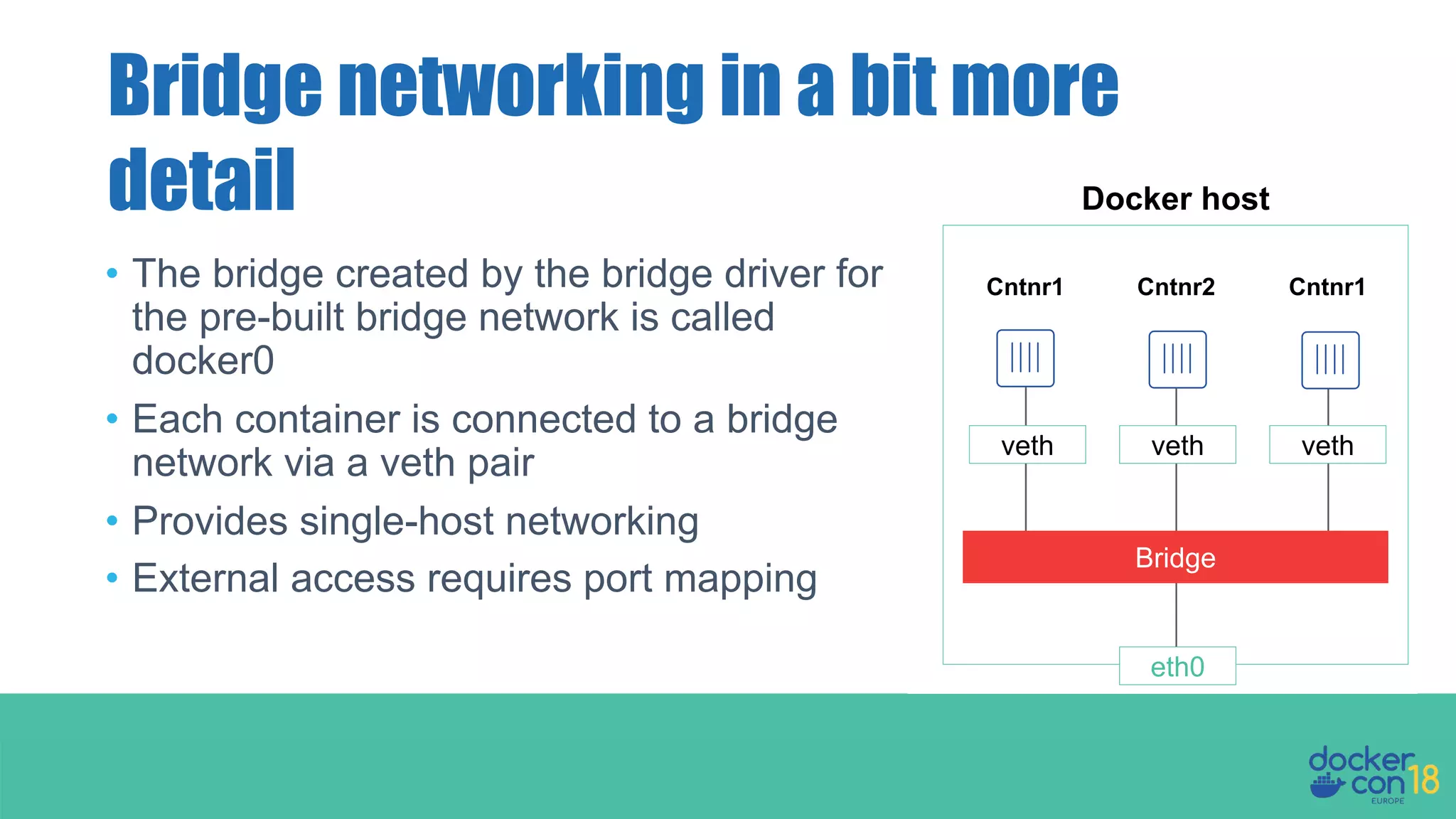

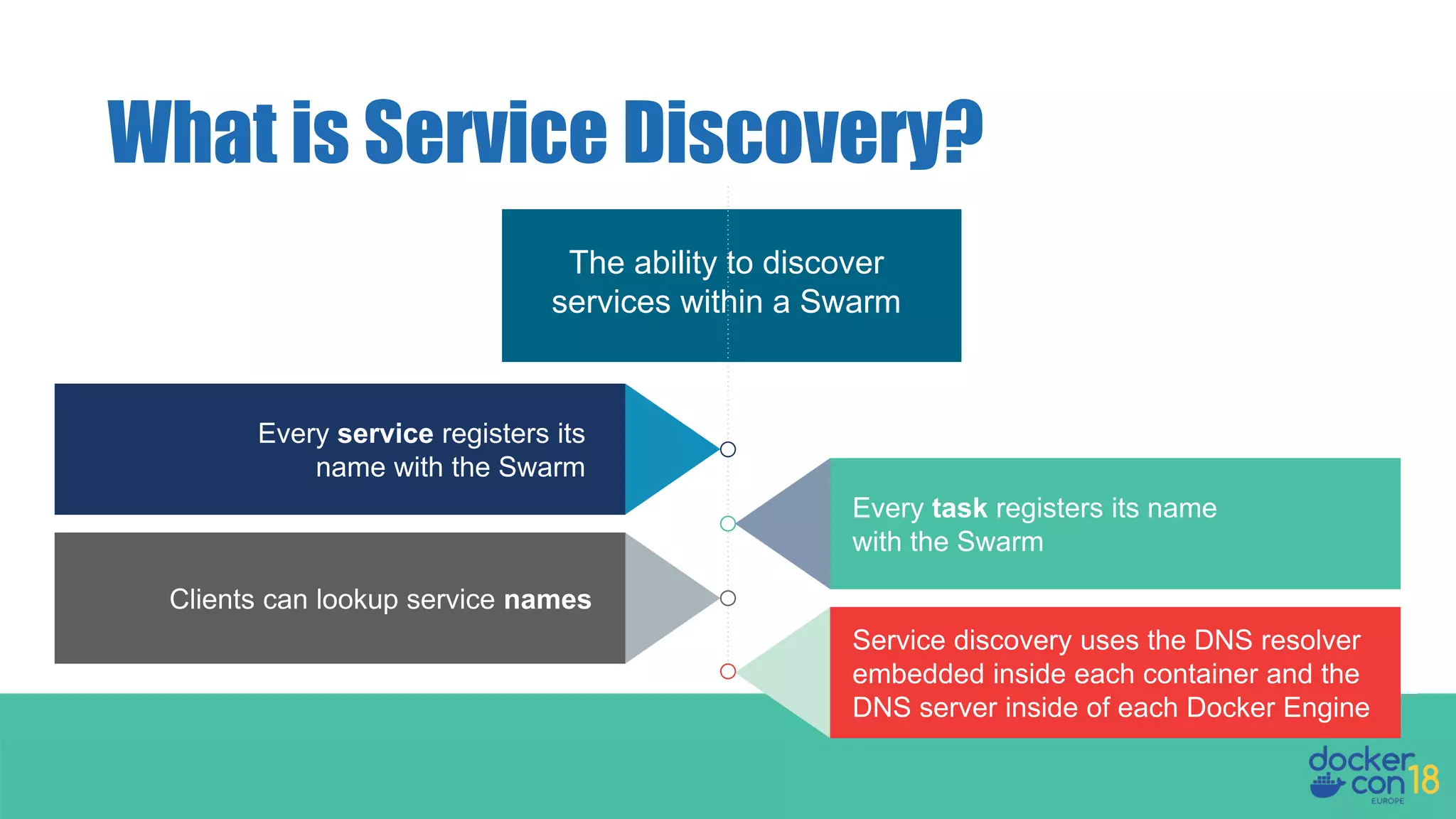

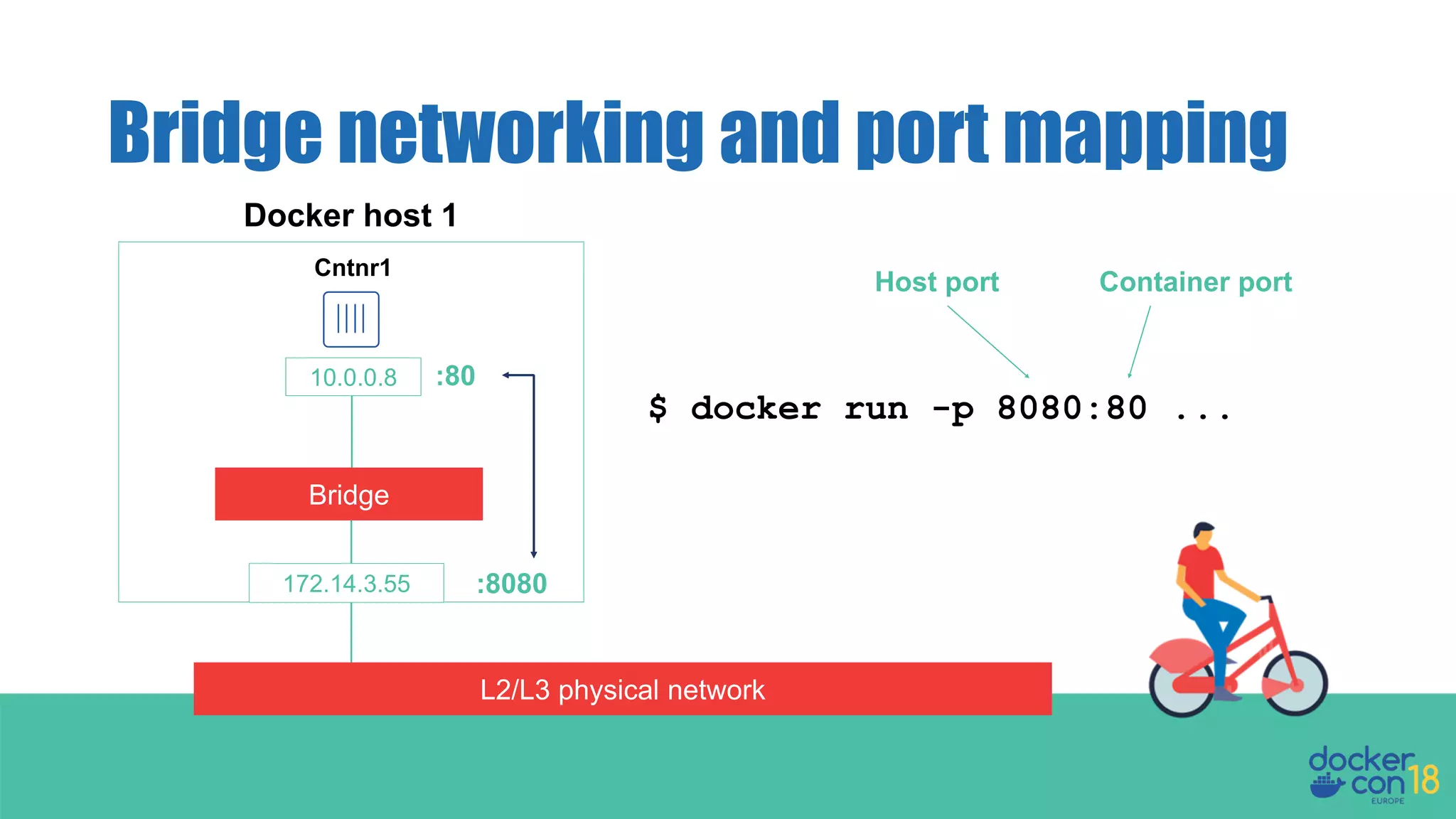

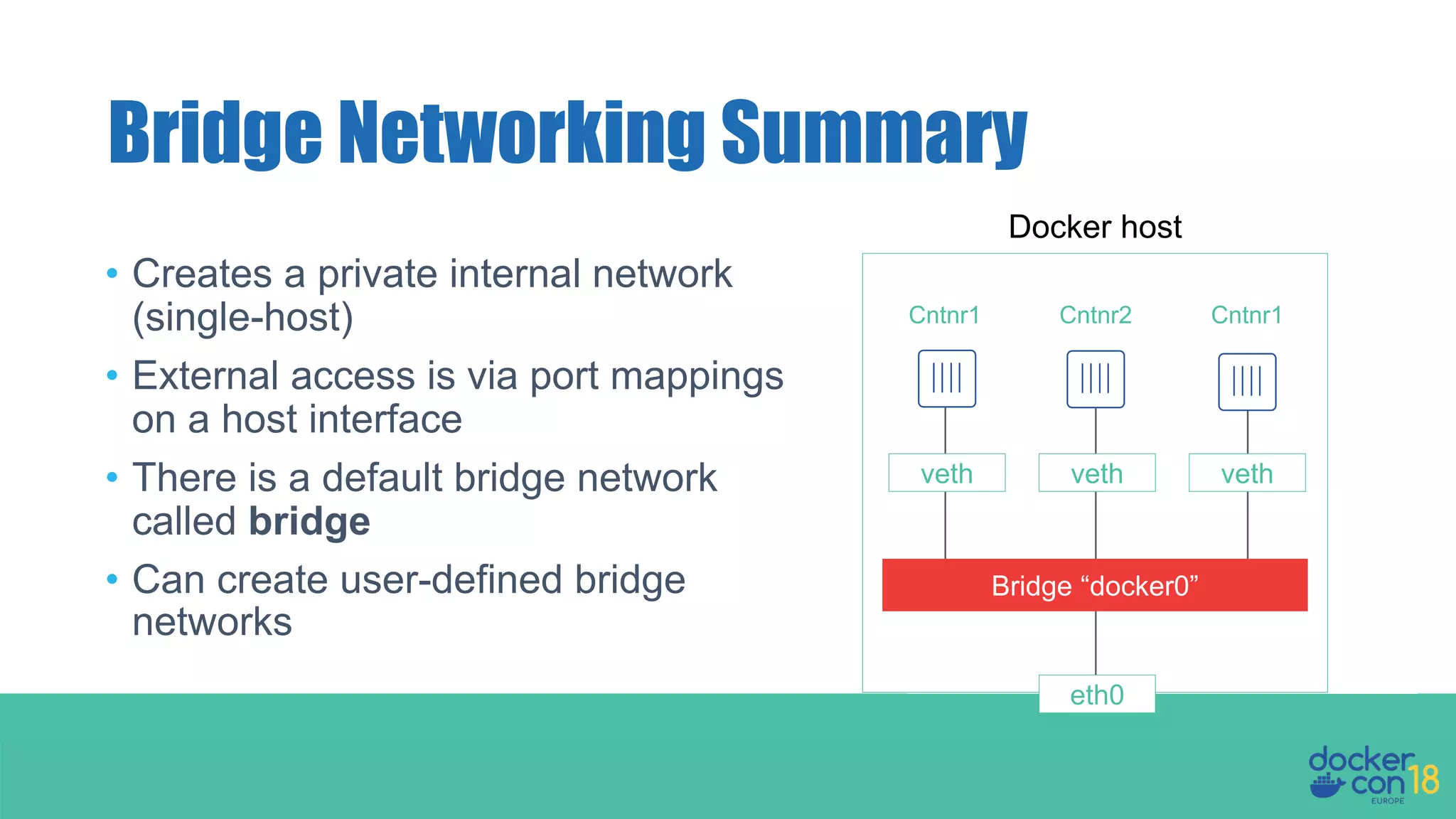

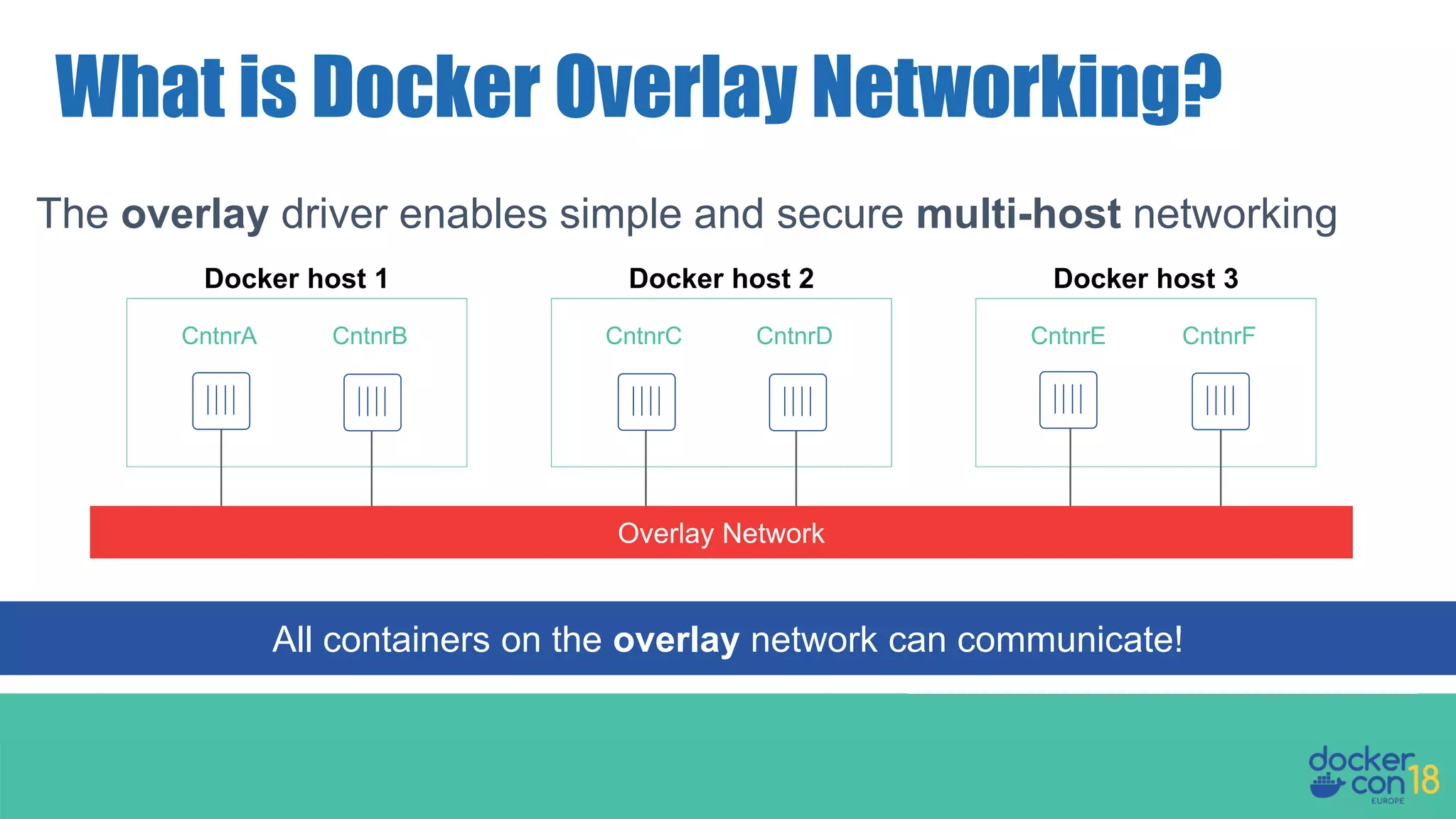

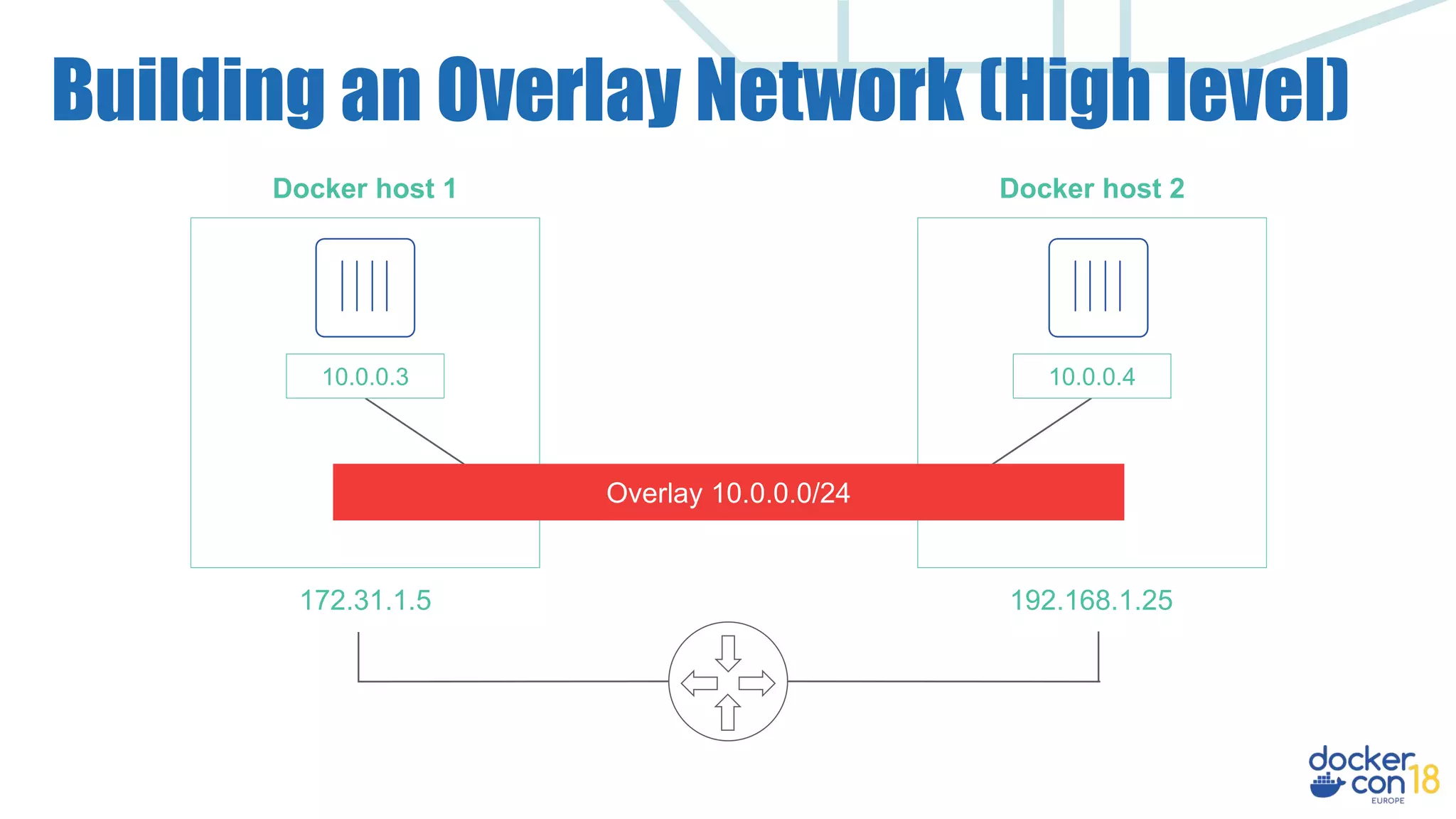

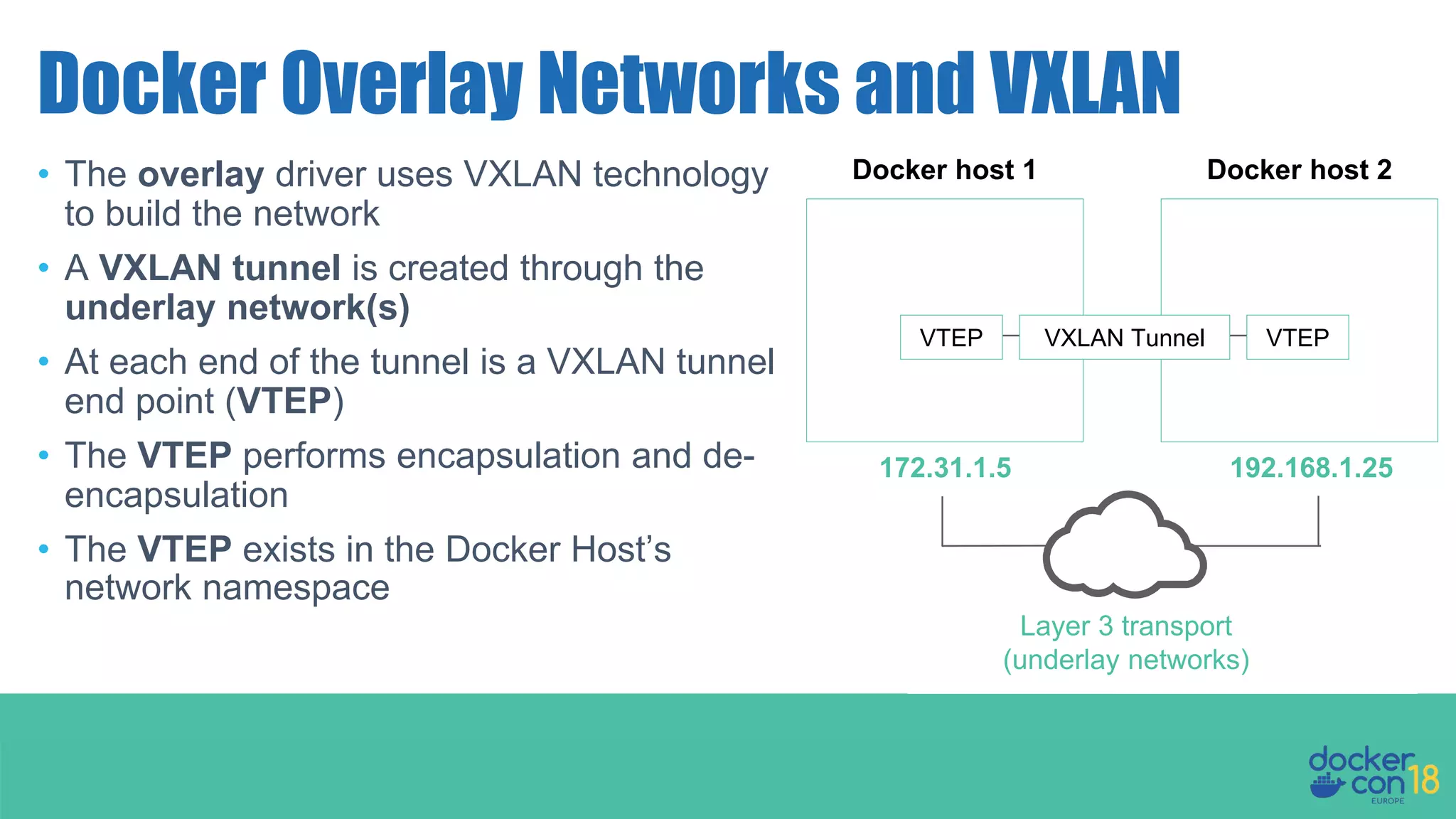

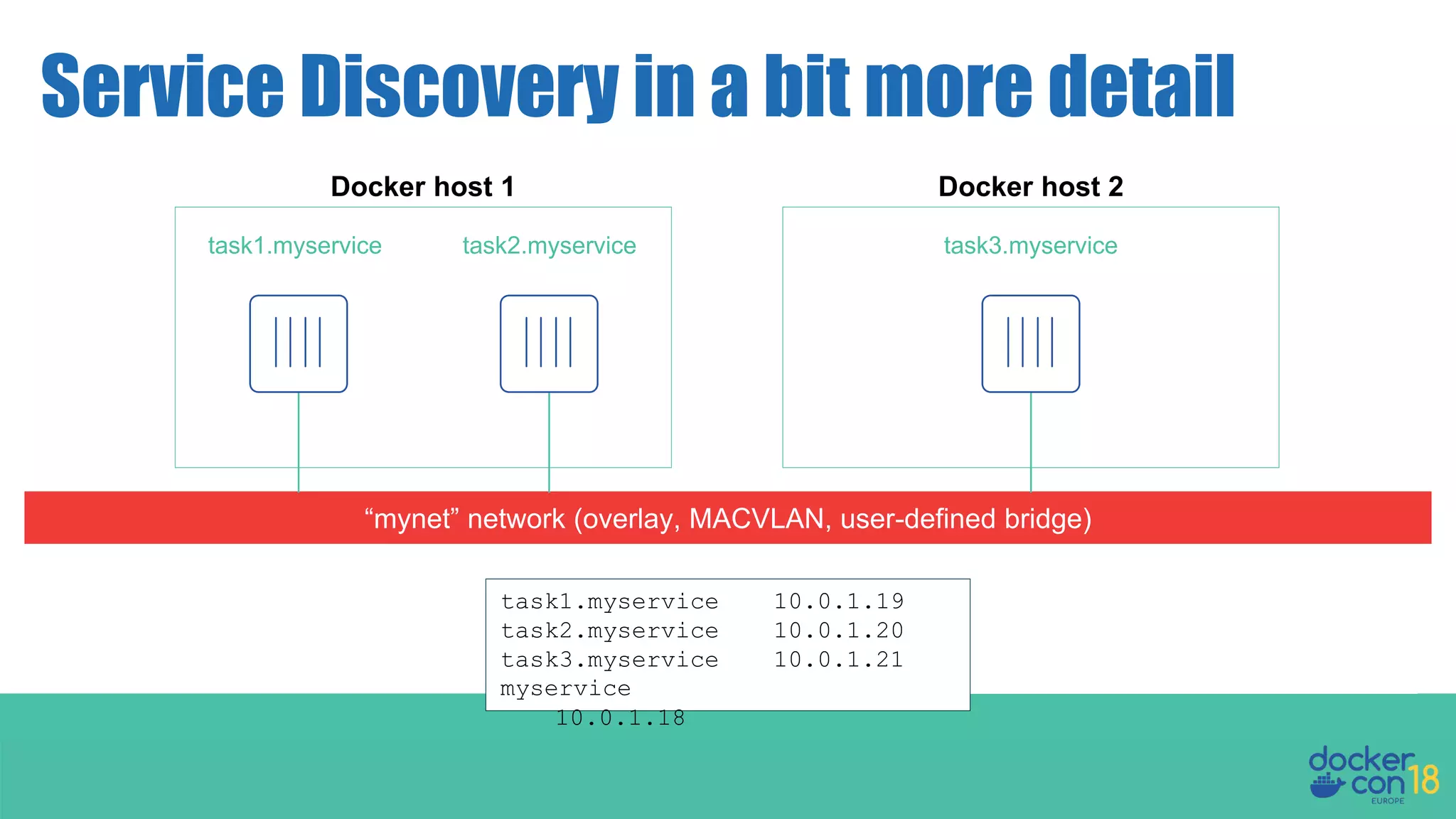

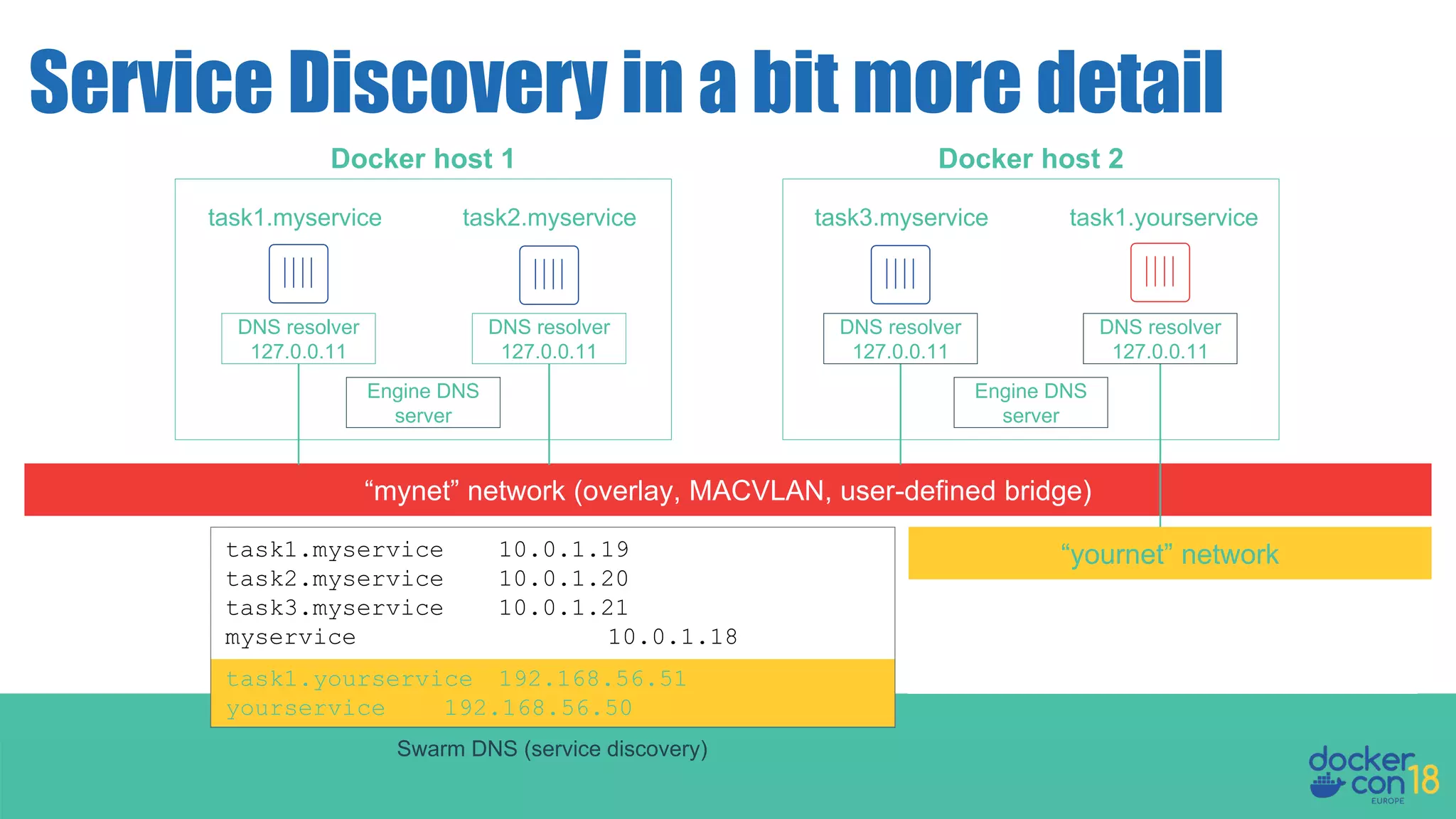

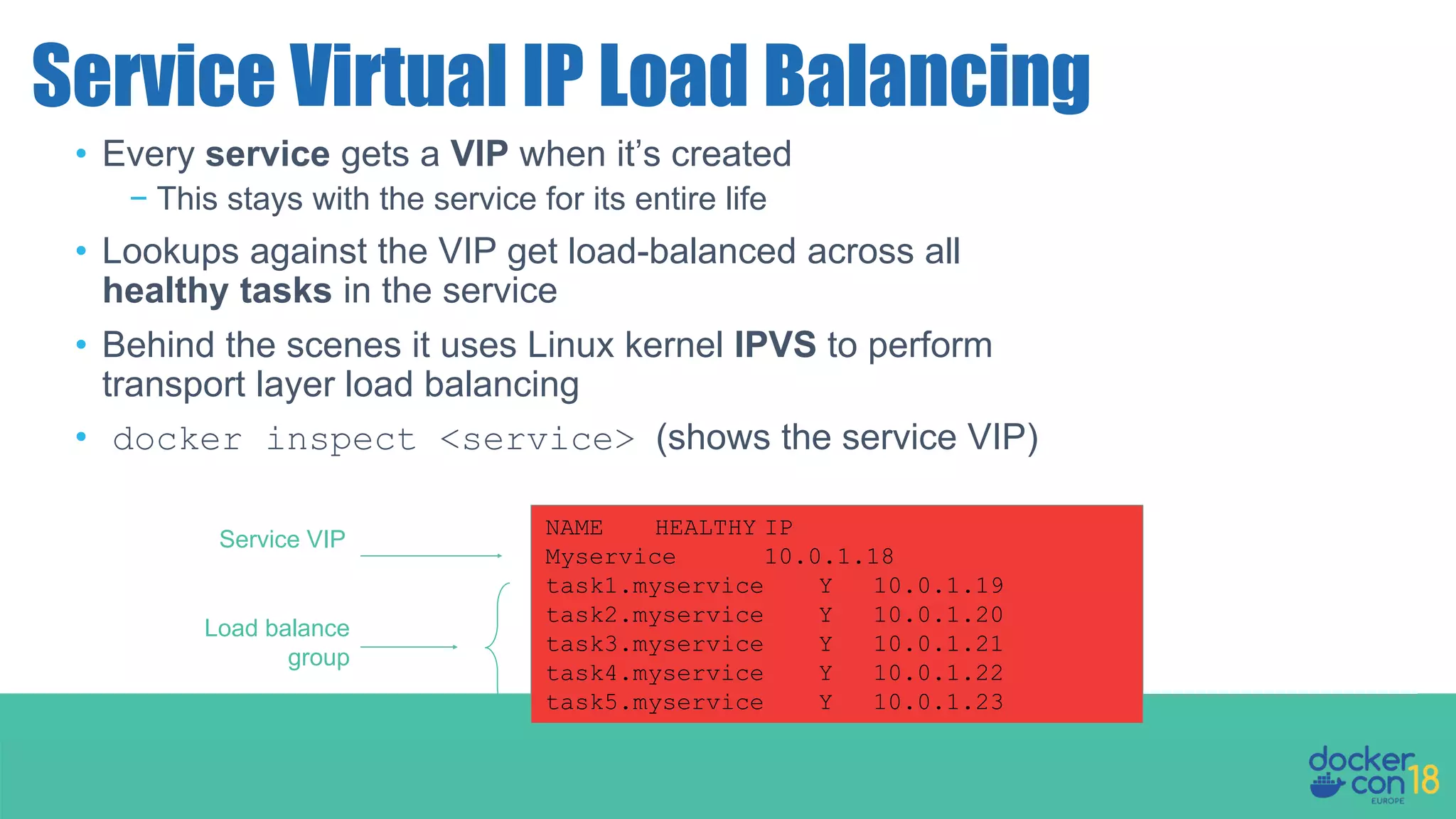

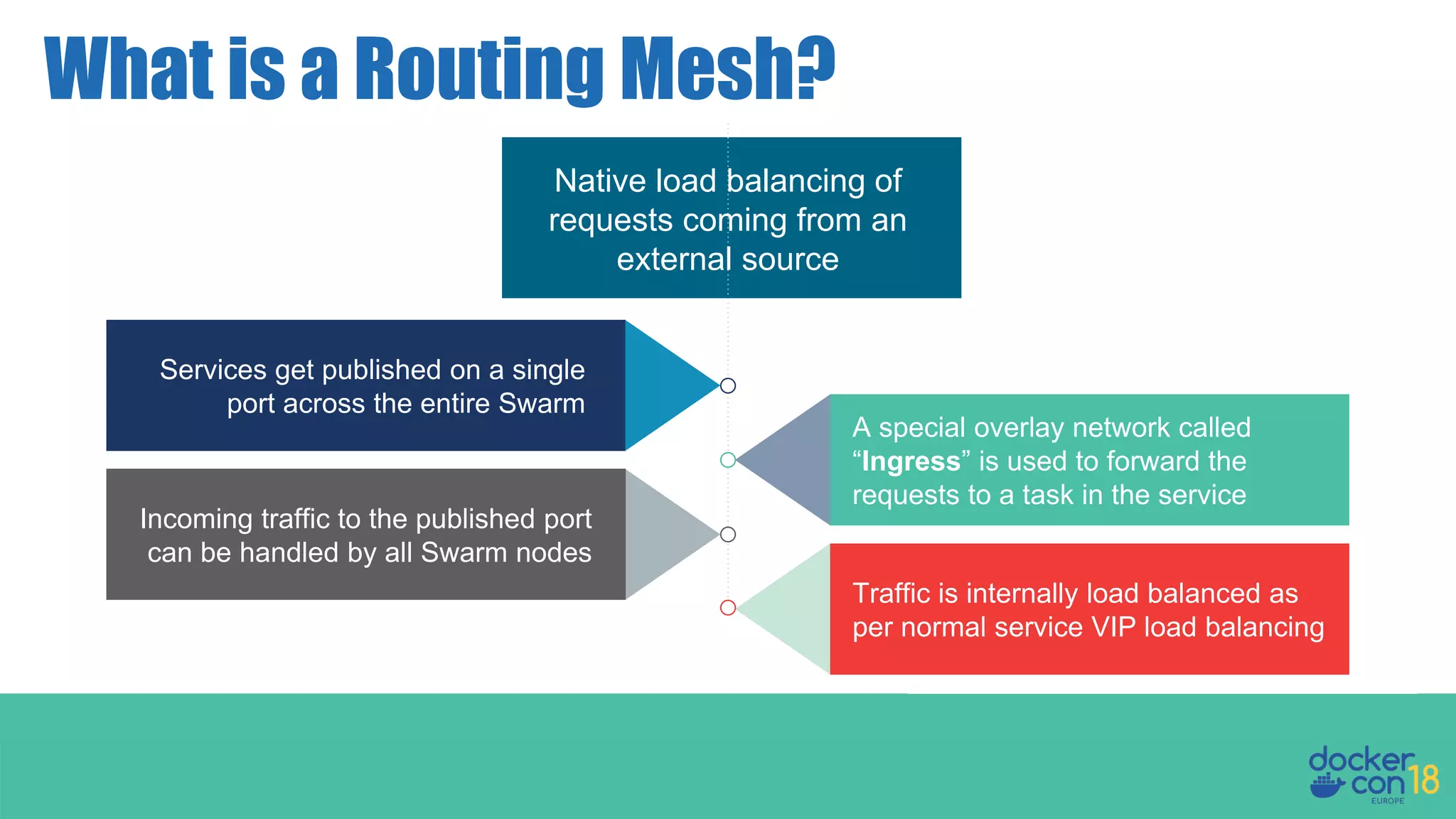

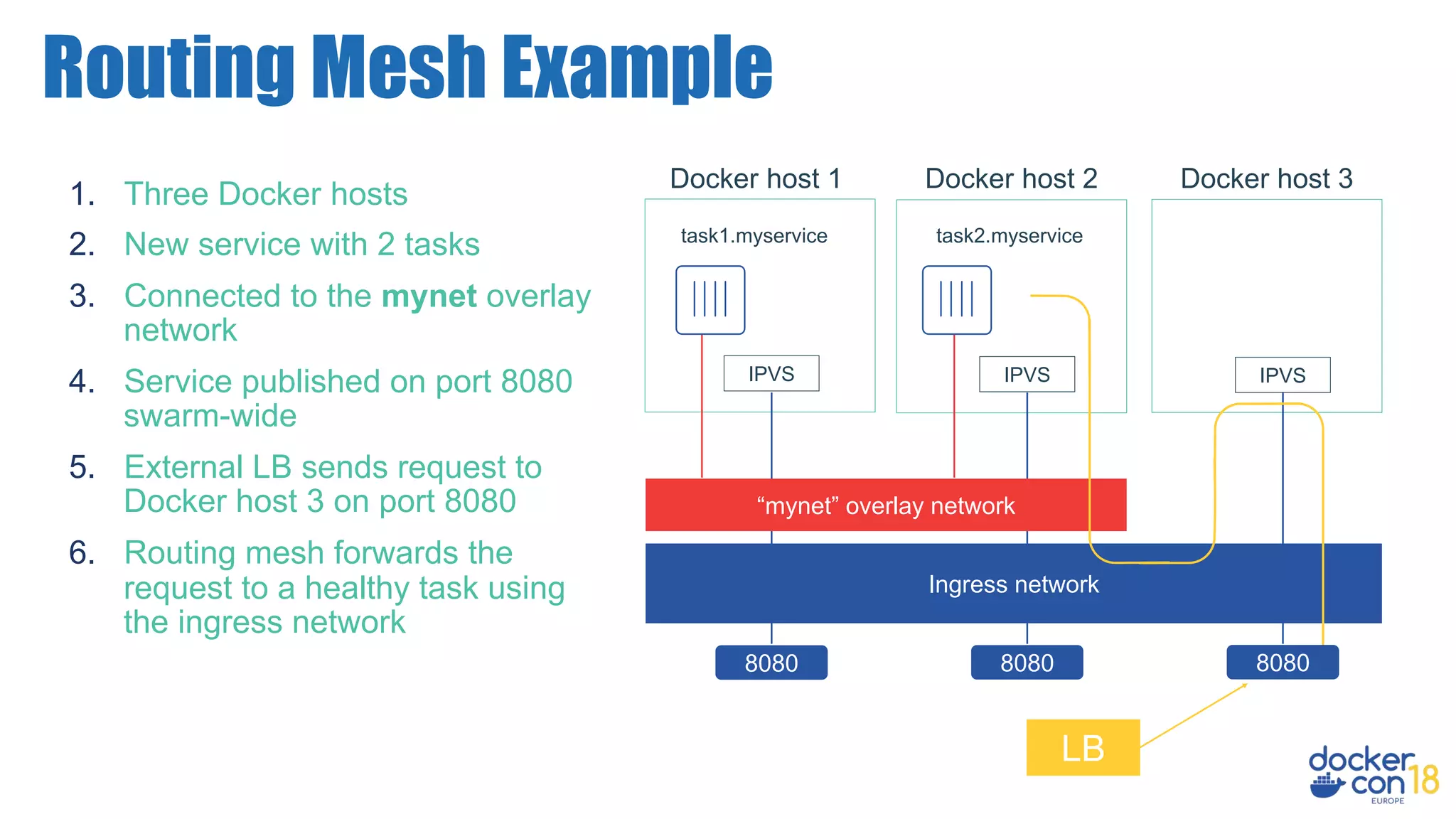

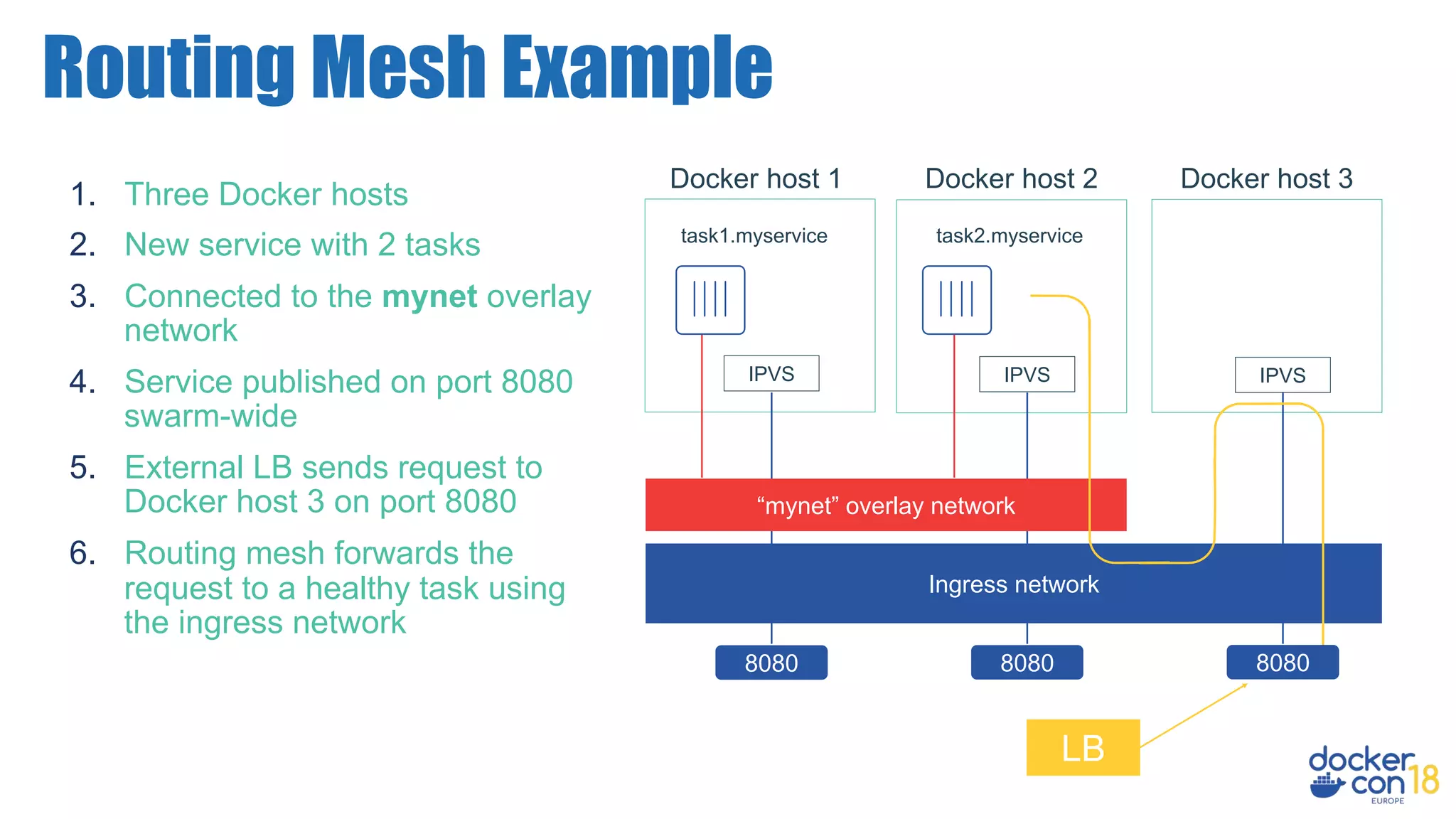

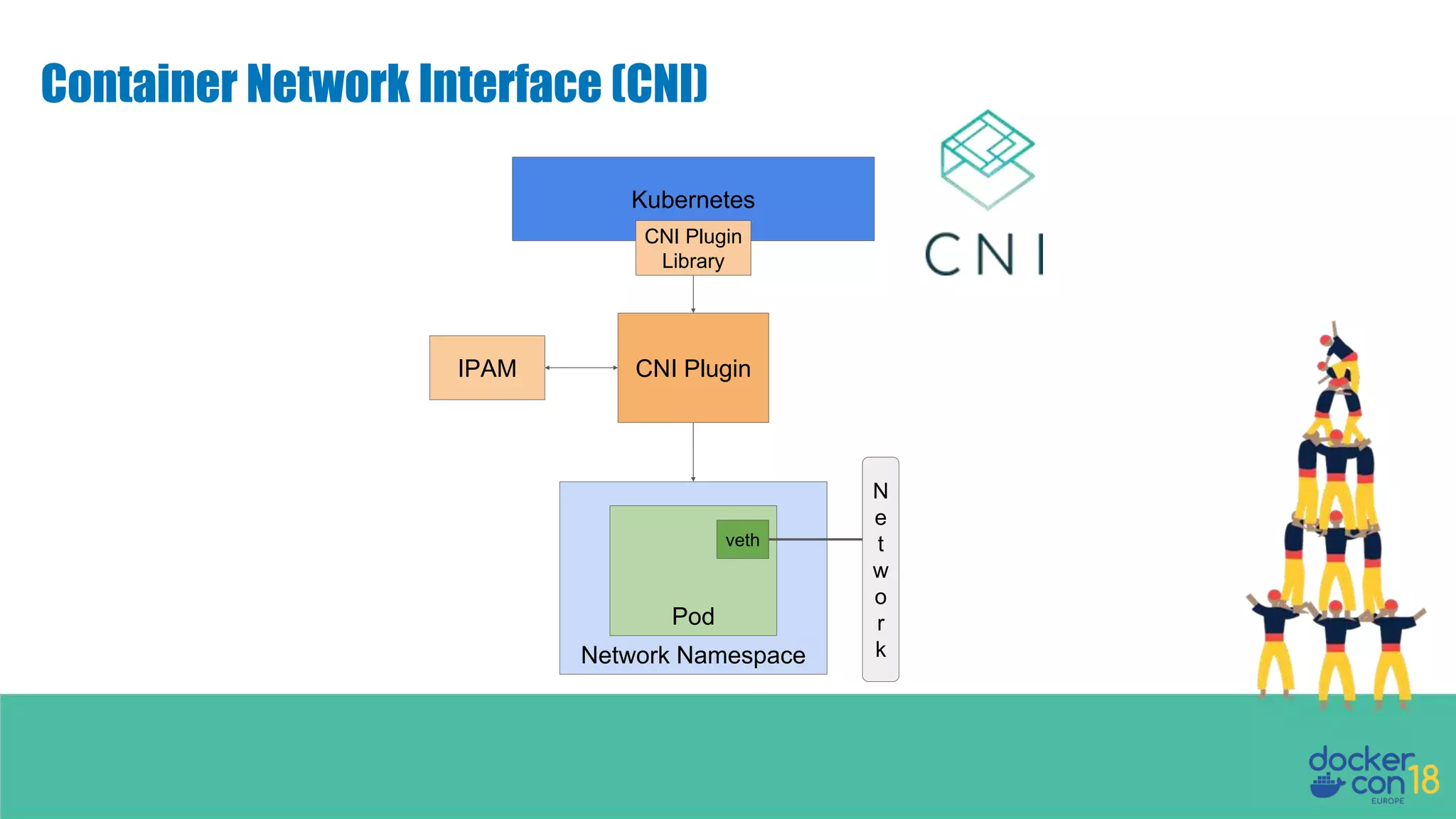

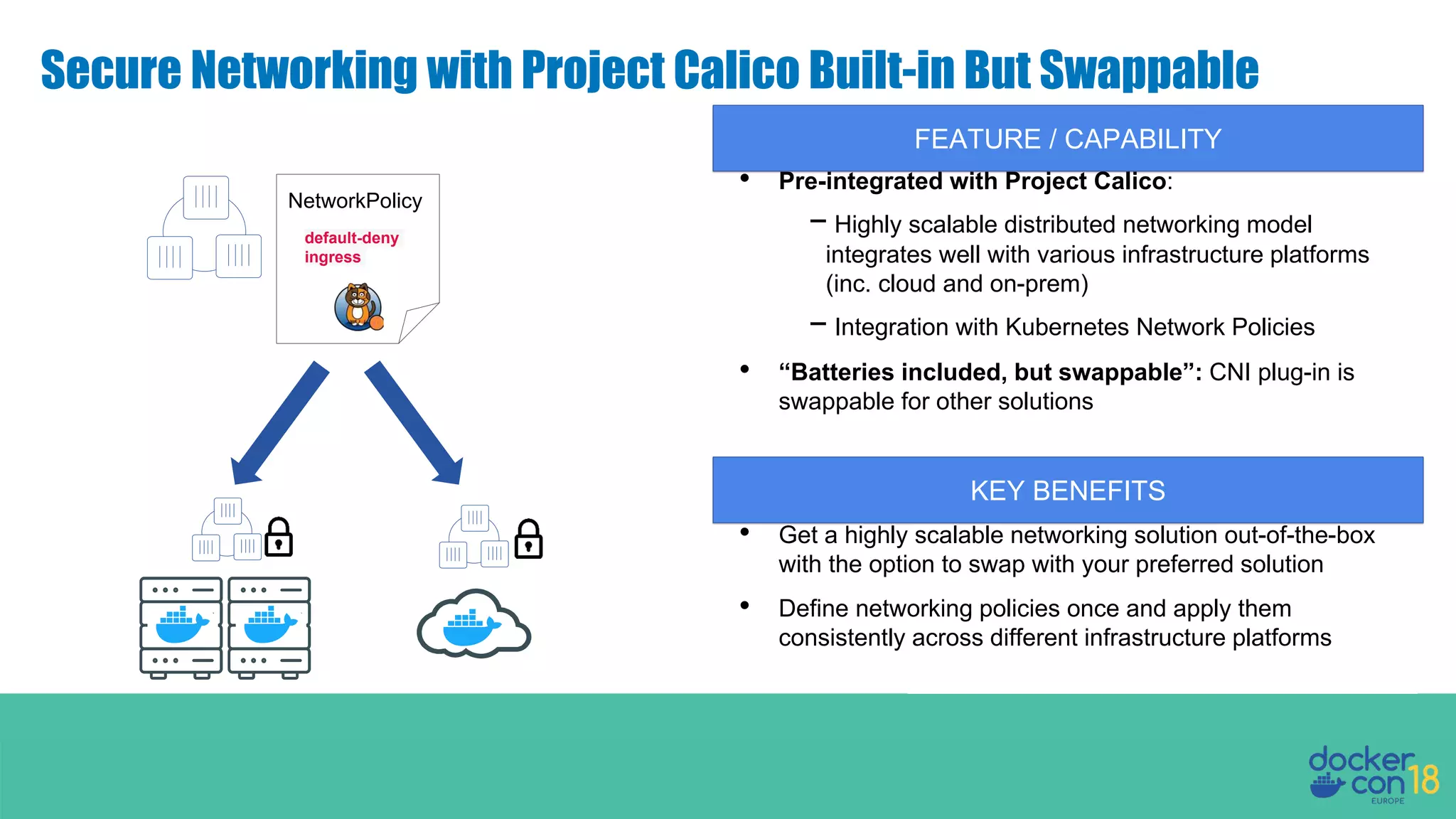

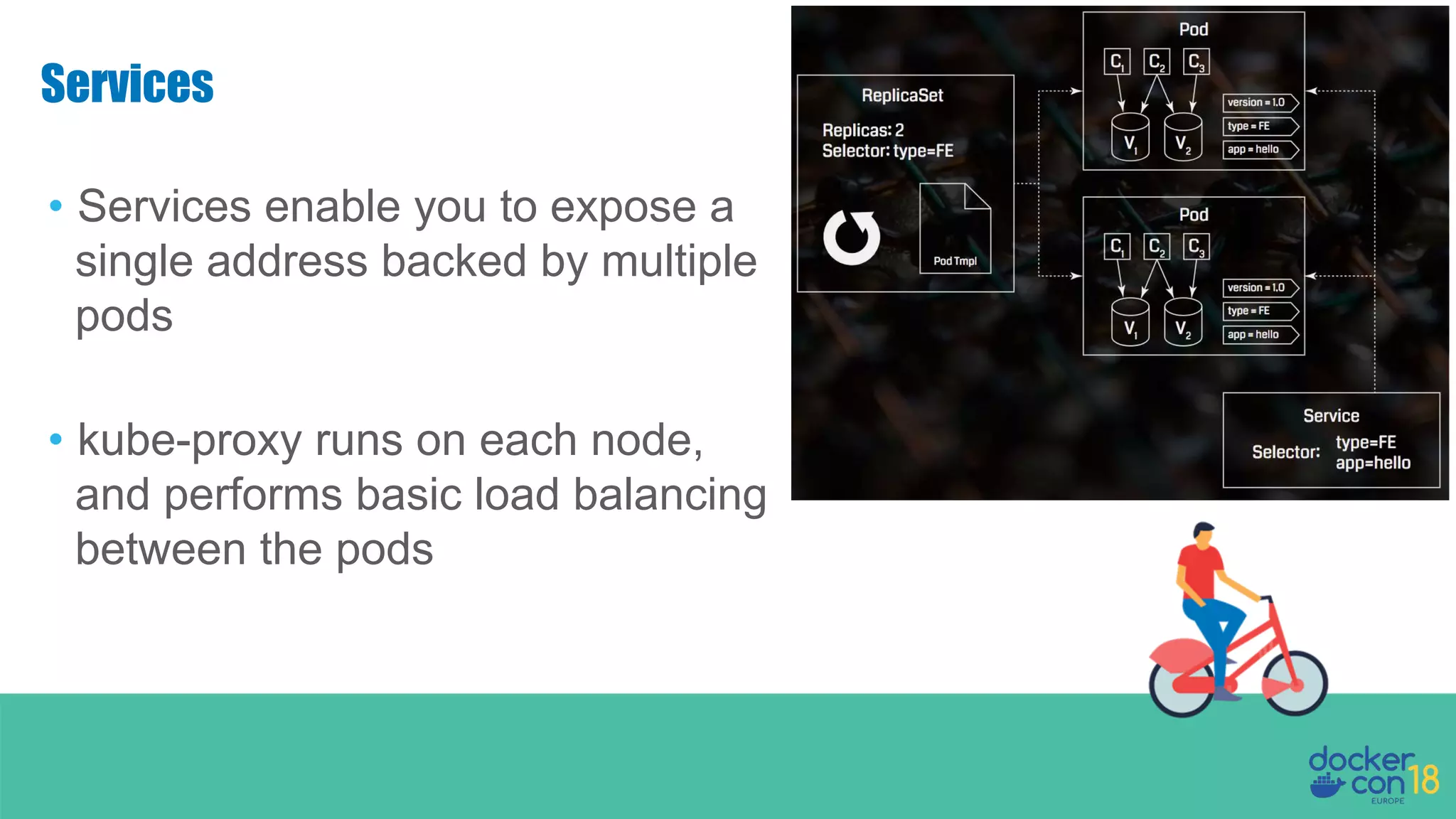

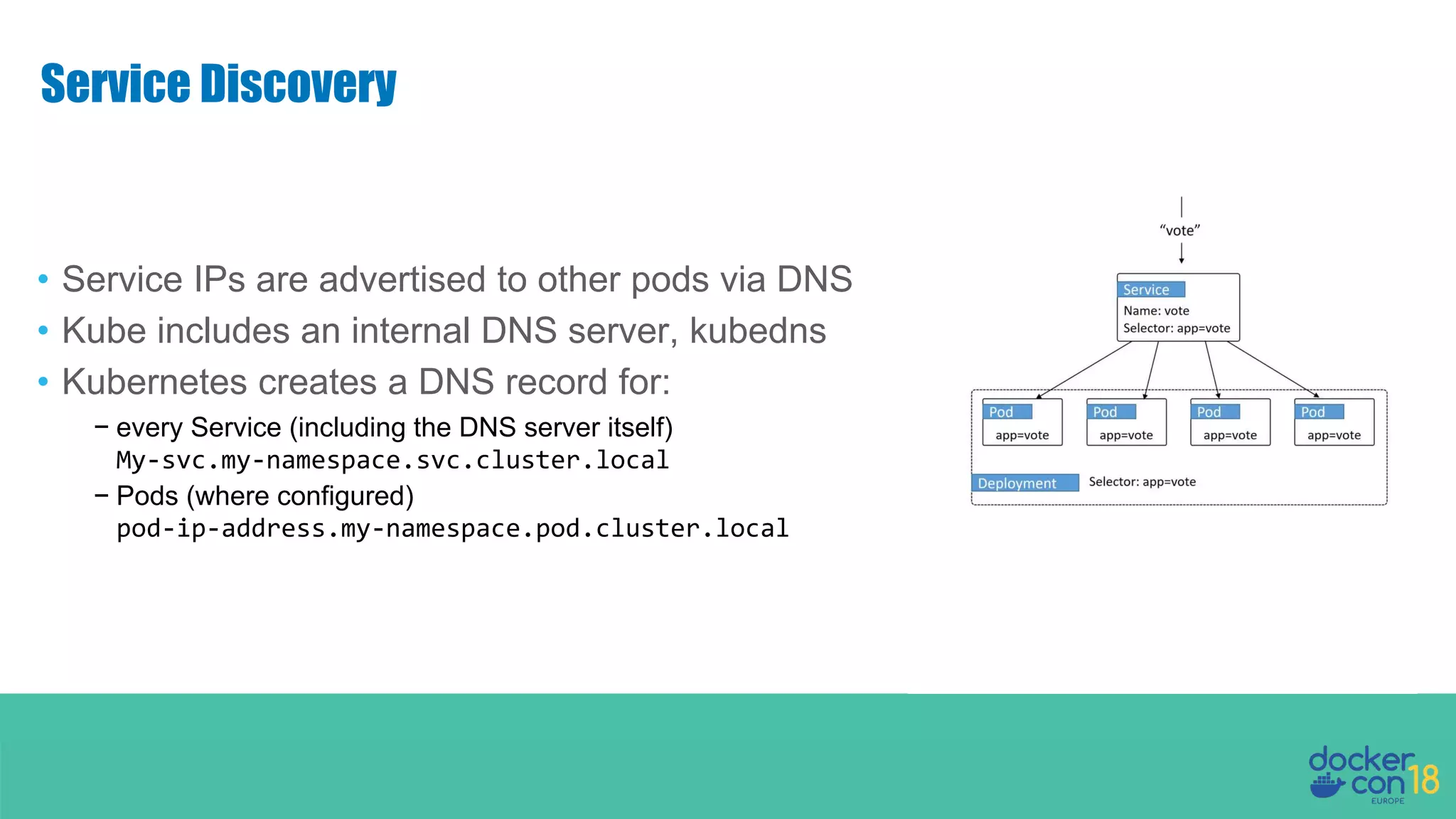

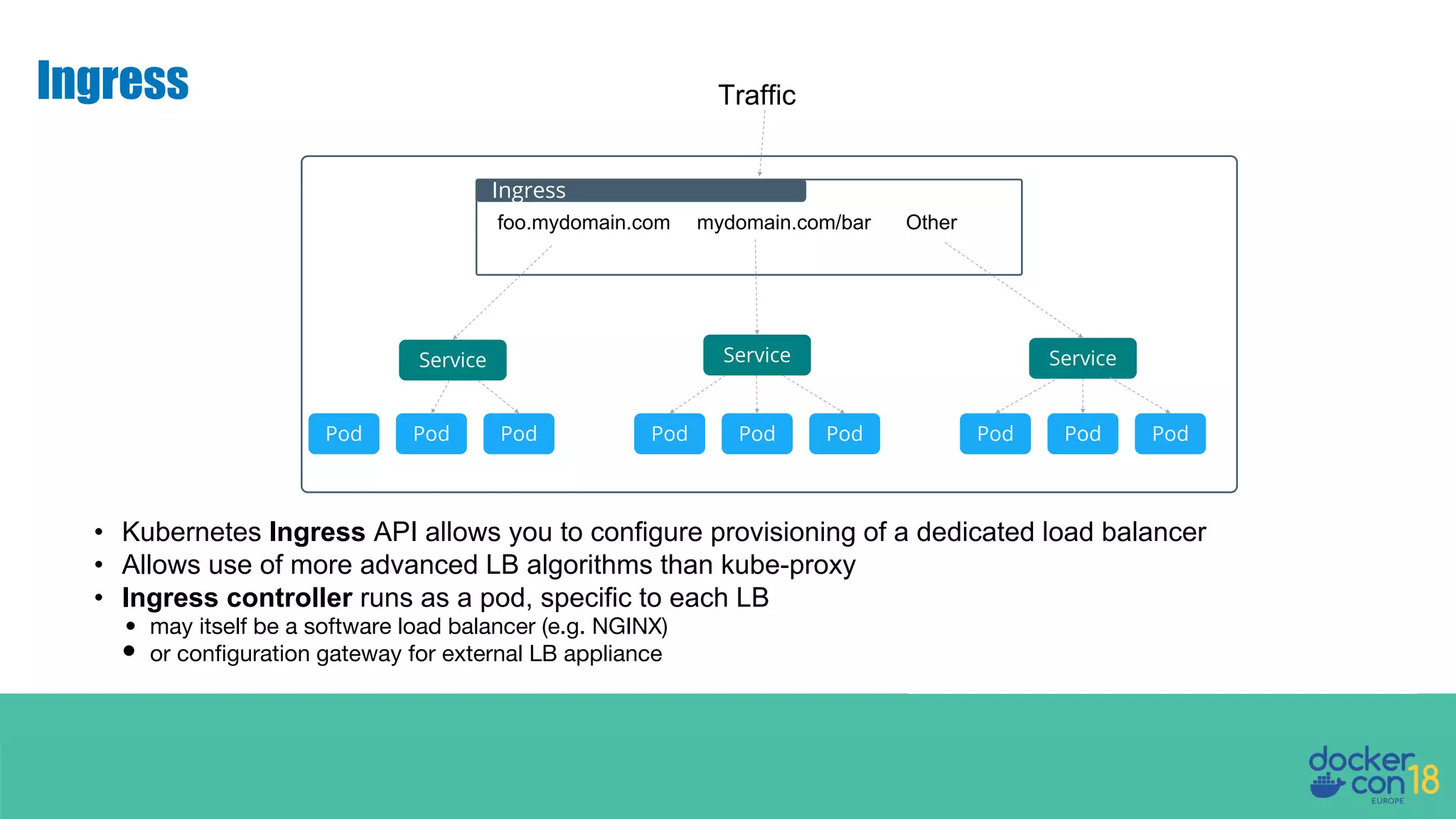

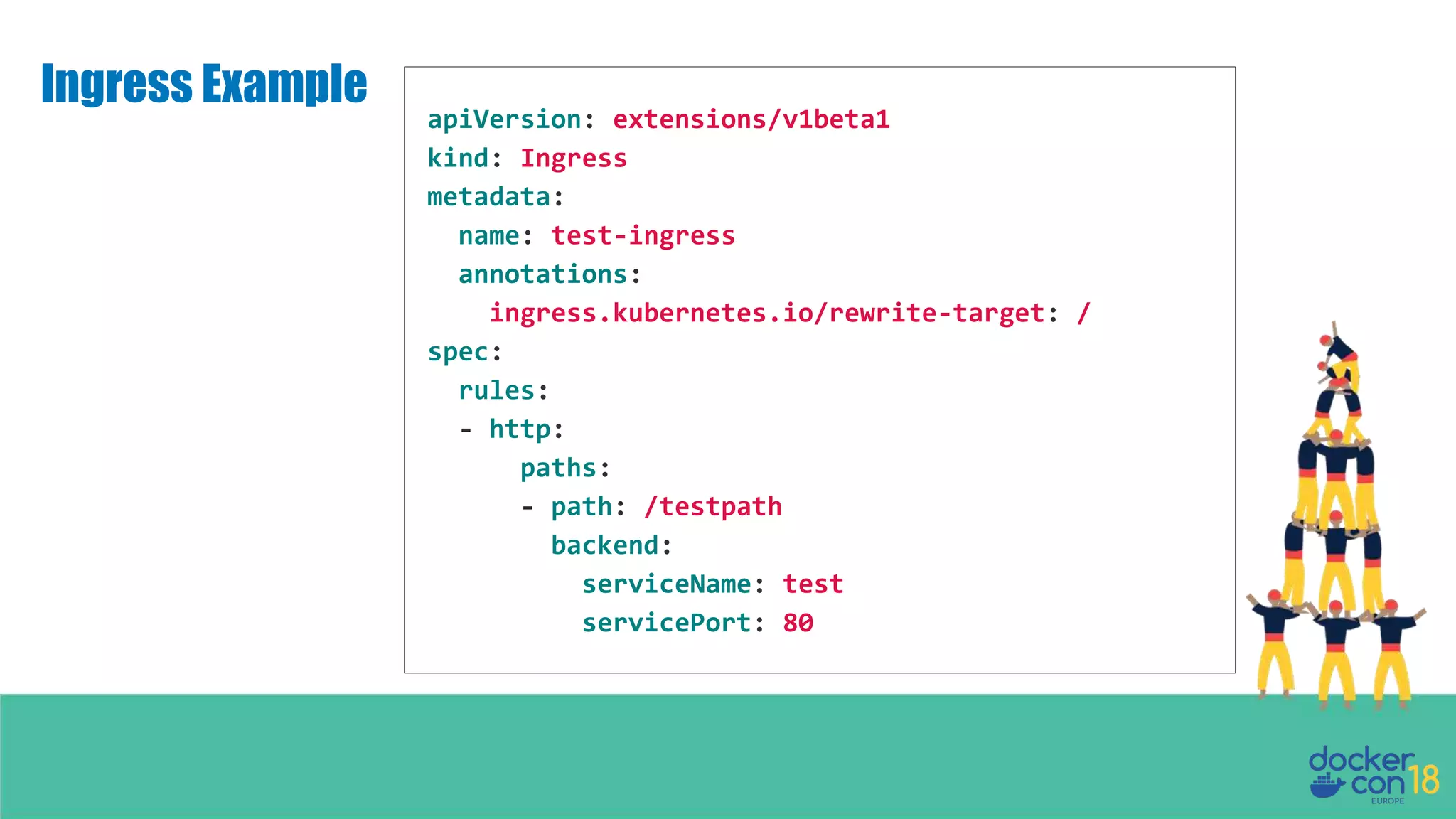

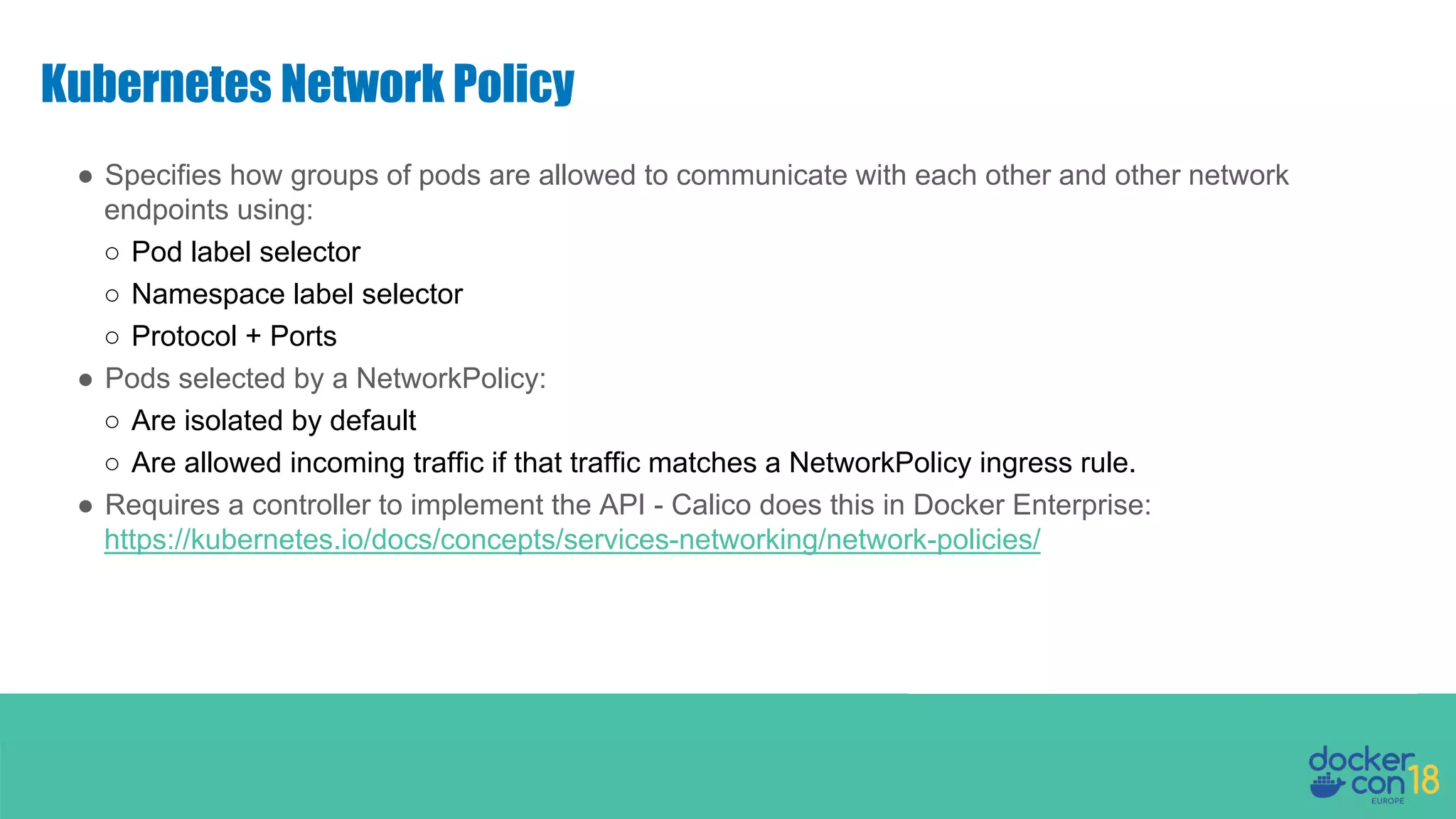

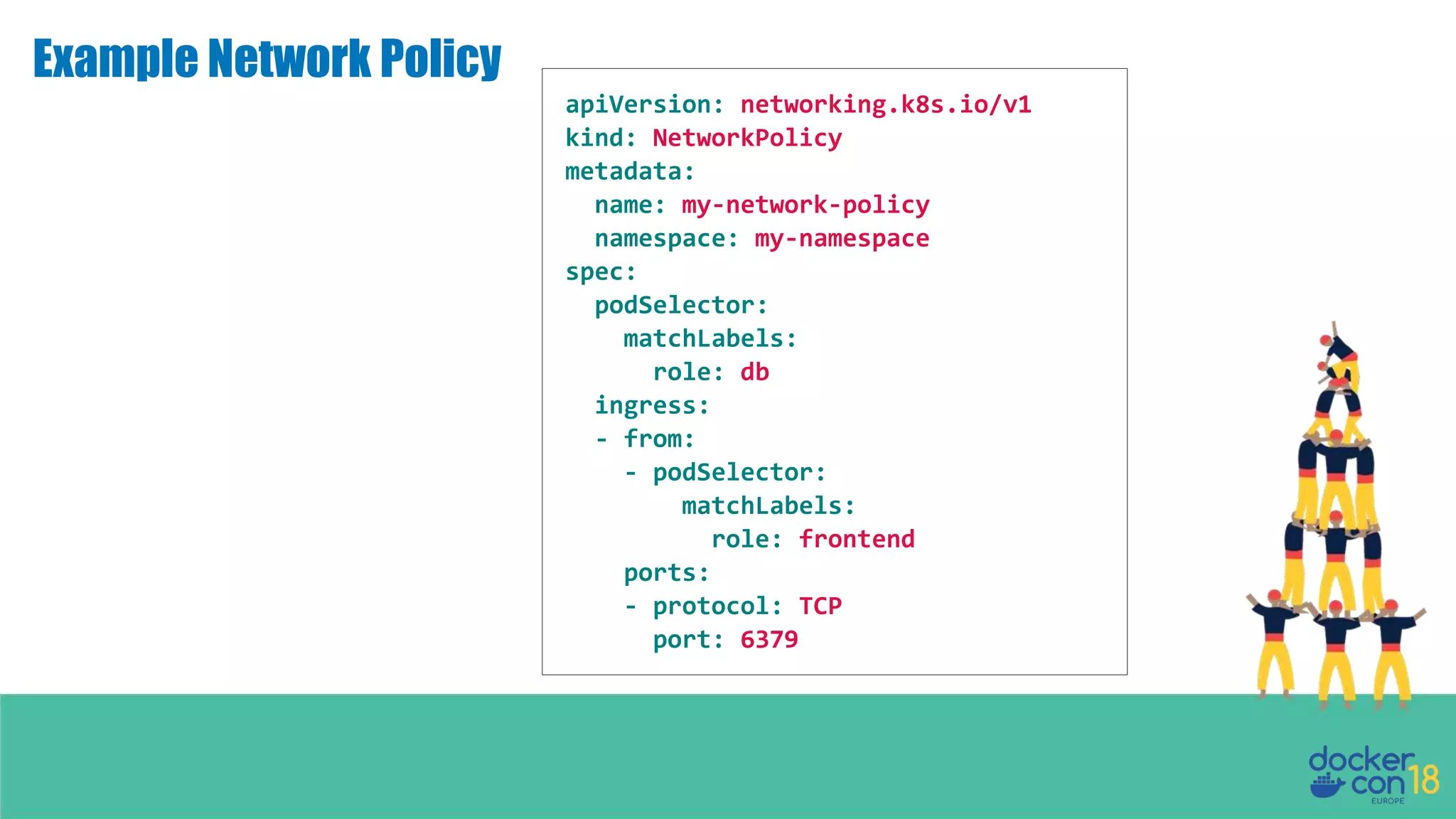

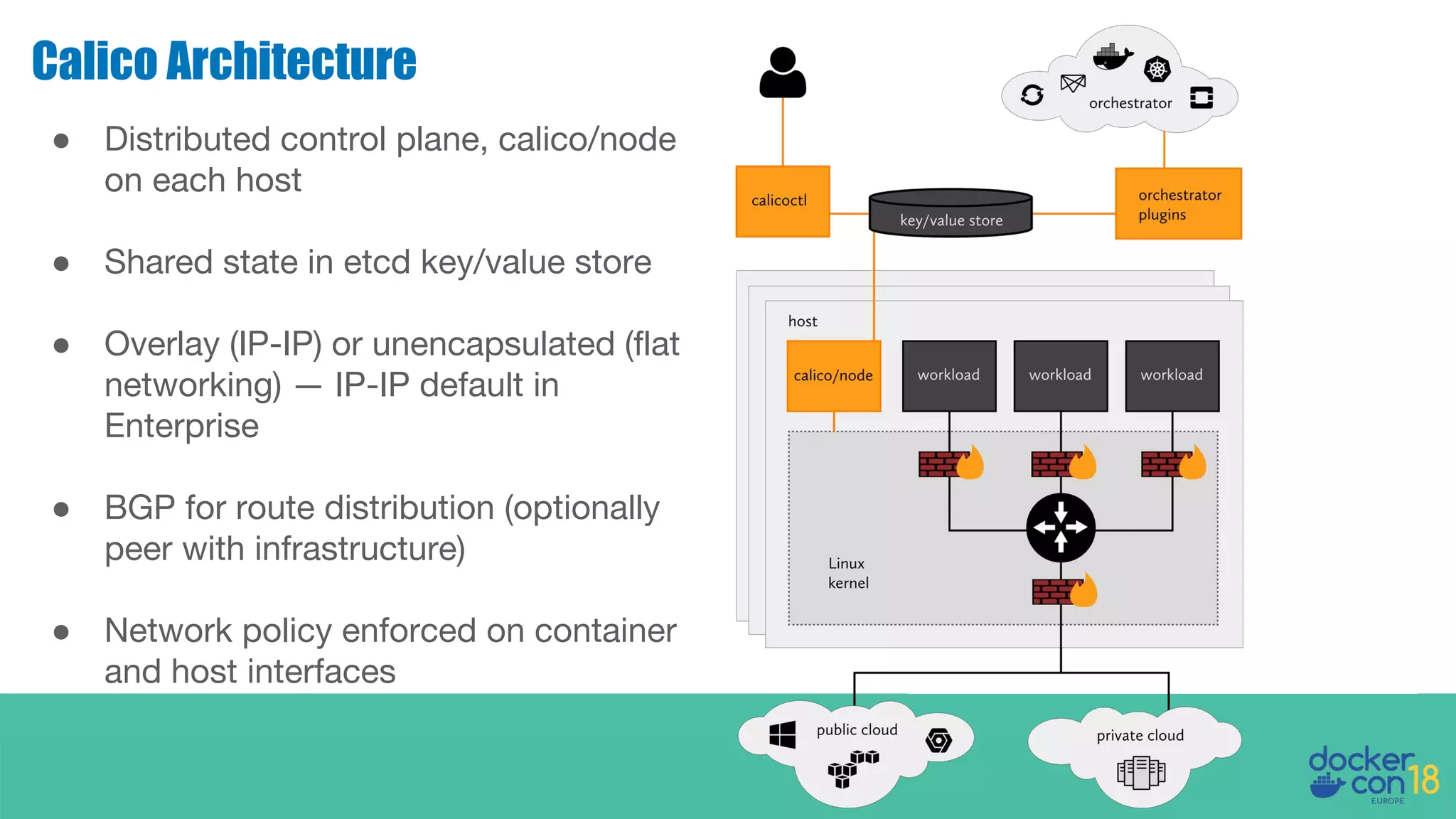

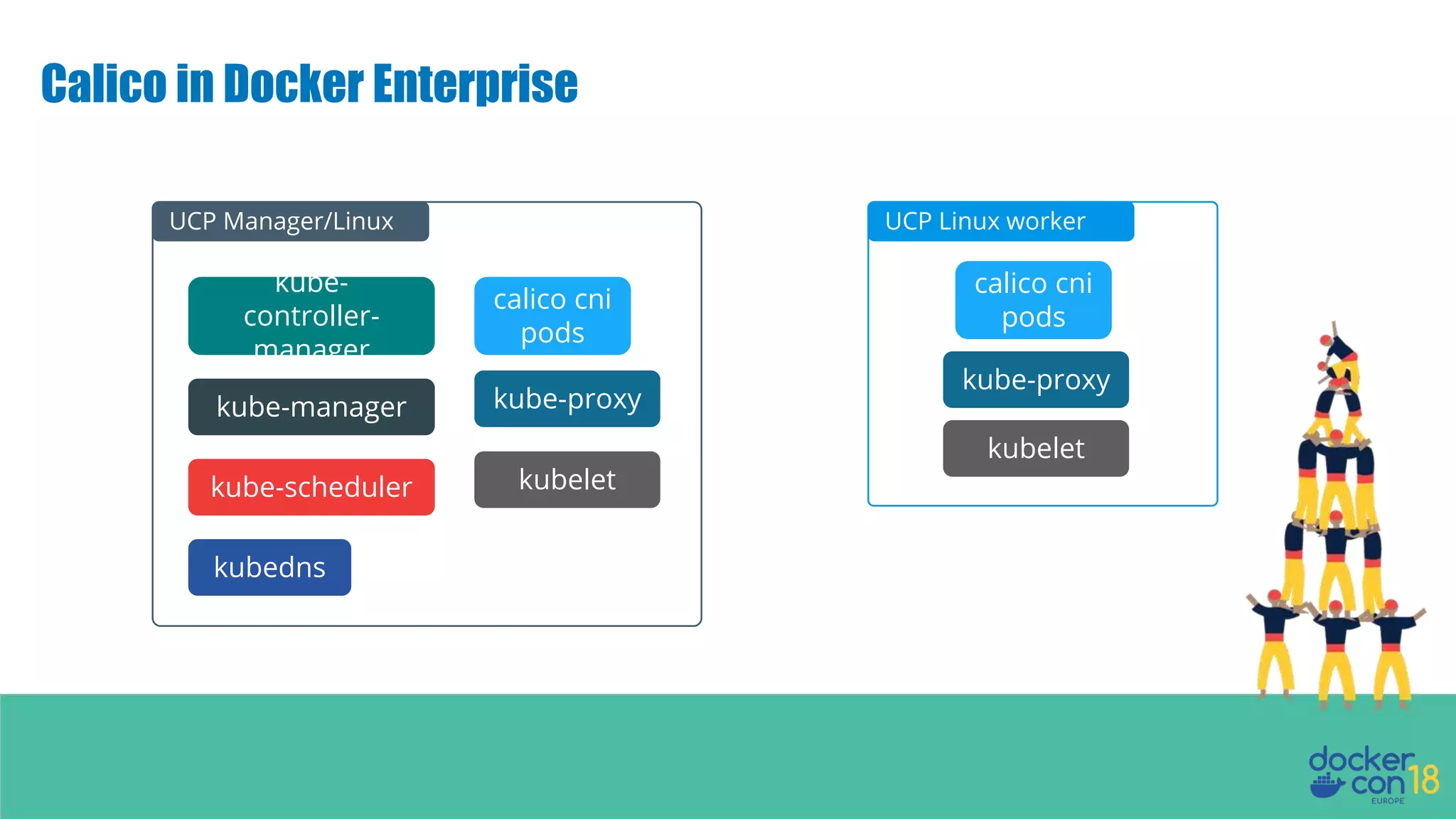

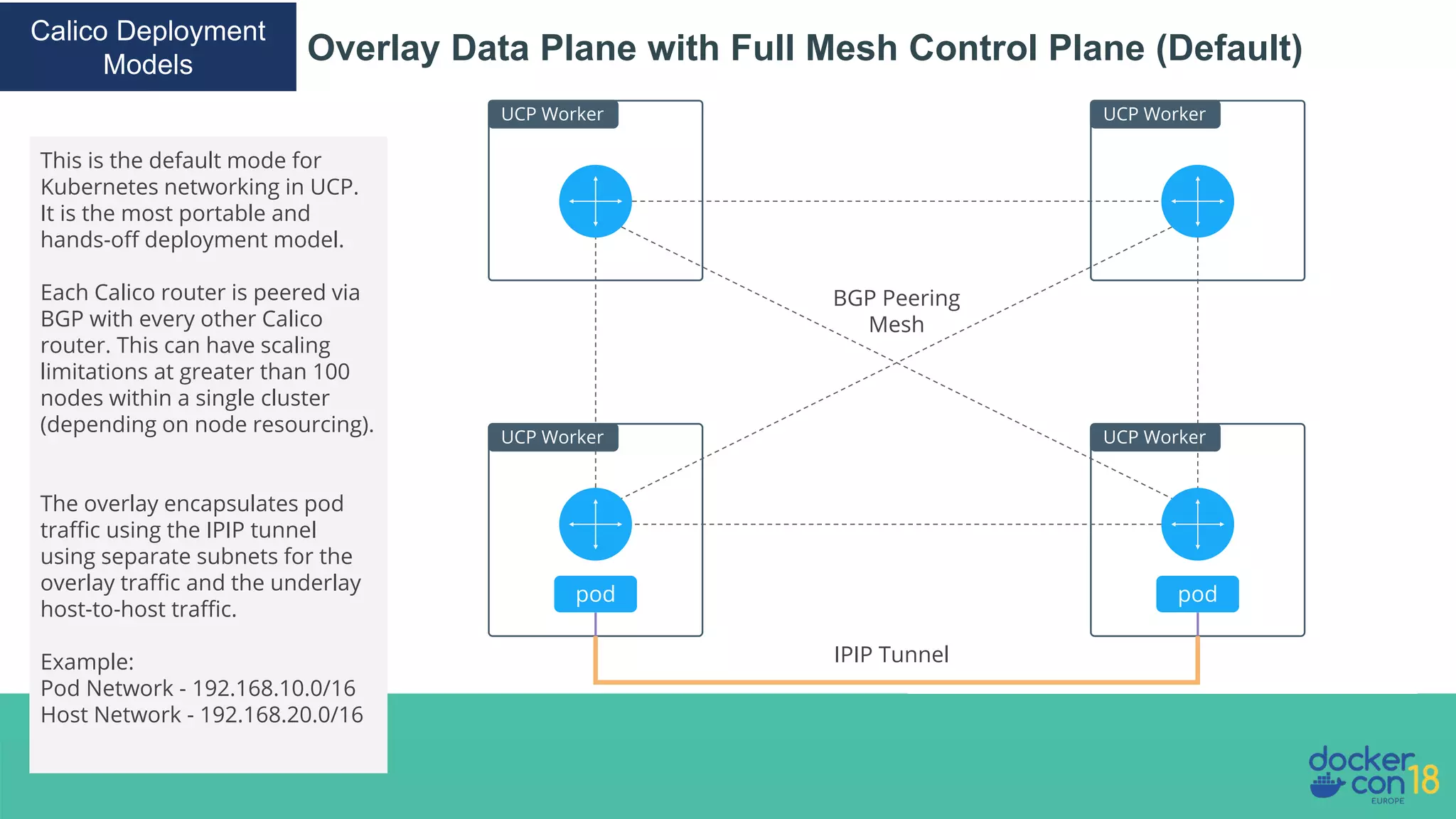

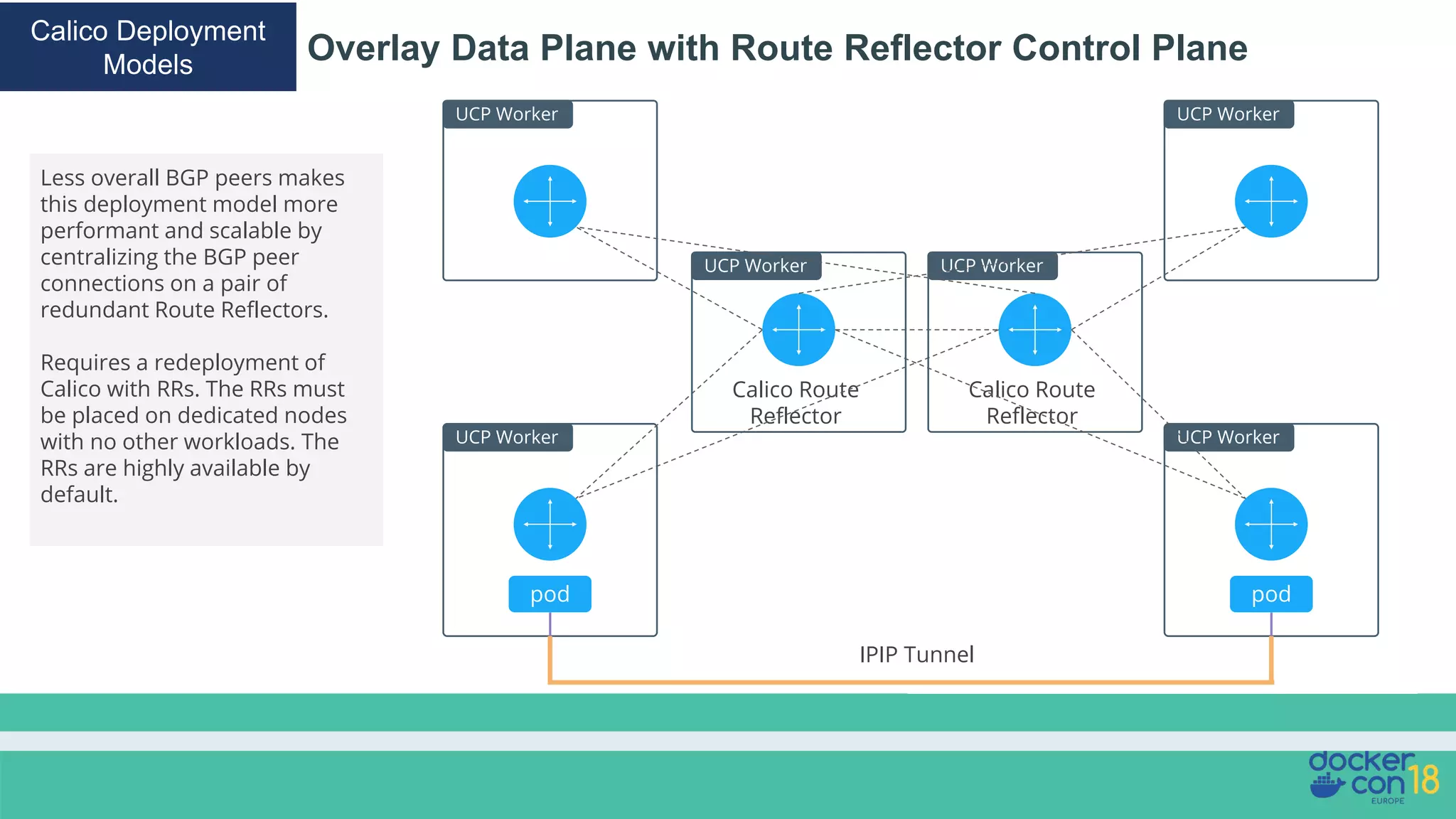

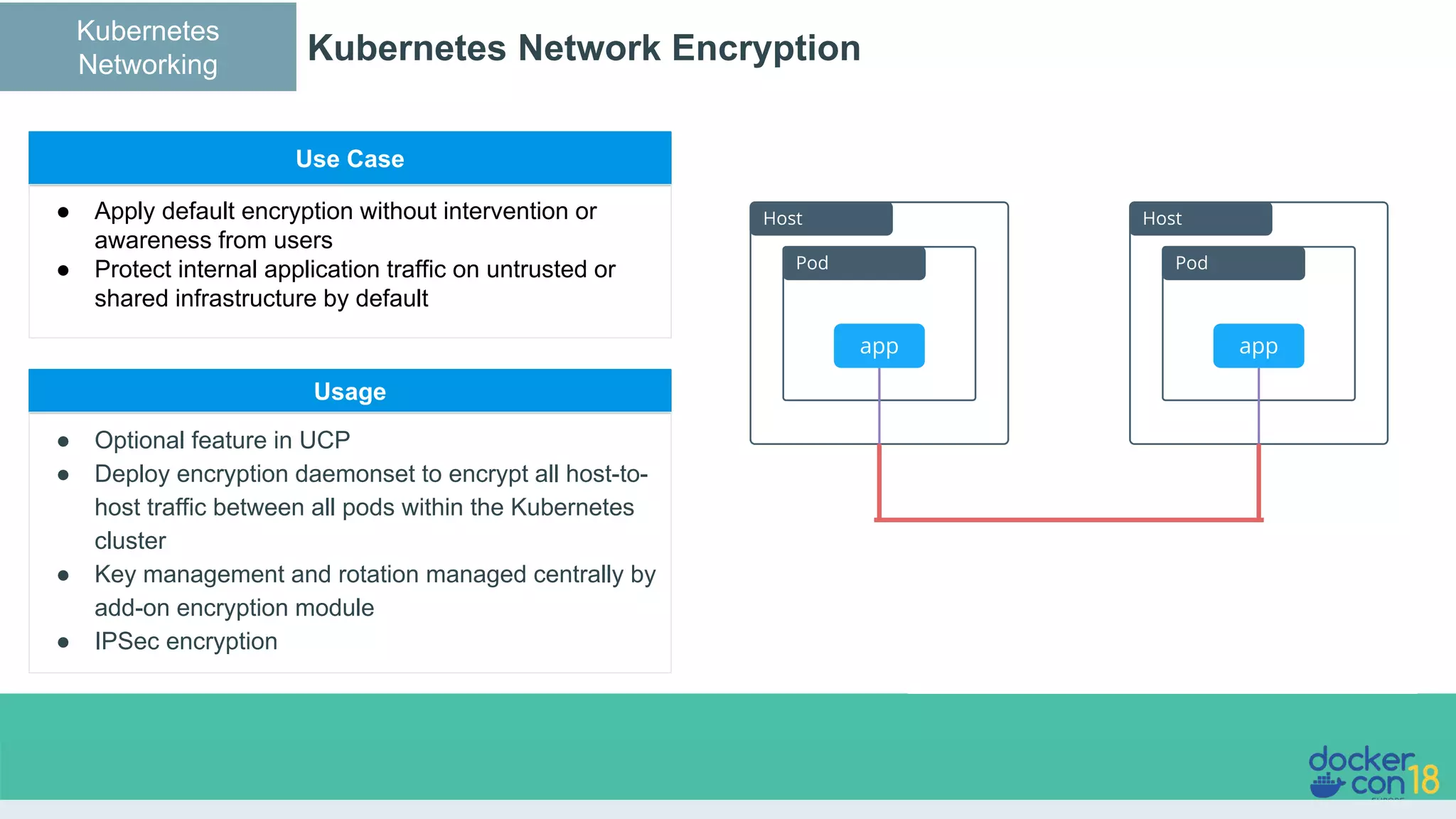

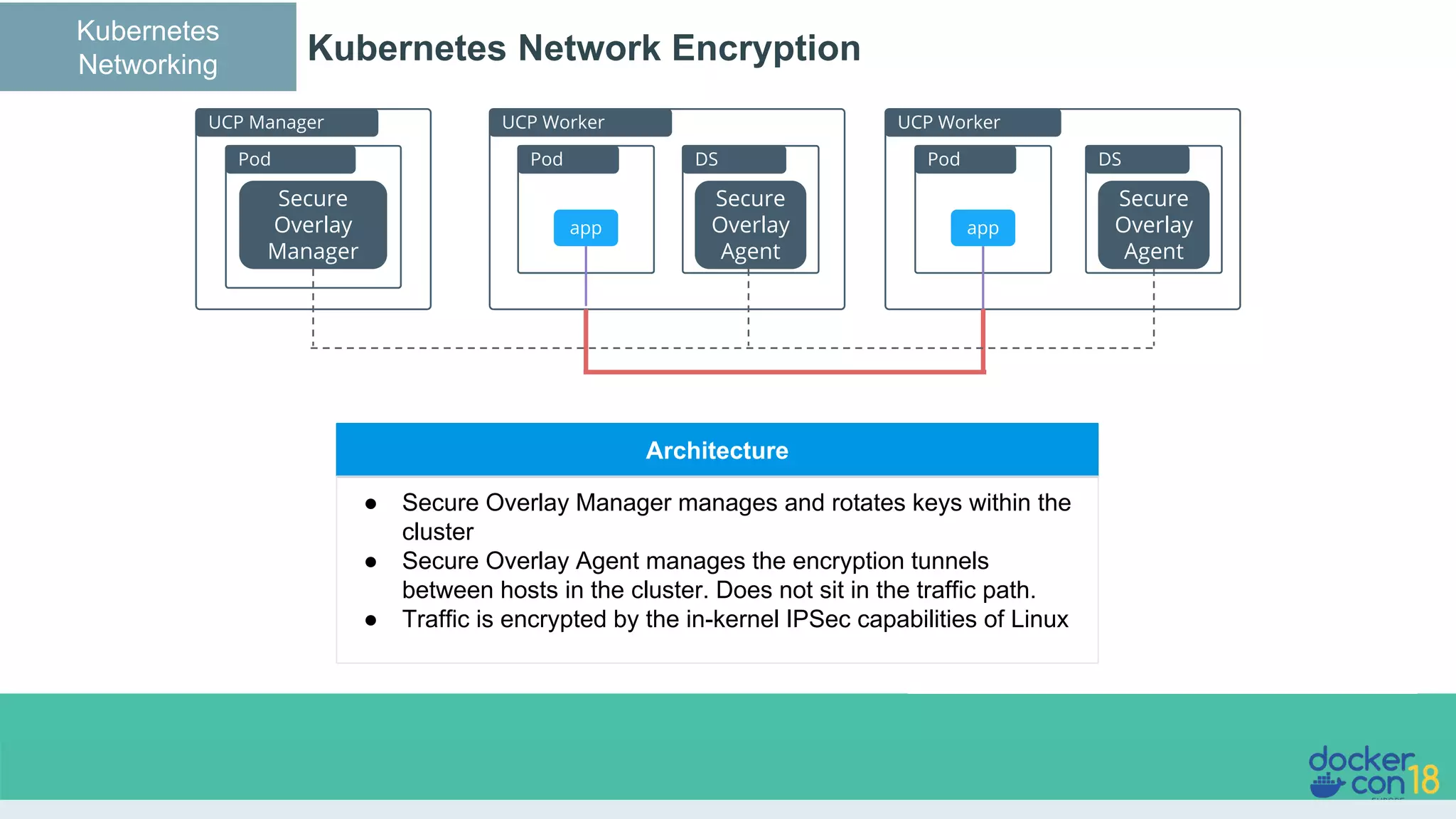

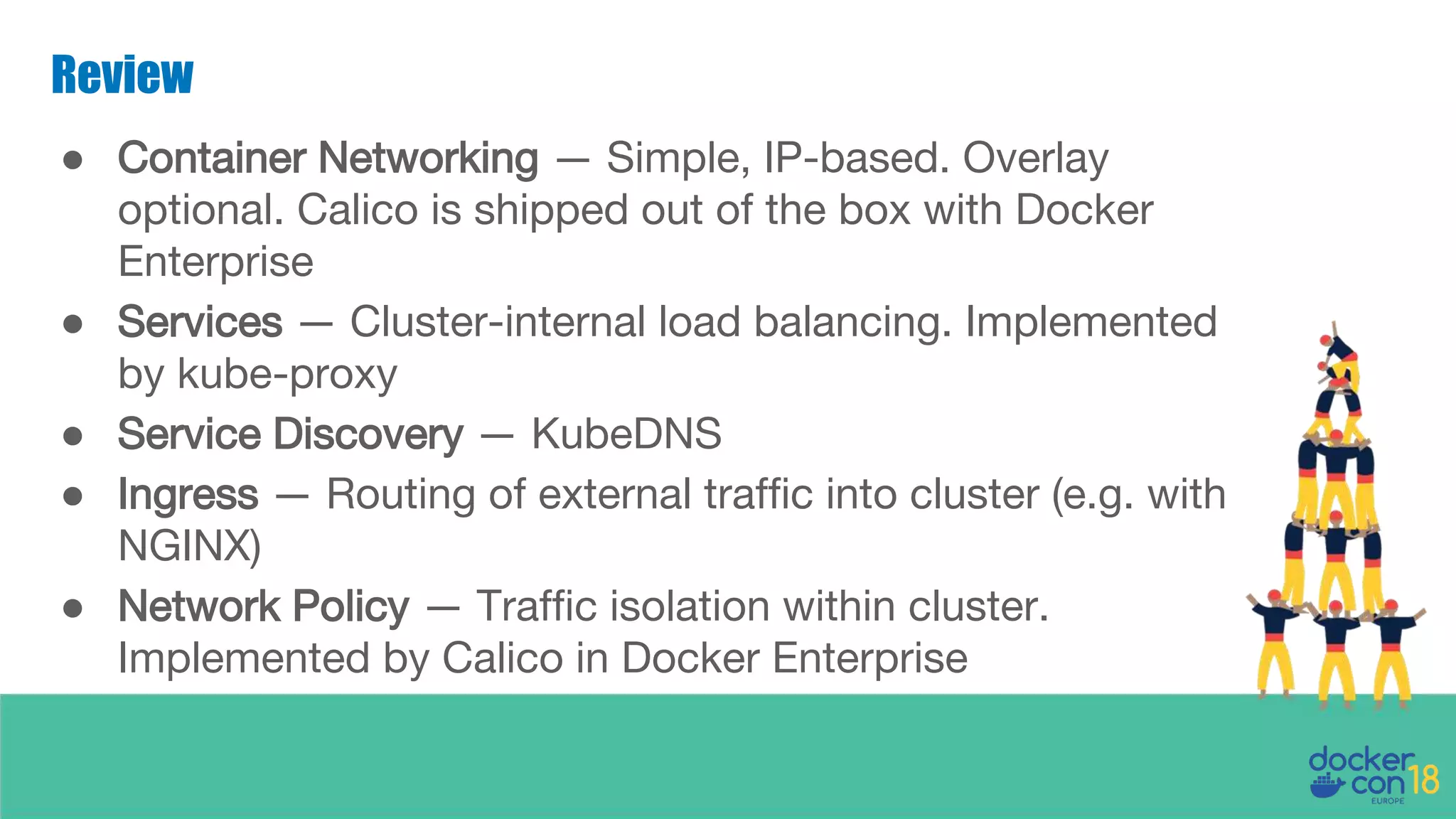

This document covers networking in Docker Enterprise with both Swarm and Kubernetes: the architecture, the networking models, and key components such as service discovery and load balancing. It discusses bridge and overlay networking, the Container Network Interface (CNI) with Calico, and Kubernetes network policies. It also provides workshop instructions and resources, emphasizing a hands-on approach to understanding Docker networking.