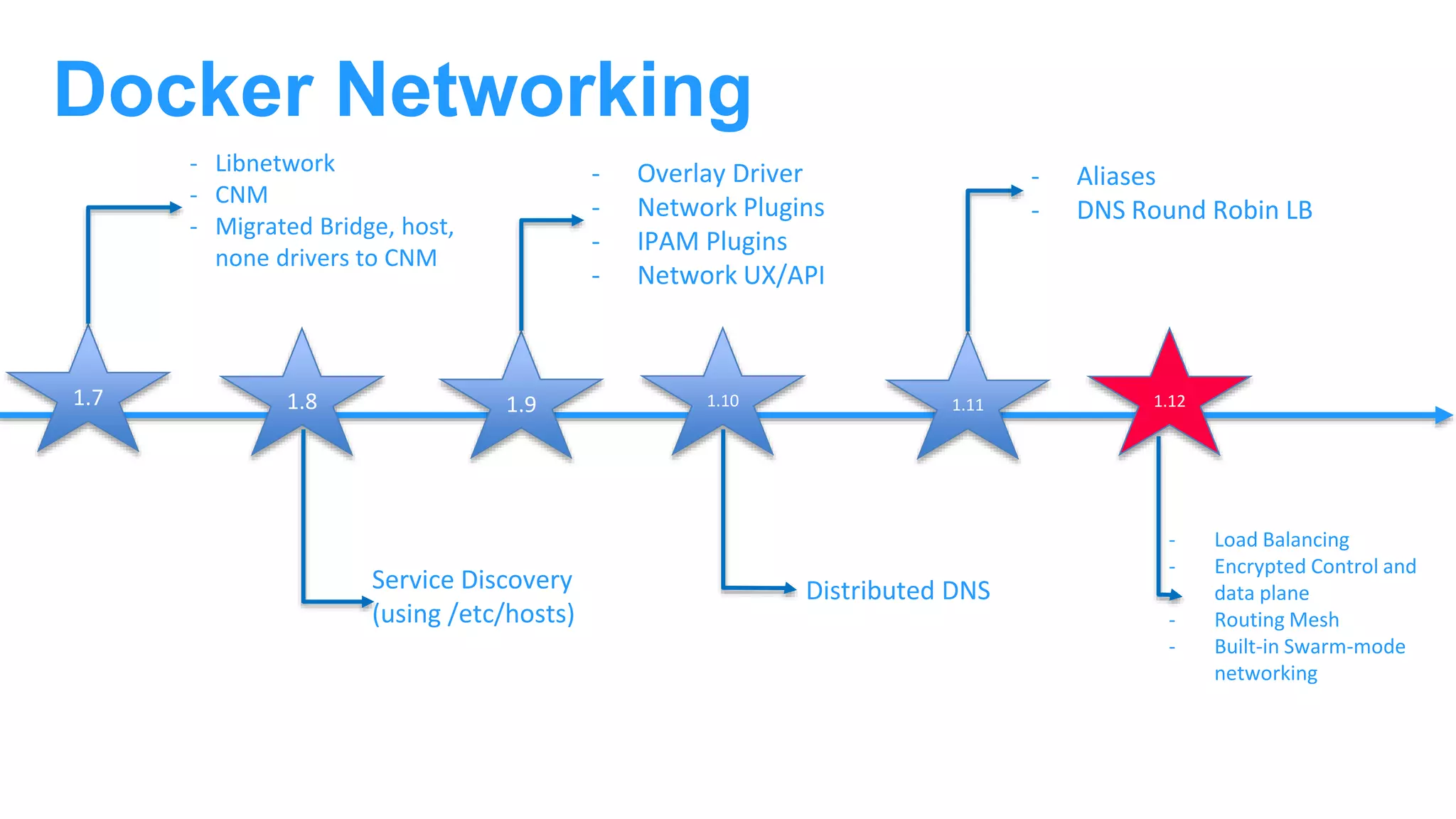

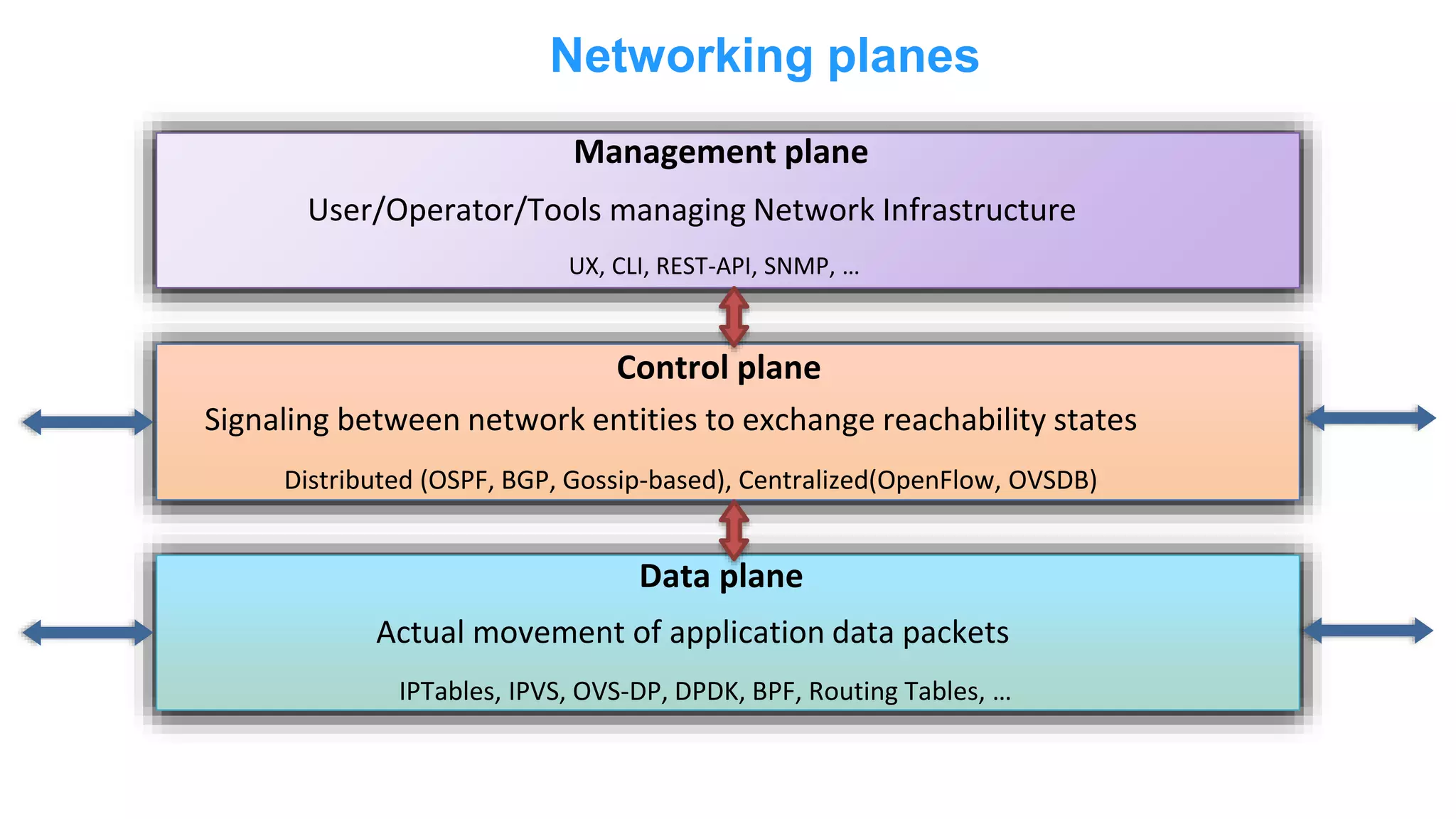

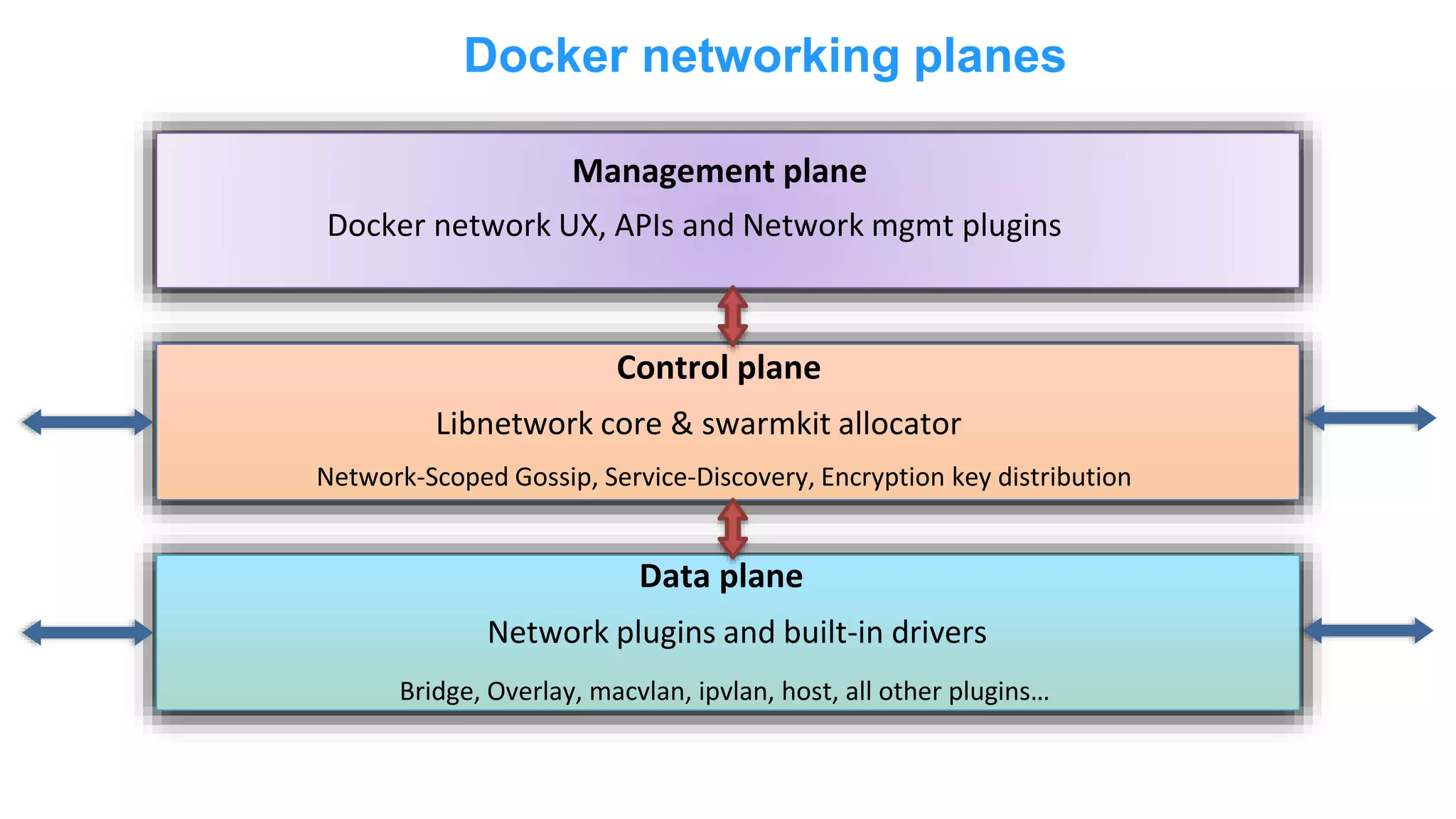

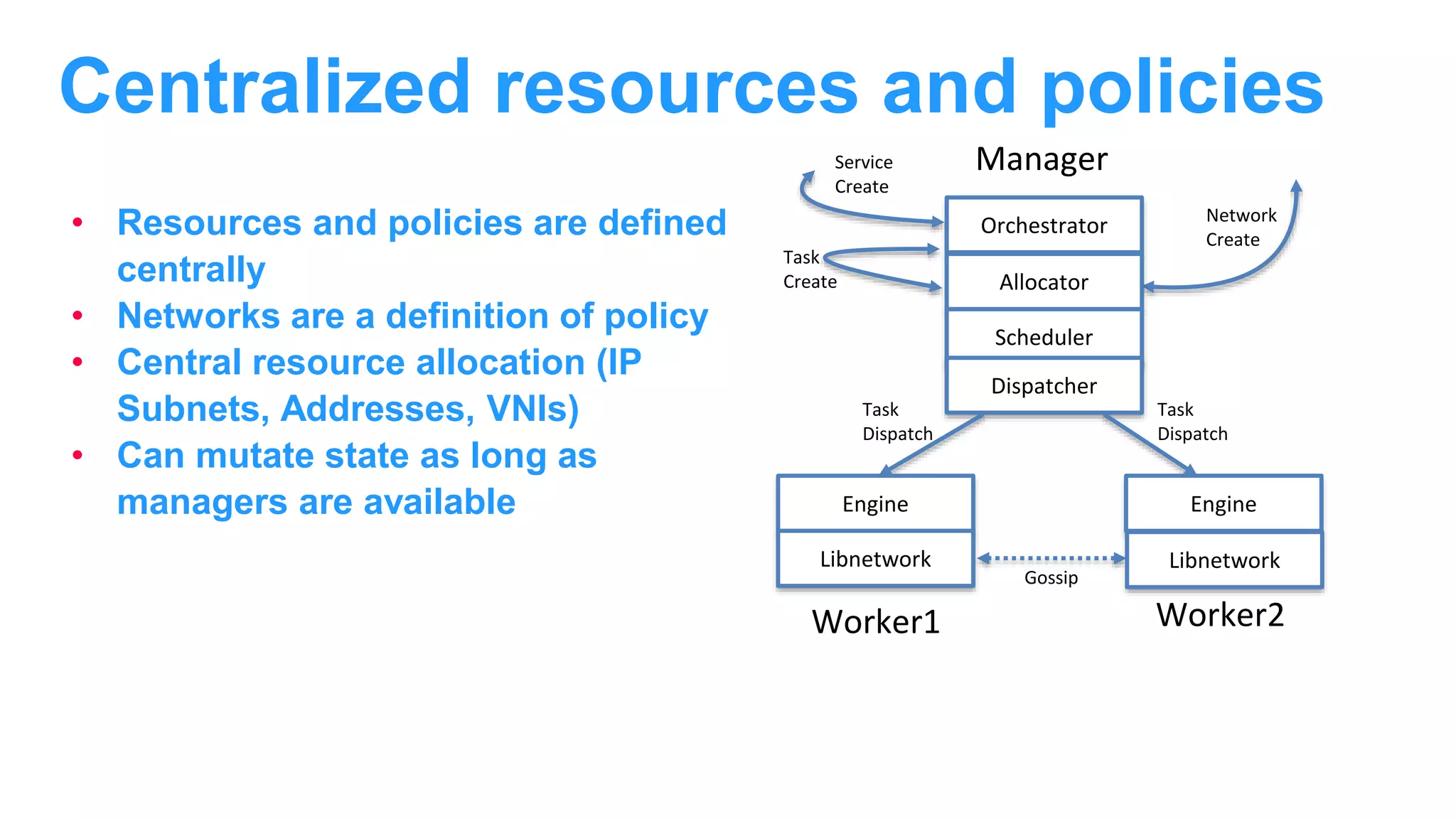

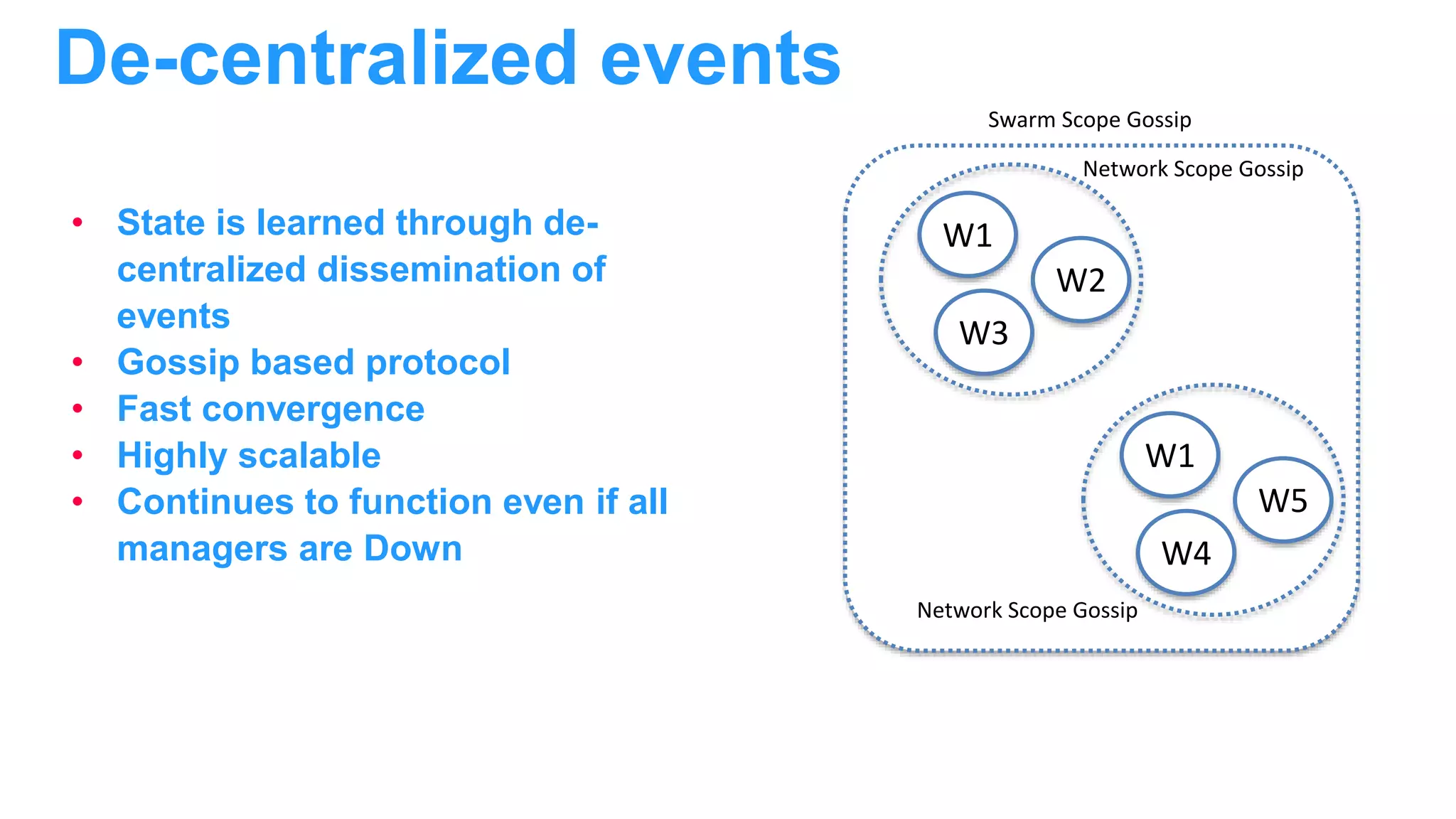

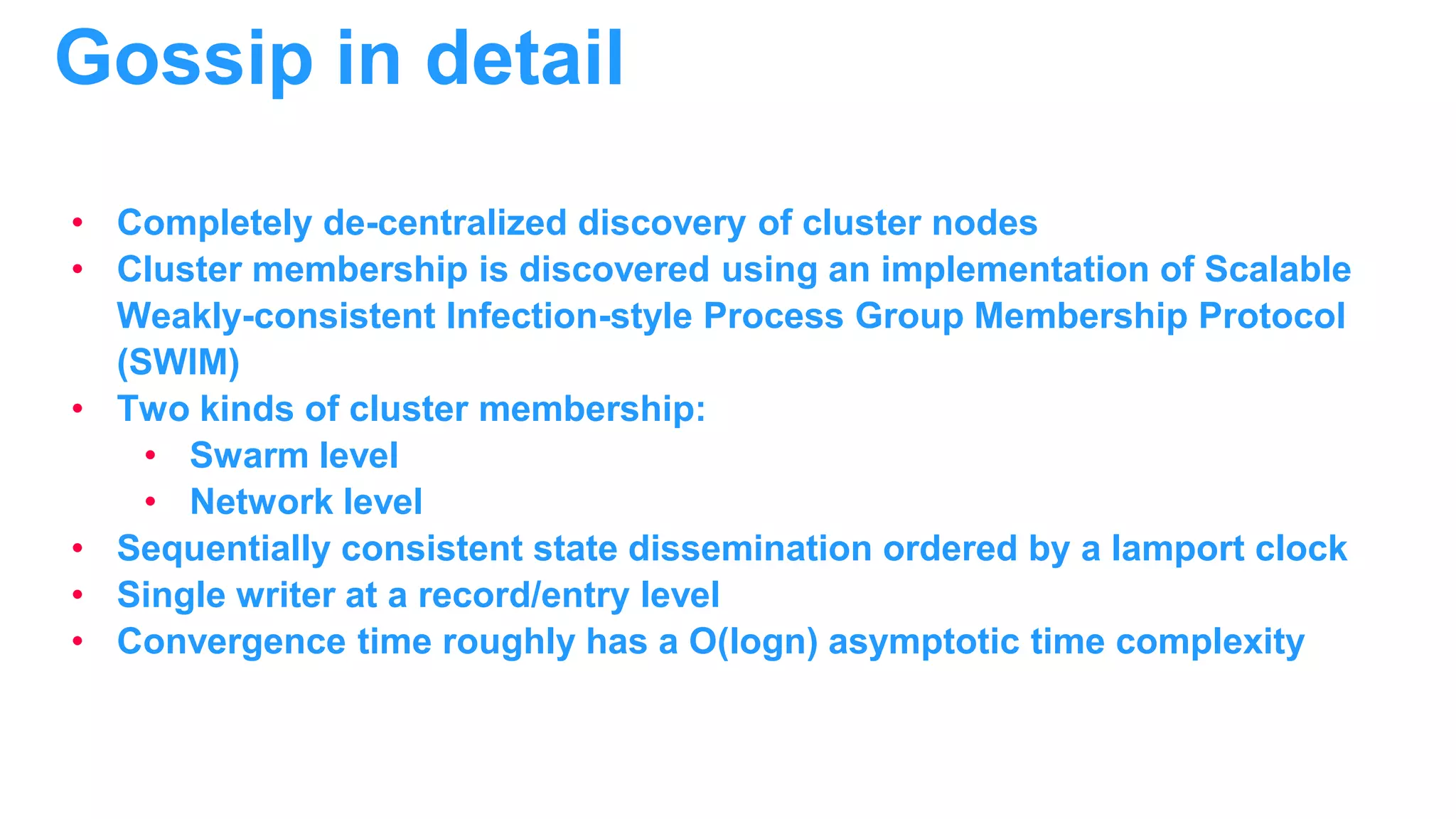

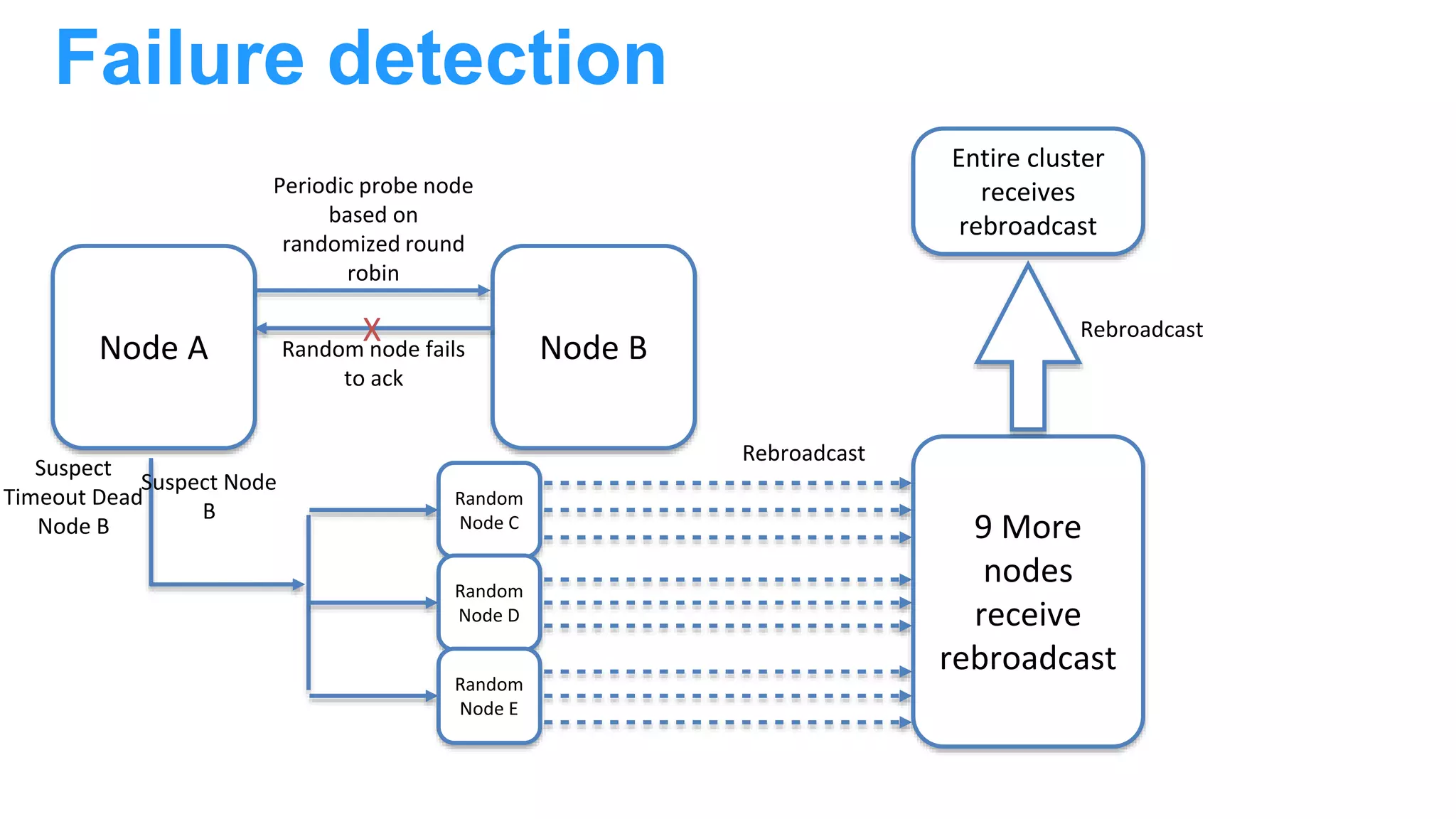

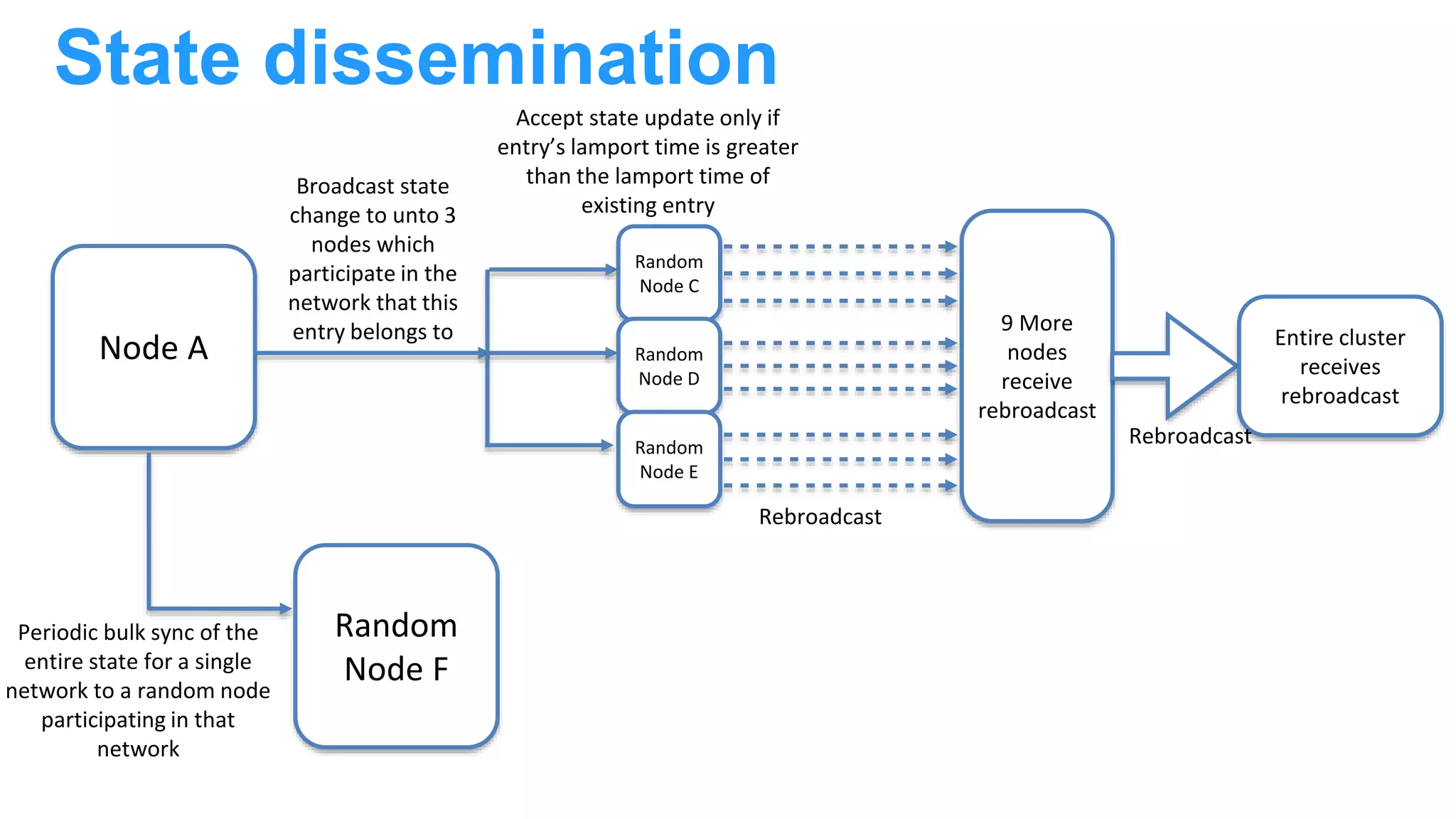

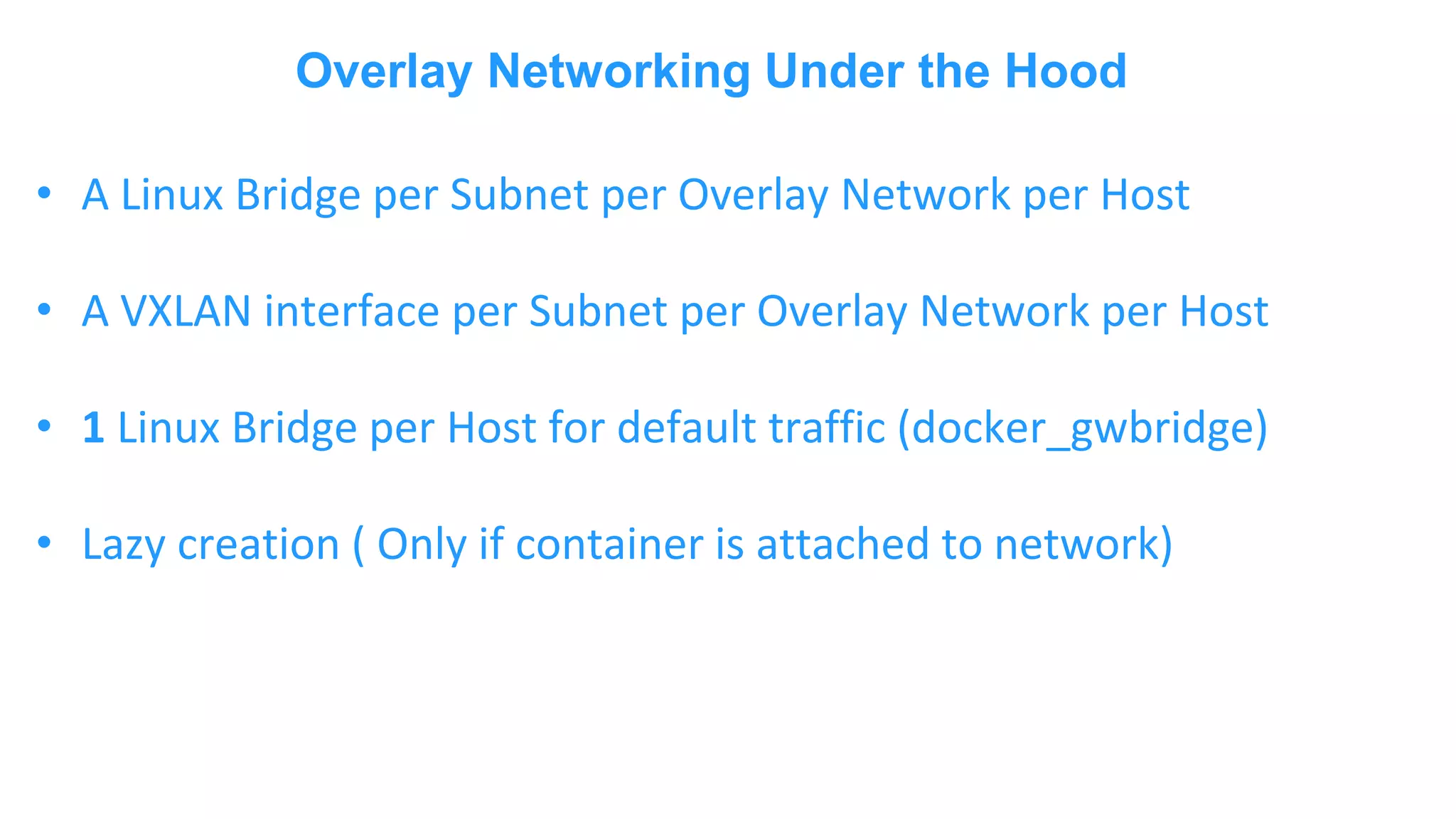

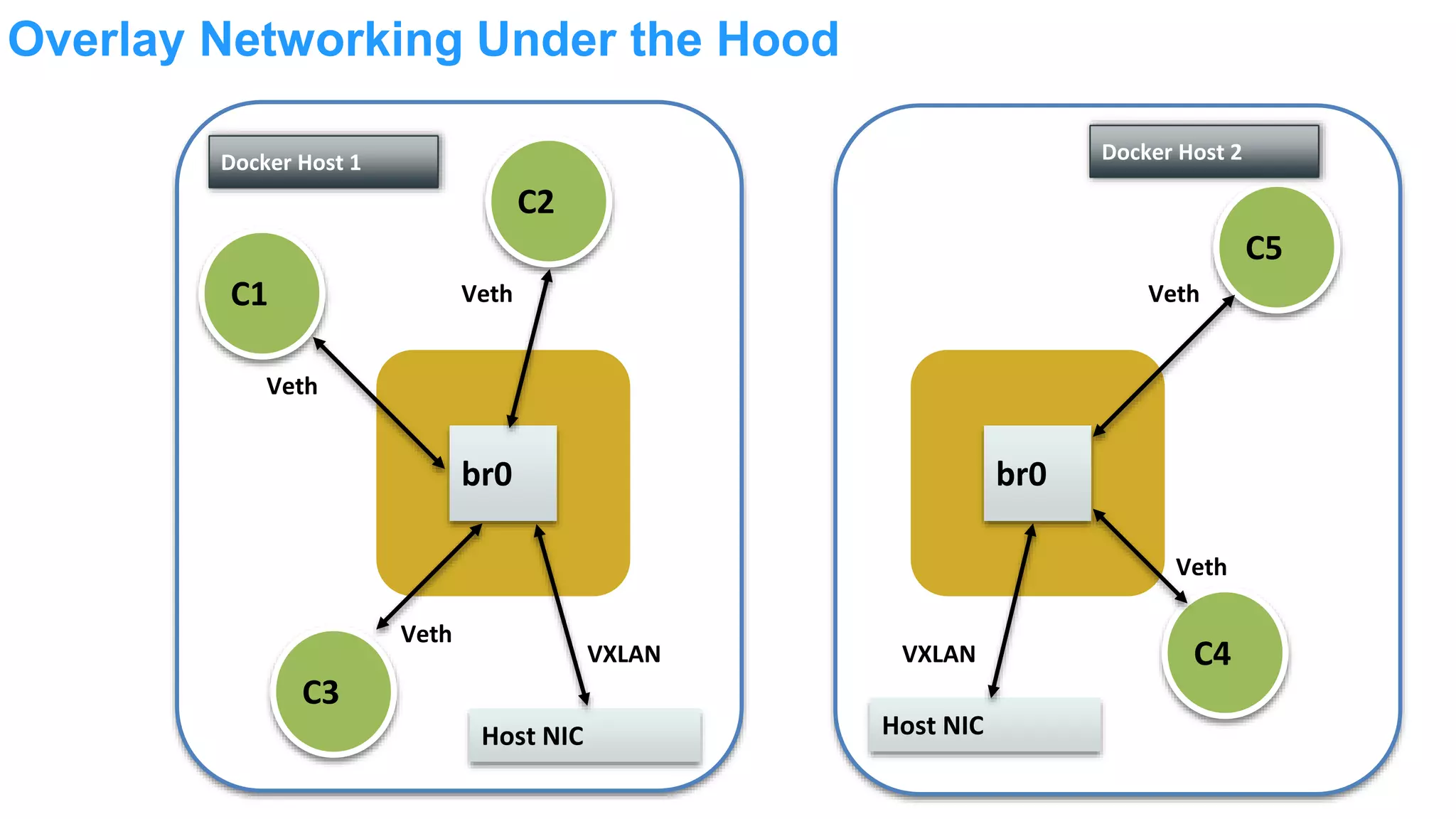

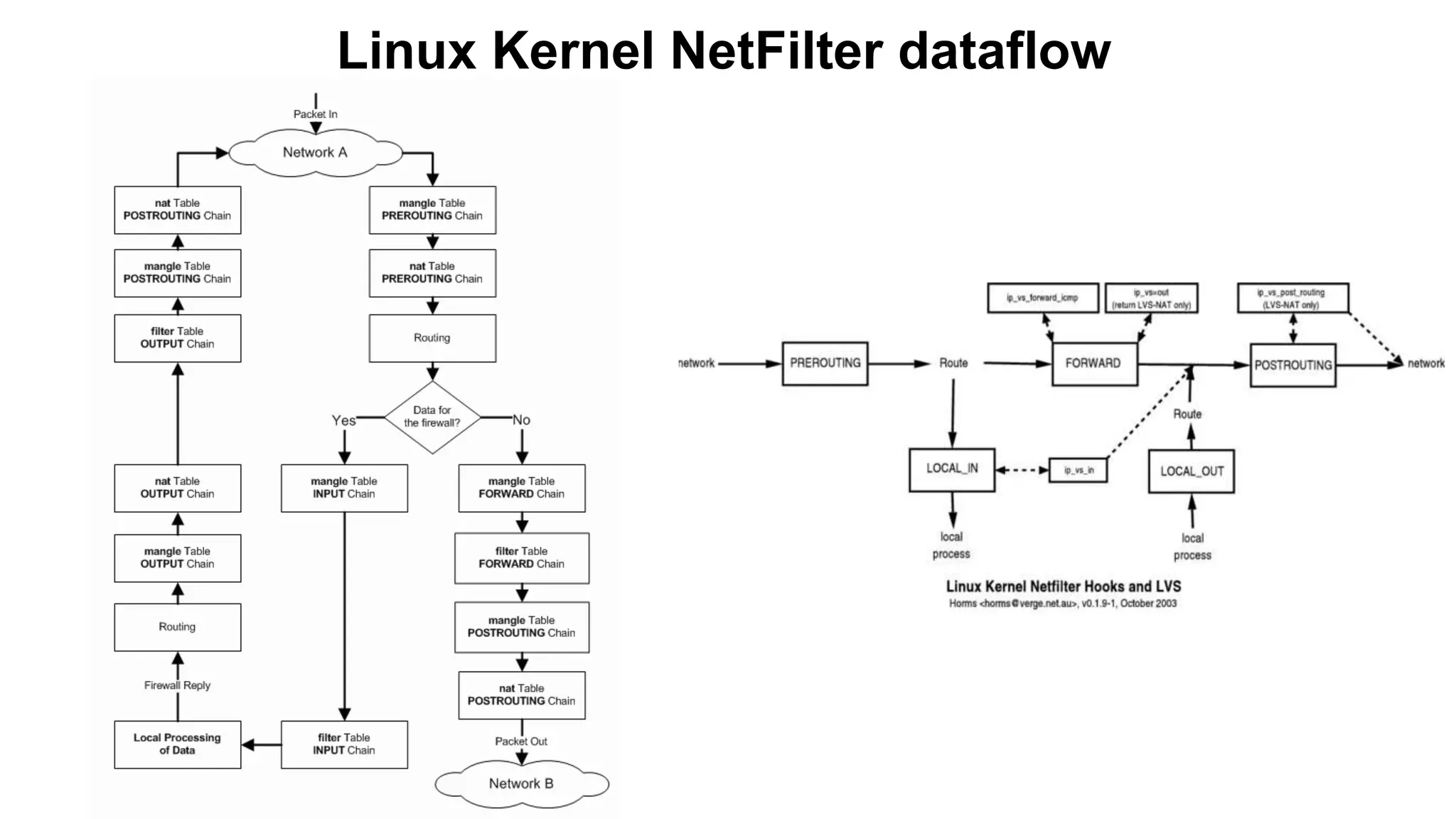

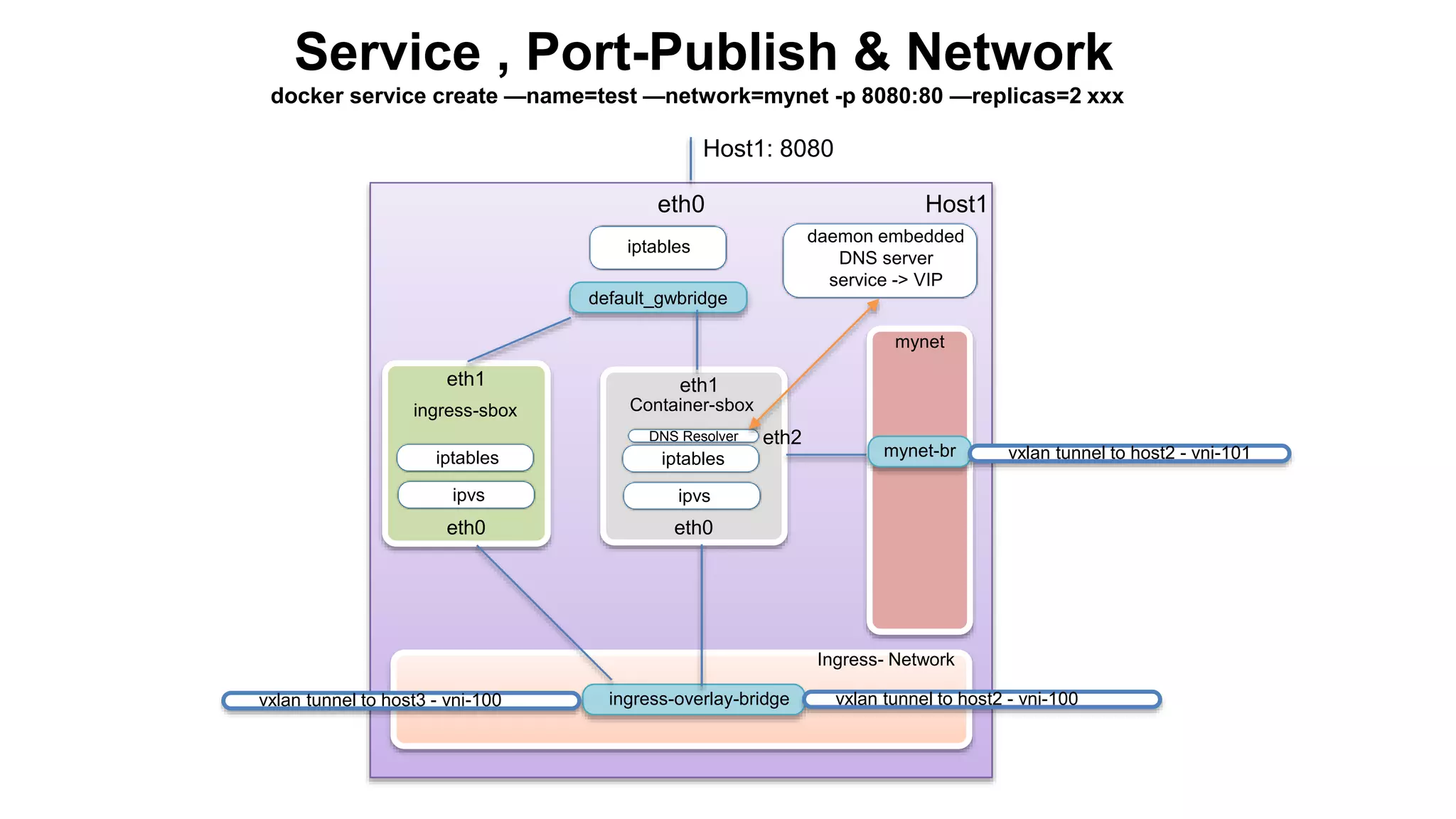

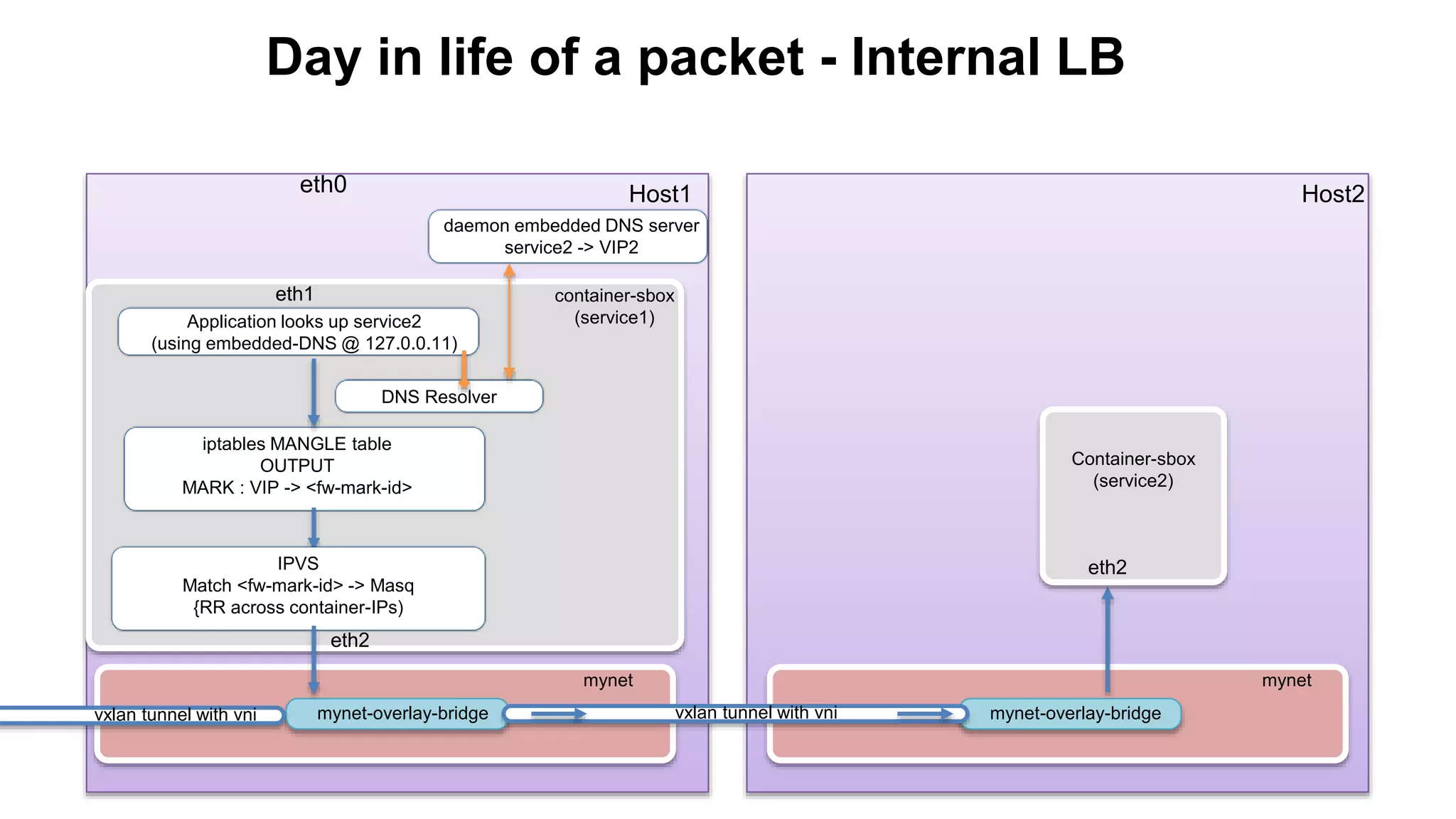

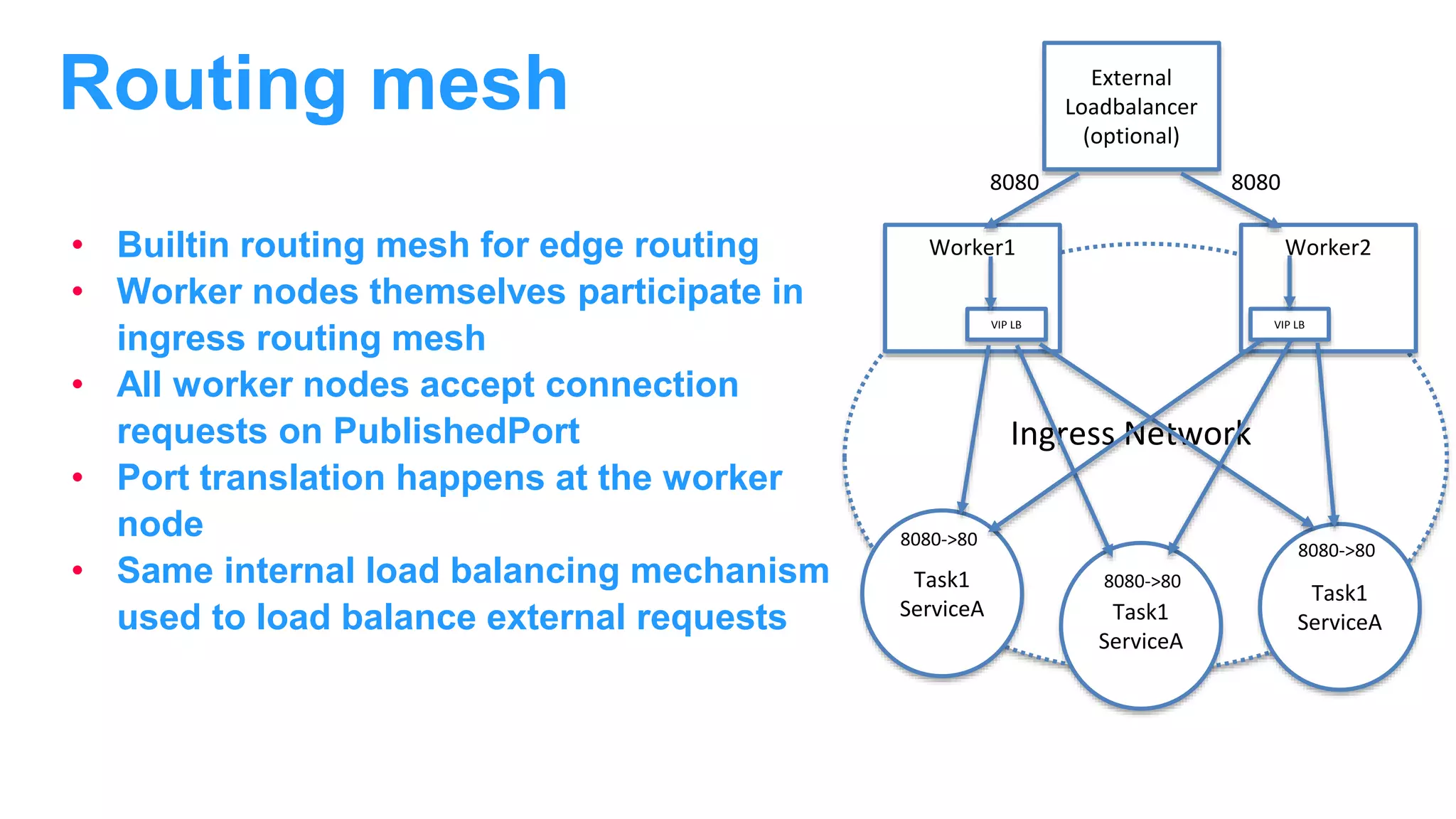

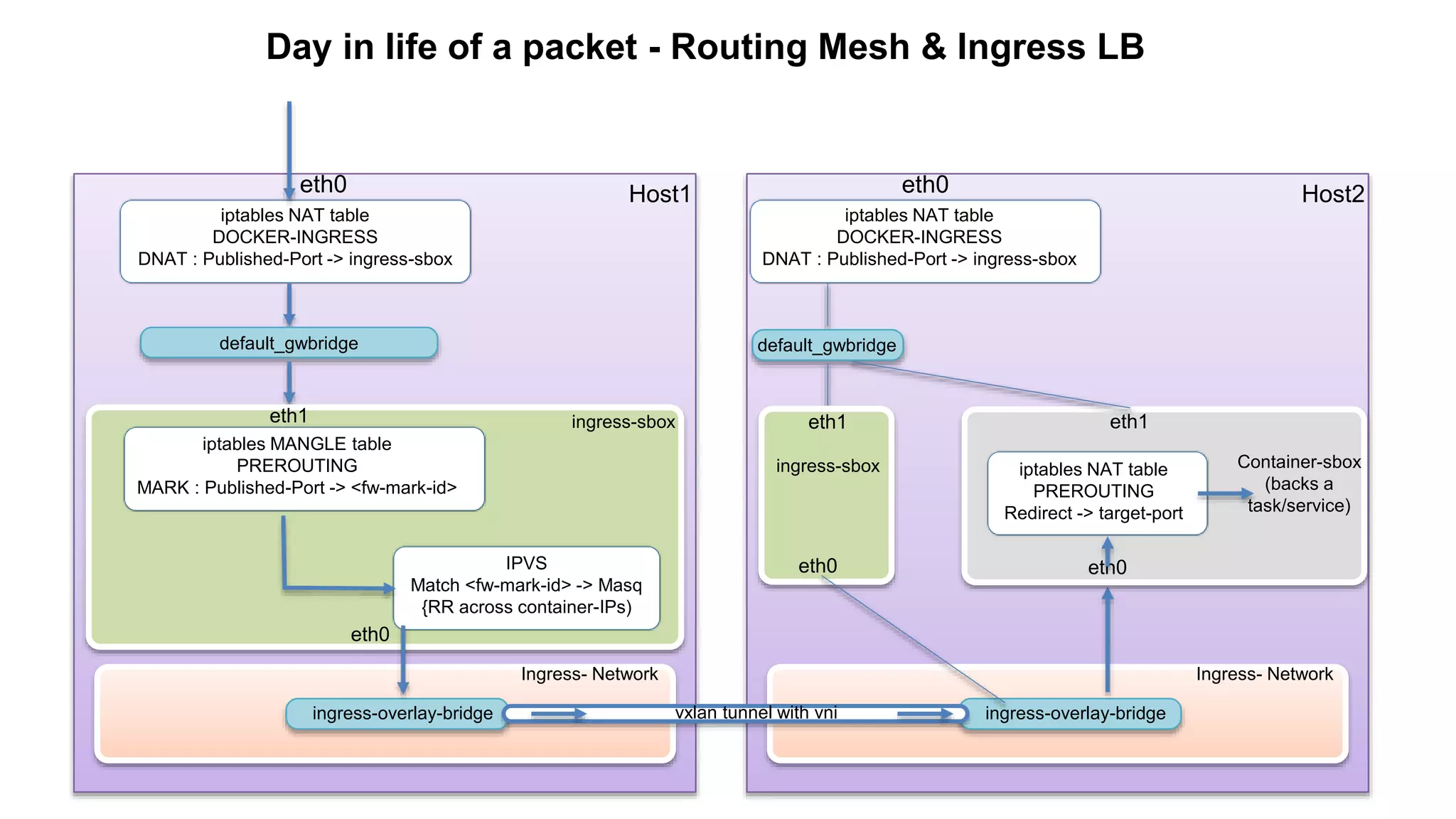

This document gives an overview of Docker networking, covering its control plane and its data plane. The control plane uses a gossip-based protocol for decentralized event dissemination and failure detection across nodes. The data plane uses overlay networking, built from Linux bridges and VXLAN interfaces, to connect containers running on different Docker hosts. Load balancing for both internal and external traffic is implemented with IPVS, which serves the virtual IP addresses associated with Docker services.
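
To make the idea of gossip-based event dissemination more concrete, the following is a minimal simulation sketch. It is not Docker's implementation: the function name `gossip_rounds`, the `fanout` parameter, and the simple push model (each informed node forwards the event to a few random peers per round) are assumptions chosen for illustration, and failure detection is not modeled at all.

```python
import random


def gossip_rounds(num_nodes, fanout, seed=0, max_rounds=100):
    """Simulate push-style gossip (illustrative model, not Docker's protocol).

    Node 0 originates an event. In every round, each node that already
    knows the event forwards it to `fanout` randomly chosen other nodes.
    Returns (rounds_run, nodes_informed).
    """
    rng = random.Random(seed)  # seeded so repeated runs behave the same
    informed = {0}
    rounds = 0
    while len(informed) < num_nodes and rounds < max_rounds:
        newly_informed = set()
        for node in informed:
            # Candidate peers are all nodes except the sender itself.
            peers = [p for p in range(num_nodes) if p != node]
            for peer in rng.sample(peers, min(fanout, len(peers))):
                newly_informed.add(peer)
        informed |= newly_informed
        rounds += 1
    return rounds, len(informed)


if __name__ == "__main__":
    rounds, reached = gossip_rounds(num_nodes=50, fanout=3)
    print(f"{reached} nodes informed after {rounds} rounds")
```

Because every informed node keeps forwarding, the number of informed nodes grows roughly geometrically, which is why gossip can spread events without any central coordinator. A real control plane would also need to bound retransmissions and detect failed peers, both of which this sketch leaves out.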