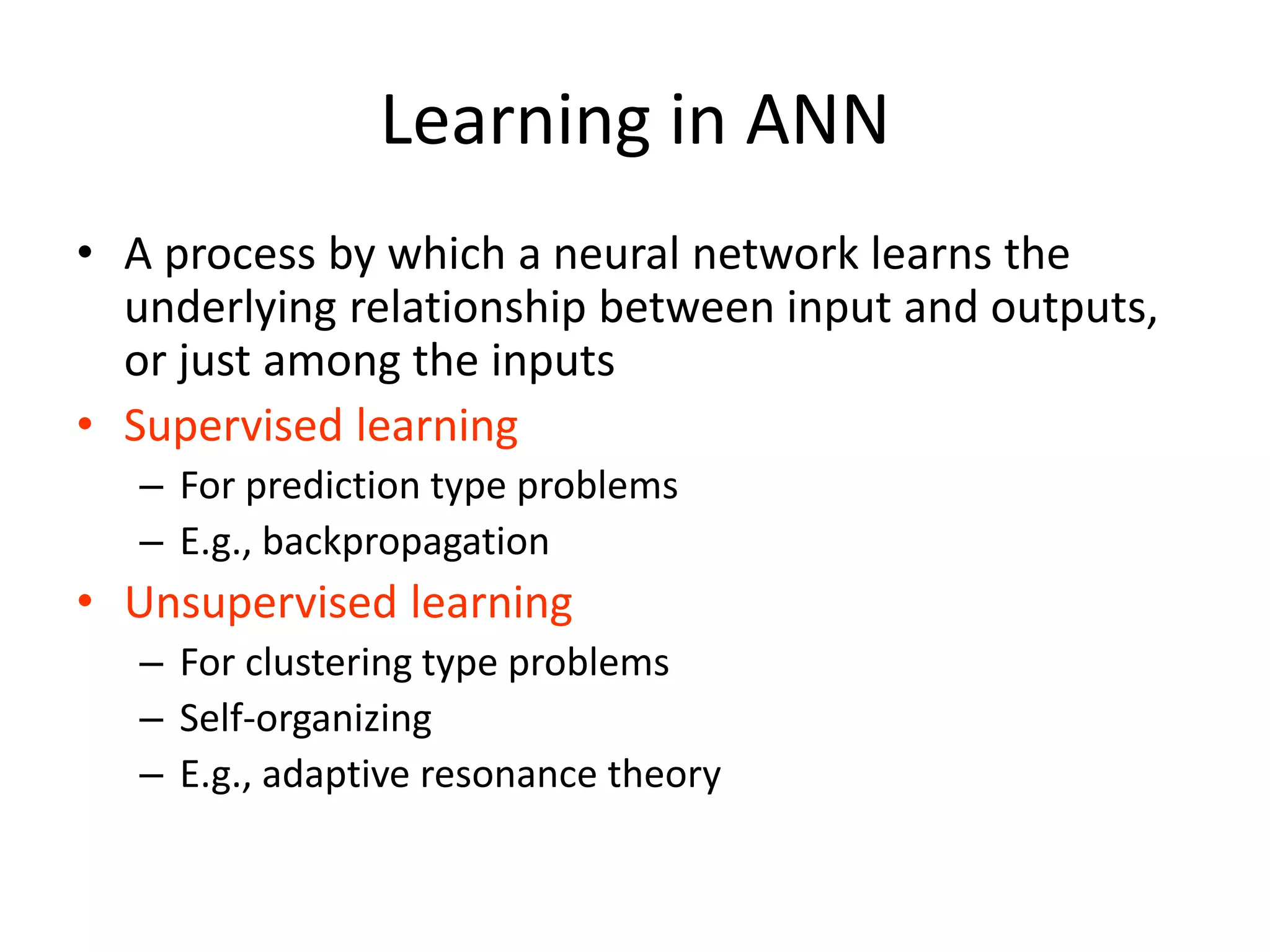

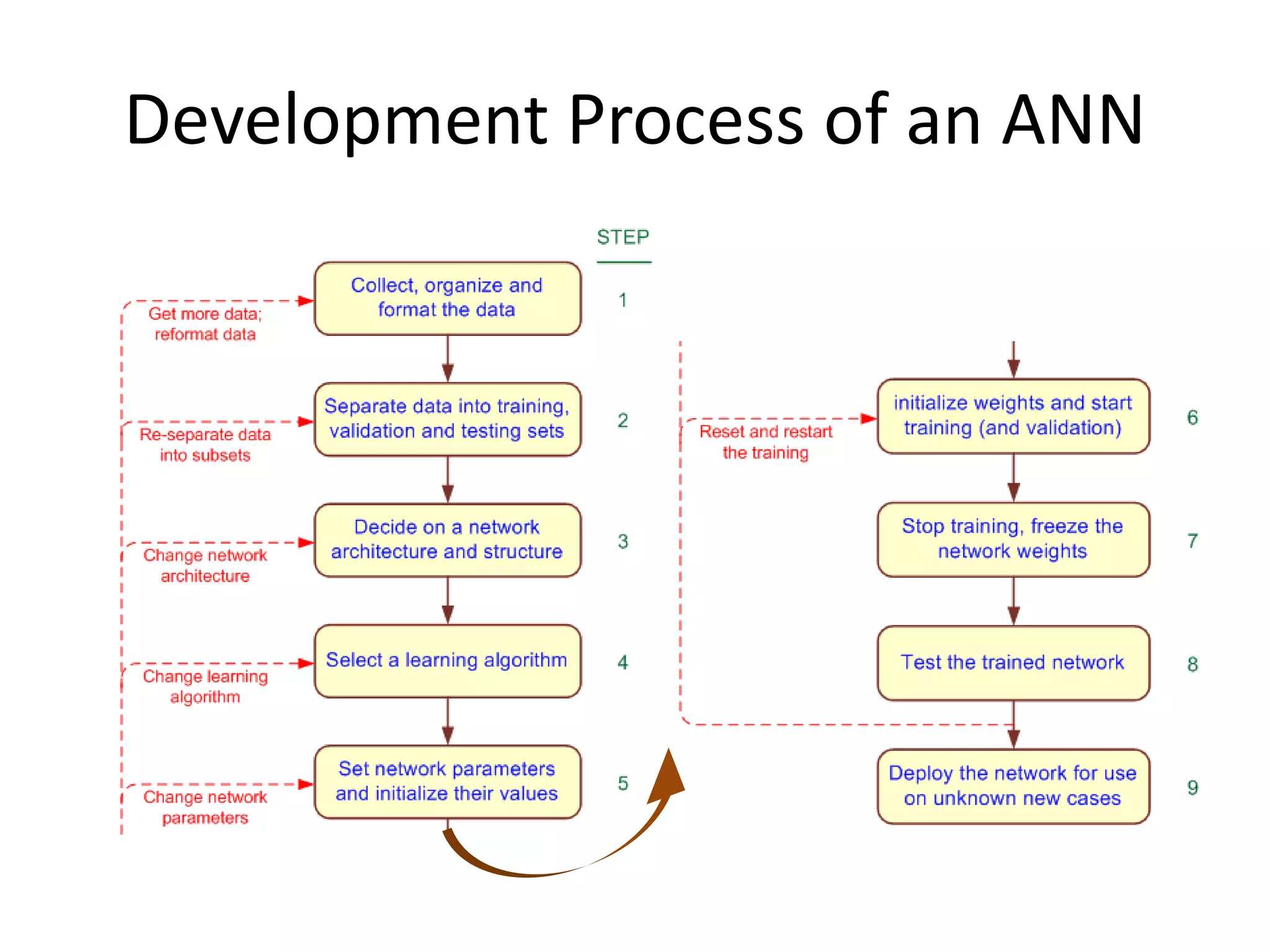

The document provides an overview of artificial neural networks (ANNs) and their application in data mining for classification and prediction tasks. It discusses the significance of decision trees and neural network architectures while outlining the steps involved in developing ANN applications, including data preparation, training, testing, and implementation. Additionally, it highlights the benefits and limitations of ANNs, focusing on their effectiveness in handling complex, noisy data while addressing challenges such as long training times and the black-box nature of these models.

![Artificial Neural Networks](https://image.slidesharecdn.com/ann5-180403045344/75/Artificial-Neural-Networks-for-Data-Mining-17-2048.jpg)

- [Artificial] neural networks are a class of powerful, general-purpose tools readily applied to:
  - Prediction
  - Classification
  - Clustering
- The biological neural net (the human brain) is the most powerful: we can generalize from experience.
- Computers are best at following predetermined instructions.
- Computerized neural nets attempt to bridge the gap, with applications such as:
  - Predicting time series in the financial world
  - Diagnosing medical conditions
  - Identifying clusters of valuable customers
  - Fraud detection
  - Etc.

![Neural Network Classifier](https://image.slidesharecdn.com/ann5-180403045344/75/Artificial-Neural-Networks-for-Data-Mining-22-2048.jpg)

- Input: classification data, i.e. data that contains a classification (class-label) attribute.
- As in any classification problem, the data is divided into training data and testing data.
- All data must be normalized: every attribute value in the database is rescaled to lie in the interval [0, 1] or [-1, 1], since a neural network works with data in one of these ranges.
- Two basic normalization techniques:
  1. Max-Min normalization
  2. Decimal scaling normalization
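The slide names the two normalization techniques and the training/testing split but does not give formulas. The sketch below assumes the standard textbook definitions: Max-Min normalization maps an attribute's range [min, max] linearly onto [new_min, new_max] via v' = (v - min) / (max - min) * (new_max - new_min) + new_min, and decimal scaling divides every value by 10^j, where j is the smallest integer that makes every |v'| < 1. The function names, the 30% test fraction, the fixed seed, and the sample values are all hypothetical choices for illustration.

```python
import random
from typing import List, Sequence, Tuple


def min_max_normalize(values: Sequence[float],
                      new_min: float = 0.0,
                      new_max: float = 1.0) -> List[float]:
    """Max-Min normalization: map [min, max] linearly onto [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant attribute: map every value to new_min to avoid dividing by zero.
        return [new_min for _ in values]
    # Divide first so that min maps exactly to new_min and max to new_max.
    return [(v - lo) / (hi - lo) * (new_max - new_min) + new_min for v in values]


def decimal_scaling_normalize(values: Sequence[float]) -> List[float]:
    """Decimal scaling: divide by 10**j, where j is the smallest integer
    such that every scaled absolute value is below 1."""
    largest = max(abs(v) for v in values)
    j = 0
    while largest / 10 ** j >= 1:
        j += 1
    return [v / 10 ** j for v in values]


def train_test_split(rows: Sequence, test_fraction: float = 0.3,
                     seed: int = 0) -> Tuple[list, list]:
    """Shuffle rows reproducibly, then hold out test_fraction of them for testing.
    Returns (training rows, testing rows)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


if __name__ == "__main__":
    incomes = [12000, 35000, 73600, 98000]  # hypothetical attribute values
    print(min_max_normalize(incomes))         # rescaled into [0, 1]
    print(min_max_normalize(incomes, -1, 1))  # rescaled into [-1, 1]
    print(decimal_scaling_normalize([-986, 217, 45]))  # [-0.986, 0.217, 0.045]
    train, test = train_test_split(list(range(10)))
    print(len(train), len(test))              # 7 3
```

In practice the minimum and maximum (or the scaling exponent j) would be computed on the training data and then reused to normalize the testing data, so that both sets are transformed identically.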