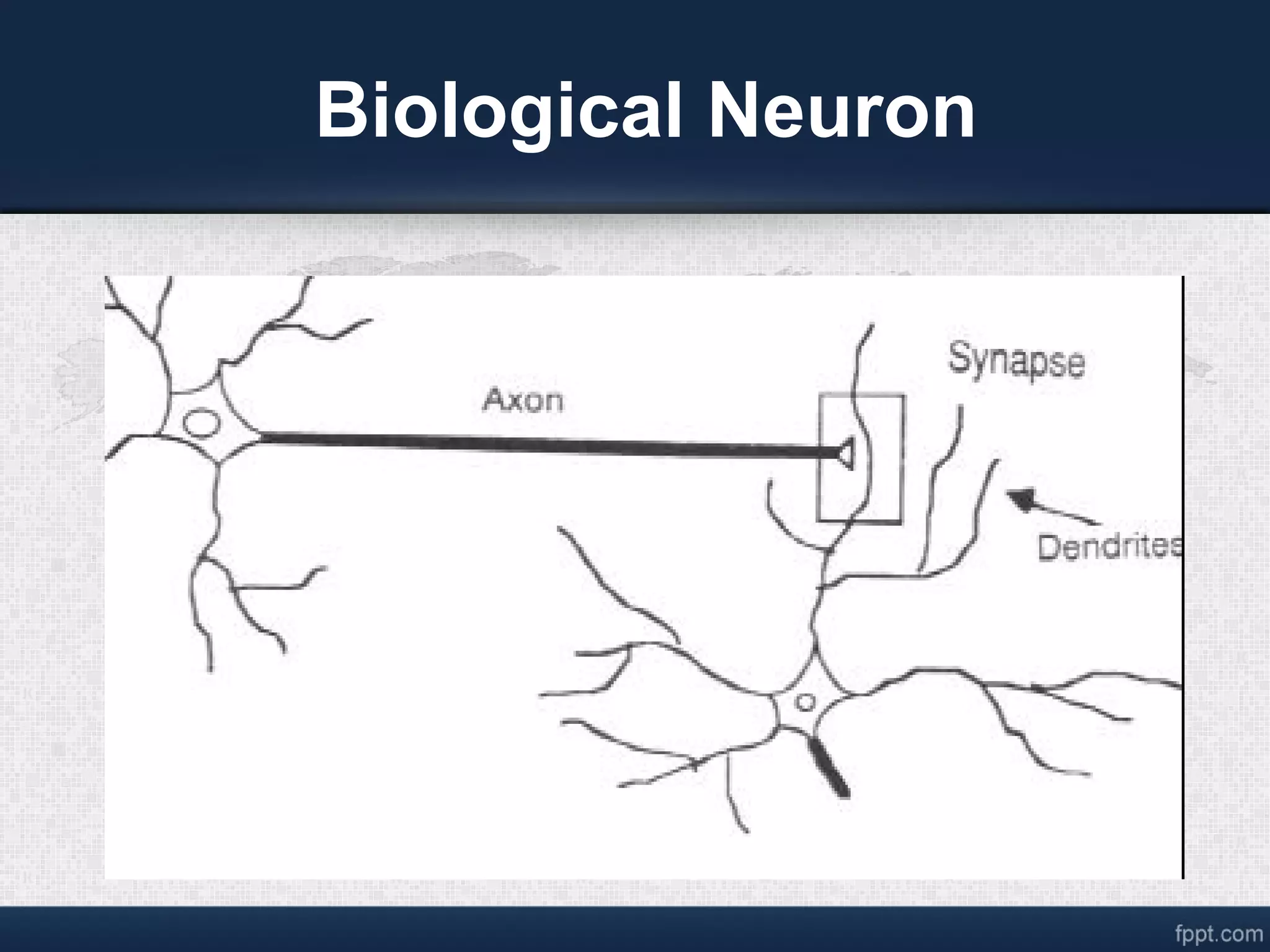

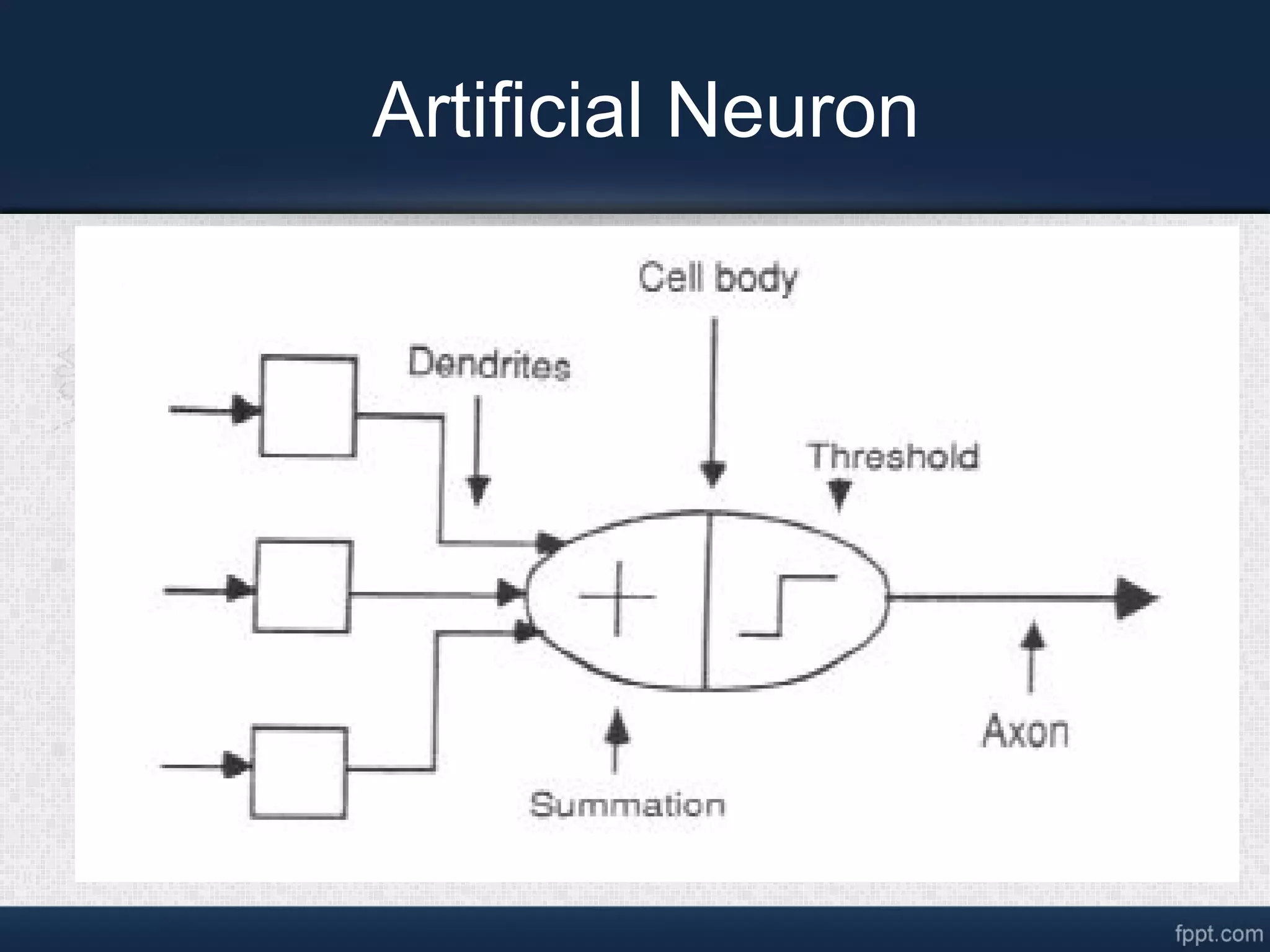

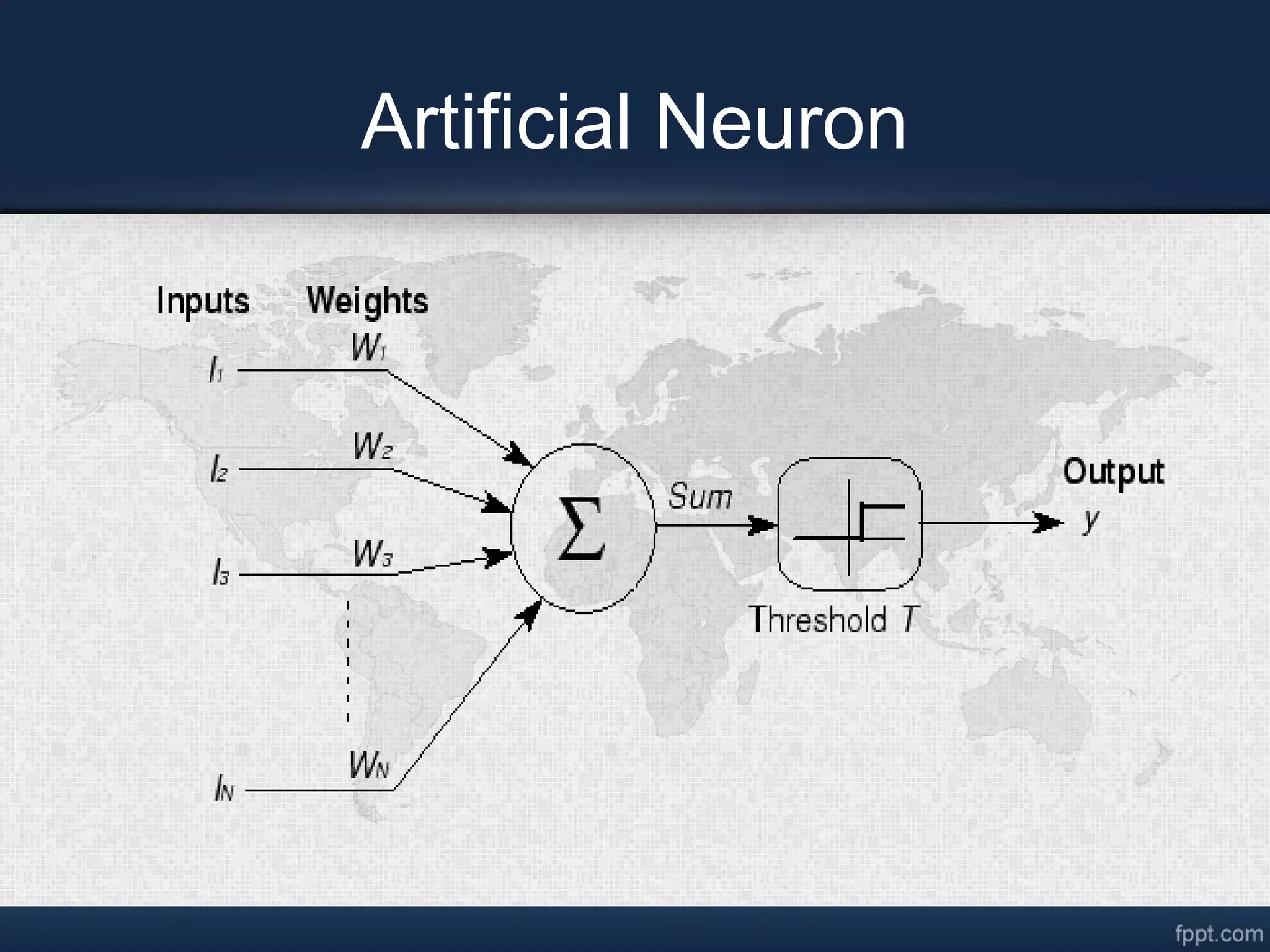

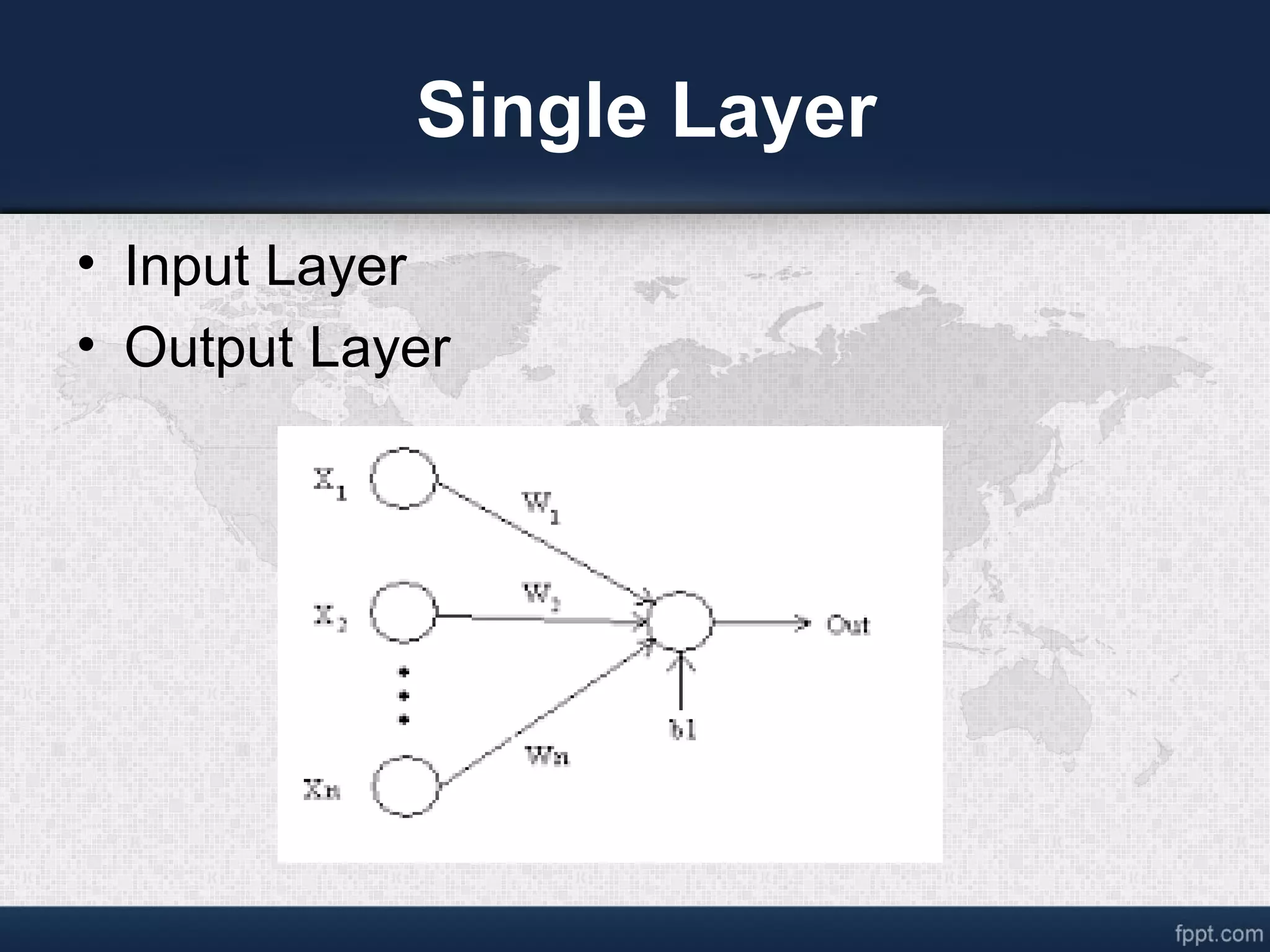

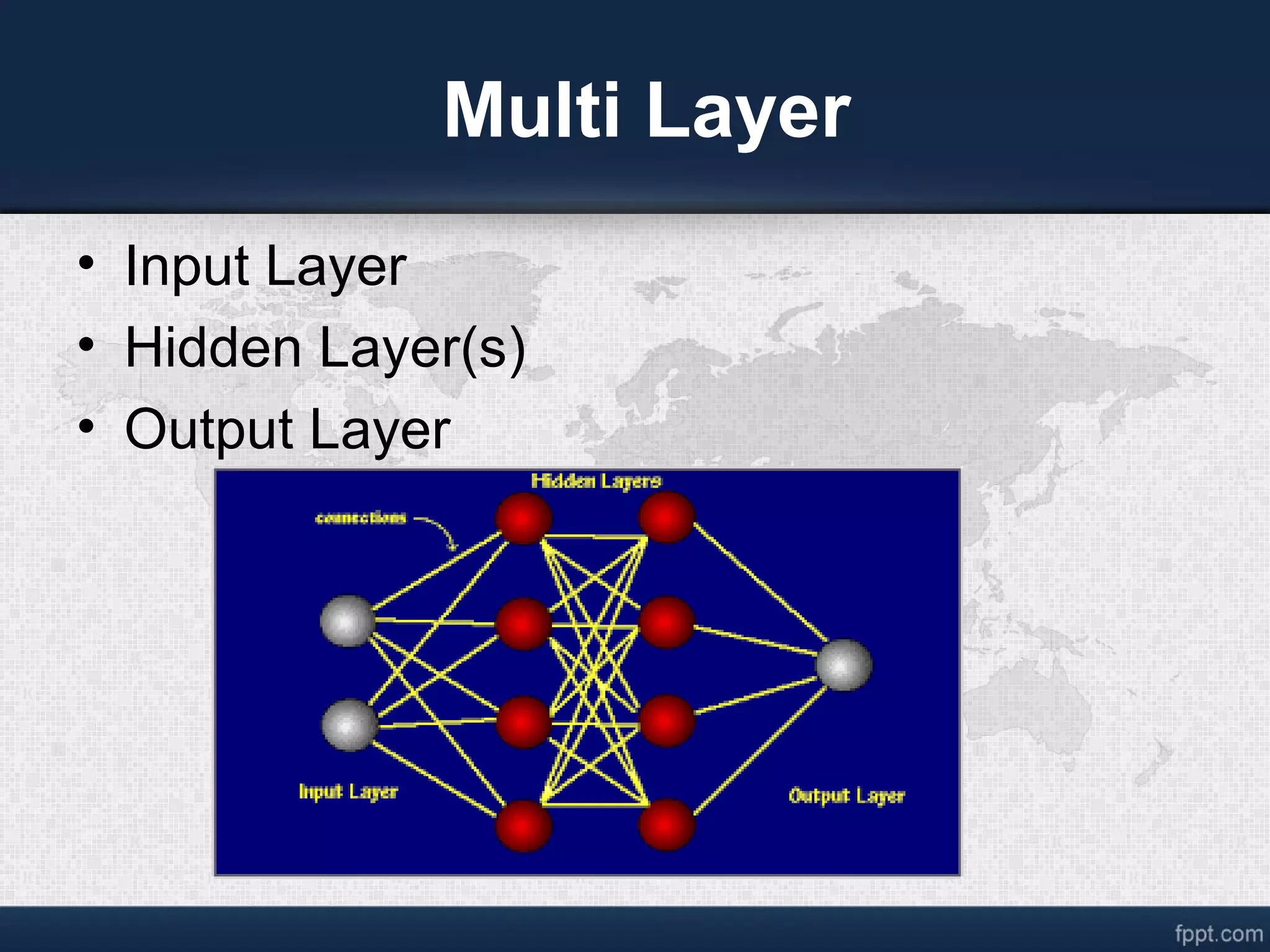

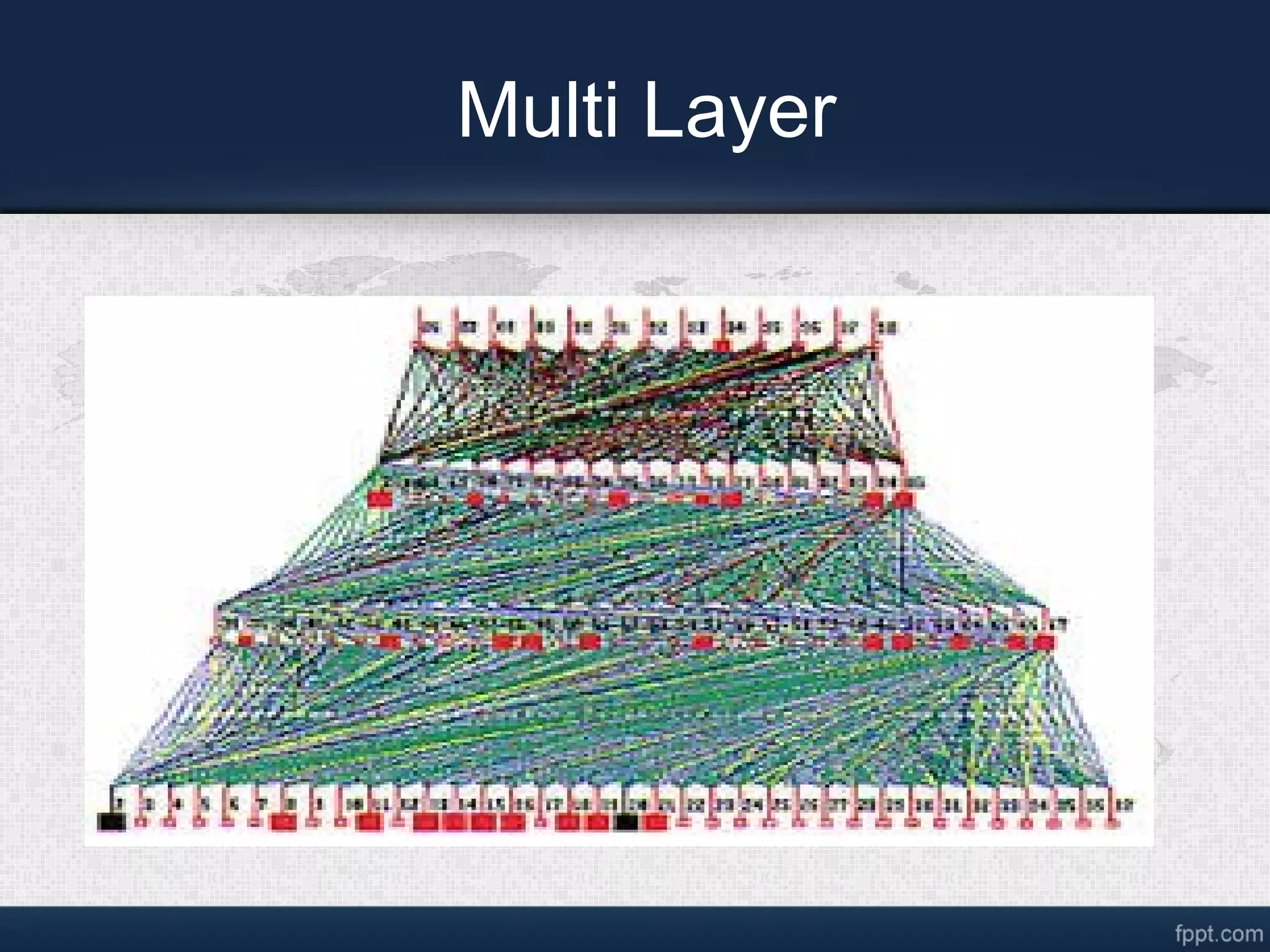

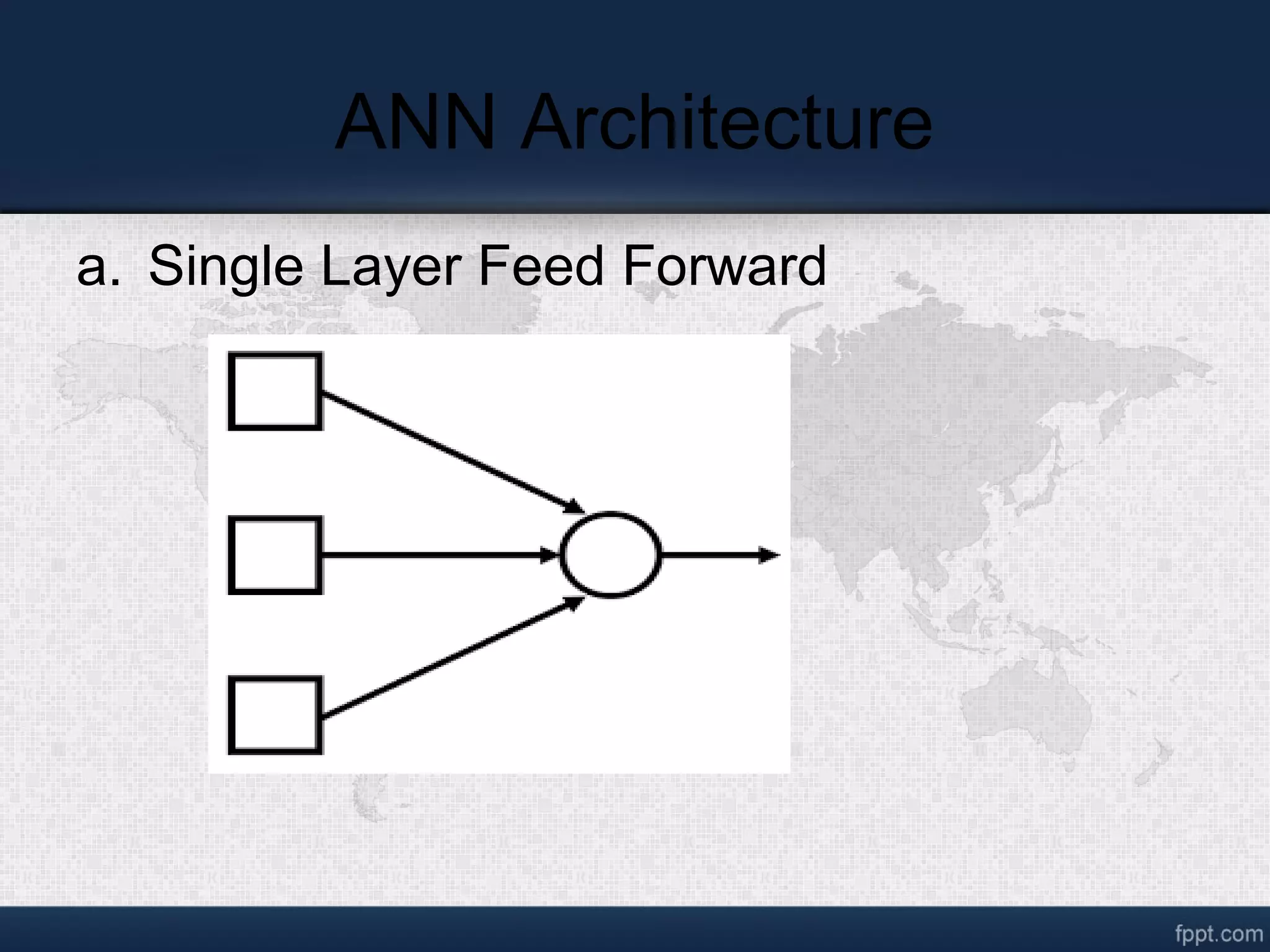

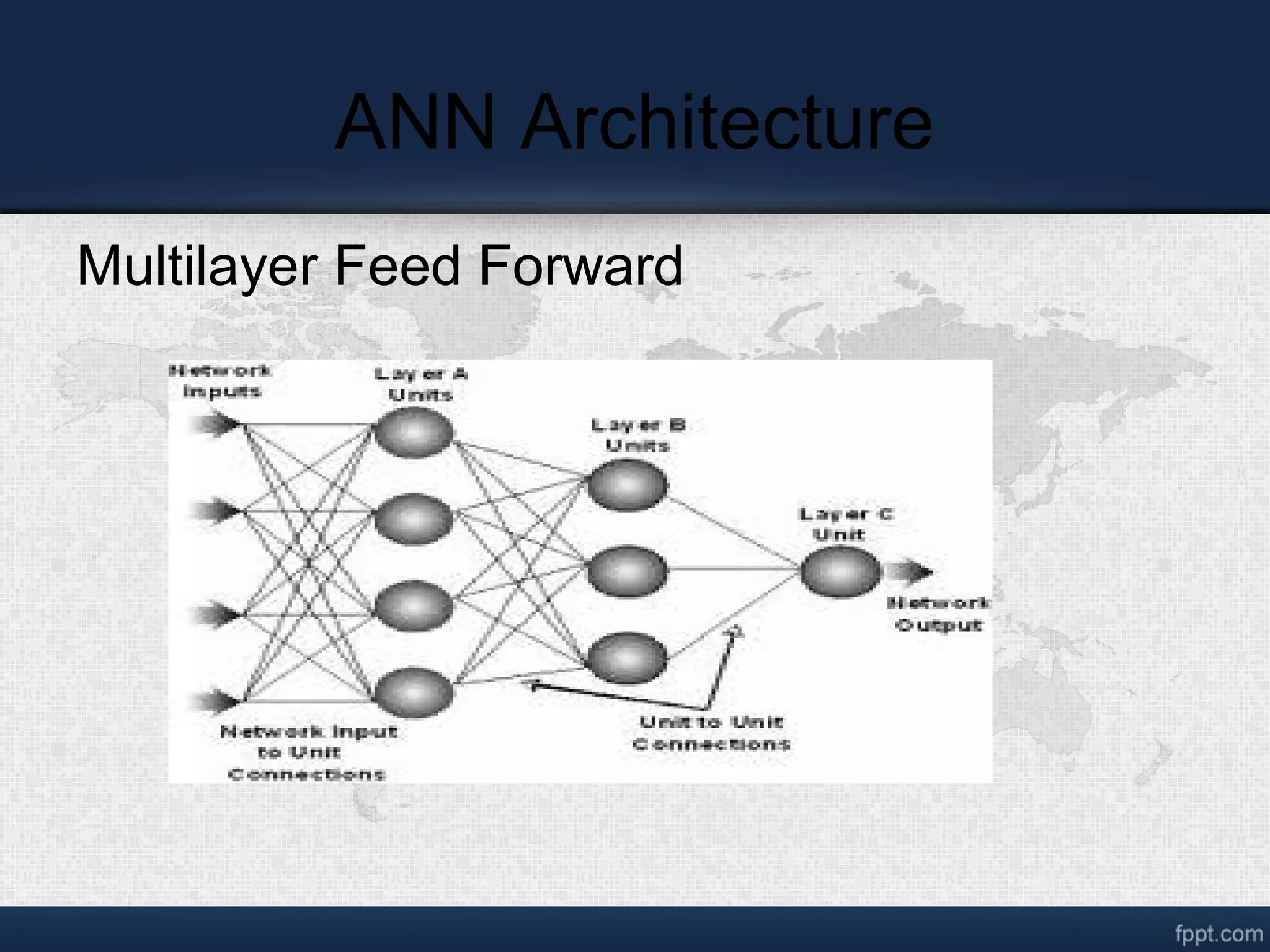

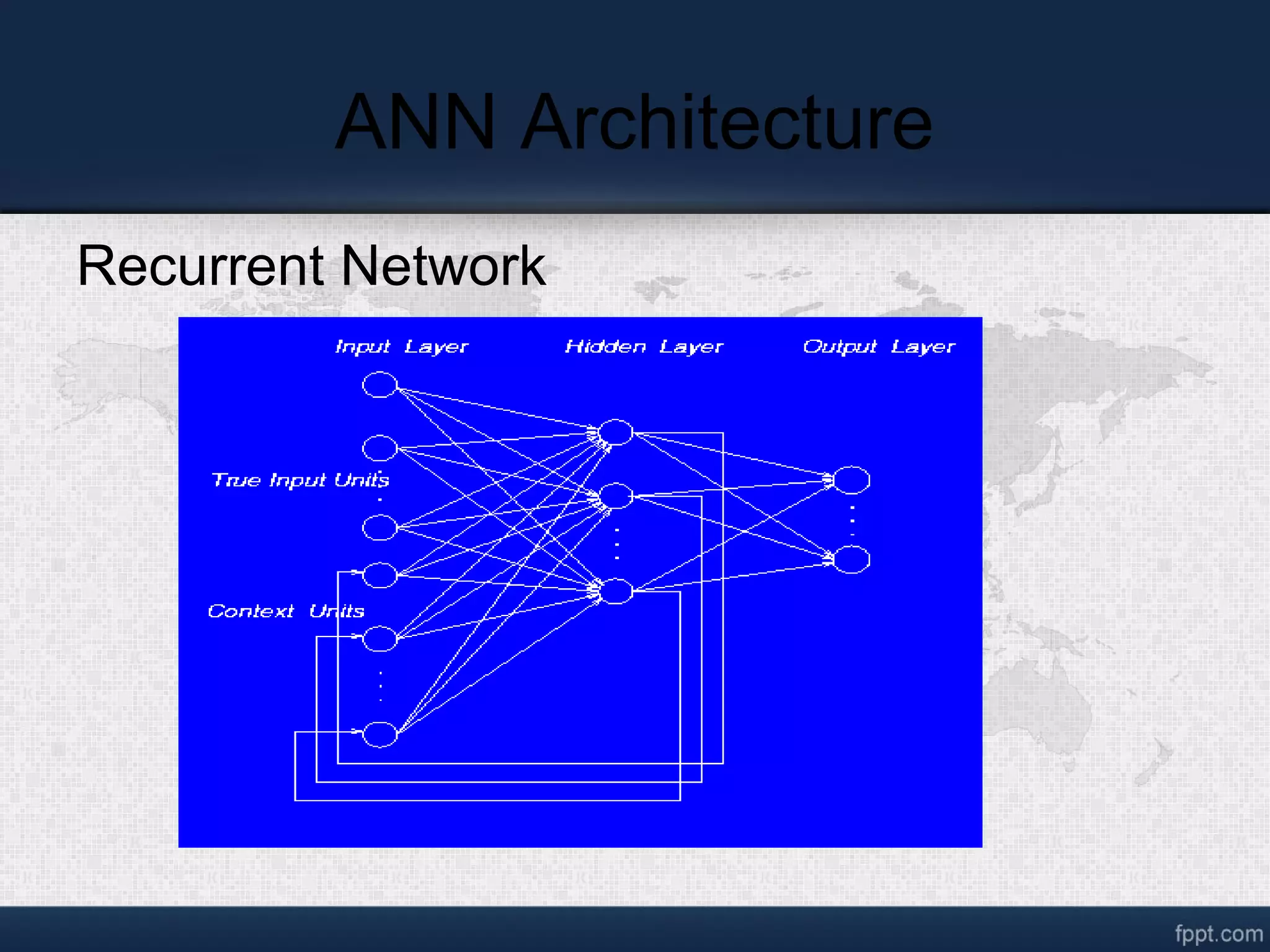

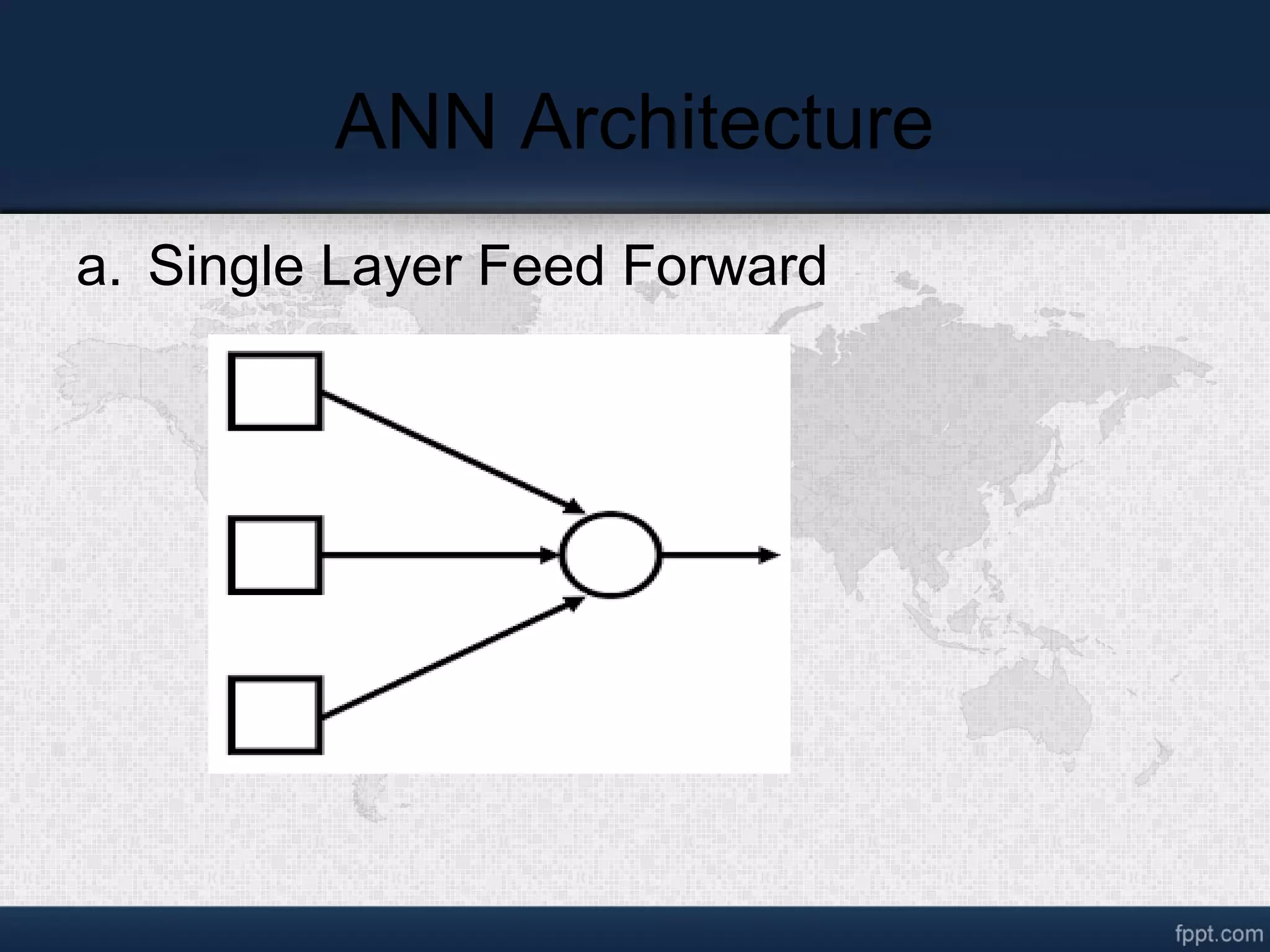

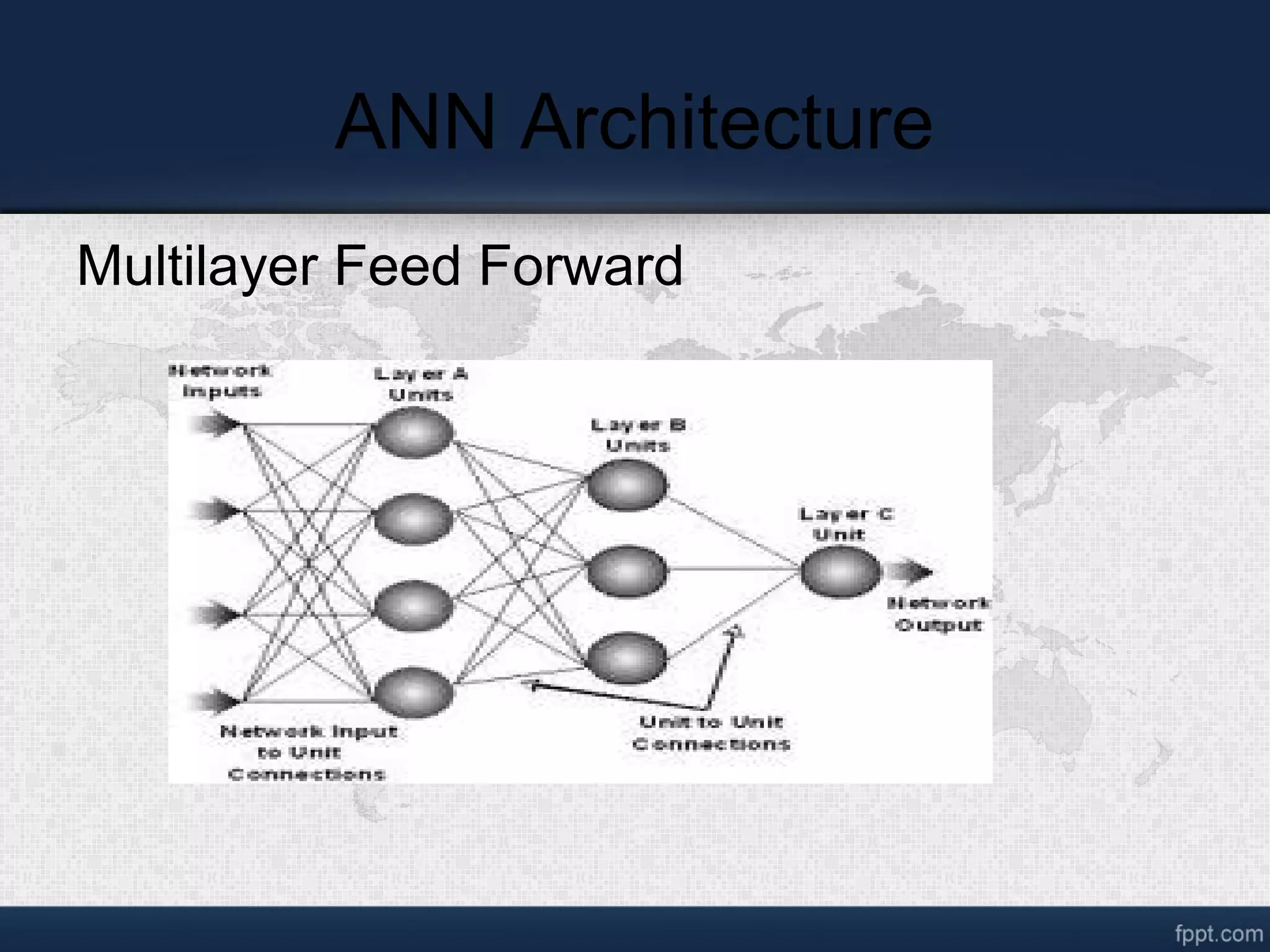

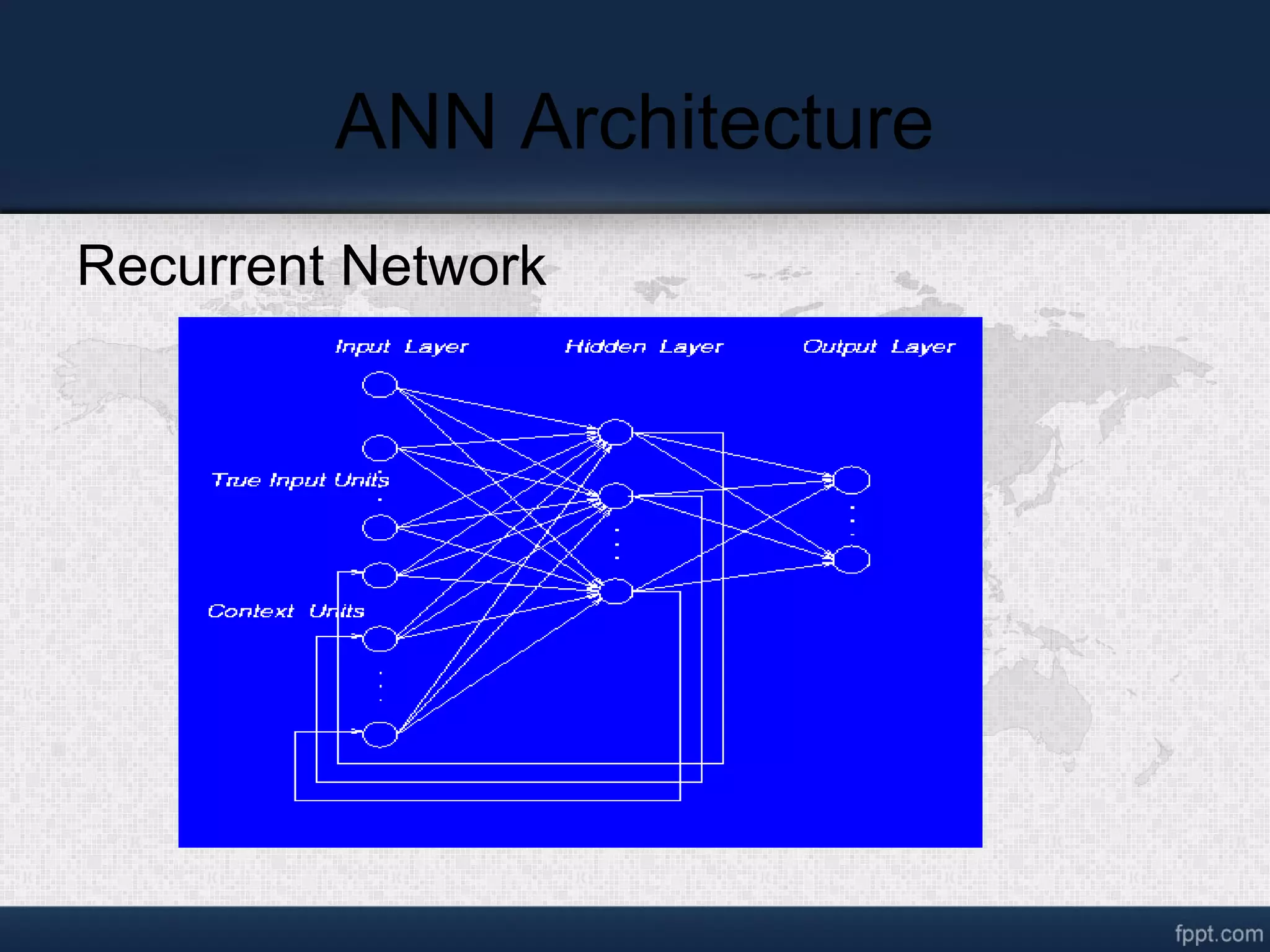

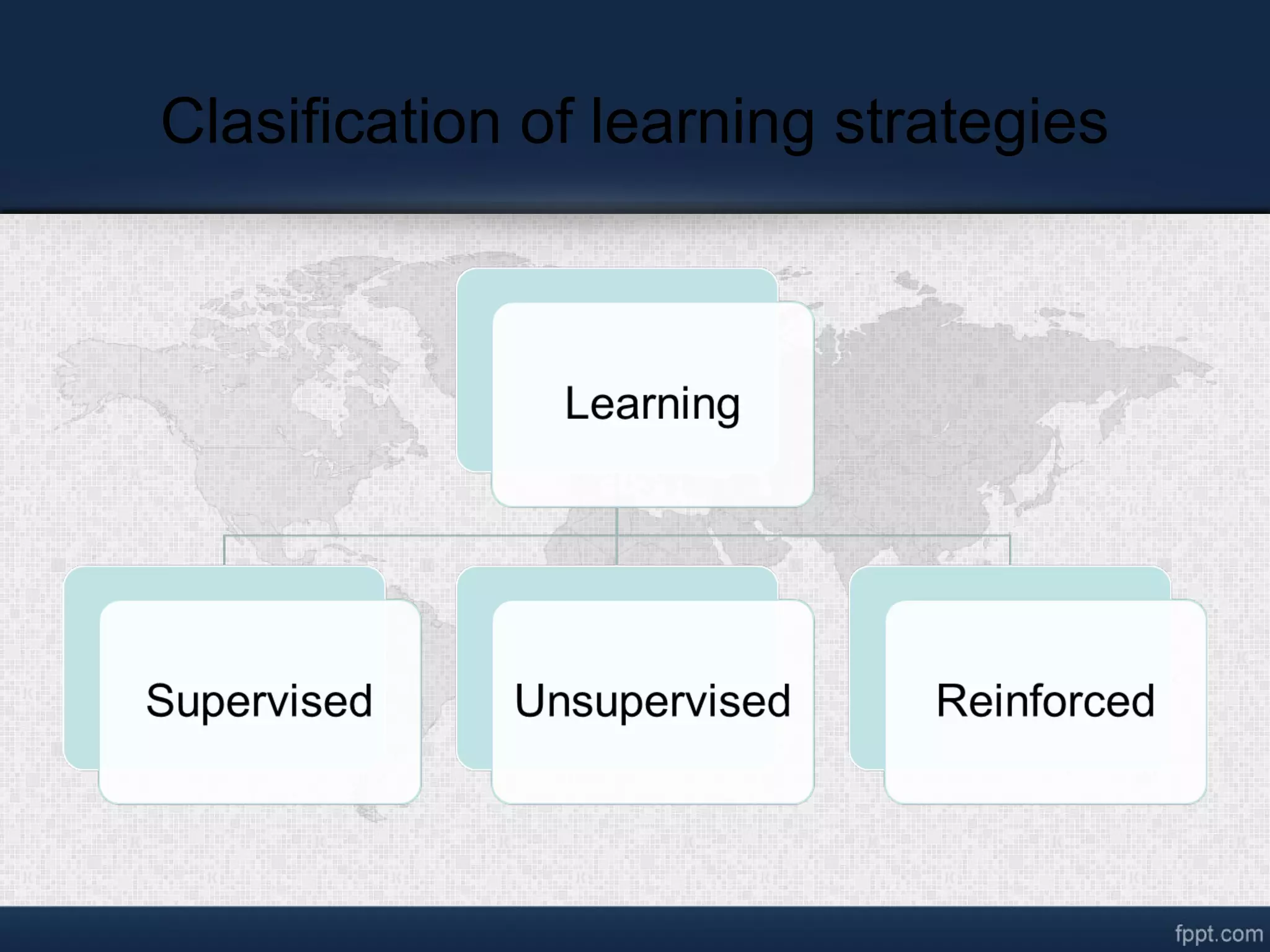

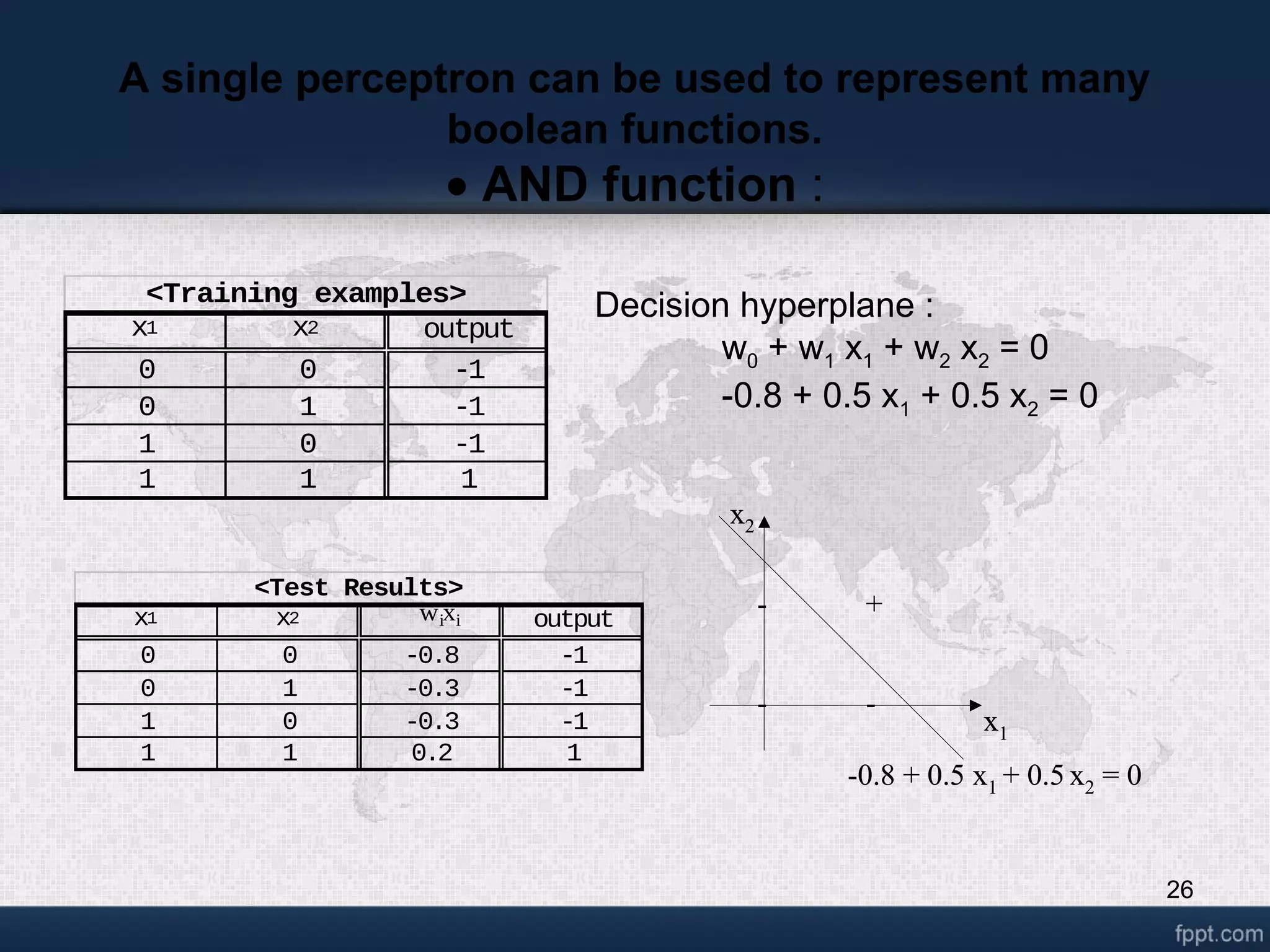

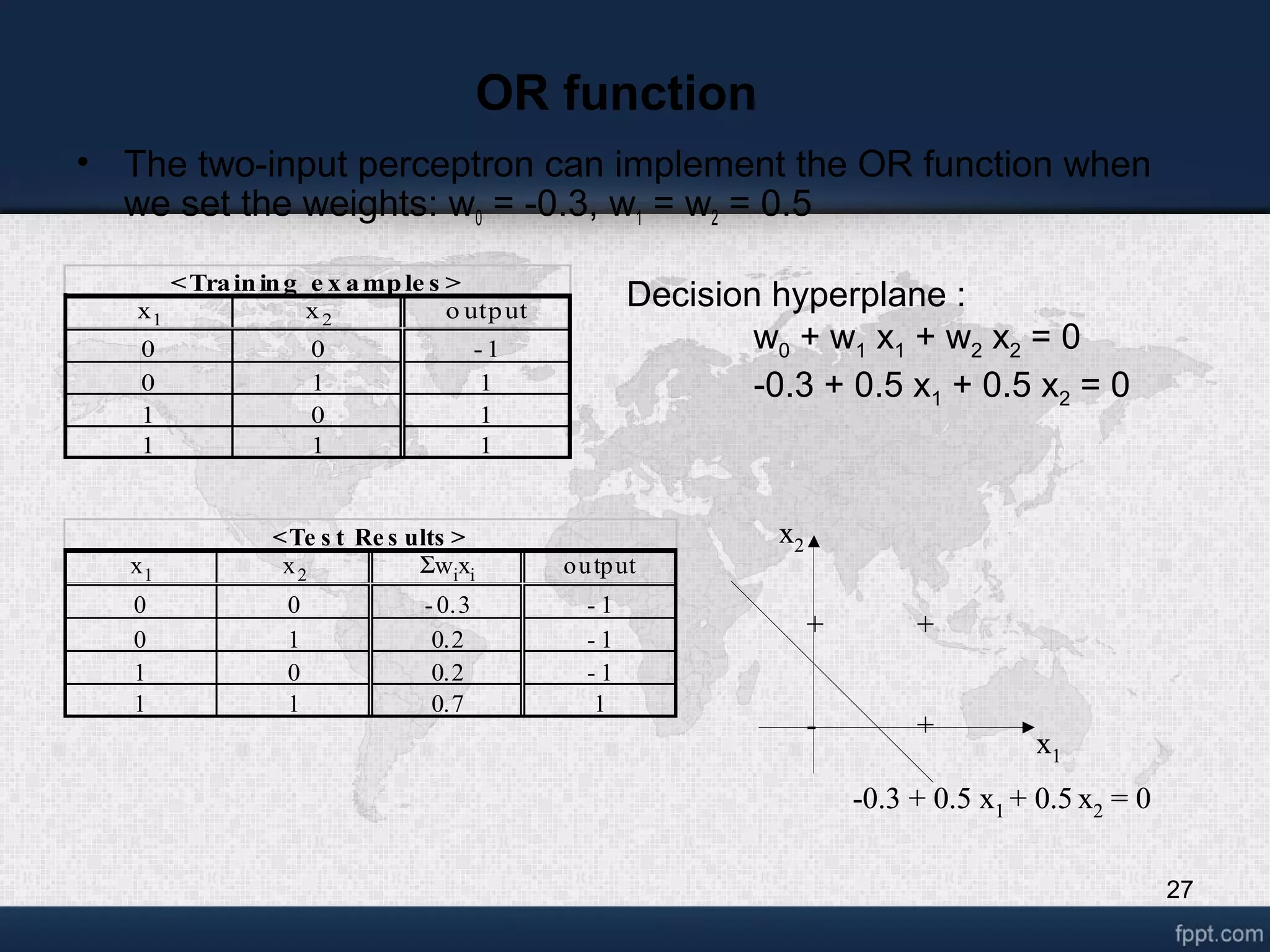

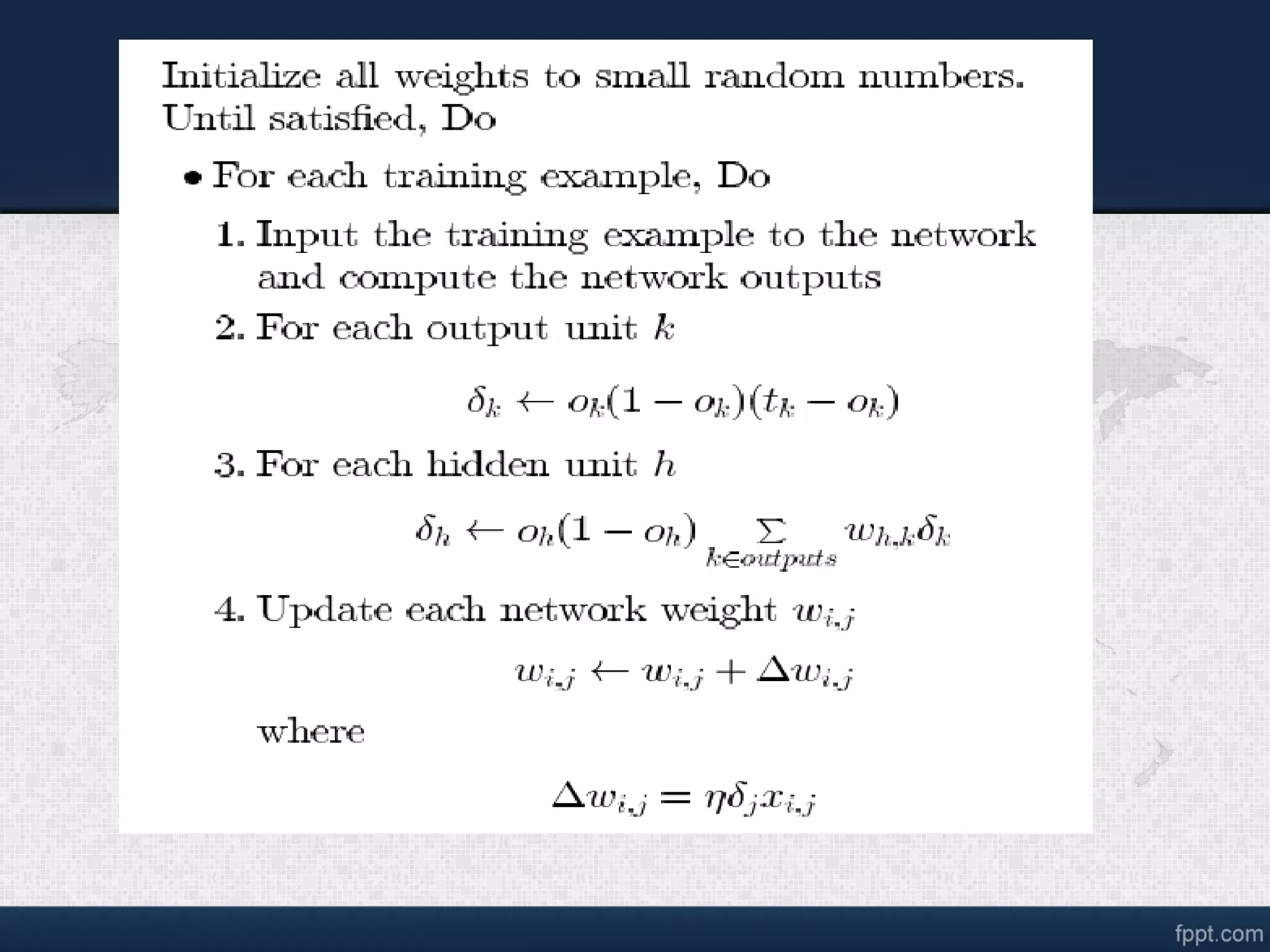

Artificial neural networks (ANNs) are computational models inspired by biological neural networks. ANNs can process large numbers of inputs and learn from data by adjusting the weights of the connections between their units, in a way loosely similar to how the human brain learns. Common ANN architectures include single-layer feedforward networks, multilayer feedforward networks, and recurrent networks. ANNs can be trained with supervised, unsupervised, or reinforcement learning. The backpropagation algorithm is commonly used to train multilayer networks: it propagates the error measured at the output backwards through the layers to determine how each weight should be adjusted. Developing an ANN application involves collecting data, separating it into training and testing sets, designing the network architecture, initializing the parameters (weights), transforming the data, training the network with an algorithm such as backpropagation, testing its performance on new data, and
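The development steps and the backpropagation idea summarized above can be sketched end to end in plain Python. This is a minimal, hypothetical illustration, not the method from the slides. It assumes a toy two-feature dataset (`make_data`), an 80/20 train/test split, standardization as the data transformation, one sigmoid hidden layer, a cross-entropy loss, and stochastic gradient descent driven by backpropagated gradients. All names (`TwoLayerNet`, `make_data`, `split`, `standardize`, `accuracy`) are illustrative only.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TwoLayerNet:
    """Hypothetical multilayer feedforward net: inputs -> sigmoid hidden layer -> one sigmoid output."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        # Initialize weights to small random values; biases start at zero.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Forward pass: hidden activations h, then the output y in (0, 1).
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def loss(self, data):
        # Mean binary cross-entropy; y is clipped away from 0 and 1 to keep log finite.
        total = 0.0
        for x, t in data:
            y = min(max(self.forward(x)[1], 1e-12), 1.0 - 1e-12)
            total -= t * math.log(y) + (1.0 - t) * math.log(1.0 - y)
        return total / len(data)

    def gradients(self, data):
        """Backpropagation: send the output error backwards to get dLoss/dparameter."""
        n = len(data)
        g_w1 = [[0.0] * len(row) for row in self.w1]
        g_b1 = [0.0] * len(self.b1)
        g_w2 = [0.0] * len(self.w2)
        g_b2 = 0.0
        for x, t in data:
            h, y = self.forward(x)
            delta_out = y - t  # dLoss/dz at the output for sigmoid + cross-entropy
            g_b2 += delta_out / n
            for j, hj in enumerate(h):
                g_w2[j] += delta_out * hj / n
                # Error reaching hidden unit j through w2[j], times the sigmoid derivative.
                delta_h = delta_out * self.w2[j] * hj * (1.0 - hj)
                g_b1[j] += delta_h / n
                for i, xi in enumerate(x):
                    g_w1[j][i] += delta_h * xi / n
        return g_w1, g_b1, g_w2, g_b2

    def step(self, grads, lr):
        # Gradient-descent update: move every parameter against its gradient.
        g_w1, g_b1, g_w2, g_b2 = grads
        for j, row in enumerate(self.w1):
            for i in range(len(row)):
                row[i] -= lr * g_w1[j][i]
            self.b1[j] -= lr * g_b1[j]
            self.w2[j] -= lr * g_w2[j]
        self.b2 -= lr * g_b2

    def train(self, data, lr=0.5, epochs=200, seed=0):
        # Stochastic gradient descent: one backprop update per shuffled sample.
        rng = random.Random(seed)
        order = list(data)
        for _ in range(epochs):
            rng.shuffle(order)
            for sample in order:
                self.step(self.gradients([sample]), lr)


def make_data(n, seed=1):
    # Hypothetical toy data: label 1 when both coordinates have the same sign.
    rng = random.Random(seed)
    points = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n)]
    return [(p, 1.0 if p[0] * p[1] > 0 else 0.0) for p in points]


def split(data, train_frac=0.8):
    cut = int(len(data) * train_frac)
    return data[:cut], data[cut:]


def standardize(train, test):
    # Transform inputs using statistics from the training set only.
    dims = range(len(train[0][0]))
    mean = [sum(x[d] for x, _ in train) / len(train) for d in dims]
    std = [math.sqrt(sum((x[d] - mean[d]) ** 2 for x, _ in train) / len(train)) or 1.0
           for d in dims]

    def scale(rows):
        return [([(x[d] - mean[d]) / std[d] for d in dims], t) for x, t in rows]

    return scale(train), scale(test)


def accuracy(net, data):
    return sum((net.forward(x)[1] >= 0.5) == (t == 1.0) for x, t in data) / len(data)


if __name__ == "__main__":
    train_set, test_set = standardize(*split(make_data(200)))
    net = TwoLayerNet(n_in=2, n_hidden=8)
    print(f"train loss before: {net.loss(train_set):.3f}")
    net.train(train_set, lr=0.5, epochs=200)
    print(f"train loss after:  {net.loss(train_set):.3f}")
    print(f"test accuracy:     {accuracy(net, test_set):.2f}")
```

The same `gradients` method serves both full-batch and single-sample updates, so a hand-written backward pass like this one can be checked against finite differences of `loss` before it is trusted for training. The exact accuracy reached depends on the assumed learning rate, epoch count, and hidden-layer size.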