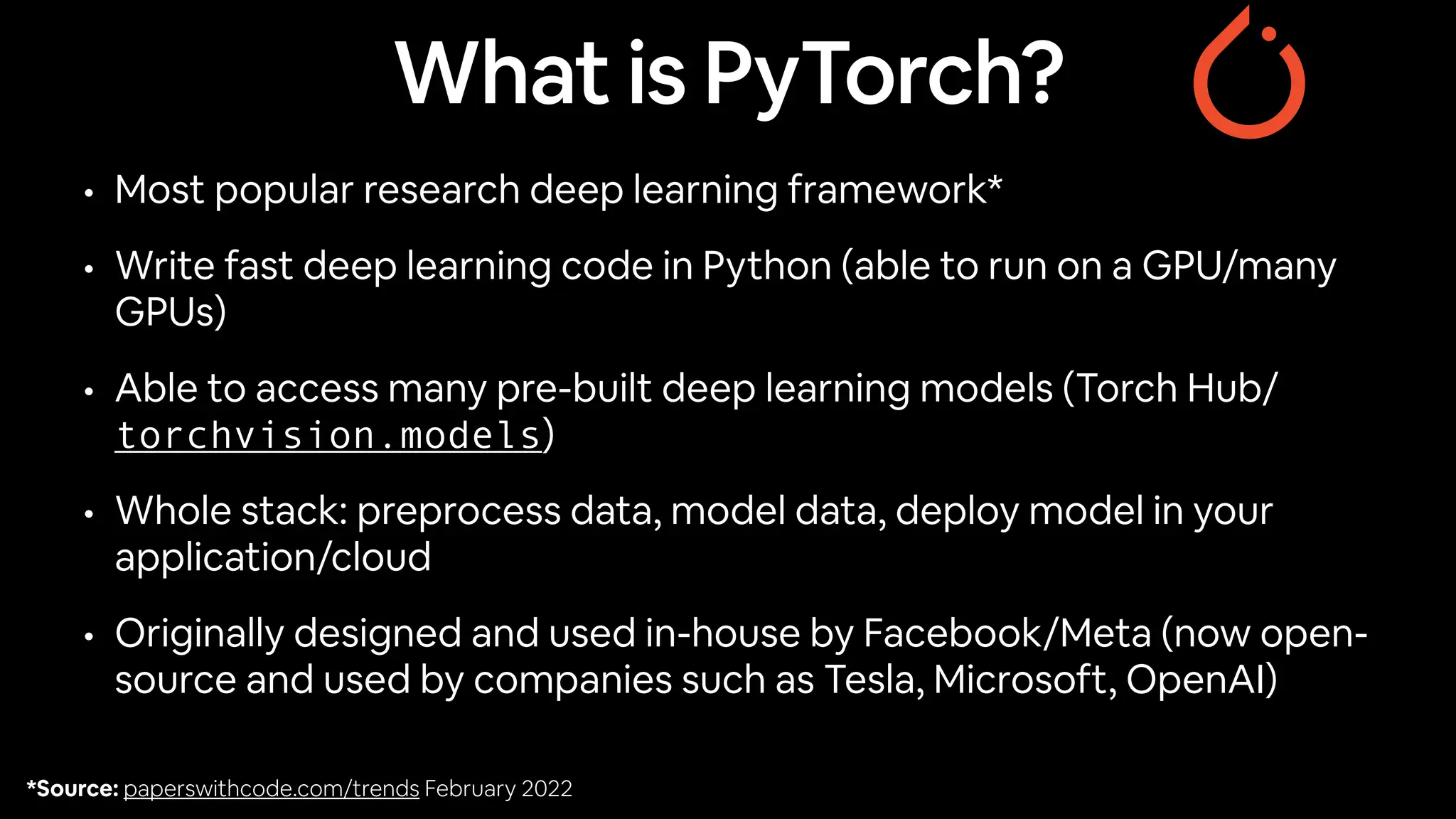

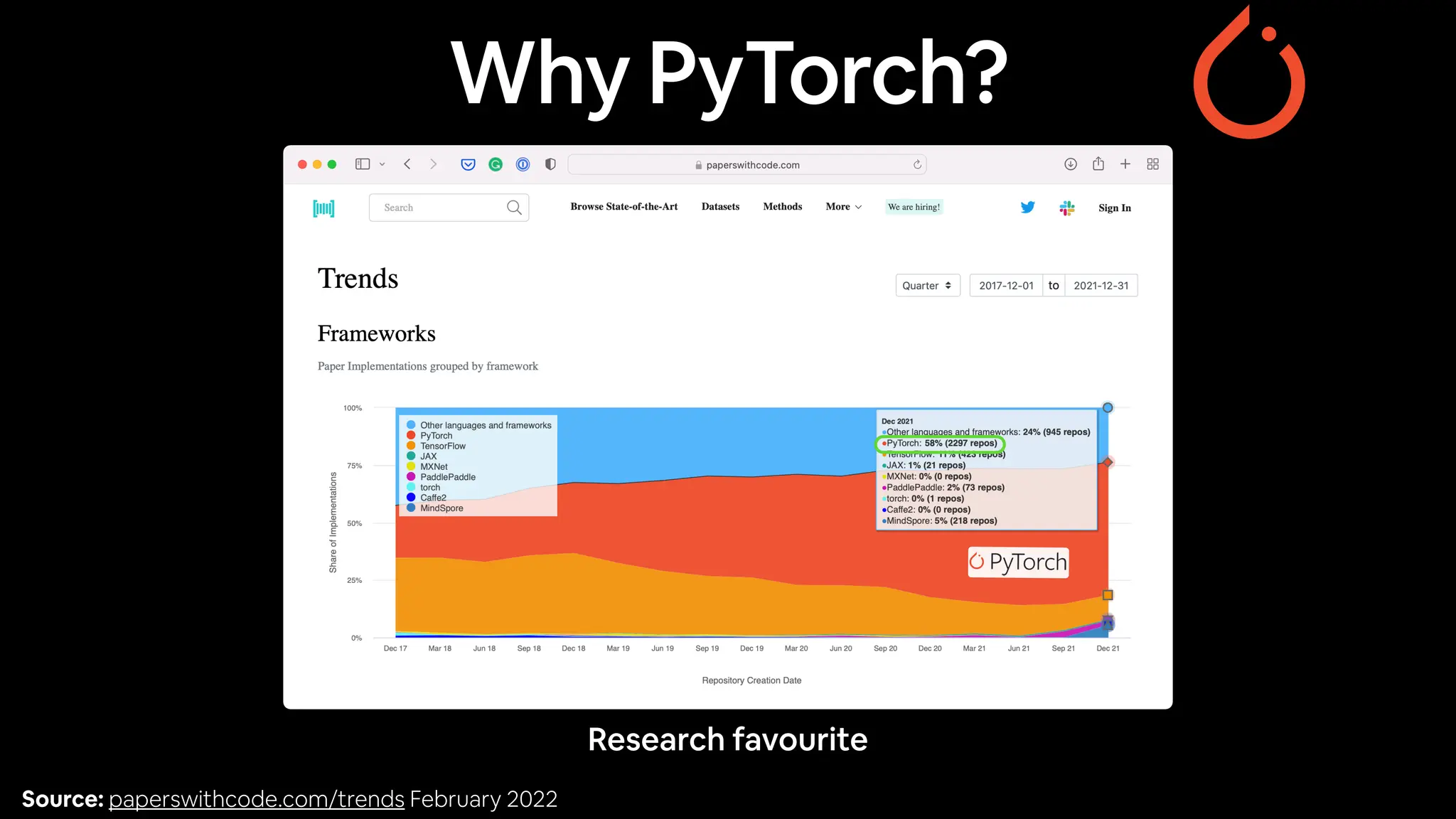

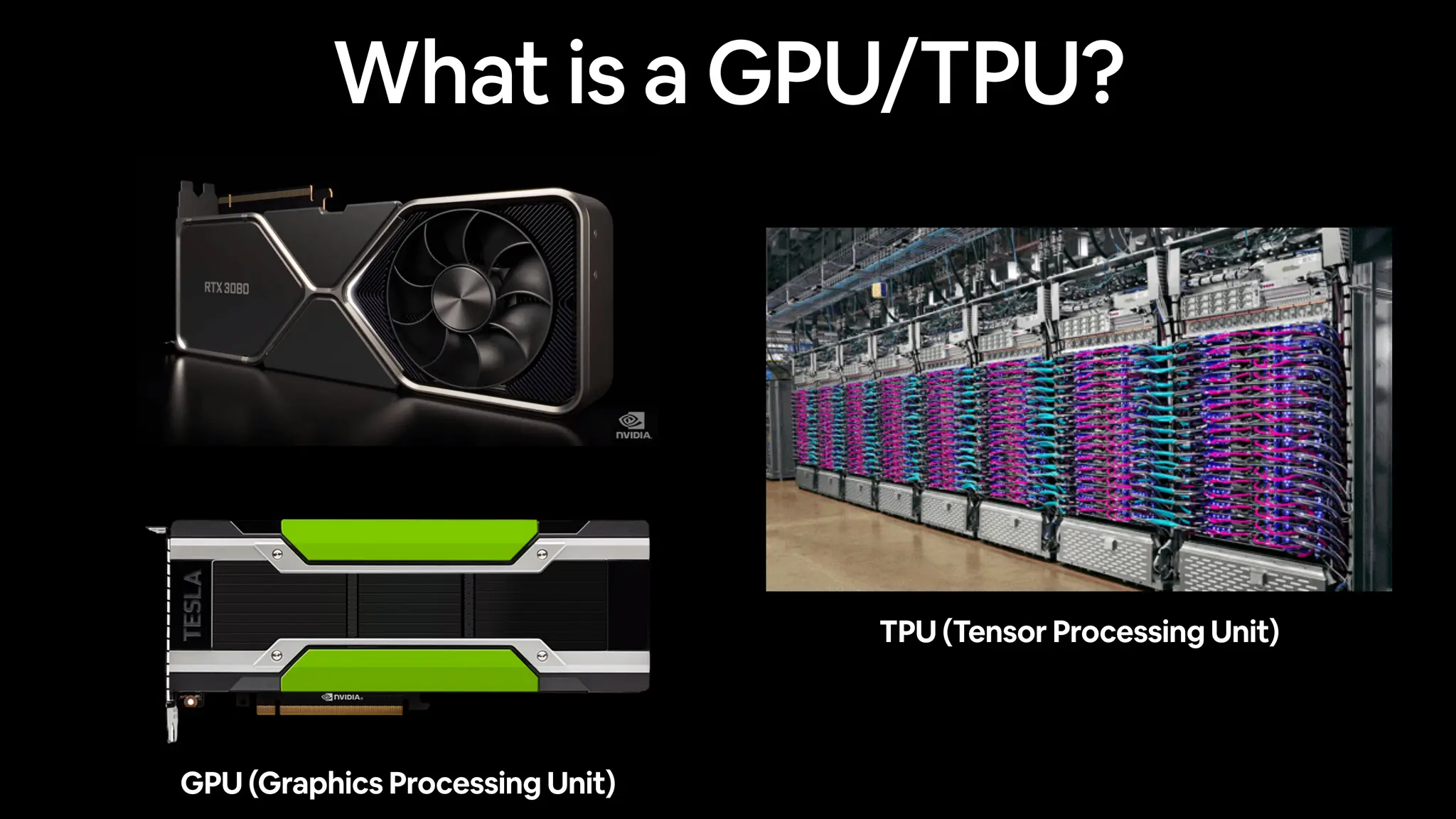

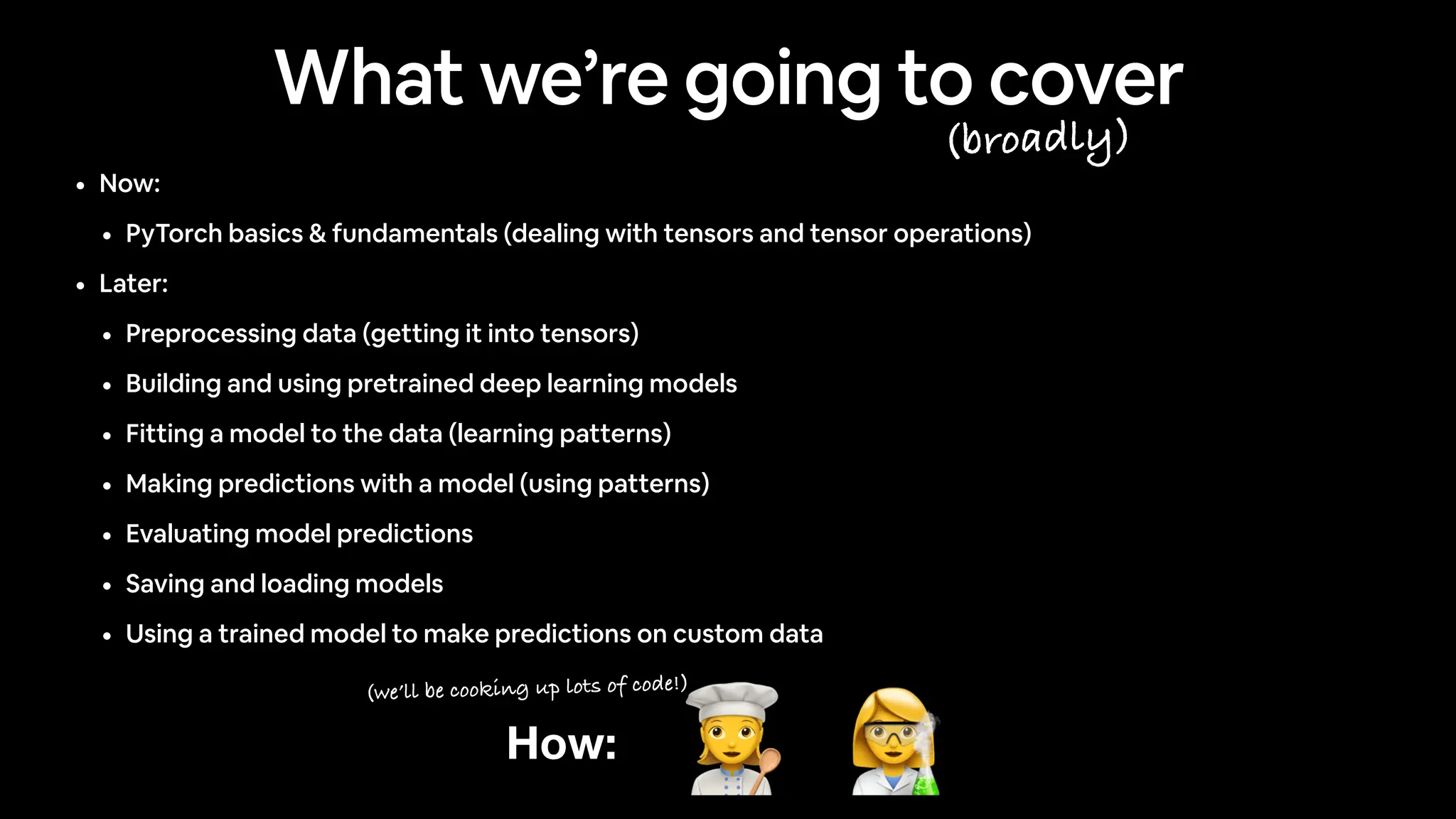

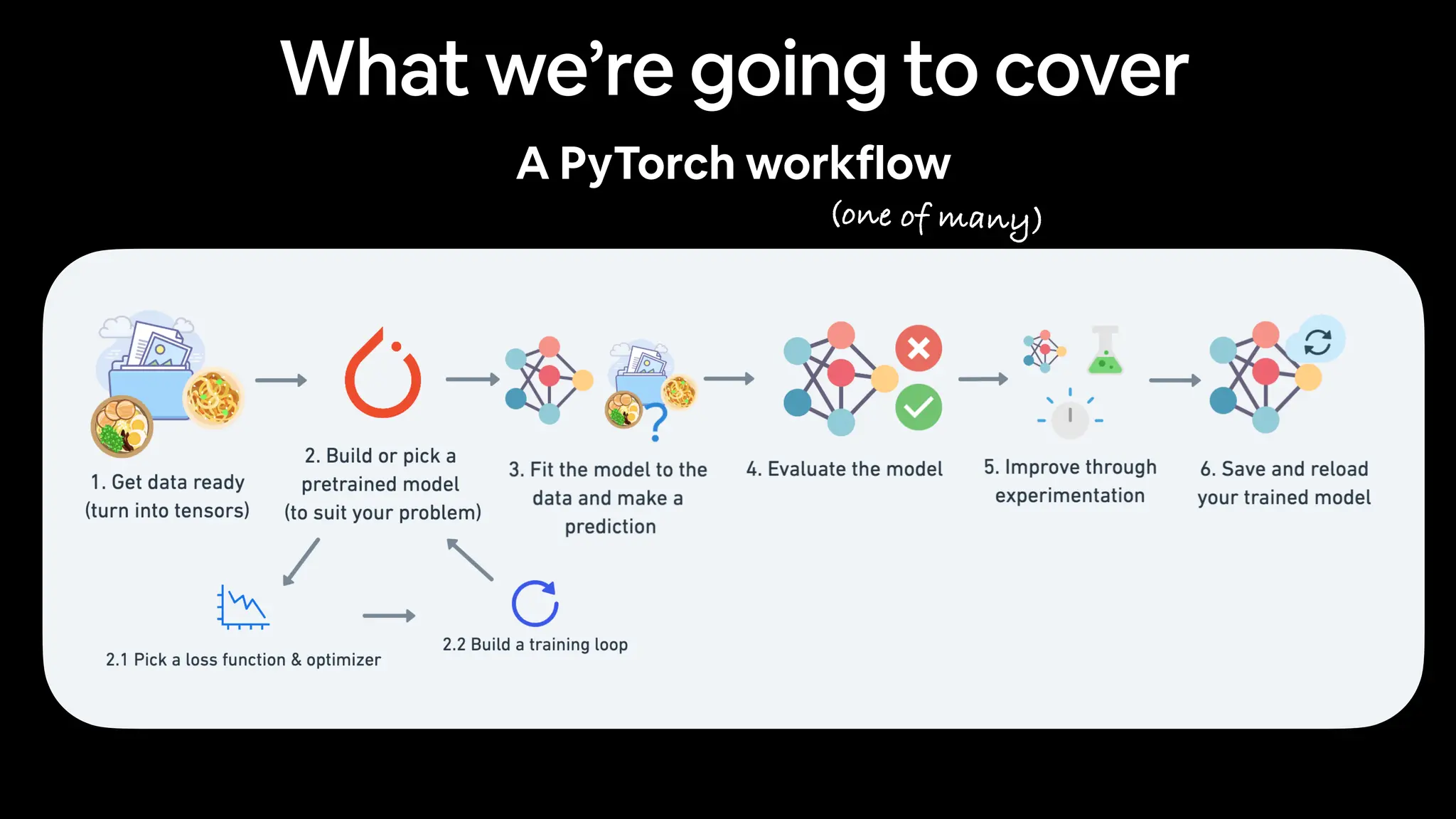

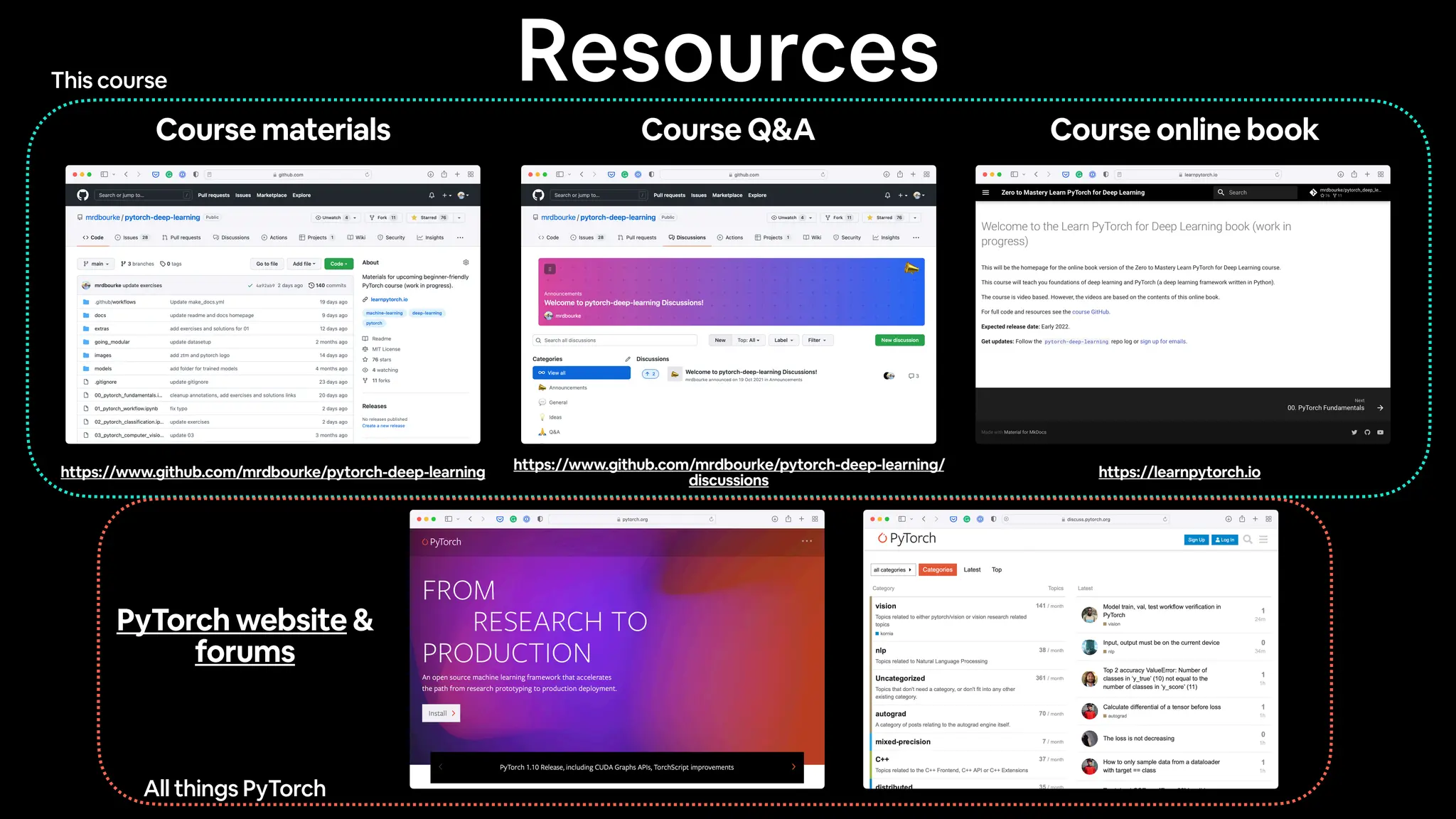

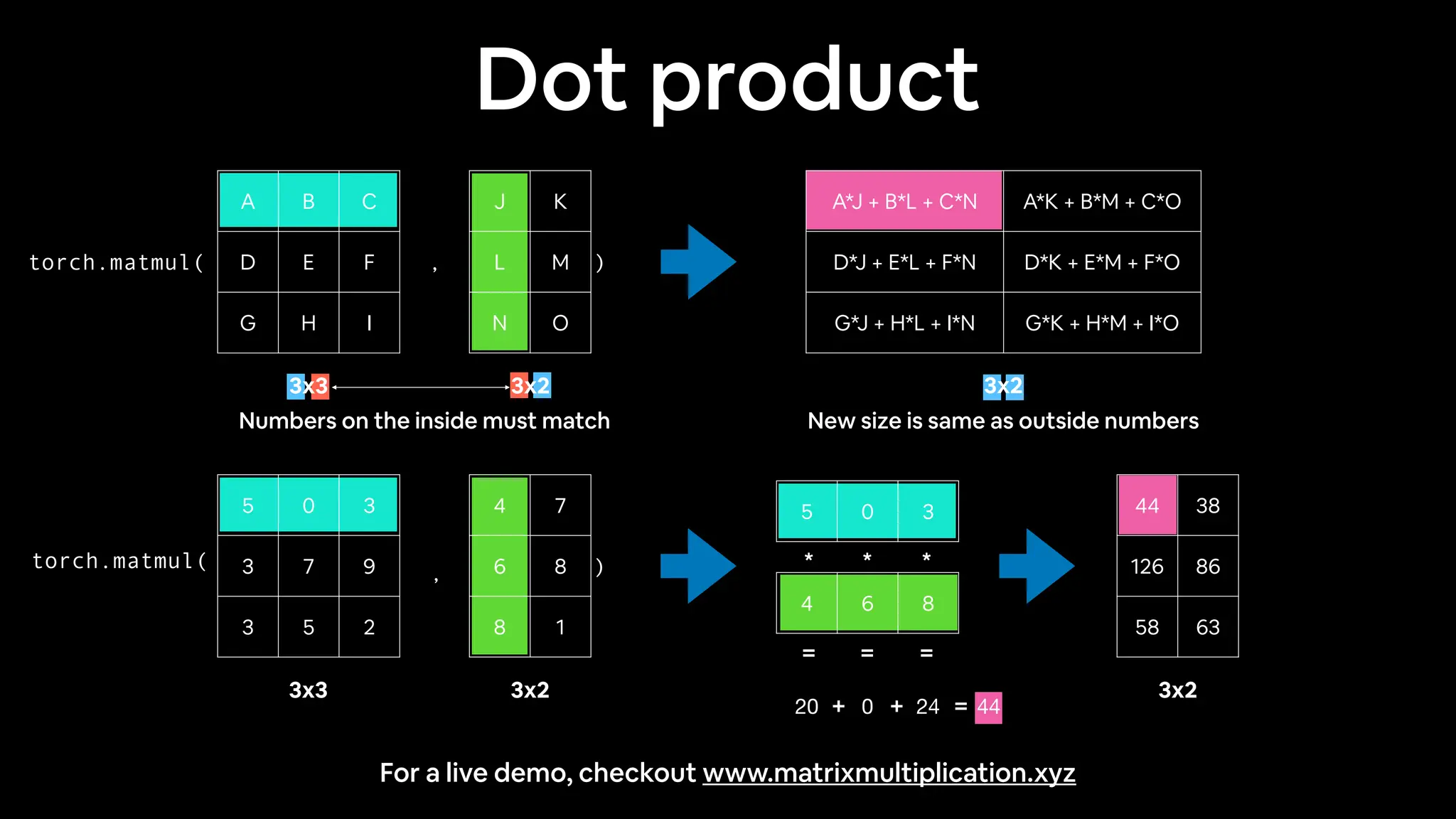

This document provides an overview of deep learning concepts using PyTorch. It begins with definitions of machine learning, deep learning, and neural networks. It then discusses what PyTorch is and why it is commonly used, as well as key PyTorch concepts like tensors. The document outlines what will be covered in the course, including PyTorch fundamentals, preprocessing data, building models, training models, evaluation, and making predictions. It encourages an experimental approach to learning and offers resources for further exploration.

![Neural Networks [[116, 78, 15], [117, 43, 96], [125, 87, 23], …, [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …, Inputs Numerical encoding Learns representation (patterns/features/weights) Representation outputs Outputs (a human can understand these) Ramen, Spaghetti Not a disaster “Hey Siri, what’s the weather today?” (choose the appropriate neural network for your problem) (before data gets used with a neural network, it needs to be turned into numbers) Each of these nodes is called a “hidden unit” or “neuron”.](https://image.slidesharecdn.com/00pytorchanddeeplearningfundamentals-231116134513-9c5ed4a1/75/00_pytorch_and_deep_learning_fundamentals-pdf-15-2048.jpg)

![Neural Networks [[116, 78, 15], [117, 43, 96], [125, 87, 23], …, [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …, Inputs Numerical encoding Learns representation (patterns/features/weights) Representation outputs Outputs (a human can understand these) Ramen, Spaghetti Not spam “Hey Siri, what’s the weather today?” (choose the appropriate neural network for your problem) (before data gets used with an algorithm, it needs to be turned into numbers) These are tensors!](https://image.slidesharecdn.com/00pytorchanddeeplearningfundamentals-231116134513-9c5ed4a1/75/00_pytorch_and_deep_learning_fundamentals-pdf-29-2048.jpg)

![[[116, 78, 15], [117, 43, 96], [125, 87, 23], …, [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …, Inputs Numerical encoding Learns representation (patterns/features/weights) Representation outputs Outputs Ramen, Spaghetti These are tensors!](https://image.slidesharecdn.com/00pytorchanddeeplearningfundamentals-231116134513-9c5ed4a1/75/00_pytorch_and_deep_learning_fundamentals-pdf-30-2048.jpg)
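
The last step in the slide above, going from representation outputs back to something a human can understand, can be sketched roughly as follows. The output values are copied from the slide; the third class name (`"other"`) and the variable names are assumptions made for illustration, since the slide only names ramen and spaghetti.

```python
import torch

# Representation outputs copied from the slide: one row per input example,
# one column per class.
outputs = torch.tensor([[0.983, 0.004, 0.013],
                        [0.110, 0.889, 0.001],
                        [0.023, 0.027, 0.985]])

# Hypothetical class names: only "ramen" and "spaghetti" appear in the slide,
# "other" is a placeholder for the unnamed third class.
class_names = ["ramen", "spaghetti", "other"]

# argmax along dim=1 picks the index of the highest score in each row.
pred_indices = outputs.argmax(dim=1)

# Map each index back to a human-readable label.
pred_labels = [class_names[i] for i in pred_indices.tolist()]
print(pred_labels)
```

Both the inputs and the outputs here are tensors; only the final lookup into `class_names` turns numbers back into labels.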

![tensor([[[1, 2, 3], [3, 6, 9], [2, 4, 5]]]) 0 1 2 0 1 2 tensor([[[1, 2, 3], [3, 6, 9], [2, 4, 5]]]) tensor([[[1, 2, 3], [3, 6, 9], [2, 4, 5]]]) torch.Size([1, 3, 3]) 0 1 2 Dimension (dim) dim=0 dim=1 dim=2 Tensor dimensions](https://image.slidesharecdn.com/00pytorchanddeeplearningfundamentals-231116134513-9c5ed4a1/75/00_pytorch_and_deep_learning_fundamentals-pdf-40-2048.jpg)
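
As a small sketch of the dimension layout in the slide above, the same tensor can be built and indexed one dimension at a time. The variable name `tensor` is just a convenient choice.

```python
import torch

# The tensor from the slide: 1 matrix, containing 3 rows, each with 3 elements.
tensor = torch.tensor([[[1, 2, 3],
                        [3, 6, 9],
                        [2, 4, 5]]])

print(tensor.shape)     # torch.Size([1, 3, 3])

# Indexing walks inward through the dimensions: dim=0, then dim=1, then dim=2.
print(tensor[0])        # the single 3x3 matrix along dim=0
print(tensor[0, 1])     # tensor([3, 6, 9]), the row at index 1 along dim=1
print(tensor[0, 1, 2])  # tensor(9), the element at index 2 along dim=2
```

Each entry in `torch.Size([1, 3, 3])` is the length of one dimension, read from the outermost bracket inward.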

![[[116, 78, 15], [117, 43, 96], [125, 87, 23], …, [[0.983, 0.004, 0.013], [0.110, 0.889, 0.001], [0.023, 0.027, 0.985], …, Inputs Numerical encoding Learns representation (patterns/features/weights) Representation outputs Outputs Ramen, Spaghetti [[0.092, 0.210, 0.415], [0.778, 0.929, 0.030], [0.019, 0.182, 0.555], …, 1. Initialise with random weights (only at beginning) 2. Show examples 3. Update representation outputs 4. Repeat with more examples Supervised learning (overview)](https://image.slidesharecdn.com/00pytorchanddeeplearningfundamentals-231116134513-9c5ed4a1/75/00_pytorch_and_deep_learning_fundamentals-pdf-42-2048.jpg)
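
The four steps in the slide above can be sketched as a minimal PyTorch training loop. This is an illustration under stated assumptions rather than the course's own code: the toy data (`y = 2x + 1`), the single `nn.Linear` layer, the learning rate, and the number of epochs are all chosen here for brevity.

```python
import torch
from torch import nn

torch.manual_seed(42)  # make the random initial weights reproducible

# Toy data (an assumption for illustration): targets follow y = 2x + 1.
X = torch.linspace(0, 1, 20).unsqueeze(dim=1)  # shape [20, 1]
y = 2 * X + 1

# 1. Initialise with random weights (nn.Linear does this on creation).
model = nn.Linear(in_features=1, out_features=1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

initial_loss = loss_fn(model(X), y).item()

# 4. Repeat with more examples (here: the same examples for 200 epochs).
for epoch in range(200):
    y_pred = model(X)          # 2. Show examples (forward pass)
    loss = loss_fn(y_pred, y)  # measure how wrong the predictions are
    optimizer.zero_grad()      # clear gradients from the previous step
    loss.backward()            # compute gradients of the loss
    optimizer.step()           # 3. Update the weights (the representation)

final_loss = loss_fn(model(X), y).item()
print(f"loss before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```

The loss after training should be lower than the loss measured with the initial random weights, which is the sense in which the representation is "updated" at each step.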

![Tensor attributes Attribute Meaning Code Shape The length (number of elements) of each of the dimensions of a tensor. tensor.shape Rank/dimensions The total number of tensor dimensions. A scalar has rank 0, a vector has rank 1, a matrix is rank 2, a tensor has rank n. tensor.ndim or tensor.dim() Specific axis or dimension (e.g. “1st axis” or “0th dimension”) A particular dimension of a tensor. tensor[0], tensor[:, 1]…](https://image.slidesharecdn.com/00pytorchanddeeplearningfundamentals-231116134513-9c5ed4a1/75/00_pytorch_and_deep_learning_fundamentals-pdf-43-2048.jpg)
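
The attributes in the table above can be checked directly. The following is a small sketch with arbitrarily chosen example values. Note that in PyTorch `tensor.size()` returns the same information as `tensor.shape`, while `tensor.ndim` (or `tensor.dim()`) gives the rank.

```python
import torch

# Example values are arbitrary; only the nesting depth matters for the rank.
scalar = torch.tensor(7)                   # rank 0
vector = torch.tensor([7, 7])              # rank 1
matrix = torch.tensor([[7, 8], [9, 10]])   # rank 2
tensor = torch.tensor([[[1, 2, 3],
                        [3, 6, 9],
                        [2, 4, 5]]])       # rank 3

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("tensor", tensor)]:
    print(f"{name}: ndim={t.ndim}, shape={t.shape}")

# size() reports the shape, not the rank.
print(tensor.size() == tensor.shape)  # True

# Selecting along a specific dimension:
print(tensor[0].shape)  # index 0 along dim=0, leaving a [3, 3] matrix
print(tensor[:, 1])     # index 1 along dim=1, i.e. tensor([[3, 6, 9]])
```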