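Quick Start

OpenAI-HK currently provides relay access to OpenAI APIs, including GPT, DALL-E 3, gpt-4o, o1, o3, and gpt-image-1. You must pay before you can call them.

OpenAI-HK also offers Suno, Flux, Midjourney, Google Veo3, Riffusion, and other services covering AI music, AI video, AI image, and AI chat.

Official reference (a VPN is required to access it)

How to call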

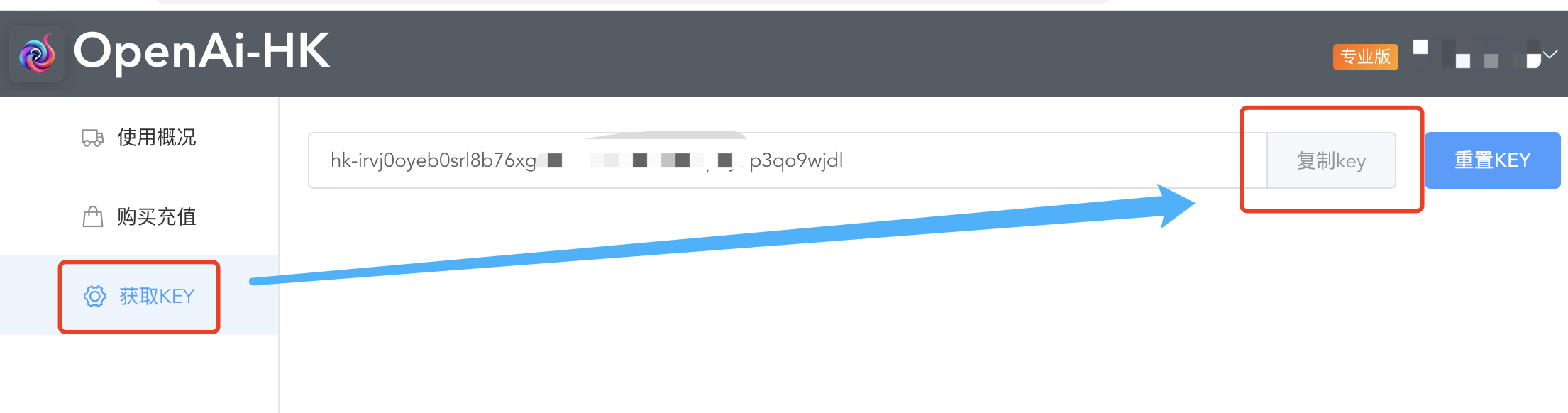

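- First, register an account at openai-hk.

- After logging in, click [Get key] to obtain your api-key.

- Copy the returned api_key, which starts with hk-, and use it in place of the api_key in your original request header.

For example:

Before: sk-sdiL2SMN4D7GBqFhBsYdT2345kFJhwEHGXbU5RzCM8dS3lrn

After: hk-wsvj0oyeb0srl8b76xgzolc678996mhwlk3p3y7p3qo9wjdl

OpenAI api_keys usually start with sk-, whereas our platform's api_keys start with hk- followed by a 48-character random string.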
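A minimal sketch of a sanity check for the key format described above, useful for catching an sk- key that was pasted by mistake. The function names are hypothetical, and the pattern assumes the 48 characters are ASCII letters or digits, which the platform does not state explicitly.

```python
import re

# Assumption: the 48 characters after "hk-" are ASCII letters or digits.
HK_KEY_PATTERN = re.compile(r"hk-[A-Za-z0-9]{48}")


def looks_like_hk_key(key: str) -> bool:
    """Return True if the key is 'hk-' followed by exactly 48 alphanumerics."""
    return HK_KEY_PATTERN.fullmatch(key) is not None


def auth_header(key: str) -> dict:
    """Build the Authorization header, rejecting keys with the wrong shape."""
    if not looks_like_hk_key(key):
        raise ValueError("expected a key of the form hk- plus 48 characters")
    return {"Authorization": f"Bearer {key}"}
```

This only checks the shape of the key locally; whether the key is valid and funded can only be confirmed by calling the service.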

Append -hk to the original request host api.openai.com, giving api.openai-hk.com.

For example:

Before: https://api.openai.com/v1/chat/completions

After: https://api.openai-hk.com/v1/chat/completions
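The host swap can be sketched as a small helper, assuming that only the host changes and that the path and query string stay identical. The helper name to_hk_url is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

OPENAI_HOST = "api.openai.com"
HK_HOST = "api.openai-hk.com"


def to_hk_url(url: str) -> str:
    """Rewrite an official OpenAI URL to the OpenAI-HK host.

    URLs that do not point at api.openai.com are returned unchanged.
    """
    parts = urlsplit(url)
    if parts.netloc != OPENAI_HOST:
        return url
    # Only the host is replaced; scheme, path and query are preserved.
    return urlunsplit(parts._replace(netloc=HK_HOST))
```

For instance, to_hk_url("https://api.openai.com/v1/chat/completions") yields the "After" address shown above.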

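🔥 Chat endpoint examples

- The examples use the chat endpoint (v1/chat/completions); the official API has many other endpoint types.

- For more chat parameters, see the official documentation: https://platform.openai.com/docs/api-reference/chat/create

- Several examples follow.

cURL example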

    curl --request POST \
      --url https://api.openai-hk.com/v1/chat/completions \
      --header 'Authorization: Bearer hk-replace-with-your-key' \
      --header 'Content-Type: application/json' \
      --data '{
        "max_tokens": 1200,
        "model": "gpt-3.5-turbo",
        "temperature": 0.8,
        "top_p": 1,
        "presence_penalty": 1,
        "messages": [
          {
            "role": "system",
            "content": "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible."
          },
          {
            "role": "user",
            "content": "Which version of ChatGPT are you?"
          }
        ]
      }'

To call GPT-4, change the model above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.

PHP example

    <?php
    $ch = curl_init();
    curl_setopt($ch, CURLOPT_URL, 'https://api.openai-hk.com/v1/chat/completions');
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
    curl_setopt($ch, CURLOPT_POST, 1);

    $headers = array();
    $headers[] = 'Content-Type: application/json';
    $headers[] = 'Authorization: Bearer hk-replace-with-your-key';
    curl_setopt($ch, CURLOPT_HTTPHEADER, $headers);

    $data = array(
        'max_tokens' => 1200,
        'model' => 'gpt-3.5-turbo',
        'temperature' => 0.8,
        'top_p' => 1,
        'presence_penalty' => 1,
        'messages' => array(
            array(
                'role' => 'system',
                'content' => 'You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.'
            ),
            array(
                'role' => 'user',
                'content' => 'Which version of ChatGPT are you?'
            )
        )
    );
    $data_string = json_encode($data);
    curl_setopt($ch, CURLOPT_POSTFIELDS, $data_string);

    // Send the request and report any transport-level error.
    $result = curl_exec($ch);
    if (curl_errno($ch)) {
        echo 'Error:' . curl_error($ch);
    }
    curl_close($ch);
    echo $result;

To call GPT-4, change the model above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.

Python example

    import json

    import requests

    url = "https://api.openai-hk.com/v1/chat/completions"

    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer hk-replace-with-your-key",
    }

    data = {
        "max_tokens": 1200,
        "model": "gpt-3.5-turbo",
        "temperature": 0.8,
        "top_p": 1,
        "presence_penalty": 1,
        "messages": [
            {
                "role": "system",
                "content": "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.",
            },
            {
                "role": "user",
                "content": "Which version of ChatGPT are you?",
            },
        ],
    }

    # Encode the body as UTF-8 so non-ASCII prompts are sent correctly.
    response = requests.post(url, headers=headers, data=json.dumps(data).encode("utf-8"))
    result = response.content.decode("utf-8")
    print(result)

To call GPT-4, change the model above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.
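The examples in this section print the raw JSON response. A minimal sketch of pulling out just the reply text follows, assuming the relay returns the same response shape as the official chat completions endpoint (choices[0].message.content) and reports failures under a top-level error field. The function name extract_reply is hypothetical.

```python
import json


def extract_reply(raw: str) -> str:
    """Return the assistant's reply text from a chat completions JSON body.

    Assumes the official OpenAI response shape; raises RuntimeError if the
    body carries a top-level "error" field instead.
    """
    body = json.loads(raw)
    if "error" in body:
        raise RuntimeError(f"API returned an error: {body['error']}")
    return body["choices"][0]["message"]["content"]
```

With the Python example above, this would be called as extract_reply(result).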

JavaScript example

    const axios = require("axios");

    const url = "https://api.openai-hk.com/v1/chat/completions";

    const headers = {
      "Content-Type": "application/json",
      Authorization: "Bearer hk-replace-with-your-key",
    };

    const data = {
      max_tokens: 1200,
      model: "gpt-3.5-turbo",
      temperature: 0.8,
      top_p: 1,
      presence_penalty: 1,
      messages: [
        {
          role: "system",
          content:
            "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.",
        },
        {
          role: "user",
          content: "Which version of ChatGPT are you?",
        },
      ],
    };

    axios
      .post(url, data, { headers })
      .then((response) => {
        const result = response.data;
        console.log(result);
      })
      .catch((error) => {
        console.error(error);
      });

To call GPT-4, change the model above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.

TypeScript example

    import axios from "axios";

    const url = "https://api.openai-hk.com/v1/chat/completions";

    const headers = {
      "Content-Type": "application/json",
      Authorization: "Bearer hk-replace-with-your-key",
    };

    const data = {
      max_tokens: 1200,
      model: "gpt-3.5-turbo",
      temperature: 0.8,
      top_p: 1,
      presence_penalty: 1,
      messages: [
        {
          role: "system",
          content:
            "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.",
        },
        {
          role: "user",
          content: "Which version of ChatGPT are you?",
        },
      ],
    };

    axios
      .post(url, data, { headers })
      .then((response) => {
        const result = response.data;
        console.log(result);
      })
      .catch((error) => {
        console.error(error);
      });

To call GPT-4, change the model above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.

Java example

    import okhttp3.*;

    import java.io.IOException;

    public class OpenAIChat {
        public static void main(String[] args) throws IOException {
            String url = "https://api.openai-hk.com/v1/chat/completions";

            OkHttpClient client = new OkHttpClient();
            MediaType mediaType = MediaType.parse("application/json");

            // Request body as a JSON string.
            String json = "{\n" +
                    "  \"max_tokens\": 1200,\n" +
                    "  \"model\": \"gpt-3.5-turbo\",\n" +
                    "  \"temperature\": 0.8,\n" +
                    "  \"top_p\": 1,\n" +
                    "  \"presence_penalty\": 1,\n" +
                    "  \"messages\": [\n" +
                    "    {\n" +
                    "      \"role\": \"system\",\n" +
                    "      \"content\": \"You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.\"\n" +
                    "    },\n" +
                    "    {\n" +
                    "      \"role\": \"user\",\n" +
                    "      \"content\": \"Which version of ChatGPT are you?\"\n" +
                    "    }\n" +
                    "  ]\n" +
                    "}";

            RequestBody body = RequestBody.create(mediaType, json);
            Request request = new Request.Builder()
                    .url(url)
                    .post(body)
                    .addHeader("Content-Type", "application/json")
                    .addHeader("Authorization", "Bearer hk-replace-with-your-key")
                    .build();

            Call call = client.newCall(request);
            Response response = call.execute();
            String result = response.body().string();
            System.out.println(result);
        }
    }

To call GPT-4, change the model above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.

Go example

    package main

    import (
        "bytes"
        "encoding/json"
        "fmt"
        "net/http"
    )

    func main() {
        url := "https://api.openai-hk.com/v1/chat/completions"
        apiKey := "hk-replace-with-your-key"

        payload := map[string]interface{}{
            "max_tokens":       1200,
            "model":            "gpt-3.5-turbo",
            "temperature":      0.8,
            "top_p":            1,
            "presence_penalty": 1,
            "messages": []map[string]string{
                {
                    "role":    "system",
                    "content": "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.",
                },
                {
                    "role":    "user",
                    "content": "Which version of ChatGPT are you?",
                },
            },
        }

        jsonPayload, err := json.Marshal(payload)
        if err != nil {
            fmt.Println("Error encoding JSON payload:", err)
            return
        }

        req, err := http.NewRequest("POST", url, bytes.NewBuffer(jsonPayload))
        if err != nil {
            fmt.Println("Error creating HTTP request:", err)
            return
        }
        req.Header.Set("Authorization", "Bearer "+apiKey)
        req.Header.Set("Content-Type", "application/json")

        client := &http.Client{}
        resp, err := client.Do(req)
        if err != nil {
            fmt.Println("Error making API request:", err)
            return
        }
        defer resp.Body.Close()

        // Handle the response.
        // Parse and process the response data according to your needs.
        fmt.Println("Response HTTP Status:", resp.StatusCode)
    }

To call GPT-4, change the model above from gpt-3.5-turbo to gpt-4 or gpt-4-0613.

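🚀 SSE typewriter effect in JS

Note

This can run on a Node.js backend and also works in the browser. If you call it from the front end, take care to protect your key.

How do you protect the key? Pair it with nginx: move the 'Authorization': 'Bearer hk-your-key' part of the header into the nginx configuration.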
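For readers working in Python, here is a minimal sketch of the parsing step that the typewriter effect in this section relies on. It assumes that with stream set to true the response body is a sequence of server-sent events: data: lines carrying JSON chunks whose text sits at choices[0].delta.content, separated by blank lines and terminated by data: [DONE]. The function name parse_sse_chunk is hypothetical.

```python
import json
from typing import Tuple


def parse_sse_chunk(chunk: str) -> Tuple[str, bool]:
    """Extract the accumulated text from a block of SSE events.

    Returns (text, finished), where finished is True once the
    "data: [DONE]" sentinel has been seen.
    """
    text_parts = []
    finished = False
    for event in chunk.strip().split("\n\n"):
        for line in event.splitlines():
            if not line.startswith("data:"):
                continue  # skip comments or other SSE fields
            payload = line[len("data:"):].strip()
            if payload == "[DONE]":
                finished = True
                continue
            delta = json.loads(payload)["choices"][0].get("delta", {})
            # The first chunk usually carries only the role, with no content.
            text_parts.append(delta.get("content") or "")
    return "".join(text_parts), finished
```

The function expects complete events; a caller reading from a socket would need to buffer until a blank line arrives before passing data in.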

    // Remember to import `axios` first.
    const chatGPT = (msg, opt) => {
      // Parse one SSE event of the form "data: {...}" or "data: [DONE]".
      const dataPar = (data) => {
        const idx = data.indexOf("data:");
        if (idx === -1) return { text: "" };
        const str = data.slice(idx + 5).trim();
        if (str === "[DONE]") return { finish: true, text: "" };
        const rz = JSON.parse(str);
        rz.text = rz.choices[0].delta.content ?? "";
        return rz;
      };
      // Parse everything received so far and report the accumulated text.
      const dd = (data) => {
        const arr = data.trim().split("\n\n");
        const rz = { text: "", arr: [] };
        const atext = arr.map((v) => dataPar(v).text);
        rz.arr = atext;
        rz.text = atext.join("");
        if (opt.onMessage) opt.onMessage(rz);
        return rz;
      };
      return new Promise((resolve, reject) => {
        axios({
          method: "post",
          url: "https://api.openai-hk.com/v1/chat/completions",
          data: {
            max_tokens: 1200,
            model: "gpt-3.5-turbo", // To call GPT-4, change this to gpt-4 or gpt-4-0613
            temperature: 0.8,
            top_p: 1,
            presence_penalty: 1,
            messages: [
              {
                role: "system",
                content: opt.system ?? "You are ChatGPT",
              },
              {
                role: "user",
                content: msg,
              },
            ],
            stream: true, // Return the output as a data stream
          },
          headers: {
            "Content-Type": "application/json",
            Authorization: "Bearer hk-your-key",
          },
          onDownloadProgress: (e) => dd(e.target.responseText),
        })
          .then((d) => resolve(dd(d.data)))
          .catch((e) => reject(e));
      });
    };

    // Usage
    chatGPT("Who are you?", {
      //system: '', // Role definition
      onMessage: (d) => console.log("Partial result:", d.text),
    })
      .then((d) => console.log("✅ Final result:", d))
      .catch((e) => console.log("❎ Error:", e));

😁 embeddings endpoint

Request URL: POST https://api.openai-hk.com/v1/embeddings
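A minimal Python sketch of the same request using only the standard library. It assumes the relay mirrors the official embeddings response, where the vector is found at data[0].embedding. The function names are hypothetical.

```python
import json
import urllib.request

EMBEDDINGS_URL = "https://api.openai-hk.com/v1/embeddings"


def build_embeddings_request(api_key, text, model="text-embedding-ada-002"):
    """Build (but do not send) the POST request for the embeddings endpoint."""
    body = json.dumps({"input": text, "model": model}).encode("utf-8")
    return urllib.request.Request(
        EMBEDDINGS_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch_embedding(api_key, text):
    """Send the request and return the embedding vector as a list of floats.

    Assumes the official response shape: {"data": [{"embedding": [...]}]}.
    """
    request = build_embeddings_request(api_key, text)
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["data"][0]["embedding"]
```

Separating request construction from sending keeps the network call in one place, so the request can be inspected without spending quota.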

Node.js request example

    const fetch = require("node-fetch");

    fetch("https://api.openai-hk.com/v1/embeddings", {
      method: "POST",
      headers: {
        Authorization: "Bearer hk-replace-with-your-key",
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        input: "Let's use ChatGPT together",
        model: "text-embedding-ada-002",
      }),
    })
      .then((res) => res.json())
      .then((json) => console.log(json))
      .catch((err) => console.error(err));

😁 moderations endpoint

Request URL: POST https://api.openai-hk.com/v1/moderations
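A short sketch of how a moderation response might be interpreted, assuming the relay returns the official shape: a results list whose first entry has a flagged boolean and a categories map of booleans. The function names are hypothetical.

```python
import json


def moderation_body(text: str) -> bytes:
    """Encode the JSON body expected by the moderations endpoint."""
    return json.dumps({"input": text}).encode("utf-8")


def flagged_categories(response: dict) -> list:
    """Return the sorted names of categories that were flagged.

    Returns an empty list when the input was not flagged at all.
    """
    result = response["results"][0]
    if not result["flagged"]:
        return []
    return sorted(name for name, hit in result["categories"].items() if hit)
```

An empty list means the text passed moderation; any other result names the categories that triggered.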

Node.js request example

    const fetch = require("node-fetch");

    fetch("https://api.openai-hk.com/v1/moderations", {
      method: "POST",
      headers: {
        Authorization: "Bearer hk-replace-with-your-key",
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ input: "Someone is attacking me with a knife" }),
    })
      .then((res) => res.json())
      .then((json) => console.log(json))
      .catch((err) => console.error(err));

😁 Applications

Many applications are available; pick one you like.

chatgpt-web

Start it with Docker. The default model is gpt-3.5.

    docker run --name chatgpt-web -d -p 6011:3002 \
      --env OPENAI_API_KEY=hk-replace-with-your-key \
      --env TIMEOUT_MS=600000 --env OPENAI_MAX_TOKEN=1000 \
      --env OPENAI_API_BASE_URL=https://api.openai-hk.com chenzhaoyu94/chatgpt-web

chatgpt-web with gpt-4

The default model is gpt-3.5. To start an instance whose default model is gpt-4, set the environment variable OPENAI_API_MODEL.

    docker run --name chatgpt-web -d -p 6040:3002 \
      --env OPENAI_API_KEY=hk-replace-with-your-key \
      --env TIMEOUT_MS=600000 --env OPENAI_MAX_TOKEN=1000 \
      --env OPENAI_API_MODEL=gpt-4-0613 \
      --env OPENAI_API_BASE_URL=https://api.openai-hk.com chenzhaoyu94/chatgpt-web

Available models

| Model | Description |
|---|---|
| gpt-3.5-turbo-0613 | GPT-3.5 with a context window of up to 4k |
| gpt-3.5-turbo-16k-0613 | GPT-3.5 16k; supports longer context and costs more than standard 3.5 |
| gpt-3.5-turbo-1106 | GPT-3.5 16k; priced the same as 3.5 4k |
| gpt-4 | GPT-4 with a context window of up to 8k |
| gpt-4-0613 | The 0613 version of GPT-4 |
| gpt-4-1106-preview | GPT-4 128k; half the price of standard gpt-4 |
| gpt-4-vision-preview | Token price matches gpt-4-1106-preview; supports 4k; images incur an extra fee |
| dall-e-3 | OpenAI's image generation |
| Midjourney | A non-OpenAI image generation product |

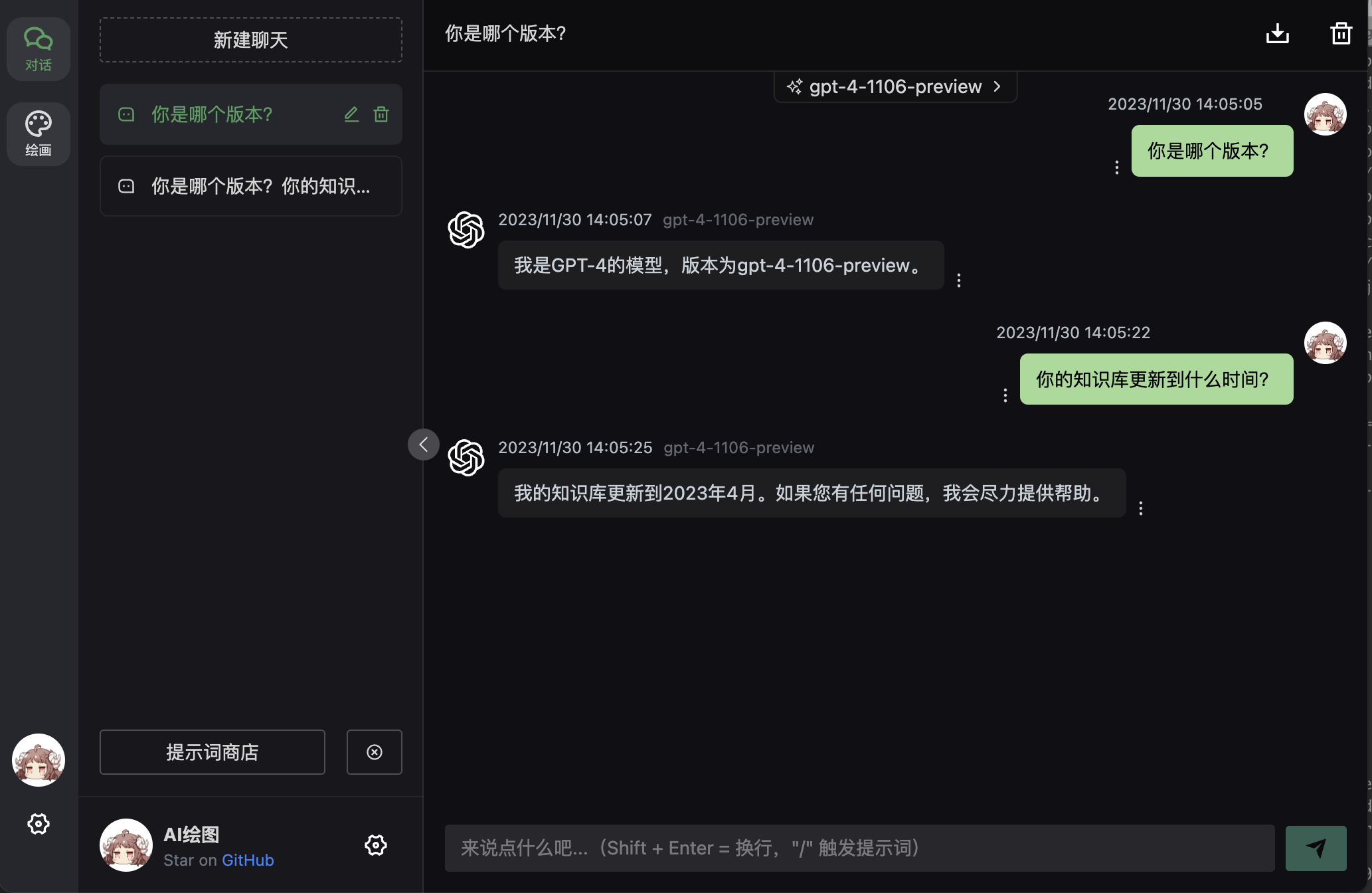

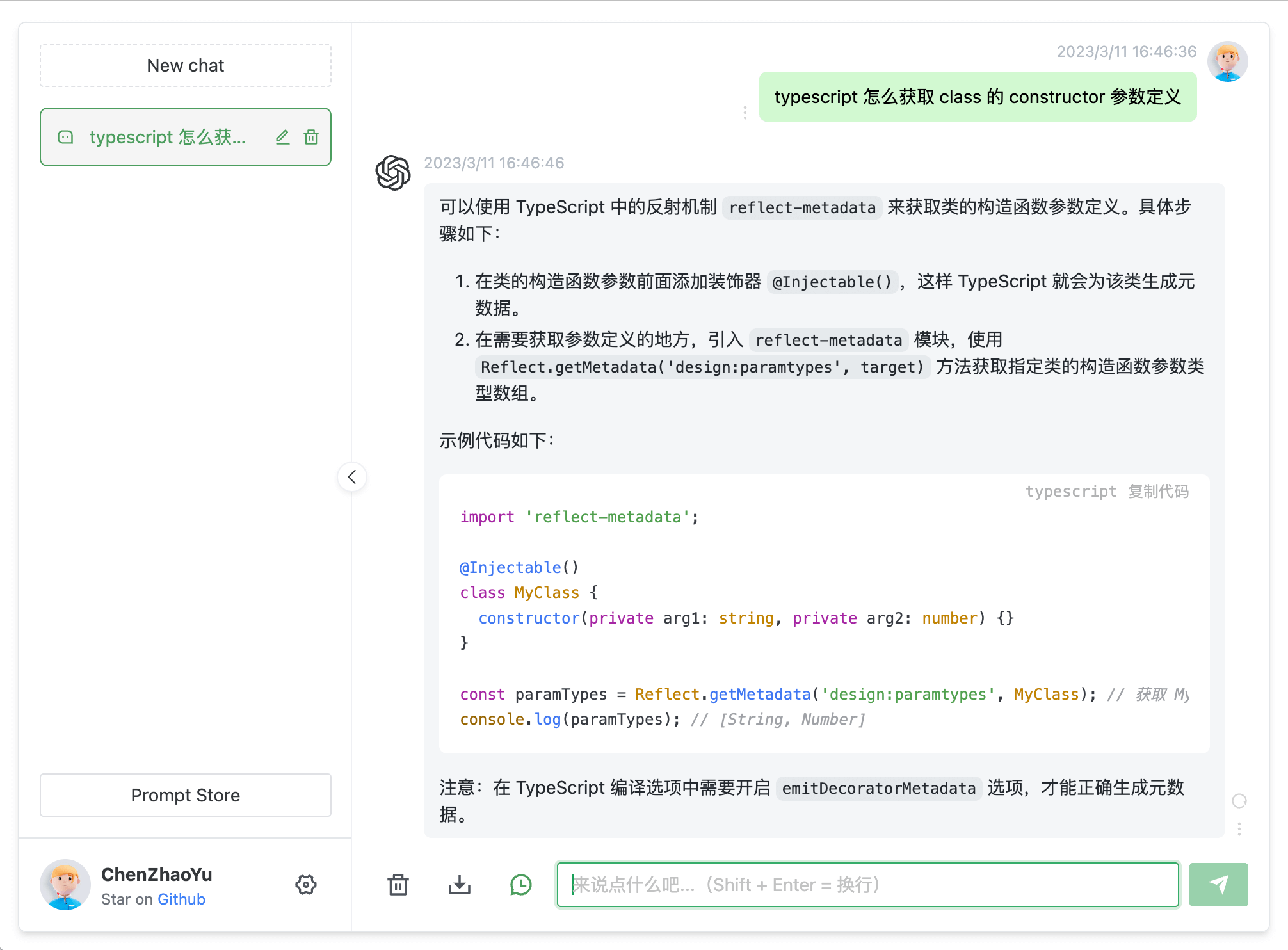

Result

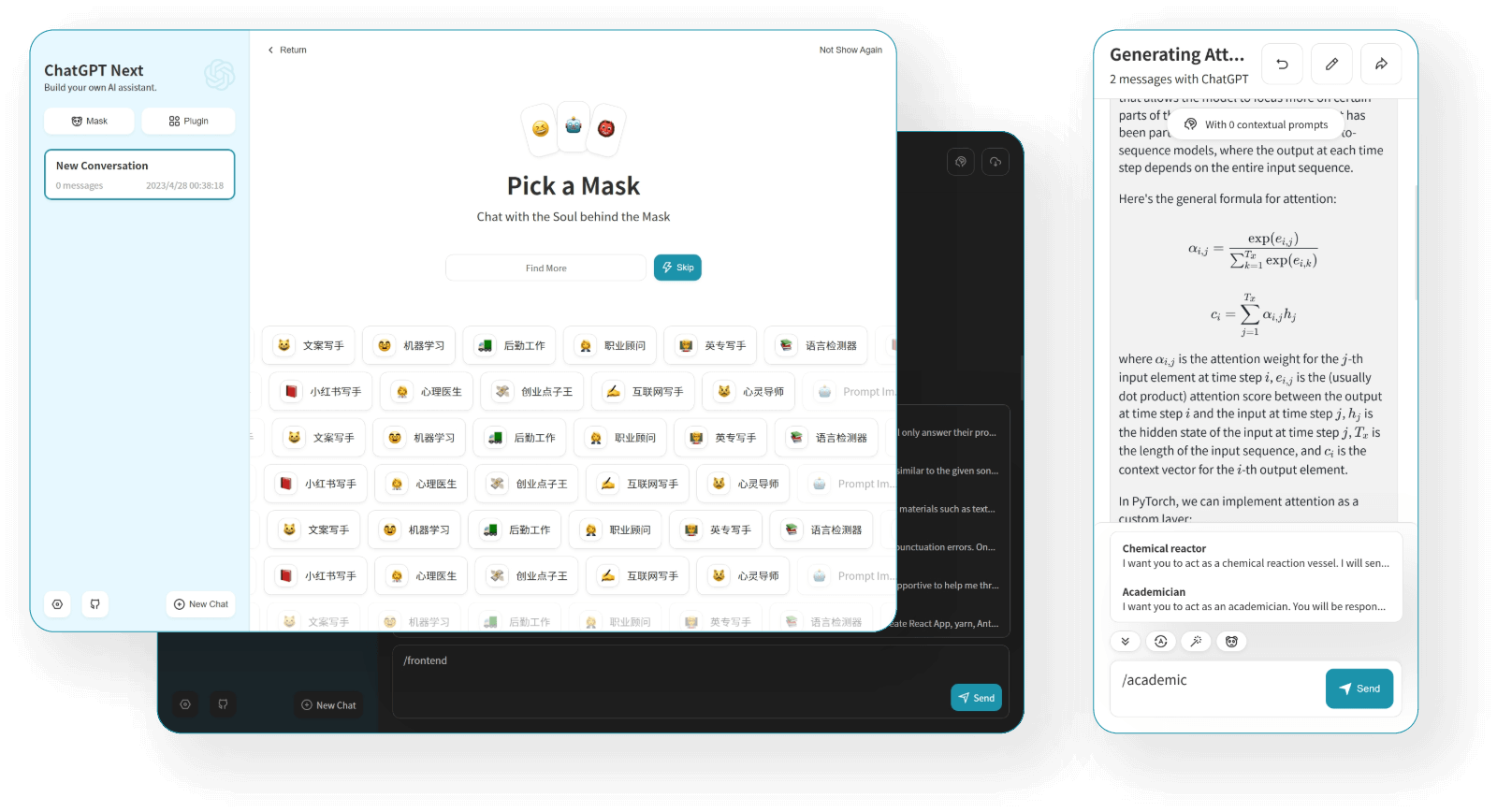

chatgpt-next-web

    docker run --name chatgpt-next-web -d -p 6013:3000 \
      -e OPENAI_API_KEY="hk-replace-with-your-key" \
      -e BASE_URL=https://api.openai-hk.com yidadaa/chatgpt-next-web

Result

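Other

If you use another application, please contact our customer support for help.

🚀 Use directly

1. chatgpt web with free switching between models

Note 1. Visit https://chat.ddaiai.com/ (if it is blocked, replace the subdomain chat with hi)

2. If you find it is blocked, you can change the address yourself: in https://hello.ddaiai.com, replace hello with something else, such as https://202312.ddaiai.com. All of these work.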

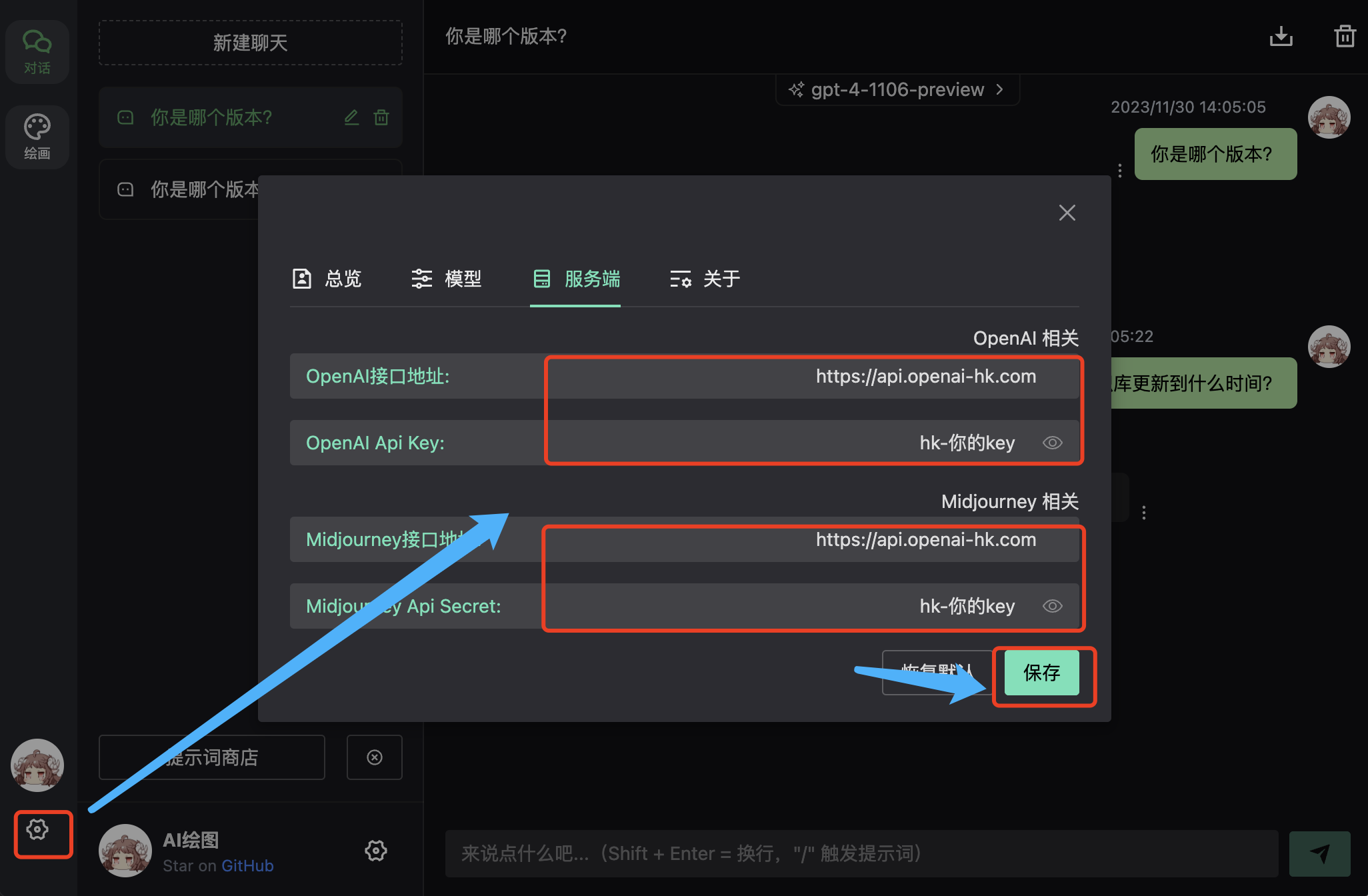

Settings

Then fill in the following in the places shown in the figure below:

- OpenAi API address: https://api.openai-hk.com
- OpenAi API KEY: hk-your-apiKey

To generate images, also fill in the Midjourney details:

- midjourney API address: https://api.openai-hk.com
- midjourney Api Secret: hk-your-apiKey

Result

Input box: ask questions and chat

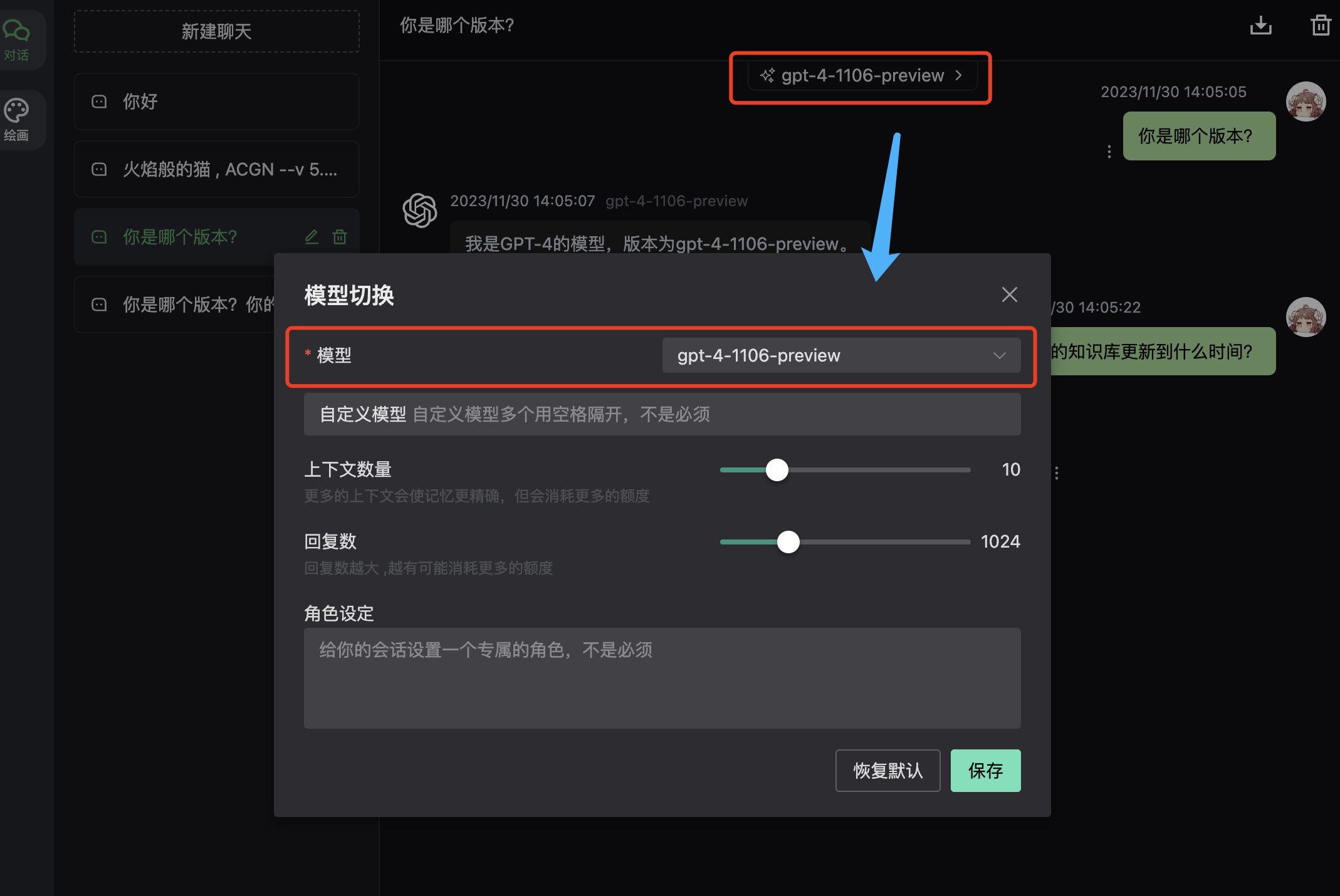

Model switching: custom models are supported; separate multiple models with commas

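2. next-web with free switching between models

Note 1. Visit https://web.ccaiai.com/ (a VPN is required)

2. If you find it is blocked, you can change the address yourself: in https://suibian.ccaiai.com, replace suibian with something else, such as https://abc.ccaiai.com. All of these work.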

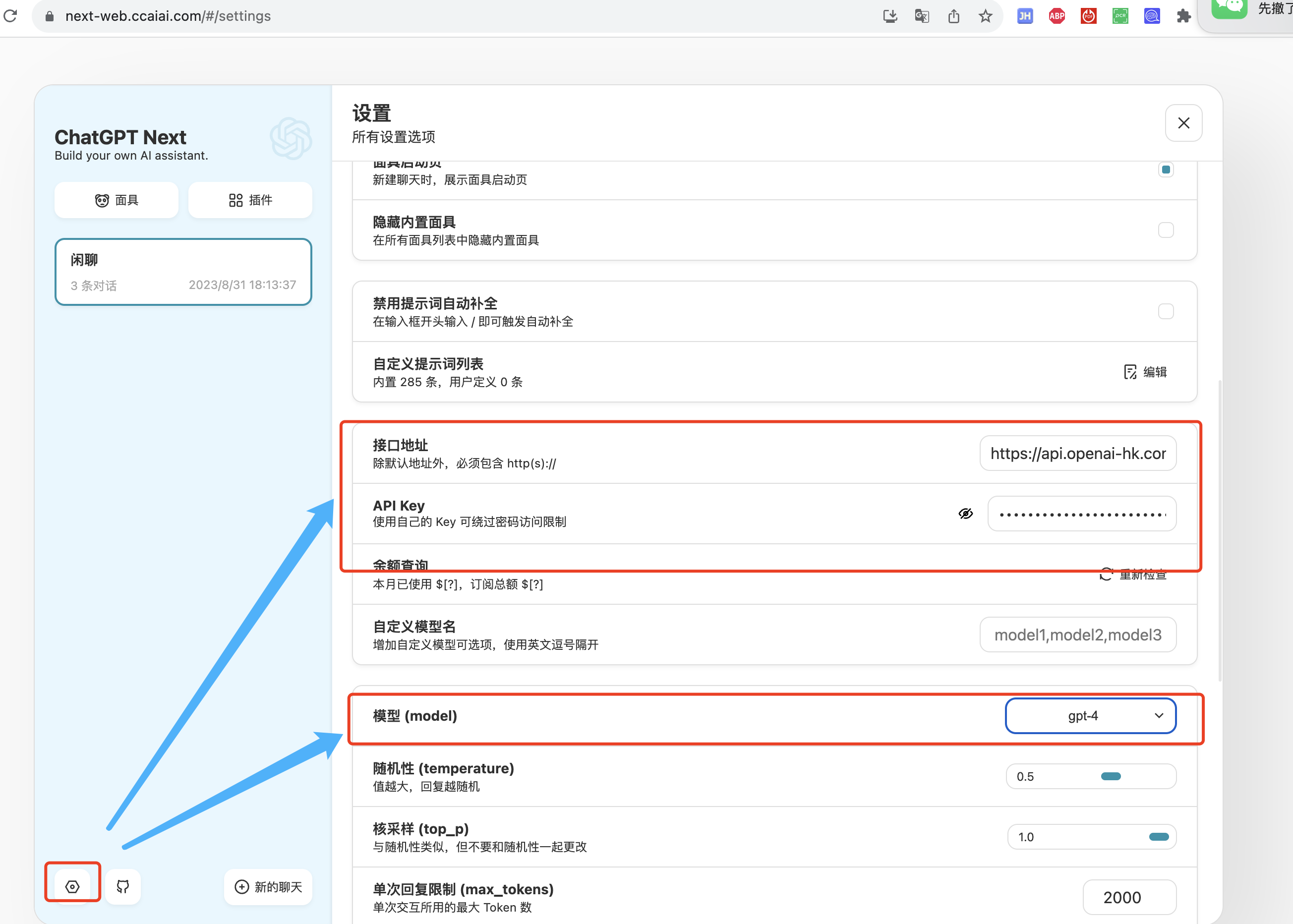

Then fill in the following in the places shown in the figure below:

- API address: https://api.openai-hk.com
- API KEY: hk-your-apiKey
- Model: select GPT-4

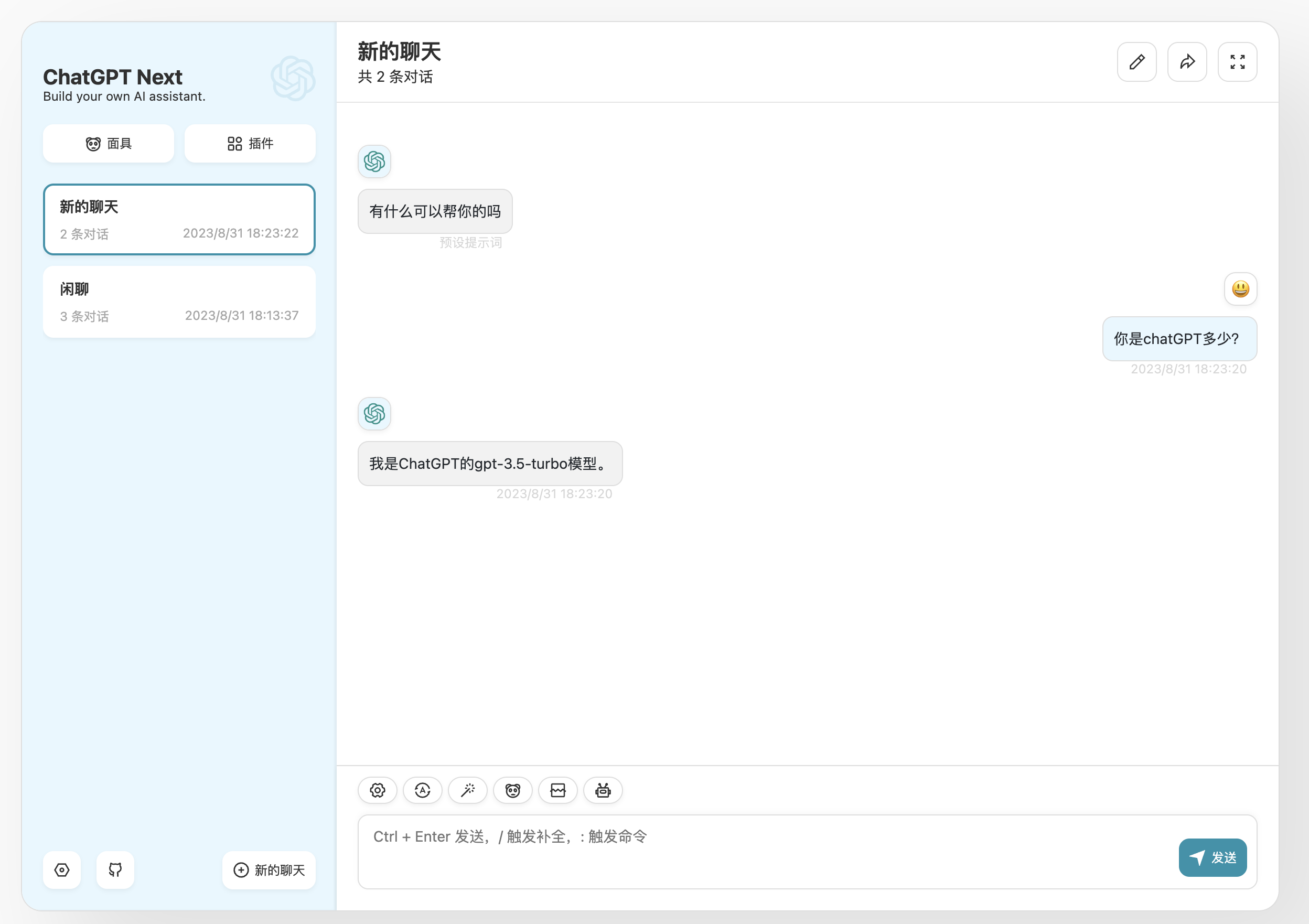

Input box: ask questions and chat

OpenAi-HK
