Ingest logs from a Python application using Filebeat


In this guide, we show you how to ingest logs from a Python application and deliver them securely into an Elastic Cloud Hosted deployment. You’ll set up Filebeat to monitor a JSON-structured log file with fields formatted according to the Elastic Common Schema (ECS). You’ll then view real-time visualizations of the log events in Kibana as they occur.

While we use Python for this example, you can apply the same approach to monitoring log output across many client types. Check the list of available ECS logging plugins. We also use Elastic Cloud Hosted as the target Elastic Stack destination for our logs, but with small modifications, you can adapt the steps in this guide to other deployments such as self-managed Elastic Stack and Elastic Cloud Enterprise.

In this guide, you will:

- Create a Python script with logging

- Prepare your connection and authentication details

- Set up Filebeat

- Send Python logs to Elasticsearch

- Create log visualizations in Kibana

Time required: 1 hour

To complete the steps in this guide, you need to have:

- An Elastic Cloud Hosted deployment with the Elastic for Observability solution view and the superuser credentials provided at deployment creation. For more details, see Create an Elastic Cloud Hosted deployment.

- A version of Python that is compatible with the ECS logging library for Python. For a list of compatible Python versions, check the library's README.

- The ECS logging library for Python installed.

To install the ECS logging library for Python, run:

```sh
python -m pip install ecs-logging
```

Depending on the Python version you're using, you may need to install the library in a Python virtual environment.

In this step, you’ll create a Python script that generates logs in JSON format using Python’s standard logging module.

In a local directory, create a new file named elvis.py, and save it with these contents:

```python
#!/usr/bin/python

import logging
import time
from random import randint

import ecs_logging

# Write ECS-formatted JSON log records to elvis.json in the current directory
logger = logging.getLogger("app")
logger.setLevel(logging.DEBUG)
handler = logging.FileHandler("elvis.json")
handler.setFormatter(ecs_logging.StdlibFormatter())
logger.addHandler(handler)

print("Generating log entries...")

messages = [
    "Elvis has left the building.",
    "Elvis has left the oven on.",
    "Elvis has two left feet.",
    "Elvis was left out in the cold.",
    "Elvis was left holding the baby.",
    "Elvis left the cake out in the rain.",
    "Elvis came out of left field.",
    "Elvis exited stage left.",
    "Elvis took a left turn.",
    "Elvis left no stone unturned.",
    "Elvis picked up where he left off.",
    "Elvis's train has left the station.",
]

while True:
    random1 = randint(0, 15)  # message index; values 12-15 fall back to 0
    random2 = randint(1, 10)  # seconds to wait before the next entry
    if random1 > 11:
        random1 = 0

    # Extra field added to every record alongside the standard ECS fields
    extra = {"http.request.body.content": messages[random1]}
    if random1 <= 4:
        logger.info(messages[random1], extra=extra)
    elif 5 <= random1 <= 8:
        logger.warning(messages[random1], extra=extra)
    elif 9 <= random1 <= 10:
        logger.error(messages[random1], extra=extra)
    else:
        logger.critical(messages[random1], extra=extra)

    time.sleep(random2)
```

This Python script continuously generates one of twelve log messages at a random interval of between 1 and 10 seconds. The log messages are written to an elvis.json file, each with a timestamp, a log level of info, warning, error, or critical, and other data. To add some variance to the log data, the info message Elvis has left the building is set to be the most probable log event.

For simplicity, there is just one log file (elvis.json), and it is written to the local directory where elvis.py is located. In a production environment, you may have multiple log files associated with different modules and loggers, likely stored in /var/log or similar. To learn more about configuring logging in Python, check Logging facility for Python.

Having your logs written in a JSON format with ECS fields allows for easy parsing and analysis, and for standardization with other applications. A standard, easily parsable format becomes increasingly important as the volume and type of data captured in your logs expand over time.
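To see why Elvis has left the building is the most likely entry, you can tally the script's index-to-level mapping exhaustively. The following sketch reuses the same thresholds as elvis.py (randint(0, 15), with values above 11 folded back to 0) and computes the exact probability of each message index and log level. The helper names level_for and distributions are illustrative and not part of the guide.

```python
from collections import Counter
from fractions import Fraction


def level_for(index: int) -> str:
    """Mirror the index-to-level thresholds used in elvis.py."""
    if index <= 4:
        return "info"
    elif 5 <= index <= 8:
        return "warning"
    elif 9 <= index <= 10:
        return "error"
    return "critical"


def distributions() -> tuple[dict[int, Fraction], dict[str, Fraction]]:
    """Exact probabilities for each message index and each log level."""
    outcomes = range(0, 16)  # randint(0, 15) is inclusive on both ends
    index_counts = Counter(0 if r > 11 else r for r in outcomes)
    total = len(outcomes)
    by_index = {i: Fraction(n, total) for i, n in sorted(index_counts.items())}
    by_level: dict[str, Fraction] = {}
    for i, p in by_index.items():
        level = level_for(i)
        by_level[level] = by_level.get(level, Fraction(0)) + p
    return by_index, by_level


if __name__ == "__main__":
    by_index, by_level = distributions()
    print(by_index[0], by_index[1])  # 5/16 1/16
    for level, p in by_level.items():
        print(level, p)
```

Index 0 alone accounts for 5/16 (about 31%) of entries, and info-level entries as a whole account for 9/16 (about 56%), so expect the info slice to dominate the pie chart you build later in this guide.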

Together with the standard fields included for each log entry is an extra http.request.body.content field. This extra field is there to give you some additional, interesting data to work with, and also to demonstrate how you can add optional fields to your log data. Check the ECS field reference for the full list of available fields.

Let's give the Python script a test run. Open a terminal instance in the location where you saved elvis.py, and run the following:

```sh
python elvis.py
```

After the script has run for about 15 seconds, enter CTRL + C to stop it. Have a look at the newly generated elvis.json file. It should contain one or more entries like this one:

```json
{"@timestamp":"2025-06-16T02:19:34.687Z","log.level":"info","message":"Elvis has left the building.","ecs":{"version":"1.6.0"},"http":{"request":{"body":{"content":"Elvis has left the building."}}},"log":{"logger":"app","origin":{"file":{"line":39,"name":"elvis.py"},"function":"<module>"},"original":"Elvis has left the building."},"process":{"name":"MainProcess","pid":3044,"thread":{"id":4444857792,"name":"MainThread"}}}
```

After confirming that elvis.py runs as expected, you can delete elvis.json.
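Because Filebeat reads elvis.json one line at a time, it can be useful to check that every line is a standalone JSON object carrying the fields you expect before you delete the file. This is a minimal sketch using only the standard library. The check_ecs_lines name and the choice of required keys (@timestamp, log.level, message) are assumptions based on the sample entry above, not a full ECS validation.

```python
import json
from pathlib import Path
from typing import Iterable

# Keys taken from the sample entry in this guide (an assumption, not a full ECS check).
REQUIRED_KEYS = ("@timestamp", "log.level", "message")


def check_ecs_lines(lines: Iterable[str]) -> list[str]:
    """Return a list of problems found; an empty list means every line looks OK."""
    problems = []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {lineno}: not valid JSON ({exc.msg})")
            continue
        if not isinstance(record, dict):
            problems.append(f"line {lineno}: not a JSON object")
            continue
        missing = [key for key in REQUIRED_KEYS if key not in record]
        if missing:
            problems.append(f"line {lineno}: missing {', '.join(missing)}")
    return problems


if __name__ == "__main__":
    log_path = Path("elvis.json")
    if log_path.exists():
        with log_path.open(encoding="utf-8") as log_file:
            issues = check_ecs_lines(log_file)
        print("\n".join(issues) if issues else "All lines look like single ECS JSON objects.")
    else:
        print("elvis.json not found; run elvis.py first.")
```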

To connect to your Elastic Cloud Hosted deployment, stream data, and issue queries, you have to specify the connection details using your deployment's Cloud ID, and you have to authenticate using either basic authentication or an API key.

To find the Cloud ID of your deployment, go to the Kibana main menu, then select Management → Integrations → Connection details. Note that the Cloud ID value is in the format deployment-name:hash. Save this value to use it later.
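The hash part of the Cloud ID is not opaque: it is commonly described as a base64-encoded string of the form host[:port]$elasticsearch-id[$kibana-id]. The sketch below decodes it into endpoint URLs under that assumption, defaulting to HTTPS on port 443 when no port is present. The decode_cloud_id and CloudEndpoints names are hypothetical, and the format details are an assumption rather than something this guide specifies.

```python
import base64
from typing import NamedTuple, Optional


class CloudEndpoints(NamedTuple):
    deployment_name: str
    elasticsearch_url: str
    kibana_url: Optional[str]


def decode_cloud_id(cloud_id: str) -> CloudEndpoints:
    """Split a Cloud ID of the form deployment-name:hash into endpoint URLs.

    Assumes the hash is base64 of "host[:port]$es_id[$kibana_id]".
    """
    name, sep, encoded = cloud_id.partition(":")
    if not sep or not encoded:
        raise ValueError("Cloud ID must look like deployment-name:hash")
    decoded = base64.b64decode(encoded).decode("utf-8")
    parts = decoded.split("$")
    if len(parts) < 2 or not parts[1]:
        raise ValueError("decoded Cloud ID has no Elasticsearch ID")
    host, _, port = parts[0].partition(":")
    port = port or "443"  # assumption: default to HTTPS on 443 when no port is encoded
    es_url = f"https://{parts[1]}.{host}:{port}"
    kibana_url = f"https://{parts[2]}.{host}:{port}" if len(parts) > 2 and parts[2] else None
    return CloudEndpoints(name, es_url, kibana_url)
```

This is the same information that the cloud.id setting gives Filebeat, which is why cloud.id can stand in for output.elasticsearch.hosts.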

To authenticate and send data to Elastic Cloud Hosted, you can use the username and password you saved when you created your deployment. We use this method to set up the Filebeat connection in the Configure Filebeat to access Elastic Cloud Hosted section.

You can also generate an API key through the Elastic Cloud Hosted console, and configure Filebeat to use the new key to connect securely to your deployment. API keys are the preferred method for connecting to production environments.

To create an API key for Filebeat:

Log in to the Elastic Cloud Console, and select your deployment.

In the main menu, go to Developer tools.

Enter the following request:

```json
POST /_security/api_key
{
  "name": "filebeat-api-key",
  "role_descriptors": {
    "logstash_read_write": {
      "cluster": ["manage_index_templates", "monitor"],
      "index": [
        {
          "names": ["filebeat-*"],
          "privileges": ["create_index", "write", "read", "manage"]
        }
      ]
    }
  }
}
```

This request creates an API key with the cluster monitor privilege, which gives read-only access for determining the cluster state, and the manage_index_templates privilege, which allows all operations on index templates. Additional privileges allow create_index, write, read, and manage operations for the specified index (filebeat-*). The index manage privilege is added to enable index refreshes.

Click ▶ to run the request. The output should be similar to the following:

```json
{
  "api_key": "tV1dnfF-GHI59ykgv4N0U3",
  "id": "2TBR42gBabmINotmvZjv",
  "name": "filebeat-api-key"
}
```
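If you prefer to script this step instead of using Developer tools, you can send the same request to the Elasticsearch REST API directly. The sketch below only builds the request with Python's standard library and does not send it. The Elasticsearch endpoint URL of your deployment and the basic-auth credentials are placeholders you must supply, and build_create_api_key_request is a hypothetical helper name.

```python
import base64
import json
import urllib.request

# Same body as the Developer tools request above.
API_KEY_BODY = {
    "name": "filebeat-api-key",
    "role_descriptors": {
        "logstash_read_write": {
            "cluster": ["manage_index_templates", "monitor"],
            "index": [
                {
                    "names": ["filebeat-*"],
                    "privileges": ["create_index", "write", "read", "manage"],
                }
            ],
        }
    },
}


def build_create_api_key_request(es_url: str, username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) POST /_security/api_key using basic authentication."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=es_url.rstrip("/") + "/_security/api_key",
        data=json.dumps(API_KEY_BODY).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


# Usage (sends the request; requires network access to your deployment):
# req = build_create_api_key_request("https://<your-elasticsearch-endpoint>", "elastic", "<password>")
# with urllib.request.urlopen(req) as resp:
#     created = json.load(resp)  # includes "id", "name", and "api_key"
```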

Learn how to set up the API key in the Filebeat configuration in the Configure an API key section.

Filebeat offers a straightforward, easy-to-configure way to monitor your Python log files and ship the log data into your deployment.

Download Filebeat, then unpack it on the machine where you created the elvis.py script.

Go to the directory where you unpacked Filebeat, and open the filebeat.yml configuration file. In the Elastic Cloud section, make the following modifications to set up basic authentication:

```yaml
# =============================== Elastic Cloud ================================

# These settings simplify using Filebeat with the Elastic Cloud (https://cloud.elastic.co/).

# The cloud.id setting overwrites the `output.elasticsearch.hosts` and
# `setup.kibana.host` options.
# You can find the `cloud.id` in the Elastic Cloud web UI.
cloud.id: deployment-name:hash

# The cloud.auth setting overwrites the `output.elasticsearch.username` and
# `output.elasticsearch.password` settings. The format is `<user>:<pass>`.
cloud.auth: username:password
```

- Uncomment the cloud.id line, and add the deployment's Cloud ID as the key's value. Note that the cloud.id value is in the format deployment-name:hash. Find your Cloud ID by going to the Kibana main menu and selecting Management → Integrations → Connection details.
- Uncomment the cloud.auth line, and add the username and password for your deployment in the format username:password. For example, cloud.auth: elastic:57ugj782kvkwmSKg8uVe.

As an alternative to configuring the connection using cloud.id and cloud.auth, you can specify the Elasticsearch URL and authentication details directly in the Elasticsearch output. This is useful when connecting to a different deployment type, such as a self-managed cluster.

To use an API key to authenticate, leave the cloud.auth line commented out, because Filebeat uses the API key instead of the deployment credentials to authenticate.

In the output.elasticsearch section of filebeat.yml, uncomment the api_key line, and add the API key you've created for Filebeat. The format of the value is id:api_key, where id and api_key are the values returned by the Create API key API.
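The id:api_key value is simply the two fields from the Create API key response joined with a colon. The sketch below derives it from the example response shown earlier, and also builds the base64-encoded form that Elasticsearch accepts in an Authorization: ApiKey header, which is handy for testing the key with other HTTP clients. The function names are illustrative.

```python
import base64
import json


def filebeat_api_key_value(response_json: str) -> str:
    """Return the id:api_key string expected by output.elasticsearch.api_key."""
    created = json.loads(response_json)
    return f"{created['id']}:{created['api_key']}"


def api_key_auth_header(id_and_key: str) -> str:
    """Return an HTTP Authorization header value for the same key."""
    encoded = base64.b64encode(id_and_key.encode("utf-8")).decode("ascii")
    return f"ApiKey {encoded}"


if __name__ == "__main__":
    example = '{"api_key": "tV1dnfF-GHI59ykgv4N0U3", "id": "2TBR42gBabmINotmvZjv", "name": "filebeat-api-key"}'
    value = filebeat_api_key_value(example)
    print(value)  # 2TBR42gBabmINotmvZjv:tV1dnfF-GHI59ykgv4N0U3
    print(api_key_auth_header(value))
```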

Using the example values returned by the POST request we used earlier, the configuration for API key authentication looks like this:

```yaml
cloud.id: my-deployment:yTMtd5VzdKEuP2NwPbNsb3VkLtKzLmldJDcyMzUyNjBhZGP7MjQ4OTZiNTIxZTQyOPY2C2NeOGQwJGQ2YWQ4M5FhNjIyYjQ9ODZhYWNjKDdlX2Yz4ELhRYJ7
#cloud.auth:

output.elasticsearch:
  ...
  api_key: "2TBR42gBabmINotmvZjv:tV1dnfF-GHI59ykgv4N0U3"
```

Filebeat has several ways to collect logs. For this example, you'll configure log collection manually. In the filebeat.inputs section of filebeat.yml:

```yaml
filebeat.inputs:

# Each - is an input. Most options can be set at the input level, so
# you can use different inputs for various configurations.
# Below are the input-specific configurations.

# filestream is an input for collecting log messages from files.
- type: filestream

  # Unique ID among all inputs, an ID is required.
  id: my-filestream-id

  # Change to true to enable this input configuration.
  enabled: true

  # Paths that should be crawled and fetched. Glob based paths.
  paths:
    - /path/to/log/files/*.json
```

- Set enabled: true.
- Set paths to the location of your log files. For this example, set paths to match the JSON log file in the directory where you saved elvis.py.

You can use a wildcard (*) character to indicate that all log files in the specified directory should be read. You can also use a wildcard to read logs from multiple directories. For example, /var/log/*/*.log.
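To preview which files a glob such as /path/to/log/files/*.json or /var/log/*/*.log would select, Python's glob module is a reasonable approximation. Filebeat is written in Go, so its matching can differ in edge cases: for example, Python's * skips file names that start with a dot, while Go's * matches them. Treat this as a rough check, not a guarantee of what Filebeat reads; the preview_glob name and the demo directory layout are hypothetical.

```python
import glob
import os
import tempfile


def preview_glob(pattern: str) -> list[str]:
    """List the regular files a glob pattern matches, sorted for stable output."""
    return sorted(p for p in glob.glob(pattern) if os.path.isfile(p))


if __name__ == "__main__":
    # Build a throwaway directory tree to show single- and multi-directory globs.
    with tempfile.TemporaryDirectory() as root:
        for rel in ("app/elvis.json", "app/notes.txt", "svc1/a.log", "svc2/b.log"):
            path = os.path.join(root, rel)
            os.makedirs(os.path.dirname(path), exist_ok=True)
            with open(path, "w", encoding="utf-8") as f:
                f.write("{}\n")
        # Like paths: /path/to/log/files/*.json (one directory, one extension)
        print(preview_glob(os.path.join(root, "app", "*.json")))
        # Like /var/log/*/*.log (one wildcard per directory level)
        print(preview_glob(os.path.join(root, "*", "*.log")))
```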

Filebeat’s filestream input configuration includes several options for decoding logs structured as JSON messages. You can set these options in parsers.ndjson. Filebeat processes the logs line by line, so it’s important that they contain one JSON object per line.
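The one-object-per-line requirement matters because each line is decoded on its own. The following sketch assumes nothing about Filebeat internals beyond that line-by-line decoding: a compact record decodes cleanly, while the same record pretty-printed across several lines turns into fragments that all fail to parse. Failed lines are kept with an error object, loosely modeled on the add_error_key option set below; the exact field layout Filebeat uses is an assumption here, and decode_lines is a hypothetical name.

```python
import json


def decode_lines(text: str) -> list[dict]:
    """Decode each line independently, the way a line-oriented ndjson reader does.

    Lines that fail to parse are kept with an error object (layout is an assumption).
    """
    events = []
    for line in text.splitlines():
        if not line.strip():
            continue
        try:
            events.append(json.loads(line))
        except json.JSONDecodeError as exc:
            events.append({"message": line, "error": {"message": exc.msg, "type": "json"}})
    return events


record = {"log.level": "info", "message": "Elvis has left the building."}
compact = json.dumps(record)           # one object on one line: safe for ndjson
pretty = json.dumps(record, indent=2)  # same object spread over four lines

print(len(decode_lines(compact)))                       # 1
print(sum("error" in e for e in decode_lines(pretty)))  # 4
```

The ECS formatter used by elvis.py writes compact, single-line records, so its output already satisfies this requirement.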

For this example, set Filebeat to use the ndjson parser with the following decoding options:

```yaml
parsers:
  - ndjson:
      target: ""
      overwrite_keys: true
      expand_keys: true
      add_error_key: true
      message_key: msg
```

To learn more about these settings, check the ndjson parser's configuration options and Decode JSON fields in the Filebeat reference.
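The expand_keys option is what turns dotted keys such as log.level in the ECS output into nested objects, merging them with objects such as log that already exist in the same record. The following is a simplified illustration of that idea, not Filebeat's implementation. In particular, how Filebeat resolves conflicts (for example, a key that holds a scalar and is also used as a prefix) may differ; here, a scalar in the way is simply overwritten. The function names are hypothetical.

```python
from typing import Any


def expand_dotted_keys(obj: dict[str, Any]) -> dict[str, Any]:
    """Recursively turn {"a.b": 1} into {"a": {"b": 1}}, merging shared prefixes."""
    result: dict[str, Any] = {}
    for key, value in obj.items():
        if isinstance(value, dict):
            value = expand_dotted_keys(value)
        *parents, leaf = key.split(".")
        target = result
        for part in parents:
            existing = target.get(part)
            if not isinstance(existing, dict):
                existing = target[part] = {}  # simplification: a scalar here is overwritten
            target = existing
        if isinstance(value, dict) and isinstance(target.get(leaf), dict):
            _merge(target[leaf], value)
        else:
            target[leaf] = value
    return result


def _merge(into: dict[str, Any], extra: dict[str, Any]) -> None:
    """Deep-merge extra into into, letting extra win on scalar conflicts."""
    for key, value in extra.items():
        if isinstance(value, dict) and isinstance(into.get(key), dict):
            _merge(into[key], value)
        else:
            into[key] = value


record = {"@timestamp": "2025-06-16T02:19:34.687Z", "log.level": "info", "log": {"logger": "app"}}
print(expand_dotted_keys(record))
# {'@timestamp': '2025-06-16T02:19:34.687Z', 'log': {'level': 'info', 'logger': 'app'}}
```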

Append parsers.ndjson with the set decoding options to the filebeat.inputs section of filebeat.yml, so that the section now looks like this:

```yaml
# ============================== Filebeat inputs ===============================

filebeat.inputs:

# Each - is an input. Most options can be set at the input level, so
# you can use different inputs for various configurations.
# Below are the input-specific configurations.

# filestream is an input for collecting log messages from files.
- type: filestream

  # Unique ID among all inputs, an ID is required.
  id: my-filestream-id

  # Change to true to enable this input configuration.
  enabled: true

  # Paths that should be crawled and fetched. Glob based paths.
  paths:
    - /path/to/log/files/*.json
    #- c:\programdata\elasticsearch\logs\*

  parsers:
    - ndjson:
        target: ""
        overwrite_keys: true
        expand_keys: true
        add_error_key: true
        message_key: msg
```

Filebeat comes with predefined assets for parsing, indexing, and visualizing your data. To load these assets into Kibana on your Elastic Cloud Hosted deployment, run the following from the Filebeat installation directory:

```sh
./filebeat setup -e
```

Depending on variables including the installation location, environment, and local permissions, you might need to change the ownership of filebeat.yml. You can also try running the command as root (sudo ./filebeat setup -e), or you can disable strict permission checks by running the command with the --strict.perms=false option.
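Before reaching for --strict.perms=false, you can check whether filebeat.yml would pass the strict permission check. The sketch below approximates that check on POSIX systems under the assumption that the config file must be owned by the current (effective) user or by root, and must not be writable by group or others. It is not Filebeat's own code, and strict_perms_problems is a hypothetical name.

```python
import os
import stat


def strict_perms_problems(path: str) -> list[str]:
    """Approximate Filebeat's strict permission check for a config file (POSIX only).

    Assumption: the file must be owned by the current user or root, and must not
    be writable by group or others.
    """
    info = os.stat(path)
    problems = []
    if info.st_uid not in (os.geteuid(), 0):
        problems.append(f"owned by uid {info.st_uid}, expected {os.geteuid()} or root")
    if info.st_mode & (stat.S_IWGRP | stat.S_IWOTH):
        problems.append(f"writable by group or others (fix with: chmod go-w {path})")
    return problems


if __name__ == "__main__":
    # Check filebeat.yml in the current directory, if there is one.
    if os.path.exists("filebeat.yml"):
        print(strict_perms_problems("filebeat.yml") or "filebeat.yml permissions look OK")
```

Fixing the ownership or mode keeps the check enabled, which is generally preferable to turning it off.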

The setup process takes a couple of minutes. If the setup is successful, you should get a confirmation message:

```text
Loaded Ingest pipelines
```

The Filebeat data view (formerly index pattern) is now available in Kibana. To verify:

Beginning with Elastic Stack version 8.0, Kibana index patterns have been renamed to data views. To learn more, check the Kibana What’s new in 8.0 page.

- Log in to the Elastic Cloud Console, and select your deployment.

- In the main menu, select Management → Stack Management → Data Views.

- In the search bar, search for filebeat. You should see filebeat-* in the search results.

Filebeat is now set to collect log messages and stream them to your deployment.

It’s time to send some log data into Elasticsearch.

In a new terminal:

Navigate to the directory where you created the elvis.py Python script, then run it:

```sh
python elvis.py
```

Let the script run for a few minutes, then make sure that the elvis.json file is generated and is populated with several log entries.

Launch Filebeat by running the following from the Filebeat installation directory:

```sh
./filebeat -c filebeat.yml -e
```

In this command:

- The -e flag sends output to the standard error instead of the configured log output.
- The -c flag specifies the path to the Filebeat config file.

Note: In case the command doesn't work as expected, check the Filebeat quick start for the detailed command syntax for your operating system. You can also try running the command as root: sudo ./filebeat -c filebeat.yml -e.

Filebeat should now be running and monitoring the contents of the elvis.json file.

To confirm that the log data has been successfully sent to your deployment:

- Log in to the Elastic Cloud Console, and select your deployment.

- In the main menu, select Management → Stack Management → Data Views.

- In the search bar, search for filebeat, then select filebeat-*.

The filebeat data view shows a list of fields and their details.
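Besides checking the data view, you can confirm ingestion by asking Elasticsearch how many documents the filebeat-* indices hold, using the _count API. The sketch below builds that request with the standard library, authenticated with an API key in the id:api_key form described earlier; the example key's privileges include read on filebeat-*, which this query needs. The Elasticsearch URL is a placeholder, and build_count_request and document_count are hypothetical names.

```python
import base64
import json
import urllib.request


def build_count_request(es_url: str, id_and_key: str, index: str = "filebeat-*") -> urllib.request.Request:
    """Build GET /<index>/_count, authenticated with an API key in id:api_key form."""
    token = base64.b64encode(id_and_key.encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{es_url.rstrip('/')}/{index}/_count",
        headers={"Authorization": f"ApiKey {token}"},
        method="GET",
    )


def document_count(es_url: str, id_and_key: str) -> int:
    """Send the request and return the "count" field of the response (needs network access)."""
    with urllib.request.urlopen(build_count_request(es_url, id_and_key)) as resp:
        return json.load(resp)["count"]
```

While elvis.py and Filebeat are both running, calling document_count a minute or so apart should return a growing number.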

Now you can create visualizations based on the Python application log data:

In the main menu, select Dashboards → Create dashboard.

Select Create visualization. The Lens visualization editor opens.

In the Data view dropdown box, select filebeat-*, if it isn’t already selected.

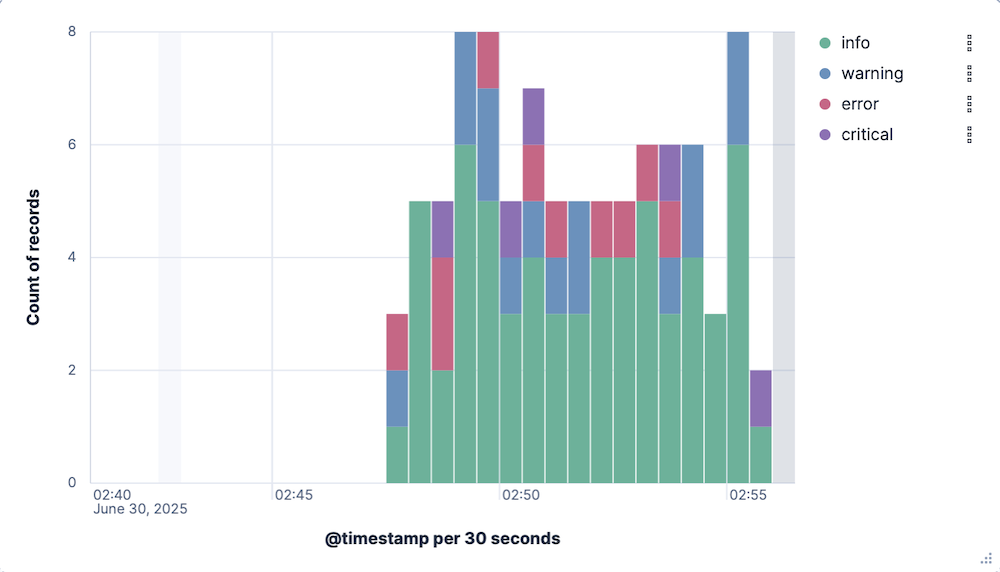

In the menu for setting the visualization type, select Bar and Stacked, if they aren’t already selected.

Check that the time filter is set to Last 15 minutes.

From the Available fields list, drag and drop the @timestamp field onto the visualization builder.

Drag and drop the log.level field onto the visualization builder.

In the chart settings area under Breakdown, select Top values of log.level.

Set the Number of values field to 4 to display all four levels of severity in the chart legend.

Select Refresh. A stacked bar chart now shows the relative frequency of each of the four log severity levels over time.

Select Save and return to add this visualization to your dashboard.
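Conceptually, this stacked bar chart counts events per time bucket and splits each bar by the values of log.level. The sketch below reproduces that aggregation locally from elvis.json lines, which can help you sanity-check the chart. The one-minute bucket size is an assumption (Lens chooses an interval based on the time range), and level_counts_per_minute is an illustrative name.

```python
import json
from collections import Counter, defaultdict
from datetime import datetime
from typing import Iterable


def level_counts_per_minute(lines: Iterable[str]) -> dict[str, Counter]:
    """Group ECS log lines into one-minute buckets and count each log.level."""
    buckets: dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        if not line.strip():
            continue
        record = json.loads(line)
        # ECS timestamps from ecs_logging look like 2025-06-16T02:19:34.687Z (UTC).
        ts = datetime.strptime(record["@timestamp"], "%Y-%m-%dT%H:%M:%S.%fZ")
        bucket = ts.strftime("%Y-%m-%dT%H:%M")
        buckets[bucket][record["log.level"]] += 1
    return dict(buckets)


# Usage: with open("elvis.json", encoding="utf-8") as f: print(level_counts_per_minute(f))
```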

Let’s create a second visualization:

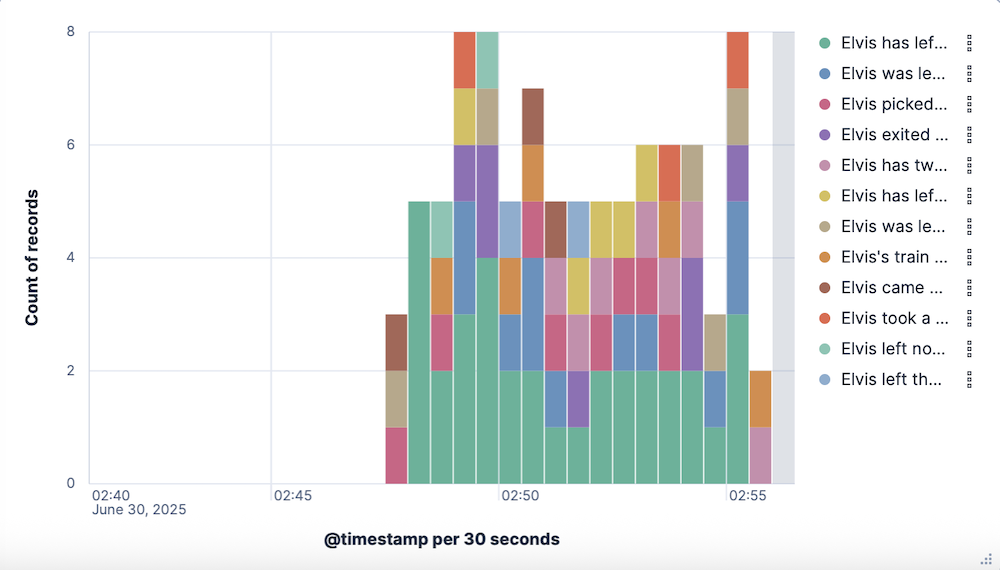

Select Create visualization.

In the menu for setting the visualization type, select Bar and Stacked, if they aren’t already selected.

From the Available fields list, drag and drop the @timestamp field onto the visualization builder.

Drag and drop the http.request.body.content field onto the visualization builder.

In the chart settings area under Breakdown, select Top values of http.request.body.content.

Set the Number of values to 12 to display all twelve log messages in the chart legend.

Select Refresh. A stacked bar chart now shows the relative frequency of each of the log messages over time.

Select Save and return to add this visualization to your dashboard.

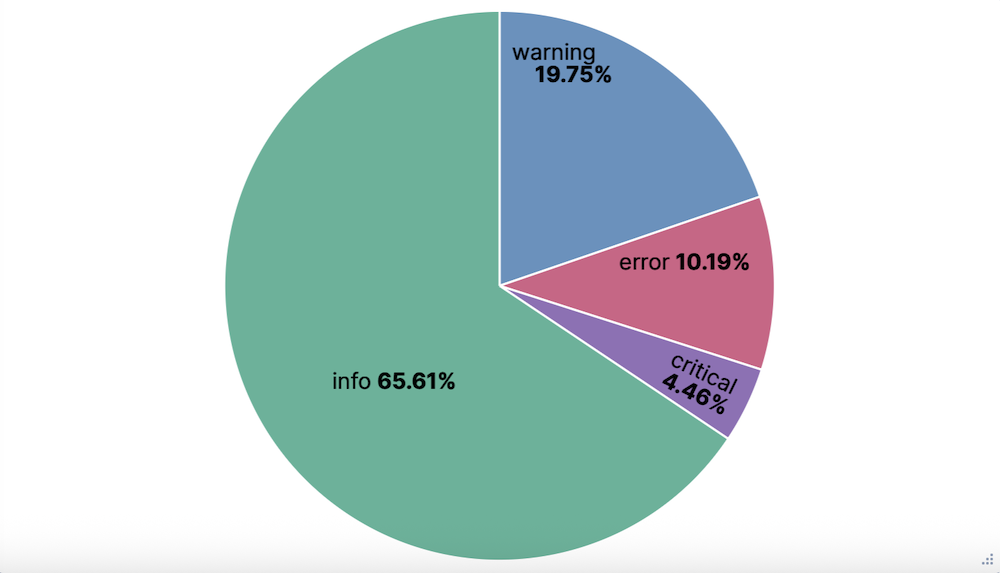
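The Number of values setting works like a top-N cut: Lens shows the N most frequent terms and can group the remaining ones into an Other bucket, which is why it is set to 12 for the twelve messages. A small local illustration of a top-N breakdown over http.request.body.content follows. It reads the nested field layout that ecs_logging writes (as in the sample entry earlier), and top_values is a hypothetical helper name.

```python
import json
from collections import Counter
from typing import Iterable


def top_values(lines: Iterable[str], size: int) -> tuple[list[tuple[str, int]], int]:
    """Return the `size` most common http.request.body.content values and the count left over."""
    counts: Counter = Counter()
    for line in lines:
        if not line.strip():
            continue
        record = json.loads(line)
        # ecs_logging writes this extra field as nested objects: http -> request -> body -> content.
        content = record.get("http", {}).get("request", {}).get("body", {}).get("content")
        if content is not None:
            counts[content] += 1
    top = counts.most_common(size)
    other = sum(counts.values()) - sum(n for _, n in top)  # what an "Other" bucket would hold
    return top, other
```

With size set lower than the number of distinct messages, some messages fall into the leftover count instead of getting their own color in the chart.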

Now, create one final visualization:

Select Create visualization.

In the menu for setting the visualization type, select Pie.

From the Available fields list, drag and drop the log.level field onto the visualization builder. A pie chart appears.

Select Save and return to add this visualization to your dashboard.

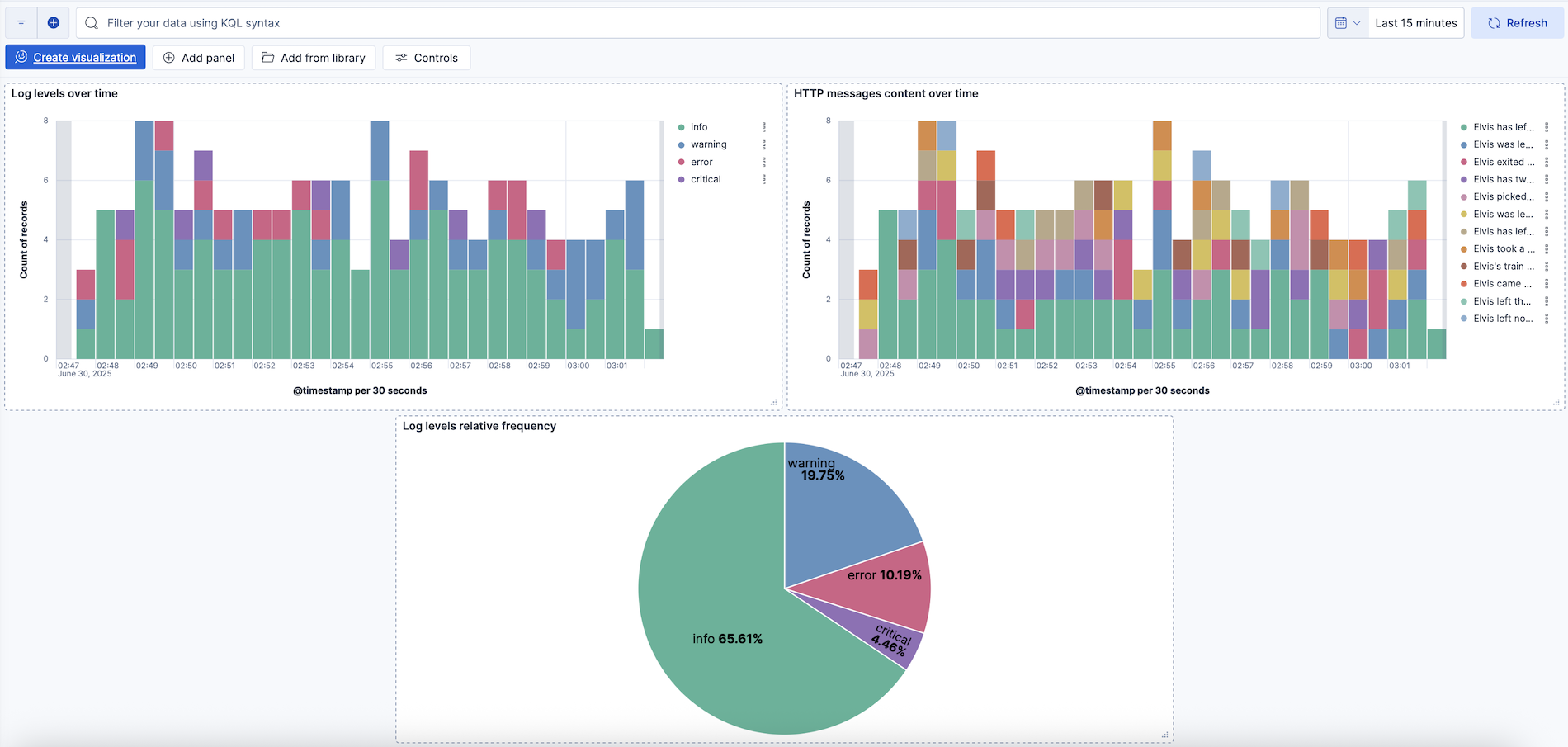

Select Save and add a title to save your new dashboard.

You now have a Kibana dashboard with three visualizations: a stacked bar chart showing the frequency of each log severity level over time, another stacked bar chart showing the frequency of various message strings over time (from the added http.request.body.content field), and a pie chart showing the relative frequency of each log severity type.

You can add titles to the visualizations, resize and position them as you like, and then save your changes.

Select Refresh on the Kibana dashboard. Because elvis.py continues to run and generate log data, your Kibana visualizations update with each refresh.

As a final step, remember to stop Filebeat and the Python script. Enter CTRL + C in both your Filebeat terminal and your elvis.py terminal.

You now know how to monitor log files from a Python application, deliver the log event data securely into an Elastic Cloud Hosted deployment, and then visualize the results in Kibana in real time. Consult the Filebeat documentation to learn more about the Filebeat ingestion and processing options available for your data. You can also explore our documentation to learn more about ingesting data with other tools.