Isaac Sim Version

5.0

Operating System

Ubuntu 22.04

Topic Description

Detailed Description

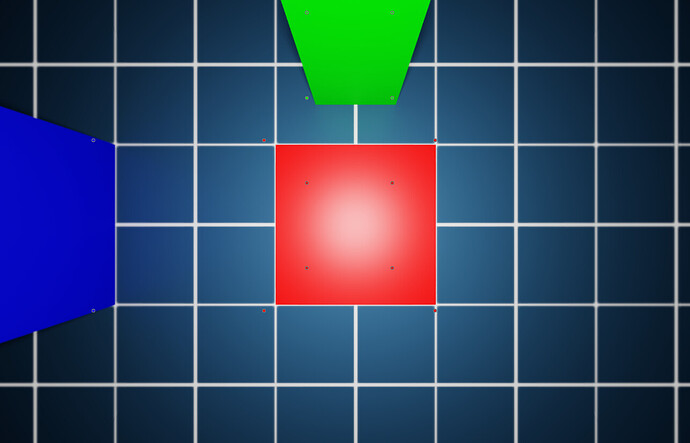

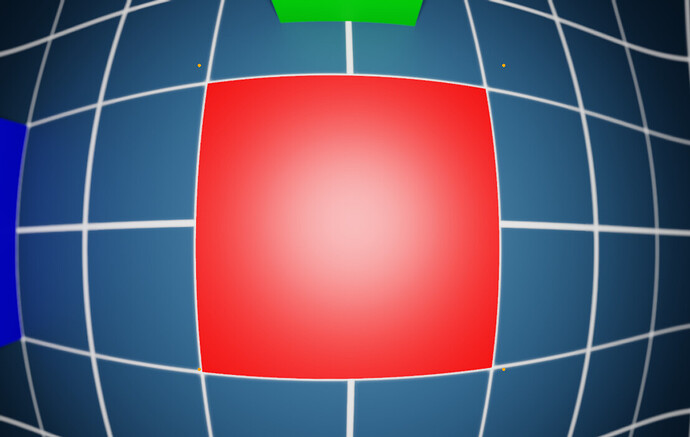

When generating camera data using Isaac sim with the opencv-fisheye lens distortion model, the images generated are not consistent with where the image points should be using cv2.projectPoints for the same extrinsic and intrinsic camera parameters.

I have a standalone script based on this tutorial to reproduce the issue, runnable standalone with python.sh from the Isaac Sim build folder:

from isaacsim import SimulationApp simulation_app = SimulationApp(launch_config={"headless": False}) # example code starts here (from https://docs.isaacsim.omniverse.nvidia.com/latest/sensors/isaacsim_sensors_camera.html#opencv-fisheye) import isaacsim.core.utils.numpy.rotations as rot_utils import numpy as np from isaacsim.core.api import World from isaacsim.core.api.objects import DynamicCuboid from isaacsim.core.utils.stage import add_reference_to_stage from isaacsim.sensors.camera import Camera from isaacsim.storage.native import get_assets_root_path from PIL import Image, ImageDraw # Desired image resolution, camera intrinsics matrix, and distortion coefficients width, height = 1920, 1200 camera_matrix = [[455.8, 0.0, 943.8], [0.0, 454.7, 602.3], [0.0, 0.0, 1.0]] distortion_coefficients = [0.05, 0.01, -0.003, -0.0005] # Camera sensor size and optical path parameters. These parameters are not the part of the # OpenCV camera model, but they are nessesary to simulate the depth of field effect. # # Note: To disable the depth of field effect, set the f_stop to 0.0. This is useful for debugging. # Set pixel size (microns) pixel_size = 3 # Set f-number, the ratio of the lens focal length to the diameter of the entrance pupil (unitless) f_stop = 1.8 # Set focus distance (meters) - chosen as distance from camera to cube focus_distance = 1.5 # Add a ground plane to the scene usd_path = get_assets_root_path() + "/Isaac/Environments/Grid/default_environment.usd" add_reference_to_stage(usd_path=usd_path, prim_path="/ground_plane") # Add some cubes and a Camera to the scene cube_1 = DynamicCuboid( prim_path="/new_cube_1", name="cube_1", position=np.array([0, 0, 0.5]), scale=np.array([1.0, 1.0, 1.0]), size=1.0, color=np.array([255, 0, 0]), ) cube_2 = DynamicCuboid( prim_path="/new_cube_2", name="cube_2", position=np.array([2, 0, 0.5]), scale=np.array([1.0, 1.0, 1.0]), size=1.0, color=np.array([0, 255, 0]), ) cube_3 = DynamicCuboid( prim_path="/new_cube_3", name="cube_3", position=np.array([0, 4, 1]), scale=np.array([2.0, 2.0, 2.0]), size=1.0, color=np.array([0, 0, 255]), ) camera = Camera( prim_path="/World/camera", position=np.array([0.0, 0.0, 2.0]), # 1 meter away from the side of the cube frequency=30, resolution=(width, height), orientation=rot_utils.euler_angles_to_quats(np.array([0, 90, 0]), degrees=True), ) camera.initialize() # Calculate the focal length and aperture size from the camera matrix ((fx, _, cx), (_, fy, cy), (_, _, _)) = camera_matrix # fx, fy are in pixels, cx, cy are in pixels horizontal_aperture = pixel_size * width * 1e-6 # convert to meters vertical_aperture = pixel_size * height * 1e-6 # convert to meters focal_length_x = pixel_size * fx * 1e-6 # convert to meters focal_length_y = pixel_size * fy * 1e-6 # convert to meters focal_length = (focal_length_x + focal_length_y) / 2 # convert to meters # Set the camera parameters, note the unit conversion between Isaac Sim sensor and Kit camera.set_focal_length(focal_length) camera.set_focus_distance(focus_distance) camera.set_lens_aperture(f_stop) camera.set_horizontal_aperture(horizontal_aperture) camera.set_vertical_aperture(vertical_aperture) camera.set_clipping_range(0.05, 1.0e5) # Set the distortion coefficients camera.set_opencv_fisheye_properties(cx=cx, cy=cy, fx=fx, fy=fy, fisheye=distortion_coefficients) # example code ends here import os import omni.replicator.core as rep # https://docs.isaacsim.omniverse.nvidia.com/5.0.0/replicator_tutorials/tutorial_replicator_overview.html rp = rep.create.render_product("/World/camera", (1920,1200)) writer = rep.writers.get("BasicWriter") # https://docs.omniverse.nvidia.com/py/replicator/1.11.35/source/extensions/omni.replicator.core/docs/API.html#basicwriter out_dir = os.path.join(os.getcwd(), "replicator_output") print(f"Output directory: {out_dir}") writer.initialize(output_dir=out_dir, rgb=True) writer.attach(rp) rep.orchestrator.step(rt_subframes=16) writer.detach() rp.destroy() rep.orchestrator.wait_until_complete() import cv2 from scipy.spatial.transform import Rotation img = cv2.imread(os.path.join(out_dir, "rgb_0000.png")) cube_corners = np.array([ [0.5, 0.5, 0.5], [-0.5, 0.5, 0.5], [0.5, -0.5, 0.5], [-0.5, -0.5, 0.5], [0.5, 0.5, -0.5], [-0.5, 0.5, -0.5], [0.5, -0.5, -0.5], [-0.5, -0.5, -0.5], ], dtype=np.float32) cube1_corners = cube_corners + np.array([0, 0, 0.5]) cube2_corners = cube_corners + np.array([2, 0, 0.5]) cube3_corners = 2*cube_corners + np.array([0, 4, 1]) all_cube_corners = np.vstack((cube1_corners, cube2_corners, cube3_corners)) distortion_coefficients_cv = np.array(distortion_coefficients, dtype=np.float32) isaac_to_cv2_mat = np.array([ [0, -1, 0], [0, 0, -1], [1, 0, 0] ], dtype=np.float32) isaac_to_cv2 = Rotation.from_matrix(isaac_to_cv2_mat) rot_mat = np.array([ [1, 0, 0], [0, 0, 1], [0, -1, 0] ], dtype=np.float32) # cv2_r = Rotation.from_matrix(isaac_to_cv2_mat) * Rotation.from_euler('y', [90], degrees=True) cv2_r = Rotation.from_matrix(rot_mat) cam_rotation = Rotation.from_euler('y', [90], degrees=True) # cam_rotation = Rotation.identity() # cam_rotation = Rotation.from_euler('y', [30], degrees=True) cam_position = np.array([0, 0, 2], dtype=np.float32) rvec_rot = isaac_to_cv2 * cam_rotation.inv() * isaac_to_cv2.inv() tvec = -rvec_rot.apply(cam_position @ isaac_to_cv2_mat.T) image_points, _ = cv2.projectPoints( all_cube_corners @ isaac_to_cv2_mat.T, rvec_rot.as_rotvec(), tvec, np.array(camera_matrix), distortion_coefficients_cv ) for i, point in enumerate(image_points): if i < 8: color = (0,0,255) elif i < 16: color = (0,255,0) else: color = (255,0,0) if np.any(point[0].astype(int)<0): continue cv2.circle(img, tuple(point[0].astype(int)), 5, (120,120,120), -1) cv2.circle(img, tuple(point[0].astype(int)), 3, color, -1) cv2.imwrite(os.path.join(out_dir, "projected_points.png"), img) simulation_app.close() If Isaac’s rendering was matching opencv, the dots on the image would line up with the corners of each cube:

fisheye:

pinhole:

I’m working on a use case where it’s important that the camera intrinsics model matches exactly with opencv, so the discrepancy here makes Isaac Sim unusable. Are the opencv-based rendering models supposed to match up exactly? Am I doing anything wrong in my snippet to reproduce this issue?