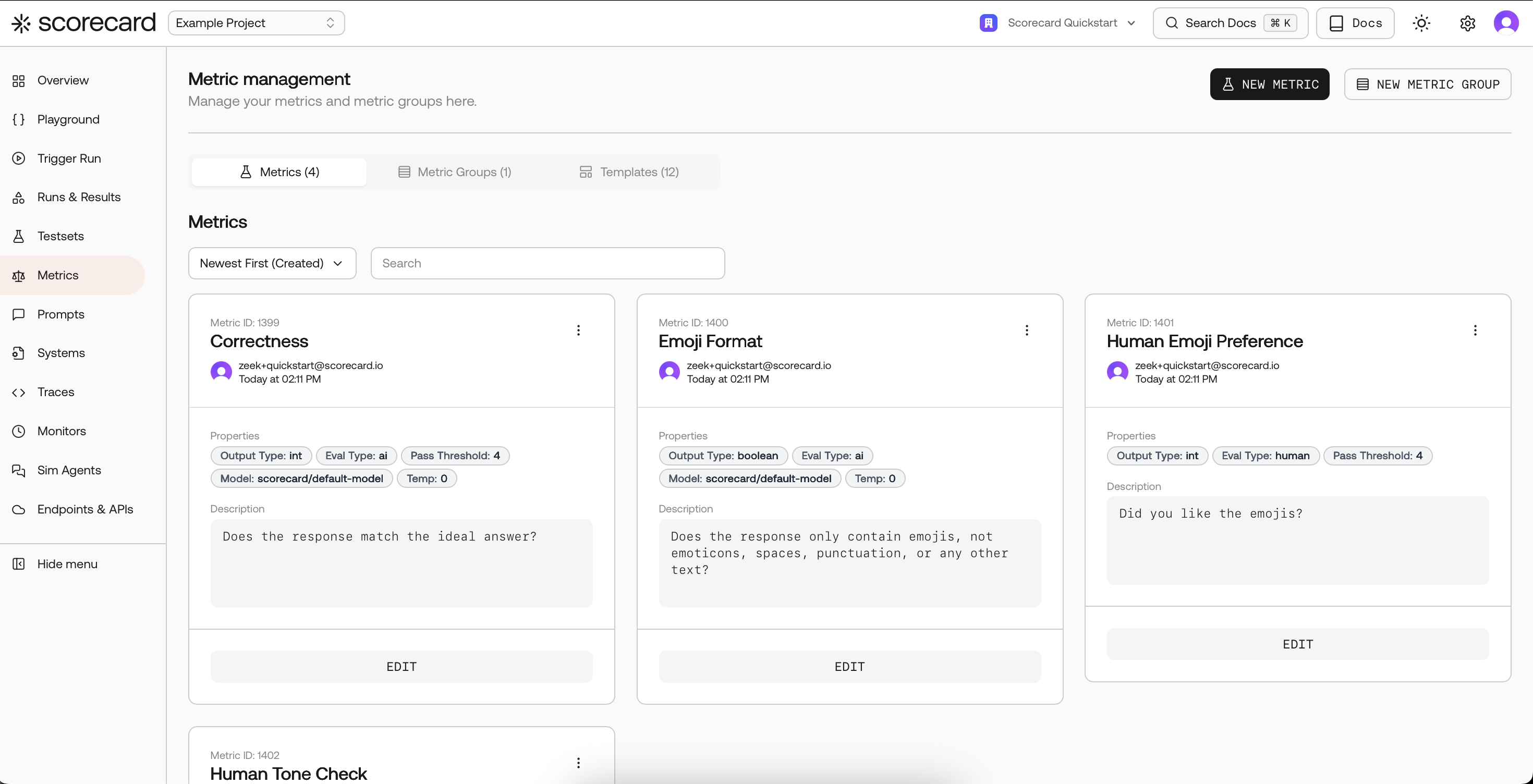

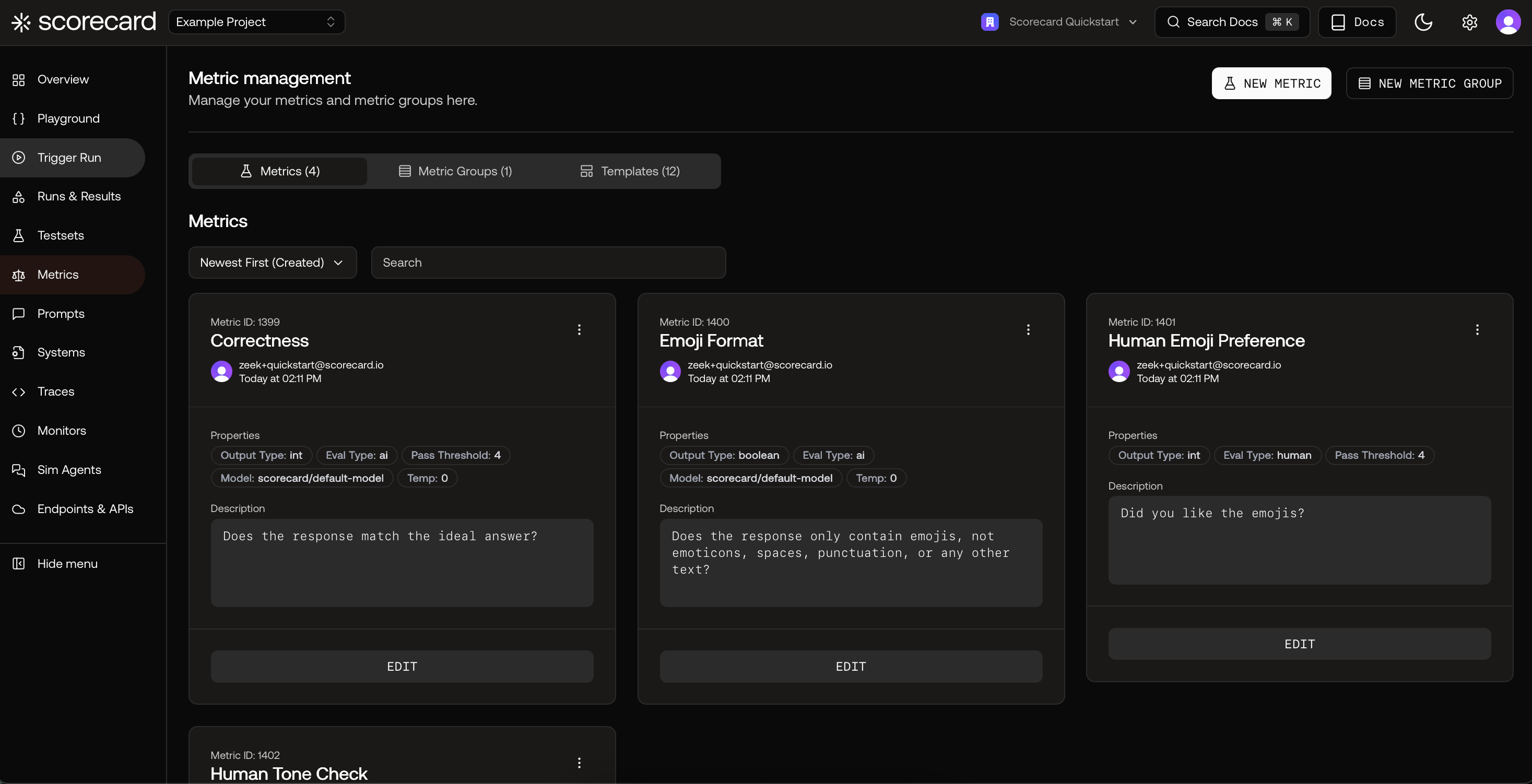

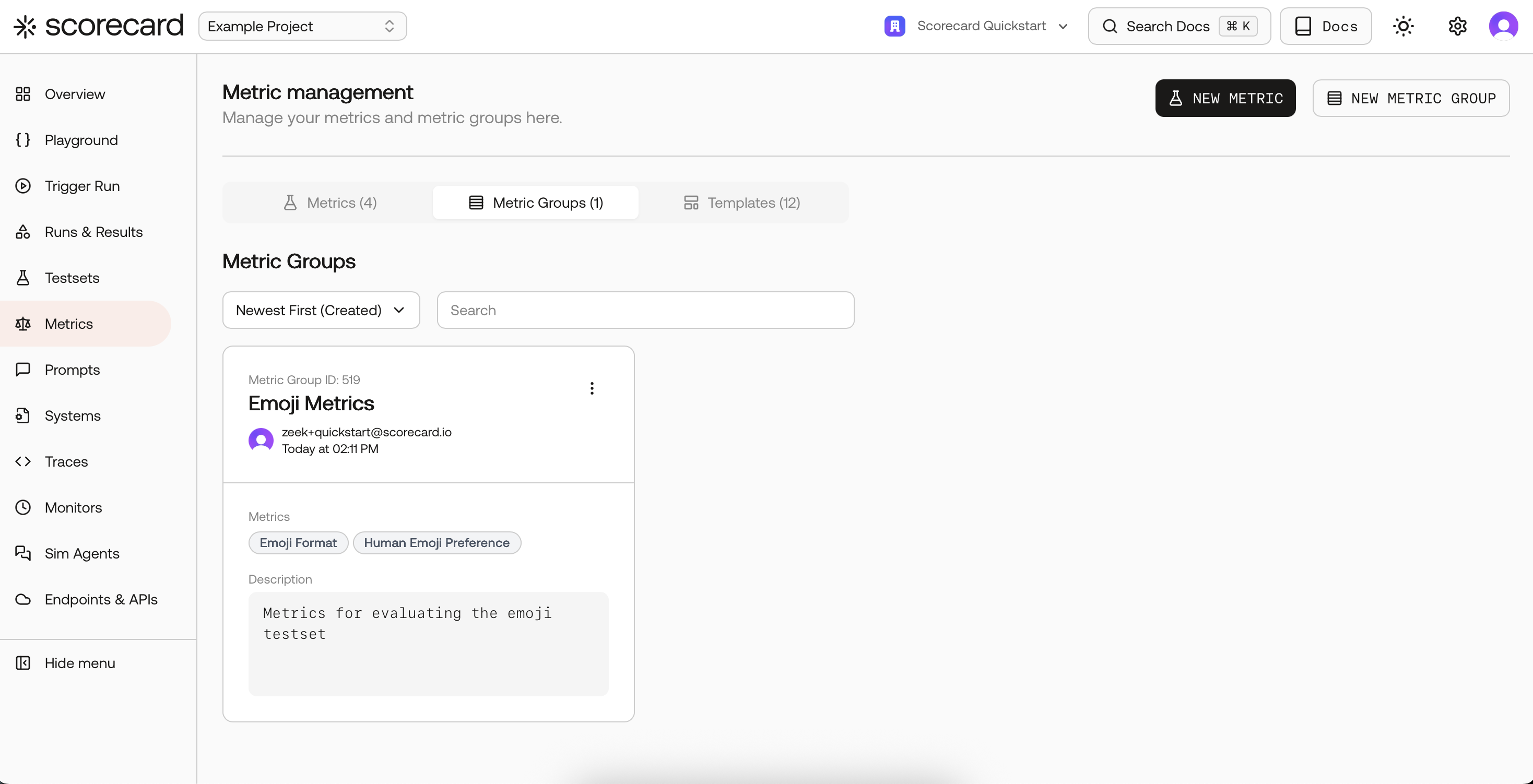

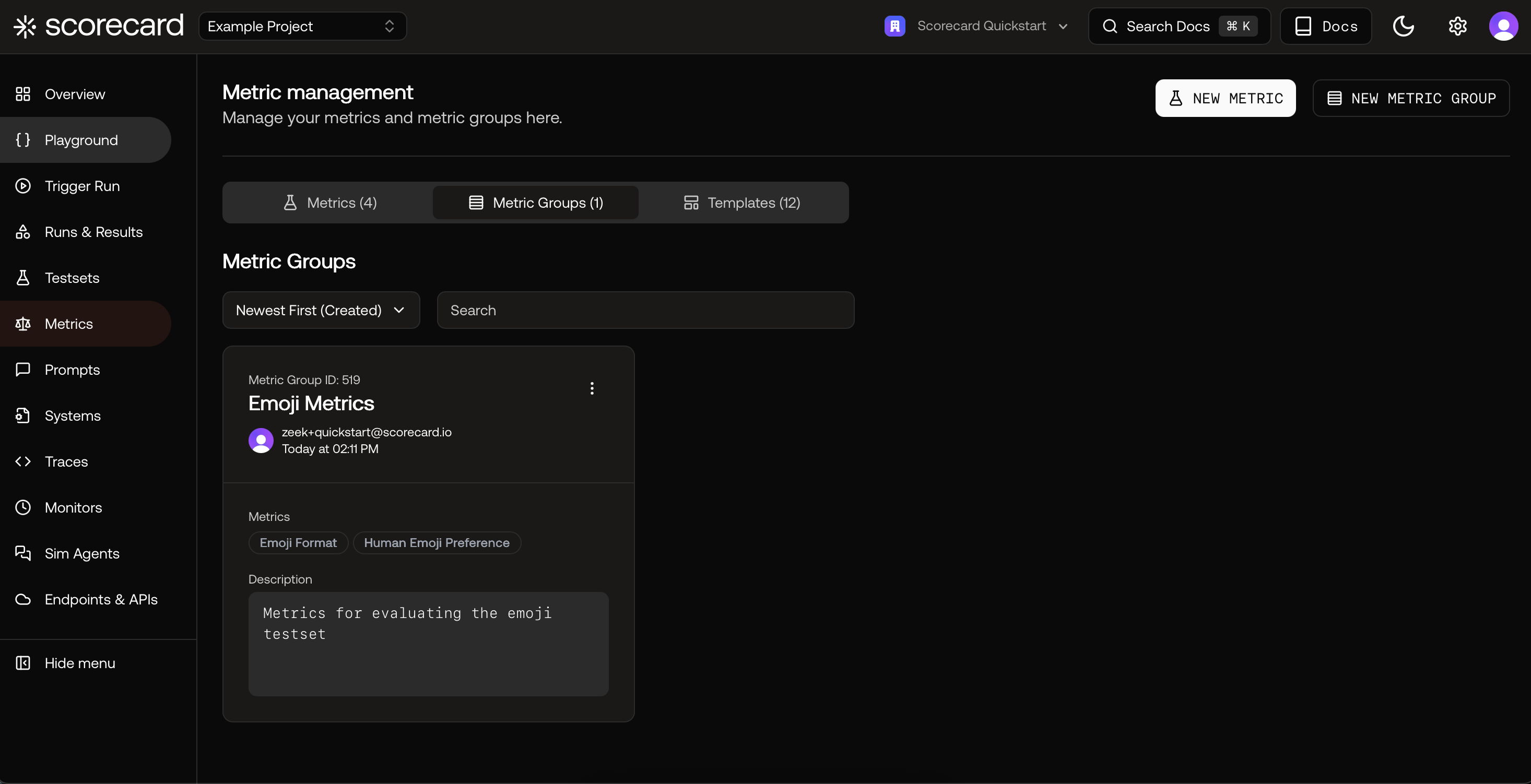

Metrics page with Metrics, Groups, and Templates tabs.

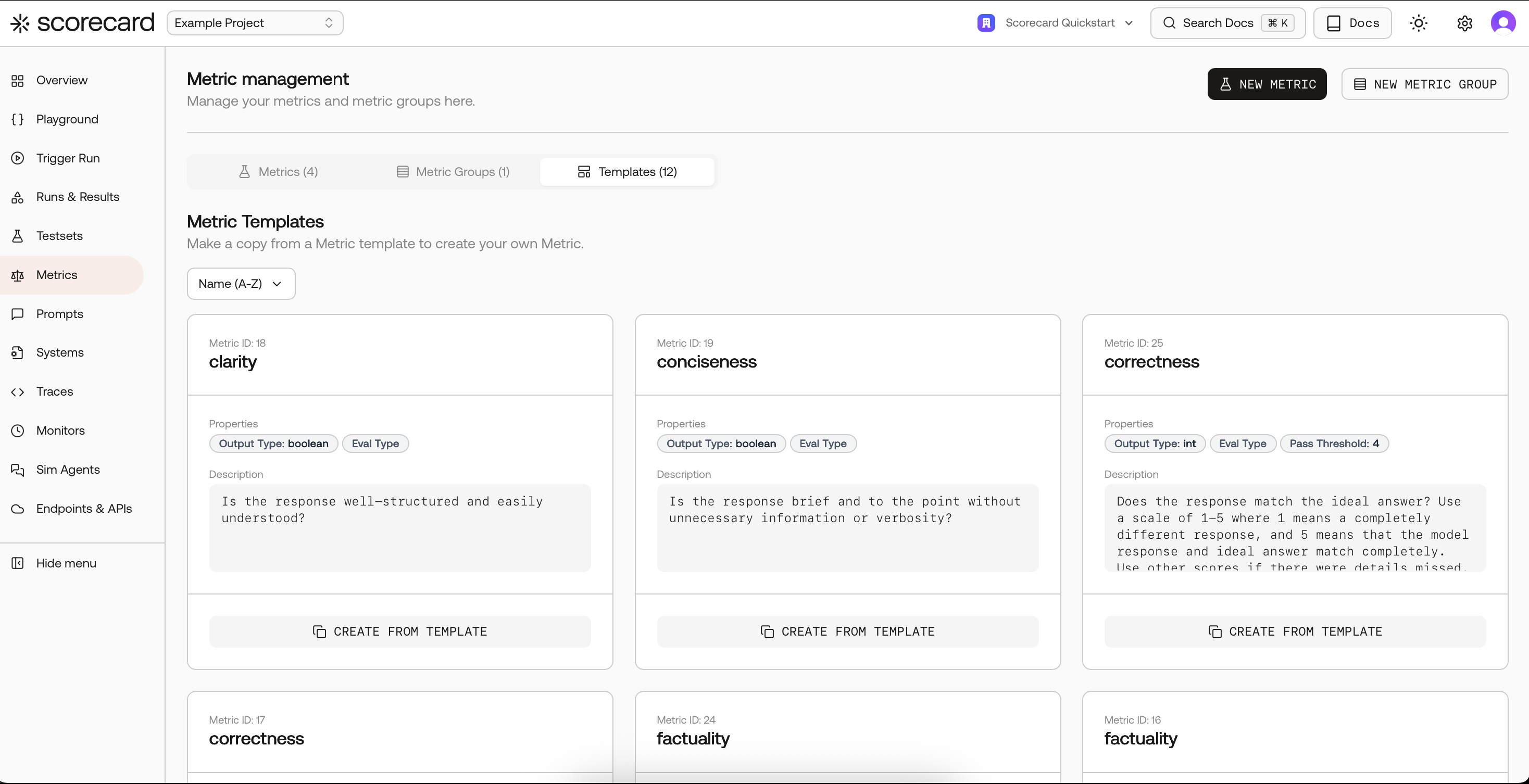

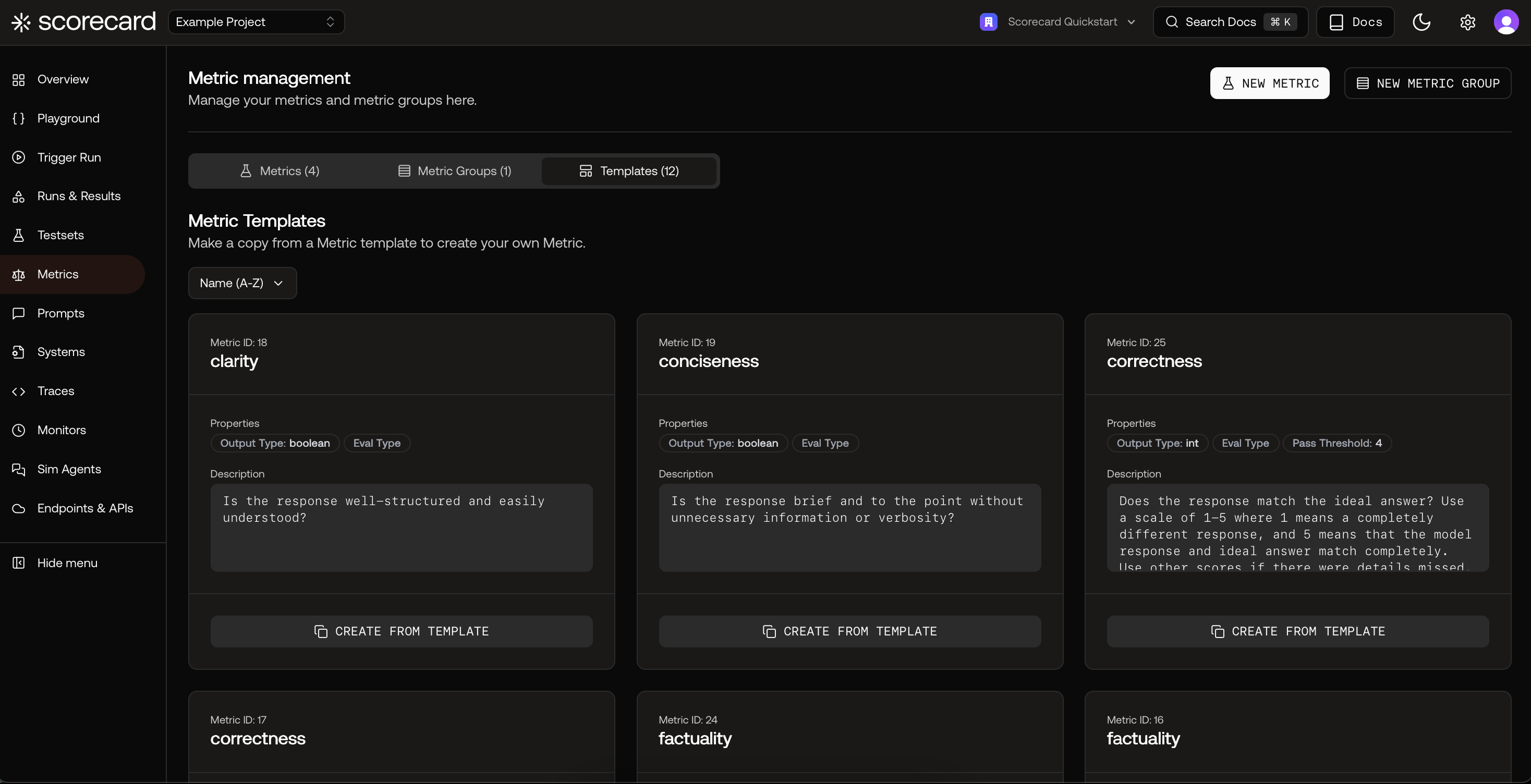

Open Metrics and explore templates

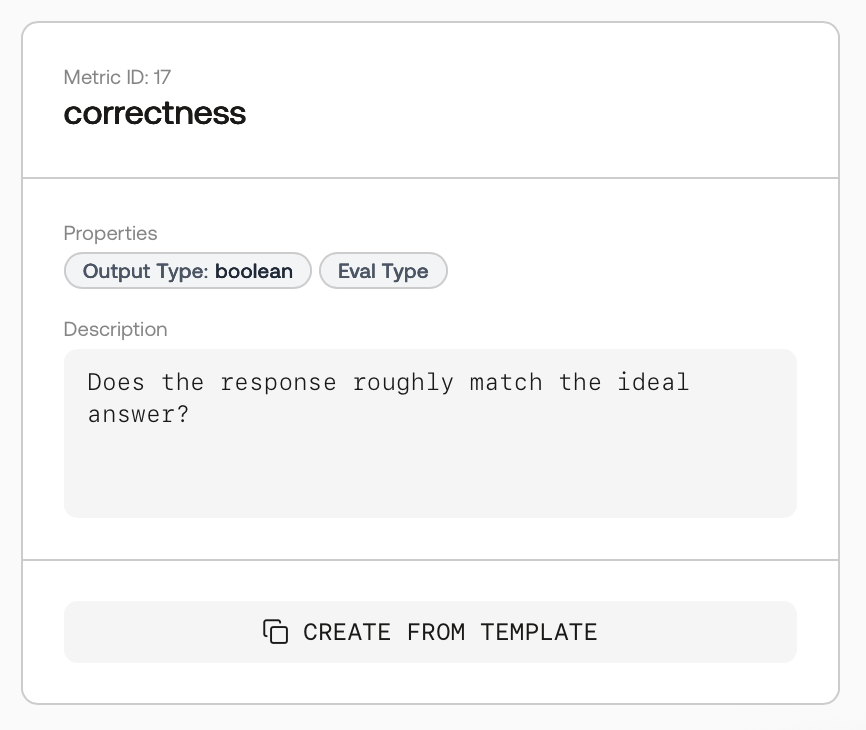

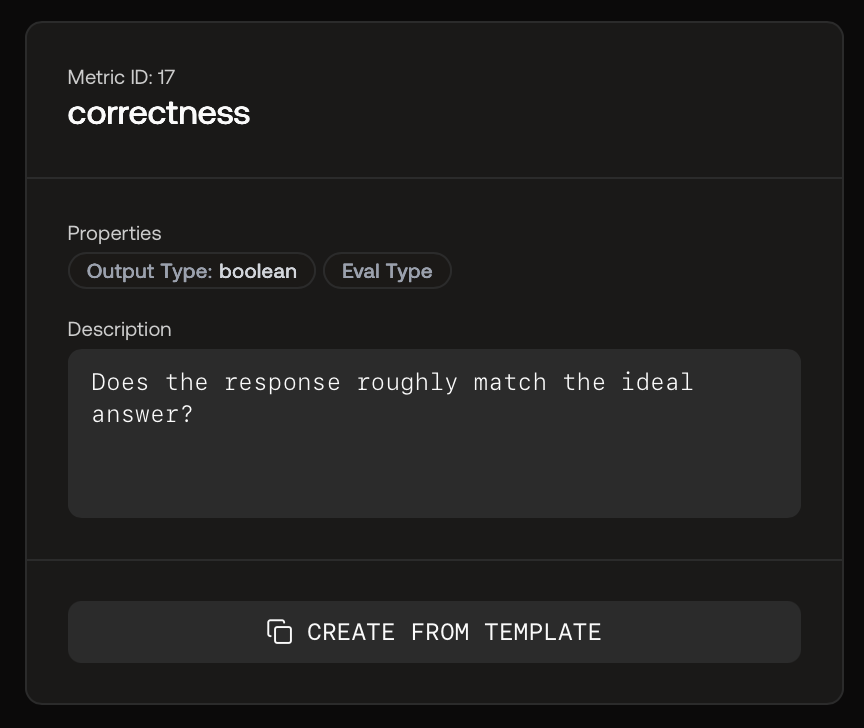

Templates list with Create from Template.

Template details with description and output type.

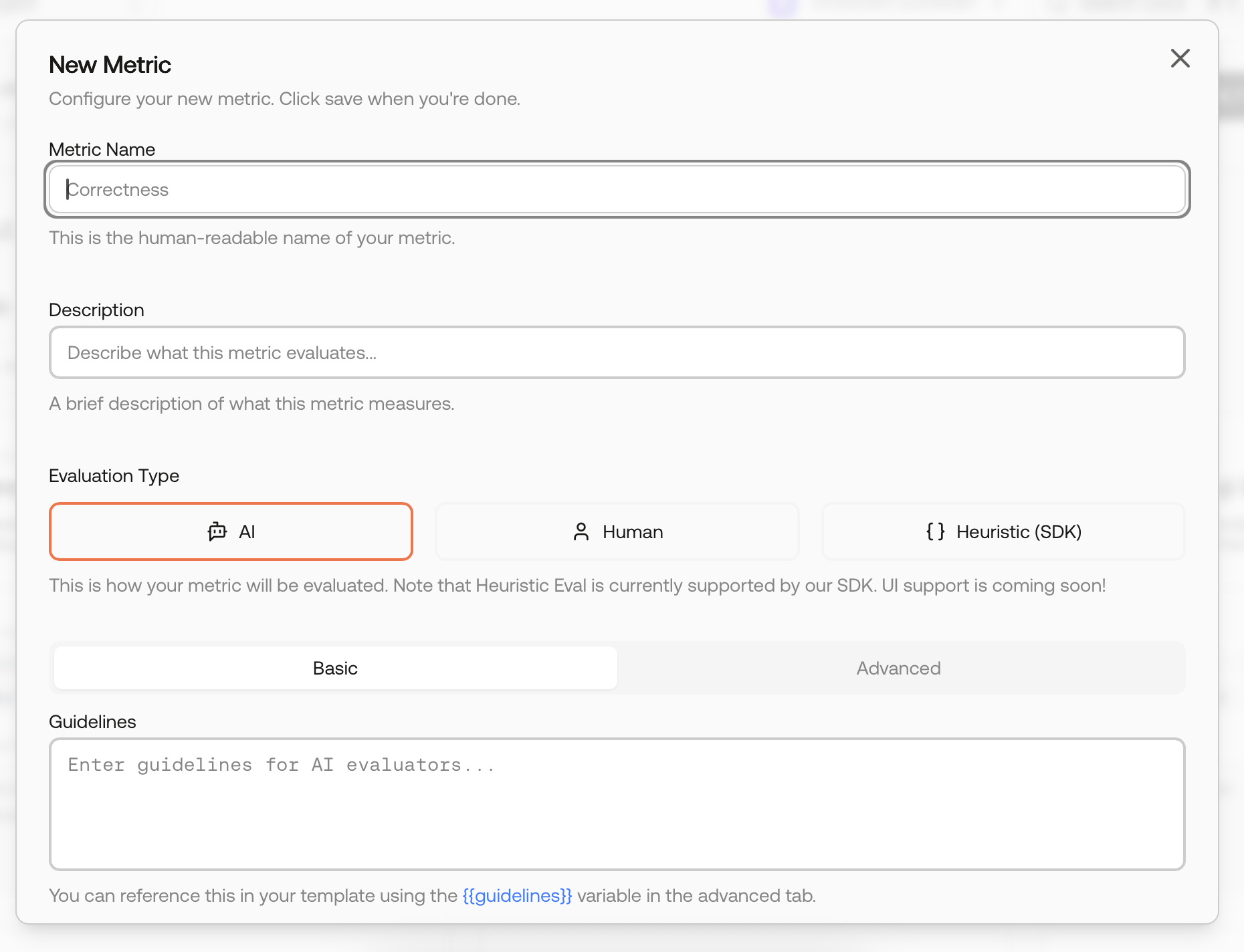

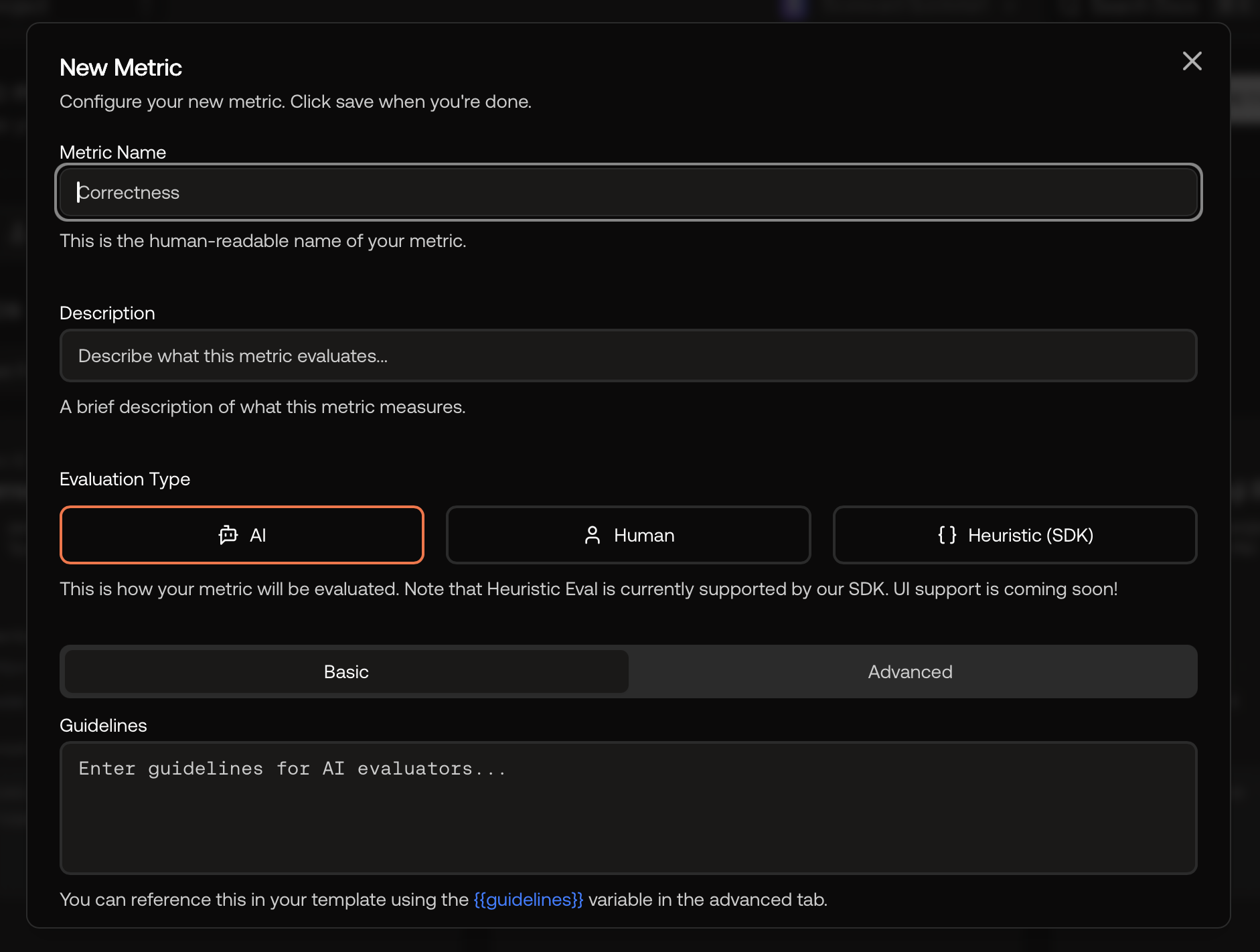

Create your first metric (AI‑scored)

New Metric modal – name, description, evaluation type, and guidelines.

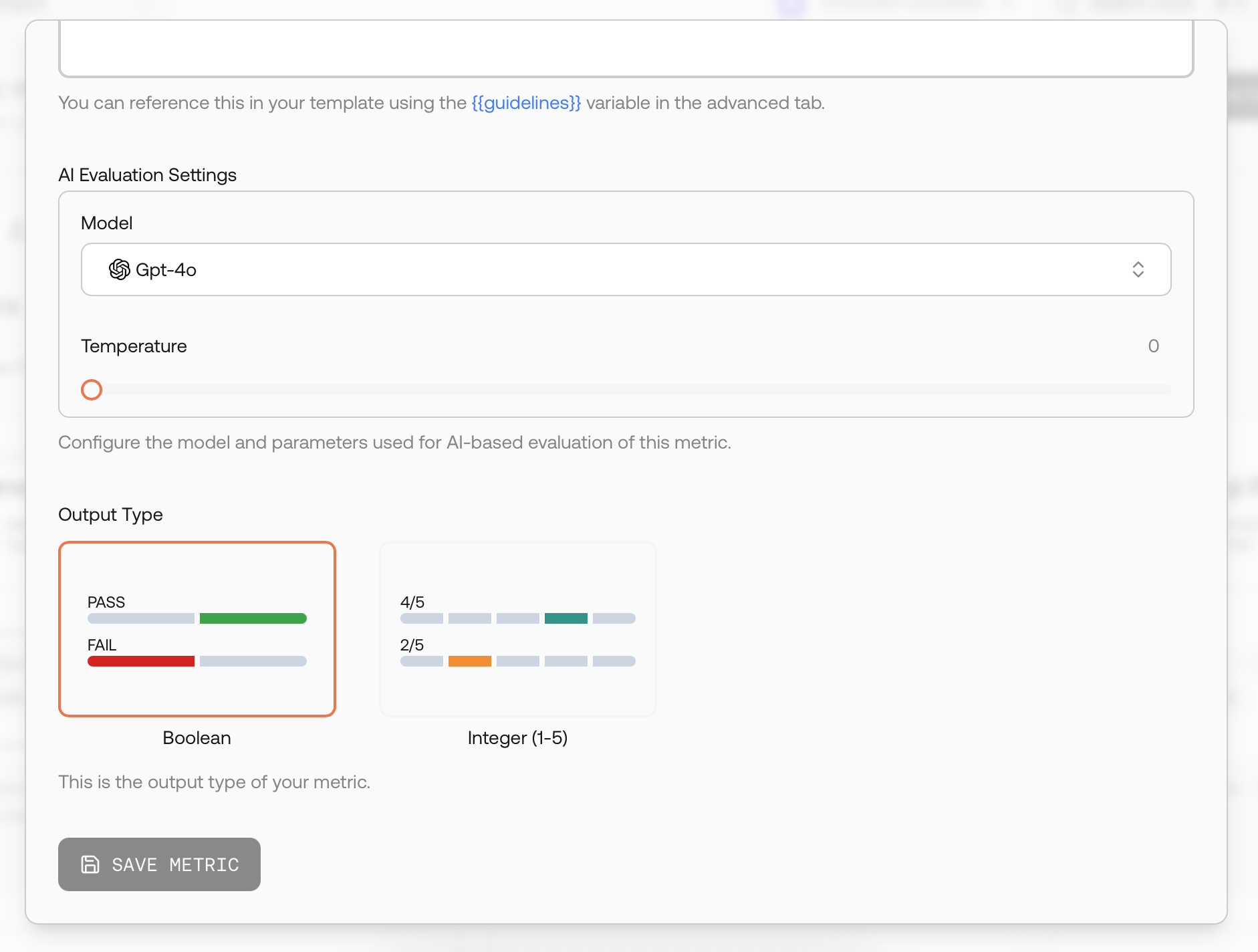

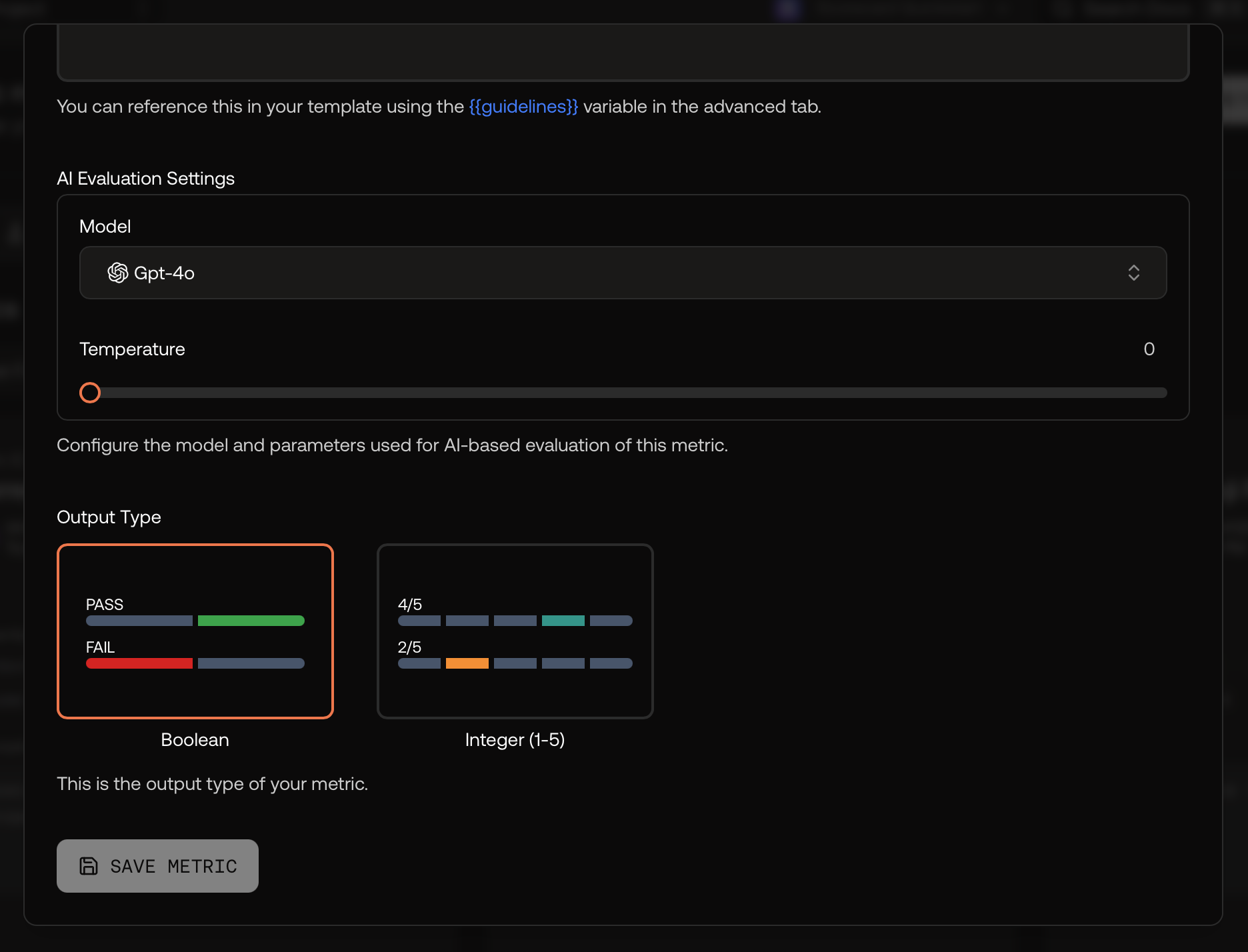

AI evaluator settings and output type.

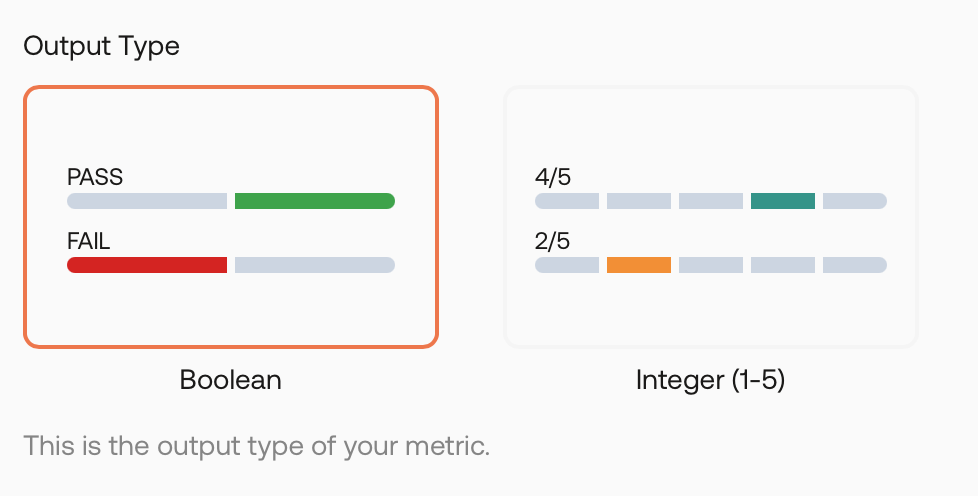

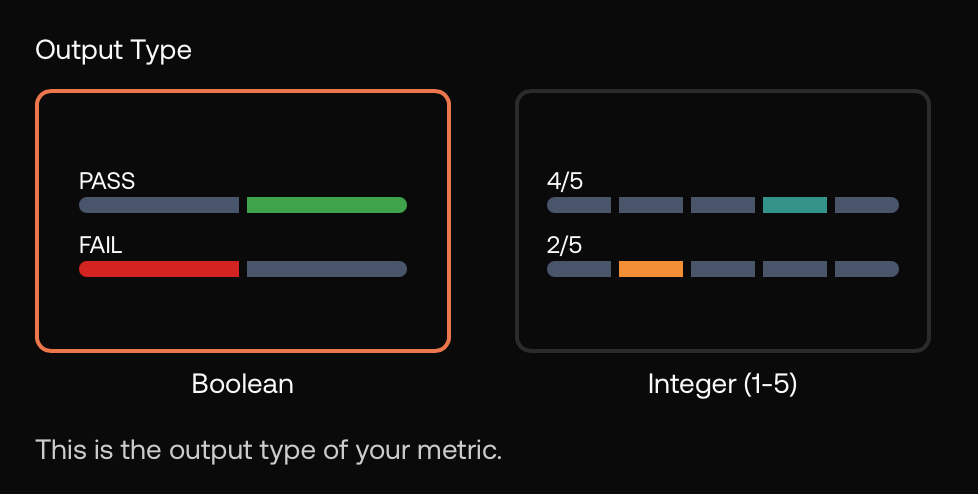

Choose Boolean (pass/fail) or Integer (1–5).

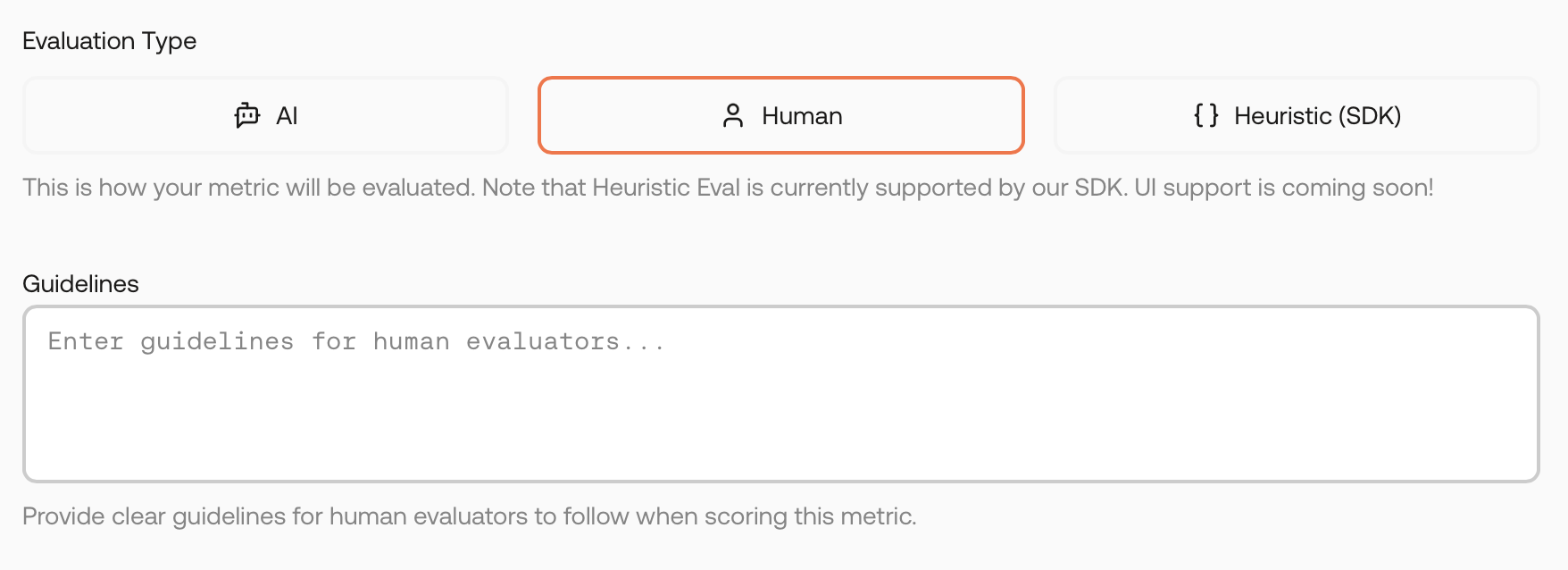

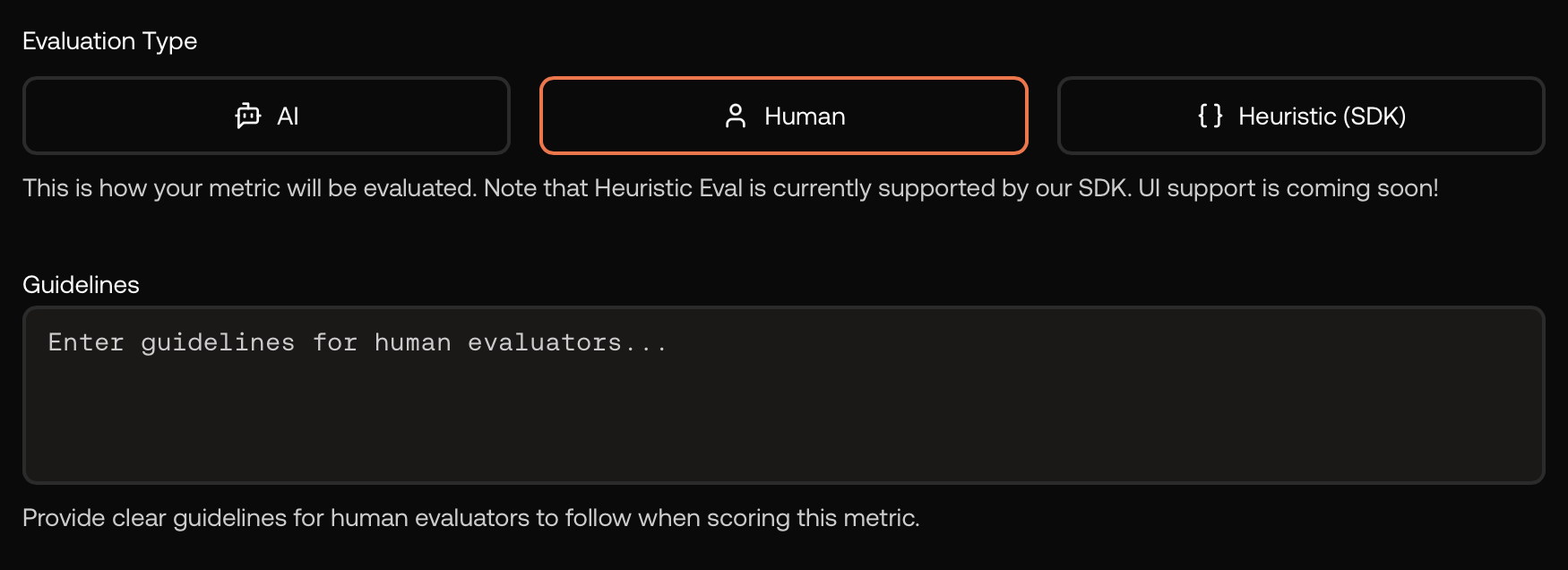
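If you want to keep metric definitions under review alongside your code, the fields in the New Metric modal can be mirrored locally. The sketch below is a minimal illustration, assuming a hypothetical `MetricDefinition` dataclass and `validate_score` helper (neither is part of Scorecard's SDK). It checks that a score matches the chosen output type: Boolean scores must be `True`/`False`, and Integer scores must be whole numbers from 1 to 5.

```python
from dataclasses import dataclass
from typing import Literal, Union

# Hypothetical local representation of a metric definition; NOT Scorecard's SDK.
OutputType = Literal["boolean", "integer"]
EvalType = Literal["ai", "human", "heuristic"]


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    description: str
    evaluation_type: EvalType
    guidelines: str
    output_type: OutputType
    temperature: float = 0.0  # near 0 keeps AI scoring repeatable

    def validate_score(self, score: Union[bool, int]) -> Union[bool, int]:
        """Raise ValueError if the score does not match this metric's output type."""
        if self.output_type == "boolean":
            if not isinstance(score, bool):
                raise ValueError(f"{self.name}: expected pass/fail (bool), got {score!r}")
            return score
        # bool is a subclass of int in Python, so reject it explicitly here
        if isinstance(score, bool) or not isinstance(score, int):
            raise ValueError(f"{self.name}: expected an integer 1-5, got {score!r}")
        if not 1 <= score <= 5:
            raise ValueError(f"{self.name}: score {score} is outside 1-5")
        return score


relevance = MetricDefinition(
    name="Relevance",
    description="Does the answer address the user's question?",
    evaluation_type="ai",
    guidelines="Score 5 if the answer fully addresses the question; 1 if it is off-topic.",
    output_type="integer",
)
print(relevance.validate_score(4))  # 4
```

Rejecting `bool` for Integer metrics matters because Python would otherwise accept `True` as the integer 1.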

Optional: Human‑scored metric

Human evaluation – provide instructions for reviewers.

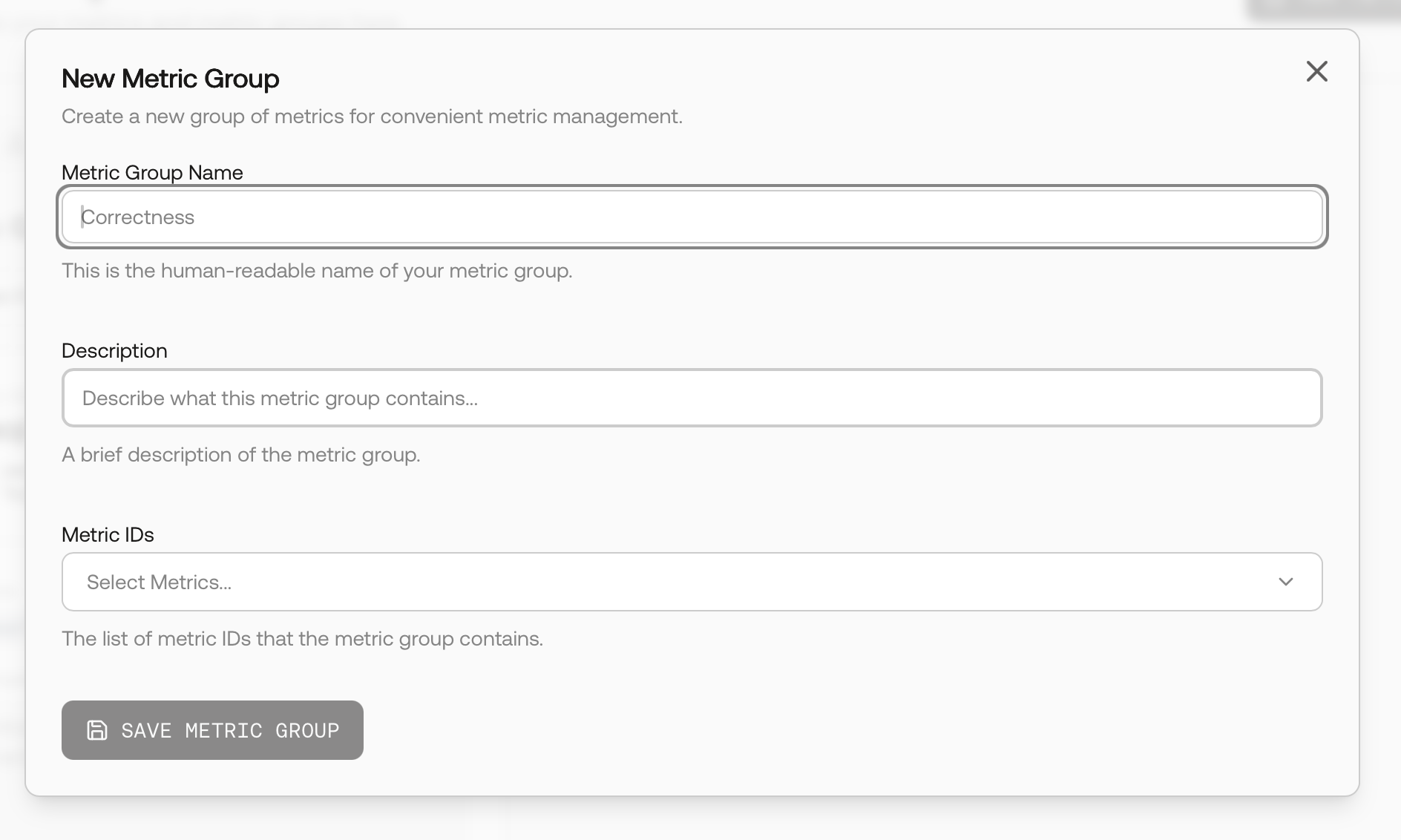

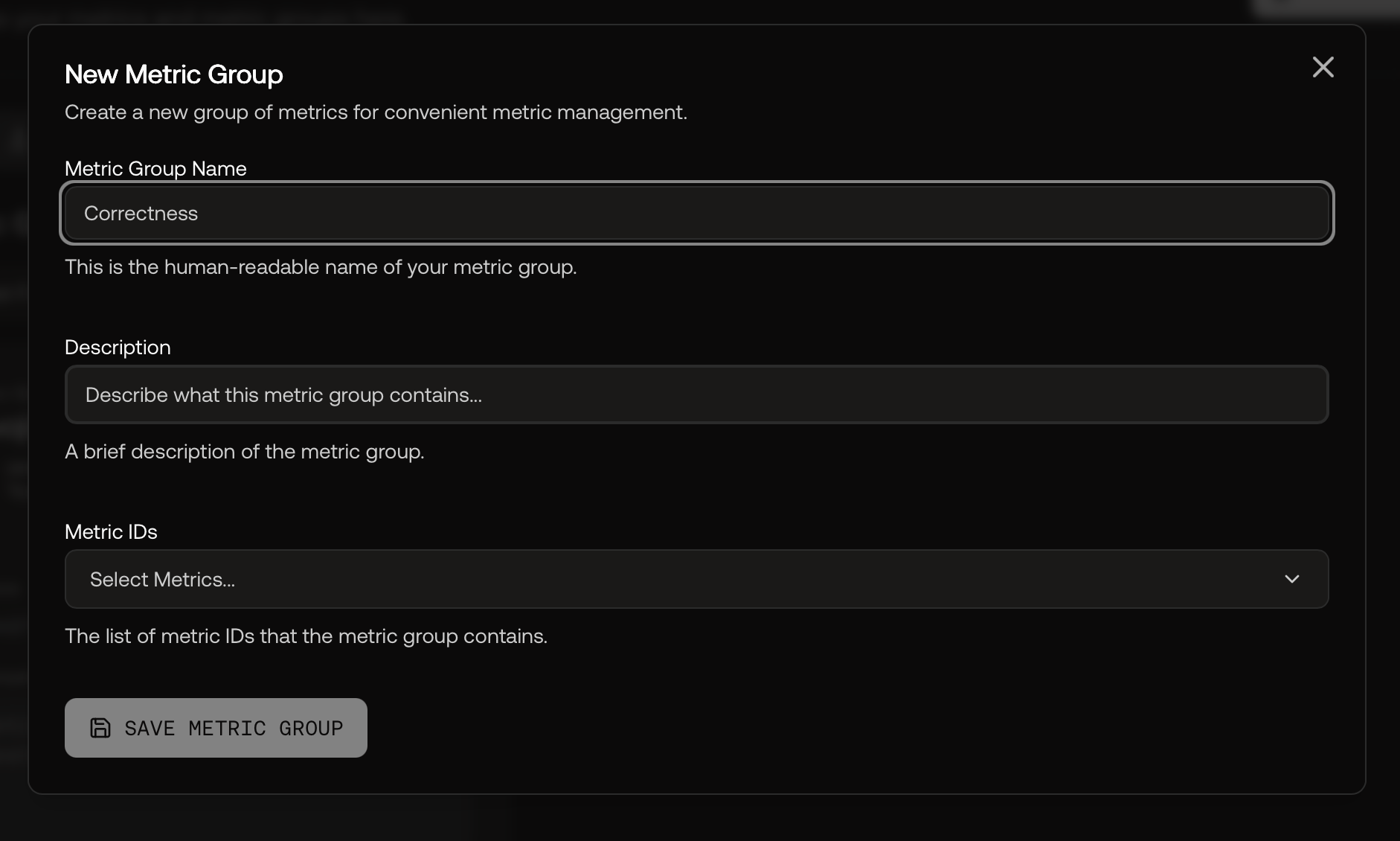

Create a Metric Group

Create a Metric Group and select metrics to include.

Metric Groups overview.

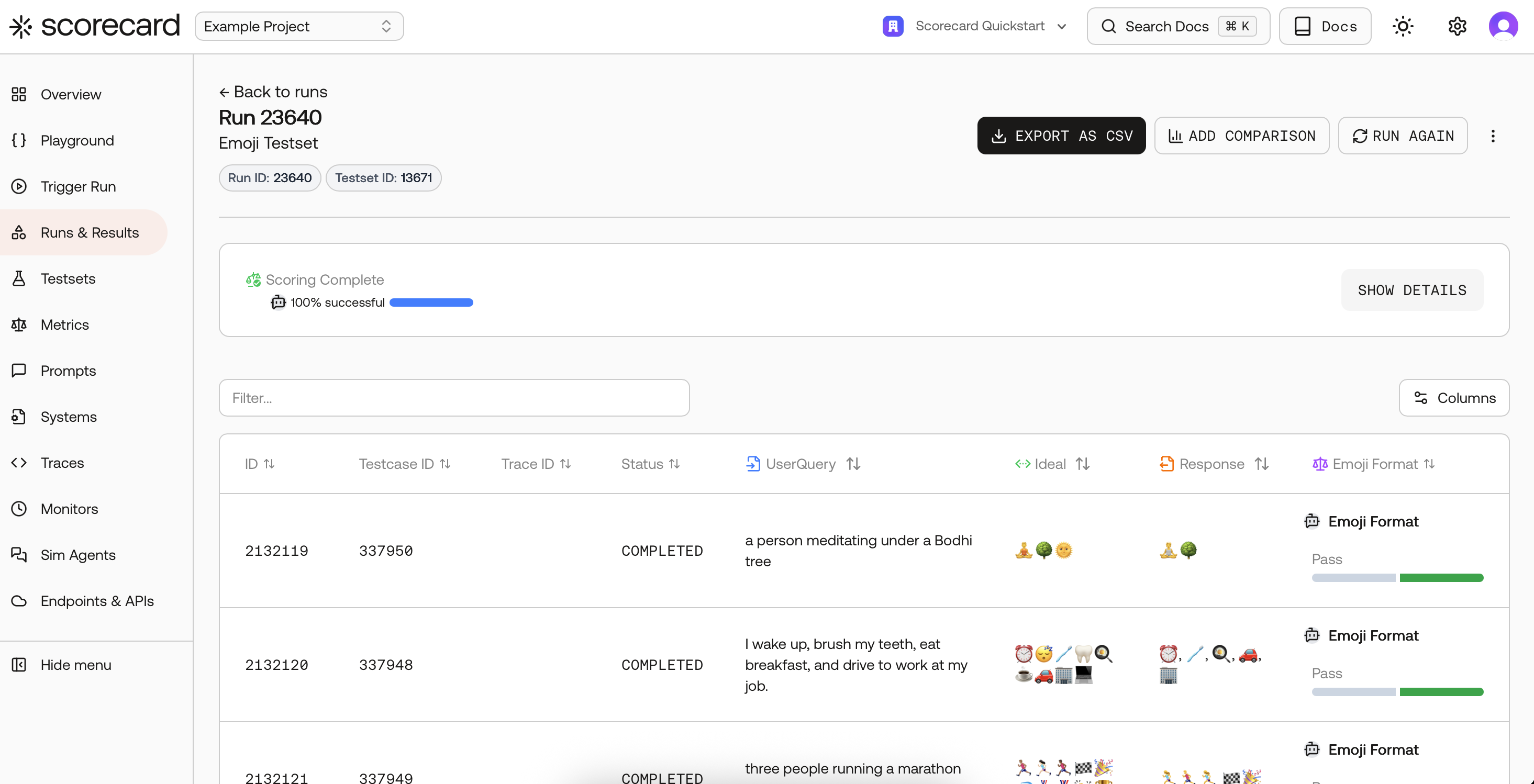

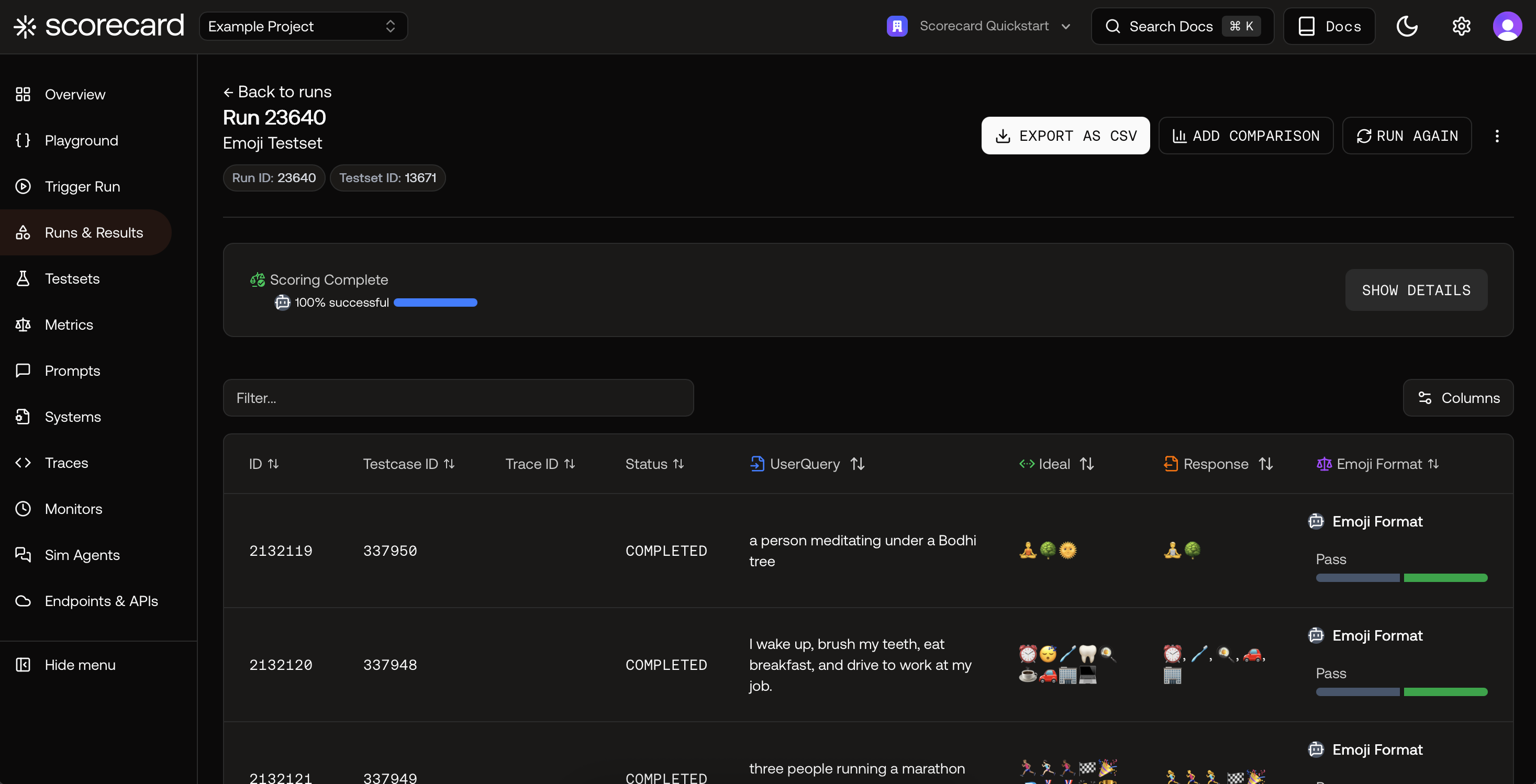
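A Metric Group is a named bundle of metrics, so selecting a group is equivalent to selecting each of its metrics. As a minimal sketch, assuming a hypothetical in-memory `METRIC_GROUPS` mapping (not how Scorecard stores groups), a run's selection of groups and individual metric names can be expanded into one ordered, de-duplicated list:

```python
from typing import Dict, Iterable, List

# Hypothetical metric groups: each group name maps to the metric names it bundles.
METRIC_GROUPS: Dict[str, List[str]] = {
    "RAG quality": ["Relevance", "Faithfulness", "Safety"],
}


def resolve_metrics(selection: Iterable[str], groups: Dict[str, List[str]]) -> List[str]:
    """Expand group names into their metrics, keep first-seen order, drop duplicates."""
    resolved: List[str] = []
    seen = set()
    for item in selection:
        # An entry that is not a group name is treated as a single metric name.
        for name in groups.get(item, [item]):
            if name not in seen:
                seen.add(name)
                resolved.append(name)
    return resolved


print(resolve_metrics(["RAG quality", "Relevance", "Tone"], METRIC_GROUPS))
# ['Relevance', 'Faithfulness', 'Safety', 'Tone']
```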

Kick off a Run and pick metrics

- Pick individual metrics by name, or select a Metric Group for consistency.

- Integer metrics show an average score on the run; Boolean metrics show a pass rate.

- Runs that include human‑scored metrics show the status Scoring until reviewers submit their scores.
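The run-level summaries described above can be reproduced from per-record scores. This is a minimal sketch under stated assumptions: the helper names `summarize_metric` and `run_status` are hypothetical, `None` stands for a record that a reviewer has not scored yet, and "Completed" is an assumed label for a finished run (only Scoring comes from this page).

```python
from typing import Dict, List, Optional, Union

Score = Union[bool, int]


def summarize_metric(output_type: str, scores: List[Optional[Score]]) -> Optional[float]:
    """Integer metrics -> average score; Boolean metrics -> pass rate (0.0-1.0).

    None marks a record that has not been scored yet; it is left out of the summary.
    """
    done = [s for s in scores if s is not None]
    if not done:
        return None
    if output_type == "boolean":
        return sum(1 for s in done if s) / len(done)
    return sum(done) / len(done)


def run_status(scores_by_metric: Dict[str, List[Optional[Score]]]) -> str:
    """A run stays in 'Scoring' while any record is still missing a score."""
    pending = any(s is None for scores in scores_by_metric.values() for s in scores)
    return "Scoring" if pending else "Completed"  # "Completed" is an assumed label


run = {
    "Relevance": [4, 5, 3],
    "Faithfulness": [True, True, False, True],
    "Tone (human)": [None, 4, None],
}
print(summarize_metric("integer", run["Relevance"]))     # 4.0
print(summarize_metric("boolean", run["Faithfulness"]))  # 0.75
print(run_status(run))                                   # Scoring
```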

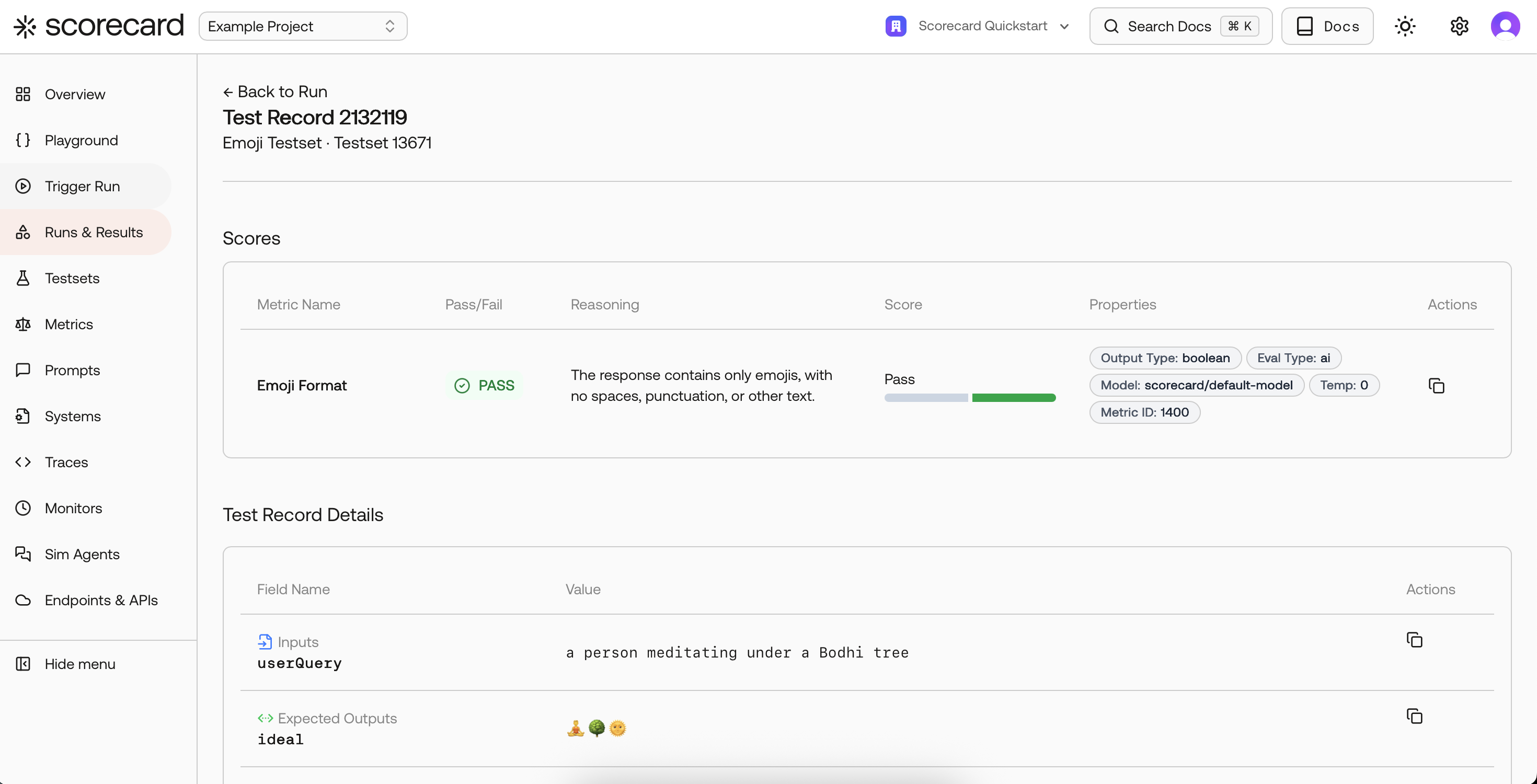

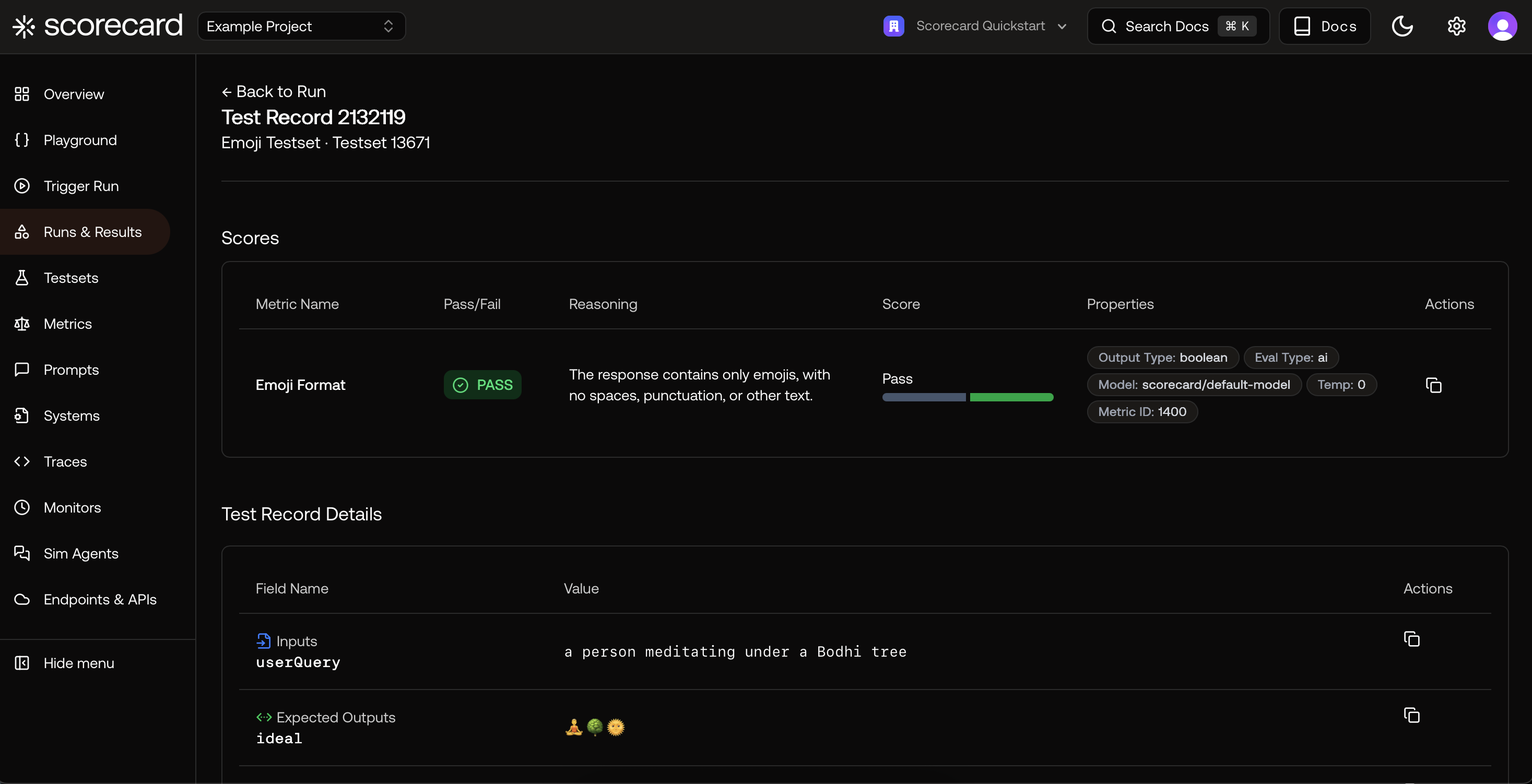

Run results with per‑record scores.

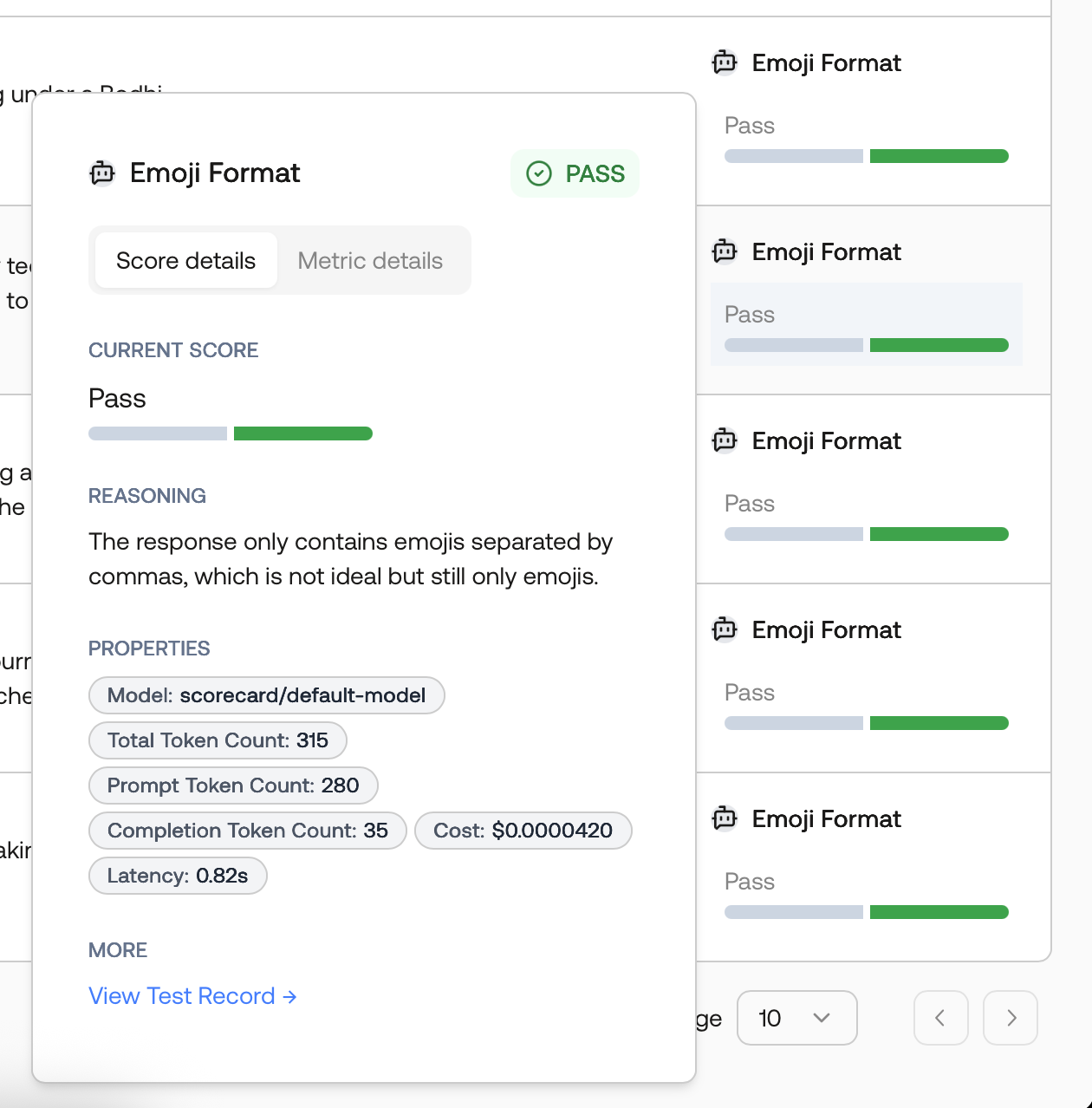

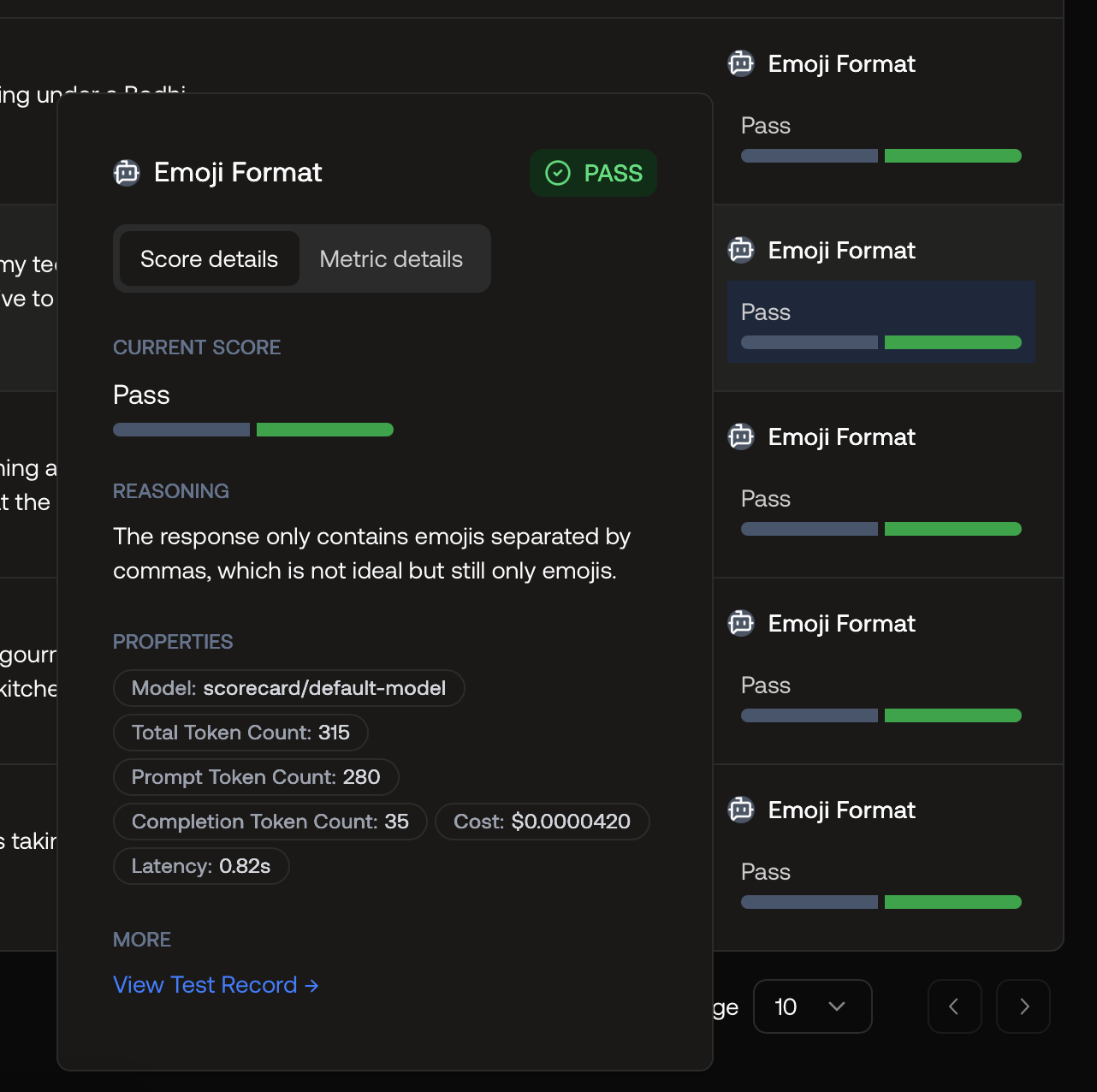

Inspect scores and explanations

Score explanation with reasoning and model details.

Record view showing inputs/outputs and metric results.

Metric types

- AI‑scored: Uses a model to apply your guidelines consistently and at scale.

- Human‑scored: Great for nuanced judgments or gold‑standard baselines.

- Heuristic (SDK): Deterministic, code‑based checks via the SDK (e.g., latency, regex, policy flags).

- Output types: Choose Boolean (pass/fail) or Integer (1–5).
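Heuristic metrics are ordinary deterministic functions, so the same input always produces the same score. The sketch below shows three pass/fail checks matching the examples above (latency, regex, policy flags). The function names, the 2000 ms budget, and the email pattern are illustrative assumptions; they are plain Python, not Scorecard SDK calls, and wiring them into the SDK is not shown here.

```python
import re
from typing import Iterable

# Illustrative heuristic checks; plain functions returning pass/fail (bool).
# Simple, deliberately loose pattern for anything that looks like an email address.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def within_latency(latency_ms: float, budget_ms: float = 2000.0) -> bool:
    """Pass if the response arrived within the (assumed) latency budget."""
    return latency_ms <= budget_ms


def no_email_leak(output: str) -> bool:
    """Pass if the output contains nothing that looks like an email address."""
    return EMAIL_RE.search(output) is None


def no_banned_terms(output: str, banned: Iterable[str]) -> bool:
    """Pass if none of the policy-flagged terms appear (case-insensitive)."""
    lowered = output.lower()
    return not any(term.lower() in lowered for term in banned)


print(within_latency(850))                                   # True
print(no_email_leak("Contact me at jane@example.com"))       # False
print(no_banned_terms("Here is a safe answer.", ["password"]))  # True
```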

Second‑party metrics (optional)

If you already use established evaluation libraries, you can mirror those metrics in Scorecard:

- MLflow genai: Relevance, Answer Relevance, Faithfulness, Answer Correctness, Answer Similarity

- RAGAS: Faithfulness, Answer Relevancy, Context Recall, Context Precision, Context Relevancy, Answer Semantic Similarity
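When a library reports a continuous score, it has to be mapped onto one of Scorecard's output types before the numbers line up. RAGAS metrics typically return a float between 0 and 1; one possible mapping, sketched below, rescales linearly onto the Integer 1–5 scale or applies a pass/fail threshold for Boolean. Both the linear mapping and the 0.7 threshold are assumptions for illustration, not a documented Scorecard conversion; calibrate them against your own pilot data.

```python
def to_integer_scale(score: float, low: int = 1, high: int = 5) -> int:
    """Linearly map a score in [0, 1] onto the integers low..high.

    Uses Python's round(), which rounds exact halves to the nearest even integer.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"expected a score in [0, 1], got {score}")
    return round(low + score * (high - low))


def to_pass_fail(score: float, threshold: float = 0.7) -> bool:
    """Pass if the 0-1 score meets an assumed threshold."""
    return score >= threshold


print(to_integer_scale(0.82))  # 4
print(to_pass_fail(0.82))      # True
```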

Best practices for strong metrics

- Be specific. Minimize ambiguity in guidelines; include “what not to do.”

- Pick the right output type. Use Boolean for hard requirements; 1–5 for nuance.

- Keep temperature low. Set the AI evaluator's temperature to about 0 for repeatable scoring.

- Pilot and tighten. Run on 10–20 cases, then refine wording to reduce false positives.

- Bundle into groups. Combine complementary checks (e.g., Relevance + Faithfulness + Safety) to keep evaluations consistent.
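To make "pilot and tighten" measurable, compare the AI evaluator's pass/fail scores with human labels on the same small set of cases. The sketch below assumes a Boolean metric and treats "pass" as the positive class, so a false positive is a case the AI passed but a reviewer failed; the `pilot_agreement` helper and the ten example labels are hypothetical.

```python
from typing import List, Tuple


def pilot_agreement(ai: List[bool], human: List[bool]) -> Tuple[float, int, int]:
    """Compare AI pass/fail scores with human labels on the same pilot cases.

    Returns (agreement rate, false positives, false negatives), where a false
    positive is a case the AI passed but the human reviewer failed.
    """
    if len(ai) != len(human) or not ai:
        raise ValueError("need equal-length, non-empty score lists")
    agree = sum(a == h for a, h in zip(ai, human))
    false_pos = sum(a and not h for a, h in zip(ai, human))
    false_neg = sum(h and not a for a, h in zip(ai, human))
    return agree / len(ai), false_pos, false_neg


# Ten hypothetical pilot cases, scored by the AI evaluator and by a reviewer.
ai_scores = [True, True, False, True, True, False, True, True, True, False]
human_labels = [True, False, False, True, True, False, True, False, True, False]
print(pilot_agreement(ai_scores, human_labels))  # (0.8, 2, 0)
```

Two false positives and no false negatives would suggest the guidelines are too lenient, which is the cue to tighten the wording and rerun the pilot.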