Operating System - Critical Section Problem

Critical Section

A critical section is a code segment where shared variables can be accessed. Execution of a critical section must be atomic with respect to other processes: only one process can execute in it at a time, while all other processes must wait to enter their critical sections.

The critical section contains shared variables or resources that must be synchronized to maintain data consistency. It is the group of instructions that performs an operation such as resource access, which must not be interleaved with the same operation in another process. If one process modifies shared data while another process reads the same value simultaneously, the result is unpredictable. Therefore, access to shared variables must be synchronized.
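To make the "unpredictable result" concrete, the sketch below replays two hypothetical schedules of the statement x = x + 1, split into a separate read step and write step, as a CPU would execute it. It uses no real threads, so the outcome is deterministic; the function name run and the step labels are illustrative assumptions.

```python
def run(schedule):
    """Replay a schedule of (process, step) pairs on a shared variable x, initially 0.

    Each process performs x = x + 1 as two separate steps: 'read' copies x into a
    private register, and 'write' stores register + 1 back into x.
    """
    x = 0
    register = {}                      # one private register per process
    for pid, step in schedule:
        if step == "read":
            register[pid] = x
        elif step == "write":
            x = register[pid] + 1
        else:
            raise ValueError(f"unknown step {step!r}")
    return x

# P0 finishes before P1 starts: both increments survive.
serial = run([("P0", "read"), ("P0", "write"), ("P1", "read"), ("P1", "write")])
# Both processes read 0 before either writes: one increment is lost.
interleaved = run([("P0", "read"), ("P1", "read"), ("P0", "write"), ("P1", "write")])
print(serial, interleaved)  # 2 1
```

Treating the read-modify-write sequence as a critical section rules out the second schedule.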

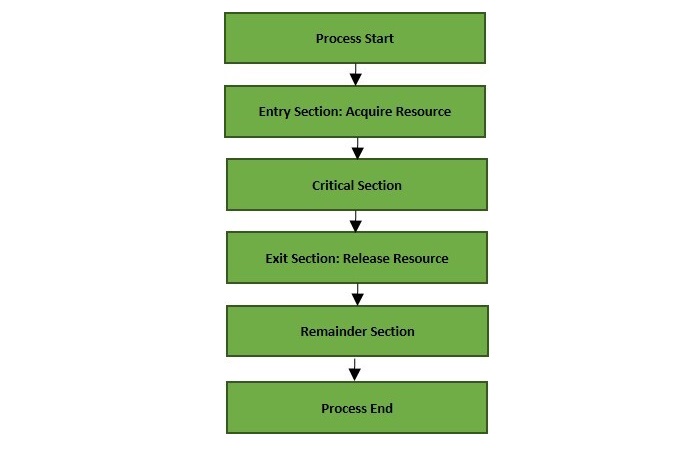

A diagram that demonstrates the critical section is as follows

In the above diagram, the entry section manages the entry into the critical section. It acquires the resources needed for process execution. The exit section manages the exit from the critical section, releasing the resources and informing other processes that the critical section is free.
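The entry/exit structure described above can be sketched in Python, using threads as stand-ins for processes and a threading.Lock as the synchronization primitive. The function name process and the iteration counts are illustrative assumptions. Without the lock, the counter += 1 updates could interleave and lose increments; with it, every increment is applied.

```python
import threading

lock = threading.Lock()   # guards the shared counter below
counter = 0               # shared variable, accessed only inside the critical section

def process(iterations):
    global counter
    for _ in range(iterations):
        lock.acquire()            # entry section: wait until the critical section is free
        try:
            counter += 1          # critical section: read-modify-write of shared data
        finally:
            lock.release()        # exit section: let another process enter
        # remainder section: work that touches no shared data would go here

threads = [threading.Thread(target=process, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000
```

The try/finally ensures the exit section runs even if the critical section raises an exception, so the lock is never left held.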

Critical Section Problem

The critical section problem requires a solution to synchronize different processes. The solution must satisfy the following conditions −

- Mutual Exclusion: Only one process can be inside the critical section at a time. If other processes require the critical section, they must wait until it is free. Formally, if process Pi is executing in its critical section, no other process can be executing in its critical section.

  This requirement exists because the resources involved are non-shareable: only one process at a time can have exclusive control of such a resource, and if another process requests it, that process must be delayed until the resource is released.

- Progress: A process that is not using the critical section must not prevent other processes from entering it. In other words, if the critical section is free, a process that wants to enter should be able to do so.

  More precisely, if no process is executing in its critical section and some processes wish to enter their critical sections, then only those processes that are not executing in their remainder sections can participate in deciding which will enter next, and this selection cannot be postponed indefinitely.

- Bounded Waiting: Each process must have a limited waiting time and must not wait endlessly to access the critical section. There exists a bound on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted.
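For a two-process protocol, a condition such as mutual exclusion can be checked mechanically by exploring every interleaving of atomic steps. The sketch below does that for Peterson's solution (a classic two-process algorithm) and for a deliberately flawed protocol that checks the other process's flag and only then sets its own. It assumes each labelled step executes atomically and that memory is sequentially consistent; real hardware may reorder loads and stores, which is why production code relies on atomic instructions or locks. The step labels and function names are hypothetical.

```python
from collections import deque

CRITICAL = "cs"
# A state is (pc, flag, turn): pc and flag are 2-tuples indexed by process id.

def peterson_step(state, i):
    """Execute one atomic step of Peterson's entry/exit protocol for process i."""
    pc, flag, turn = state
    j = 1 - i
    pc, flag = list(pc), list(flag)
    if pc[i] == "remainder":      # entry section, step 1: announce interest
        flag[i] = True
        pc[i] = "set_turn"
    elif pc[i] == "set_turn":     # entry section, step 2: give priority to the other process
        turn = j
        pc[i] = "wait"
    elif pc[i] == "wait":         # stay here while the other is interested and has the turn
        if not (flag[j] and turn == j):
            pc[i] = CRITICAL
    elif pc[i] == CRITICAL:       # exit section: withdraw interest
        flag[i] = False
        pc[i] = "remainder"
    return (tuple(pc), tuple(flag), turn)

def naive_step(state, i):
    """Flawed protocol: test the other flag, then set our own in a separate step."""
    pc, flag, turn = state
    j = 1 - i
    pc, flag = list(pc), list(flag)
    if pc[i] == "remainder":
        if not flag[j]:
            pc[i] = "set_flag"
    elif pc[i] == "set_flag":     # the other process may have entered in between
        flag[i] = True
        pc[i] = CRITICAL
    elif pc[i] == CRITICAL:
        flag[i] = False
        pc[i] = "remainder"
    return (tuple(pc), tuple(flag), turn)

def mutual_exclusion_holds(step):
    """Breadth-first search over all reachable states; False if both can be in the CS."""
    start = (("remainder", "remainder"), (False, False), 0)
    seen, queue = {start}, deque([start])
    while queue:
        state = queue.popleft()
        if state[0] == (CRITICAL, CRITICAL):
            return False
        for i in (0, 1):
            nxt = step(state, i)
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return True

print(mutual_exclusion_holds(peterson_step), mutual_exclusion_holds(naive_step))  # True False
```

The flawed protocol fails because both processes can see the other flag as false before either sets its own, so both enter.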

Solution to Critical Section Problem

Potential issues with solutions to the critical section problem include deadlock, starvation, and overhead. Specific strategies exist to avoid or mitigate these problems, such as using timeouts to prevent deadlocks and implementing priority inheritance to prevent priority inversion, in which a low-priority process holding a lock delays a high-priority one. Scalability is also a concern in systems where multiple nodes access shared resources. In every case, a process enters the critical section, accesses the shared resources, and then exits it, releasing those resources.
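The timeout strategy mentioned above can be sketched with Python's threading.Lock, whose acquire() method accepts a timeout in seconds and returns False if the lock could not be obtained in time. The function name try_enter and the 50 ms value are hypothetical choices for illustration.

```python
import threading

lock = threading.Lock()

def try_enter(timeout):
    """Attempt to enter the critical section; give up after `timeout` seconds."""
    if lock.acquire(timeout=timeout):
        try:
            return "entered"          # critical section work would go here
        finally:
            lock.release()
    return "timed out"                # caller can back off and retry instead of waiting forever

free_result = try_enter(0.05)         # lock is free, so entry succeeds
lock.acquire()                        # simulate another process holding the lock
held_result = try_enter(0.05)         # entry gives up after 50 ms
lock.release()
print(free_result, held_result)       # entered timed out
```

A timeout does not remove the cause of a deadlock; it only lets a waiting process notice the problem and take a recovery action such as releasing its own locks and retrying.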

To ensure that only one process executes the critical section at a time, process synchronization mechanisms such as semaphores are used. A semaphore is an integer variable, accessed only through atomic wait and signal operations, that indicates whether a resource is available and provides mutual exclusion for shared resources.

Before a process enters the critical section, it must first request access to the semaphore or mutex associated with that section. If the resource is available, the process can proceed to execute the critical section. If the resource is not available, the process must wait until it is released by the process currently executing the critical section.

When a process finishes executing the critical section, it releases the semaphore or mutex, allowing another process to enter the critical section if needed.
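The request/wait/release cycle above can be sketched as a small binary semaphore built on threading.Condition, so a waiting thread blocks instead of spinning. The class BinarySemaphore and its wait/signal method names are hypothetical teaching names, not a library API; the Condition itself uses an internal lock, so this illustrates the semantics rather than a from-scratch primitive. The counters check that no two threads were ever inside at once.

```python
import threading
import time

class BinarySemaphore:
    """Hypothetical teaching class: a semaphore whose value is 0 or 1."""

    def __init__(self):
        self._value = 1                      # 1 = resource free, 0 = resource held
        self._cond = threading.Condition()   # lets waiting threads sleep until signalled

    def wait(self):
        """Request access: block until the resource is free, then take it."""
        with self._cond:
            while self._value == 0:
                self._cond.wait()            # releases the condition's lock while sleeping
            self._value = 0

    def signal(self):
        """Release the resource and wake one waiting thread, if any."""
        with self._cond:
            self._value = 1
            self._cond.notify()

sem = BinarySemaphore()
inside = 0        # threads currently in the critical section
max_inside = 0    # largest value `inside` ever reached
entries = 0       # total completed critical-section entries

def worker(n_entries):
    global inside, max_inside, entries
    for _ in range(n_entries):
        sem.wait()                           # entry section
        inside += 1                          # critical section starts
        max_inside = max(max_inside, inside)
        entries += 1
        time.sleep(0.0005)                   # hold the section briefly to invite contention
        inside -= 1                          # critical section ends
        sem.signal()                         # exit section

threads = [threading.Thread(target=worker, args=(50,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(max_inside, entries)  # 1 200
```

In practice, Python's standard threading.Semaphore(1) or threading.Lock provides the same behavior without a custom class.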

Advantages of Critical Section

The following are the specific advantages of using critical sections in process synchronization −

Reduces Wasted CPU Cycles: When waiting processes are blocked rather than left to spin, critical sections avoid wasting CPU cycles and can enhance overall system efficiency.

Provides Mutual Exclusion: A critical section ensures mutual exclusion for shared resources, preventing multiple processes from accessing the same resource simultaneously and causing synchronization issues.

Simplifies Synchronization: Because only one process can access the shared resources at a time, reasoning about them becomes simpler, reducing the need for more complex synchronization mechanisms.