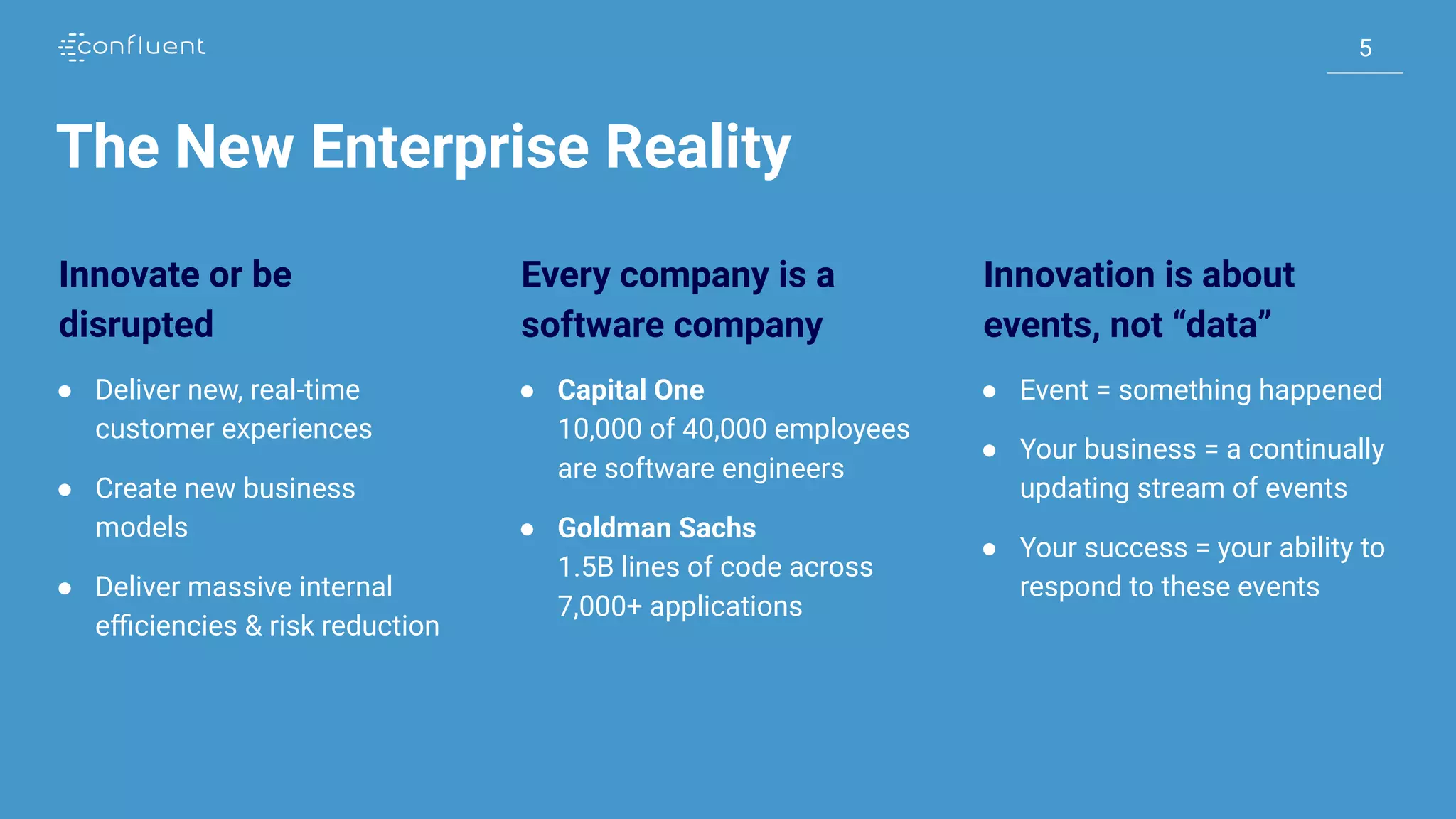

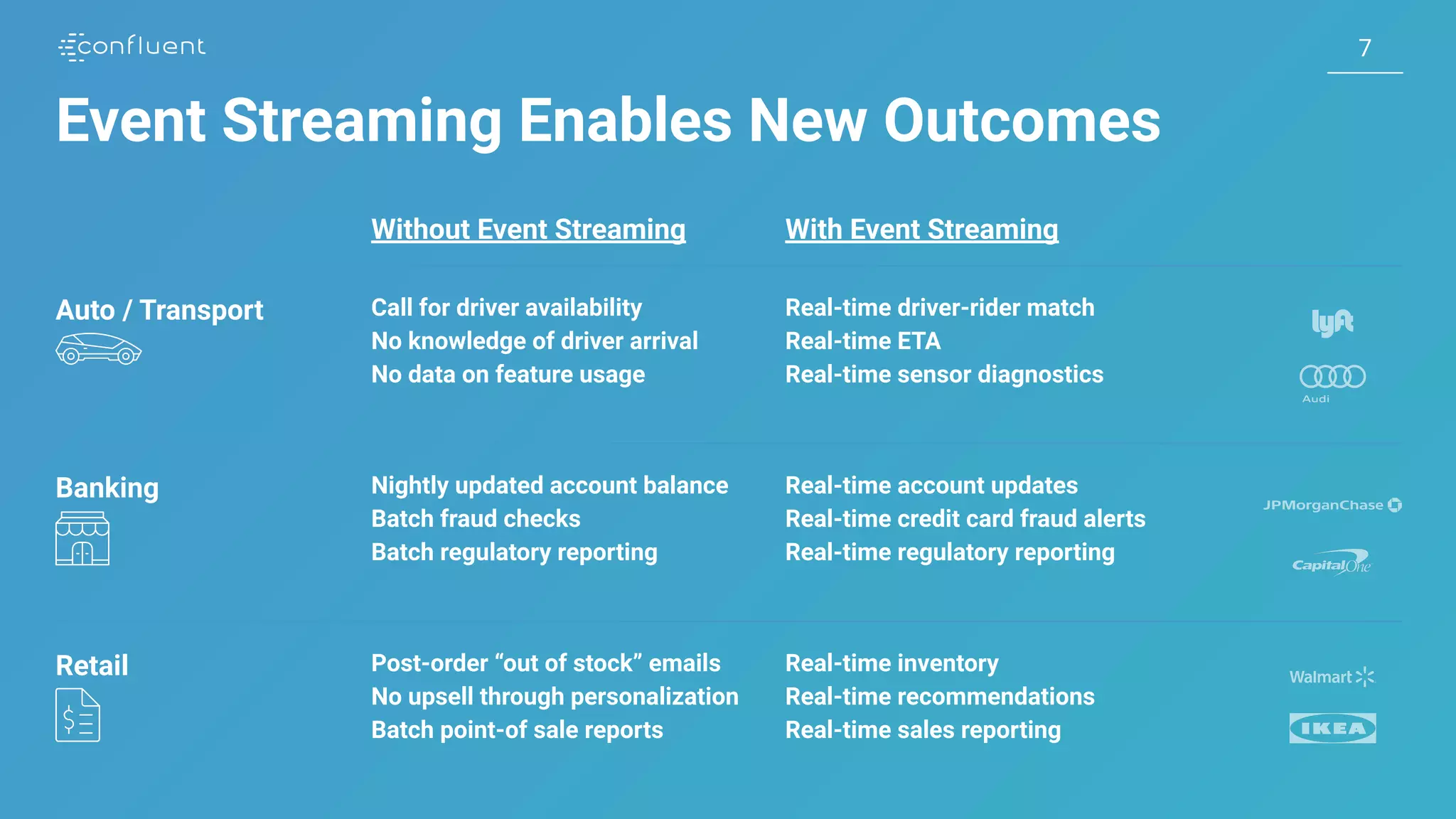

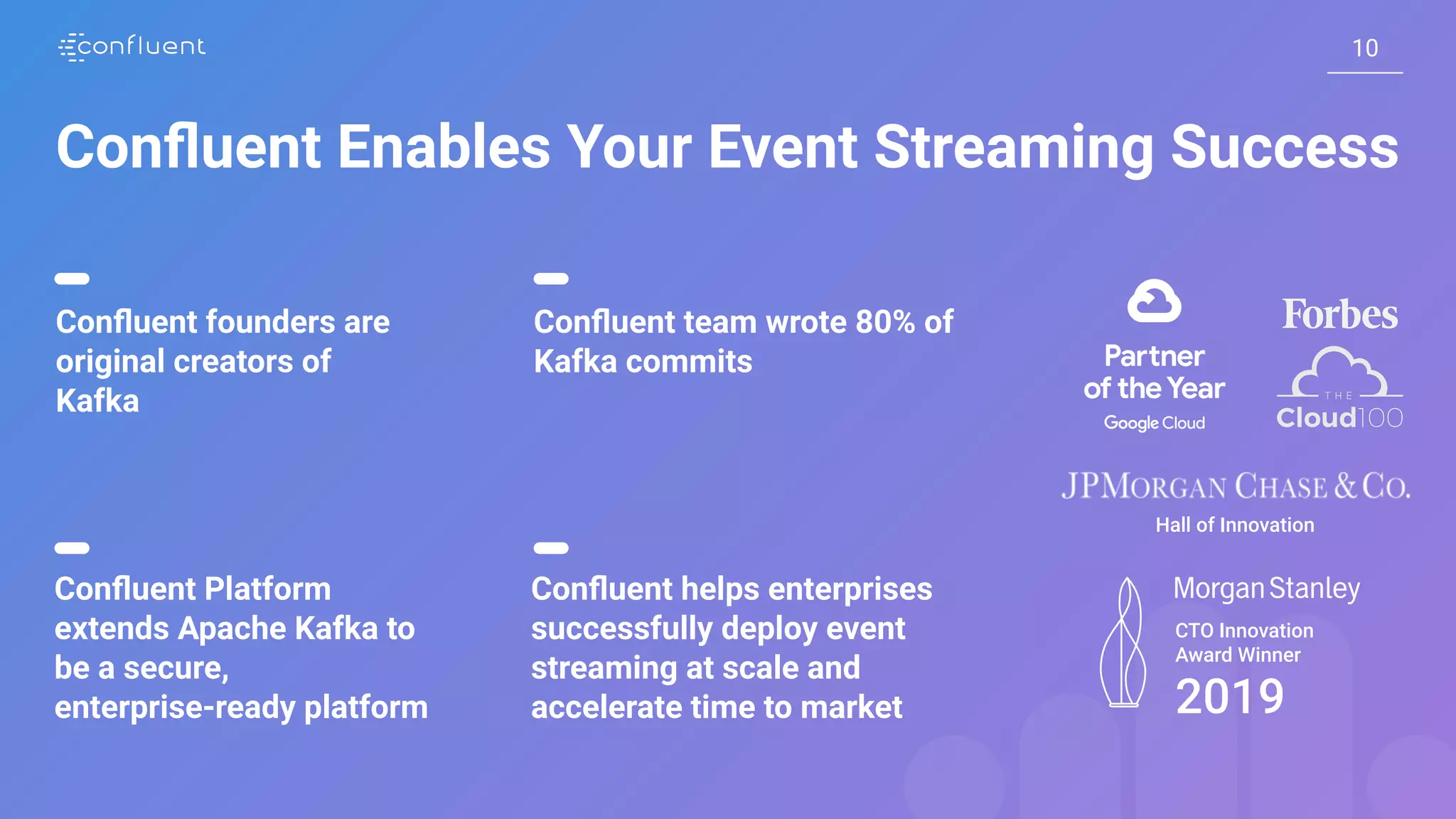

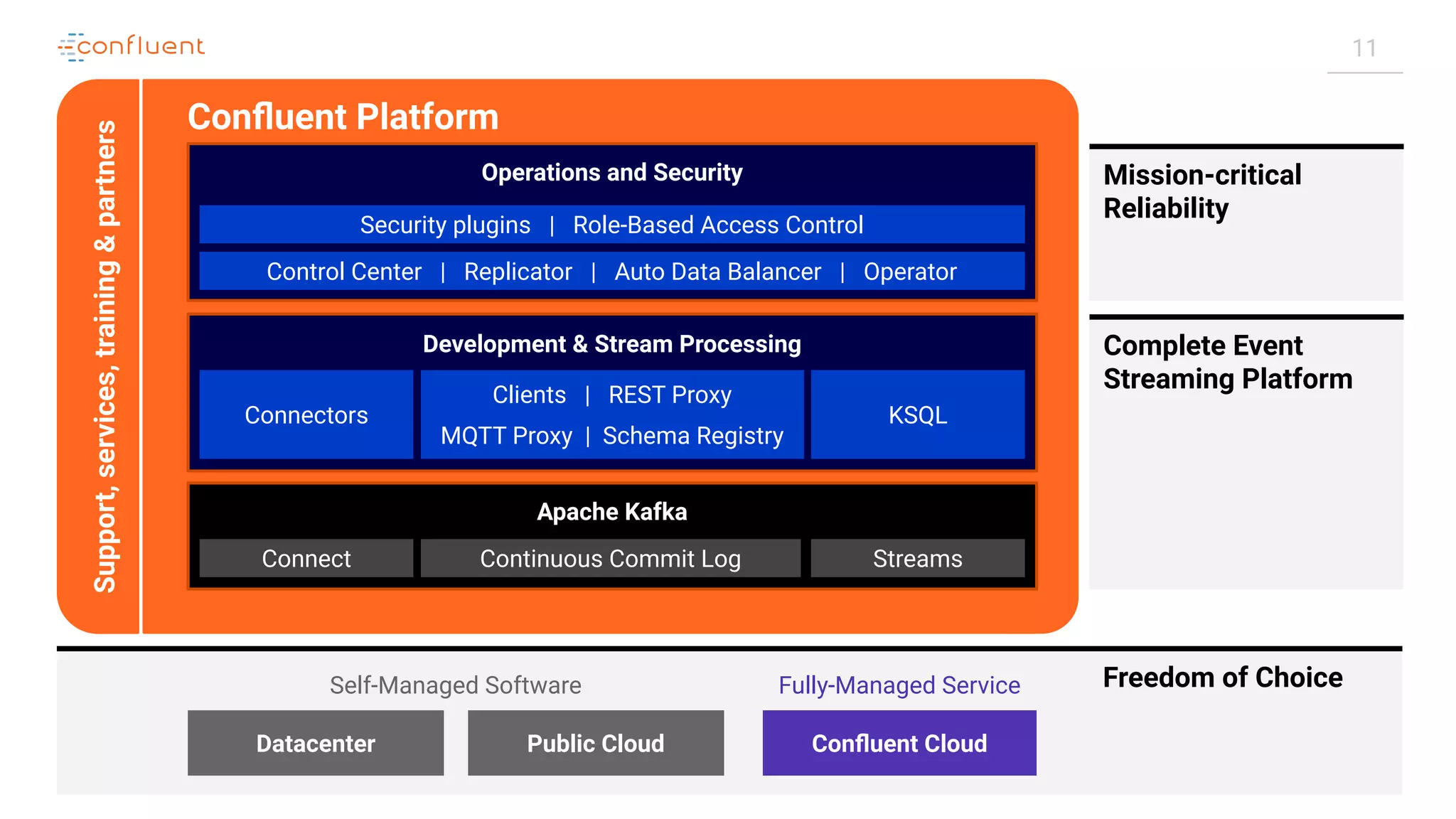

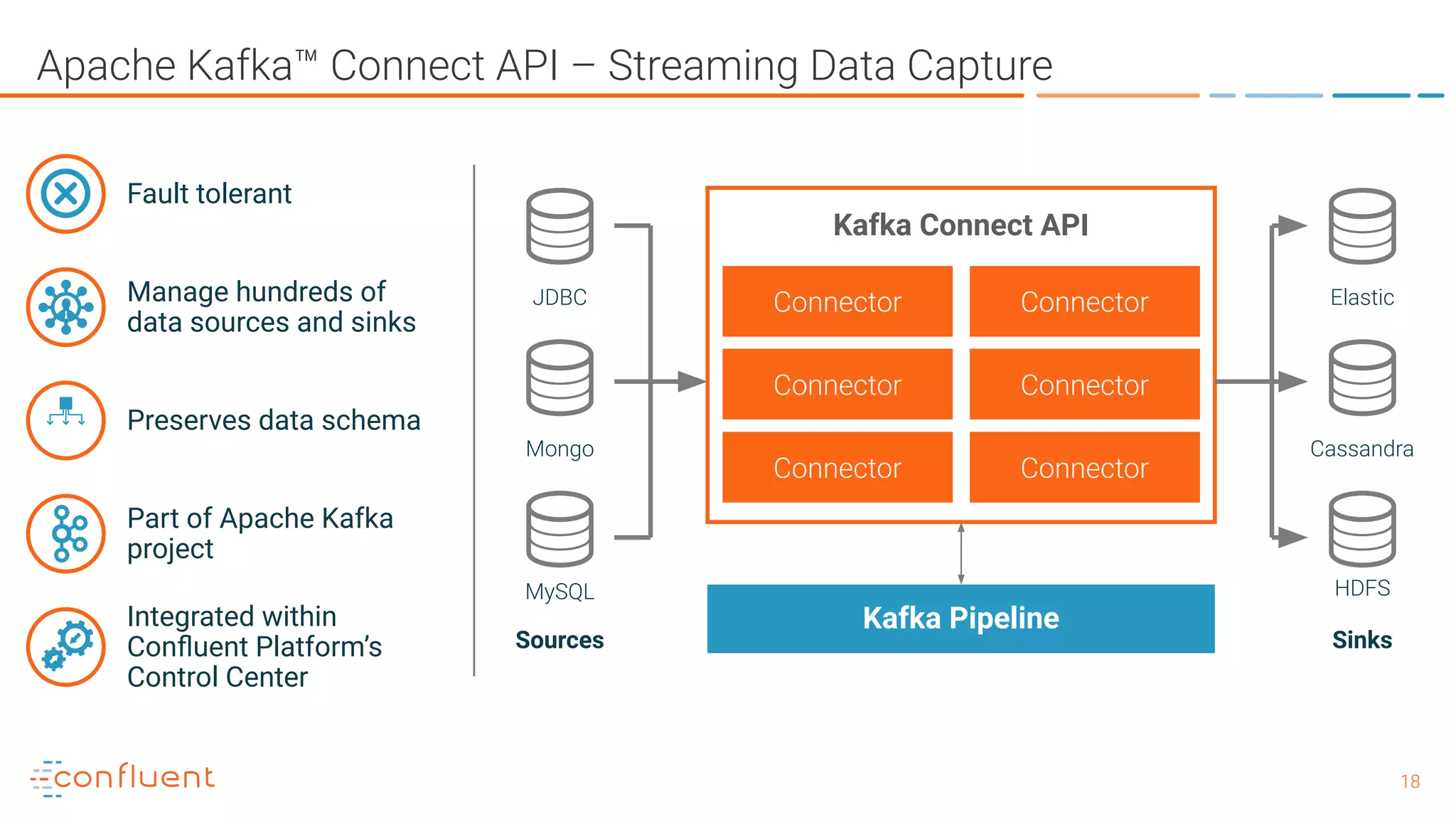

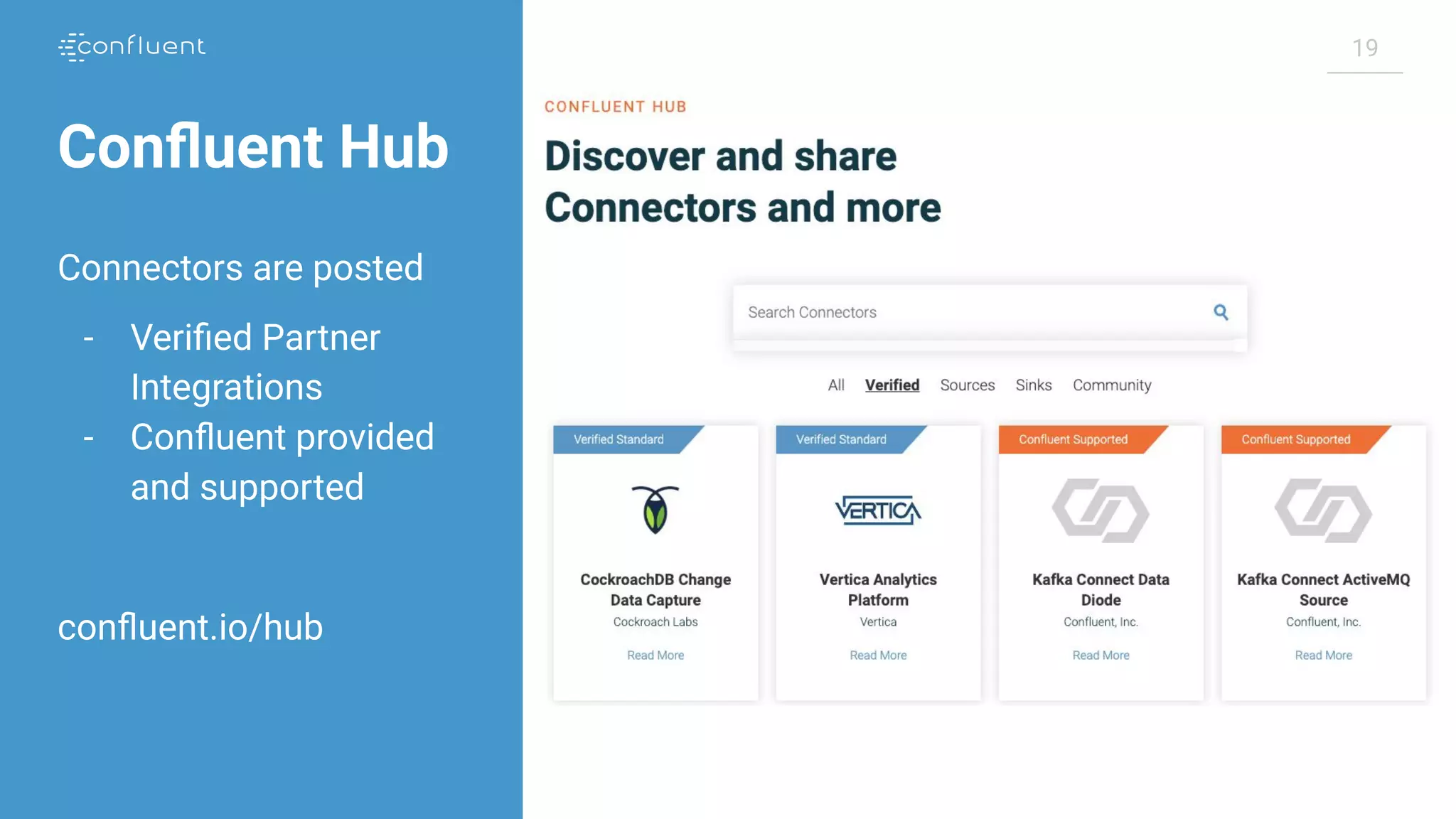

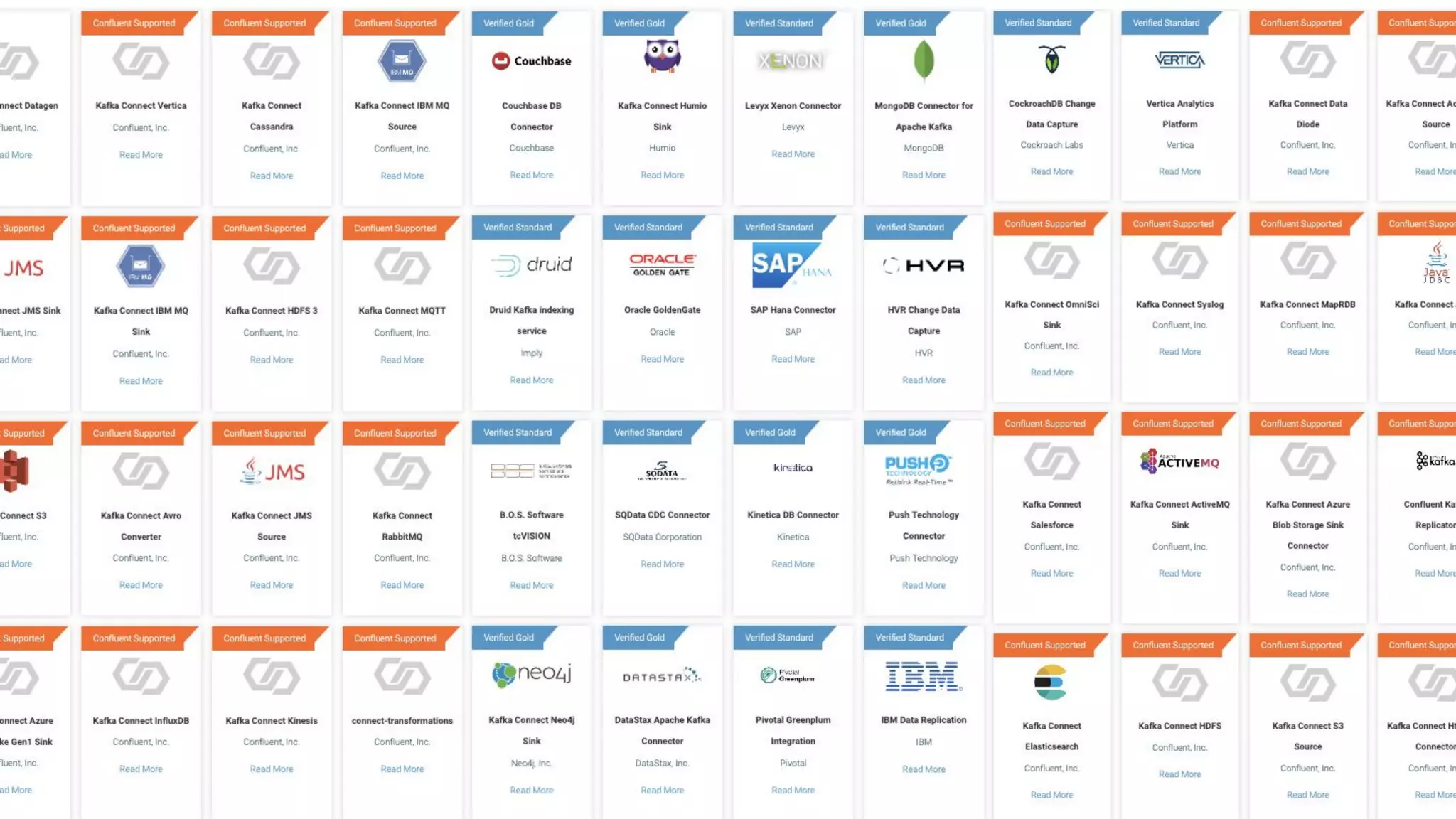

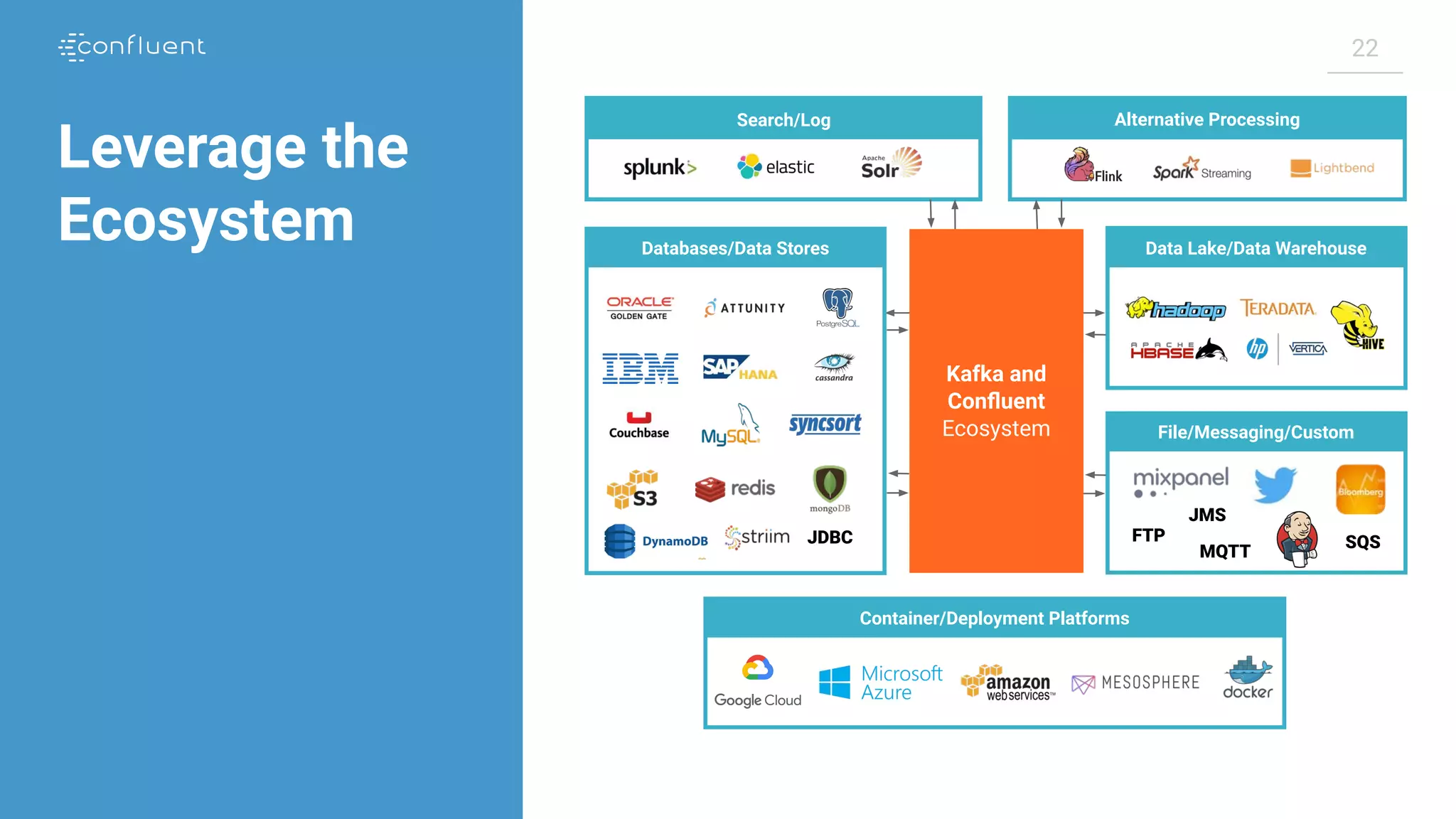

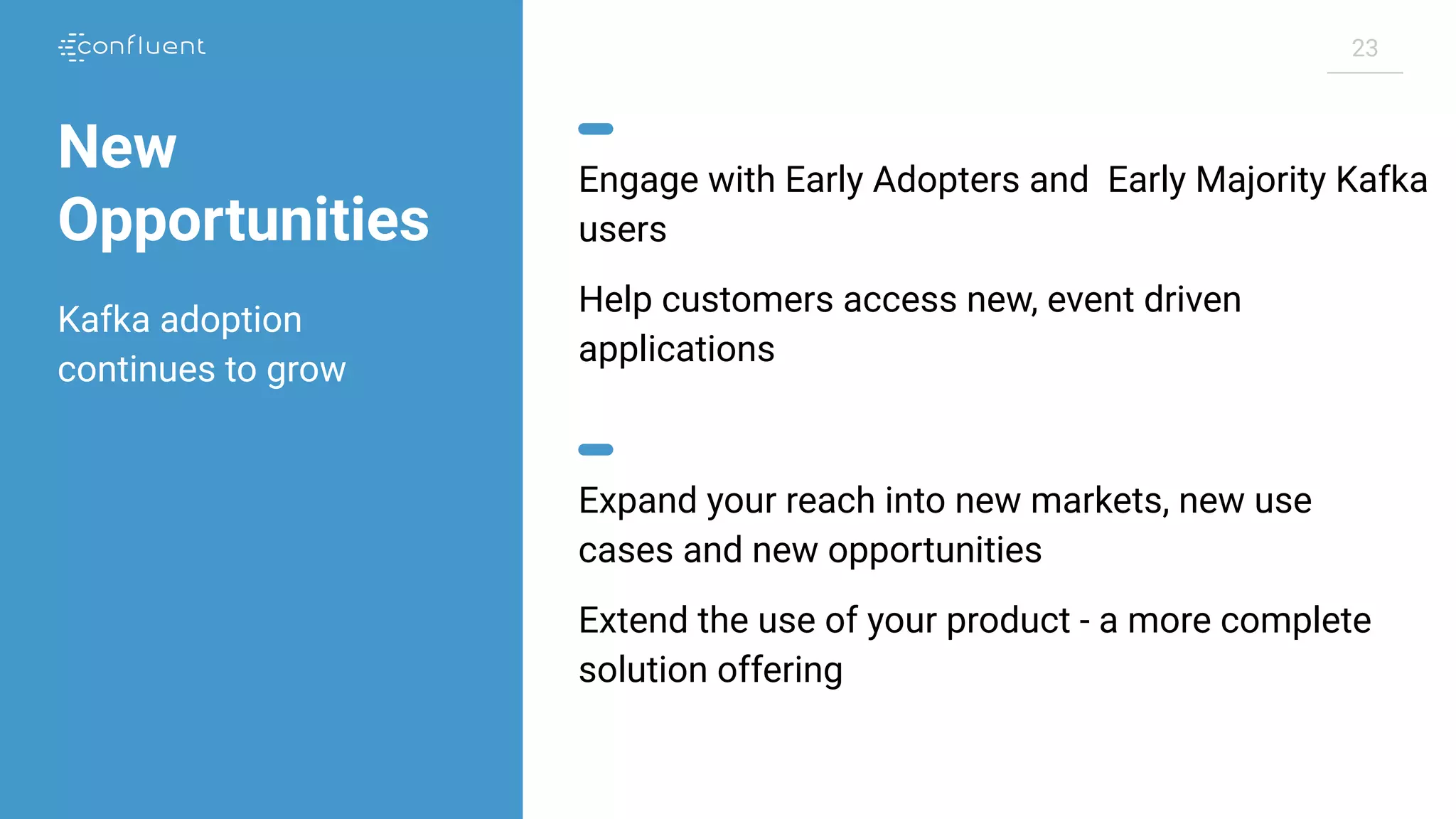

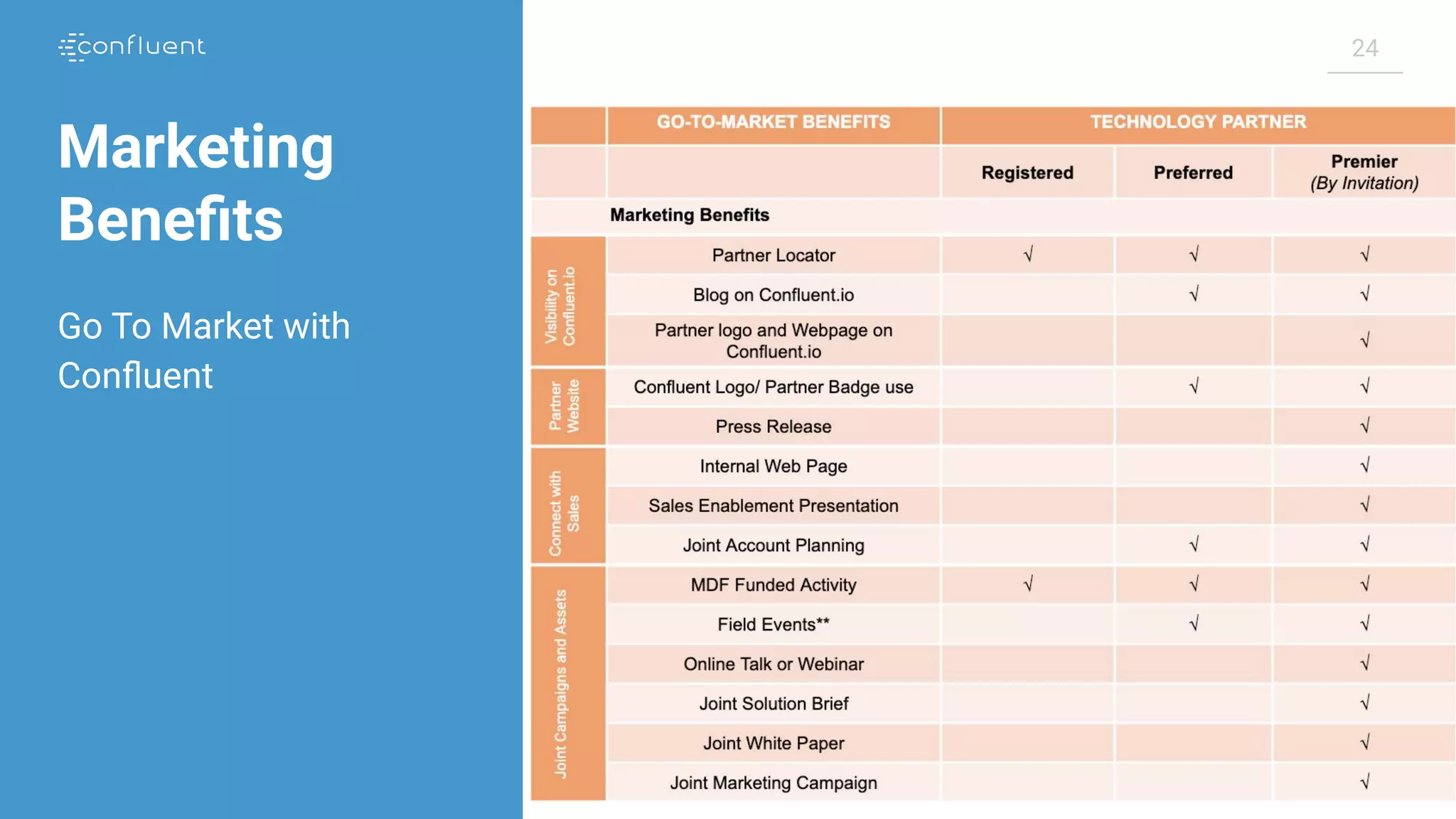

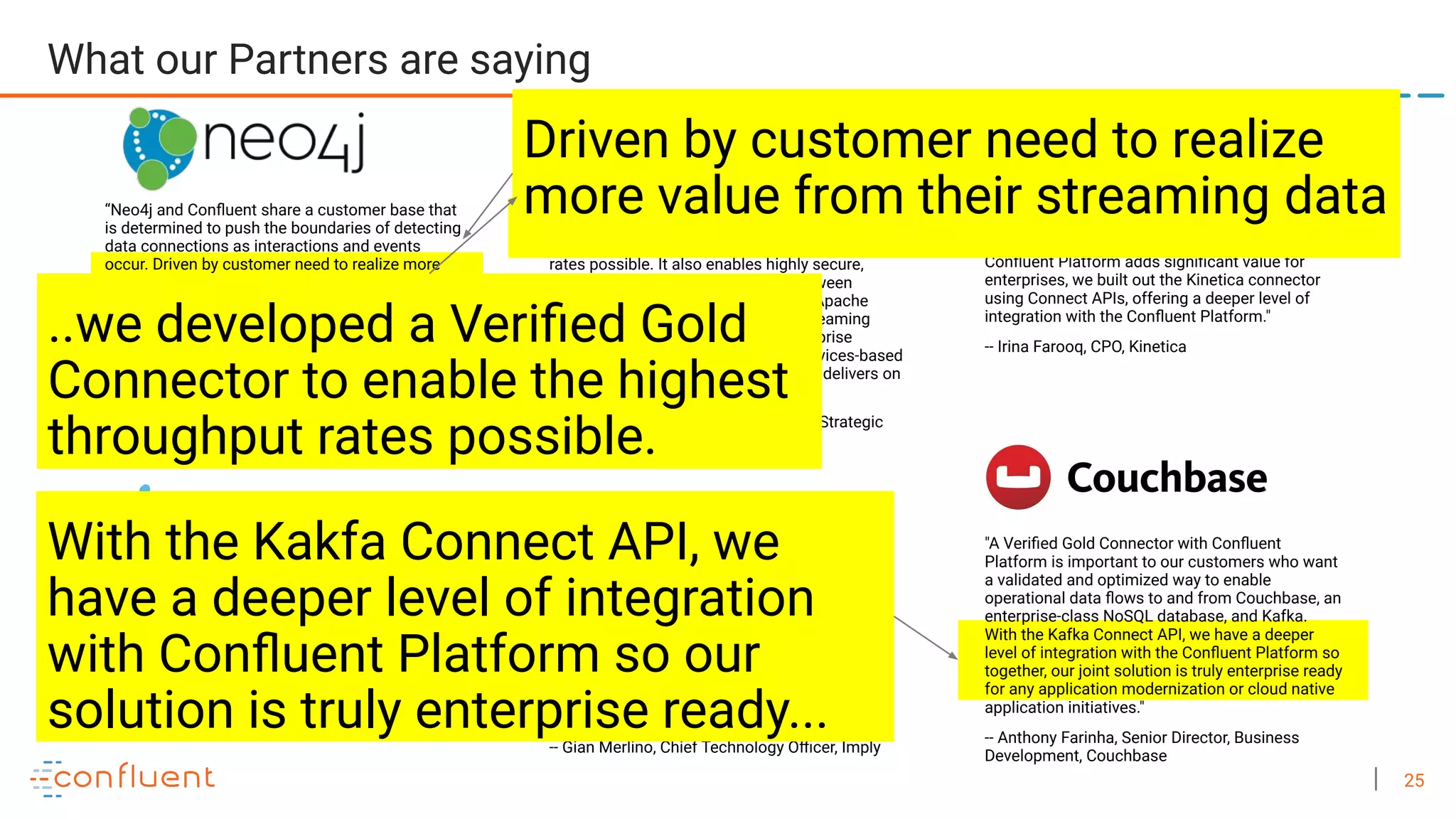

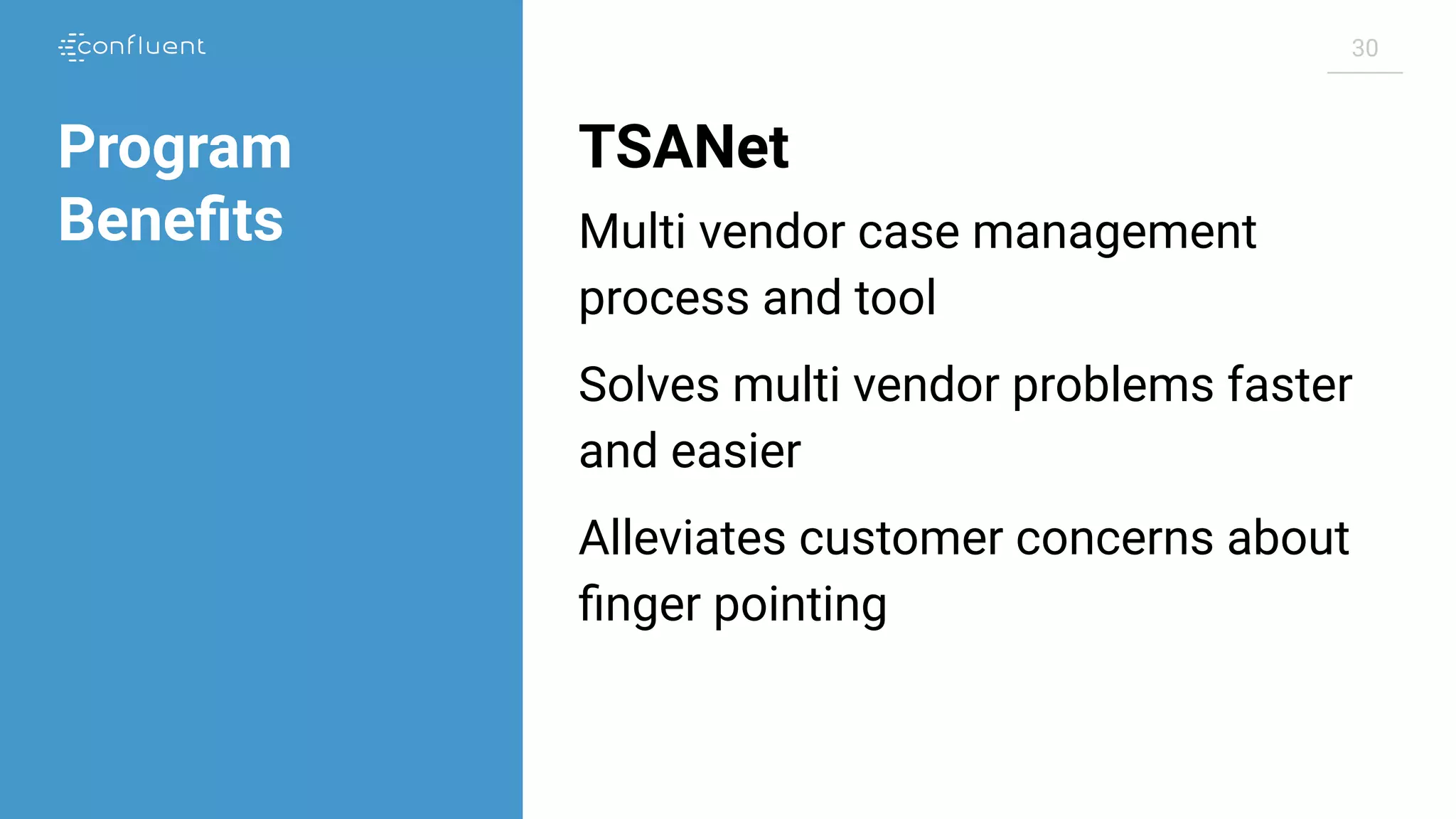

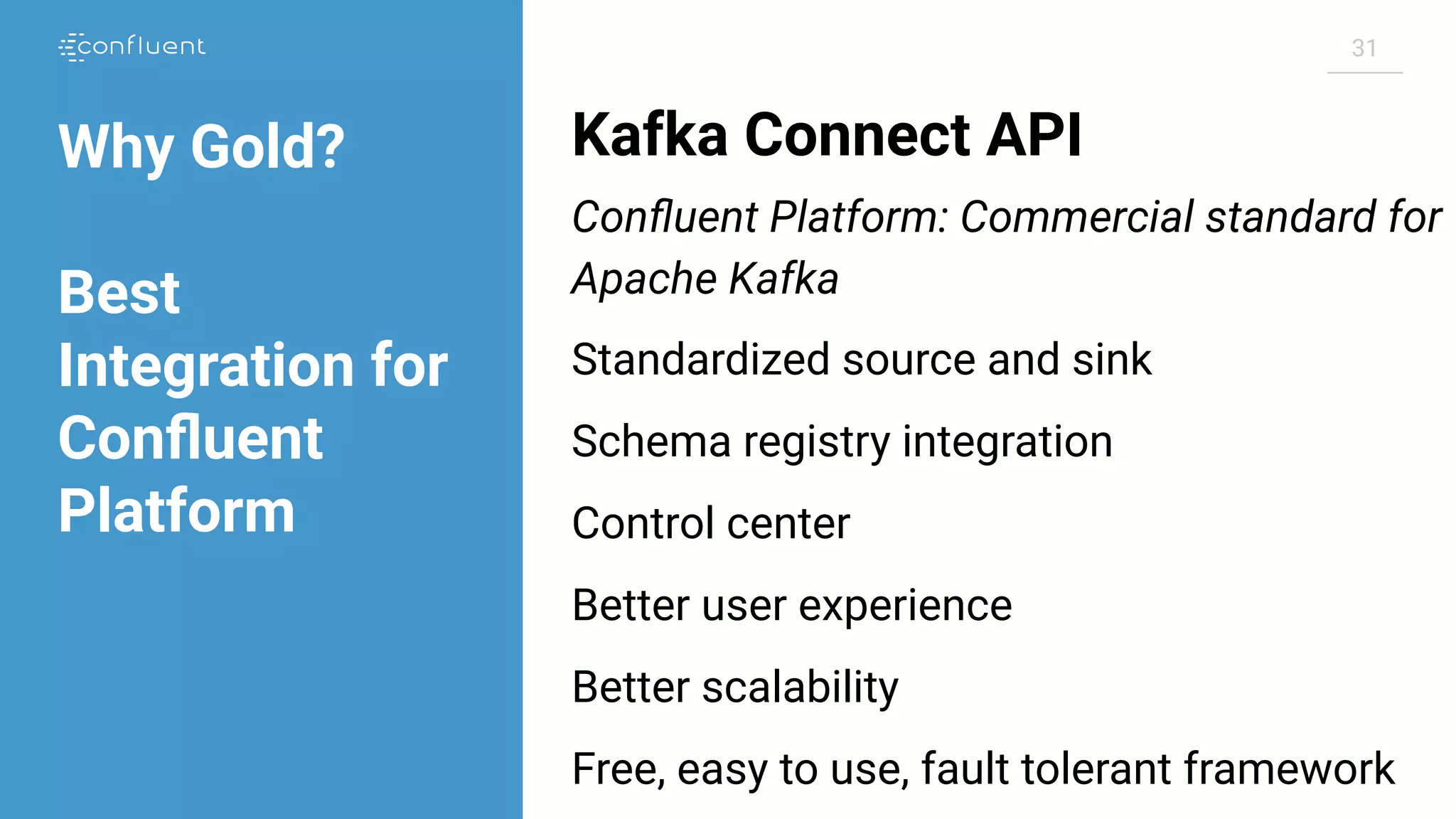

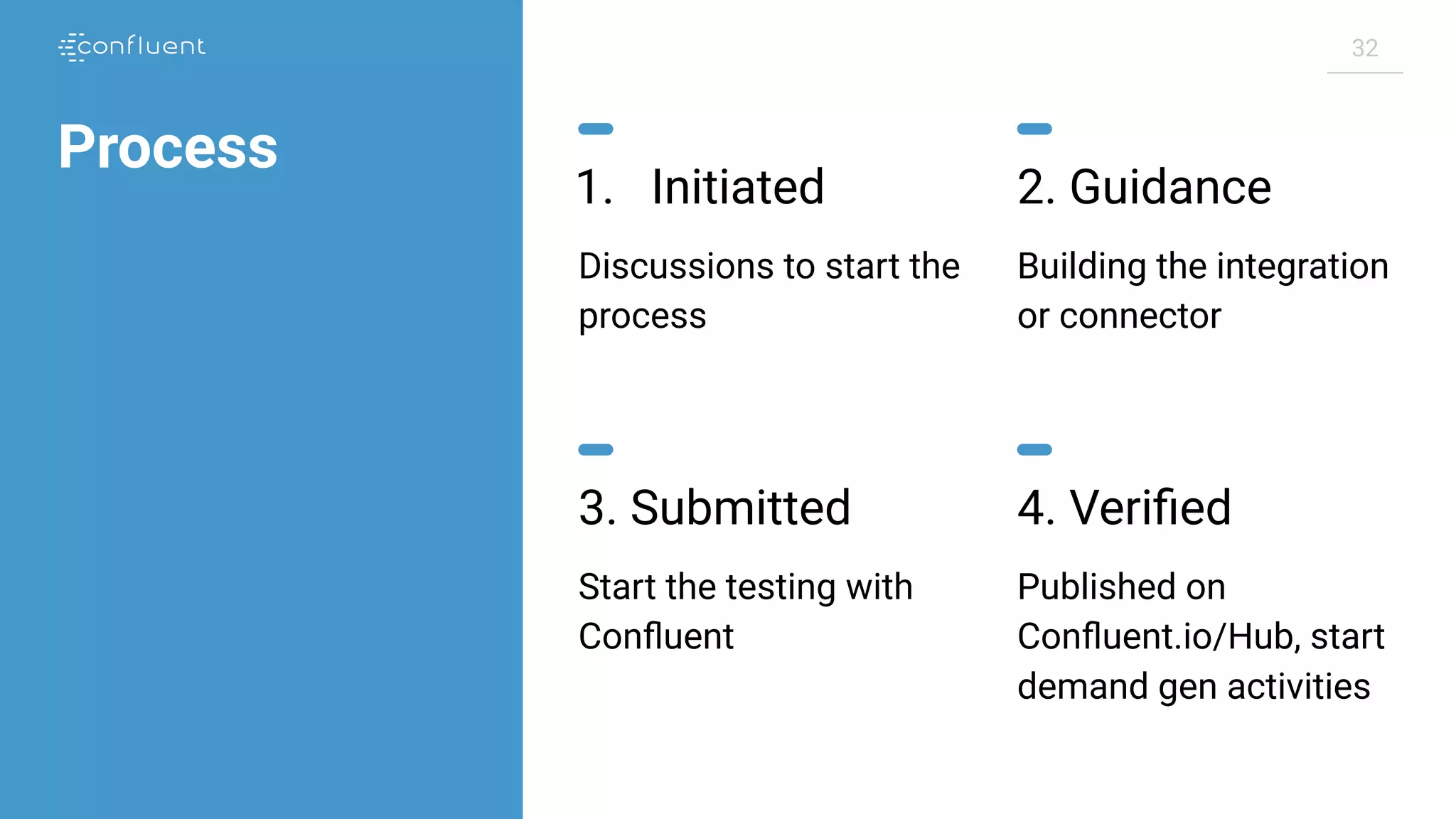

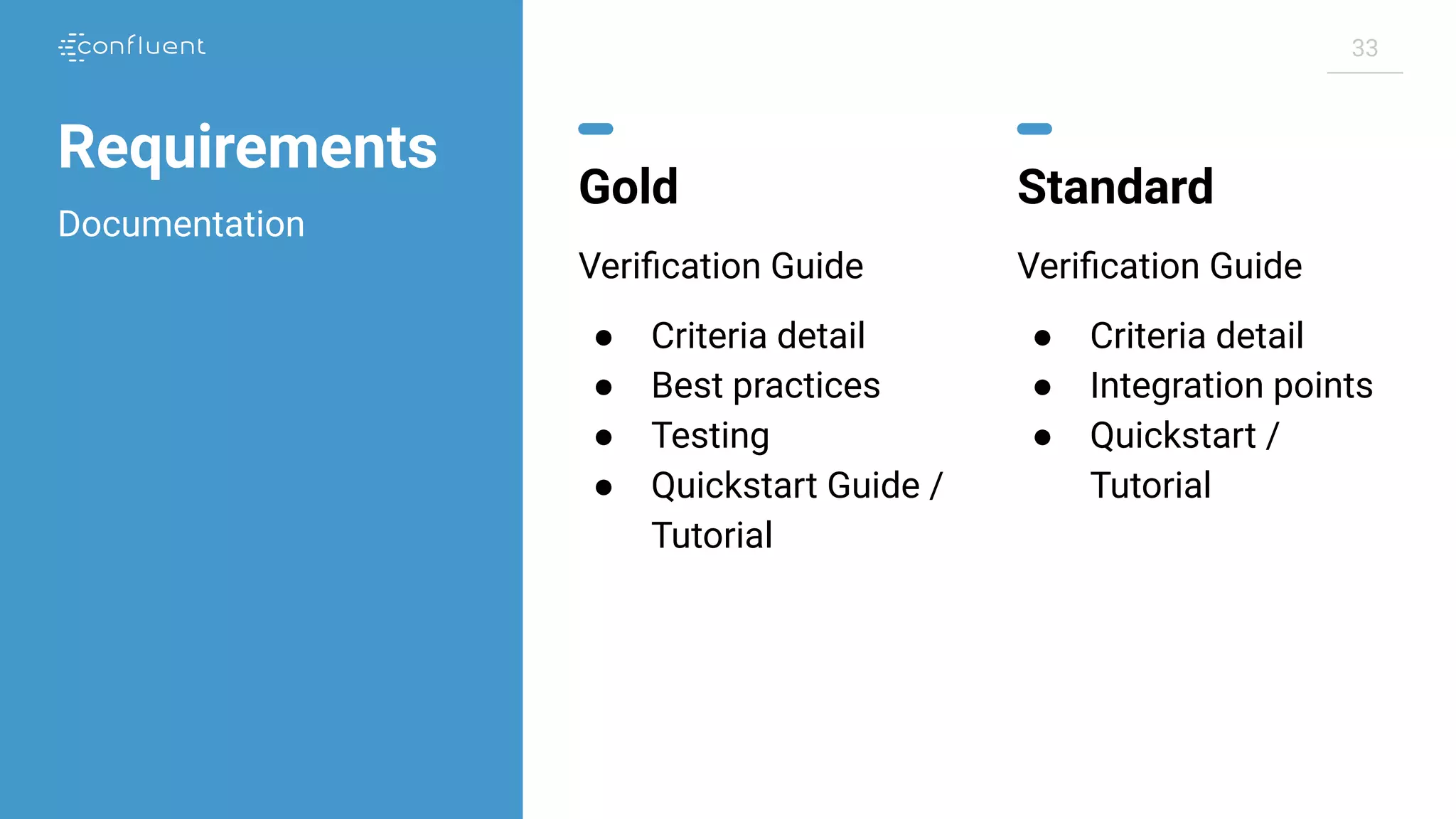

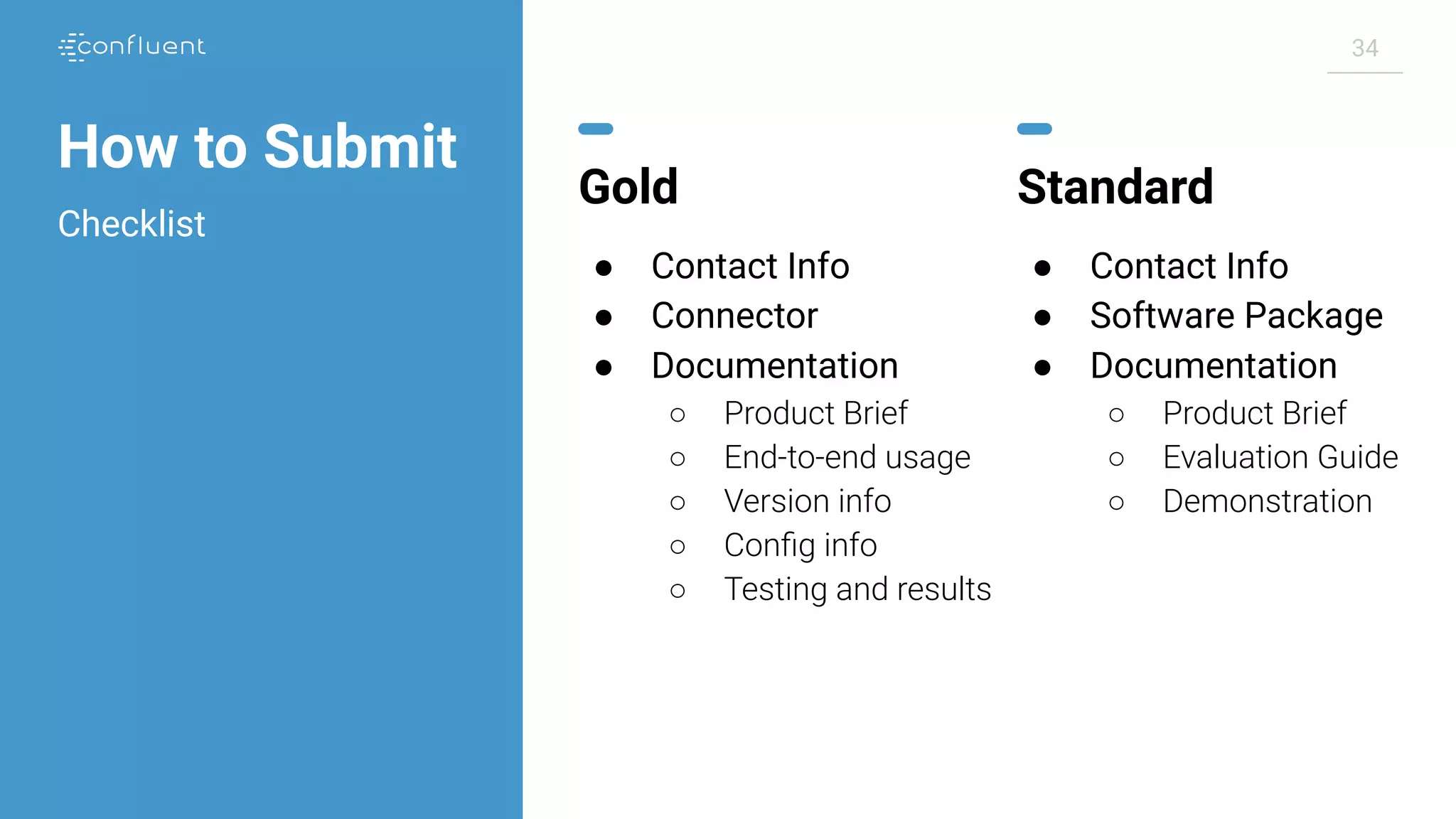

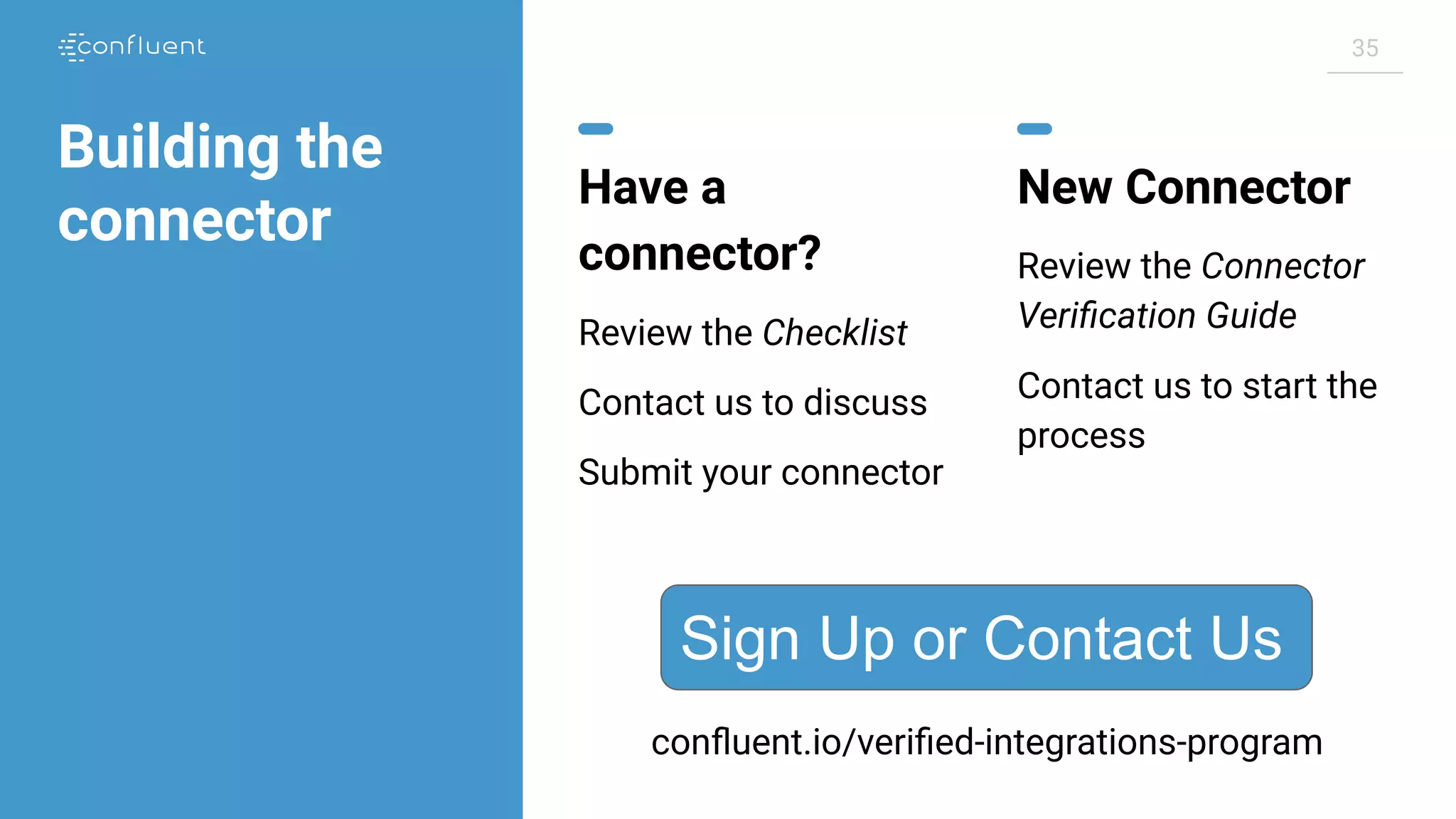

The presentation discusses why companies should build Apache Kafka connectors. It begins with an agenda covering what event streaming is, Kafka and how to connect to it, the value of building a connector, and a Q&A session. It then describes how event streaming is enabling new business models and outcomes across many industries. The Confluent Verified Integrations Program helps partners build and verify connectors, with benefits such as marketing support and a multi-vendor case management process. The presentation covers the verification levels (Gold for connectors built on the Kafka Connect API, Standard for other integrations), the requirements, the submission process, and how Confluent supports partners. It closes by encouraging attendees to sign up for the program or contact Confluent with any questions.