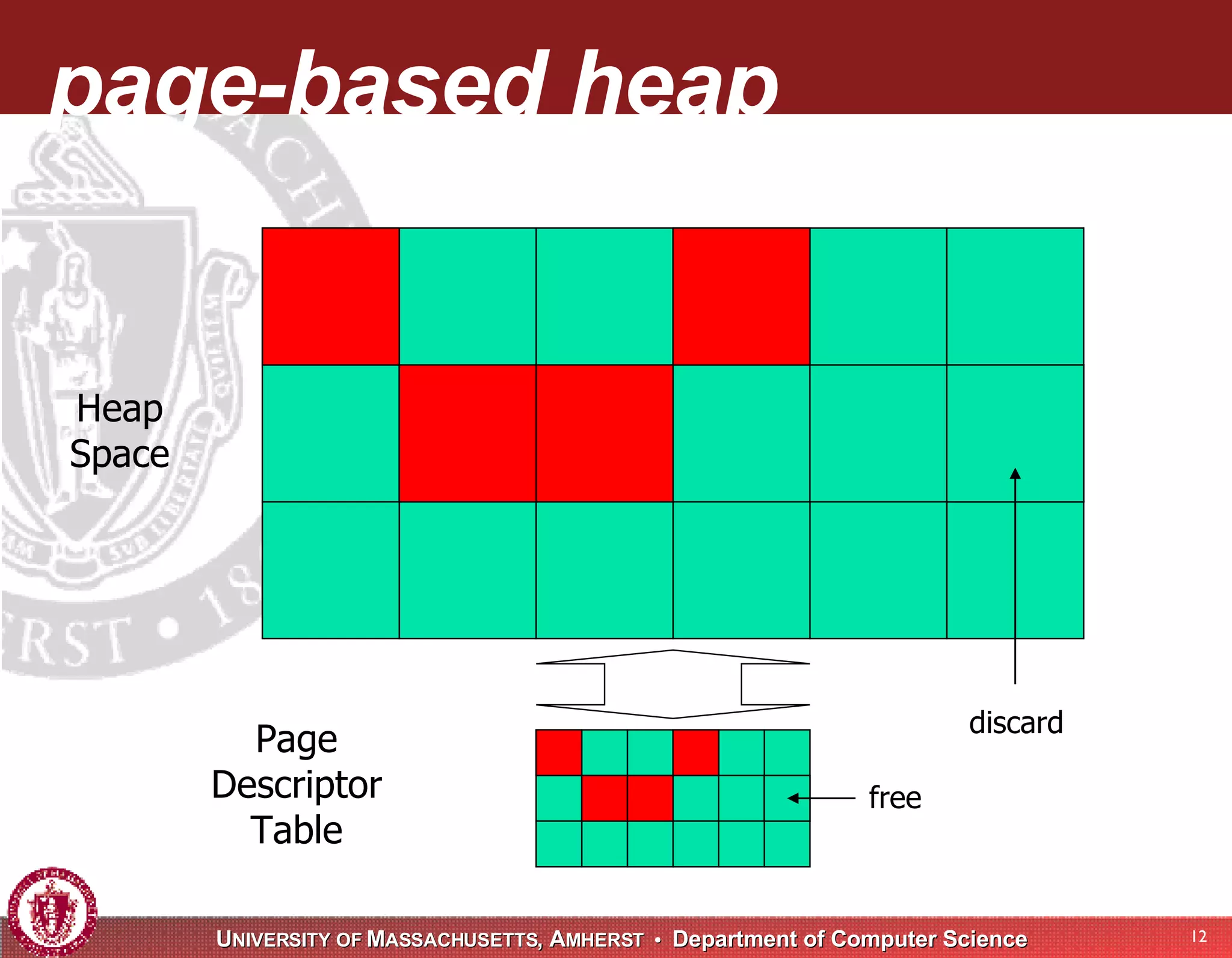

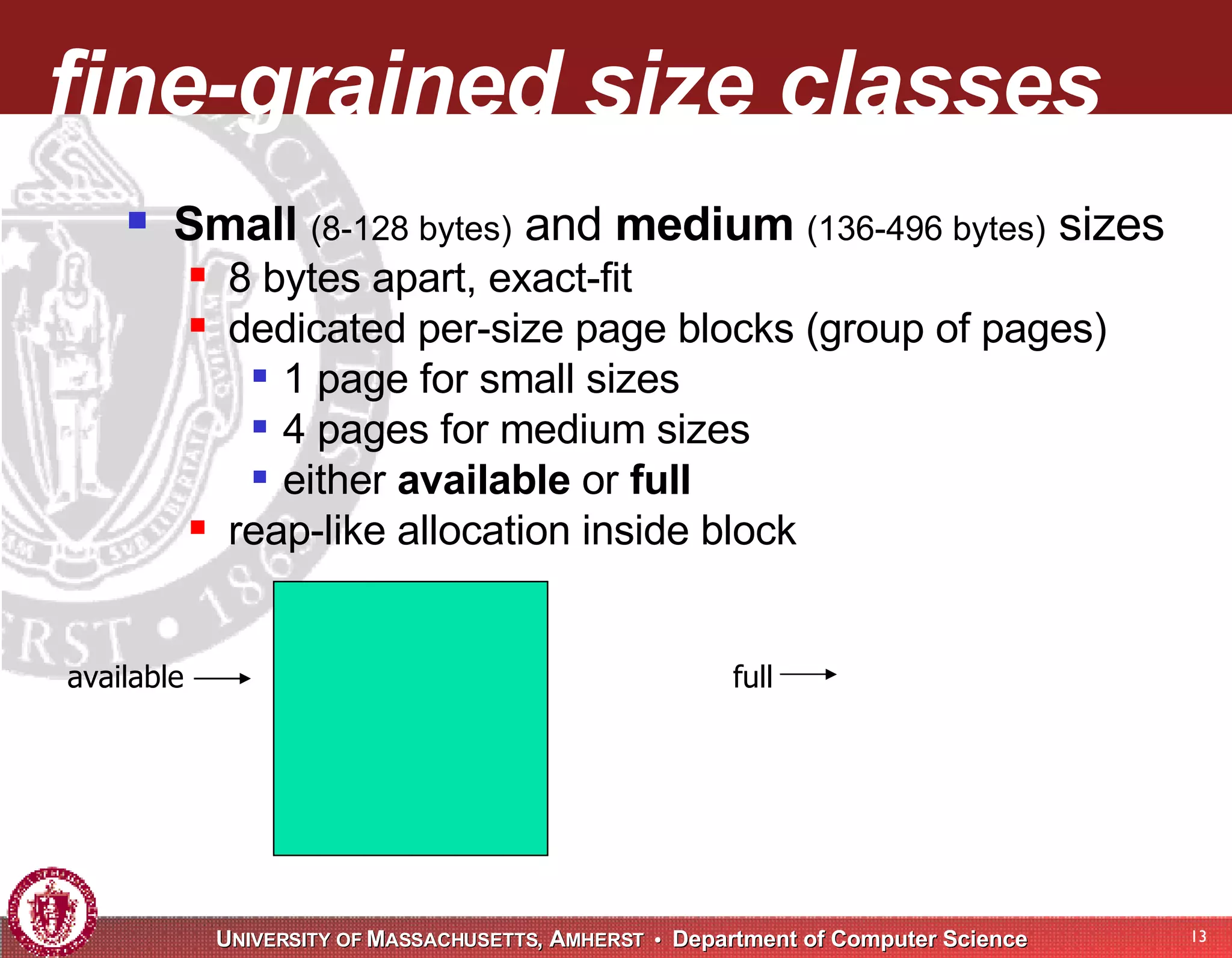

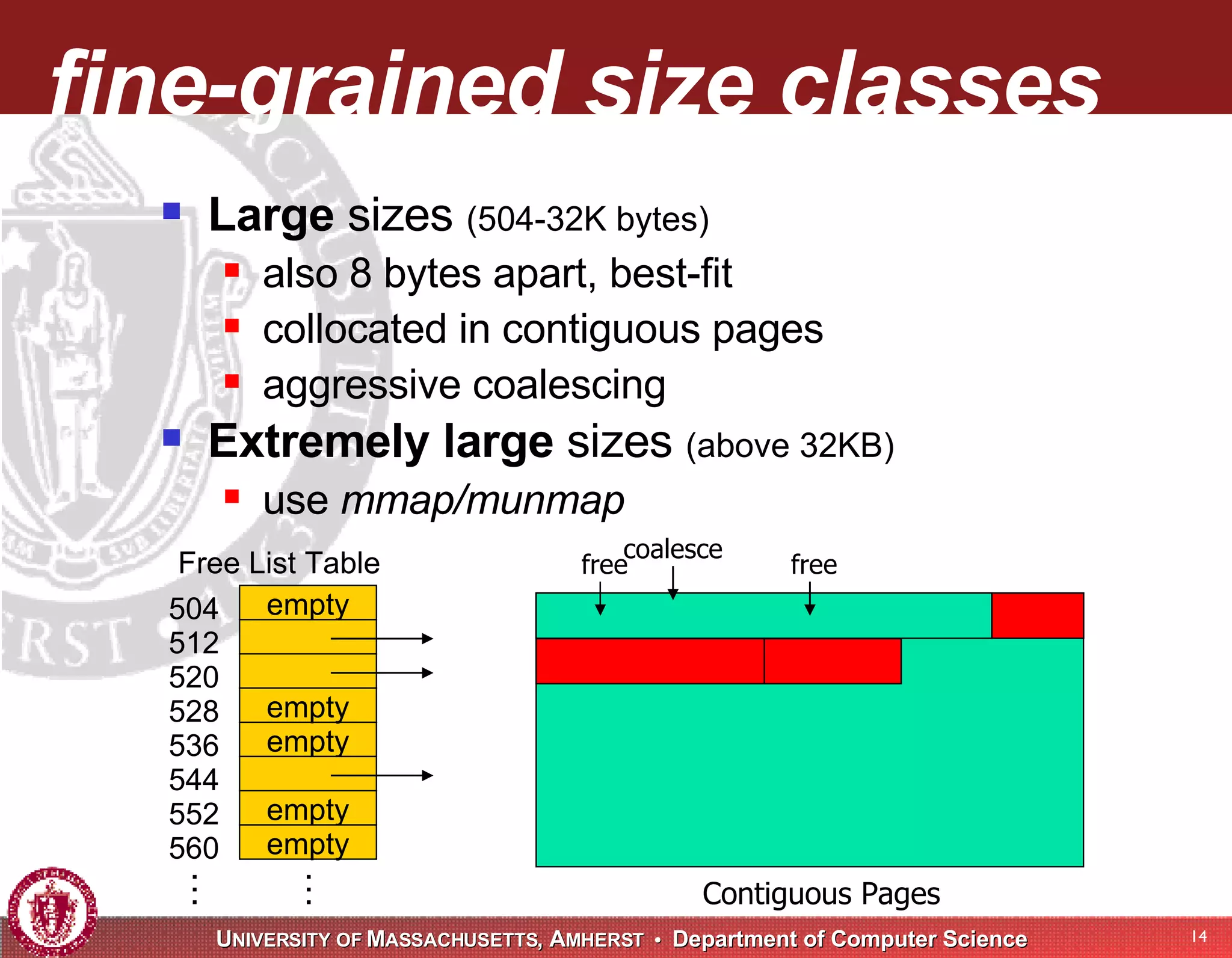

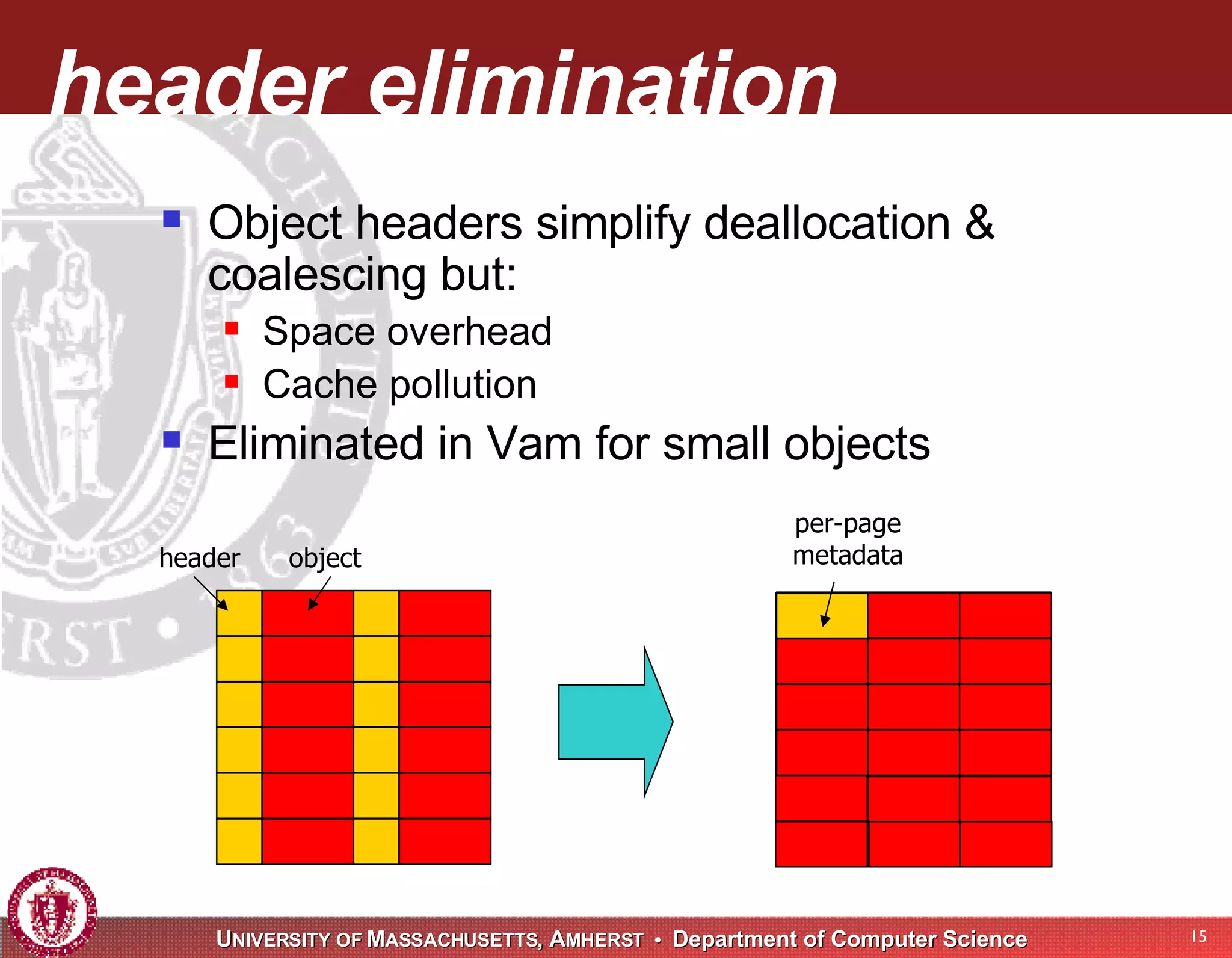

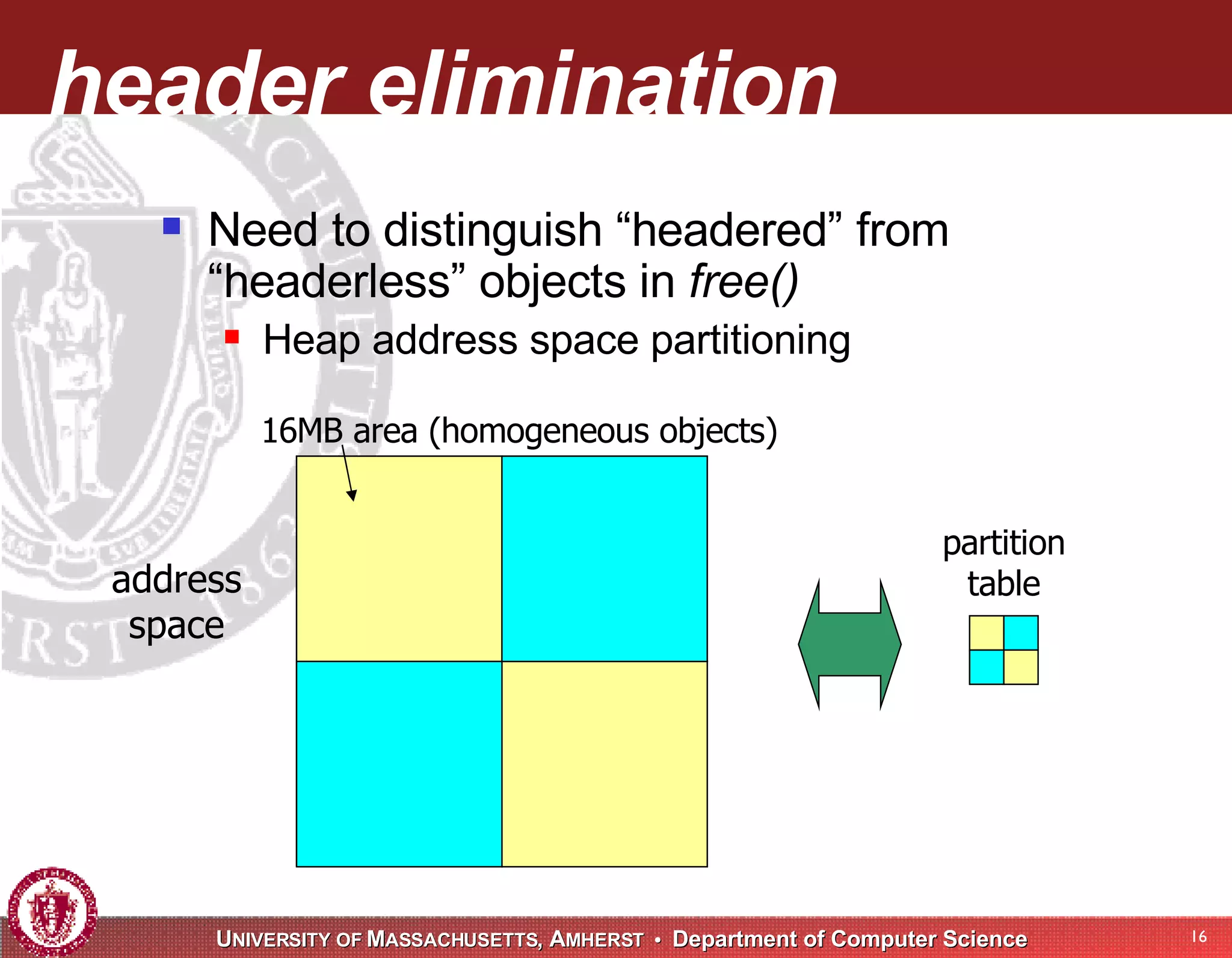

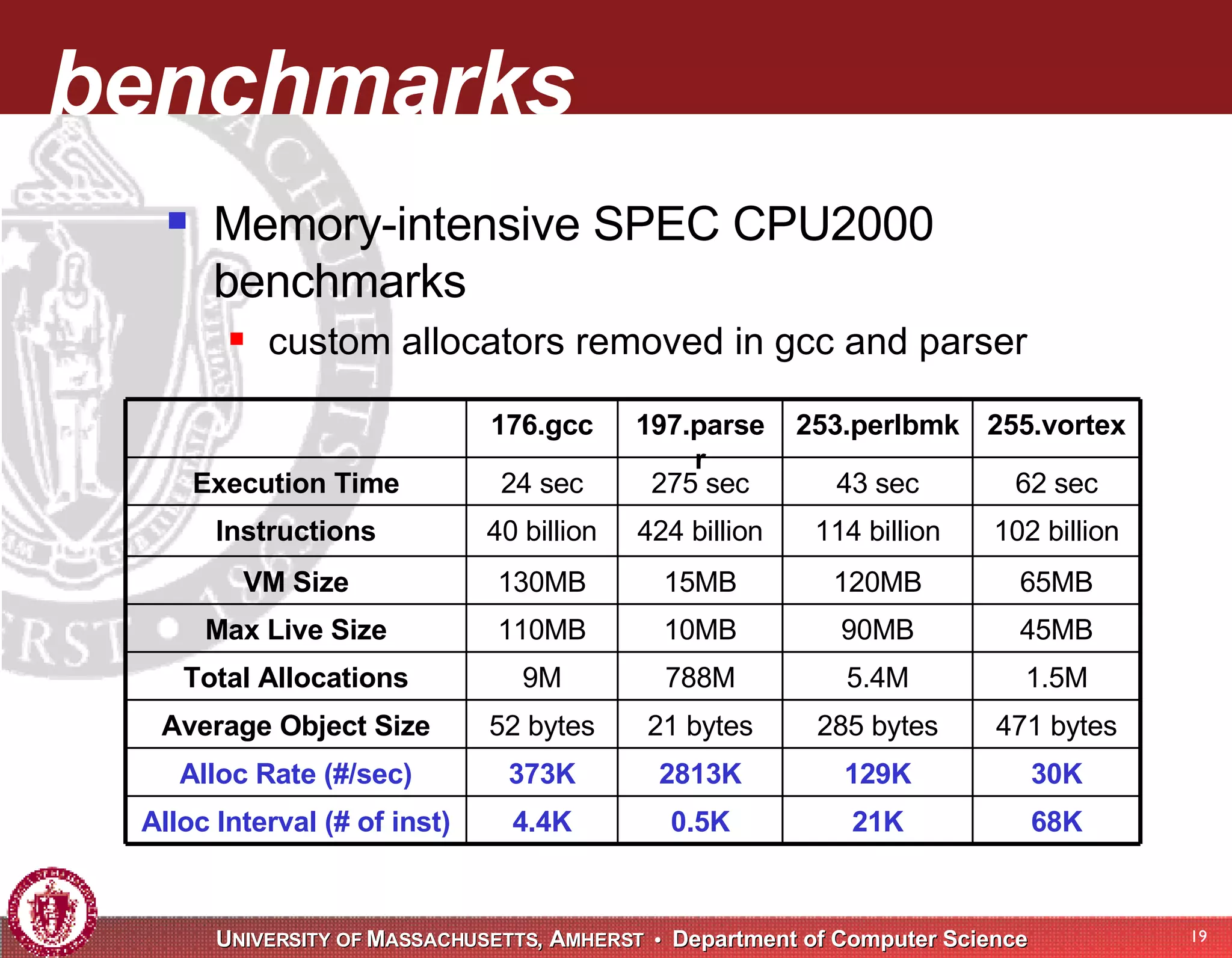

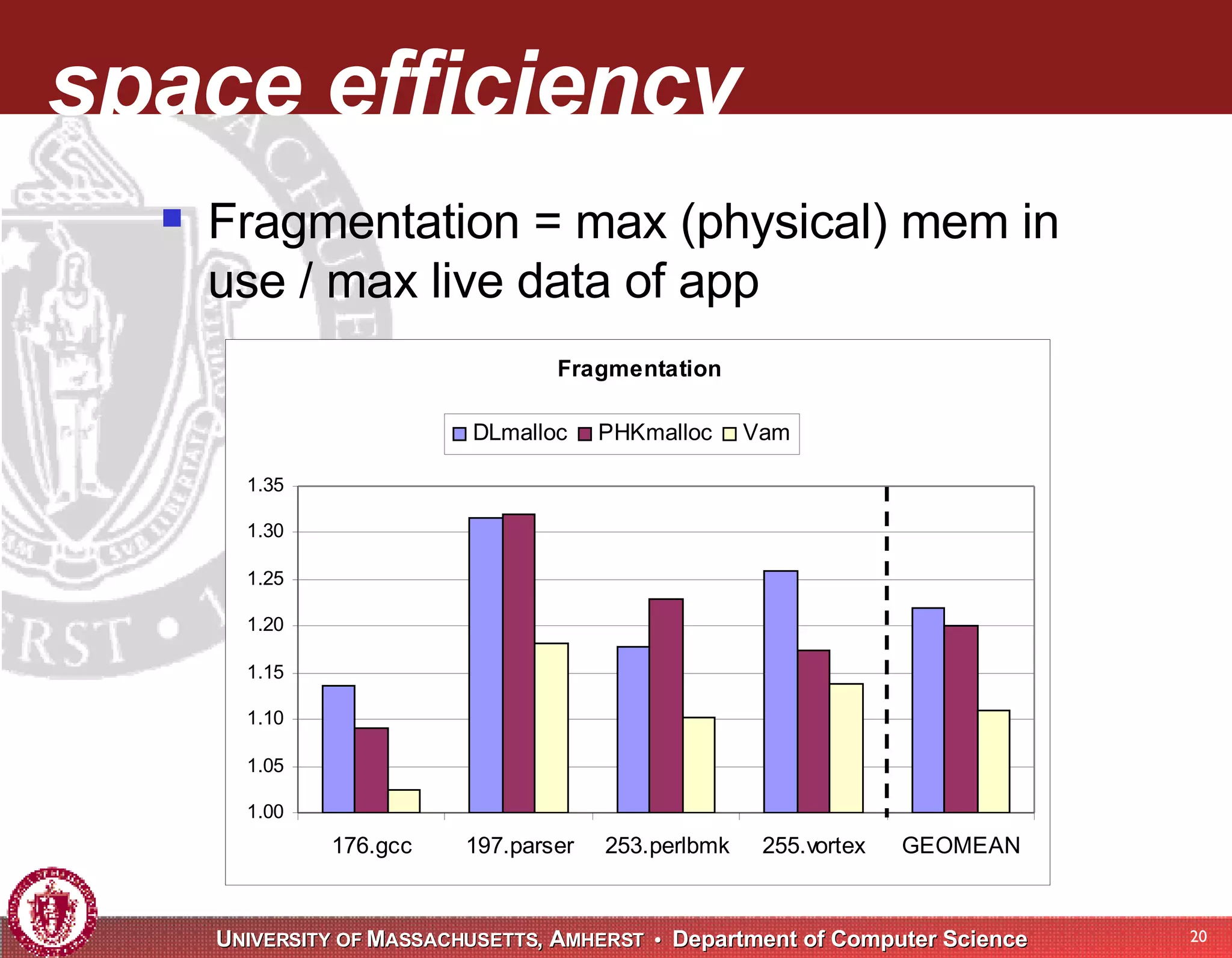

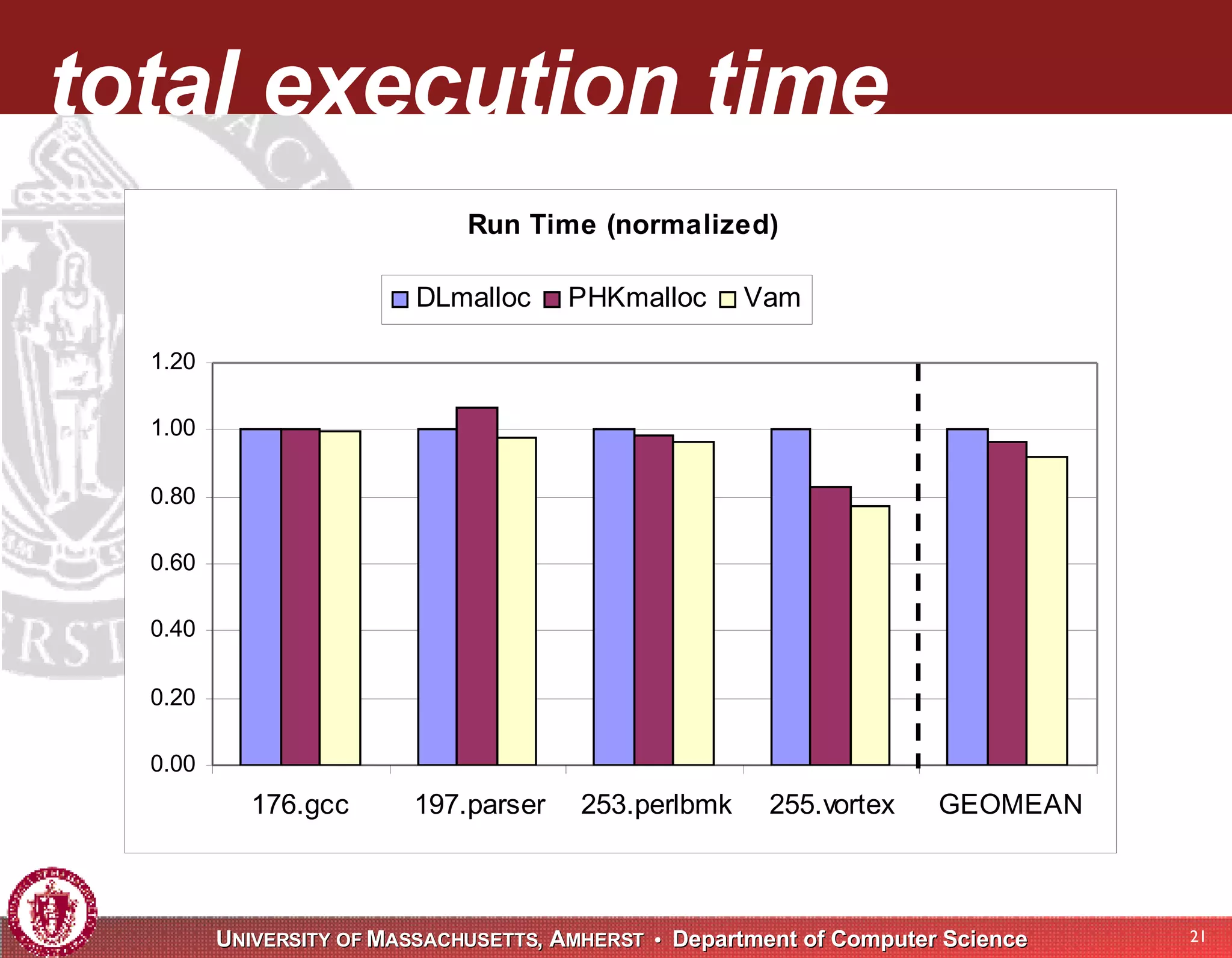

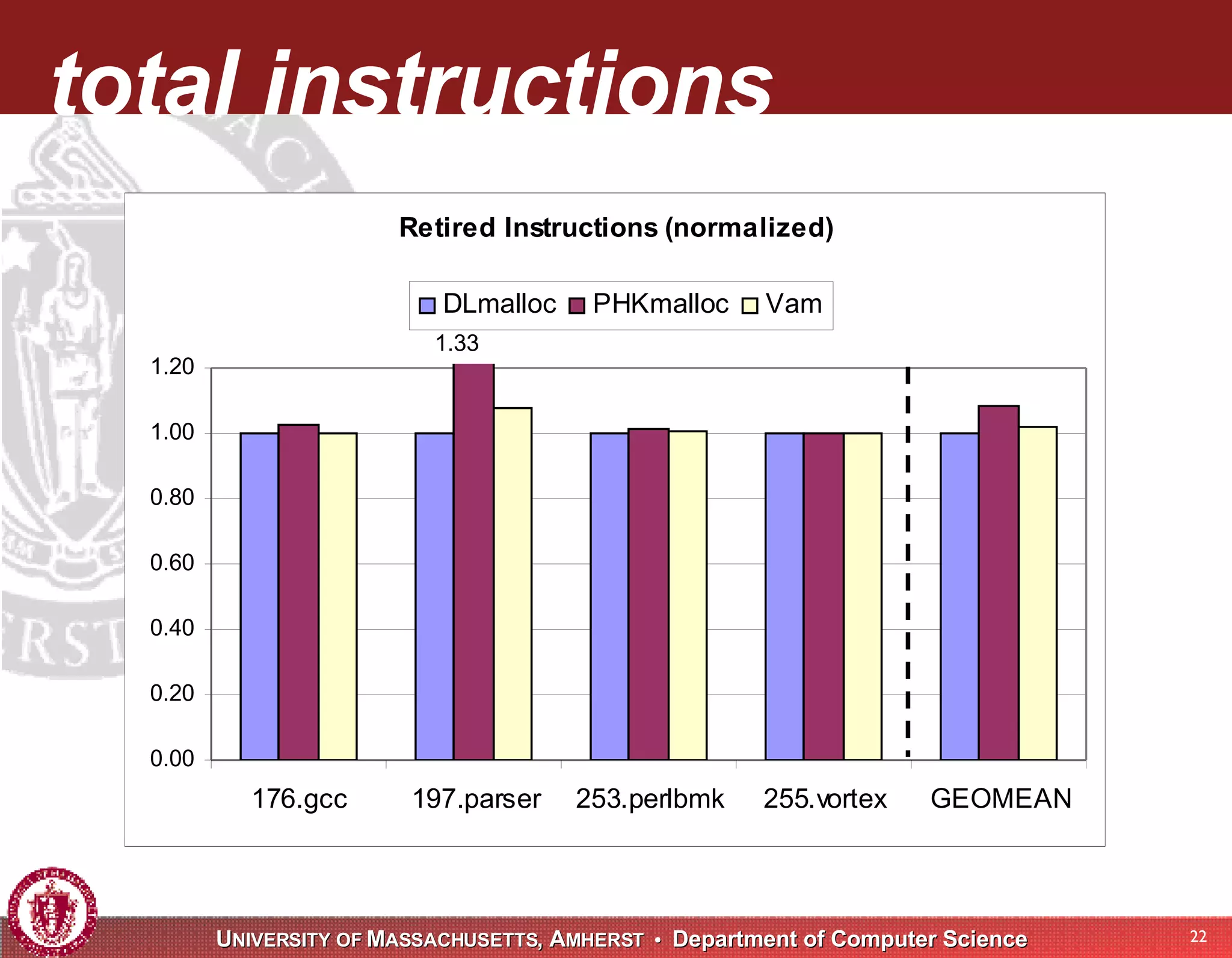

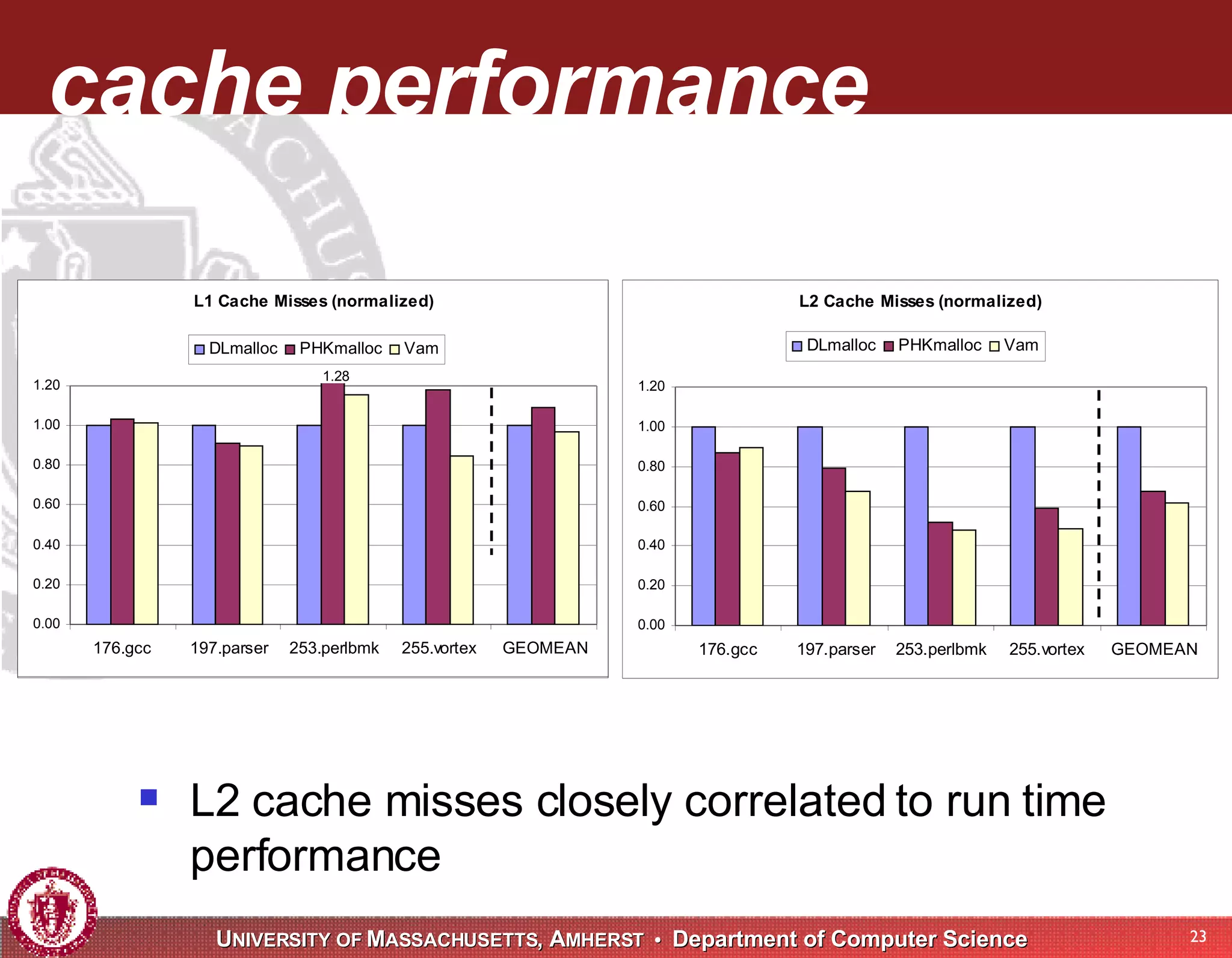

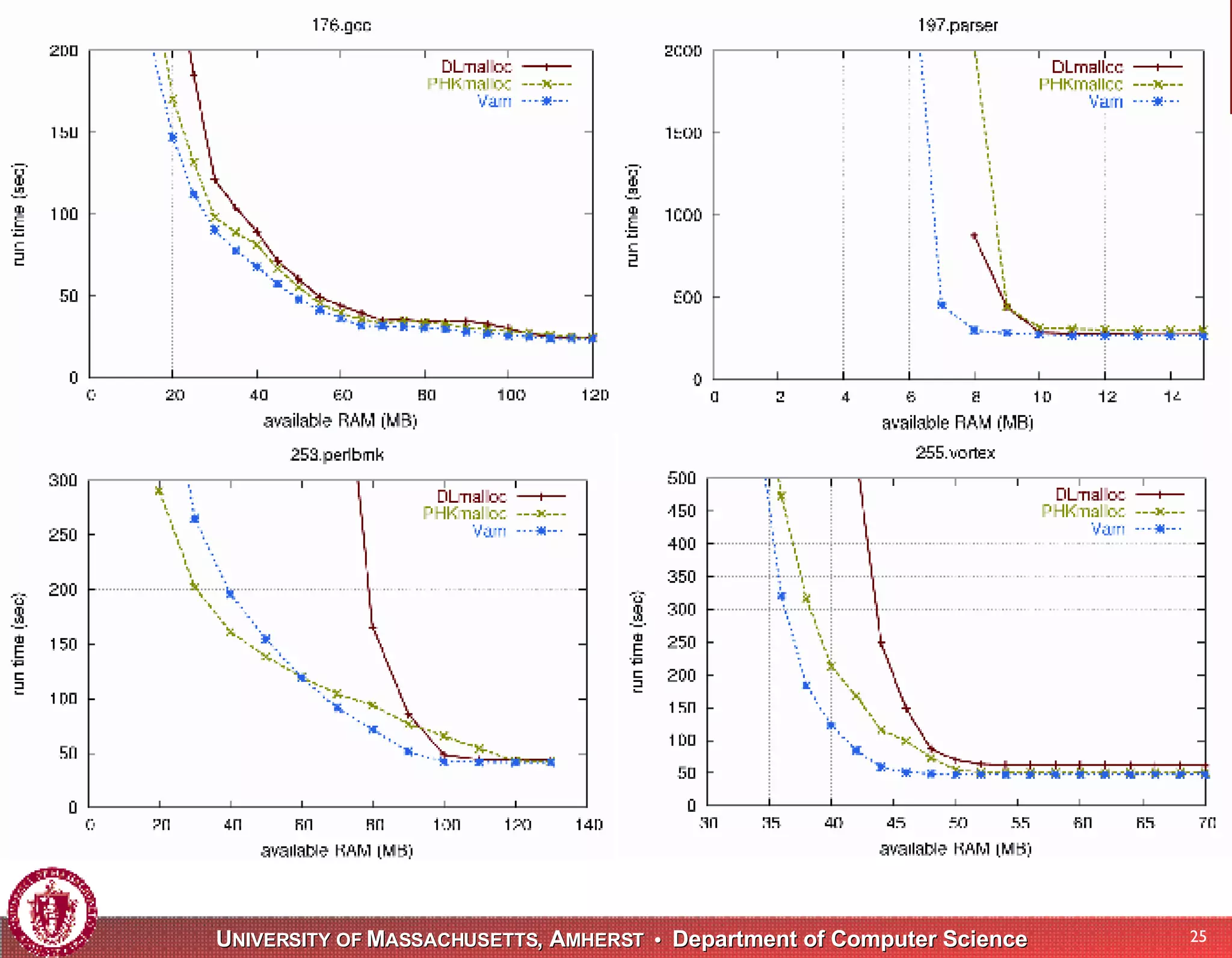

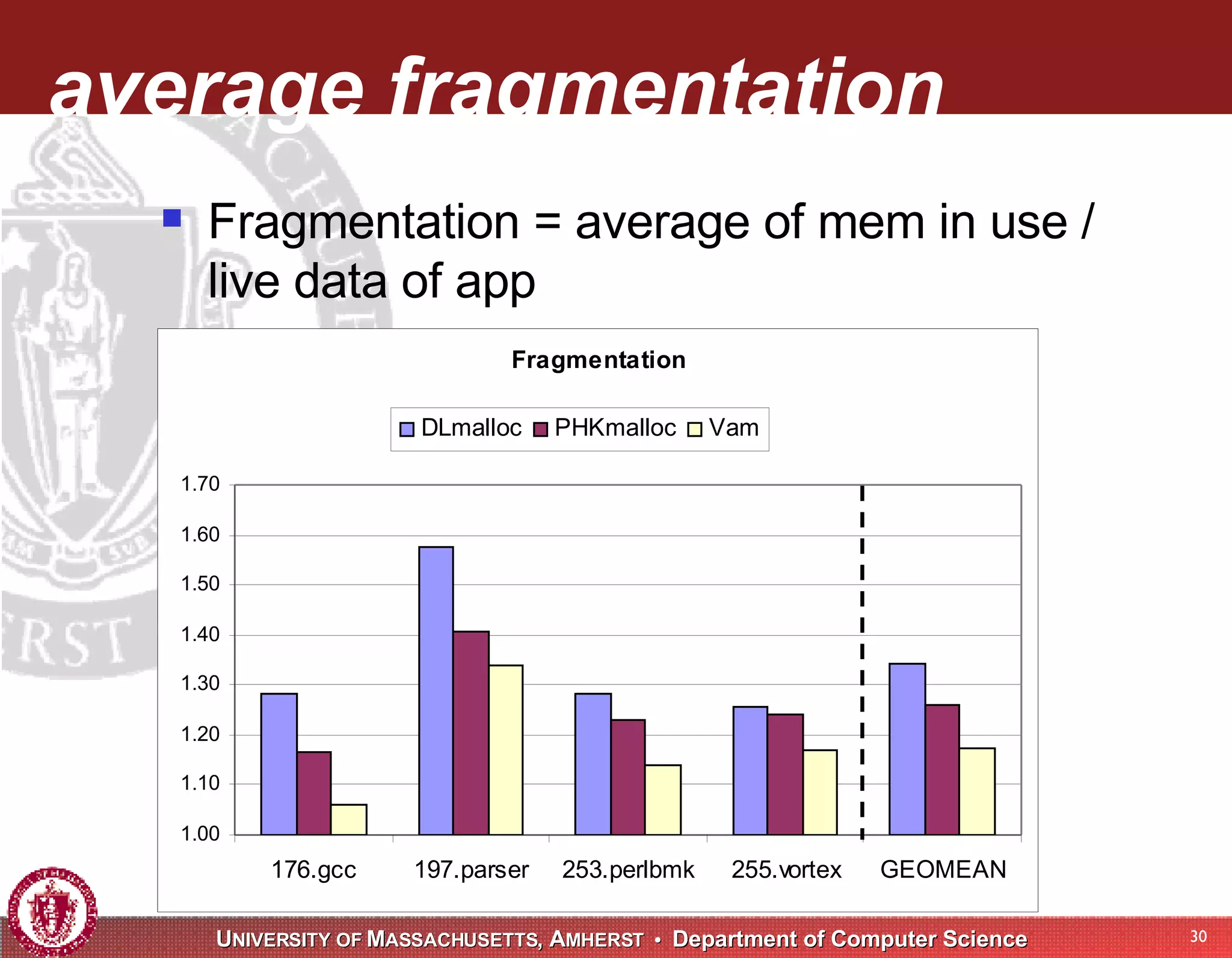

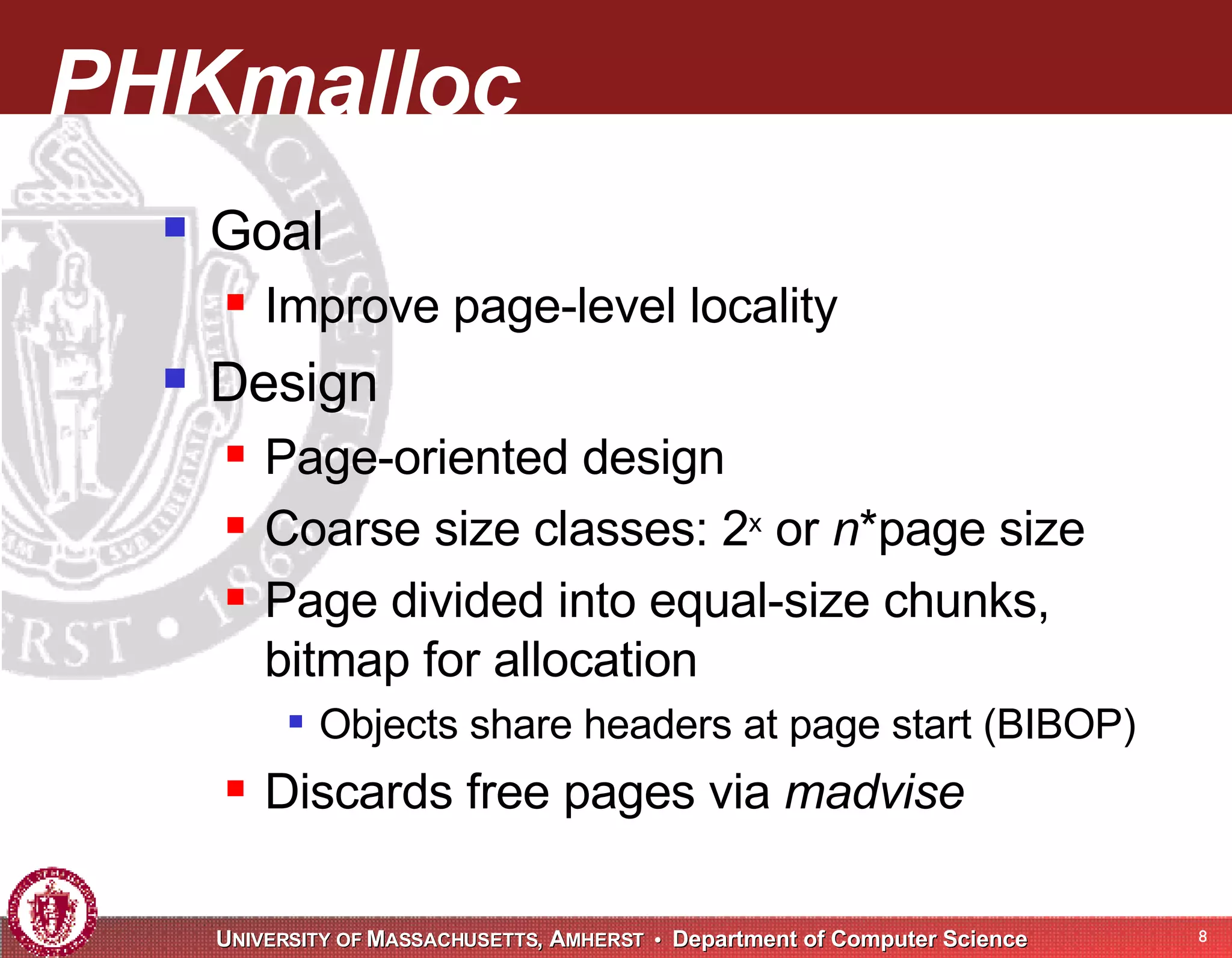

The document presents Vam, a dynamic memory allocator designed to improve application performance by reducing heap fragmentation and improving the spatial locality of heap objects. Vam uses a page-based design with fine-grained size classes and omits per-object headers for small objects, which improves performance at both the cache level and the page (virtual memory) level. Experimental evaluations show that Vam outperforms existing allocators both when physical memory is ample and when it is constrained, with the evaluation focused on real-world applications.

![Related work](https://image.slidesharecdn.com/vam-a-localityimproving-dynamic-memory-allocator1687/75/Vam-A-Locality-Improving-Dynamic-Memory-Allocator-3-2048.jpg)

Related work: previous work on dynamic allocation.

- Reducing fragmentation [survey: Wilson et al., Wilson & Johnstone]
- Improving locality
  - Search inside the allocator [Grunwald et al.]
  - Programmer-assisted [Chilimbi et al., Truong et al.]
  - Profile-based [Barrett & Zorn, Seidl & Zorn]

![Vam design](https://image.slidesharecdn.com/vam-a-localityimproving-dynamic-memory-allocator1687/75/Vam-A-Locality-Improving-Dynamic-Memory-Allocator-6-2048.jpg)

Vam design: builds on previous allocator designs and combines their best features.

- DLmalloc (Doug Lea), the default allocator in Linux/GNU libc
- PHKmalloc (Poul-Henning Kamp), the default allocator in FreeBSD
- Reap [Berger et al. 2002]

![Vam overview](https://image.slidesharecdn.com/vam-a-localityimproving-dynamic-memory-allocator1687/75/Vam-A-Locality-Improving-Dynamic-Memory-Allocator-10-2048.jpg)

Vam overview:

- Goal: improve application performance across a wide range of available RAM
- Highlights:
  - Page-based design
  - Fine-grained size classes
  - No headers for small objects
- Implemented in Heap Layers using C++ templates [Berger et al. 2001]