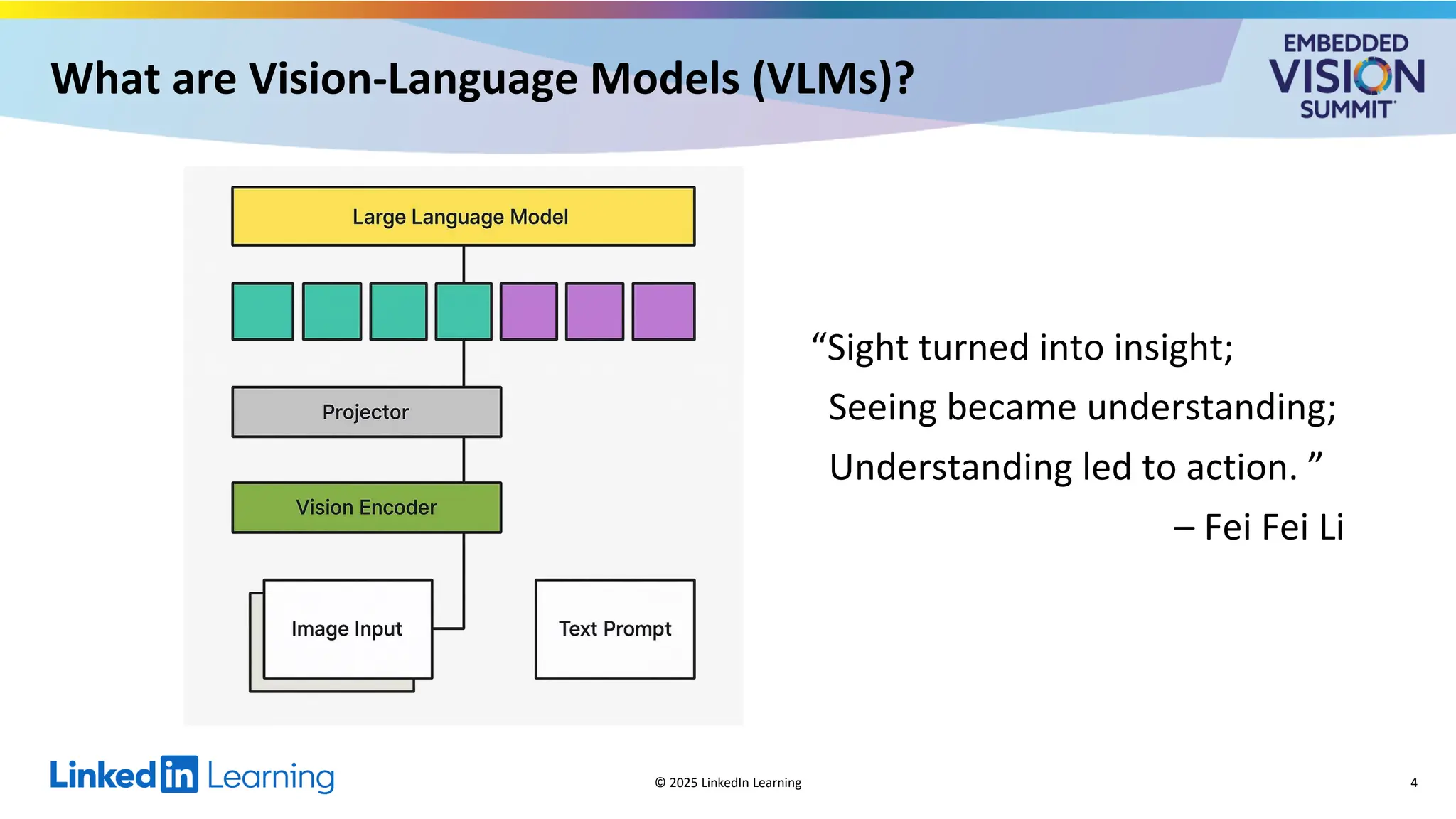

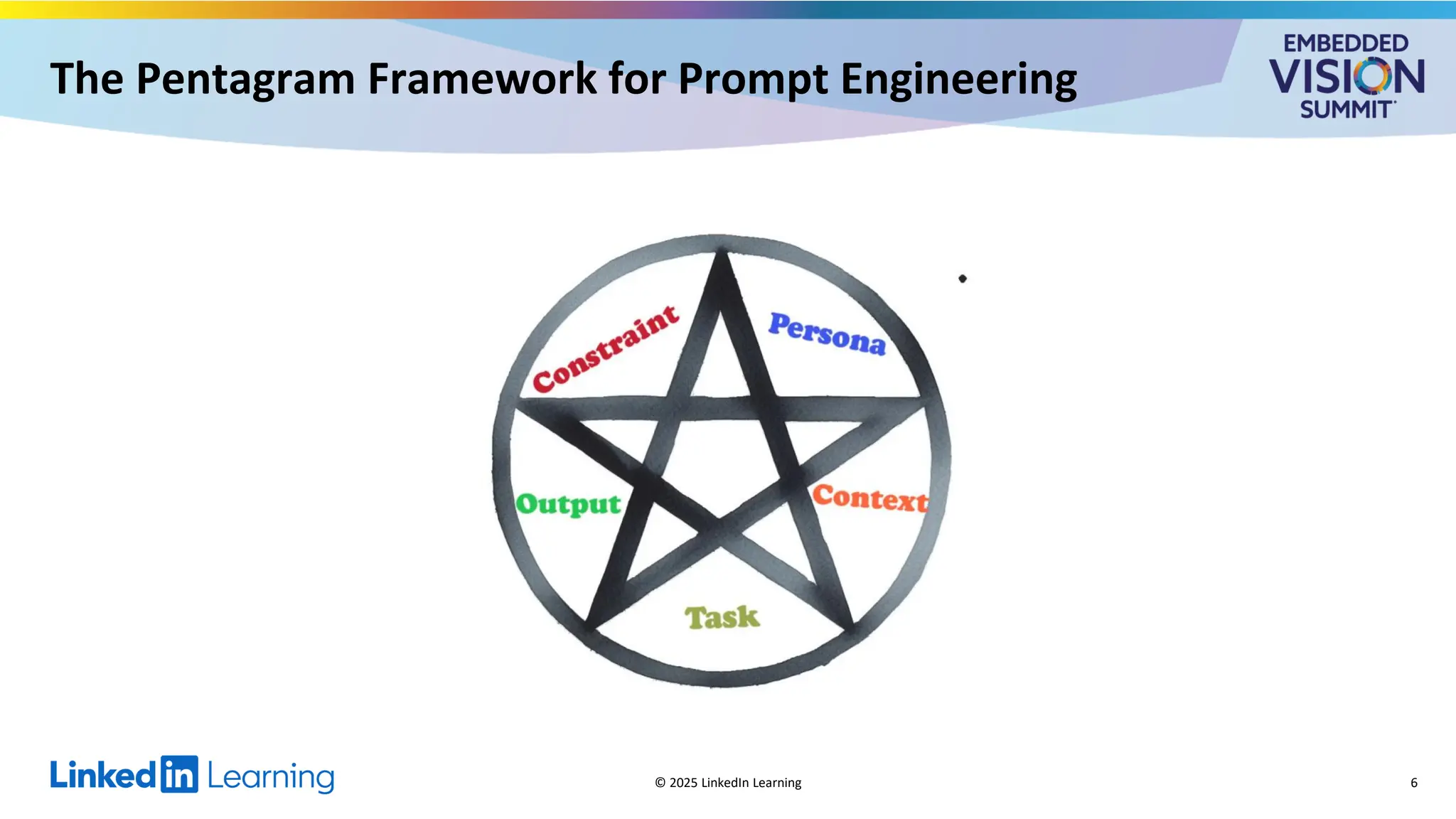

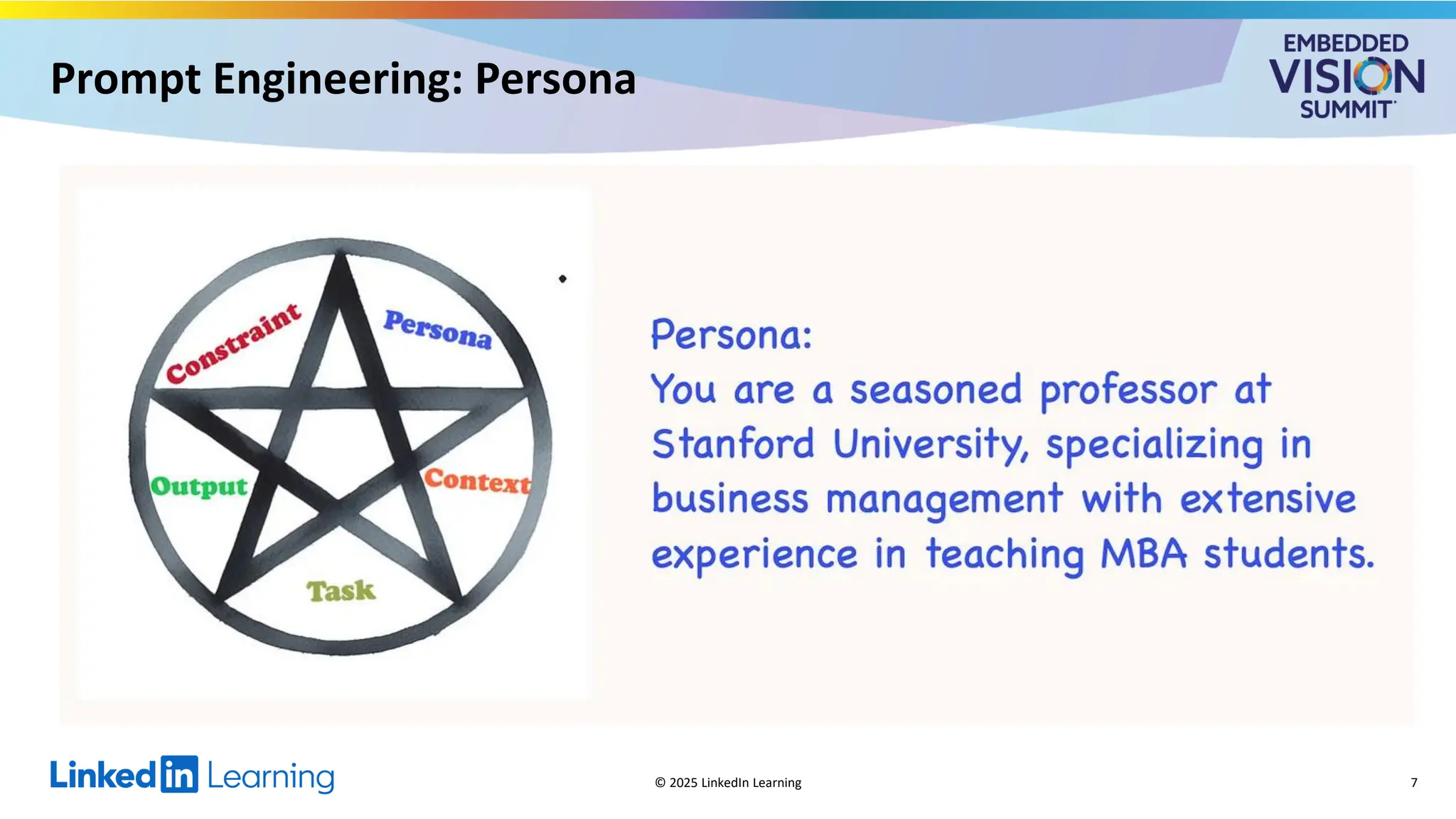

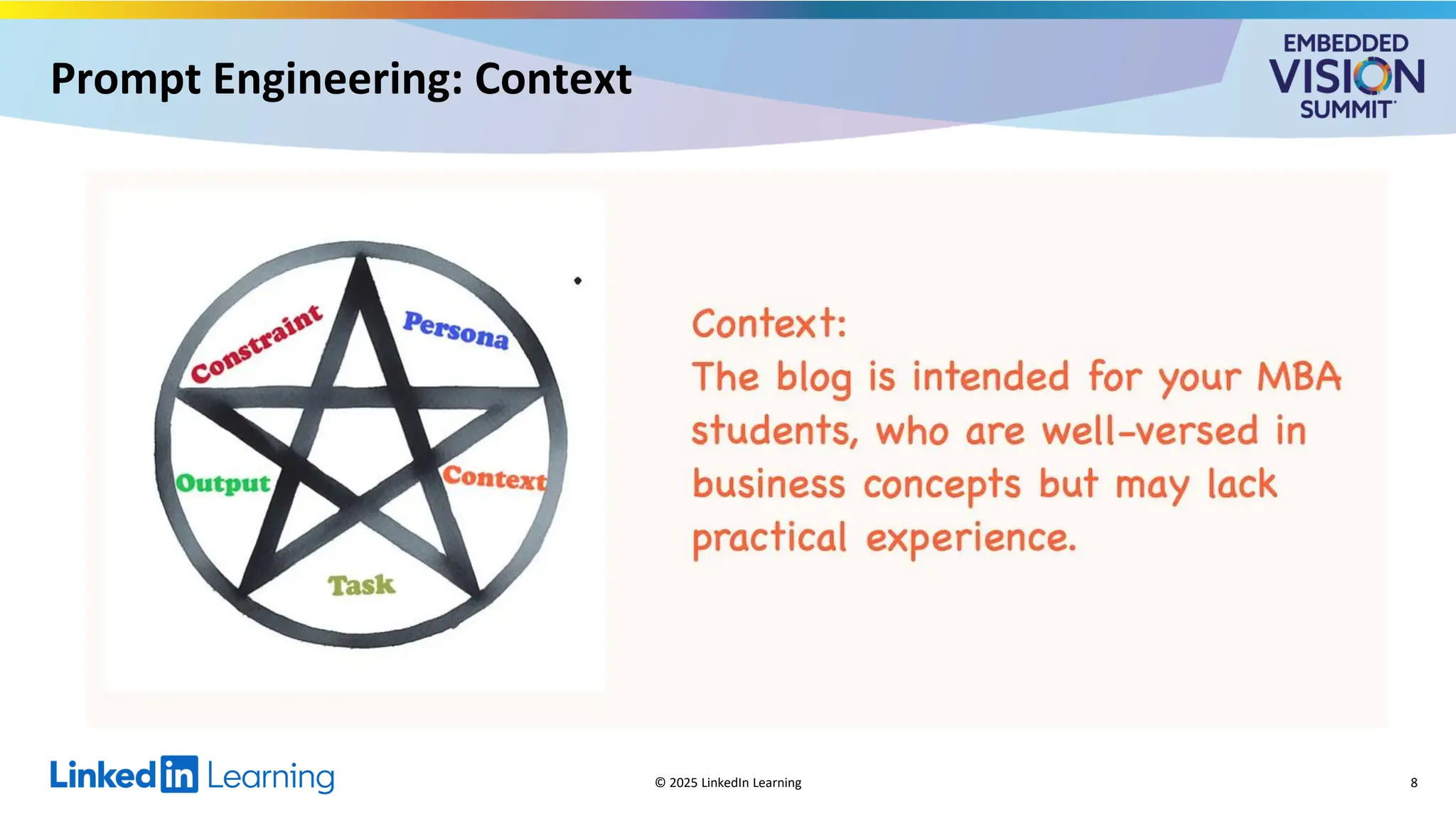

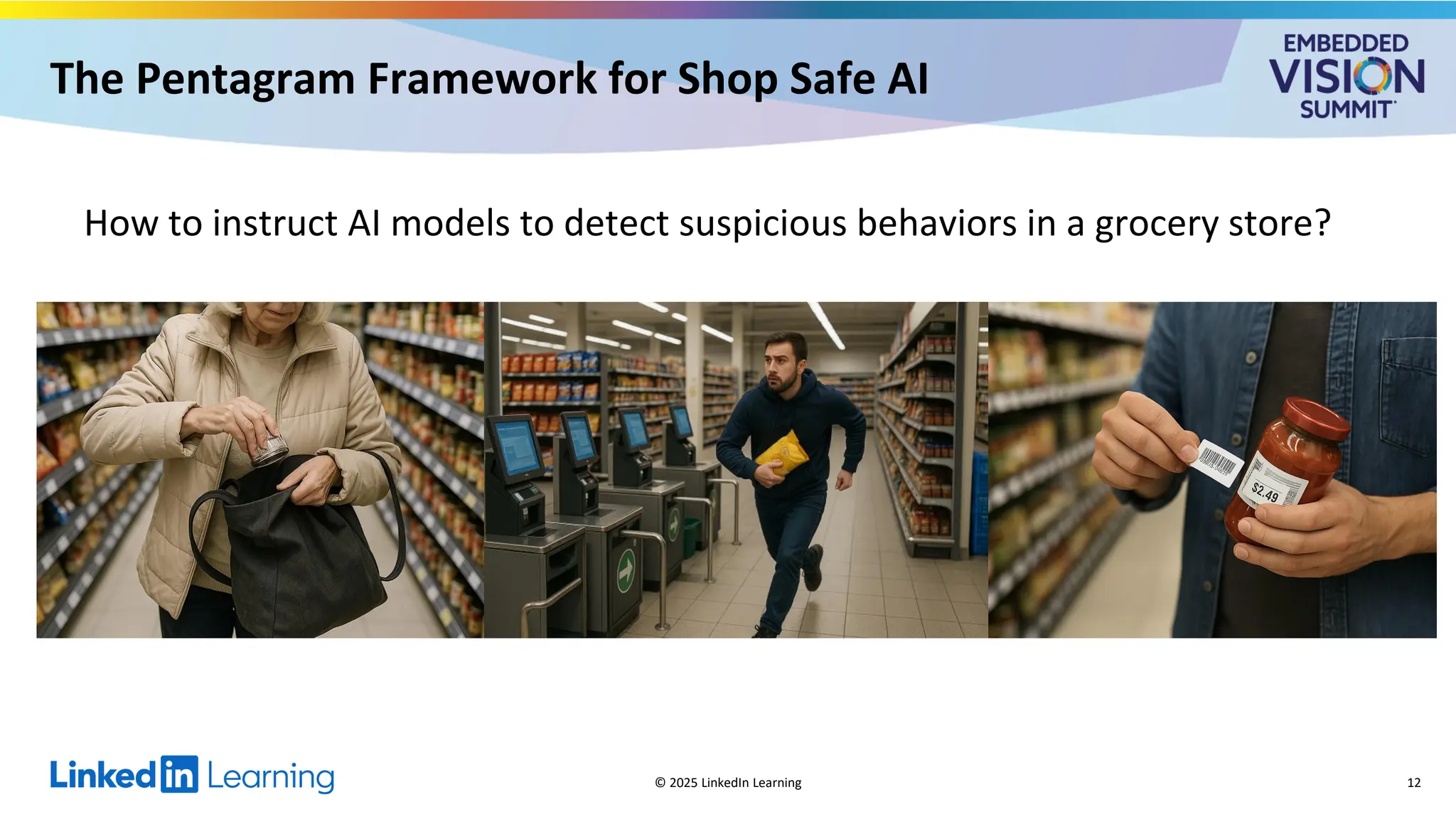

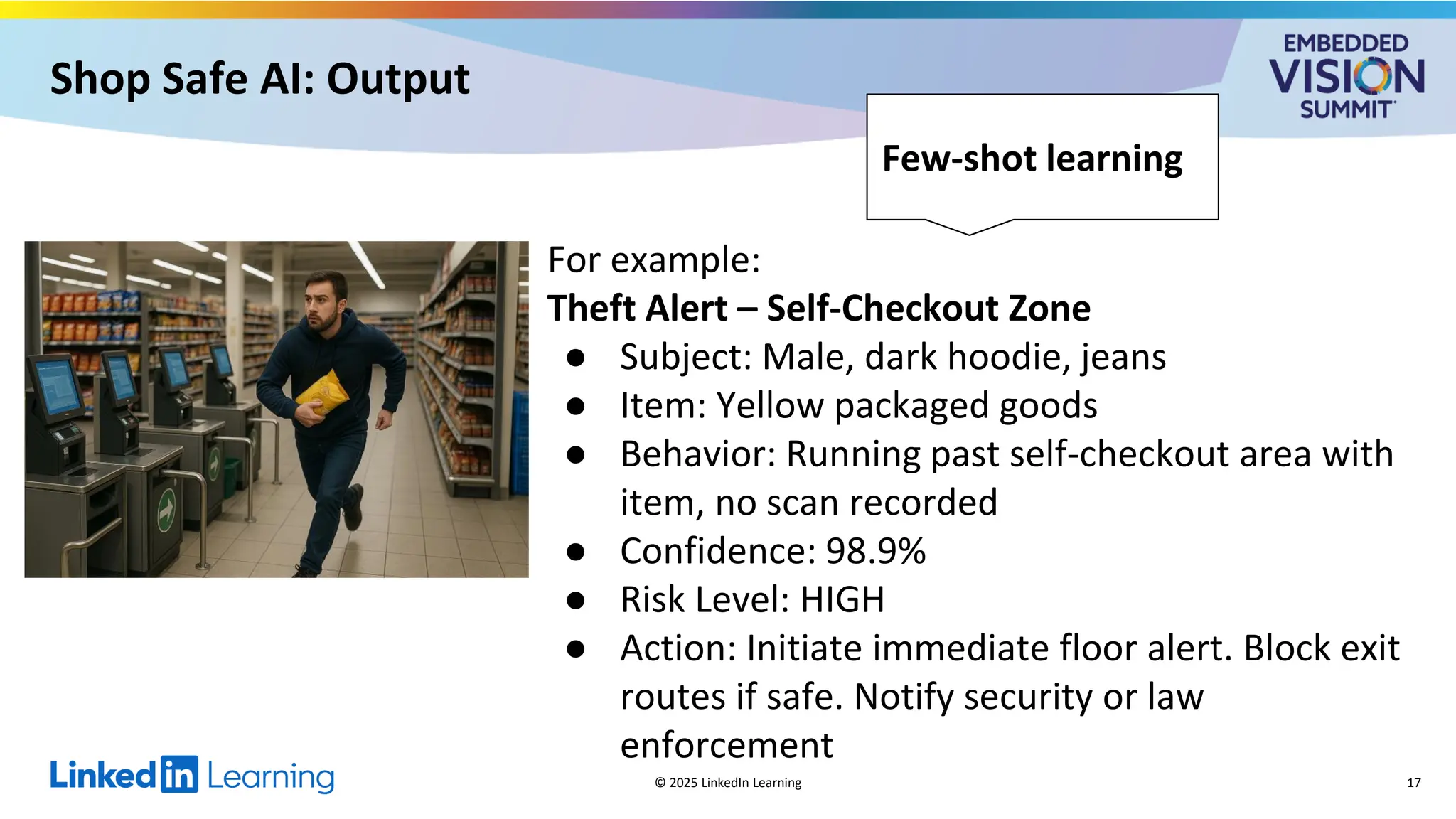

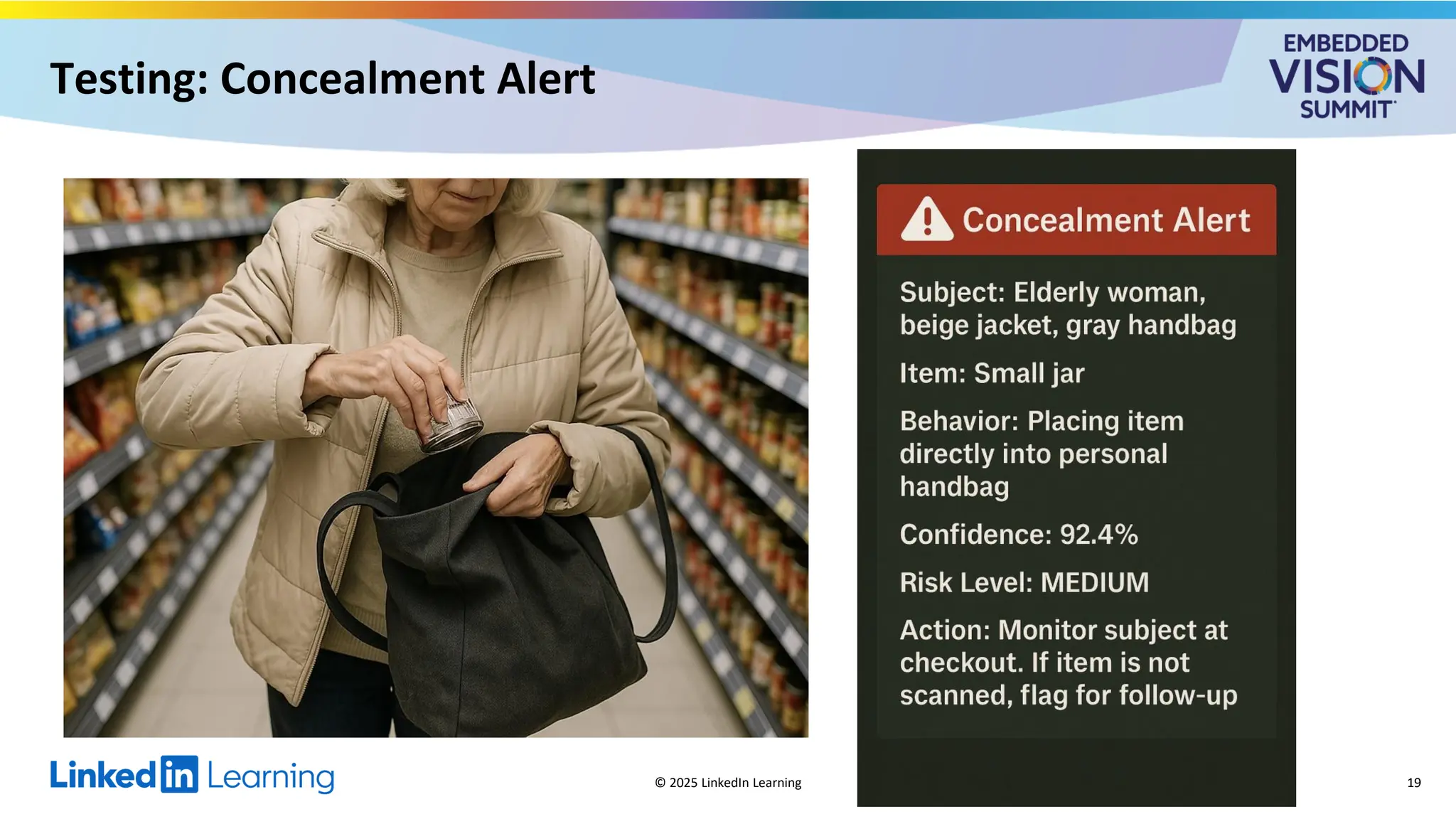

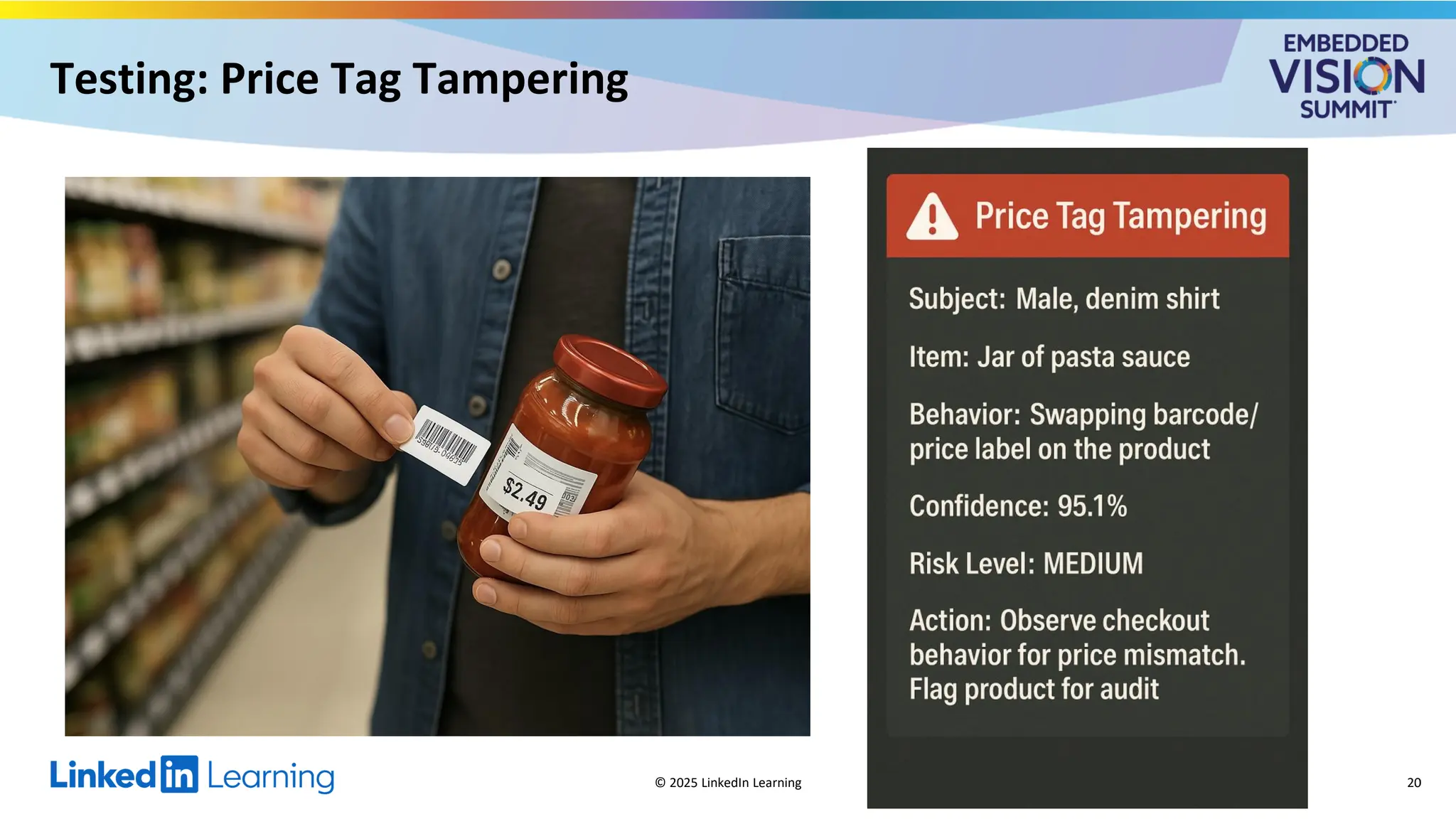

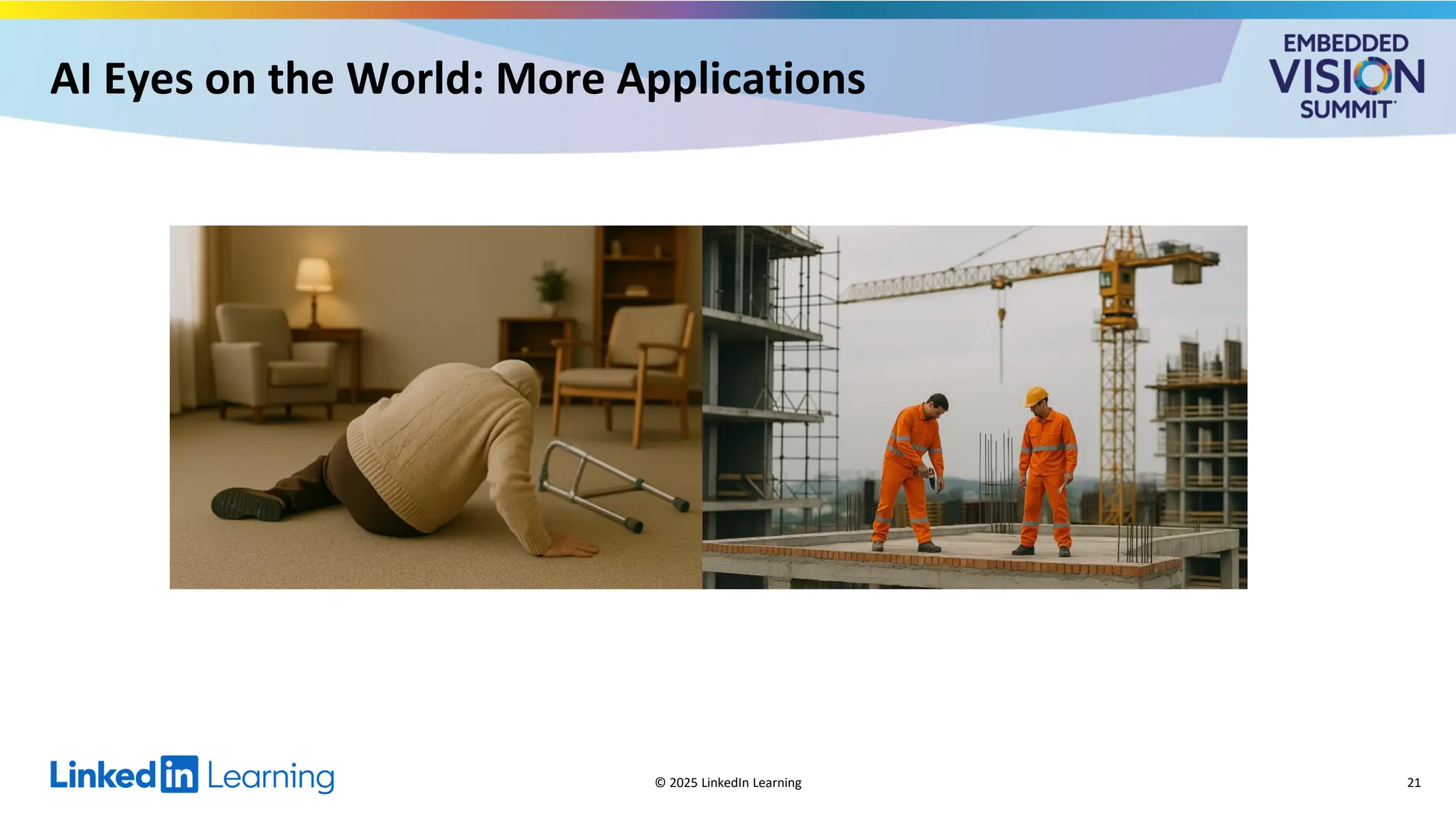

For the full video of this presentation, please visit: https://www.edge-ai-vision.com/2025/09/unlocking-visual-intelligence-advanced-prompt-engineering-for-vision-language-models-a-presentation-from-linkedin-learning/

Alina Li Zhang, Senior Data Scientist and Tech Writer at LinkedIn Learning, presents the “Unlocking Visual Intelligence: Advanced Prompt Engineering for Vision-language Models” tutorial at the May 2025 Embedded Vision Summit.

Imagine a world where AI systems automatically detect thefts in grocery stores, ensure safety on construction sites and identify patient falls in hospitals. This is no longer science fiction: companies today are building powerful applications that combine visual content with textual data to understand context and act intelligently.

In this talk, Zhang delves into vision-language models (VLMs), the core technology behind these intelligent applications, and introduces the Pentagram framework, a structured approach to prompt engineering that significantly improves VLM accuracy and effectiveness. Zhang shows, step by step, how to use this prompt engineering process to build an application that uses a VLM to detect suspicious behaviors such as item concealment in grocery stores. She also explores broader applications of these techniques in a variety of real-world scenarios. You’ll discover what vision-language models make possible and learn how to unlock their full potential.