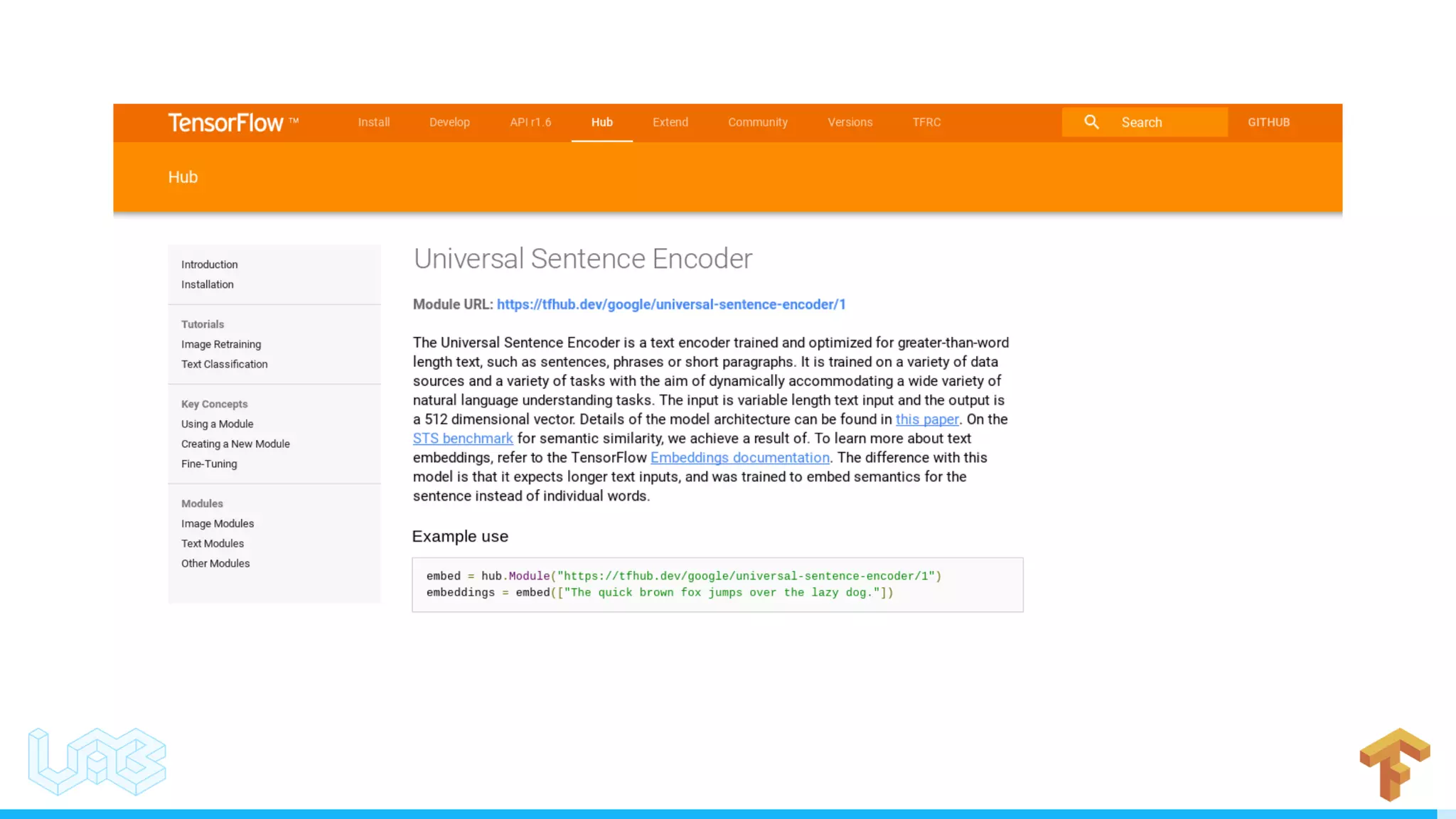

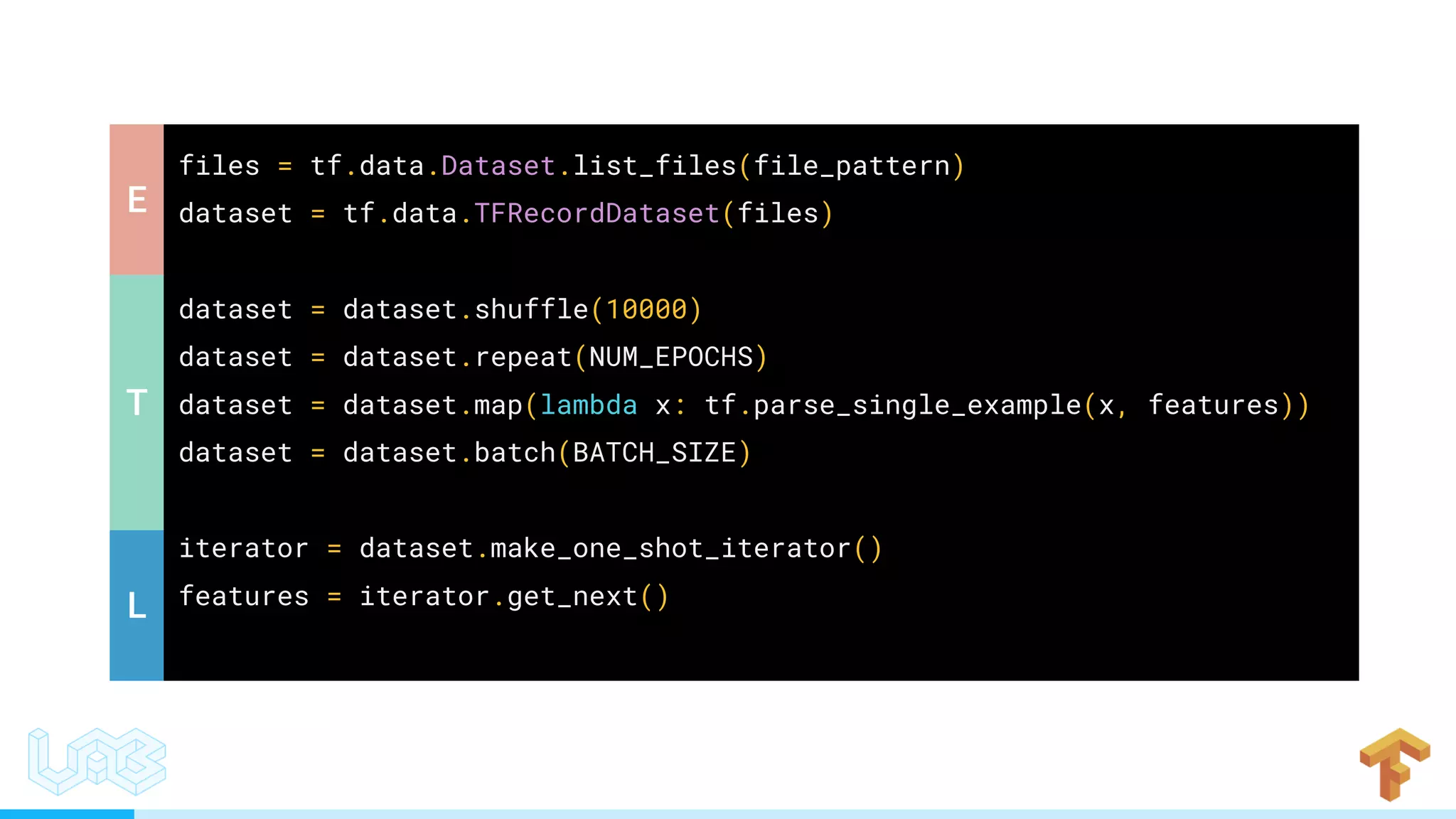

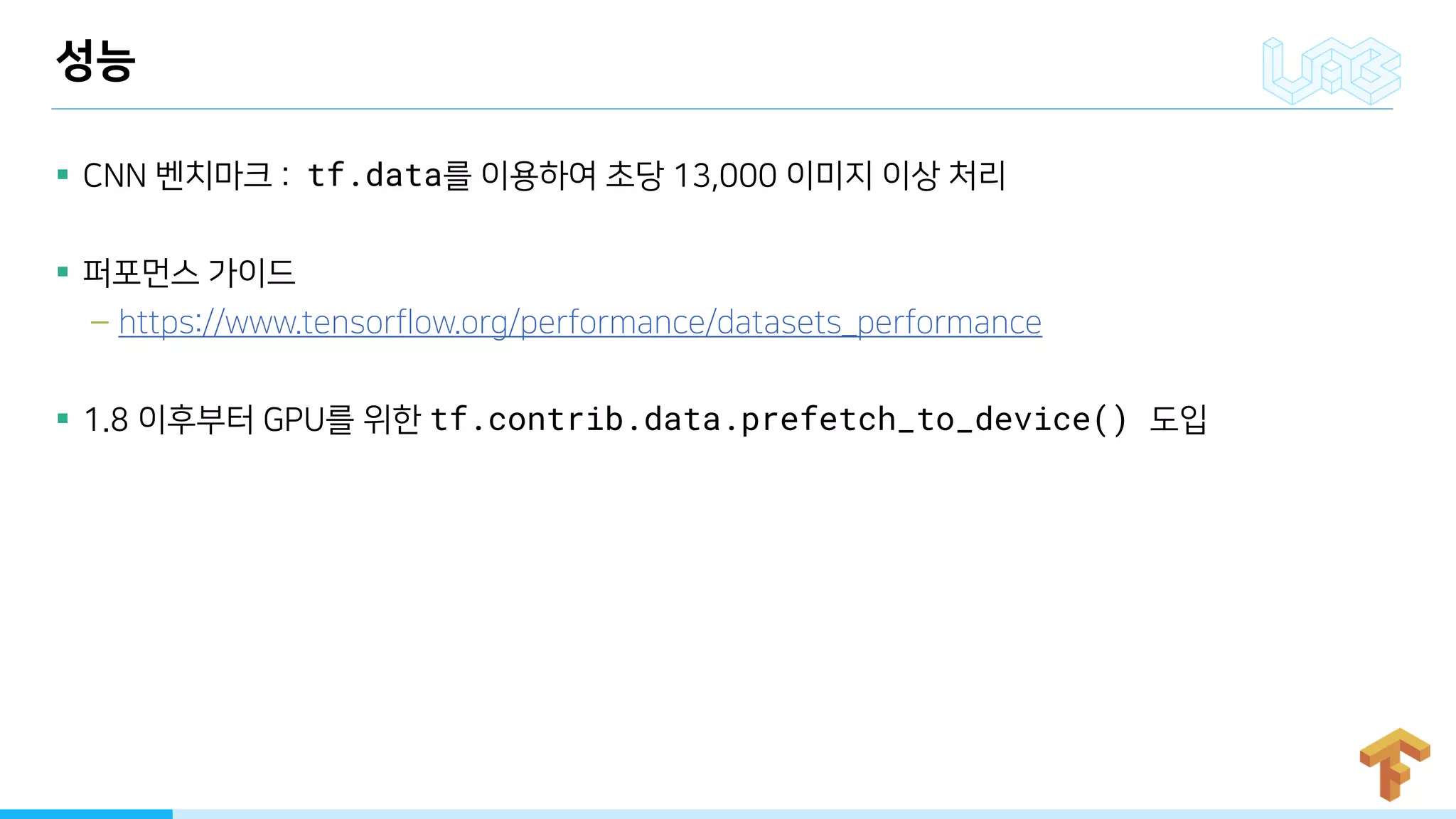

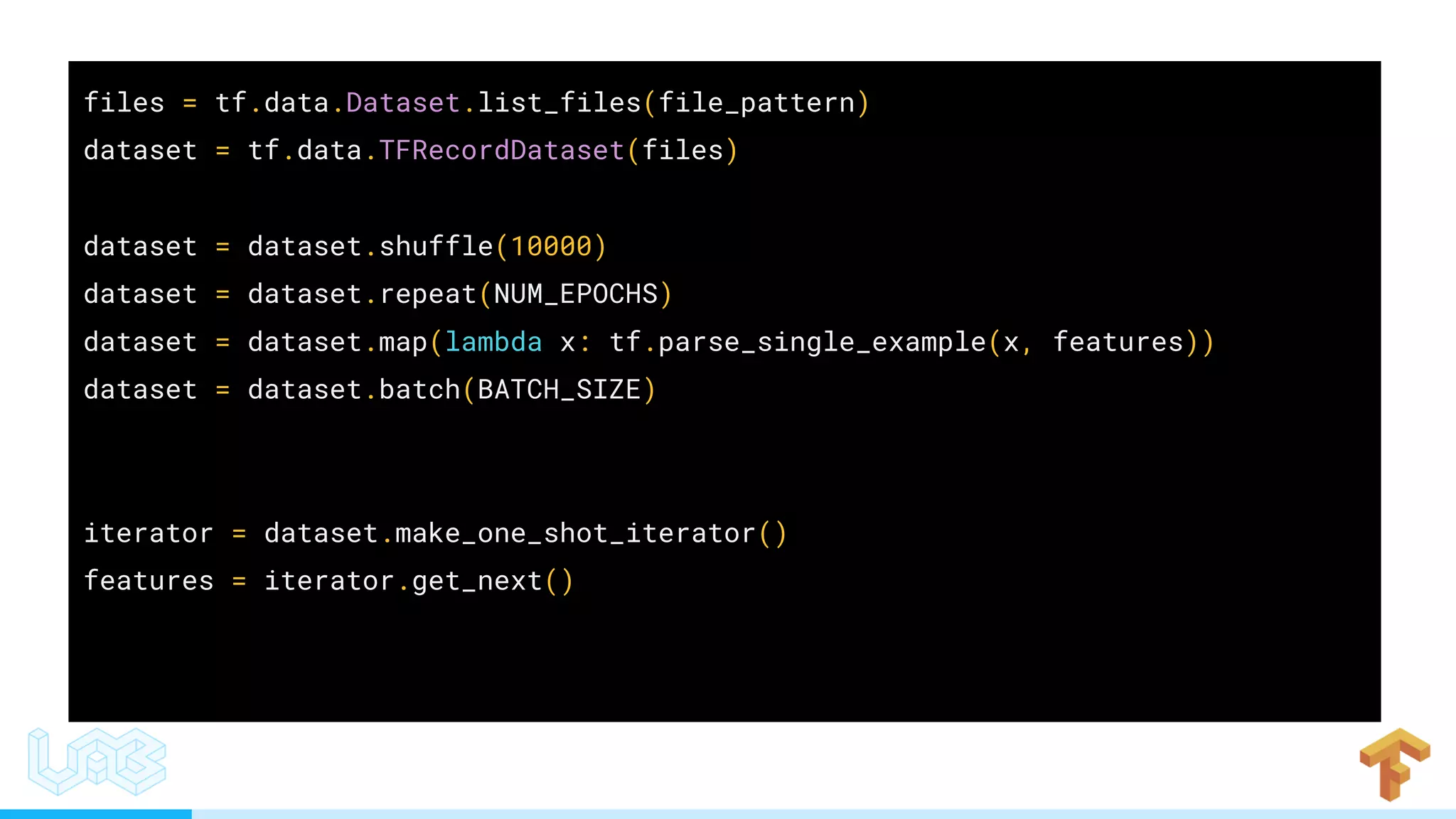

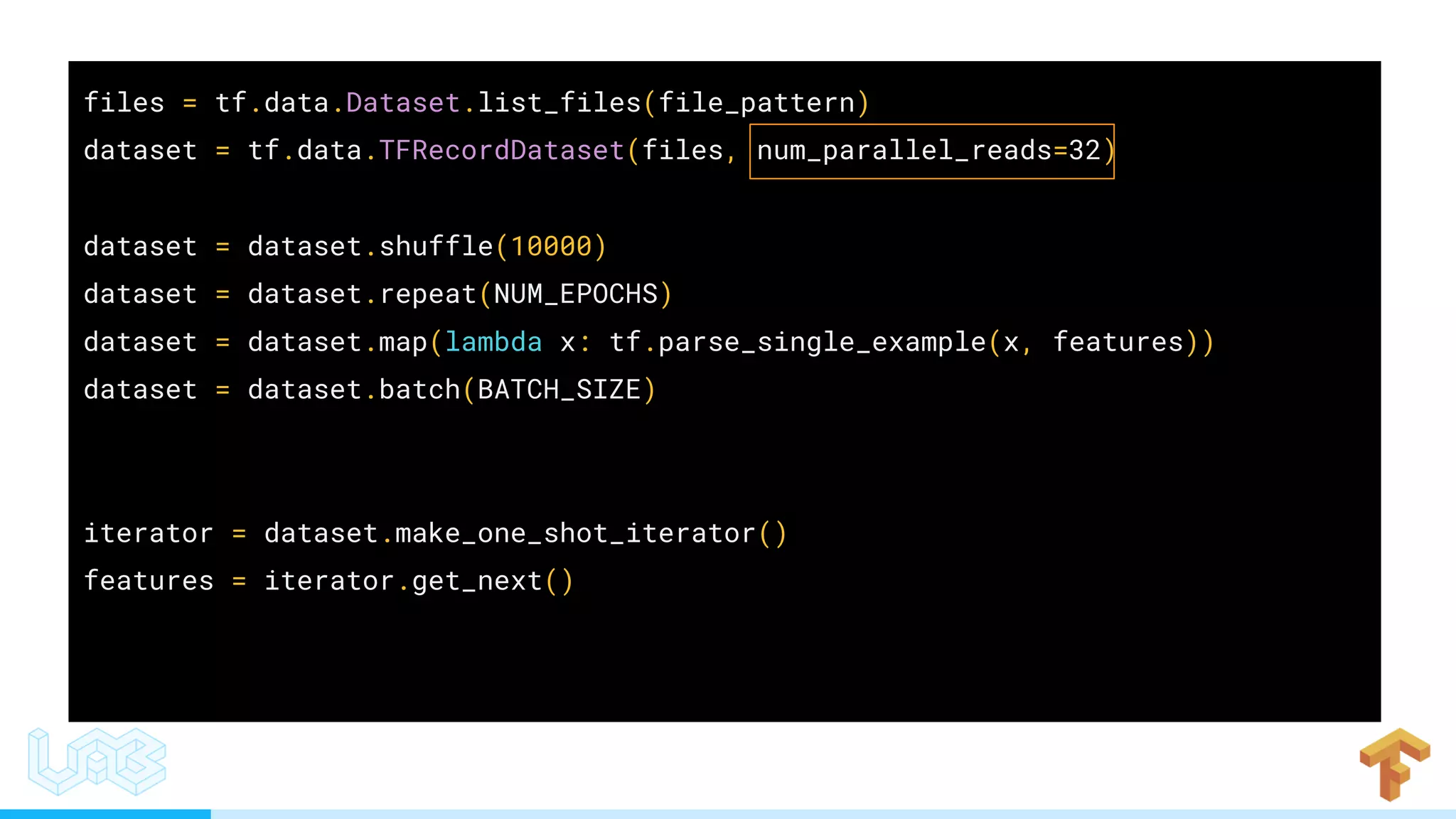

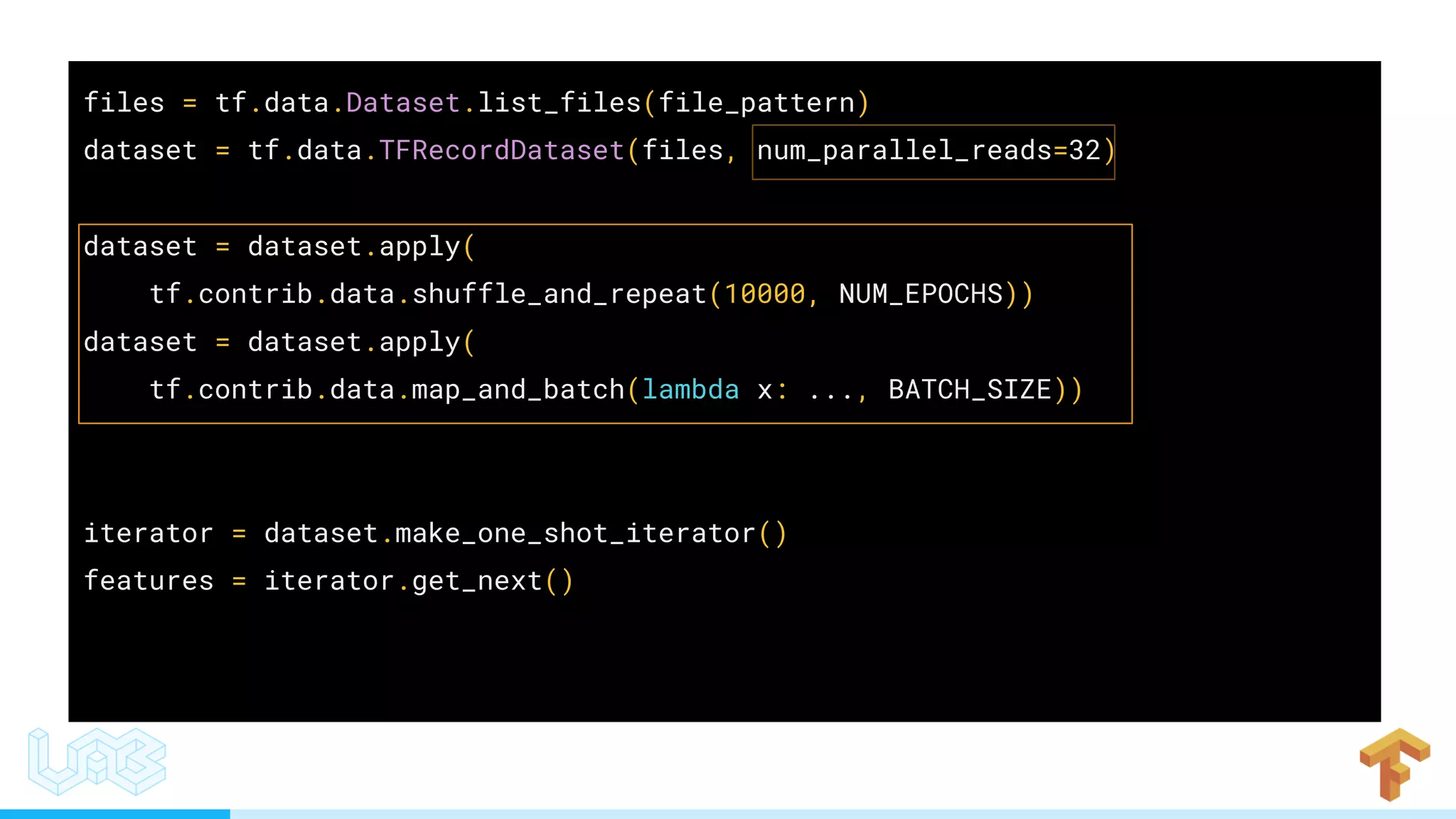

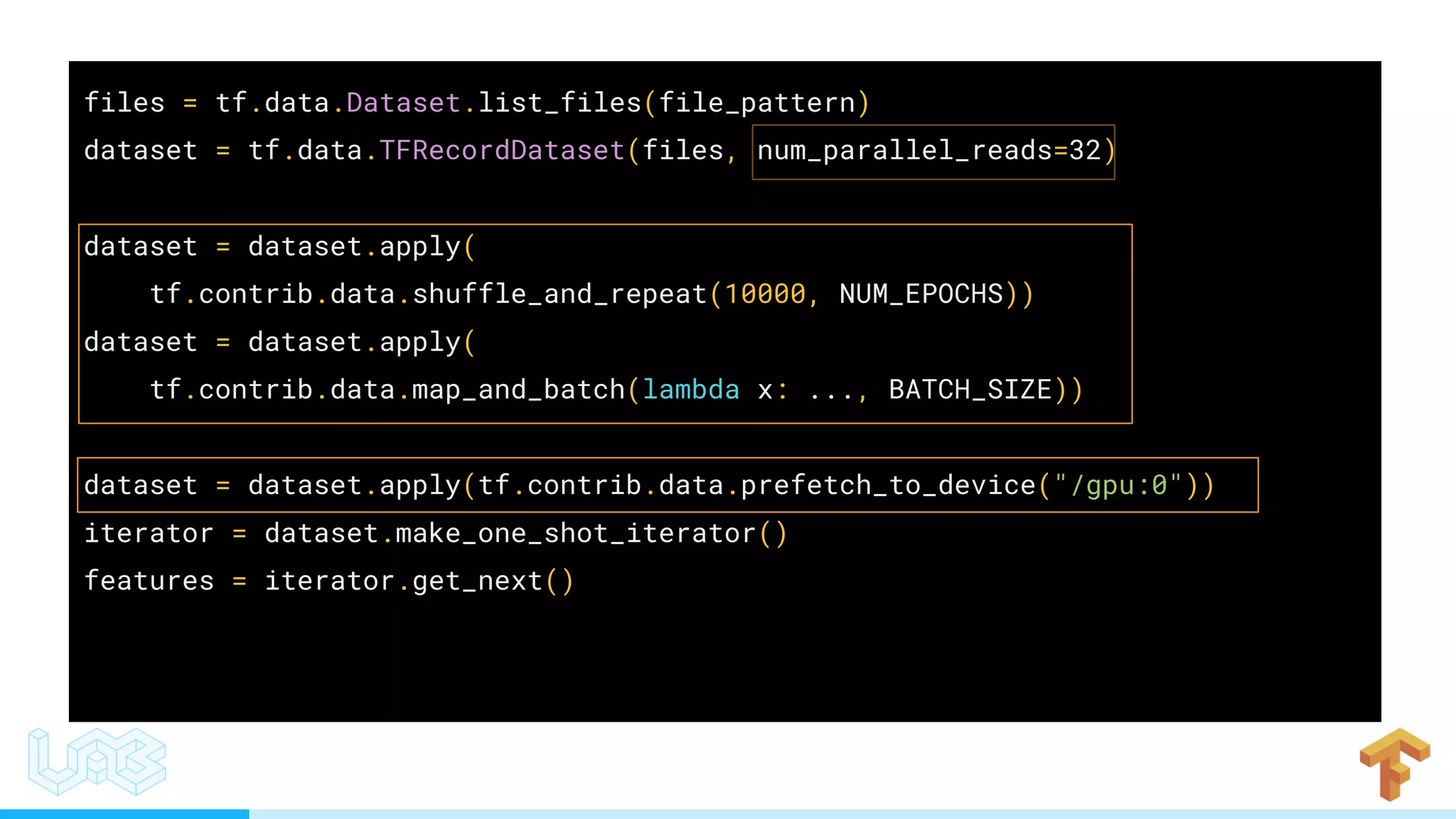

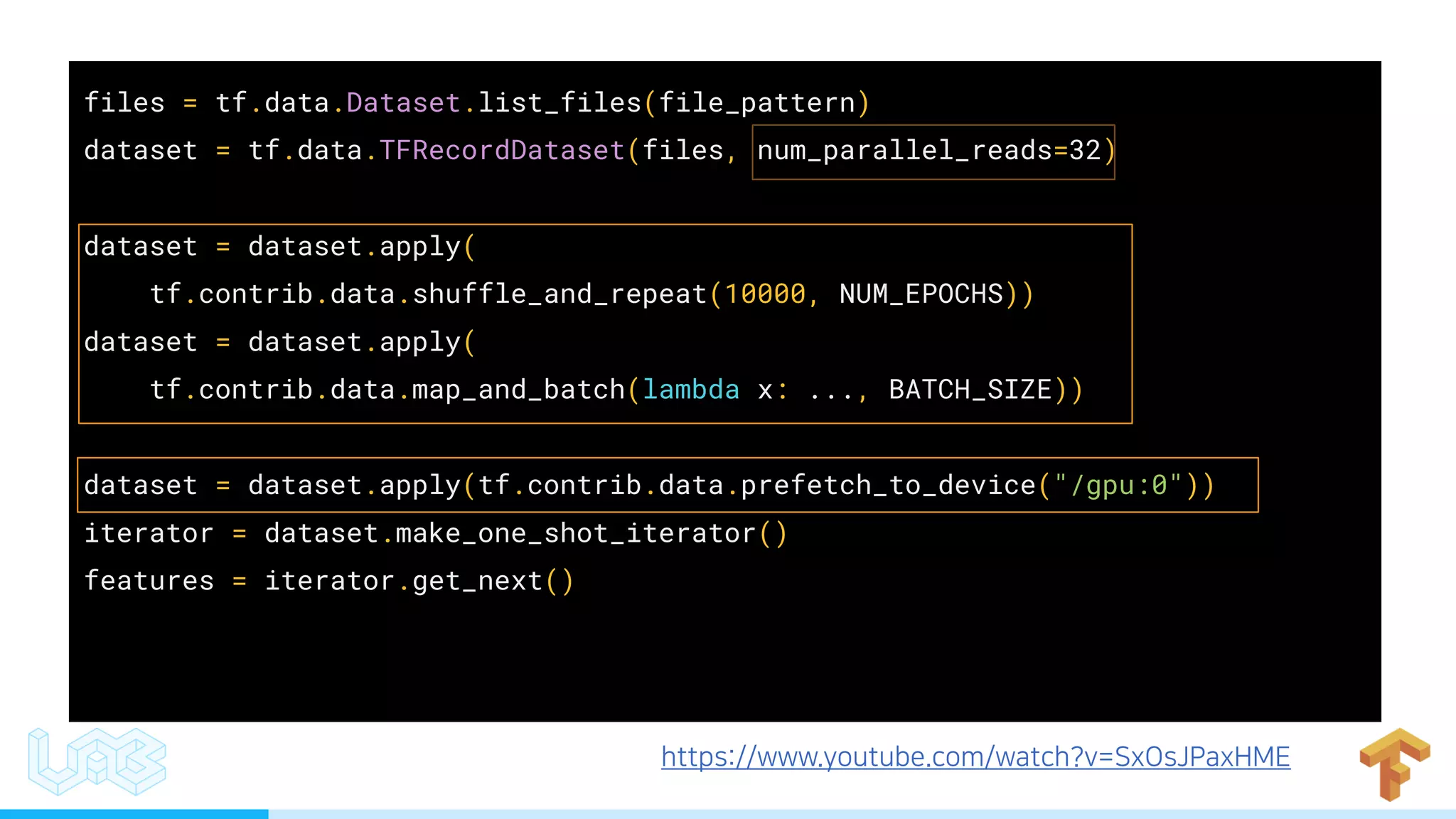

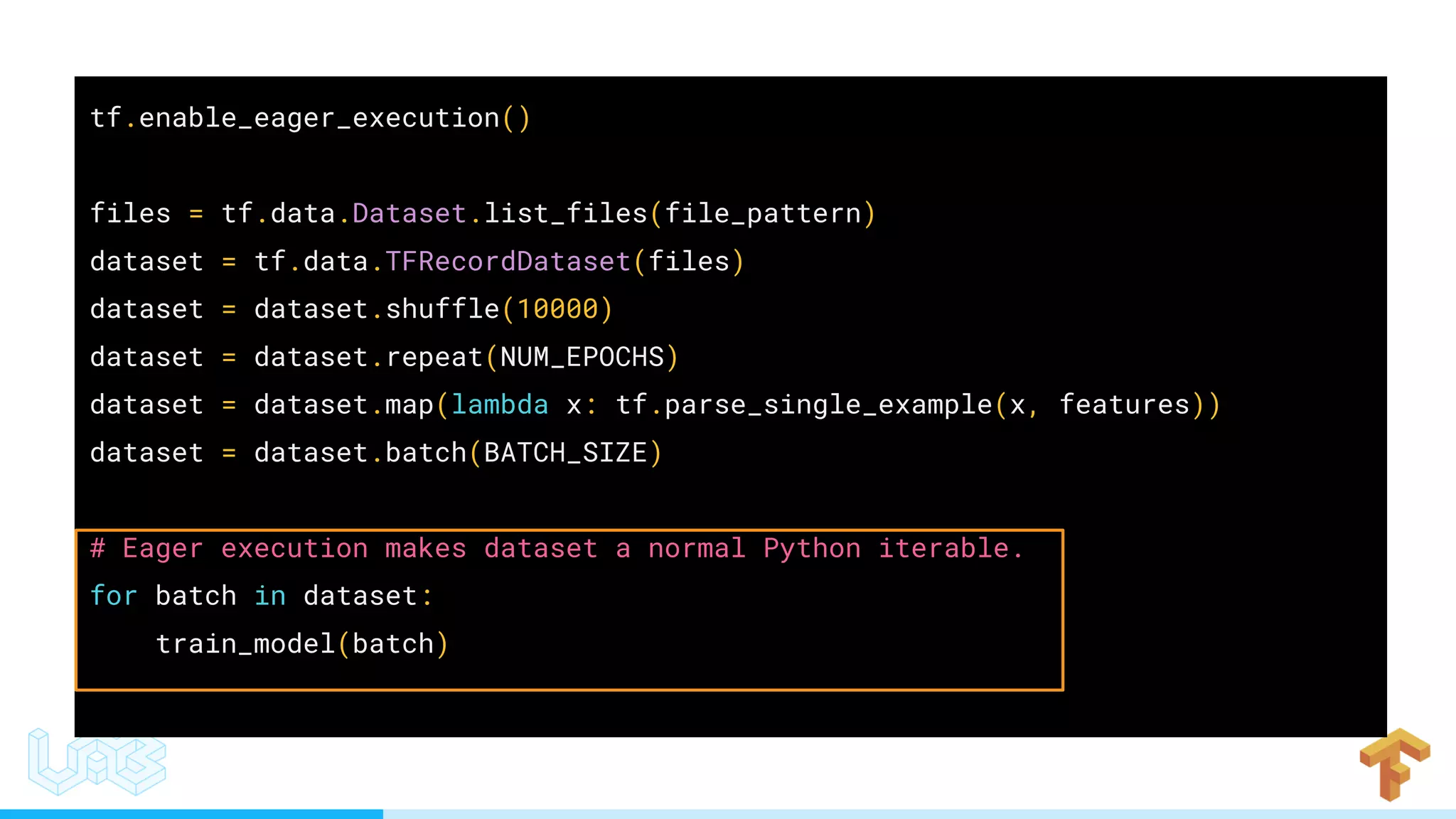

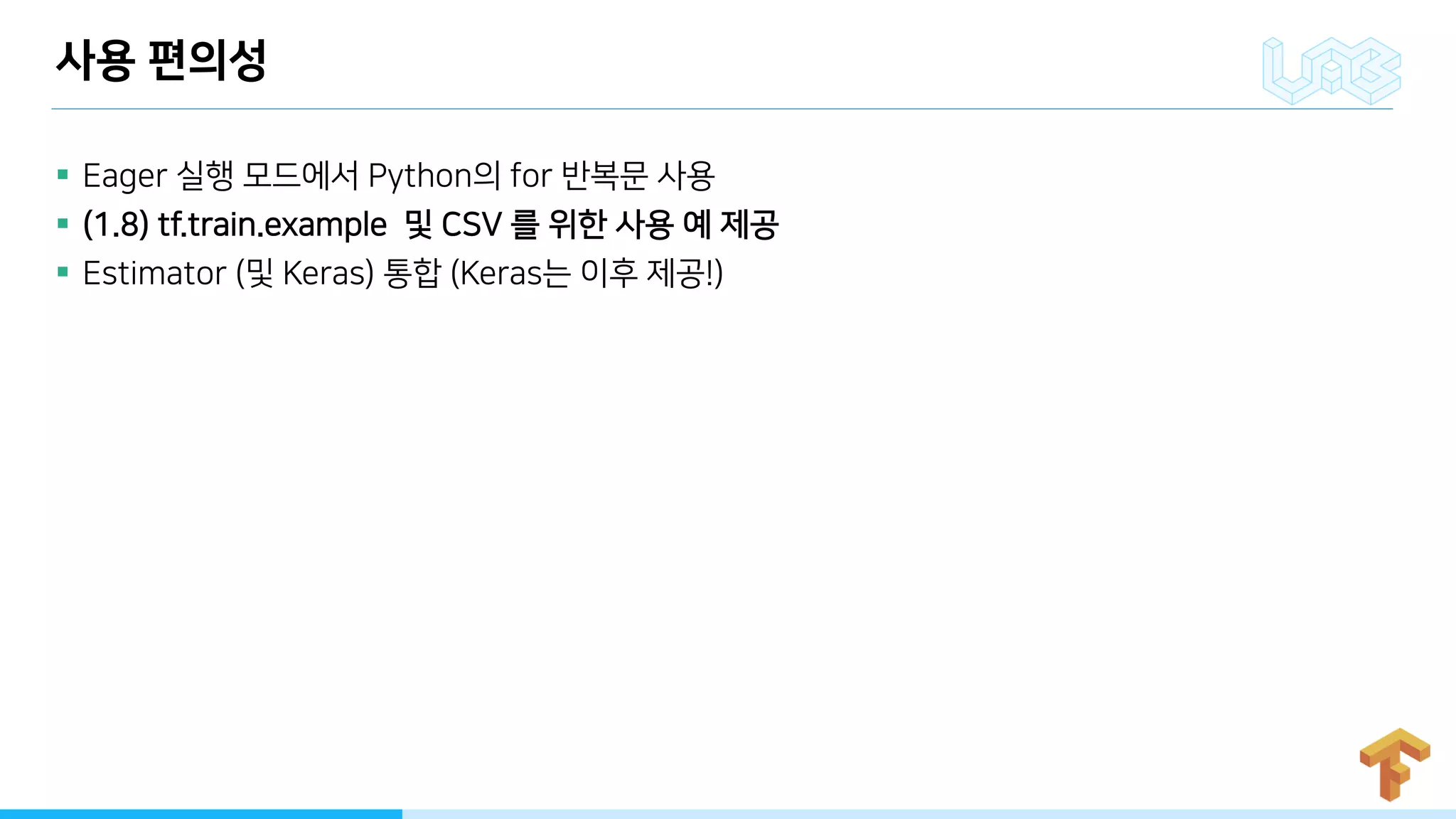

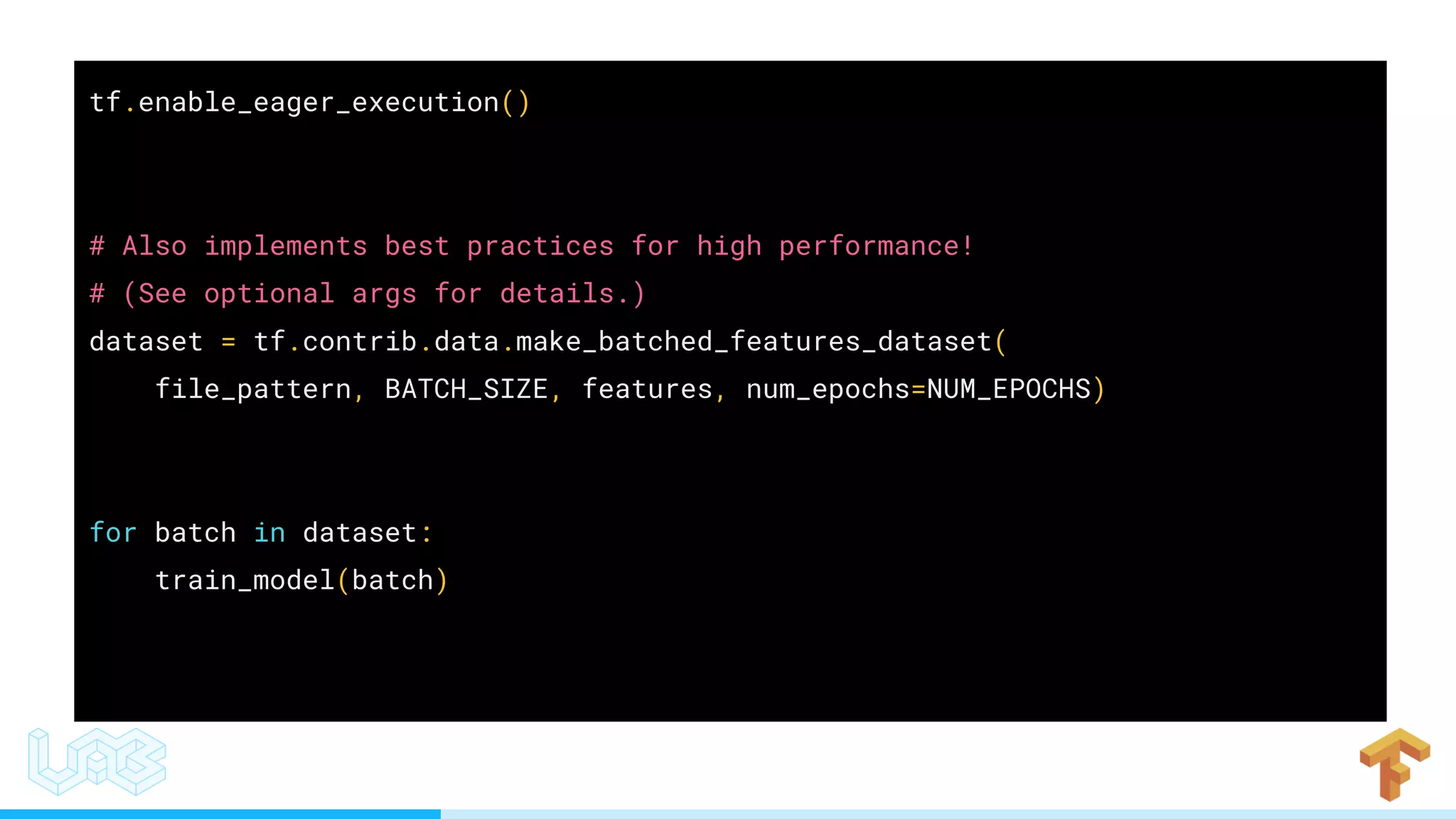

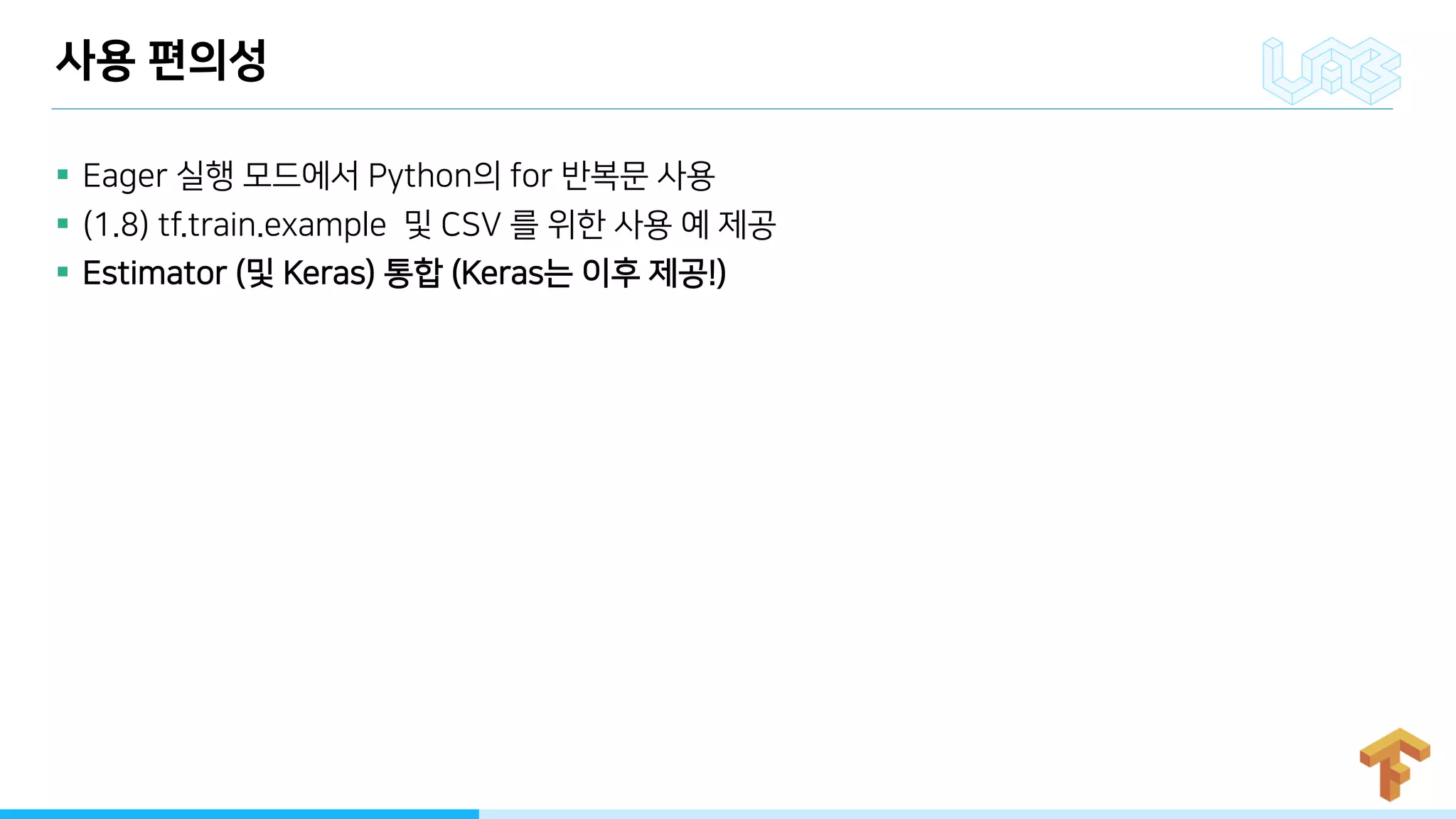

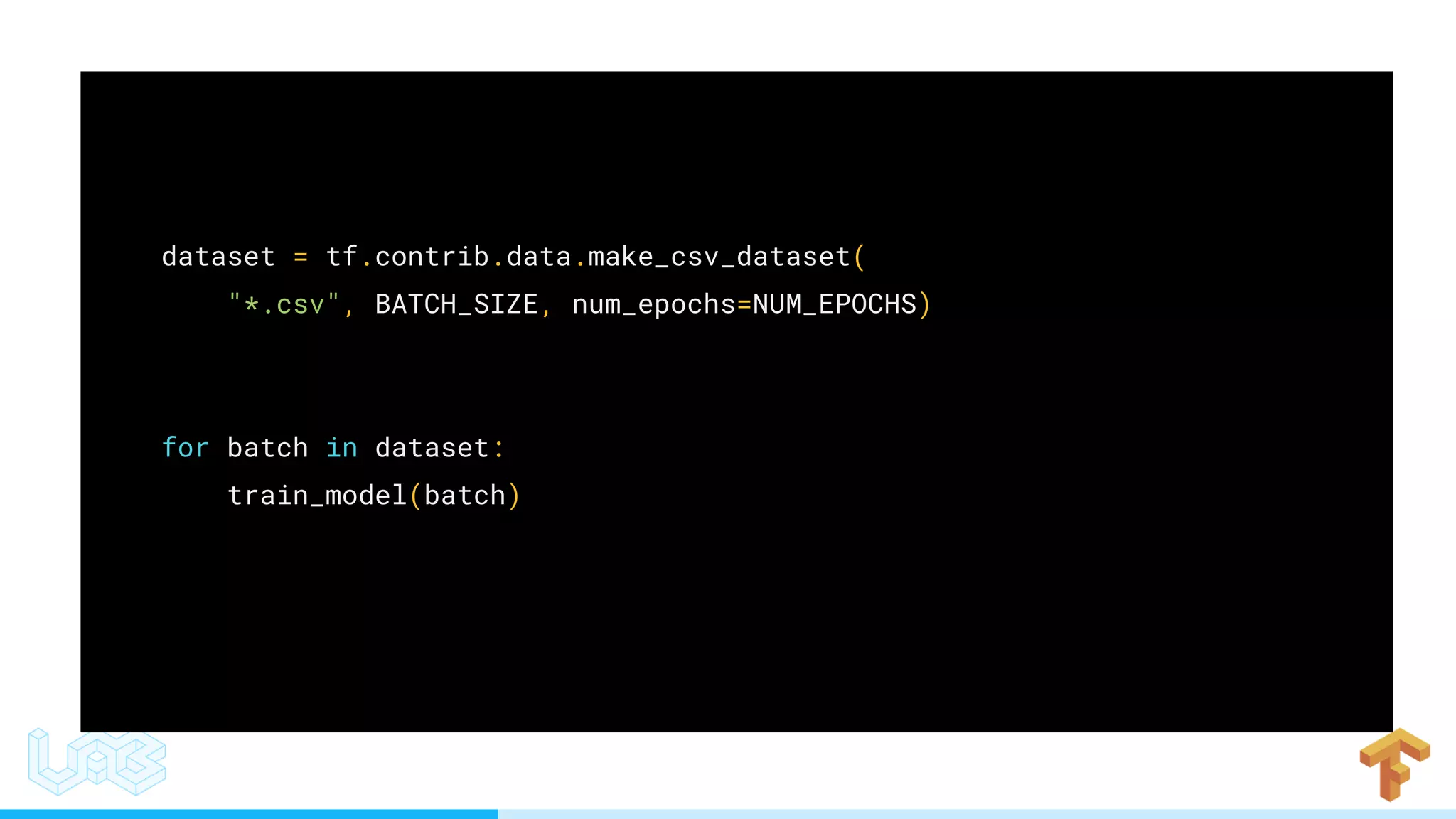

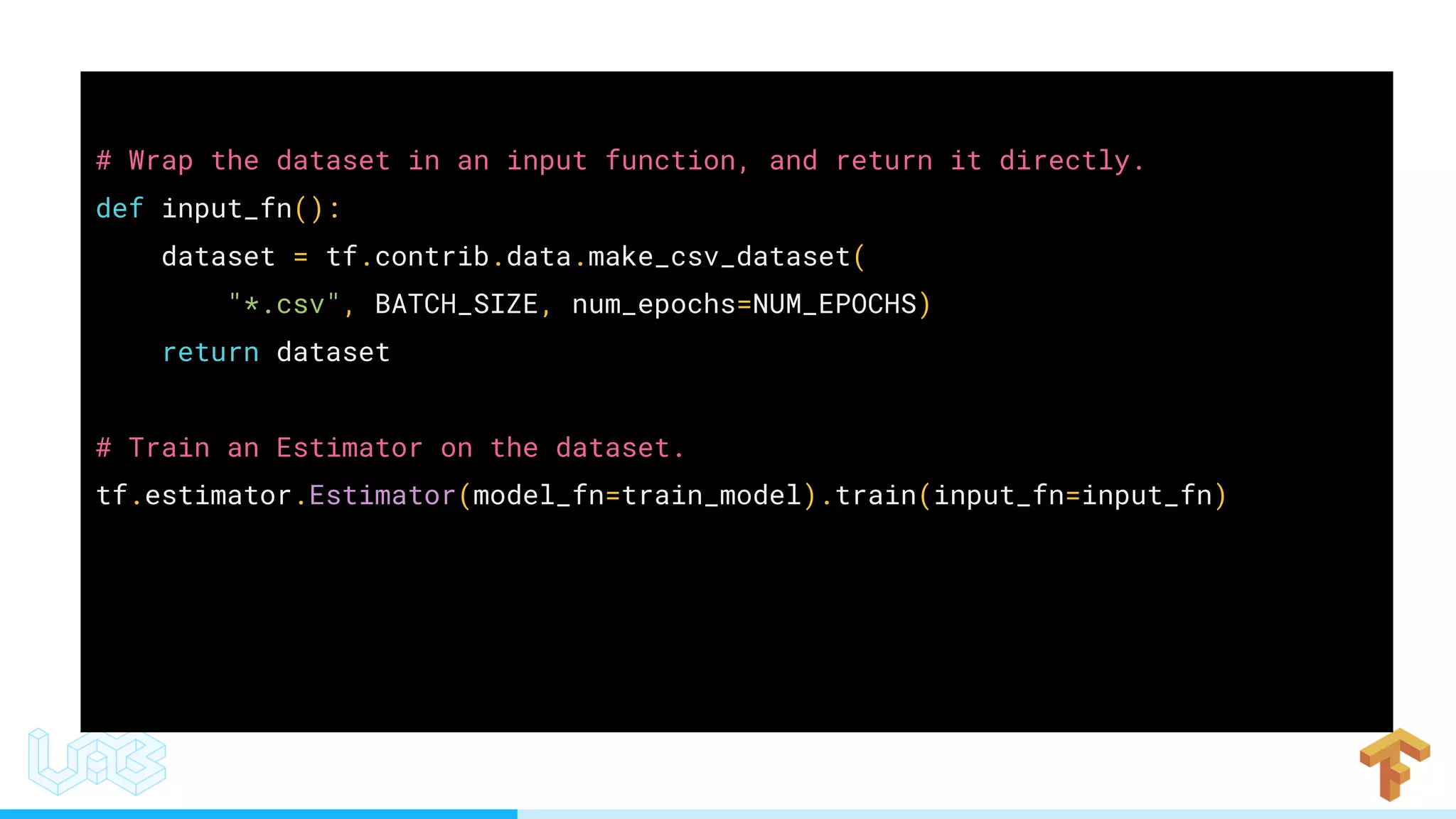

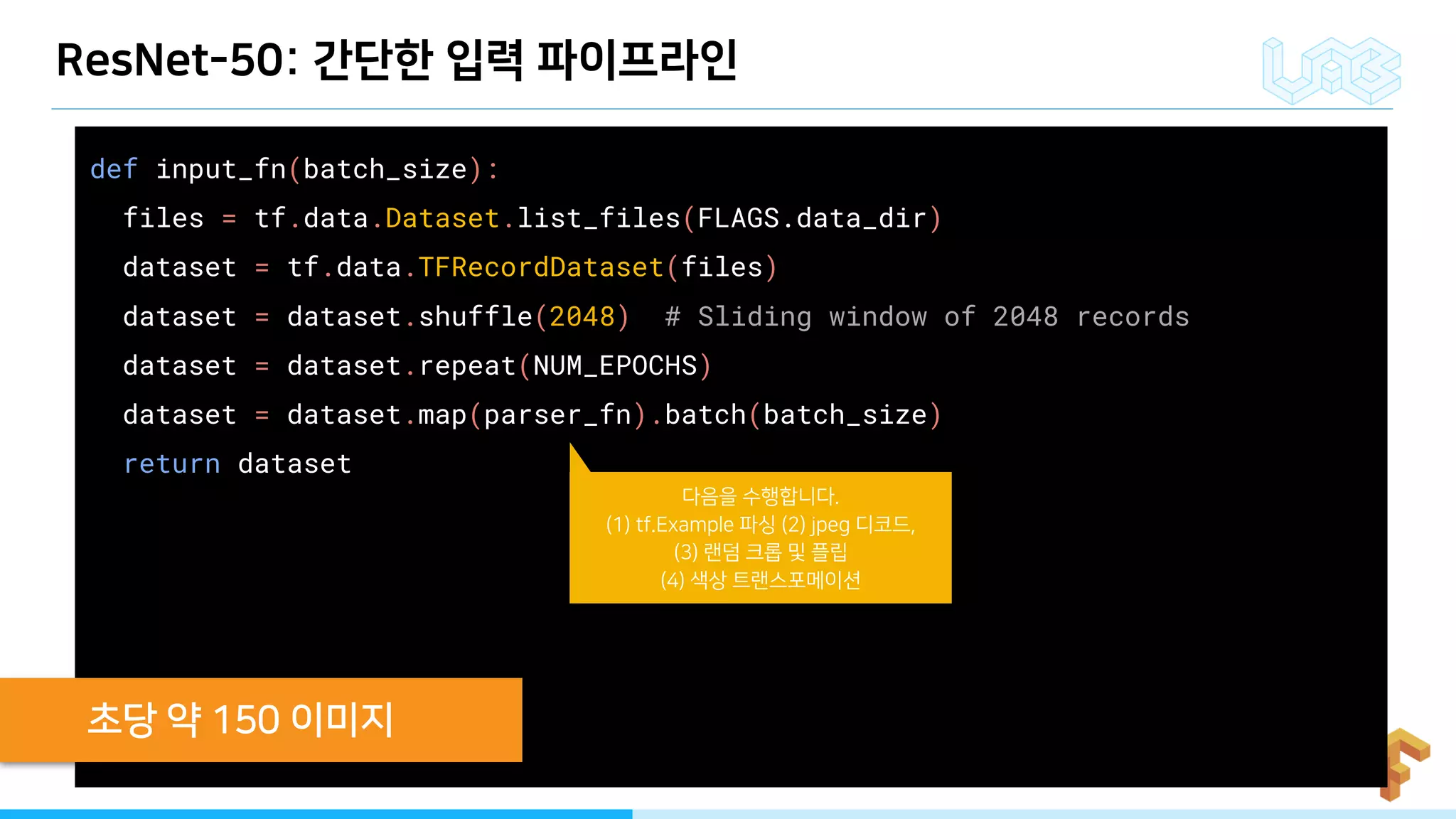

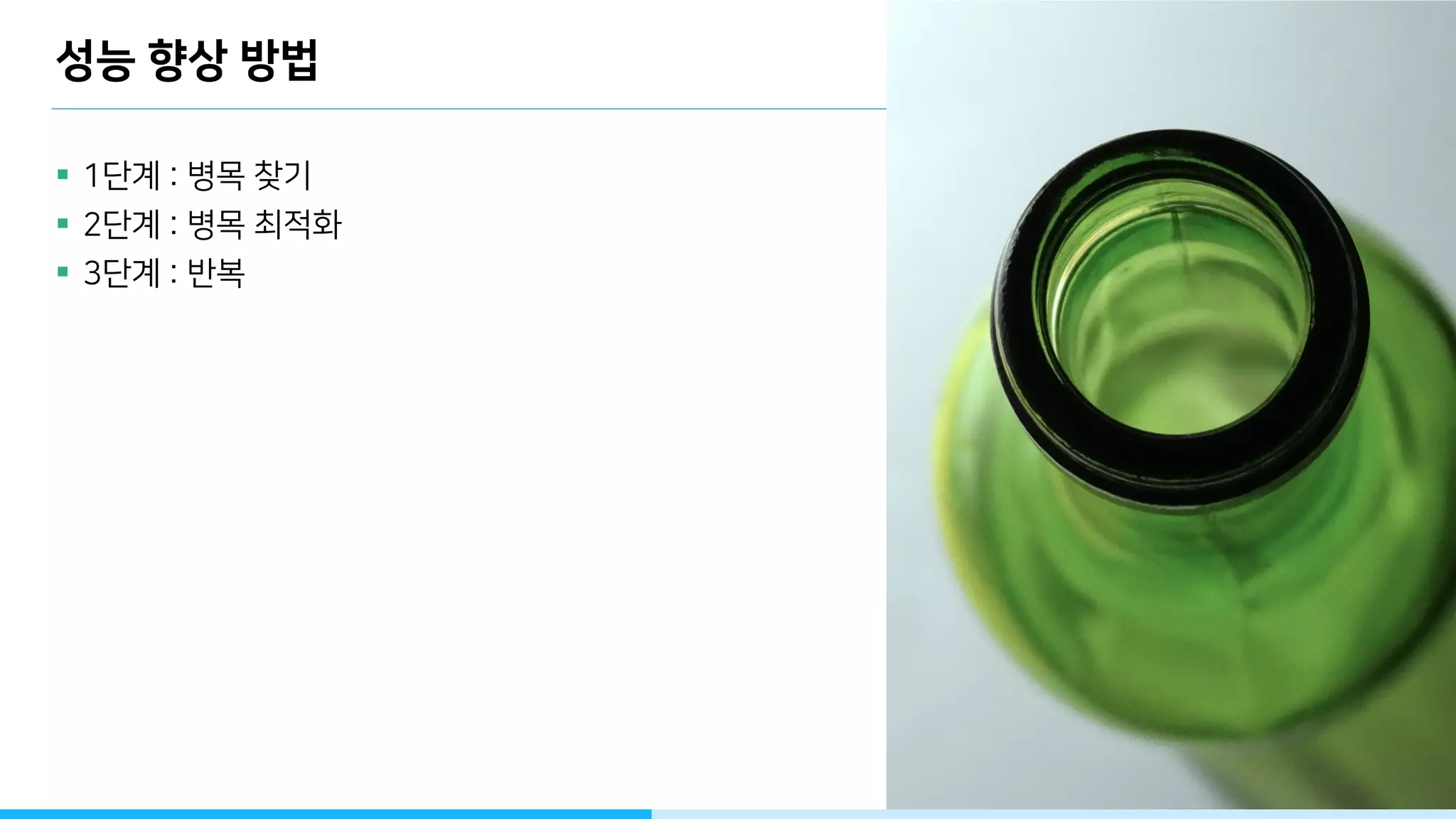

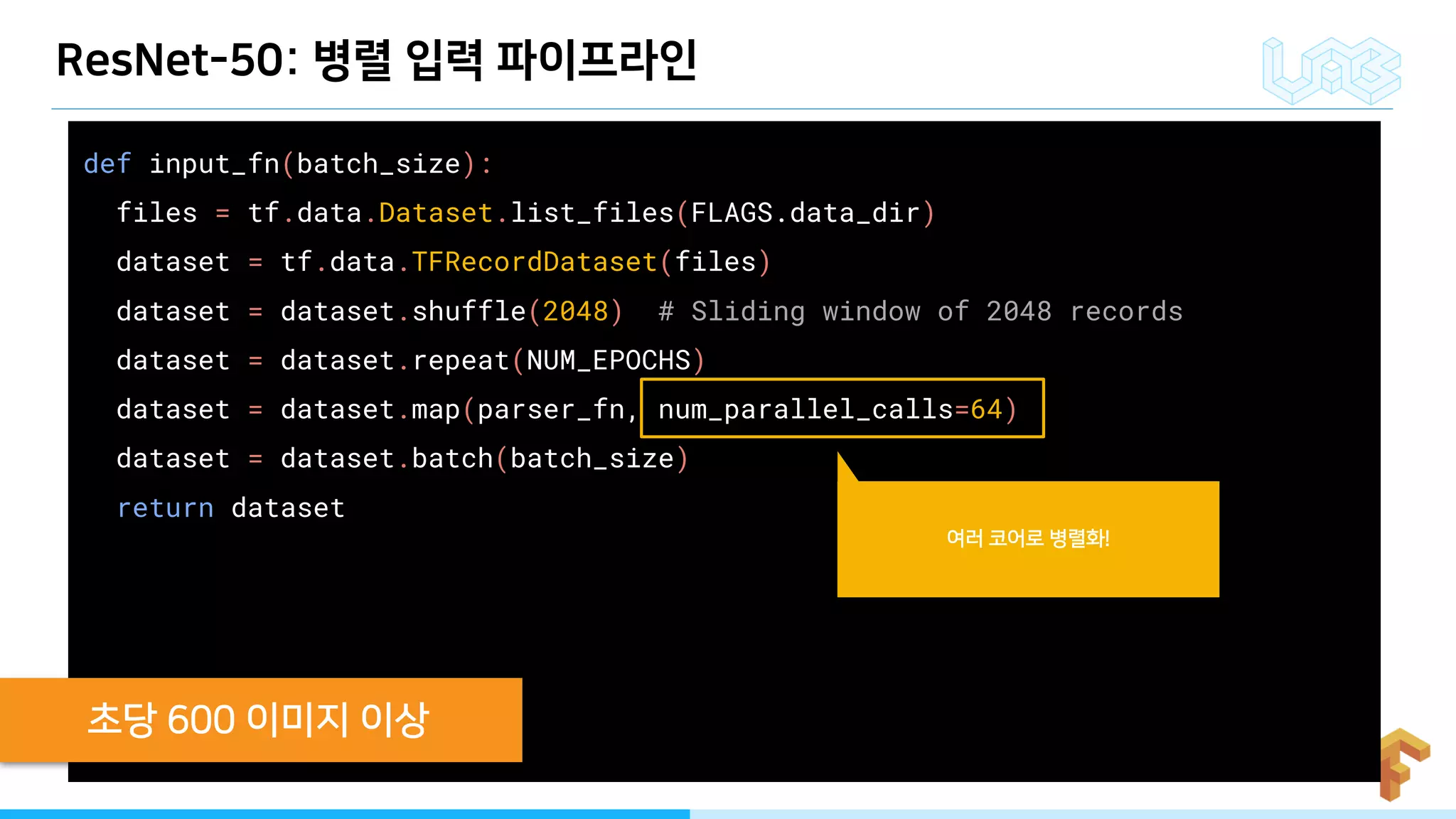

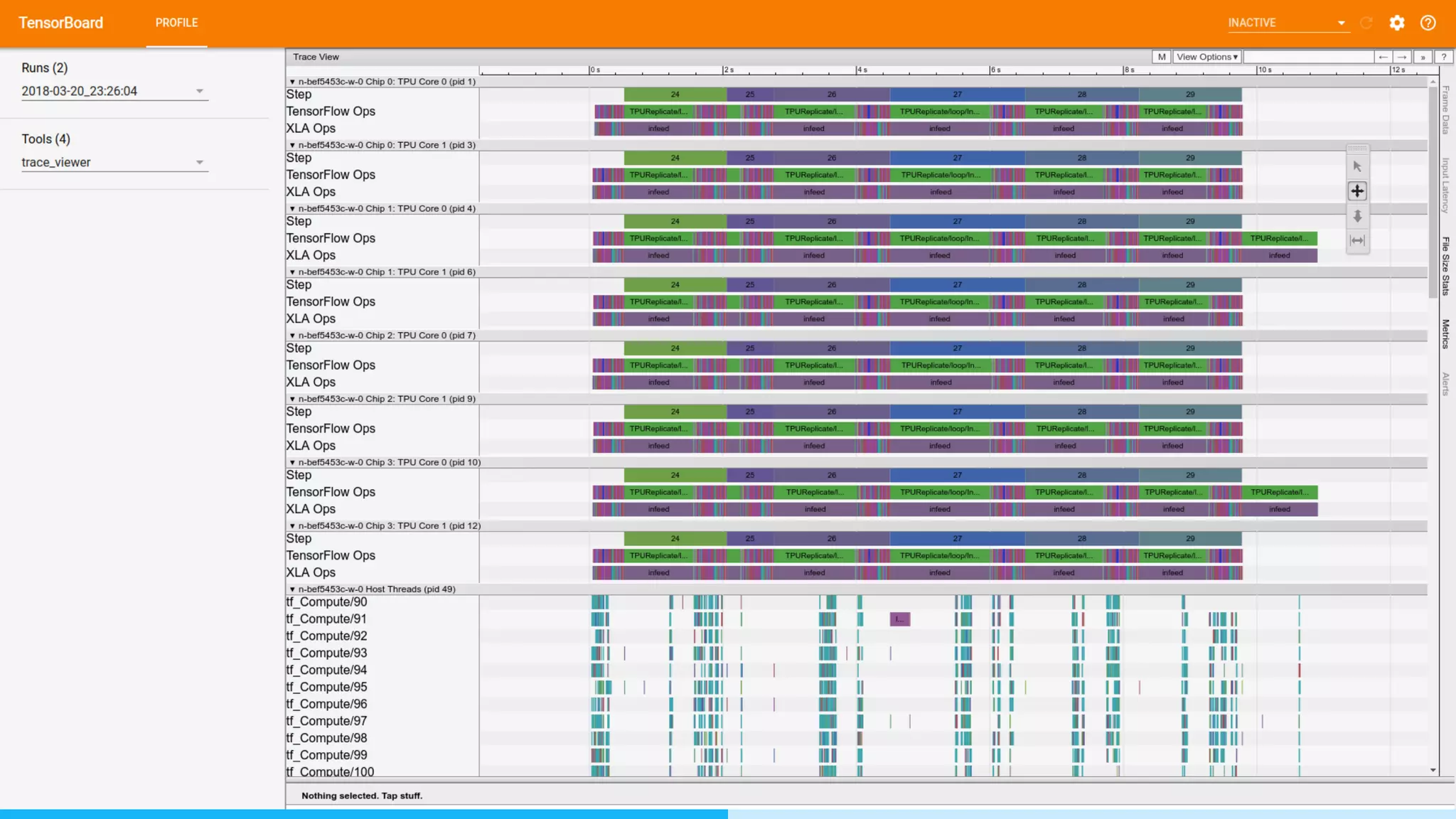

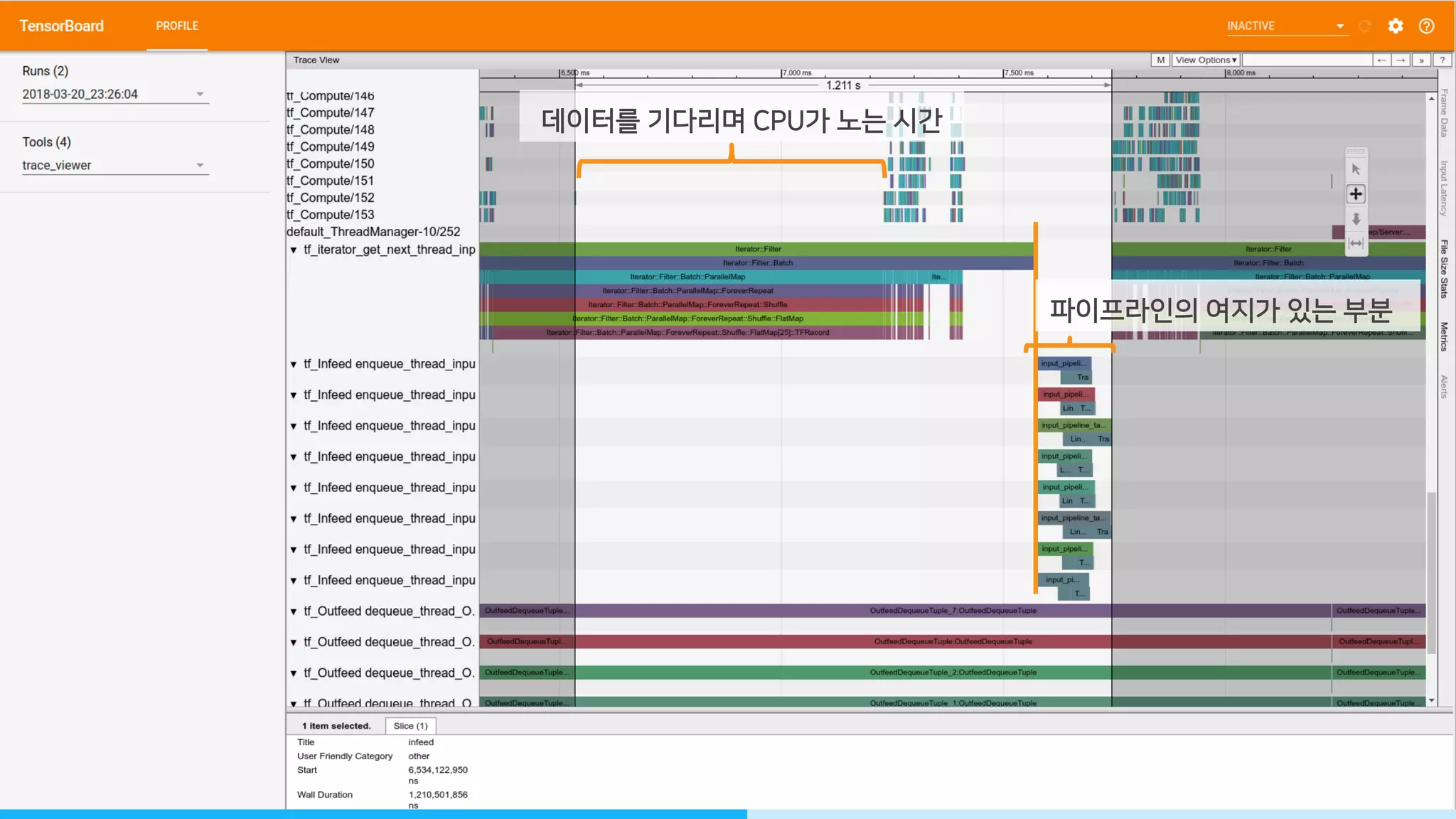

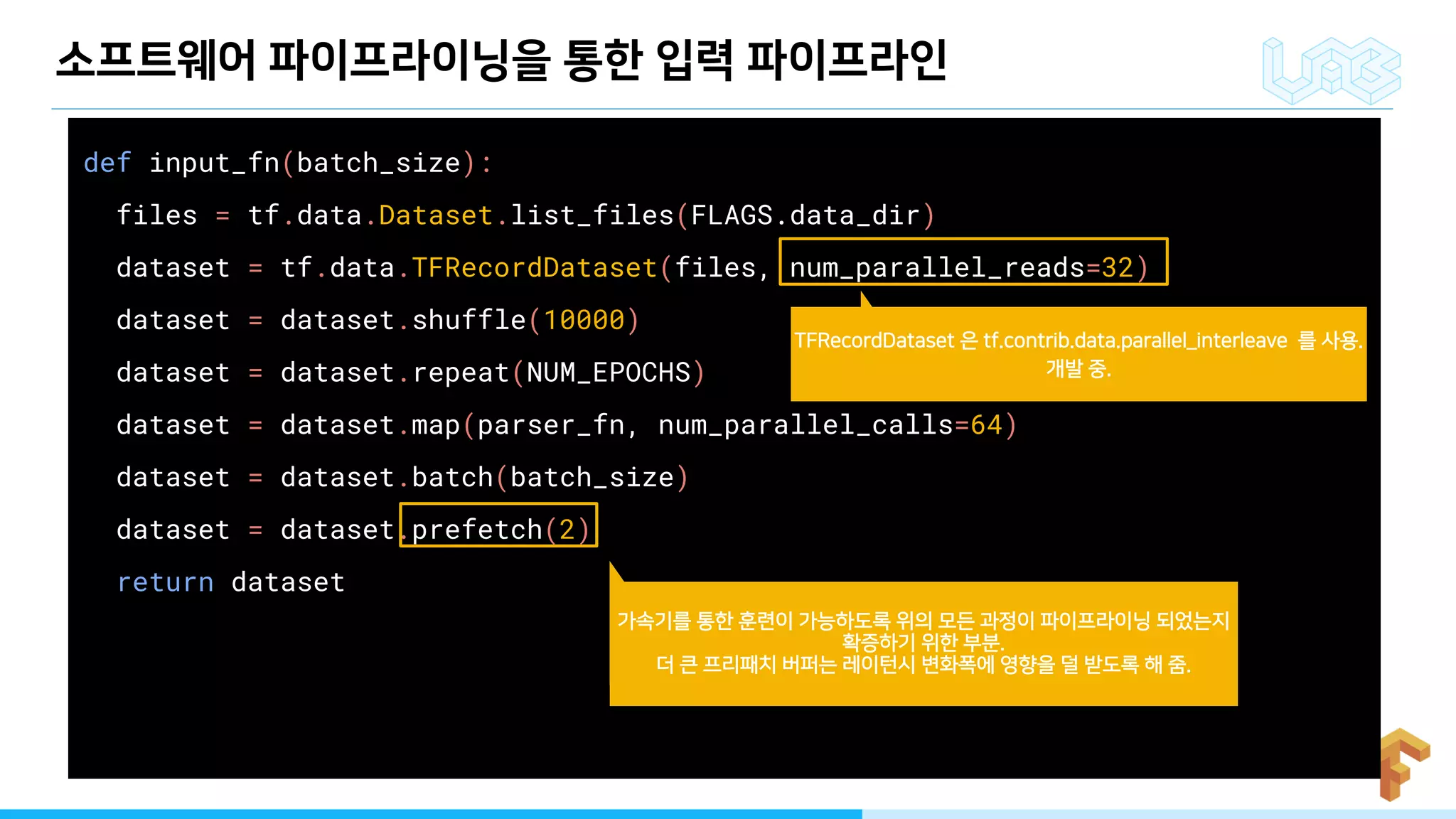

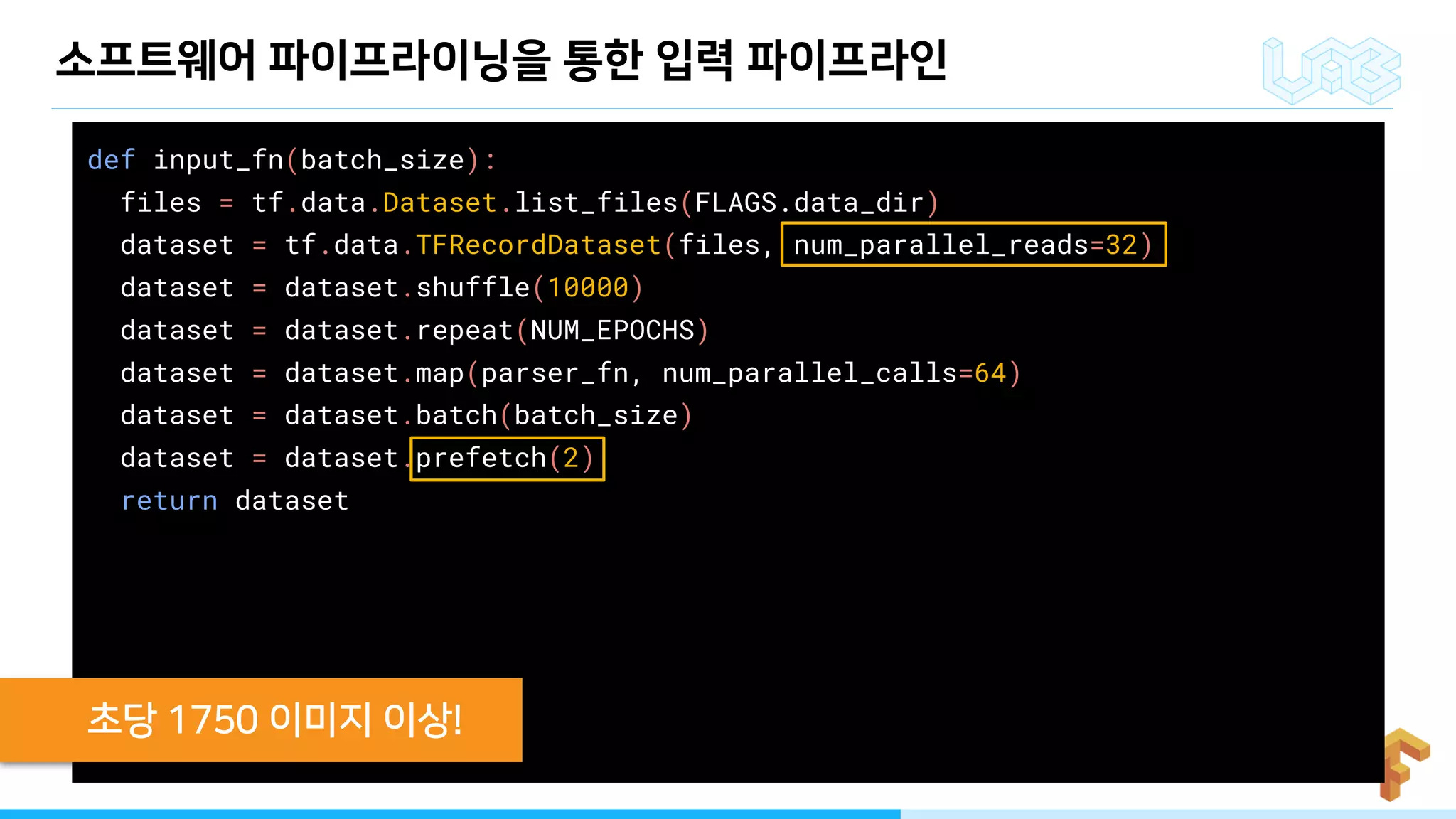

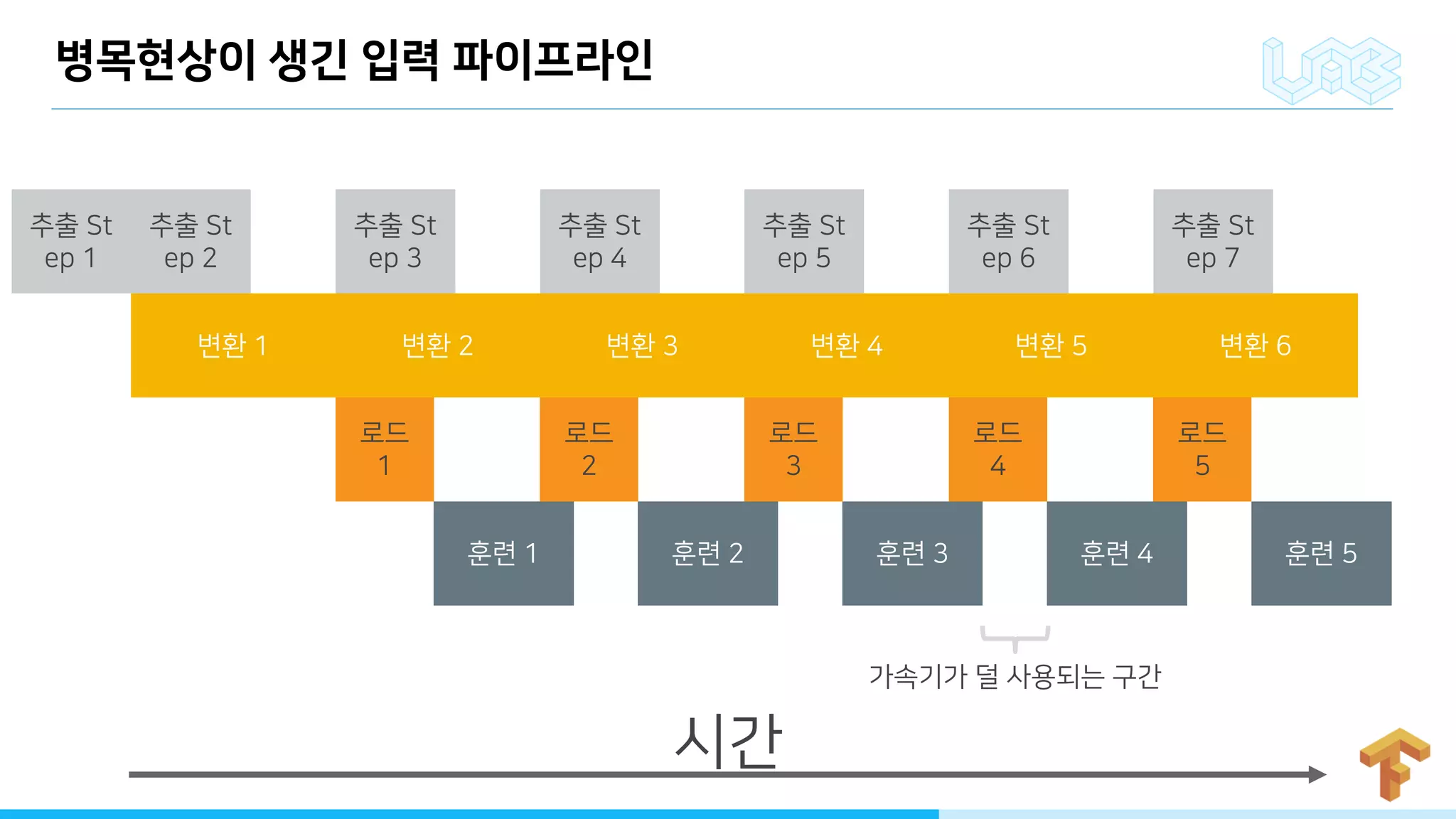

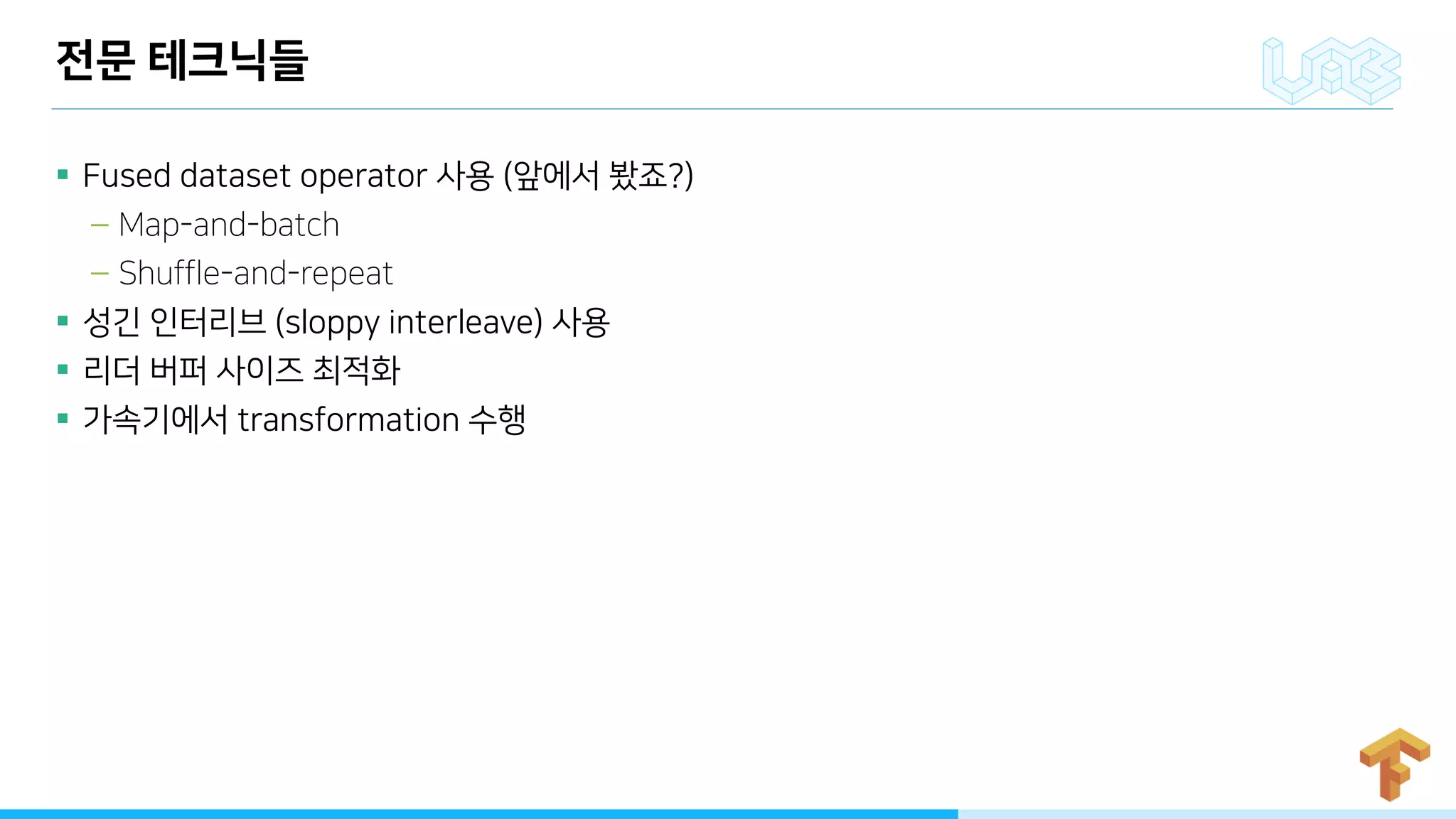

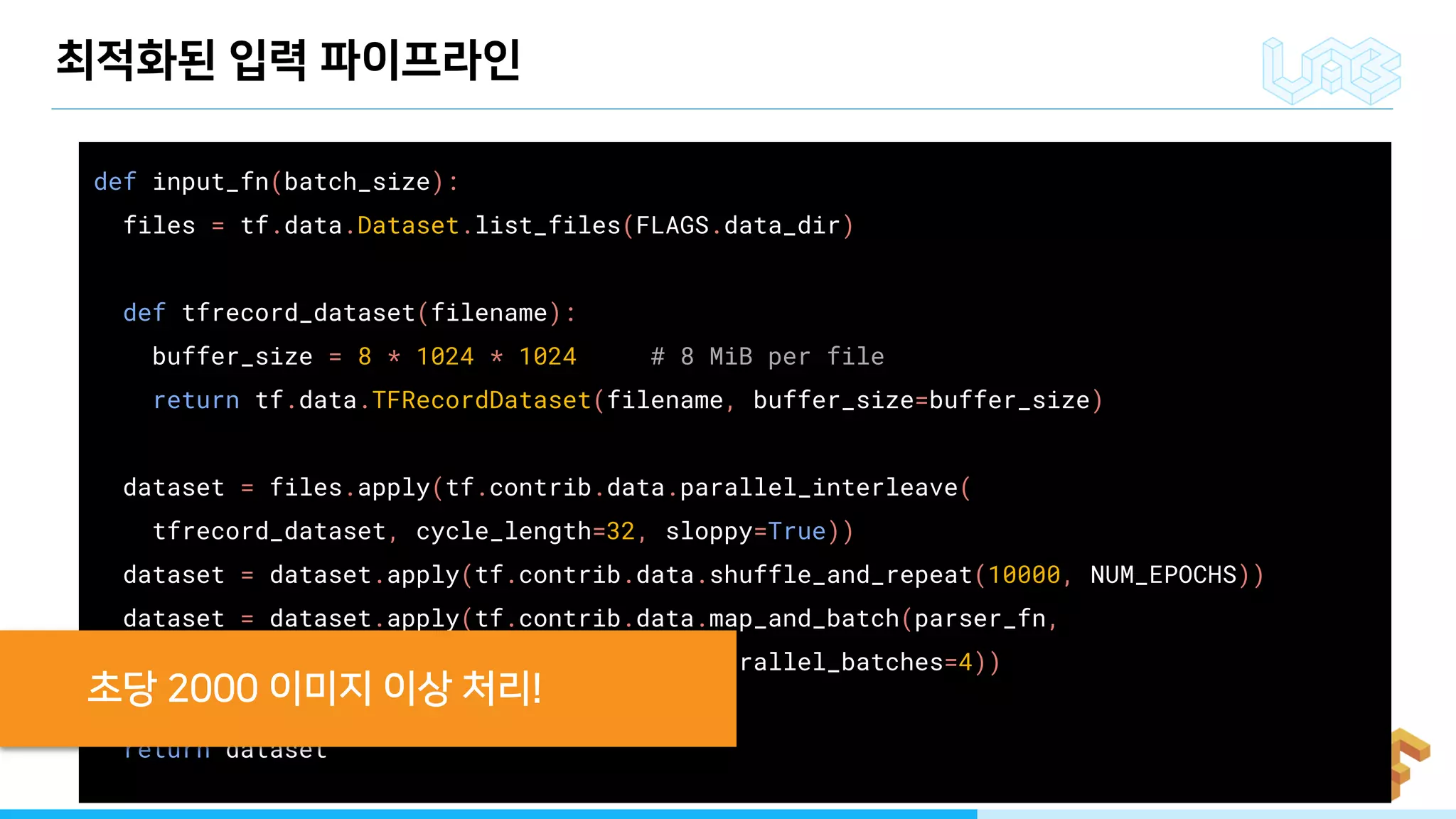

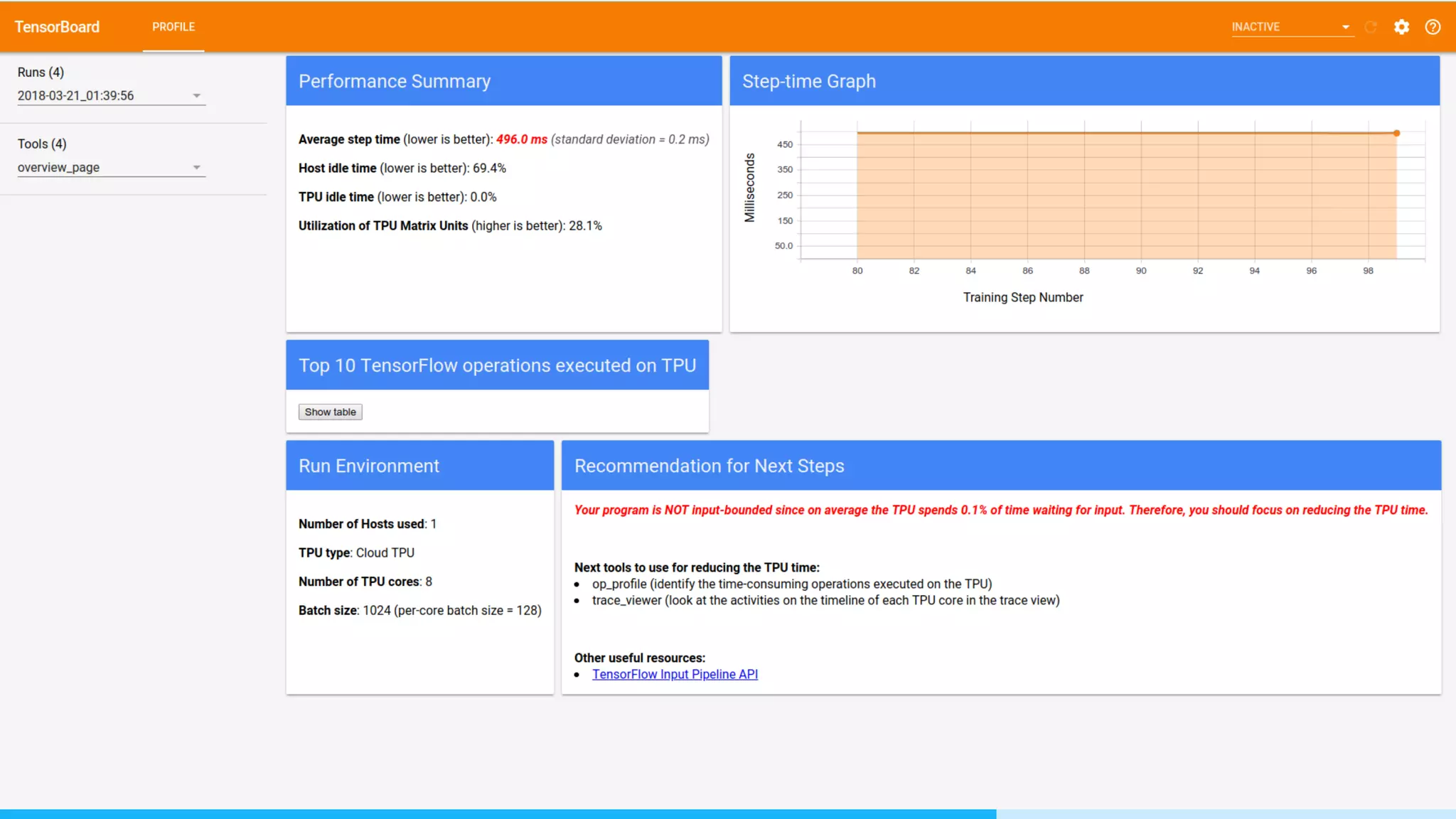

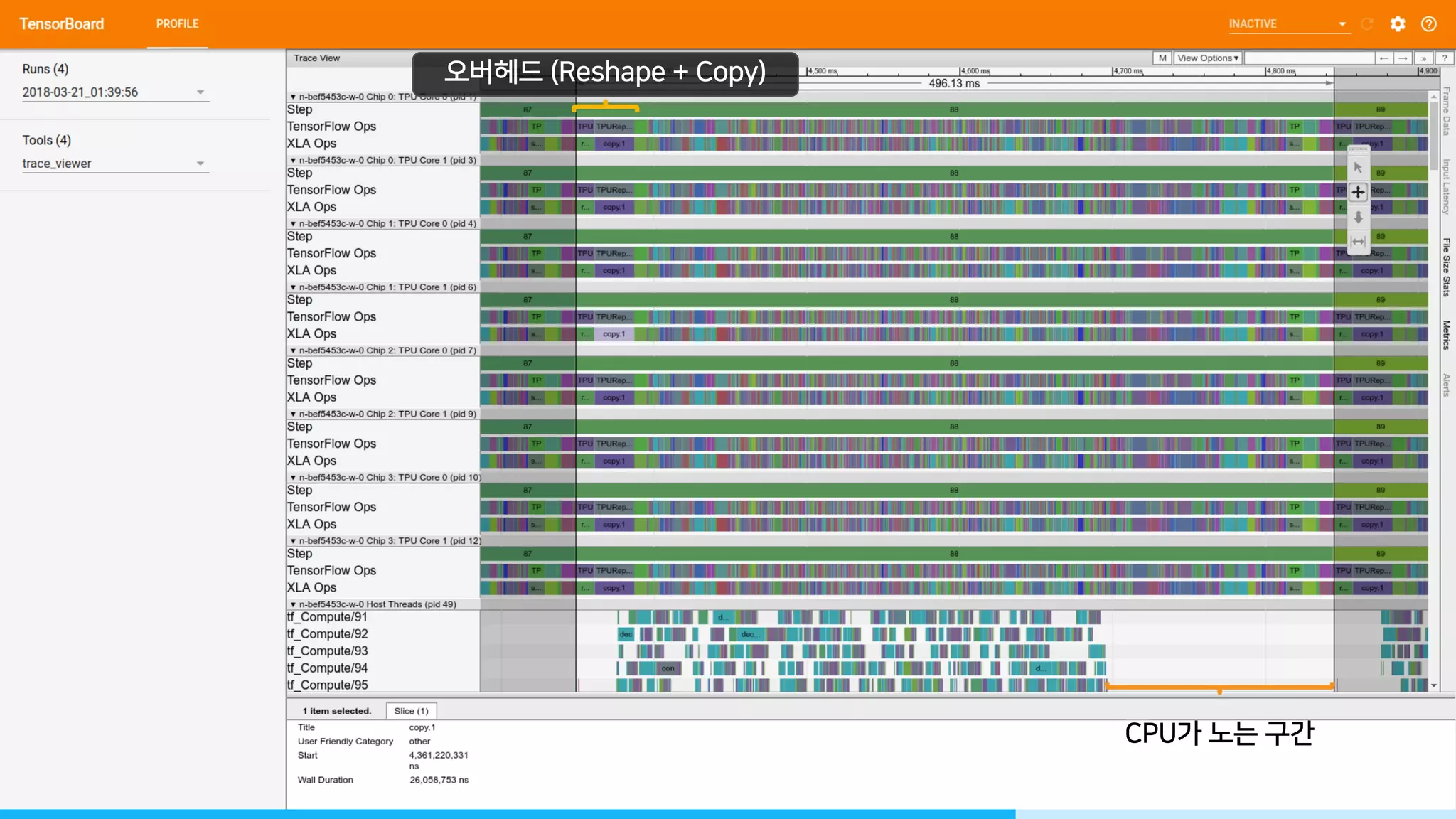

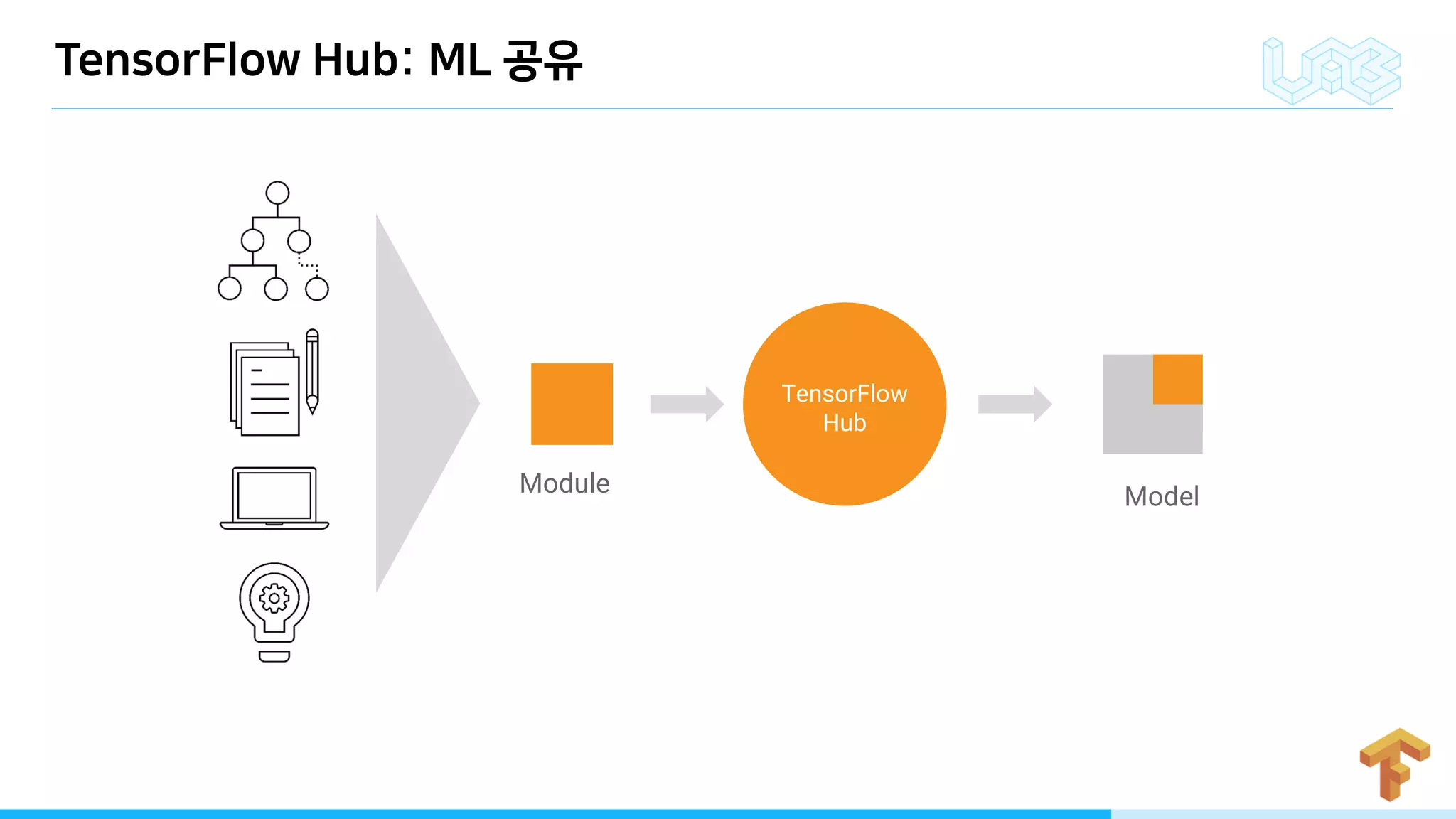

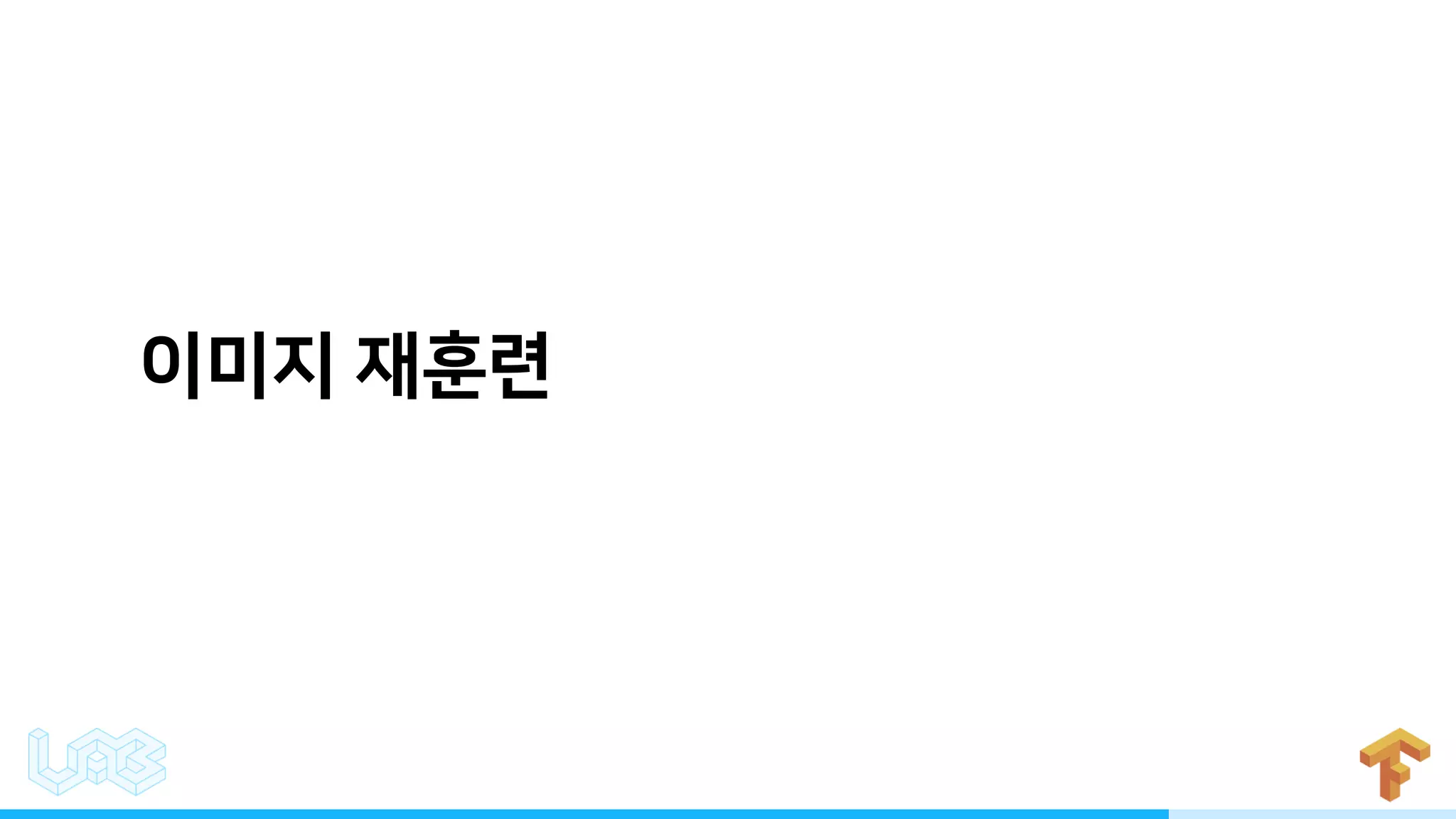

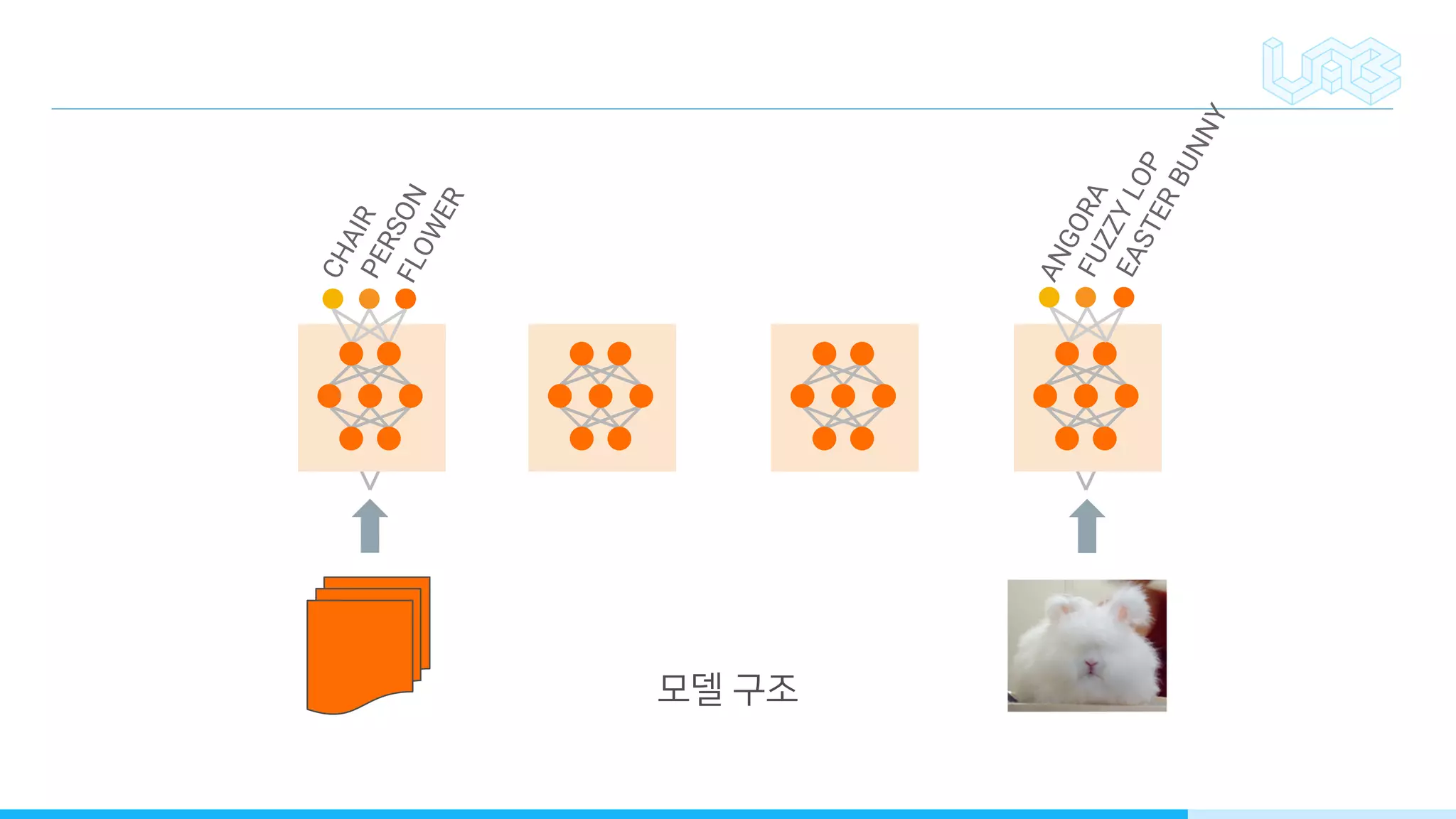

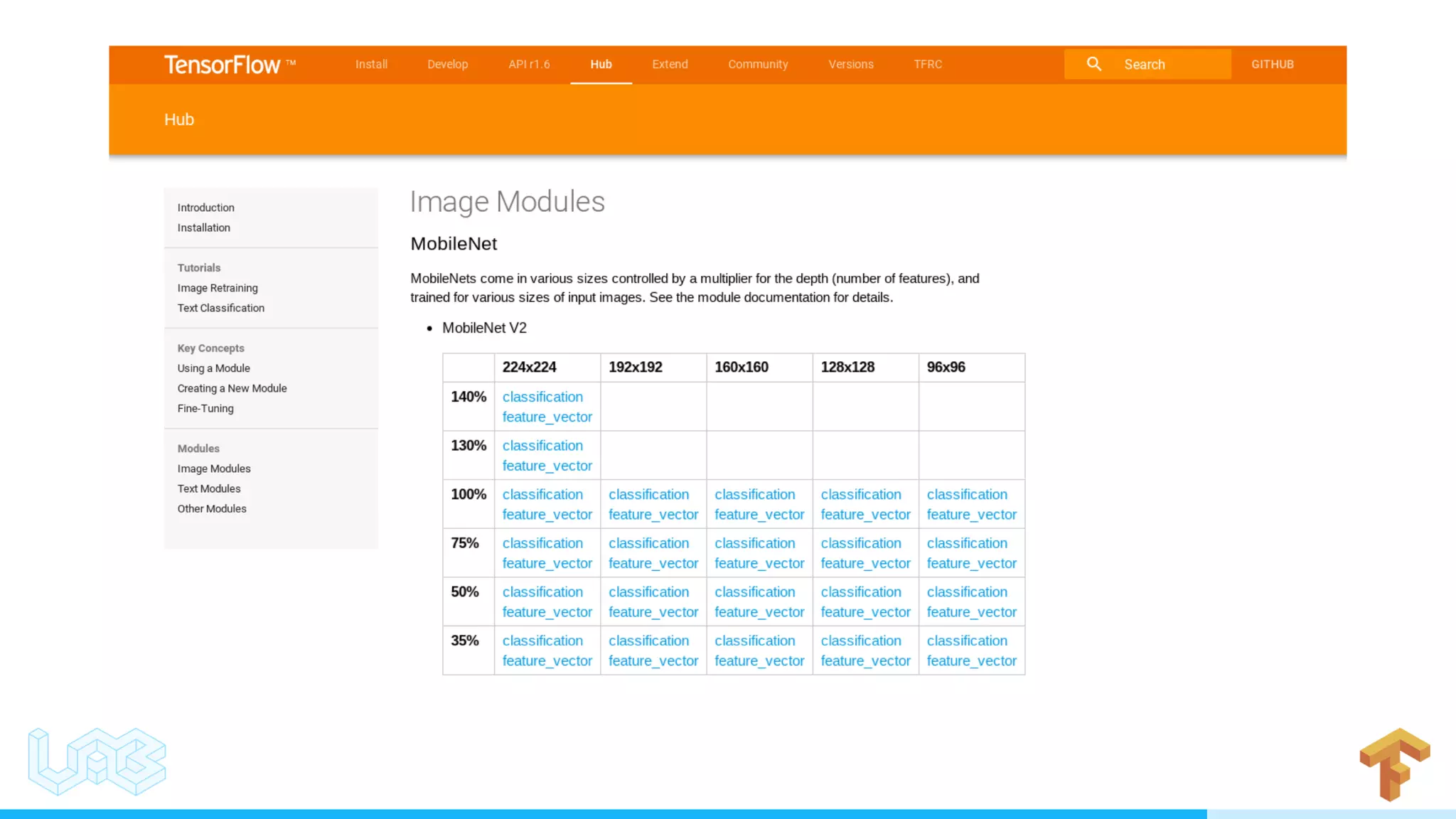

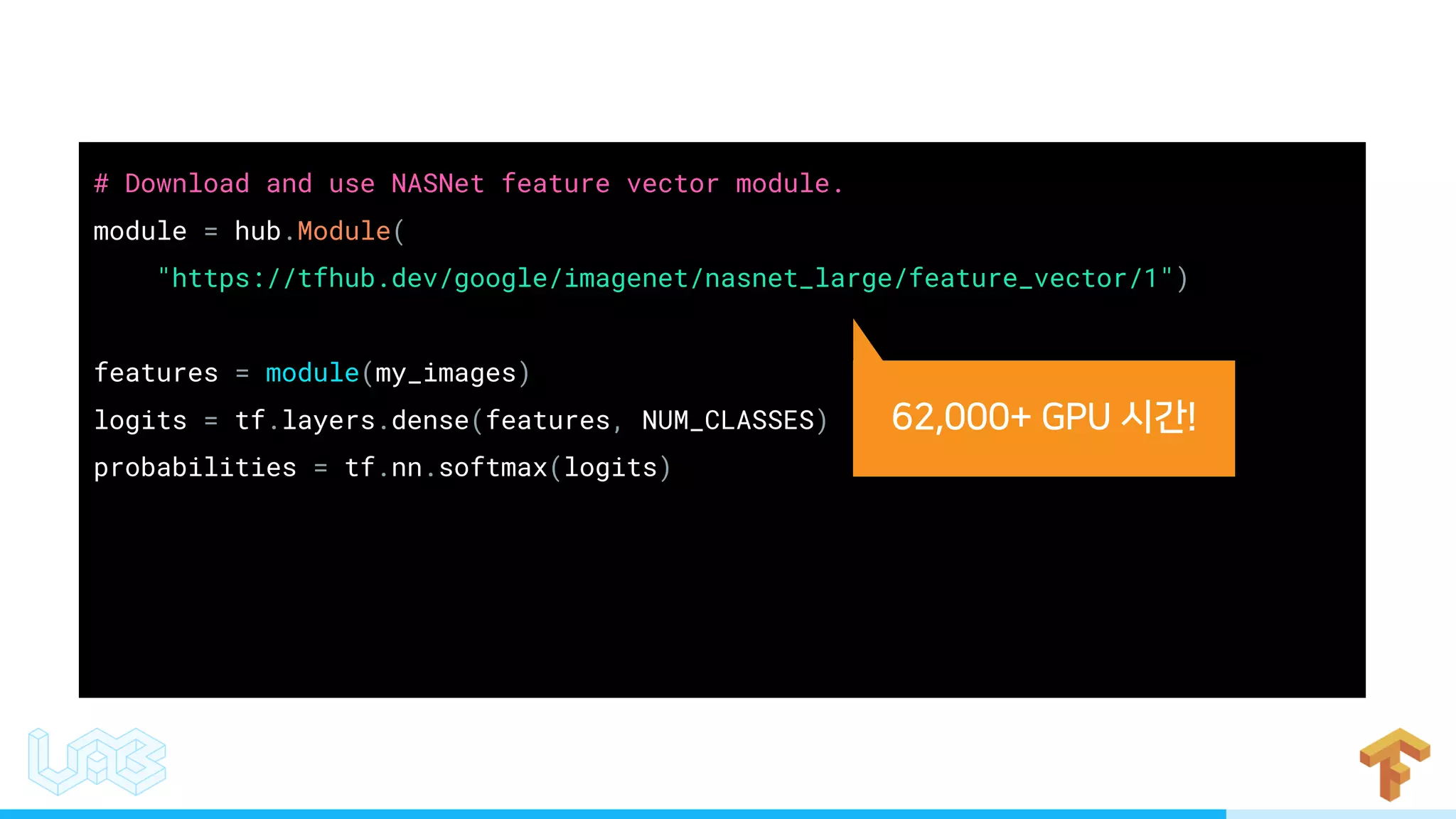

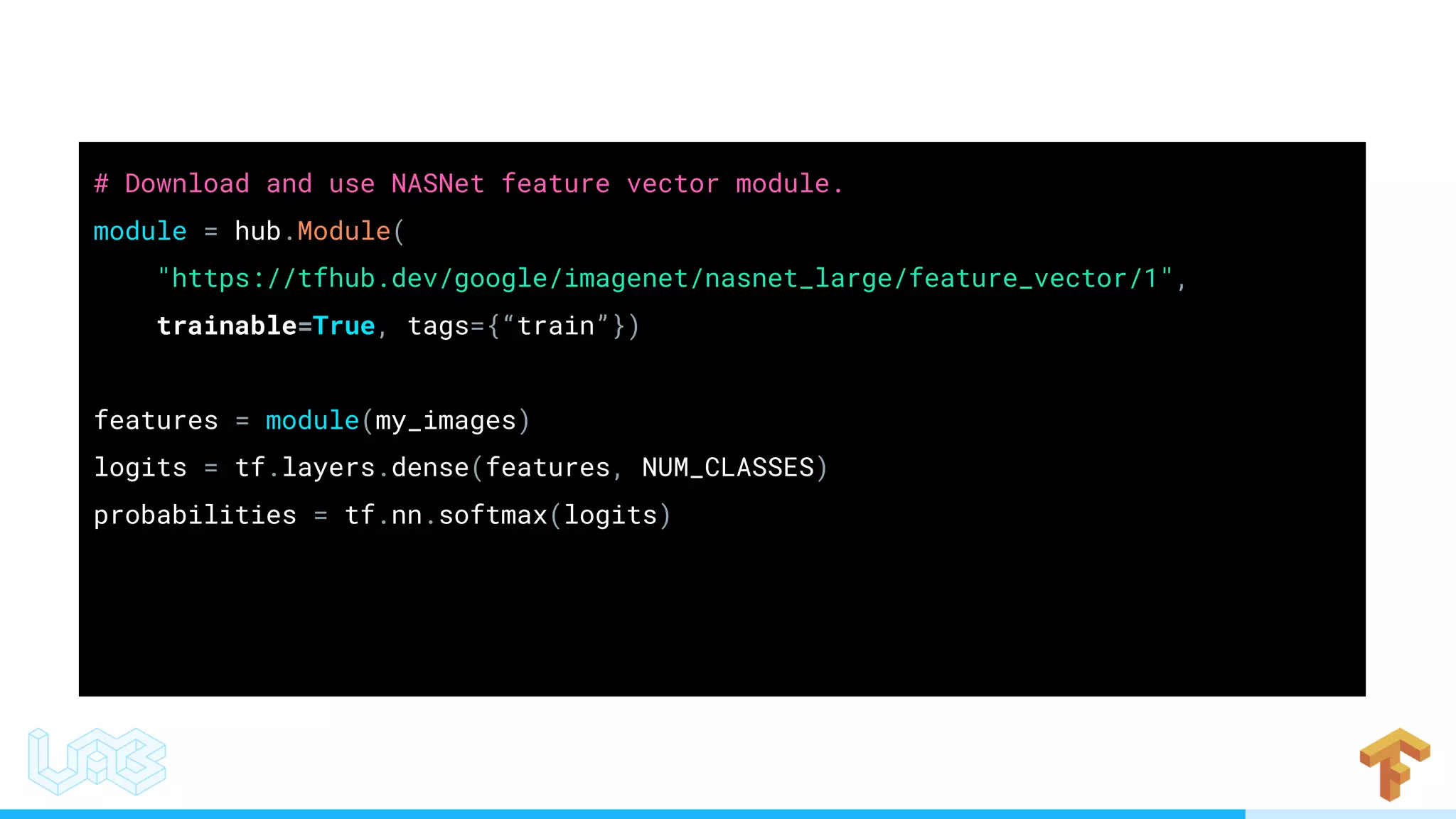

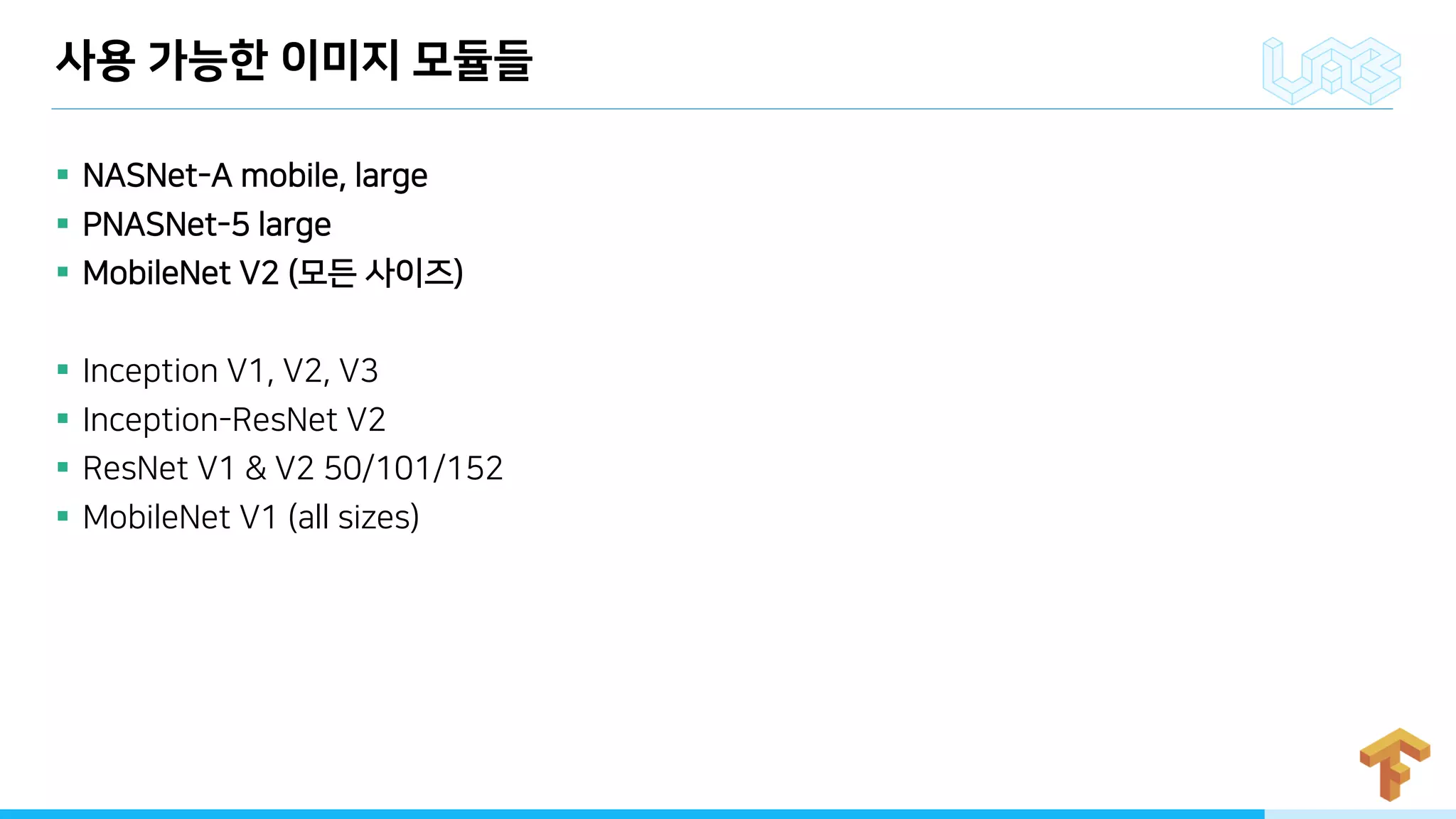

The document outlines several ways to load and preprocess datasets and to train models on them using TensorFlow's data pipeline features, particularly with TFRecord and CSV datasets. It describes how to shuffle, repeat, and batch datasets for model training, and how to reuse pre-trained models from TensorFlow Hub. It also highlights best practices for high-performance input pipelines and includes code snippets for implementing input functions and training estimators.
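To make the shuffle, repeat, and batch steps concrete, here is a minimal pure-Python sketch of what each stage does to a stream of records. It is not TensorFlow's implementation and makes no claim about its internals; the function names (`shuffled`, `repeated`, `batched`, `input_pipeline`) and the `seed` parameter are hypothetical and chosen only for illustration.

```python
import random
from typing import Callable, Iterable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def shuffled(items: Iterable[T], buffer_size: int, rng: random.Random) -> Iterator[T]:
    """Yield items in randomized order using a fixed-size buffer."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be at least 1")
    buffer: List[T] = []
    for item in items:
        if len(buffer) < buffer_size:
            buffer.append(item)  # still filling the buffer
            continue
        idx = rng.randrange(buffer_size)
        yield buffer[idx]        # emit a random buffered item
        buffer[idx] = item       # and put the new item in its slot
    rng.shuffle(buffer)          # drain whatever is left, in random order
    yield from buffer


def repeated(make_epoch: Callable[[], Iterable[T]], num_epochs: int) -> Iterator[T]:
    """Chain num_epochs passes over the data into one stream."""
    for _ in range(num_epochs):
        yield from make_epoch()


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group consecutive items into lists of batch_size (last one may be short)."""
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def input_pipeline(records: Sequence[T], batch_size: int, num_epochs: int,
                   buffer_size: int, seed: int = 0) -> Iterator[List[T]]:
    """Shuffle within each epoch, repeat for num_epochs, then batch."""
    rng = random.Random(seed)
    stream = repeated(lambda: shuffled(records, buffer_size, rng), num_epochs)
    return batched(stream, batch_size)


if __name__ == "__main__":
    for batch in input_pipeline(list(range(10)), batch_size=4,
                                num_epochs=2, buffer_size=5):
        print(batch)
```

Because batching happens after repeating in this sketch, a batch can contain records from two consecutive epochs. Shuffling before repeating means each epoch is reshuffled independently, and a larger `buffer_size` gives a more thorough shuffle at the cost of memory.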

![tf.enable_eager_execution() # In a terminal, run the following commands, e.g.: # $ pip install kaggle # $ kaggle datasets download -d therohk/million-headlines -p . dataset = tf.contrib.data.make_csv_dataset( "*.csv", BATCH_SIZE, num_epochs=NUM_EPOCHS) for batch in dataset: train_model(batch["publish_date"], batch["headline_text"])](https://image.slidesharecdn.com/20180414-180419053531/75/TensorFlow-Data-TensorFlow-Hub-31-2048.jpg)
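The slide above iterates over batches that are indexed by column name (`batch["publish_date"]`, `batch["headline_text"]`). As a rough illustration of that batch shape only, the following standard-library sketch reads CSV files matching a pattern and yields dictionaries that map each column name to a list of values. It is an assumption-laden stand-in, not how `make_csv_dataset` is implemented: the real function returns tensors and handles type inference, shuffling, and parallel reads, none of which is modeled here. The function name `csv_batches` is hypothetical.

```python
import csv
import glob
from typing import Dict, Iterator, List


def csv_batches(file_pattern: str, batch_size: int,
                num_epochs: int = 1) -> Iterator[Dict[str, List[str]]]:
    """Yield column-oriented batches from all CSV files matching file_pattern."""
    paths = sorted(glob.glob(file_pattern))
    batch: Dict[str, List[str]] = {}
    rows_in_batch = 0
    for _ in range(num_epochs):
        for path in paths:
            with open(path, newline="", encoding="utf-8") as handle:
                # DictReader uses the header row as the column names.
                for row in csv.DictReader(handle):
                    for column, value in row.items():
                        batch.setdefault(column, []).append(value)
                    rows_in_batch += 1
                    if rows_in_batch == batch_size:
                        yield batch
                        batch, rows_in_batch = {}, 0
    if rows_in_batch:
        yield batch  # final partial batch


if __name__ == "__main__":
    # Assumes CSV files with a header row exist in the working directory.
    for batch in csv_batches("*.csv", batch_size=32, num_epochs=1):
        print({column: len(values) for column, values in batch.items()})
```

All values stay as strings in this sketch; converting columns such as `publish_date` to numeric or date types would be a separate step.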

![saeta@saeta:~$ capture_tpu_profile --tpu_name=saeta --logdir=myprofile/ --duration_ms=10000 Welcome to the Cloud TPU Profiler v1.5.1 Starting to profile TPU traces for 10000 ms. Remaining attempt(s): 3 Limiting the number of trace events to 1000000 2018-03-21 01:13:12.350004: I tensorflow/contrib/tpu/profiler/dump_tpu_profile.cc:155\] Converting trace events to TraceViewer JSON. 2018-03-21 01:13:12.392162: I tensorflow/contrib/tpu/profiler/dump_tpu_profile.cc:69\] Dumped raw-proto trace data to profiles/5/plugins/profile/2018-03-21_01:13:12/trace Trace contains 998114 events. Dumped JSON trace data to myprofile/plugins/profile/2018-03-21_01:13:12/trace.json.gz Dumped json op profile data to myprofile/plugins/profile/2018-03-21_01:13:12/op_profile.json Dumped tool data for input_pipeline.json to myprofile/plugins/profile/2018-03-21_01:13:12/input_pipeline.json Dumped tool data for overview_page.json to myprofile/plugins/profile/2018-03-21_01:13:12/overview_page.json NOTE: using the trace duration 10000ms. Set an appropriate duration (with --duration_ms) if you don't see a full step in your trace or the captured trace is too large. saeta@saeta:~$ tensorboard --logdir=myprofile/ TensorBoard 1.6.0 at <redacted> (Press CTRL+C to quit)](https://image.slidesharecdn.com/20180414-180419053531/75/TensorFlow-Data-TensorFlow-Hub-42-2048.jpg)

![# Use pre-trained universal sentence encoder to build text vector column. review = hub.text_embedding_column( "review", "https://tfhub.dev/google/universal-sentence-encoder/1") features = { "review": np.array(["an arugula masterpiece", "inedible shoe leather", ...]) } labels = np.array([[1], [0], ...]) input_fn = tf.estimator.input.numpy_input_fn(features, labels, shuffle=True) estimator = tf.estimator.DNNClassifier(hidden_units, [review]) estimator.train(input_fn, max_steps=100)](https://image.slidesharecdn.com/20180414-180419053531/75/TensorFlow-Data-TensorFlow-Hub-78-2048.jpg)

![# Use pre-trained universal sentence encoder to build text vector column. review = hub.text_embedding_column( "review", "https://tfhub.dev/google/universal-sentence-encoder/1", trainable=True) features = { "review": np.array(["an arugula masterpiece", "inedible shoe leather", ...]) } labels = np.array([[1], [0], ...]) input_fn = tf.estimator.input.numpy_input_fn(features, labels, shuffle=True) estimator = tf.estimator.DNNClassifier(hidden_units, [review]) estimator.train(input_fn, max_steps=100)](https://image.slidesharecdn.com/20180414-180419053531/75/TensorFlow-Data-TensorFlow-Hub-79-2048.jpg)

![# Use pre-trained universal sentence encoder to build text vector column. review = hub.text_embedding_column( "review", "https://tfhub.dev/google/universal-sentence-encoder/1") features = { "review": np.array(["an arugula masterpiece", "inedible shoe leather", ...]) } labels = np.array([[1], [0], ...]) input_fn = tf.estimator.input.numpy_input_fn(features, labels, shuffle=True) estimator = tf.estimator.DNNClassifier(hidden_units, [review]) estimator.train(input_fn, max_steps=100)](https://image.slidesharecdn.com/20180414-180419053531/75/TensorFlow-Data-TensorFlow-Hub-80-2048.jpg)