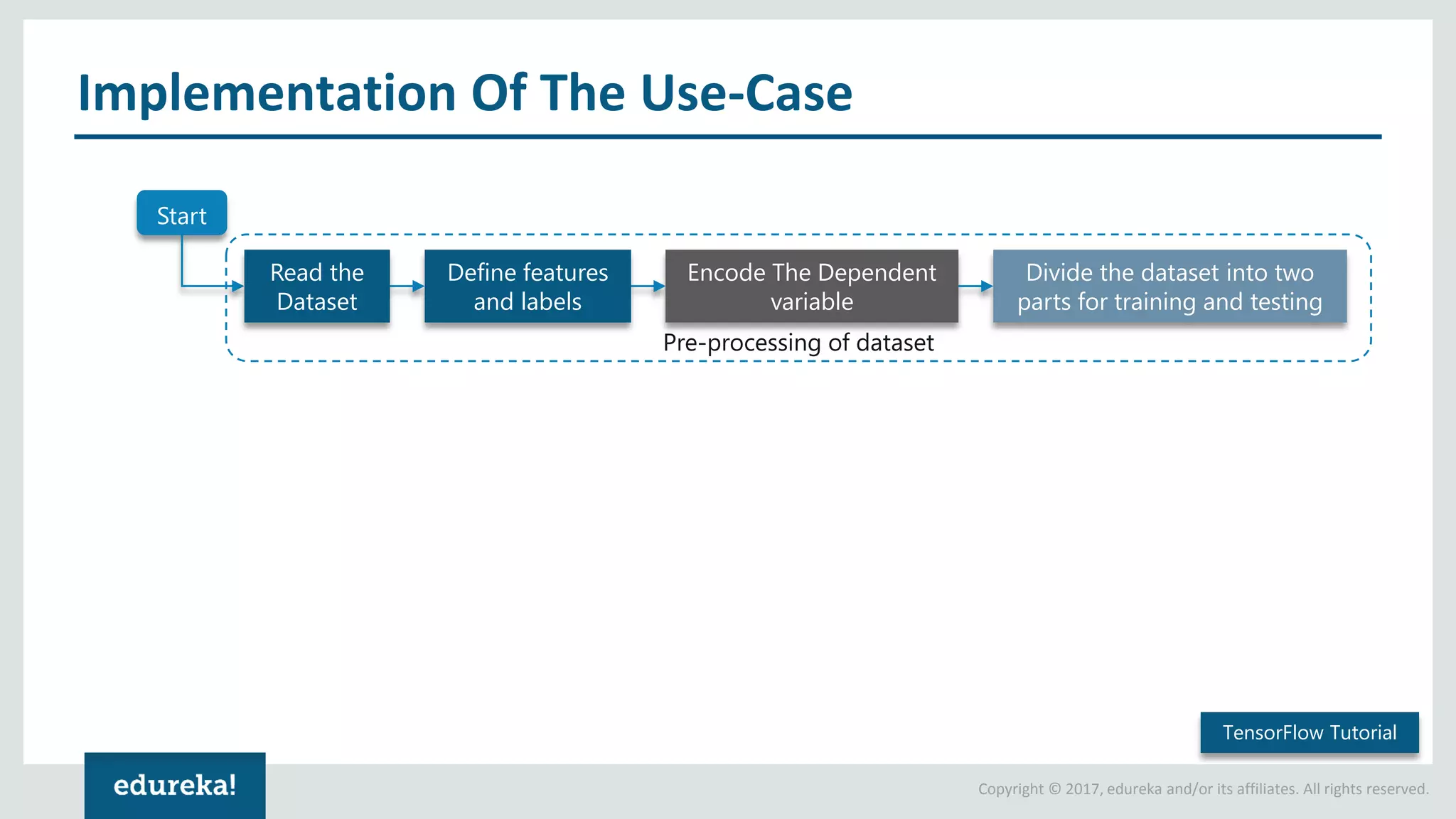

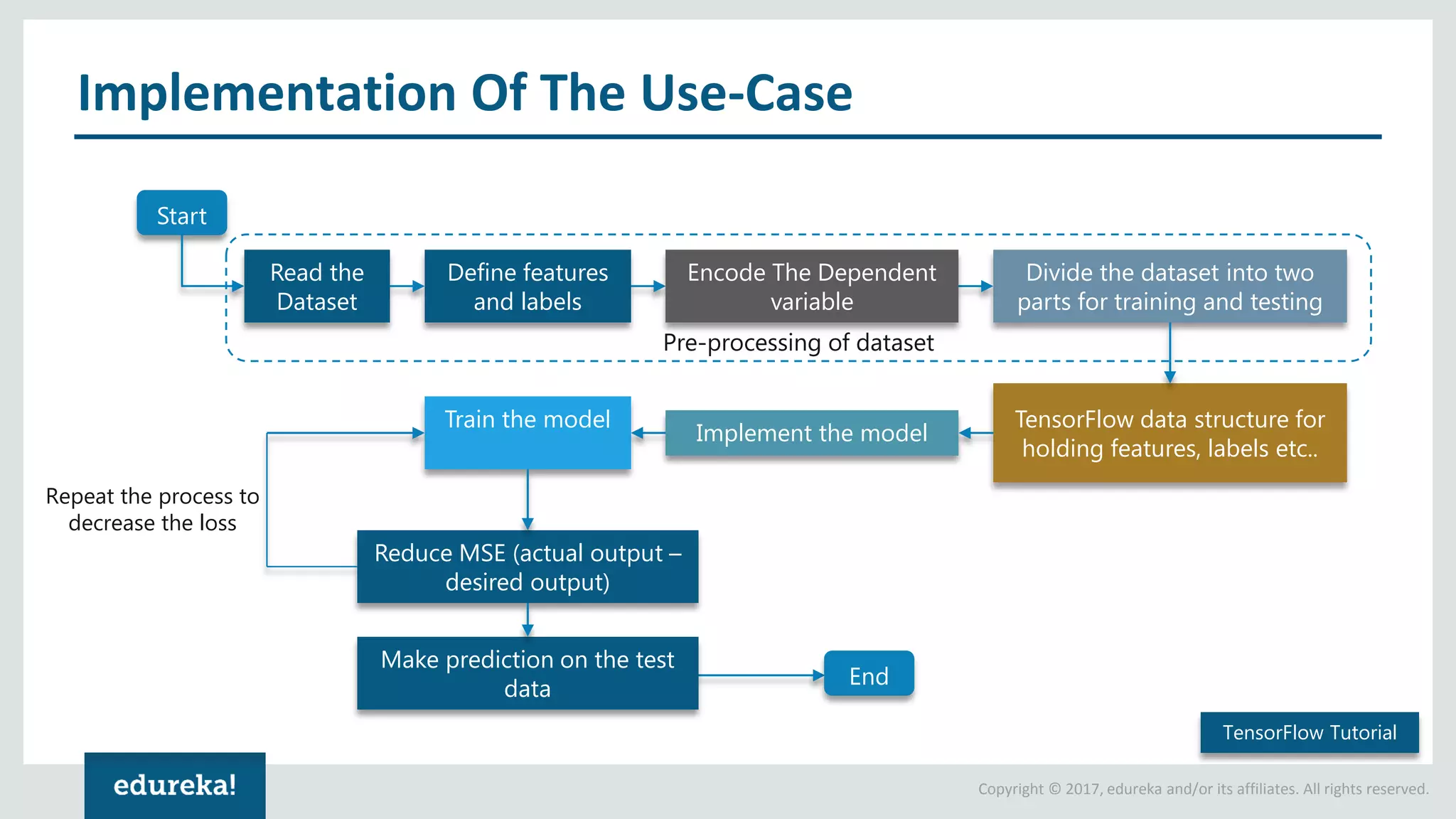

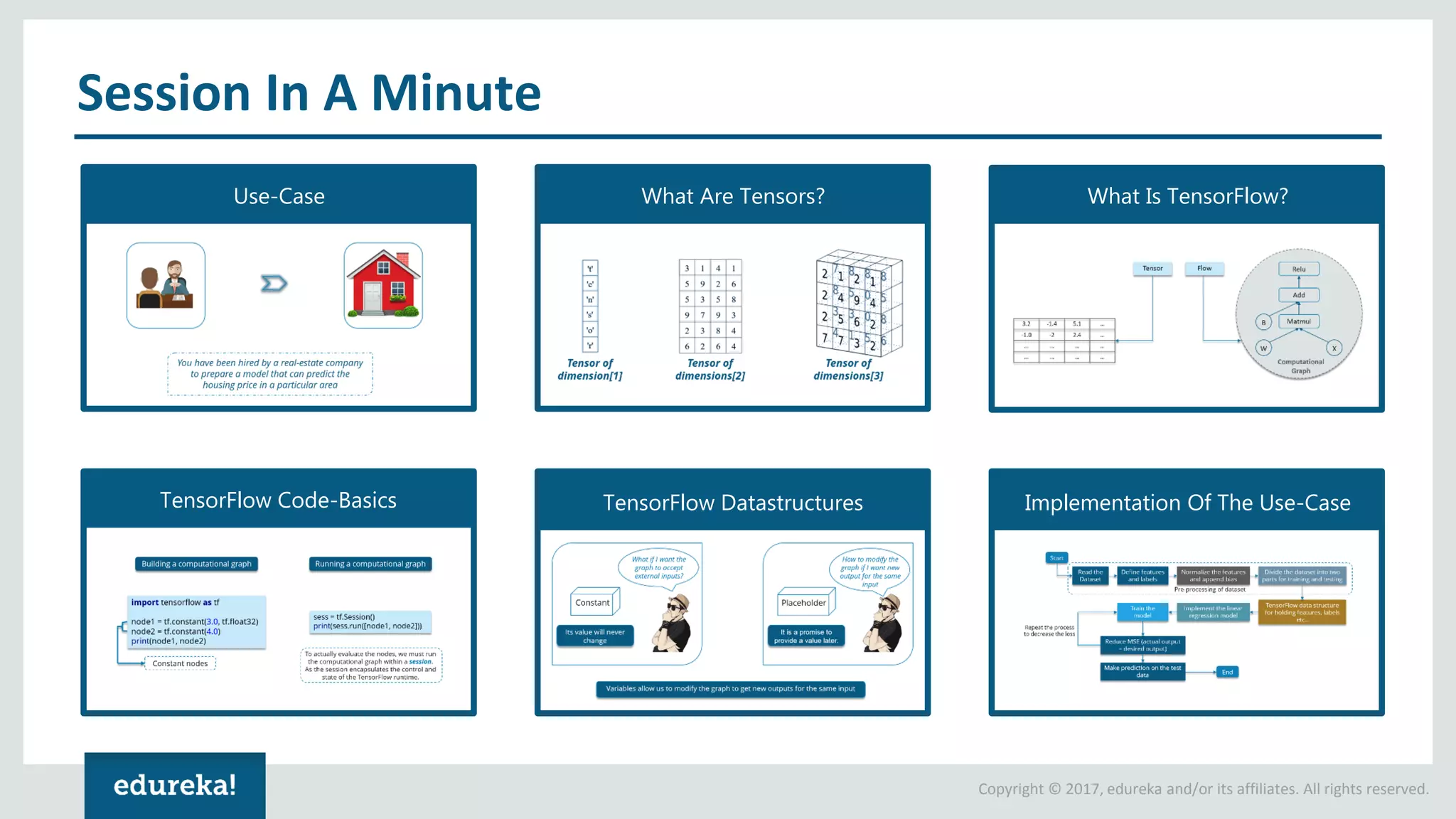

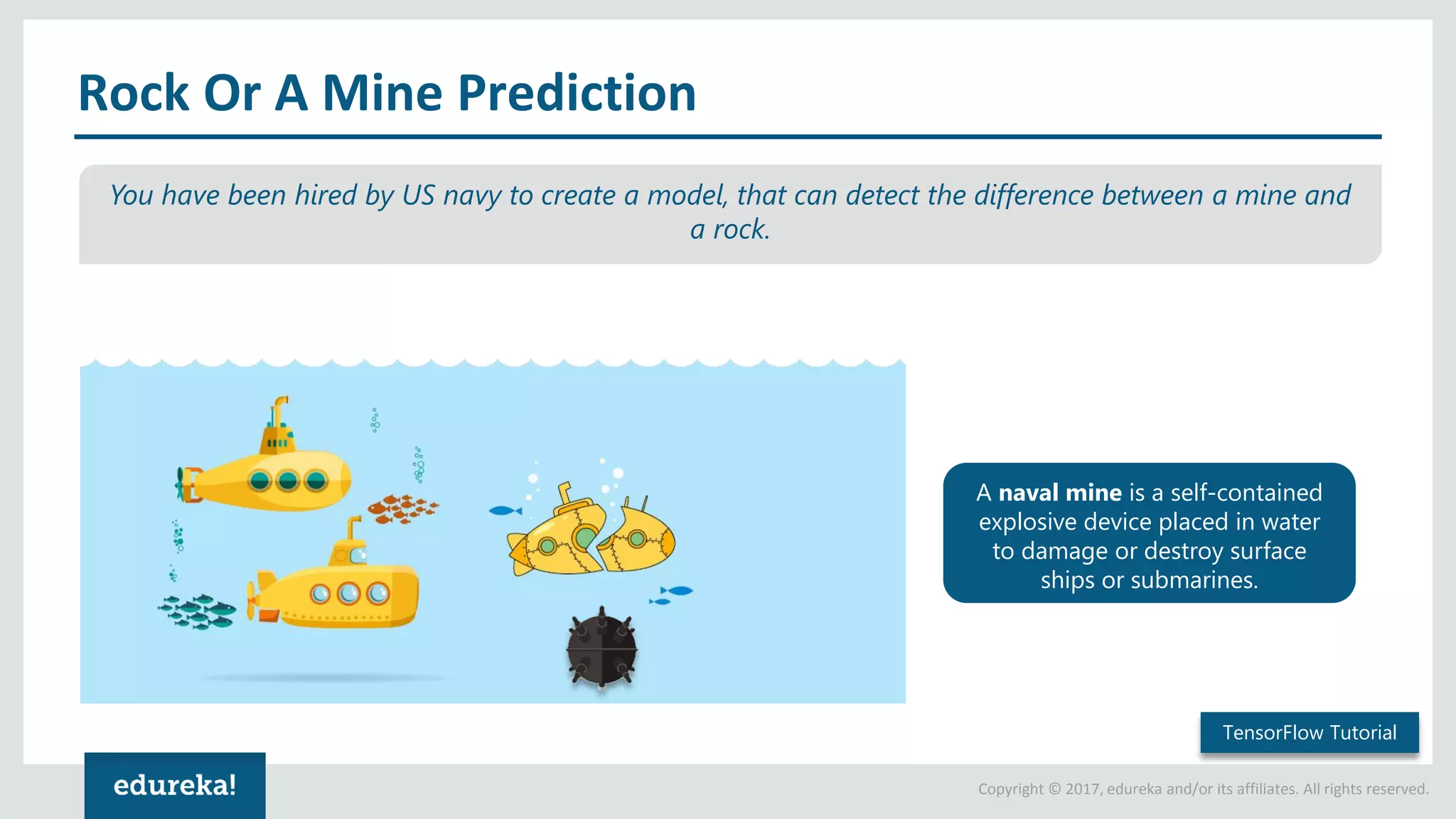

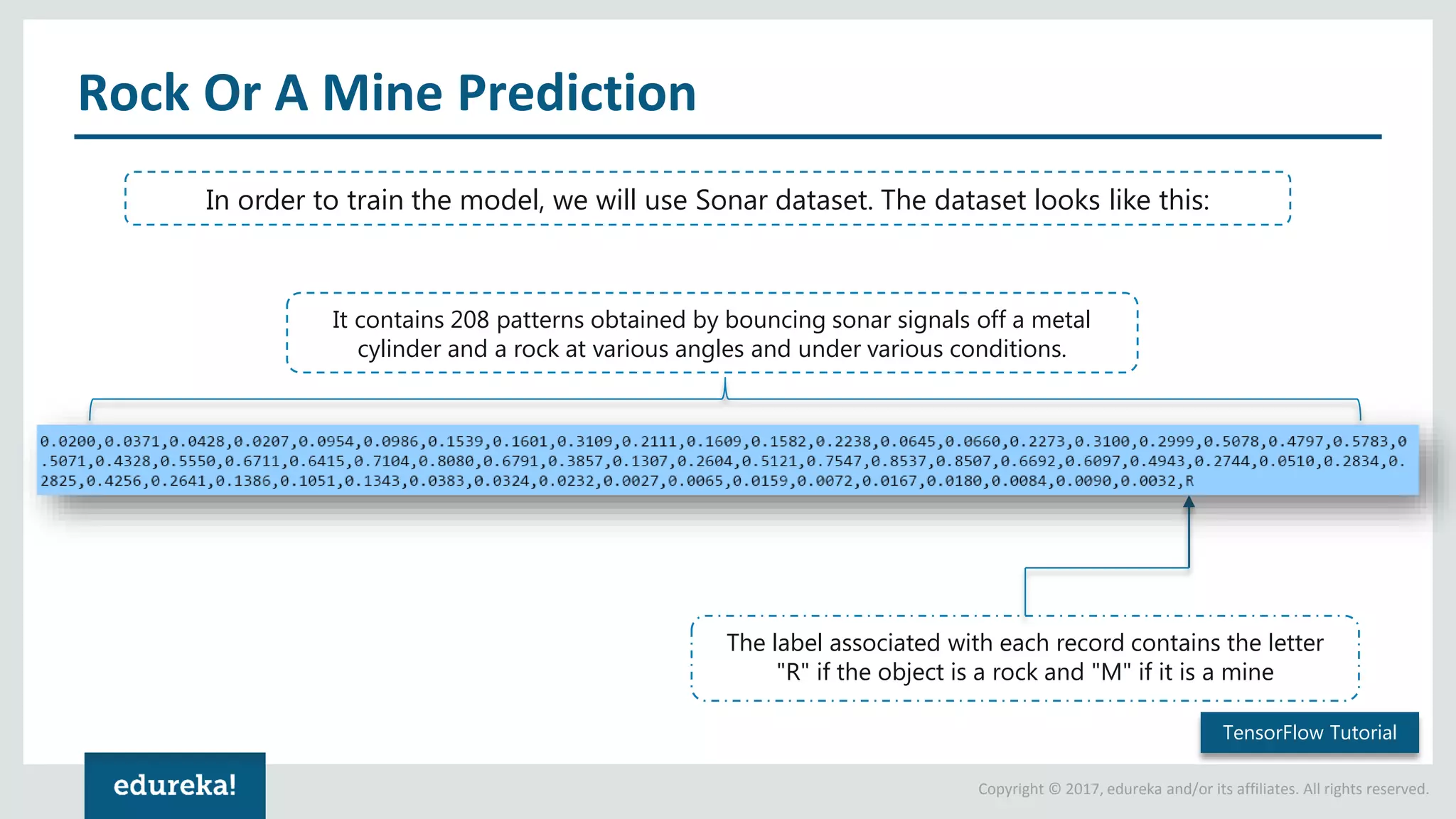

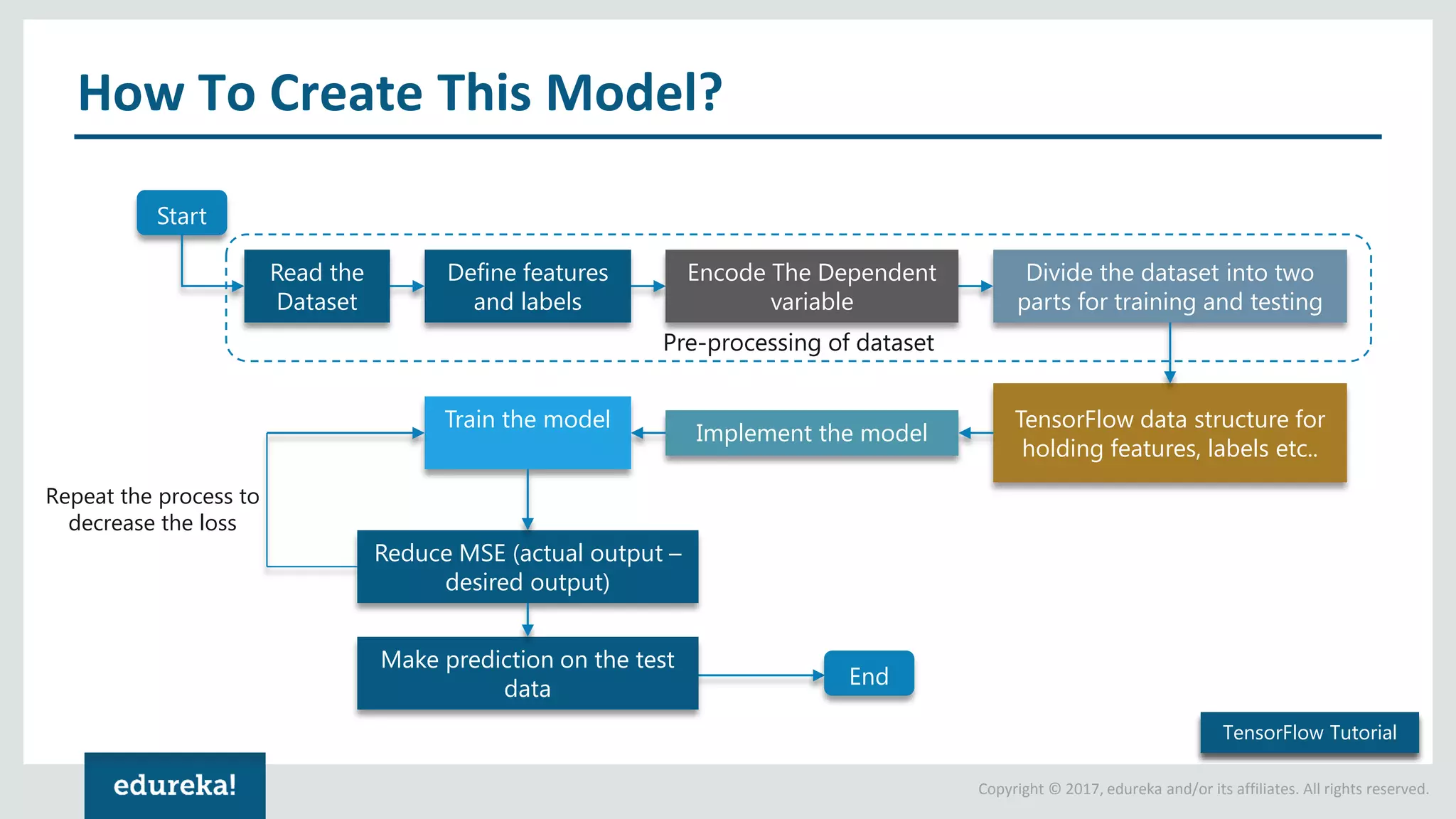

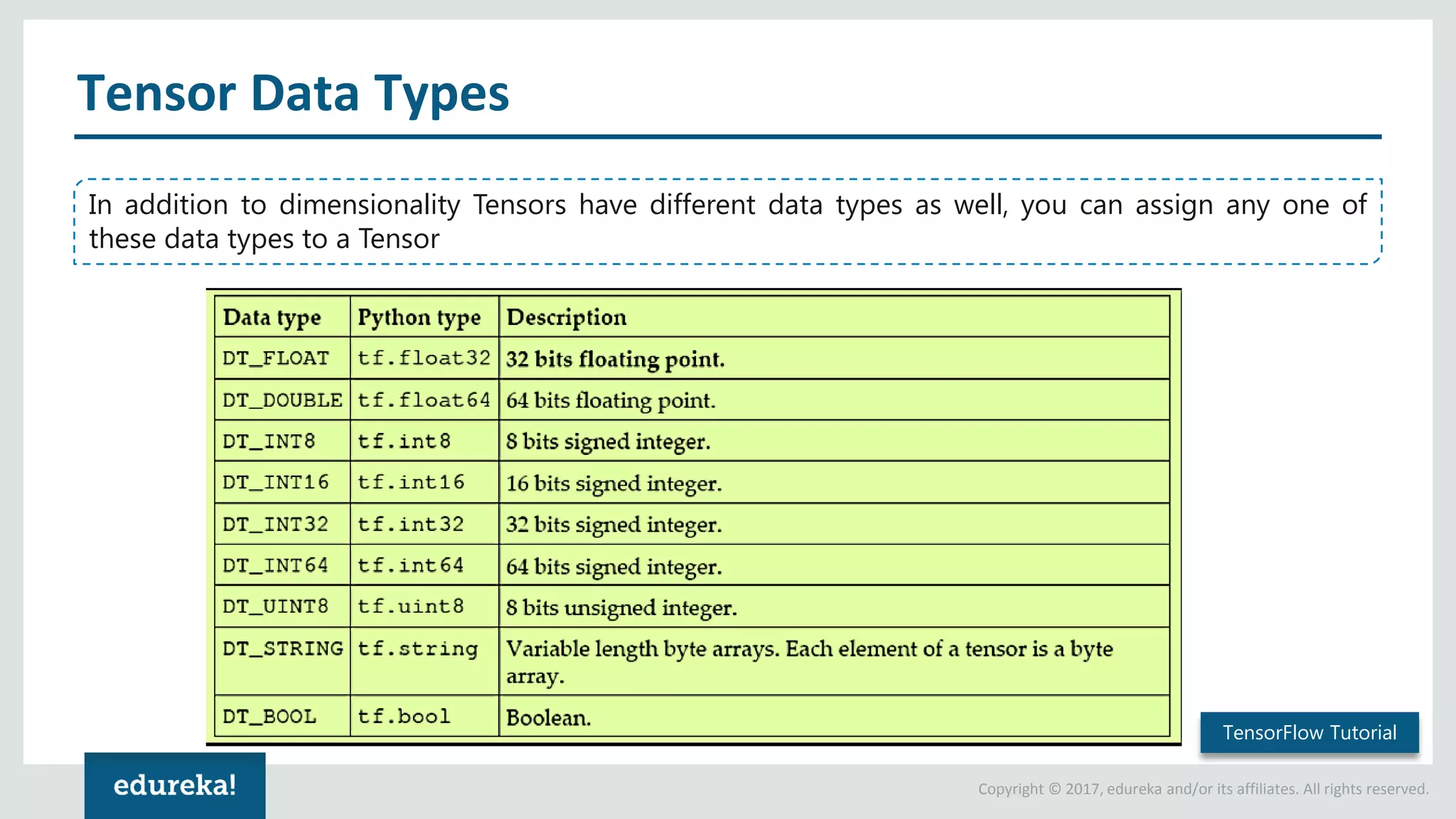

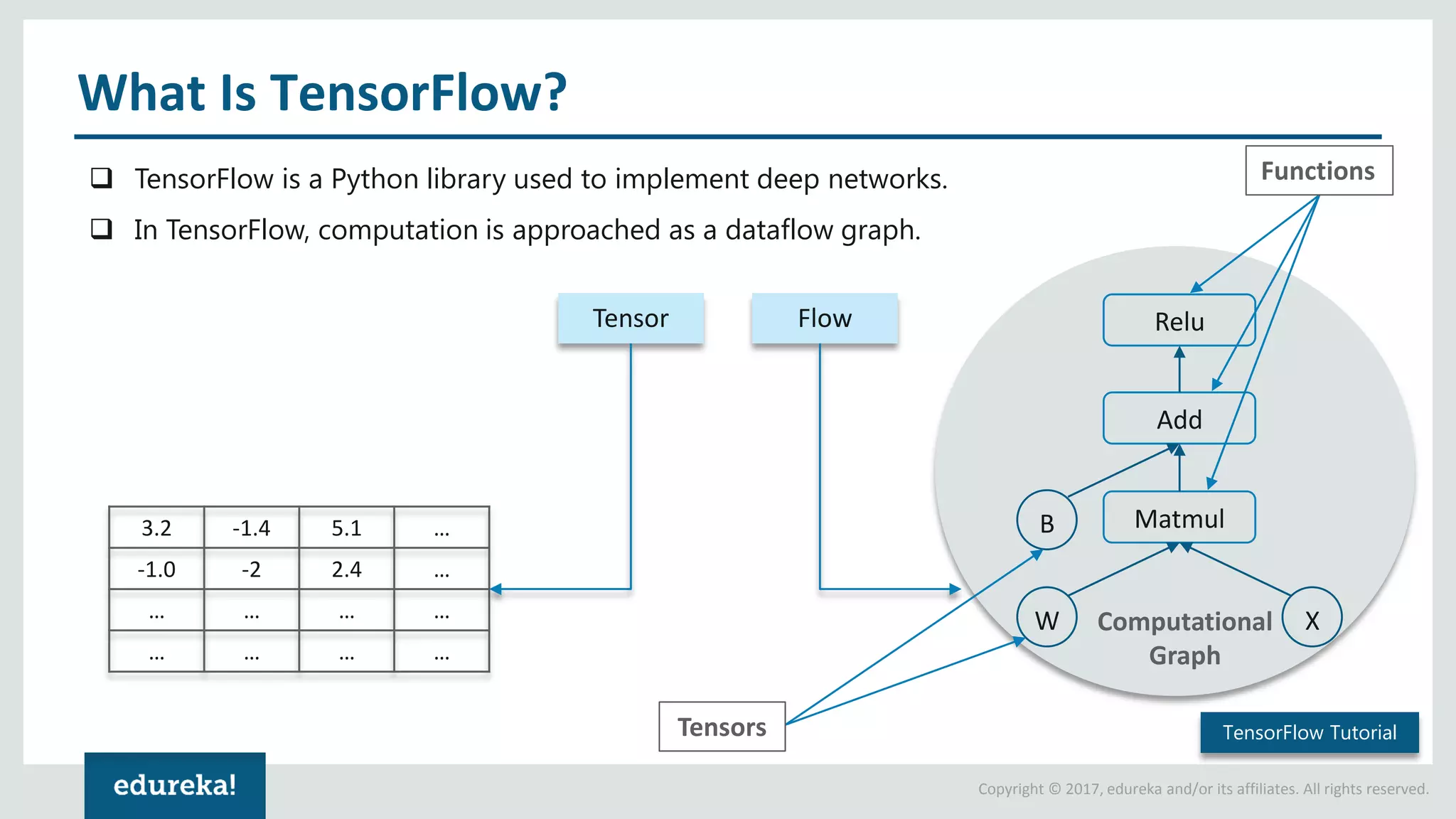

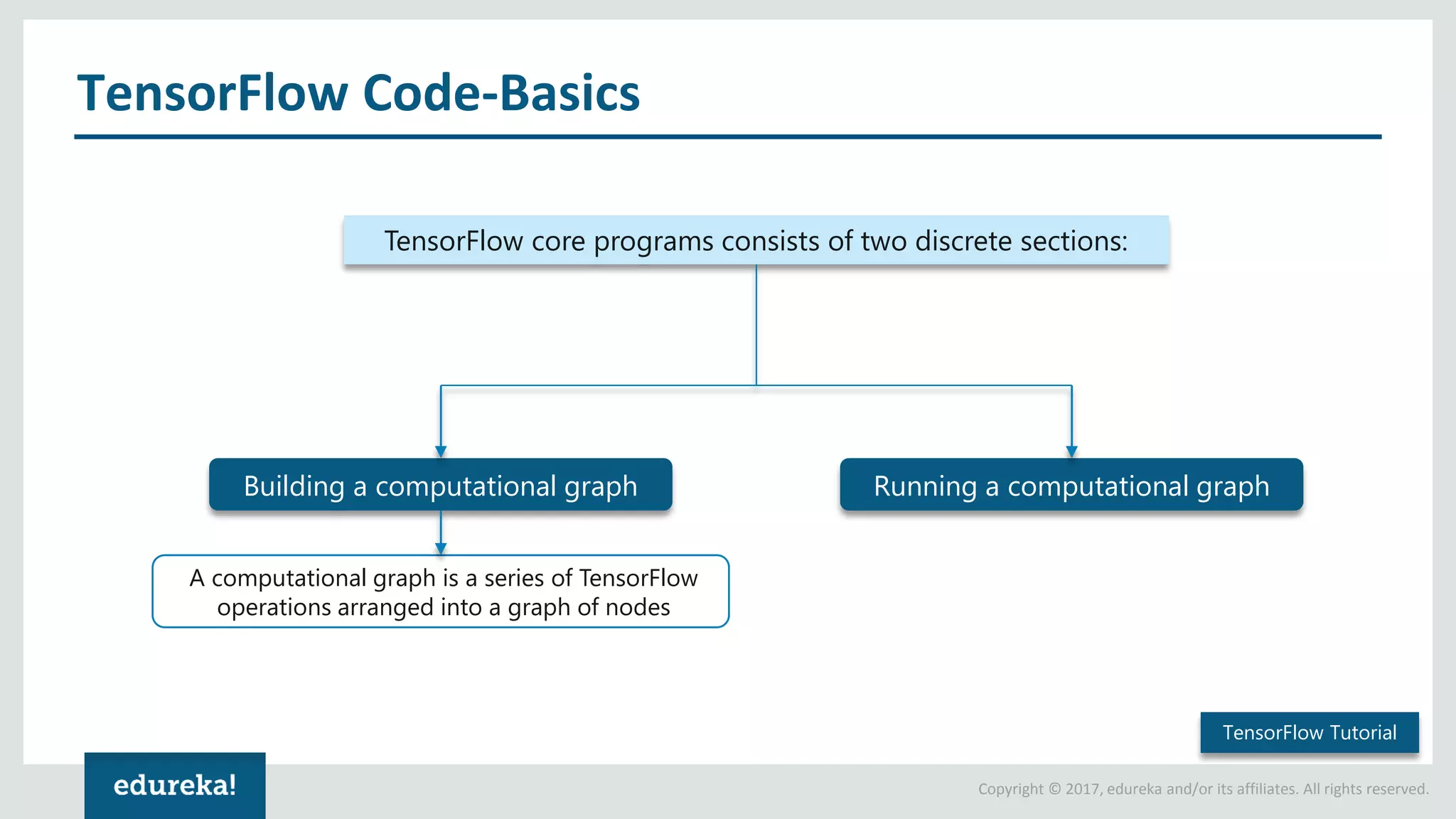

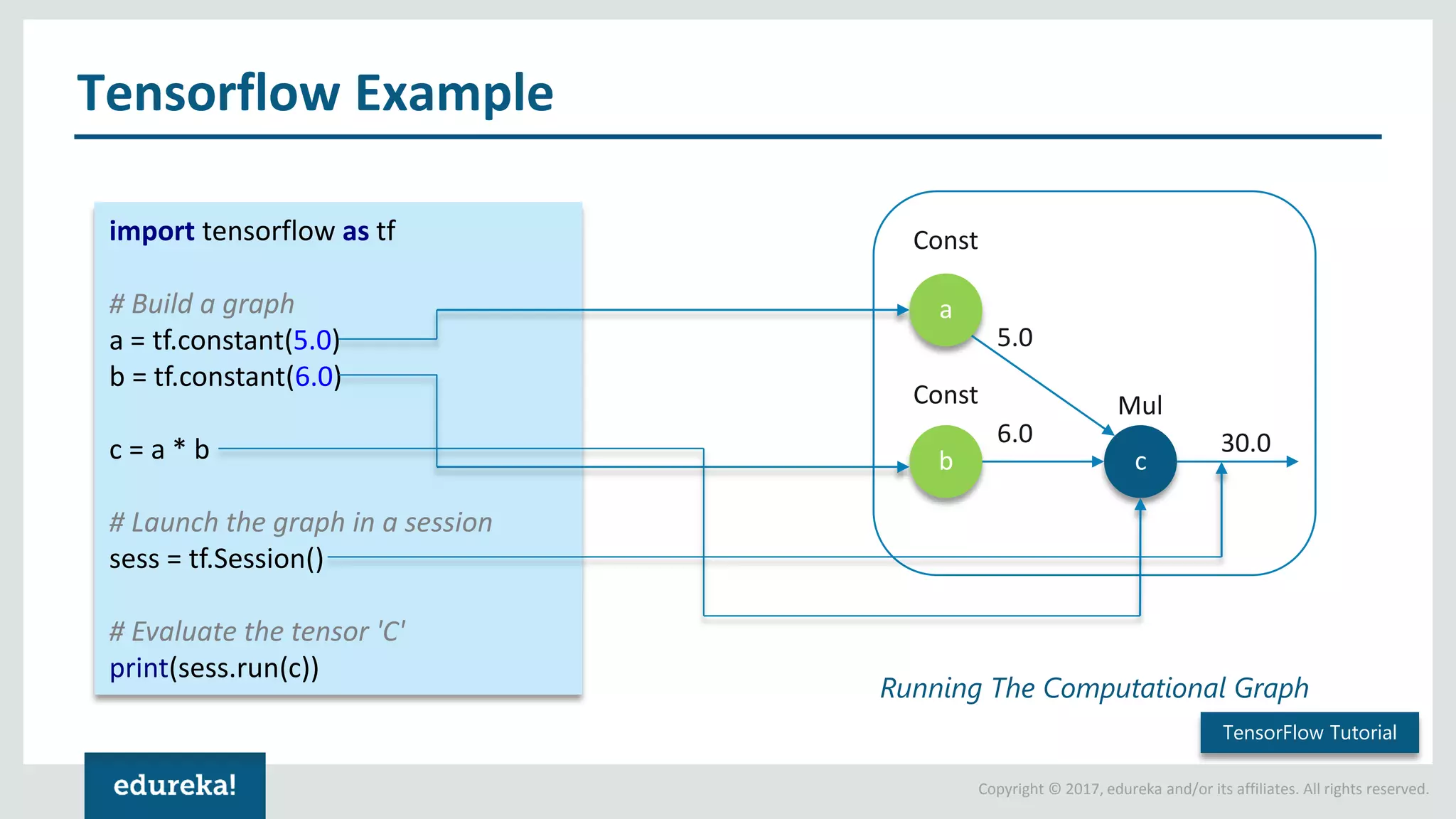

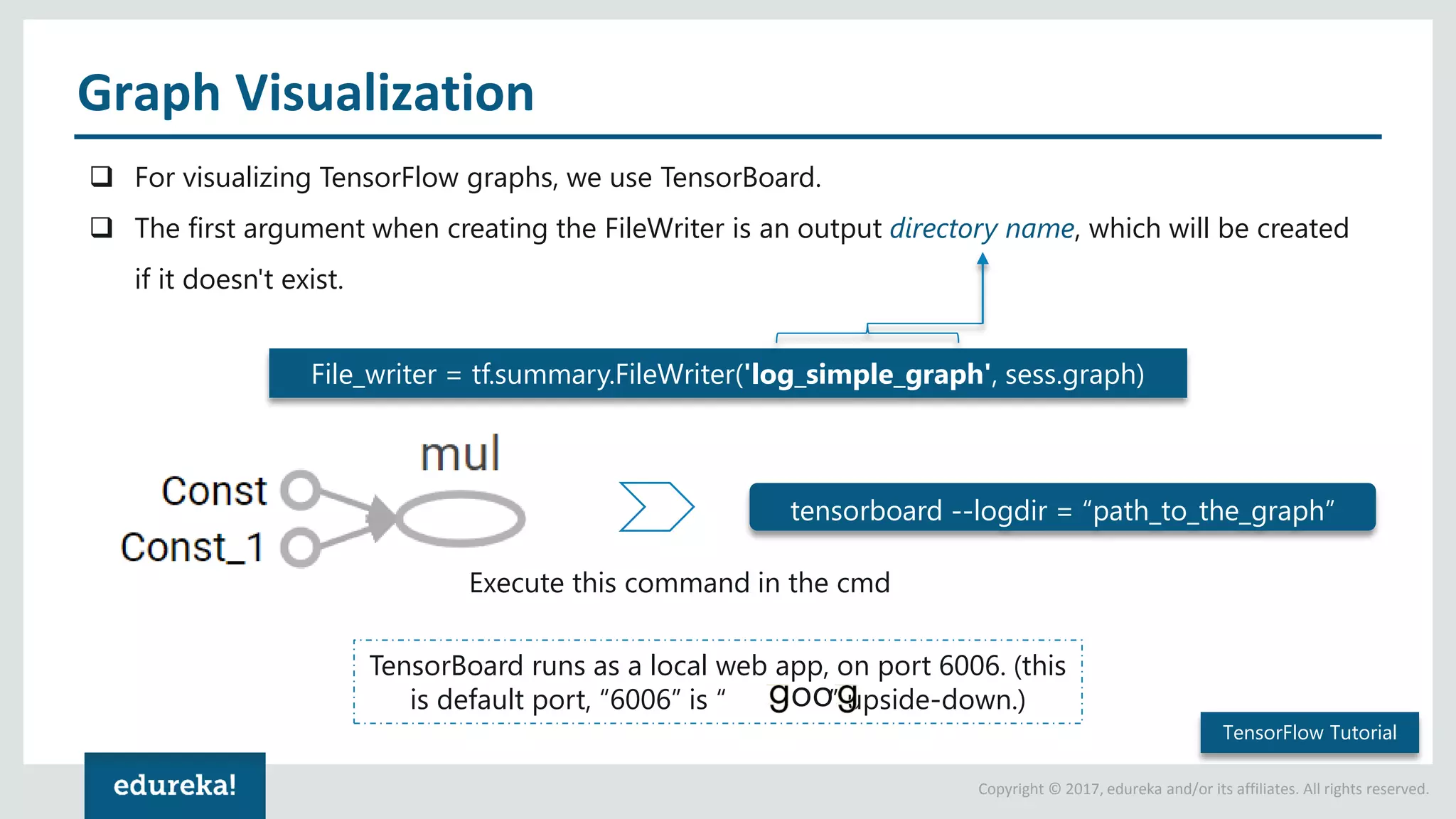

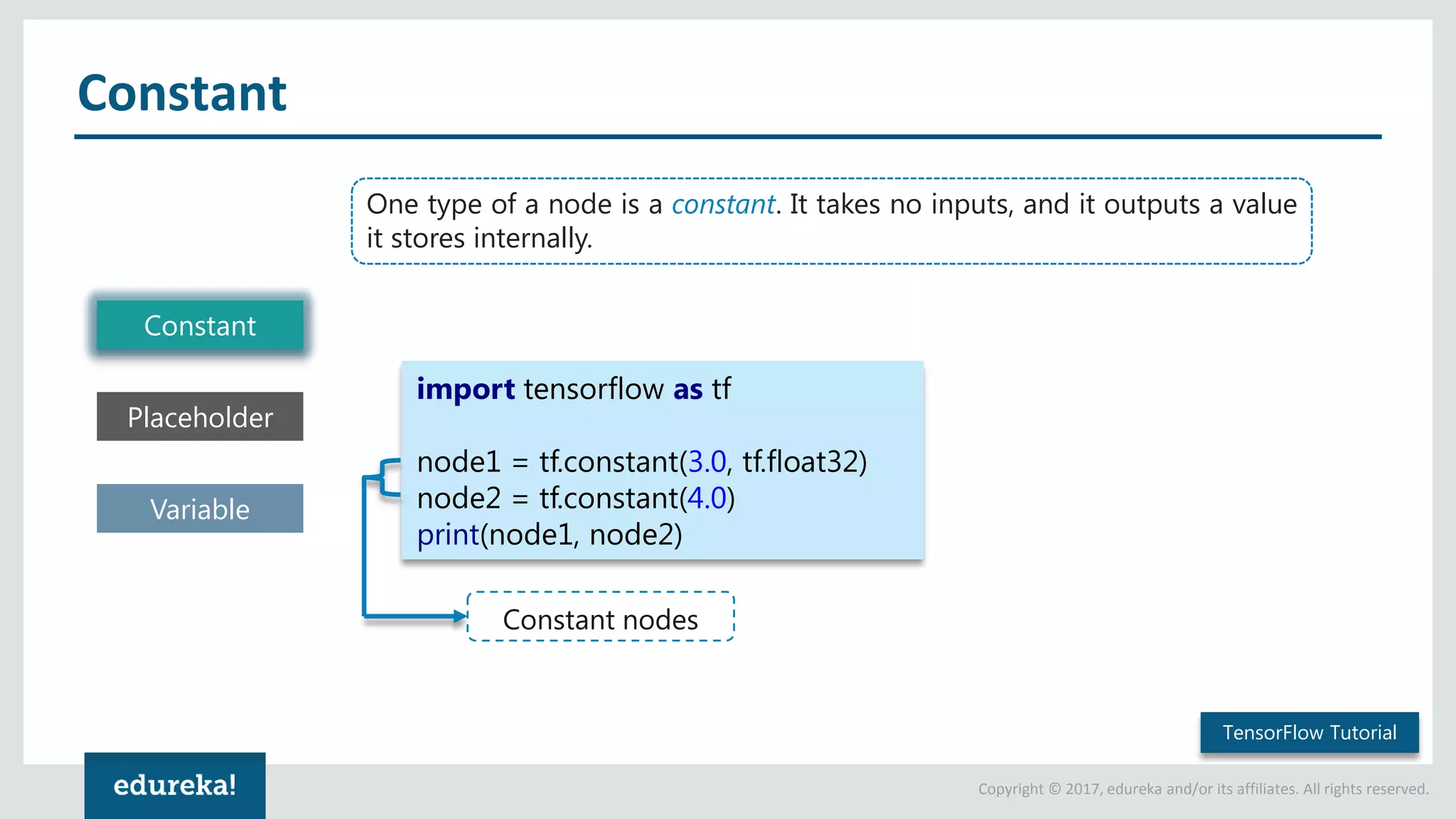

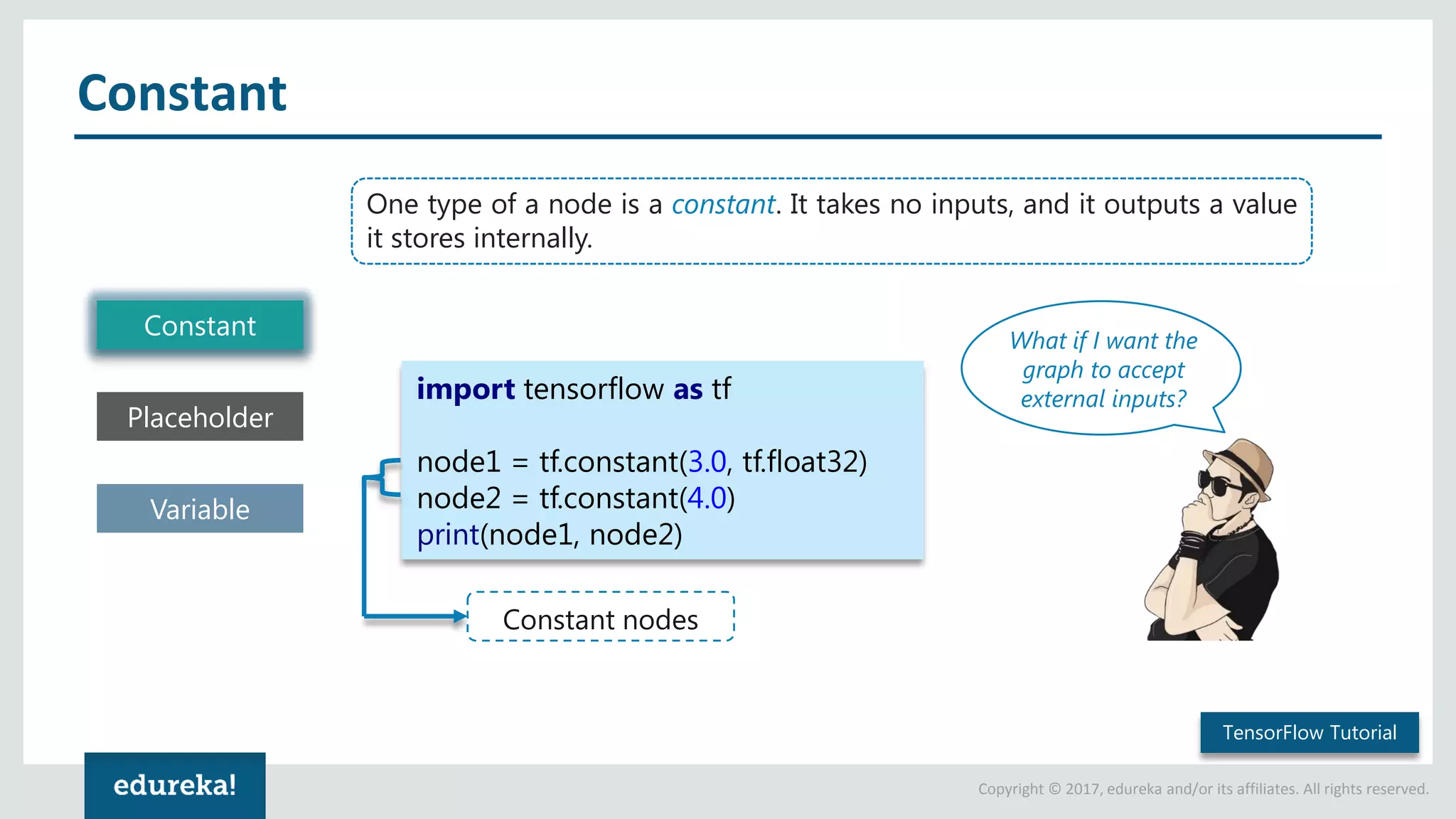

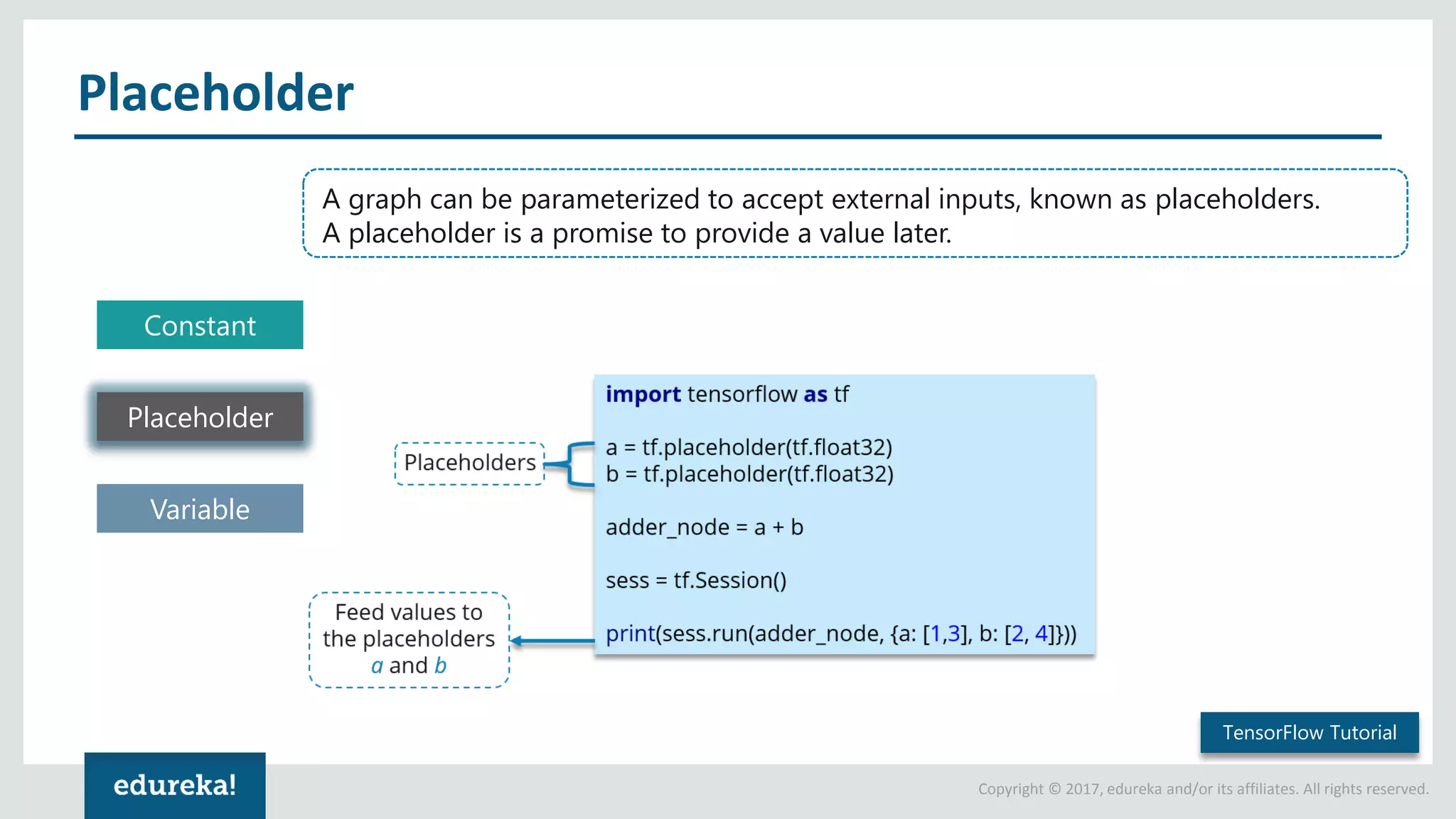

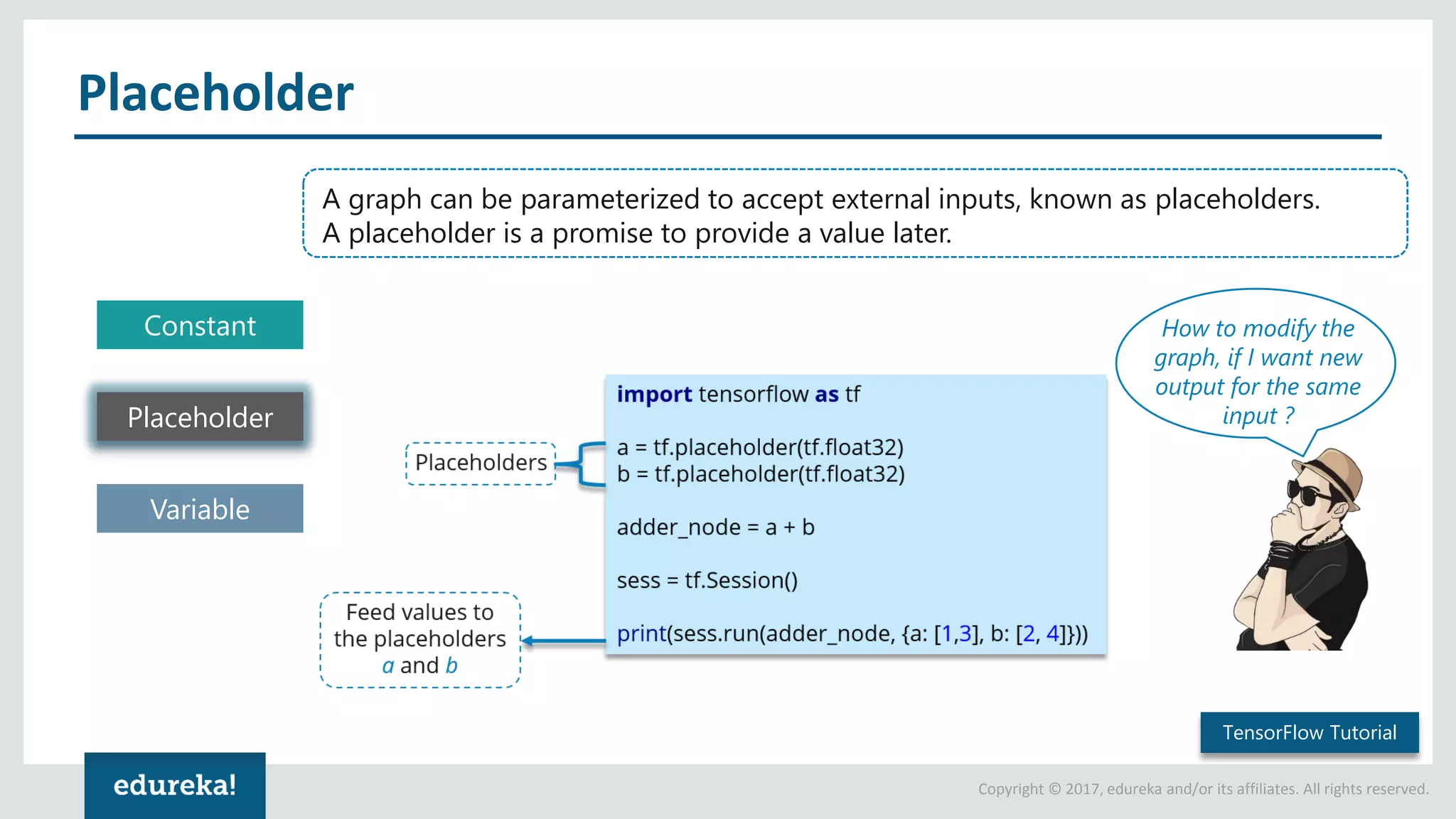

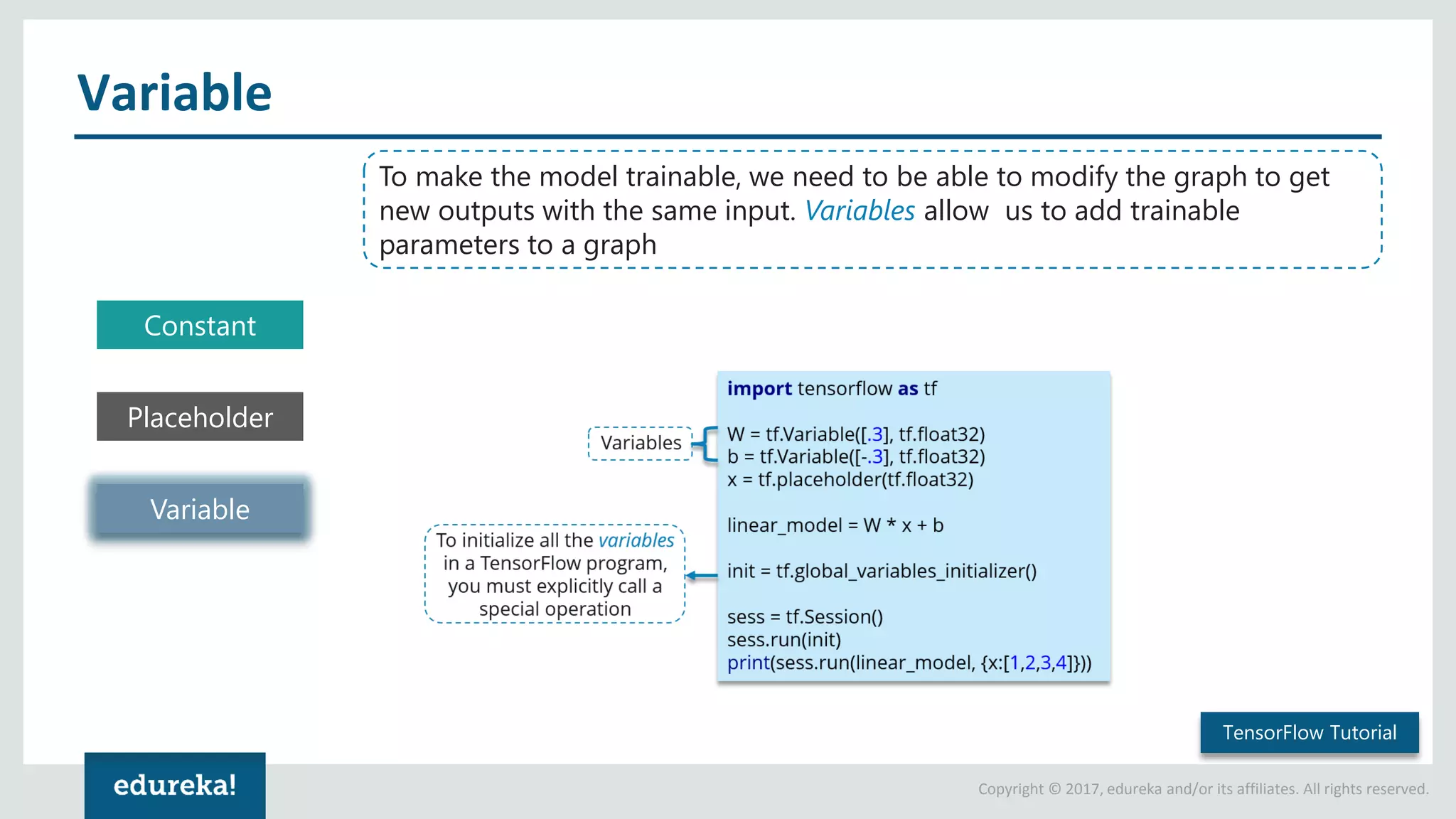

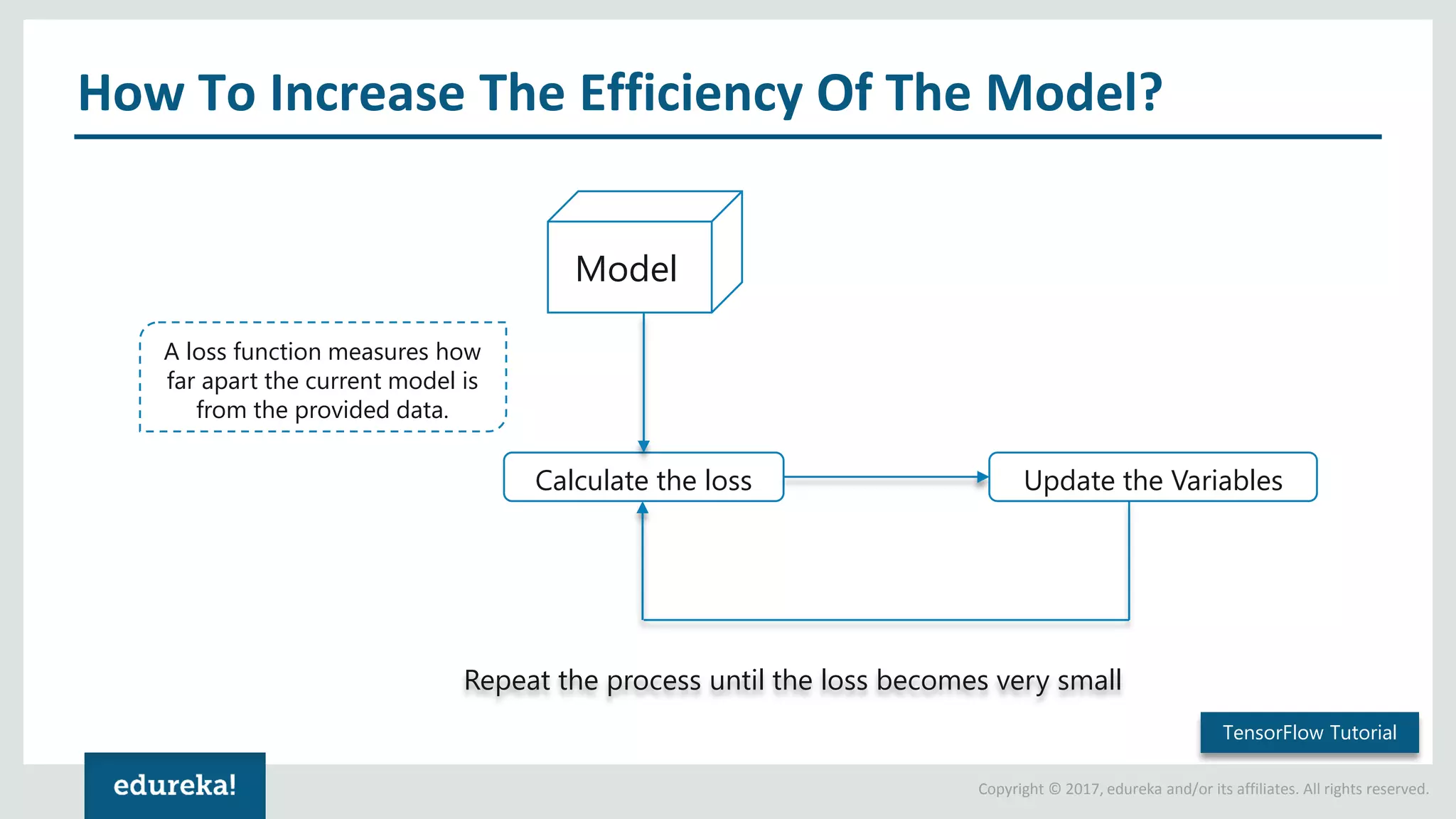

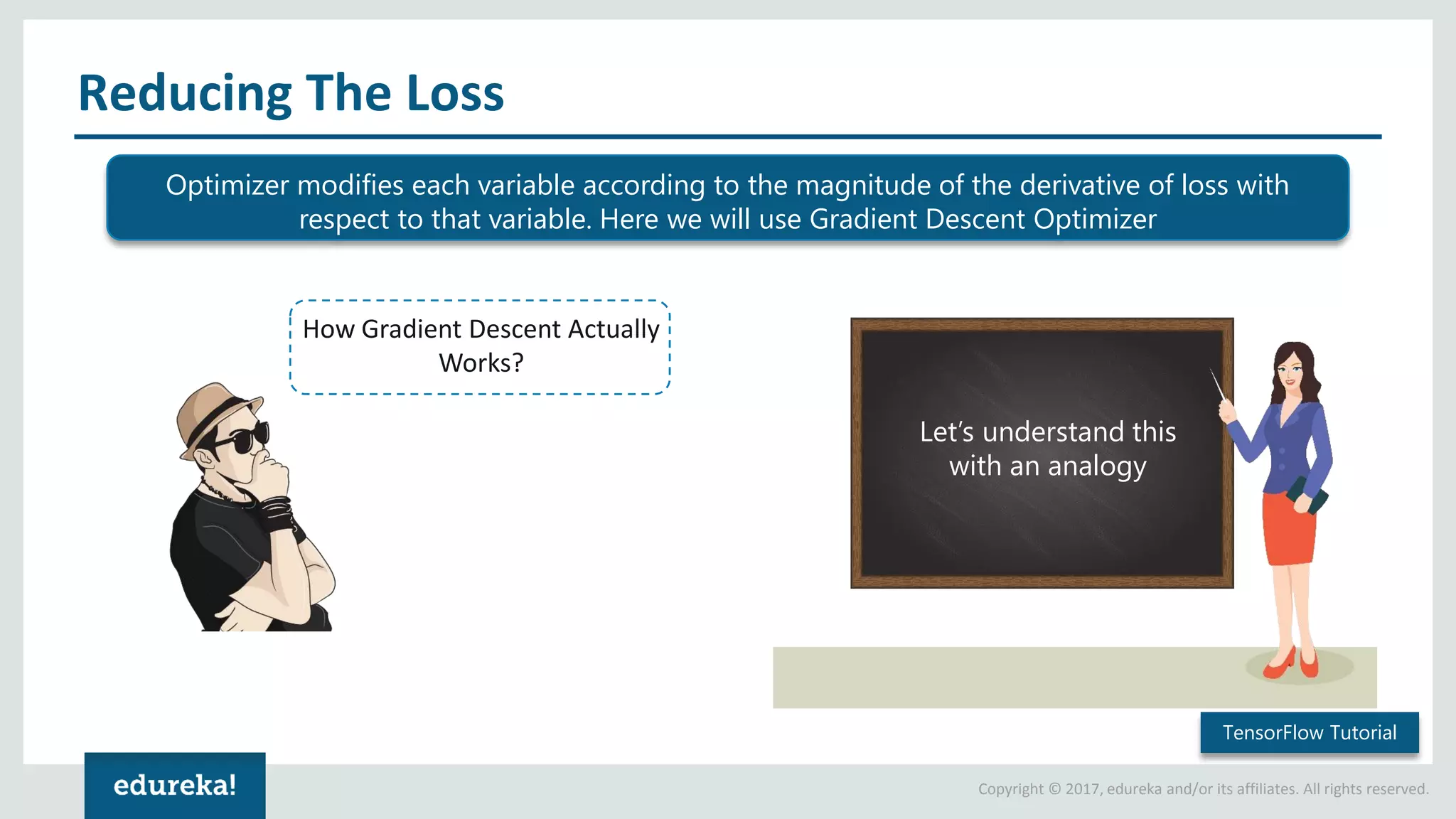

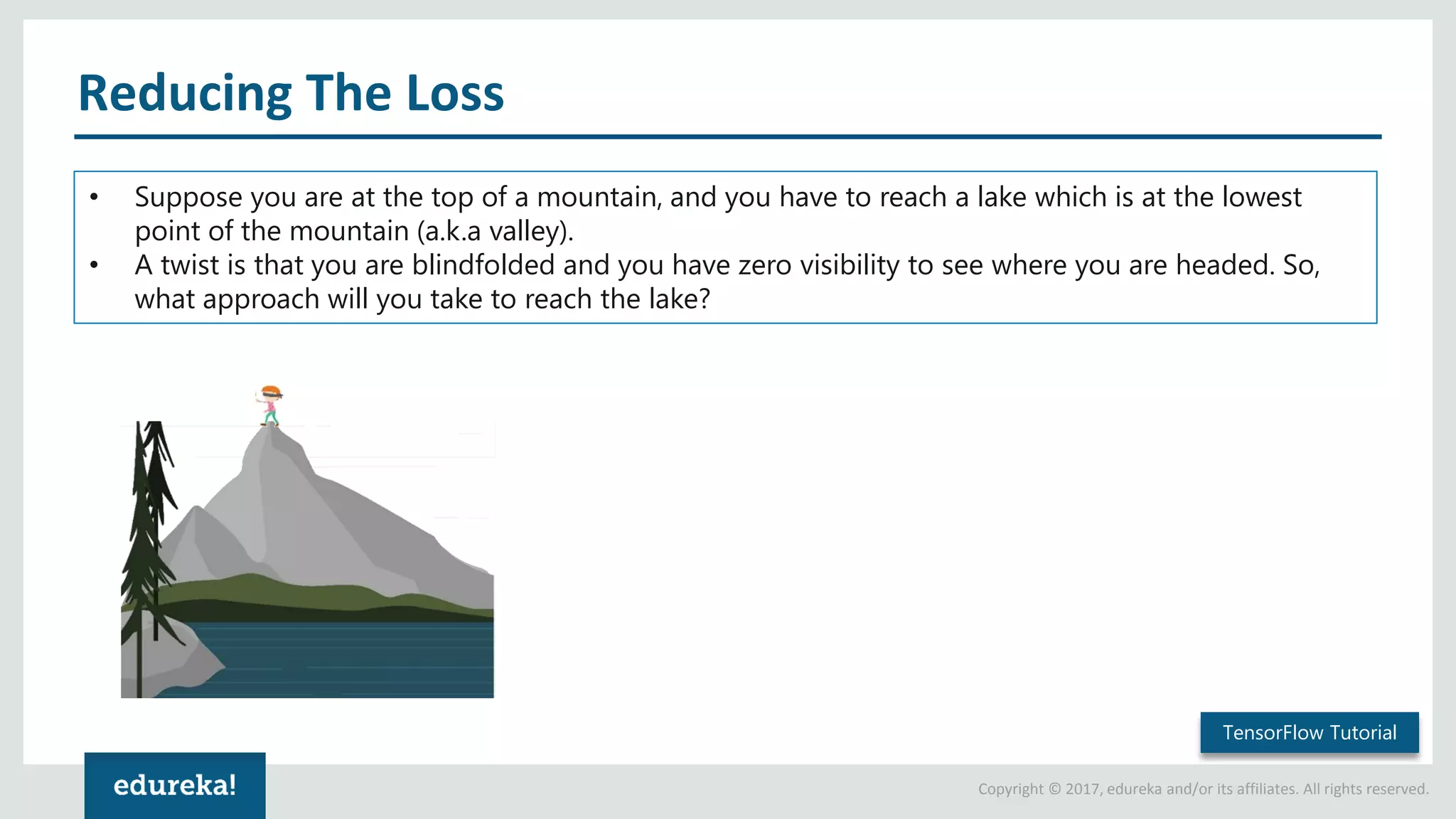

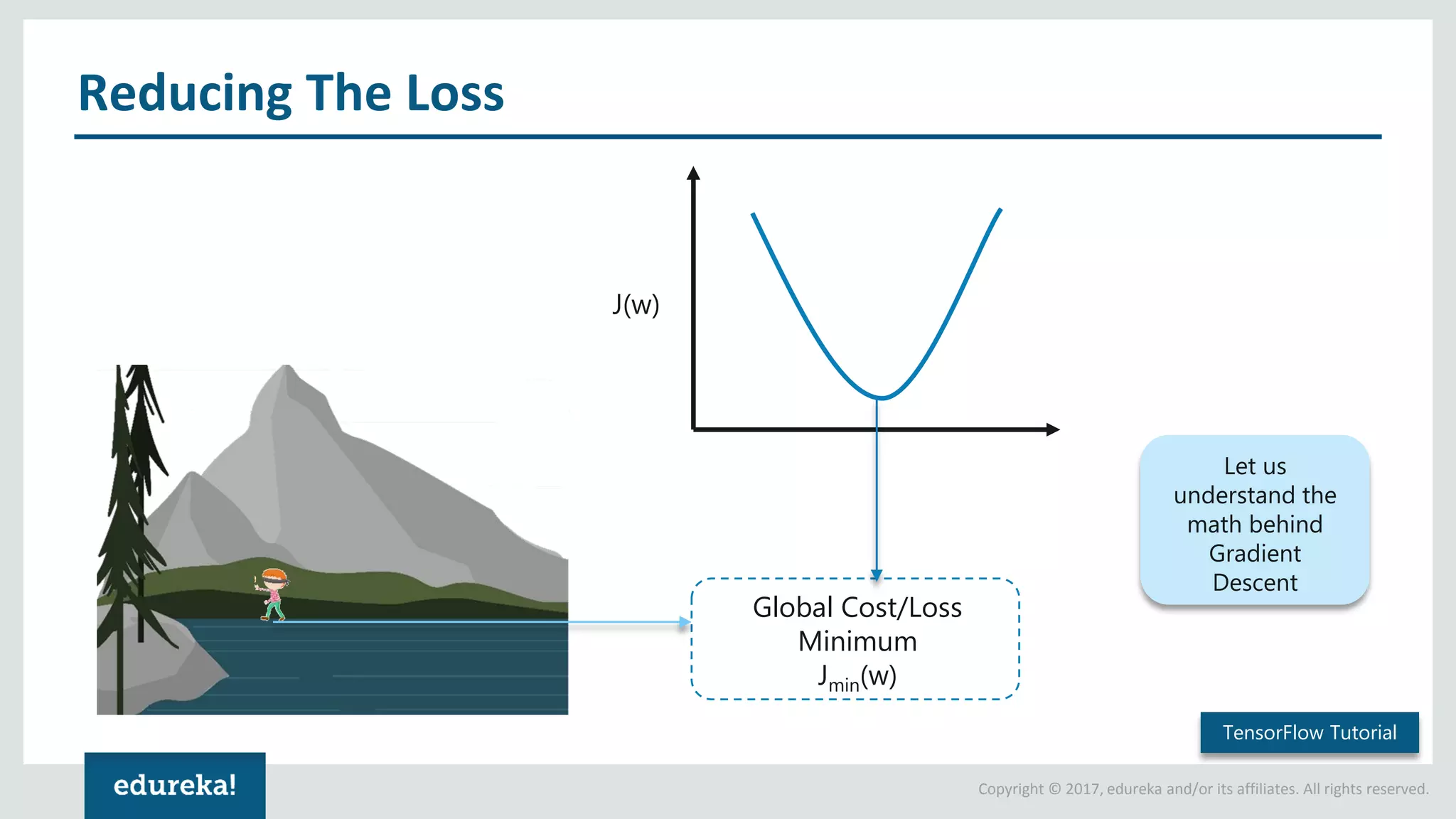

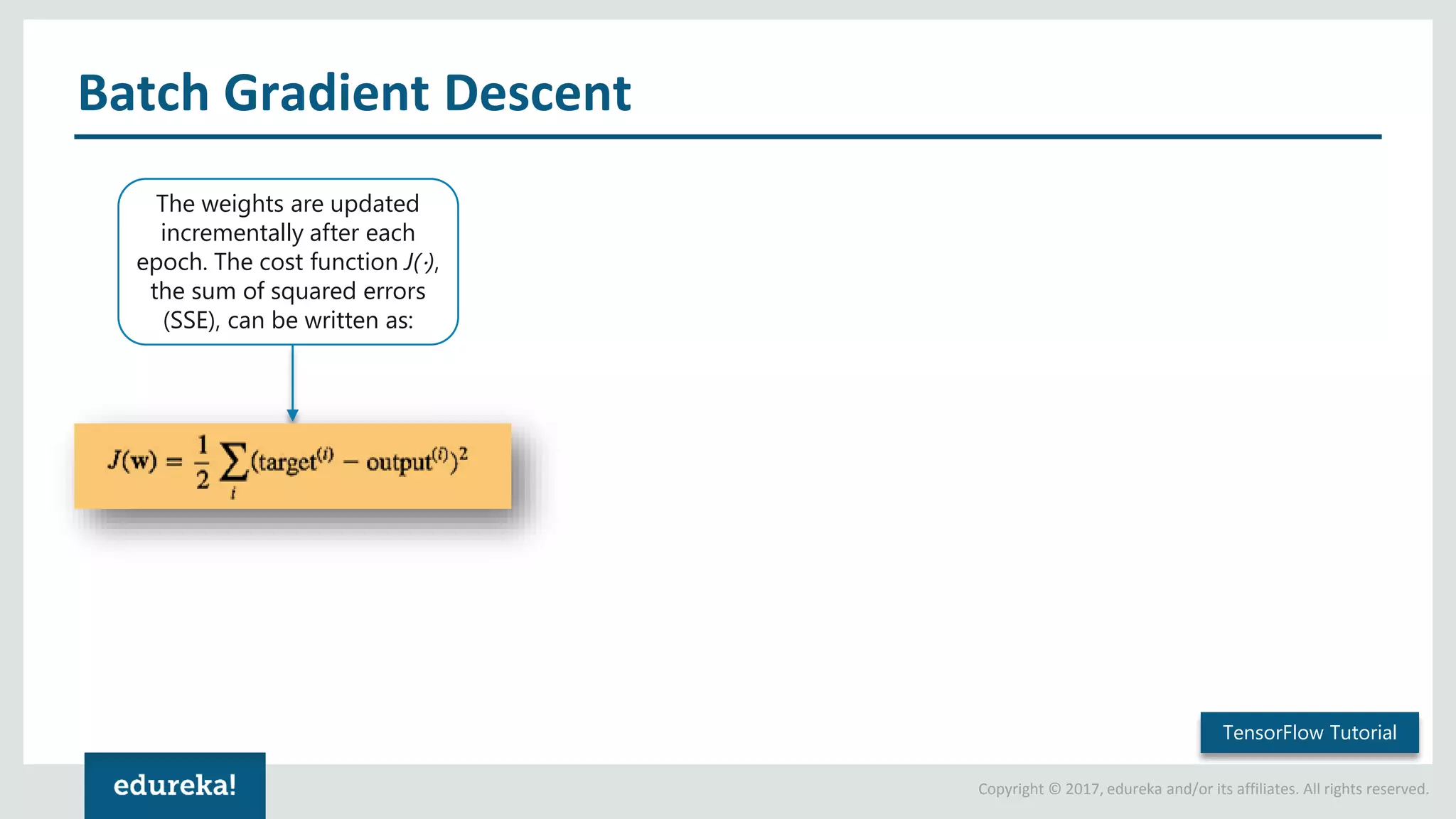

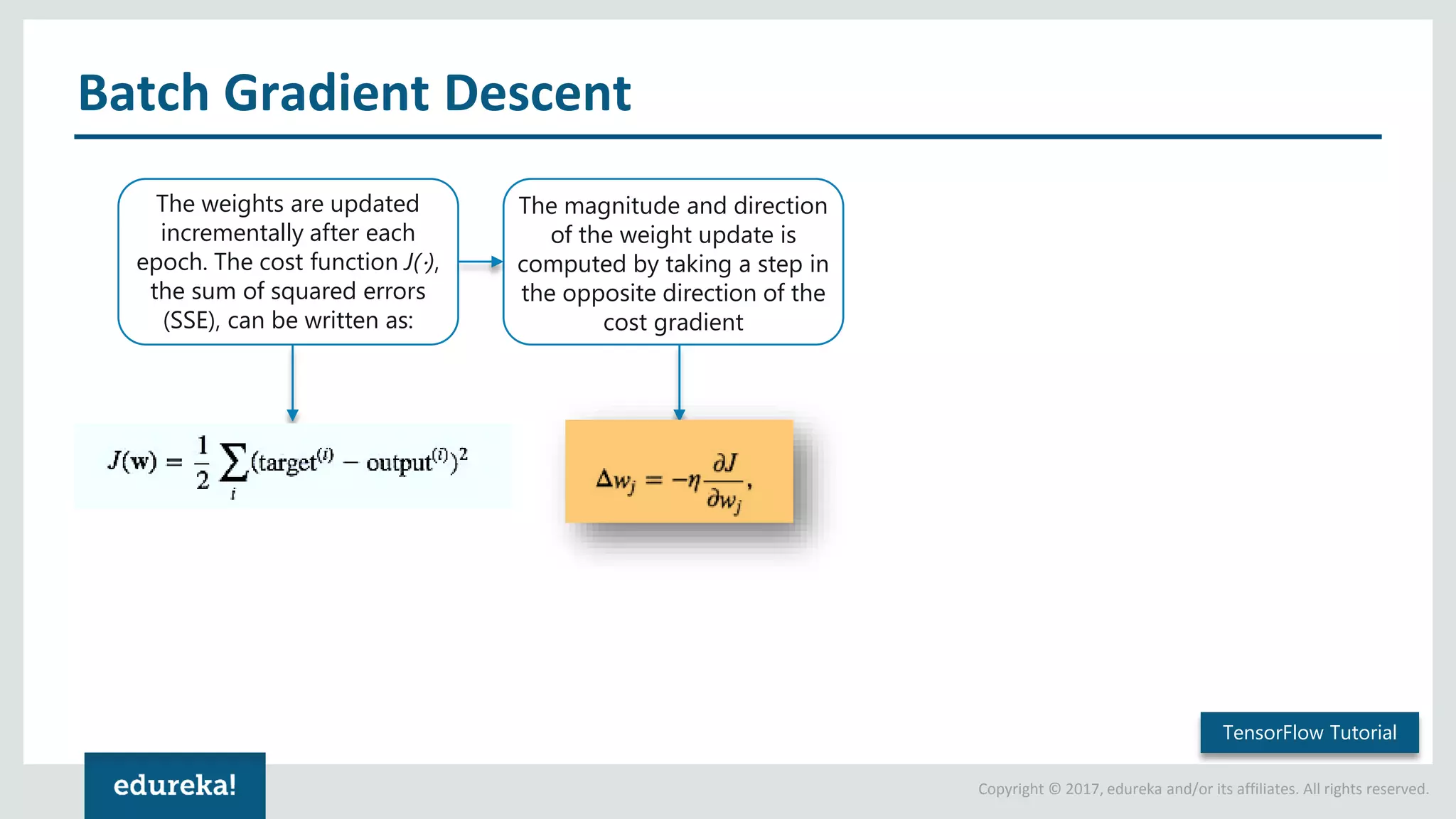

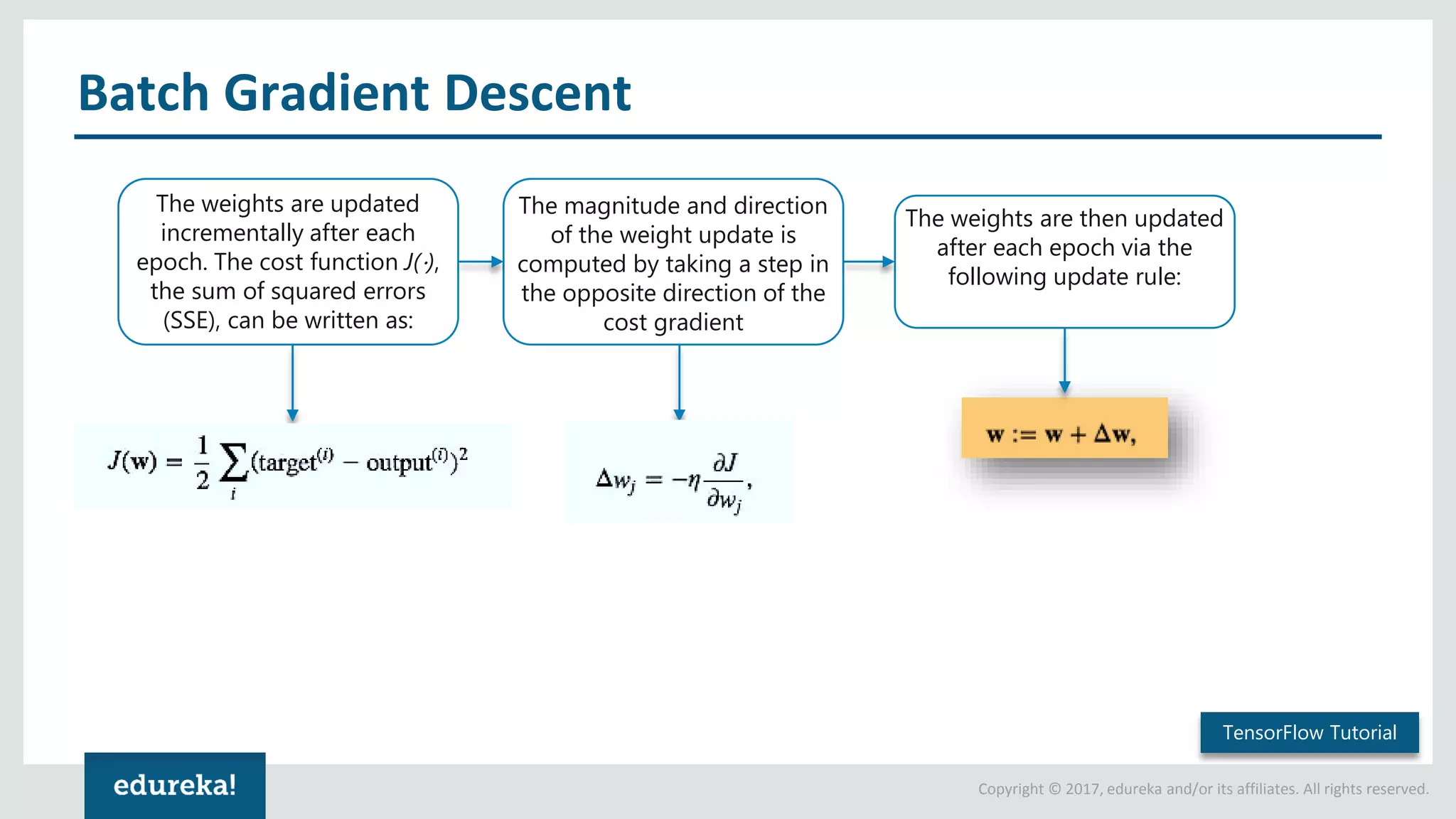

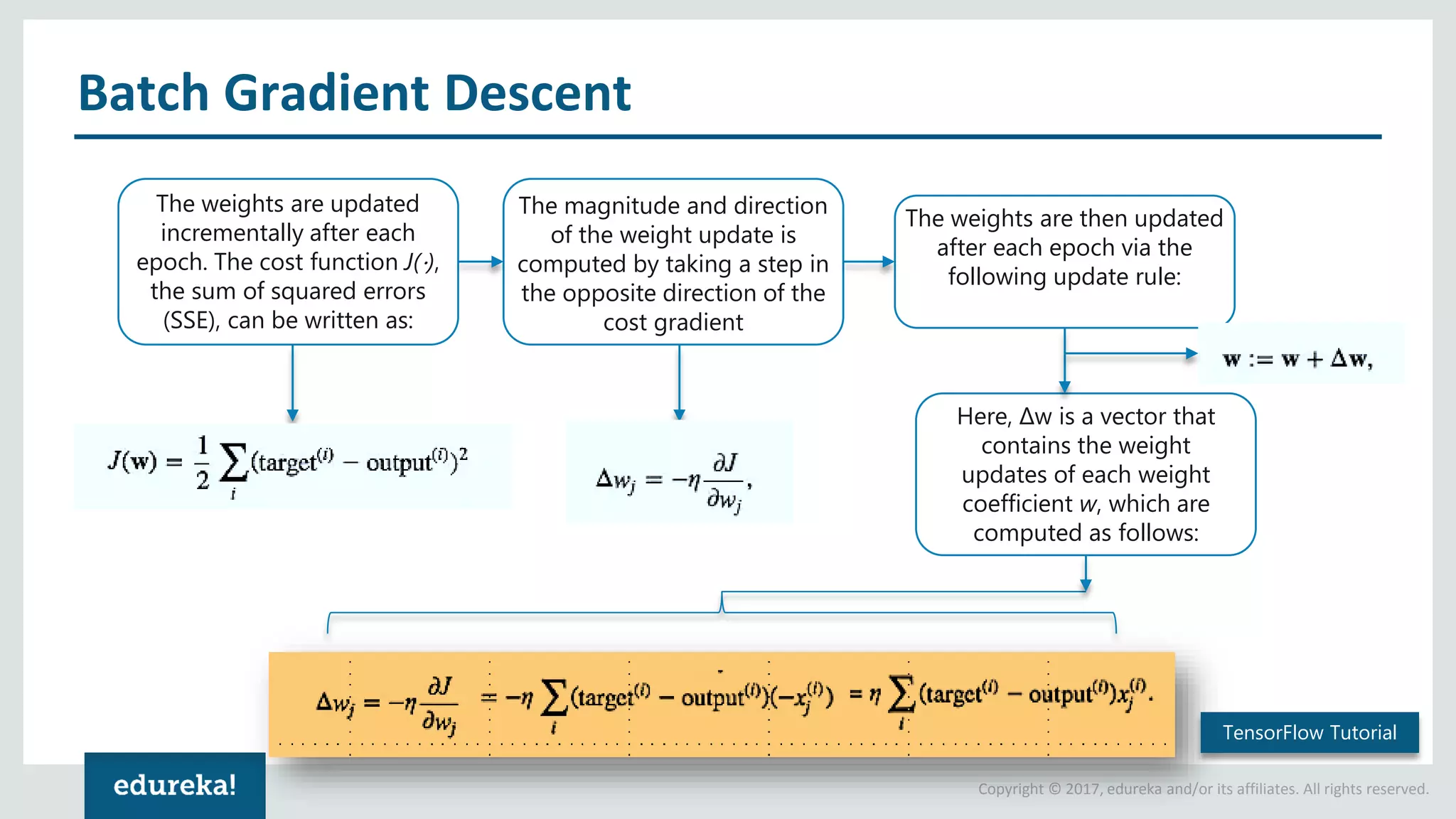

The document outlines a TensorFlow tutorial aimed at creating a model for distinguishing between naval mines and rocks using a sonar dataset. It explains the fundamentals of TensorFlow, including tensors, computational graphs, constants, placeholders, and variables, while also covering how to train models and minimize loss through gradient descent. Key steps in the implementation process, such as data preparation, model creation, training, and evaluation, are detailed throughout the tutorial.

![Copyright © 2017, edureka and/or its affiliates. All rights reserved. What Are Tensors? Tensors are the standard way of representing data in TensorFlow (deep learning). Tensors are multidimensional arrays, an extension of two-dimensional tables (matrices) to data with higher dimensions. Figure labels: tensor of dimension [1], tensor of dimensions [2], tensor of dimensions [3]. TensorFlow Tutorial](https://image.slidesharecdn.com/tensorflowtutorial-edureka-170718045444/75/TensorFlow-Tutorial-Deep-Learning-Using-TensorFlow-TensorFlow-Tutorial-Python-Edureka-8-2048.jpg)

![Tensors Rank](https://image.slidesharecdn.com/tensorflowtutorial-edureka-170718045444/75/TensorFlow-Tutorial-Deep-Learning-Using-TensorFlow-TensorFlow-Tutorial-Python-Edureka-9-2048.jpg)

| Rank | Math entity | Python example |
|------|-------------|----------------|
| 0 | Scalar (magnitude only) | `s = 483` |
| 1 | Vector (magnitude and direction) | `v = [1.1, 2.2, 3.3]` |
| 2 | Matrix (table of numbers) | `m = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]` |
| 3 | 3-Tensor (cube of numbers) | `t = [[[2], [4], [6]], [[8], [10], [12]], [[14], [16], [18]]]` |
| n | n-Tensor (you get the idea) | ... |
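The rank examples on the slide can be checked with a few lines of plain Python. This is only a sketch: `tensor_rank` is a hypothetical helper, not a TensorFlow function, and it assumes the nested lists are non-empty and rectangular.

```python
def tensor_rank(value):
    """Return the nesting depth of a nested list; a bare number has rank 0."""
    rank = 0
    while isinstance(value, list):
        rank += 1
        value = value[0]  # assumes non-empty, rectangular nesting
    return rank


# The four examples from the slide.
s = 483
v = [1.1, 2.2, 3.3]
m = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
t = [[[2], [4], [6]], [[8], [10], [12]], [[14], [16], [18]]]

for name, value in [("s", s), ("v", v), ("m", m), ("t", t)]:
    print(name, "has rank", tensor_rank(value))
```

Each extra level of nesting adds one to the rank, which is why the "cube of numbers" `t` comes out as rank 3.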

![TensorFlow: Building And Running A Graph. Building a computational graph (constant nodes): import tensorflow as tf node1 = tf.constant(3.0, tf.float32) node2 = tf.constant(4.0) print(node1, node2) Running a computational graph: sess = tf.Session() print(sess.run([node1, node2])) To actually evaluate the nodes, we must run the computational graph within a session, because the session encapsulates the control and state of the TensorFlow runtime.](https://image.slidesharecdn.com/tensorflowtutorial-edureka-170718045444/75/TensorFlow-Tutorial-Deep-Learning-Using-TensorFlow-TensorFlow-Tutorial-Python-Edureka-15-2048.jpg)
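The split between building a graph and running it can be illustrated without TensorFlow. The sketch below is a toy model of deferred evaluation, not how TensorFlow is implemented: `Constant`, `Add`, and `run` are hypothetical names, and the `Add` node is an addition that does not appear on the slide. The slide's `tf.Session` is TensorFlow 1.x style; in TensorFlow 2.x it is available as `tf.compat.v1.Session`.

```python
class Constant:
    """A graph node that stores a value but does not expose it until run."""

    def __init__(self, value):
        self._value = float(value)

    def evaluate(self):
        return self._value

    def __repr__(self):
        return "Constant(<unevaluated>)"


class Add:
    """A graph node that adds two other nodes when evaluated."""

    def __init__(self, left, right):
        self.left = left
        self.right = right

    def evaluate(self):
        return self.left.evaluate() + self.right.evaluate()

    def __repr__(self):
        return "Add(<unevaluated>)"


def run(nodes):
    """Toy counterpart of a session run: evaluate each requested node."""
    return [node.evaluate() for node in nodes]


# Building the graph: nothing is computed yet.
node1 = Constant(3.0)
node2 = Constant(4.0)
total = Add(node1, node2)
print(node1, node2)         # prints node descriptions, not 3.0 and 4.0

# Running the graph: values are produced only here.
print(run([node1, node2]))  # [3.0, 4.0]
print(run([total]))         # [7.0]
```

Printing a node only describes it, which mirrors the slide: `print(node1, node2)` shows tensor objects, while values appear only once the graph is run.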

![Simple Linear Model. import tensorflow as tf W = tf.Variable([.3], tf.float32) b = tf.Variable([-.3], tf.float32) x = tf.placeholder(tf.float32) linear_model = W * x + b init = tf.global_variables_initializer() sess = tf.Session() sess.run(init) print(sess.run(linear_model, {x:[1,2,3,4]})) We've created a model, but we don't know how good it is yet.](https://image.slidesharecdn.com/tensorflowtutorial-edureka-170718045444/75/TensorFlow-Tutorial-Deep-Learning-Using-TensorFlow-TensorFlow-Tutorial-Python-Edureka-29-2048.jpg)
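To see what the linear model on the slide evaluates to, the same arithmetic can be written in plain Python. This is a sketch under assumptions: ordinary floats stand in for `tf.Variable`, and a list argument stands in for the `x` placeholder feed.

```python
# Initial parameters from the slide: W = 0.3, b = -0.3.
W = 0.3
b = -0.3


def linear_model(xs, W, b):
    """Apply W * x + b to every input value."""
    return [W * x + b for x in xs]


predictions = linear_model([1, 2, 3, 4], W, b)
print([round(p, 4) for p in predictions])  # [0.0, 0.3, 0.6, 0.9]
```

The values are rounded only for display; TensorFlow would report the same numbers as 32-bit floats.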

![Calculating The Loss. To judge how good the model is, we need to know its loss (error). To evaluate the model on training data, we need y, a placeholder that provides the desired values, and we need to write a loss function. We'll use a standard loss model for linear regression: (linear_model - y) creates a vector where each element is the corresponding example's error delta, tf.square squares that error, and tf.reduce_sum sums all the squared errors. y = tf.placeholder(tf.float32) squared_deltas = tf.square(linear_model - y) loss = tf.reduce_sum(squared_deltas) print(sess.run(loss, {x:[1,2,3,4], y:[0,-1,-2,-3]}))](https://image.slidesharecdn.com/tensorflowtutorial-edureka-170718045444/75/TensorFlow-Tutorial-Deep-Learning-Using-TensorFlow-TensorFlow-Tutorial-Python-Edureka-31-2048.jpg)
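The loss described on the slide (error delta, square, sum) can be worked through by hand. The sketch below uses plain Python in place of `tf.square` and `tf.reduce_sum`, and `sum_squared_error` is a hypothetical helper name. It takes the slide's inputs and the initial parameters W = 0.3, b = -0.3.

```python
def sum_squared_error(predictions, targets):
    """Sum of squared differences between predictions and desired values."""
    deltas = [p - t for p, t in zip(predictions, targets)]  # linear_model - y
    return sum(d * d for d in deltas)                       # square, then sum


W, b = 0.3, -0.3
xs = [1, 2, 3, 4]
ys = [0, -1, -2, -3]

predictions = [W * x + b for x in xs]  # [0.0, 0.3, 0.6, 0.9]
loss = sum_squared_error(predictions, ys)
print(round(loss, 2))  # 23.66
```

The deltas are 0, 1.3, 2.6 and 3.9, so the loss is 0 + 1.69 + 6.76 + 15.21 = 23.66, which shows that the initial parameters fit the data poorly.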

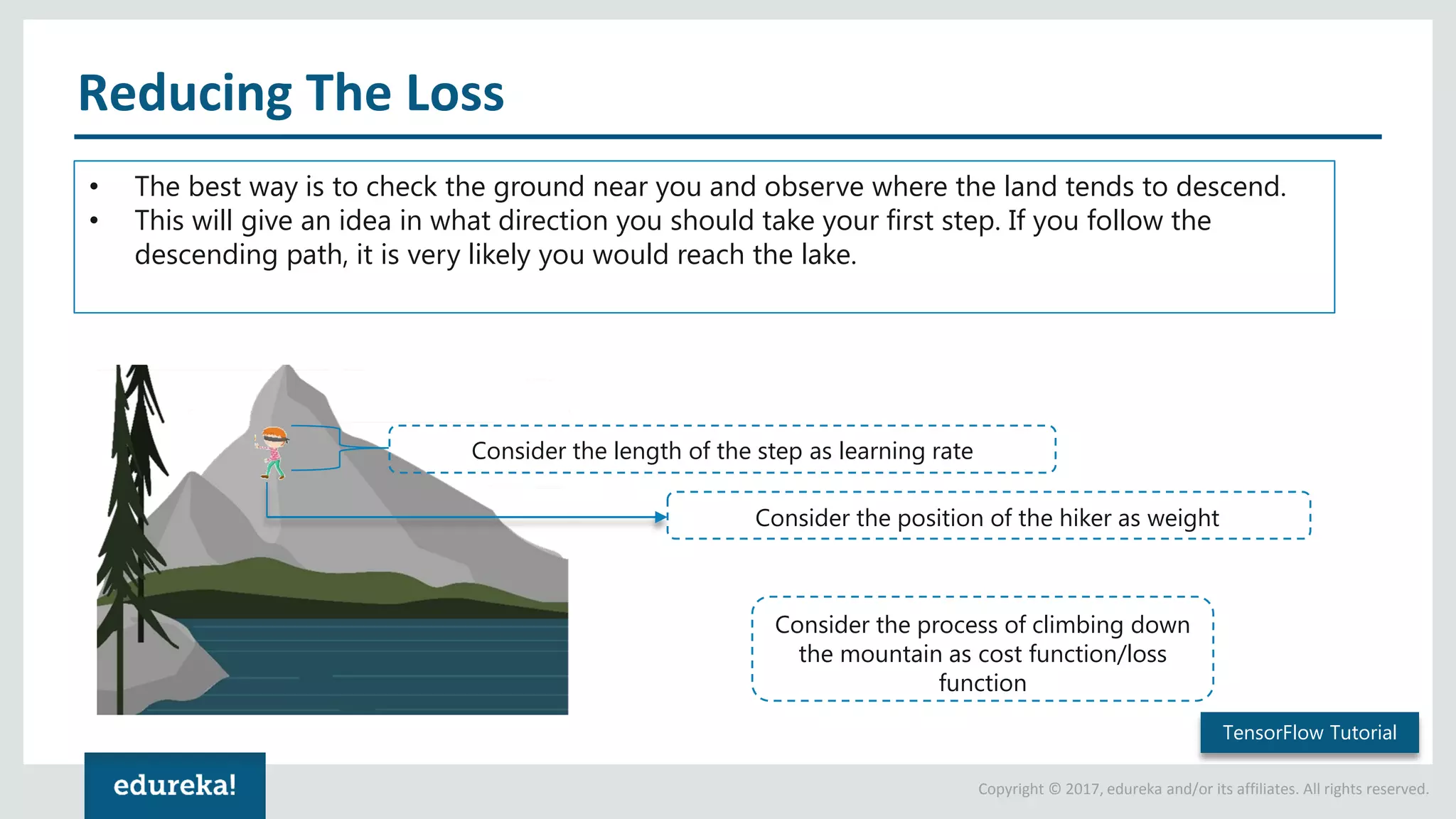

![Reducing The Loss. Suppose we want to find the best parameters (W) for our learning algorithm. We can apply the same analogy and find the best possible values for those parameters. Consider the example below: optimizer = tf.train.GradientDescentOptimizer(0.01) train = optimizer.minimize(loss) sess.run(init) for i in range(1000): sess.run(train, {x:[1,2,3,4], y:[0,-1,-2,-3]}) print(sess.run([W, b]))](https://image.slidesharecdn.com/tensorflowtutorial-edureka-170718045444/75/TensorFlow-Tutorial-Deep-Learning-Using-TensorFlow-TensorFlow-Tutorial-Python-Edureka-40-2048.jpg)
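What the optimizer does in the training loop on the slide can be approximated by hand-written gradient descent. This is a minimal sketch, not TensorFlow's implementation: the gradients of the sum-of-squares loss are derived manually instead of by automatic differentiation, while the learning rate (0.01), step count (1000), data, and initial values are taken from the slides.

```python
xs = [1, 2, 3, 4]
ys = [0, -1, -2, -3]

W, b = 0.3, -0.3       # initial parameters from the linear-model slide
learning_rate = 0.01   # same value passed to the optimizer on the slide

for _ in range(1000):
    # Error of each prediction: (W * x + b) - y
    errors = [W * x + b - y for x, y in zip(xs, ys)]
    # Gradients of sum(error^2) with respect to W and b.
    grad_W = 2 * sum(e * x for e, x in zip(errors, xs))
    grad_b = 2 * sum(errors)
    # Step against the gradient to reduce the loss.
    W -= learning_rate * grad_W
    b -= learning_rate * grad_b

print(round(W, 3), round(b, 3))  # -1.0 1.0
```

The training data lie exactly on the line y = -x + 1, so the parameters approach W = -1 and b = 1 and the loss approaches zero.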