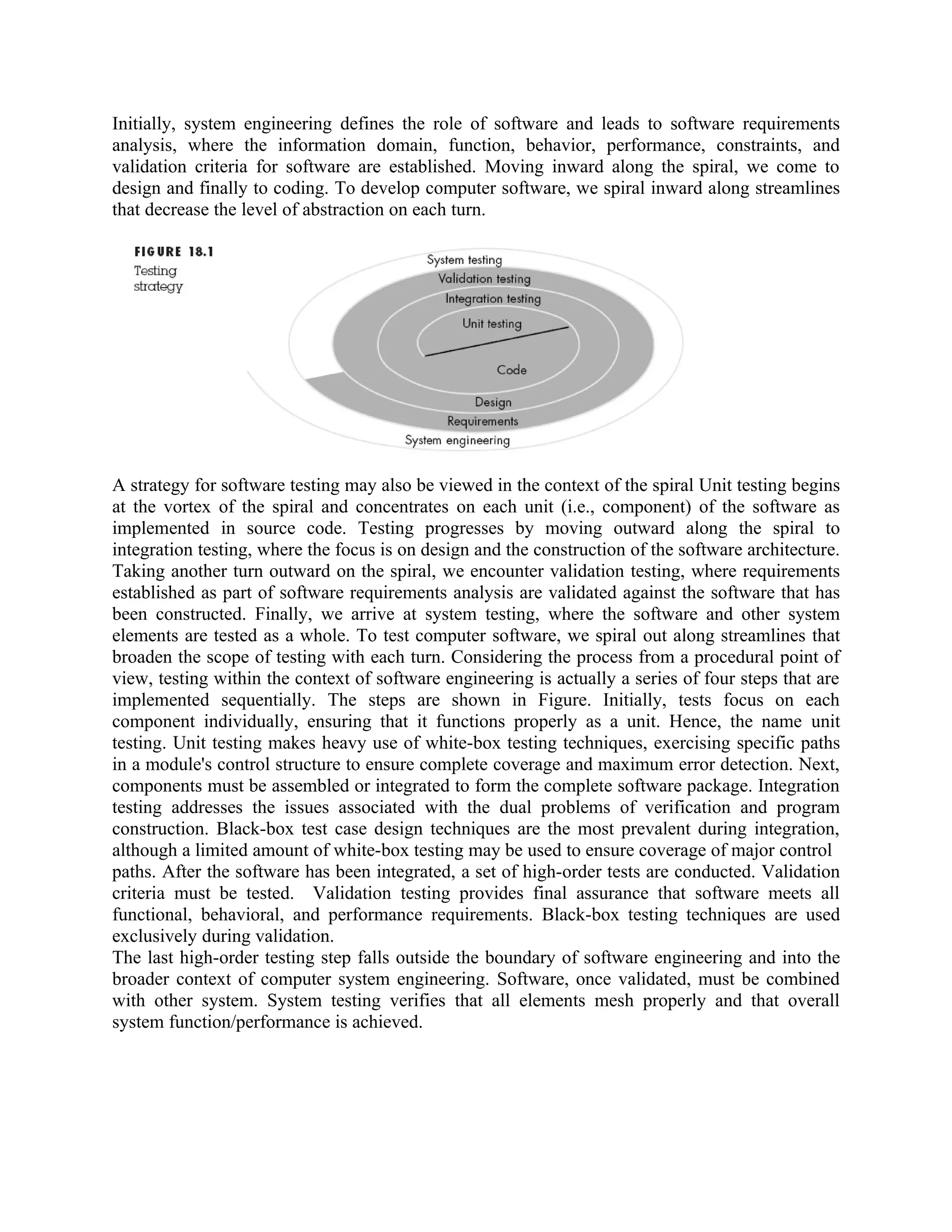

The document discusses strategies for software testing, including:

1) Testing begins at the component level and works outward toward integration of the whole system, with different techniques used at different stages.
2) A strategy provides a roadmap for testing that covers planning, design, execution, and evaluation.
3) The main stages of a strategy are unit testing, integration testing, validation testing, and system testing, with the scope broadening at each stage.
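
As a rough illustration of how the scope broadens between the first two stages, the sketch below tests two small components in isolation and then tests them working together. The functions `parse_price`, `total`, and `checkout` are hypothetical and invented for this example; they do not come from the document. Validation and system testing are not shown, since they depend on requirements and on a deployed environment.

```python
import unittest


# Hypothetical components, invented for illustration only.
def parse_price(text: str) -> float:
    """Component A: convert a string such as '$4.50' to a float."""
    return float(text.strip().lstrip("$"))


def total(prices: list, tax_rate: float) -> float:
    """Component B: sum the prices and apply a tax rate."""
    return round(sum(prices) * (1 + tax_rate), 2)


def checkout(raw_prices: list, tax_rate: float) -> float:
    """Integration point: combines components A and B."""
    return total([parse_price(p) for p in raw_prices], tax_rate)


class UnitTests(unittest.TestCase):
    """Stage 1: each component is exercised on its own."""

    def test_parse_price(self):
        self.assertEqual(parse_price(" $4.50 "), 4.5)

    def test_total(self):
        self.assertEqual(total([1.0, 2.0], 0.1), 3.3)


class IntegrationTests(unittest.TestCase):
    """Stage 2: the components are exercised together."""

    def test_checkout(self):
        self.assertEqual(checkout(["$1.00", "$2.00"], 0.1), 3.3)


def run_stage(case):
    """Run one stage (a TestCase class) and return its TestResult."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(case)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    # Work outward: run the narrow stage first and stop on failure.
    for stage in (UnitTests, IntegrationTests):
        if not run_stage(stage).wasSuccessful():
            break
```

Running the stages in order and stopping at the first failure is one possible design choice: a defect caught at the unit stage is usually easier to localize than the same defect surfacing in an integration test.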