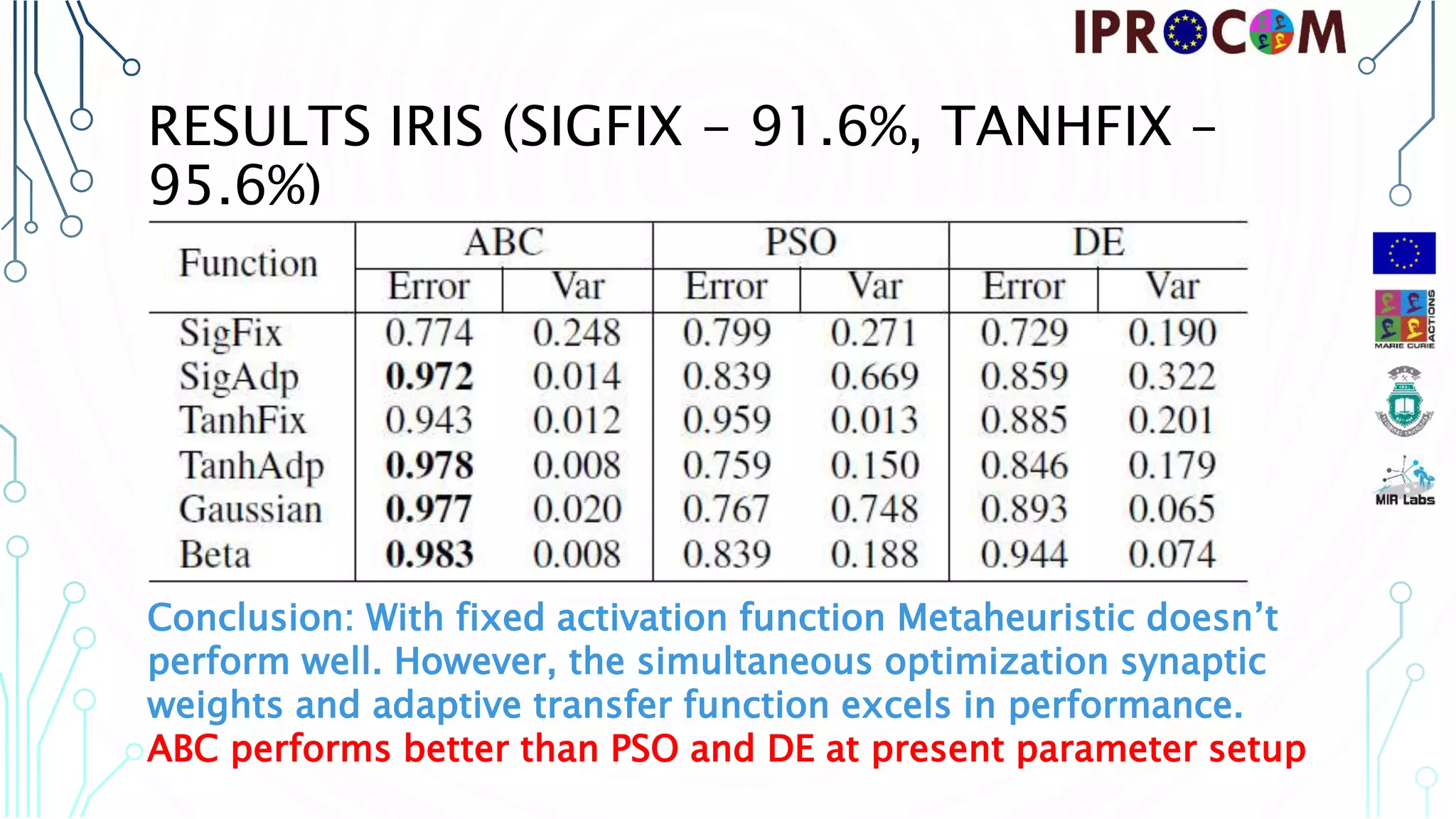

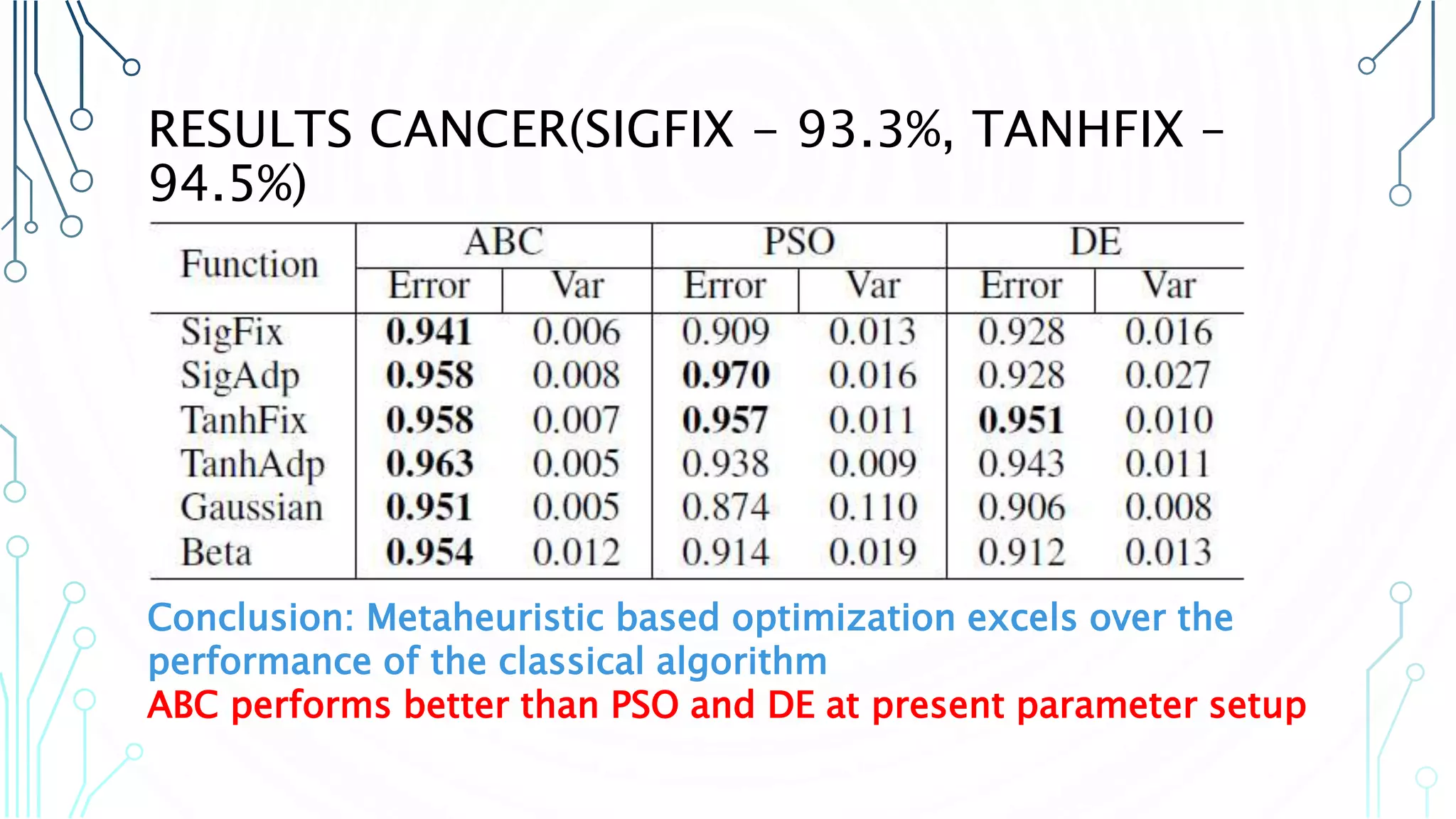

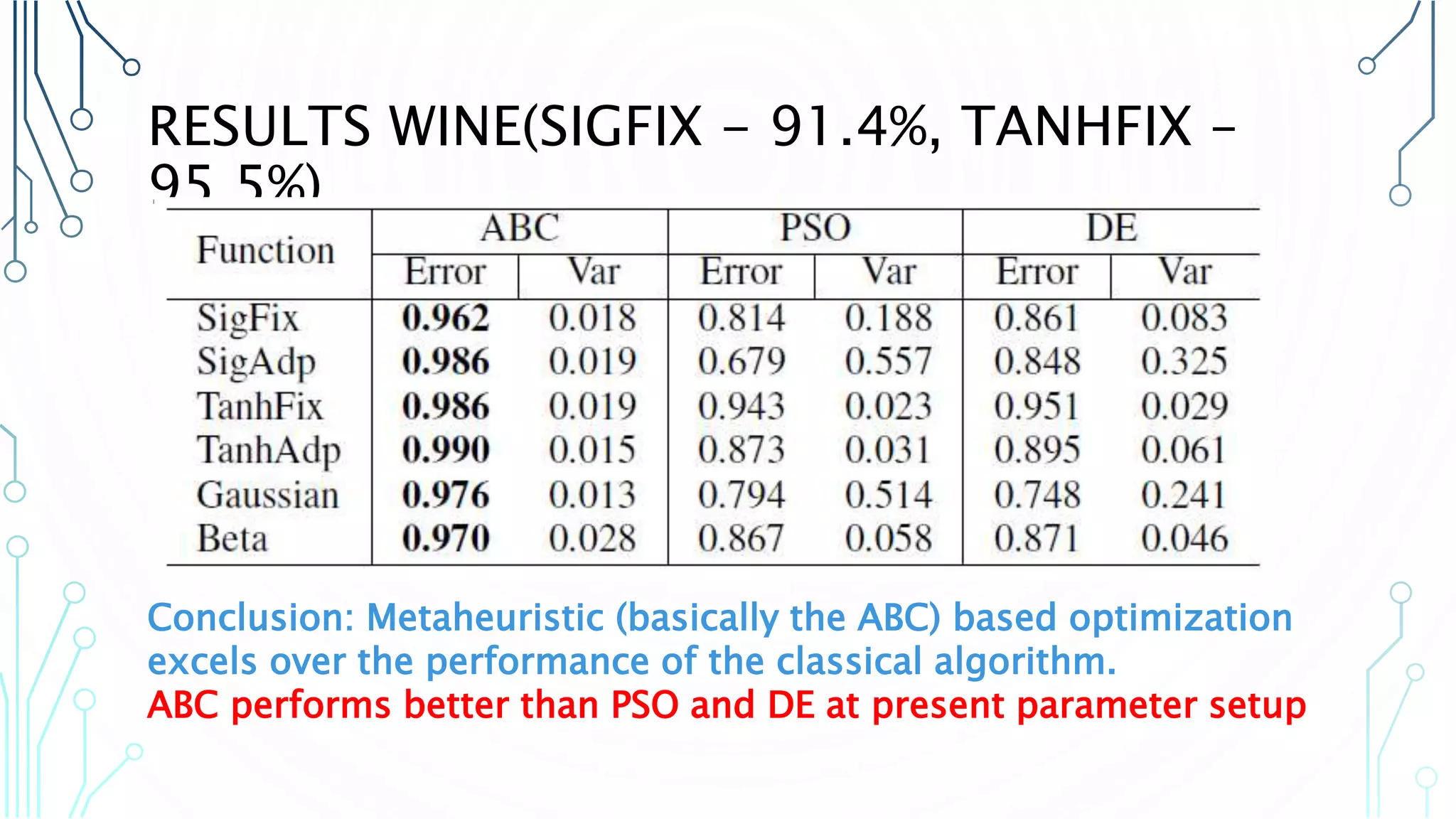

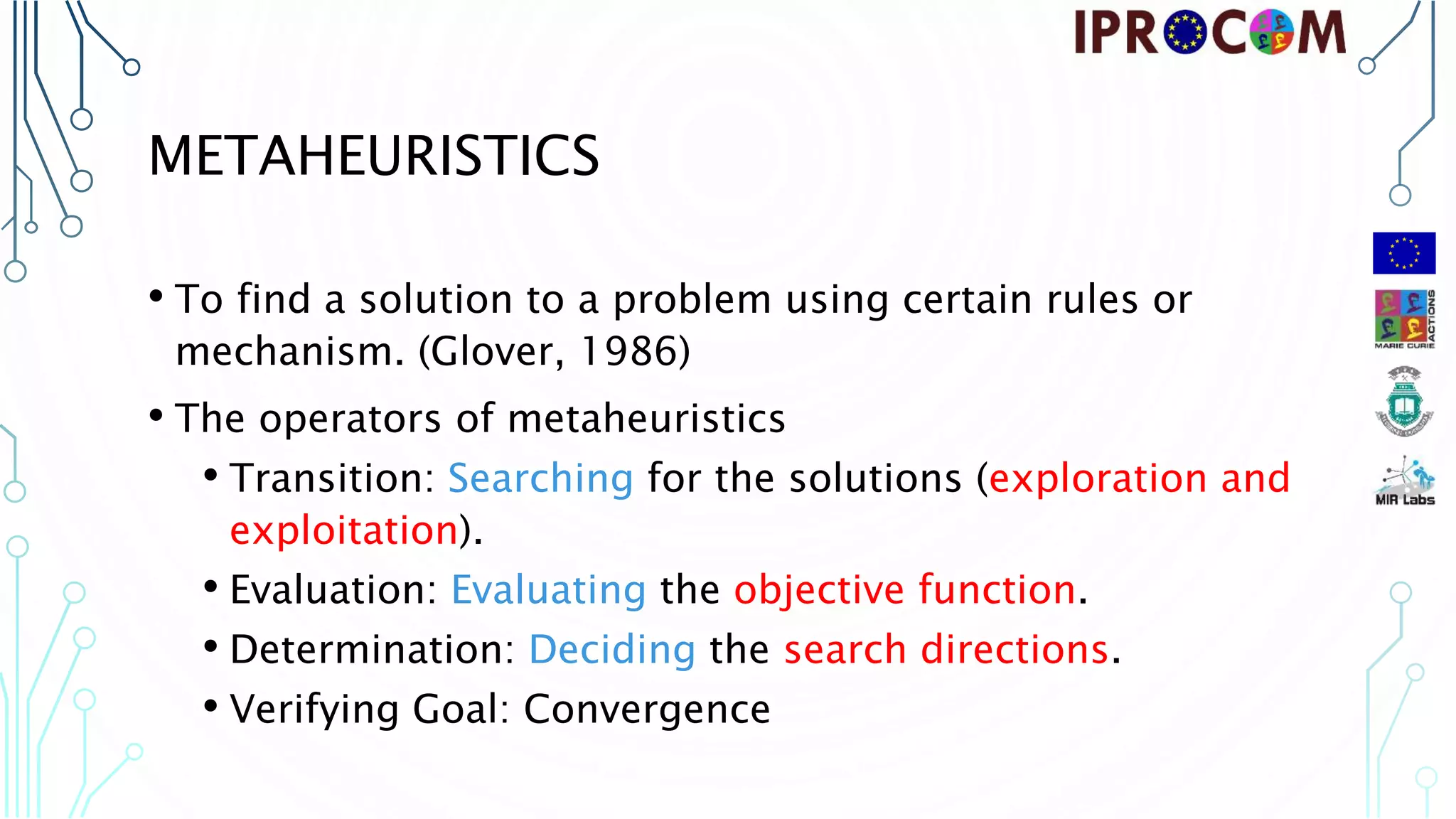

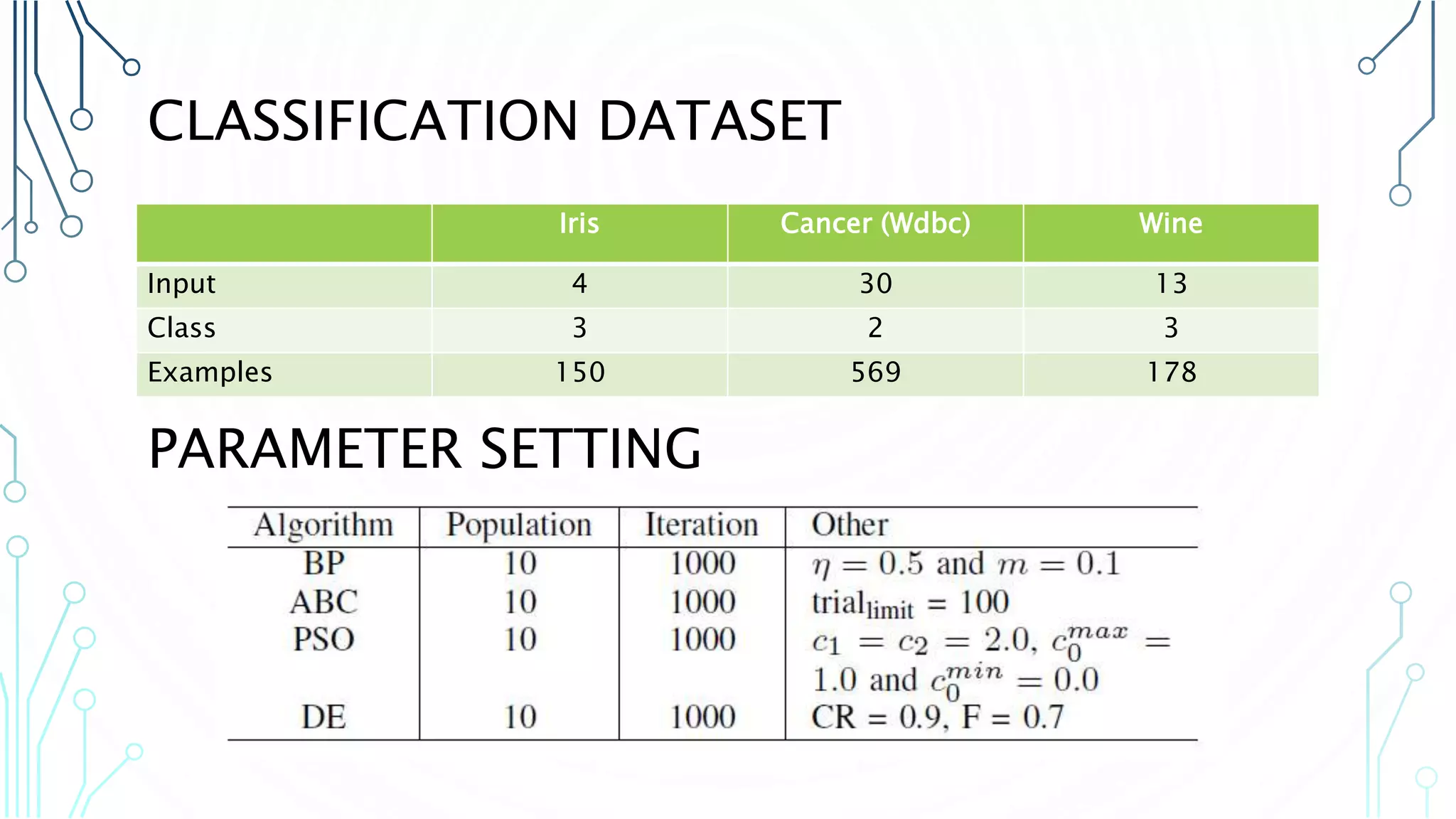

The document proposes using metaheuristic algorithms, namely Artificial Bee Colony (ABC), Particle Swarm Optimization (PSO), and Differential Evolution (DE), to optimize the weights and the activation functions of a neural network simultaneously. The approach is tested on three datasets: Iris, Cancer, and Wine. On these datasets, metaheuristic-based simultaneous optimization outperforms classical backpropagation with fixed activation functions. Among the metaheuristics tested, Artificial Bee Colony achieves the best results for the given datasets and parameter settings. The paper concludes that metaheuristics are effective for optimizing both the weights and the transfer functions of a neural network.
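A minimal sketch of what simultaneous optimization can look like in practice, assuming a single hidden layer and a sigmoid whose slope λ (`lam`) and threshold θ (`theta`) are tuned per node. Every weight, bias, and activation parameter is packed into one flat real vector, so any of the three metaheuristics can search over it using the training error as fitness. The layer sizes, the sigmoid parameterization, the vector layout, and the names `vector_size`, `decode`, `forward`, and `fitness` are illustrative assumptions, not the paper's exact encoding.

```python
import math
import random

def sigmoid(x, lam=1.0, theta=0.0):
    # Assumed parameterized sigmoid: slope lam, threshold theta (not confirmed by the paper)
    z = max(-500.0, min(500.0, lam * (x - theta)))  # clamp so math.exp cannot overflow
    return 1.0 / (1.0 + math.exp(-z))

def vector_size(n_in, n_hidden, n_out):
    """Length of one candidate: all weights and biases plus (lam, theta) per node."""
    weights = (n_in + 1) * n_hidden + (n_hidden + 1) * n_out  # +1 = one bias per node
    act_params = 2 * (n_hidden + n_out)
    return weights + act_params

def decode(vec, n_in, n_hidden, n_out):
    """Split a flat candidate vector into weight rows and activation parameters."""
    pos = 0

    def take(k):
        nonlocal pos
        chunk = vec[pos:pos + k]
        pos += k
        return chunk

    w_hidden = [take(n_in + 1) for _ in range(n_hidden)]  # last entry of each row = bias
    w_out = [take(n_hidden + 1) for _ in range(n_out)]
    act_hidden = [take(2) for _ in range(n_hidden)]  # (lam, theta) for each hidden node
    act_out = [take(2) for _ in range(n_out)]
    if pos != len(vec):
        raise ValueError("candidate vector has the wrong length")
    return w_hidden, w_out, act_hidden, act_out

def forward(vec, x, n_in, n_hidden, n_out):
    w_hidden, w_out, act_hidden, act_out = decode(vec, n_in, n_hidden, n_out)
    # zip() stops at the shorter sequence, so the bias row[-1] is added separately
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + row[-1], *act_hidden[j])
         for j, row in enumerate(w_hidden)]
    return [sigmoid(sum(w * hj for w, hj in zip(row, h)) + row[-1], *act_out[k])
            for k, row in enumerate(w_out)]

def fitness(vec, data, n_in, n_hidden, n_out):
    """Mean squared error over (inputs, one-hot target) pairs; lower is better."""
    total = 0.0
    for x, target in data:
        y = forward(vec, x, n_in, n_hidden, n_out)
        total += sum((yi - ti) ** 2 for yi, ti in zip(y, target))
    return total / len(data)

if __name__ == "__main__":
    random.seed(0)
    n_in, n_hidden, n_out = 4, 5, 3  # Iris has 4 features and 3 classes; 5 hidden is arbitrary
    dim = vector_size(n_in, n_hidden, n_out)
    candidate = [random.uniform(-1.5, 1.5) for _ in range(dim)]
    sample = [([5.1, 3.5, 1.4, 0.2], [1, 0, 0])]  # one Iris-shaped record
    print(dim, fitness(candidate, sample, n_in, n_hidden, n_out))
```

A metaheuristic then only ever sees a vector of length `vector_size(...)` and a number to minimize, which is what lets the same search update weights and activation parameters together.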

![METAHEURISTICS • Artificial Bee Colony (Karaboga, 2005) is a metaheuristic algorithm inspired by the foraging behavior of honey bee swarms. Food source positions are updated by the artificial bees in an iterative fashion. • Particle Swarm Optimization (Eberhart and Kennedy, 1995) is a population-based metaheuristic algorithm that imitates the foraging behavior of swarms. It relies on velocity and position updates of the particles in the swarm. • Differential Evolution (Storn and Price, 1995) is an evolutionary optimization algorithm (operators: selection and crossover).](https://image.slidesharecdn.com/varunhis2014-180403112502/75/Simultaneous-optimization-of-neural-network-weights-and-active-nodes-using-metaheuristics-9-2048.jpg)
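To make the Differential Evolution entry concrete, here is a minimal sketch of the classic DE/rand/1/bin scheme of Storn and Price. Besides the selection and crossover named on the slide, it also uses DE's difference-vector mutation. F = 0.5, CR = 0.9, and the population size are common textbook choices, not the paper's settings; the [-1.5, 1.5] initialization range is borrowed from the weight-initialization range on the results slide, and `differential_evolution` is a hypothetical helper name. It minimizes any function of a real vector, so a network's training error over a flattened weight vector can be passed in as `f`.

```python
import random

def differential_evolution(f, dim, bounds=(-1.5, 1.5), pop_size=20, F=0.5, CR=0.9,
                           generations=200, seed=None):
    """Minimize f over real vectors of length dim with DE/rand/1/bin.

    Returns (best_vector, best_cost). bounds only set the initial sampling range;
    later candidates are not clipped.
    """
    if pop_size < 4:
        raise ValueError("DE/rand/1 needs at least 4 individuals")
    rng = random.Random(seed)
    lo, hi = bounds
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    cost = [f(ind) for ind in pop]
    for _ in range(generations):
        next_pop, next_cost = pop[:], cost[:]
        for i in range(pop_size):
            # Mutation: three distinct individuals, all different from the target i
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [pop[a][d] + F * (pop[b][d] - pop[c][d]) for d in range(dim)]
            # Binomial crossover; index j_rand guarantees at least one mutant gene
            j_rand = rng.randrange(dim)
            trial = [mutant[d] if (d == j_rand or rng.random() < CR) else pop[i][d]
                     for d in range(dim)]
            # Greedy selection: the trial replaces the target if it is no worse
            t_cost = f(trial)
            if t_cost <= cost[i]:
                next_pop[i], next_cost[i] = trial, t_cost
        pop, cost = next_pop, next_cost  # generational replacement
    best = min(range(pop_size), key=lambda k: cost[k])
    return pop[best], cost[best]

if __name__ == "__main__":
    sphere = lambda v: sum(x * x for x in v)  # toy objective with minimum 0 at the origin
    best, best_cost = differential_evolution(sphere, dim=3, seed=1)
    print(best, best_cost)
```

ABC and PSO fit the same interface (a cost function over a flat real vector); they differ only in how new candidate vectors are generated from the current population.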

![RESULTS – CLASSICAL ALGORITHM FOR WEIGHTS OPTIMIZATION. SigFix and TanhFix denote an activation function with fixed parameters, where only the synaptic weights are optimized. Default values: λ = 1 and θ = 0. Weight initialization: [-1.5, 1.5]](https://image.slidesharecdn.com/varunhis2014-180403112502/75/Simultaneous-optimization-of-neural-network-weights-and-active-nodes-using-metaheuristics-11-2048.jpg)
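In the fixed-activation baseline, only the weights are trained while λ and θ stay at their defaults. A minimal sketch of that setup, assuming the sigmoid form 1/(1 + e^(-λ(x - θ))) and, for brevity, a single sigmoid neuron trained by gradient descent on squared error rather than a full multilayer backpropagation network. The learning rate, epoch count, OR toy data, and the names `sig_fix`, `train_weights_only`, and `predict` are hypothetical; only λ = 1, θ = 0, and the [-1.5, 1.5] initialization range come from the slide.

```python
import math
import random

LAM, THETA = 1.0, 0.0  # fixed activation parameters (slide defaults); never updated

def sig_fix(x):
    # Assumed SigFix form: logistic sigmoid with slope LAM and threshold THETA
    z = max(-500.0, min(500.0, LAM * (x - THETA)))  # clamp so math.exp cannot overflow
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, x):
    return sig_fix(sum(wi * xi for wi, xi in zip(w, x)) + w[-1])  # w[-1] is the bias

def train_weights_only(data, epochs=2000, lr=0.5, seed=0):
    """Online gradient descent on one neuron's weights; LAM and THETA stay fixed."""
    rng = random.Random(seed)
    n = len(data[0][0])
    w = [rng.uniform(-1.5, 1.5) for _ in range(n + 1)]  # slide's init range; last = bias
    for _ in range(epochs):
        for x, t in data:
            y = predict(w, x)
            # dE/dnet for E = 0.5 * (y - t)^2, using d sig_fix / d net = LAM * y * (1 - y)
            delta = (y - t) * LAM * y * (1.0 - y)
            for i, xi in enumerate(x):
                w[i] -= lr * delta * xi
            w[-1] -= lr * delta
    return w

if __name__ == "__main__":
    or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]  # toy OR problem
    w = train_weights_only(or_data)
    print([round(predict(w, x), 3) for x, _ in or_data])
```

A TanhFix variant would replace the activation with `math.tanh(LAM * (x - THETA))` and the derivative term with `LAM * (1 - y * y)`; in the simultaneous approach, LAM and THETA would instead become per-node search variables.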