The document discusses a project focused on real-time hand gesture recognition for controlling an Arduino robot, highlighting its relevance in the fields of IoT, robotics, and automation. It details the design, functioning, and testing of a gesture-controlled rover that allows users to interact with the robot wirelessly using predefined hand gestures for various commands. The paper also outlines the applications of this technology across diverse industries and its potential for future expansion and enhancement.

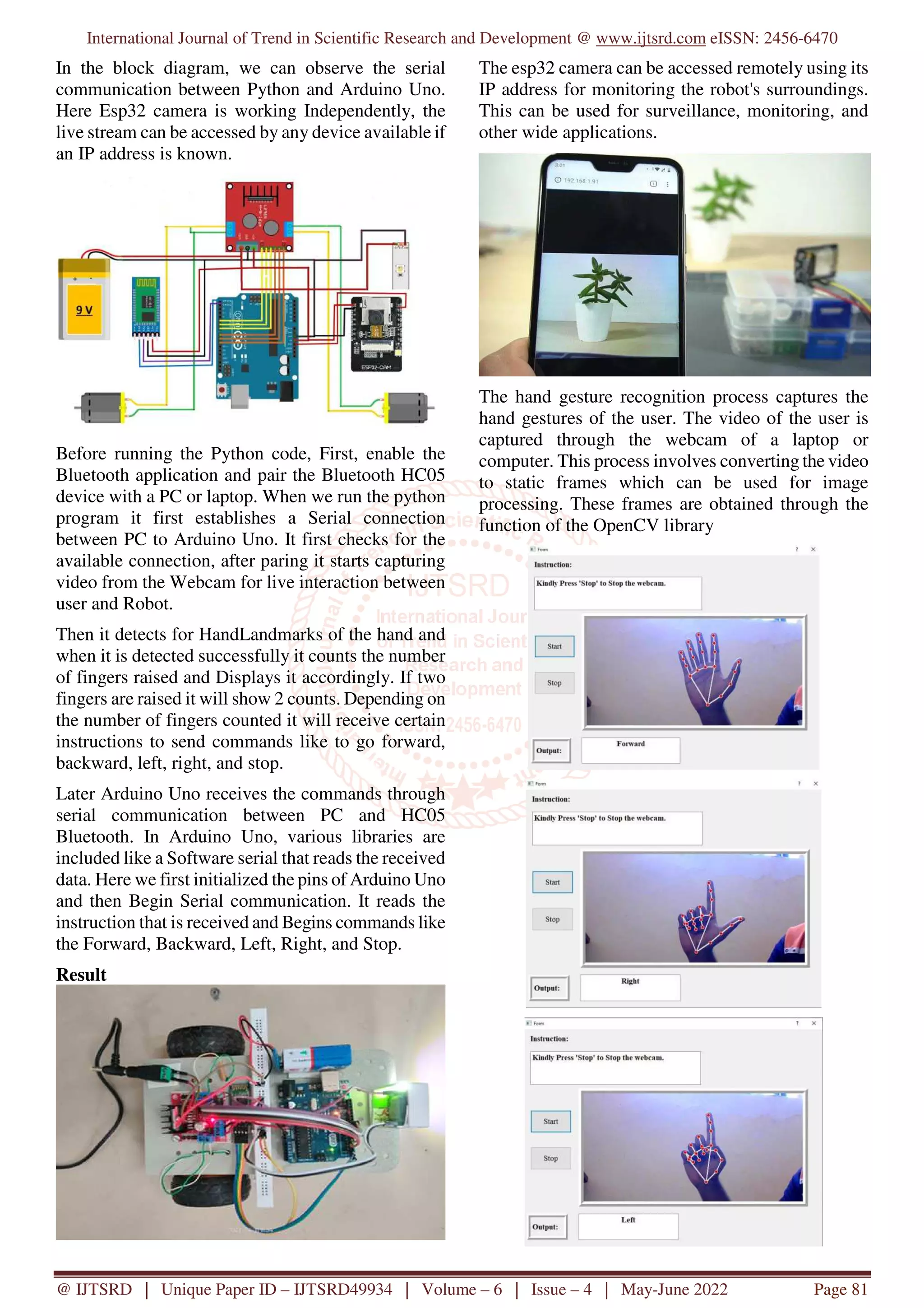

![International Journal of Trend in Scientific Research and Development @ www.ijtsrd.com eISSN: 2456-6470 @ IJTSRD | Unique Paper ID – IJTSRD49934 | Volume – 6 | Issue – 4 | May-June 2022 Page 80
wireless connectivity and Bluetooth support for easy data transmission, this rover can be monitored and controlled from multiple devices. The camera module captures real-time footage and streams it to remote users through an IP address link, allowing live tracking of the environment surrounding the rover. Motor control provides maneuverability, and the Arduino functions as the brain of the rover. To instruct the rover, we use serial communication to coordinate tasks between the Arduino and the Python program.
Literature Survey: A great deal of research has been done on recognizing hand gestures efficiently, and systems have been developed for robotic control, gaming, and many other applications. Rafiqul Zaman Khan et al. [2] discussed the issues in hand gesture recognition and reviewed the literature on the different techniques used for it. R. Pradipa et al. [4] analyzed different hand gesture recognition algorithms and described their advantages and disadvantages. A computer vision-based approach to gesture recognition commonly includes four phases: image acquisition, pre-processing, feature extraction, and classification, as shown in Fig. 1. First, an image of the user making a gesture is captured through a webcam and pre-processed. Then the hand region is extracted and classified as a particular gesture [5]. F. Arce et al. [6] illustrated the use of a three-axis accelerometer to recognize hand gestures.
Proposed System: The system hardware includes an Arduino Uno microcontroller, DC motors, an L298N motor driver, an HC-05 Bluetooth module, an LED, and an ESP32-CAM. The ESP32-CAM is a small, low-power camera module based on the ESP32. It is widely used in IoT applications such as wireless video monitoring, Wi-Fi image upload, and QR identification. A battery powers everything in the project, from the Arduino to the motors. The system software includes the Arduino IDE and a Python IDE (PyCharm), along with PyQt5 Designer (a GUI toolkit) for designing the user interface.
Libraries Used: pySerial, OpenCV, Matplotlib, NumPy, cvlib, urllib, and the SoftwareSerial library.
Mediapipe: MediaPipe is an open-source framework from Google for building machine learning pipelines. The framework is well suited to cross-platform development, since it is built using statistical data. It is also multimodal and can be applied to a variety of audio and video inputs.
Methodology:
A. Hand gesture recognition: The proposed system performs real-time hand gesture recognition using various image processing techniques. The overall architecture of the hand gesture recognition system using MediaPipe is shown in the figure.
B. System Architecture](https://image.slidesharecdn.com/13real-timehandgesturerecognitionbasedcontrolofarduinorobot-220803105246-70f23353/75/Real-Time-Hand-Gesture-Recognition-Based-Control-of-Arduino-Robot-2-2048.jpg)
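
The page above describes recognizing gestures with MediaPipe and passing instructions to the Arduino over serial communication, but it does not give the gesture rules or the command format. The following is a minimal sketch of one way this could work, not the authors' implementation. It assumes the standard 21-point MediaPipe Hands landmark layout (fingertips at indices 8, 12, 16, 20 and their PIP joints at 6, 10, 14, 18), an upright hand, and normalized image coordinates in which y grows downward. The names `count_raised_fingers`, `send_command`, and the `COMMANDS` table with its one-byte codes are hypothetical.

```python
import io
from typing import BinaryIO, Sequence, Tuple

# (fingertip, PIP joint) landmark indices for the index, middle, ring, and
# pinky fingers in the MediaPipe Hands layout. The thumb is skipped to keep
# the rule independent of left/right handedness.
FINGER_JOINTS = ((8, 6), (12, 10), (16, 14), (20, 18))

# Hypothetical mapping: number of raised fingers -> one-byte rover command.
# Zero to four fingers gives five gestures; the byte codes are assumptions.
COMMANDS = {0: b"S", 1: b"F", 2: b"B", 3: b"L", 4: b"R"}

Landmark = Tuple[float, float]  # normalized (x, y); y increases downward


def count_raised_fingers(landmarks: Sequence[Landmark]) -> int:
    """Count fingers whose tip lies above its PIP joint in the image."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    return sum(
        1 for tip, pip in FINGER_JOINTS if landmarks[tip][1] < landmarks[pip][1]
    )


def send_command(port: BinaryIO, landmarks: Sequence[Landmark]) -> bytes:
    """Write the command byte for the detected gesture to a serial-like port."""
    command = COMMANDS[count_raised_fingers(landmarks)]
    port.write(command)
    return command


if __name__ == "__main__":
    # Stand-in for a real serial port; any object with write(bytes) works.
    fake_port = io.BytesIO()
    hand = [(0.5, 0.5)] * 21
    hand[8] = (0.5, 0.2)  # index fingertip raised above its PIP joint
    print(send_command(fake_port, hand))
```

In a real setup, `port` would be an opened pySerial `serial.Serial` object, and `landmarks` would be built from the `x` and `y` fields of the landmarks that MediaPipe returns for each frame. Keeping the classification separate from the I/O makes the gesture rule easy to test without a camera or a rover.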

![gestures in virtual reality in addition to controlling a PC. The Leap Motion controller is a USB device that observes an area of about one meter using two IR camera sensors and three infrared LEDs. A hand tracking application developed by ManoMotion recognizes gestures in three dimensions using just a smartphone camera (on both Android and iOS) and can be applied in VR and AR environments. The use cases for this technology include IoT devices, VR gaming, and interaction with virtual simulations.
Consumer electronics: Home automation is another vast field within the consumer electronics domain in which gesture recognition can be used for various applications. uSens has developed hardware and software that let smart TVs sense finger movements and hand gestures. Gestoos is an AI platform in which gestures can be created and assigned by a smartphone or another device, and one gesture can be used to trigger various commands. It also offers touchless control over lighting and audio systems. The consumer market is open to trying new HMI technologies, and hand gesture recognition can be seen as an evolution from touchscreens. Demand for smoother means of interacting with devices, as well as concern for driver safety, is pushing the adoption of hand gesture recognition (HGR).
Future scope: Only five hand gestures have been considered here. The number of gestures can be extended by adding different algorithms, but at the cost of increased computation. The OpenCV library is preferred here because it is suited to real-time use and executes quickly. The approach may be used on a variety of robotic systems, with a set of gesture commands appropriate to each system. The robot can also be designed to perform various other tasks, with gestures designed accordingly. The algorithm can be improved further to work in various lighting conditions. Its performance will be analyzed by taking images of gestures under different conditions, such as non-uniform backgrounds and poor lighting.
Conclusion: The proposed system was implemented using a webcam or built-in camera to detect hand gestures. The main advantage of the system is that it does not require any physical contact and provides a natural way of communicating with robots. The algorithm implementation does not require any additional hardware. The robot's movement is controlled using the five implemented gestures, and the Arduino robot moves in the corresponding direction by means of wheels attached to the DC motors. The proposed system can be extended further for a wide range of applications.
References:
[1] T. Jha, B. Singh, A. Sharma, S. Sharma and R. Kumar, "Real Time Hand Gesture Recognition for Robotic Control," 2018 Second International Conference on Green Computing and Internet of Things (ICGCIoT), 2018, pp. 371-375, doi: 10.1109/ICGCIoT.2018.8752966.
[2] Rafiqul Zaman Khan and Noor Adnan Ibraheem, "Hand Gesture Recognition: a Literature Review," International Journal of Artificial Intelligence & Applications (IJAIA), July 2012.
[3] N. Mohamed, M. B. Mustafa and N. Jomhari, "A Review of the Hand Gesture Recognition System: Current Progress and Future Directions," IEEE Access, vol. 9, pp. 157422-157436, 2021, doi: 10.1109/ACCESS.2021.3129650.
[4] R. Pradipa and S. N. Kavitha, "Hand Gesture Recognition - Analysis of Various Techniques, Methods and Their Algorithms," 2014.
[5] Ebrahim Aghajari and Damayanti Gharpure, "Real Time Vision-Based Hand Gesture Recognition for Robotic Application," International Journal, vol. 4, no. 3, 2014.
[6] F. Arce and J. M. G. Valdez, "Accelerometer-Based Hand Gesture Recognition Using Artificial Neural Networks," Soft Computing for Intelligent Control and Mobile Robotics, Studies in Computational Intelligence, 2011.
[7] J. Hossain Gourob, S. Raxit, and A. Hasan, "A Robotic Hand: Controlled With Vision-Based Hand Gesture Recognition System," 2021 International Conference on Automation, Control and Mechatronics for Industry 4.0 (ACMI), 2021, pp. 1-4, doi: 10.1109/ACMI53878.2021.9528192.
[8] S. A. Shaikh, R. Gupta, I. Shaikh, and J. Borade, "Hand Gesture Recognition Using Open CV," 2016.
[9] Joseph Howse, OpenCV Computer Vision with Python, Packt Publishing, Birmingham, 2013.
[10] Sudhakar Kumar, Manas Ranjan Das, Rajesh Kushalkar, Nirmala Venkat, Chandrashekhar Gourshete, and Kannan M Moudgalya, Microcontroller Programming with Arduino and Python, 2021.
[11] Google, MP, https://ai.googleblog.com/2019/08/on-device-real-time-hand-tracking-with.html.
[12] https://learnopencv.com/introduction-to-mediapipe](https://image.slidesharecdn.com/13real-timehandgesturerecognitionbasedcontrolofarduinorobot-220803105246-70f23353/75/Real-Time-Hand-Gesture-Recognition-Based-Control-of-Arduino-Robot-5-2048.jpg)