This document presents the first analysis and taxonomy of dynamic and streaming graph processing systems, highlighting their architectural differences and performance aspects. It addresses various concepts such as dynamic, temporal, online, and time-evolving graphs, and discusses the recent theoretical advancements that could enhance practical frameworks. The work aims to guide architects and developers by categorizing and comparing different graph streaming frameworks and their corresponding workloads and challenges.

![1 Practice of Streaming Processing of Dynamic Graphs: Concepts, Models, and Systems Maciej Besta1, Marc Fischer2, Vasiliki Kalavri3, Michael Kapralov4, Torsten Hoefler1 1Department of Computer Science, ETH Zurich 2PRODYNA (Schweiz) AG; 3Department of Computer Science, Boston University 4School of Computer and Communication Sciences, EPFL Abstract—Graph processing has become an important part of various areas of computing, including machine learning, medical applications, social network analysis, computational sciences, and others. A growing amount of the associated graph processing workloads are dynamic, with millions of edges added or removed per second. Graph streaming frameworks are specifically crafted to enable the processing of such highly dynamic workloads. Recent years have seen the development of many such frameworks. However, they differ in their general architectures (with key details such as the support for the concurrent execution of graph updates and queries, or the incorporated graph data organization), the types of updates and workloads allowed, and many others. To facilitate the understanding of this growing field, we provide the first analysis and taxonomy of dynamic and streaming graph processing. We focus on identifying the fundamental system designs and on understanding their support for concurrency, and for different graph updates as well as analytics workloads. We also crystallize the meaning of different concepts associated with streaming graph processing, such as dynamic, temporal, online, and time-evolving graphs, edge-centric processing, models for the maintenance of updates, and graph databases. Moreover, we provide a bridge with the very rich landscape of graph streaming theory by giving a broad overview of recent theoretical related advances, and by discussing which graph streaming models and settings could be helpful in developing more powerful streaming frameworks and designs. We also outline graph streaming workloads and research challenges. F 1 INTRODUCTION Analyzing massive graphs has become an important task. Example applications are investigating the Internet struc- ture [46], analyzing social or neural relationships [25], or capturing the behavior of proteins [73]. Efficient processing of such graphs is challenging. First, these graphs are large, reaching even tens of trillions of edges [57], [155]. Second, the graphs in question are dynamic: new friendships appear, novel links are created, or protein interactions change. For example, 500 million new tweets in the Twitter social net- work appear per day, or billions of transactions in retail transaction graphs are generated every year [13]. Graph streaming frameworks such as STINGER [85] or Aspen [71] emerged to enable processing and analyzing dy- namically evolving graphs. Contrarily to static frameworks such as Ligra [108], [209], such systems execute graph an- alytics algorithms (e.g., PageRank) concurrently with graph updates (e.g., edge insertions). Thus, these frameworks must tackle unique challenges, for example effective modeling and storage of dynamic datasets, efficient ingestion of a stream of graph updates concurrently with graph queries, or support for effective programming model. In this work, we present the first taxonomy and analysis of such system aspects of the streaming processing of dynamic graphs. Moreover, we crystallize the meaning of different con- cepts in streaming and dynamic graph processing. We in- vestigate the notions of temporal, time-evolving, online, and dynamic graphs. We also discuss the differences between graph streaming frameworks and the edge-centric engines, as well as a related class of graph database systems. We also analyze relations between the practice and the theory of streaming graph processing to facilitate incorpo- rating recent theoretical advancements into the practical setting, to enable more powerful streaming frameworks. There exist different related theoretical settings, such as streaming graphs [167] or dynamic graphs [43] that come with different goals and techniques. Moreover, each of these set- tings comes with different models, for example the dynamic graph stream model [130] or the semi-streaming model [84]. These models assume different features of the processed streams, and they are used to develop provably efficient streaming algorithms. We analyze which theoretical settings and models are best suited for different practical scenarios, providing guidelines for architects and developers on what concepts could be useful for different classes of systems. Next, we outline models for the maintenance of updates, such as the edge decay model [235]. These models are independent of the above-mentioned models for developing streaming algorithms. Specifically, they aim to define the way in which edge insertions and deletions are considered for updating different maintained structural graph proper- ties such as distances between vertices. For example, the edge decay model captures the fact that edge updates from the past should gradually be made less relevant for the current status of a given structural graph property. Finally, there are general-purpose dataflow systems such as Apache Flink [54] or Differential Dataflow [168]. We discuss the support for graph processing in such designs. In general, we provide the following contributions: arXiv:1912.12740v3 [cs.DC] 11 Mar 2021](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-1-2048.jpg)

![2 n: number of vertices m: number of edges d: maximum graph degree 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 1 1 0 0 0 1 0 0 0 0 0 0 0 0 1 1 0 1 0 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 1 1 0 0 0 1 0 0 0 0 1 0 0 0 0 1 1 1 0 0 0 1 1 0 0 0 0 0 1 1 0 0 1 0 0 1 1 0 0 0 1 0 1 0 0 1 1 0 1 1 0 0 1 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 0 0 0 1 0 0 0 1 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 1 0 0 0 0 0 1 1 1 1 0 1 1 1 1 1 0 1 n ... 1 ... n 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 1 n ... 1 ∅ 11 12 11 12 10 9 10 11 12 16 9 10 12 14 16 16 6 7 1116 5 6 7 1112 2 3 6 9 10 16 14 3 4 6 7 1016 16 15 7 1116 16 13 6 7 8 9 11 13 12 15 14 d ... ... Number of tuples: 2 3 4 5 6 7 16 11 11 12 10 9 10 11 12 16 9 10 12 14 16 15 3 12 6 6 6 6 7 7 7 7 ... 2 3 4 5 6 7 16 11 11 12 10 9 10 11 12 16 9 10 12 14 16 15 3 12 6 6 6 6 7 7 7 7 1 ... 2m (undirected), m (directed) 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 1 n ... Neighborhoods can be sorted or unsorted ... 1 2m or m ... An n x n matrix Unweighted graph: a cell is one bit Pointers from vertices to their neighborhoods Neighbor- hoods contain records with vertex IDs, linked with pointers Weighted graph: a cell is one integer Pointers from vertices to their neighborhoods 2m or m One tuple corresponds to one edge Offset array is optional Adjacency Matrix (AM) Adj. List (AL) & CSR Edge List (sorted, unsorted) INPUT GRAPH: No offset array in unsorted edge list 7 9 Adjacency List CSR Neighbor- hoods are contiguous Remarks on enabling dynamic updates in a given representation: O(1) O(n ) 2 Used approach: compression to limit storage overheads O(d) + O(1) O(log d) + O(d) O(m) + O(n) O(m) + O(m) Used approach: neighborhoods formed by linked lists of contiguous chunks of edges AL CSR Add or delete edge: Size: finding edge edge removal Tradeoffs for edge lists are similar to those for AL or CSR AM Add or delete edge: Size: AL CSR edge data pointers + + ( ) ( ) 1 2 3 11 12 13 14 15 16 1 n ... ∅ 11 12 11 2 3 6 9 10 16 14 3 4 6 7 1016 16 15 7 1116 16 13 6 7 8 9 11 13 12 15 14 A block, size (example) = 3 Blocking (within a neighborhood) Blocked CSR Blocks form a linked list 1 2 3 11 12 13 14 15 16 1 n ... ∅ 11 12 11 2 3 6 9 10 16 14 3 4 6 7 1016 16 15 7 1116 16 13 6 7 8 9 11 13 12 15 14 Empty space preserved at the end of each block, to accelerate edge inserts Gaps (within a neighborhood) Blocked CSR Block size determines the tradeoff between locality and ease of updates Blocking (across neighborhoods) Small neighborhoods are stored in the same block in memory (e.g. a page) to speed up some read queries 1 2 3 11 12 13 14 15 16 1 n ... ∅ 11 12 11 2 3 6 9 10 16 14 3 4 6 7 1016 16 15 7 1116 16 13 6 7 8 9 11 13 12 15 14 Blocked CSR Selected popular optimizations related to CSR (more details: Table 2, Section 4) Used in: STINGER, LLAMA, faimGraph, LiveGraph, ... Used in: LiveGraph, Sha et al. Used in: Concerto, Hornet Fig. 1: Illustration of fundamental graph representations (Adjacency Matrix, Adjacency List, Edge List, CSR) and remarks on their usage in dynamic settings. • We crystallize the meaning of different concepts in dy- namic and streaming graph processing, and we analyze the connections to the areas of graph databases and to the theory of streaming and dynamic graph algorithms. • We provide the first taxonomy of graph streaming frameworks, identifying and analyzing key dimensions in their design, including data models and organiza- tion, concurrent execution, data distribution, targeted architecture, and others. • We use our taxonomy to survey, categorize, and com- pare over graph streaming frameworks. • We discuss in detail the design of selected frameworks. Complementary Surveys and Analyses We provide the first taxonomy and survey on general streaming and dynamic graph processing. We complement related surveys on the theory of graph streaming models and algorithms [6], [167], [183], [240], analyses on static graph processing [23], [39], [75], [110], [166], [207], and on general streaming [129]. Finally, only one prior work summarized types of graph updates, partitioning of dynamic graphs, and some chal- lenges [225]. 2 BACKGROUND AND NOTATION We first present concepts used in all the sections. We sum- marize the key symbols in Table 1. G = (V, E) An unweighted graph; V and E are sets of vertices and edges. w(e) The weight of an edge e = (u, v). n, m Numbers of vertices and edges in G; |V | = n, |E| = m. Nv The set of vertices adjacent to vertex v (v’s neighbors). dv, d The degree of a vertex v, the maximum degree in a graph. TABLE 1: The most important symbols used in the paper. Graph Model We model an undirected graph G as a tuple (V, E); V = {v1, ..., vn} is a set of vertices and E = {e1, ..., em} ⊆ V × V is a set of edges; |V | = n and |E| = m. If G is directed, we use the name arc to refer to an edge with a direction. Nv denotes the set of vertices adjacent to vertex v, dv is v’s degree, and d is the maximum degree in G. If G is weighted, it is modeled by a tuple (V, E, w). Then, w(e) is the weight of an edge e ∈ E. Graph Representations We also summarize fundamen- tal static graph representations; they are used as a basis to develop dynamic graph representations in different frame- works. These are the adjacency matrix (AM), the adjacency](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-2-2048.jpg)

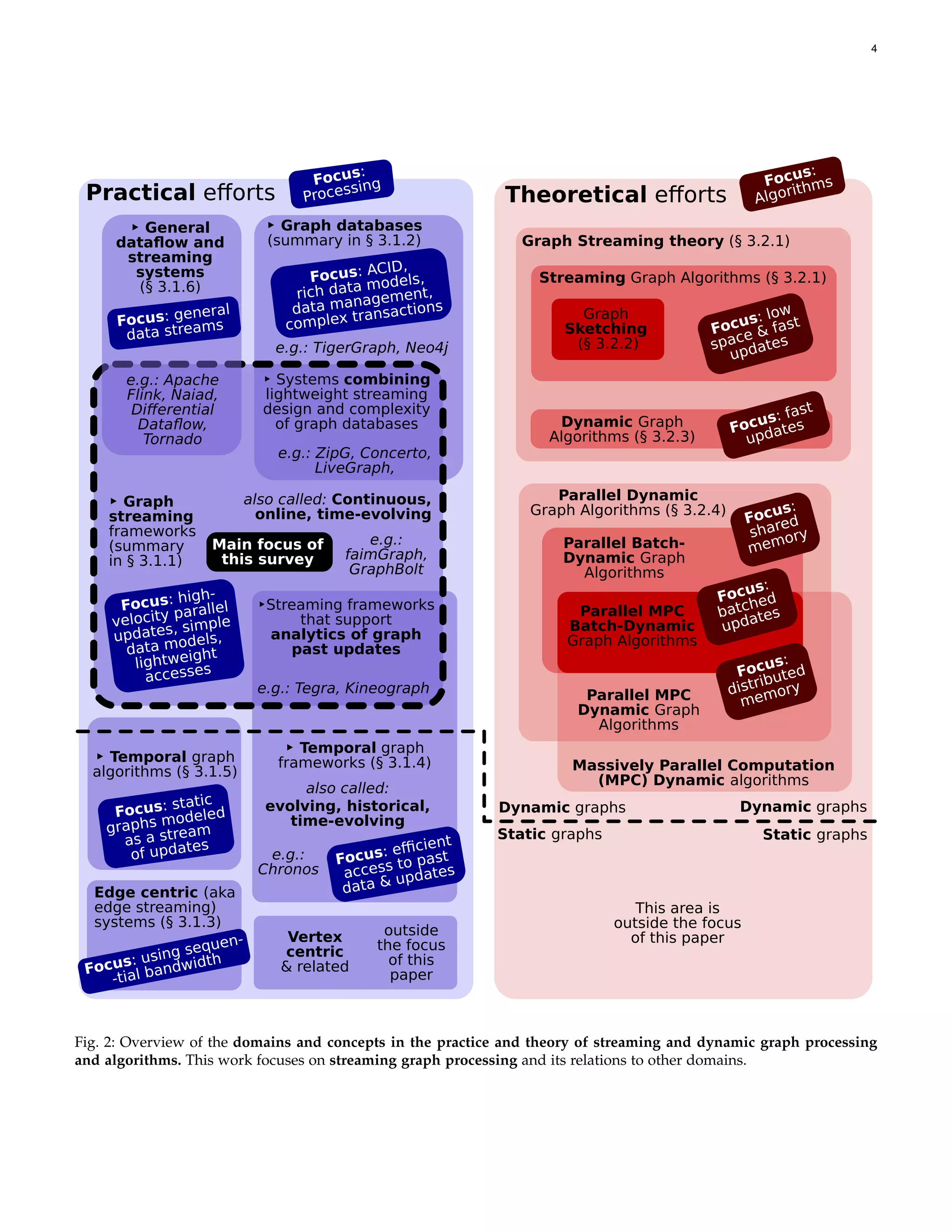

![3 list (AL), the edge list (EL), and the Compressed Sparse Row (CSR, sometimes referred to as Adjacency Array [49])1 . We illustrate these representations and we provide remarks on their dynamic variants in Figure 1. In AM, a matrix M ∈ {0, 1}n,n determines the connectivity of vertices: Mu,v = 1 ⇔ (u, v) ∈ E. In AL, each vertex u has an associ- ated adjacency list Au. This adjacency list maintains the IDs of all vertices adjacent to u. We have v ∈ Au ⇔ (u, v) ∈ E. AM uses O n2 space and can check connectivity of two vertices in O (1) time. AL requires O (n + m) space and it can check connectivity in O (|Au|) ⊆ O (d) time. EL is similar to AL in the asymptotic time and space complexity as well as the general design. The main difference is that each edge is stored explicitly, with both its source and destination vertex. In AL and EL, a potential cause for inefficiency is scanning all edges to find neighbors of a given vertex. To alleviate this, index structures are employed [42]. Finally, CSR resembles AL but it consists of n contiguous arrays with neighborhoods of vertices. Each array is usually sorted by vertex IDs. CSR also contains a structure with offsets (or pointers) to each neighborhood array. Graph Accesses We often distinguish between graph queries and graph updates. A graph query (also called a read) may perform some computation on a graph and it returns information about the graph without modifying its struc- ture. Such query can be local, also referred to as fine (e.g., accessing a single vertex or edge) or global (e.g., a PageRank analytics computation returning ranks of vertices). A graph update, also called a mutation, modifies the graph structure and/or attached labels or values (e.g., edge weights). 3 CLARIFICATION OF CONCEPTS AND AREAS The term “graph streaming” has been used in different ways and has different meanings, depending on the context. We first extensively discuss and clarify these meanings, and we use this discussion to precisely illustrate the scope of our taxonomy and analyses. We illustrate all the considered concepts in Figure 2. To foster developing more powerful and versatile systems for dynamic and streaming graph processing, we also summarize theoretical concepts. 3.1 Applied Dynamic and Streaming Graph Processing We first outline the applied aspects and areas of dynamic and streaming graph processing. 3.1.1 Streaming, Dynamic, and Time-Evolving Graphs Many works [71], [79] use a term “streaming” or “streaming graphs” to refer to a setting in which a graph is dynamic [202] (also referred to as time-evolving [122], continuous [70], or online [87]) and it can be modified with updates such as edge insertions/deletions. This setting is the primary focus of this survey. In the work, we use “dynamic” to refer to the graph dataset being modified, and we reserve “streaming” to refer to the form of incoming graph accesses or updates. 3.1.2 Graph Databases and NoSQL Stores Graph databases [38] are related to streaming and dy- namic graph processing in that they support graph updates. 1 Some works use CSR to describe a graph representation where all neighborhoods form a single contiguous array [147]. In this work, we use CSR to indicate a representation where each neighborhood is contiguous, but not necessarily all of them together. Graph databases (both “native” graph database systems and NoSQL stores used as graph databases (e.g., RDF stores or document stores)) were described in detail in a recent work [38] and are beyond the main focus of this paper. However, there are numerous fundamental differences and similarities between graph databases and graph streaming frameworks, and we discuss these aspects in Section 7. 3.1.3 Streaming Processing of Static Graphs Some works [41], [181], [195], [242] use “streaming” (also referred to as edge-centric) to indicate a setting in which the input graph is static but its edges are processed in a stream- ing fashion (as opposed to an approach based on random accesses into the graph data). Example associated frame- works are X-Stream [195], ShenTu [155], RStream [229], and several FPGA designs [41]. Such designs are outside the main focus of this survey; some of them were described by other works dedicated to static graph processing [41], [75]. 3.1.4 Historical Graph Processing There exist efforts into analyzing historical (also referred to as – somewhat confusingly – temporal or [time]-evolving) graphs [50], [83], [92], [111]–[113], [140], [141], [154], [169], [170], [172], [173], [188], [191], [199], [213], [214], [219], [228], [234], [239]. As noted by Dhulipala et al. [71], these efforts differ from streaming/dynamic/time-evolving graph anal- ysis in that one stores all past (historical) graph data to be able to query the graph as it appeared at any point in the past. Contrar- ily, in streaming/dynamic/time-evolving graph processing, one focuses on keeping a graph in one (present) state. Additional snapshots are mainly dedicated to more efficient ingestion of graph updates, and not to preserving historical data for time-related analytics. Moreover, almost all works that focus solely on temporal graph analysis, for example the Chronos system [112], are not dynamic (i.e., they are offline): there is no notion of new incoming updates, but solely a series of past graph snapshots (instances). These ef- forts are outside the focus of this survey (we exclude these efforts, because they come with numerous challenges and design decisions (e.g., temporal graph models [239], tempo- ral algebra [172], strategies for snapshot retrieval [234]) that require separate extensive treatment, while being unrelated to the streaming and dynamic graph processing). Still, we describe concepts and systems that – while focusing on streaming processing of dynamic graphs, also enable keeping and processing historical data. One such example is Tegra [121]. 3.1.5 Temporal Graph Algorithms Certain works analyze graphs where edges carry timing information, e.g., the order of communication between en- tities [232], [233]. One method to process such graphs is to model them as a stream of incoming edges, with the arrival time based on temporal information attached to edges. Thus, while being static graphs, their representation is dynamic. Thus, we picture these schemes as being partially in the dynamic setting in Figure 2. These works come with no frameworks, and are outside the focus of our work. 3.1.6 General Dataflow and Streaming Systems General streaming and dataflow systems, such as Apache Flink [54], Naiad [177], Tornado [206], or Differential Dataflow [168], can also be used to process dynamic graphs.](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-3-2048.jpg)

![5 However, most of the dimensions of our taxonomy are not well-defined for these general purpose systems. Overall, these systems provide a very general programming model and impose no restrictions on the format of streaming updates or graph state that the users construct. Thus, in principle, they could process queries and updates concur- rently, support rich attached data, or even use transactional semantics. However, they do not come with pre-built fea- tures specifically targeting graphs. 3.2 Theory of Streaming and Dynamic Graphs We next proceed to outline concepts in the theory of dy- namic and streaming graph models and algorithms. Despite the fact that detailed descriptions are outside the scope of this paper, we firmly believe that explaining the associated general theoretical concepts and crystallizing their relations to the applied domain may facilitate developing more pow- erful streaming systems by – for example – incorporating efficient algorithms with provable bounds on their perfor- mance. In this section, we outline different theoretical areas and their focus. In general, in all the following theoret- ical settings, one is interested in maintaining (sometimes approximations to) a structural graph property of interest, such as connectivity structure, spectral structure, or shortest path distance metric, for graphs that are being modified by incoming updates (edge insertions and deletions). 3.2.1 Streaming Graph Algorithms In streaming graph algorithms [63], [84], one usually starts with an empty graph with no edges (but with a fixed set of vertices). Then, at each algorithm step, a new edge is inserted into the graph, or an existing edge is deleted. Each such algorithm is parametrized by (1) space complexity (space used by a data structure that maintains a graph being up- dated), (2) update time (time to execute an update), (3) query time (time to compute an estimate of a given structural graph property), (4) accuracy of the computed structural property, and (5) preprocessing time (time to construct the initial graph data structure) [44]. Different streaming models can introduce additional assumptions, for example the Sliding Window Model provides restrictions on the number of previous edges in the stream, considered for estimating the property [63]. The goal is to develop algorithms that minimize different pa- rameter values, with a special focus on minimizing the storage for the graph data structure. While space complexity is the main focus, significant effort is devoted to optimizing the runtime of streaming algorithms, specifically the time to process an edge update, as well as the time to recover the final solution (see, e.g., [150] and [134] for some recent developments). Typically the space requirement of graph streaming algorithms is O(n polylog n) (this is known as the semi-streaming model [84]), i.e., about the space needed to store a few spanning trees of the graph. Some recent works achieve ”truly sublinear” space o(n), which is sublinear in the number of vertices of the graph and is particularly good for sparse graphs [21], [22], [48], [81], [132], [133], [185]. The reader is referred to surveys on graph streaming algorithms [105], [167], [178] for more references. Applicability in Practical Settings Streaming algo- rithms can be used when there are hard limits on the max- imum space allowed for keeping the processed graph, as well as a need for very fast updates per edge. Moreover, one should bear in mind that many of these algorithms provide approximate outcomes. Finally, the majority of these algo- rithms assumes the knowledge of certain structural graph properties in advance, most often the number of vertices n. 3.2.2 Graph Sketching and Dynamic Graph Streams Graph sketching [11] is an influential technique for pro- cessing graph streams with both insertions and deletions. The idea is to apply classical sketching techniques such as COUNTSKETCH [171] or distinct elements sketch (e.g., HYPERLOGLOG [90]) to the edge incidence matrix of the input graph. Existing results show how to approximate the connectivity and cut structure [11], [15], spectral struc- ture [134], [135], shortest path metric [11], [136], or sub- graph counts [128], [130] using small sketches. Extensions to some of these techniques to hypergraphs were also pro- posed [106]. Some streaming graph algorithms use the notion of a bounded stream, i.e., the number of graph updates is bounded. Streaming and applying all such updates once is referred to as a single pass. Now, some streaming graph algorithms allow for multiple passes, i.e., streaming all edge updates more than once. This is often used to improve the approximation quality of the computed solution [84]. There exist numerous other works in the theory of streaming graphs. Variations of the semi-streaming model allow stream manipulations across passes, (also known as the W-Stream model [68]) or stream sorting passes (known as the Stream-Sort model [7]). We omit these efforts are they are outside the scope of this paper. 3.2.3 Dynamic Graph Algorithms In the related area of dynamic graph algorithms one is inter- ested in developing algorithms that approximate a combi- natorial property of the input graph of interest (e.g., connec- tivity, shortest path distance, cuts, spectral properties) under edge insertions and deletions. Contrarily to graph stream- ing, in dynamic graph algorithms one puts less focus on minimizing space needed to store graph data. Instead, the primary goal is to minimize time to conduct graph updates. This has led to several very fast algorithms that provide updates with amortized poly-logarithmic update time complexity. See [24], [43], [55], [76], [78], [91], [223] and references within for some of the most recent developments. Applicability in Practical Settings Dynamic graph al- gorithms can match settings where primary focus is on fast updates, without severe limitations on the available space. 3.2.4 Parallel Dynamic Graph Algorithms Many algorithms were developed under the parallel dy- namic model, in which a graph undergoes a series of incom- ing parallel updates. Next, the parallel batch-dynamic model is a recent development in the area of parallel dynamic graph algorithms [3], [4], [210], [221]. In this model, a graph is modified by updates coming in batches. A batch size is usually a function of n, for example log n or √ n. Updates from each batch can be applied to a graph in parallel. The motivation for using batches is twofold: (1) incorporating parallelism into ingesting updates, and (2) reducing the cost per update. The associated algorithms focus on minimizing](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-5-2048.jpg)

![6 time to ingest updates into the graph while accurately maintaining a given structural graph property. A variant [77] that combines the parallel batch-dynamic model with the Massively Parallel Computation (MPC) model [137] was also recently described. The MPC model is motivated by distributed frameworks such as MapRe- duce [67]. In this model, the maintained graph is stored on a certain number of machines (additionally assuming that the data in one batch fits into one machine). Each machine has a certain amount of space sublinear with respect to n. The main goal of MPC algorithms is to solve a given problem using O(1) communication rounds while minimizing the volume of data communicated between the machines [137]. Finally, another variant of the MPC model that ad- dresses dynamic graph algorithms but without considering batches, was also recently developed [119]. Applicability in Practical Settings Algorithms devel- oped in the above models may be well-suited for enhancing streaming graph frameworks as these algorithms explicitly (1) maximize the amount of parallelism by using the concept of batches, and (2) minimize time to ingest updates. 4 TAXONOMY OF FRAMEWORKS We identify a taxonomy of graph streaming frameworks. We offer a detailed analysis of concrete frameworks using the taxonomy in Section 5 and in Tables 2–3. Overall, the identified taxonomy divides all the associated aspects into six classes: ingesting updates (§ 4.1), historical data mainte- nance (§ 4.2), dynamic graph representation (§ 4.3), incremen- tal changes (§ 4.4), programming API and models (§ 4.5), and general architectural features (§ 4.6). Due to space constraints, we focus on the details of the system architecture and we only sketch the straightforward taxonomy aspects (e.g., whether a system targets CPUs or GPUs) and list2 them in § 4.6. 4.1 Architecture of Ingesting Updates The first core architectural aspect of any graph streaming framework are the details of ingesting incoming updates. 4.1.1 Concurrent Queries and Updates We start with the method of achieving concurrency between queries and updates (mutations). One such popular method is based on snapshots. Here, updates and queries are isolated from each other by making them execute on two different copies (snapshots) of the graph data. At some point, such snapshots are merged together. Depending on a system, the scope of data duplica- tion (i.e., only a part of the graph may be copied into a new snapshot) and the details of merging may differ. Second, one can use logging. The graph representation contains a dedicated data structure (a log) for keeping the incoming updates; queries are being processed in parallel. At some point, depending on system details, the logged updates are integrated into the main graph representation. In fine-grained synchronization, in contrast to snap- shots and logging (where updates are merged with the main graph representation during dedicated phases), updates are incorporated into the main dataset as soon as they arrive, often interleaved with queries, using synchronization proto- cols based on fine-grained locks and/or atomic operations. 2 More details are in the extended paper version (see the link on page 1) A variant of fine-grained synchronization is Differential Dataflow [168], where the ingestion strategy allows for concurrent updates and queries by relying on a combina- tion of logical time, maintaining the knowledge of updates (referred to as deltas), and progress tracking. Specifically, the differential dataflow design operates on collections of key-value pairs enriched with timestamps and delta values. It views dynamic data as additions to or removals from input collections and tracks their evolution using logical time. The Rust implementation of differential dataflow3 contains implementations of incremental operators that can be composed into a possibly cyclic dataflow graph to form complex, incremental computations that automatically up- date their outputs when their inputs change. Finally, as also noted in past work [71], a system may simply do not enable concurrency of queries and updates, and instead alternate between incorporating batches of graph updates and graph queries (i.e., updates are being applied to the graph structure while queries wait, and vice versa). This type of architecture may enable a high ratio of digesting updates as it does not have to resolve the problem of the consistency of graph queries running interleaved, concurrently, with updates being digested. However, it does not enable a concurrent execution of updates and queries. 4.1.2 Batching Updates and Queries A common design choice is to ingest updates, or resolve queries, in batches, i.e., multiple at a time, to amortize over- heads from ensuring consistency of the maintained graph. We distinguish this design choice in the taxonomy because of its widespread use. Moreover, we identify a popular optimization in which a batch of edges to be removed or inserted is first sorted based on the ID of adjacent vertices. This introduces a certain overhead, but it also facilitates par- allel ingestion of updates: updates associated with different vertices can be easier identified. 4.1.3 Transactional Support We distinguish systems that support transactions, under- stood as units of work that enable isolation between concur- rent accesses and correct recovery from potential failures. Moreover, some (but not all) systems ensure the ACID semantics of transactions. 4.2 Architecture of Historical Data Maintenance While we do not focus on systems solely dedicated to the off-line analysis of historical graph data, some streaming systems enable different forms of accessing/analyzing such data. 4.2.1 Storing Past Snapshots In general, a streaming system may enable storing past snapshots, i.e., consistent past views (instances) of the whole dataset. However, one rarely keeps the whole past graph instances in memory due to storage overheads. Two methods for maintaining such instances while minimizing storage requirements can be identified across different sys- tems. First, one can store updates together with timestamps to be able to derive a graph instance at a given moment in time. Second, one can keep differences (“deltas”) between past graph instances (instead of full instances). 3 https://github.com/TimelyDataflow/differential-dataflow](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-6-2048.jpg)

![7 4.2.2 Visibility of Past Graph Updates There are several ways in which the information about past updates can be stored. Most systems only maintain a “live” version of the graph, where information about the past updates is not maintained4 , in which all incoming graph updates are being incorporated into the structure of the maintained graph and they are all used to update or derive maintained structural graph properties. For example, if a user is interested in distances between vertices, then – in the snapshot model – the derived distances use all past graph updates. Formally, if we define the maintained graph at a given time t as Gt = (V, Et), then we have Et = {e | e ∈ E ∧ t(e) ≤ t}, where E are all graph edges and t(e) is the timestamp of e ∈ E [235]. Some streaming systems use the sliding window model, in which edges beyond certain moment in the past are being omitted when computing graph properties. Using the same notation as above, the maintained graph can be modeled as Gt,t0 = (V, Et,t0 ), where Et,t0 = {e | e ∈ E ∧ t ≤ t(e) ≤ t0 }. Here, t and t0 are moments in time that define the width of the sliding window, i.e., a span of time with graph updates that are being used for deriving certain query answers [235]. Both the snapshot model and the sliding window model do not reflect certain important aspects of the changing re- ality. The former takes into account all relationships equally, without distinguishing between the older and more re- cent ones. The latter enables omitting old relationships but does it abruptly, without considering the fact that certain connections may become less relevant in time but still be present. To alleviate these issues, the edge decay model was proposed [235]. In this model, each edge e (with a timestamp t(e) ≤ t) has an independent probability Pf (e) of being included in an analysis. Pf (e) = f(t − t(e)) is a non- decreasing decay function that determines how fast edges age. The authors of the edge decay model set f to be decreasing exponentially, with the resulting model being called the probabilistic edge decay model. 4.3 Architecture of Dynamic Graph Representation Another core aspect of a streaming framework is the used representation of the maintained graph. 4.3.1 Used Fundamental Graph Representations While the details of how each system maintains the graph dataset usually vary, the used representations can be grouped into a small set of fundamental types. Some frame- works use one of the basic graph representations (AL, EL, CSR, or AM) which are described in Section 2. No systems that we analyzed uses an uncompressed AM as it is inefficient with O(n2 ) space, especially for sparse graphs. Systems that use AM, for example GraphIn, focus on compression of the adjacency matrix [35], trying to mitigate storage and query overheads. Other graph representations are based on trees, where there is some additional hierarchical data structure imposed on the otherwise flat connectivity data; this hierarchical information is used to accelerate dynamic queries. Finally, frameworks constructed on top of more 4 This approach is sometimes referred to as the “snapshot” model. Here, the word “snapshot” means “a complete view of the graph, with all its updates”. This naming is somewhat confusing, as “snapshot” can also mean “a specific copy of the graph generated for concurrent processing of updates and queries”, cf. § 4.1. general infrastructure use a representation provided by the underlying system. We also consider whether a framework supports data distribution over multiple serves. Any of the above rep- resentations can be developed for either a single server or for a distributed-memory setting. Details of such distributed designs are system-specific. 4.3.2 Blocking Within and Across Neighborhoods In the taxonomy, we distinguish a common design choice in systems based on CSR or its variants. Specifically, one can combine the key design principles of AL and CSR by dividing each neighborhood into contiguous blocks (also referred to as chunks) that are larger than a single vertex ID (as in a basic AL) but smaller than a whole neighborhood (as in a basic CSR). This offers a tradeoff between flexible mod- ifications in AL and more locality (and thus more efficient neighborhood traversals) in CSR [193]. Now, this blocking scheme is applied within each single neighborhood. We also distinguish a variant where multiple neighborhoods are grouped inside one block. We will refer to this scheme as blocking across neighborhoods. An additional optimization in the blocking scheme is to pre-allocate some reserved space at the end of each such contiguous block, to offer some number of fast edge insertions that do not require block reallocation. All these schemes are pictured in Figure 1. 4.3.3 Supported Types of Vertex and Edge Data Contrarily to graph databases that heavily use rich graph models such as the Labeled Property Graph [16], graph streaming frameworks usually offer simple data models, focusing on the graph structure and not on rich data attached to vertices or edges. Still, different frameworks support basic additional vertex or edge data, most often weights. Next, in certain systems, both an edge and a vertex can have a type or an attached property. Finally, an edge can also have a timestamp that indicates the time of inserting this edge into the graph. A timestamp can also indicate a modification (e.g., an update of a weight of an existing edge). Details of such rich data are specific to each framework. 4.3.4 Indexing Structures One uses indexing structures to accelerate different queries. In our taxonomy, we distinguish indices that speed up queries related to the graph structure, rich data (i.e., vertex or edge properties or labels), and historic (temporal) aspects (e.g., indices for edge timestamps). 4.4 Architecture of Incremental Changes A streaming framework may support an approach called “incremental changes” for faster convergence of graph algo- rithms. Assume that a certain graph algorithm is executed and produces some results, for example page ranks of each vertex. Now, the key observation behind the incremental changes is that the subsequent graph updates may not necessarily result in large changes to the derived page rank values. Thus, instead of recomputing the ranks from scratch, one can attempt to minimize the scope of recomputation, resulting in “incremental” changes to the ranking results. In our taxonomy, we will distinguish between supporting incremental changes in the post-compute mode and in the live mode. In the former, an algorithm first finishes,](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-7-2048.jpg)

![8 then some graph mutations are applied, and afterwards the system may apply incremental changes to update the results of the algorithm. In the latter, both the mutations and the incremental changes may be applied during the execution of the algorithm, to update its outcomes as soon as possible. 4.5 Supported Programming API The final part of our taxonomy is the supported program- ming API. We identify two key classes of such APIs. First, a framework may offer a selection of functions for modifying the maintained graph; such API may consist of simple basic functions (e.g., insert an edge) or complex ones (e.g., merge two graphs). Here, we additionally identify APIs for triggered events taking place upon specific updates, and for accessing and manipulating the logged graph updates (that await being ingested into the graph representation). The second key API that a framework may support consists of functions for running graph computations on top of the maintained graph. Here, we identify specific APIs for controlling graph algorithms (e.g., PageRank) processing the main (i.e., “live”) graph snapshot, or for controlling such computations running on top of past snapshots. Moreover, our taxonomy includes an API for incremental processing of the outcomes of graph algorithms (cf. § 4.4). 4.6 General Architectural Features of Frameworks The general features are the location of the maintained graph data (e.g., main memory or GPU memory), whether it is distributed, what is the targeted hardware architecture (general CPUs or GPUs), and whether a system is general- purpose or is it developed specifically for graph analytics. 5 ANALYSIS OF FRAMEWORKS We now analyze existing frameworks using our taxonomy (cf. Section 4) in Tables 2 – 3, and in the following text. We also describe selected frameworks in more detail. We use symbols “–”, “˜”, and “é” to indicate that a given system offers a given feature, offers a given feature in a limited way, and does not offer a given feature, respectively5 . “?” indicates we were unable to infer this information based on the available documentation. 5.1 Analysis of Designs for Ingesting Updates We start with analyzing the method for achieving concur- rency between updates and queries. Note that, with queries, we mean both local (fine) reads (e.g., fetching a weight of a given edge), but also global analytics (e.g., running PageRank) that also do not modify the graph structure. First, most frameworks use snapshots. We observe that such frameworks have also some other snapshot-related design feature, for example Grace (uses snapshots also to implement transactions), GraphTau and Tegra (both support storing past snapshots), or DeltaGraph (harnesses Haskell’s feature to create snapshots). Second, a large group of frame- works use logging and fine-grained synchronization. In the latter case, the interleaving of updates and read queries is supported only with respect to fine reads (i.e., parallel 5 We encourage participation in this survey. In case the reader possesses additional information relevant for the tables, the authors would welcome the input. We also encourage the reader to send us any other information that they deem important, e.g., details of systems not mentioned in the current survey version. ingestion of updates while running global analytics such as PageRank are not supported in the considered systems). Furthermore, two interesting methods for efficient con- current ingestion of updates and queries have recently been proposed in the RisGraph system [86] and by Sha et al. [202]. The former uses scheduling of updates, i.e., the system uses fine-grained synchronization enhanced with a specialized scheduler that manipulates the ordering and timing of applying incoming updates to maximize through- put and minimize latency (different timings of applying updates may result in different performance penalties). In the latter, one overlaps the ingestion of updates with trans- ferring the information about queries (e.g., over PCIe). We observe that, while almost all systems use batching, only a few sort batches; the sorting overhead often exceeds benefits from faster ingestion. Next, only five frameworks support transactions, and four in total offer the ACID se- mantics of transactions. This illustrates that performance and high ingestion ratios are prioritized in the design of streaming frameworks over overall system robustness. Some frameworks that support ACID transactions rely with this respect on some underlying data store infrastructure: Sinfonia (for Concerto) and CouchDB (for the system by Mondal et al.). Others (Grace and LiveGraph) provide their own implementations of ACID. 5.2 Analysis of Support for Keeping Historical Data Our analysis shows that reasonably many systems (11) support keeping past data in some way. Yet, only a few offer more than simply keeping past updates with timestamps. Specifically, Kineograph, CelliQ, GraphTau, a system by Sha et al., and Tegra, fully support keeping past graph snapshots, as well as the sliding window model and vari- ous optimizations, such as maintaining indexing structures over historical data to accelerate fetching respective past instances. We discover that Tegra has a particularly rich set of features for analyzing historical data efficiently, ap- proaching in its scope offline temporal frameworks such as Chronos [112]. Another system with a rich set of such fea- tures is Kineograph, the only one to support the exponential decay model of the visibility of past updates. 5.3 Analysis of Graph Representations Most frameworks use some form of CSR. In certain cases, CSR is combined with an EL to form a dual representation; EL is often (but not exclusively) used in such cases as a log to store the incoming edges, for example in GraphOne. Certain other frameworks use AL, prioritizing the flexibility of graph updates over locality of accesses. Most frameworks based on CSR use blocking within neighborhoods (i.e., each neighborhood consists of a linked list of contiguous blocks (chunks)). This enables a tradeoff between the locality of accesses and time to perform up- dates. The smaller the chunks are, the easier is to update a graph, but simultaneously traversing vertex neighborhoods requires more random memory accesses. Larger chunks improve locality of traversals, but require more time to update the graph structure. Two frameworks (Concerto and Hornet) use blocking across neighborhoods. This may help in achieving more locality whenever processing many small neighborhoods that fit in a block.](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-8-2048.jpg)

![9 Reference D? Data location Arch. Con? B? sB? T? acid? P? L? S? D? Edge updates Vertex updates Remarks STINGER [79] é Main mem. CPU é – – é é ˜ (τ) – é é – (A/R) ˜∗ (A/R) ∗ Removal is unclear UNICORN [216] – Main mem. CPU é – é é é é – é é – (A/R) – (A/R) Extends IBM InfoSphere Streams [45] DISTINGER [85] – Main mem. CPU é – é é é é – é é – (A/R) – (A/R) Extends STINGER [79] cuSTINGER [103] é GPU mem. GPU∗ é – é é é é – é é – (A/R) – (A/R) Extends STINGER [79]. ∗ Single GPU. EvoGraph [200] é Main mem. GPU∗ é – é é é ˜ – é é – (A/R) – (A/R) Supports multi-tenancy to share GPU resources. ∗ Single GPU. Hornet [49] é GPU, main mem. GPU† é∗ – – é é é – é é – (A/R/U) – (A/R/U) ∗ Not mentioned. † Single GPU GraPU [204], [205] – Main mem., disk CPU é – é∗ é é é – é é – (A/R) é ∗ Batches are processed with non-straightforward schemes Grace [189] é Main mem. CPU – (s) – ? – – ˜† – é é – (A/R/U) – (A/R) † To implement transactions Kineograph [56] – Main mem. CPU – (s) – é – é – – – – – (A/U∗ ) – (A/U∗ ) ∗ Custom update functions are possible LLAMA [159] é Main mem., disk CPU – (s) – – é é – (∆) – é é – (A/R) – (A/R) — CellIQ [120] – Disk (HDFS) CPU – (s) ˜∗ é é é – – – é – (A/R) – (A/R) Extends GraphX [101] and Spark [237]. ∗ No details. GraphTau [122] – Main mem., disk CPU – (s)∗ – é é é – (∆) – – é – (A/R) – (A/R) Extends Spark. ∗ Offers more than simple snapshots. DeltaGraph [69] é Main mem. CPU – (s)∗ é é é é é – é é – (A/R) – (A/R) ∗ Relies on Haskell’s features to create snapshots GraphIn [201] é∗ Main mem. CPU ˜ (s) – é é é é† – é é ˜∗ (A/R) ˜∗ (A/R) ∗ Details are unclear. † Only mentioned Aspen [71] é Main mem., disk CPU – (s)∗ ? ? é é é – é é – (A/R) – (A/R) ∗ Focus on lightweight snapshots; enables serializability Tegra [121] – Main mem., disk CPU – (s) ? ? é é – (∆) ˜∗ – ? – (A/R) – (A/R) Extends Spark. ∗ Live updates are considered but outside core focus. GraphInc [51] – Main mem., disk CPU – (l)∗ ? ? é é é – é é – (A/R/U) – (A/R/U) Extends Apache Giraph [1]. ∗ Keeps separate storage for the graph structure and for Pregel computations, but no details are provided. ZipG [139] – Main mem. CPU – (l) ? ? é é ˜ (τ) – é é – (A/R/U) – (A/R/U) Extends Spark Succinct [5] GraphOne [147] é Main mem. CPU – (l) – – é é ˜ – é é – (A/R) – (A/R) Updates of weights are possible LiveGraph [243] é Main mem., disk CPU – (l) é na – – é – é é – (A/R/U) – (A/R/U) — Concerto [151] – Main mem. CPU – (f)∗ – é – – é – é é ˜ (A/U) ˜ (A/U) ∗ A two-phase commit protocol based on fine-grained atomics aimGraph [230] é GPU mem. GPU∗ ˜ (f)† – ? é é é – é é – (A/R) é ∗ Single GPU. † Only fine reads/updates are considered. faimGraph [231] é GPU, main mem. GPU∗ ˜ (f)† – – é é é – é é – (A/R) – (A/R) ∗ Single GPU. † Only fine reads/updates, using locks/atomics. GraphBolt [162] é Main mem. CPU ˜ (f)∗ – – é é é – é é – (A/R) – (A/R) Uses Ligra [209]. ∗ Fine edge updates are supported. RisGraph [86] é Main mem. CPU – (sc)∗ –† ? é é – – é é – (A/R) ˜ (A/R) ∗ Details in § 5.1. GPMA (Sha [202]) ˜∗ GPU mem. GPU∗ ˜ (o)† – ? é é é – – é – (A/R) é ∗ Multiple GPUs within one server. † Details in § 5.1. KickStarter [227]∗ – Main mem. CPU na∗ – na∗ na∗ na∗ na∗ – na∗ na∗ – (A/R) ? Uses ASPIRE [226]. ∗ It is a technique, not a full system. Mondal et al. [174] – Main mem.∗ CPU ˜† ?† ?† – – é – ?† ?† ˜† (A) ˜† (A) ∗ Uses CouchDB as backend [14], † Unclear (relies on CouchDB) iGraph [126] – Main mem. CPU ? – é é é é – é é ˜ (A/U) ˜ (A/U) Extends Spark Sprouter [2] – Main mem., disk CPU ? ? é é é é – é é ˜ (A) ? Extends Spark TABLE 2: Comparison of selected representative works. They are grouped by the method of achieving concurrency between queries and updates (mutations). Within each group, the systems are sorted by publication date. “D?” (distributed): does a design target distributed environments such as clusters, supercomputers, or data centers? “Data location”: the location of storing the processed dataset (“Main mem.”: main memory; a system is primarily in-memory). “Arch.”: targeted architecture. “Con?” (a method of achieving concurrent updates and queries): does a design support updates (e.g., edge insertions and removals) proceeding concurrently with queries that access the graph structure (e.g., edge lookups or PageRank computation). Whenever supported, we detail the method used for maintaining this concurrency: (s): snapshots, (l): logging, (f): fine-grained synchronization, (sc): scheduling, (o): overlap. “B?” (batches): are updates batched? “sB?” (sorted batches): can batches of updates be sorted for more performance? “T?” (transactions): are transactions supported? “acid?”: are ACID transaction properties offered? “P”: Does the system enable storing past graph snapshots? “(∆)”: Snapshots are stored using some “delta scheme”. “(τ)”: snapshots can be inferred from maintained timestamps. “L?” (live): are live updates supported (i.e., does a system maintain a graph snapshot that is “up-to-date”: it continually ingests incoming updates)? “S?” (sliding): does a system support the Sliding Window Model for accessing past updates? “D?” (decay): does a system support the Decay Model for accessing past updates? “Vertex / edge updates”: support for inserting and/or removing edges and/or vertices; “A”: add, “R”: remove, “U”: update. “–”: Support. “˜”: Partial / limited support. “é”: No support. “?”: Unknown. A few systems use graph representations based on trees. For example, Sha et al. [202] use a variant of packed memory array (PMA), which is an array with all neighborhoods (i.e., essentially a CSR) augmented with an implicit binary tree structure that enables edge insertions and deletions in O(log2 n) time. Frameworks constructed on top of a more general in- frastructure use a representation provided by the under- lying system. For example, GraphTau [122], built on top of Apache Spark [238], uses the underlying data structure called Resilient Distributed Datasets (RDDs) [238]. Other frameworks from this category use data representations that are harnessed by general processing systems or databases, for example KV stores, tables, or general collections. All considered frameworks use some form of indexing. However, the indexes mostly keep the locations of vertex neighborhoods. Such an index is usually a simple array of size n, with cell i storing a pointer to the neighborhood Ni; this is a standard design for frameworks based on CSR. Whenever CSR is combined with blocking, a correspond- ing framework also offers the indexing of blocks used for storing neighborhoods contiguously. For example, this is the case for faimGraph and LiveGraph. Frameworks based on more complex underlying infrastructure benefit from indexing structures offered by the underlying system. For example, Concerto uses hash indexing offered by MySQL, and CellIQ and others can use structures offered by Spark. Finally, relatively few frameworks apply indexing of addi- tional rich vertex or edge data, such as properties or labels. This is due to the fact that streaming frameworks, unlike graph databases, place more focus on the graph structure and much less on rich attached data. For example, STINGER indexes edges and vertices with given labels.](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-9-2048.jpg)

![10 Reference Rich edge data Rich vertex data Tested analytics workloads Fundamental representation iB? aB? Id? Ic? IL?PrM? PrC? Remarks STINGER [79] – (T, W, TS) – (T) CL, BC, BFS, CC, k-core CSR – é – (a, d)é é – (sm) é Grace [189] ˜ (W) é PR, CC, SSSP, BFS, DFS CSR ˜∗ é – (a) é é – (sm) é ∗ Due to partitioning of neighborhoods. Concerto [151] – (P) – (P) k-hop, k-core CSR ? – – é é – (sm, tr∗ )– (sa, i)∗ ∗ Graph views and event-driven processing LLAMA [159] – (P) – (P) PR, BFS, TC CSR∗ – é – (a, t) é é – (sm) é ∗ multiversioned DISTINGER [85] – (T, W, TS) – (T, W) PR CSR – é – (a, d)é é – (sm) é — cuSTINGER [103] ˜∗ (W, P, TS)˜∗ (W, P)TC CSR é é – (a, d)é é – (sm) é ∗ No details aimGraph [230] ˜∗ (W) ˜∗ (W) — CSR∗ – é – (a) é é – (sm) é ∗ Resembles CSR. Hornet [49] ˜ (W) é BFS, SpMV, k-Truss CSR é – – (a) é é – (sm) é — faimGraph [231] – (W, P) – (W, P) PR, TC CSR∗ – é – (a) é é – (sm) é ∗ Resembles CSR LiveGraph [243] – (T, P) – (P) PR, CC CSR – (g) é – (a) é é – (sm)∗ é ∗ Primarily a data store GraphBolt [162] – (W) é PR, BP, LP, CoEM, CF, TCCSR – é – (a) – (m)– – (sm) – (sa∗ , i) ∗ Relies on BSP and Ligra’s mappings GraphIn [201] é ˜ (P) BFS, CC, CL CSR + EL é é ? – é – (sm) – (sa, i)† † Relies on GAS. EvoGraph [200] é é TC, CC, BFS CSR + EL é é ? – é – (sm) – (sa, i) — GraphOne [147] ˜ (W) é BFS, PR, 1-Hop-query CSR + EL – é – (a, t) é é – (sm, ss) – (sa, p) — GraPU [204], [205] ˜ (W) é BFS, SSSP, SSWP AL∗ é é – (a) – (m)˜ – (sm) – (sa, i, sai)∗ Relies on GoFS RisGraph [86] – (W) é CC, BFS, SSSP, SSWP AL ? ? – (a) – é – (sm) – (sa, p) — Kineograph [56] é é TR, SSSP, k-exposure KV store + AL∗ ? é – (a) – é – (sm) ˜ (sa)† ∗ Details are unclear. † Uses vertex-centric Mondal et al. [174]é é — KV store + documents∗ é é – (a) é é – (sm)∗ ?∗ ∗ Relies on CouchDB CellIQ [120] – (P) – (P) Cellular specific Collections (series)∗ é é – (a, d)– é – (sm) – (sa, i)† ∗ Based on RDDs. † Focus on geopartitioning. iGraph [126] ? é PR RDDs é é – – é – (sm) – (sa, i)∗ ∗ Relies on vertex-centric BSP GraphTau [122] é é PR, CC RDDs (series) é é ? –∗ – – (sm) – (sa, i, p)† ∗ Unclear. † Relies on BSP and vertex-centric. ZipG [139] – (T, P, TS) – (P) TAO LinkBench Compressed flat files é é – (a) é é – (sm) é — Sprouter [2] ? ? PR Tables∗ é é ? é é – (sm) é ∗ Relies on HGraphDB DeltaGraph [69] é é — Inductive graphs∗ é é é é é – (sm, am)– (sa)† ∗ Specific to functional languages [69]. † Mappings of vertices/edges GPMA (Sha [202]) ˜ (TS) é PR, BFS, CC Tree-based (PMA) ˜ (g)∗ é – (a) é é – (sm) é ∗ A single contiguous array with gaps in it Aspen [71] é é∗ BFS, BC, MIS, 2-hop, CL Tree-based (C-Trees) – é – (a) é é – (sm) ˜ (sa)∗ ∗ Relies on Ligra Tegra [121] – (P) – (P) PR, CC Tree-based (PART [65]) –∗ é – (a) – é – (sm) – (sa† , i, p)∗ For properties. † Relies on GAS GraphInc [51] – (P) – (P) SSSP, CC, PR ?∗ é é ˜ (a) – é – (sm) ˜ (sa)∗ ∗ Relies on Giraph’s structures and model UNICORN [216] é é PR, RW ?∗ é é ? – é – (sm) – (sa, i) ∗ Uses InfoSphere KickStarter [227] ˜ (W) é SSWP, CC, SSSP, BFS na∗ na∗ na∗ na∗ – (m)é – (sm) na∗ ∗ Kickstarter is a technique, not a system TABLE 3: Comparison of selected representative works. They are grouped by the used fundamental graph representation (within each group, by publication date). “Rich edge/vertex data”: enabling additional data to be attached to an edge or a vertex (“T”: type, “P”: property, “W”: weight, “TS”: timestamp). “Tested analytics workloads”: evaluated workloads beyond simple queries (PR: PageRank, TR: TunkRank, CL: clustering, BC: Betweenness Centrality, CC: Connected Components, BFS: Breadth-First Search, SSSP: Single Source Shortest Paths, DFS: Depth-First Search, TC: Triangle Counting, SpMV: Sparse matrix-vector multiplication, BP: Belief Propagation, LP: Label Propagation, CoEM: Co-Training Expectation Maximization, CF: Collaborative Filtering, SSWP: Single Source Widest Path, TAO LinkBench: workloads used in Facebook’s TAO and in LinkBench [19], MIS: Maximum Independent Set), RW: Random Walk. “Fundamental Representation”: A key representation used to store the graph structure; all representation are explained in Section 4. “iB”: Is blocking used to increase the locality of edges within the representation of a single neighborhood? “(g)”: one uses empty gaps at the ends of blocks, to provide pre-allocated empty storage for faster edge insertions. “aB”: Is blocking used to increase the locality of edges across different neighborhoods (i.e., can one store different neighborhoods within one block)? “Id”: Is indexing used? “(a)”: Indexing of the graph adjacency data, “(d)”: Indexing of rich edge/vertex data, “(t)”: Indexing of different graph snapshots, in the time dimension? “Ic”: Are incremental changes supported? “(m)”: Explicit support for monotonic algorithms in the context of incremental changes. “IL”: Does the system support live (on-the-fly) incremental changes? “PrM”: Does the system offer a dedicated programming model (or API) related to graph modifications? “(sm)”: API for simple graph modifications. “(am)”: API for advanced graph modifications. “(tr)”: API for triggered reactions to graph modifications. “(ss)”: API for manipulating with the updates awaiting being ingested (e.g., stored in the log). “PrC”: Does the system offer a dedicated programming model (or API) related to graph computations (i.e., analytics running on top of the graph being modified)? “(sa)”: API for graph algorithms / analytics (e.g., PageRank) processing the main (i.e., up- to-date) graph snapshot. “(p)”: API for graph algorithms / analytics (e.g., PageRank) processing the past graph snapshots. “(i)”: API for incremental processing of graph algorithms / analytics. “(sai)” (i.e., (sa) + (i)): API for graph algorithms / analytics processing the incremental changes themselves. “–”, “˜”, “é”: A design offers a given feature, offers it in a limited way, and does not offer it, respectively. “?”: Unknown.](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-10-2048.jpg)

![11 5.4 Analysis of Support for Incremental Changes Around half of the considered frameworks support incre- mental changes to accelerate global graph analytics running on top of the maintained graph datasets. Frameworks that do not support them (e.g., faimGraph) usually put less focus on global analytics in the streaming setting. While the details for achieving incremental changes vary across systems, they all first identify which graph parts require re- computation. For example, GraphIn and EvoGraph make the developer responsible for implementing a dedicated func- tion that detects inconsistent vertices, i.e., vertices that became affected by graph updates. This function takes as arguments a batch of incoming updates and the vertex property related to the graph problem being solved (e.g., a parent in the BFS traversal problem). Whenever any update in the batch affects a specified property of some vertex, this vertex is marked as inconsistent, and is scheduled for recomputation. GraphBolt and KickStarter both carefully track dependen- cies between vertex values (that are being computed) and edge modifications. The difference between these two lies in how they minimize the amount of needed recomputation. For this, GraphBolt assumes the Bulk Synchronous Parallel (BSP) [222] computation model while KickStarter uses the fact that in many graph algorithms, the vertex value is simply selected from one single incoming edge. Unlike some other systems (e.g., Kineograph), GraphBolt and KickStarter enable performance gains also in the event of edge dele- tions, not only insertions. Similarly to GraphBolt, GraphInc also targets iterative algorithms; it uses a technique called memoization to reduce the amount of recomputation. Specif- ically, it maintains the state of all computations performed, and uses this state whenever possible to quickly deliver results if a graph changes. RisGraph applies KickStarter’s approach for incremental computation to its design based on concurrent ingestion of fine-grained updates and queries. Finally, Tegra, GraphTau, GraPU, CellIQ, and Kineograph implement incremental computation using the underlying infrastructure and its capability to maintain past graph snapshots. They derive differences between consecutive snapshots, and use these differences to identify parts of a graph that must be recomputed. We discover that GraphTau and GraphBolt employ “live” incremental changes, i.e., they are able to identify opportunities for reusing the results of a graph algorithm even before it finishes running. This is done in the context of iterative analytics such as PageRank, where the potential for incremental changes is identified between iterations. The systems that support incremental changes focus on monotonic graph algorithms, i.e., algorithms, where the computed properties (e.g., vertex distances) are consistently either increasing or decreasing. 5.5 Analysis of Offered Programming APIs We first analyze the supported APIs for graph modifica- tions. All considered frameworks support a simple API for manipulating the graph, which includes operations such as adding or removing an edge. However, some frameworks offer more capabilities. Concerto has special functions for programming triggered events, i.e., events taking place automatically upon certain specific graph modifications. DeltaGraph offers unique graph modification capabilities, for example merging graphs. GraphOne comes with a set of interesting functions for accessing and analyzing the updates waiting in the log structure to be ingested. This can be used to apply some form of preprocessing of the updates, or to run some analytics on the updates. We also discuss supported APIs for running global ana- lytics on the maintained graph. First, we observe that a large fraction of frameworks do not support developing graph an- alytics at all. These systems, for example faimGraph, focus completely on graph mutations and local queries. However, other systems do offer an API for graph analytics (e.g., PageRank) processing the main (live) graph snapshot. These systems usually harness some existing programming model, for example Bulk Synchronous Parallel (BSP) [222]. Further- more, frameworks that enable maintaining past snapshots, for example Tegra, also offer APIs for running analytics on such graphs. Finally, systems offering incremental changes also offer the associated APIs. 5.6 Used Programming Model We also discuss in more detail what programming models are used to develop graph analytics. As of now, there are no established programming models for dynamic graph analysis. Most frameworks, for example GraphInc, fall back to a model used for static graph processing (most often the vertex-centric model [127], [161]), and make the dynamic nature of the graph transparent to the developer. Another re- cent example is GraphBolt that offers the Bulk Synchronous Parallel (BSP) [222] programming model and combines it with incremental updates to be able to solve certain graph problems on dynamic graphs. Some engines, however, extend an existing model for static graph processing. For example, GraphIn extends the gather-apply-scatter (GAS) paradigm [156] to enable react- ing to incremental updates. Specifically, the key part of this Incremental Gather Apply Scatter (I-GAS) is an API that enables the user to specify how to construct the inconsistency graph i.e., a part of the processed graph that must be recom- puted in order to appropriately update the desired results (for a specific graph problem such as BFS or PageRank). For this, the user implements a designated method that takes as input the batch of next graph updates, and uses this information to construct a list of vertices and/or edges, for which a given property (e.g., the rank) must be recomputed. This also includes a user-defined function that acts as a heuristic to check if a static full recomputation is cheaper in expectation than an incremental pass. It is the users responsibility to ensure that correctness is guaranteed in this model, for example by conservatively marking vertices inconsistent. Graph updates can consist of both inserts and removals. They are applied in batches and exposed to the user automatically by a list of inconsistent vertices for which properties (e.g., vertex degree) have been changed by the update. Therefore, queries are always computed on the most recent graph state. 5.7 Supported Types of Graph Updates Different systems support different forms of graph updates. The most widespread update is edge insertion, offered by all the considered systems. Second, edge deletions are supported by most frameworks. Finally, a system can also](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-11-2048.jpg)

![12 explicitly enable adding or removing a specified vertex. In the latter, a given vertex is removed with its adjacent edges. 5.8 Analysis of Relations to Theoretical Models First, despite the similarity of names, the (theoretical) field of streaming graph algorithms is not well connected to graph streaming frameworks: the focus of the former are fast algorithms operating with tight memory constraints that by assumption prevent from keeping the whole graph in mem- ory, which is not often the case for the latter. Similarly, graph sketching focuses on approximate algorithms in a streaming setting, which is of little interest to streaming frameworks. On the other hand, the (theoretical) settings of dynamic graph algorithms and parallel dynamic graph algorithms are similar to that of the streaming frameworks. Their common assumption is that the whole maintained graph is available for queries (in-memory), which is also common for the streaming frameworks. Moreover, the batch dynamic model is even closer, as it explicitly assumes that edge updates arrive in batches, which reflects a common optimization in the streaming frameworks. We conclude that future de- velopments in streaming frameworks could benefit from deepened understanding of the above mentioned theoretical areas. For example, one could use the recent parallel batch dynamic graph connectivity algorithm [3] in the imple- mentation of any streaming framework, for more efficient connected components problem solution. 6 DISCUSSION OF SELECTED FRAMEWORKS We now provide general descriptions about selected frame- works, for readers interested in some specific systems. 6.1 STINGER [79] And Its Variants STINGER [79] is a data structure and a corresponding software package. It adapts and extends the CSR format to support graph updates. Contrarily to the static CSR design, where IDs of the neighbors of a given vertex are stored contiguously, neighbor IDs in STINGER are divided into contiguous blocks of a pre-selected size. These blocks form a linked list, i.e., STINGER uses the blocking design. The block size is identical for all the blocks except for the last blocks in each list. One neighbor vertex ID u in the neighborhood of a vertex v corresponds to one edge (v, u). STINGER supports both vertices and edges with different types. One vertex can have adjacent edges of different types. One block always contains edges of one type only. Besides the associated neighbor vertex ID and type, each edge has its weight and two time stamps. The time stamps can be used in algorithms to filter edges, for example based on the insertion time. In addition to this, each edge block contains certain metadata, for example lowest and highest time stamps in a given block. Moreover, STINGER provides the edge type array (ETA) index data structure. ETA contains pointers to all blocks with edges of a given type to accelerate algorithms that operate on specific edge types. To increase parallelism, STINGER updates a graph in batches. For graphs that are not scale-free, a batch of around 100,000 updates is first sorted so that updates to different vertices are grouped. In the process, deletions may be sepa- rated from insertions (they can also be processed in parallel with insertions). For scale-free graphs, there is no sorting phase since a small number of vertices will face many updates which leads to workload imbalance. Instead, each update is processed in parallel. Fine locking on single edges is used for synchronization of updates to the neighborhood of the same vertex. To insert an edge or to verify if an edge exists, one traverses a selected list of blocks, taking O(d) time. Consequently, inserting an edge into Nv takes O(dv) work and depth. STINGER is optimized for the Cray XMT supercomputing systems that allow for massive thread- level parallelism. Still, it can also be executed on general multi-core commodity servers. Contrarily to other works, STINGER and its variants does not provide a framework but a library to operate on the data structure. Therefore, the user is in full control, for example to determine when updates are applied and what programming model is used. DISTINGER [85] is a distributed version of STINGER that targets “shared-nothing” commodity clusters. DISTINGER inherits the STINGER design, with the following modifica- tions. First, a designated master process is used to interact between the DISTINGER instance and the outside world. The master process maps external (application-level) vertex IDs to the internal IDs used by DISTINGER. The master process maintains a list of slave processes and it assigns incoming queries and updates to slaves that maintain the associated part of the processed graph. Each slave maintains and is responsible for updating a portion of the vertices together with edges attached to each of these vertices. The graph is partitioned with a simple hash-based scheme. The inter-process communication uses MPI [97], [104] with es- tablished optimizations such as message batching or overlap of computation and communication. cuSTINGER [103] extends STINGER for CUDA GPUs. The main design change is to replace lists of edge blocks with contiguous adjacency arrays, i.e. a single adjacency array for each vertex. Moreover, contrarily to STINGER, cuSTINGER always separately processes updates and deletions, to better utilize massive parallelism in GPUs. cuSTINGER offers several “meta-data modes”: based on the user’s needs, the framework can support only unweighted edges, weighted edges without any additional associated data, or edges with weights, types, and additional data such as time stamps. However, the paper focuses on unweighted graphs that do not use time stamps and types, and the exact GPU design of the last two modes is unclear [103]. 6.2 Work by Mondal et al. [174] A system by Mondal et al. [174] focuses on data replica- tion, graph partitioning, and load balancing. As such, the system is distributed: on each compute node, a replication manager decides locally (based on analyzing graph queries) what vertex is replicated and what compute nodes store its copies. The main contribution is the definition of a fairness criterion which denotes that at least a certain configurable fraction of neighboring vertices must be replicated on some compute node. This approach reduces pressure on network bandwidth and improves latency for queries that need to fetch neighborhoods (common in social network analysis). The framework stores the data on Apache CouchDB [17], an in-memory key-value store. No detailed information how the data is represented is given.](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-12-2048.jpg)

![13 6.3 LLAMA [159] LLAMA [159] (Linked-node analytics using LArge Multiversioned Arrays) – similarly to STINGER – digests graph updates in batches. It differs from STINGER in that each such batch generates a new snapshot of graph data using a copy-on-write approach. Specifically, the graph in LLAMA is represented using a variant of CSR that relies on large multiversioned arrays. Contrarily to CSR, the array that maps vertices to per-vertex structures is divided into smaller parts, so called data pages. Each part can belong to a different snapshot and contains pointers to the single edge array that stores graph edges. To create a new snapshot, new data pages and a new edge array are allocated that hold the delta that represents the update. This design points to older snapshots and thus shares some data pages and parts of the edge array among all snapshots, enabling lightweight updates. For example, if there is a batch with edge insertions into the neighborhood of vertex v, this batch may become a part of v’s adjacency list within a new snapshot, but only represents the update and relies on the old graph data. Contiguous allocations are used for all data structures to improve allocation and access time. LLAMA also focuses on out-of-memory graph process- ing. For this, snapshots can be persisted on disk and mapped to memory using mmap. The system is implemented as a library, such that users are responsible to ingest graph updates and can use a programming model of their choice. LLAMA does not impose any specific programming model. Instead, if offers a simple API to iterate over the neighbors of a given vertex v (most recent ones, or the ones belonging to a given snapshot). 6.4 GraphIn [201] GraphIn [201] uses a hybrid dynamic data structure. First, it uses an AM (in the CSR format) to store the adjacency data. This part is static and is not modified when updates arrive. Second, incremental graph updates are stored in dedicated edge lists. Every now and then, the AM with graph structure and the edge lists with updates are merged to update the structure of AM. Such a design maximizes performance and the amount of used parallelism when accessing the graph structure that is mostly stored in the CSR format. 6.5 GraphTau [122] GraphTau [122] is a framework based on Apache Spark and its data model called resilient distributed datasets (RDD) [238]. RDDs are read-only, immutable, partitioned collections of data sets that can be modified by different operators (e.g., map, reduce, filter, and join). Similarly to GraphX [101], GraphTau exploits RDDs and stores a graph snapshot (called a GraphStream) using two RDDs: an RDD for storing vertices and edges. Due to the snapshots, the framework offers fault tolerance by replaying the processing of respective data streams. Different operations allow to re- ceive data form multiple sources (including graph databases such as Neo4j and Titan) and to include unstructured and tabular data (e.g., from RDBMS). To maximize parallelism when ingesting updates, it applies the snapshot scheme: graph workloads run concurrently with graph updates us- ing different snapshots. GraphTau only enables using the window sliding model. It provides options to write custom iterative and window algorithms by defining a directed acyclic graph (DAG) of operations. The underlying Apache Spark framework analyzes the DAG and processes the data in parallel on a compute cluster. For example, it is possible to write a function that explicitly handles sub-graphs that are not part of the graph any more due to the shift of the sliding window. The work focuses on iterative algorithms and stops the next iteration when an update arrives even when the algorithm has not converged yet. This is not an issue since the implemented algorithms (PageRank and CC) can reuse the previous result and converge on the updated snapshot. In GraphTau, graph updates can consist of both inserts and removals. They are applied in batches and exposed to the program automatically by the new graph snapshot. Therefore, queries are always computed on the most recent graph for the selected window. 6.6 faimGraph [231] faimGraph [231] (fully-dynamic, autonomous, independent management of graphs) is a library for graph processing on a single GPU with focus on fully-dynamic edge and vertex updates (add, remove) - contrarily, some GPU frame- works [202], [230] focus only on edge updates. It allocates a single block of memory on the GPU to prevent memory fragmentation. A memory manager autonomously handles data management without round-trips to the CPU, enabling fast initialization and efficient updates since no intervention from the host is required. Generally, the GPU memory is partitioned into vertex data, edge data and management data structures such as index queues which keep track of free memory. Also, the algorithms that run on the graph operate on this allocated memory. The vertex data and the edge data grow from opposite sides of the memory region to not restrict the amount of vertices and edges. Vertices are stored in dedicated vertex data blocks that can also contain user-defined properties and meta information. For example, vertices store their according host identifier since the host can dynamically create vertices with arbitrary identifiers and vertices are therefore identified on the GPU using their memory offset. To store edges, the library implements a combination of the linked list and adjacency array resulting in pages that form a linked list. This enables the growth and shrink of edge lists and also optimizes memory locality. Further, properties can be stored together with edges. The design does not return free memory to the device, but keeps it allocated as it might be used during graph processing - so the parallel use of the GPU for other processing is limited. In such cases, faimGraph can be reinitialized to claim memory (or expand memory if needed). Updates can be received from the device or from the host. Further, faimGraph relies on a bulk update scheme, where queries cannot be interleaved with updates. However, the library supports exploiting parallelism of the GPU by running updates in parallel. faimGraph mainly presents a new data structure and therefore does not enforce a certain program- ming model.](https://image.slidesharecdn.com/1912-210605084152/75/Practice-of-Streaming-Processing-of-Dynamic-Graphs-Concepts-Models-and-Systems-NOTES-13-2048.jpg)