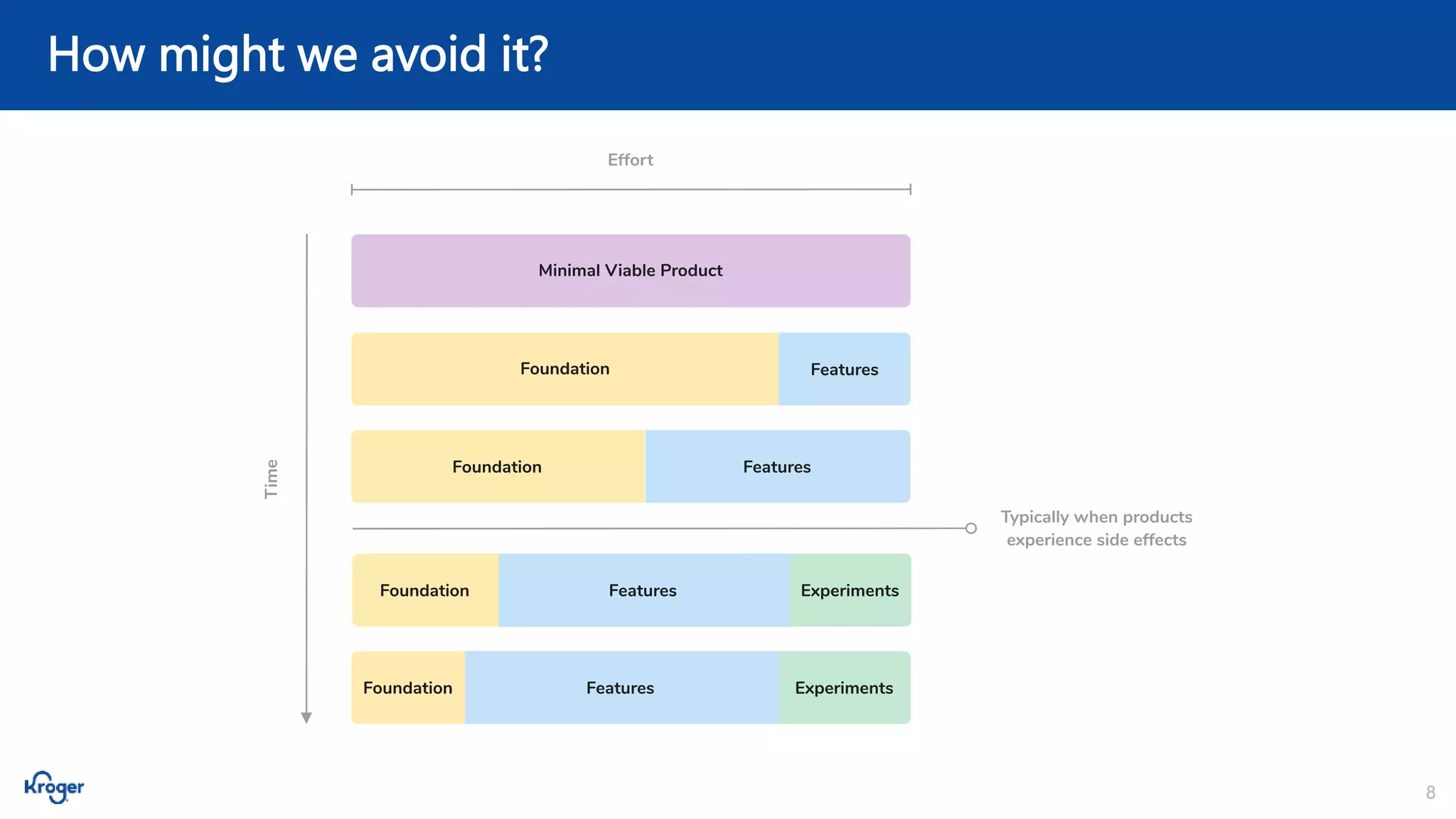

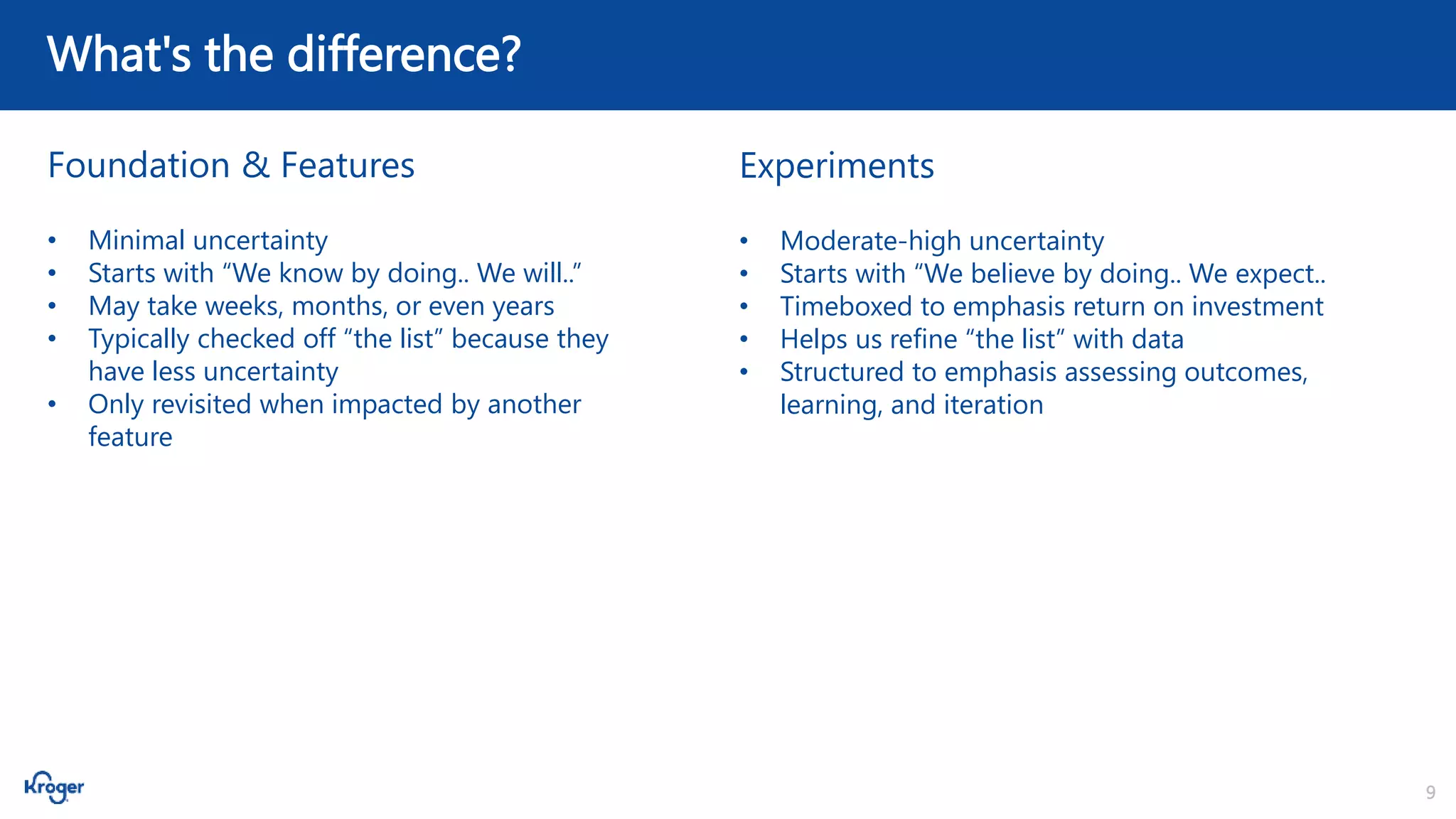

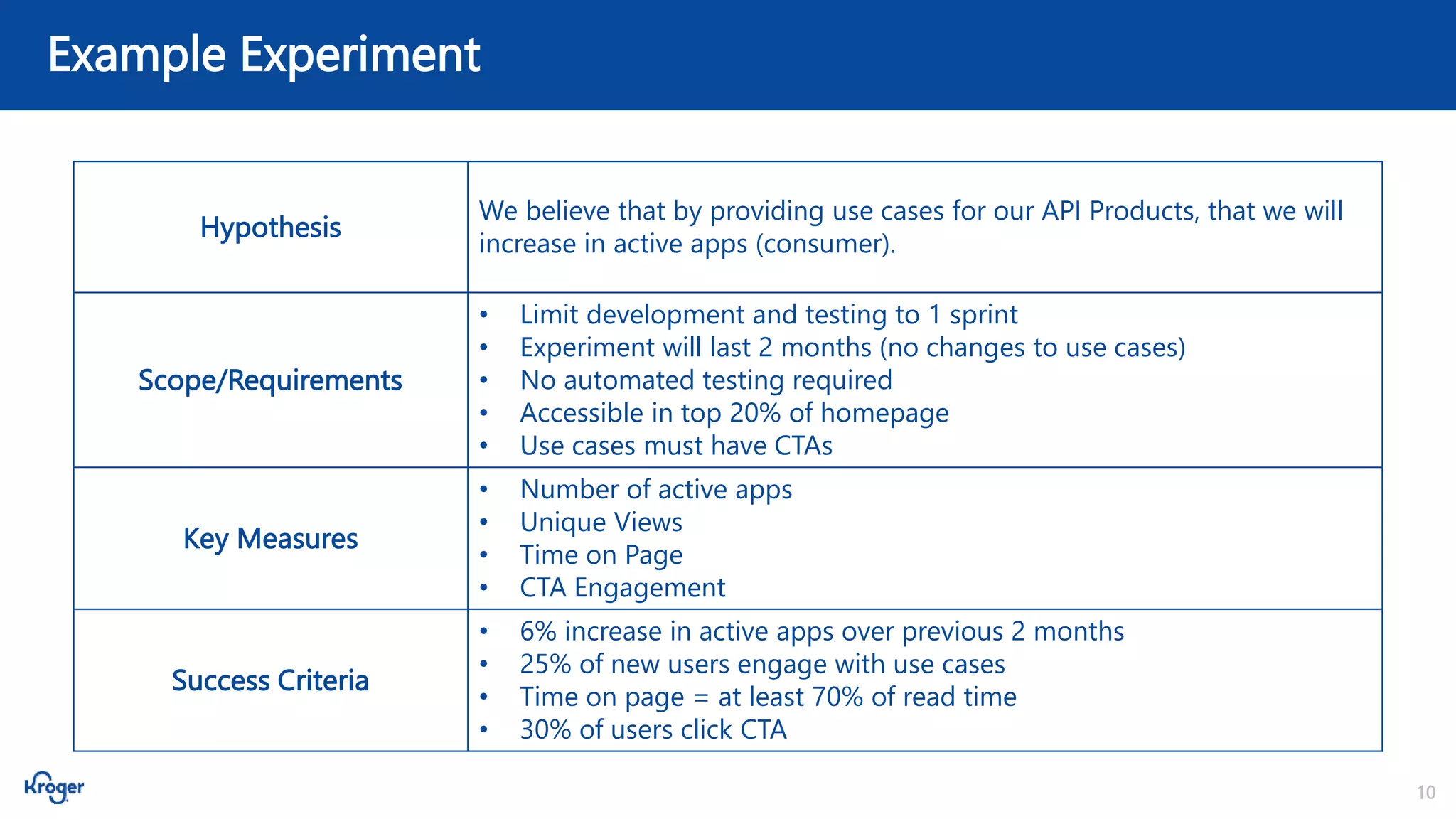

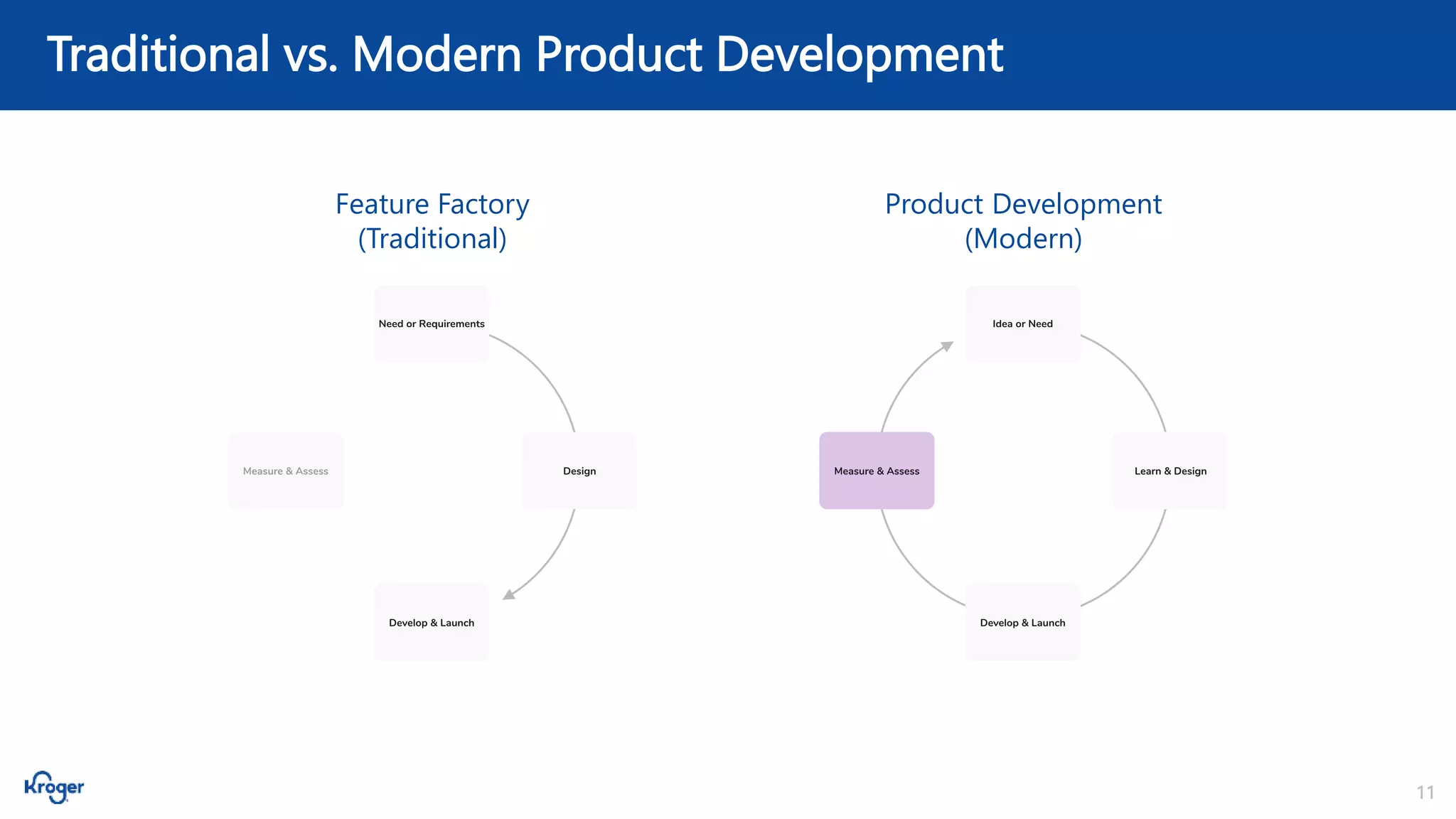

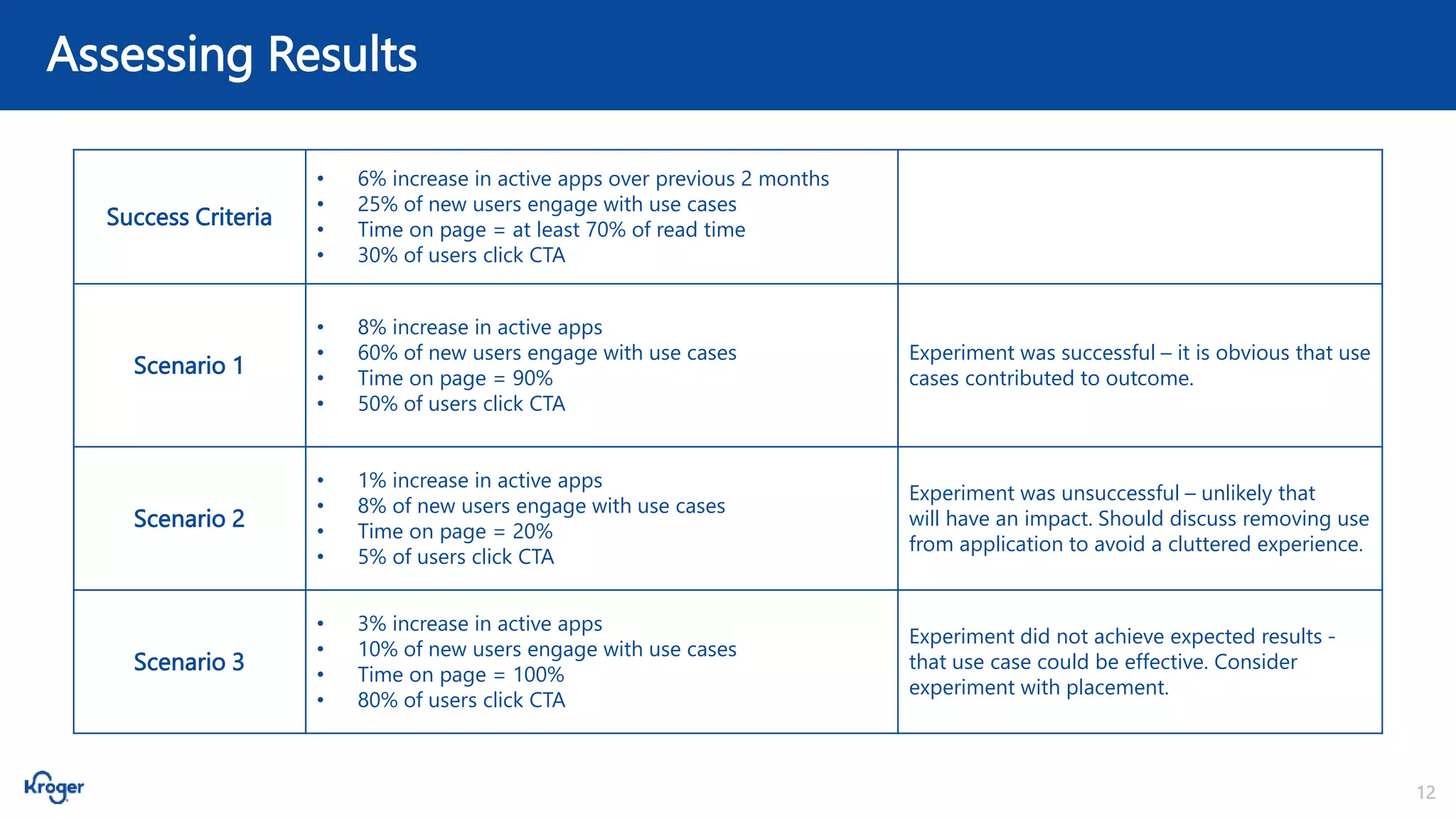

The document discusses how to optimize developer portals using analytics and feedback, and highlights the challenges and opportunities specific to this kind of product. It stresses modern product development practices, such as experimentation, as a way to prioritize outcomes over outputs, reduce uncertainty, and improve user engagement. Its recommendations are to maintain an experimental mindset, focus on what each experiment teaches, and track several key measures of success rather than a single one.