The increasing demands of large-scale network systems for data acquisition and control from multiple sources have led to the widespread adoption of the internet of things (IoT), which in turn generates massive volumes of data. A review of the literature highlights both the scope and the open problems of data compression approaches for managing large-scale heterogeneous IoT data. The proposed study addresses this problem by introducing a novel computational framework that downsizes the data by harnessing the problem-solving capability of artificial intelligence (AI). The scheme is organized as a three-layer architecture comprising an IoT device layer, a fog layer, and a distributed cloud storage layer. Downsizing is carried out with a deep learning approach that predicts the probability that a given piece of data should be downsized. The quantified outcomes of the study show significant data downsizing performance with higher predictive accuracy.

IAES International Journal of Artificial Intelligence (IJ-AI), Vol. 14, No. 4, August 2025, pp. 2613~2621. ISSN: 2252-8938, DOI: 10.11591/ijai.v14.i4.pp2613-2621. Journal homepage: http://ijai.iaescore.com

Novel framework for downsizing the massive data in internet of things using artificial intelligence

Salma Firdose¹, Shailendra Mishra²

¹School of Information Science, Presidency University, Bengaluru, India
²College of Computer and Information Science, Majmaah University, Al Majma'ah, Saudi Arabia

Article history: Received Mar 27, 2024; Revised Feb 21, 2025; Accepted Mar 15, 2025

Keywords: Artificial intelligence; Cloud; Data compression; Internet of things; Voluminous data

This is an open access article under the CC BY-SA license.

Corresponding Author: Salma Firdose, School of Information Science, Presidency University, Itgalpur Rajanakunte, Yelahanka, Bengaluru, Karnataka 560064, India. Email: salma.firdose@presidencyuniversity.in

1. INTRODUCTION

With the targeted deployment of large-scale environments for data acquisition, the internet of things (IoT) has been generating a staggering volume of data [1]. The main reasons for such massive data generation in IoT are the proliferation of devices [2], continuous data generation [3], high granularity [4], diverse data types [5], and global reach [6]. Managing such massive data streams therefore poses significant challenges for robust analytics, distributed data storage, and security [7].

Several approaches have evolved to manage data of this size. The primary solution is to adopt edge computing in IoT, which not only conserves bandwidth but also minimizes latency [8]. Operations such as preliminary analysis, aggregation, and data filtering can be carried out effectively by edge devices before the data are forwarded to the cloud. Another significant solution is to filter and prioritize data at the gateway or edge node, retaining only essential information and discarding less useful information [9]. Such an approach can substantially reduce data traffic and supports resource management in IoT. The third essential approach to traffic management is compressing or reducing the data before transmitting it over the network [10]. Techniques such as data deduplication [11], lossless compression [12], and delta encoding [13] are reported to accelerate data transmission and to reduce the consumption of channel capacity.

Apart from these three approaches, other frequently adopted approaches include adopting distributed architectures, managing quality of service (QoS), constructing scalable infrastructure, and
adopting effective security measures. However, these are adopted less frequently than the three approaches discussed above. The prominent research challenges common to all of these approaches concern bandwidth constraints, scalability, data latency, data security, data storage management, data quality and reliability, interoperability and standardization, and energy efficiency [14]. Among these approaches, data compression is most commonly preferred as a candidate solution for downsizing data in IoT. However, it is associated with several significant research problems: i) compression approaches that claim higher compression ratios are observed to consume extensive computational resources, which poses a major challenge given the limited processing capability of edge devices and IoT nodes; ii) the compression and decompression processes introduce additional latency, which degrades data transmission performance, especially for real-time applications that demand an instantaneous response; iii) energy consumption is another critical challenge of existing compression approaches and can eventually undermine the sustainable operation of IoT devices; iv) lossy compression schemes offer maximized compression ratios, but at the cost of data quality, thereby affecting IoT applications that demand an accurate representation of the data; v) overhead is another significant challenge, especially when metadata is compressed, when additional synchronization takes place, or when decompression dictionaries must be managed; and vi) making a compression algorithm adaptable and compatible across the diverse protocols, platforms, and devices found in IoT is truly challenging.

Addressing these challenges requires careful consideration of the specific requirements, constraints, and trade-offs of compression in IoT and cloud deployments. The major gap identified lies in selecting appropriate compression algorithms, optimizing compression parameters, and implementing efficient compression techniques tailored to the characteristics of the data and the underlying infrastructure; work of this kind, aimed at maximizing the benefits of data compression in IoT and cloud environments, is rarely reported for existing systems.

Related work on managing large IoT data is as follows. Nwogbaga et al. [15] discussed data reduction approaches across cloud, fog, and IoT environments, with the aim of downsizing massive data to reduce offloading delay. Rong et al. [16] constructed a collaborative cloud-edge computing model that converges IoT with artificial intelligence (AI) to provide a data-driven approach for supporting IoT applications. A similar discussion was carried out by Bourechak et al. [17], and the application of machine learning to edge computing in IoT is also advocated by Merenda et al. [18]. Elouali et al. [19] attempted to control heavy IoT traffic with a data minimization scheme built on a distinctive framework for reducing information transmission. Karras et al. [20] presented a machine learning approach to big data management in which federated learning performs anomaly detection after the data are cleaned, a self-organizing map is integrated with reinforcement learning for clustering, and a neural network compresses the data. Similar work was carried out by Signoretti et al. [21]. Neto et al. [22] designed a dataset for real-time investigation of the IoT ecosystem, overcoming the limited availability of real-time data for analyzing IoT performance, which had impeded research on IoT data management. Nasif et al. [23] combined deep learning with lossless compression in IoT to address the memory and processing limitations of IoT nodes. Lossless compression was also studied by Hwang et al. [24], who adopted a bit-depth compression technique to achieve optimal resource utilization. Sayed et al. [25] presented a predictive model for traffic management in IoT that addresses congestion problems using both machine learning and deep learning approaches. Zhang et al. [26] presented a data minimization approach using adaptive thresholding and dynamic adjustment. Bosch et al. [27] presented a data compression scheme for event filtering by sensors, targeting a reduction in data throughput. Accordingly, the contribution of the proposed study is a novel computational framework that effectively manages raw IoT data streams through a layer-based architecture that harnesses the potential of AI.

The value-added contributions of the proposed study, which distinguish it from existing systems, are as follows: i) the study presents an optimal model of data minimization for large-scale IoT traffic to facilitate effective, high-quality data management; ii) a layer-based interactive architecture is designed around IoT devices, a fog layer, and cloud storage units, enabling a unique filtering and transformation of raw, complex data into reduced, high-quality data; iii) a deep neural network is adopted to downsize the data without affecting the quality of the essential information it contains; and iv) an extensive test environment is constructed with two settings representing normal and peak traffic conditions, in order to benchmark the proposed system against conventional data encoding systems and learning-based models. The next section describes the research methodology of the proposed study.

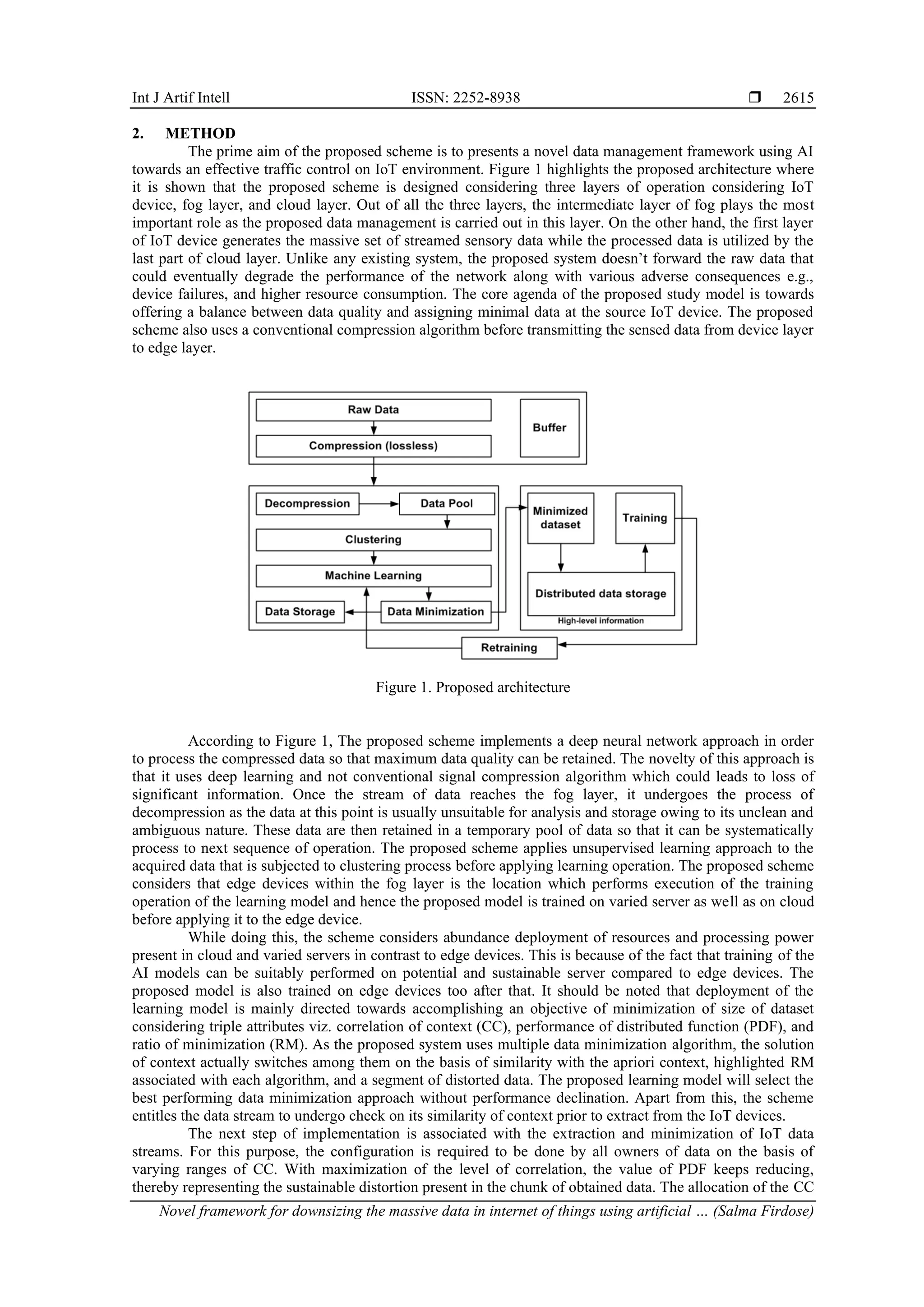

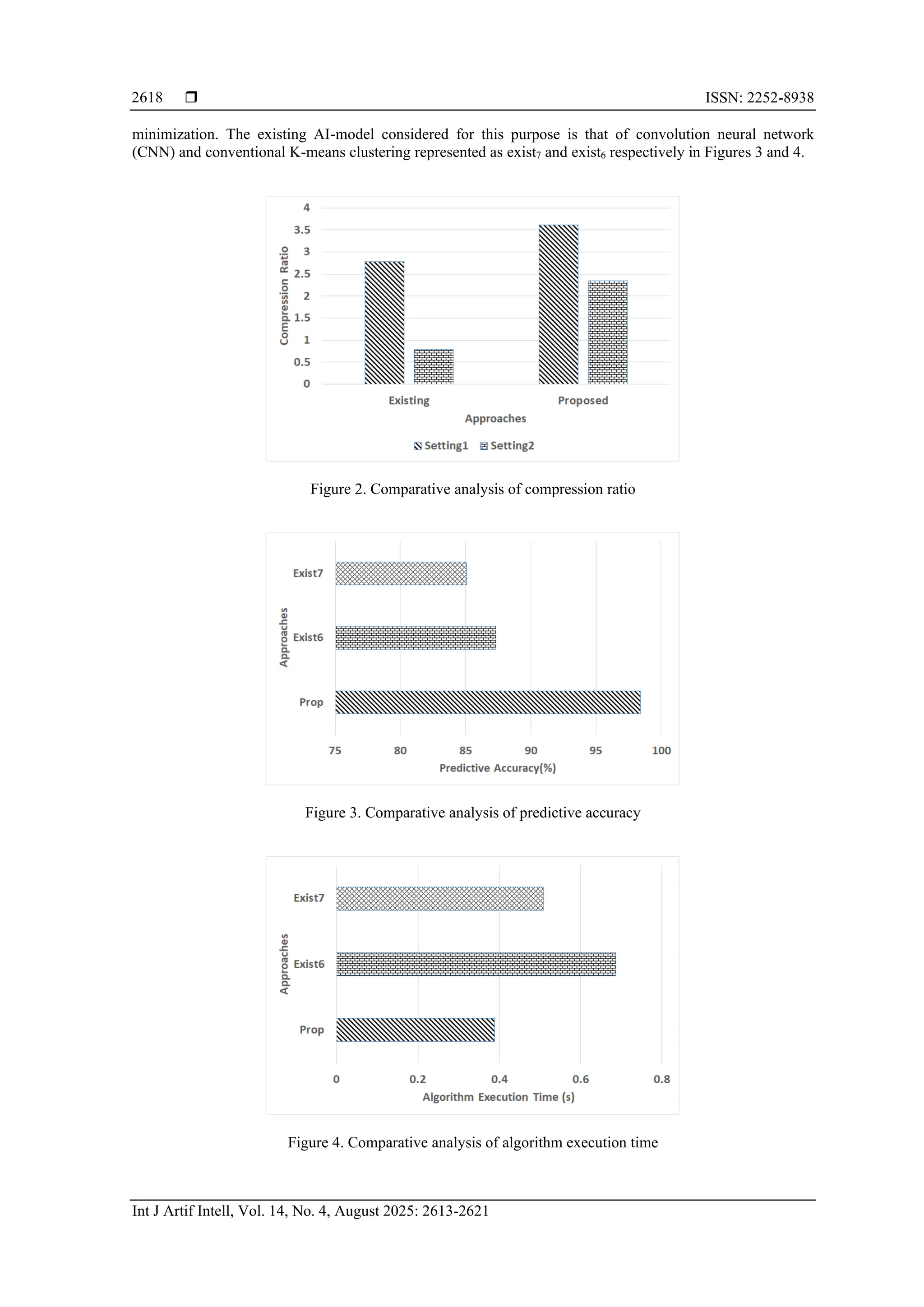

- Exist1: this data minimization approach, presented by Adedeji [28], arranges the symbols in sequence from the highest to the lowest probability and then divides them into two sets whose total probabilities are as nearly equal as possible.
- Exist2: this approach, presented by Abdo et al. [29], specifies how frequently the signal repeats and then scores the signal coefficients. Its core aim is to minimize the number of bits needed to represent the data set.
- Exist3: this approach, discussed by Chowdary et al. [30] and applied to edge computing, identifies repeated and longer phrases and encodes them. Each new phrase consists of a previously encoded phrase as its prefix plus one extra character.
- Exist4: this data minimization approach, presented by Zafar et al. [31], counts the occurrences of specific symbols within a set of information, thereby producing an unambiguous and efficient code.
- Exist5: this approach, implemented by Lee et al. [32], performs data minimization using fewer bits. The encoding model was designed specifically for edge devices to offer better coding performance.

The proposed system has been evaluated using a standard dataset [33] containing extensive sensor readings. Two test settings were designed: setting-1 consists of 10,526,380 bytes of data, where each line of the CSV file contains ten sensor readings, and setting-2 consists of 41,117,828 bytes of data, where each line of the CSV file contains a single entry. The first setting is used to assess normal traffic conditions, while the second is used to assess peak traffic conditions. The numerical outcomes for setting-1 and setting-2 are presented in Tables 1 and 2, respectively.

Table 1. Numerical outcomes for setting-1

| Approach | Compressed data (bytes) | Mean compressed file size (bytes) | Compression ratio |
|---|---|---|---|
| Exist1 | 5,178,711 | 217.60 | 0.74 |
| Exist2 | 11,085,434 | 511.83 | 0.85 |
| Exist3 | 4,814,433 | 299.14 | 0.85 |
| Exist4 | 4,184,031 | 157.20 | 1.18 |
| Exist5 | 1,880,441 | 140.47 | 2.78 |
| Prop | 2,781,665 | 230.64 | 3.69 |

Table 2. Numerical outcomes for setting-2

| Approach | Compressed data (bytes) | Mean compressed file size (bytes) | Compression ratio |
|---|---|---|---|
| Exist1 | 23,616,330 | 184.35 | 0.49 |
| Exist2 | 51,042,266 | 238.82 | 0.73 |
| Exist3 | 24,286,087 | 188.18 | 0.36 |
| Exist4 | 16,808,436 | 146.12 | 0.76 |
| Exist5 | 17,815,801 | 151.75 | 0.79 |
| Prop | 29,815,832 | 251.87 | 0.93 |

The numerical outcomes in Tables 1 and 2 show that the proposed scheme (Prop) achieves a better compression ratio under normal traffic (3.69) than under peak traffic (0.93), which is acceptable from a practical standpoint. In both settings, the proposed scheme attains the highest compression ratio of all approaches (3.69 versus at most 2.78 for Exist5 in setting-1, and 0.93 versus at most 0.79 for Exist5 in setting-2), indicating its capacity to achieve a high compression ratio compared with the individual encoding approaches reportedly used in IoT and cloud environments. To arrive at a conclusive outcome, the mean value of all existing approaches is compared with the proposed scheme under both settings, as shown in Figure 2. The traffic size is programmatically increased by 20% to understand its impact on the outcome. The results show that the proposed system offers approximately 17% and 39% higher compression ratios in setting-1 and setting-2, respectively. The main reason for the improved compression ratio of the proposed system over existing systems can be attributed to its learning-based approach. None of the existing systems performs data minimization preemptively, and all of them involve extensive algorithmic operations; the proposed scheme instead adopts a prediction-based methodology in which reduction is carried out sequentially as data are observed within the fog layer. Further, unlike any of the existing approaches, the cloud layer also contributes to data minimization. Further, the proposed study outcome has been compared with the existing AI-based models used for data
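The Exist1 procedure described above (order the symbols from the highest to the lowest probability, then split them into two sets of nearly equal probability) closely resembles the classic Shannon-Fano construction, which applies the split recursively to each set. The following is a minimal sketch, assuming that symbol probabilities are estimated from the frequencies in the input itself. `shannon_fano` and `encode` are hypothetical names, and this is not the implementation evaluated in [28].

```python
from collections import Counter
from typing import Dict, List, Tuple


def shannon_fano(data: str) -> Dict[str, str]:
    """Build a Shannon-Fano prefix code from the symbol frequencies of `data`."""
    counts = Counter(data)
    # Order symbols from most to least frequent (ties broken alphabetically).
    ranked: List[Tuple[str, int]] = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    if len(ranked) == 1:
        return {ranked[0][0]: "0"}  # a lone symbol still needs one bit
    codes: Dict[str, str] = {symbol: "" for symbol, _ in ranked}

    def split(group: List[Tuple[str, int]]) -> None:
        if len(group) < 2:
            return
        total = sum(count for _, count in group)
        running, cut, best_gap = 0, 1, total
        # Choose the cut that makes the two halves' counts as nearly equal as possible.
        for i in range(1, len(group)):
            running += group[i - 1][1]
            gap = abs(total - 2 * running)
            if gap < best_gap:
                cut, best_gap = i, gap
        for symbol, _ in group[:cut]:
            codes[symbol] += "0"
        for symbol, _ in group[cut:]:
            codes[symbol] += "1"
        split(group[:cut])
        split(group[cut:])

    split(ranked)
    return codes


def encode(data: str, codes: Dict[str, str]) -> str:
    """Concatenate the code word of each symbol into one bit string."""
    return "".join(codes[symbol] for symbol in data)


data = "A" * 15 + "B" * 7 + "C" * 6 + "D" * 6 + "E" * 5
codes = shannon_fano(data)
print(codes)                      # {'A': '00', 'B': '01', 'C': '10', 'D': '110', 'E': '111'}
print(len(encode(data, codes)))   # 89 bits, versus 39 * 8 = 312 bits as 8-bit characters
```

Frequent symbols receive shorter code words, and no code word is a prefix of another, so the bit string decodes unambiguously. The code table itself must also be transmitted or agreed in advance, which is one source of the overhead noted in challenge v).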
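Exist3 builds on the LZW algorithm [30]. LZW has exactly the property stated above: every new dictionary phrase is a previously coded phrase extended by one extra character. The following is a minimal encoder and decoder sketch, assuming byte-oriented input and an unbounded dictionary. `lzw_encode` and `lzw_decode` are hypothetical names, and practical codecs also cap the dictionary size and pack the integer codes into variable-width bit fields.

```python
from typing import Dict, List


def lzw_encode(data: bytes) -> List[int]:
    """Encode bytes as a list of LZW dictionary codes."""
    dictionary: Dict[bytes, int] = {bytes([i]): i for i in range(256)}
    phrase = b""
    codes: List[int] = []
    for value in data:
        candidate = phrase + bytes([value])
        if candidate in dictionary:
            phrase = candidate  # keep extending the current phrase
        else:
            codes.append(dictionary[phrase])
            dictionary[candidate] = len(dictionary)  # new phrase = known phrase + one byte
            phrase = bytes([value])
    if phrase:
        codes.append(dictionary[phrase])
    return codes


def lzw_decode(codes: List[int]) -> bytes:
    """Invert lzw_encode by rebuilding the same dictionary on the fly."""
    if not codes:
        return b""
    dictionary: Dict[int, bytes] = {i: bytes([i]) for i in range(256)}
    previous = dictionary[codes[0]]
    output = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == len(dictionary):
            # The code refers to the phrase that is being defined at this very step.
            entry = previous + previous[:1]
        else:
            raise ValueError(f"invalid LZW code: {code}")
        output.append(entry)
        dictionary[len(dictionary)] = previous + entry[:1]
        previous = entry
    return b"".join(output)


sample = b"TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_encode(sample)
print(codes)  # [84, 79, 66, 69, 79, 82, 78, 79, 84, 256, 258, 260, 265, 259, 261, 263]
assert lzw_decode(codes) == sample
```

The 24 input bytes become 16 codes. At 9 bits per code, that is 144 bits instead of 192. Because the decoder rebuilds the dictionary itself, no dictionary needs to be transmitted, although the encoder's growing dictionary is one of the memory costs that challenge i) warns about on constrained nodes.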

C: Conceptualization; M: Methodology; So: Software; Va: Validation; Fo: Formal analysis; I: Investigation; R: Resources; D: Data Curation; O: Writing - Original Draft; E: Writing - Review & Editing; Vi: Visualization; Su: Supervision; P: Project administration; Fu: Funding acquisition

CONFLICT OF INTEREST STATEMENT

The authors state no conflict of interest.

DATA AVAILABILITY

The data that support the findings of this study are available from the corresponding author upon reasonable request.

REFERENCES

[1] A. Koohang, C. S. Sargent, J. H. Nord, and J. Paliszkiewicz, "Internet of things (IoT): from awareness to continued use," International Journal of Information Management, vol. 62, 2022, doi: 10.1016/j.ijinfomgt.2021.102442.

[2] B. Nagajayanthi, "Decades of internet of things towards twenty-first century: a research-based introspective," Wireless Personal Communications, vol. 123, no. 4, pp. 3661–3697, 2022, doi: 10.1007/s11277-021-09308-z.

[3] J. L. Herrera, J. Berrocal, S. Forti, A. Brogi, and J. M. Murillo, "Continuous QoS-aware adaptation of cloud-IoT application placements," Computing, vol. 105, no. 9, pp. 2037–2059, 2023, doi: 10.1007/s00607-023-01153-1.

[4] X. Zhang, G. Zhang, X. Huang, and S. Poslad, "Granular content distribution for IoT remote sensing data supporting privacy preservation," Remote Sensing, vol. 14, no. 21, 2022, doi: 10.3390/rs14215574.

[5] A. Naghib, N. J. Navimipour, M. Hosseinzadeh, and A. Sharifi, "A comprehensive and systematic literature review on the big data management techniques in the internet of things," Wireless Networks, vol. 29, no. 3, pp. 1085–1144, 2023, doi: 10.1007/s11276-022-03177-5.

[6] A. M. Rahmani, S. Bayramov, and B. K. Kalejahi, "Internet of things applications: opportunities and threats," Wireless Personal Communications, vol. 122, no. 1, pp. 451–476, 2022, doi: 10.1007/s11277-021-08907-0.

[7] T. Alsboui, Y. Qin, R. Hill, and H. Al-Aqrabi, "Distributed intelligence in the internet of things: challenges and opportunities," SN Computer Science, vol. 2, no. 4, 2021, doi: 10.1007/s42979-021-00677-7.

[8] J. Pérez, J. Díaz, J. Berrocal, R. López-Viana, and Á. González-Prieto, "Edge computing: a grounded theory study," Computing, vol. 104, no. 12, pp. 2711–2747, 2022, doi: 10.1007/s00607-022-01104-2.

[9] D. Ameyed, F. Jaafar, F. Petrillo, and M. Cheriet, "Quality and security frameworks for IoT-architecture models evaluation," SN Computer Science, vol. 4, no. 4, 2023, doi: 10.1007/s42979-023-01815-z.

[10] A. A. Sadri, A. M. Rahmani, M. Saberikamarposhti, and M. Hosseinzadeh, "Data reduction in fog computing and internet of things: a systematic literature survey," Internet of Things, vol. 20, 2022, doi: 10.1016/j.iot.2022.100629.

[11] P. G. Shynu, R. K. Nadesh, V. G. Menon, P. Venu, M. Abbasi, and M. R. Khosravi, "A secure data deduplication system for integrated cloud-edge networks," Journal of Cloud Computing, vol. 9, no. 1, 2020, doi: 10.1186/s13677-020-00214-6.

[12] S. K. Idrees and A. K. Idrees, "New fog computing enabled lossless EEG data compression scheme in IoT networks," Journal of Ambient Intelligence and Humanized Computing, vol. 13, no. 6, pp. 3257–3270, 2022, doi: 10.1007/s12652-021-03161-5.

[13] S. Wielandt, S. Uhlemann, S. Fiolleau, and B. Dafflon, "TDD LoRa and Delta encoding in low-power networks of environmental sensor arrays for temperature and deformation monitoring," Journal of Signal Processing Systems, vol. 95, no. 7, pp. 831–843, 2023, doi: 10.1007/s11265-023-01834-2.

[14] H. Omrany, K. M. Al-Obaidi, M. Hossain, N. A. M. Alduais, H. S. Al-Duais, and A. Ghaffarianhoseini, "IoT-enabled smart cities: a hybrid systematic analysis of key research areas, challenges, and recommendations for future direction," Discover Cities, vol. 1, no. 1, Mar. 2024, doi: 10.1007/s44327-024-00002-w.

[15] N. E. Nwogbaga, R. Latip, L. S. Affendey, and A. R. A. Rahiman, "Investigation into the effect of data reduction in offloadable task for distributed IoT-fog-cloud computing," Journal of Cloud Computing, vol. 10, no. 1, 2021, doi: 10.1186/s13677-021-00254-6.

[16] G. Rong, Y. Xu, X. Tong, and H. Fan, "An edge-cloud collaborative computing platform for building AIoT applications efficiently," Journal of Cloud Computing, vol. 10, no. 1, 2021, doi: 10.1186/s13677-021-00250-w.

[17] A. Bourechak, O. Zedadra, M. N. Kouahla, A. Guerrieri, H. Seridi, and G. Fortino, "At the confluence of artificial intelligence and edge computing in IoT-based applications: a review and new perspectives," Sensors, vol. 23, no. 3, 2023, doi: 10.3390/s23031639.

[18] M. Merenda, C. Porcaro, and D. Iero, "Edge machine learning for AI-enabled IoT devices: a review," Sensors, vol. 20, no. 9, 2020, doi: 10.3390/s20092533.

[19] A. Elouali, H. Mora Mora, and F. J. Mora-Gimeno, "Data transmission reduction formalization for cloud offloading-based IoT systems," Journal of Cloud Computing, vol. 12, no. 1, 2023, doi: 10.1186/s13677-023-00424-8.

[20] A. Karras et al., "TinyML algorithms for big data management in large-scale IoT systems," Future Internet, vol. 16, no. 2, 2024, doi: 10.3390/fi16020042.

[21] G. Signoretti, M. Silva, P. Andrade, I. Silva, E. Sisinni, and P. Ferrari, "An evolving TinyML compression algorithm for IoT environments based on data eccentricity," Sensors, vol. 21, no. 12, 2021, doi: 10.3390/s21124153.

[22] E. C. P. Neto, S. Dadkhah, R. Ferreira, A. Zohourian, R. Lu, and A. A. Ghorbani, "CICIoT2023: a real-time dataset and benchmark for large-scale attacks in IoT environment," Sensors, vol. 23, no. 13, 2023, doi: 10.3390/s23135941.

[23] A. Nasif, Z. A. Othman, and N. S. Sani, "The deep learning solutions on lossless compression methods for alleviating data load on IoT nodes in smart cities," Sensors, vol. 21, no. 12, 2021, doi: 10.3390/s21124223.

[24] S. H. Hwang, K. M. Kim, S. Kim, and J. W. Kwak, "Lossless data compression for time-series sensor data based on dynamic bit packing," Sensors, vol. 23, no. 20, 2023, doi: 10.3390/s23208575.

[25] S. A. Sayed, Y. Abdel-Hamid, and H. A. Hefny, "Artificial intelligence-based traffic flow prediction: a comprehensive review," Journal of Electrical Systems and Information Technology, vol. 10, no. 1, 2023, doi: 10.1186/s43067-023-00081-6.

[26] H. Zhang, J. Na, and B. Zhang, "Autonomous internet of things (IoT) data reduction based on adaptive threshold," Sensors, vol. 23, no. 23, 2023, doi: 10.3390/s23239427.

[27] R. E. Bosch, J. R. Ponce, A. S. Estévez, J. M. B. Rodríguez, V. H. Bosch, and J. F. T. Alarcón, "Data compression in the NEXT-100 data acquisition system," Sensors, vol. 22, no. 14, 2022, doi: 10.3390/s22145197.

[28] K. B. Adedeji, "Performance evaluation of data compression algorithms for IoT-based smart water network management applications," Journal of Applied Science and Process Engineering, vol. 7, no. 2, pp. 554–563, 2020, doi: 10.33736/jaspe.2272.2020.

[29] A. Abdo, T. S. Karamany, and A. Yakoub, "A hybrid approach to secure and compress data streams in cloud computing environment," Journal of King Saud University - Computer and Information Sciences, vol. 36, no. 3, 2024, doi: 10.1016/j.jksuci.2024.101999.

[30] K. M. R. Chowdary, V. Tiwari, and M. Jebarani, "Edge computing by using LZW algorithm," International Journal of Advance Research, Ideas, and Innovations in Technology, vol. 5, no. 1, pp. 228–230, 2019.

[31] S. Zafar, N. Iftekhar, A. Yadav, A. Ahilan, S. N. Kumar, and A. Jeyam, "An IoT method for telemedicine: lossless medical image compression using local adaptive blocks," IEEE Sensors Journal, vol. 22, no. 15, pp. 15345–15352, 2022, doi: 10.1109/JSEN.2022.3184423.

[32] J. H. Lee, J. Kong, and A. Munir, "Arithmetic coding-based 5-bit weight encoding and hardware decoder for CNN inference in edge devices," IEEE Access, vol. 9, pp. 166736–166749, 2021, doi: 10.1109/ACCESS.2021.3136888.

[33] L. M. Candanedo, V. Feldheim, and D. Deramaix, "Data driven prediction models of energy use of appliances in a low-energy house," Energy and Buildings, vol. 140, pp. 81–97, 2017, doi: 10.1016/j.enbuild.2017.01.083.

BIOGRAPHIES OF AUTHORS

Salma Firdose is an Assistant Professor in the School of Information Science at Presidency University, Bangalore. She received her Doctor of Philosophy (Ph.D.) in Software Engineering from Bharathiar University in 2019. She has more than 15 years of teaching experience at national and international universities and has published several national and international papers. Her areas of specialization are software engineering, networking, and operating systems. She can be contacted at email: salma.firdose@presidencyuniversity.in.

Shailendra Mishra is a Professor in the College of Computer and Information Science, Majmaah University, Majmaah, Kingdom of Saudi Arabia. He received Ph.D. degrees in Computer Science and in Computer Science and Engineering in 2007 and 2011 from Gurukul Kangri University, Uttarakhand, India, and Uttarakhand Technical University, Dehradun, India, respectively. He received the Young Scientist Award in 2006 and 2008 from the Department of Science and Technology, UCOST, Government of Uttarakhand, India. He has published and presented 76 research papers in international journals and international conferences and has written more than 10 articles on various topics in national magazines. He can be contacted at email: s.mishra@mu.edu.sa.