This document provides an overview of image analysis and pattern recognition techniques for remote sensing applications using algorithms in ENVI/IDL. It covers topics such as image statistics, transformations like Fourier transforms and principal components analysis, radiometric enhancement, topographic modeling, image registration, sharpening, change detection, classification (both unsupervised and supervised), and hyperspectral analysis. The intended audience is researchers and practitioners working with remote sensing images.

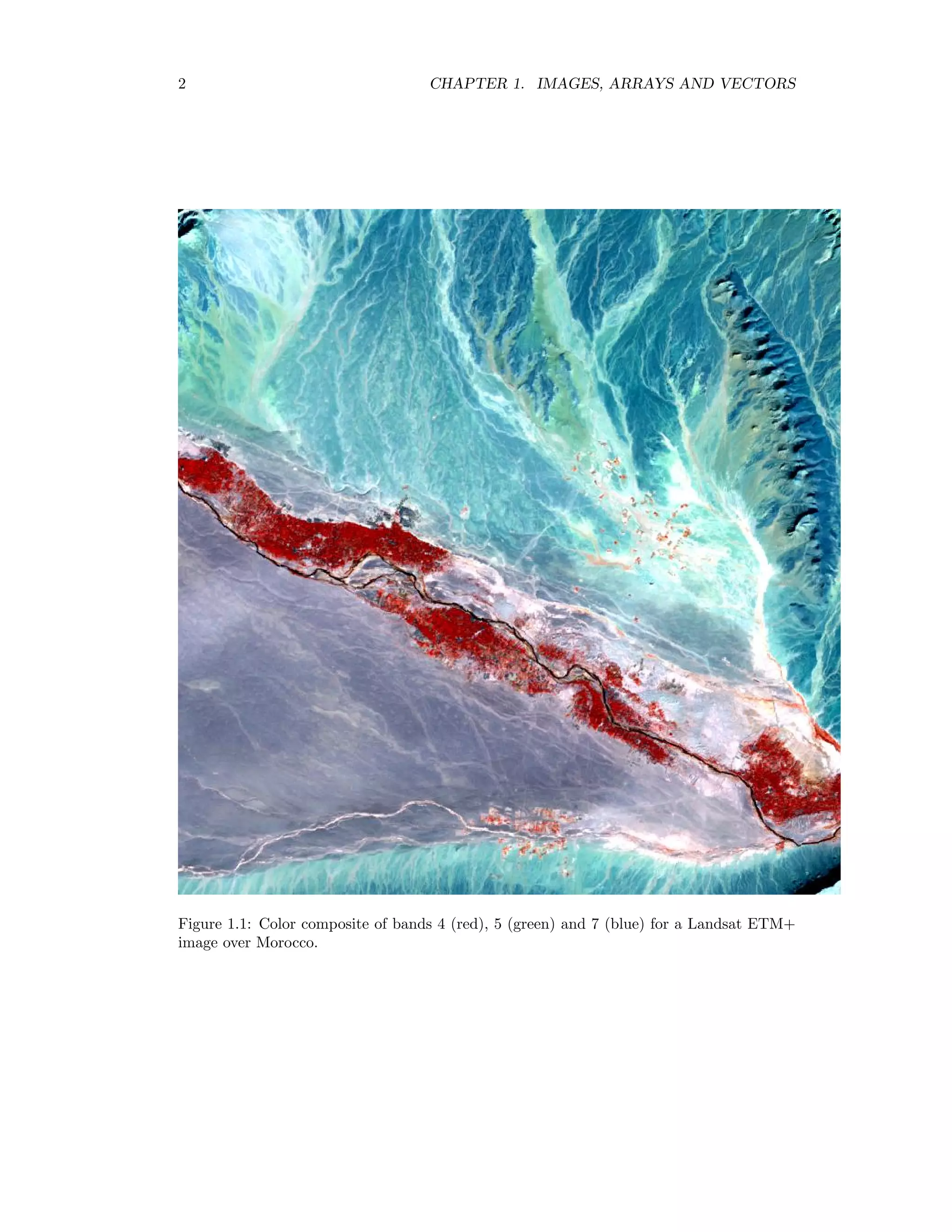

![1.1. MULTISPECTRAL SATELLITE IMAGES 3 or digital number g and at-sensor radiance f is determined by the sensor calibration as measured (and maintained) by the satellite image provider: f = Cg(i, j) + fmin where C = (fmax − fmin)/255, in which fmax and fmin are maximum and minimum mea- surable radiances at the sensor. Atmospheric scattering and absorption models are used to calculate surface reflectance from the observed at-sensor radiance, as it is the reflectance which is directly related to the physical properties of the surface being examined. Various conventions can be used for storing the image array g(i, j) in computer memory or on storage media. In band interleaved by pixel (BIP) format, for example, a two-channel, 3 × 3 pixel image would be stored as g1(1, 1) g2(1, 1) g1(2, 1) g2(2, 1) g1(3, 1) g2(3, 1) g1(1, 2) g2(1, 2) g1(2, 2) g2(2, 2) g1(3, 2) g2(3, 2) g1(1, 3) g2(1, 3) g1(2, 3) g2(2, 3) g1(3, 3) g2(3, 3), whereas in band interleaved by line (BIL) it would be stored as g1(1, 1) g1(2, 1) g1(3, 1) g2(1, 1) g2(2, 1) g2(3, 1) g1(1, 2) g1(2, 2) g1(3, 2) g2(2, 1) g1(2, 2) g2(2, 3) g1(1, 3) g2(2, 3) g1(3, 3) g2(3, 1) g1(2, 3) g2(3, 3), and in band sequential (BSQ) format it is stored as g1(1, 1) g1(2, 1) g1(3, 1) g1(1, 2) g1(2, 2) g1(3, 2) g1(1, 3) g1(2, 3) g1(3, 3) g2(1, 1) g2(2, 1) g2(3, 1) g2(1, 2) g2(2, 2) g2(3, 2) g2(1, 3) g2(2, 3) g2(3, 3). In the computer language IDL, so-called row major indexing is used for arrays and the elements in an array are numbered from zero. This means that, if a gray-scale image g is stored in an IDL array variable G, then the intensity value g(i, j) is addressed as G[i-1,j-1]. An N-band multispectral image is stored in BIP format as an N × c × r array in IDL, in BIL format as a c × N × r and in BSQ format as an c × r × N array. Auxiliary information, such as image acquisition parameters and georeferencing, is nor- mally included with the image data on the same file, and the format may or may not make use of compression algorithms. Examples are the geoTIFF1 file format used for example by Space Imaging Inc. for distributing Carterra(c) imagery and which includes lossless compres- sion, the HDF (Hierachical Data Format) in which for example ASTER images are distributed and the cross-platform PCDSK format employed by PCI Geomatics with its image process- ing software, which is in plain ASCII code and not compressed. ENVI uses a simple “flat binary” file structure with an additional ASCII header file. 1geoTIFF refers to TIFF files which have geographic (or cartographic) data embedded as tags within the TIFF file. The geographic data can then be used to position the image in the correct location and geometry on the screen of a geographic information display.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-11-2048.jpg)

![1.2. ALGEBRA OF VECTORS AND MATRICES 5 IDL x=[[1],[2]] IDL print,x 1 2 IDL print,transpose(x) 1 2 b X x yθ x cos θ Figure 1.3: The inner product. The inner product can be written in terms of the vector lengths and the angle θ between the two vectors as x y = |x||y| cos θ = xy cos θ, see Fig. 1.3. If θ = 90o the vectors are orthogonal so that x y = 0. Any vector can be decomposed into orthogonal unit vectors: x = x1 x2 = x1 1 0 + x2 0 1 . A two-by-two matrix is written A = a11 a12 a21 a22 . When a matrix is multiplied with a vector the result is another vector, e.g. Ax = a11 a12 a21 a22 x1 x2 = a11x1 + a12x2 a21x1 + a22x2 . The IDL operator for matrix and vector multiplication is ##. IDL a=[[1,2],[3,4]] IDL print,a 1 2 3 4 IDL print,a##x 5 11](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-13-2048.jpg)

![1.3. EIGENVALUES AND EIGENVECTORS 7 matrices. As we will see later, covariance matrices are always symmetric. A matrix A is symmetric if it doesn’t change when it is transposed, i.e. if A = A . Very often we have to solve the so-called eigenvalue problem, which is to find eigenvectors x and eigenvalues λ that satisfy the equation Ax = λx or, equivalently, a11 a12 a21 a22 x1 x2 = λ x1 x2 . This is the same as the two equations (a11 − λ)x1 + a12x2 = 0 a21x1 + (a22 − λ)x2 = 0. (1.2) If we eliminate x1 and make use of the symmetry a12 = a21, we obtain [(a11 − λ)(a22 − λ) − a2 12]x2 = 0. In general x2 = 0, so we must have (a11 − λ)(a22 − λ) − a2 12 = 0, which is known as the characteristic equation for the eigenvalue problem. It is a quadratic equation in λ with solutions λ(1) = 1 2 a11 + a22 + (a11 + a22)2 − 4(a11a22 − a2 12) λ(2) = 1 2 a11 + a22 − (a11 + a22)2 − 4(a11a22 − a2 12) . (1.3) Thus there are two eigenvalues and, correspondingly, two eigenvectors x(1) and x(2) , which can be obtained by substituting λ(1) and λ(2) into (1.2) and solving for x1 and x2. It is easy to show that the eigenvalues are orthogonal (x(1) ) x(2) = 0. The matrix formed by the two eigenvectors, u = (x(1) , x(2) ) = x (1) 1 x (2) 1 x (1) 2 x (2) 2 , is said to diagonalize the matrix a. That is u Au = λ(1) 0 0 λ(2) . (1.4) We can illustrate the whole procedure in IDL as follows:](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-15-2048.jpg)

![8 CHAPTER 1. IMAGES, ARRAYS AND VECTORS IDL a=float([[1,2],[2,3]]) IDL print,a 1.00000 2.00000 2.00000 3.00000 IDL print,eigenql(a,eigenvectors=u,/double) 4.2360680 -0.23606798 IDL print,transpose(u)##a##u 4.2360680 -2.2204460e-016 -1.6653345e-016 -0.23606798 Note that, after diagonalization, the off-diagonal elements are not precisely zero due to rounding errors in the computation. All of the above properties generalize easily to N dimensions. 1.4 Finding minima and maxima In order to maximize some desirable property of a multispectral image, such as signal to noise or spread in intensity, we often need to take derivatives of vectors. A vector (partial) derivative in two dimensions is written ∂ ∂x and is defined as the vector ∂ ∂x = 1 0 ∂ ∂x1 + 0 1 ∂ ∂x2 . Many of the operations with vector derivatives correspond exactly to operations with or- dinary scalar derivatives (They can all be verified easily by writing out the expressions component-by component): ∂ ∂x (x y) = y analogous to ∂ ∂x xy = y ∂ ∂x (x x) = 2x analogous to ∂ ∂x x2 = 2x The scalar expression x Ay, where A is a matrix, is called a quadratic form. We have ∂ ∂x (x Ay) = Ay ∂ ∂y (x Ay) = A x and ∂ ∂x (x Ax) = Ax + A x. Note that, if A is a symmetrix matrix, this last equation can be written ∂ ∂x (x Ax) = 2Ax. Suppose x∗ is a critical point of the function f(x), i.e. d dx f(x∗ ) = d d f(x) x=x∗ = 0, (1.5)](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-16-2048.jpg)

![10 CHAPTER 1. IMAGES, ARRAYS AND VECTORS However suppose that x is constrained by the equation g(x) = 0. For example, we might have g(x) = x2 1 + x2 2 − 1 = 0 which constrains x to lie on a circle of radius 1. Finding an minimum of f subject to g = 0 is equivalent to finding an unconstrained minimum of f(x) + λg(x), (1.9) where λ is called a Lagrange multiplier and is treated like an additional variable, see [Mil99]. That is, we solve the set of equations ∂ ∂xi (f(x) + λg(x)) = 0, i = 1, 2 ∂ ∂λ (f(x) + λg(x)) = 0. (1.10) The latter equation is just g(x) = 0. For example, let f(x) = ax2 1 + bx2 2 and g(x) = x1 + x2 − 1. Then we get the three equations ∂ ∂x1 (f(x) + λg(x)) = 2ax1 + λ = 0 ∂ ∂x2 (f(x) + λg(x)) = 2bx2 + λ = 0 ∂ ∂λ (f(x) + λg(x)) = x1 + x2 − 1 = 0 The solution is x1 = b a + b , x2 = a a + b .](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-18-2048.jpg)

![14 CHAPTER 2. IMAGE STATISTICS For continuous random variables, such as the measured radiance at a satellite sensor, the distribution function is not expressed in terms of discrete probabilities, but rather in terms of a probability density function p(x), where p(x)dx is the probability that the value of the random variable X lies in the interval [x, x + dx]. Then P(x) = Pr(X ≤ x) = x −∞ p(t)dt and, of course, P(−∞) = 0, P(∞) = 1. Two random variables X and Y are said to be independent when Pr(X ≤ x and Y ≤ y) = Pr(X ≤ x, Y ≤ y) = P(x)P(y). The mean or expected value of a random variable X is written X and is defined in terms of the probability density function: X = ∞ −∞ xp(x)dx. The variance of X, written var(X) is defined as the expected value of the random variable (X − X )2 , i.e. var(X) = (X − X )2 . In terms of the probability density function, it is given by var(X) = ∞ −∞ (x − X )2 p(x)dx. Two simple but very useful identities follow from the definition of variance: var(X) = X2 − X 2 var(aX) = a2 var(X). (2.1) 2.2 The normal distribution It is often the case that random variables are well-described by the normal or Gaussian probability density function p(x) = 1 √ 2πσ exp(− 1 2σ2 (x − µ)2 ). In that case X = µ, var(X) = σ2 . The expected value of pixel intensities G(x) = G1(x) G2(x) ... GN (x) ,](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-22-2048.jpg)

![16 CHAPTER 2. IMAGE STATISTICS This is obvious in two dimensions, since we have var(a G) = cov(a1G1 + a2G2, a1G1 + a2G2) = a2 1var(G1) + a1a2cov(G1, G2) + a1a2cov(G2, G1) + a2 2var(G2) = (a1, a2) var(G1) cov(G1, G2) cov(G2, G1) var(G2) a1 a2 . Variance is always nonnegative and the vector a in (2.2) is arbitrary, so we have a Σa ≥ 0 for all a. The covariance matrix is therefore said to be positive semi-definite. The correlation matrix C is similar to the covariance matrix, except that each matrix element (i, j) is normalized to var(Gi)var(Gj). In two dimensions C = 1 ρ12 ρ21 1 = 1 cov(G1,G2) √ var(G1)var(G2) cov(G2,G1) √ var(G1)var(G2) 1 = 1 σ12 σ1σ2 σ21 σ1σ2 1 . The following ENVI/IDL program calculates and prints out the covariance matrix of a multispectral image: envi_select, title=’Choose multispectral image’,fid=fid,dims=dims,pos=pos if (fid eq -1) then return num_cols = dims[2]-dims[1]+1 num_rows = dims[4]-dims[3]+1 num_pixels = (num_cols*num_rows) num_bands = n_elements(pos) samples=intarr(num_bands,n_elements(num_pixels)) for i=0,num_bands-1 do samples[i,*]=envi_get_data(fid=fid,dims=dims,pos=pos[i]) print, correlate(samples,/covariance,/double) end ENVI .GO 111.46663 82.123236 159.58377 133.80637 82.123236 64.532431 124.84815 104.45298 159.58377 124.84815 246.18004 205.63420 133.80637 104.45298 205.63420 192.70367 2.3 A special function If n is an integer, the factorial of n is defined by n! = n(n − 1) · · · 1, 1! = 0! = 1. The generalization of this to non-integers z is the gamma function Γ(z) = ∞ 0 tz−1 e−t dt. It has the property Γ(z + 1) = zΓ(z).](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-24-2048.jpg)

![3.2. WAVELETS 23 Thus we can write g(j) = 1 c c−1 k=0 ˆg(k)ei2πkj/c , j = 0 . . . c − 1, (3.4) if we interpret ˆg(k) → ˆg(k − c) when k ≥ c/2. The solution to (3.4) for the complex frequency components ˆg(k) is called the discrete Fourier transform and is given by ˆg(k) = c−1 j=0 g(j)e−i2πkj/c , k = 0 . . . c − 1. (3.5) This follows from the following orthogonality property: c−1 j=0 ei2π(k−k )j/c = cδk,k . (3.6) Eq. (3.4) itself is the discrete inverse Fourier transform. The discrete analog of Parsival’s formula is c−1 k=0 |ˆg(k)|2 = 1 c c−1 j=0 g(j)2 . (3.7) Determining the frequency components in (3.5) would appear to involve, in all, c2 floating point multiplication operations. The fast Fourier transform (FFT) exploits the structure of the complex e-functions to reduce this to order c log c, see for example [PFTV86]. 3.1.2 Discrete Fourier transform of an image The discrete Fourier transform is easily generalized to two dimensions for the purpose of image analysis. Let g(i, j), i, j = 0 . . . c − 1, represent a (quadratic) gray scale image. Its discrete Fourier transform is ˆg(k, ) = c−1 i=0 c−1 j=0 g(i, j)e−i2π(ik+j )/c (3.8) and the corresponding inverse transform is g(i, j) = 1 c2 c−1 k=0 c−1 =0 ˆg(k, )ei2π(ik+j )/c . (3.9) 3.2 Wavelets Unlike the Fourier transform, which represents a signal (array of pixel intensities) in terms of pure frequency functions, the wavelet transform expresses the signal in terms of functions which are restricted both in terms of frequency and spatial extent. In many applications, this turns out to be particularly efficient and useful. We’ll see an example of this in Chapter 7, where we discuss image fusion in more detail. The wavelet transform is discussed in Appendix B.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-31-2048.jpg)

![24 CHAPTER 3. TRANSFORMATIONS 3.3 Principal components The principal components transformation forms linear combinations of multispectral pixel intensities which are mutually uncorrelated and which have maximum variance. We assume without loss of generality that G = 0, so that the covariance matrix of a multispectral image is is Σ = GG , and look for a linear combination Y = a G with maximum variance, subject to the normalization condition a a = 1. Since the covariance of Y is a Σa, this is equivalent to maximizing an unconstrained Lagrange function, see Section 1.4, L = a Σa − 2λ(a a − 1). The maximum of L occurs at that value of a for which ∂L ∂a = 0. Recalling the rules for vector differentiation, ∂L ∂a = 2Σa − 2λa = 0 which is the eigenvalue problem Σa = λa. Since Σ is real and symmetric, the eigenvectors are orthogonal (and normalized). Denote them a1 . . . aN for eigenvalues λ1 ≥ . . . ≥ λN . Define the matrix A = (a1 . . . aN ), AA = I, and let the the transformed principal component vector be Y = A G with covariance matrix Σ . Then we have Σ = YY = A GG A = A ΣA = Diag(λ1 . . . λN ) = λ1 0 · · · 0 0 λ2 · · · 0 ... ... ... ... 0 0 · · · λN =: Λ. The fraction of the total variance in the original multispectral image which is described by the first i principal components is λ1 + . . . + λi λ1 + . . . + λi + . . . + λN . If the original multispectral channels are highly correlated, as is usually the case, the first few principal components will account for a very high percentage of the variance the image. For example, a color composite of the first 3 principal components of a LANDSAT TM scene displays essentially all of the information contained in the 6 spectral components in one single image. Nevertheless, because of the approximation involved in the assumption of a normal distribution, higher order principal components may also contain significant information [JRR99]. The principal components transformation can be performed directly from the ENVI main menu. However the following IDL program illustrates the procedure in detail: ; Principal components analysis envi_select, title=’Choose multispectral image’, $](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-32-2048.jpg)

![3.4. MINIMUM NOISE FRACTION 25 fid=fid, dims=dims,pos=pos if (fid eq -1) then return num_cols = dims[2]+1 num_lines = dims[4]+1 num_pixels = (num_cols*num_lines) num_channels = n_elements(pos) image=intarr(num_channels,num_pixels) for i=0,num_channels-1 do begin temp=envi_get_data(fid=fid,dims=dims,pos=pos[i]) m = mean(temp) image[i,*]=temp-m endfor ; calculate the transformation matrix A sigma = correlate(image,/covariance,/double) lambda = eigenql(sigma,eigenvectors=A,/double) print,’Covariance matrix’ print, sigma print,’Eigenvalues’ print, lambda print,’Eigenvectors’ print, A ; transform the image image = image##transpose(A) ; reform to BSQ format PC_array = bytarr(num_cols,num_lines,num_channels) for i = 0,num_channels-1 do PC_array[*,*,i] = $ reform(image[i,*],num_cols,num_lines,/overwrite) ; output the result to memory envi_enter_data, PC_array end 3.4 Minimum noise fraction Principal components analysis maximizes variance. This doesn’t always lead to images of decreasing image quality (i.e. of increasing noise). The MNF transformation minimizes the noise content rather than maximizing variance, so, if this is the desired criterion, it is to be preferred over PCA. Suppose we can represent a gray scale image G with covariance matrix Σ and zero mean as a sum of uncorrelated signal and noise noise components G = S + N,](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-33-2048.jpg)

![26 CHAPTER 3. TRANSFORMATIONS both normally distributed, with covariance matrices ΣS and ΣN and zero mean. Then we have Σ = GG = (S + N)(S + N) = SS + NN , since noise and signal are uncorrelated, i.e. SN = NS = 0. Thus Σ = ΣS + ΣN . (3.10) Now let us seek a linear combination a G for which the signal to noise ratio SNR = var(a S) var(a N) = a ΣSa a ΣN a is maximized. From (3.10) we can write this in the form SNR = a Σa a ΣN a − 1. (3.11) Differentiating we get ∂ ∂a SNR = 1 a ΣN a 1 2 Σa − a Σa (a ΣN a)2 1 2 ΣN a = 0, or, equivalently, (a ΣN a)Σa = (a Σa)ΣN a . This condition is met when a solves the generalized eigenvalue problem ΣN a = λΣa. (3.12) Both ΣN and Σ are symmetric and the latter is also positive definite. Its Cholesky factor- ization is Σ = LL , where L is a lower triangular matrix, and can be thought of as the “square root” of Σ. Such an L always exists is Σ is positive definite. With this, we can write (3.12) as ΣN a = λLL a or, equivalently, L−1 ΣN (L )−1 L a = λL a or, with b = L a and commutivity of inverse and transpose, [L−1 ΣN (L−1 ) ]b = λb, a standard eigenproblem for a real, symmetric matrix L−1 ΣN (L−1 ) . From (3.11) we see that the SNR for eigenvalue λi is just SNRi = ai Σai ai (λiΣai) − 1 = 1 λi − 1. Thus the eigenvector ai corresponding to the smallest eigenvalue λi will maximize the signal to noise ratio. Note that (3.12) can be written in the form ΣN A = ΣAΛ, (3.13)](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-34-2048.jpg)

![3.5. MAXIMUM AUTOCORRELATION FACTOR (MAF) 29 Differentiating, ∂R ∂a = 1 a Σa 1 2 Σ∆a − a Σ∆a (a Σa)2 1 2 Σa = 0 or (a Σa)Σ∆a = (a Σ∆a)Σa. This condition is met when a solves the generalized eigenvalue problem Σ∆a = λΣa, (3.18) which is seen to have the same form as (3.12). Again both Σ∆ and Σ are symmetric and the latter is also positive definite and we obtain the standard eigenproblem [L−1 Σ∆(L−1 ) ]b = λb, for the real, symmetric matrix L−1 Σ∆(L−1 ) . Let the eigenvalues be λ1 ≥ . . . λN and the corresponding (orthogonal) eigenvectors be bi. We have 0 = bi bj = ai LL aj = ai Σaj, i = j, and therefore cov(ai G(x), aj G(x)) = ai Σaj = 0, i = j, so that the MAF components are orthogonal (uncorrelated). Moreover with equation (2.14) we have corr(ai G(x), ai G(x + ∆)) = 1 − 1 2 λi, and the first MAF component has minimum autocorrelation. An ENVI plug-in for performing the MAF transformation is given in Ap- pendix D.5.2.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-37-2048.jpg)

![30 CHAPTER 3. TRANSFORMATIONS Exercises 1. Show that, for x(t) = sin(2πt) in Eq. (2.2), ˆx(−1) = − 1 2i , ˆx(1) = 1 2i , and ˆx(k) = 0 otherwise. 2. Calculate the discrete Fourier transform of the sequence 2, 4, 6, 8 from (3.4). You have to solve four simultaneous equations, the first of which is 2 = 1 4 ˆg(0) + ˆg(1) + ˆg(2) + ˆg(3) . Verify your result in IDL with the command print, FFT([2,4,6,8])](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-38-2048.jpg)

![Chapter 4 Radiometric enhancement 4.1 Lookup tables Figure 4.1: Contrast enhancement with a lookup table represented as the continuous function f(x) [JRR99]. Intensity enhancement of an image is easily accomplished by means of lookup tables. For byte-encoded data, the pixel intensities g are used to index an array LUT[k], k = 0 . . . 255, the entries of which also lie between 0 and 255. These entries can be chosen to implement linear stretching, saturation, histogram equalization, etc. according to ˆgk(i, j) = LUT[gk(i, j)], 0 ≤ i ≤ r − 1, 0 ≤ j ≤ c − 1. 31](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-39-2048.jpg)

![32 CHAPTER 4. RADIOMETRIC ENHANCEMENT It is also useful to think of the the lookup table as an approximately continuous function y = f(x). If hin(x) is the histogram of the original image and hout(y) is the histogram of the image after transformation through the lookup table, then, since the number of pixels is constant, hout(y) dy = hin(x) dx, see Fig.4.1 4.1.1 Histogram equalization For histogram equalization, we want hout(y) to be constant independent of y. Hence dy ∼ hin(x) dx and y = f(x) ∼ x 0 hin(t)dt. The lookup table y for histogram equalization is thus proportional to the cumulative sum of the original histogram. 4.1.2 Histogram matching Figure 4.2: Steps required for histogram matching [JRR99]. It is often desirable to match the histogram of one image to that of another so as to make their apparent brightnesses as similar as possible, for example when the two images](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-40-2048.jpg)

![4.2. CONVOLUTIONS 33 are combined in a mosaic. We can do this by first equalizing both the input histogram hin(x) and the reference histogram href (y) with the cumulative lookup tables z = f(x) and z = g(y), respectively. The required lookup table is then y = g−1 (z) = g−1 (f(x)). The necessary steps for implementing this function are illustrated in Fig. 1.5 taken from [JRR99]. 4.2 Convolutions With the convention ω = 2πk/c we can write (3.5) in the form ˆg(ω) = c−1 j=0 g(j)e−iωj . (4.1) The convolution of g with a filter h = (h(0), h(1), . . .) is defined by f(j) = k h(k)g(j − k) =: h ∗ g, (4.2) where the sum is over all nonzero elements of the filter h. If the number of nonzero elements is finite, we speak of a finite impulse response filter (FIR). Theorem 1 (Convolution theorem) In the frequency domain, convolution is replaced by multiplication: ˆf(ω) = ˆh(ω)ˆg(ω). Proof: ˆf(ω) = j f(j)e−iωj = j,k h(k)g(j − k)e−iωj ˆh(ω)ˆg(ω) = k h(k)e−iωk g( )e−iω = k, h(k)g( )e−iω(k+ ) = k,j h(k)g(j − k)e−iωj = ˆf(ω). This can of course be generalized to two dimensional images, so that there are three basic steps involved in image filtering: 1. The image and the convolution filter are transformed from the spatial domain to the frequency domain using the FFT. 2. The transformed image is multiplied with the frequency filter. 3. The filtered image is transformed back to the spatial domain.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-41-2048.jpg)

![34 CHAPTER 4. RADIOMETRIC ENHANCEMENT We often distinguish between low-pass and high-pass filters. Low pass filters perform some sort of averaging. The simplest example is h = (1/2, 1/2, 0 . . .), which computes the average of two consecutive pixels. A high-pass filter computes differences of nearby pixels, e.g. h = (1/2, −1/2, 0 . . .). Figure 4.3 shows the Fourier transforms of these two simple filters generated by the the IDL program ; Hi-Lo pass filters x = fltarr(64) x[0]=0.5 x[1]=-0.5 p1 =abs(FFT(x)) x[1]=0.5 p2 =abs(FFT(x)) envi_plot_data,lindgen(64),[[p1],[p2]] end Figure 4.3: Low-pass(red) and high-pass (white) filters in the frequency domain. The quan- tity |ˆh(k)|2 is plotted as a function of k. The highest frequency is at the center of the plots, k = c/2 = 32 . 4.2.1 Laplacian of Gaussian filter We shall illustrate image filtering with the so-called Laplacian of Gaussian (LoG) filter, which will be used in Chapter 6 to implement contour matching for automatic determination of ground control points. To begin with, consider the gradient operator for a two-dimensional image: = ∂ ∂x = i ∂ ∂x1 + j ∂ ∂x2 ,](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-42-2048.jpg)

![4.2. CONVOLUTIONS 35 where i and j are unit vectors in the vertical and horizontal directions, respectively. g(x) is a vector in the direction of the maximum rate of change of gray scale intensity. Since the intensity values are discrete, the partial derivatives must be approximated. For example we can use the Sobel operators: ∂g(x) ∂x1 ≈ [g(i − 1, j − 1) + 2g(i, j − 1) + g(i + 1, j − 1)] − [g(i − 1, j + 1) + 2g(i, j + 1) + g(i + 1, j + 1)] = 2(i, j) ∂g(x) ∂x2 ≈ [g(i − 1, j − 1) + 2g(i − 1, j) + g(i − 1, j + 1)] − [g(i + 1, j − 1) + 2g(i + 1, j) + g(i + 1, j + 1)] = 1(i, j) which are equivalent to the two-dimensional FIR filters h1 = −1 0 1 −2 0 2 −1 0 1 and h2 = 1 2 1 0 0 0 −1 −2 −1 , respectively. The magnitude of the gradient is | | = 2 1 + 2 2. Edge detection can be achieved by calculating the filtered image f(i, j) = | |(i, j) and setting an appropriate threshold. Figure 4.4: Laplacian of Gaussian filter.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-43-2048.jpg)

![36 CHAPTER 4. RADIOMETRIC ENHANCEMENT Now consider the second derivatives of the image intensities, which can be represented formally by the Laplacian 2 = · = ∂2 ∂x2 1 + ∂2 ∂x2 2 . 2 g(x) is a scalar quantity which is zero whenever the gradient is maximum. Therefore changes in intensity from dark to light or vice versa correspond to sign changes in the Laplacian and these can also be used for edge detection. The Laplacian can also be ap- proximated by a FIR filter, however such filters tend to be very sensitive to image noise. Usually a low-pass Gauss filter is first used to smooth the image before the Laplacian filter is applied. It is more efficient, however, to calculate the Laplacian of the Gauss function itself and then use the resulting function to derive a high-pass filter. The Gauss function in two dimensions is given by 1 2πσ2 exp − 1 2σ2 (x2 1 + x2 2), where the parameter σ determines its extent. Its Laplacian is 1 2πσ6 (x2 1 + x2 2 − 2σ2 ) exp − 1 2σ2 (x2 1 + x2 2) a plot of which is shown in Fig. 4.4. The following program illustrates the application of the filter to a gray scale image, see Fig. 4.5: pro LoG sigma = 2.0 filter = fltarr(17,17) for i=0L,16 do for j=0L,16 do $ filter[i,j] = (1/(2*!pi*sigma^6))*((i-8)^2+(j-8)^2-2*sigma^2) $ *exp(-((i-8)^2+(j-8)^2)/(2*sigma^2)) ; output as EPS file thisDevice =!D.Name set_plot, ’PS’ Device, Filename=’c:tempLoG.eps’,xsize=4,ysize=4,/inches,/Encapsulated shade_surf,filter device,/close_file set_plot, thisDevice ; read a jpg image filename = Dialog_Pickfile(Filter=’*.jpg’,/Read) OK = Query_JPEG(filename,fileinfo) if not OK then return xsize = fileinfo.dimensions[0] ysize = fileinfo.dimensions[1] window,11,xsize=xsize,ysize=ysize Read_JPEG,filename,image1 image = bytarr(xsize,ysize)](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-44-2048.jpg)

![4.2. CONVOLUTIONS 37 image[*,*] = image1[0,*,*] tvscl,image ; run the filter filt = image*0.0 filt[0:16,0:16]=filter[*,*] image1= float(fft(fft(image)*fft(filt),1)) ; get zero-crossings and display image2 = bytarr(xsize,ysize) indices = where( (image1*shift(image1,1,0) lt 0) or (image1*shift(image1,0,1) lt 0) ) image2[indices]=255 wset, 11 tv, image2 end Figure 4.5: Image filtered with the Laplacian of Gaussian filter.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-45-2048.jpg)

![Chapter 5 Topographic modelling Satellite images are two-dimensional representations of the three-dimensional earth surface. The correct treatment of the third dimension – the elevation – is essential for terrain mod- elling and accurate georeferencing. 5.1 RST transformation Transformations of spatial coordinates1 in 3 dimensions which involve only rotations, scaling and translations can be represented by a 4 × 4 transformation matrix A v∗ = Av (5.1) where v is the column vector containing the original coordinates v = (X, Y, Z, 1) and v∗ contains the transformed coordinates v∗ = (X∗ , Y ∗ , Z∗ , 1) . For example the translation X∗ = X + X0 Y ∗ = Y + Y0 Z∗ = Z + Z0 corresponds to the transformation matrix T = 1 0 0 X0 0 1 0 Y0 0 0 1 Z0 0 0 0 1 , a uniform scaling by 50% to S = 1/2 0 0 0 0 1/2 0 0 0 0 1/2 0 0 0 0 1 , 1The following treatment closely follows Chapter 2 of Gonzales and Woods [GW02]. 39](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-47-2048.jpg)

![40 CHAPTER 5. TOPOGRAPHIC MODELLING and a simple rotation θ about the Z-axis to Rθ = cos θ sin θ 0 0 −sinθ cosθ 0 0 0 0 1 0 0 0 0 1 , etc. The complete RST transformation is then v∗ = RSTv = Av. (5.2) The inverse transformation is of course represented by A−1 . 5.2 Imaging transformations An imaging (or perspective) transformation projects 3D points onto a plane. It is used to describe the formation of a camera image and, unlike the RST transformation, is non-linear since it involves division by coordinate values. Figure 5.1: Basic imaging process, from [GW02]. In Figure 5.1, the camera coordinate system (x, y, x) is aligned with the world coordinate system, describing the terrain to be imaged. The camera focal length is λ. From sim- ple geometry we obtain expressions for the image plane coordinates in terms of the world coordinates: x = λX λ − Z y = λY λ − Z . (5.3) Solving for the X and Y world coordinates: X = x λ (λ − Z) Y = y λ (λ − Z). (5.4)](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-48-2048.jpg)

![5.3. CAMERA MODELS AND RFM APPROXIMATIONS 41 Thus, in order to extract the geographical coordinates (X, Y ) of a point on the earth’s surface from its image coordinates, we require knowledge of the elevation Z. Correcting for the elevation in this way constitutes the process of orthorectification. 5.3 Camera models and RFM approximations Equation (5.3) is overly simplified, as it assumes that the origin of world and image coordi- nates coincide. In order to apply it, one has first to transform the image coordinate system from the satellite to the world coordinate system. This is done in a straightforward way with the rotation and translation transformations introduced in Section 5.1. However it requires accurate knowledge of the height and orientation of the satellite imaging system at the time of the image acquisition (or, more exactly, during the acquisition, since the latter is normally not instantaneous). The resulting non-linear equations that relate image and world coordinates are what constitute the camera or sensor model for that particular image. Direct use of the camera model for image processing is complicated as it requires ex- tremely exact, sometimes proprietary information about the sensor system and its orbit. An alternative exists if the image provider also supplies a so-called rational function model (RFM) which approximates the camera model for each acquisition as a ratio of rational polynomials, see e.g. [TH01]. Such RFMs have the form r = f(X , Y , Z ) = a(X , Y , Z ) b(X , Y , Z ) c = g(X , Y , Z ) = c(X , Y , Z ) d(X , Y , Z ) (5.5) where c and r are the column and row (XY) coordinates in the image plane relative to an origin (c0, r0) and scaled by a factor cs resp. rs: c = c − c0 cs , r = r − r0 rs . Similarly X , Y and Z are relative, scaled world coordinates: X = X − X0 Xs , Y = Y − Y0 Ys , Z = Z − Z0 Zs . The polynomials a, b, c and d are typically to third order in the world coordinates, e.g. a(X, Y, Z) = a0 + a1X + a2Y + a3Z + a4XY + a5XZ + a6Y Z + a7X2 + a8Y 2 + a9Z2 + a10XY Z + a11X3 + a12XY 2 + a13XZ2 + a14X2 Y + a15Y 3 + a16Y Z2 + a17X2 Z + a18Y 2 Z + a19Z3 The advantage of using ratios of polynomials is that these are less subject to interpolation error. For a given acquisition the provider fits the RFM to his camera model using a three- dimensional grid of points covering the image and world spaces with a least squares fitting procedure. The RFM is capable of representing the camera model extremely well and can be used as a replacement for it. Both Space Imaging and Digital Globe provide RFMs with their high resolution IKONOS and QuickBird imagery. Below is a sample Quickbird RFM file giving the origins, scaling factors and polynomial coefficients needed in Eq. (5.5).](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-49-2048.jpg)

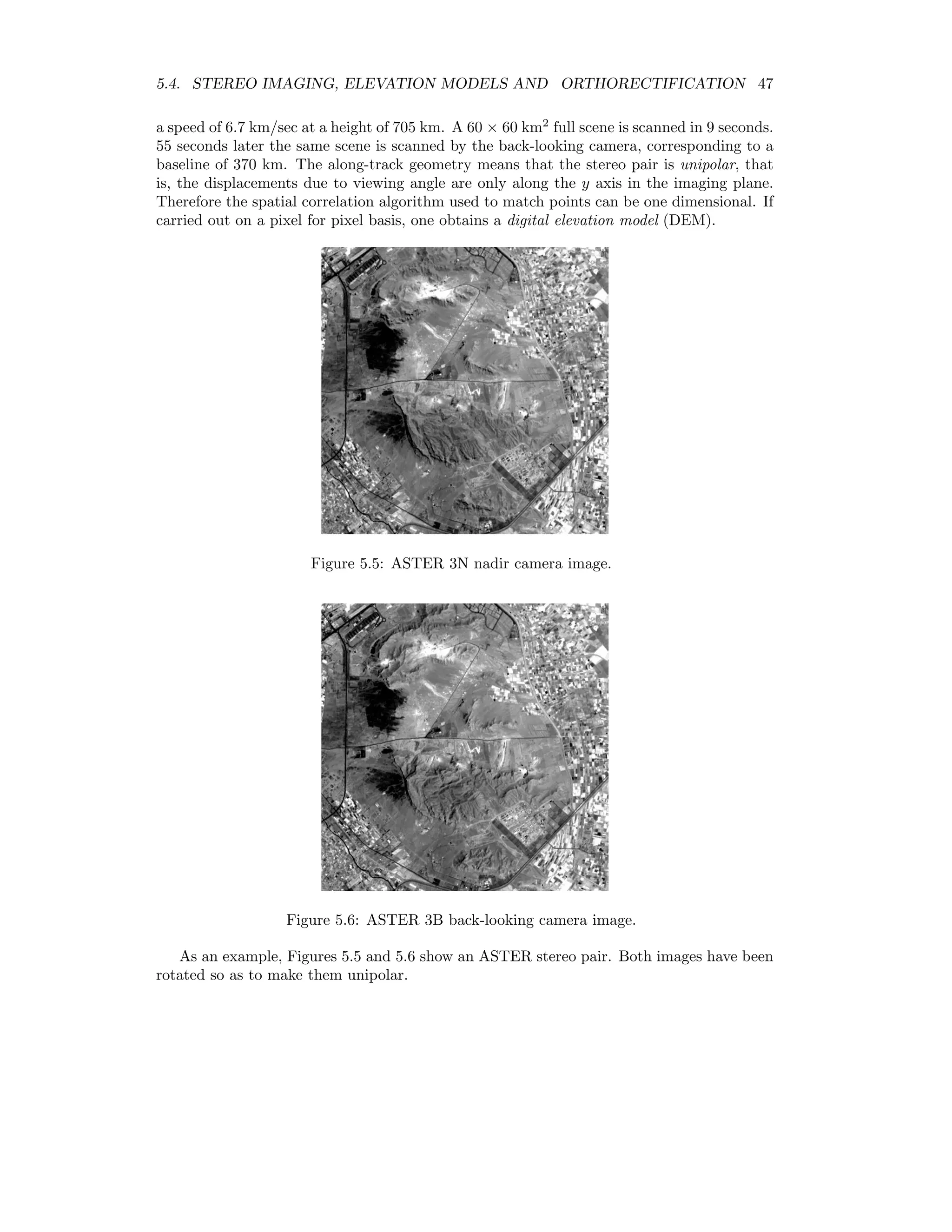

![5.4. STEREO IMAGING, ELEVATION MODELS AND ORTHORECTIFICATION 45 Figure 5.2: The stereo imaging process, from [GW02]. Figure 5.3: Top view of Figure 5.2, from [GW02].](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-53-2048.jpg)

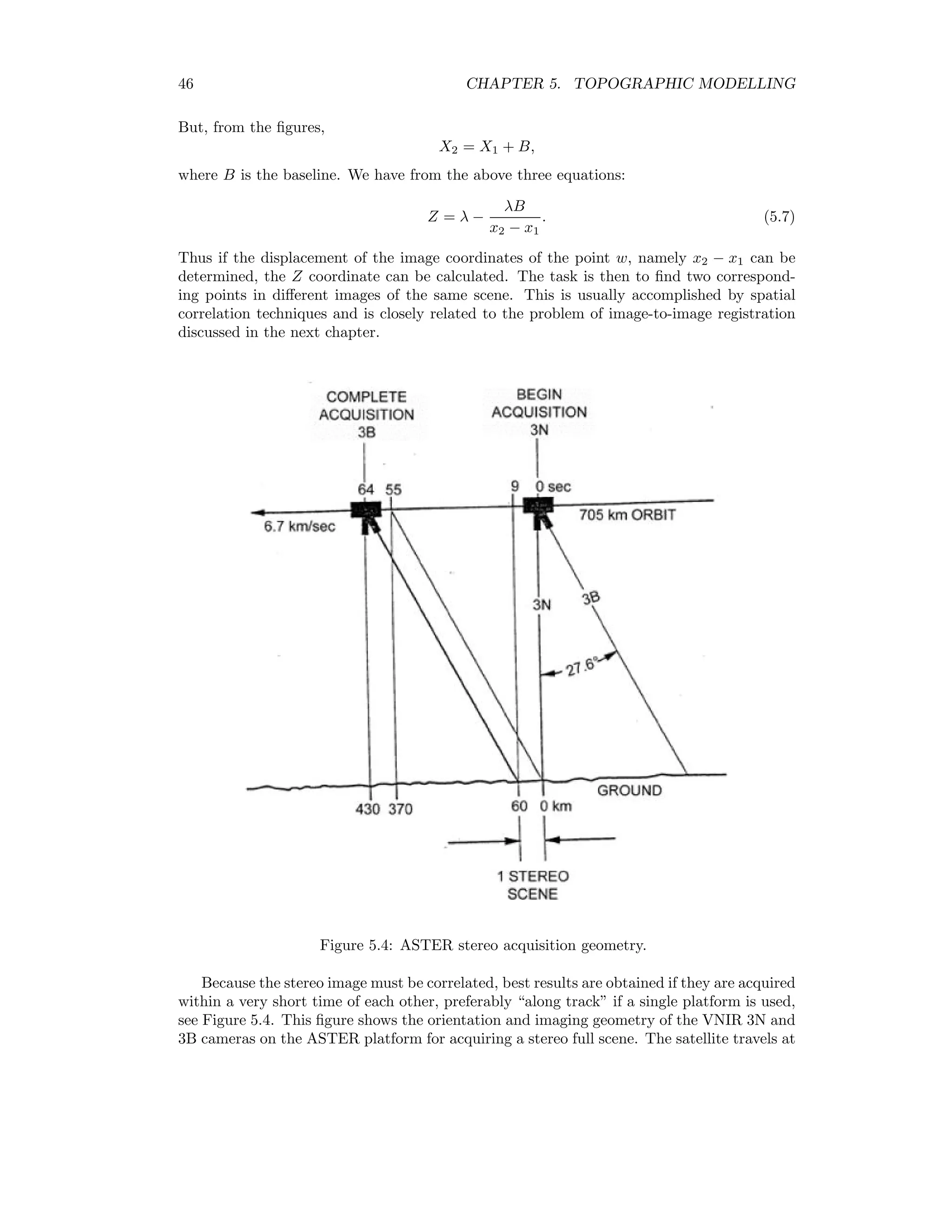

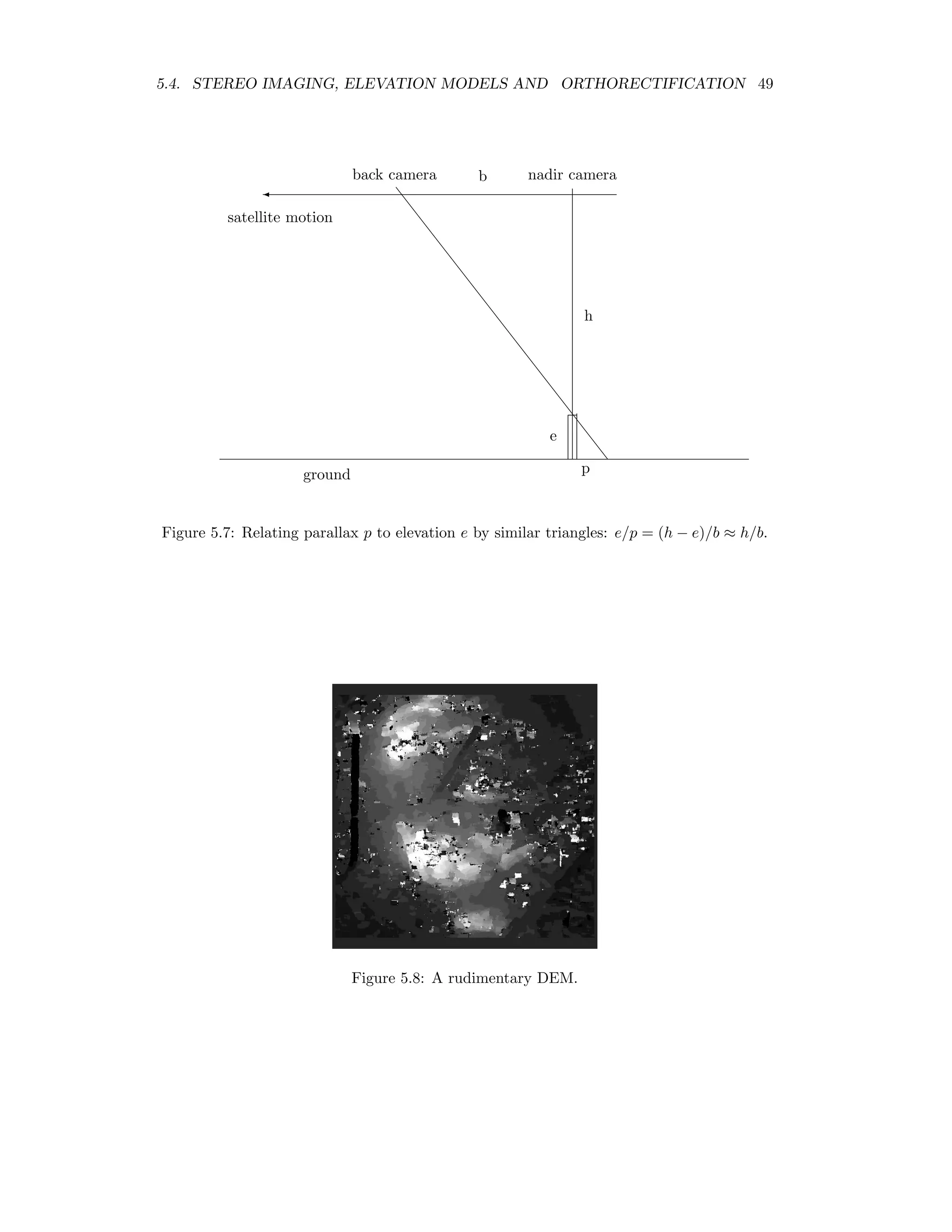

![48 CHAPTER 5. TOPOGRAPHIC MODELLING The following IDL program calculates a very rudimentary DEM: pro test_correl_images height = 705.0 base = 370.0 pixel_size = 15.0 envi_select, title=’Choose 1st image’, fid=fid1, dims=dims1, pos=pos1, /band_only envi_select, title=’Choose 2nd image’, fid=fid2, dims=dims2, pos=pos2, /band_only im1 = envi_get_data(fid=fid1,dims=dims1,pos=pos1) im2 = envi_get_data(fid=fid2,dims=dims2,pos=pos2) n_cols = dims1[2]-dims1[1]+1 n_rows = dims1[4]-dims1[3]+1 parallax = fltarr(n_cols,n_rows) progressbar = Obj_New(’progressbar’, Color=’blue’, Text=’0’,$ title=’Cross correlation, column ...’,xsize=250,ysize=20) progressbar-start for i=7L,n_cols-8 do begin if progressbar-CheckCancel() then begin envi_enter_data,pixel_size*parallax*(height/base) progressbar-Destroy return endif progressbar-Update,(i*100)/n_cols,text=strtrim(i,2) for j=25L,n_rows-26 do begin cim = correl_images(im1[i-5:i+5,j-5:j+5],im2[i-7:i+7,j-25:j+25], $ xoffset_b=0,yoffset_b=-20,xshift=0,yshift=20) corrmat_analyze,cim,xoff,yoff,m,e,p parallax[i,j] = yoff (-5.0) endfor endfor progressbar-destroy envi_enter_data,pixel_size*parallax*(height/base) end This program makes use of the routines correl images and corrmat analyze from the IDL Astronomy User’s Library2 to calculate the cross-correlation of the two images. For each pixel in the nadir image an 11 × 11 window is moved along an 11 × 51 window in the back- looking image centered at the same position. The point of maximum correlation defines the parallax or displacement p. This is related to the relative elevation e of the pixel according to e = h b p × 15m, where h is the height of the sensor and b is the baseline, see Figure 5.7. Figure 5.8 shows the result. Clearly there are many problems due to the correlation errors, however the relative elevations are approximately correct when compared to the DEM determined with the ENVI commercial add-on AsterDTM, see Figure 5.9. 2www.astro.washington.edu/deutsch/idl/htmlhelp/index.html](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-56-2048.jpg)

![5.6. ILLUMINATION CORRECTION 51 a b c d e f g h i Figure 5.10: Pixel elevations in an 8-neighborhood. The letters represent elevations. Slope/aspect determinations from a DEM are available in the ENVI main menu under Topographic/Topographic Modelling. 5.6 Illumination correction Figure 5.11: Angles involved in computation of local solar elevation, taken from [RCSA03]. Topographic modelling can be used to correct images for the effects of local solar illu- mination, which depends not only upon the sun’s position (elevation and azimuth) but also upon the local slope and aspect of the terrain being illuminated. Figure 5.11 shows the angles involved [RCSA03]. Solar elevation is θi, solar azimuth is φa, θp is the slope and φ0 is the aspect. The quantity to be calculated is the local solar elevation γi which determines](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-59-2048.jpg)

![52 CHAPTER 5. TOPOGRAPHIC MODELLING the local irradiance. From trigonometry we have cos γi = cos θp cos θi + sin θp sin θi cos(φa − φ0). (5.8) An example of a cos γi image in hilly terrain is shown in Figure 5.12. Figure 5.12: Cosine of local solar illumination angle stretched across a DEM. Let ρT represent the reflectance of the inclined surface in Figure 5.11. Then for a Lambertian surface, i.e. a surface which scatters reflected radiation uniformly in al directions, the reflectance of the corresponding horizontal surface ρH would be ρH = ρT cos θi cos γi . (5.9) The Lambertian assumption is in general not correct, the actual reflectance being de- scribed by a complicated bidirectional reflectance distribution function (BRDF). An empiri- cal appraoch which gives a better approximation to the BRDF is the C-correction [TGG82]. Let m and b be the slope and intercept of a regression line for reflectance vs. cos γi for a particular image band. Then instead of (5.9) one uses ρH = ρT cosθi + b/m cos γi + b/m . (5.10) An ENVI plug-in for illumination correction with the C-correction approxi- mation is given in Appendix D.2.2.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-60-2048.jpg)

![Chapter 6 Image Registration Image registration, either to another image or to a map, is a fundamental task in image processing. It is required for georeferencing, stereo imaging, accurate change detection, or any kind of multitemporal image analysis. Image-to-image registration methods can be divided into roughly four classes [RC96]: 1. algorithms that use pixel values directly, i.e. correlation methods 2. frequency- or wavelet-domain methods that use e.g. the fast fourier transform(FFT) 3. feature-based methods that use low-level features such as edges and corners 4. algorithms that use high level features and the relations between them, e.g. object- oriented methods We consider examples of frequency-domain and feature-based methods here. 6.1 Frequency domain registration Consider two N × N gray scale images g1(i , j ) and g2(i, j), where g2 is offset relative to g1 by an integer number of pixels: g2(i, j) = g1(i , j ) = g1(i − i0, j − j0), i0, j0 N. Taking the Fourier transform we have ˆg2(k, l) = ij g1(i − i0, j − j0)e−i2π(ik+jl)/N , or with a change of indices to i j , ˆg2(k, l) = i j g1(i , j )e−i2π(i k+j l)/N e−i2π(i0k+j0l)/N = ˆg1(k, l)e−i2π(i0k+j0l)/N . (This is referred to as the Fourier translation property.) Therefore we can write ˆg2(k, l)ˆg∗ 1(k, l) |ˆg2(k, l)ˆg∗ 1(k, l)| = e−i2π(i0k+j0l)/N , (6.1) 53](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-61-2048.jpg)

![54 CHAPTER 6. IMAGE REGISTRATION Figure 6.1: Phase correlation of two identical images shifted by 10 pixels. where ˆg∗ 1 is the complex conjugate of ˆg1. The inverse transform of the right hand side exhibits a Dirac delta function (spike) at the coordinates (i0, j0). Thus if two otherwise identical images are offset by an integer number of pixels, the offset can be found by taking their Fourier transforms, computing the ratio on the left hand side of (6.1) (the so-called cross-power spectrum) and then taking the inverse transform of the result. The position of the maximum value in the inverse transform gives the values of i0 and j0. The following IDL program illustrates the procedure, see Fig. 6.1 ; Image matching by phase correlation ; read a bitmap image and cut out two 512x512 pixel arrays filename = Dialog_Pickfile(Filter=’*.jpg’,/Read) if filename eq ’’ then print, ’cancelled’ else begin Read_JPeG,filename,image g1 = image[0,10:521,10:521] g2 = image[0,0:511,0:511] ; perform Fourier transforms f1 = fft(g1, /double) f2 = fft(g2, /double) ; Determine the offset g = fft( f2*conj(f1)/abs(f1*conj(f1)), /inverse, /double )](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-62-2048.jpg)

![6.2. FEATURE MATCHING 55 pos = where(g eq max(g)) print, ’Offset = ’ + strtrim(pos mod 512) + strtrim(pos/512) ; output as EPS file thisDevice =!D.Name set_plot, ’PS’ Device, Filename=’c:tempphasecorr.eps’,xsize=4,ysize=4,/inches,/Encapsulated shade_surf,g[0,0:50,0:50] device,/close_file set_plot, thisDevice endelse end Images which differ not only by an offset but also by a rigid rotation and change of scale can in principle be registered similarly, see [RC96]. 6.2 Feature matching A tedious task associated with image-image registration using low level image features is the setting of ground control points (GCPs) since, in general, it is necessary to resort to the manual entry. However various techniques for automatic determination of GCPs have been suggested in the literature. We will discuss one such method, namely contour matching [LMM95]. This technique has been found to function reliably in bitemporal scenes in which vegetation changes do not dominate. It can of course be augmented (or replaced) by other automatic methods or by manual determination. The procedures involved in image-image registration using contour matching are shown in Fig. 6.2 [LMM95]. LoG Zero Crossing Edge Strength Contour Finder Chain Code Encoder Closed Contour Matching Consistency Check Warping E E E E E E cc ''' Image 1 Image 2 Image 2 (registered) Figure 6.2: Image-image registration with contour matching.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-63-2048.jpg)

![56 CHAPTER 6. IMAGE REGISTRATION 6.2.1 Contour detection The first step involves the application of a Laplacian of Gaussian filter to both images. After determining the contours by examining zero-crossings of the LoG-filtered image, the contour strengths are encoded in the pixel intensities. Strengths are taken to be proportional to the magnitude of the gradient at the zero-crossing. 6.2.2 Closed contours In the next step, all closed contours with strengths above some given threshold are deter- mined by tracing the contours. Pixels which have been visited during tracing are set to zero so that they will not be visited again. 6.2.3 Chain codes For subsequent matching purposes, all significant closed contours found in the preceding step are chain encoded. Any digital curve can be represented by an integer sequence {a1, a2 . . . ai . . .}, ai ∈ {0, 1, 2, 3, 4, 5, 6, 7}, depending on the relative position of the current pixel with respect to the previous pixel in the curve. This simple code has the drawback that some contours produce wrap around. For example the line in the direction −22.5o has the chain code {707070 . . .}. Li et al. [LMM95] suggest the smoothing operation: {a1a2 . . . an} → {b1b2 . . . bn}, where b1 = a1 and bi = qi, qi is an integer satisfying (qi−ai) mod 8 = 0 and |qi−bi−1| → min, i = 2, 3 . . . n. They also suggest the applying the Gaussian smoothing filter {0.1, 0.2, 0.4, 0.2, 0.1} to the result. Two chain codes can be compared by “sliding” one over the other and determining the maximum correlation between them. 6.2.4 Invariant moments The closed contours are first matched according to their invariant moments. These are defined as follows, see [Hab95, GW02]. Let the set C denote the set of pixels defining a contour, with |C| = n, that is, n is the number of pixels on the contour. The moment of order p, q of the contour is defined as mpq = i,j∈C jp iq . (6.2) Note that n = m00. The center of gravity xc, yc of the contour is thus xc = m10 m00 , yc = m01 m00 . The centralized moments are then given by µpq = i,j∈C (j − xc)p (i − yc)q , (6.3)](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-64-2048.jpg)

![6.2. FEATURE MATCHING 57 and the normalized centralized moments by ηpq = 1 µ (p+q)/2+1 00 µpq. (6.4) For example, η20 = 1 µ2 00 µ20 = 1 n2 i,j∈C (j − yc)2 . The normalized centralized moments are, apart from effects of digital quantization, invariant under scale changes and translations of the contours. Finally, we can define moments which are also invariant under rotations, see [Hu62]. The first two such invariant moments are h1 = η20 + η02 h2 = (η20 − η02)2 + 4η2 11. (6.5) For example, consider a general rotation of the coordinate axes with origin at the center of gravity of a contour: j i = cos θ sin θ − sin θ cos θ j i = A j i . The first invariant moment in the rotated coordinate system is h1 = 1 n2 i ,j ∈C (j 2 + i 2 ) = 1 n2 i ,j ∈C (j , i ) j i = 1 n2 i,j∈C (j, i)A A j i = 1 n2 i,j∈C (j2 + i2 ), since A A = I. 6.2.5 Contour matching Each significant contour in one image is first matched with contours in the second image according to their invariant moments h1, h2. This is done by setting a threshold on the allowed differences, for instance 1 standard deviation. If one or more matches is found, the best candidate for a GCP pair is then chosen to be that matched contour in the second image for which the chain code correlation with the contour in the first image is maximum. If the maximum correlation is less that some threshold, e.g. 0.9, then no match is found. The actual GCP coordinates are taken to be the centers of gravity of the matched contours. 6.2.6 Consistency check The contour matching procedure invariably generates false GCP pairs, so a further process- ing step is required. In [LMM95] use is made of the fact that distances are preserved under a rigid transformation. Let A1A2 represent the distance between two points A1 and A2 in](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-65-2048.jpg)

![58 CHAPTER 6. IMAGE REGISTRATION an image. For two sets of m matched contour centers {Ai} and {Bi} in image 1 and 2, the ratios AiAj/BiBj, i = 1 . . . m, j = i + 1 . . . m, are calculated. These should form a cluster, so that pairs scattered away from the cluster center can be rejected as false matches. An ENVI plug-in for GCP determination via contour matching is given in Appendix D.3. 6.3 Re-sampling and warping We represent with (x, y) the coordinates of a point in image 1 and the corresponding point in image 2 with (u, v). A second order polynomial map of image 2 to image 1, for example, is given by u = a0 + a1x + a2y + a3xy + a4x2 + a5y2 v = b0 + b1x + b2y + b3xy + b4x2 + b5y2 . Since there are 12 unknown coefficients, we require at least 6 GCP pairs to determine the map (each pair generates 2 equations). If more than 6 pairs are available, the coefficients can be found by least squares fitting. This has the advantage that an RMS error for the mapping can be estimated. Similar considerations apply for lower or higher order polynomial maps. Having determined the map coefficients, image 2 can be registered to image 1 by re- sampling. Nearest neighbor resampling simply chooses the actual pixel in image 2 that has its center nearest the calculated coordinates (u, v) and transfers it to location (x, y). This is the preferred technique for classification or change detection, since the registered image consists of the original pixel brightnesses, simply rearranged in position to give a correct image geometry. Other commonly used resampling methods are bilinear interpolation and cubic convolution interpolation, see [JRR99] for details. These methods mix the spectral intensities of neighboring pixels.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-66-2048.jpg)

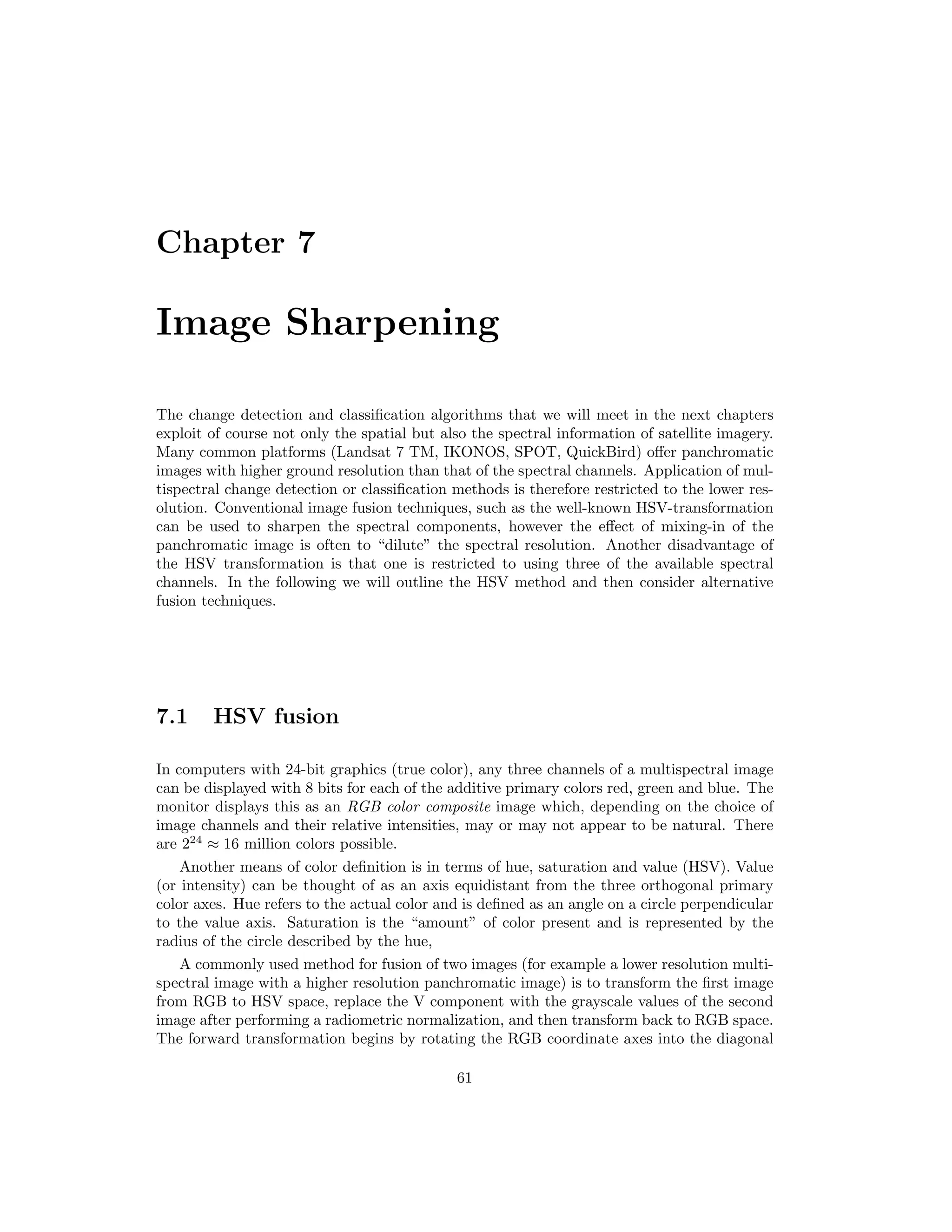

![62 CHAPTER 7. IMAGE SHARPENING axis of the RGB color cube. The coordinates in the new reference system are given by m1 m2 i1 = 2/ √ 6 −1/ √ 6 −1/ √ 6 0 1/ √ 2 −1/ √ 2 1/ √ 3 1/ √ 3 1/ √ 3 · R G B . Then the the rectangular coordinates (m1, m2, i1) are transformed into the cylindrical HSV coordinates: H = arctan(m1/m2), S = m2 1 + m2 2, I = √ 3 i1. The following IDL code illustrates the necessary steps for HSV fusion making use of ENVI batch procedures. These are also invoked directly from the ENVI main menu. pro HSVFusion, event ; get MS image envi_select, title=’Select low resolution three-band input file’, $ fid=fid1, dims=dims1, pos=pos1 if (fid1 eq -1) or (n_elements(pos1) ne 3) then return ; get PAN image envi_select, title=’Select panchromatic image’, $ fid=fid2, pos=pos2, dims=dims2, /band_only if (fid2 eq -1) then return envi_check_save, /transform ; linear stretch the images and convert to byte format envi_doit,’stretch_doit’, fid=fid1, dims=dims1, pos=pos1, method=1, $ r_fid=r_fid1, out_min=0, out_max=255, $ range_by=0, i_min=0, i_max=100, out_dt=1, out_name=’c:temphsv_temp’ envi_doit,’stretch_doit’, fid=fid2, dims=dims2, pos=pos2, method=1, $ r_fid=r_fid2, out_min=0, out_max=255, $ range_by=0, i_min=0, i_max=100, out_dt=1, /in_memory envi_file_query, r_fid2, ns=f_ns, nl=f_nl f_dims = [-1l, 0, f_ns-1, 0, f_nl-1] ; HSV sharpening envi_doit, ’sharpen_doit’, $ fid=[r_fid1,r_fid1,r_fid1], pos=[0,1,2], f_fid=r_fid2, $ f_dims=f_dims, f_pos=[0], method=0, interp=0, /in_memory ; remove temporary files from ENVI envi_file_mng, id=r_fid1, /remove, /delete envi_file_mng, id=r_fid2, /remove end](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-70-2048.jpg)

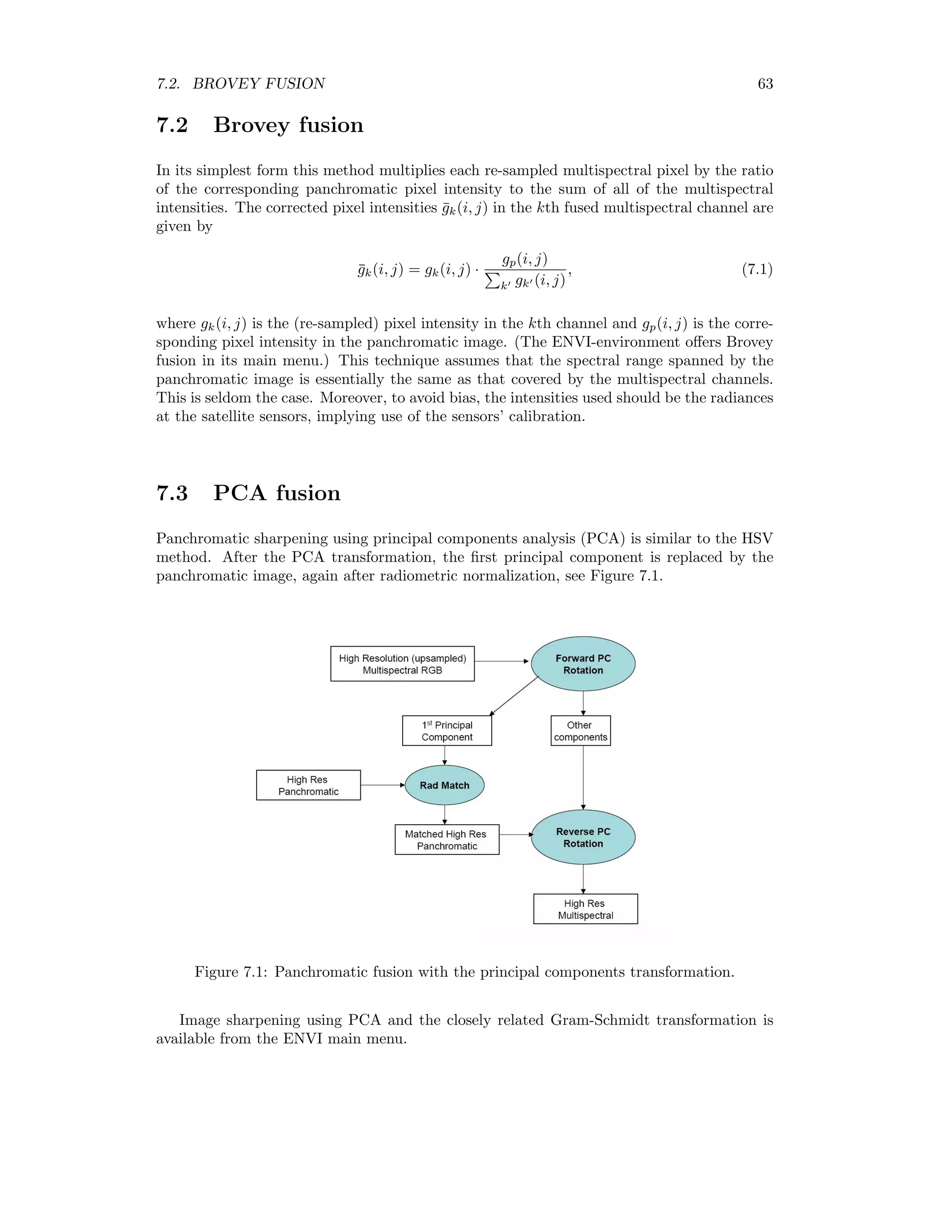

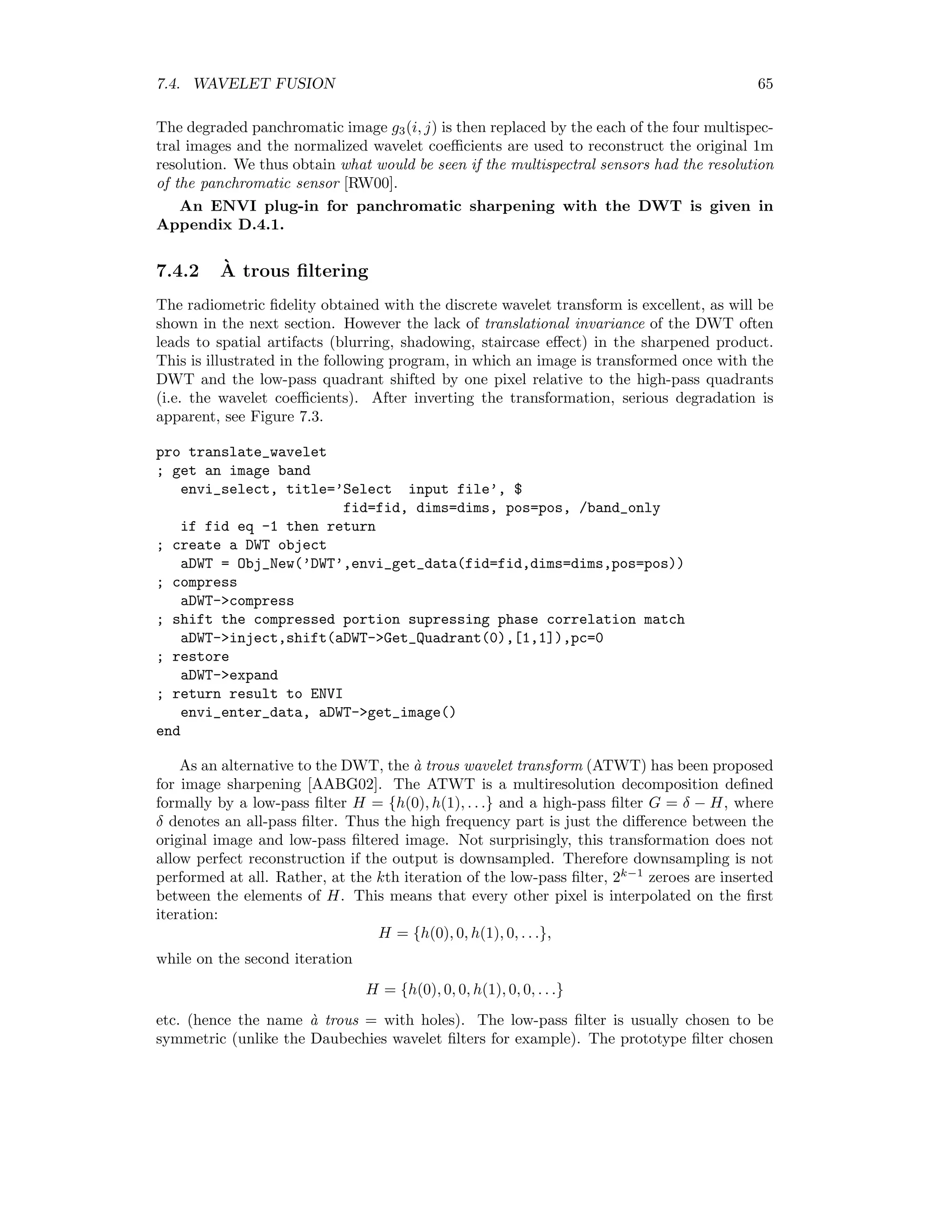

![7.4. WAVELET FUSION 65 The degraded panchromatic image g3(i, j) is then replaced by the each of the four multispec- tral images and the normalized wavelet coefficients are used to reconstruct the original 1m resolution. We thus obtain what would be seen if the multispectral sensors had the resolution of the panchromatic sensor [RW00]. An ENVI plug-in for panchromatic sharpening with the DWT is given in Appendix D.4.1. 7.4.2 `A trous filtering The radiometric fidelity obtained with the discrete wavelet transform is excellent, as will be shown in the next section. However the lack of translational invariance of the DWT often leads to spatial artifacts (blurring, shadowing, staircase effect) in the sharpened product. This is illustrated in the following program, in which an image is transformed once with the DWT and the low-pass quadrant shifted by one pixel relative to the high-pass quadrants (i.e. the wavelet coefficients). After inverting the transformation, serious degradation is apparent, see Figure 7.3. pro translate_wavelet ; get an image band envi_select, title=’Select input file’, $ fid=fid, dims=dims, pos=pos, /band_only if fid eq -1 then return ; create a DWT object aDWT = Obj_New(’DWT’,envi_get_data(fid=fid,dims=dims,pos=pos)) ; compress aDWT-compress ; shift the compressed portion supressing phase correlation match aDWT-inject,shift(aDWT-Get_Quadrant(0),[1,1]),pc=0 ; restore aDWT-expand ; return result to ENVI envi_enter_data, aDWT-get_image() end As an alternative to the DWT, the `a trous wavelet transform (ATWT) has been proposed for image sharpening [AABG02]. The ATWT is a multiresolution decomposition defined formally by a low-pass filter H = {h(0), h(1), . . .} and a high-pass filter G = δ − H, where δ denotes an all-pass filter. Thus the high frequency part is just the difference between the original image and low-pass filtered image. Not surprisingly, this transformation does not allow perfect reconstruction if the output is downsampled. Therefore downsampling is not performed at all. Rather, at the kth iteration of the low-pass filter, 2k−1 zeroes are inserted between the elements of H. This means that every other pixel is interpolated on the first iteration: H = {h(0), 0, h(1), 0, . . .}, while on the second iteration H = {h(0), 0, 0, h(1), 0, 0, . . .} etc. (hence the name `a trous = with holes). The low-pass filter is usually chosen to be symmetric (unlike the Daubechies wavelet filters for example). The prototype filter chosen](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-79-2048.jpg)

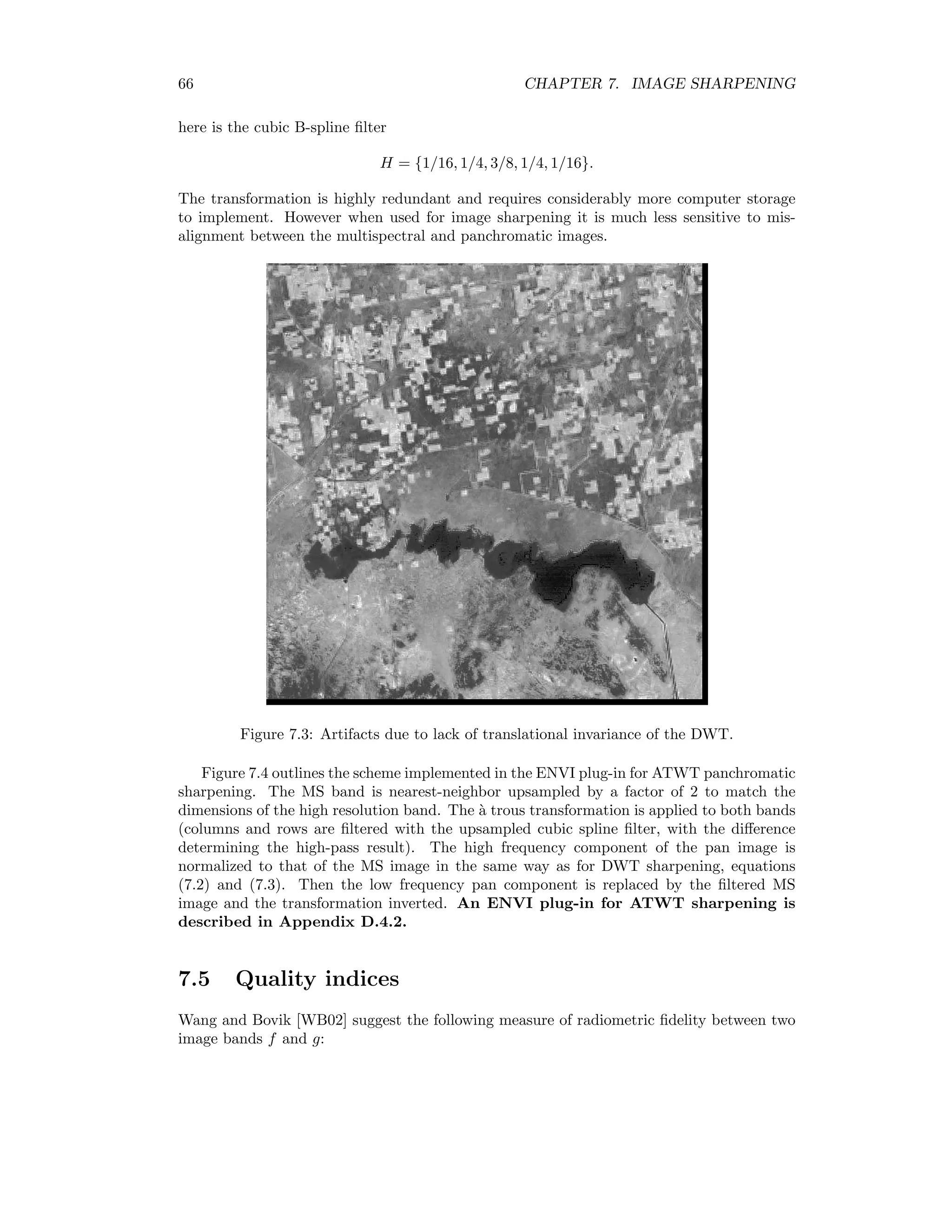

![66 CHAPTER 7. IMAGE SHARPENING here is the cubic B-spline filter H = {1/16, 1/4, 3/8, 1/4, 1/16}. The transformation is highly redundant and requires considerably more computer storage to implement. However when used for image sharpening it is much less sensitive to mis- alignment between the multispectral and panchromatic images. Figure 7.3: Artifacts due to lack of translational invariance of the DWT. Figure 7.4 outlines the scheme implemented in the ENVI plug-in for ATWT panchromatic sharpening. The MS band is nearest-neighbor upsampled by a factor of 2 to match the dimensions of the high resolution band. The `a trous transformation is applied to both bands (columns and rows are filtered with the upsampled cubic spline filter, with the difference determining the high-pass result). The high frequency component of the pan image is normalized to that of the MS image in the same way as for DWT sharpening, equations (7.2) and (7.3). Then the low frequency pan component is replaced by the filtered MS image and the transformation inverted. An ENVI plug-in for ATWT sharpening is described in Appendix D.4.2. 7.5 Quality indices Wang and Bovik [WB02] suggest the following measure of radiometric fidelity between two image bands f and g:](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-80-2048.jpg)

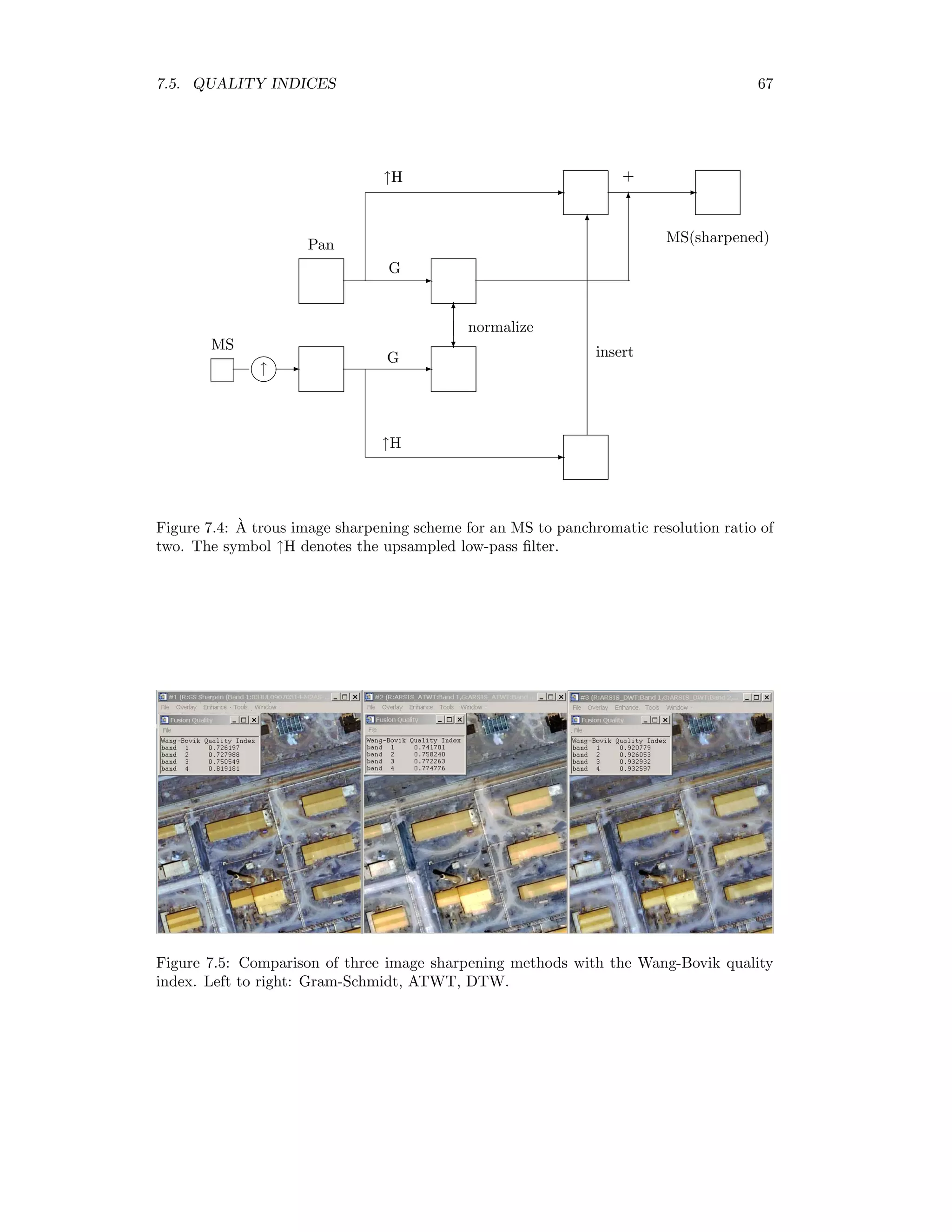

![68 CHAPTER 7. IMAGE SHARPENING Q = σfg σf σg · 2 ¯f¯g ¯f2 + ¯g2 · 2σf σg σ2 f + σ2 g = 4σfg ¯f¯g ( ¯f2 + ¯g2)(σ2 f + σ2 g) (7.4) where ¯f and σf are mean and variance of band f and σfg is the covariance of the two bands. This first term in (7.4) is seen to be the correlation coefficient between the two images, with values in [−1, 1], the second term compares their average brightness, with values in [0, 1] and the third term compares their contrasts, also in [0, 1]. Thus perfect radiometric correspondence would give a value Q = 1. Since image quality is usually not spatially invariant, it is usual to compute Q in, say, M sliding windows and then average over all such windows: Q = 1 M M j=1 Qj. An ENVI plug-in for determining the quality index for pansharpened images is given in Appendix D.4.3. Figure 7.5 shows a comparison of three image sharpening methods applied to a QuickBird image, namely the Gram-Schmidt, ATWT and DWT transformations. The latter is by far the best, but spatial artifacts are apparent.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-83-2048.jpg)

![Chapter 8 Change Detection To quote Singh’s review article on change detection [Sin89], “The basic premise in using remote sensing data for change detection is that changes in land cover must result in changes in radiance values ... [which] must be large with respect to radiance changes from other factors.” In the present chapter we will mention briefly the most commonly used digital techniques for enhancing this “change signal” in bitemporal satellite images, and then focus our attention on the so-called multivariate alteration detection algorithm of Nielsen et al. [NCS98]. 8.1 Algebraic methods In order to see changes in the two multispectral images represented by N-dimensional ran- dom vectors F and G, a simple procedure is to subtract them from each other component- by-component, examining the N differenced images characterized by F − G = (F1 − G1, F2 − G2 . . . FN − GN ) (8.1) for significant changes. Pixel intensity differences near zero indicate no change, large positive or negative values indicate change, and decision thresholds can be set to define significant changes. If the difference signatures in the spectral channels are used to classify the kind of change that has taken place, one speaks of change vector analysis. Thresholds are usually expressed in standard deviations from the mean difference value, which is taken to correspond to no change. Alternatively, ratios of intensities of the form Fk Gk , k = 1 . . . N (8.2) can be built between successive images. Ratios near unity correspond to no-change, while small and large values indicate change. A disadvantage of this method is that random variables of the form (8.2) are not normally distributed, so simple threshold values defined in terms of standard deviations are not valid. Other algebraic combinations, such as differences in vegetation indices (Section 2.1) are also in use. All of these “band math” operations can of course be performed conveniently within the ENVI/IDL environment. 69](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-84-2048.jpg)

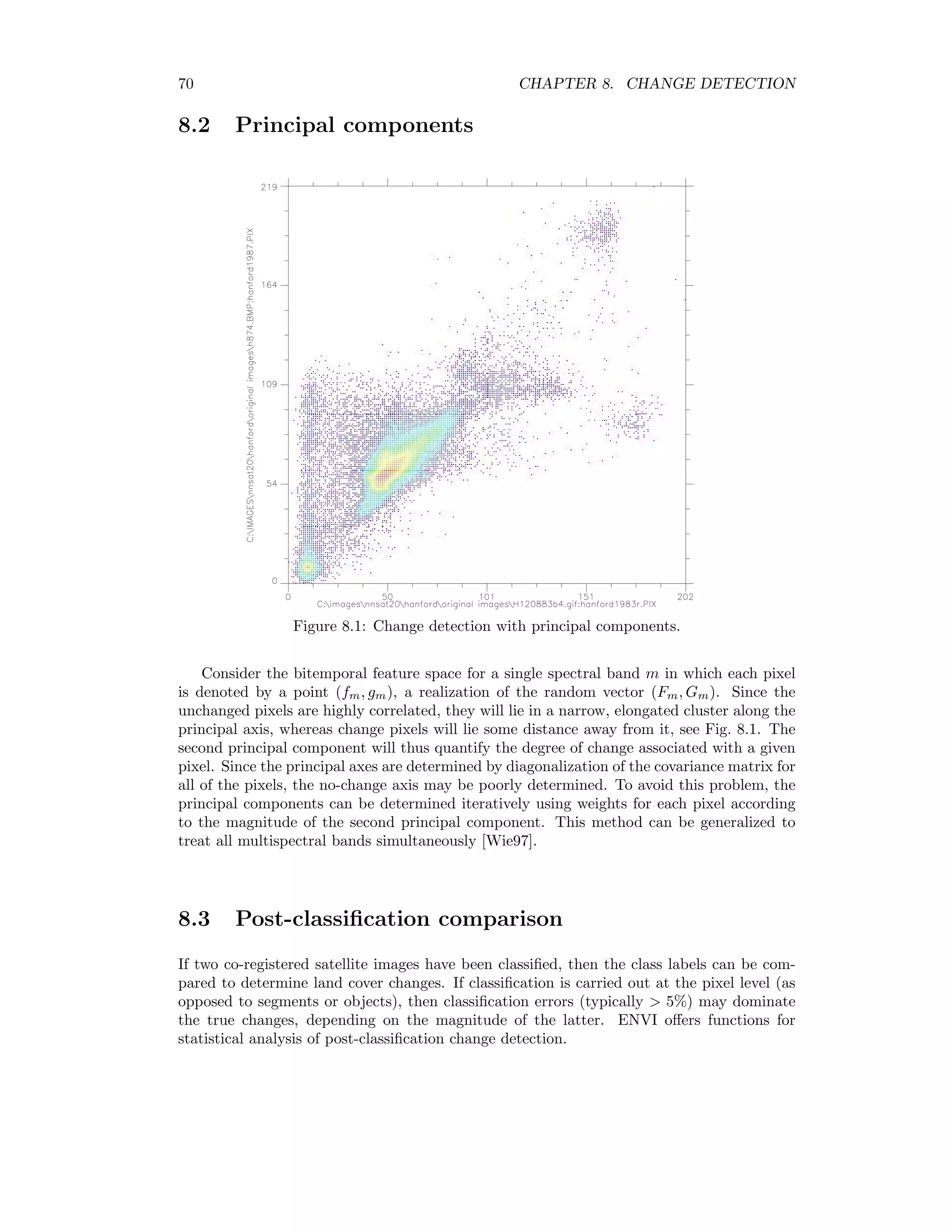

![70 CHAPTER 8. CHANGE DETECTION 8.2 Principal components Figure 8.1: Change detection with principal components. Consider the bitemporal feature space for a single spectral band m in which each pixel is denoted by a point (fm, gm), a realization of the random vector (Fm, Gm). Since the unchanged pixels are highly correlated, they will lie in a narrow, elongated cluster along the principal axis, whereas change pixels will lie some distance away from it, see Fig. 8.1. The second principal component will thus quantify the degree of change associated with a given pixel. Since the principal axes are determined by diagonalization of the covariance matrix for all of the pixels, the no-change axis may be poorly determined. To avoid this problem, the principal components can be determined iteratively using weights for each pixel according to the magnitude of the second principal component. This method can be generalized to treat all multispectral bands simultaneously [Wie97]. 8.3 Post-classification comparison If two co-registered satellite images have been classified, then the class labels can be com- pared to determine land cover changes. If classification is carried out at the pixel level (as opposed to segments or objects), then classification errors (typically 5%) may dominate the true changes, depending on the magnitude of the latter. ENVI offers functions for statistical analysis of post-classification change detection.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-85-2048.jpg)

![8.4. MULTIVARIATE ALTERATION DETECTION 71 8.4 Multivariate alteration detection Suppose we make a linear combination of the intensities for all N channels in the first image acquired at time t2, represented by the random vector F. That is, we create a single image whose pixel intensities are U = a F = a1F1 + a2F2 + . . . aN FN , where the vector of coefficients a is as yet unspecified. We do the same for t2, i.e. we make the linear combination V = b G, and then look at the scalar difference image U − V . This procedure combines all the information into a single image, whereby one still hast to choose the coefficients a and b in some suitable way. Nielsen et al. [NCS98] suggest determining the coefficients so that the positive correlation between U and V is minimized. This means that the resulting difference image U − V will show maximum spread in its pixel intensities. If we assume that the spread is primarily due to actual changes that have taken place in the scene over the interval t2 − t1, then this procedure will enhance those changes as much as possible. Specifically we seek linear combinations such that var(U − V ) = var(U) + var(V ) − 2cov(U, V ) → maximum, (8.3) subject to the constraints var(U) = var(V ) = 1. (8.4) Note that under these constraints var(U − V ) = 2(1 − ρ), (8.5) where ρ is the correlation of the transformed vectors U and V , ρ = corr(U, V ) = cov(U, V ) var(U)var(V ) . Since we are dealing with change detection, we require that the random variables U and V be positively correlated, that is, cov(U, V ) 0. We thus seek vectors a and b which minimize the positive correlation ρ. 8.4.1 Canonical correlation analysis Canonical correlation analysis leads to a transformation of each set of variables F and G such that their mutual correlation is displayed unambiguously, see [And84], Chapter 12. We can derive the transformation as follows: For multivariate normally distributed data the combined random vector is distributed as F G ∼ N 0 0 , Σff Σfg Σgf Σgg . Recalling the property (1.6) we have var(U) = a Σff a, var(V ) = b Σggb, cov(U, V ) = a Σfgb.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-86-2048.jpg)

![72 CHAPTER 8. CHANGE DETECTION If we introduce the Lagrange multipliers ν/2 and µ/2, extremalizing the covariance cov(U, V ) under the constraints (8.4) is equivalent to extremalizing the unconstrained Lagrange func- tion L = a Σfgb − ν 2 (a Σff a − 1) − µ 2 (b Σggb − 1). Differentiating, we obtain ∂L ∂a = Σfgb − ν 2 2Σff a = 0, ∂L ∂b = Σfga − µ 2 2Σggb = 0 or a = 1 ν Σ−1 ff Σfgb, b = 1 µ Σ−1 gg Σfga. The correlation between the random variables U and V is ρ = cov(U, V ) var(U)var(V ) = a Σfgb a Σff a b Σggb . Substituting for a and b in this equation gives (with Σfg = Σgf ) ρ2 = a ΣfgΣ−1 gg Σgf a a Σff a , ρ2 = b Σgf Σ−1 ff Σfgb b Σggb , which are equivalent to the two generalized eigenvalue problems ΣfgΣ−1 gg Σgf a = ρ2 Σff a Σgf Σ−1 ff Σfgb = ρ2 Σggb. (8.6) Thus the desired projections U = a F are given by the eigenvectors a1 . . . aN corresponding to the generalized eigenvalues ρ2 ∼ λ1 ≥ . . . ≥ λN of ΣfgΣ−1 gg Σgf with respect to Σff . Similarly the desired projections V = b G are given by the eigenvectors b1 . . . bN of Σgf Σ−1 ff Σfg with respect to Σgg corresponding to the same eigenvalues. Nielsen et al. [NCS98] refer to the N difference components Mi = Ui − Vi = ai F − bi G, i = 1 . . . N, (8.7) as the multivariate alteration detection (MAD) components of the combined bitemporal image. 8.4.2 Solution by Cholesky factorization Equations (8.6) are of the form Σ1a = λΣa, where both Σ1 and Σ are symmetric and Σ is positive definite. The Cholesky factorization of Σ is Σ = LL , where L is a lower triangular matrix, and can be thought of as the “square root” of Σ. Such an L always exists is Σ is positive definite. Therefore we can write Σ1a = LL a](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-87-2048.jpg)

![8.4. MULTIVARIATE ALTERATION DETECTION 73 or, equivalently, L−1 Σ1(L )−1 L a = λL a or, with d = L a and commutivity of inverse and transpose, [L−1 Σ1(L−1 ) ]d = λd, a standard eigenproblem for a real, symmetric matrix L−1 Σ1(L−1 ) . Let the (orthogonal) eigenvectors be di. We have 0 = di dj = ai LL aj = ai Σaj, i = j. (8.8) 8.4.3 Properties of the MAD components We have, from (8.4) and (8.8), for the eigenvectors ai and bi, ai Σff aj = bi Σggbj = δij. Furthermore bi = 1 √ λi Σ−1 gg Σgf ai, i.e. substituting this into the LHS of the second equation in (8.6): Σgf Σ−1 ff Σfg 1 √ λi Σ−1 gg Σgf ai = Σgf Σ−1 ff 1 √ λi λiΣff ai = Σgf λiai = λiΣggbi, as required. It follows that ai Σfgbj = ai 1 λj ΣfgΣ−1 gg Σgf aj = λj ai Σff ai = λj δij, and similarly for bi Σgf aj. Thus the covariances of the MAD components are given by cov(Ui − Vi, Uj − Vj) = cov(ai F − bi G, aj F − bj G) = 2δij(1 − λj). The MAD components are therefore orthogonal (uncorrelated) with variances var(Ui − Vi) = σ2 MADi = 2(1 − λi). (8.9) The transformation corresponding to the smallest eigenvalue, namely (aN , bN ), will thus give maximal variance for the difference U − V . We can derive change probabilities from a MAD image as follows. The sum of the squares of the standardized MAD components for no-change pixels, given by Z = MAD1 σMAD1 2 + . . . + MADN σMADN 2 , is approximately chi-square distributed with N degrees of freedom, i.e., Pr(Z ≤ z) = ΓP (N/2, z/2). For a given measured value z for some pixel, the probability that Z could be that large or larger, given that the pixel is no-change, is 1 − ΓP (N/2, z/2).](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-88-2048.jpg)

![8.4. MULTIVARIATE ALTERATION DETECTION 75 which are identical to (8.6) with b = T c. Therefore the MAD components in the trans- formed situation are ai F − ci H = ai F − ci TG = ai F − (T ci) G = ai F − bi G as before. 8.4.6 Improving signal to noise The MAD transformation can be augmented by subsequent application of the maximum autocorrelation factor (MAF) transformation, in order to improve the spatial coherence of the difference components, see [NCS98]. When image noise is estimated as the difference between intensities of neighboring pixels, the MAF transformation is equivalent to the MNF transformation. The MAF/MAD variates thus generated are also orthogonal and invariant under affine transformations. An ENVI plug-in for performing the MAF transfor- mation is given in Appendix D.5.2. 8.4.7 Decision thresholds Since the MAD components are approximately normally distributed about zero and uncor- related, see Figure 8.2, decision thresholds for change or no change pixels can be set in terms of standard deviations about the mean for each component separately. This can be done arbitrarily, for example by saying that all pixels in a MAD component whose intensities are within ±2σMAD are no-change pixels. Figure 8.2: Scatter plot of two MAD components. We can do better than this, however, using a Bayesean technique. Let us consider the following mixture model for a random variable X representing one of the MAD components: p(x) = p(x | NC)p(NC) + p(x | C−)p(C−) + p(x | C+)p(C+), (8.11)](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-90-2048.jpg)

![76 CHAPTER 8. CHANGE DETECTION Figure 8.3: Probability mixture model for MAD components. where C+, C− and NC denote positive change, negative change and no change, respectively, see Fig. 8.3. The set of measurements S = {xi} may be grouped into four disjoint sets: SNC, SC−, SC+, SU = SSNC ∪ SC− ∪ SC+, with SU denoting the set of ambiguous pixels.1 From the sample mean and sample variance, we estimate initially the moments for the distribution of no-change pixels: µNC = 1 |SNC| · i∈SNC xi, (σNC)2 = 1 |SNC| · i∈SNC (xi − µNC)2 (|S| denotes set cardinality) and similarly for C− and C+. Bruzzone and Prieto [BP00] suggest improving these estimates by using the pixels in SU and applying the so-called EM algorithm (see [Bis95] for a good explanation): µNC = i∈S p(NC | xi)xi / i∈S p(NC | xi) (σNC)2 = i∈S p(NC | xi)(xi − µNC)2 / i∈S p(NC | xi) p (NC) = 1 |S| · i∈S p(NC | xi) , (8.12) where p(NC | xi) is the a posteriori probability for a no-change pixel conditional on mea- surement xi. We have the following rules for determining p(NC | xi): 1. i ∈ SNC : p(NC | xi) = 1 2. i ∈ SC± : p(NC | xi) = 0 1The symbols ∪ and denote set union and set difference, respectively. These sets can be determined in practice by setting generous, scene-independent thresholds for change and no-change pixel intensities, see [BP00].](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-91-2048.jpg)

![78 CHAPTER 8. CHANGE DETECTION homogeneous and can be approximated by linear functions. The critical aspect is the deter- mination of suitable time-invariant features upon which to base the normalization. As we have seen, the MAD transformation invariant to linear and affine scaling. Thus, if one uses MAD for change detection applications, preprocessing by linear radiometric normal- ization is superfluous. However radiometric normalization of imagery is important for many other applications, such as mosaicing, tracking vegetation indices over time, supervised and unsupervised land cover classification, etc. Furthermore, if some other, non-invariant change detection procedure is preferred, it must generally be preceded by radiometric normaliza- tion [CNS04]. Taking advantage of this invariance, one can apply the MAD transformation to select the no-change pixels in bitemporal images, and then used them for radiometric normalization. The procedure is simple, fast and completely automatic and compares very favorably with normalization using hand-selected, time-invariant features. An ENVI plug-in for radiometric normalization with the MAD transforma- tion is given in Appendix D.5.3.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-93-2048.jpg)

![80 CHAPTER 9. UNSUPERVISED CLASSIFICATION clustering given the data. From Bayes’ rule, p(C | x) = p(x | C)p(C) p(x) . (9.2) The quantity p(x|C) is the joint probability density function for clustering C, also referred to as the likelihood of observing the clustering C given the data x, P(C) is the prior probability for C and p(x) is a normalization independent of C. The joint probability density for the data is the product of the individual probability densities, i.e., p(x | C) = K k=1 i∈Ck p(xi | Ck) = K k=1 i∈Ck (2π)−N/2 |Σk|−1/2 exp − 1 2 (xi − µk) Σ−1 k (xi − µk) . Forming the product in this way is justified by the independence of the samples. The log-likelihood is given by [Fra96] L = log p(x | C) = K k=1 i∈Ck − N 2 log(2π) − 1 2 log |Σk| − 1 2 (xi − µk) Σ−1 k (xi − µk) . With (9.2) we can therefore write log p(C | x) ∝ L + log p(C). (9.3) If all K classes exhibit identical covariance matrices according to Σk = σ2 I, k = 1 . . . K, (9.4) where I is the identity matrix, then L is maximized when the expression K k=1 i∈Ck (xi) − µk) ( 1 2σ2 I)(xi − µk) = K k=1 i∈Ck (xi − µk) (xi − µk) 2σ2 is minimized. We are thus led to the cost function E(C) = K k=1 i∈Ck (xi − µk) (xi − µk) 2σ2 − log p(C). (9.5) An optimal clustering C under these assumptions is achieved for E(C) → min . Now we introduce a “hard” class dependency in the form of a matrix u with elements given by uki = 1 if i ∈ Ck 0 otherwise. (9.6)](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-95-2048.jpg)

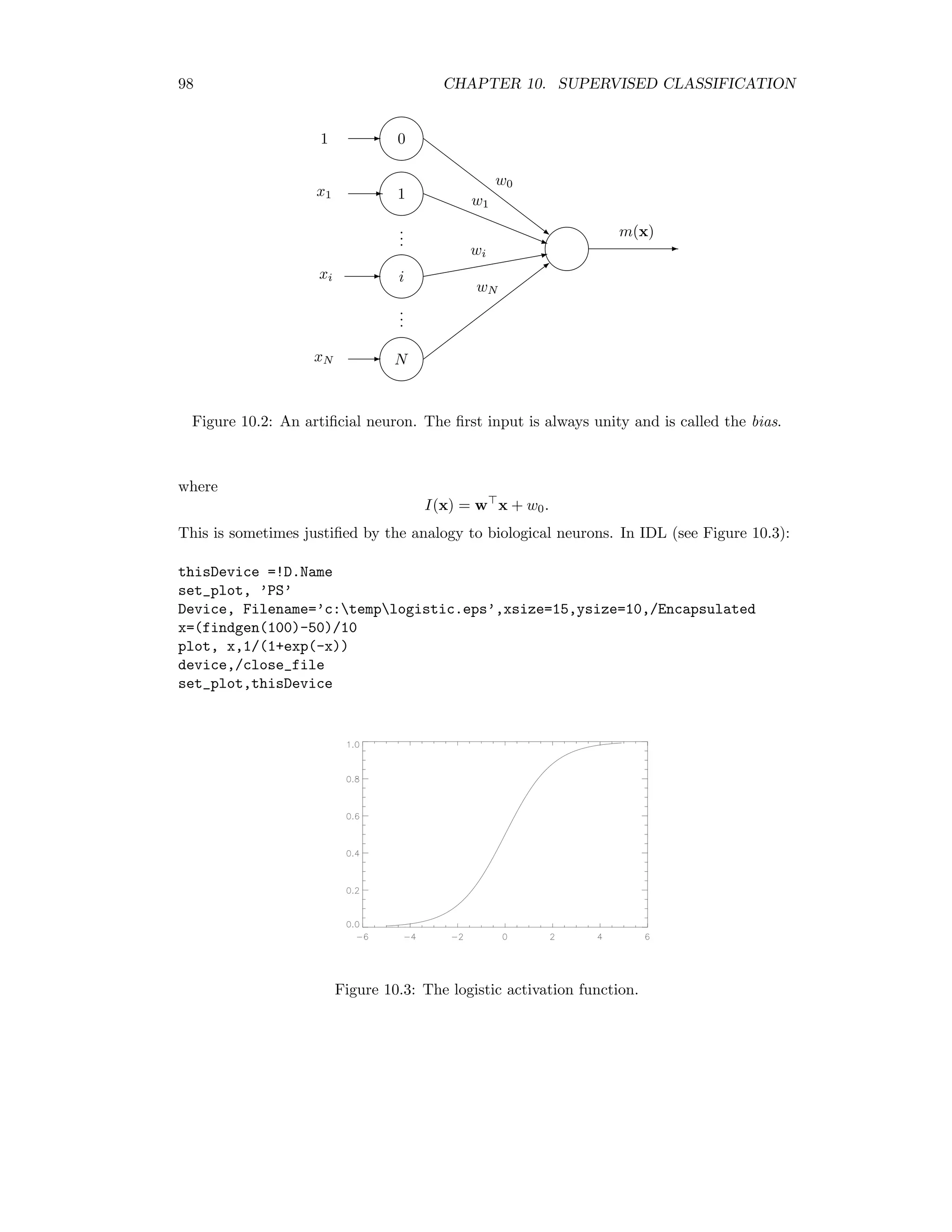

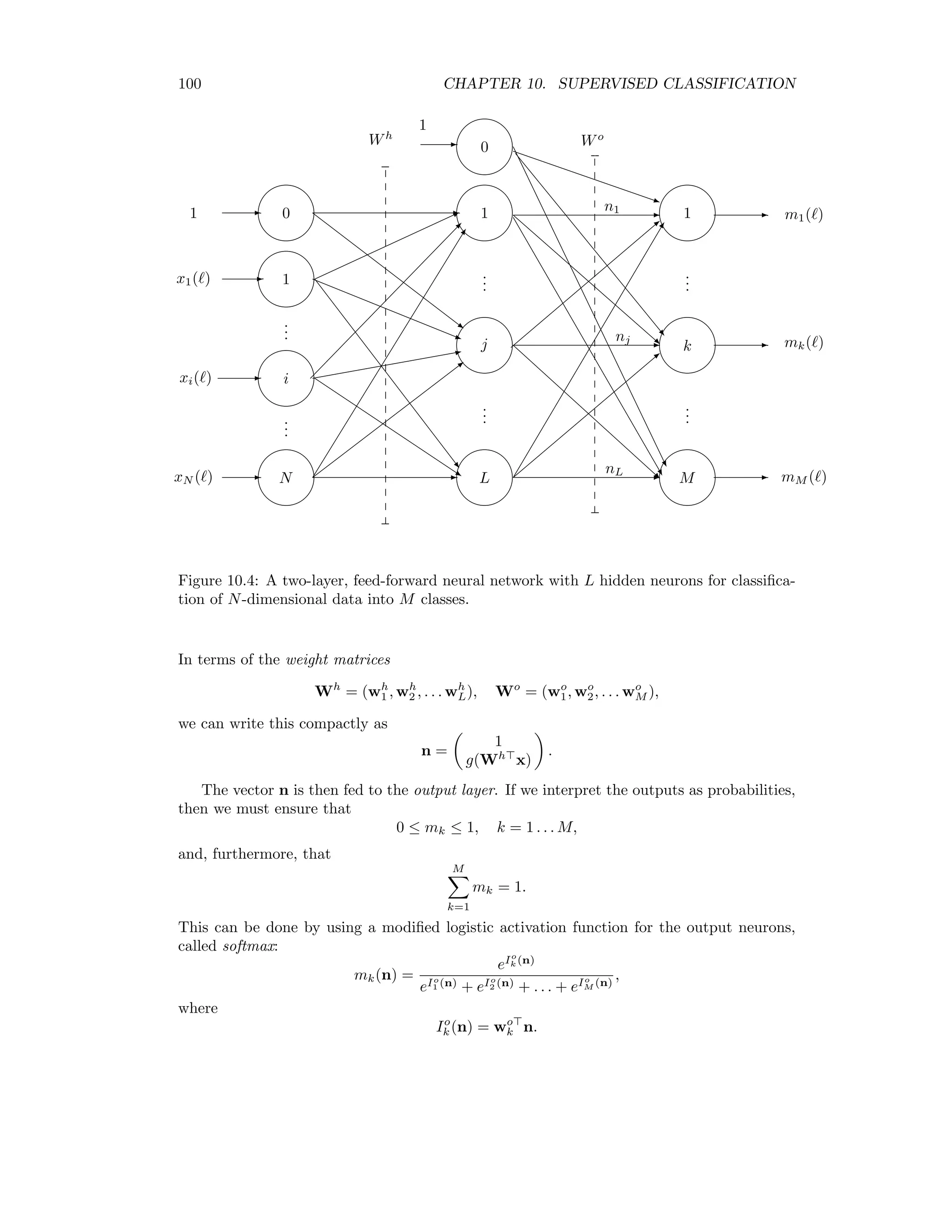

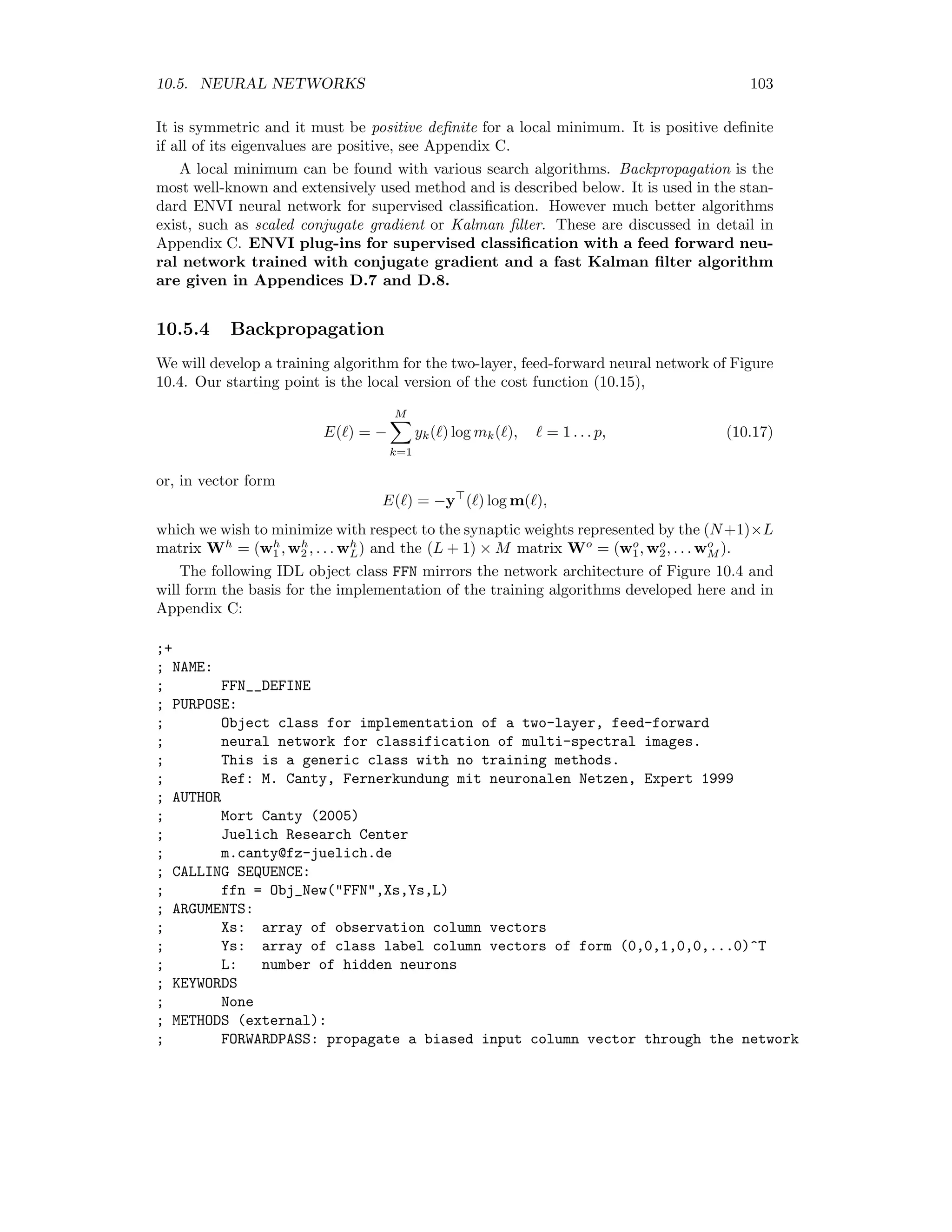

![9.2. ALGORITHMS THAT MINIMIZE THE SIMPLE COST FUNCTION 81 The matrix u satisfies the conditions K k=1 uki = 1, i = 1 . . . n, (9.7) meaning that each sampled pixel xi, i = 1 . . . n, belongs to precisely one class, and n i=1 uki 0, k = 1 . . . K, (9.8) meaning that no class Ck, k = 1 . . . K, is empty. The sum in (9.8) is the number nk of pixels in the kth class. An unbiased estimate mk of the expected value µk for the kth cluster is therefore given by µk ≈ mk = 1 nk i∈Ck xi = n i=1 ukixi n i=1 uki , k = 1 . . . K, (9.9) and an estimate Fk of the covariance matrix Σk by Σk ≈ Fk = n i=1 uki(xi − mk)(xi − mk) n i=1 uki , k = 1 . . . K. (9.10) We can now write (9.5) in the form E(C) = K k=1 n i=1 uki (xi − mk) (xi − mk) 2σ2 − log p(C). (9.11) Finally, if we do not wish to include prior probabilities, we can simply say that all clustering configurations C are a priori equally likely. Then the last term in (refe911) is independent of C and we have, dropping the multiplicative constant 1/2σ2 , the well-known sum-of-squares cost function E(C) = K k=1 n i=1 uki(xi − mk) (xi − mk). (9.12) 9.2 Algorithms that minimize the simple cost function We begin with the popular K-means method and then consider an algorithm due to (Palu- binskas 1998) [Pal98], which uses cost function (9.11) and for which the number of clusters is determined automatically. Then we discuss a common version of bottom-up or agglomer- ative hierarchical clustering, and finally a “fuzzy” version of the K-means algorithm. 9.2.1 K-means The K-means clustering algorithm (KM) (sometimes referred to as basic Isodata [DH73] or migrating means [JRR99]) is based on the cost function (9.12). After initialization of the cluster centers, the distance measure corresponding to a minimization of (9.12), namely d(i, k) = (xi − mk) (xi − mk) is used to re-cluster the pixel vectors. Then (9.9) is used to recalculate the cluster centers. This procedure is iterated until the centers cease to change significantly. K-means clustering may be performed within the ENVI environment from the main menu.](https://image.slidesharecdn.com/mortonjohncanty-imageanalysisandpatternrecognitionforremotesensingwithalgorithmsinenvi-idl-190425063306/75/Morton-john-canty-image-analysis-and-pattern-recognition-for-remote-sensing-with-algorithms-in-envi-idl-96-2048.jpg)