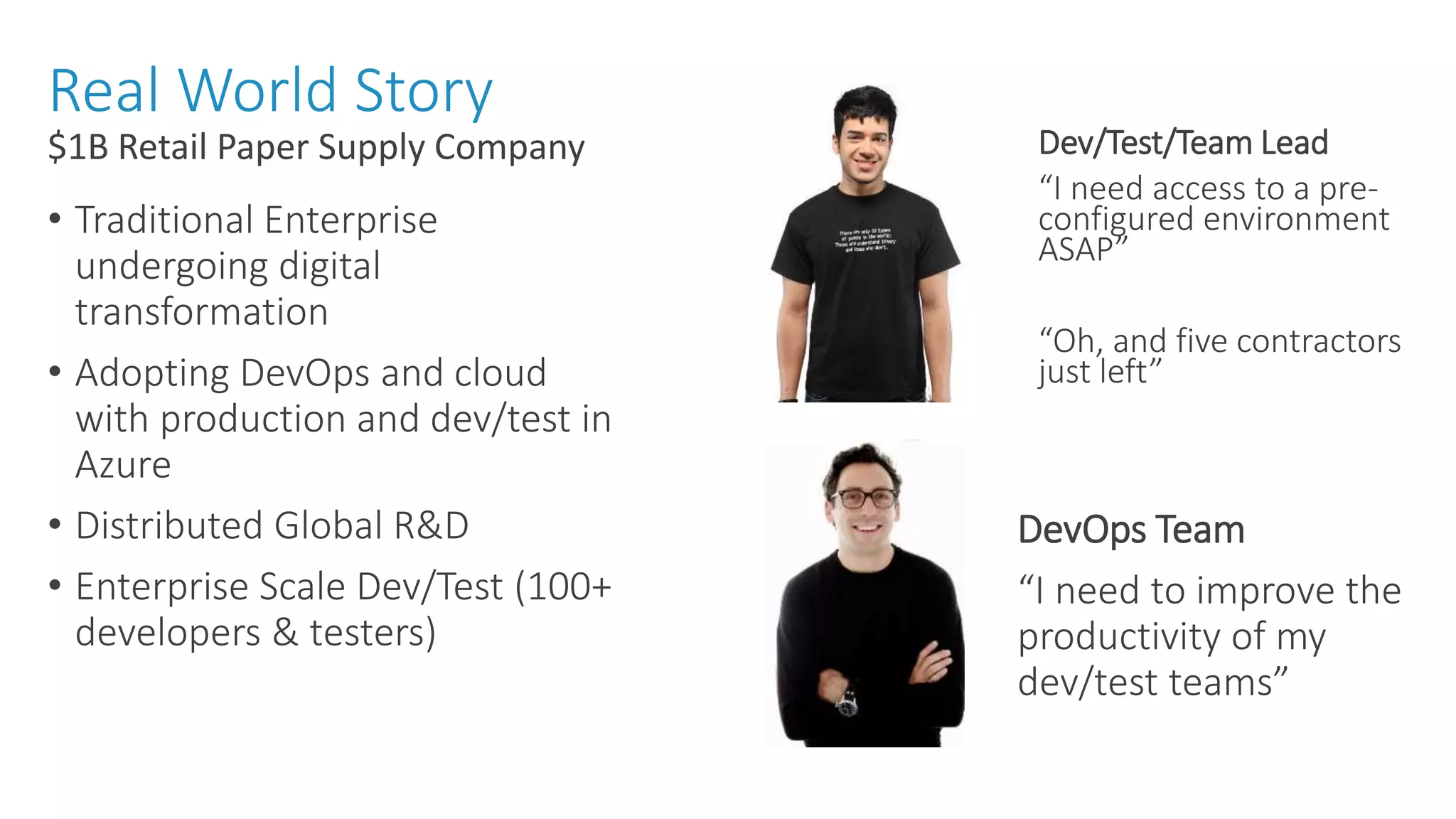

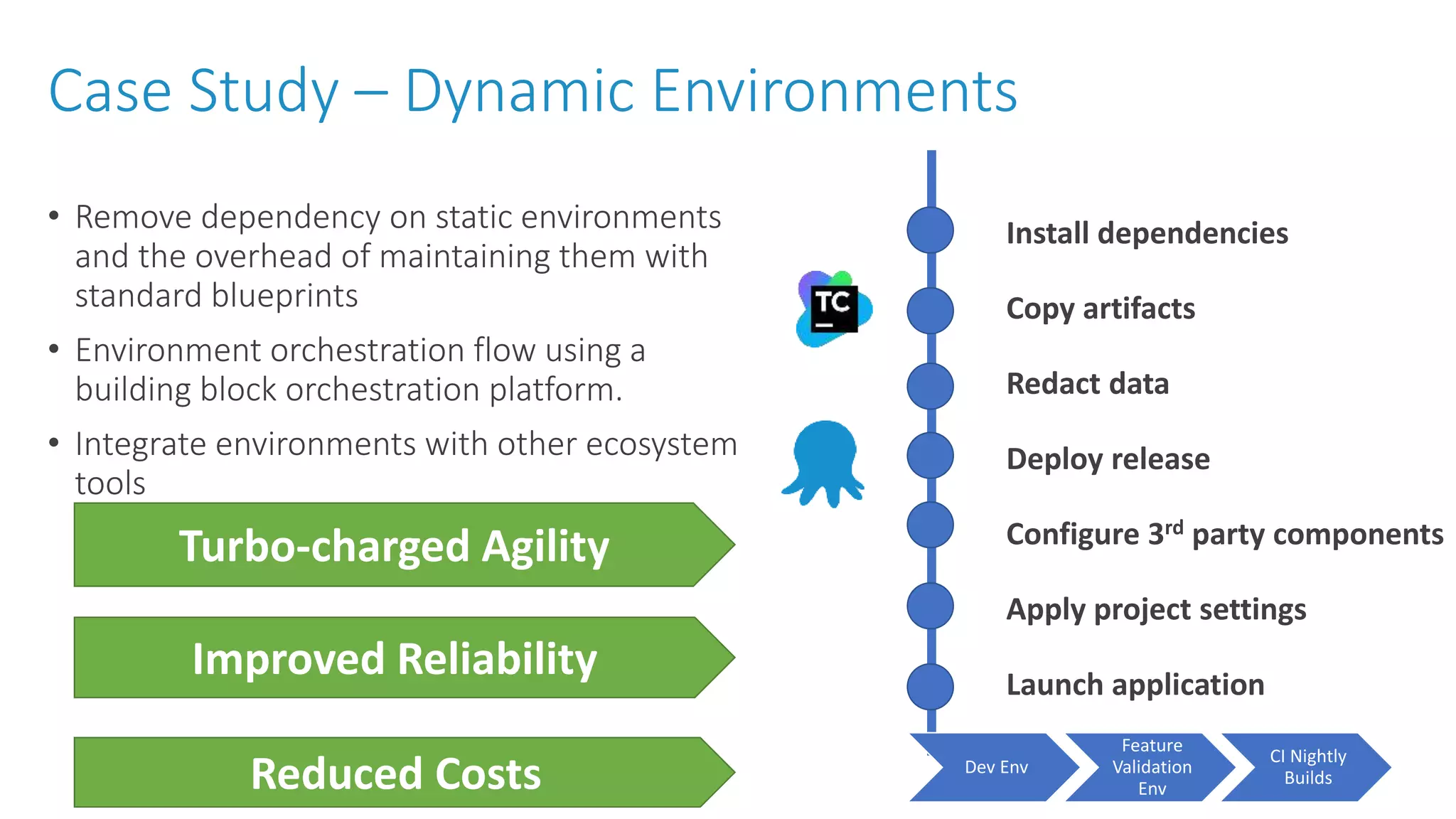

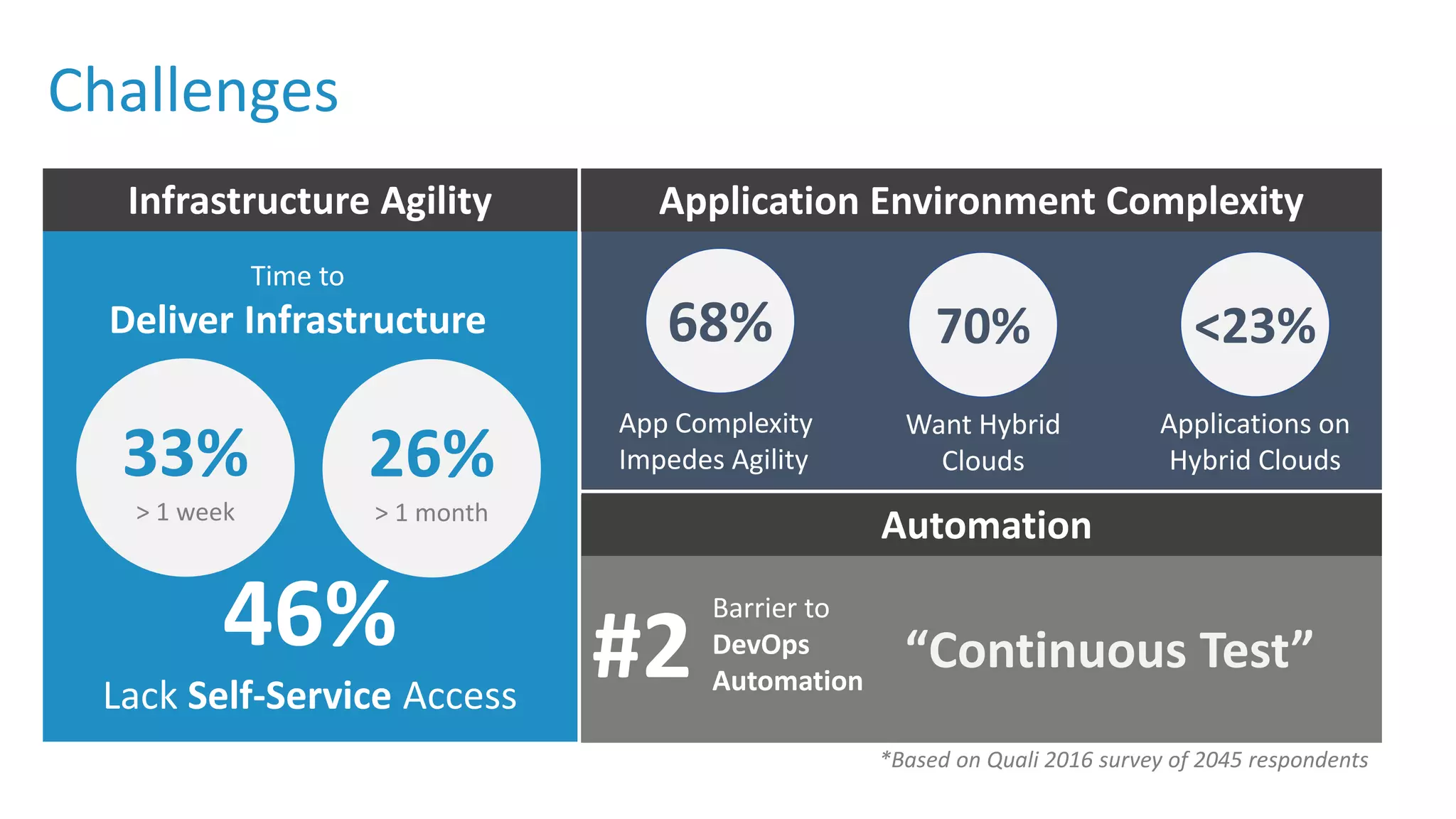

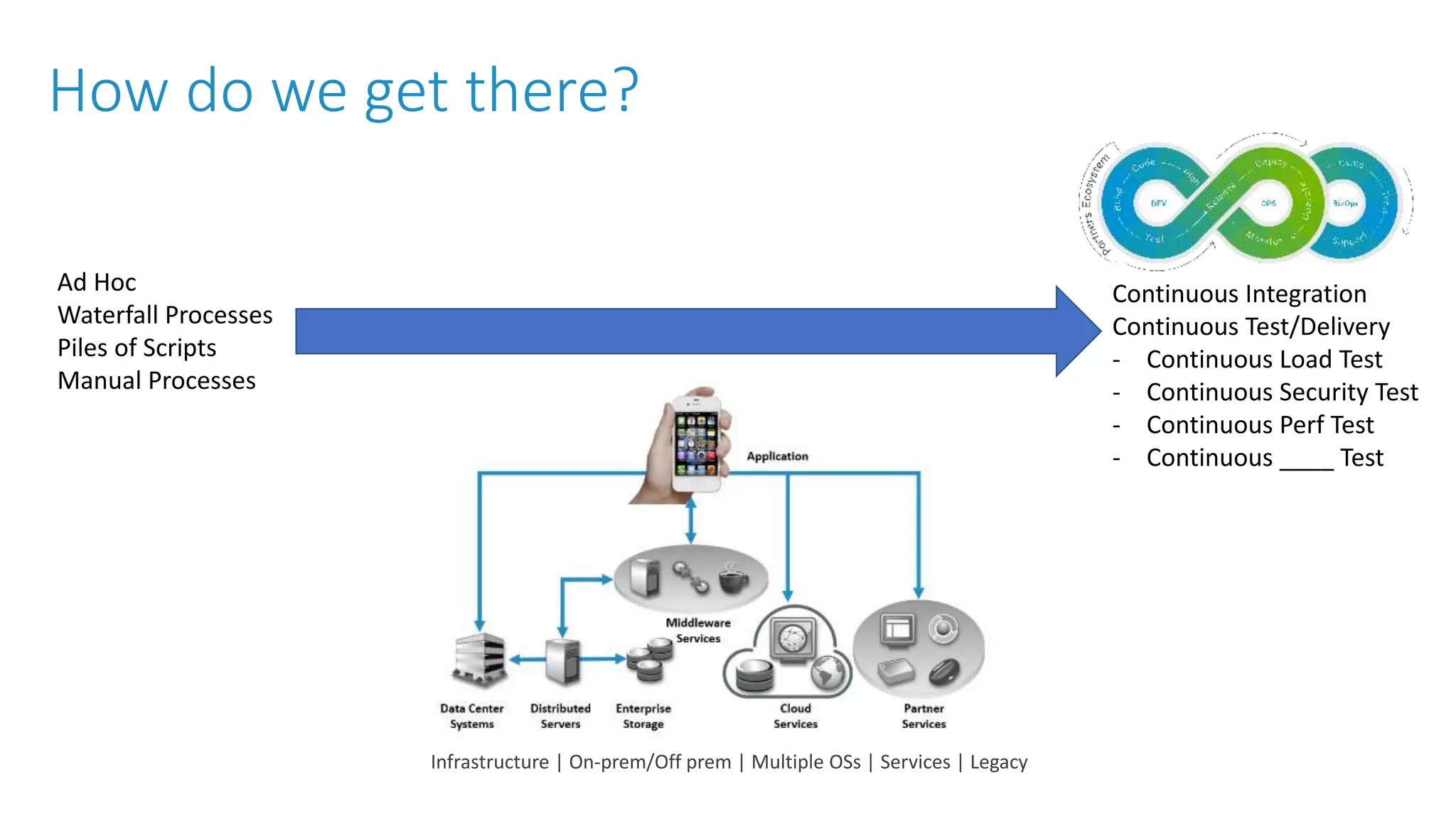

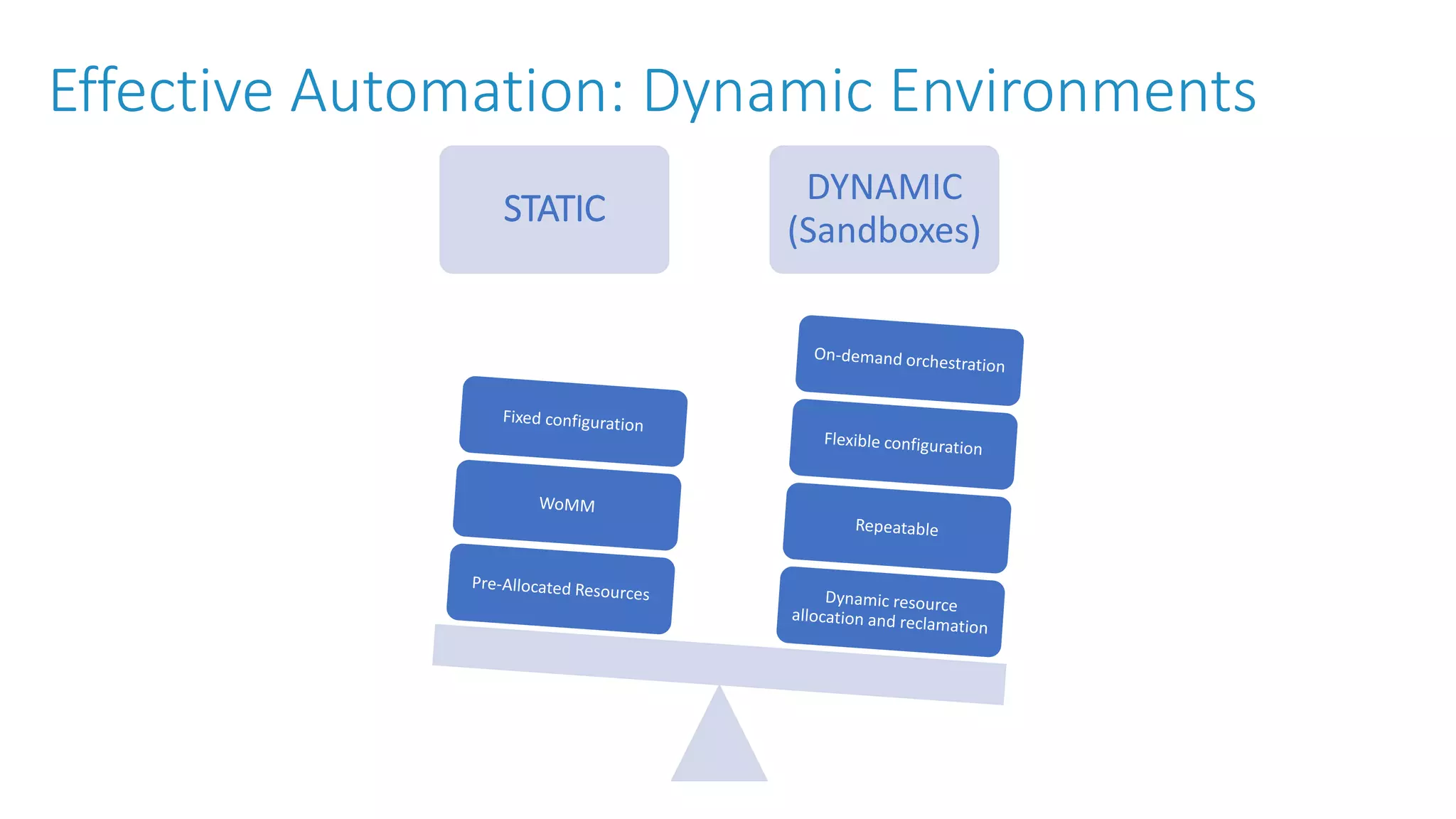

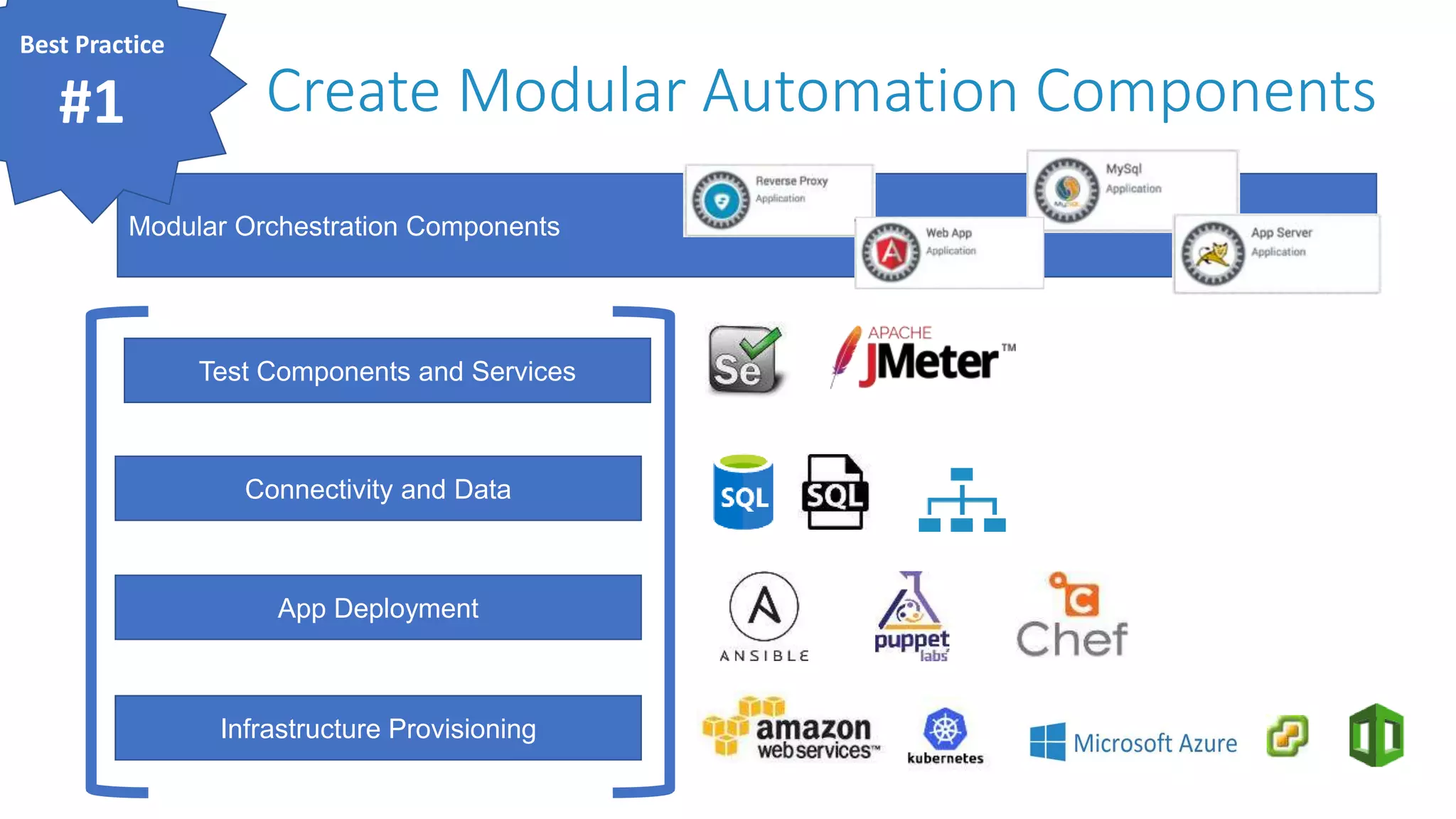

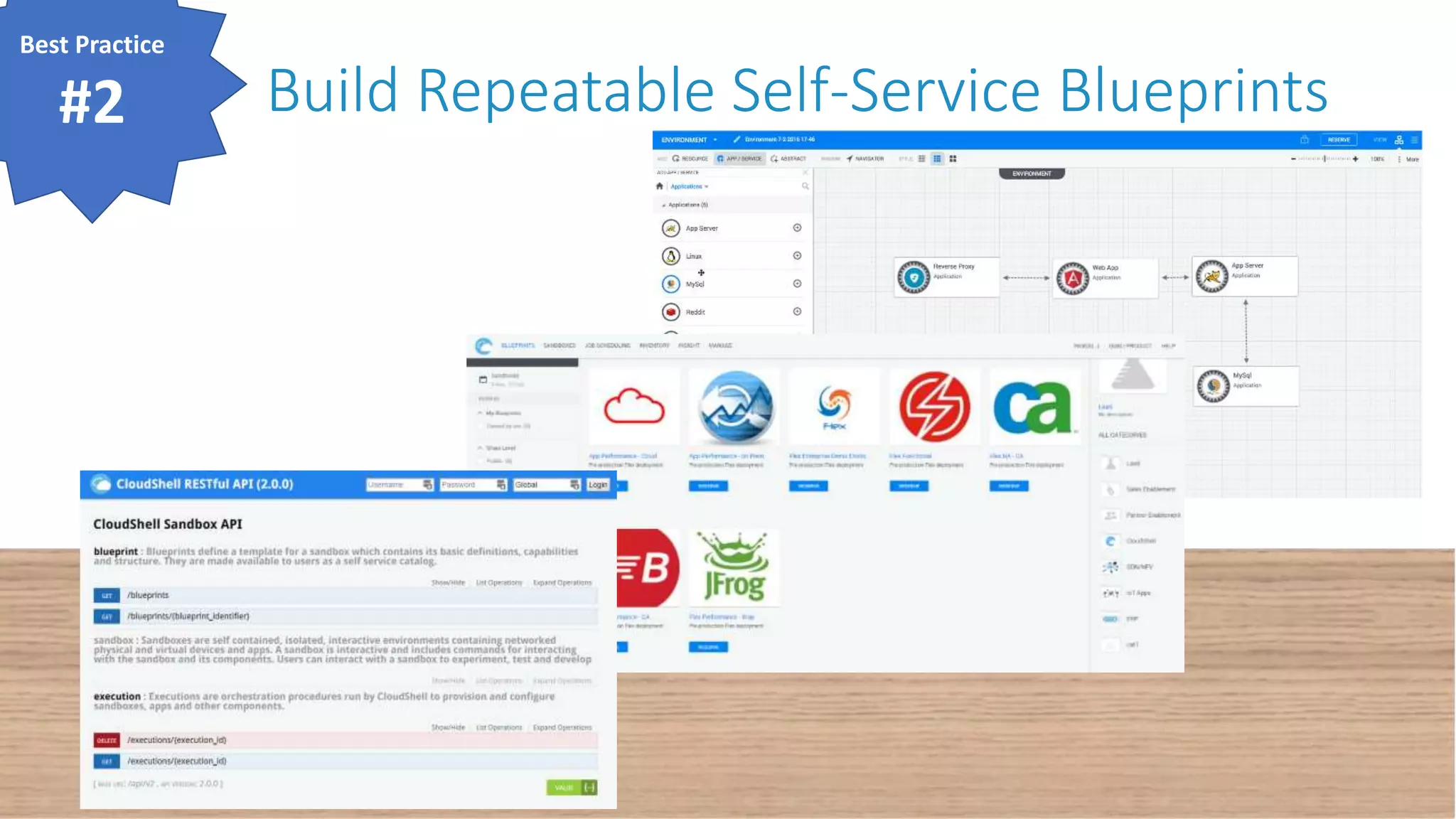

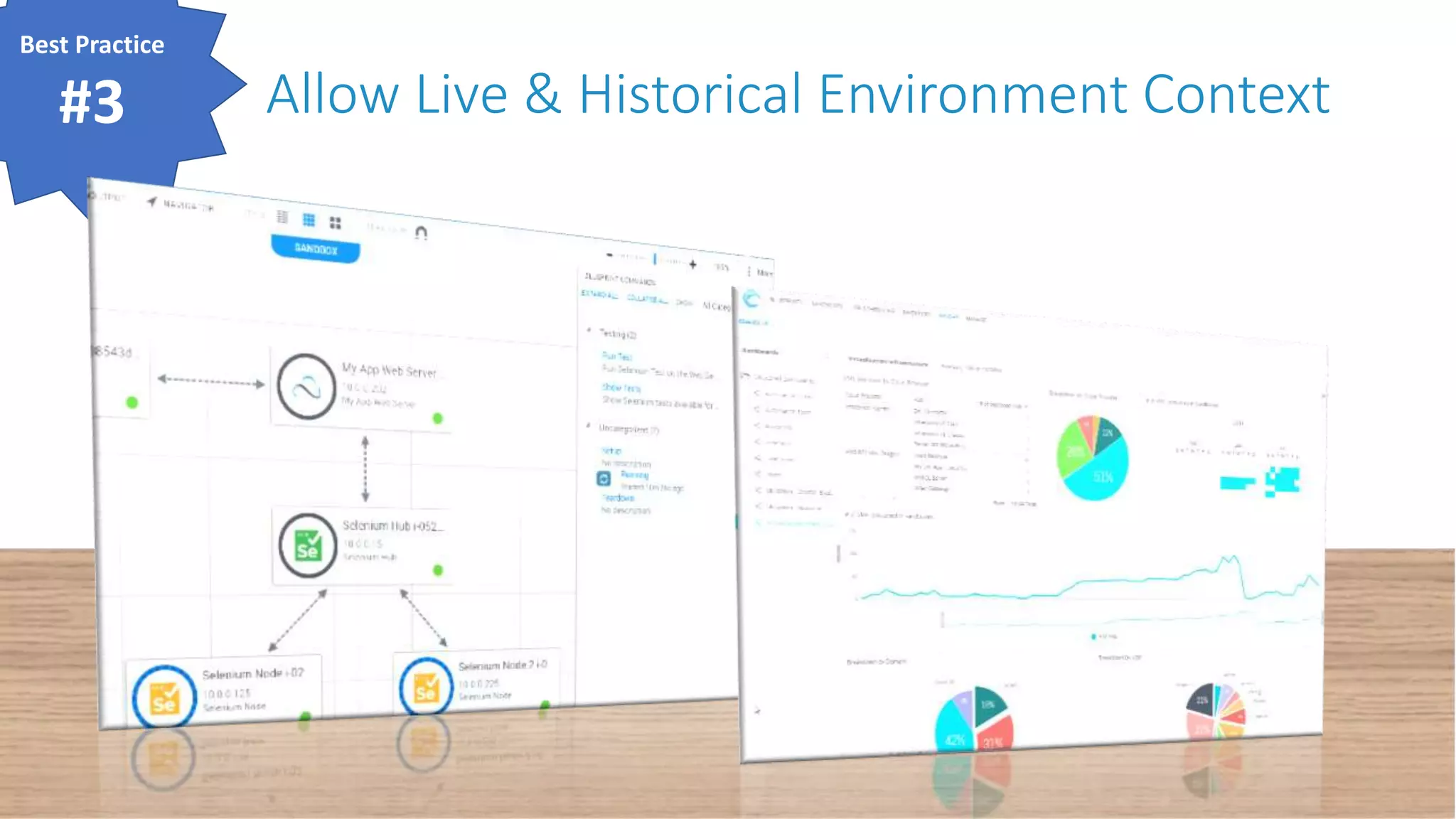

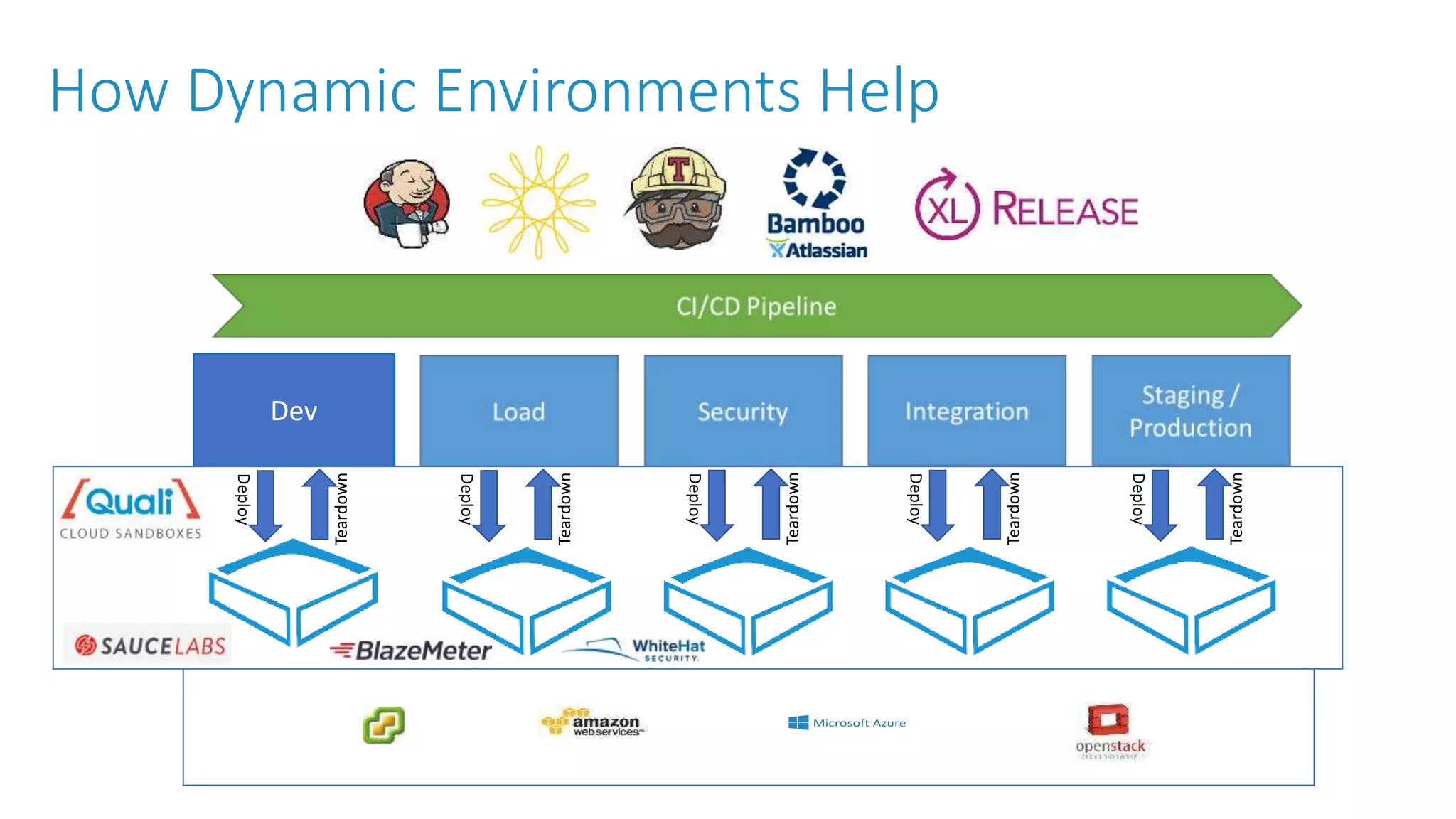

The document discusses improving automation in dynamic environments, drawing on a case study of a digital transformation at a large retail paper supply company. It argues that modular orchestration and self-service blueprints are needed to reduce the complexity of delivering development and testing environments and the time it takes. Key benefits include greater agility, improved reliability, and lower costs, achieved by eliminating static environments.