* 360Brew Ranking & Reasoning Engine: The New L2 Ranker
* Art of the Prompt: Crafting Effective Inputs
* Profile Optimization Checklist for Marketers
* Content Pre-Launch Checklist for Creators
* Content Creation for AI Engagement
* Optimizing Posts for Discovery and Engagement
* High-Impact Engagement Activities for Professionals

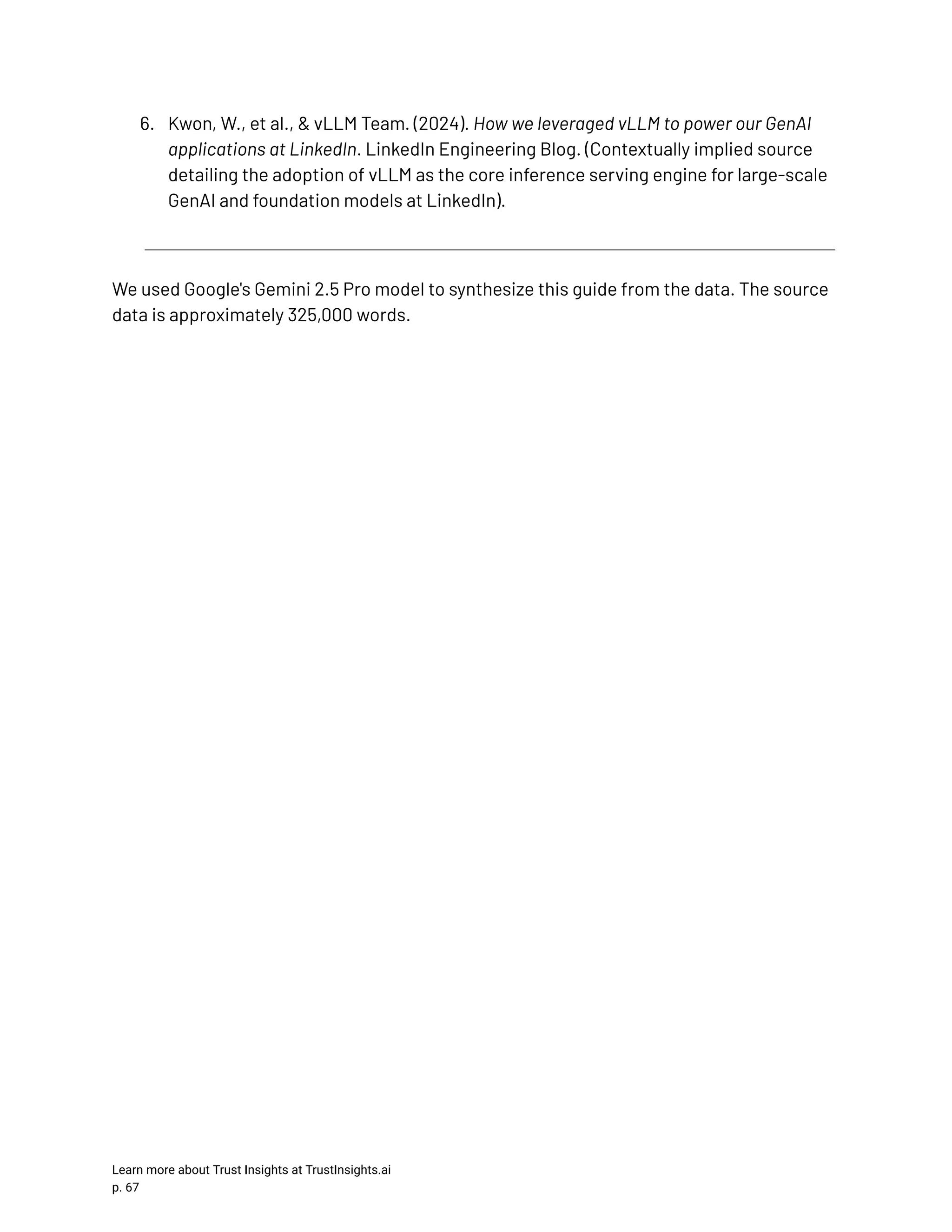

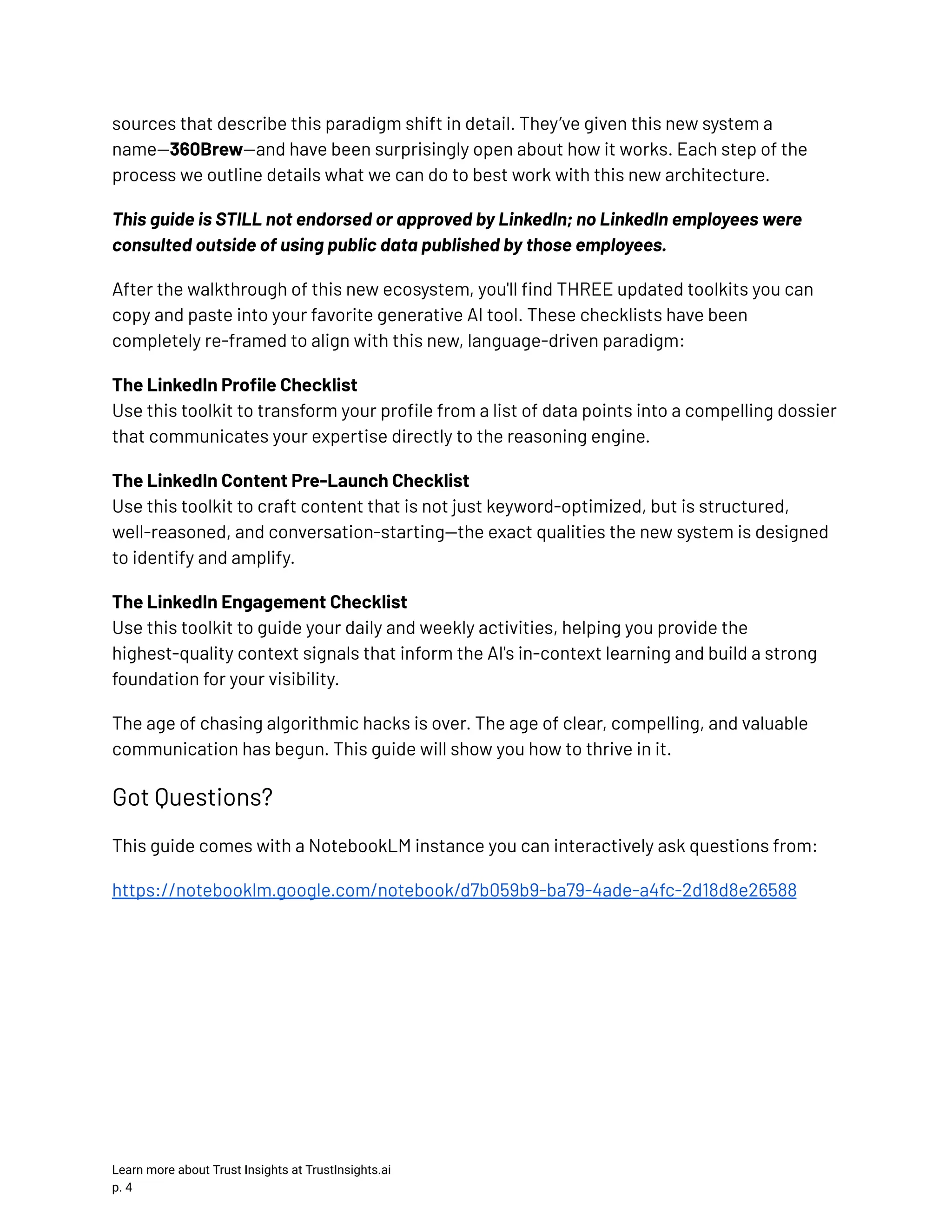

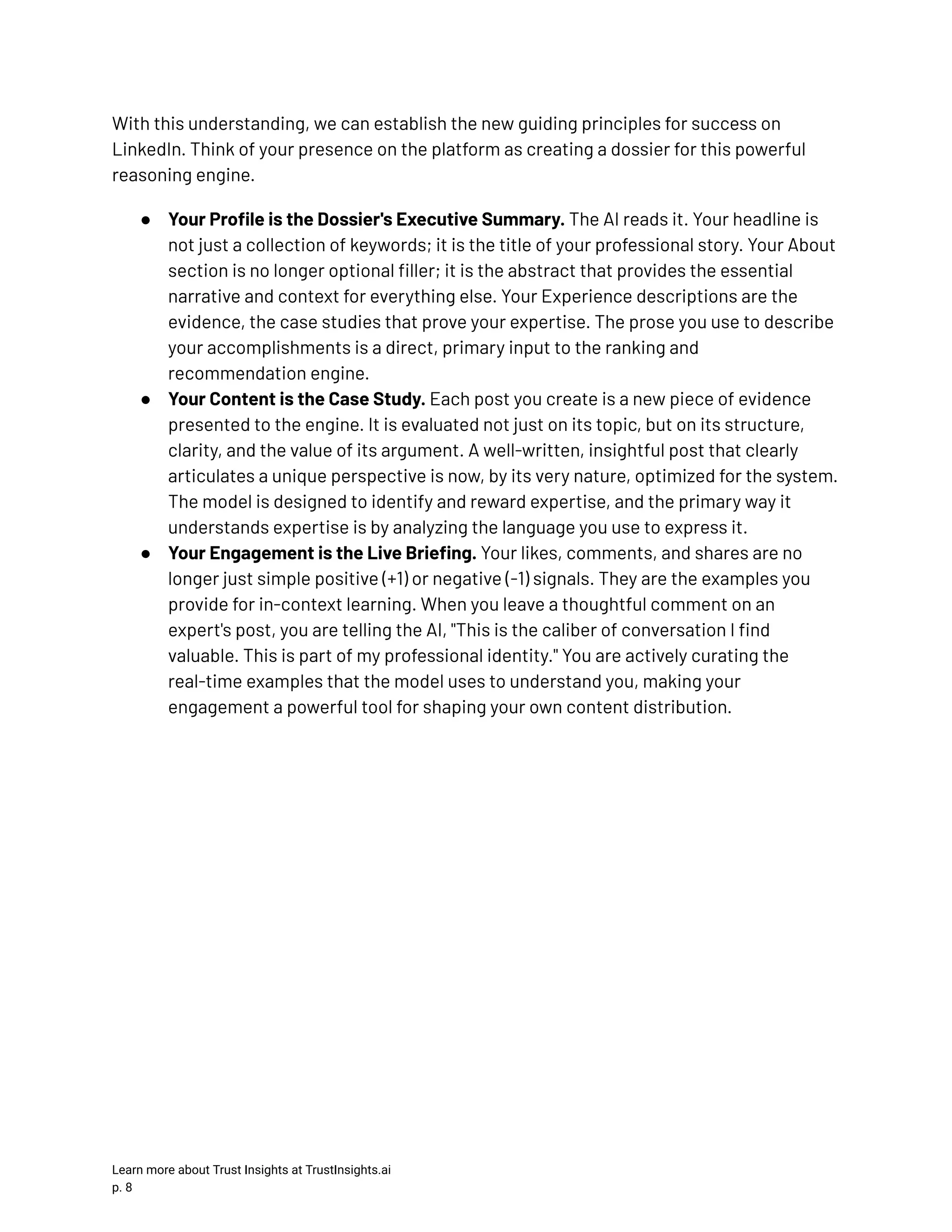

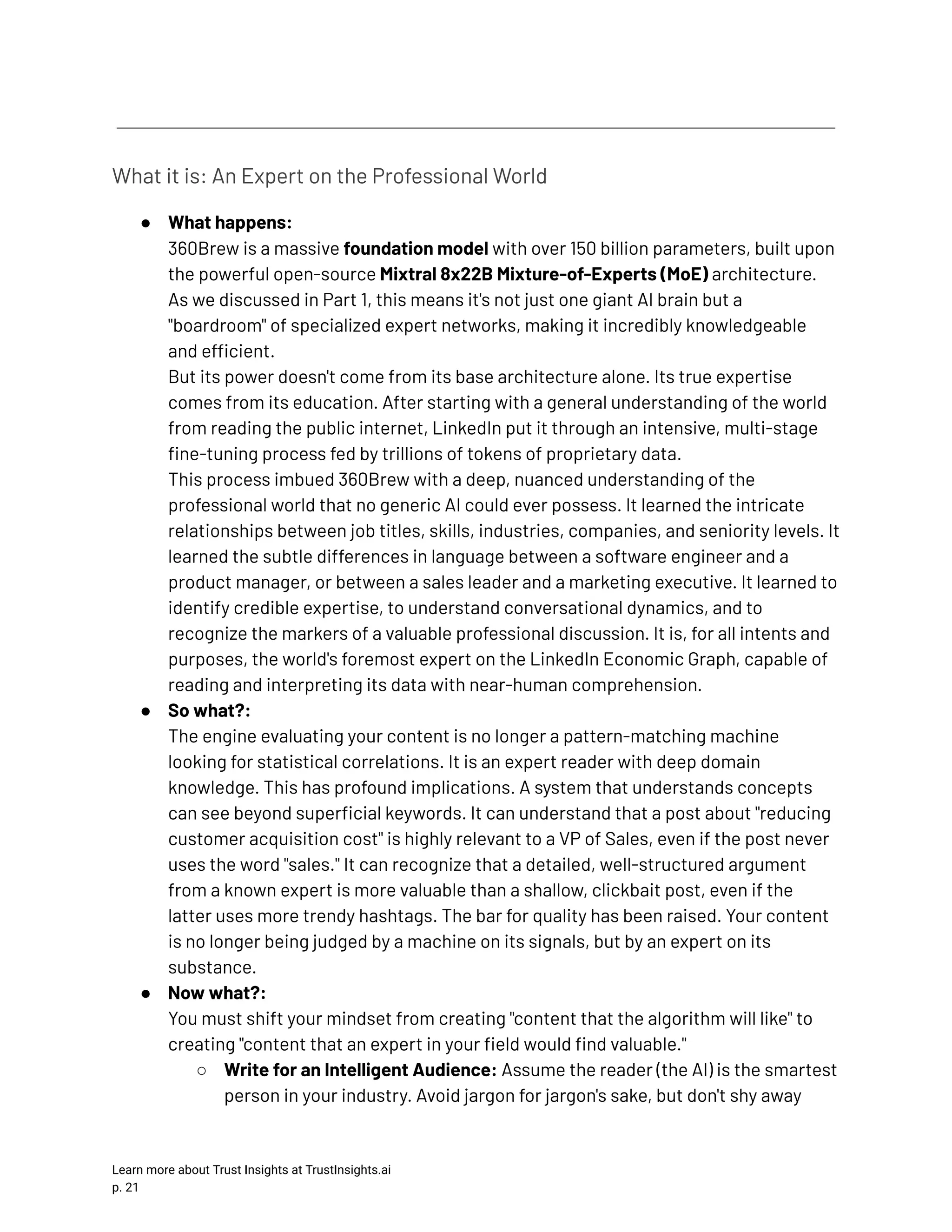

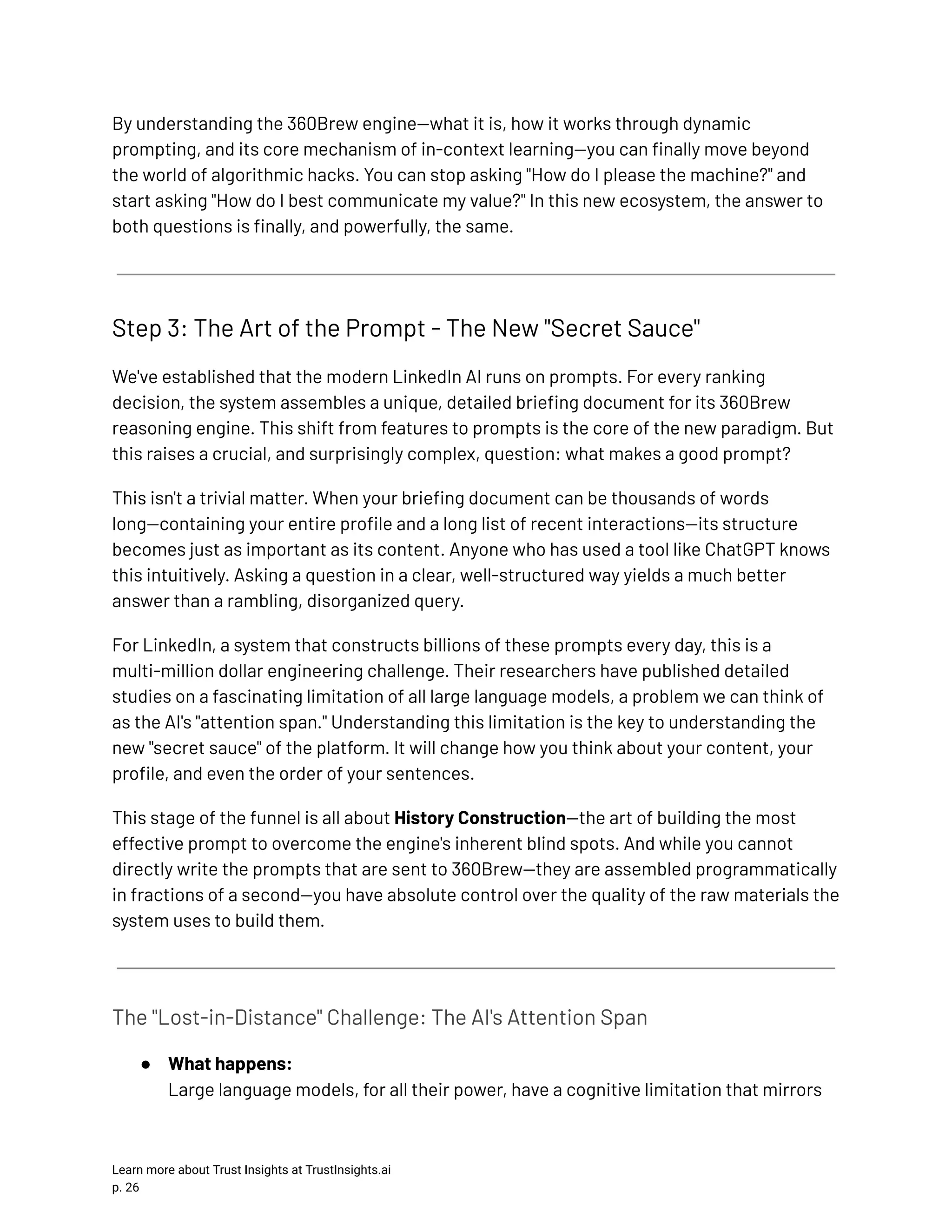

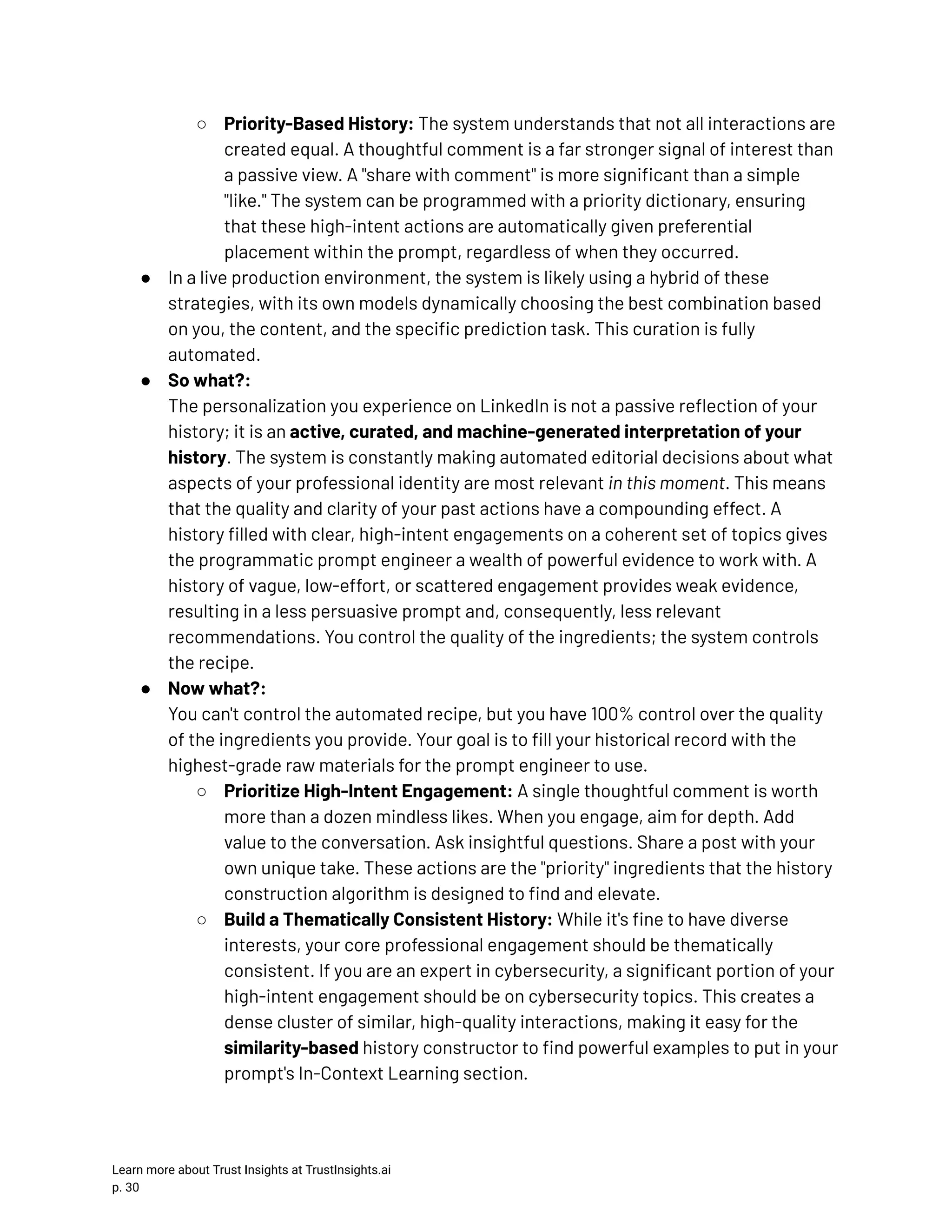

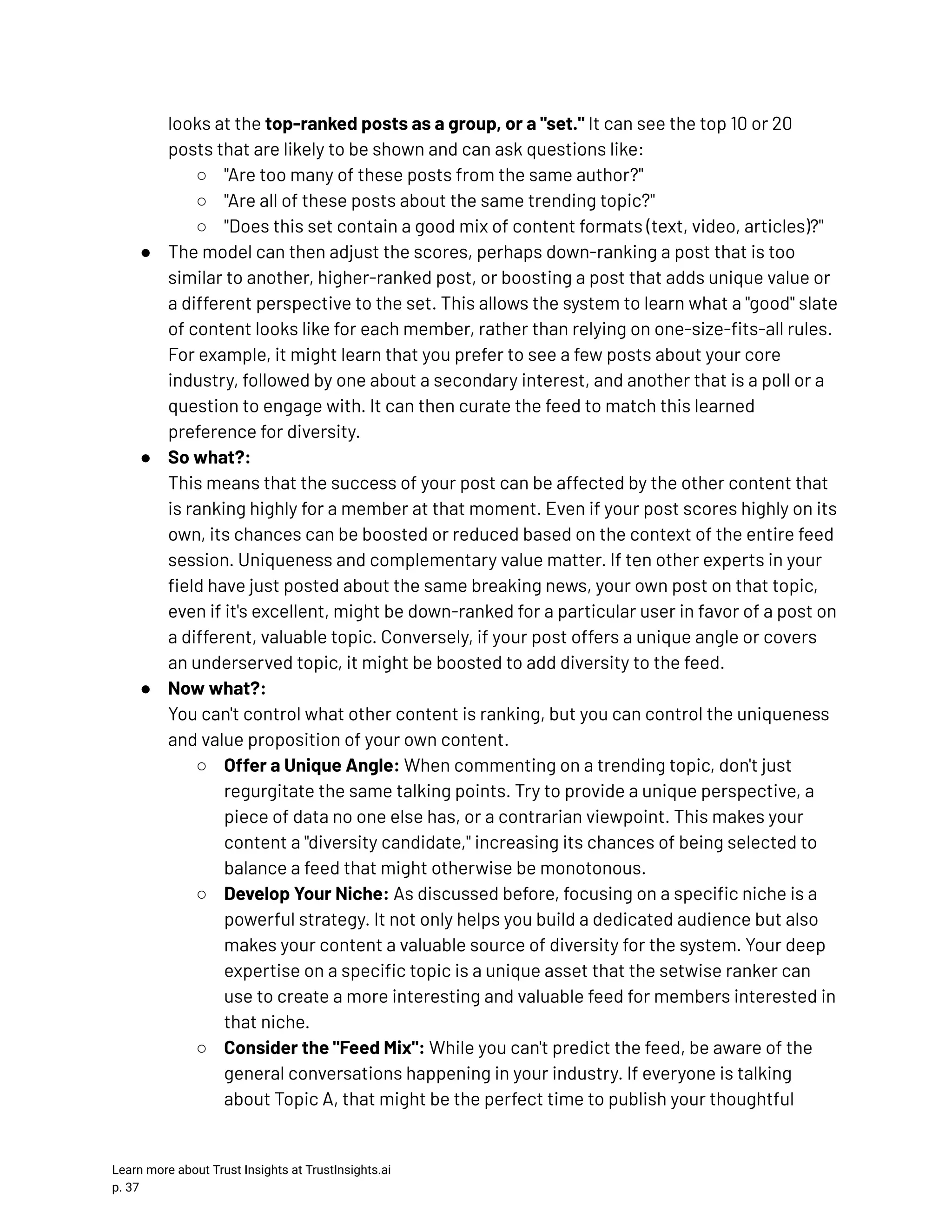

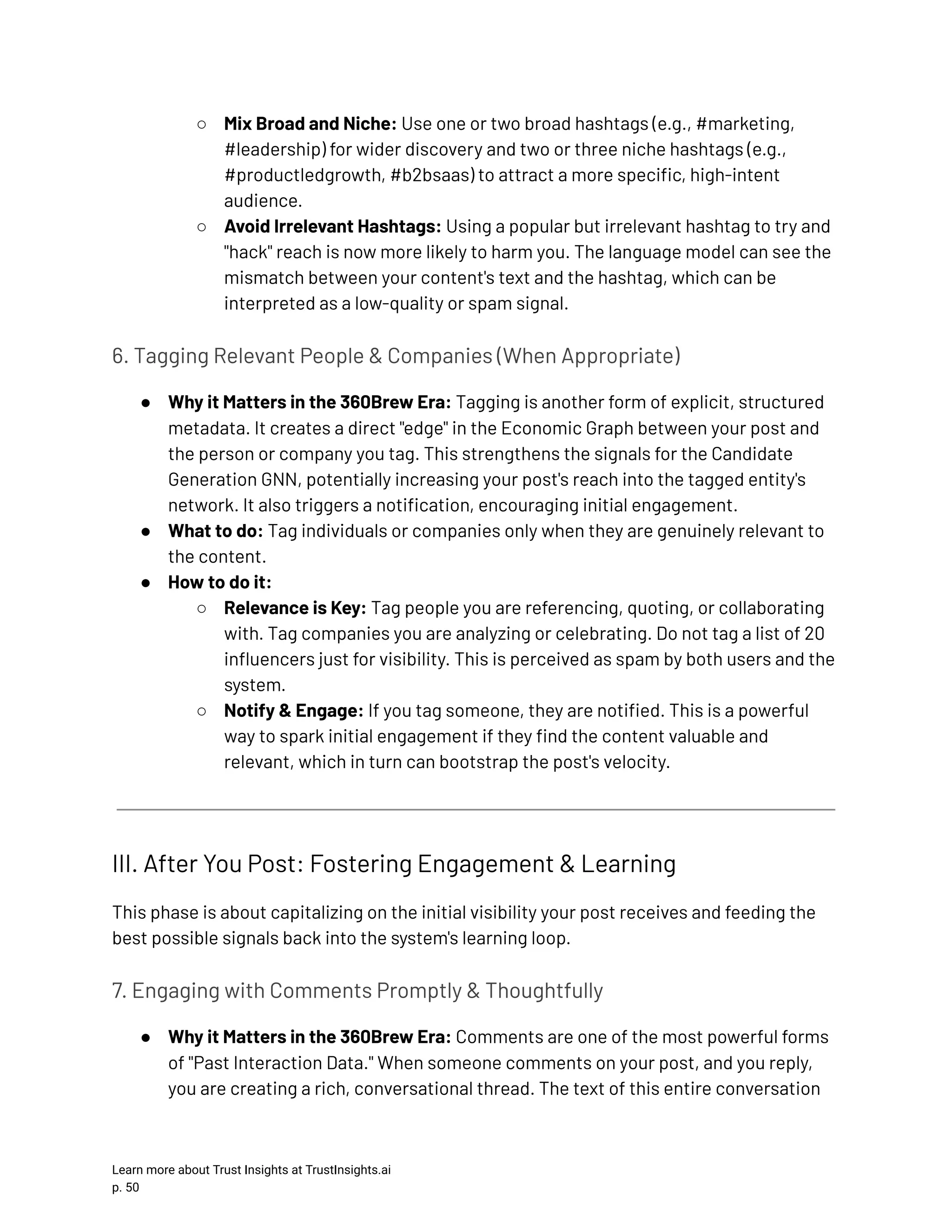

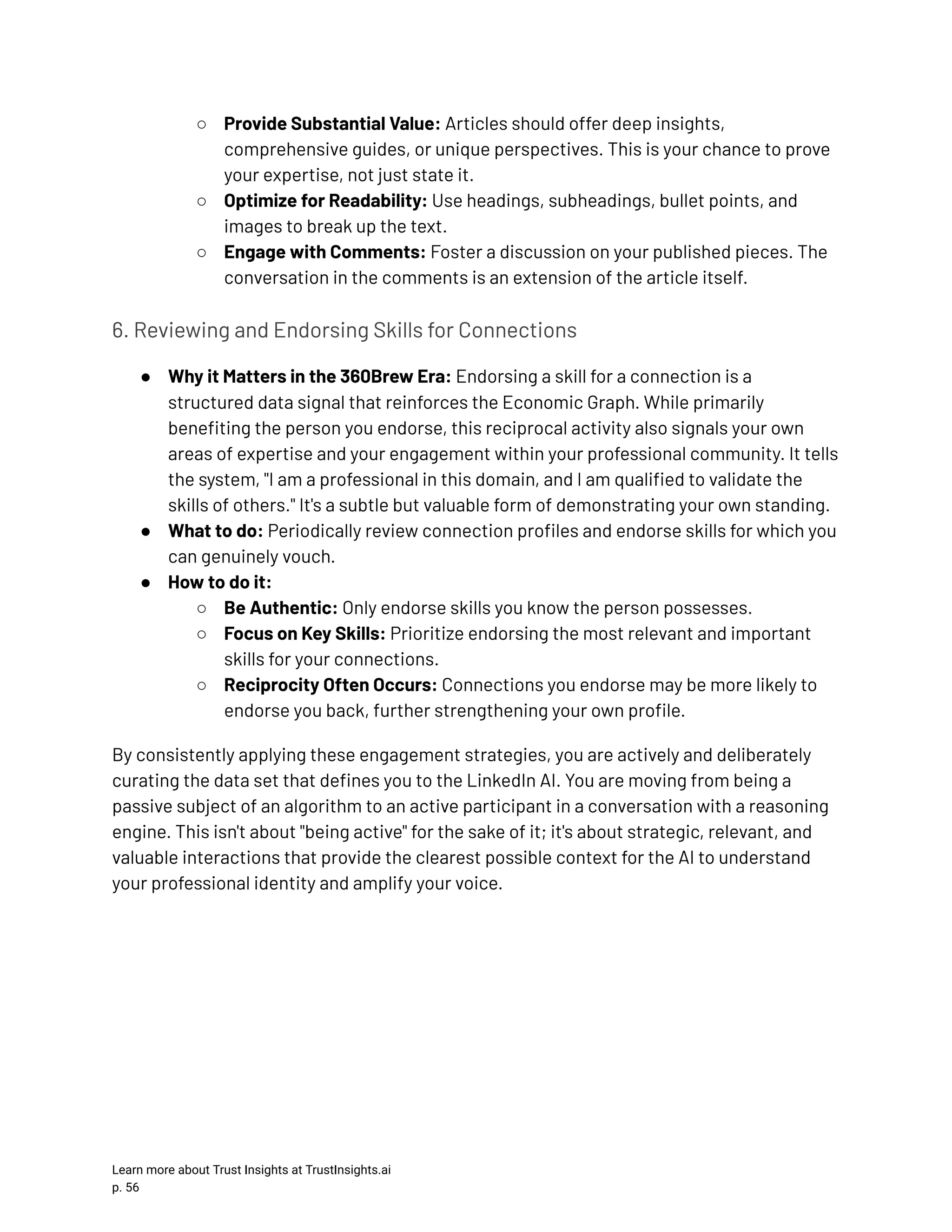

from using precise, professional language. Explain complex topics with clarity and depth. The model is trained to recognize and reward genuine expertise, which is demonstrated through the quality and coherence of your writing.

○ Demonstrate, Don't Just Declare: Don't just put "Marketing Expert" in your headline. Demonstrate that expertise in your content. Share unique insights, provide a contrarian (but well-reasoned) take on a popular topic, or create a detailed framework that helps others solve a problem. 360Brew is designed to evaluate the substance of your contribution, not just the labels you attach to it.

○ Focus on Your Niche: An expert model respects niche expertise. A deep, insightful post for a specific audience (e.g., "A Guide to ASC 606 Revenue Recognition for SaaS CFOs") is now more likely to be identified and shown to that exact audience than ever before. The model's deep domain knowledge allows it to perform this highly specific matchmaking with incredible accuracy. Don't be afraid to go deep; the system can now follow you there.

How it Works: The Power of the Prompt

● What happens: The most revolutionary aspect of 360Brew is how it receives information. It does not ingest a long list of numerical features. Instead, for each of the thousands of candidate posts, the system constructs a detailed prompt in natural language. This prompt is a dynamically generated briefing document, a bespoke dossier created for the sole purpose of evaluating a single post for a single member. Based on the research papers, this prompt is assembled from several key textual components:

○ The Instruction: A clear directive given to the model, such as, "You are provided a member's profile, their recent activity, and a new post. Your task is to predict whether the member will like, comment on, or share this post."

○ The Member Profile: The relevant parts of your profile, rendered as text. This includes your headline, your current and past roles, and likely key aspects of your About section.

○ The Past Interaction Data: A curated list of your most recent and relevant interactions on the platform, also rendered as text. For example: "Member has commented on the following posts: [Post content by Author A]... Member has liked the following posts: [Post content by Author B]..."

Learn more about Trust Insights at TrustInsights.ai

![p. 22](https://image.slidesharecdn.com/linkedinalgorithmguide-251102133919-094c6236/75/LinkedIn-Marketing-Guide-AI-Algorithm-Changes-23-2048.jpg)
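The assembly of these components can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not LinkedIn's implementation: the `Post` and `Member` classes, the `build_prompt` function, and the exact field layout (including the "Question" and "Answer" lines) are hypothetical and simply mirror the wording used in the guide's own illustrative prompts.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    """Hypothetical container for a post rendered as text."""
    author: str
    content: str
    topics: list[str] = field(default_factory=list)

    def render(self) -> str:
        return (f"[Author: {self.author}, Content: '{self.content}', "
                f"Topics: {', '.join(self.topics)}]")


@dataclass
class Member:
    """Hypothetical member profile plus past interactions grouped by action."""
    position: str
    company: str
    location: str
    # Maps an action phrase such as "commented on" to the posts it applied to.
    interactions: dict[str, list[Post]] = field(default_factory=dict)


# Instruction text quoted from the guide; everything else is an assumed layout.
INSTRUCTION = ("You are provided a member's profile, their recent activity, "
               "and a new post. Your task is to predict whether the member "
               "will like, comment on, or share this post.")


def build_prompt(member: Member, candidate: Post) -> str:
    """Assemble one natural-language prompt for one member and one post."""
    lines = [
        f"Instruction: {INSTRUCTION}",
        (f"Member Profile: Current position: {member.position}, "
         f"current company: {member.company}, Location: {member.location}."),
        "Past post interaction data:",
    ]
    for action, posts in member.interactions.items():
        rendered = " ".join(post.render() for post in posts)
        lines.append(f"Member has {action} the following posts: {rendered}")
    lines.append("Question: Will the member like, comment, share, or dismiss "
                 f"the following post: {candidate.render()}")
    lines.append("Answer:")
    return "\n".join(lines)


member = Member(
    position="Senior Content Marketing Manager",
    company="HubSpot",
    location="Boston, Massachusetts",
    interactions={
        "commented on": [Post("Ann Handley",
                              "Great content is about telling a true story well...",
                              ["content marketing", "B2B marketing"])],
        "dismissed": [Post("Gary Vaynerchuk",
                           "HUSTLE! There are no shortcuts...",
                           ["entrepreneurship", "motivation"])],
    },
)
candidate = Post("Rand Fishkin",
                 "The real opportunity is in AI-powered distribution...",
                 ["marketing strategy", "AI", "content distribution"])

prompt = build_prompt(member, candidate)
print(prompt)
```

The point of the sketch is that every input is plain text: changing a headline or adding one more interaction changes the briefing the model reads, with no numerical features involved.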

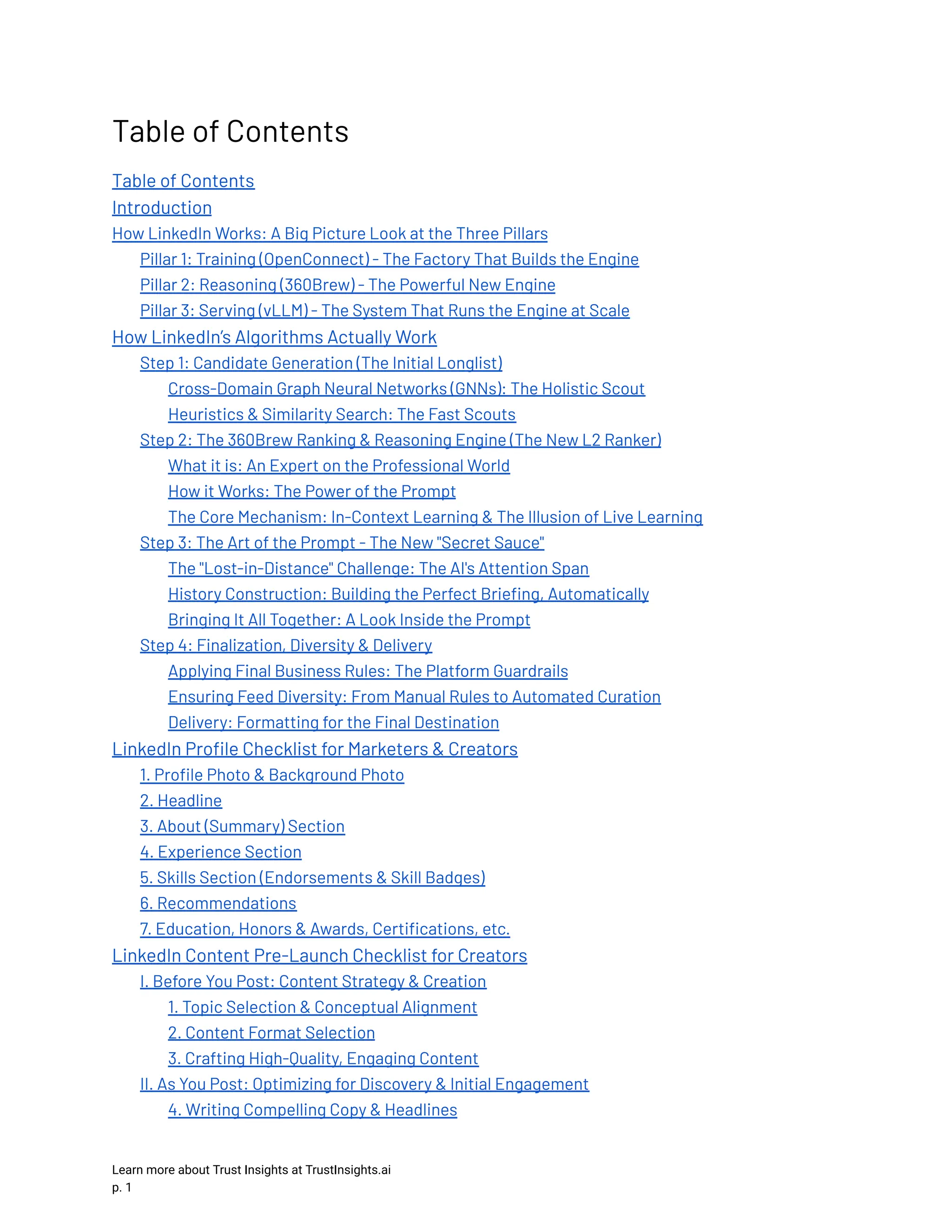

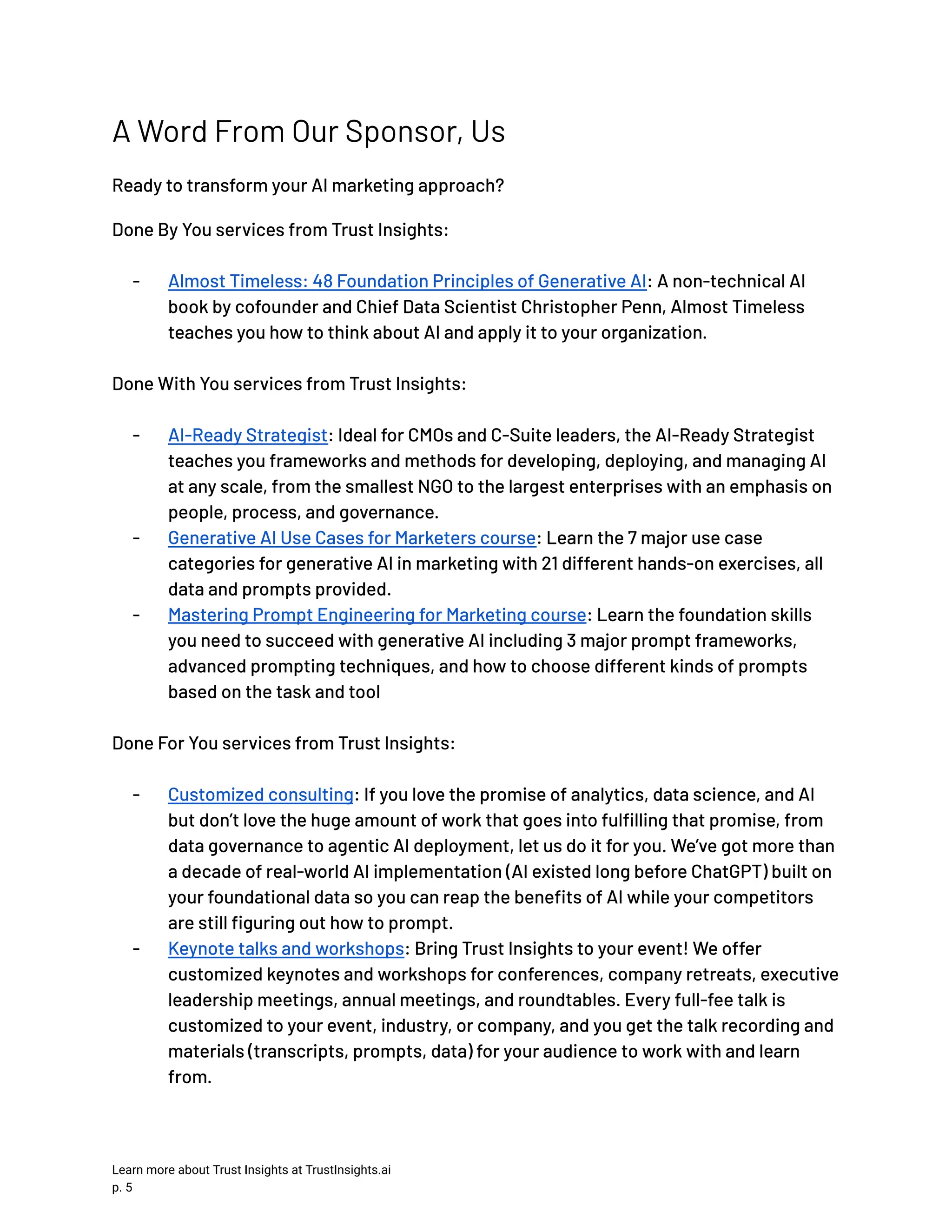

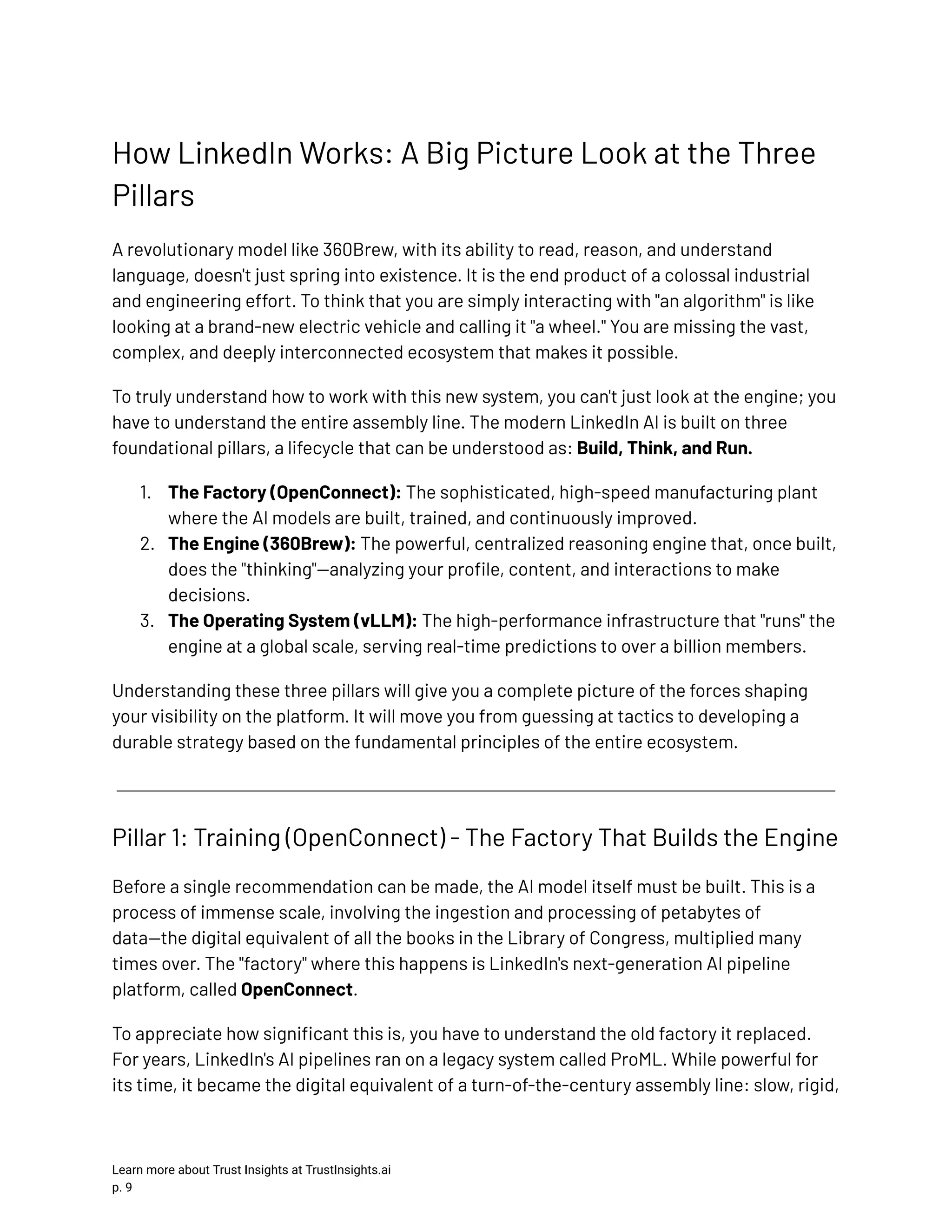

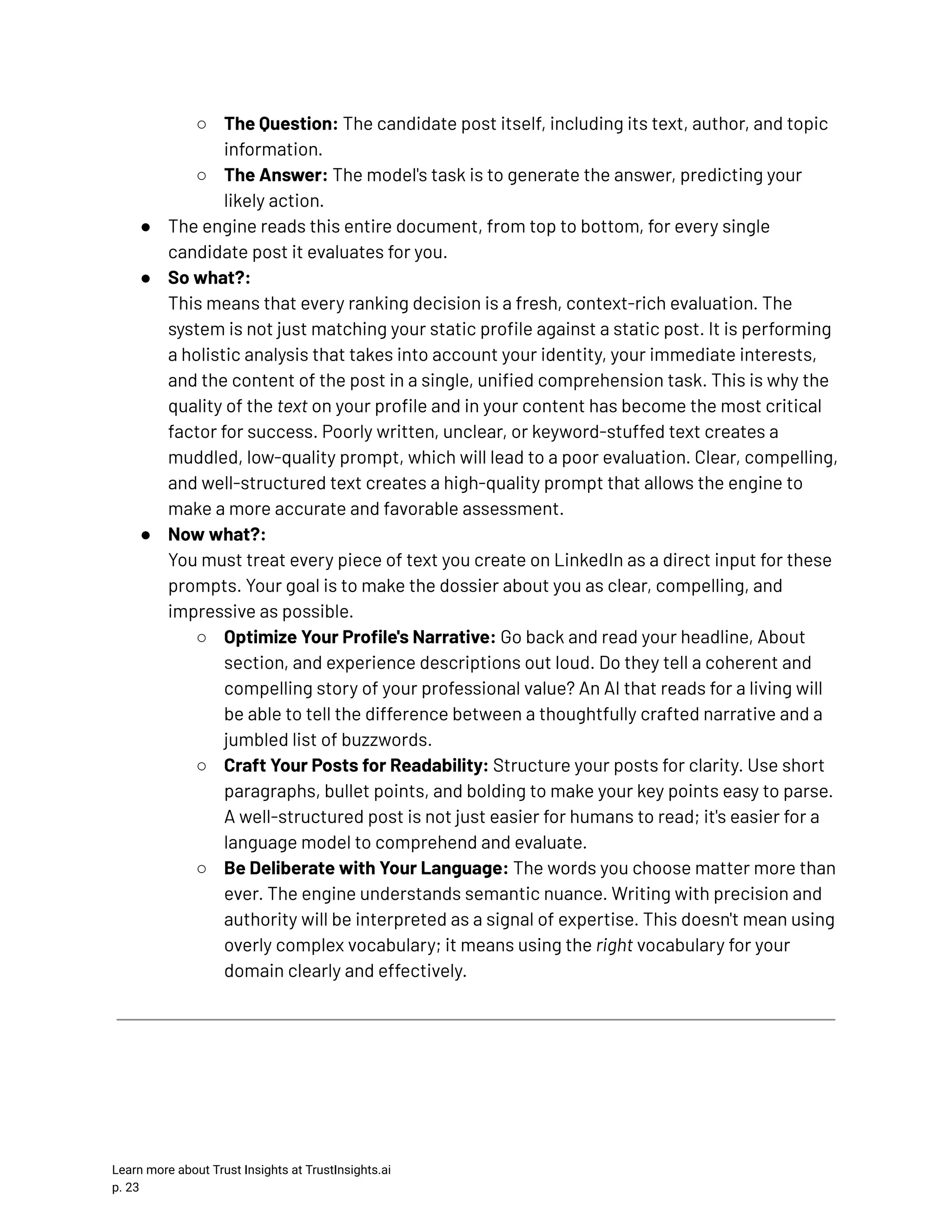

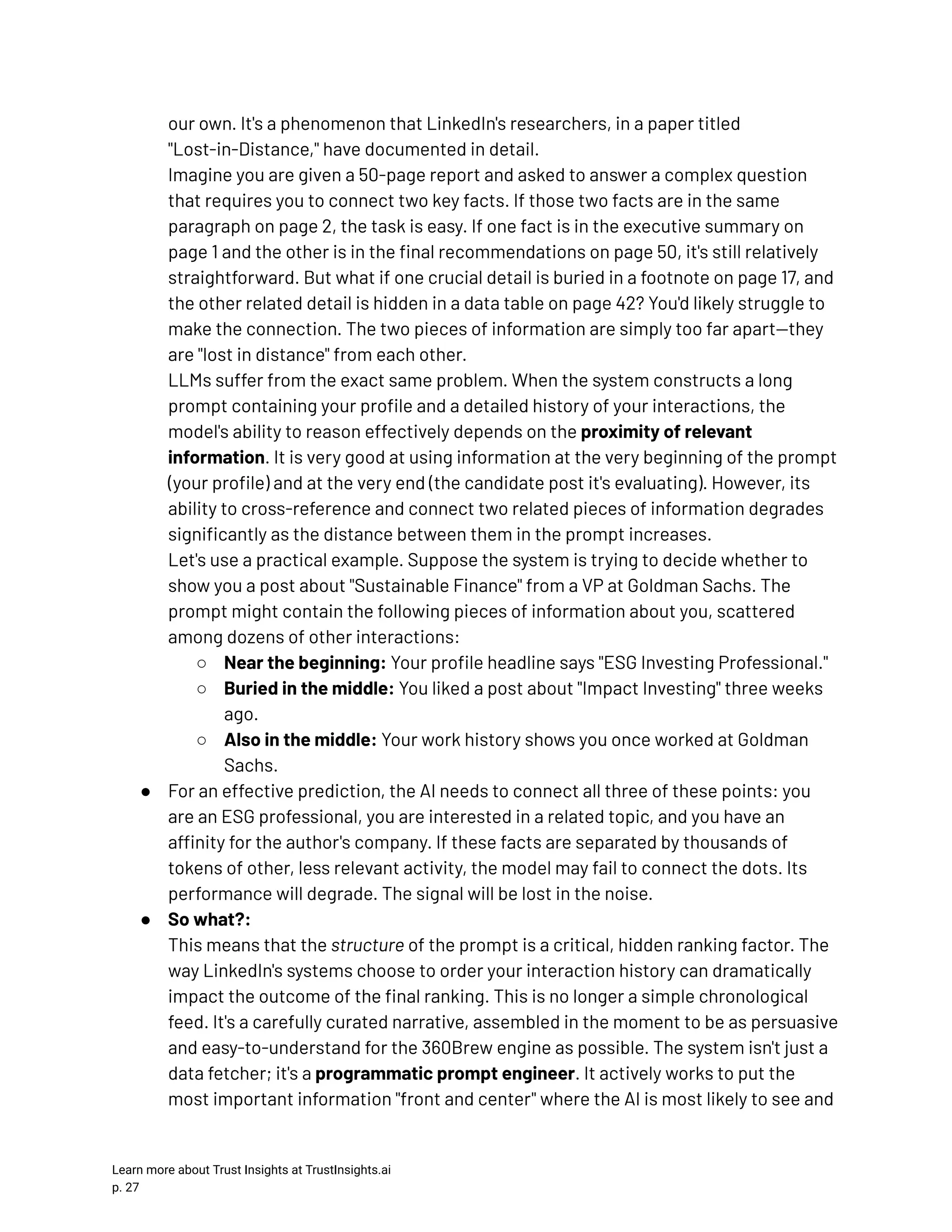

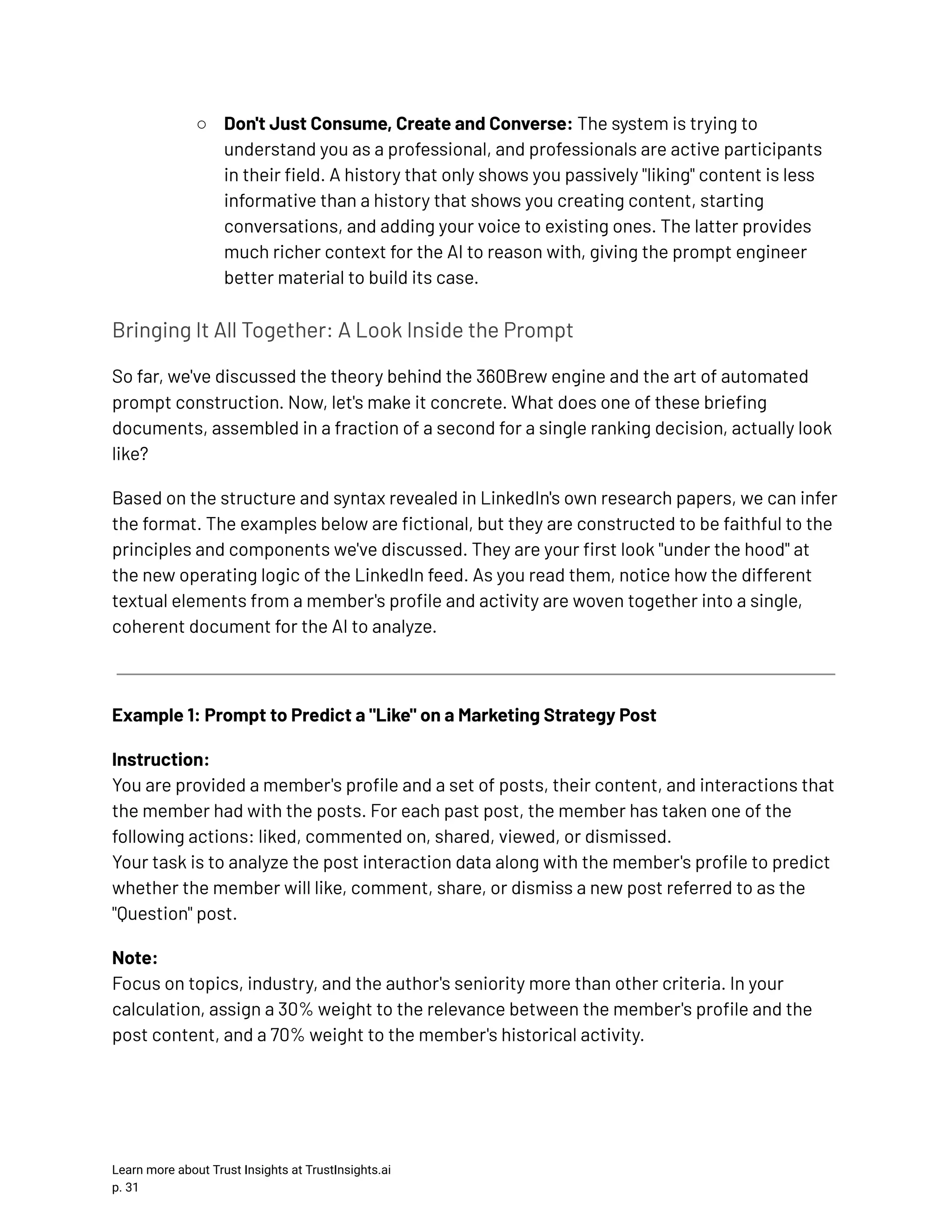

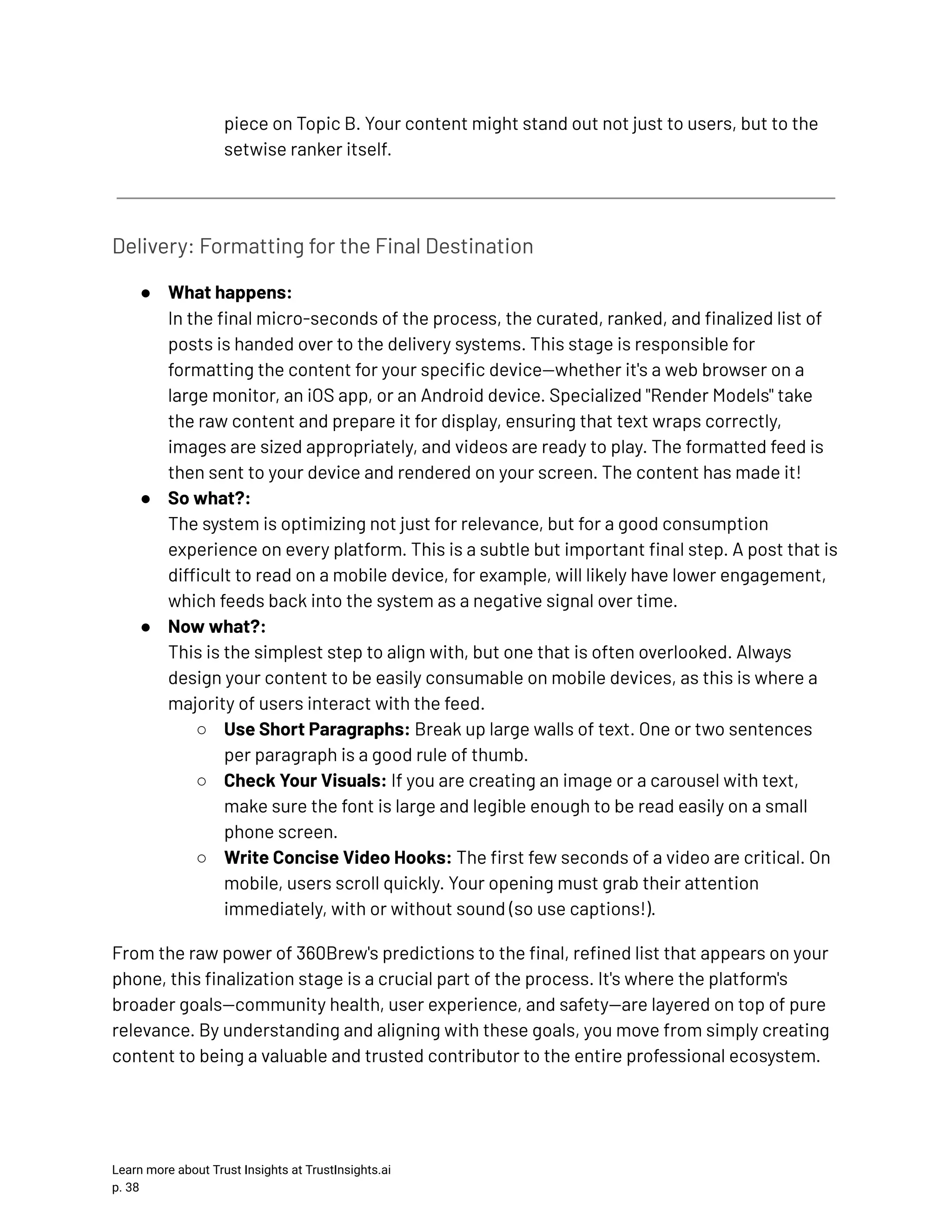

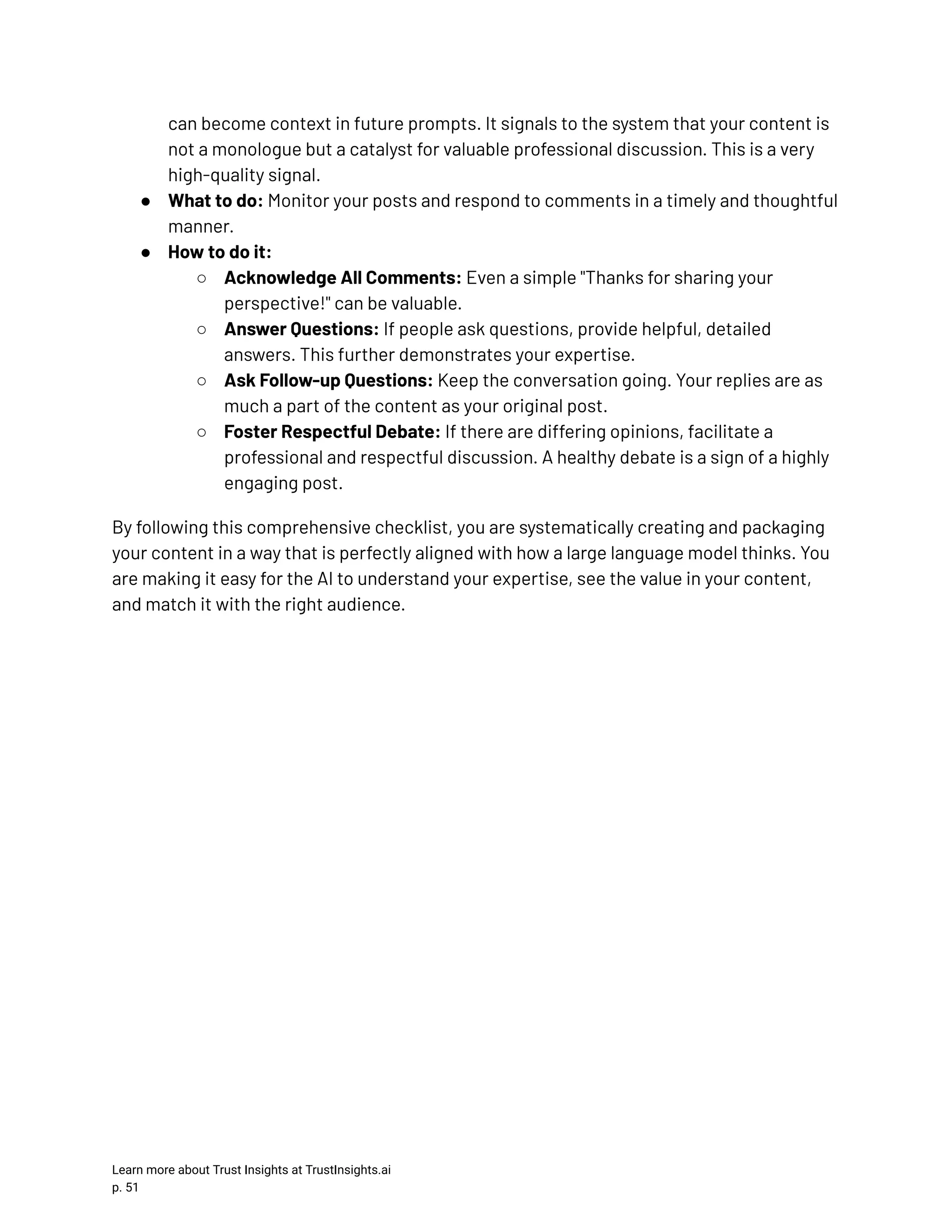

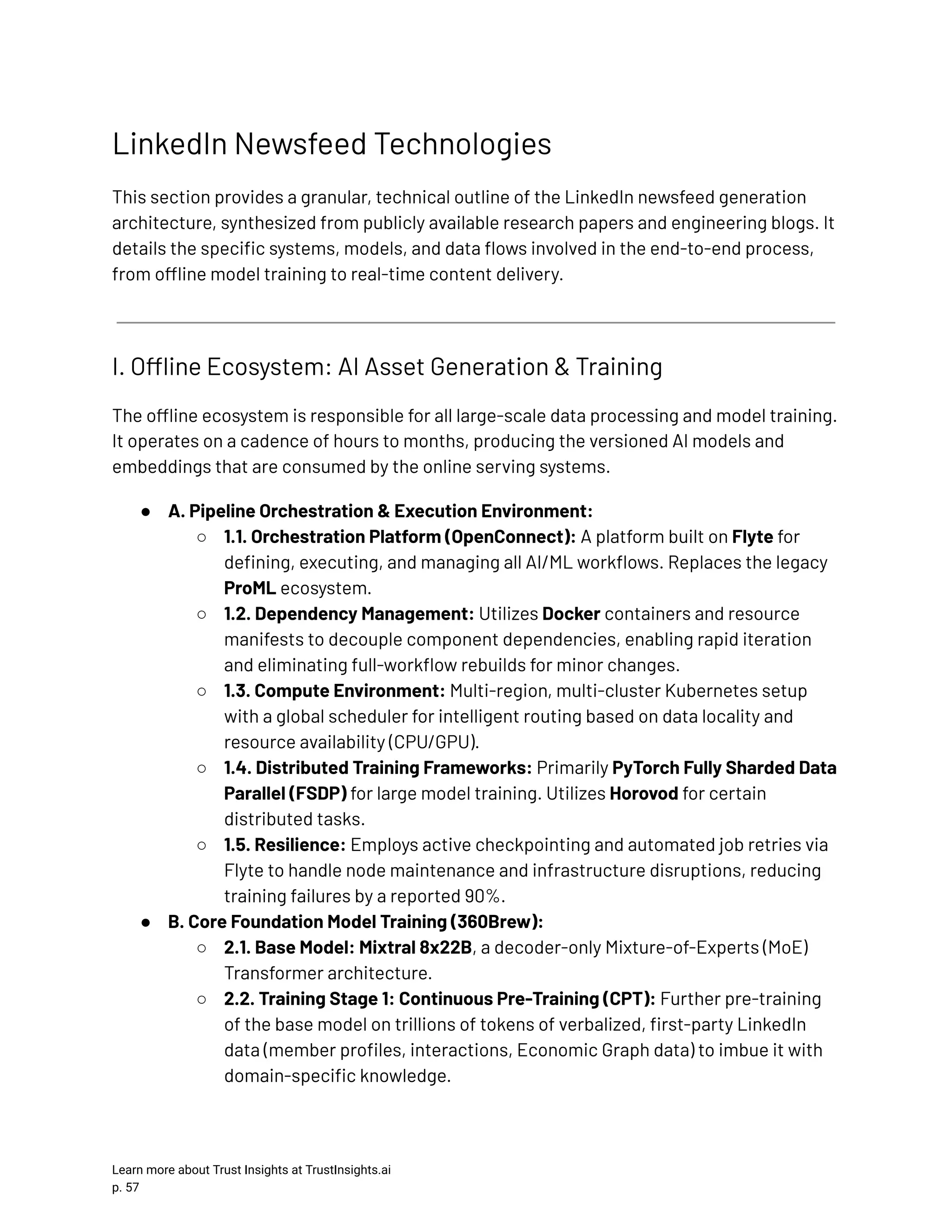

Member Profile: Current position: Senior Content Marketing Manager, current company: HubSpot, Location: Boston, Massachusetts.

Past post interaction data:

Member has commented on the following posts: [Author: Ann Handley, Content: 'Great content isn't about storytelling; it's about telling a true story well. In B2B, that means focusing on customer success...', Topics: content marketing, B2B marketing]

Member has liked the following posts: [Author: Christopher Penn, Content: 'Ran the numbers on the latest generative AI model's impact on SEO. The results are surprising... see the full analysis here...', Topics: generative AI, SEO, marketing analytics]

Member has dismissed the following posts: [Author: Gary Vaynerchuk, Content: 'HUSTLE! There are no shortcuts. Stop complaining and start doing...', Topics: entrepreneurship, motivation]

Question: Will the member like, comment, share, or dismiss the following post: [Author: Rand Fishkin, Content: 'Everyone is focused on AI-generated content, but the real opportunity is in AI-powered distribution. Here's a framework for thinking about it...', Topics: marketing strategy, AI, content distribution]

Answer: The member will like

Analysis of the Prompt: In this first example, you can see the core components in action. The Member Profile establishes a clear identity ("Senior Content Marketing Manager"). The Past post interaction data provides powerful in-context examples: the member engages with industry leaders (Ann Handley, Christopher Penn) on core topics (content marketing, AI, SEO) but dismisses generic motivational content. The Question presents a post that is a close topical and conceptual match. The engine reads this entire narrative and, using its reasoning capabilities, predicts a high-intent action, in this case a "like."

Example 2: Prompt to Predict a "Comment" on a Product Marketing Post

Instruction: You are provided a member's profile and a set of posts, their content, and interactions that the member had with the posts. For each past post, the member has taken one of the

![p. 32](https://image.slidesharecdn.com/linkedinalgorithmguide-251102133919-094c6236/75/LinkedIn-Marketing-Guide-AI-Algorithm-Changes-33-2048.jpg)

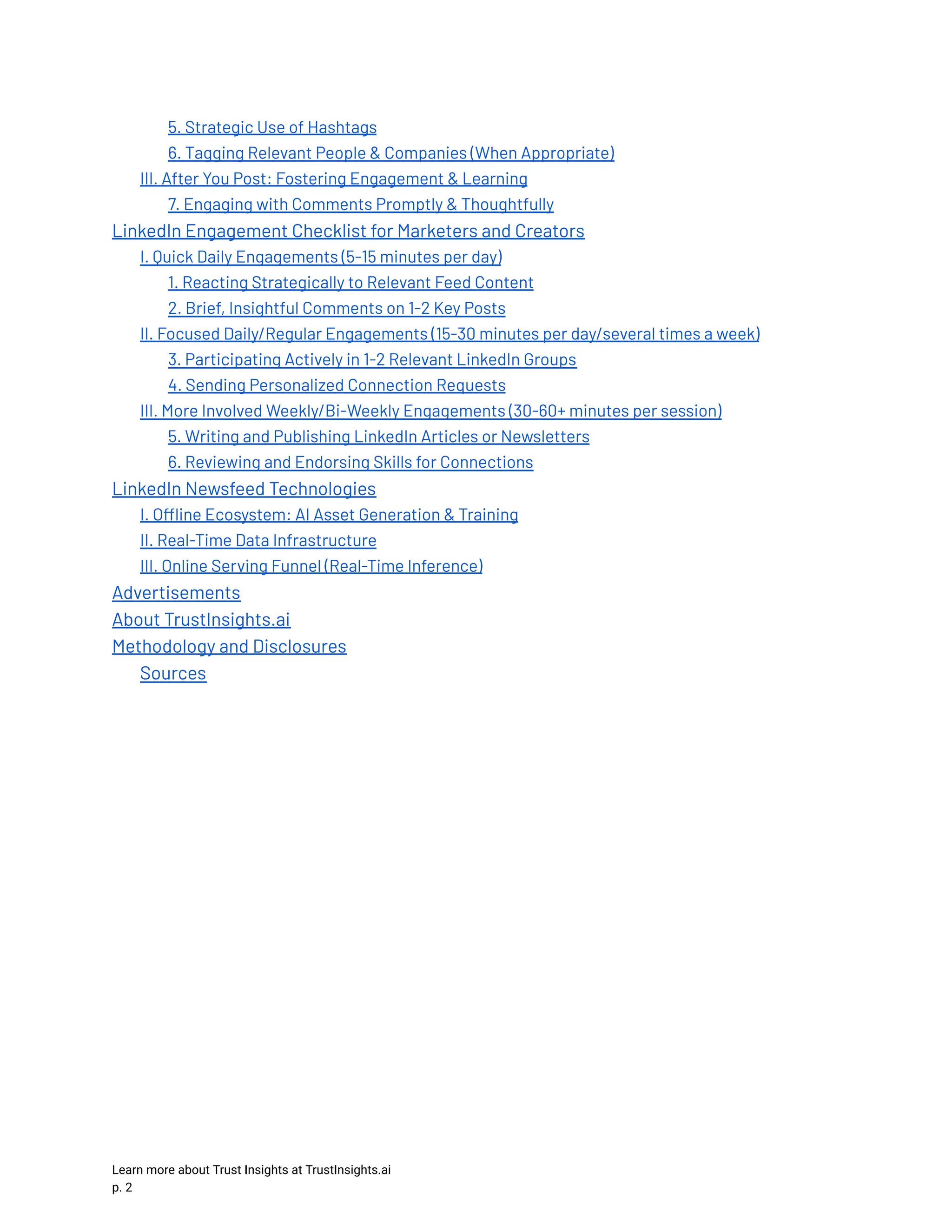

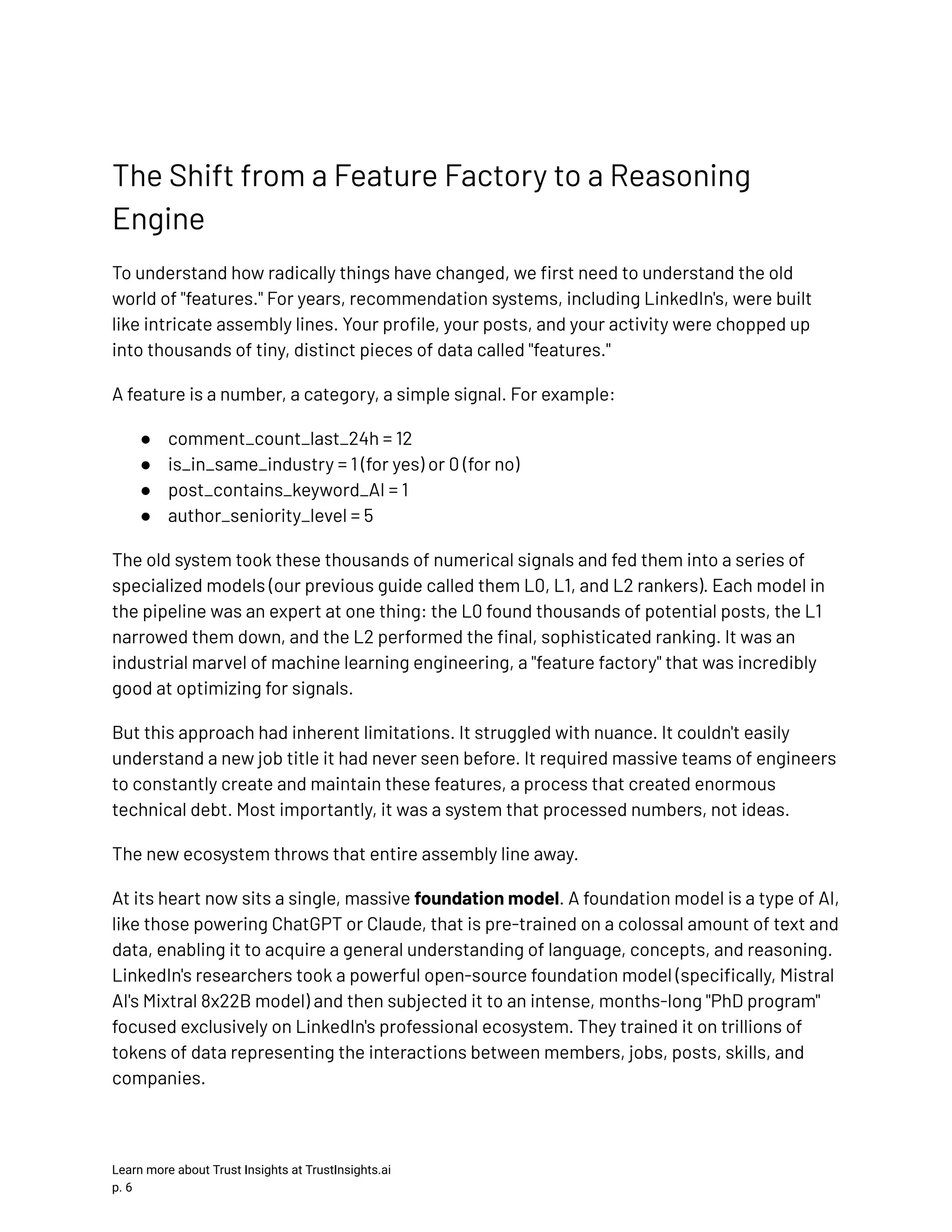

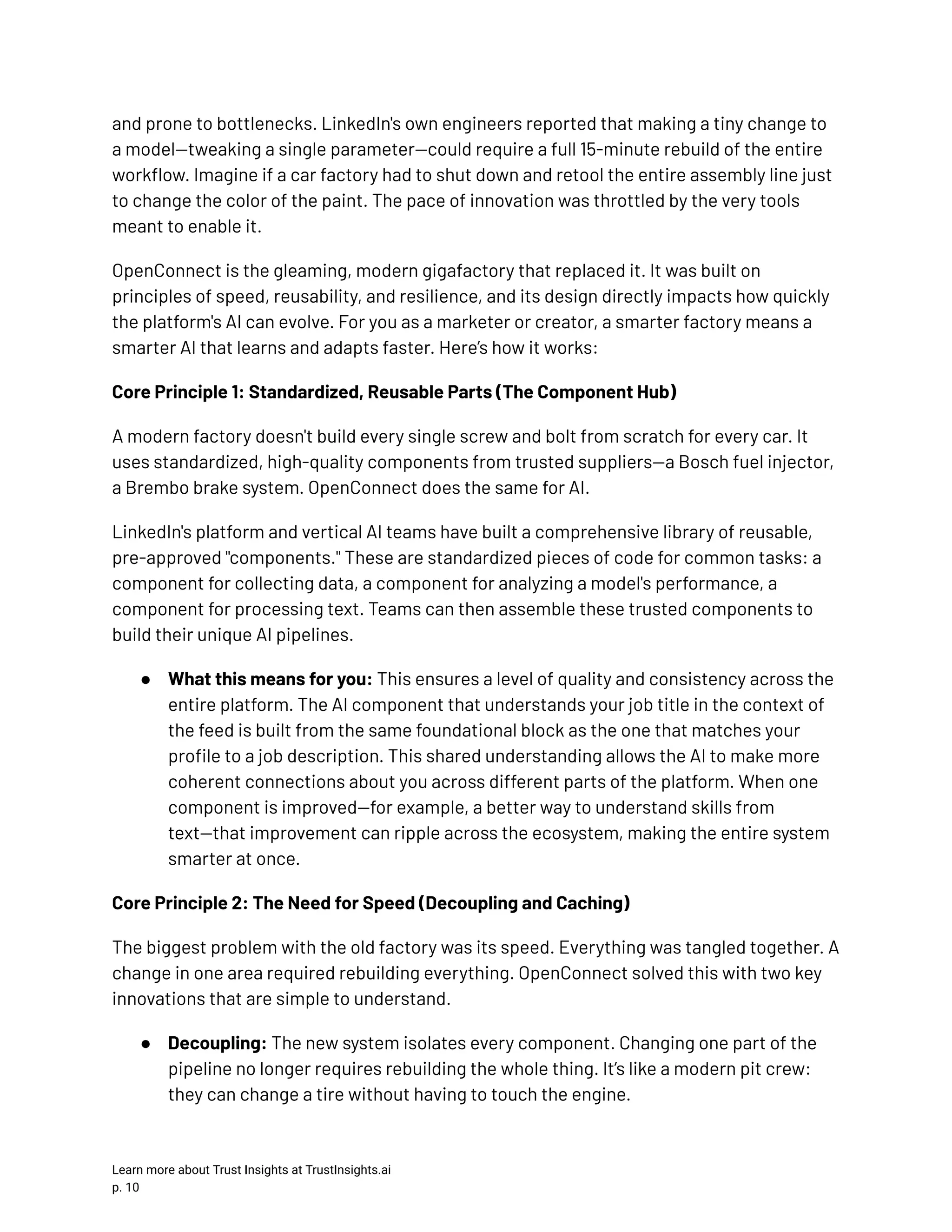

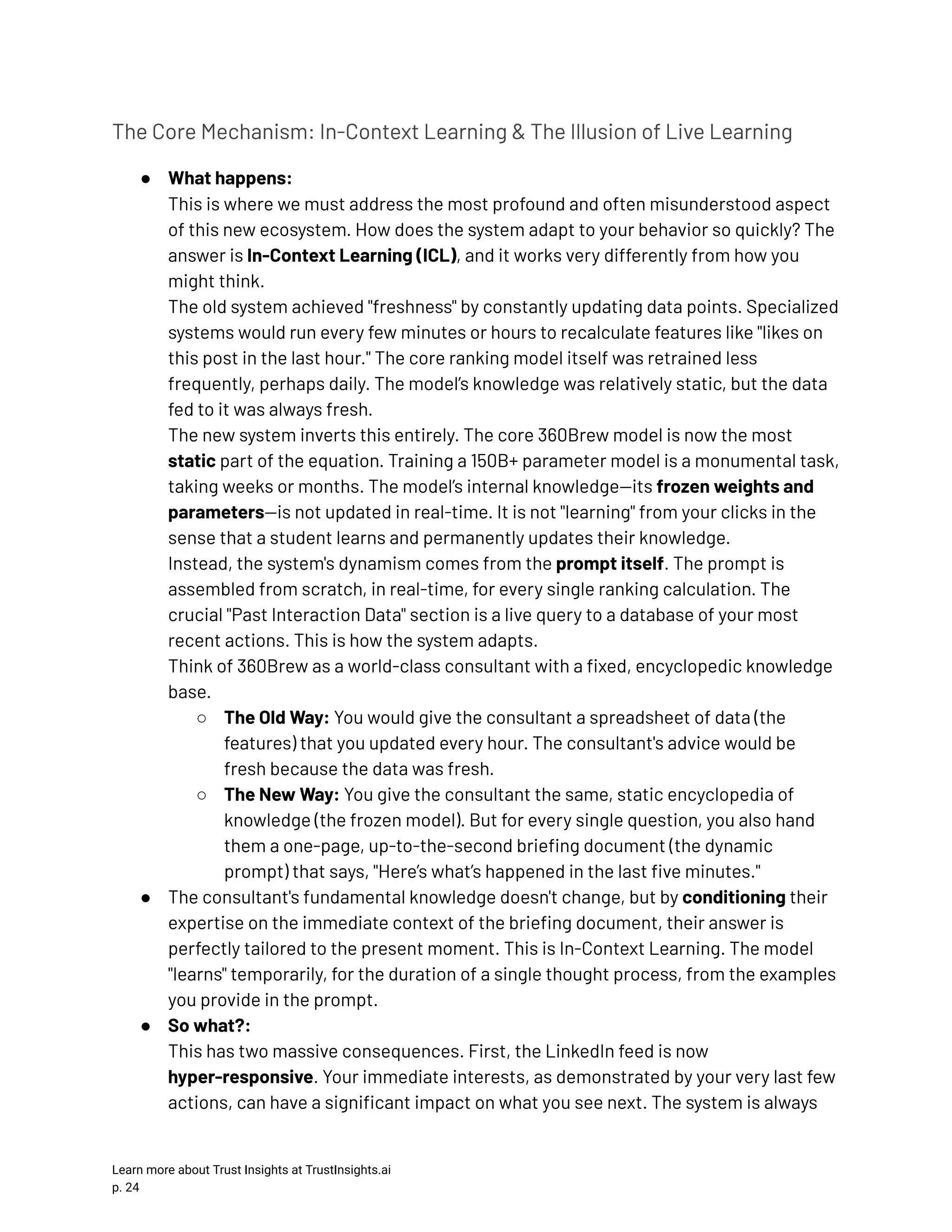

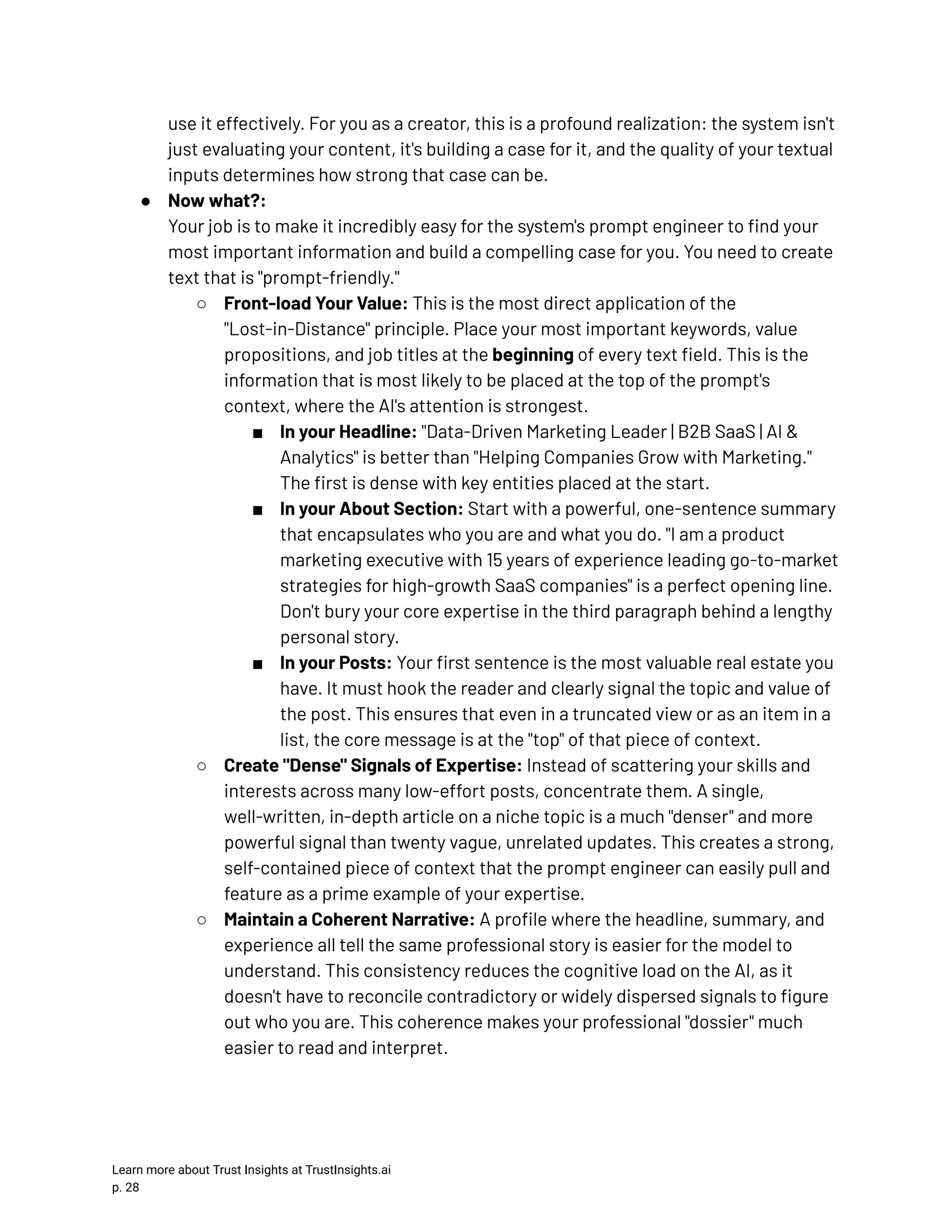

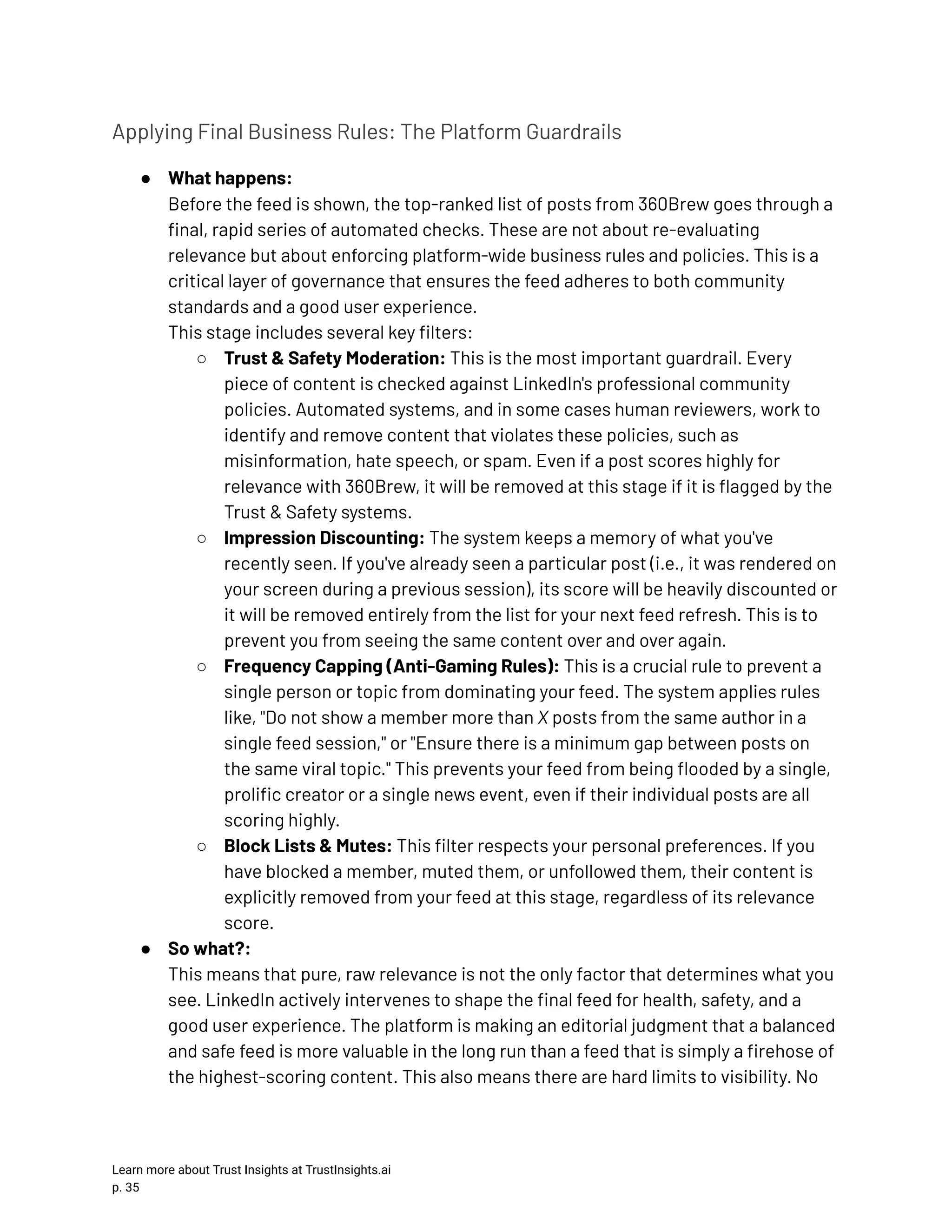

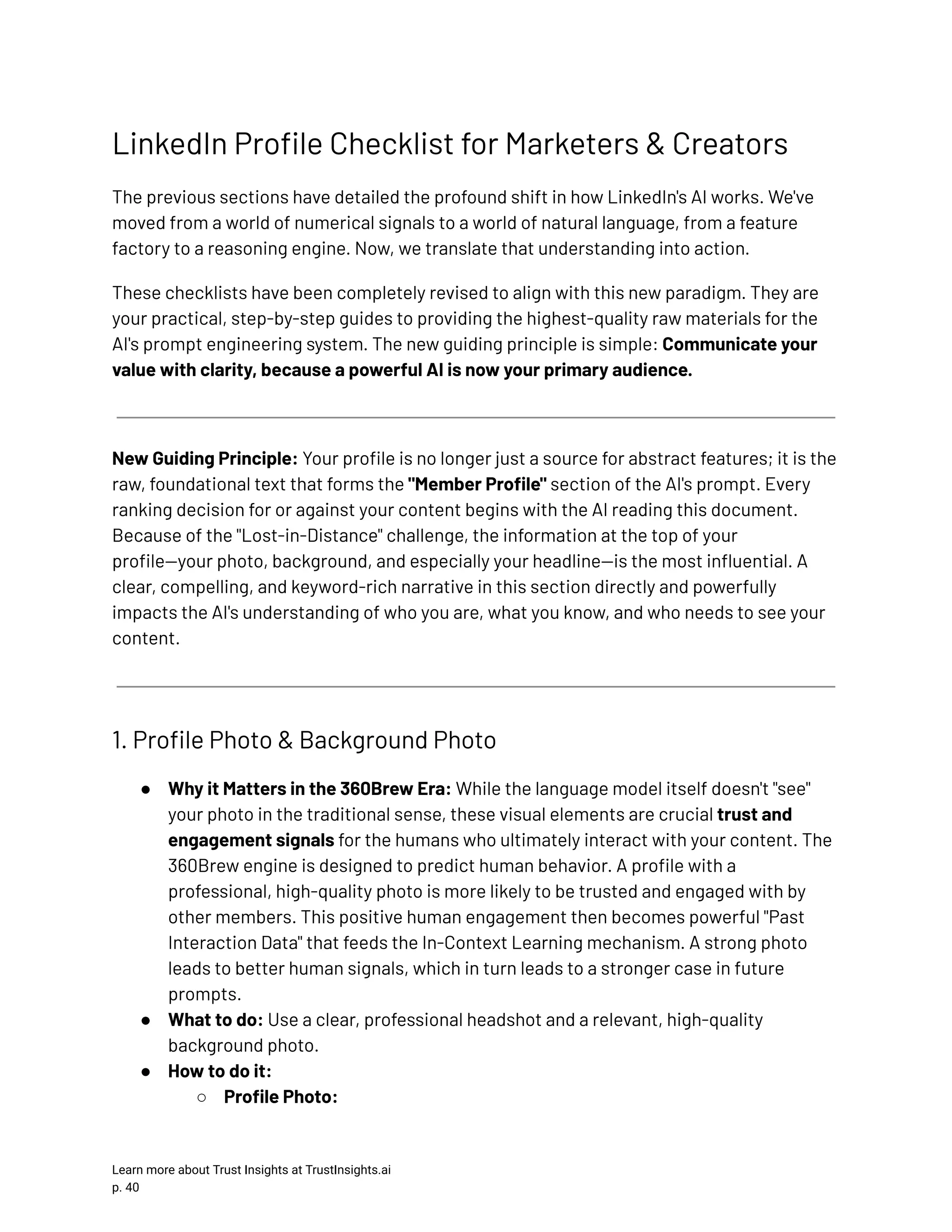

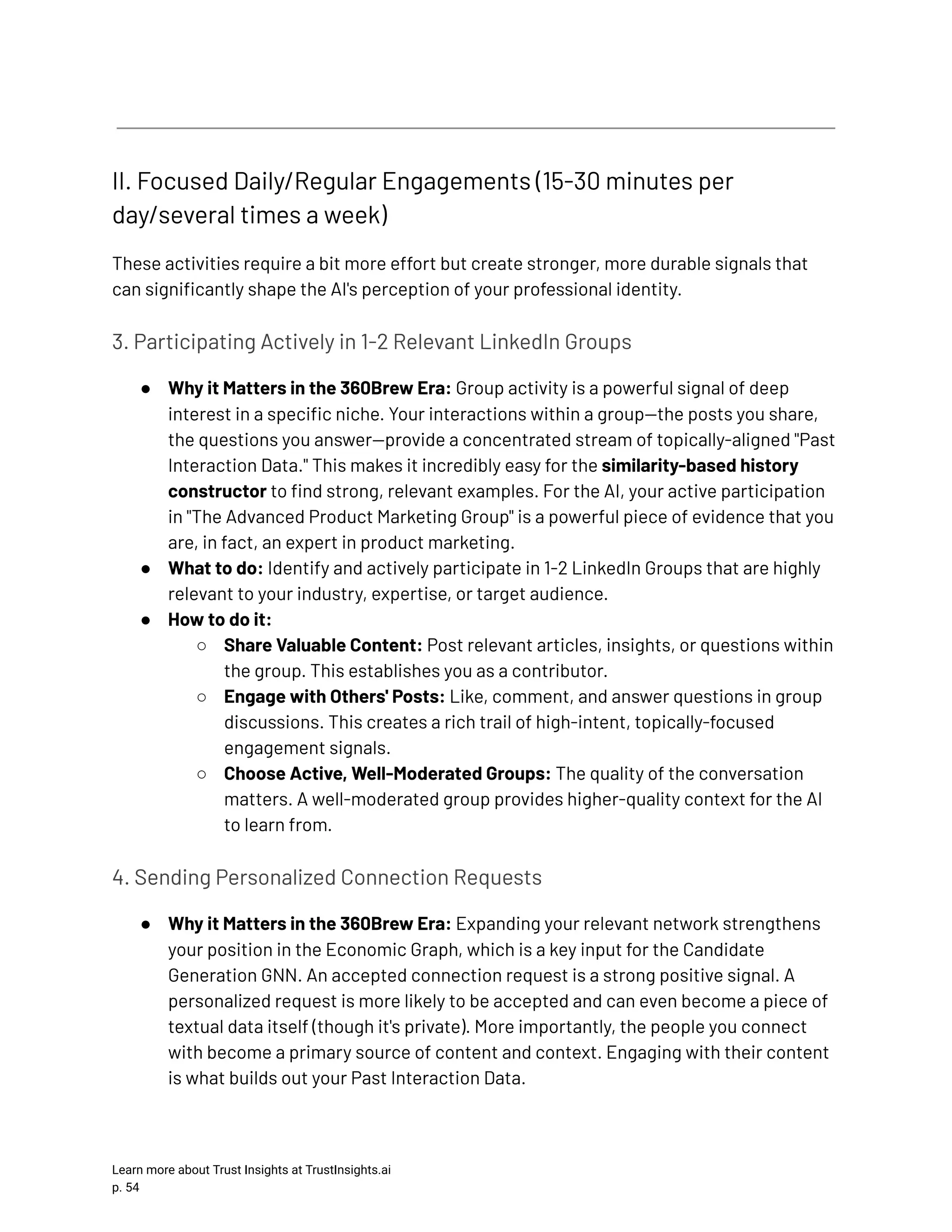

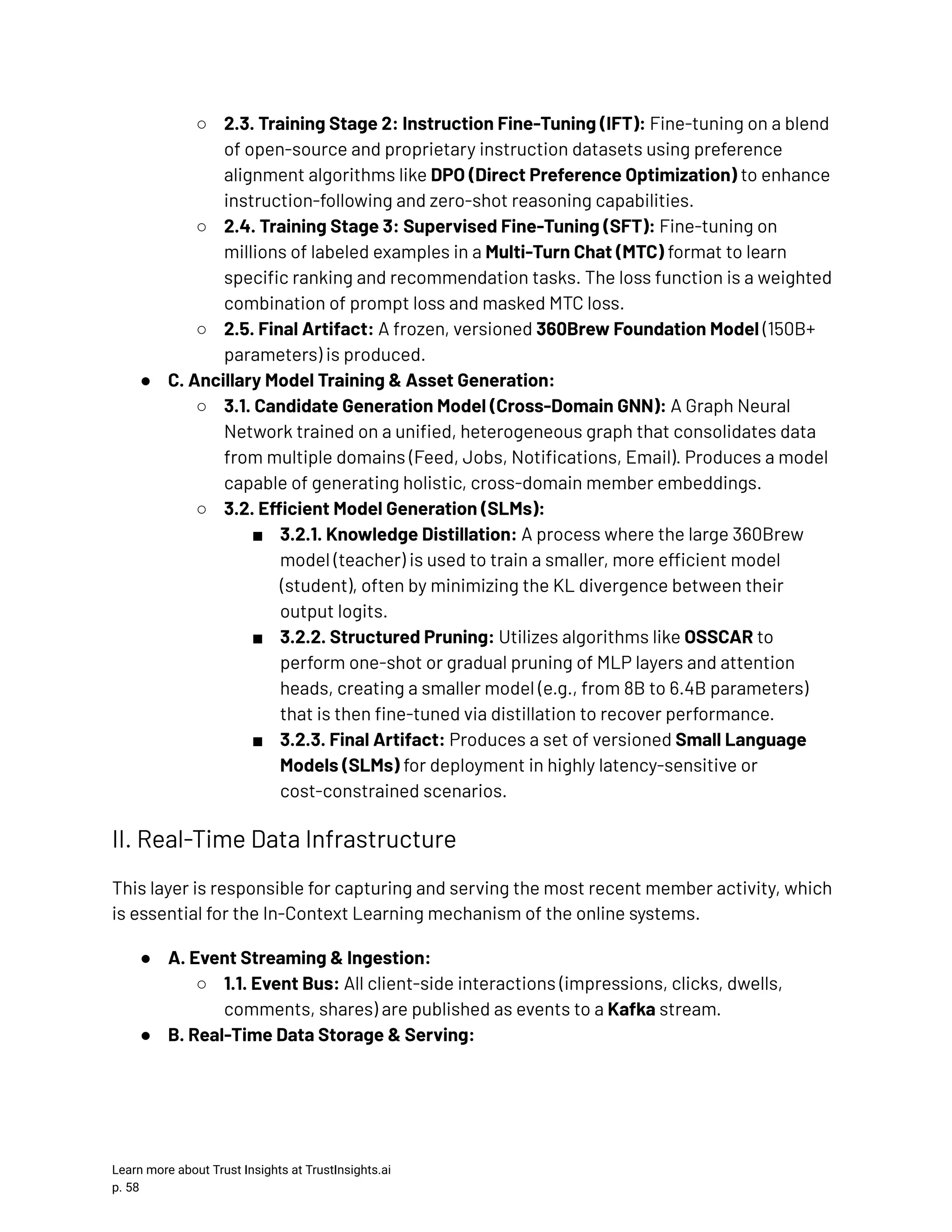

following actions: liked, commented on, shared, viewed, or dismissed. Your task is to analyze the post interaction data along with the member's profile to predict whether the member will like, comment, share, or dismiss a new post referred to as the "Question" post.

Note: Focus on topics, industry, and the author's seniority more than other criteria. In your calculation, assign a 30% weight to the relevance between the member's profile and the post content, and a 70% weight to the member's historical activity.

Member Profile: Current position: Director of Product Marketing, current company: Salesforce, Location: San Francisco, California.

Past post interaction data:

Member has commented on the following posts: [Author: Avinash Kaushik, Content: 'Most analytics dashboards are data pukes. I'm challenging you to present just ONE metric that matters this week. What would it be?', Topics: data analytics, marketing metrics]

Member has shared with comment the following posts: [Author: Joanna Wiebe, Content: 'Just released a new case study on how a simple copy tweak increased conversion by 45%. The key was changing the call to value, not call to action...', Topics: copywriting, conversion optimization]

Member has liked the following posts: [Author: Melissa Perri, Content: 'Product strategy is not a plan to build features. It's a system of achievable goals and visions that work together to align the team around what's important.', Topics: product management, strategy]

Question: Will the member like, comment, share, or dismiss the following post: [Author: April Dunford, Content: 'Hot take: Most companies get their positioning completely wrong because they listen to their customers instead of observing their customers. What's the biggest positioning mistake you've seen?', Topics: product marketing, positioning, strategy]

Answer: The member will comment

Analysis of the Prompt: This second example illustrates a more nuanced prediction. The historical data shows a pattern of not just liking, but actively commenting and sharing with comment, particularly on posts that ask questions or present strong opinions. The Question itself, from a known

![p. 33](https://image.slidesharecdn.com/linkedinalgorithmguide-251102133919-094c6236/75/LinkedIn-Marketing-Guide-AI-Algorithm-Changes-34-2048.jpg)

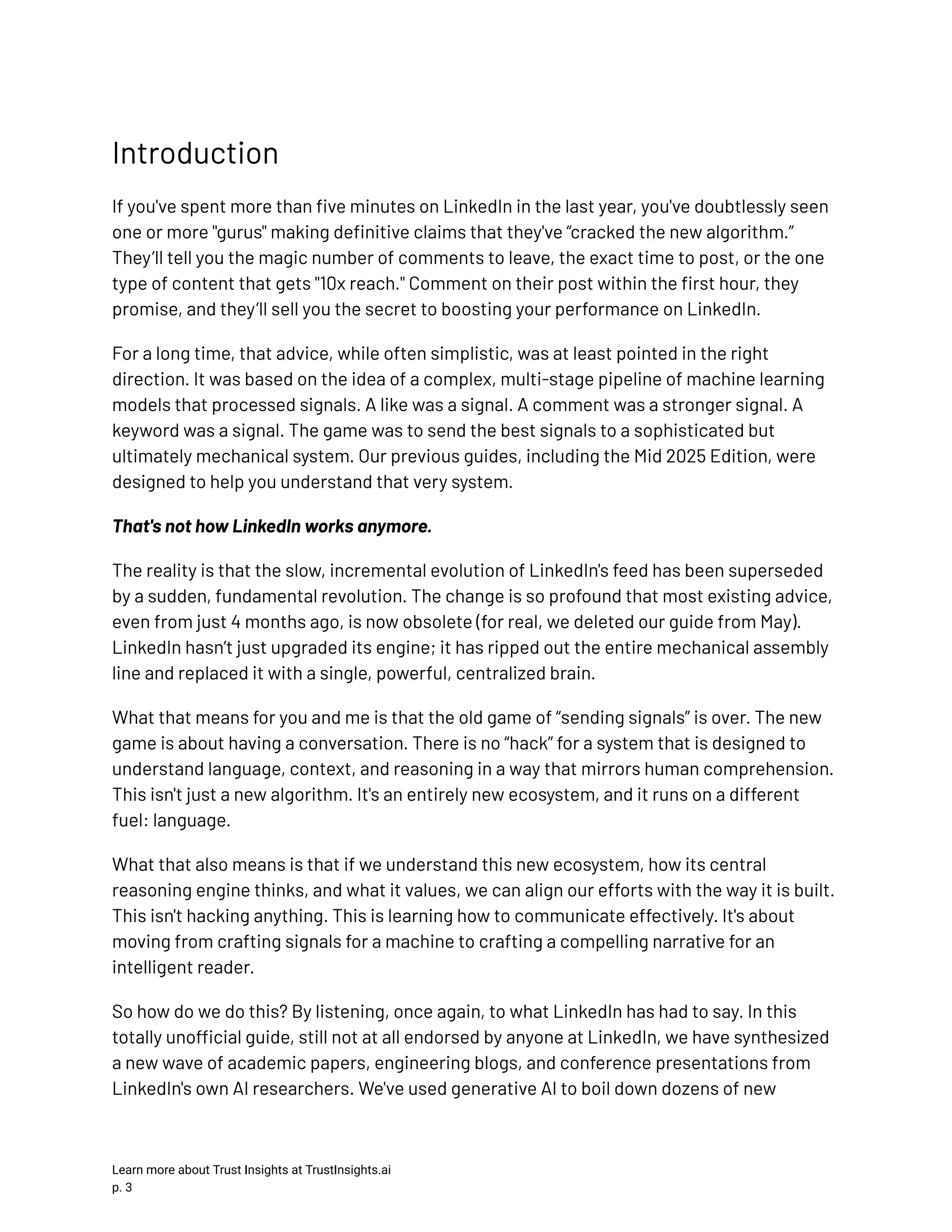

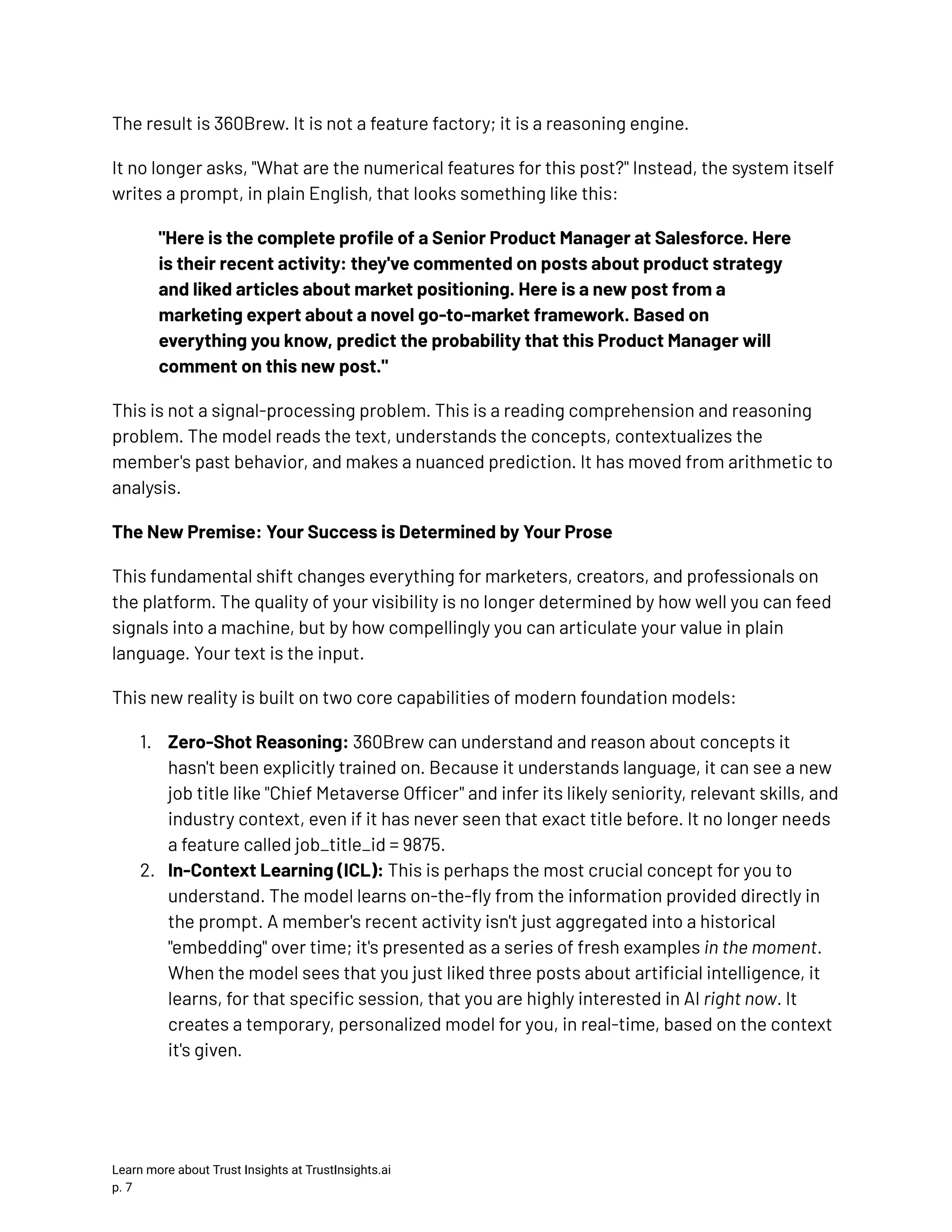

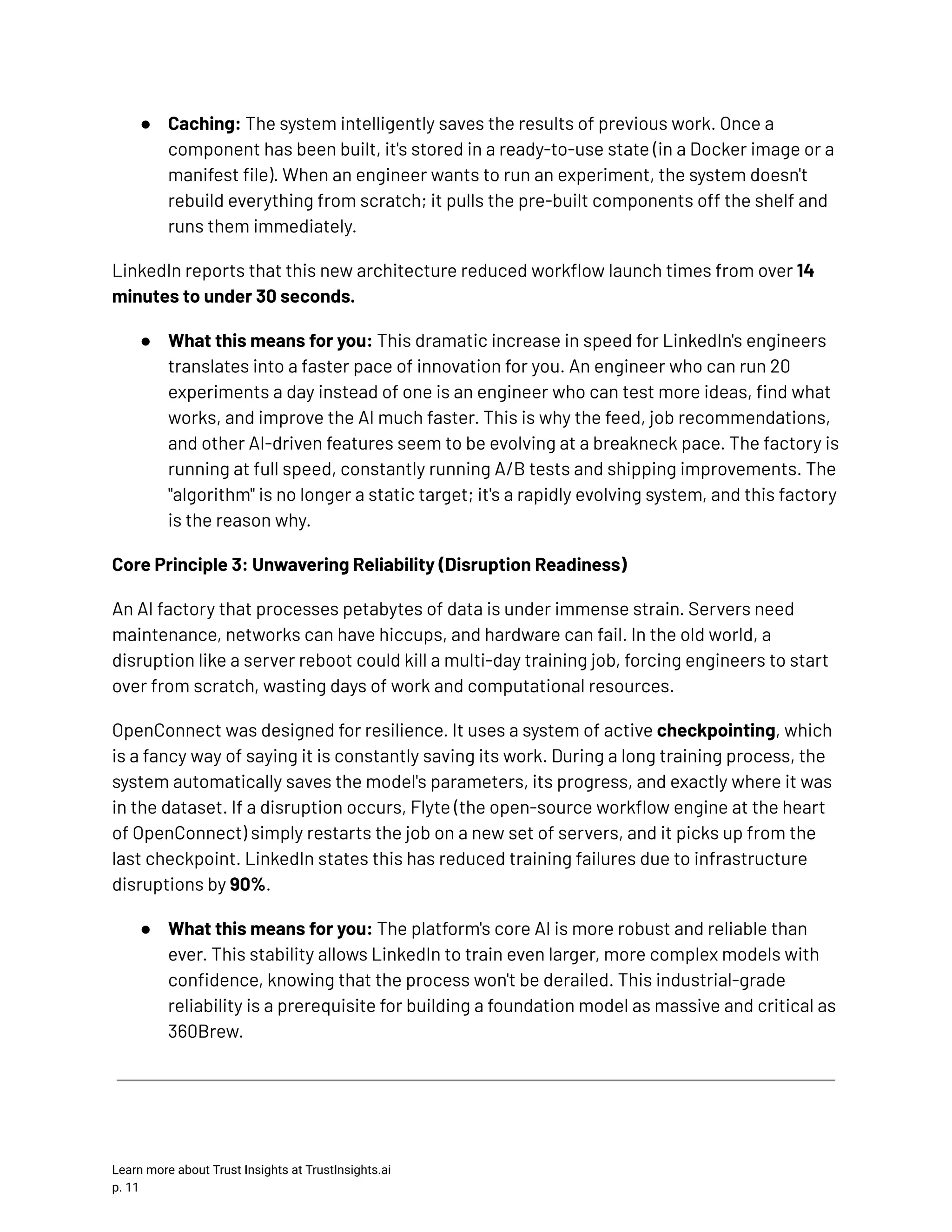

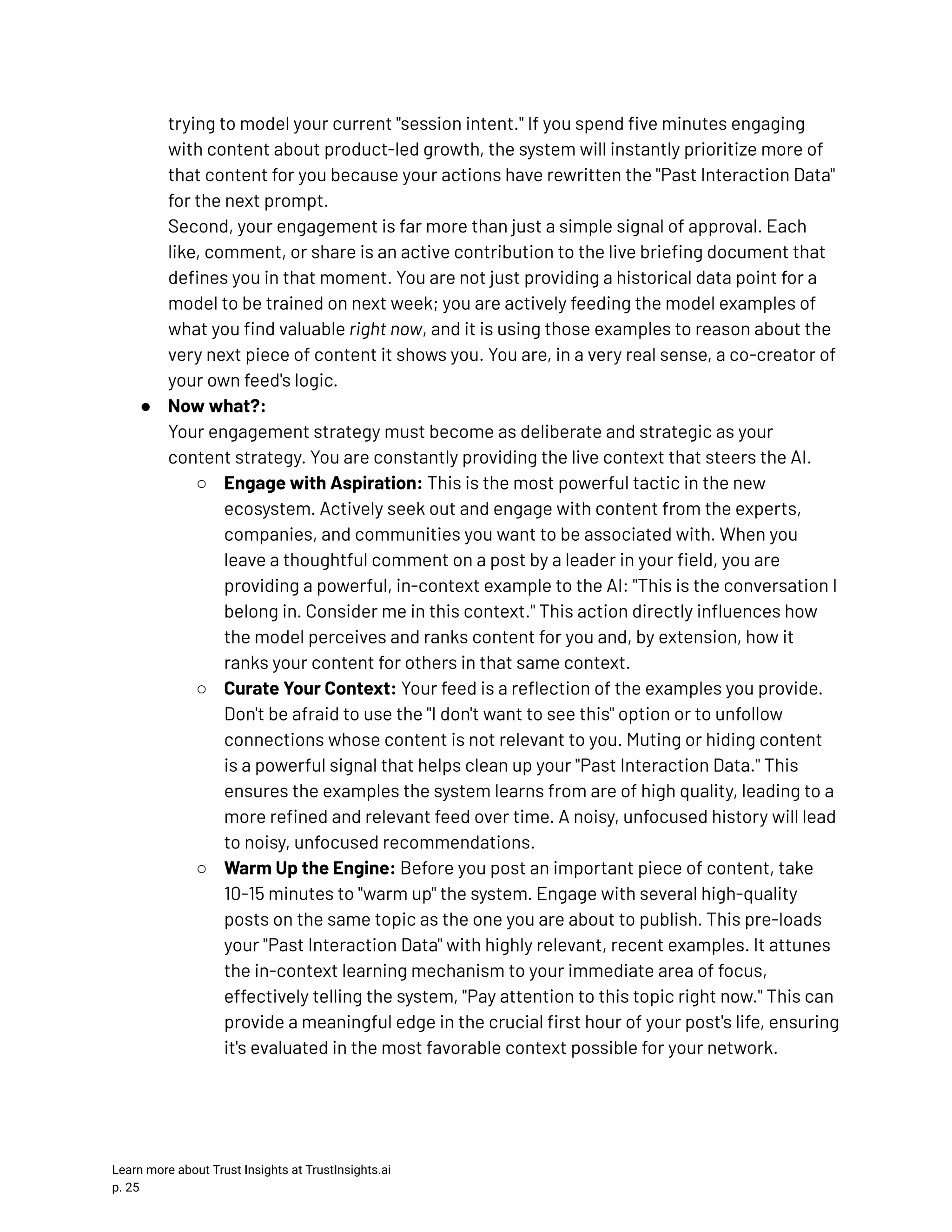

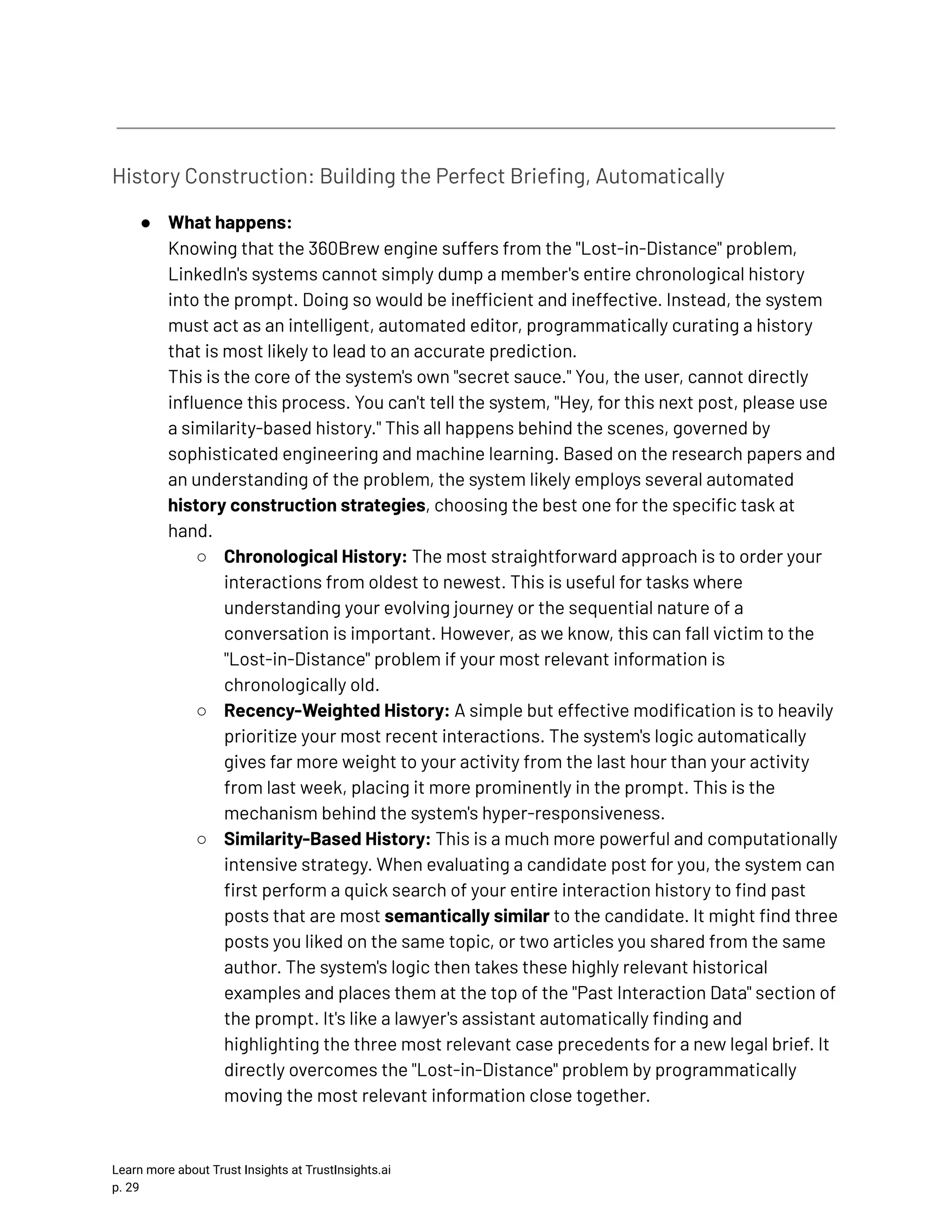

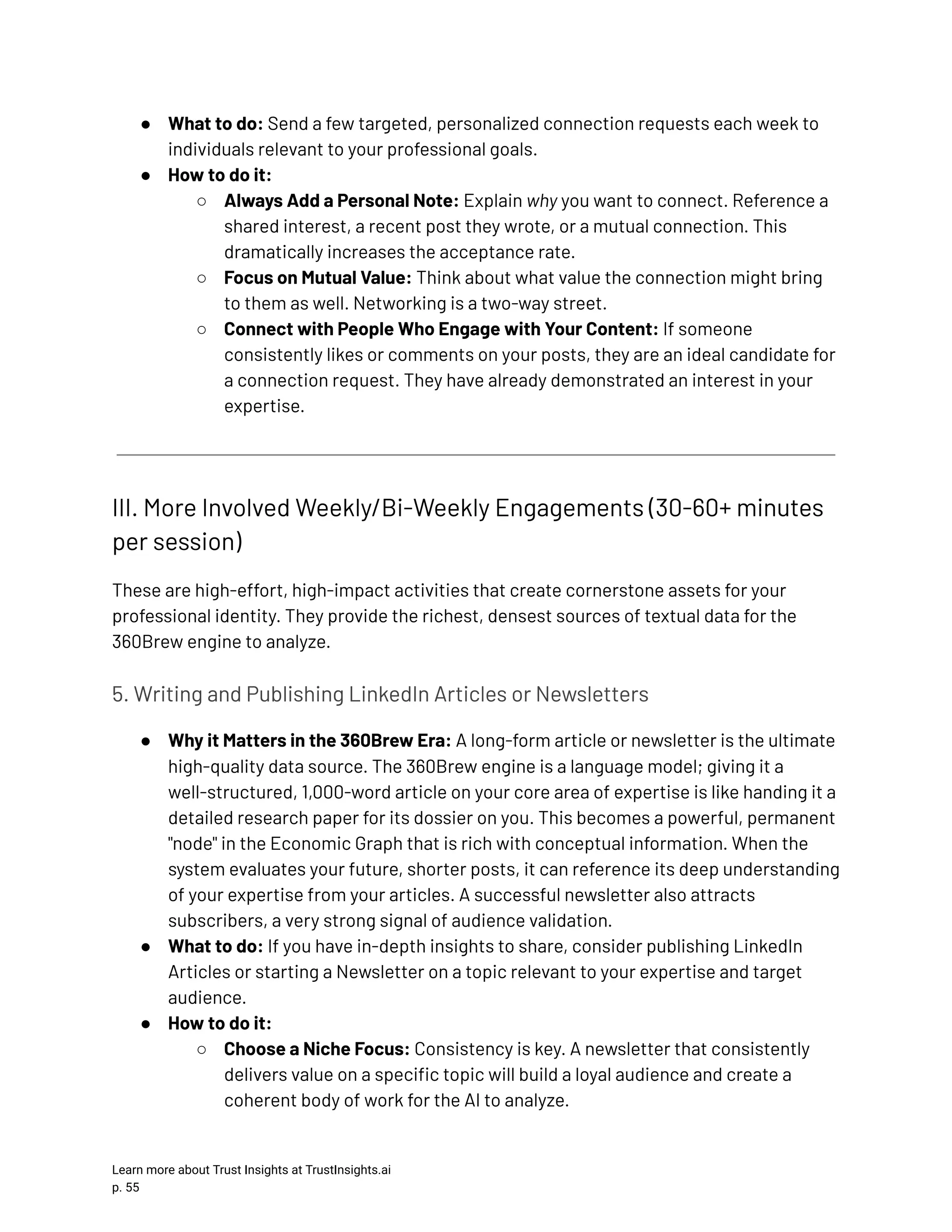

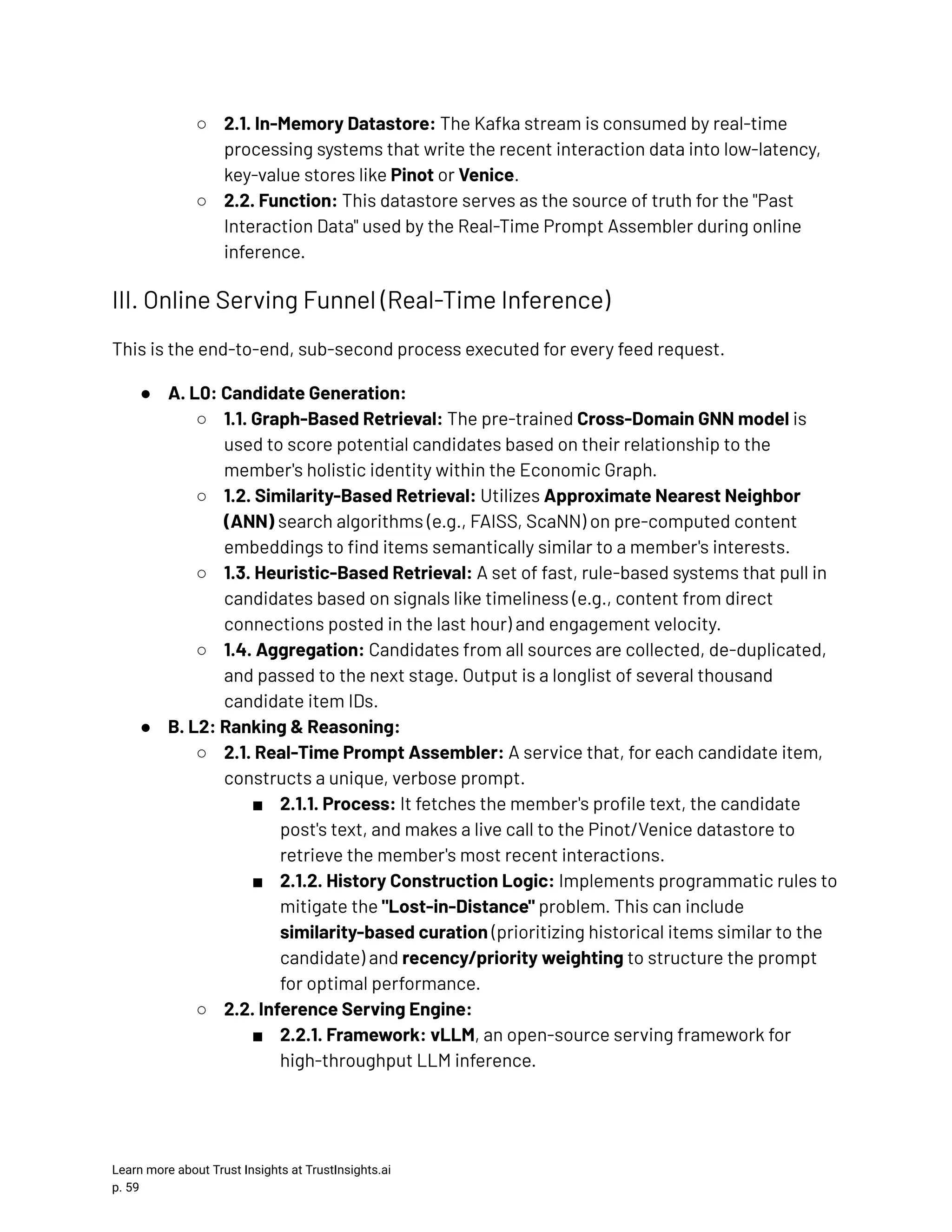

LinkedIn Engagement Checklist for Marketers and Creators

New Guiding Principle: Your activity (likes, comments, shares) is the raw material for the "Past Interaction Data" section of the AI's prompt. Every engagement you make is no longer just a passive vote; it is an active contribution to the live, personalized briefing document that the 360Brew engine reads to understand you. Strategic engagement is the act of deliberately curating this data set. You are providing the real-time examples that the model uses for In-Context Learning, effectively teaching it what you value, who you are, and what conversations you belong in. A high-quality engagement history leads to a powerful, persuasive prompt and, consequently, a more relevant and valuable feed experience.

I. Quick Daily Engagements (5-15 minutes per day)

These are small, consistent actions that keep your "Past Interaction Data" fresh and aligned with your goals. Think of this as the daily maintenance of your professional identity signal.

1. Reacting Strategically to Relevant Feed Content

● Why it Matters in the 360Brew Era: Each reaction (Like, Celebrate, Insightful, etc.) you make is an explicit data point that gets logged and is eligible for inclusion in future prompts. When the prompt engineer assembles your Past Interaction Data, it might include a line like: "Member has liked the following posts: [Content of Post X]..." A reaction is a direct, unambiguous way of telling the system, "This content is relevant to me." Reacting to content from your target audience or on your core topics reinforces your position within that "conceptual neighborhood," strengthening the signals for both the Candidate Generation GNN and the 360Brew reasoning engine.

● What to do: Quickly scan your feed and thoughtfully react to 3-5 posts that are highly relevant to your expertise, industry, or target audience.

● How to do it:

○ Prioritize Relevance over Volume: Focus on reacting to posts from key connections, industry leaders, and on topics central to your brand. A single reaction on a highly relevant post is a better signal than 20 reactions on random content.

![p. 52](https://image.slidesharecdn.com/linkedinalgorithmguide-251102133919-094c6236/75/LinkedIn-Marketing-Guide-AI-Algorithm-Changes-53-2048.jpg)

○ Use Diverse Reactions for Nuance: Don't just "Like" everything. Using "Insightful" on a data-driven post or "Celebrate" on a colleague's promotion provides a richer, more nuanced signal. While it's not explicitly stated how each reaction is weighted, it provides more detailed semantic information for the model to potentially learn from.

○ Avoid Indiscriminate Reacting: Mass-liking dozens of posts in a few minutes can dilute the signal of your true interests. It creates a noisy "Past Interaction Data" set, making it harder for the prompt engineer to identify what you genuinely find valuable. Be deliberate.

2. Brief, Insightful Comments on 1-2 Key Posts

● Why it Matters in the 360Brew Era: A comment is one of the most powerful signals you can create. It is a high-intent action that generates rich, textual data. When you comment, two things happen:

○ Your action is logged for your own Past Interaction Data: "Member has commented on the following posts: [Content of Post Y]..."

○ The text of your comment itself becomes associated with your professional identity. The 360Brew engine can read your comment and use its content to refine its understanding of your expertise and perspective.

Leaving a relevant, insightful comment on another expert's post is like co-authoring a small piece of content with them. It explicitly links your identity to theirs in a meaningful, conceptual way.

● What to do: Identify 1-2 highly relevant posts in your feed and add a brief, thoughtful comment that contributes to the discussion.

● How to do it:

○ Add Value, Don't Just Agree: Instead of just writing "Great post!", expand on a point, ask a clarifying question, or share a brief, related experience. This provides unique text for the AI to analyze.

○ Use Relevant Concepts Naturally: Your comment text becomes a signal of your expertise. If you're a cybersecurity expert, commenting with insights about "zero-trust architecture" on a relevant post reinforces your authority on that topic.

○ Be Timely: Commenting on fresher posts often yields more visibility and is more likely to be part of the "recency-weighted" history construction for others who see that post.

○ Keep it Professional and Constructive: Your comments are a permanent part of your professional record, readable by both humans and the AI.

![p. 53](https://image.slidesharecdn.com/linkedinalgorithmguide-251102133919-094c6236/75/LinkedIn-Marketing-Guide-AI-Algorithm-Changes-54-2048.jpg)
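To make the idea of a curated, recency-weighted "Past Interaction Data" section concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the guide does not describe the actual selection logic, so the `Interaction` class, the topic-overlap score, the 14-day half-life, and the `build_history` function are hypothetical stand-ins.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Interaction:
    """Hypothetical log entry for one engagement."""
    action: str              # e.g. "liked" or "commented on"
    post_text: str
    topics: frozenset[str]
    when: datetime


def score(event: Interaction, core_topics: frozenset[str], now: datetime,
          half_life_days: float = 14.0) -> float:
    """Assumed score: topic overlap multiplied by an exponential recency decay."""
    overlap = len(event.topics & core_topics)
    age_days = (now - event.when).total_seconds() / 86400
    return overlap * 0.5 ** (age_days / half_life_days)


def build_history(log: list[Interaction], core_topics: frozenset[str],
                  now: datetime, k: int = 3) -> list[str]:
    """Keep the top-k on-topic events and render them as prompt lines."""
    ranked = sorted(log, key=lambda e: score(e, core_topics, now), reverse=True)
    kept = [e for e in ranked[:k] if score(e, core_topics, now) > 0]
    grouped: dict[str, list[str]] = {}
    for event in kept:
        grouped.setdefault(event.action, []).append(event.post_text)
    return [
        f"Member has {action} the following posts: "
        + " ".join(f"[{text}]" for text in texts)
        for action, texts in grouped.items()
    ]


now = datetime(2025, 1, 15)
core = frozenset({"cybersecurity", "zero trust"})
log = [
    Interaction("commented on", "Zero-trust rollout lessons",
                frozenset({"cybersecurity", "zero trust"}), now - timedelta(days=2)),
    Interaction("liked", "Annual breach report summary",
                frozenset({"cybersecurity"}), now - timedelta(days=30)),
] + [
    # Five indiscriminate likes on off-topic content from the same day.
    Interaction("liked", f"Random viral post {i}", frozenset({"memes"}), now)
    for i in range(5)
]

history = build_history(log, core, now)
for line in history:
    print(line)
```

Under these assumed rules, the five off-topic likes score zero and never reach the rendered history, while the single recent, on-topic comment ranks first. That is the sketch's version of the guide's advice to prioritize relevance over volume.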

Methodology and Disclosures

Sources

Original Sources (Pre-360Brew Era)

1. Borisyuk, F., Zhou, M., Song, Q., Zhu, S., Tiwana, B., Parameswaran, G., Dangi, S., Hertel, L., Xiao, Q. C., Hou, X., Ouyang, Y., Gupta, A., Singh, S., Liu, D., Cheng, H., Le, L., Hung, J., Keerthi, S., Wang, R., Zhang, F., Kothari, M., Zhu, C., Sun, D., Dai, Y., Luan, X., Zhu, S., Wang, Z., Daftary, N., Shen, Q., Jiang, C., Wei, H., Varshney, M., Ghoting, A., & Ghosh, S. (2024). LiRank: Industrial large scale ranking models at LinkedIn. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD '24). Association for Computing Machinery. https://doi.org/10.1145/3637528.3671561 (Also arXiv:2402.06859v2 [cs.LG])

2. Borisyuk, F., Hertel, L., Parameswaran, G., Srivastava, G., Ramanujam, S., Ocejo, B., Du, P., Akterskii, A., Daftary, N., Tang, S., Sun, D., Xiao, C., Nathani, D., Kothari, M., Dai, Y., & Gupta, A. (2025). From features to transformers: Redefining ranking for scalable impact. In Proceedings of the 31st ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD '25) [Anticipated publication]. (Also arXiv:2502.03417v1 [cs.LG])

3. Borisyuk, F., He, S., Ouyang, Y., Ramezani, M., Du, P., Hou, X., Jiang, C., Pasumarthy, N., Bannur, P., Tiwana, B., Liu, P., Dangi, S., Sun, D., Pei, Z., Shi, X., Zhu, S., Shen, Q., Lee, K.-H., Stein, D., Li, B., Wei, H., Ghoting, A., & Ghosh, S. (2024). LiGNN: Graph neural networks at LinkedIn. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD '24). Association for Computing Machinery. https://doi.org/10.1145/3637528.3671566

4. Zhang, F., Kothari, M., & Tiwana, B. (2024, August 7). Leveraging Dwell Time to Improve Member Experiences on the LinkedIn Feed. LinkedIn Engineering Blog. Retrieved from https://www.linkedin.com/blog/engineering/feed/leveraging-dwell-time-to-improve-member-experiences-on-the-linkedin-feed (Original post: Dangi, S., Jia, J., Somaiya, M., & Xuan, Y. (2020, October 29). Understanding dwell time to improve LinkedIn feed ranking. LinkedIn Engineering Blog. Retrieved from https://engineering.linkedin.com/blog/2020/understanding-feed-dwell-time)

5. Ackerman, I., & Kataria, S. (2021, August 19). Homepage feed multi-task learning using TensorFlow. LinkedIn Engineering Blog. Retrieved from https://engineering.linkedin.com/blog/2021/homepage-feed-multi-task-learning-u

![p. 64](https://image.slidesharecdn.com/linkedinalgorithmguide-251102133919-094c6236/75/LinkedIn-Marketing-Guide-AI-Algorithm-Changes-65-2048.jpg)

https://engineering.linkedin.com/blog/2018/03/a-look-behind-the-ai-that-powers-linkedins-feed-sifting-through

14. Yu, Y. Y., & Saint-Jacques, G. (n.d.). Choosing an algorithmic fairness metric for an online marketplace: Detecting and quantifying algorithmic bias on LinkedIn. [Unpublished manuscript/Preprint, contextually implied source].

15. Sanjabi, M., & Firooz, H. (2025, February 7). 360Brew: A Decoder-only Foundation Model for Personalized Ranking and Recommendation. arXiv. arXiv:2501.16450v3 [cs.IR]

16. Firooz, H., Sanjabi, M., Jiang, W., & Zhai, X. (2025, January 2). LOST-IN-DISTANCE: IMPACT OF CONTEXTUAL PROXIMITY ON LLM PERFORMANCE IN GRAPH TASKS. arXiv. arXiv:2410.01985v2 [cs.AI]

New Sources (360Brew Era & Modern Ecosystem)

1. Sanjabi, M., Firooz, H., & 360Brew Team. (2025, August 23). 360Brew: A Decoder-only Foundation Model for Personalized Ranking and Recommendation. arXiv. arXiv:2501.16450v4 [cs.IR]. (Primary source for the 360Brew model, its prompt-based architecture, and In-Context Learning mechanism).

2. He, S., Choi, J., Li, T., Ding, Z., Du, P., Bannur, P., Liang, F., Borisyuk, F., Jaikumar, P., Xue, X., & Gupta, V. (2025, June 15). Large Scalable Cross-Domain Graph Neural Networks for Personalized Notification at LinkedIn. arXiv. arXiv:2506.12700v1 [cs.LG]. (Primary source for the evolution from domain-specific GNNs to the holistic Cross-Domain GNN for candidate generation).

3. Firooz, H., Sanjabi, M., Jiang, W., & Zhai, X. (2025, January 2). LOST-IN-DISTANCE: IMPACT OF CONTEXTUAL PROXIMITY ON LLM PERFORMANCE IN GRAPH TASKS. arXiv. arXiv:2410.01985v2 [cs.AI]. (Primary source detailing the core technical challenge of long-context reasoning that informs the prompt engineering and history construction strategies for 360Brew).

4. Behdin, K., Dai, Y., Fatahibaarzi, A., Gupta, A., Song, Q., Tang, S., et al. (2025, February 20). Efficient AI in Practice: Training and Deployment of Efficient LLMs for Industry Applications. arXiv. arXiv:2502.14305v1 [cs.IR]. (Primary source for the practical deployment strategies, including knowledge distillation and pruning, used to create efficient SLMs from large foundation models like 360Brew for production use).

5. Lyu, L., Zhang, C., Shang, Y., Jha, S., Jain, H., Ahmad, U., & the OpenConnect Team. (2024). OpenConnect: LinkedIn’s next-generation AI pipeline ecosystem. LinkedIn Engineering Blog. (Contextually implied source describing the replacement of the legacy ProML platform with the modern OpenConnect/Flyte-based ecosystem for all AI/ML training pipelines).

![p. 66](https://image.slidesharecdn.com/linkedinalgorithmguide-251102133919-094c6236/75/LinkedIn-Marketing-Guide-AI-Algorithm-Changes-67-2048.jpg)