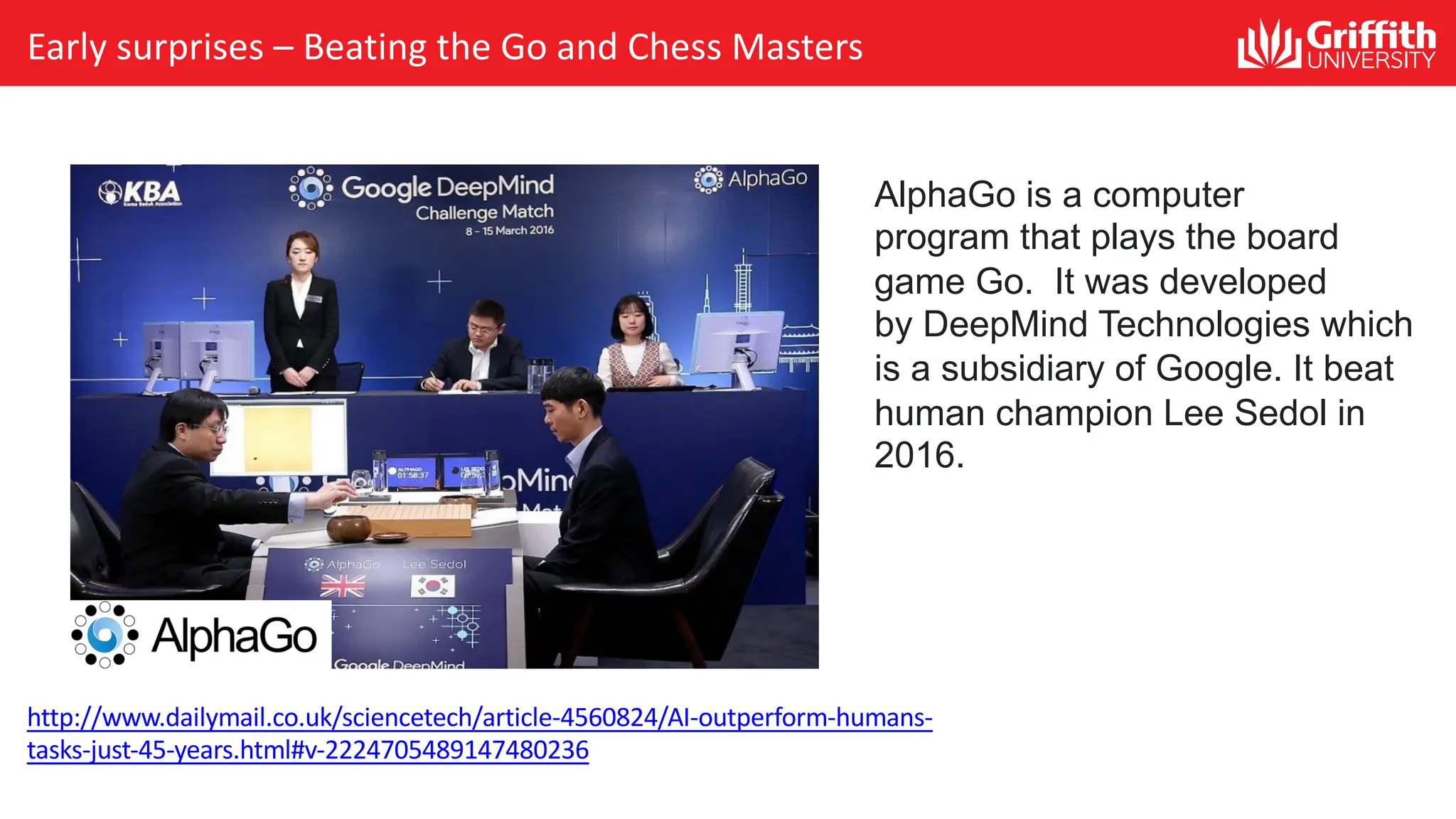

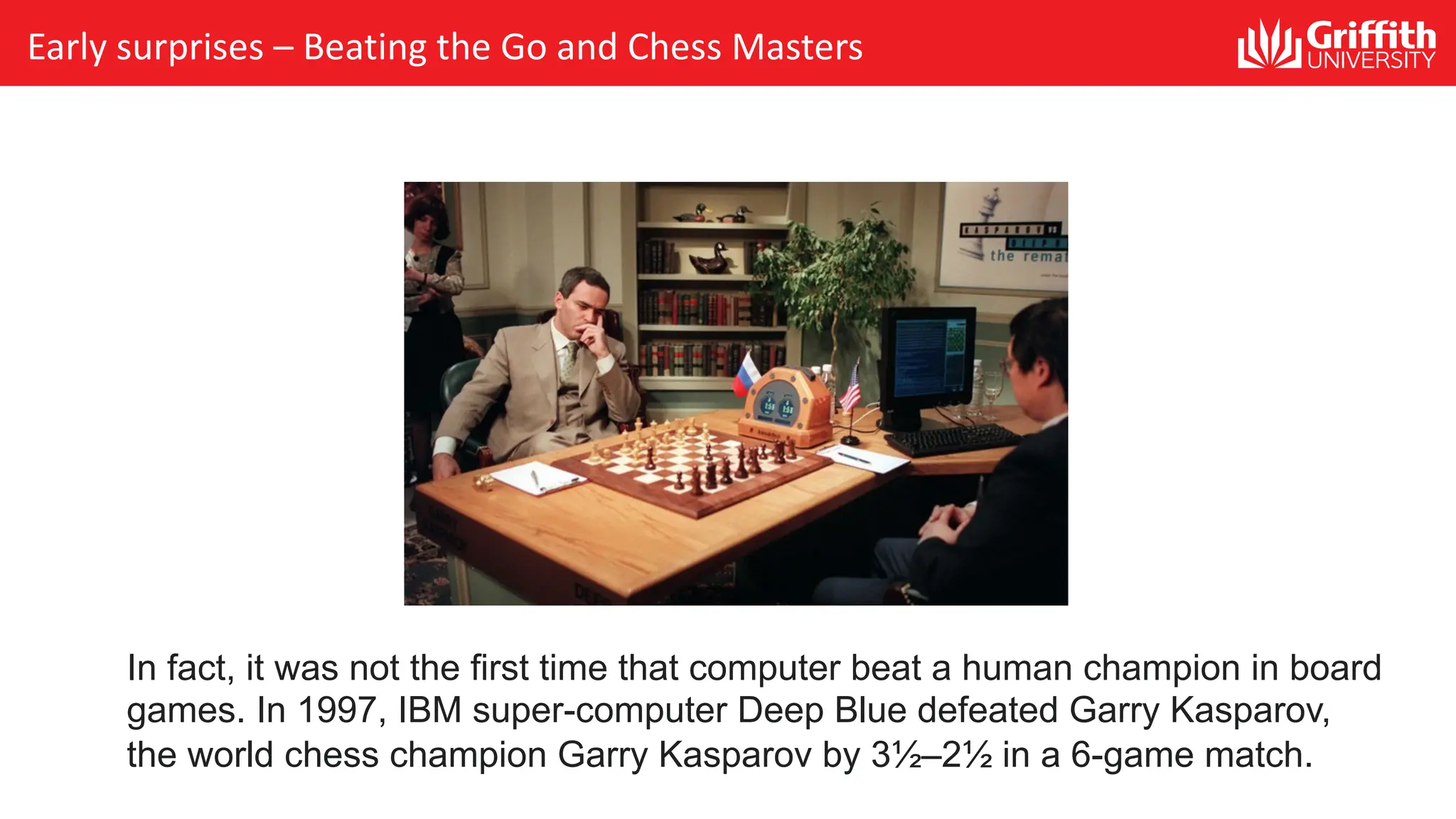

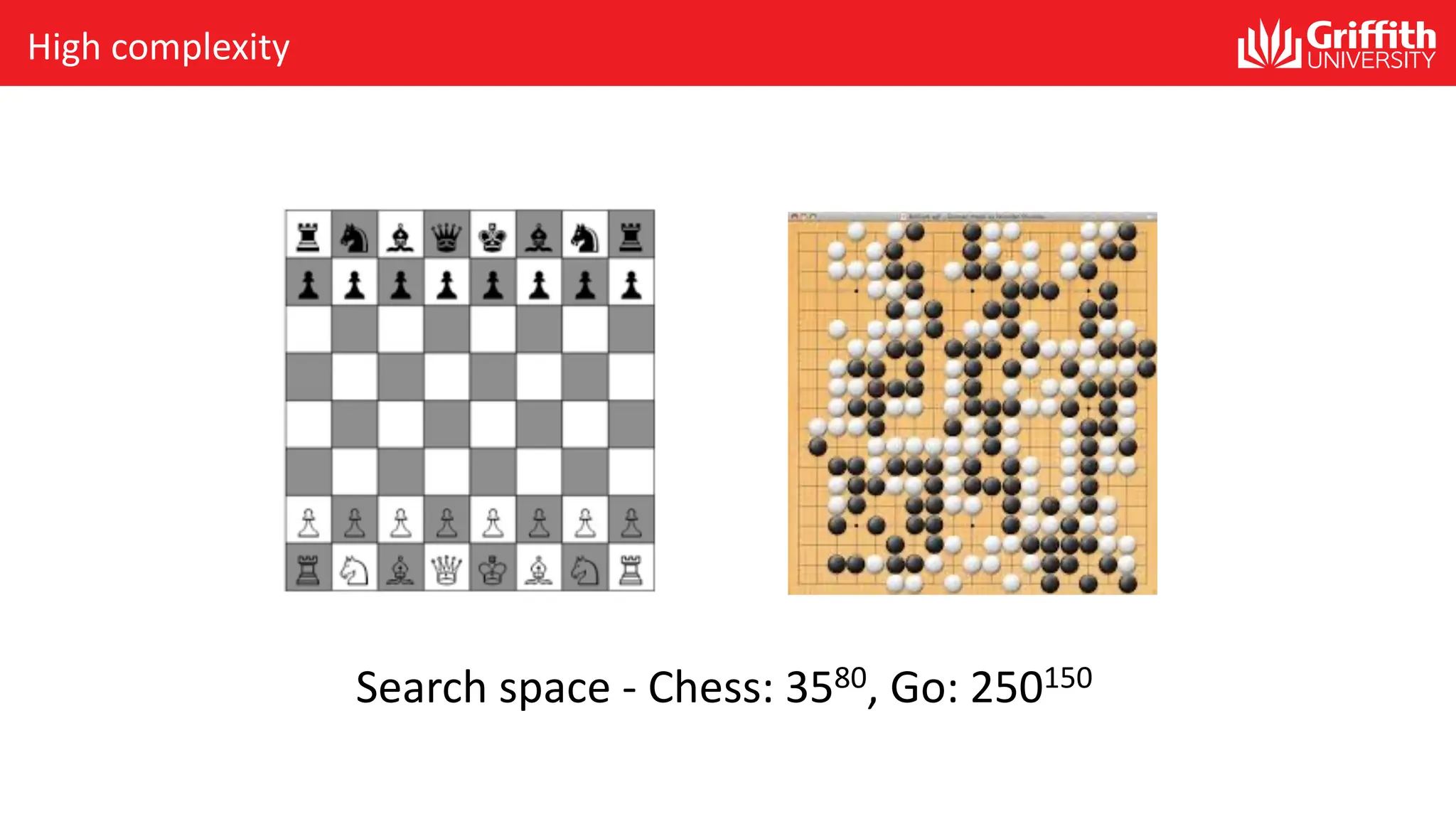

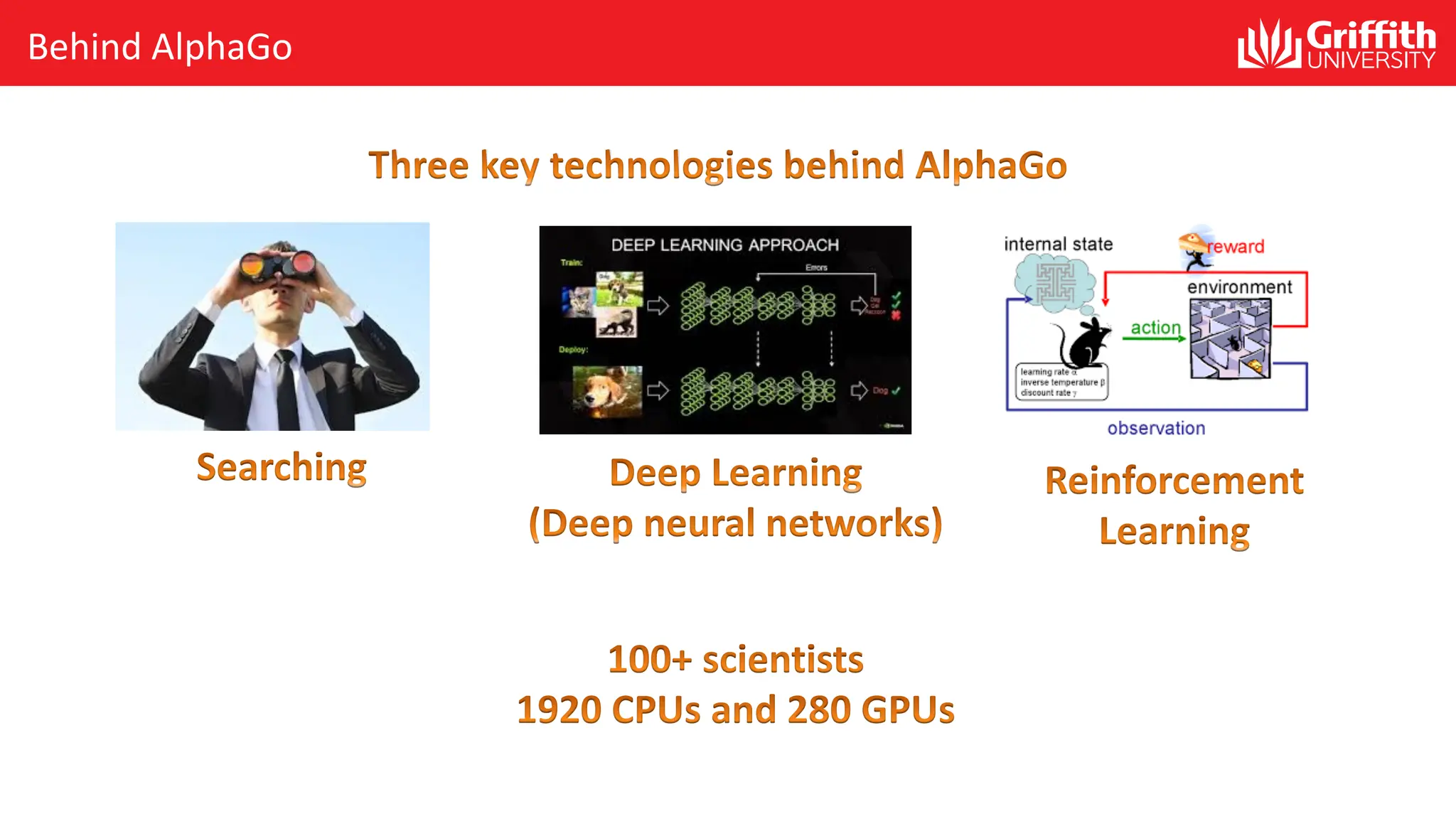

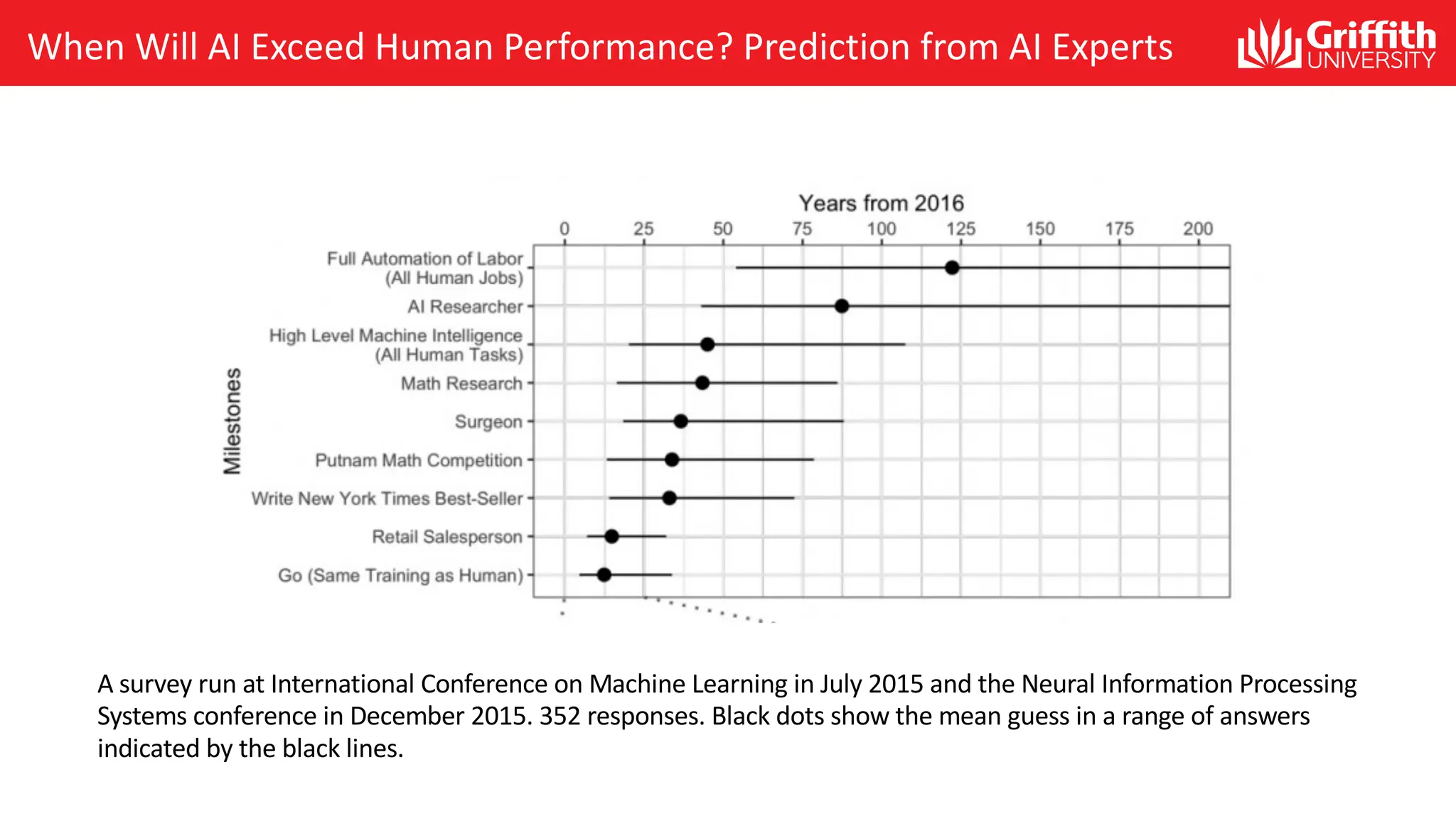

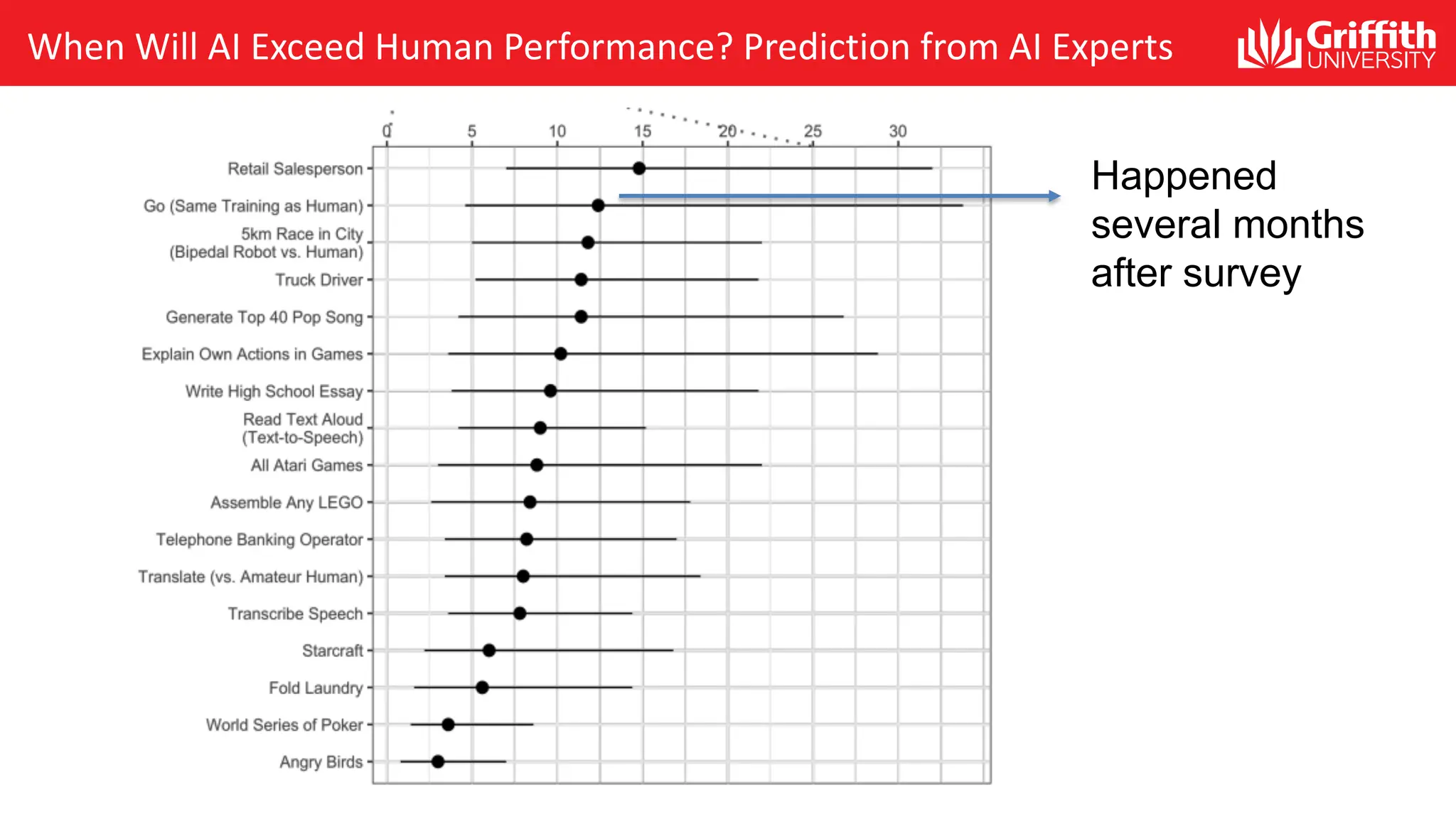

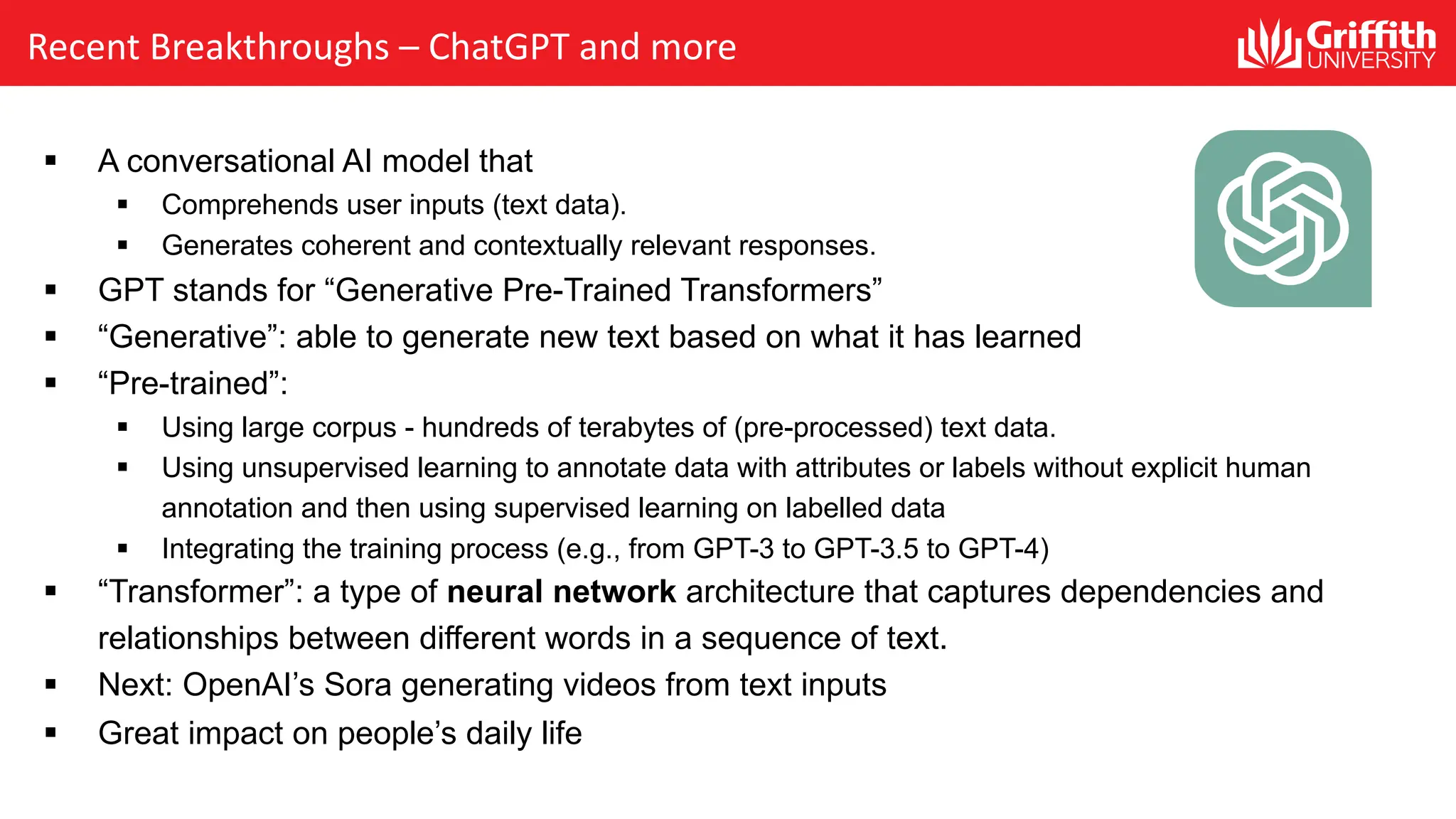

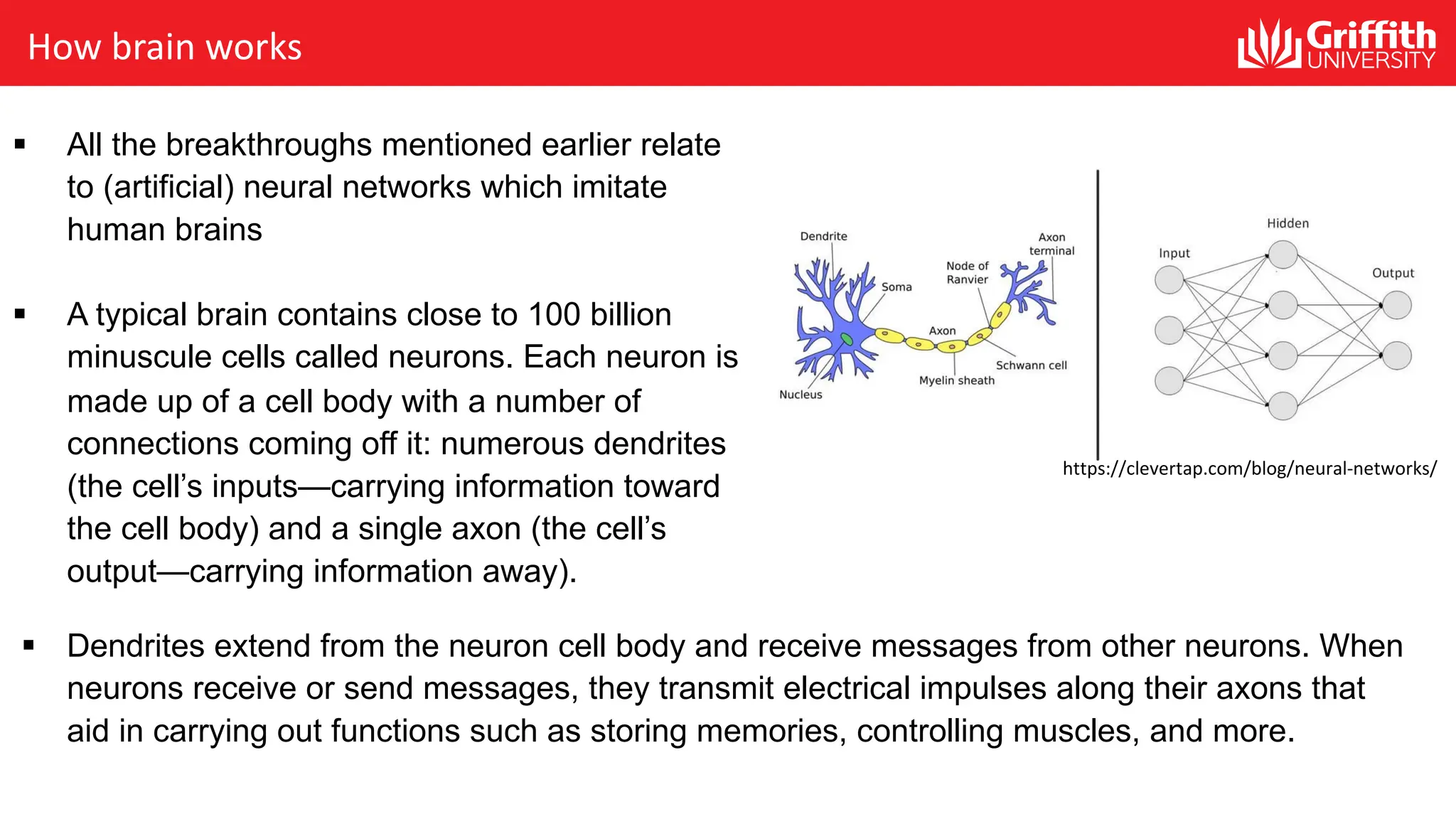

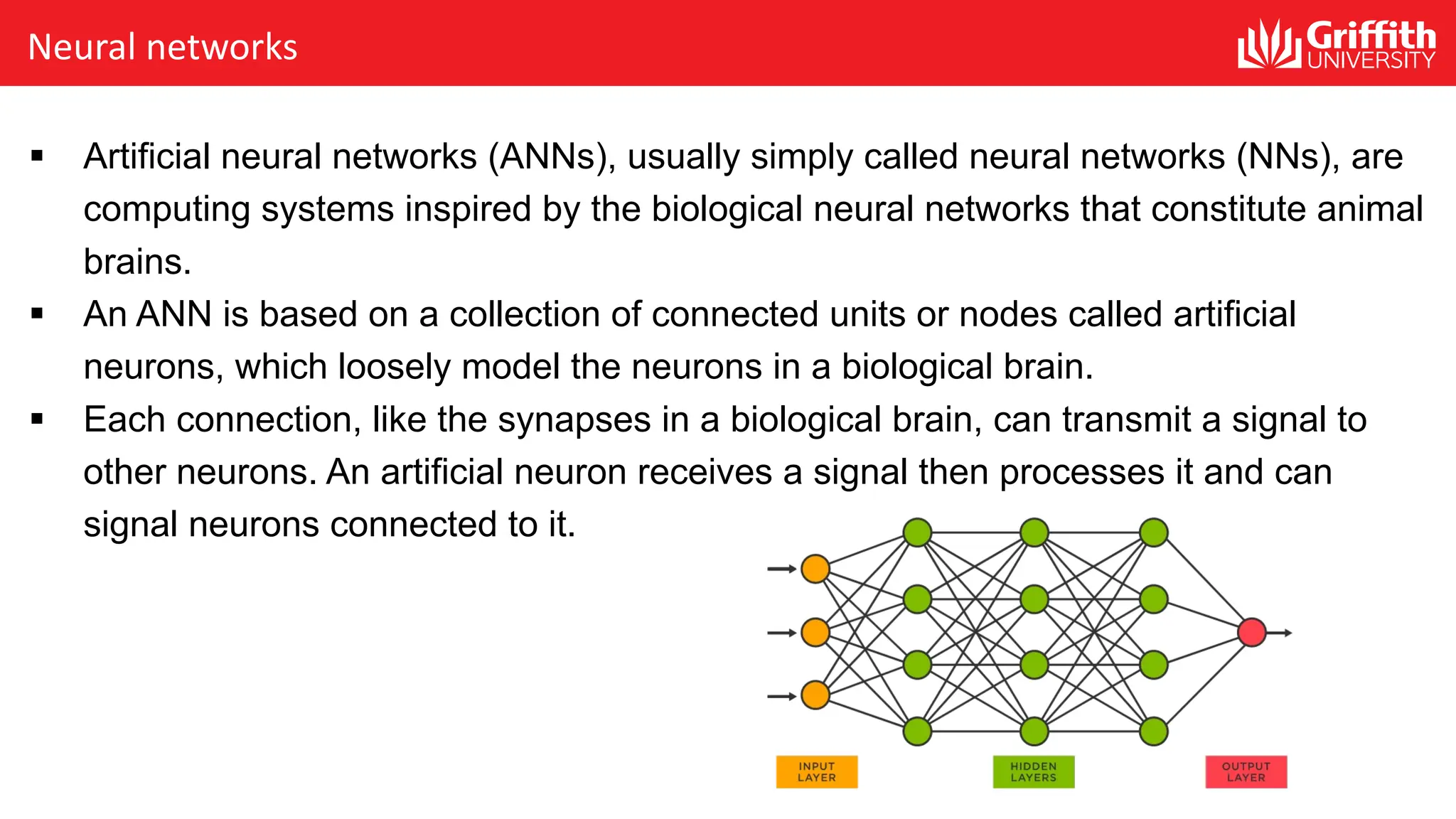

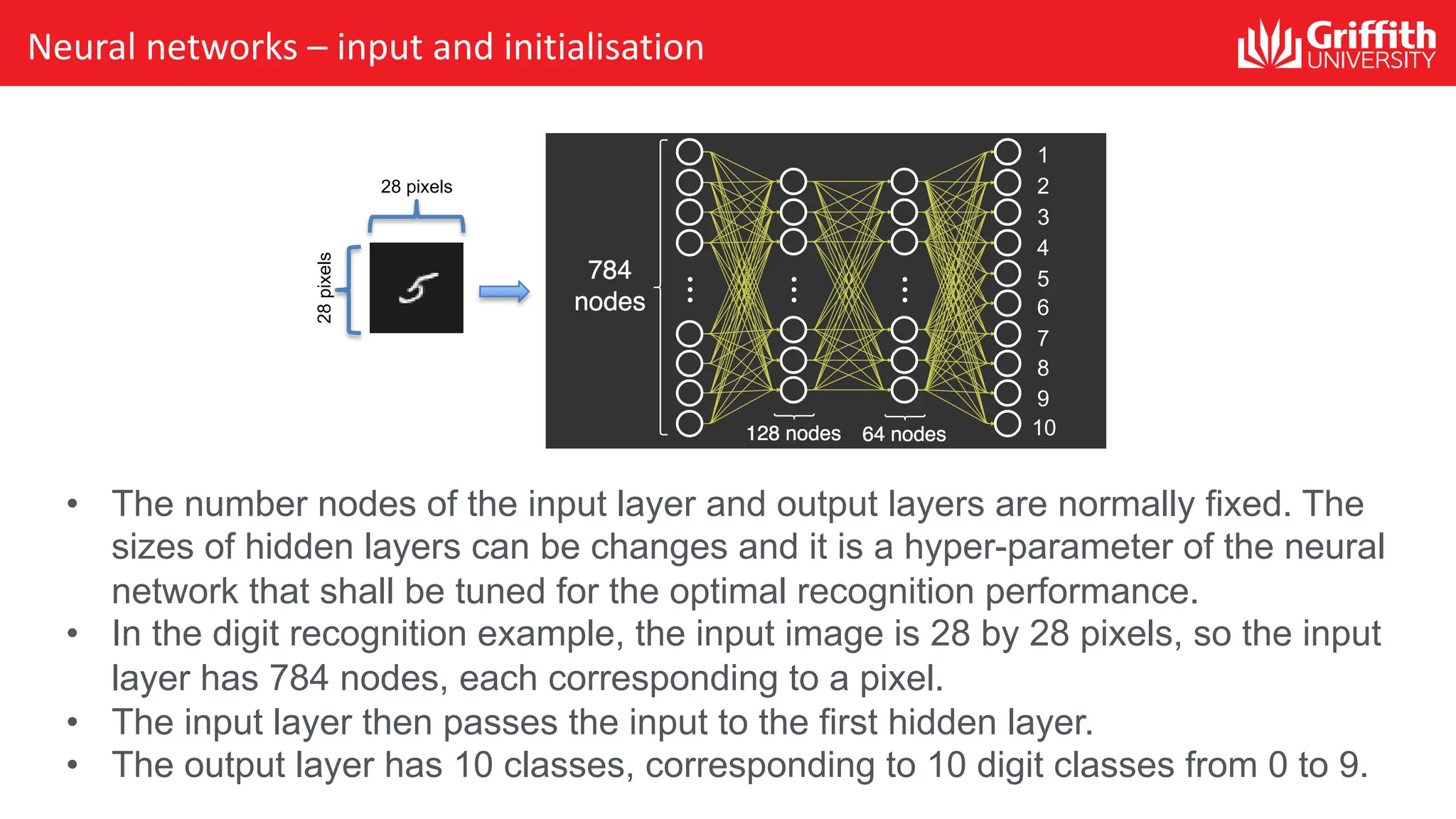

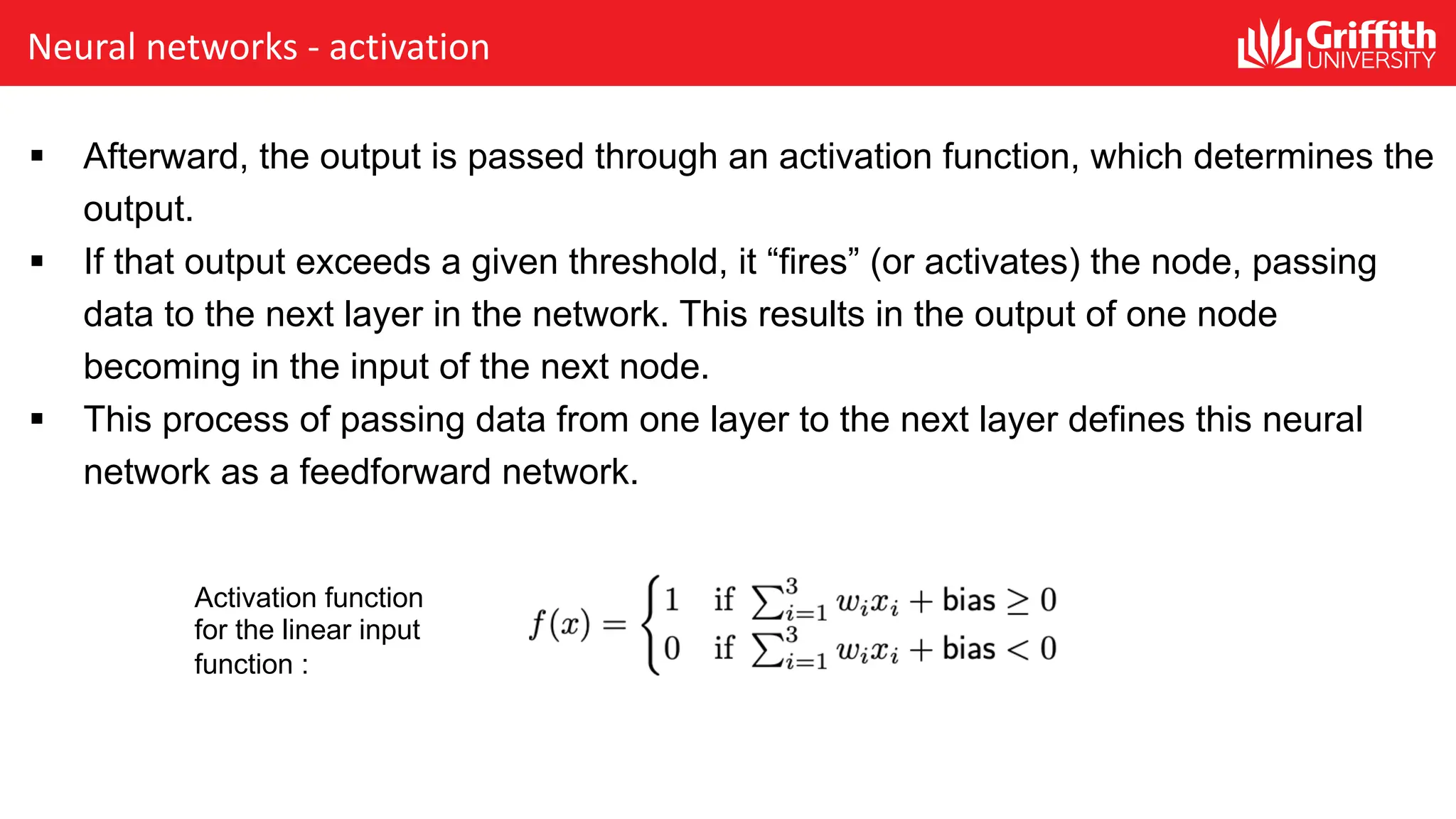

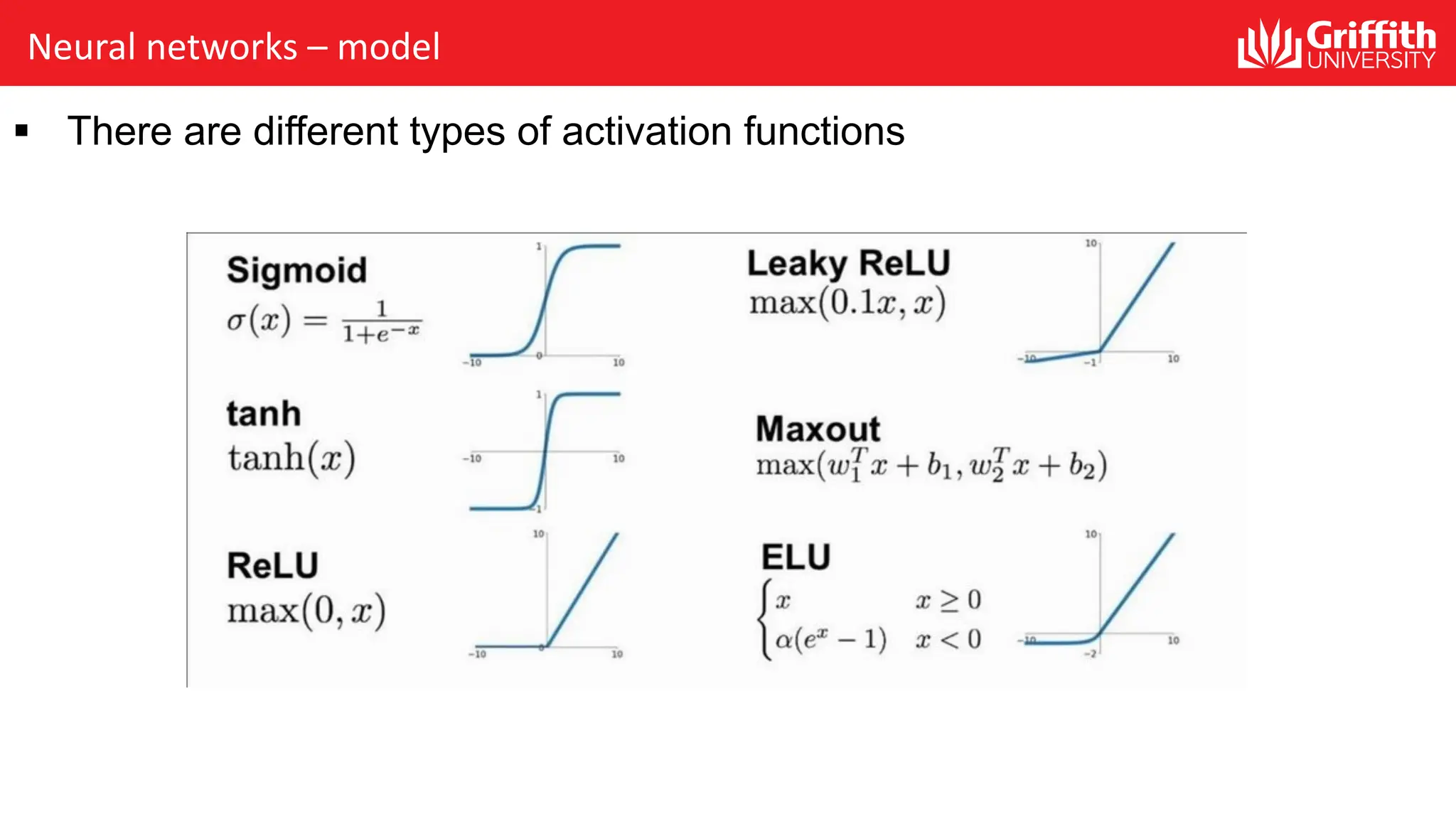

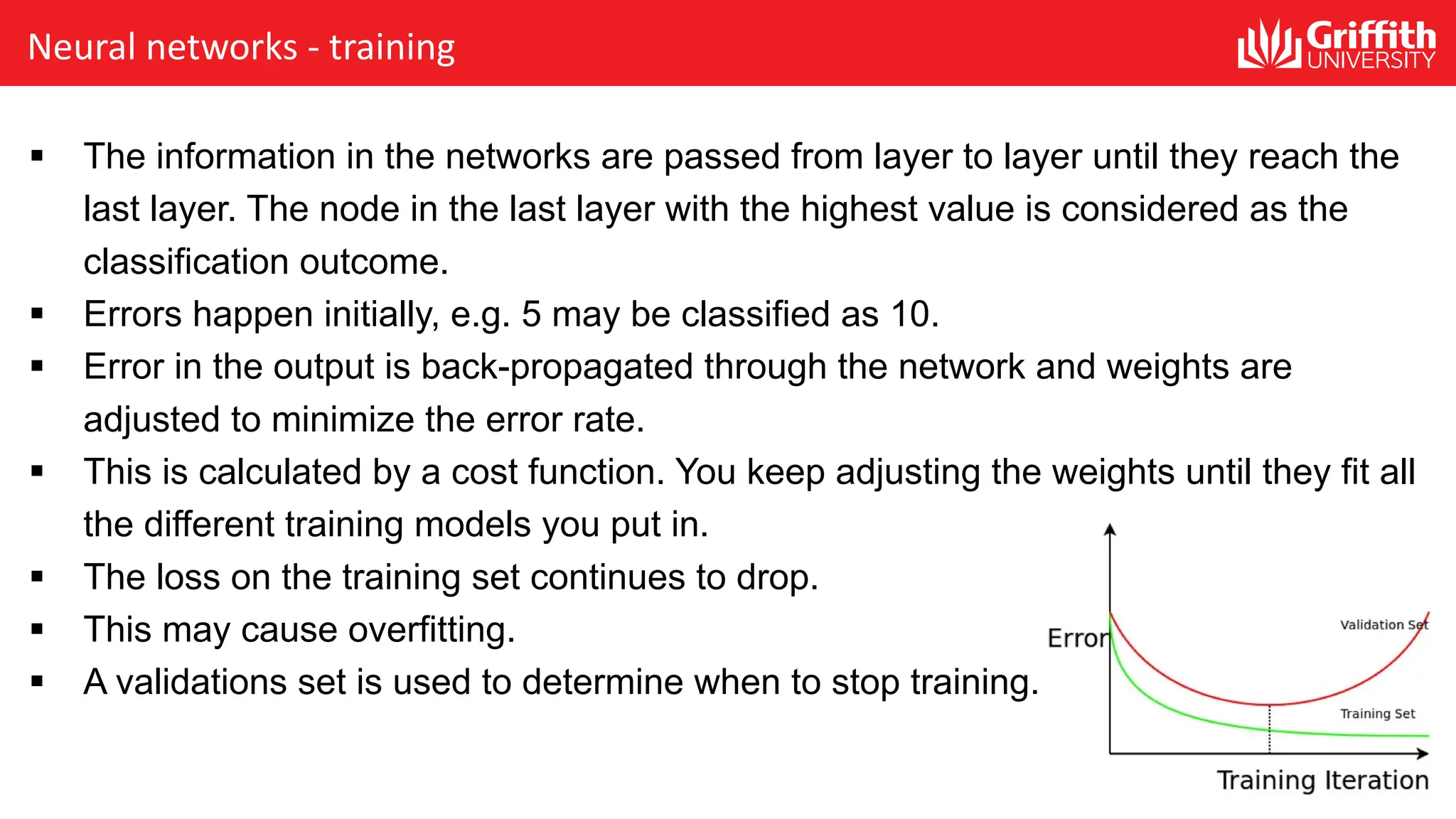

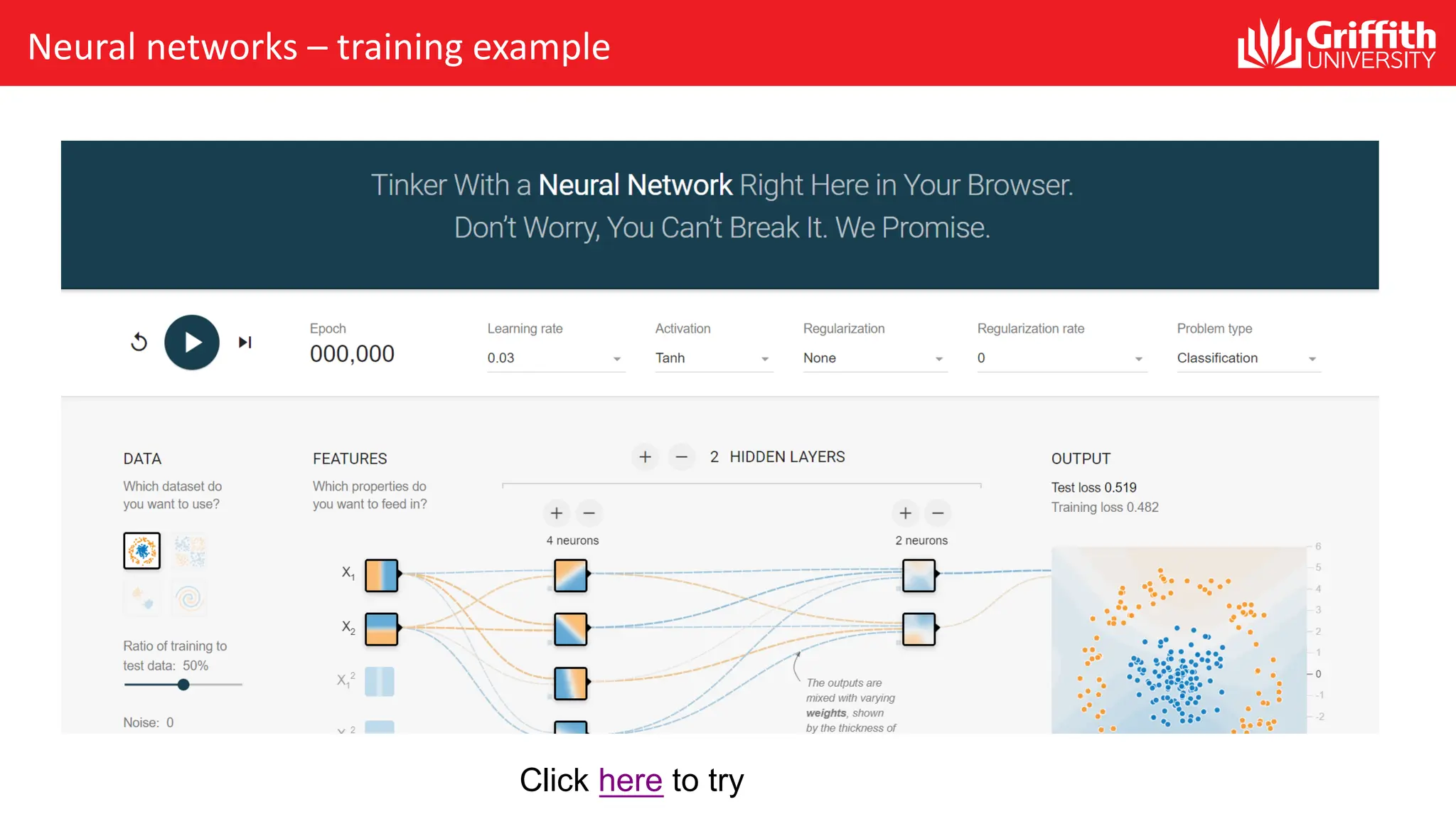

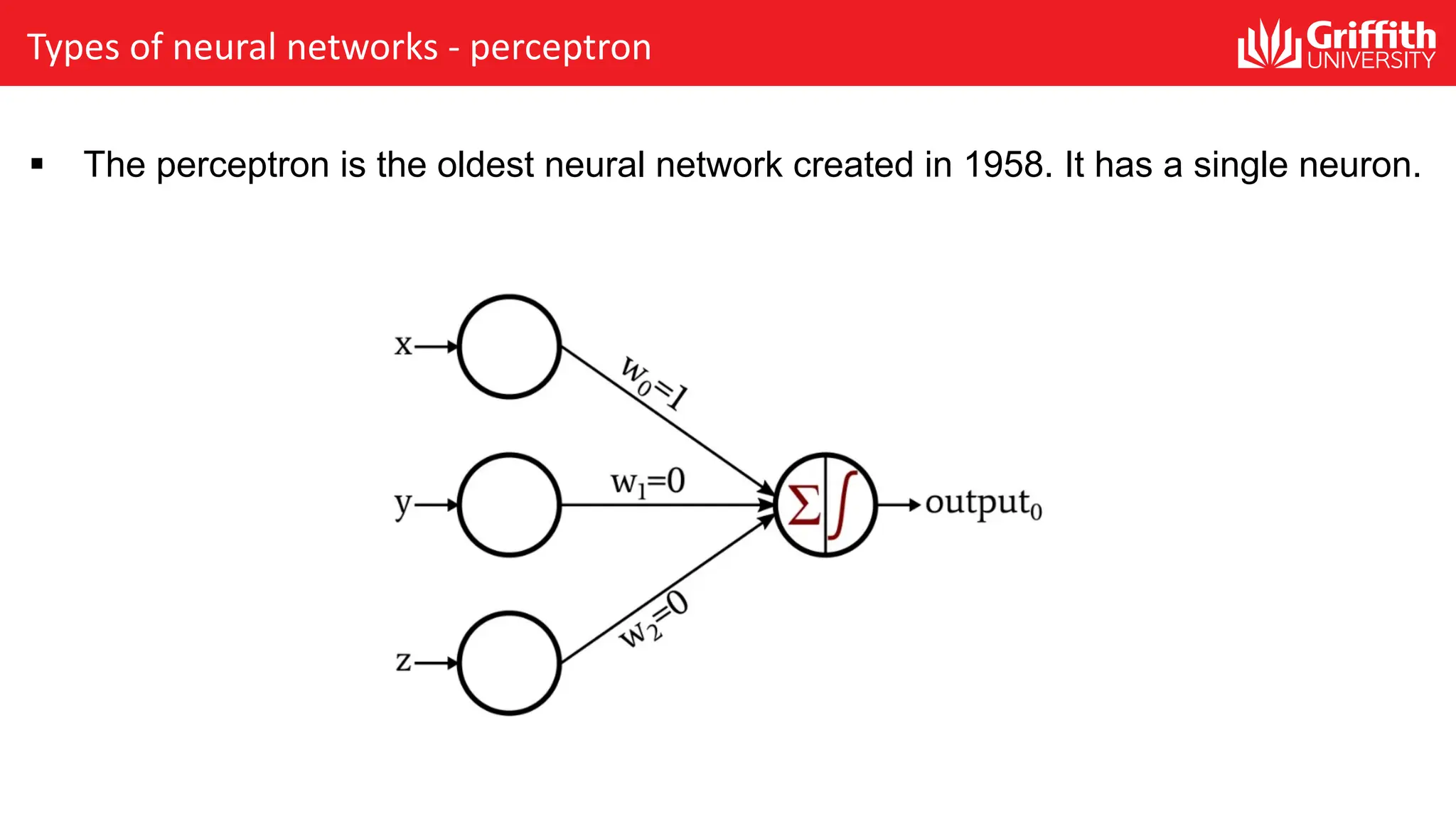

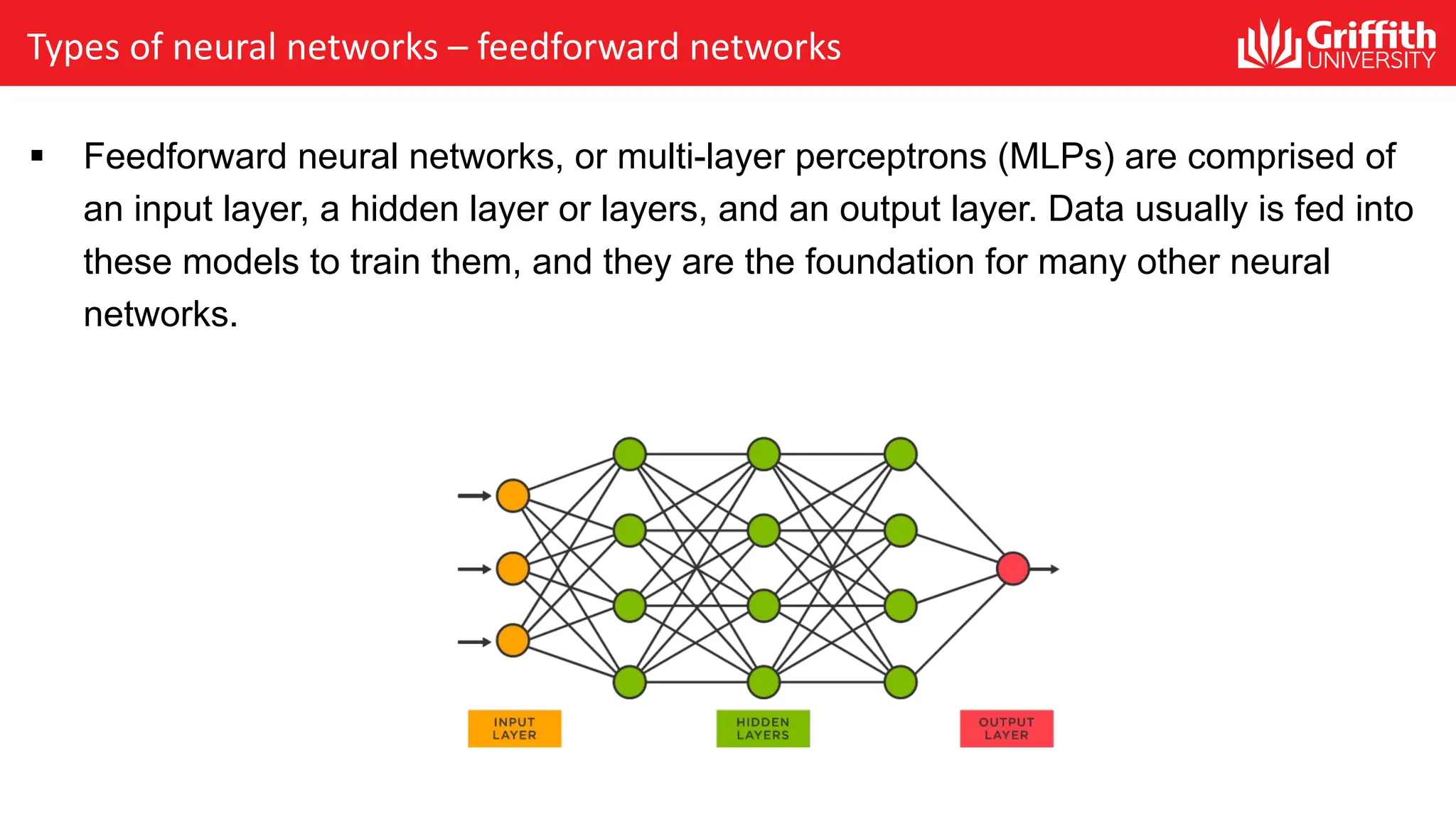

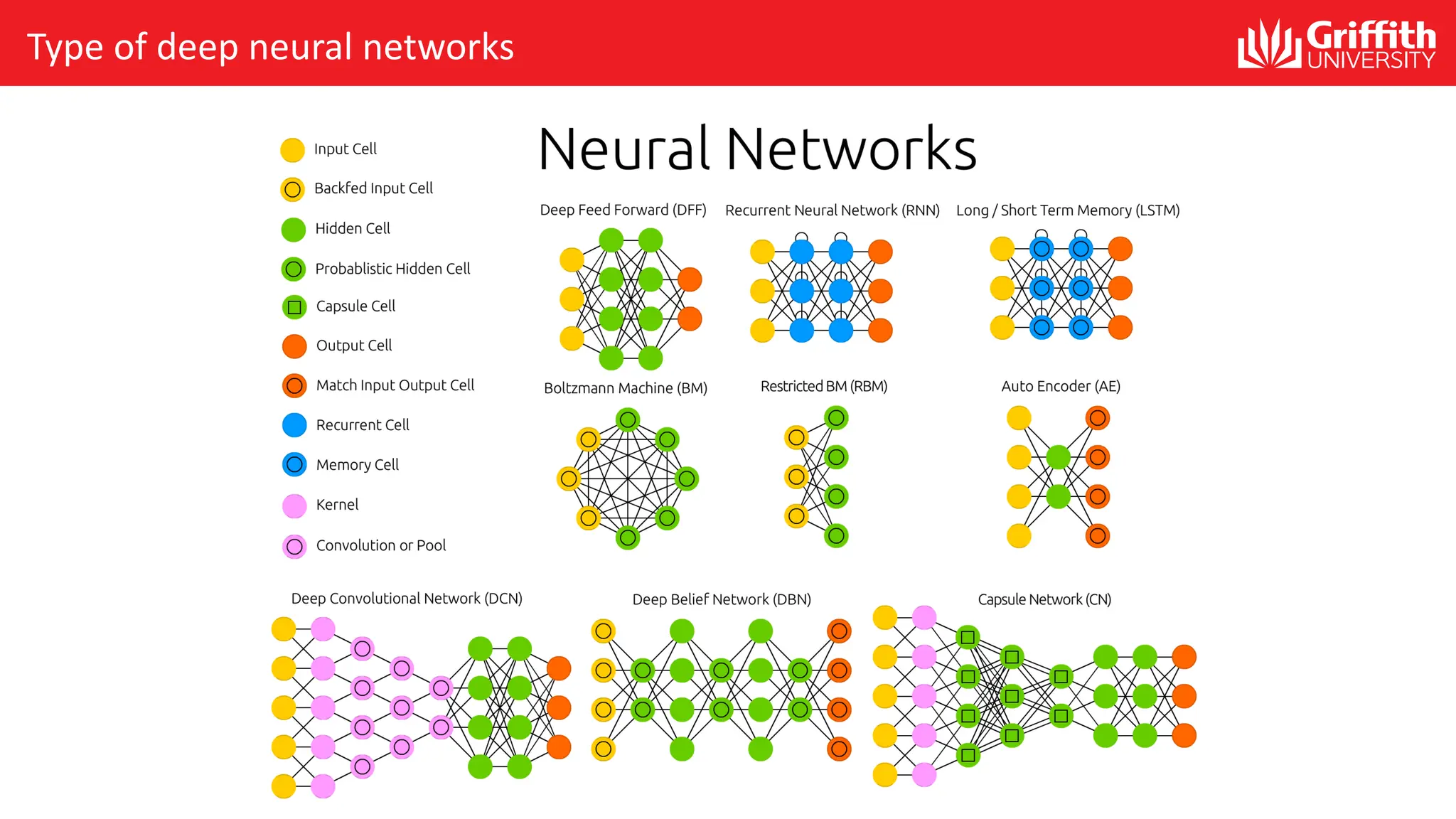

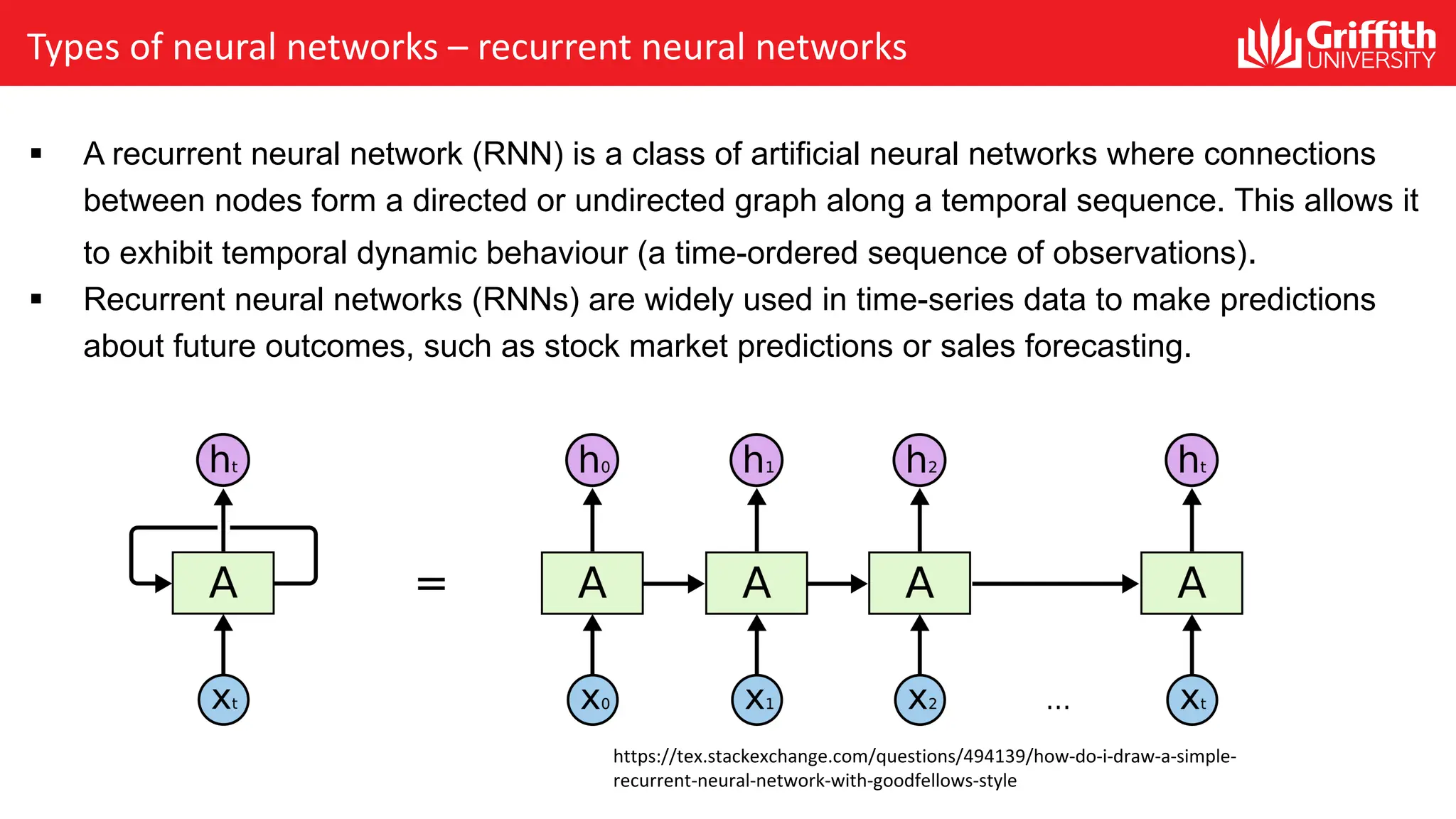

The document traces the evolution of artificial intelligence (AI) and neural networks, highlighting significant milestones from the 1960s through recent advances such as ChatGPT and AlphaGo. It explains the structure and function of artificial neural networks, including how they process information and how they are trained, and describes several types of neural networks, such as convolutional and recurrent networks. It also touches on recent AI breakthroughs and the influence of AI on everyday life.