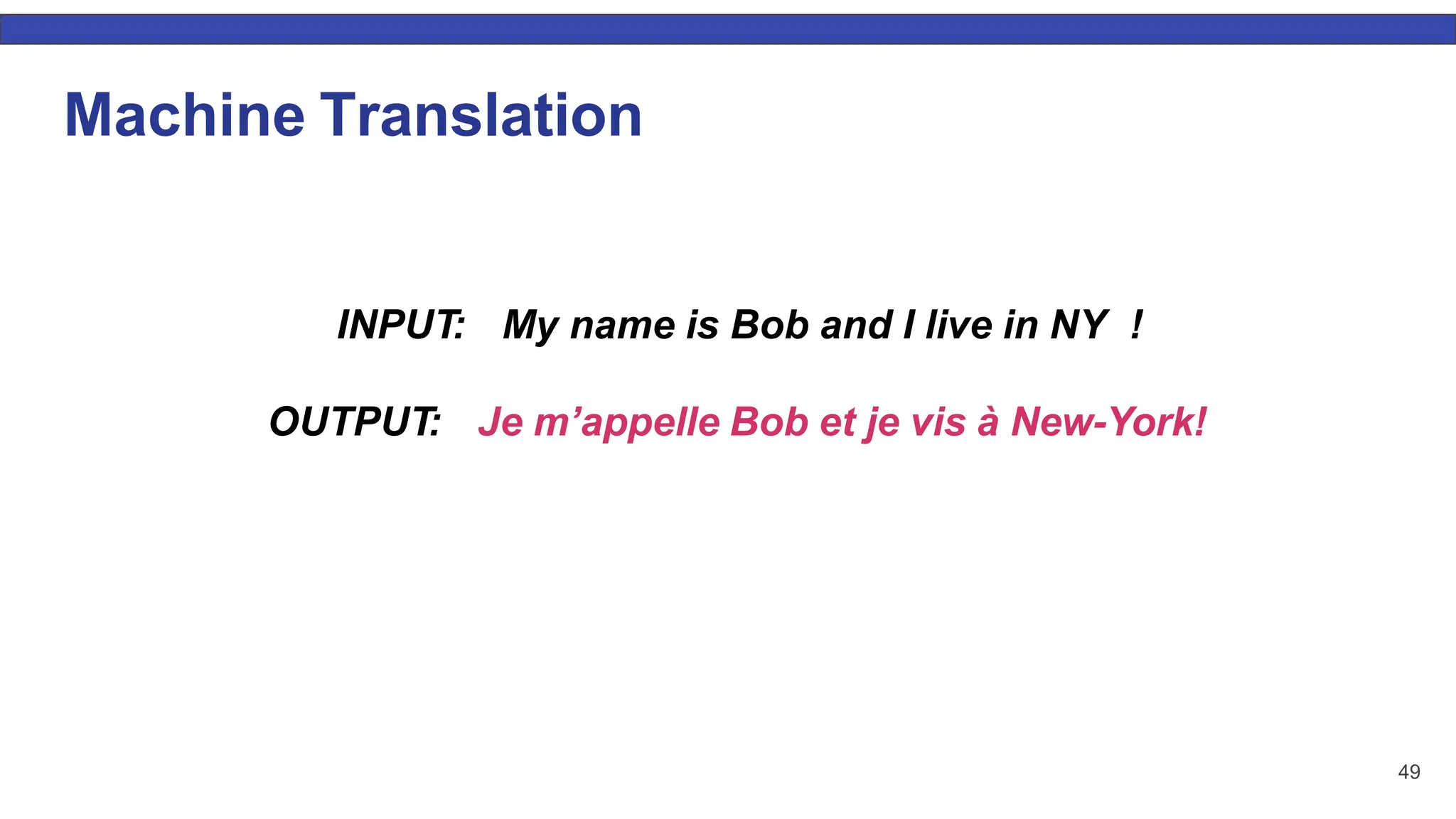

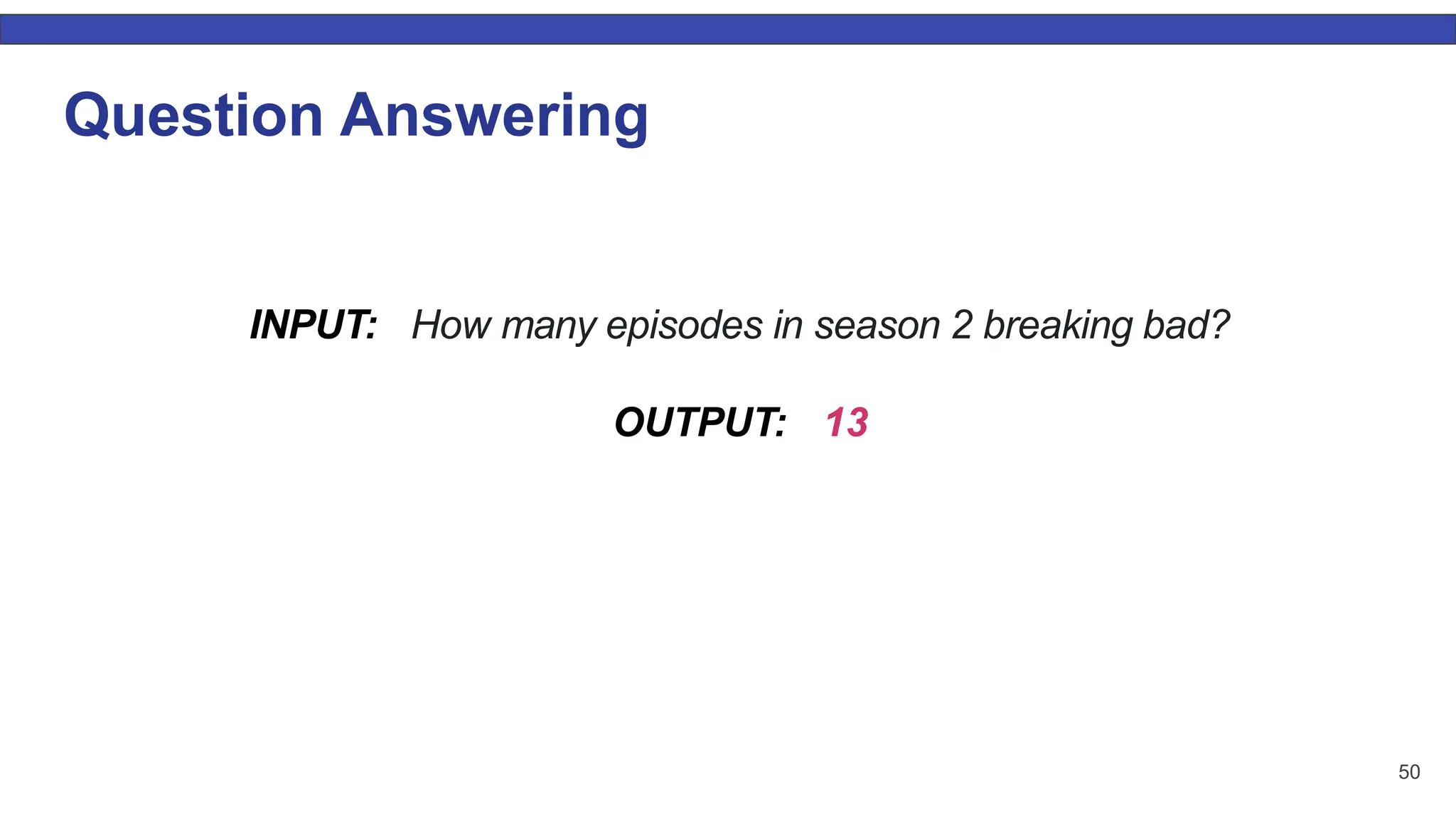

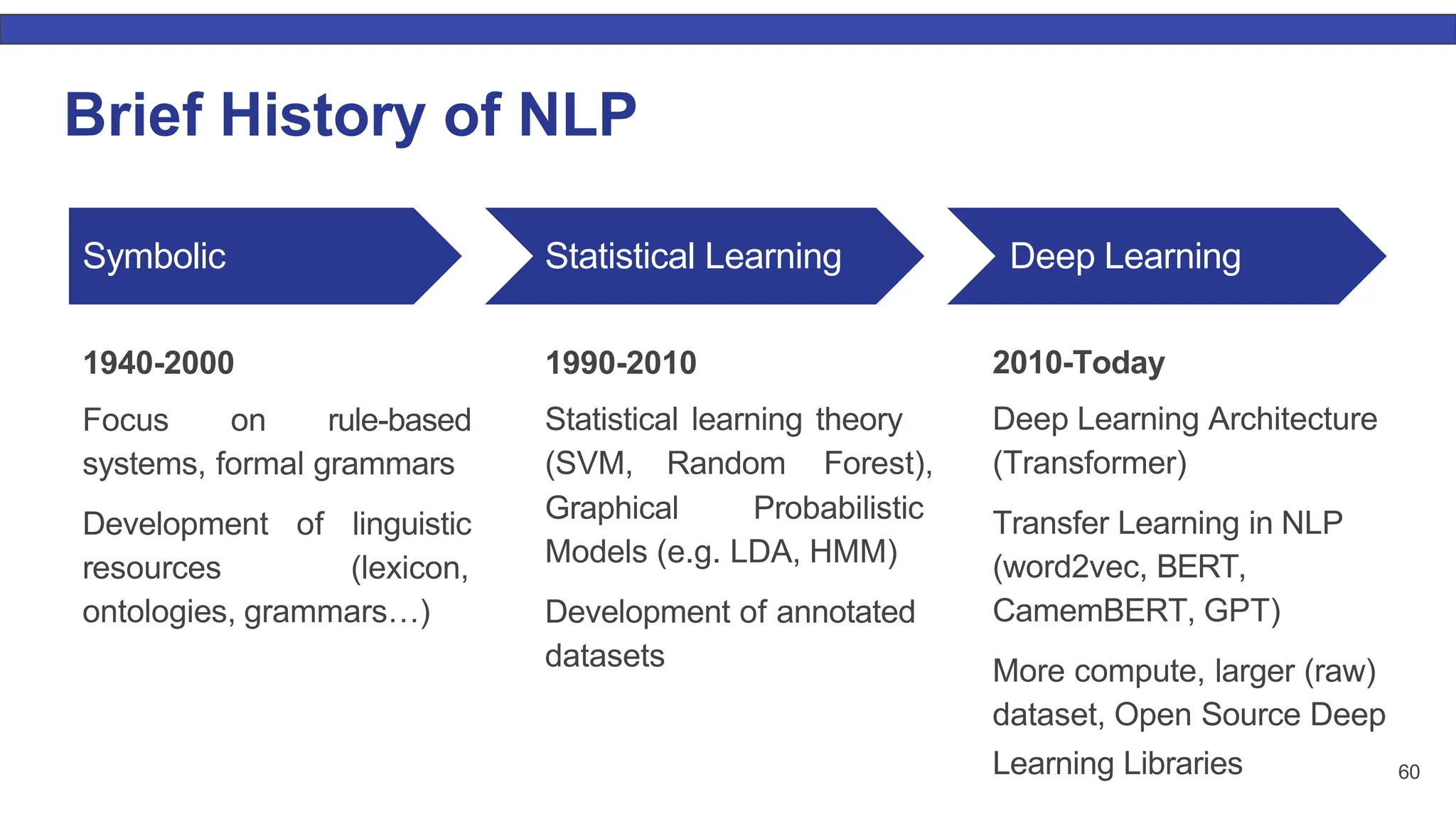

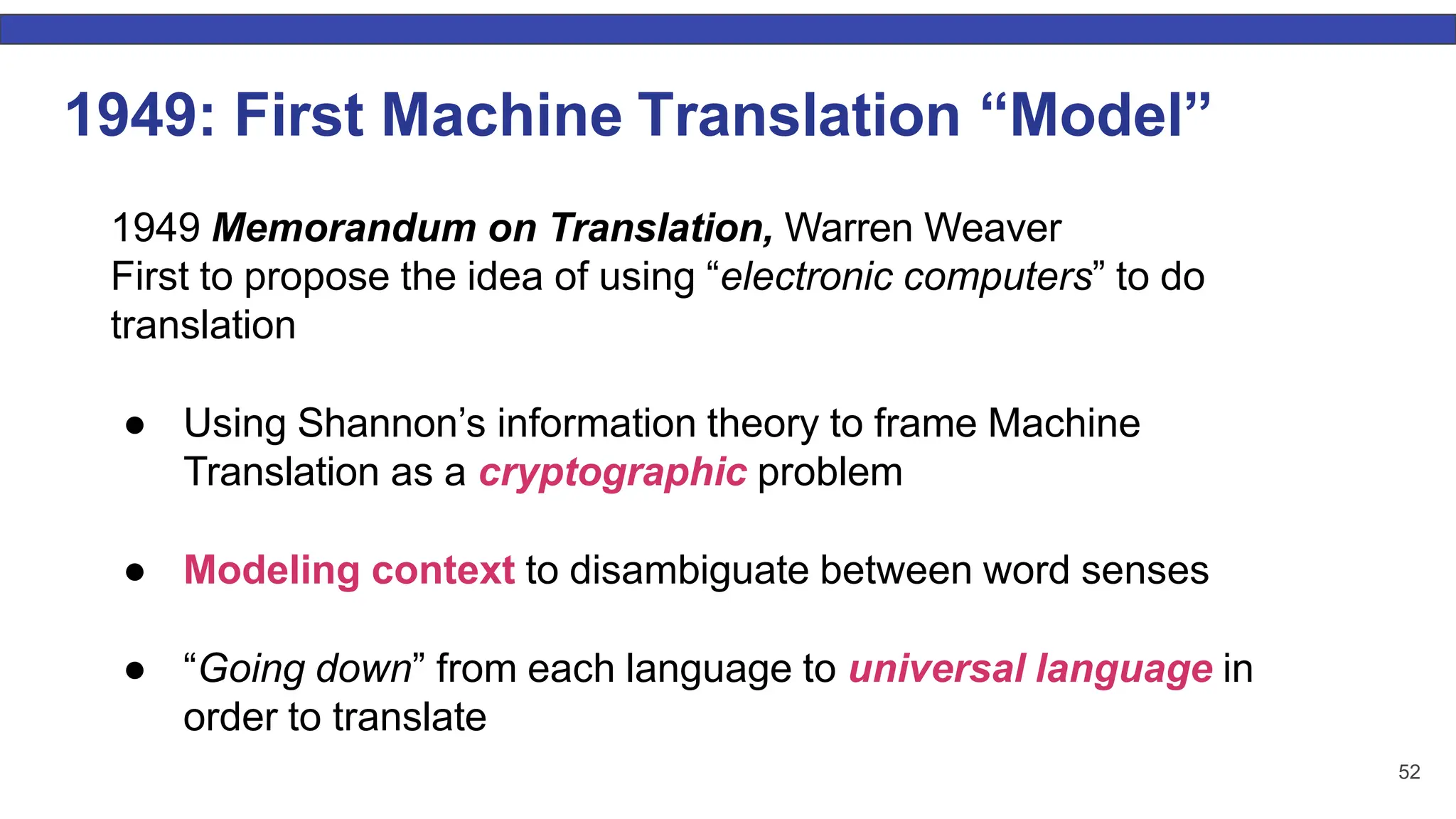

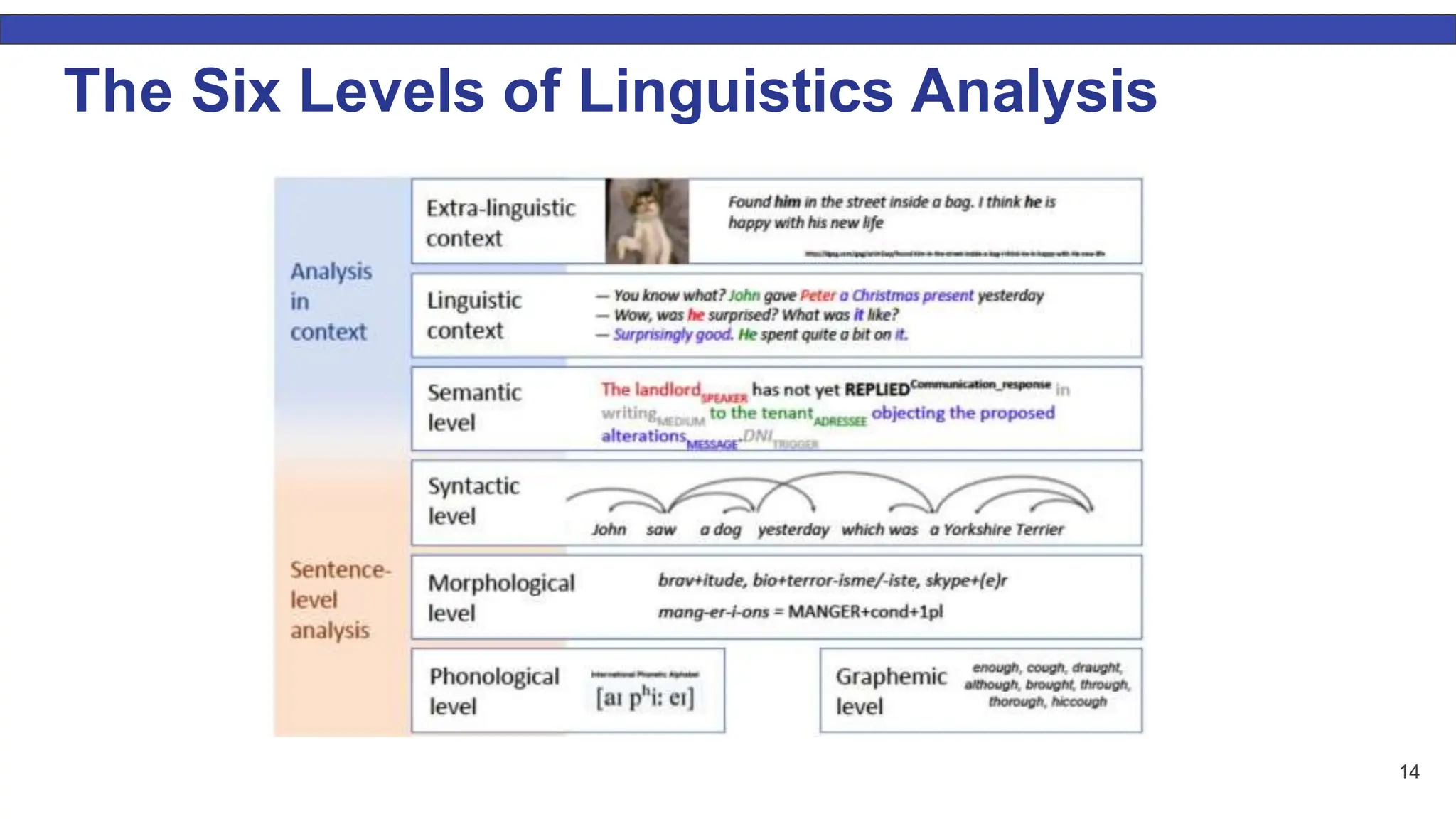

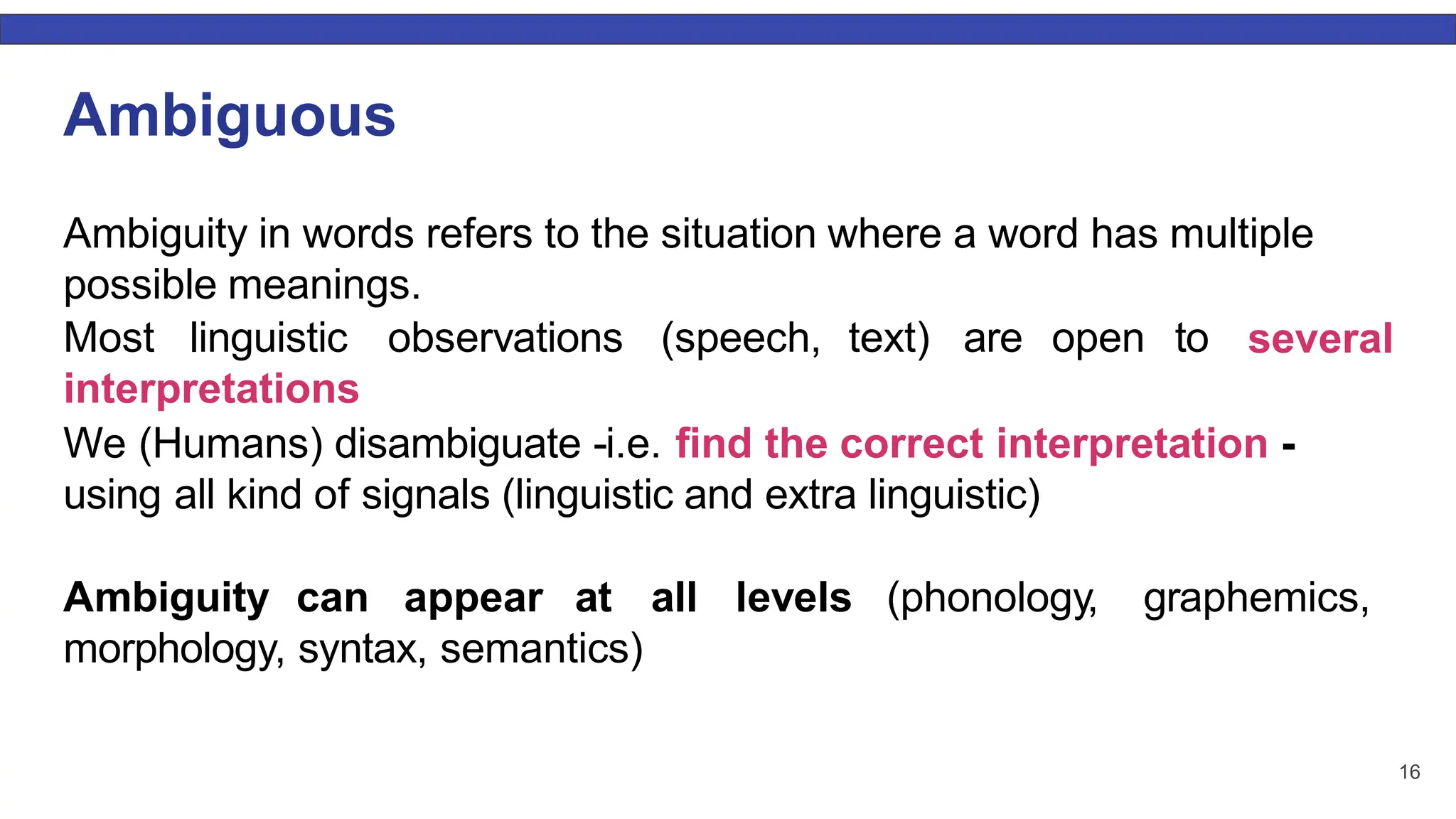

The document outlines a course on natural language processing (NLP) that focuses on machine learning and deep learning techniques, with an emphasis on practical applications and empirical evaluation. It explains why language processing matters for accessing knowledge and for communication, and what makes it hard, notably ambiguity and language diversity. It also introduces several NLP tasks and gives a historical overview of the field, from symbolic methods to deep learning approaches.

![A Definition of Language. Definition 1: Language is a means to communicate; it is a semiotic system. By that we simply mean that it is a set of signs. A sign is a pair consisting of [...] a signifier and a signified. Definition 2: A sign consists of a phonological structure, a morphological structure, a syntactic structure and a semantic structure.](https://image.slidesharecdn.com/lecture1intronlp-240701142705-d57214cc/75/lecture-1-intro-NLP_lecture-1-intro-NLP-pptx-11-2048.jpg)

![NLP Tasks: Part-of-Speech Tagging. POS Tagging: Find the grammatical category of each word. [My, name, is, Bob, and, I, live, in, NY, !]](https://image.slidesharecdn.com/lecture1intronlp-240701142705-d57214cc/75/lecture-1-intro-NLP_lecture-1-intro-NLP-pptx-43-2048.jpg)

![NLP Tasks: Part-of-Speech Tagging. POS Tagging: Find the grammatical category of each word. [My, name, is, Bob, and, I, live, in, NY, !] [PRON, NOUN, VERB, NOUN, CC, PRON, VERB, PREP, NOUN, PUNCT]](https://image.slidesharecdn.com/lecture1intronlp-240701142705-d57214cc/75/lecture-1-intro-NLP_lecture-1-intro-NLP-pptx-44-2048.jpg)
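POS tagging assigns exactly one tag to each token, so a tagged sentence is two aligned sequences of equal length. As an illustration only, the sketch below implements the classic most-frequent-tag baseline: it counts, for every word seen in training, which tag it received most often, and falls back to a default tag for unseen words. The function names, the lowercasing, and the `NOUN` default are assumptions made for this example, not part of the lecture; the single training sentence and its tag labels are reused as-is from the slide.

```python
from collections import Counter, defaultdict


def train_most_frequent_tag(tagged_sentences):
    """Count how often each (lowercased) word gets each tag; keep the most frequent tag."""
    counts = defaultdict(Counter)
    for tokens, tags in tagged_sentences:
        if len(tokens) != len(tags):
            raise ValueError("each token needs exactly one tag")
        for token, tag in zip(tokens, tags):
            counts[token.lower()][tag] += 1
    return {word: tag_counts.most_common(1)[0][0] for word, tag_counts in counts.items()}


def tag(tokens, lexicon, default="NOUN"):
    """Tag each token with its most frequent training tag; unseen words get `default`."""
    return [lexicon.get(token.lower(), default) for token in tokens]


# Training data: the example sentence and tags from the slide above.
train = [(
    ["My", "name", "is", "Bob", "and", "I", "live", "in", "NY", "!"],
    ["PRON", "NOUN", "VERB", "NOUN", "CC", "PRON", "VERB", "PREP", "NOUN", "PUNCT"],
)]
lexicon = train_most_frequent_tag(train)
print(tag(["I", "live", "in", "Paris", "!"], lexicon))
# ['PRON', 'VERB', 'PREP', 'NOUN', 'PUNCT']
```

This baseline ignores context entirely, which is exactly why ambiguous words (a word that is a noun in one sentence and a verb in another) motivate the sequence models covered later in such a course.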

![Slot-Filling / Intent Detection. Intent Detection is a sequence classification task that consists of classifying a user's intent into one of a set of predefined categories. Slot-Filling is a sequence labelling task that consists of identifying specific parameters in a user request. Example: "Can you please play Hello from Adele?" Intent: play_music. Slots: [Can, you, please, play, Hello, from, Adele, ?] [O, O, O, O, SONG, O, ARTIST, O]](https://image.slidesharecdn.com/lecture1intronlp-240701142705-d57214cc/75/lecture-1-intro-NLP_lecture-1-intro-NLP-pptx-46-2048.jpg)
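The two tasks produce different kinds of output: intent detection yields one label per utterance, while slot-filling yields one label per token. A downstream system typically combines them into a single structured request. The sketch below assumes the intent label has already been predicted (here it is simply copied from the slide) and shows how the token-level slot tags can be turned into slot values by grouping contiguous tokens that share a non-`O` tag. The function name `extract_slots` and the frame layout are hypothetical choices for this illustration.

```python
def extract_slots(tokens, slot_tags, outside="O"):
    """Group contiguous tokens sharing the same non-outside tag into slot values."""
    if len(tokens) != len(slot_tags):
        raise ValueError("tokens and slot_tags must have the same length")
    slots = {}
    span_label, span_tokens = outside, []

    def flush():
        # Save the span currently being built, unless it is an outside (O) span.
        if span_label != outside:
            slots.setdefault(span_label, []).append(" ".join(span_tokens))

    for token, label in zip(tokens, slot_tags):
        if label == span_label and label != outside:
            span_tokens.append(token)  # extend the current slot value
        else:
            flush()
            span_label, span_tokens = label, [token]
    flush()  # save a slot that ends at the last token
    return slots


tokens = ["Can", "you", "please", "play", "Hello", "from", "Adele", "?"]
slot_tags = ["O", "O", "O", "O", "SONG", "O", "ARTIST", "O"]
frame = {"intent": "play_music", "slots": extract_slots(tokens, slot_tags)}
print(frame)
# {'intent': 'play_music', 'slots': {'SONG': ['Hello'], 'ARTIST': ['Adele']}}
```

Because the slide's tags carry no begin/inside prefixes, this grouping would merge two adjacent values of the same slot type (for example, two consecutive song titles); prefixed schemes such as BIO exist to remove that ambiguity.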

![NLP Tasks: Named Entity Recognition. NER: Find the named entities in a sentence. [My, name, is, Bob, and, I, live, in, NY, !] [O, O, O, PERSON, O, O, O, O, LOCATION, O]](https://image.slidesharecdn.com/lecture1intronlp-240701142705-d57214cc/75/lecture-1-intro-NLP_lecture-1-intro-NLP-pptx-48-2048.jpg)
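The NER labels on the slide mark each token with an entity type or `O`. In practice, NER corpora and models often use the BIO scheme instead, where `B-` marks the first token of an entity and `I-` a continuation, so that multi-token entities such as "New York" have explicit boundaries. The sketch below, an illustration and not something the lecture prescribes, converts plain per-token tags to BIO and then reads entity spans back out as `(label, start, end)` token indices with `end` exclusive. The function names are hypothetical. Note that plain tags cannot distinguish two adjacent entities of the same type, so the conversion necessarily treats them as one.

```python
def io_to_bio(tags, outside="O"):
    """Convert plain per-token entity tags to BIO: B- starts an entity, I- continues it."""
    bio = []
    previous = outside
    for tag in tags:
        if tag == outside:
            bio.append(outside)
        elif tag == previous:
            bio.append("I-" + tag)  # same type as the previous token: continuation
        else:
            bio.append("B-" + tag)  # new entity begins here
        previous = tag
    return bio


def bio_spans(bio_tags):
    """Return (label, start, end) token spans; an I- tag without a matching open span is ignored."""
    spans = []
    for i, tag in enumerate(bio_tags):
        if tag.startswith("B-"):
            spans.append([tag[2:], i, i + 1])
        elif tag.startswith("I-") and spans and spans[-1][0] == tag[2:] and spans[-1][2] == i:
            spans[-1][2] = i + 1  # extend the open span by one token
    return [tuple(span) for span in spans]


tokens = ["My", "name", "is", "Bob", "and", "I", "live", "in", "NY", "!"]
tags = ["O", "O", "O", "PERSON", "O", "O", "O", "O", "LOCATION", "O"]
bio = io_to_bio(tags)
entities = [(label, " ".join(tokens[start:end])) for label, start, end in bio_spans(bio)]
print(entities)
# [('PERSON', 'Bob'), ('LOCATION', 'NY')]
```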