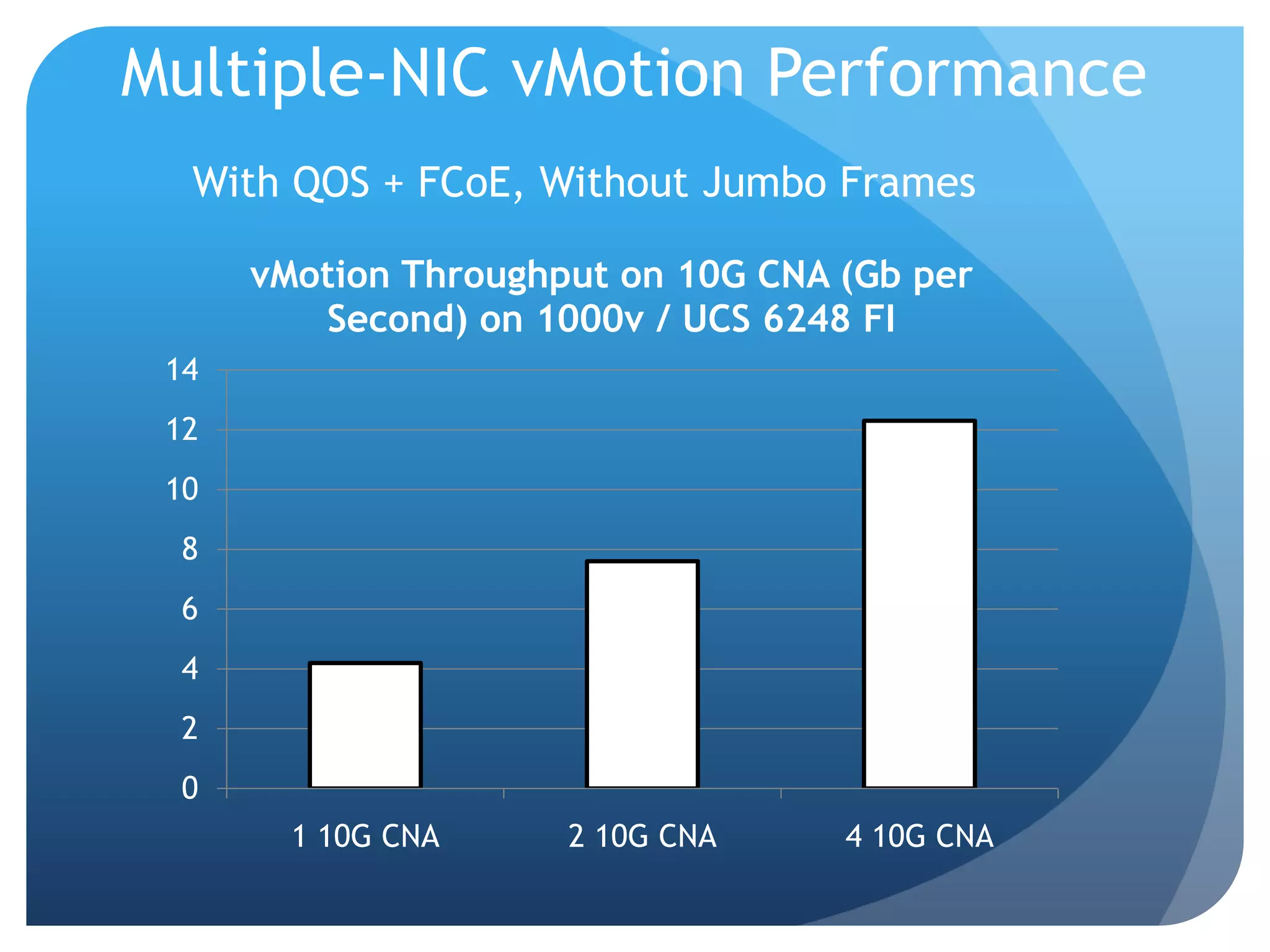

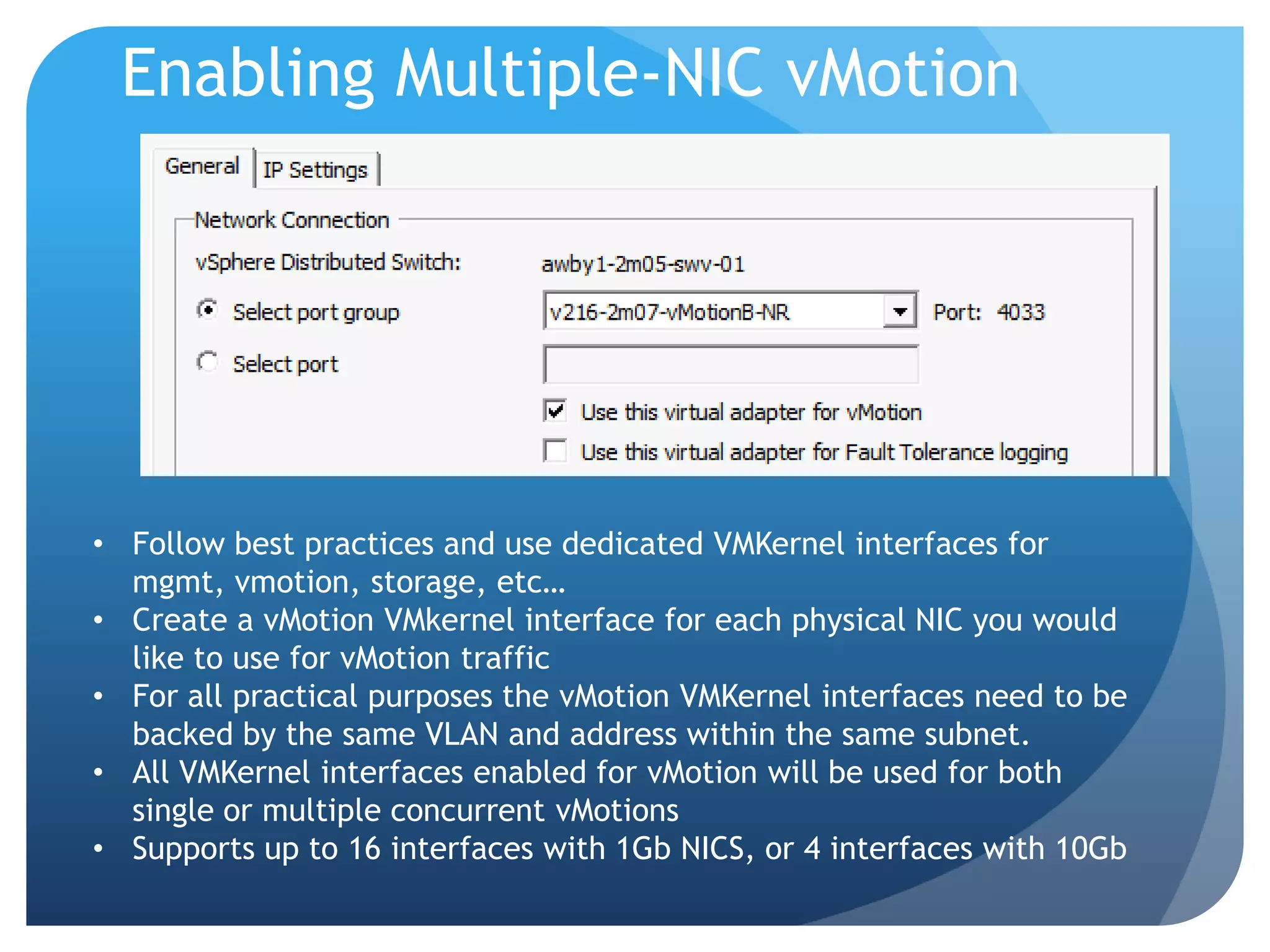

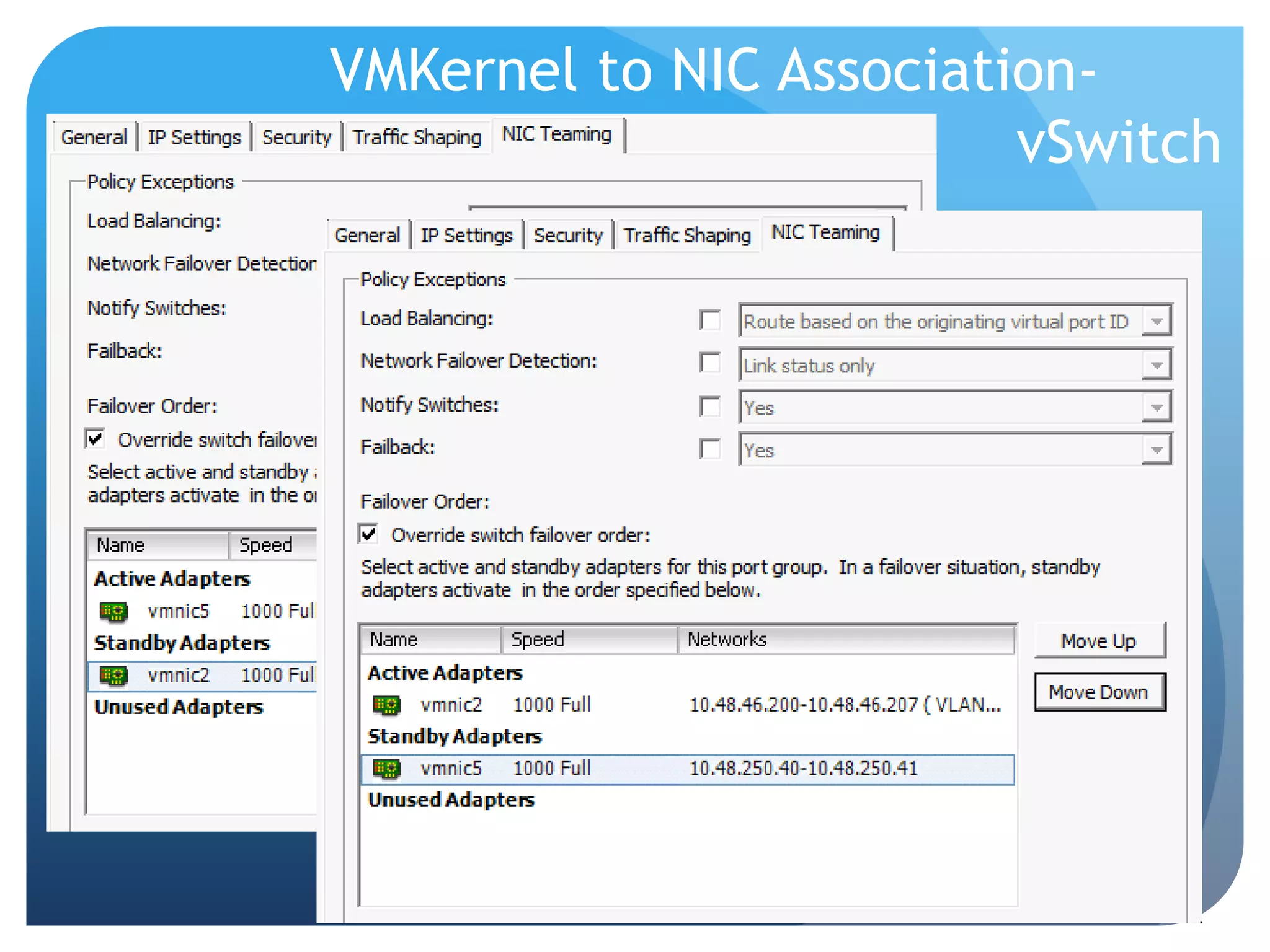

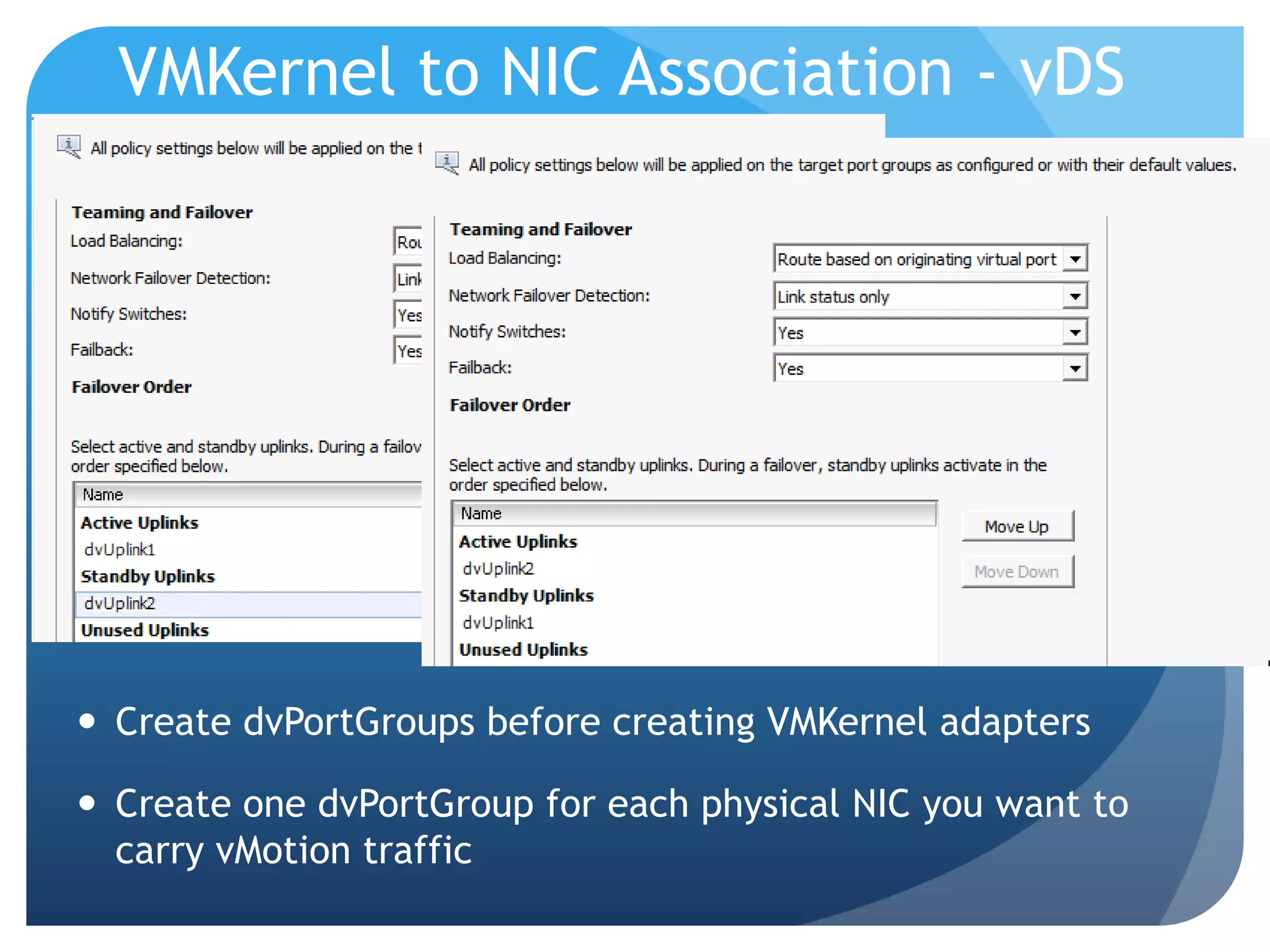

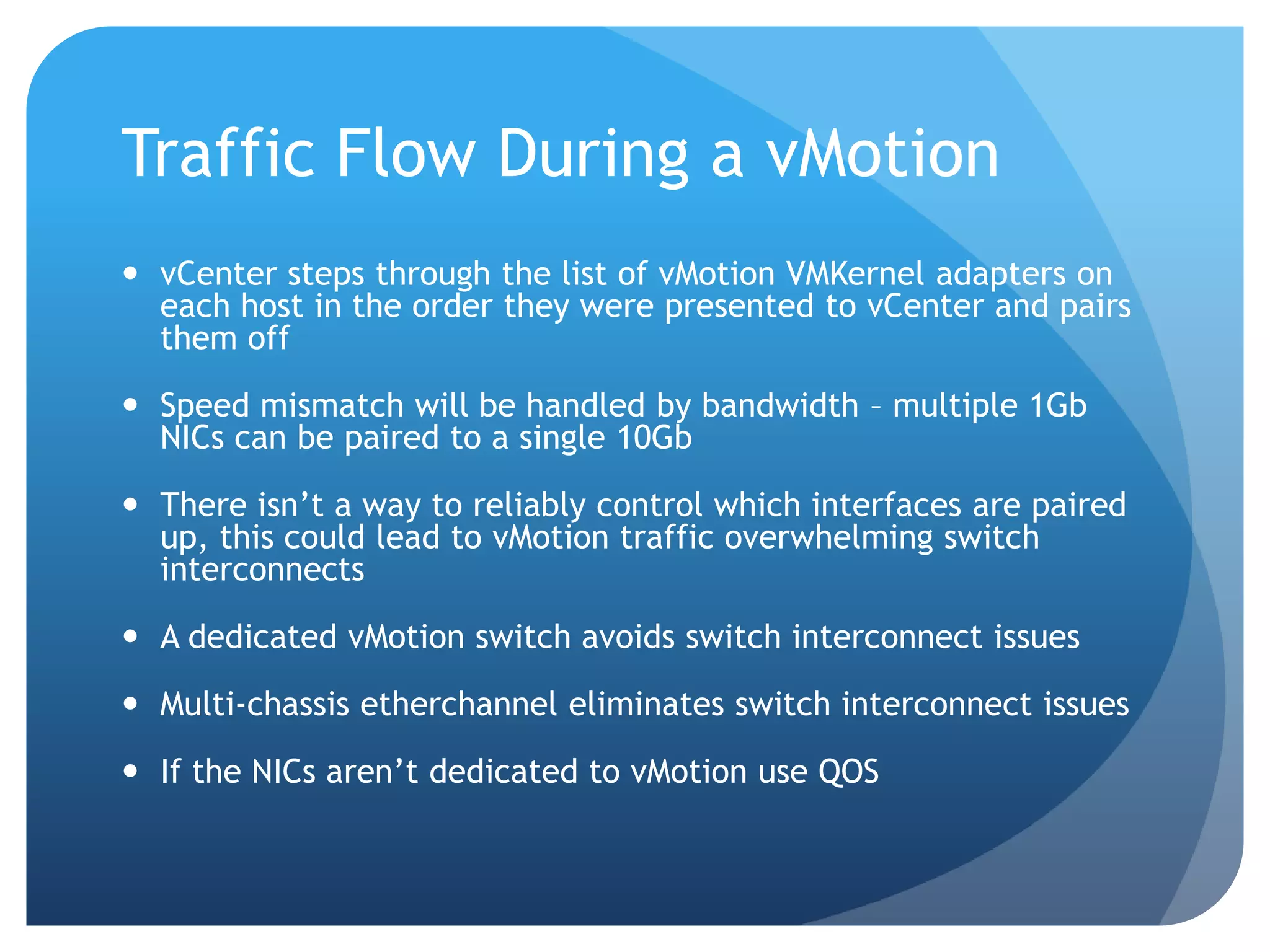

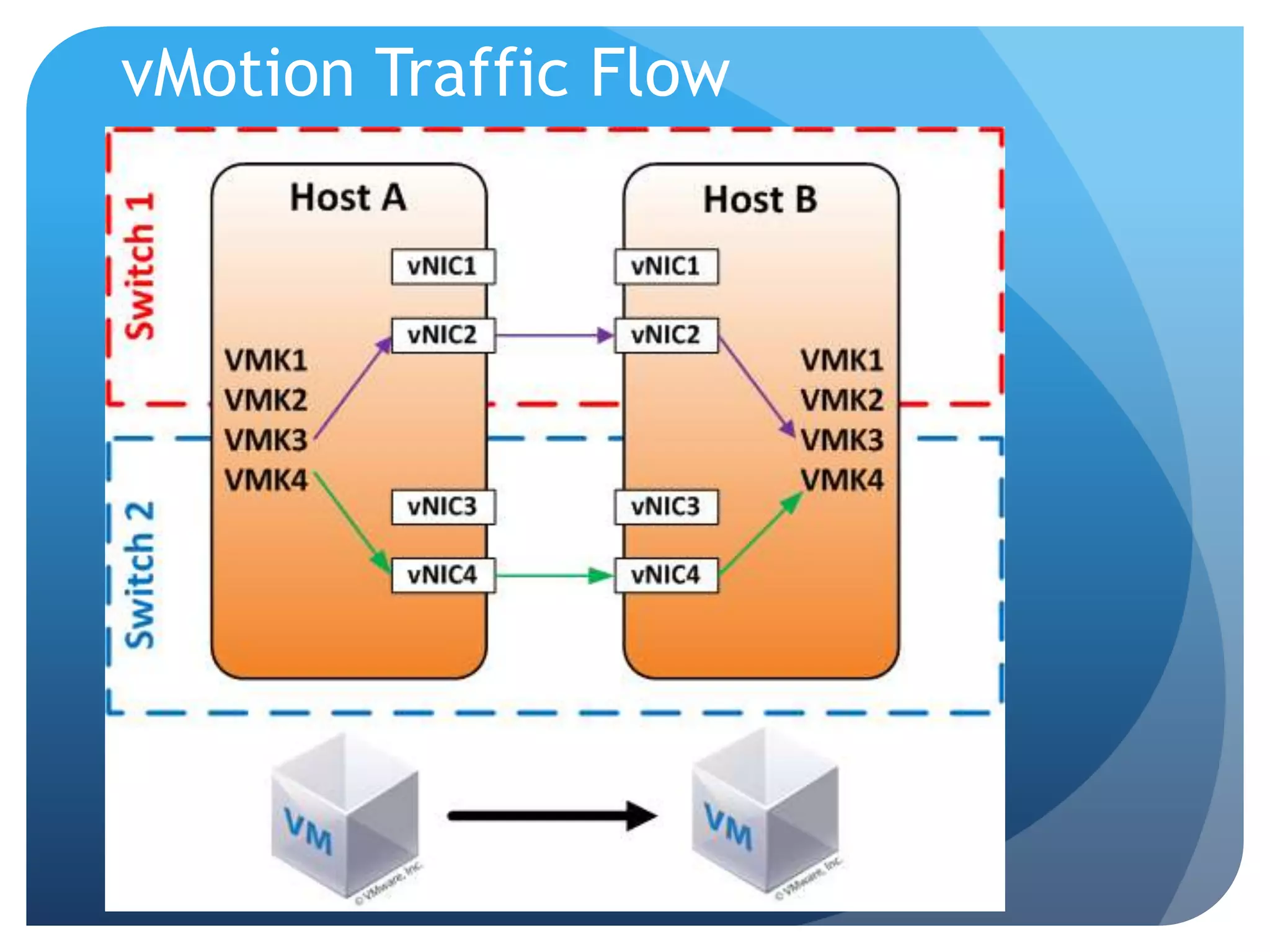

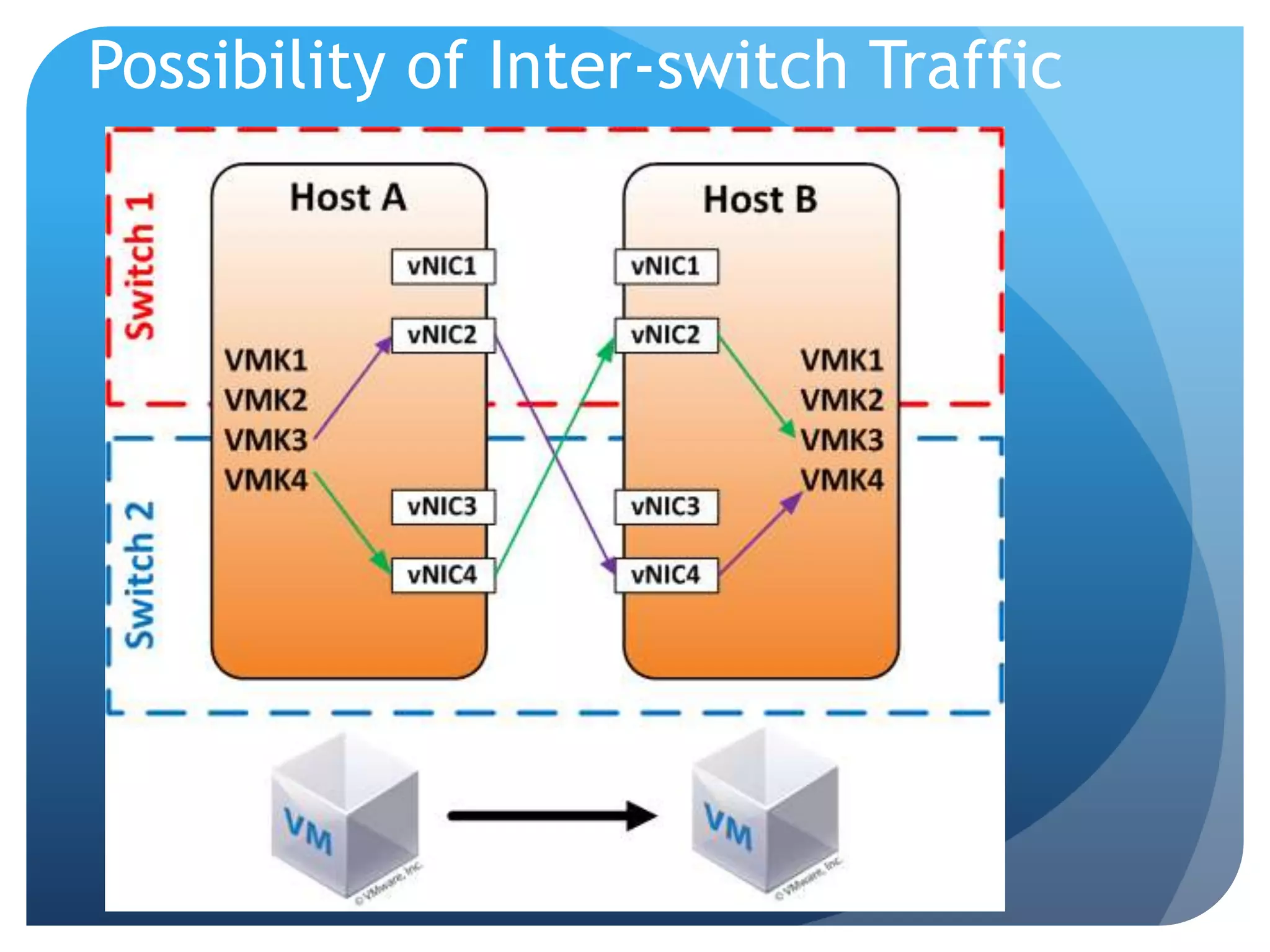

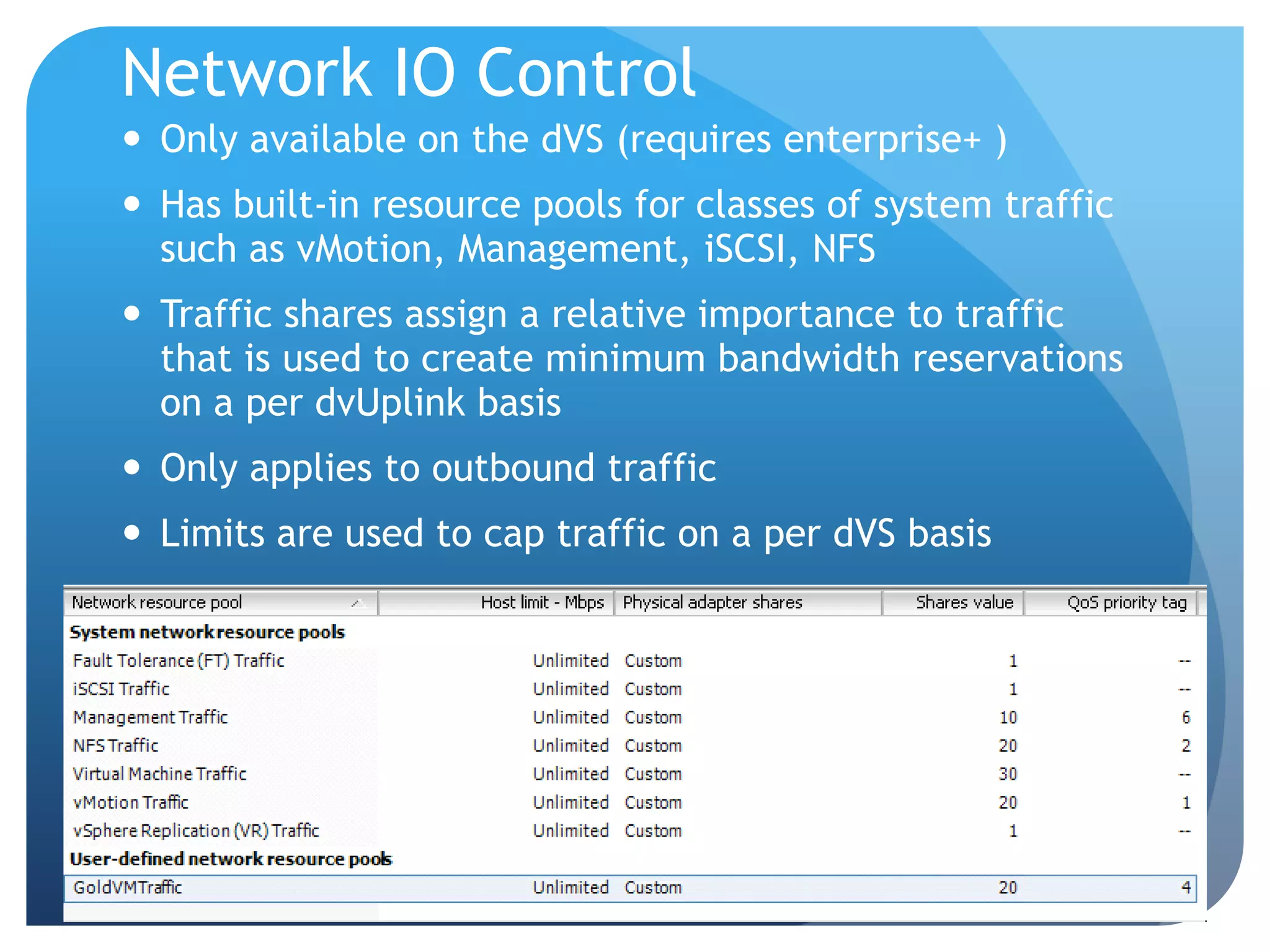

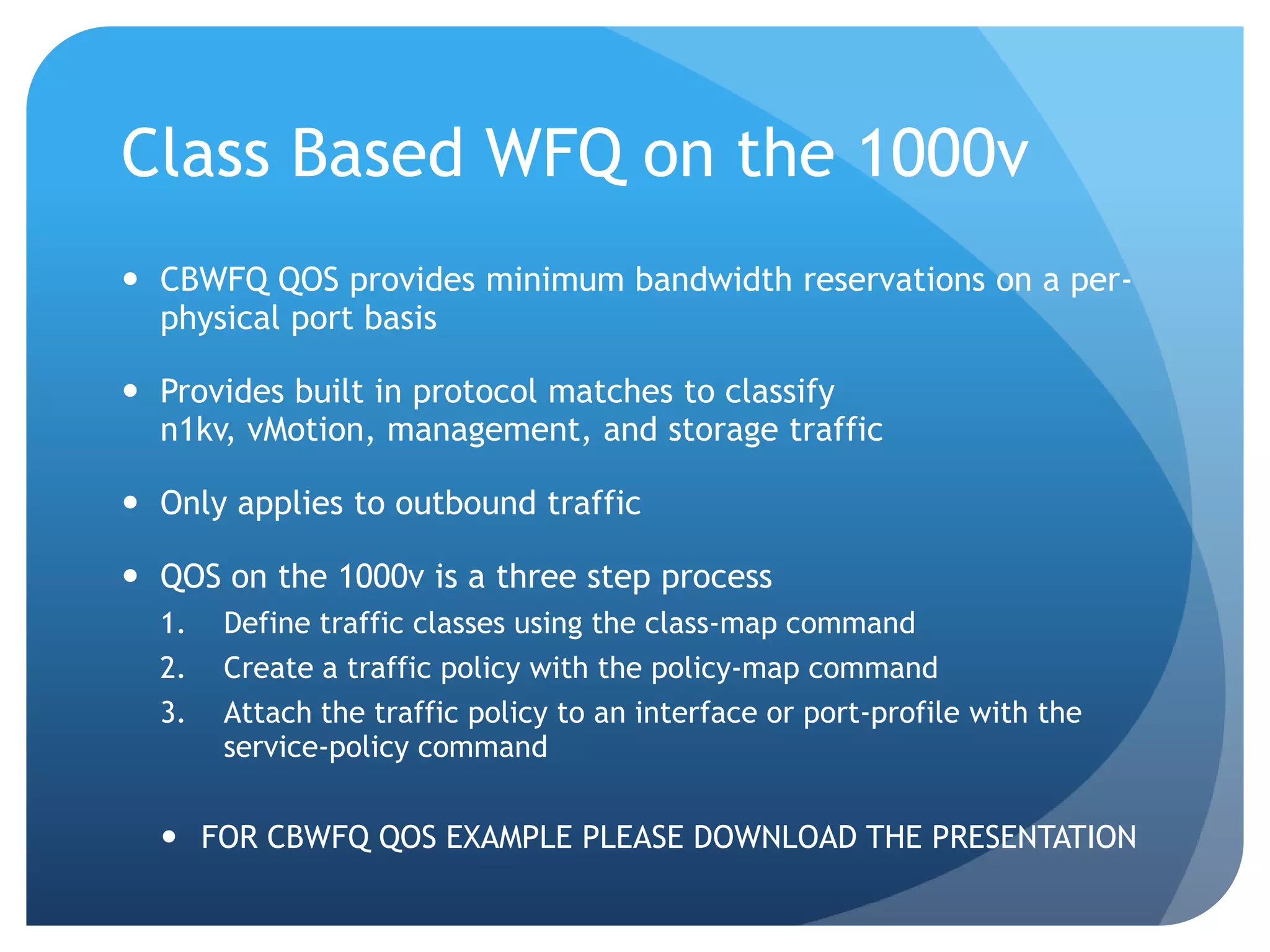

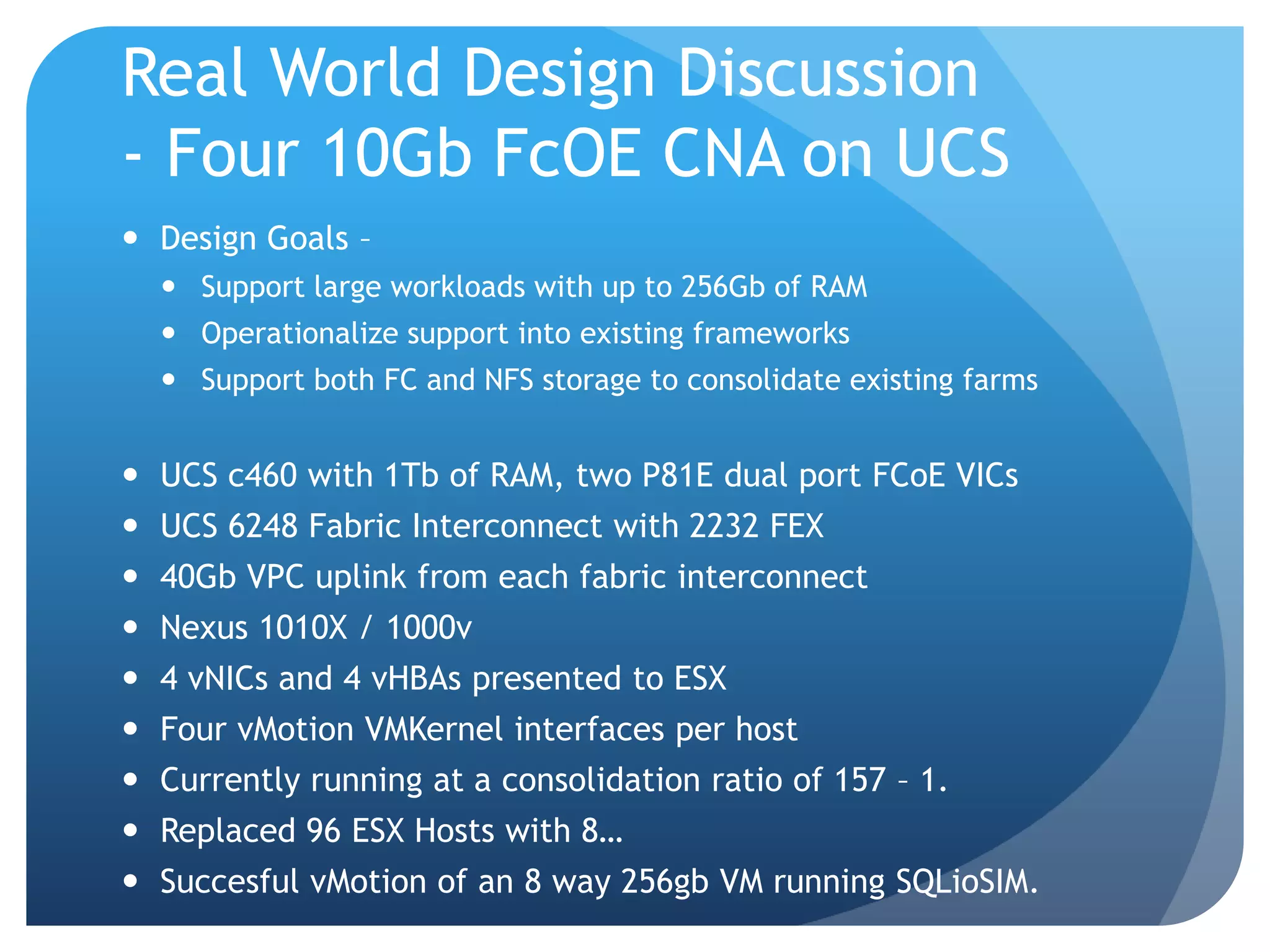

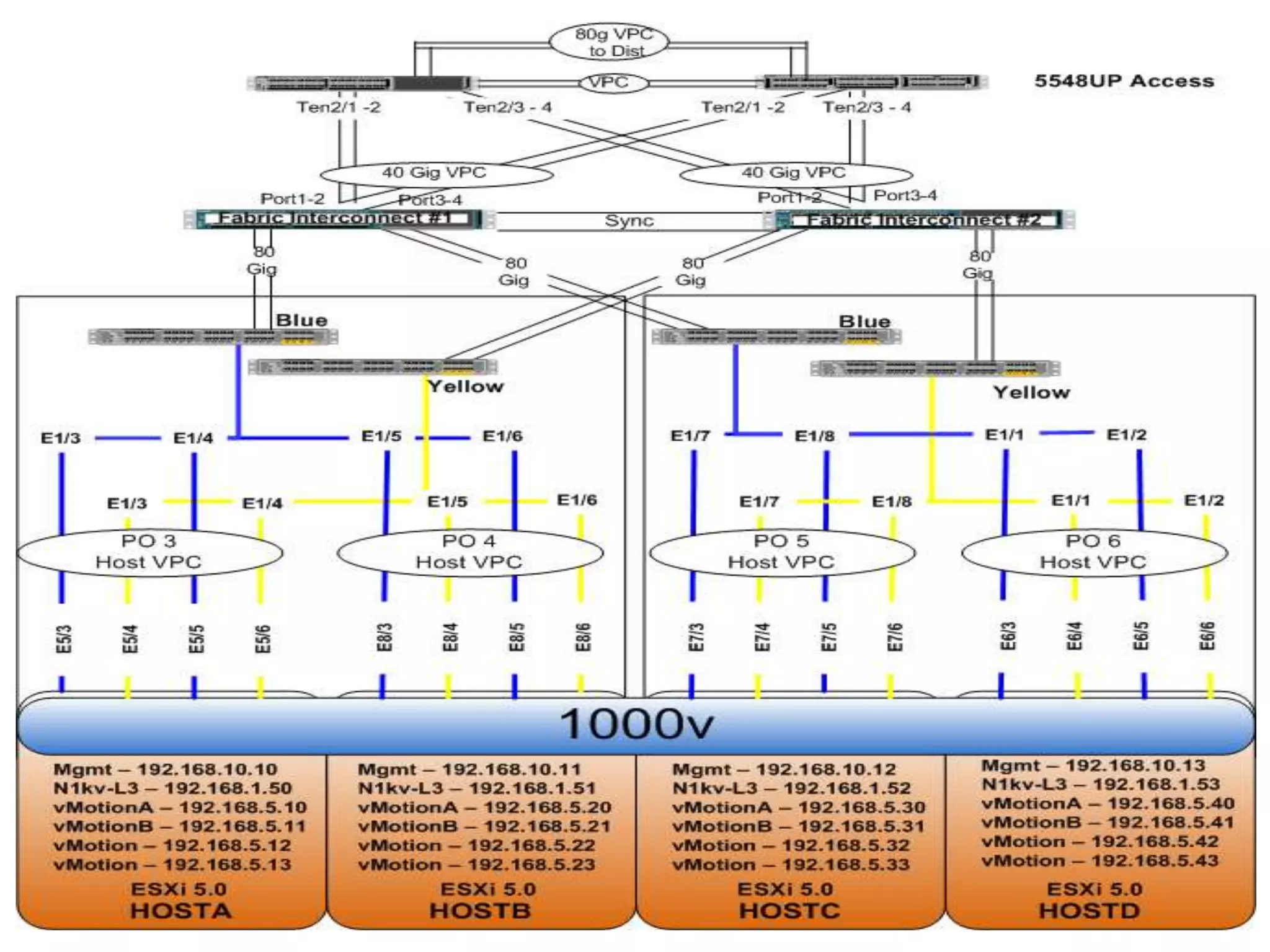

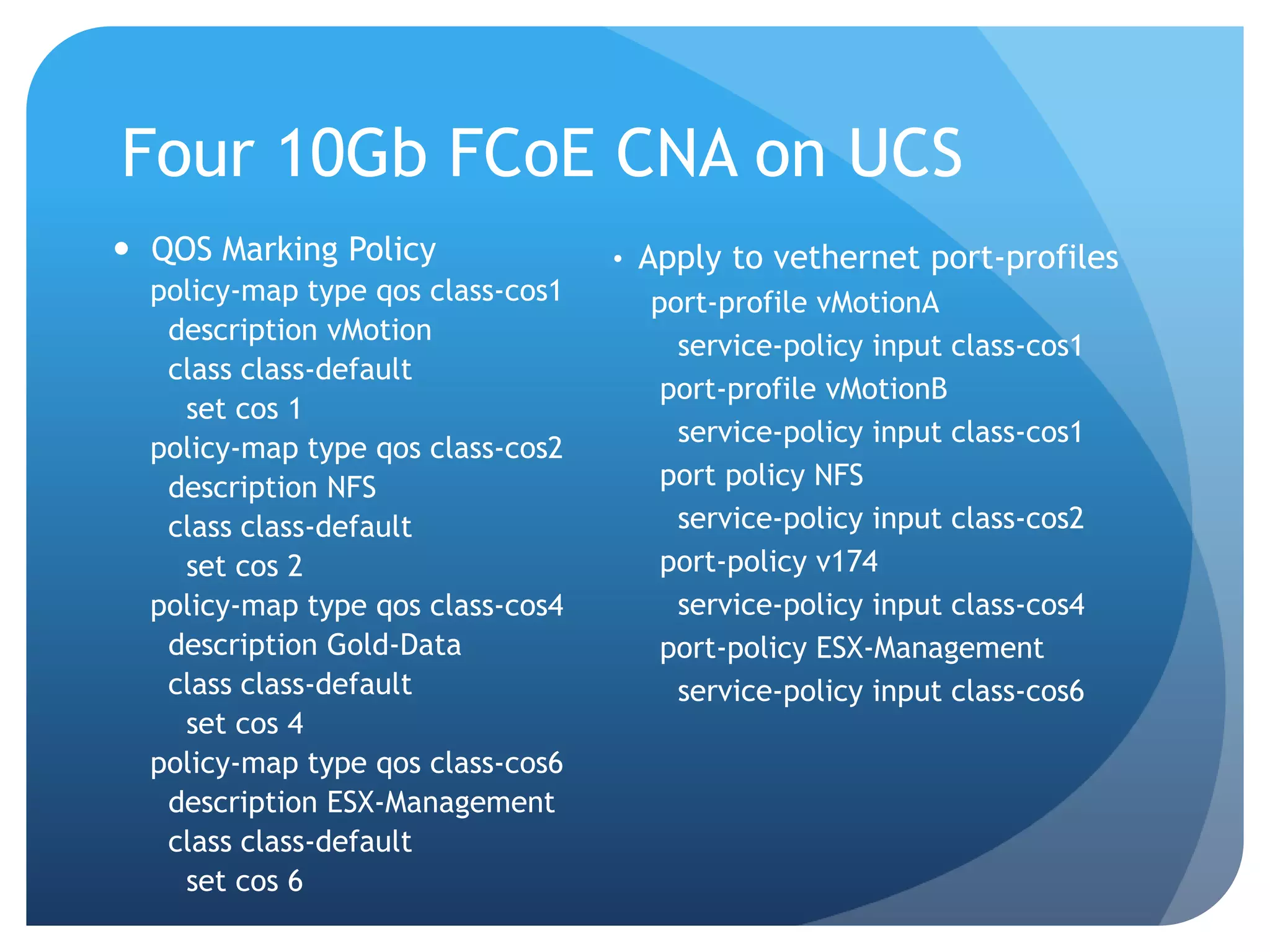

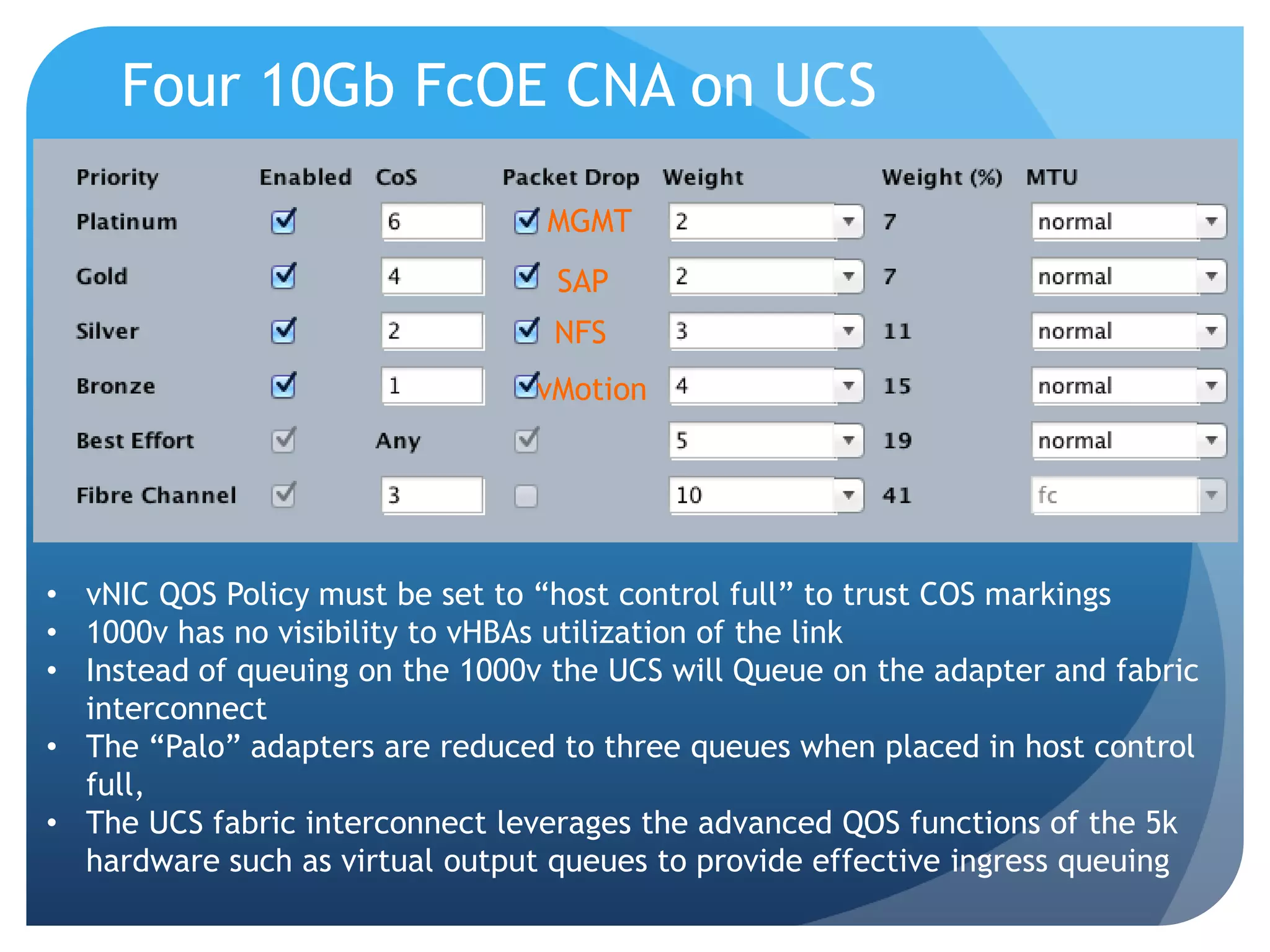

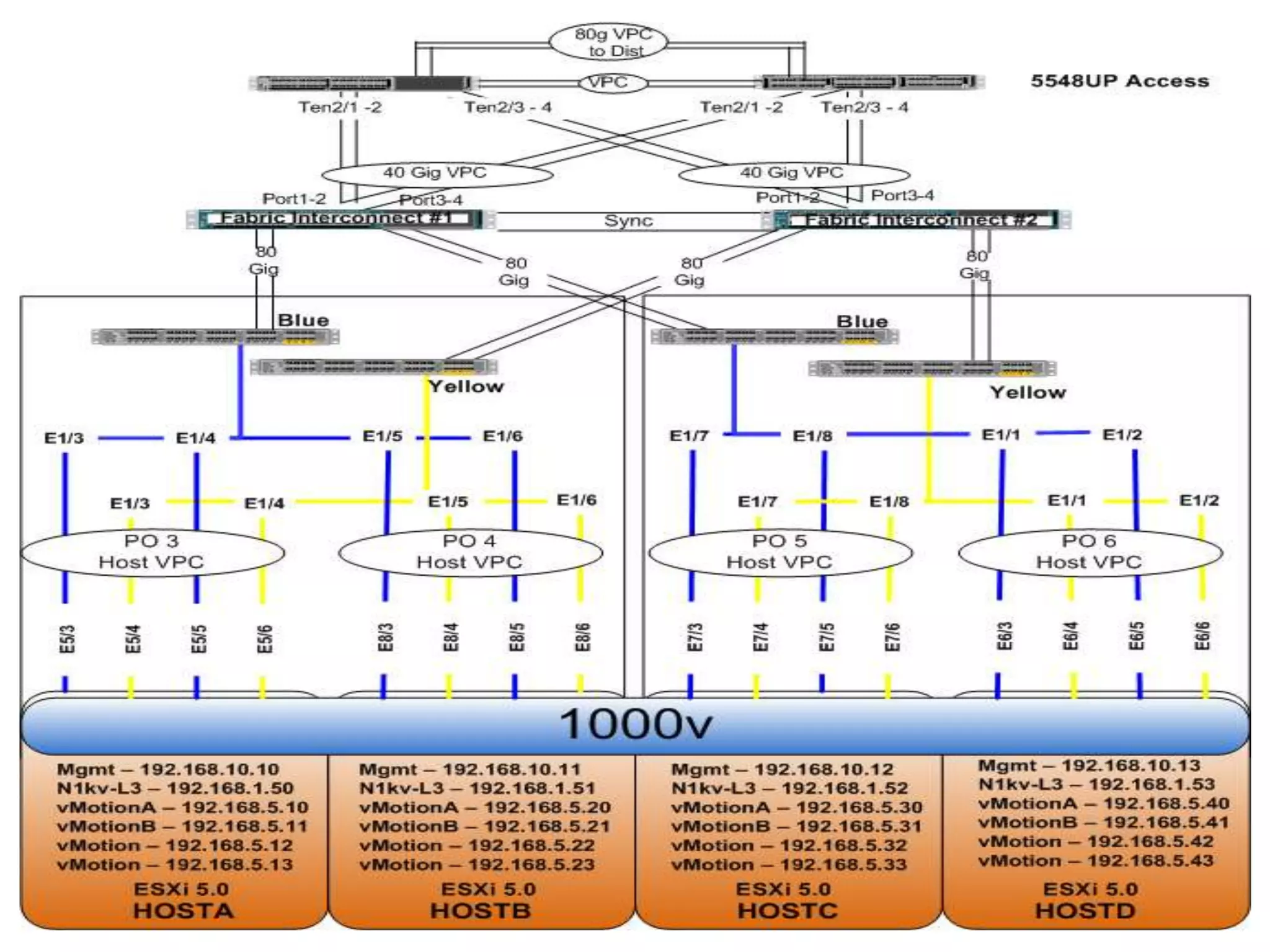

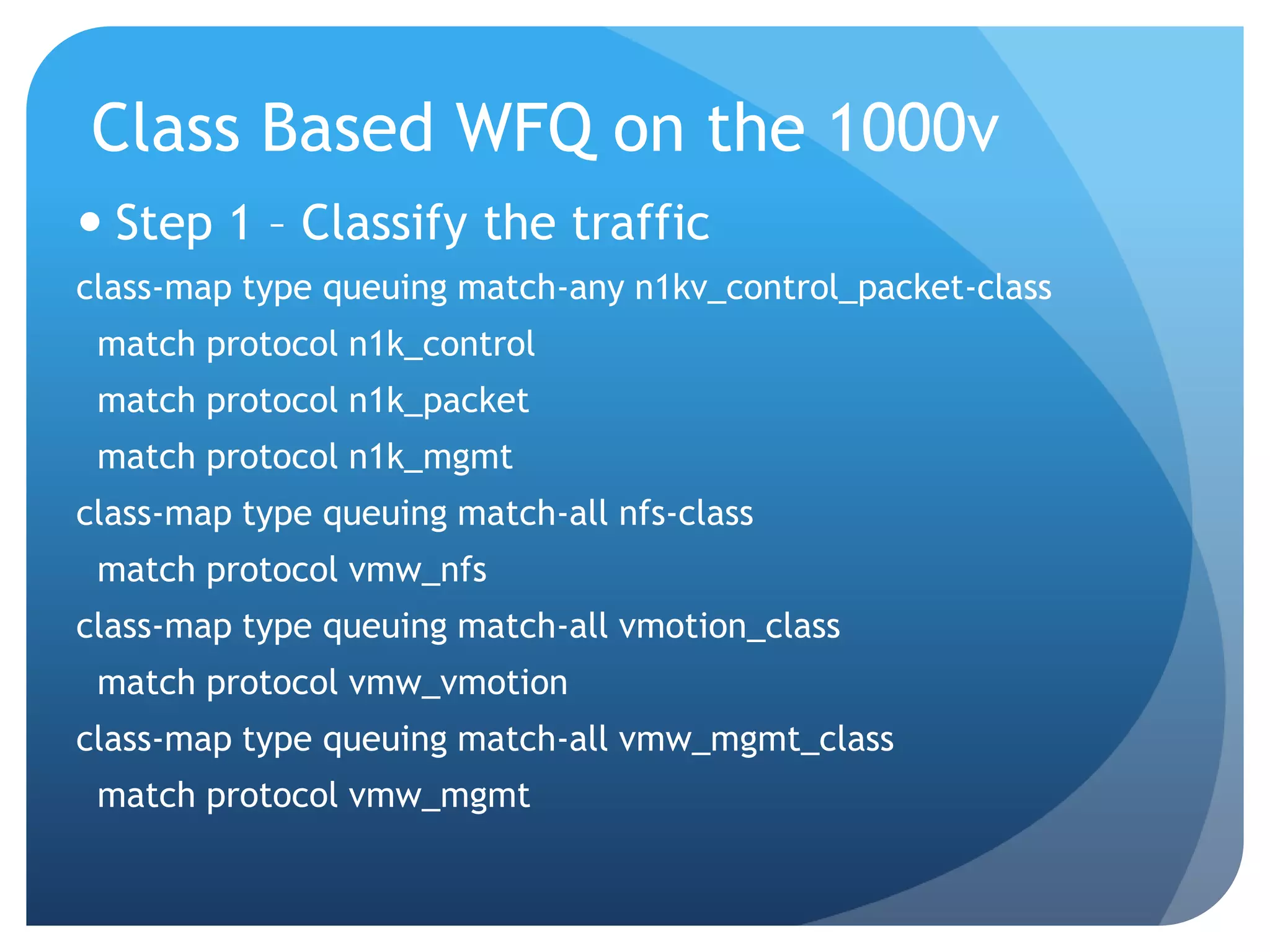

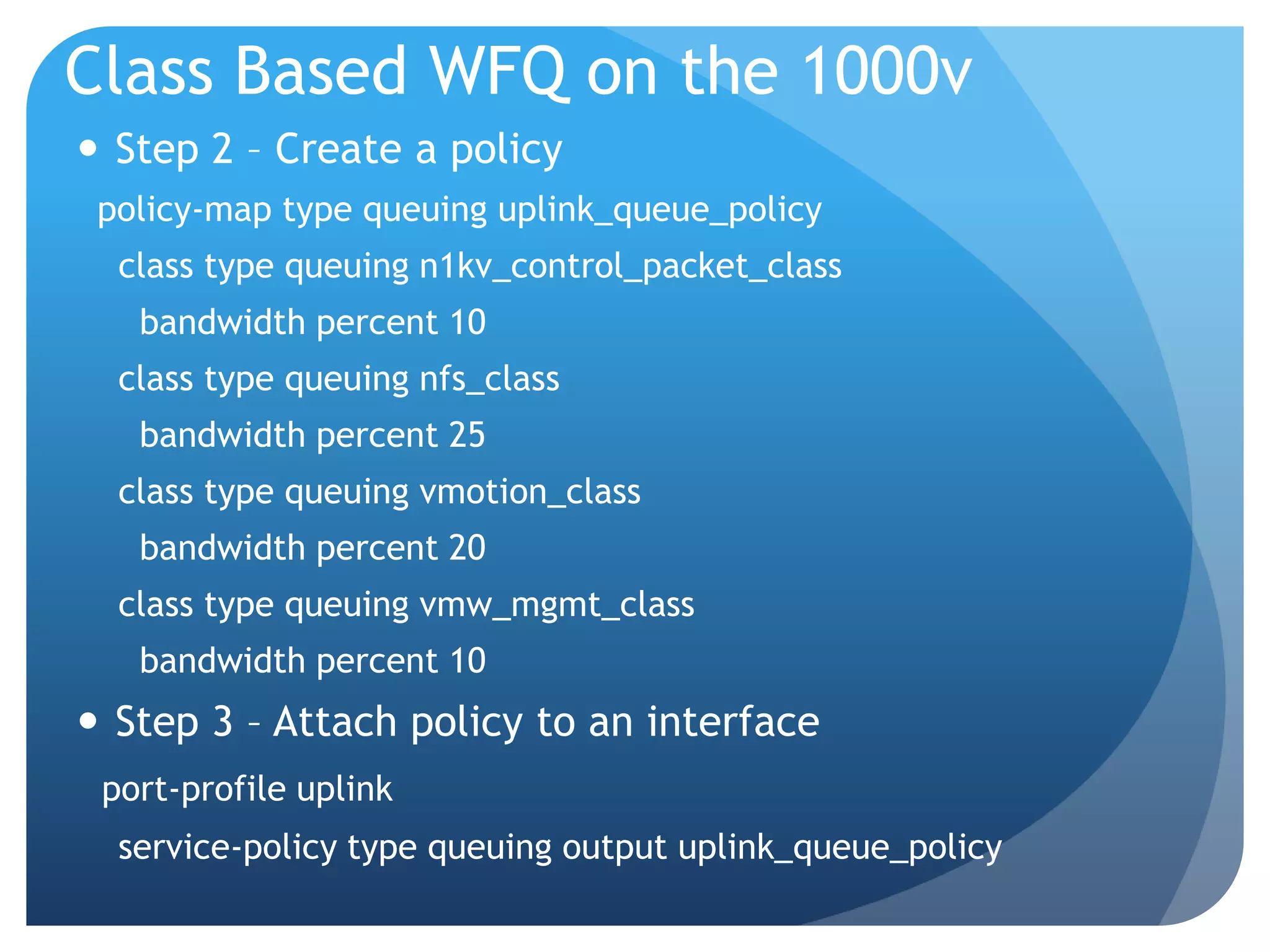

Medtronic had trouble virtualizing large workloads on ESX 4.1, where vMotion migrations over 1Gb connections failed. Upgrading to ESX 5.0 enabled features such as multiple-NIC vMotion and Stun During Page Send (SDPS), which improve migration of large VMs. Spreading vMotion across multiple 10Gb NICs provided more bandwidth and shortened migration times. Without dedicated vMotion switches, quality of service (QoS) was essential to prioritize traffic and keep vMotion from overwhelming the switch interconnects. Medtronic deployed a solution with UCS servers, Nexus 1000v switches, and four 10Gb FCoE NICs per host, achieving a 157:1 consolidation ratio while successfully
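To see why bandwidth decides whether a large VM can migrate at all, here is a simplified model of iterative pre-copy migration. It is a sketch under stated assumptions, not VMware's actual vMotion algorithm. Each round sends the memory dirtied during the previous round. If the guest dirties memory faster than the link can carry it, the remaining set never shrinks and the migration cannot converge. SDPS targets exactly that case by slowing the VM's vCPUs. The function name `estimate_precopy`, the switchover threshold, the perfect load-spreading across NICs, and all input numbers below are hypothetical.

```python
def estimate_precopy(memory_gb, link_gbps, nics, dirty_rate_gbps,
                     max_rounds=30, switchover_gb=0.5):
    """Hypothetical, simplified iterative pre-copy model.

    Returns (rounds, precopy_seconds, converged). precopy_seconds excludes
    the final switchover copy. All rates are in gigabits per second.
    """
    # Assumption: traffic is spread perfectly across all vMotion NICs.
    bandwidth = link_gbps * nics
    total_bits = memory_gb * 8            # whole VM memory, in gigabits
    remaining = total_bits                # first round copies everything
    seconds = 0.0
    for rounds in range(1, max_rounds + 1):
        round_time = remaining / bandwidth
        seconds += round_time
        # Memory dirtied while this round was in flight must be resent,
        # but the VM can never have more dirty memory than it has memory.
        remaining = min(dirty_rate_gbps * round_time, total_bits)
        if remaining <= switchover_gb * 8:
            return rounds, seconds, True   # small enough to stun and switch over
    return max_rounds, seconds, False      # dirty rate outpaced the link


# Illustrative inputs only: a 64 GB VM dirtying memory at 2 Gbit/s.
print(estimate_precopy(64, 1, 1, 2))    # one 1Gb NIC
print(estimate_precopy(64, 10, 4, 2))   # four 10Gb NICs
```

Under these assumptions, the single 1Gb link never converges because the dirty rate exceeds the link rate. Four 10Gb links converge after two rounds, in roughly 13.4 seconds of pre-copy.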