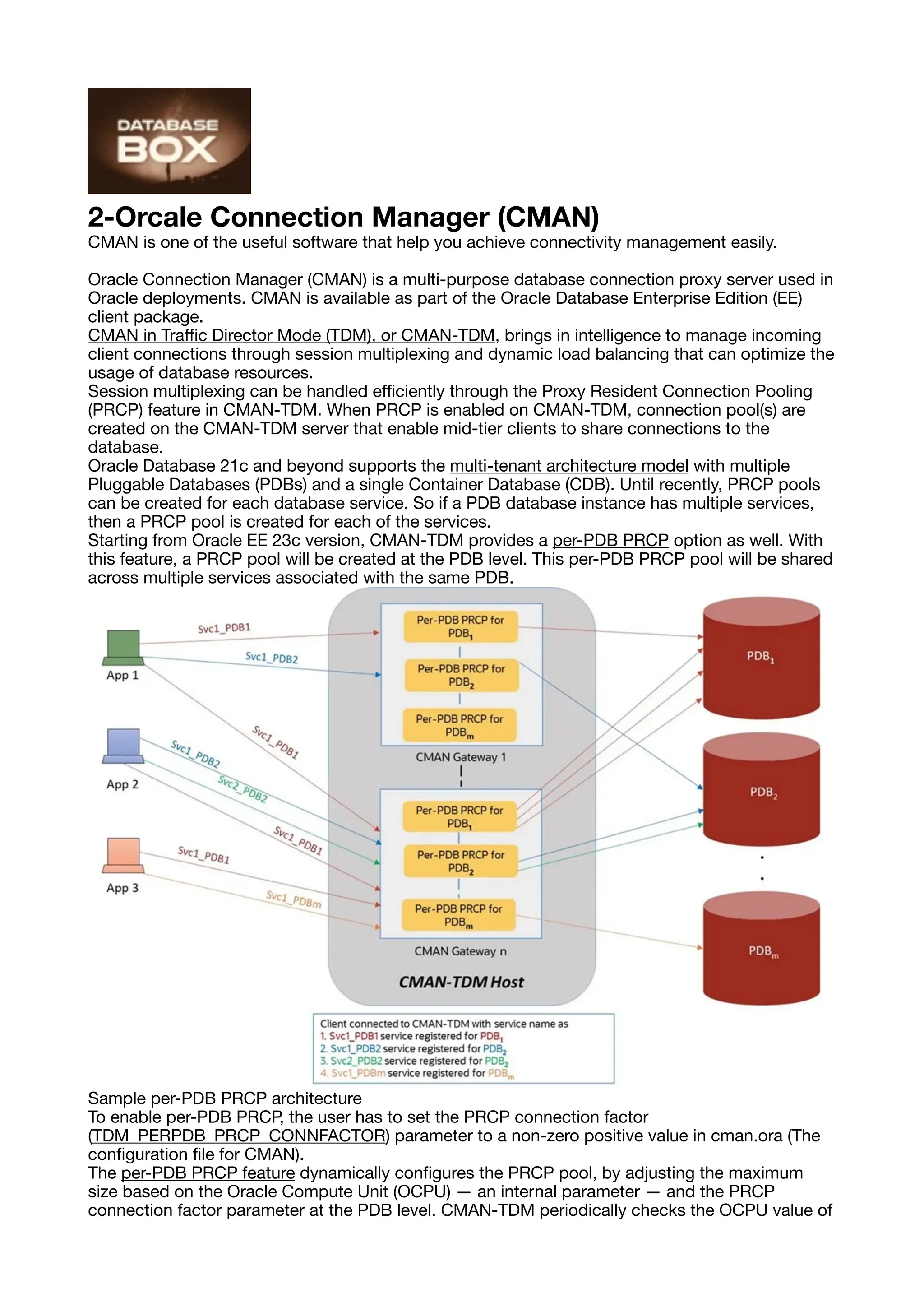

This document discusses improving database performance through connection pooling and load balancing. It describes how connection pooling reuses established database connections, rather than opening a new one for each request, to sustain performance as traffic and the number of clients grow. It then summarizes several Oracle and MySQL/MariaDB solutions for connection pooling and load balancing, including Oracle Traffic Director, Oracle Connection Manager, MariaDB MaxScale, and ProxySQL. These solutions can distribute database requests across servers, provide high availability, and monitor performance.
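
To make the idea of connection reuse concrete, here is a minimal sketch of a fixed-size pool in Python. It is an illustration only, not how any of the products named above work: the `ConnectionPool` class, its `opened` counter, and the use of an in-memory SQLite database as a stand-in for a real database server are all assumptions made for the example.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Hypothetical fixed-size pool: connections are opened once, then reused."""

    def __init__(self, database, size=2):
        self._idle = queue.Queue(maxsize=size)
        self.opened = 0  # how many physical connections were ever created
        for _ in range(size):
            # check_same_thread=False allows a pooled connection to be
            # borrowed by a thread other than the one that created it.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))
            self.opened += 1

    @contextmanager
    def connection(self, timeout=5.0):
        # Blocks while every connection is in use; raises queue.Empty
        # if none is returned within `timeout` seconds.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            # Return the connection to the pool instead of closing it.
            self._idle.put(conn)

    def close(self):
        # Close every idle connection; call once all borrowers are done.
        while not self._idle.empty():
            self._idle.get_nowait().close()


# Ten requests are served by only two physical connections.
pool = ConnectionPool(":memory:", size=2)
results = []
for _ in range(10):
    with pool.connection() as conn:
        results.append(conn.execute("SELECT 1").fetchone()[0])
pool.close()
```

The point of the sketch is the ratio: the cost of opening a connection is paid `size` times at startup, no matter how many requests follow. A production pool or proxy would also handle concerns this sketch omits, such as health checks, reconnection, and routing across several servers.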