![Francesco Casalegno – Hyperparameter Optimization, slide 13: F(x), x = [x1 … xN], [F(x1) … F(xN)]](https://image.slidesharecdn.com/tech-talk-hyperparam-optimization-180216181743/75/Hyperparameter-Optimization-for-Machine-Learning-13-2048.jpg)

The document discusses hyperparameter optimization techniques, focusing on GridSearchCV, RandomizedSearchCV, and Bayesian optimization strategies. It also mentions TPOT as a tool for automated machine learning, and stresses that tuning model parameters efficiently improves performance. Throughout, tuning is framed as optimizing a function F(x) of the hyperparameters x.

Overview of the presentation on Hyperparameter Optimization by Francesco Casalegno.

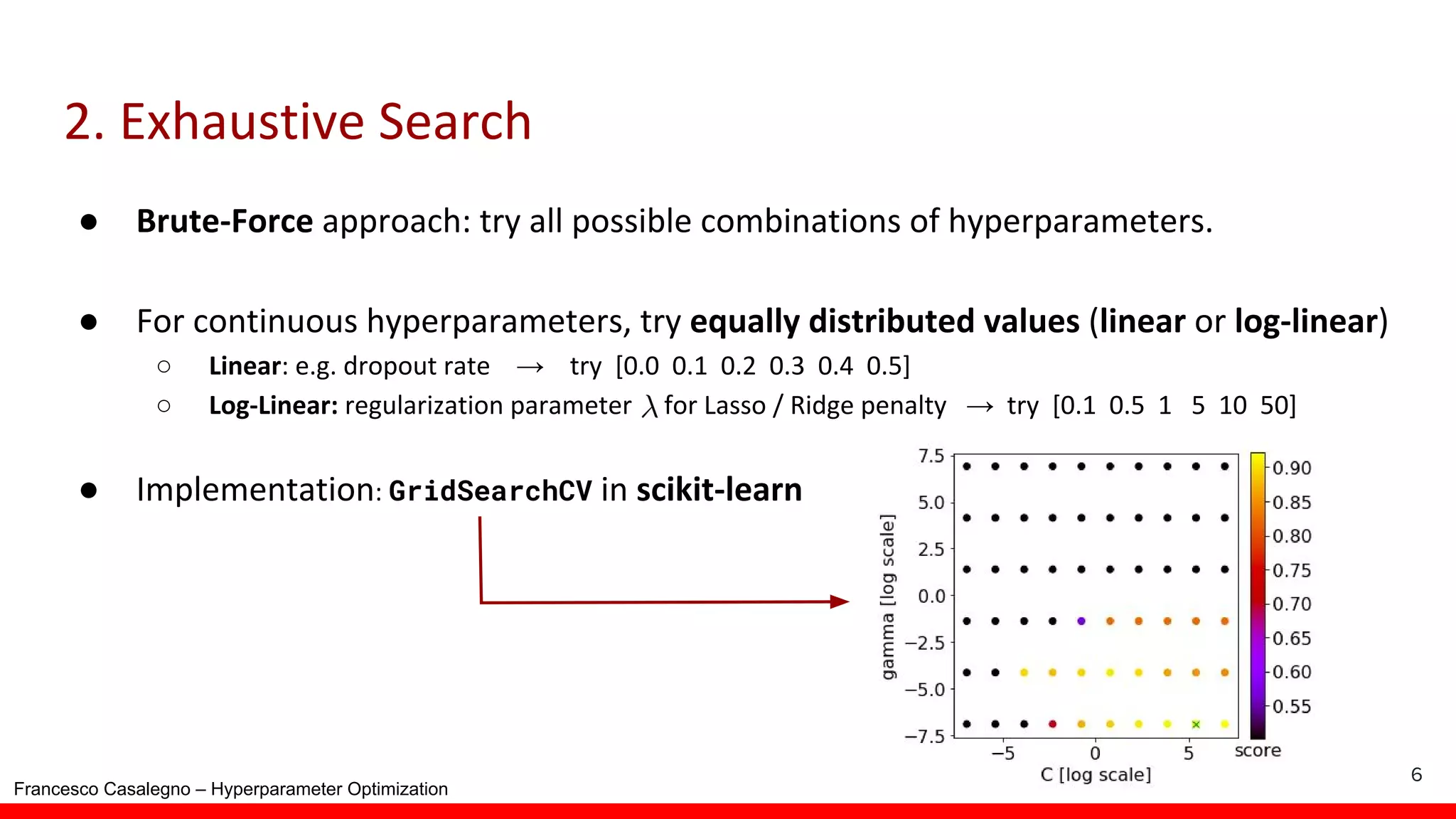

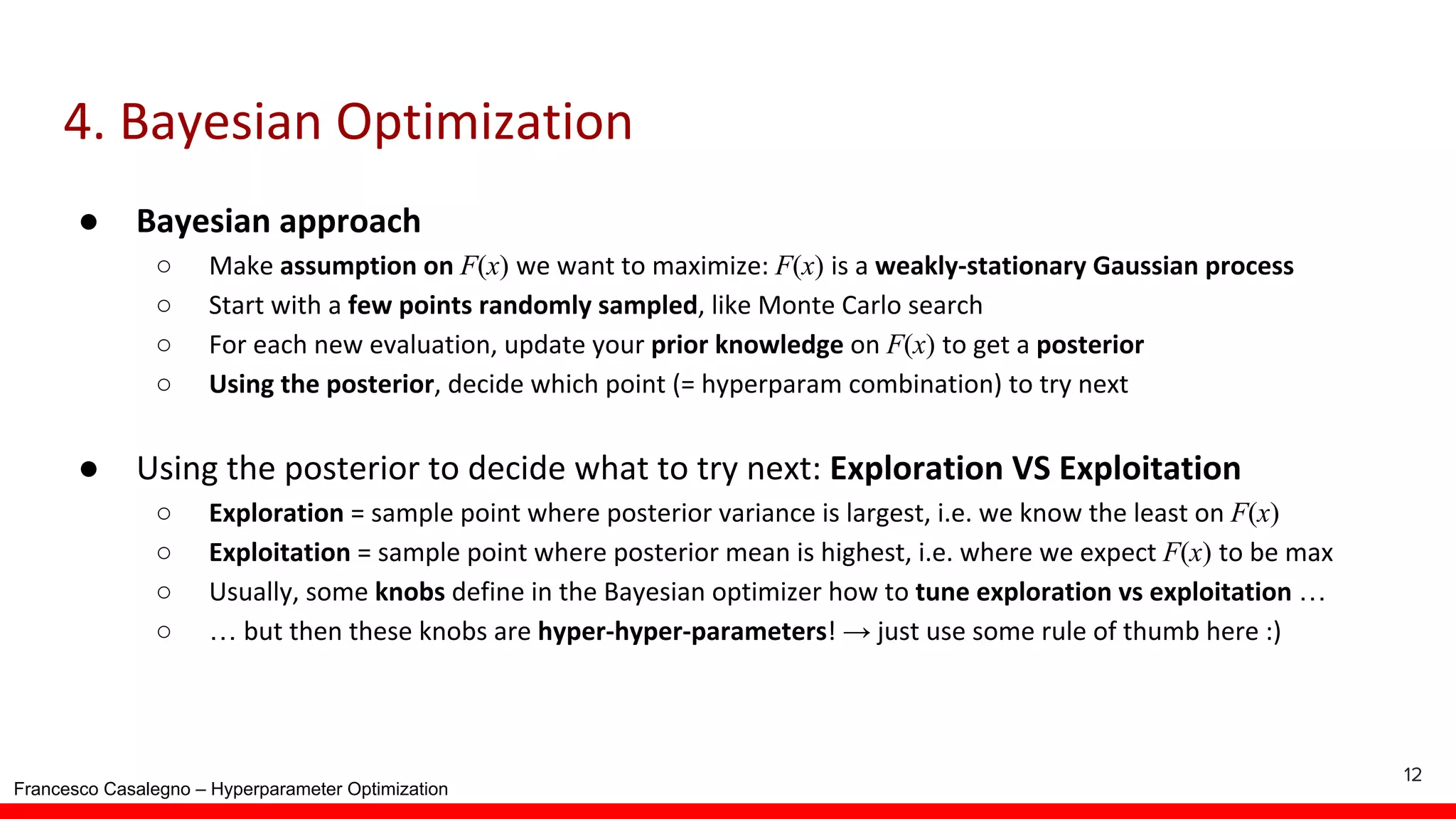

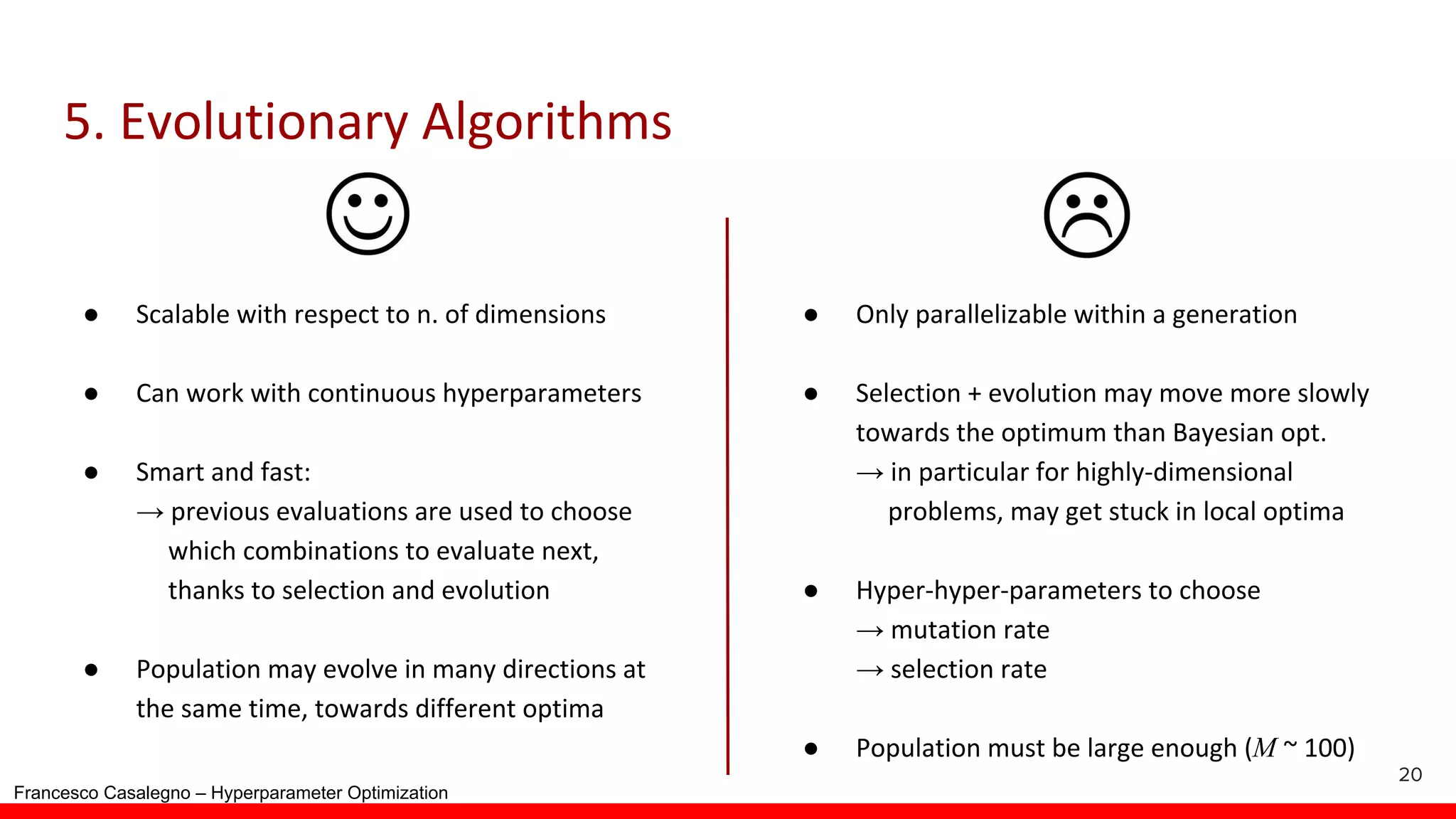

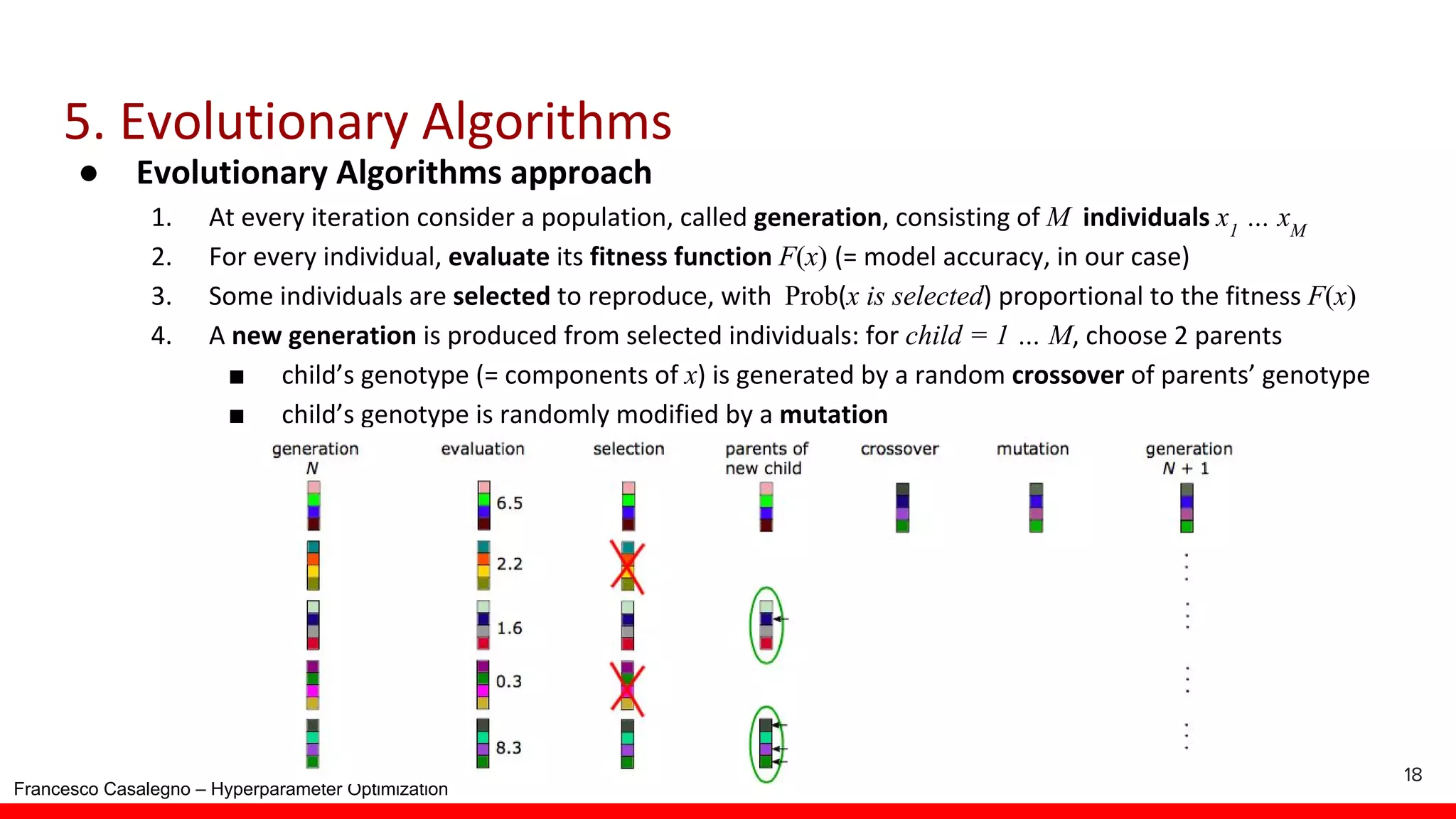

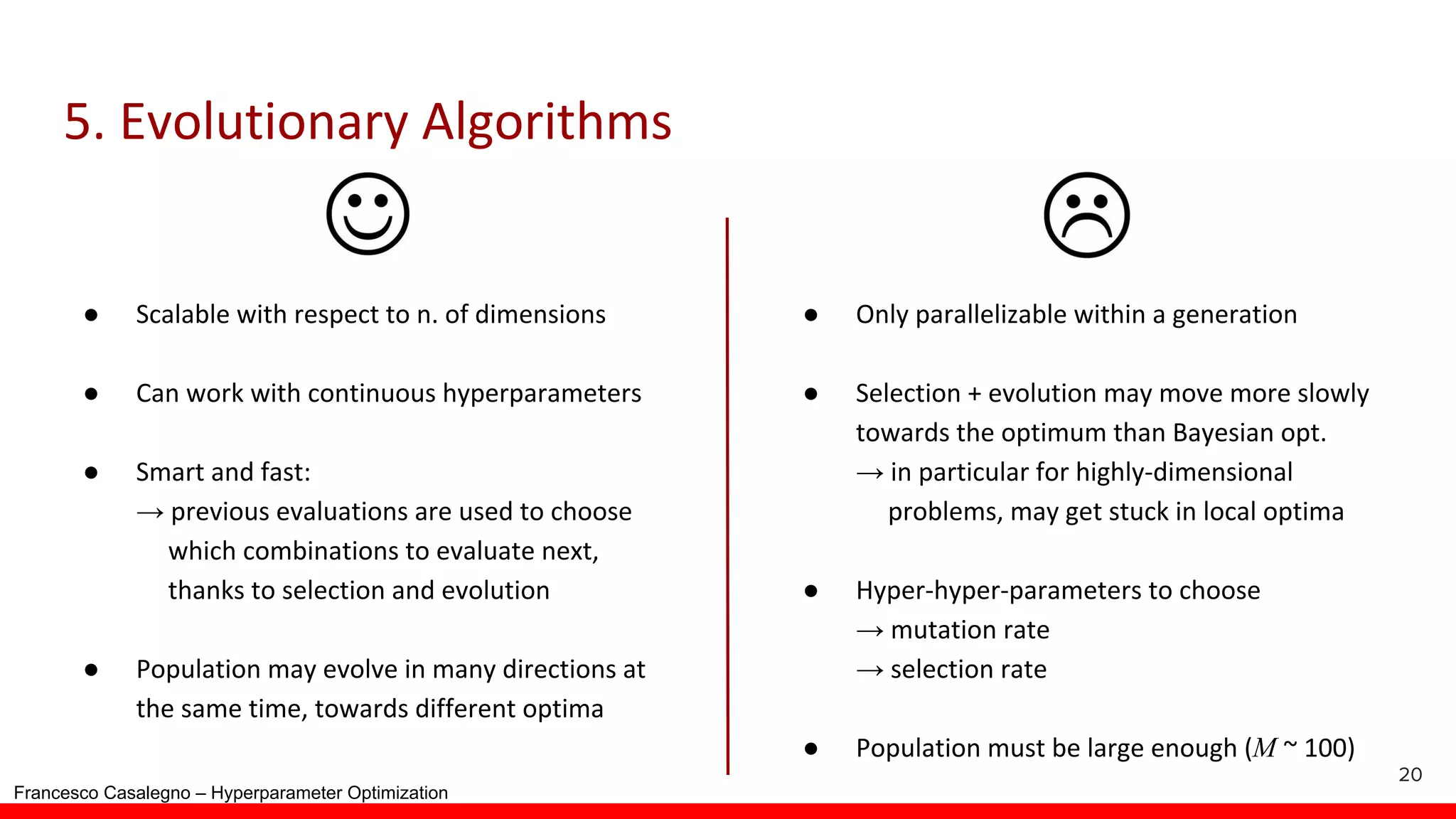

Discussion on various hyperparameter strategies and techniques used in optimization.

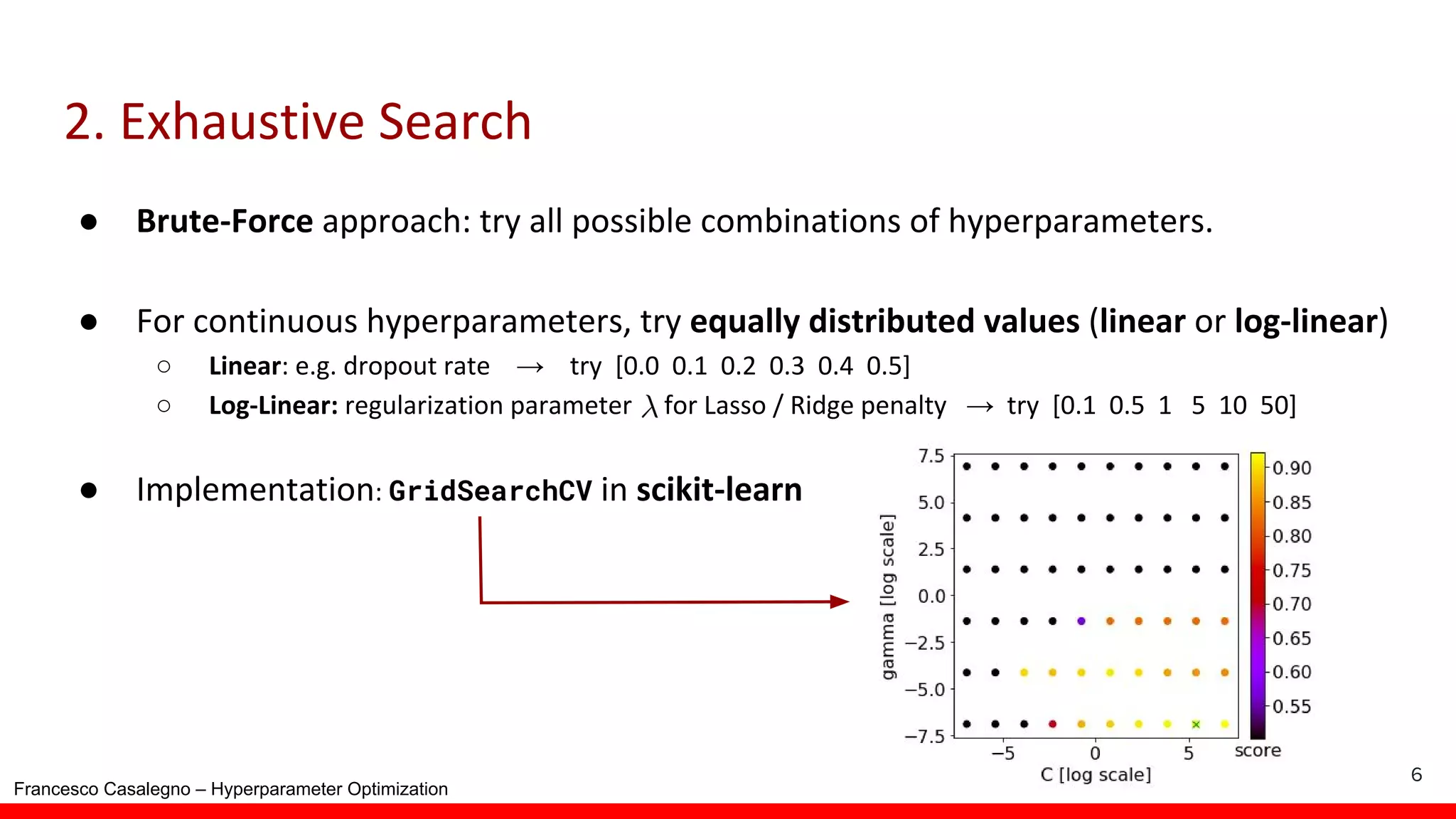

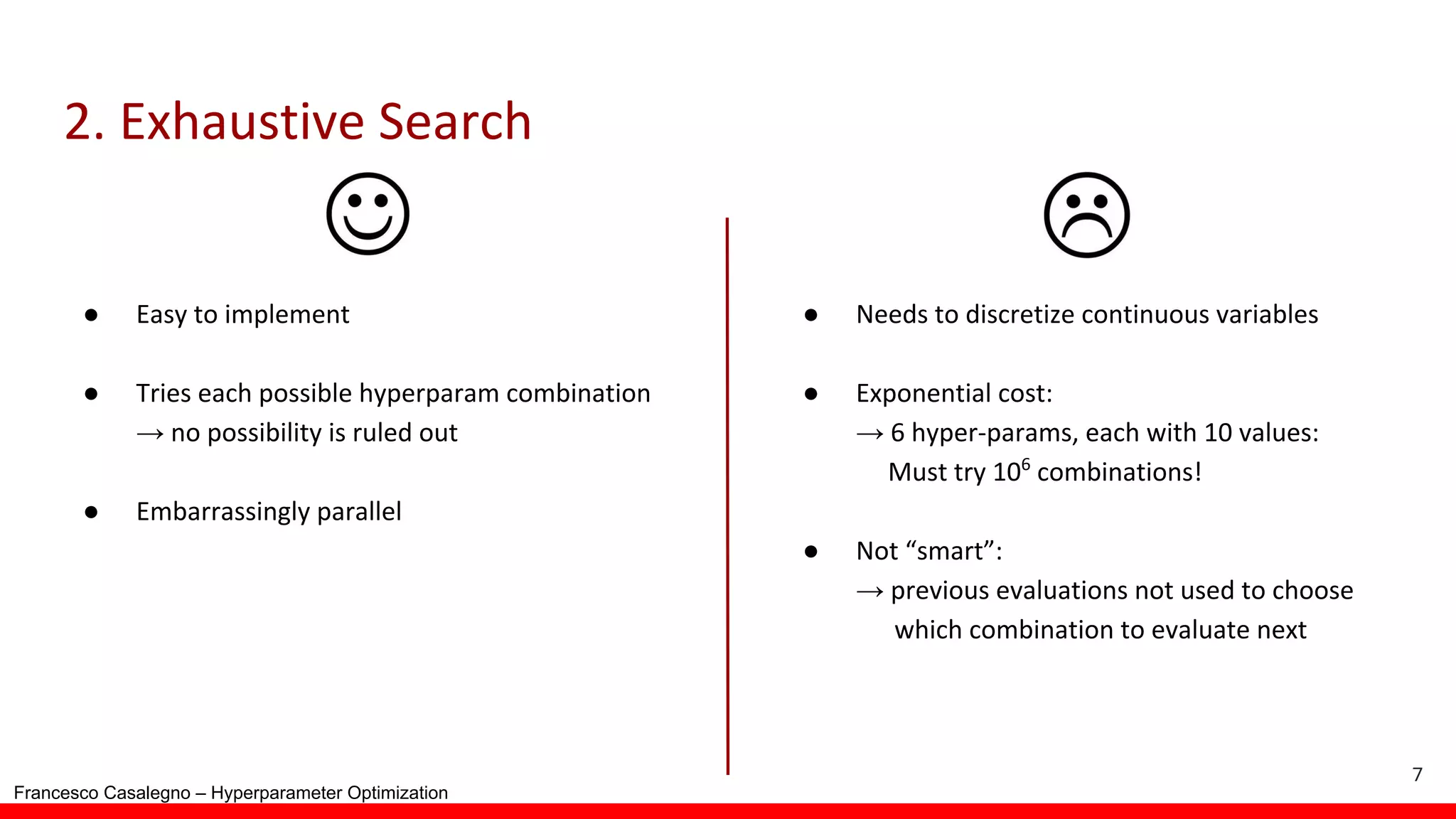

Introduction to GridSearchCV as a method for hyperparameter optimization.
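As a rough illustration of the grid search idea, the sketch below runs scikit-learn's `GridSearchCV` over a small grid. The dataset (iris), the estimator (`SVC`), and the grid values are assumptions chosen for brevity. They are not taken from the slides.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Toy dataset, used here only to keep the example small (assumption).
X, y = load_iris(return_X_y=True)

# Every combination in the grid is evaluated: 3 values of C x 3 of gamma = 9 settings.
param_grid = {
    "C": [0.1, 1, 10],
    "gamma": [0.01, 0.1, 1],
}

# 3-fold cross-validation scores each setting.
grid_search = GridSearchCV(SVC(), param_grid, cv=3)
grid_search.fit(X, y)

print(grid_search.best_params_, grid_search.best_score_)
```

The number of fits grows multiplicatively with each added hyperparameter, which is the main cost of an exhaustive grid.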

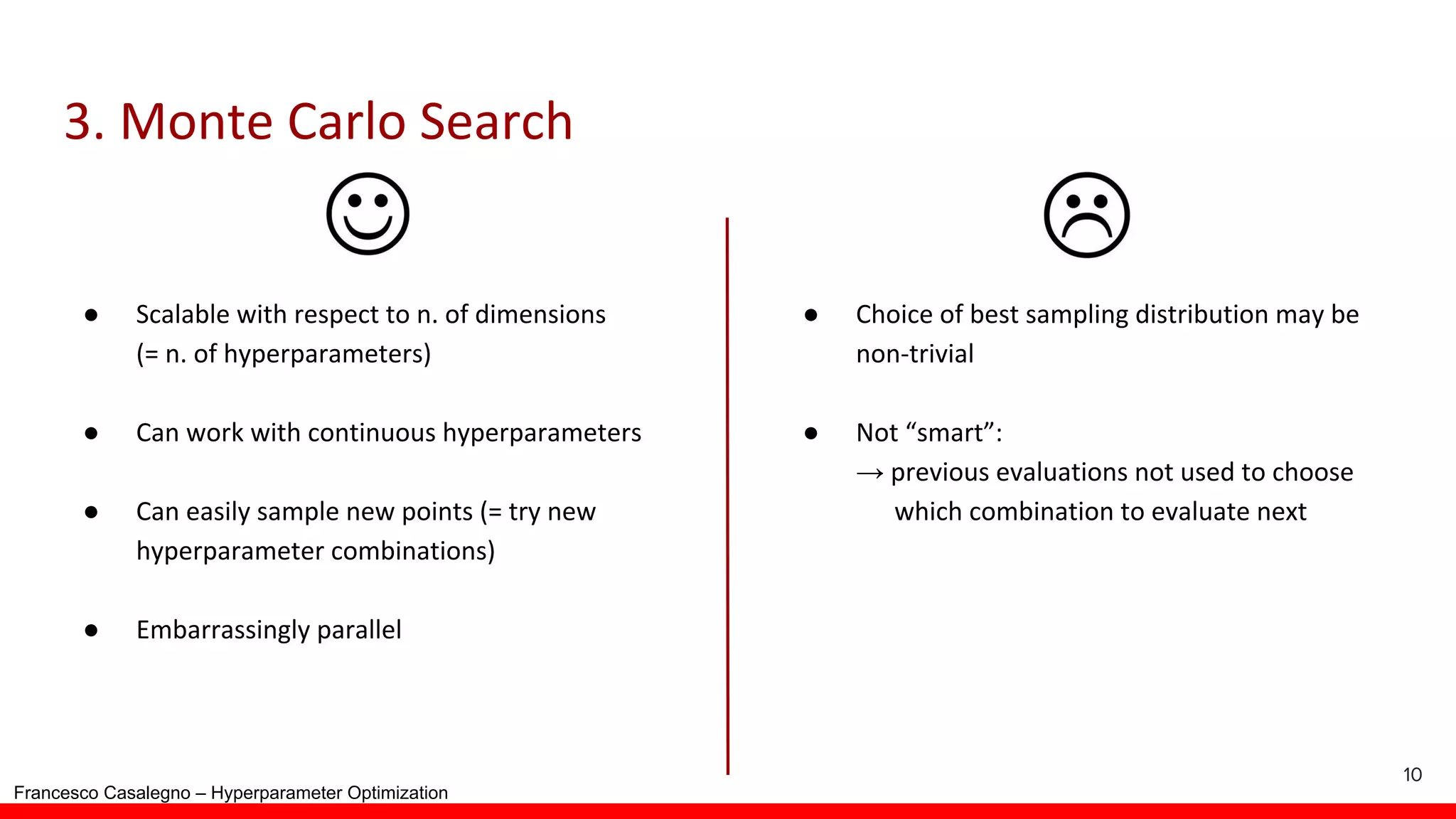

Explains RandomizedSearchCV, which selects hyperparameters by random sampling, and its $O(N^{-1/2})$ rate.
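A minimal sketch of random search with scikit-learn's `RandomizedSearchCV` might look like the following. The dataset, the estimator, the log-uniform ranges, and `n_iter=10` are all illustrative assumptions rather than values from the slides.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_iris
from sklearn.model_selection import RandomizedSearchCV
from sklearn.svm import SVC

# Toy dataset, used here only to keep the example small (assumption).
X, y = load_iris(return_X_y=True)

# Distributions to sample from, instead of a fixed grid of values.
param_distributions = {
    "C": loguniform(1e-2, 1e2),
    "gamma": loguniform(1e-3, 1e1),
}

# n_iter fixes the budget: exactly 10 random settings are evaluated,
# regardless of how many hyperparameters are being tuned.
random_search = RandomizedSearchCV(
    SVC(), param_distributions, n_iter=10, cv=3, random_state=0
)
random_search.fit(X, y)

print(random_search.best_params_, random_search.best_score_)
```

Unlike a grid, the budget here is set directly through `n_iter`, so adding another hyperparameter does not multiply the number of fits.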

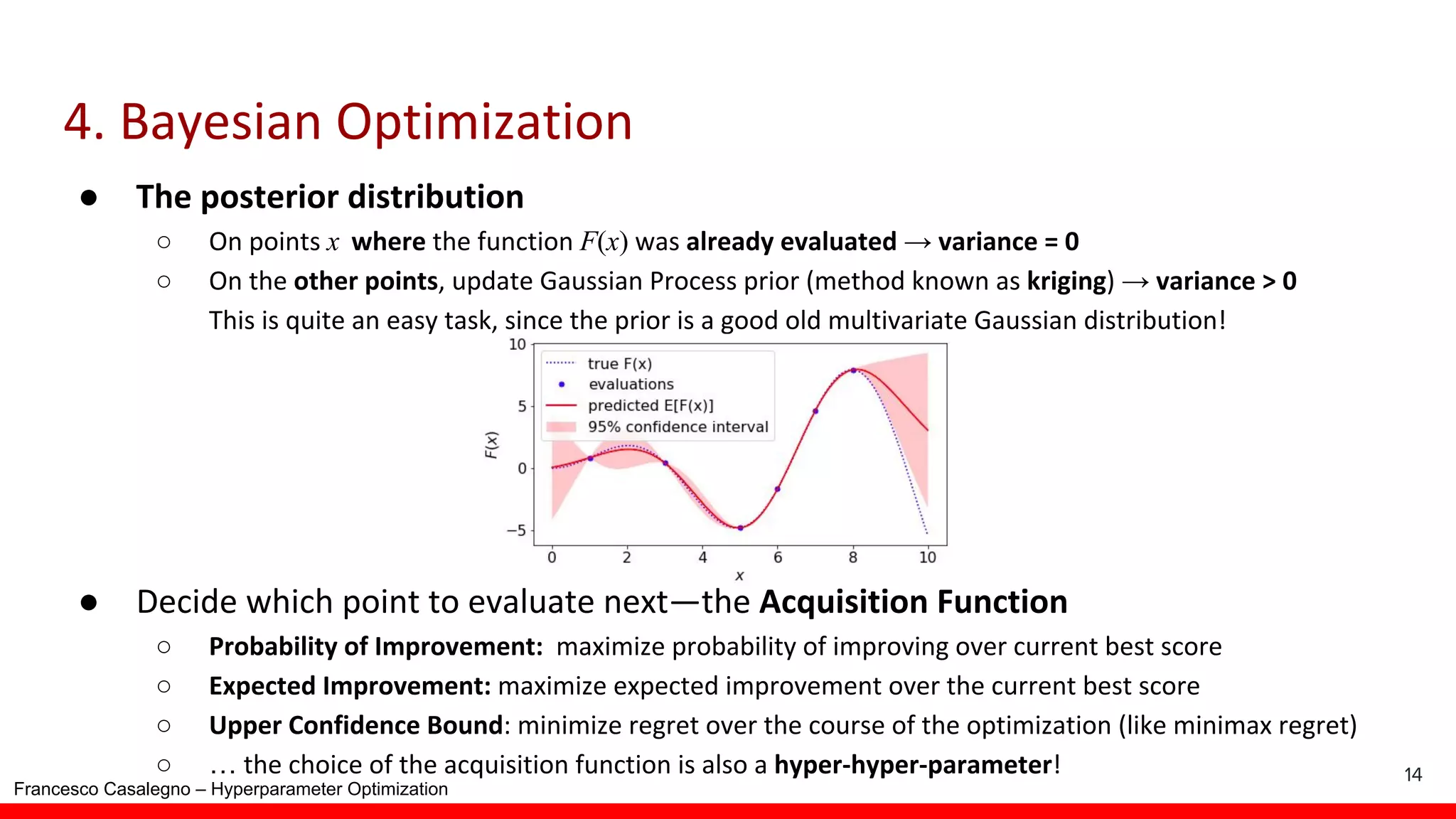

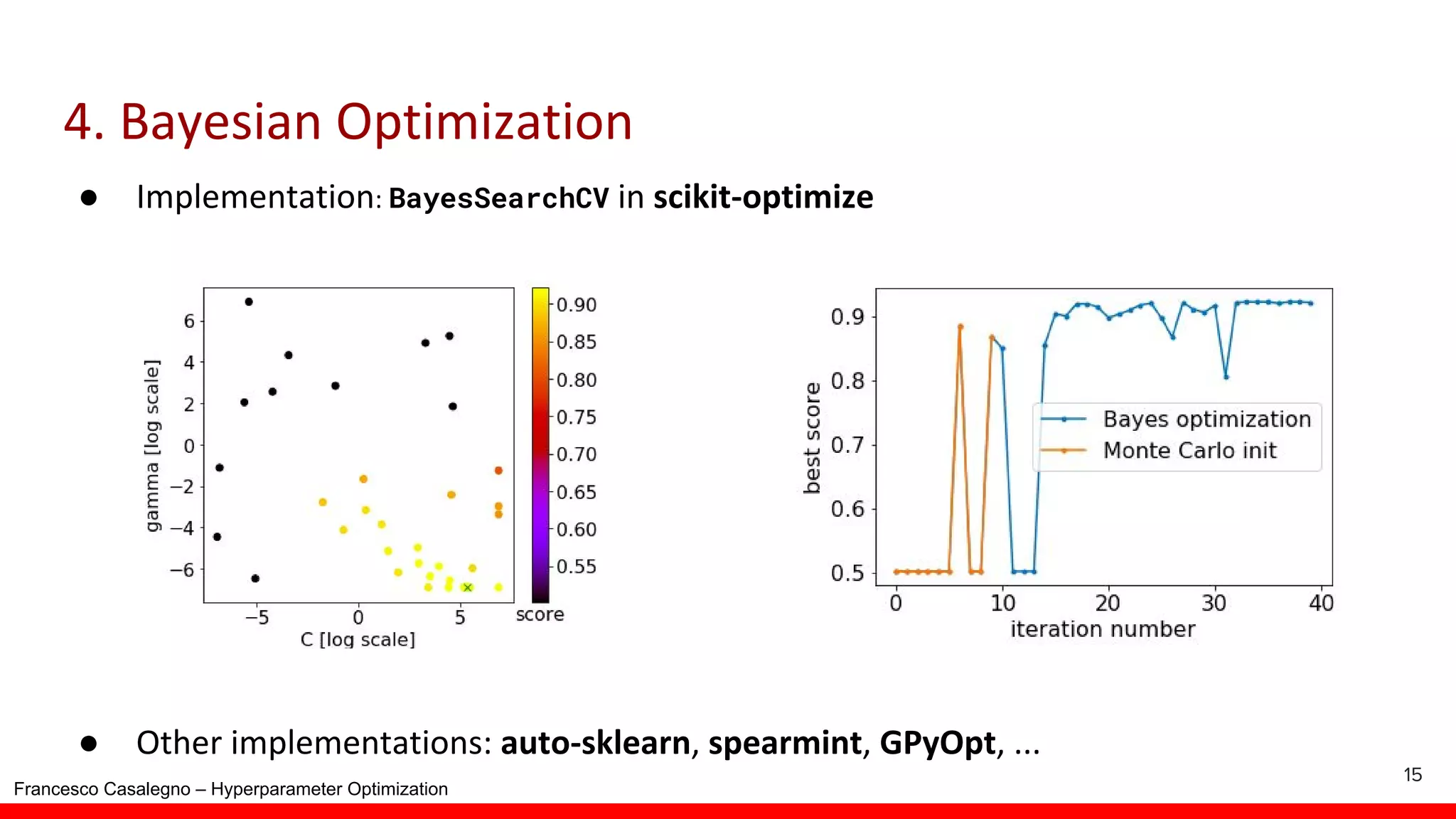

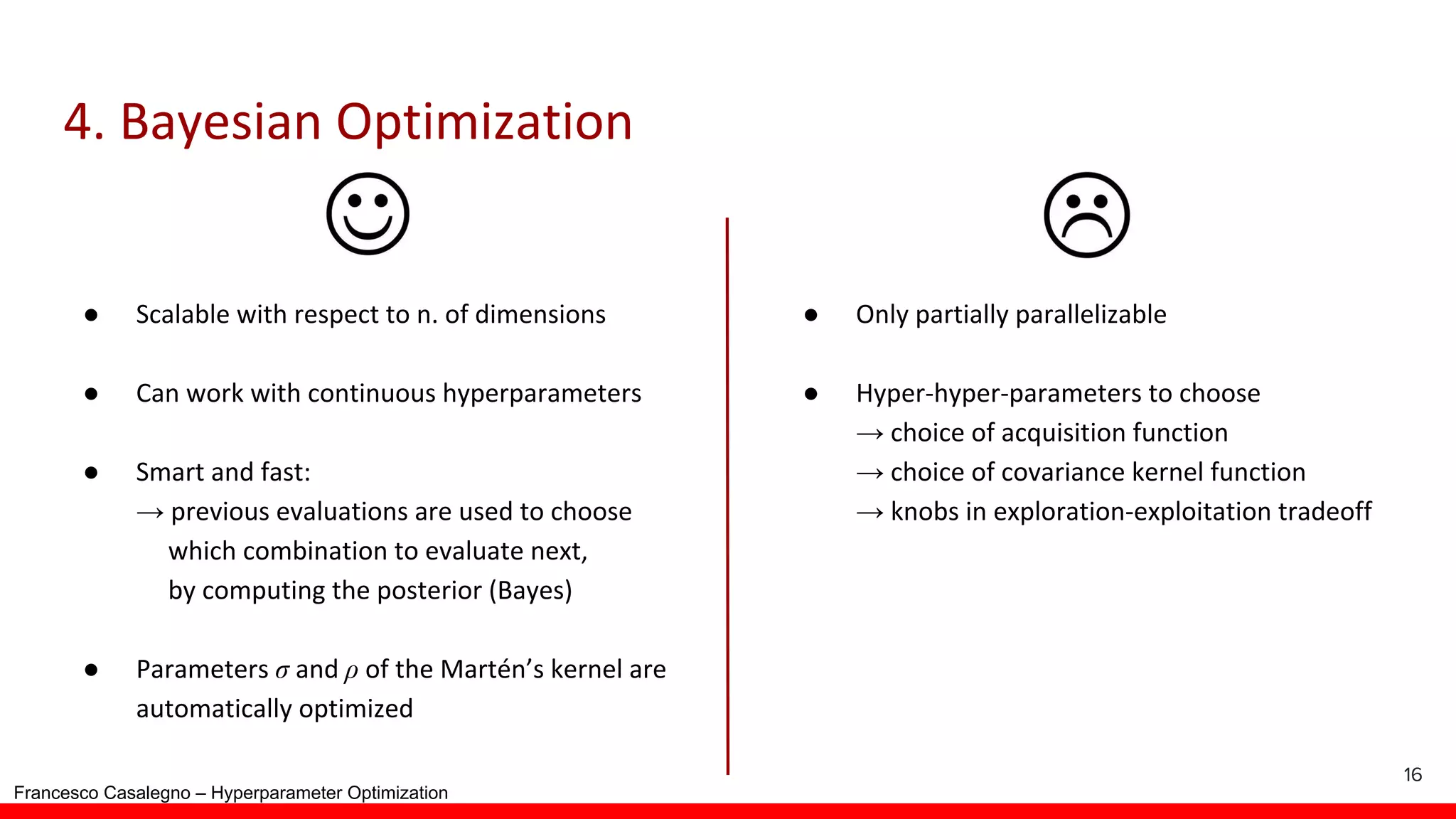

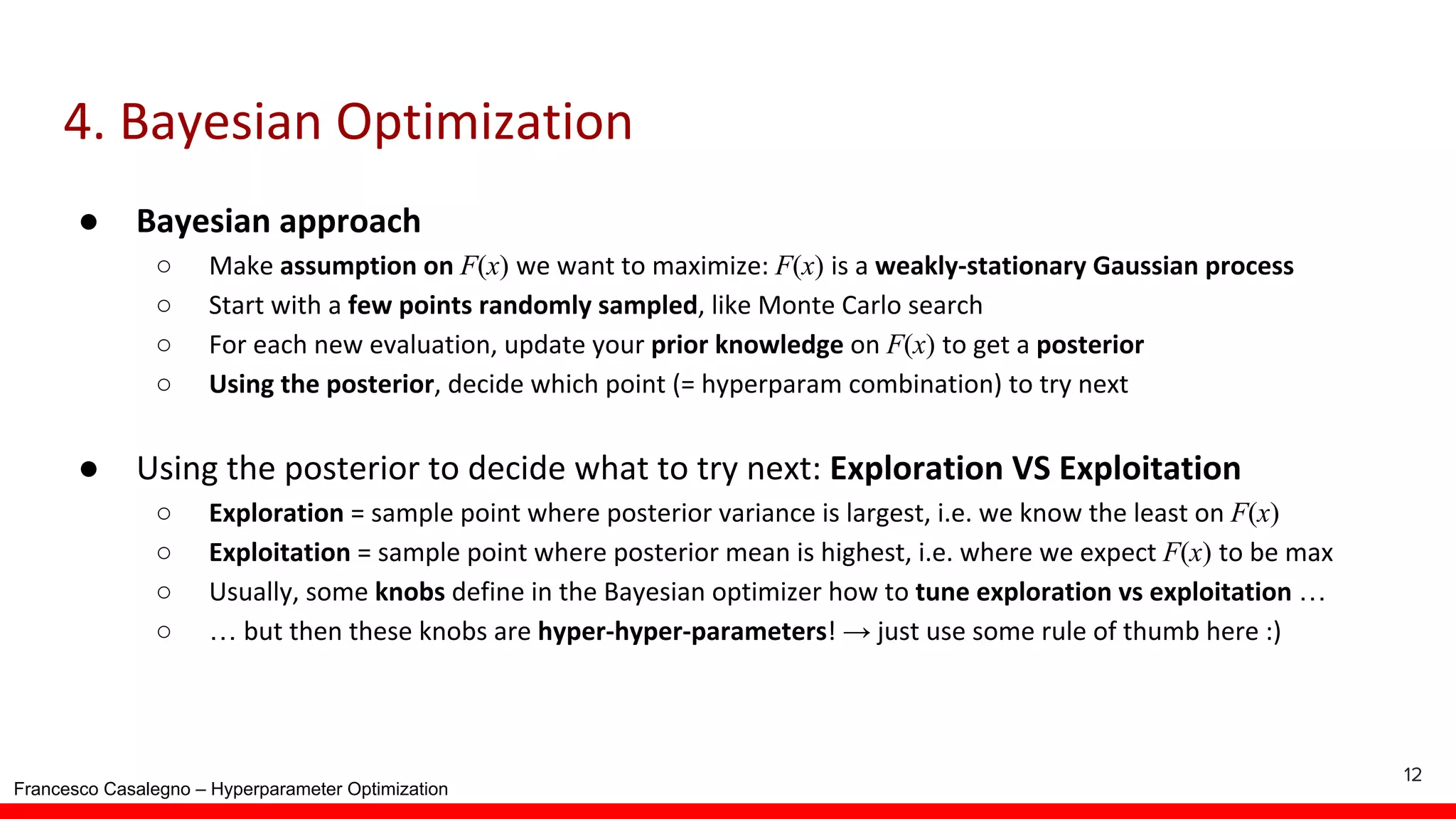

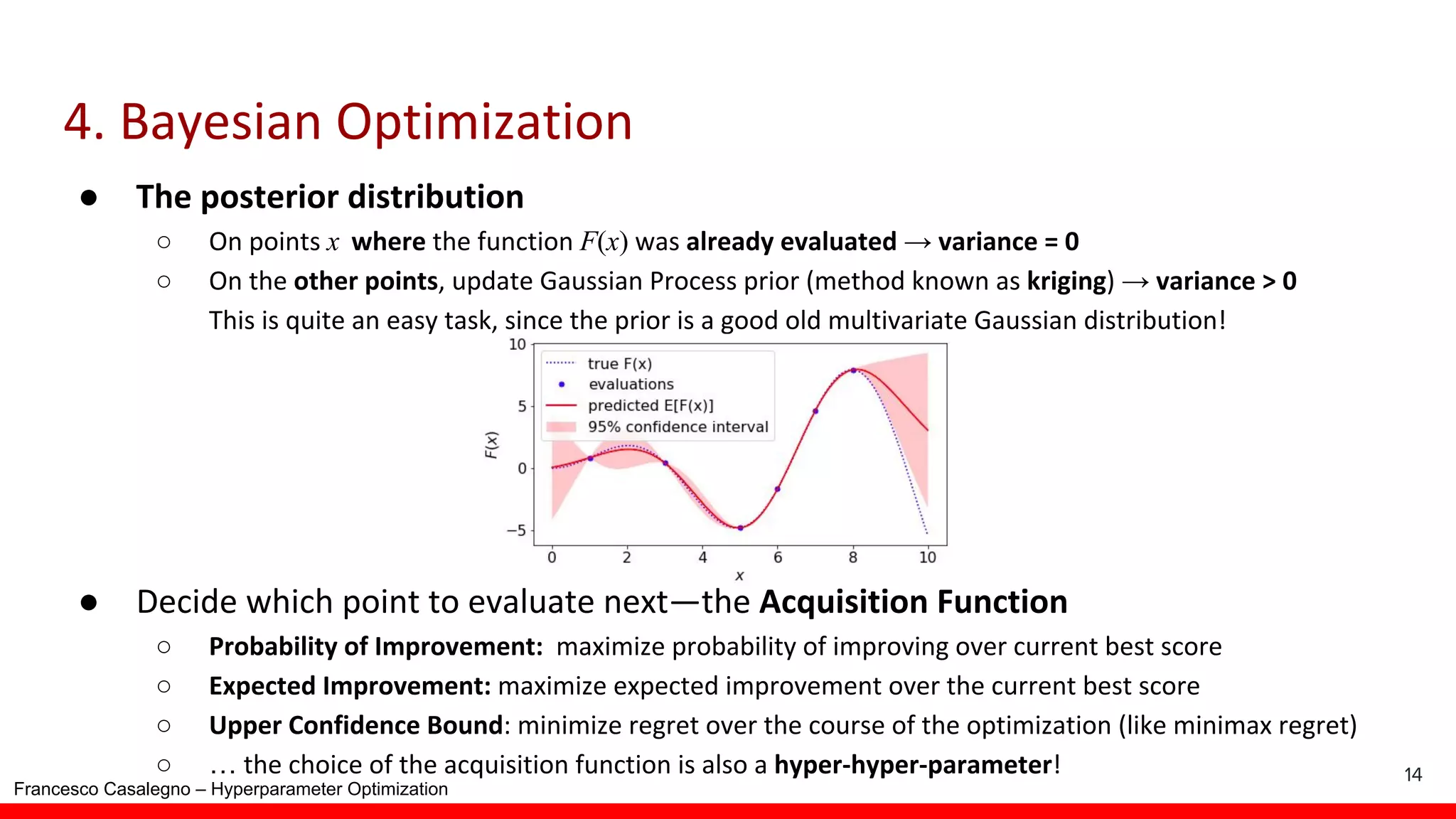

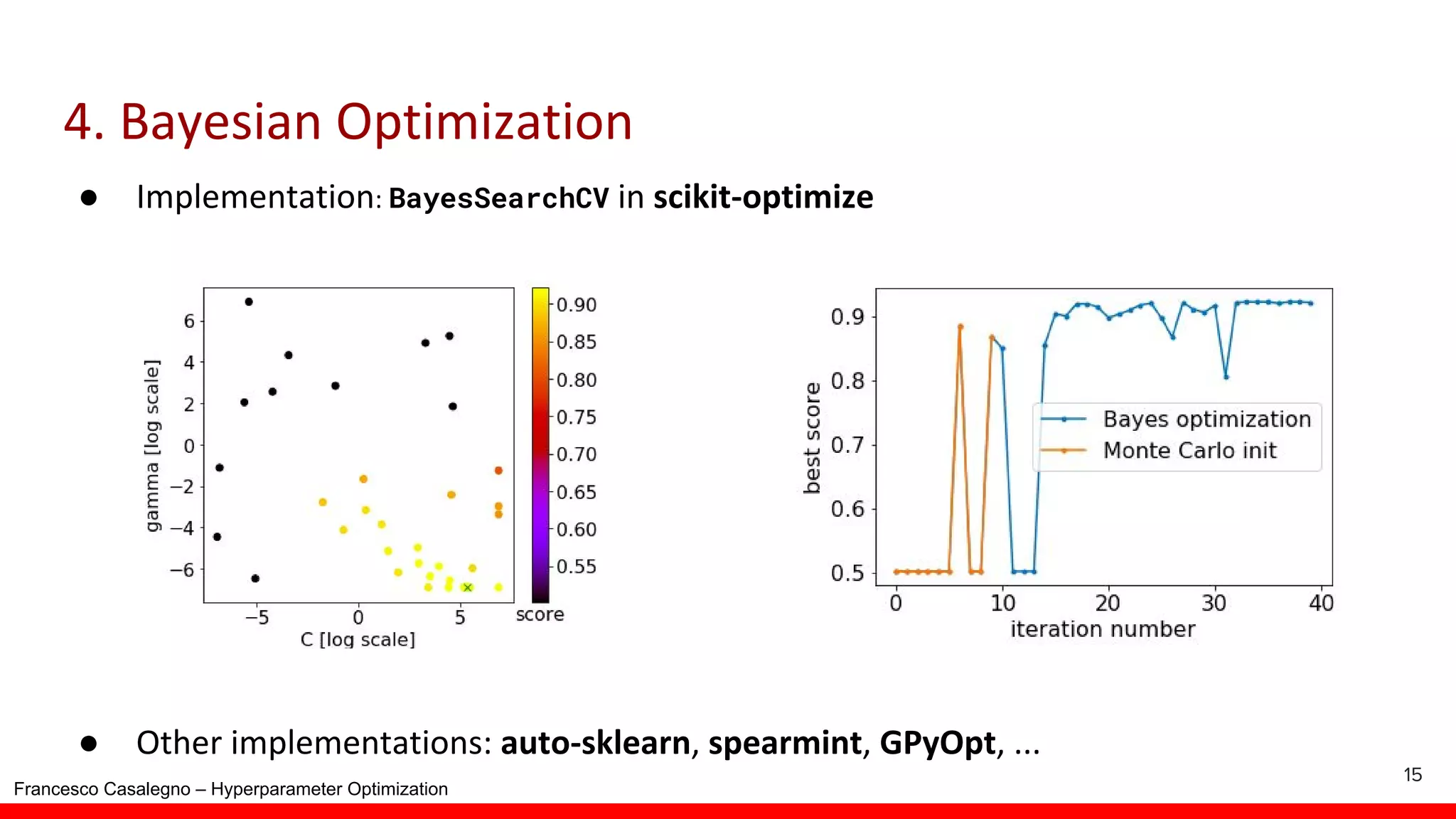

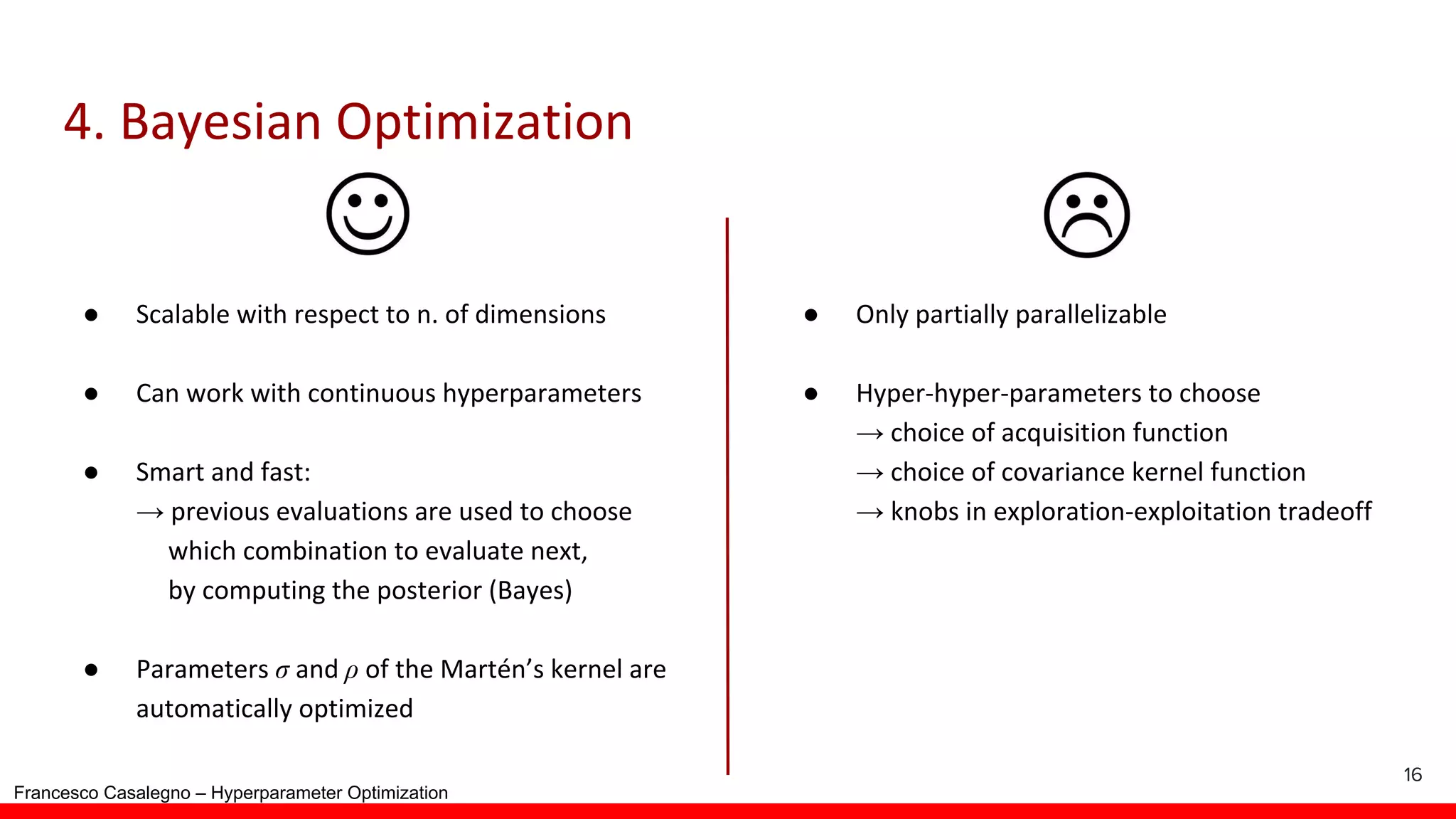

Overview of BayesSearchCV, discussing its application in hyperparameter optimization.

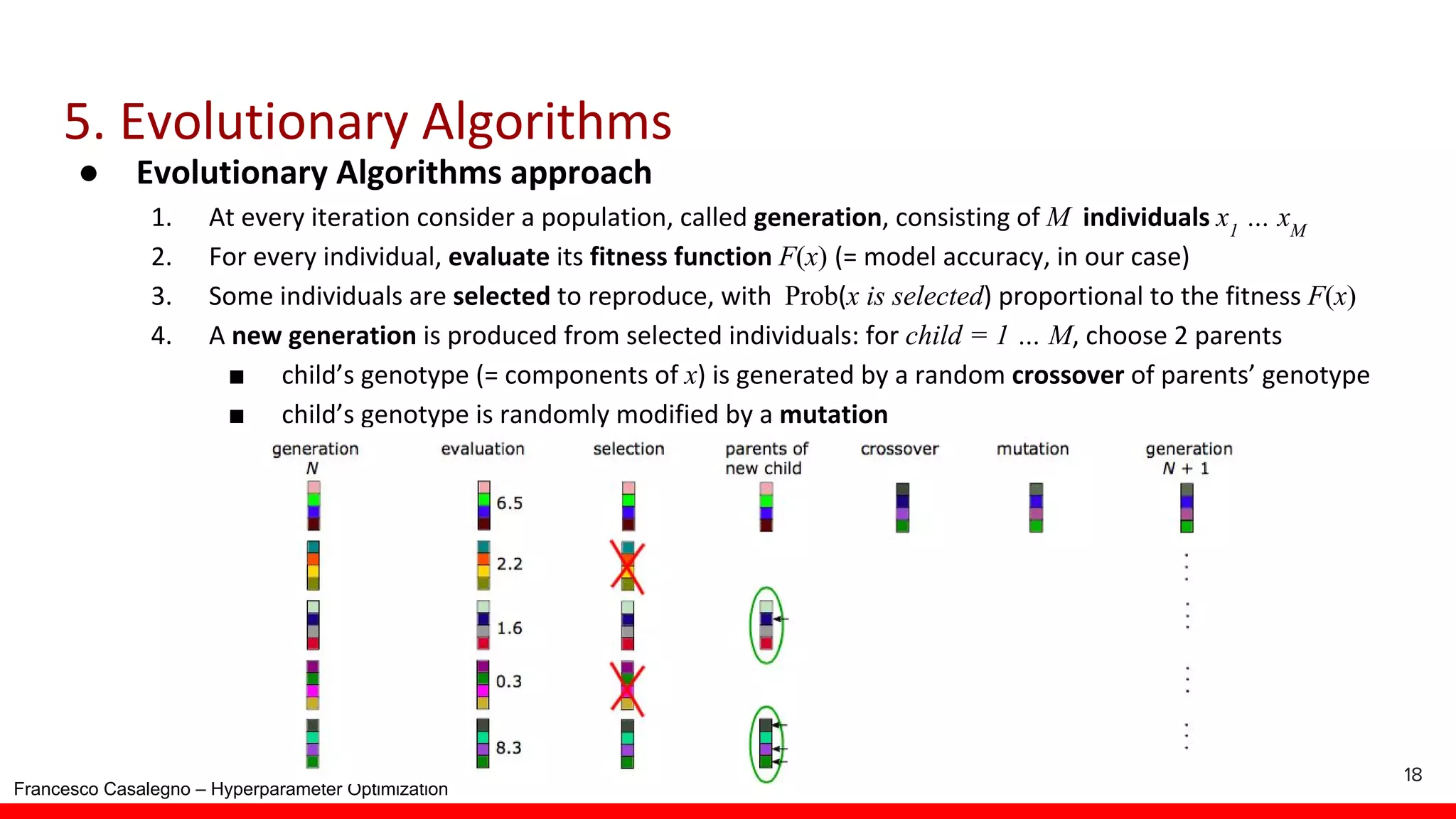

Discussion on probability-based selection methods for hyperparameter optimization using F(x).
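One common probability-based selection rule in Bayesian optimization is the probability of improvement. It is shown here only as a hedged sketch, since the summary does not say which rule the slides use. It assumes a surrogate model that predicts F(x) at each candidate as a normal distribution with mean `mu` and standard deviation `sigma`, and that F is being minimized. The candidate names and numbers are made up for illustration.

```python
import math


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def probability_of_improvement(mu, sigma, best_so_far, xi=0.0):
    """P(F(x) < best_so_far - xi), assuming F(x) ~ N(mu, sigma^2) (minimization)."""
    if sigma <= 0.0:
        # No predictive uncertainty: improvement is either certain or impossible.
        return 1.0 if mu < best_so_far - xi else 0.0
    return normal_cdf((best_so_far - xi - mu) / sigma)


# Hypothetical surrogate predictions (mean, std) of F(x) at three candidates.
candidates = {
    "x_a": (0.30, 0.02),
    "x_b": (0.28, 0.10),
    "x_c": (0.20, 0.05),
}
best_so_far = 0.25  # lowest F(x) observed so far (made-up value)

scores = {
    name: probability_of_improvement(mu, sigma, best_so_far)
    for name, (mu, sigma) in candidates.items()
}

# Evaluate next the candidate most likely to beat the current best.
next_point = max(scores, key=scores.get)
print(next_point, scores)
```

The optional margin `xi` is a hypothetical knob: raising it demands a larger improvement, which tends to favor candidates with higher uncertainty.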

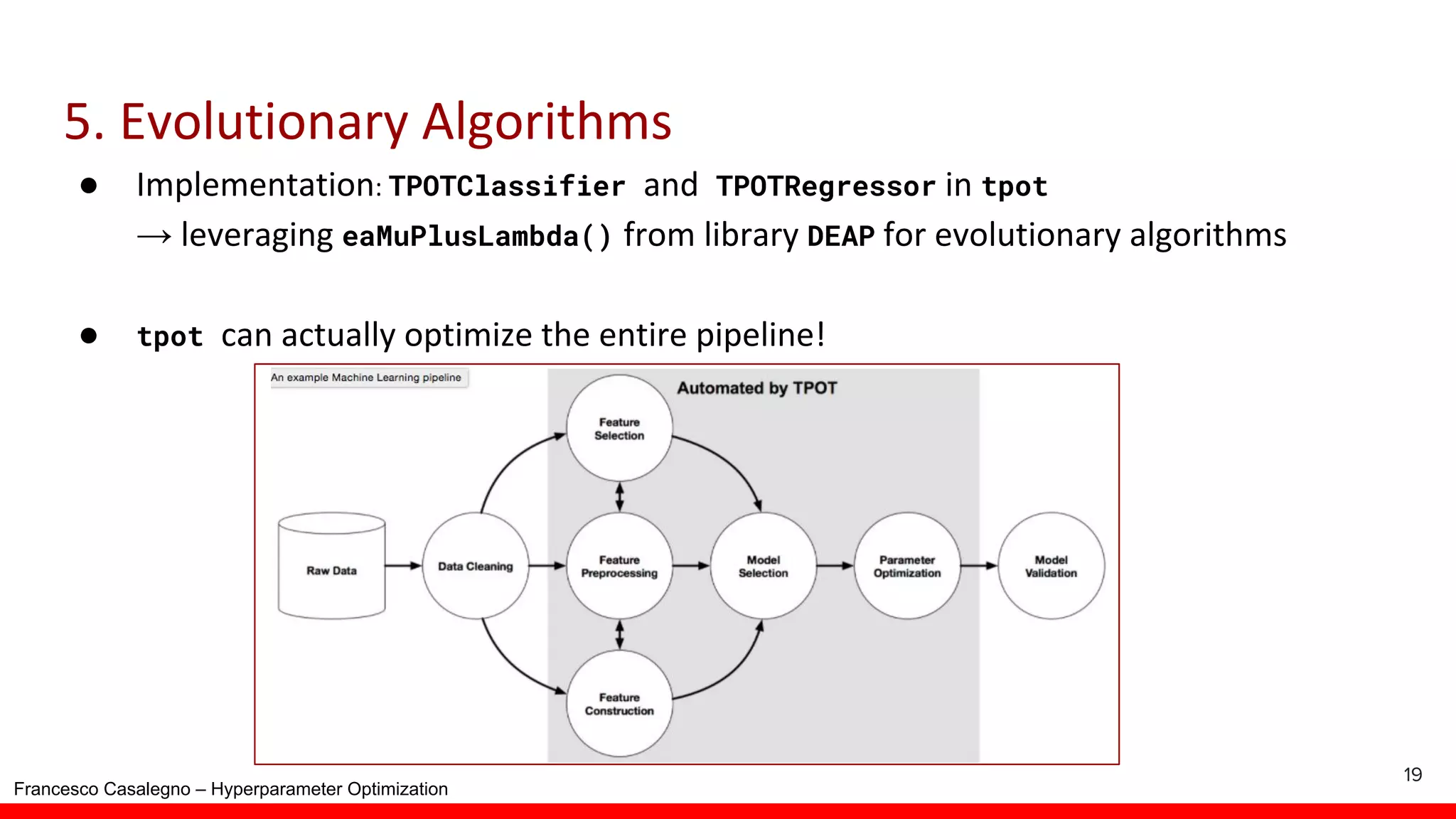

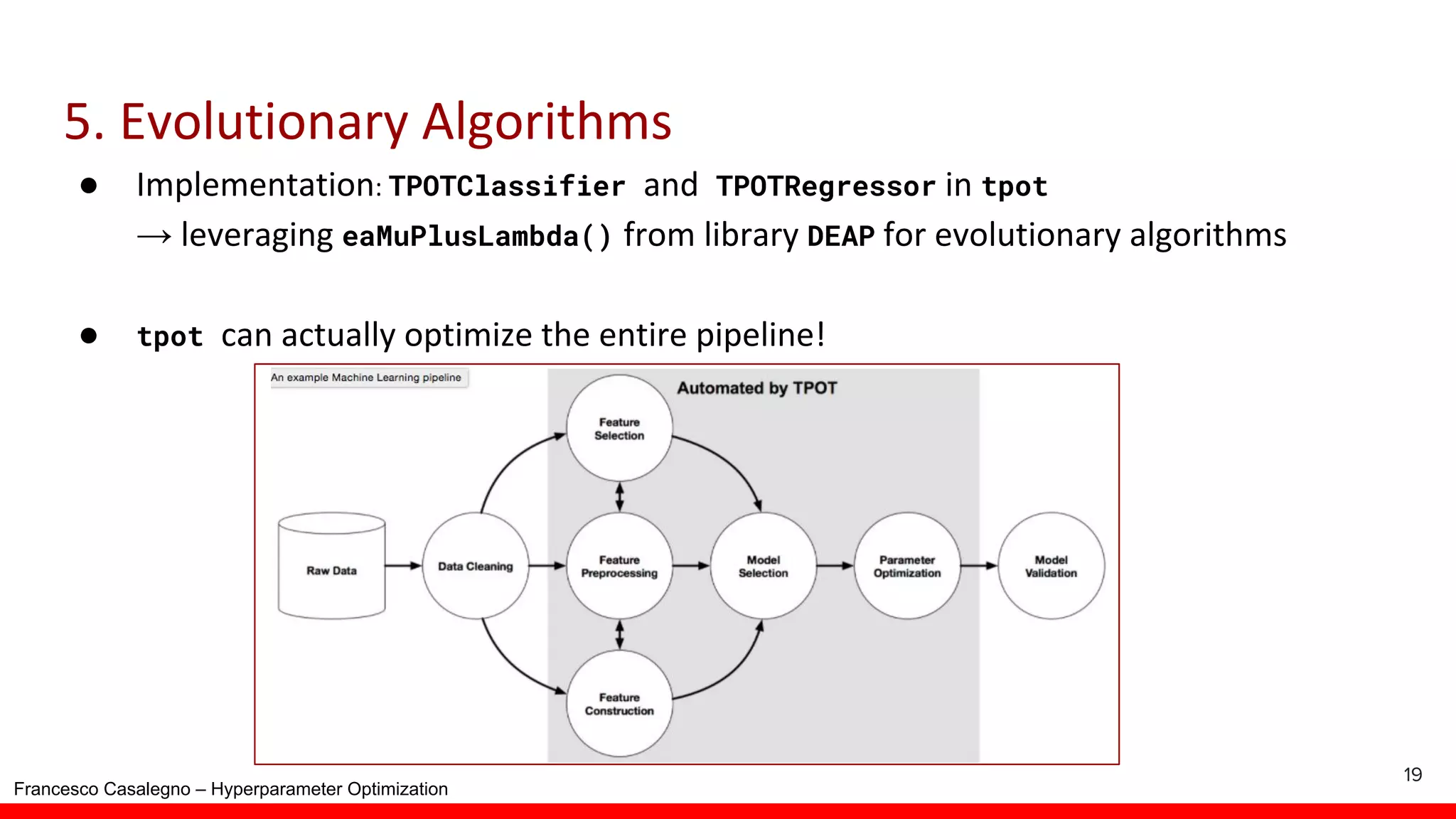

Introduction to TPOTClassifier and TPOTRegressor for automating hyperparameter optimization.

Summary of additional methods and concluding remarks on hyperparameter optimization techniques.