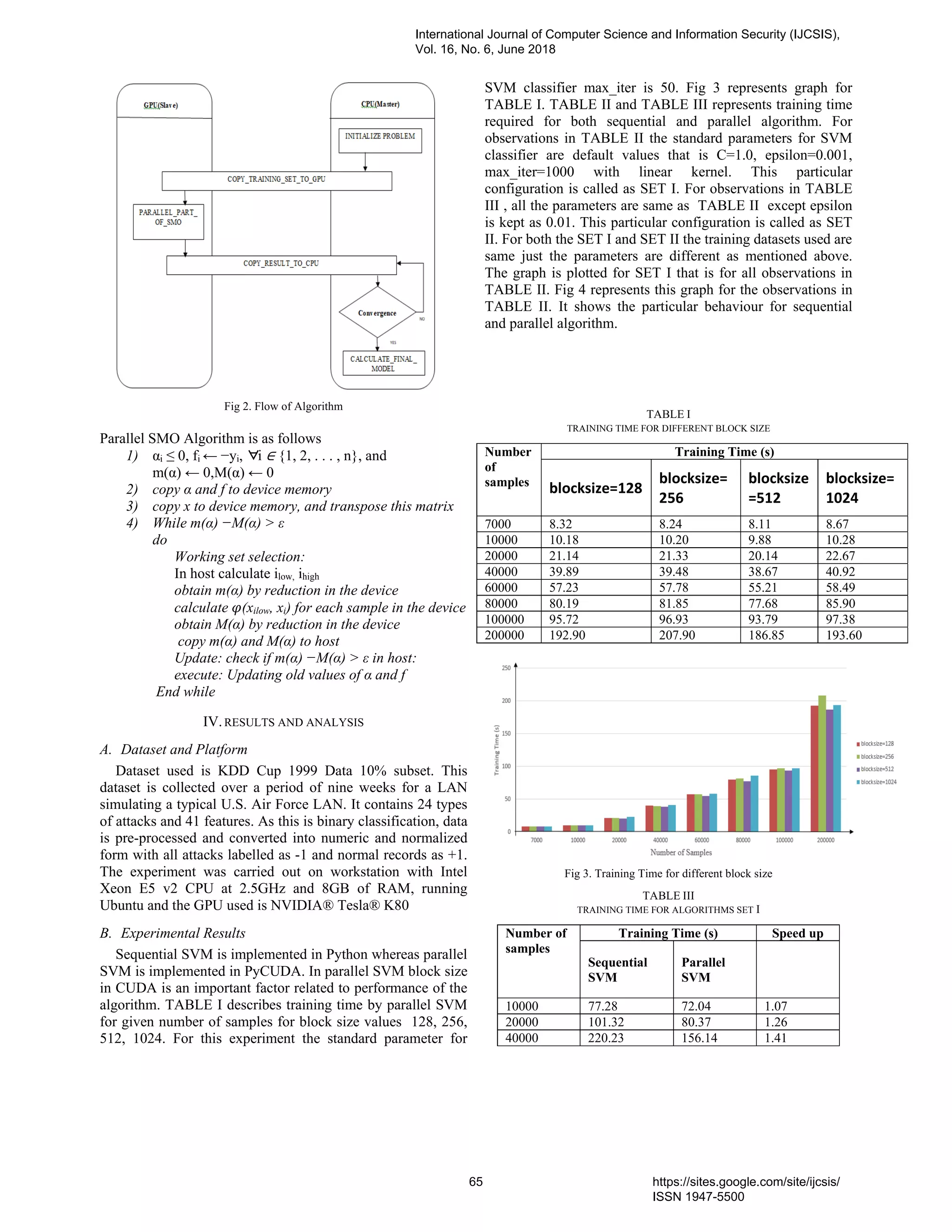

This document discusses the use of GPU parallel computing to enhance the efficiency of support vector machine (SVM) algorithms applied in intrusion detection systems. It describes the challenges of high computational costs in SVM training and proposes a parallel implementation using GPU to significantly reduce training time while maintaining classification accuracy. Experimental results demonstrate improved performance and speedup in the detection of network anomalies with large datasets.

![GPU Parallel Computing of Support Vector Machines as applied to Intrusion Detection System Nabha Kshirsagar1 , N. Z. Tarapore2 1 Research Scholar, Vishwakarma Institute of Technology, Pune 2 Assistant Professor, Vishwakarma Institute of Technology, Pune Abstract—The network anomaly detection technology based on support vector machine (SVM) can efficiently detect unknown attacks or variants of known attacks. However, it cannot be used for detection of large-scale intrusion scenarios due to the demand of computational time. The graphics processing unit (GPU) has the characteristics of multi-threads and powerful parallel processing capability. Hence Parallel computing framework is used to accelerate the SVM-based classification. Keywords— support vector machine, parallel computing, graphics processing unit, intrusion detection system. I. INTRODUCTION An intrusion detection system (IDS) is a device or software application that monitors a network or systems for malicious activity or policy violations. Intrusion Detection Systems (IDS) have become a standard component in network security infrastructures. An IDS gathers information from various areas within a computer or a network. A survey of the literature available indicates that using supervised machine learning approach this gathered information can be analysed to identify possible security breaches, which include both intrusions (attacks from outside the organization) and misuse (attacks from within the organization). In machine learning, IDS can be viewed as classic classification problem, where given machine learning model predicts if given state is attack or not. Supervised learning uses the gathered information as training data and produces a model which is a function that if given input to then produces required output. A Classification algorithm is a procedure for selecting a hypothesis from a set of alternatives that best fits a set of observations, in this case the alternatives will be attack or not. A Support Vector Machine (SVM) is a discriminative classifier formally defined by a separating hyper plane. In other words, given labelled training data (supervised learning), the algorithm outputs an optimal hyper plane which categorizes new examples. SVM is very powerful classifier for handling large datasets in high dimensional space with a strong mathematical property that is quadratic optimization problem. However, it has high computational cost. Thus, this results into more training time for large datasets. To reduce this training time one of the approaches can be parallel computing with graphics processing unit (GPU). Nowadays GPU are popular for machine learning. A new area has emerged due to highly parallel nature of GPU, known as general purpose computing with GPU (GPGPU). With GPU, parallel computing can be achieved with low cost and low power consumption. SVM problem is known as quadratic optimization problem. Sequential minimal optimization (SMO) is an iterative algorithm for solving this optimization problem. This paper describes a parallel sequential minimal optimization (SMO) algorithm which solves the SVM problem using GPU for IDS. II. RELATED WORK The network anomaly detection technology based on SVM can efficiently detect unknown attacks or variants of known attacks, however, it cannot be used for detection of large-scale intrusion scenarios due to the demand of computational time. The graphics processing unit (GPU) has the characteristics of multi-threads and powerful parallel processing capability. Based on the system structure and parallel computation framework of GPU, a parallel algorithm of SVM, named GSVM, is proposed in this paper. Extensive experiments were carried out on KDD99 and other large-scale datasets, the results showed that GSVM significantly improves the efficiency of intrusion detection, while retaining detection performance [2]. SVM is considered as one of the most powerful classifiers for hyperspectral remote sensing images. However, it has high computational cost. In this paper, a novel two-level parallel computing framework to accelerate the SVM-based classification by utilizing CUDA and OpenMP, is proposed. For a binary SVM classifier, the kernel function is optimized on GPU, and then a second-order working set selection (WSS) procedure is employed and optimized especially for GPU to reduce the cost of communication between GPU and host. In addition to the parallel binary SVM classifier on GPU as data processing level parallelization, a multiclass SVM is addressed by a “one-against-one” approach in OpenMP, and several binary SVM classifiers are run simultaneously to conduct task-level parallelization [1]. SMO is one popular algorithm for training SVM, but it still requires a large amount of computation time for solving large International Journal of Computer Science and Information Security (IJCSIS), Vol. 16, No. 6, June 2018 63 https://sites.google.com/site/ijcsis/ ISSN 1947-5500](https://image.slidesharecdn.com/09paper31051827ijcsiscamerareadypp-180710101714/75/GPU-Parallel-Computing-of-Support-Vector-Machines-as-applied-to-Intrusion-Detection-System-1-2048.jpg)

![size problems. This paper proposes one parallel implementation of SMO for training SVM. The parallel SMO is developed using message passing interface (MPI). Specifically, the parallel SMO first partitions the entire training data set into smaller subsets and then simultaneously runs multiple CPU processors to deal with each of the partitioned data sets. Experiments based on this approach show that there is great speed up on different datasets when many processors are used. [3] Training a support vector machine requires the solution of a very large quadratic programming (QP) optimization problem. SMO breaks this large QP problem into a series of smallest possible QP problems. These small QP problems are solved analytically, which avoids using a time-consuming numerical QP optimization as an inner loop. The amount of memory required for SMO is linear in the training set size, which allows SMO to handle very large training sets. Since matrix computation is avoided, SMO scales somewhere between linear and quadratic in the training set size for various test problems, while the standard chunking SVM algorithm scales somewhere between linear and cubic in the training set size. SMO’s computation time is dominated by SVM evaluation, hence SMO is fastest for linear SVMs and sparse data sets. On real world sparse data sets, SMO can be more than 1000 times faster than the chunking algorithm [8]. The scaling of serial algorithms cannot rely on the improvement of CPUs anymore. The performance of classical Support Vector Machine (SVM) implementation–ns has reached its limit and the arrival of the multi core era requires these algorithms to adapt to a new parallel scenario. GPUs have arisen as high-performance platforms to implement data parallel algorithms. In this paper, it is described how a native implementation of a multiclass classifier based on SVMs can map its inherent degrees of parallelism to the GPU programming model and efficiently use its computational throughput. Empirical results show that the training and classification time of the algorithm can be reduced an order of magnitude compared to a classical multiclass solver, LIBSVM, while guaranteeing the same accuracy [5]. III. METHODOLOGY The binary SVM training step is to solve a typical convex optimization problem, and is usually addressed by the SMO algorithm. The SMO algorithm is mainly composed of a WSS procedure and an optimality function updating procedure. [1] In this procedure [1], the second-order WSS procedure is used to obtain a two-element working set B = {ilow, ihigh}. B is selected from the following subset of training points: I (α) = { i|αi < C, yi = 1 or αi > 0, yi = −1 } I = { i|αi < C, yi = −1 or αi > 0, yi = 1 } where C is a constant in the process. To minimize the optimality function f, the working set B is selected to satisfy the following conditions: = arg { -yifi |i I (α) } = arg , | I , where and , are defined as 0 , , , 2 , After the working set is selected, the optimality function f is updated. This procedure iterates until the algorithm converges, and the terminating condition is defined as: Where is constant, and are defined as: max , ∈ min , ∈ Finding the minimum and maximum for the convergence condition can be done using parallel reduction shown in figure Fig 1 Fig 1 Finding maximum using parallel reduction As parallel reduction works best for finding the value and not for finding the index for the value. Hence and are calculated in CPU itself. But some kernel computations required for can be parallelized. The overall flow for the algorithm is shown in Fig 2. International Journal of Computer Science and Information Security (IJCSIS), Vol. 16, No. 6, June 2018 64 https://sites.google.com/site/ijcsis/ ISSN 1947-5500](https://image.slidesharecdn.com/09paper31051827ijcsiscamerareadypp-180710101714/75/GPU-Parallel-Computing-of-Support-Vector-Machines-as-applied-to-Intrusion-Detection-System-2-2048.jpg)

![60000 478.75 304.68 1.57 80000 629.88 390.15 1.61 100000 998.71 550.66 1.81 200000 1490.47 730.04 2.04 Fig 4. Training Time for algorithms SET I TABLE IIIII TRAINING TIME FOR ALGORITHMS SET II Number of samples Training Time (s) Speed up Sequential SVM Parallel SVM 10000 73.48 69.2 1.06 20000 98.22 77.29 1.27 40000 202.33 140.32 1.44 60000 430.12 280.97 1.53 80000 610.35 374.33 1.63 100000 980.18 530.74 1.84 200000 1444.58 690.91 2.09 C. Analysis of Results With TABLE I and Fig 3, we can see that training time for block size 512 is minimum for all training sample size. It is concluded that for the given configuration of system 512 block size is more suitable. Even with block size 1024 training time is more than other block size values for parallel algorithm. For CUDA the maximum block size used is 1024 that is why at the boundary value system does not show its best performance, as too much of resources are used. With less values of block size the, resources are underused. For the choice of block size GPU family that is also the architecture plays an important role. It can be seen with graph in Fig 4 that training time for sequential SVM increases rapidly with increase in number of samples. Whereas for parallel algorithm the training time increases gradually with increase in number of samples. We can see that up to training sample size 10,000 the time required by both the algorithm is somewhat similar with sequential algorithm taking less time than parallel algorithm. After size 10,000 is crossed speedup increases rapidly as the number of training samples increases with parallel algorithm taking less time than sequential algorithm. We can see low speed up for small values of number of training samples. This is a typical behaviour for parallel algorithm. For less number of records the cost of parallelism that is transferring data from CPU to GPU and then again back to CPU is more than the benefit due to parallel processing. By analysing observations in tables TABLE I and TABLE II, we can see that when the threshold value, known as epsilon, is increased then both the sequential and parallel algorithm converges sooner. In this case also after size 10,000 is crossed speedup increases rapidly as the number of training samples increases with parallel algorithm taking less time than sequential algorithm. With dataset divided into 70% training and 30% testing datasets, both sequential SVM and parallel SVM classifier, show 98.99% accuracy. The maximum training size used for the given experiment is 2 lakhs. Only 10 precent of KDD CUP dataset contains more than 4 lakh records. As we can see more benefit in terms of speedup with increase in number of samples , this approach is more beneficial for whole dataset as training dataset. V. CONCLUSION SVM is a powerful classifier with high computational cost. The computational cost increases with increase in sample size. The emergence of GPUs as massively parallel processors has opened a wide range of opportunities for acceleration of large- scale classification problems. The data-parallel characteristic of many learning algorithms like SVM conveniently fits the set of problems that modern GPUs are meant to solve. In this a paper parallel SVM using GPU for intrusion detection is proposed. Using this approach, we can conclude that with given GPU having compute capability of 2.91 teraflops, achieved maximum speedup is 2.09 with accuracy 98.99%. This speedup can be further increased for given number of training samples by using GPUs having more compute capability. This approach can be further extended for multi classification by exploiting parallelism related to both CPUs and GPUs. Also, this approach can be more beneficial with more complex datasets. REFERENCES [1] K. Tan, J. Zhang, Q. Du, X. Wang , “GPU Parallel Implementation of Support Vector Machines for Hyperspectral Image Classification”, IEEE, 2015. [2] X. Zhang, Y. Zhang, C. Gu , “GPU Implementation of Parallel Support Vector Machine Algorithm with Applications to Intruder Detection”, Journal Of Computers, vol. 9, no. 5, May 2014. [3] L. J. Cao, S. S. Keerthi, C. Ong, J. Q. Zhang, U. Periyathamby, Xiu Ju Fu, and H. P. Lee, “Parallel Sequential Minimal Optimization for the Training of Support Vector Machines”, IEEE Transactions On Neural Networks, vol. 17, no. 4, July 2006. [4] J. Silva, A. Aguiar, F. Silva, “A Parallel Computing Hybrid Approach for Feature Selection”, IEEE 2015. [5] S. H. Lopez, J. R. Williams, Abel Sanchez, “Parallel Multiclass Classification using SVMs on GPUs”, ACM 2010. [6] R.E. Fan, P.H. Chen, C.-J. Lin, “Working set selection using second order information for training support vector machines”, J. Mach. Learning Res., vol. 6, 2005, pp. 1889–1918. [7] J. C. Platt, “Sequential Minimal Optimization: A Fast Algorithm for Training Support Vector Machines”, Microsoft Research, Technical Report, MSR-TR-98-14, April 21, 1998. [8] B. C. Catanzaro, N. Sundaram, K. Keutzer, “Fast Support Vector Machine Training and Classification on Graphics Processors”. International Journal of Computer Science and Information Security (IJCSIS), Vol. 16, No. 6, June 2018 66 https://sites.google.com/site/ijcsis/ ISSN 1947-5500](https://image.slidesharecdn.com/09paper31051827ijcsiscamerareadypp-180710101714/75/GPU-Parallel-Computing-of-Support-Vector-Machines-as-applied-to-Intrusion-Detection-System-4-2048.jpg)

![[9] K. Zhu, H. Wang, H. Bai, J. Li, Z. Qiu ,H. Cui, E. Y. Chang, “Parallelizing Support Vector Machines on Distributed Computers”, Google research,China. [10] H. P. Graf, E. Cosatto, L. Bottou, I. Durdanovic, V. Vapnik, “Parallel Support Vector Machines: The Cascade SVM”, Advances in Neural Information processing, vol 17, MIT Press,2005. [11] M. S. Abadeh, J. Habibi, Z. Barzegar, M. Sergi, “A parallel genetic local search algorithm for intrusion detection in computer networks”, Engineering Applications of Artificial Intelligence, 2007. [12] C. Kyrkou, T. Theocharides, “A Parallel Hardware Architecture for Real-Time Object Detection with Support Vector Machines”, IEEE Transactions On Computers, vol. 61, No. 6, June 2012. [13] M. Kruczkowski, E. Niewiadomska-Szynkiewicz, “Support Vector Machine for malware analysis and classification”, IEEE/WIC/ACM International Joint Conferences on Web Intelligence (WI) and Intelligent Agent Technologies (IAT), 2014. International Journal of Computer Science and Information Security (IJCSIS), Vol. 16, No. 6, June 2018 67 https://sites.google.com/site/ijcsis/ ISSN 1947-5500](https://image.slidesharecdn.com/09paper31051827ijcsiscamerareadypp-180710101714/75/GPU-Parallel-Computing-of-Support-Vector-Machines-as-applied-to-Intrusion-Detection-System-5-2048.jpg)