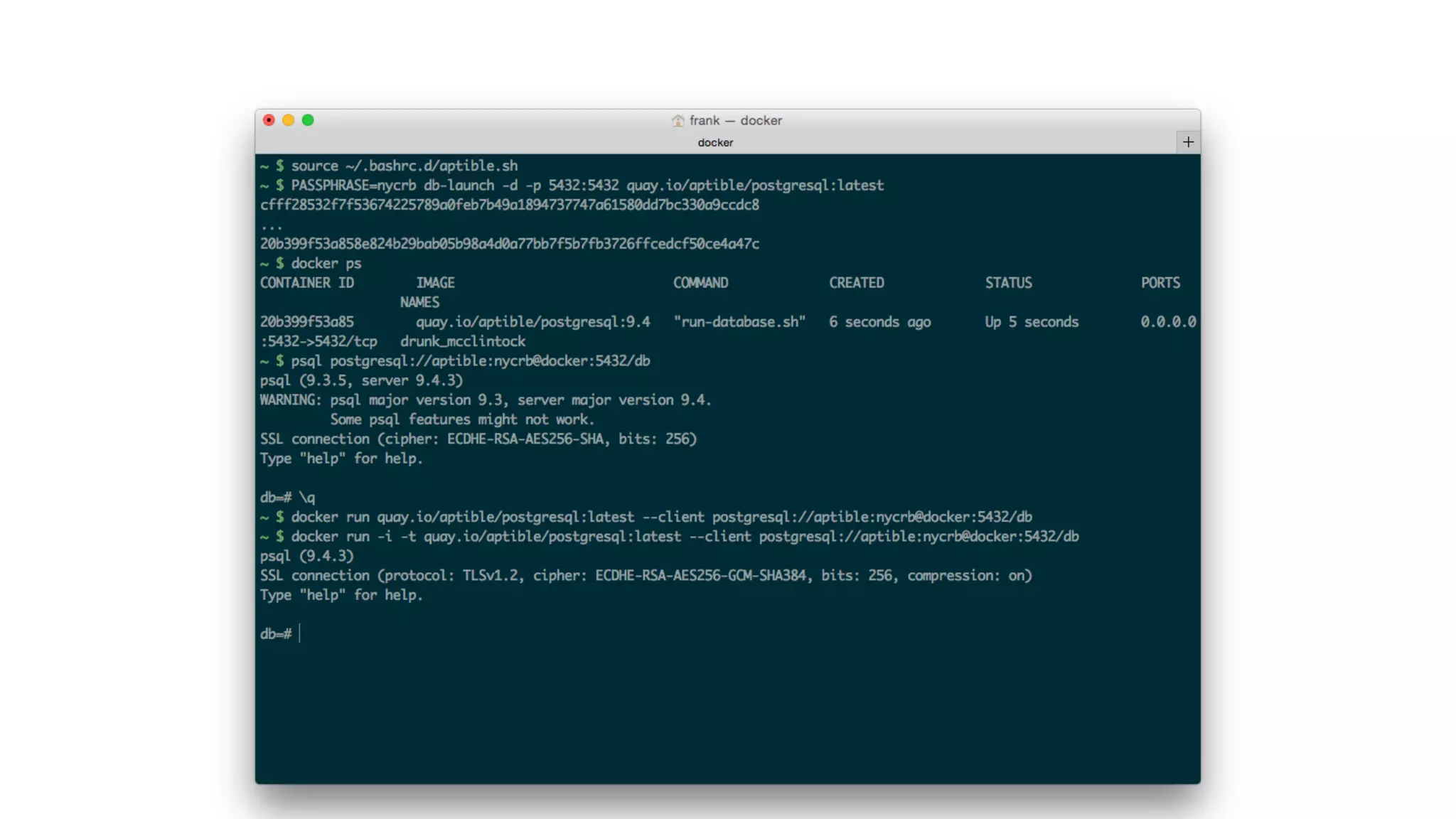

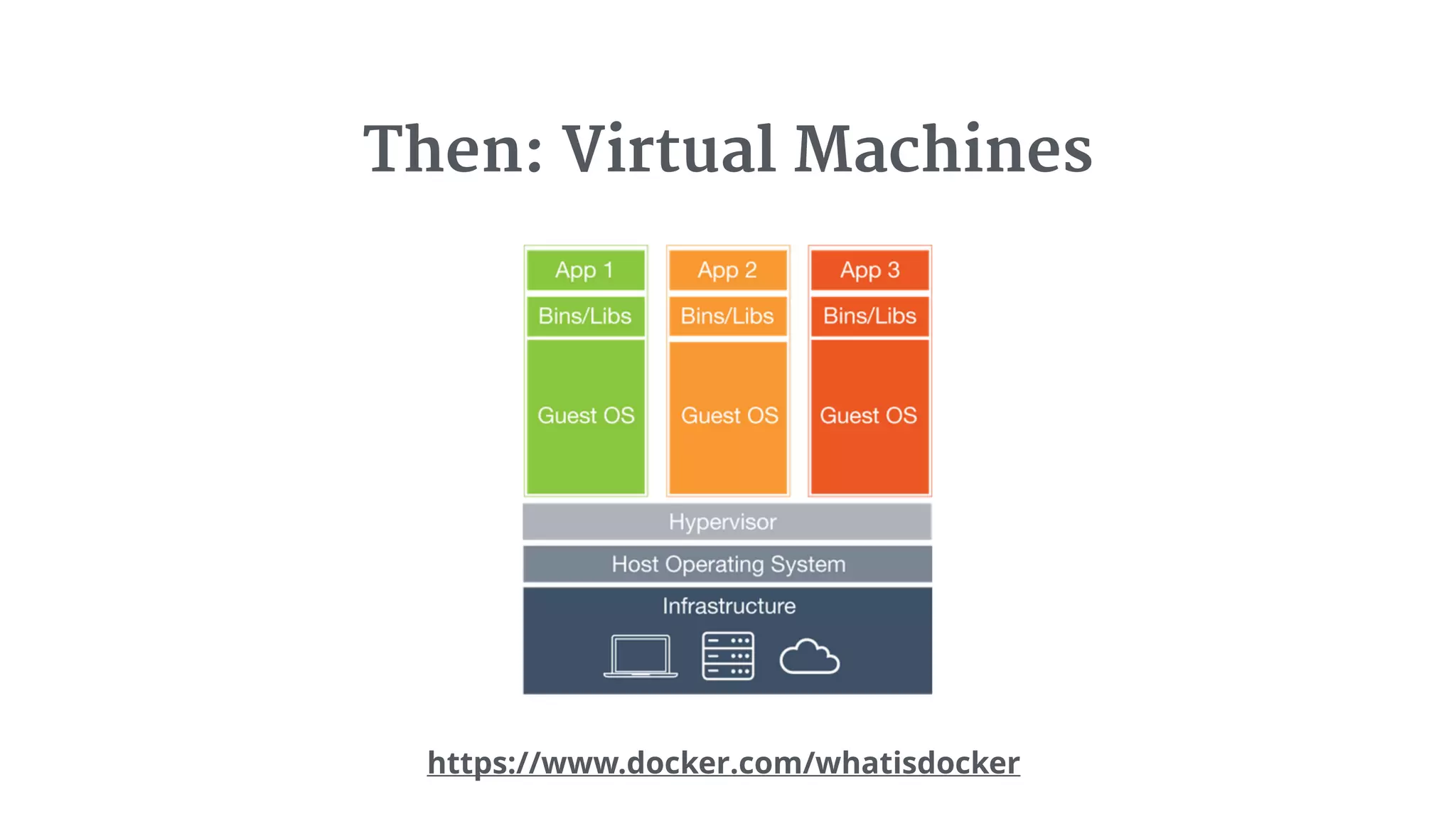

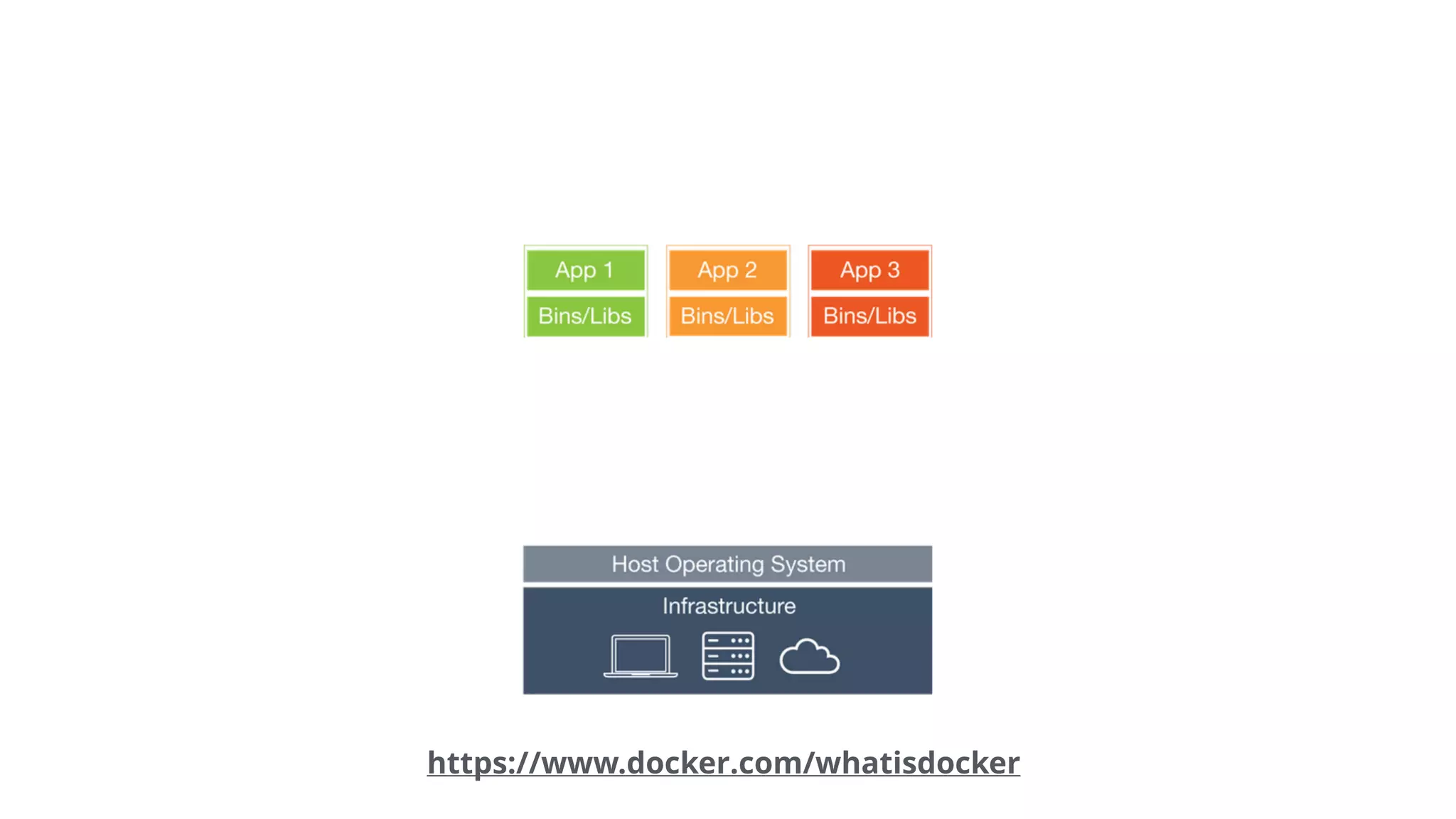

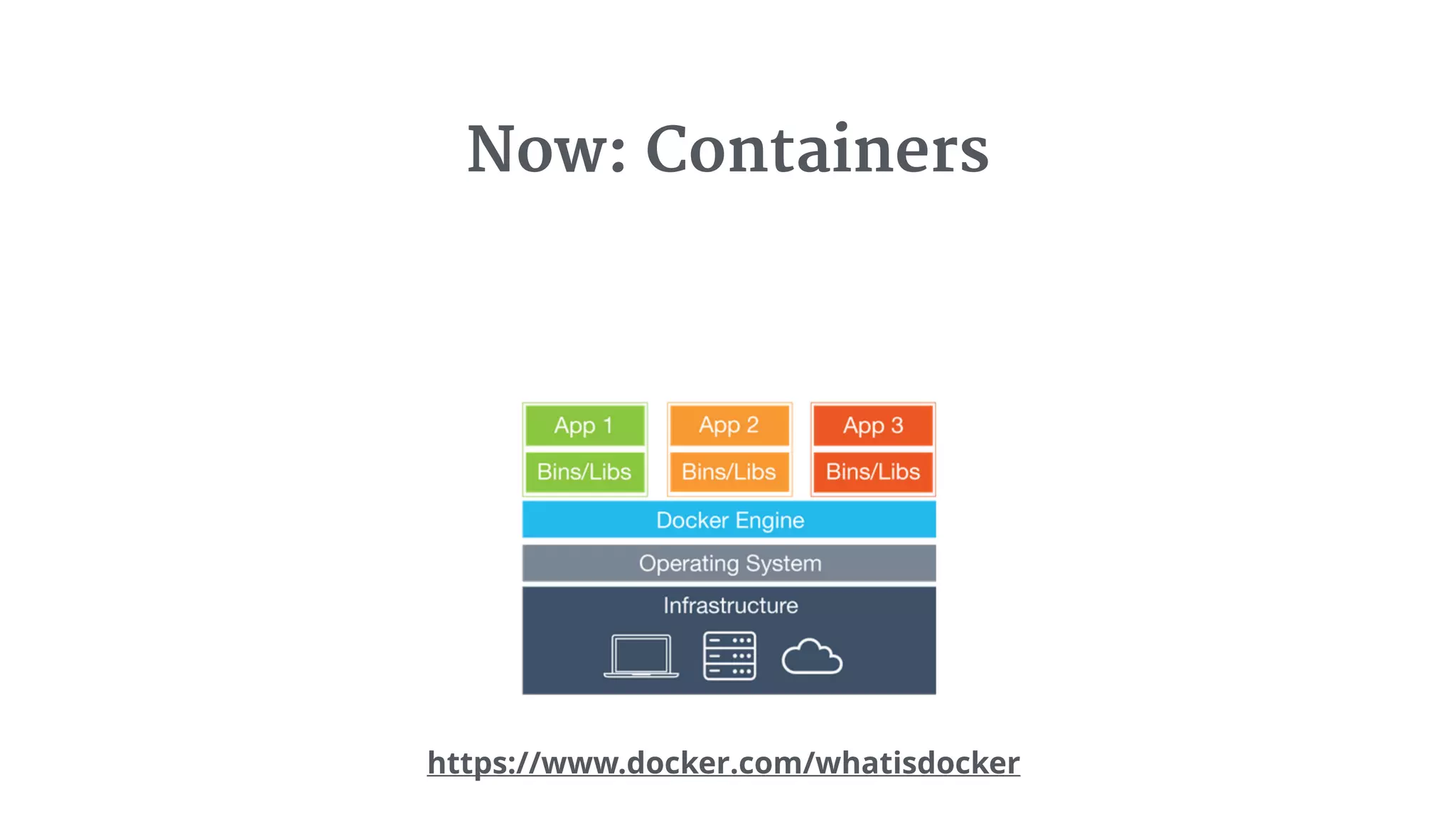

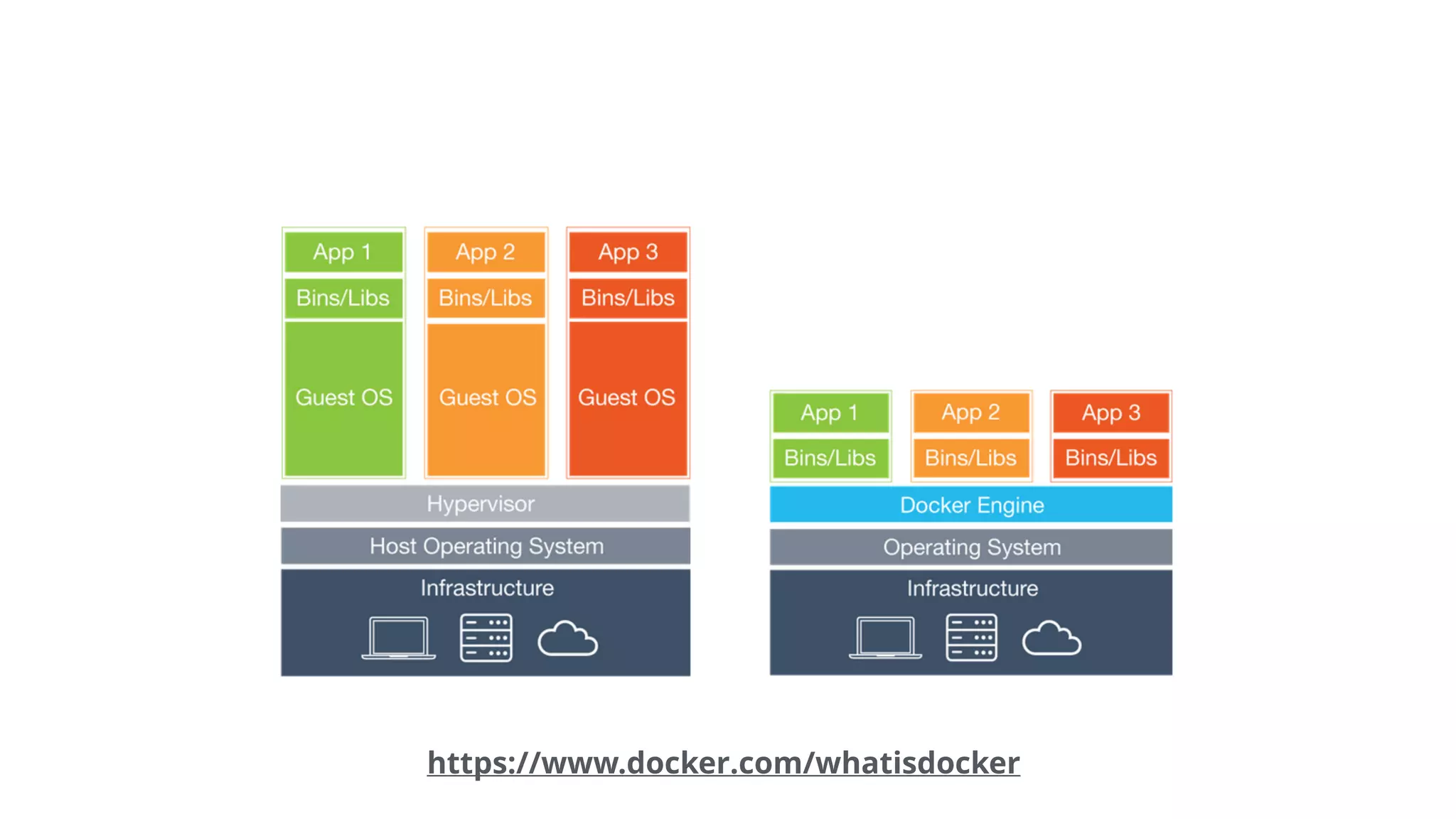

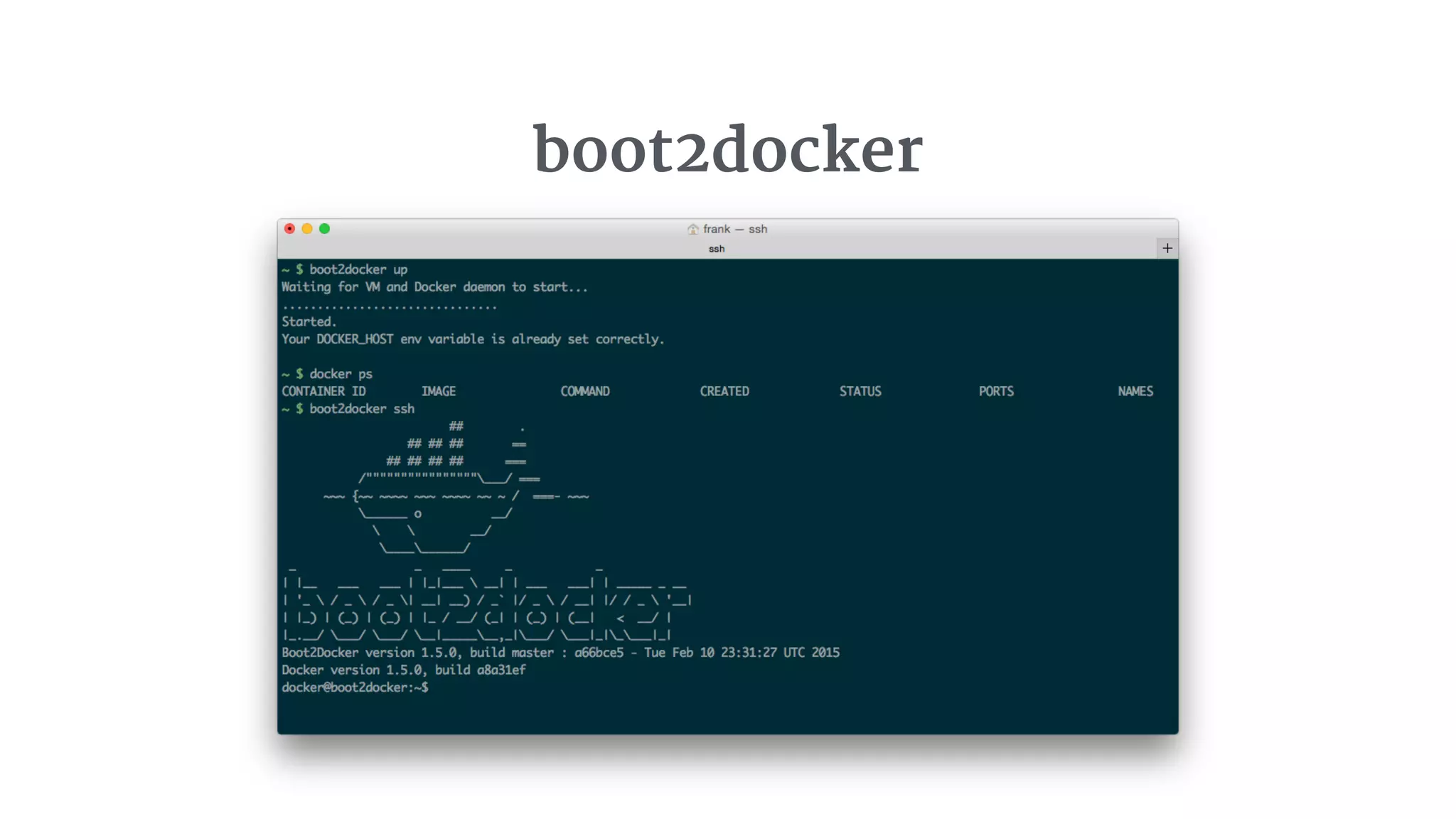

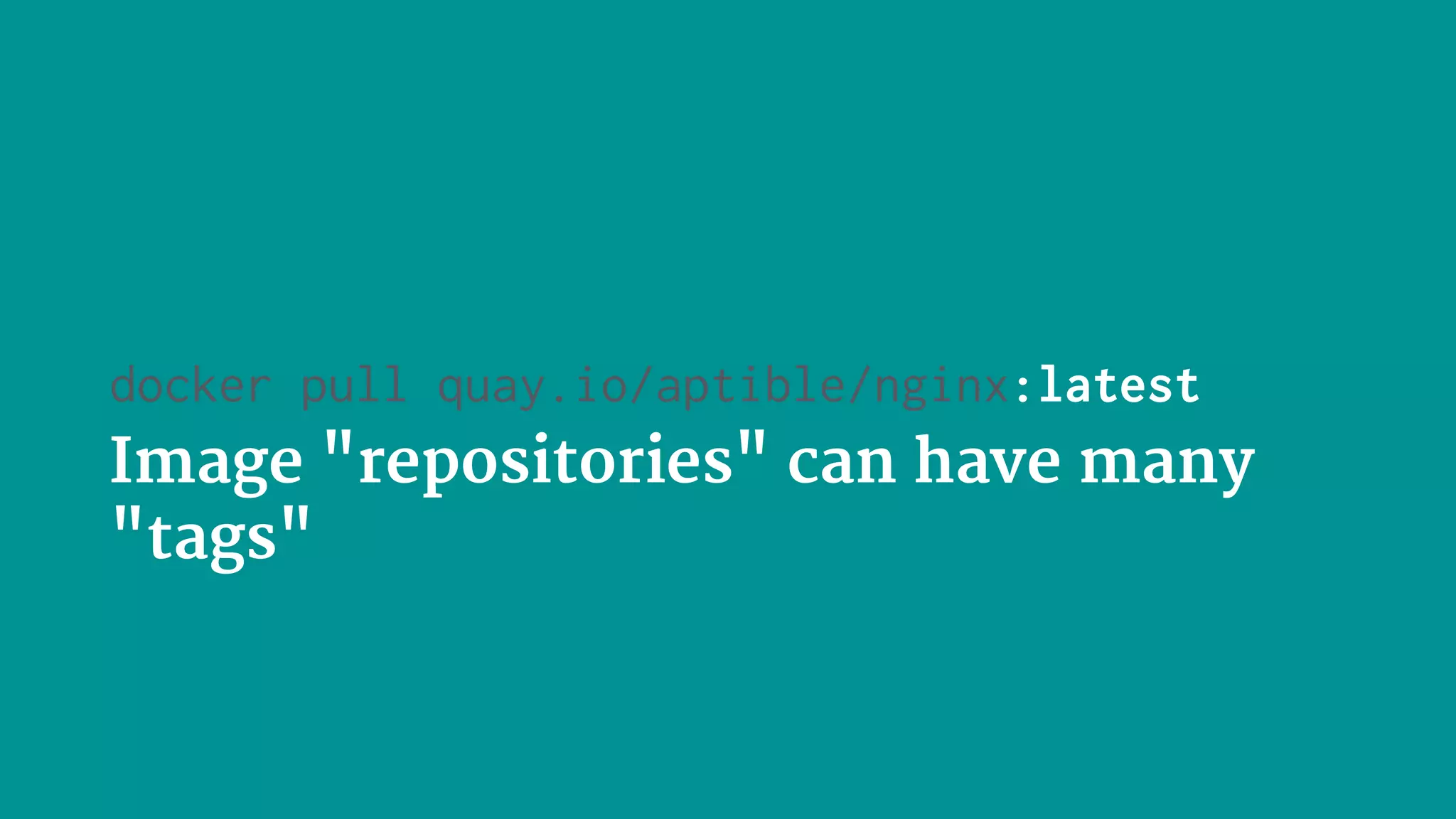

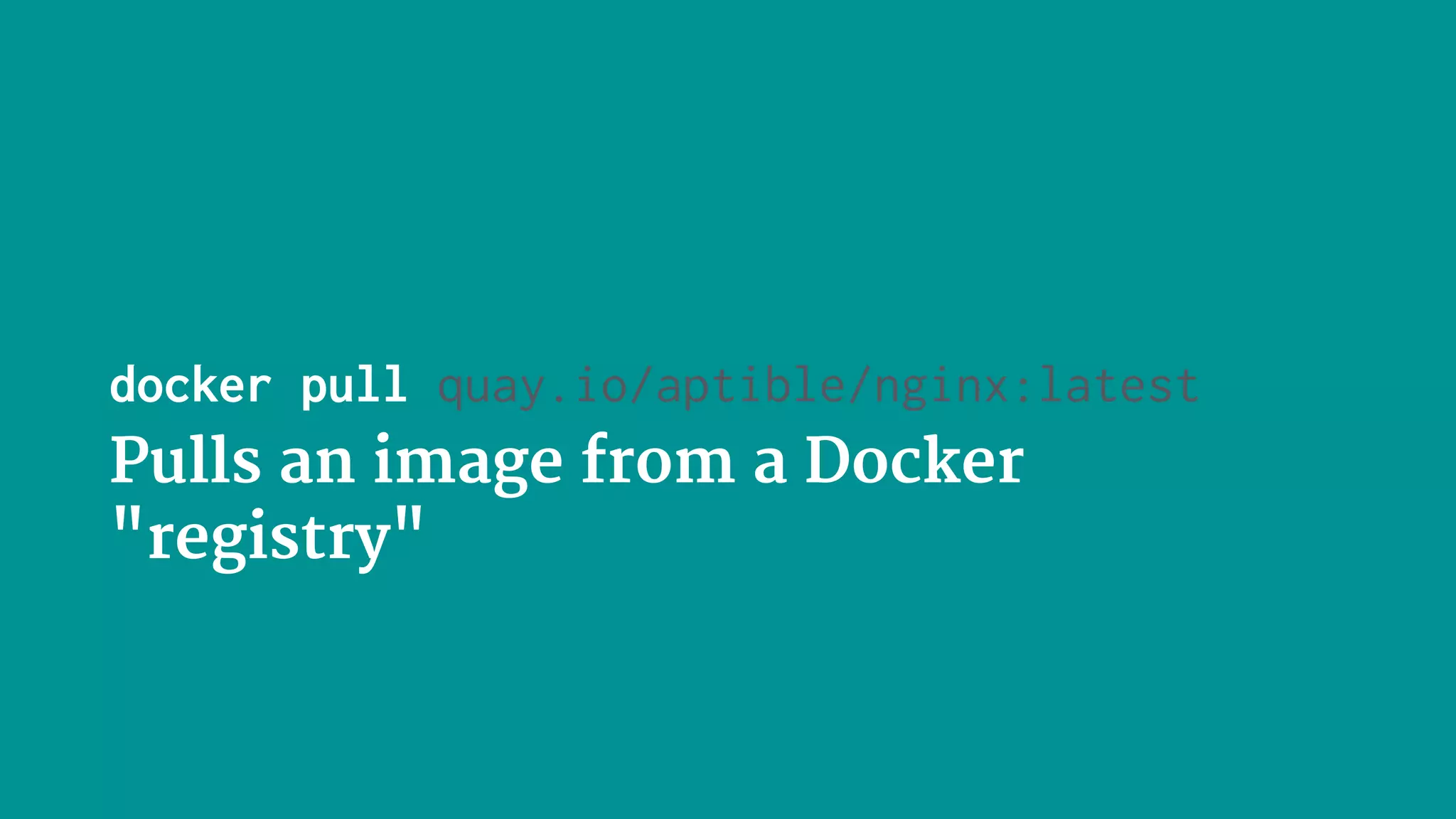

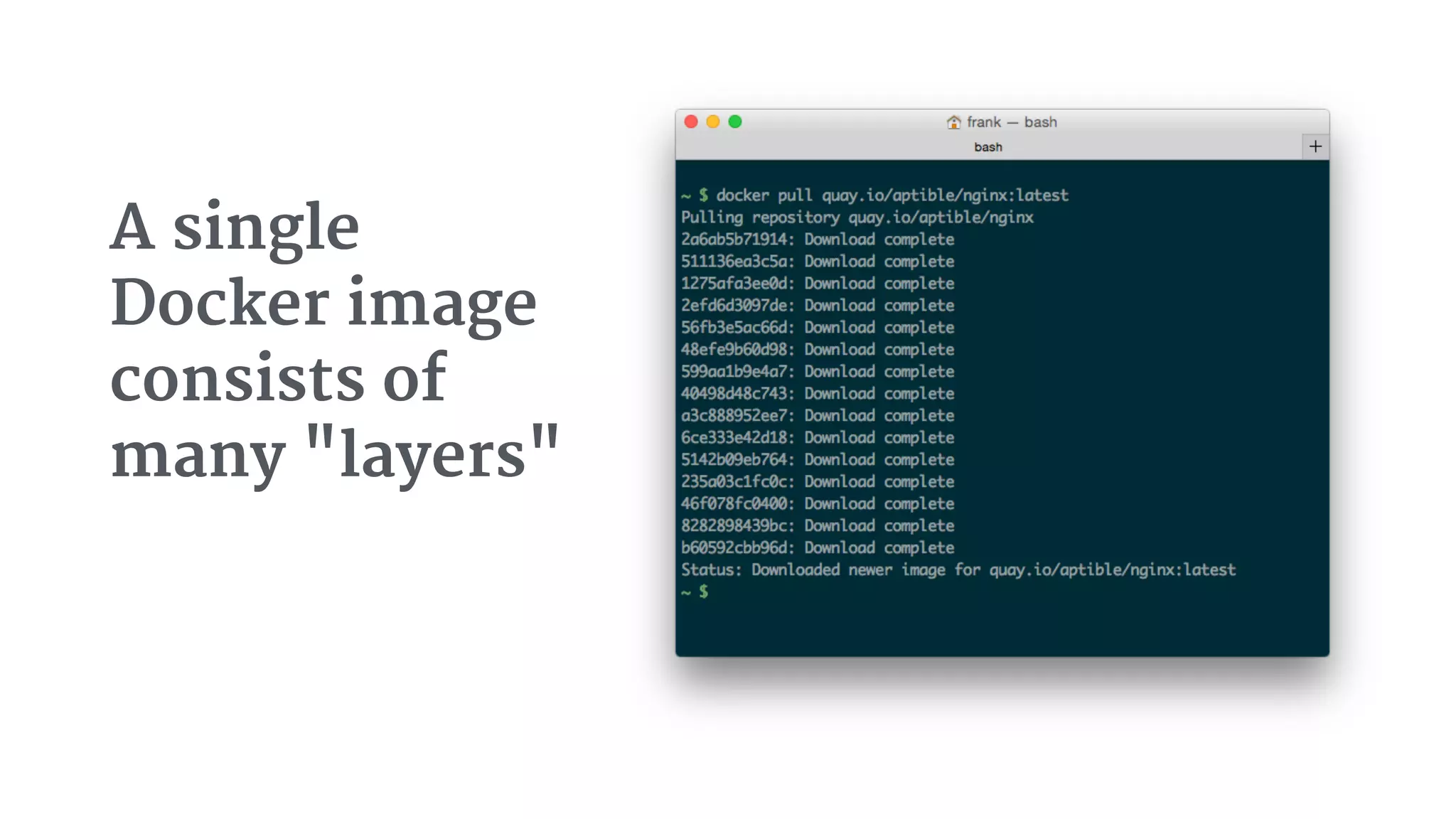

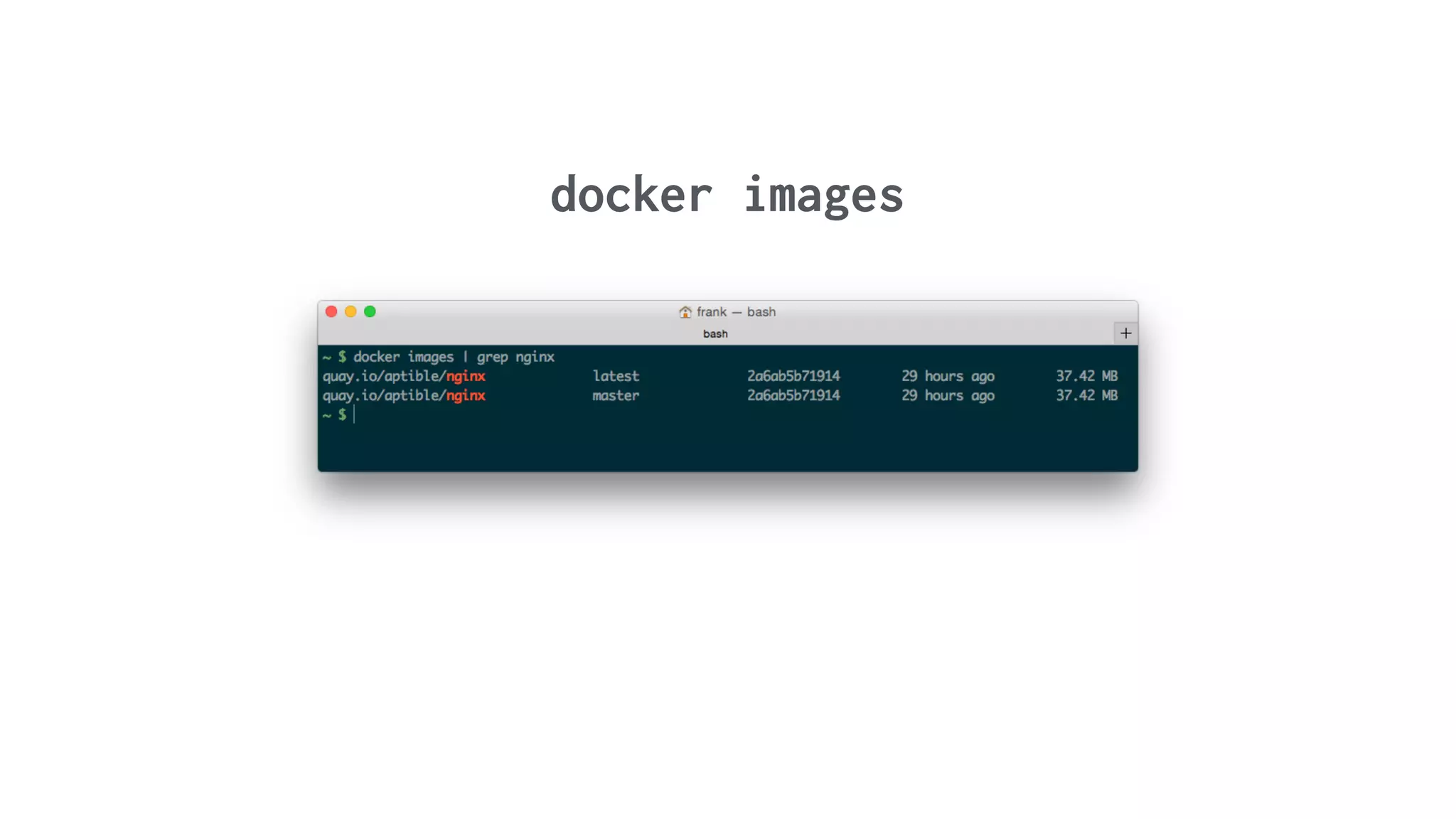

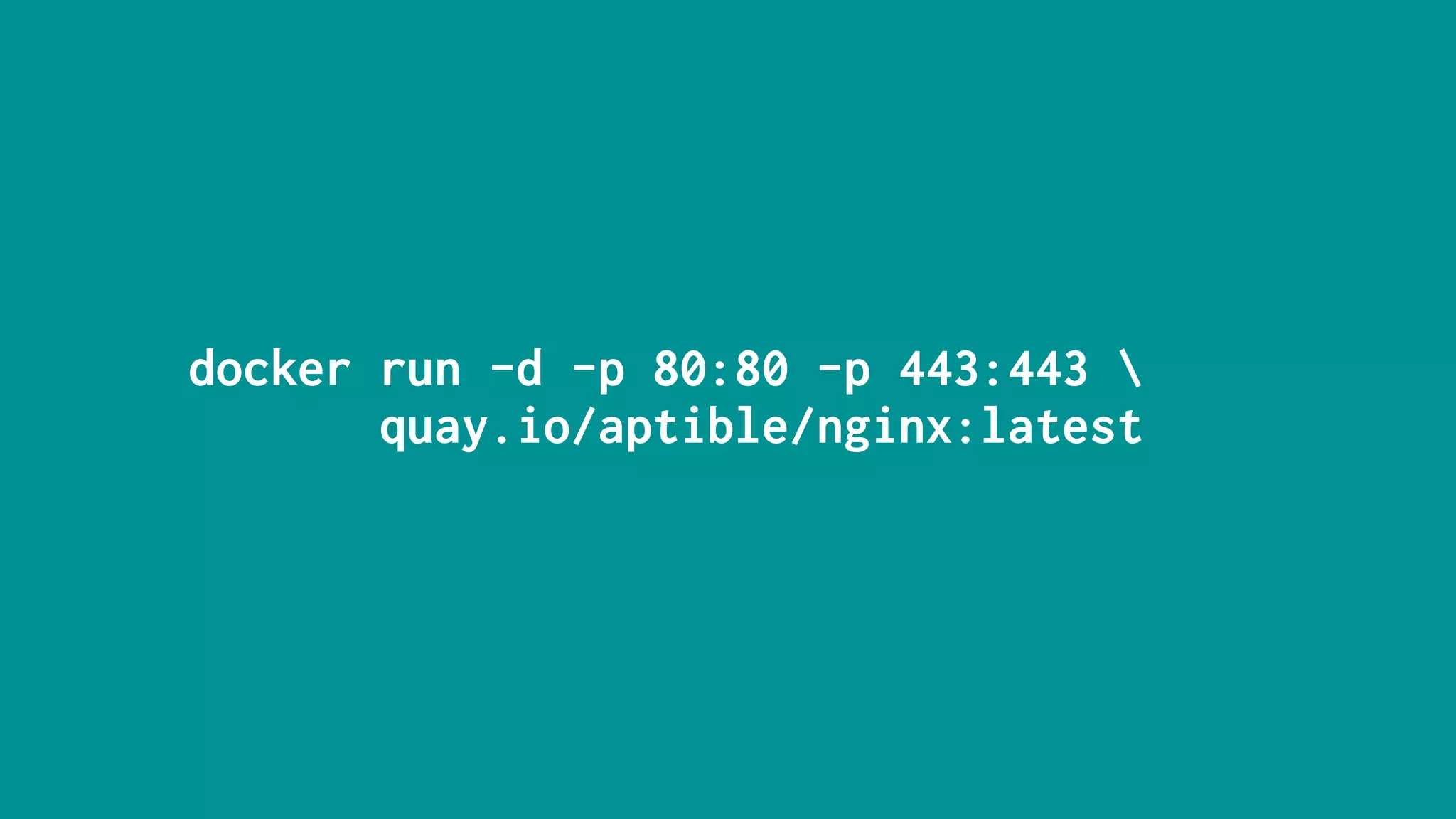

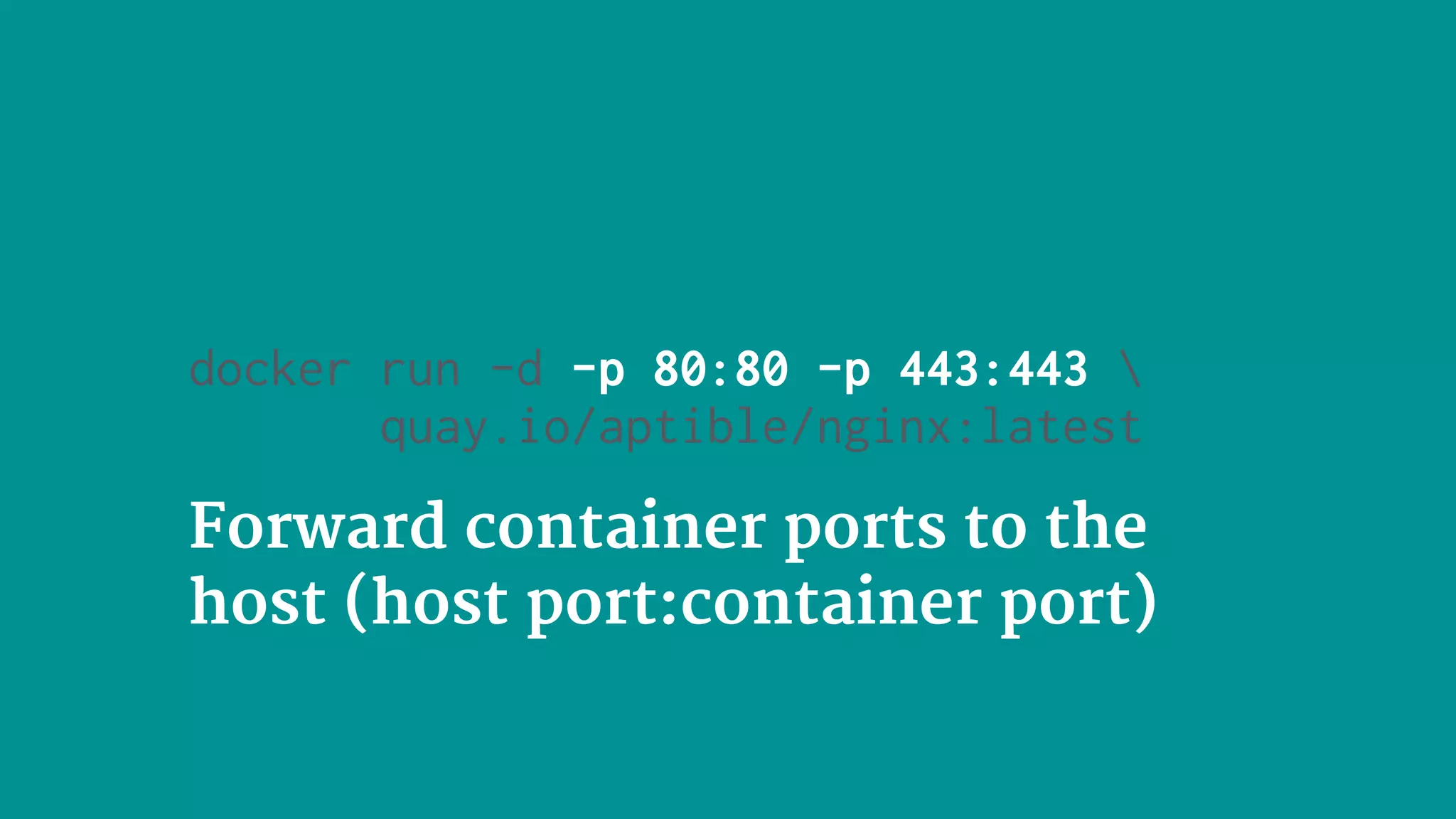

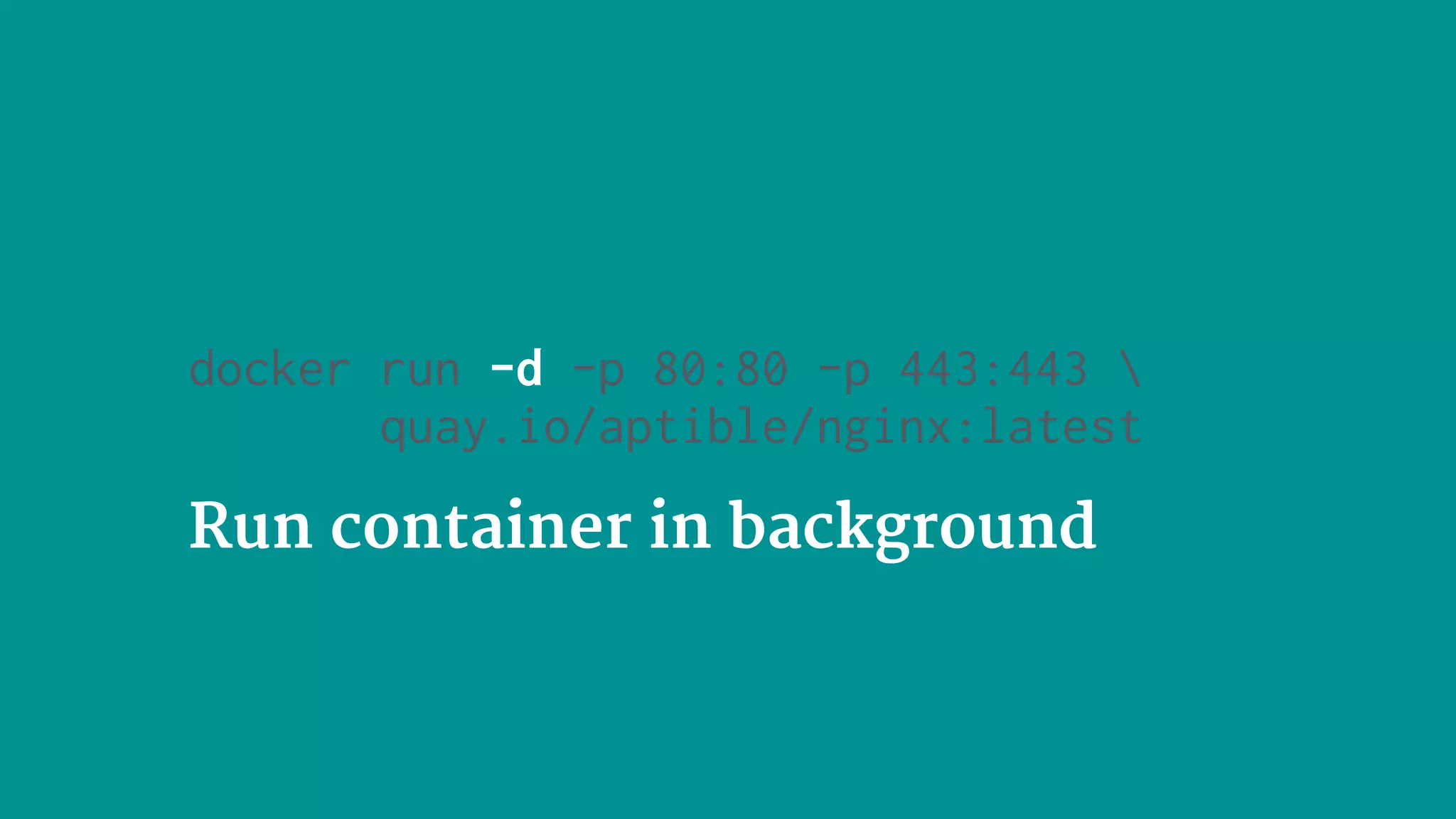

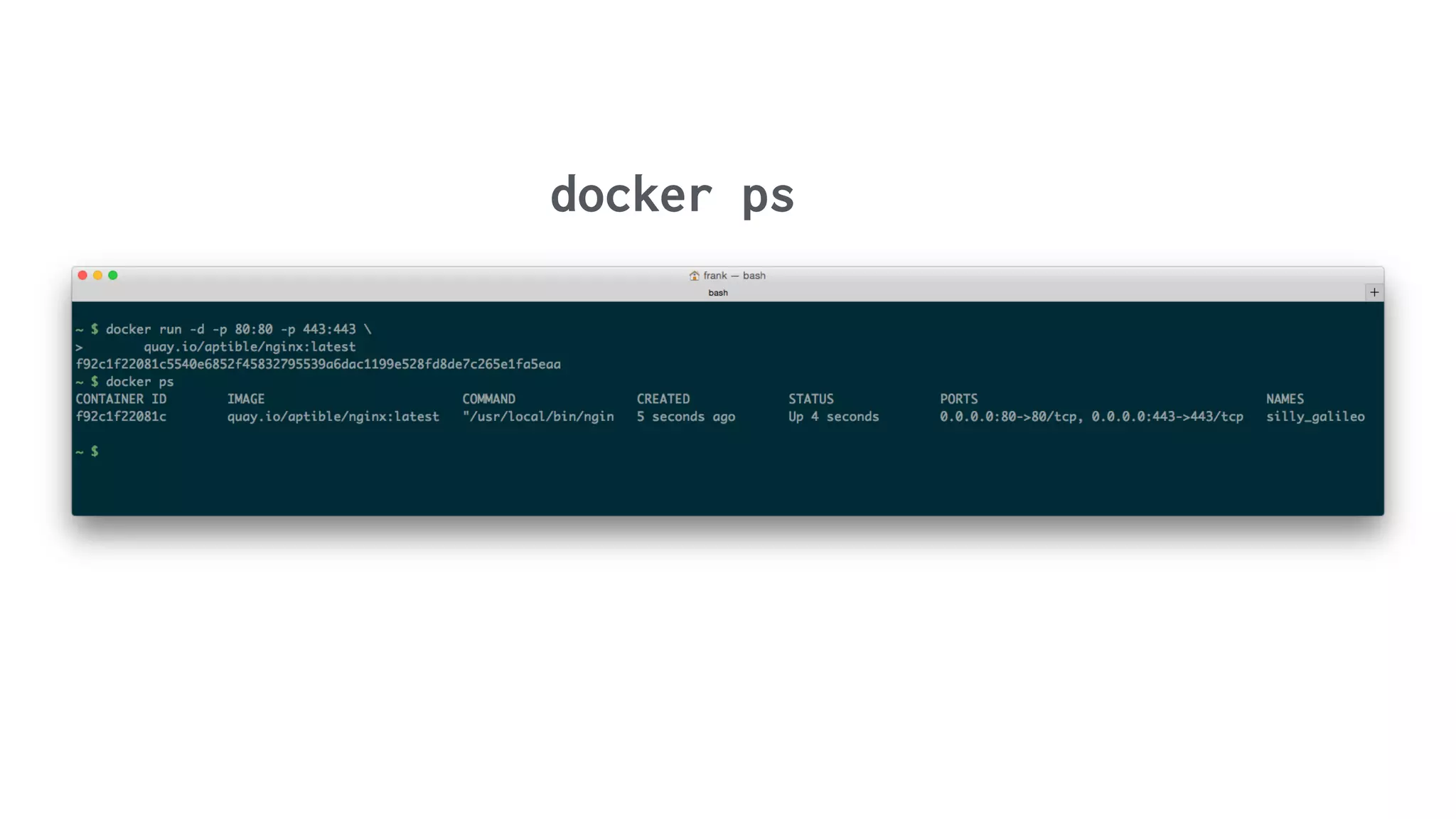

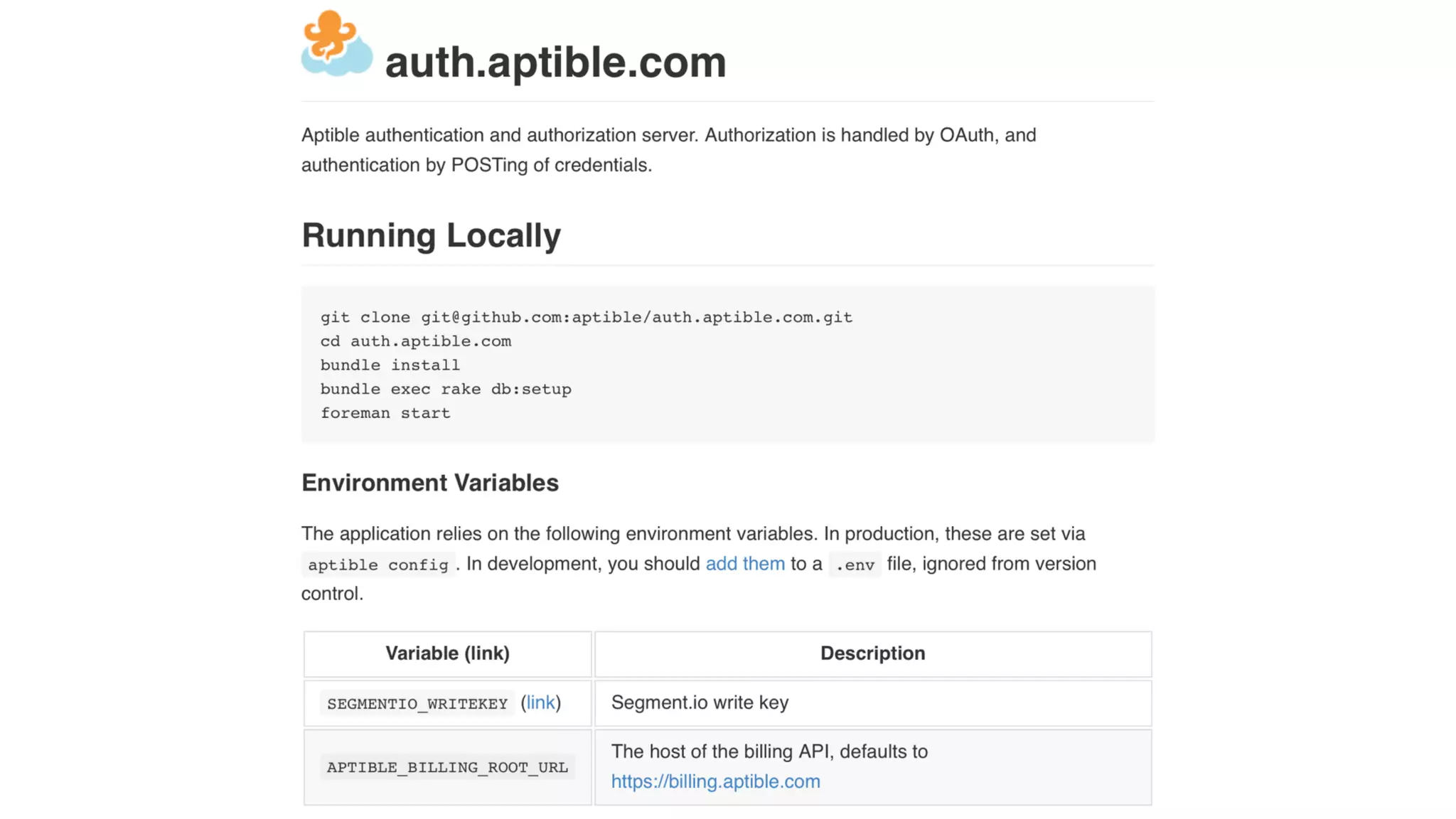

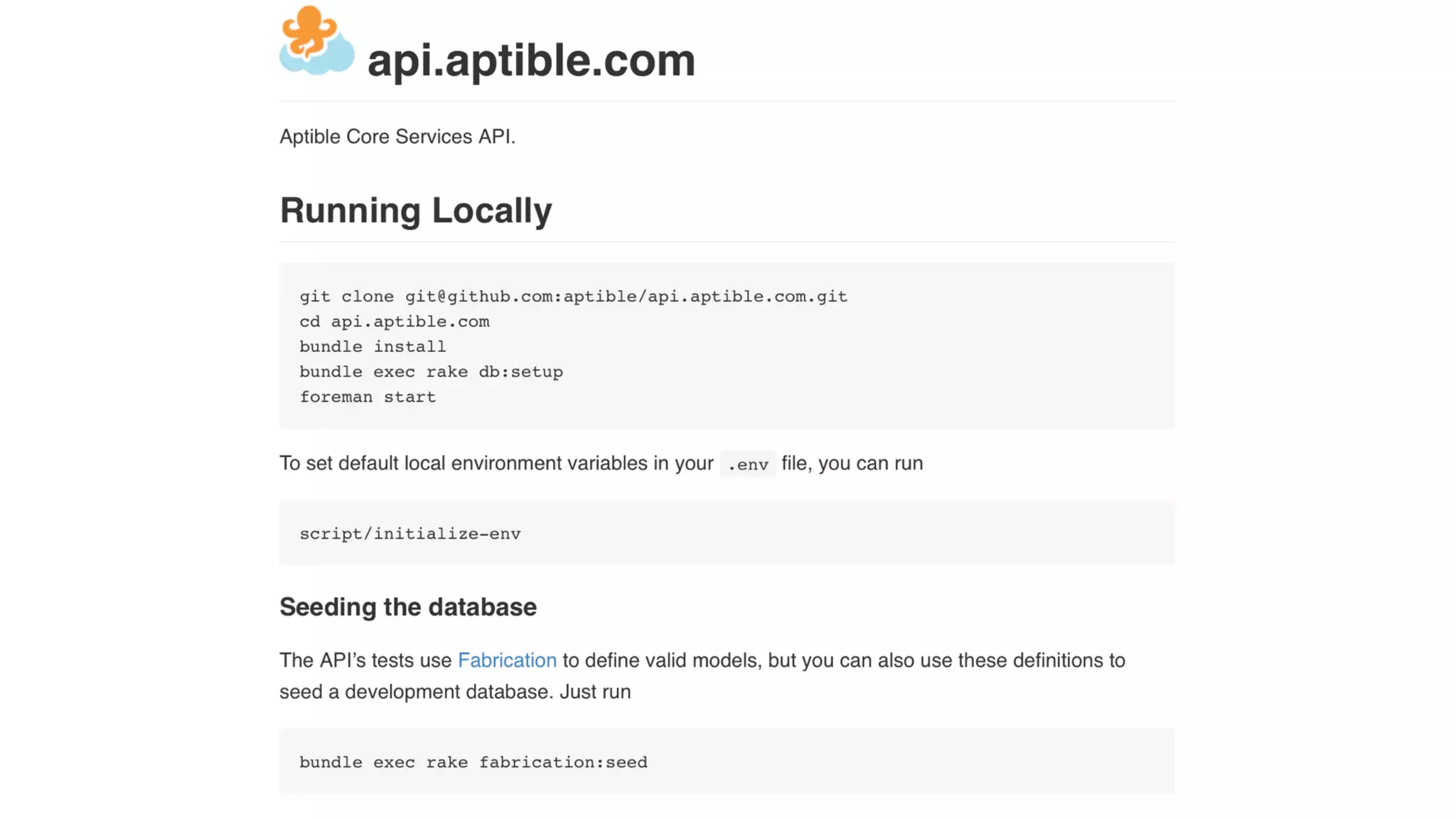

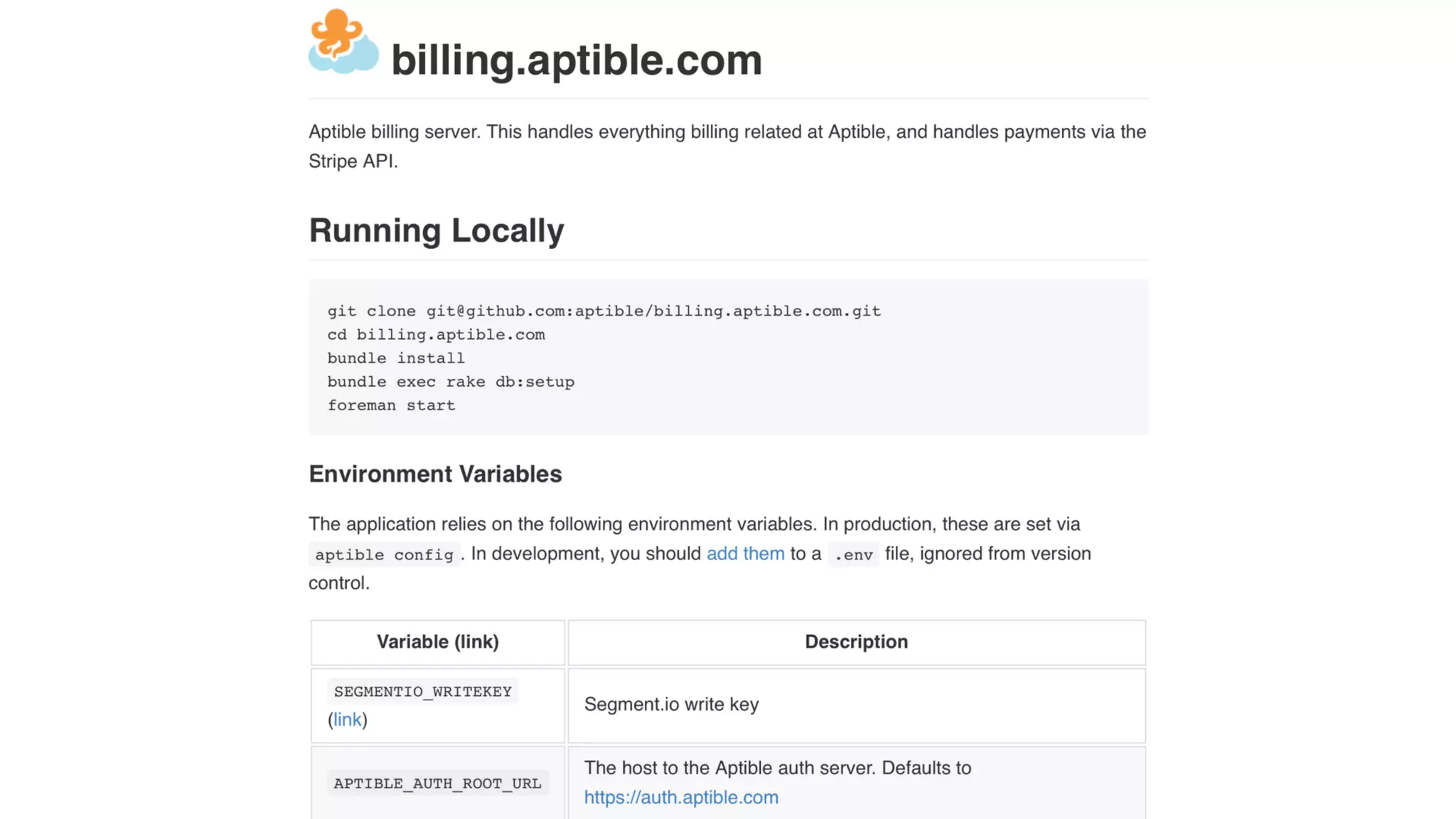

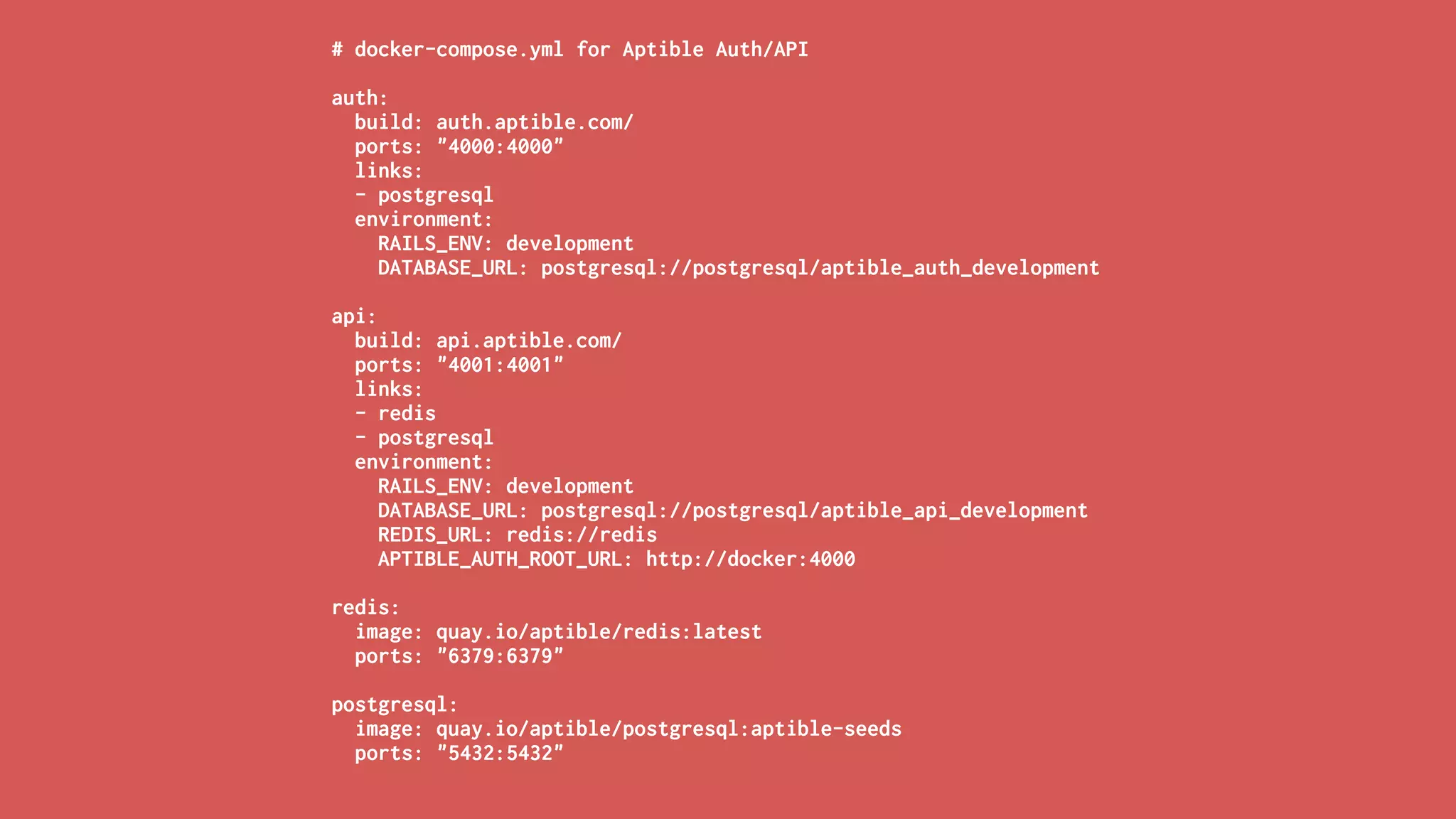

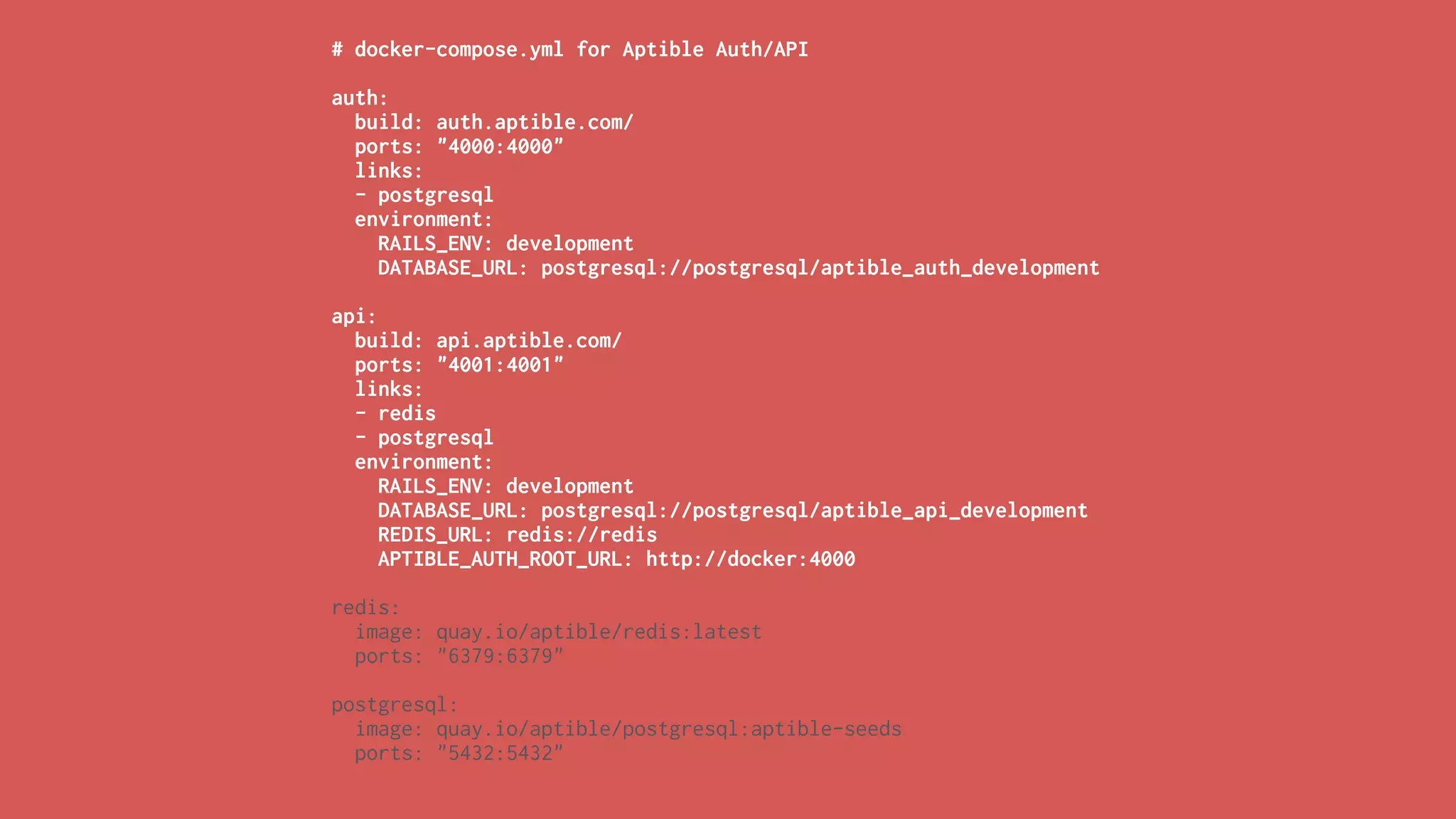

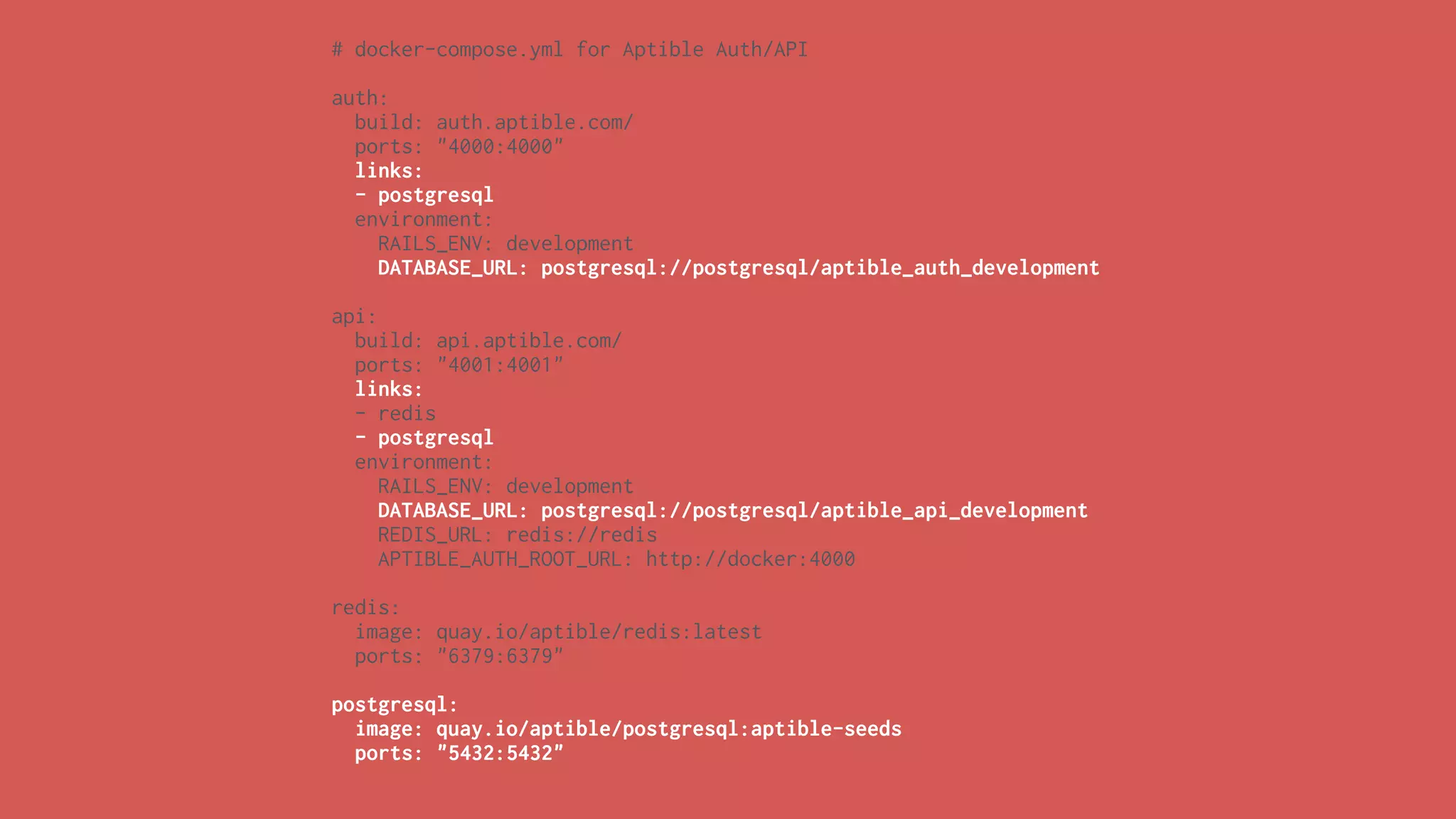

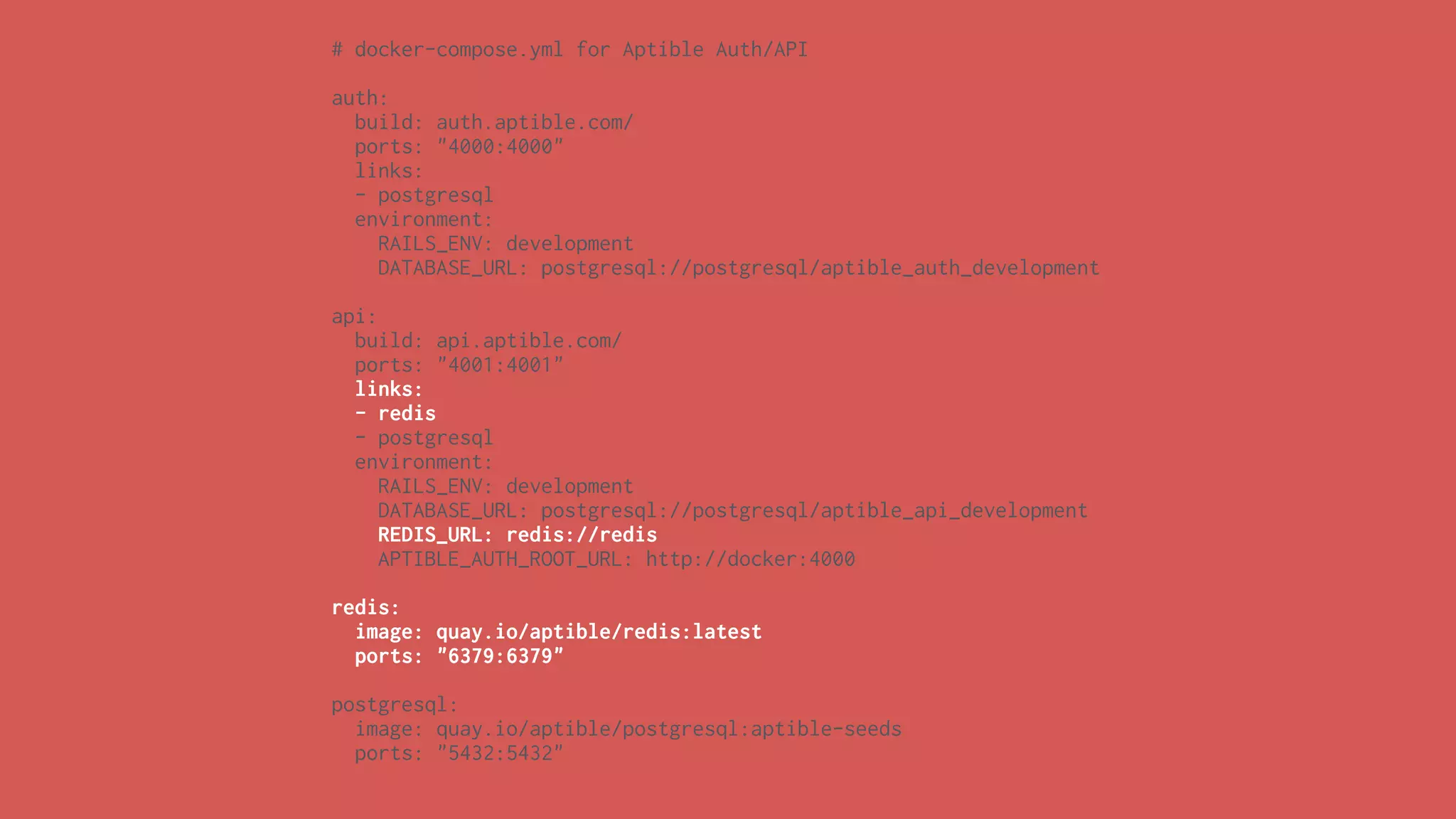

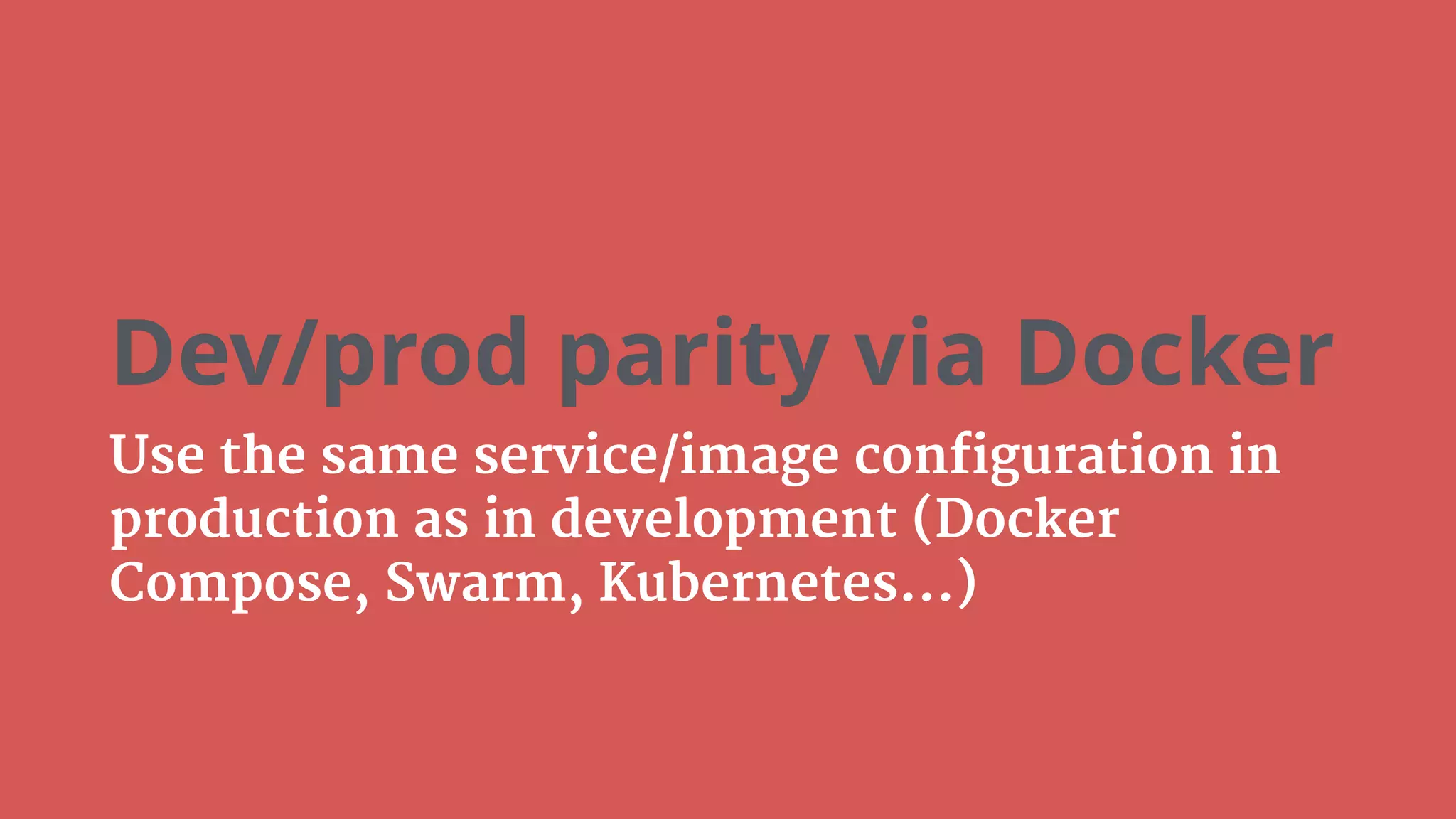

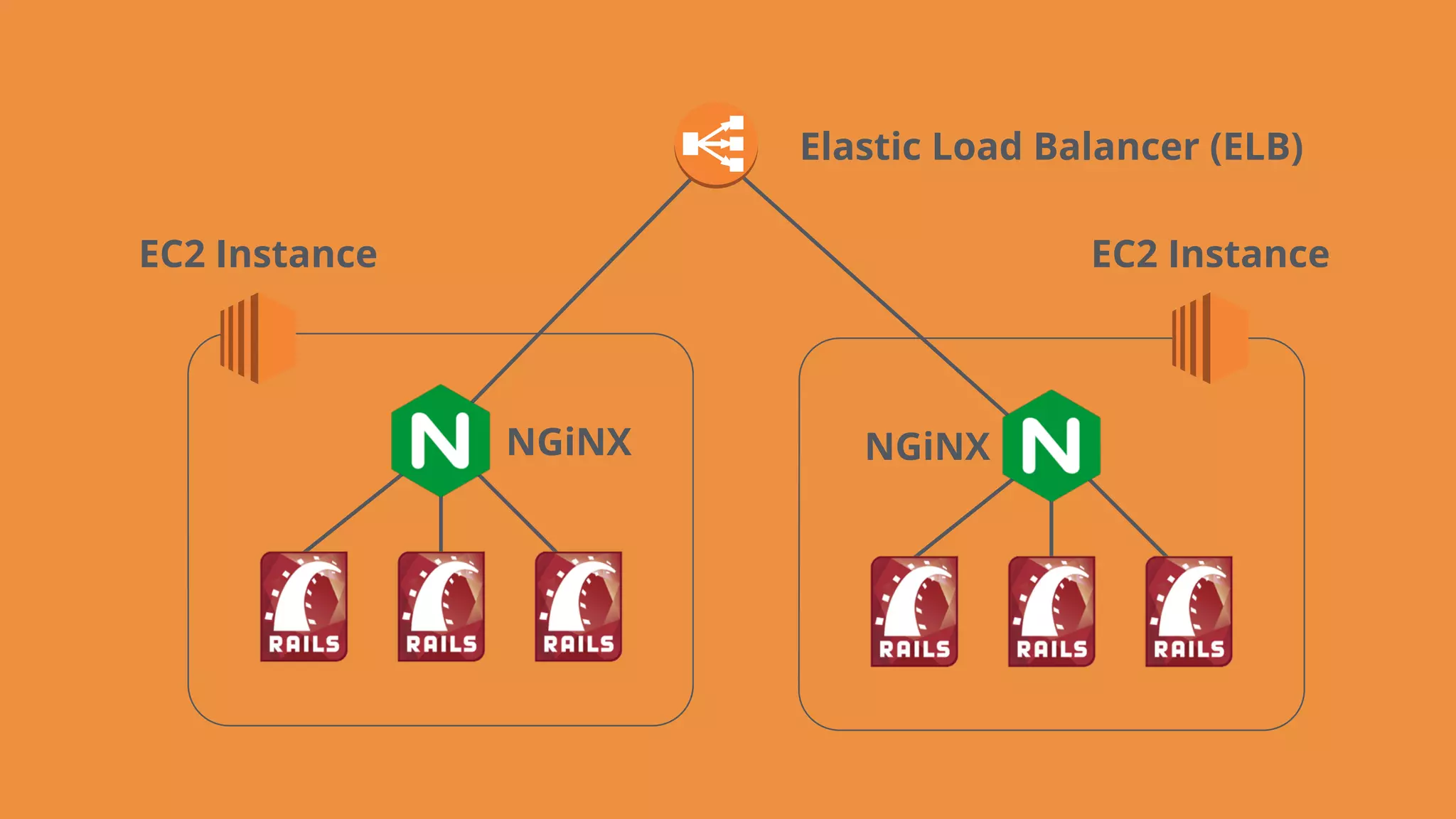

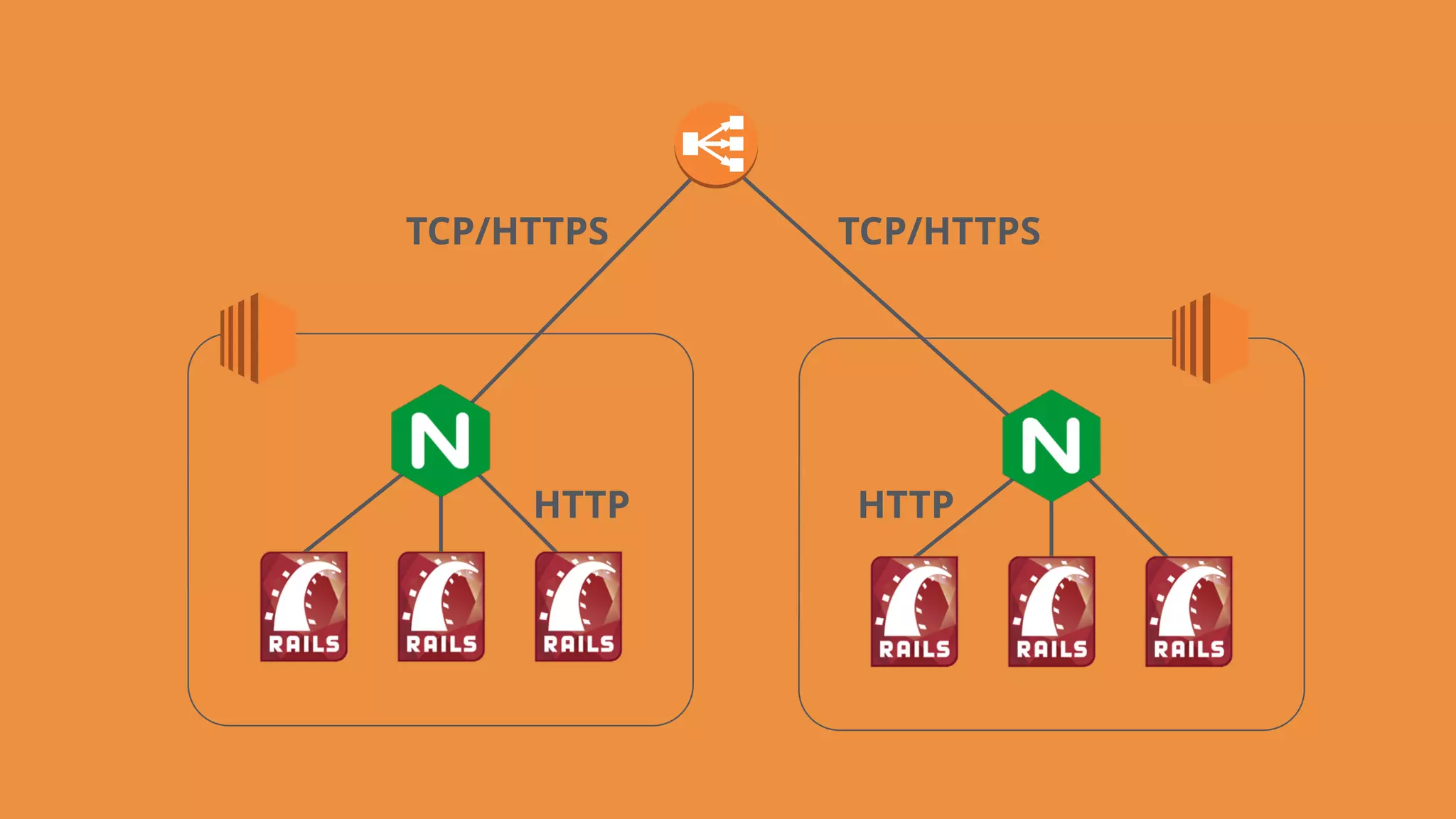

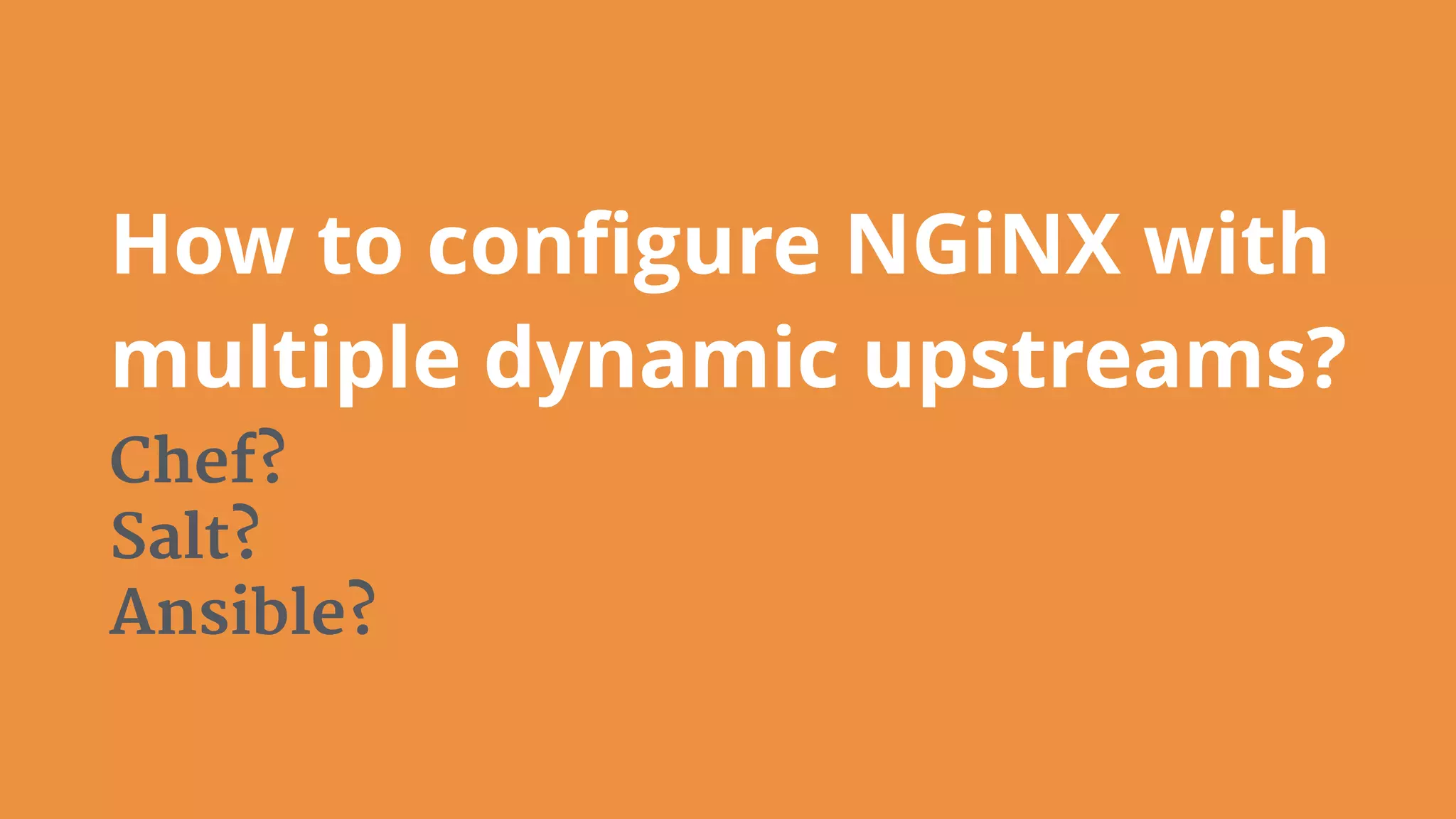

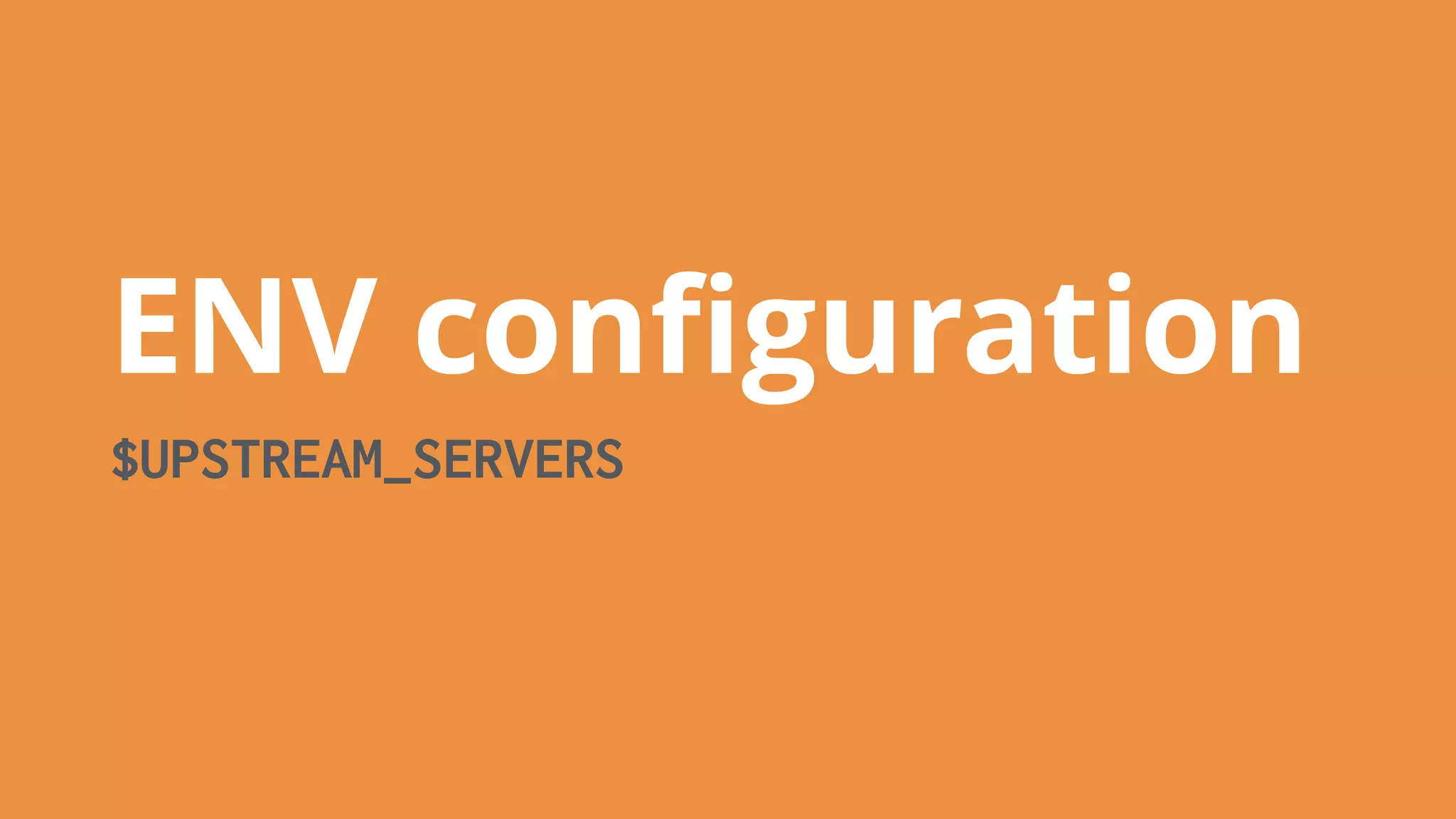

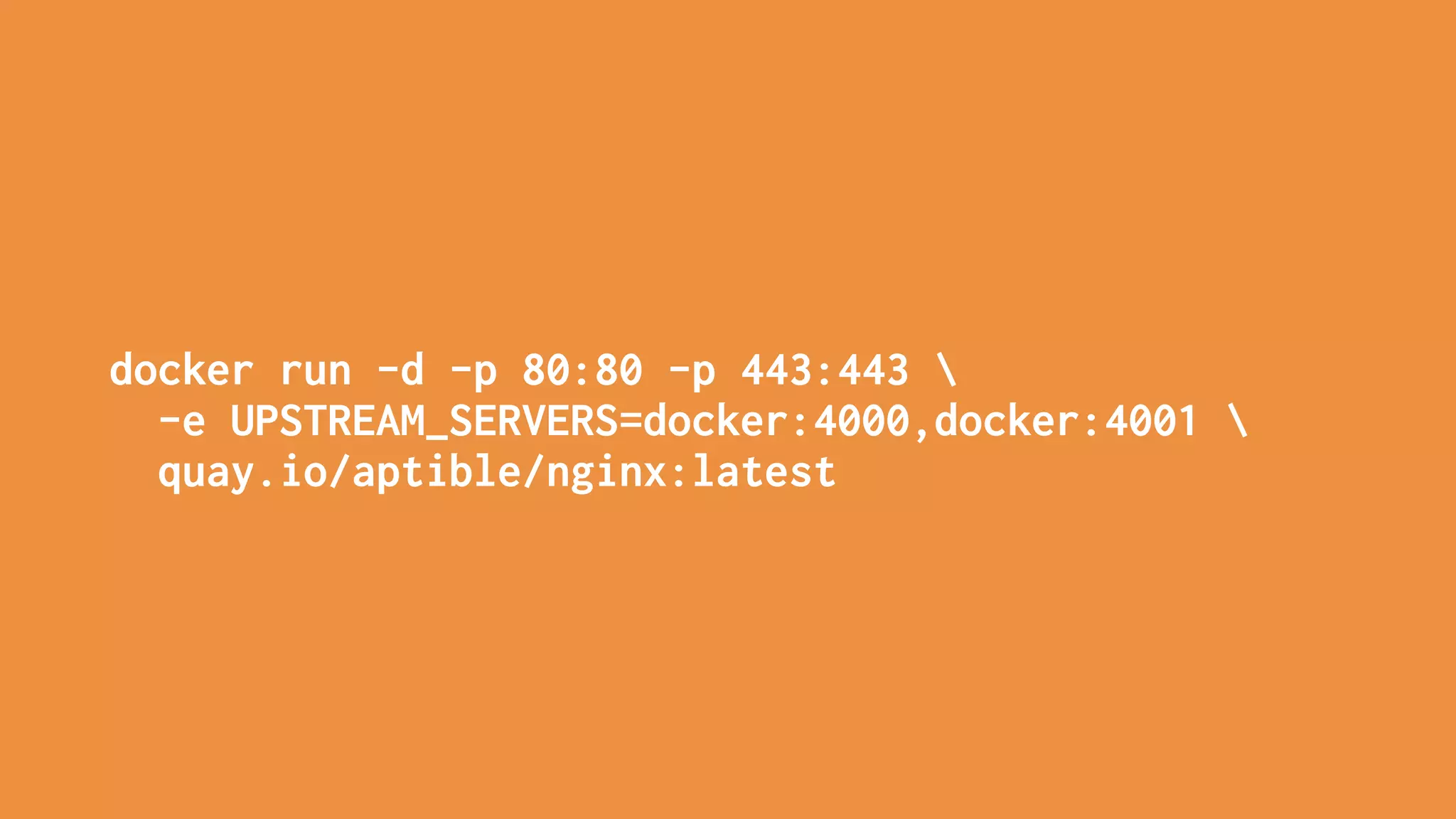

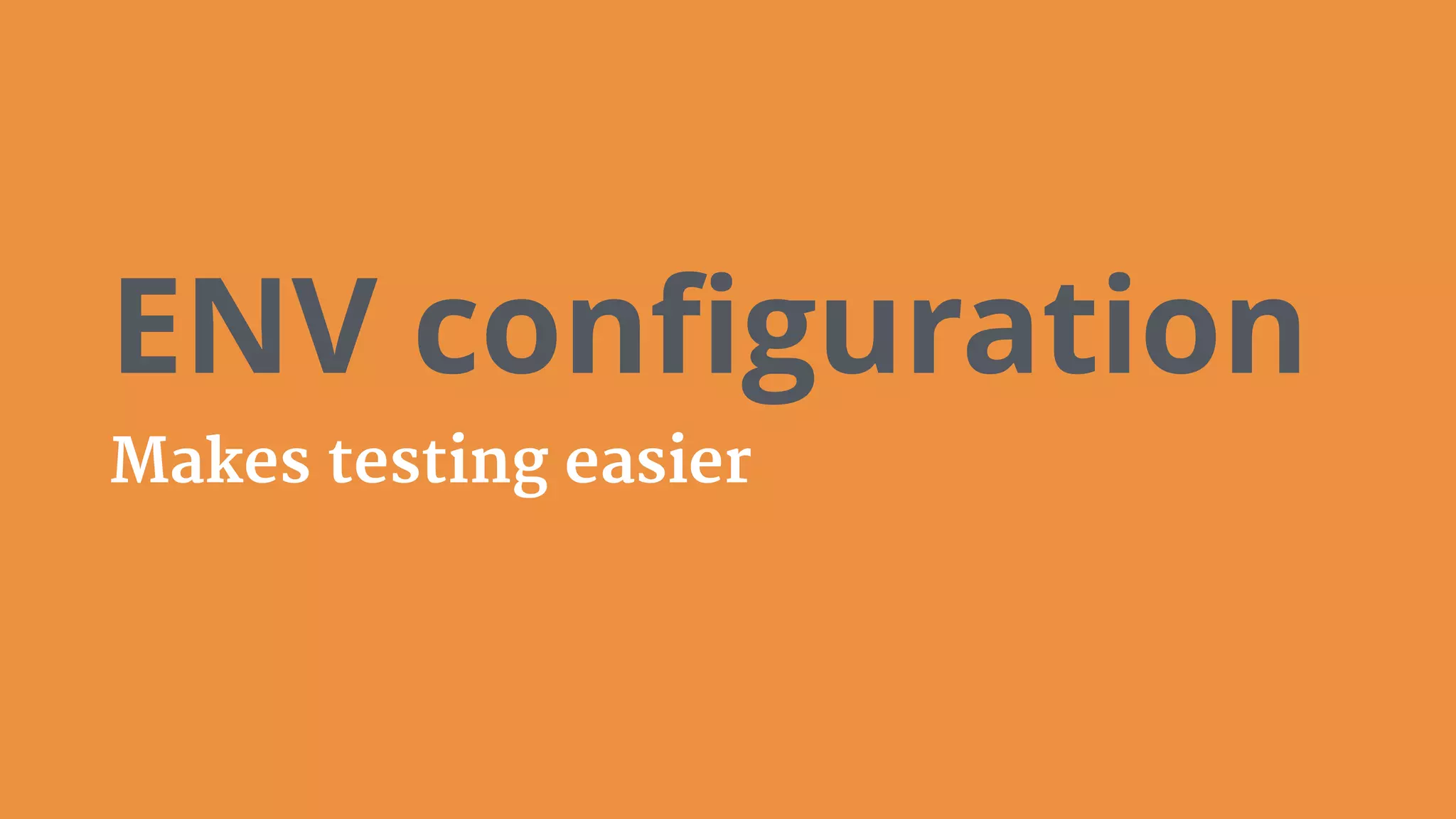

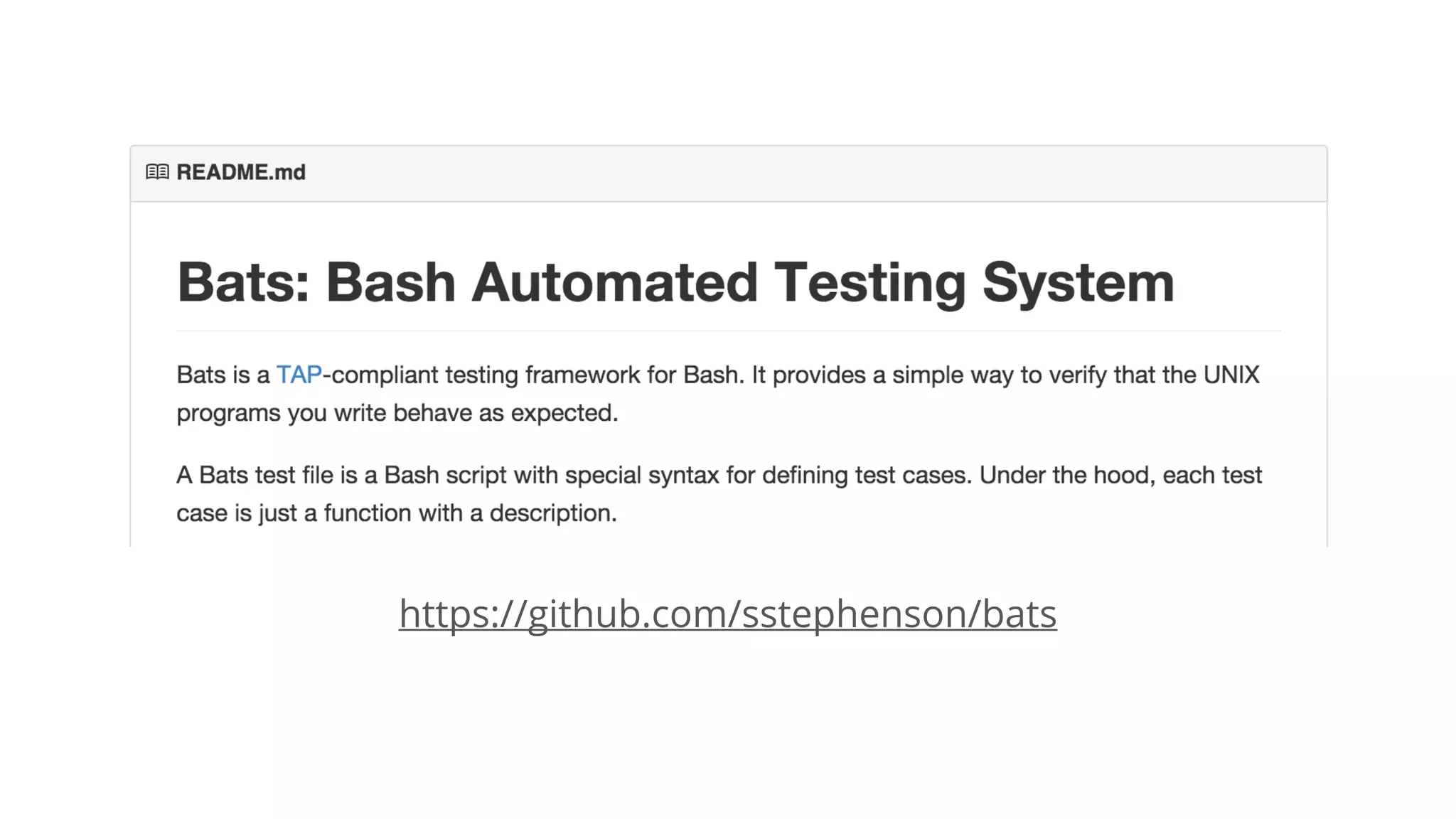

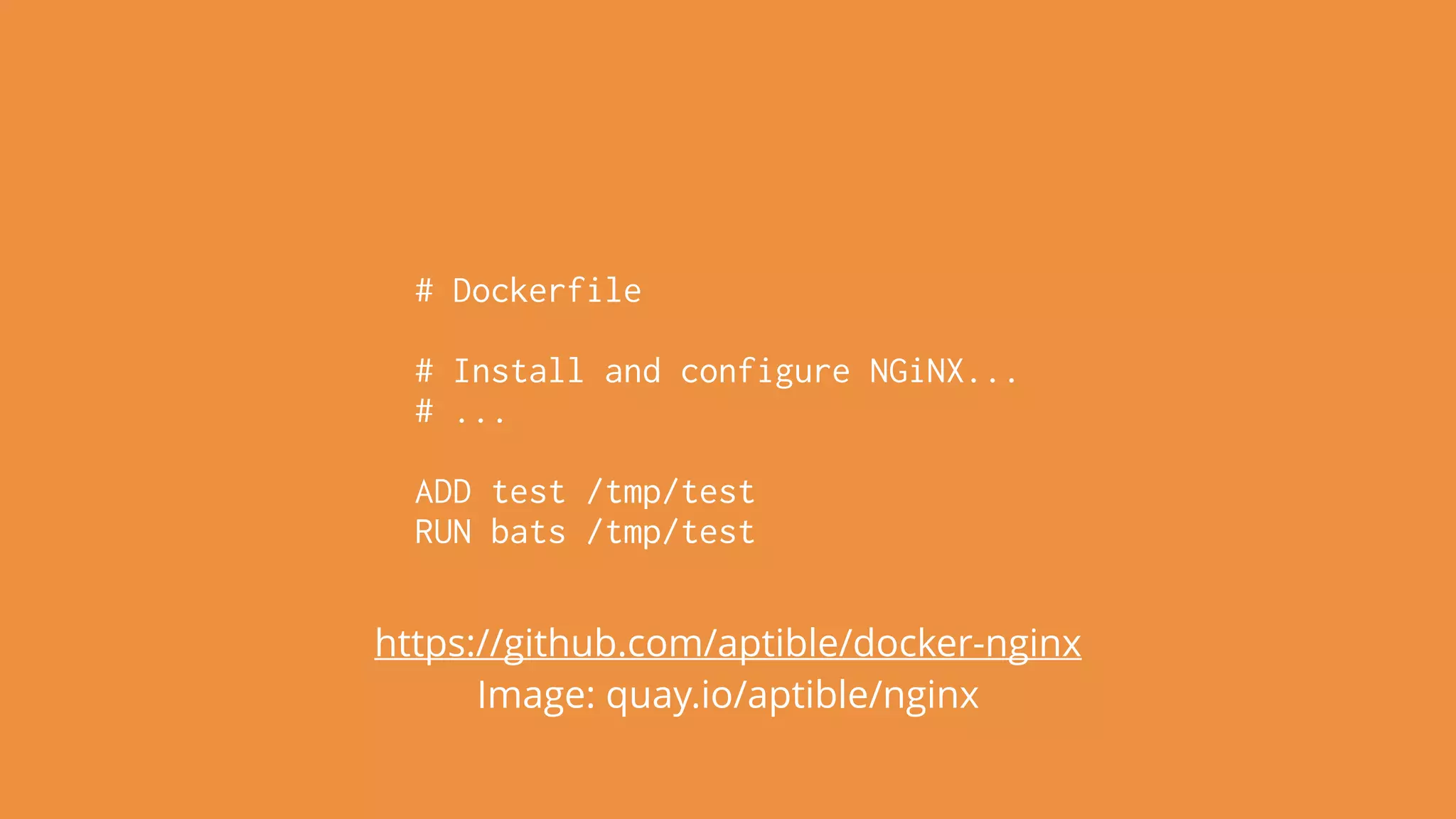

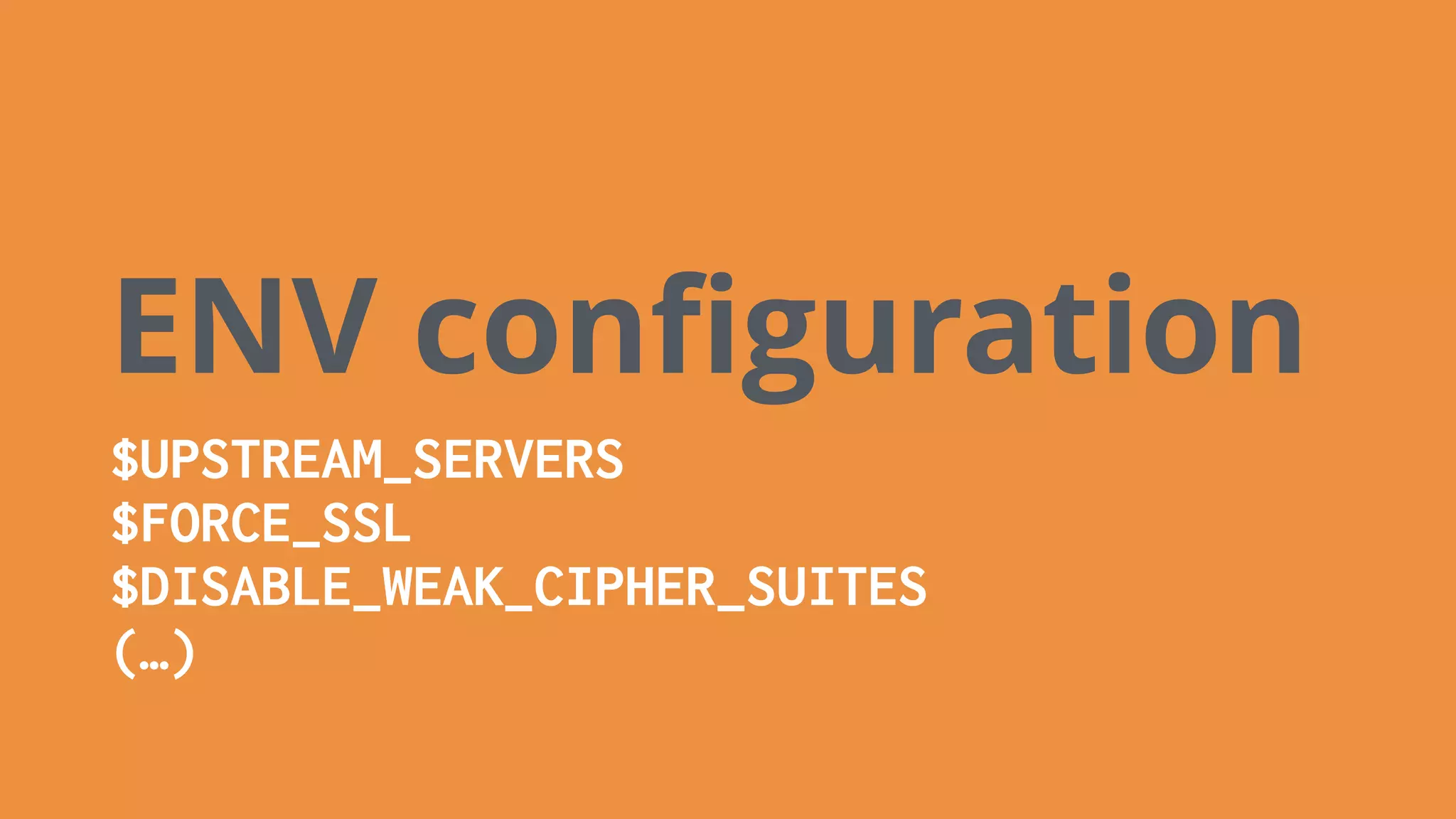

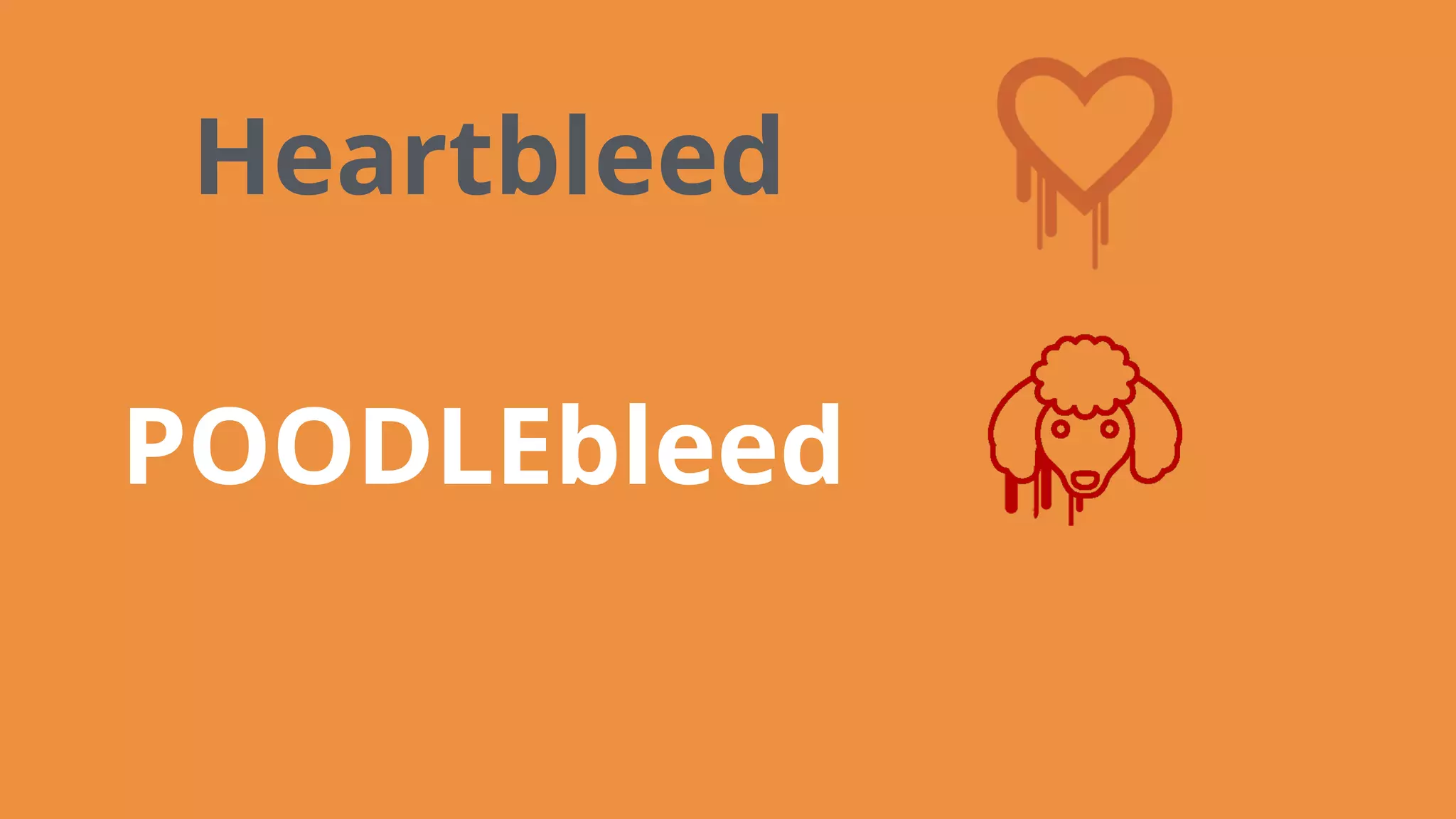

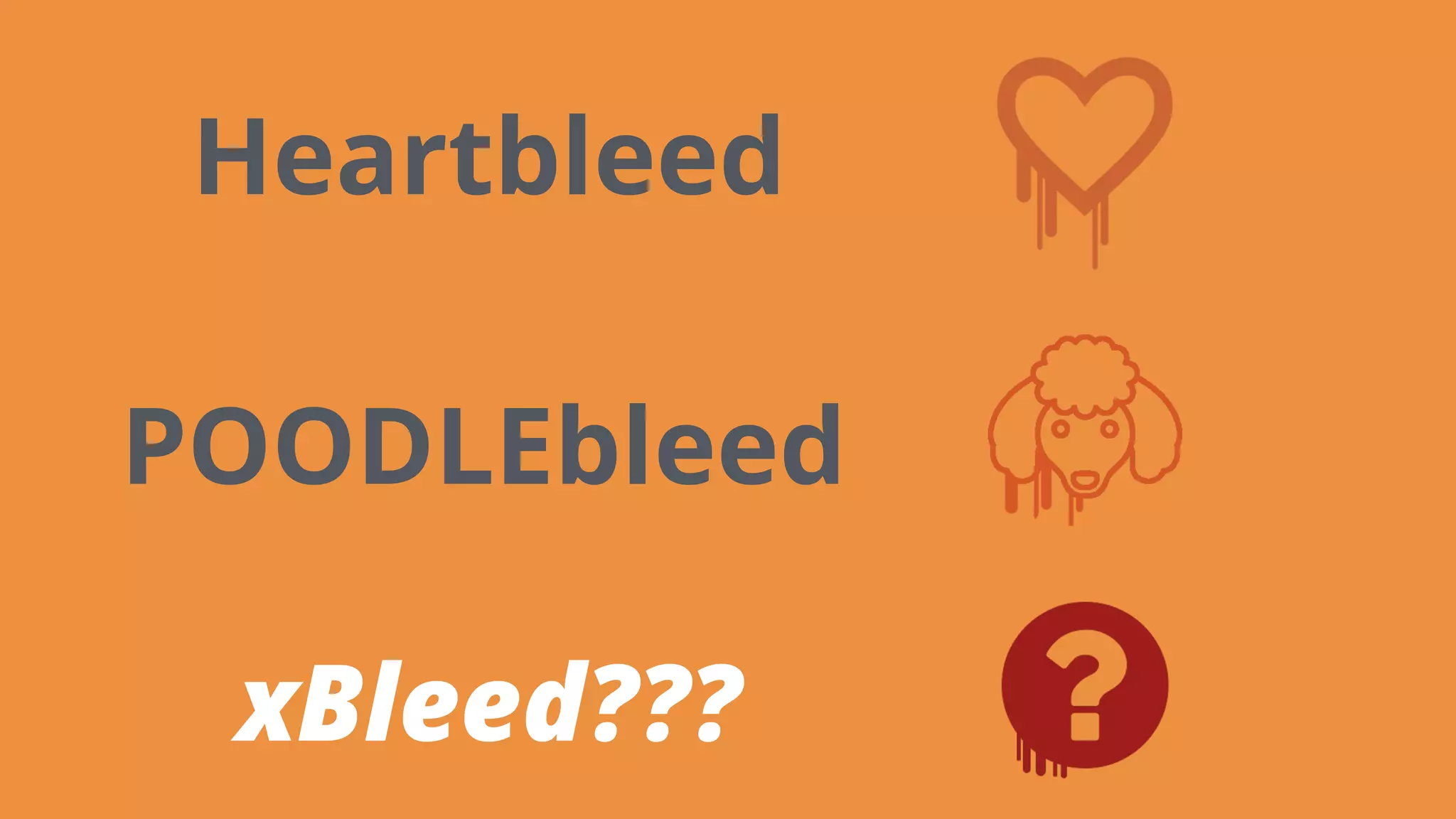

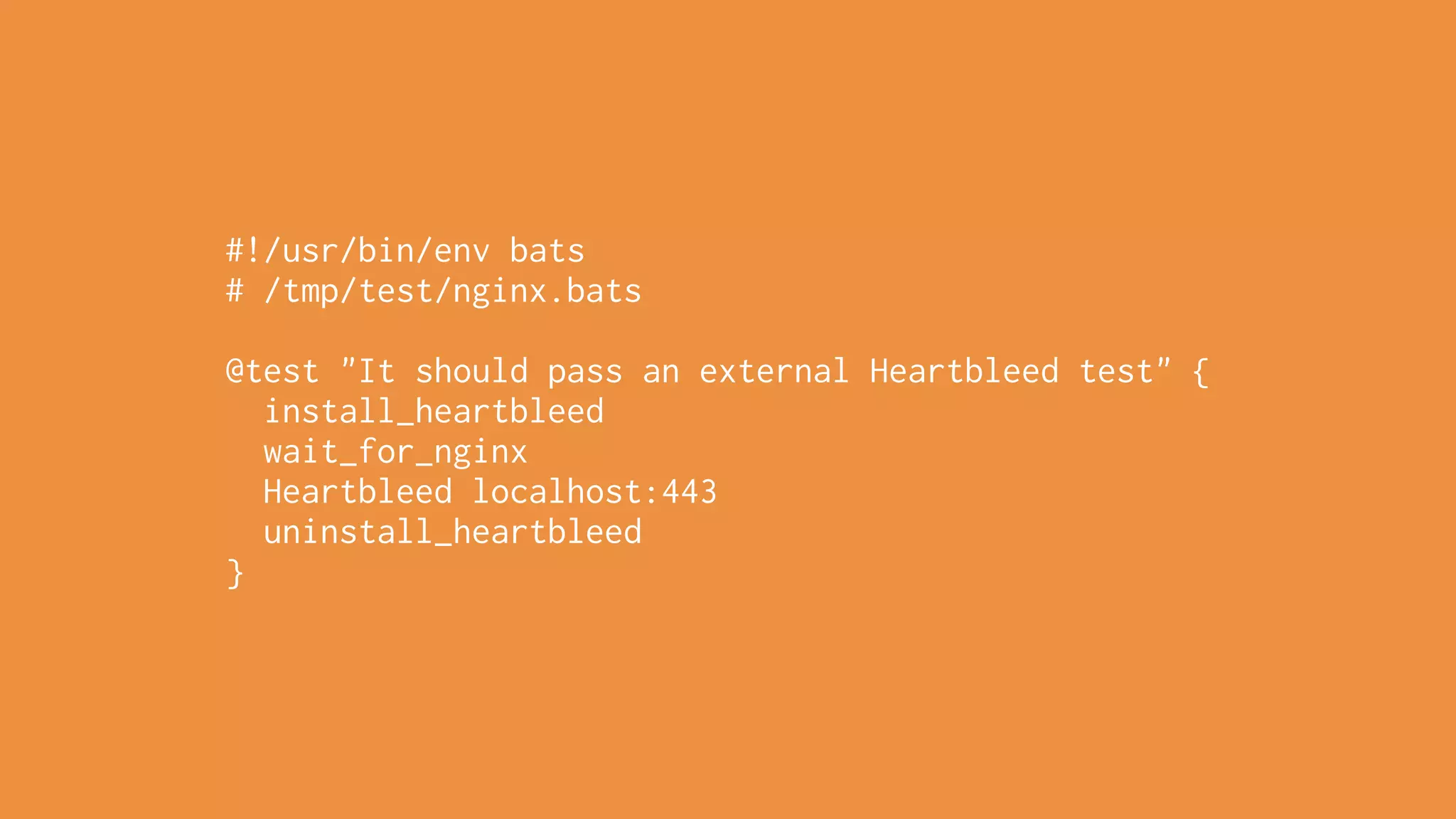

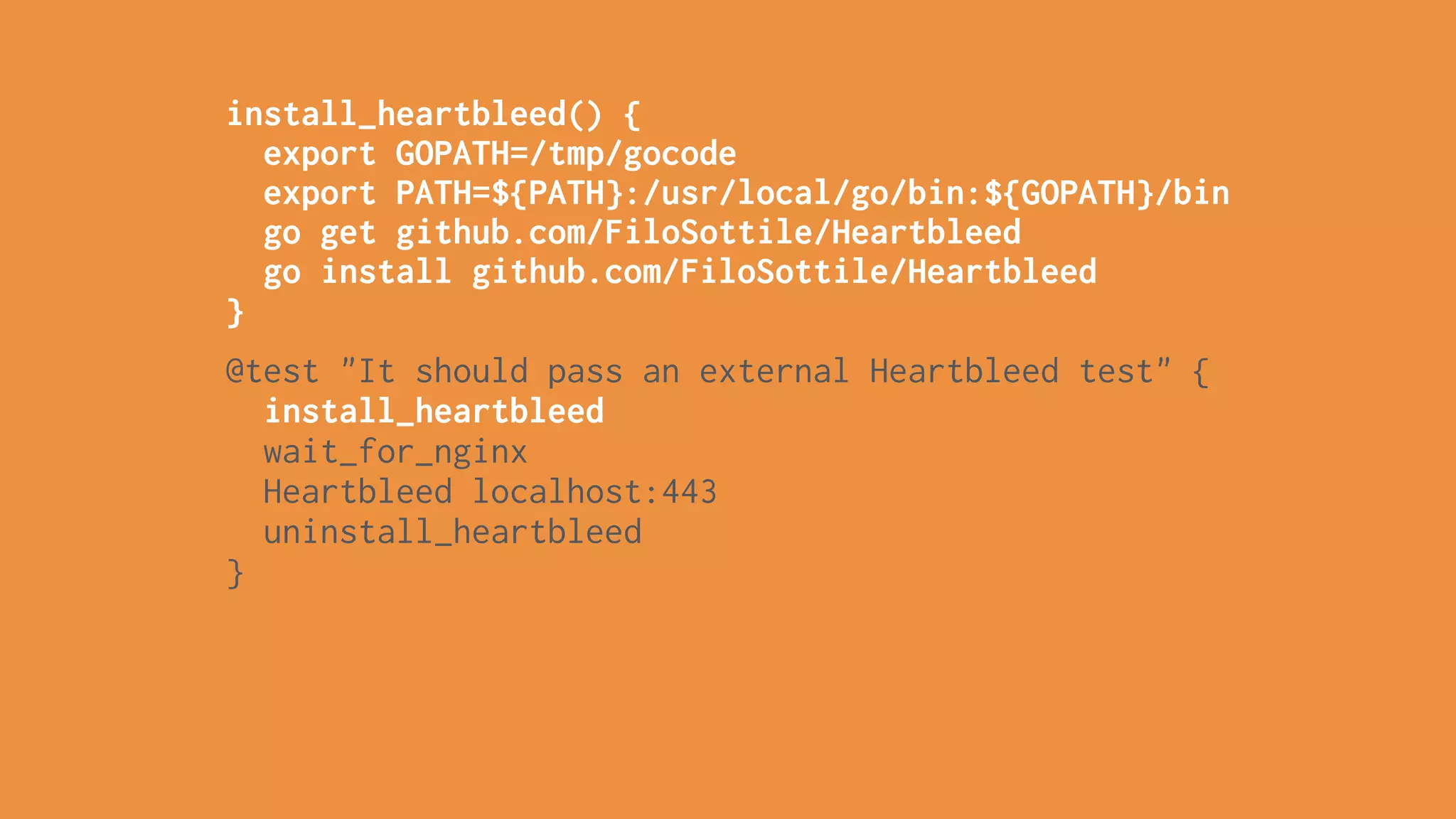

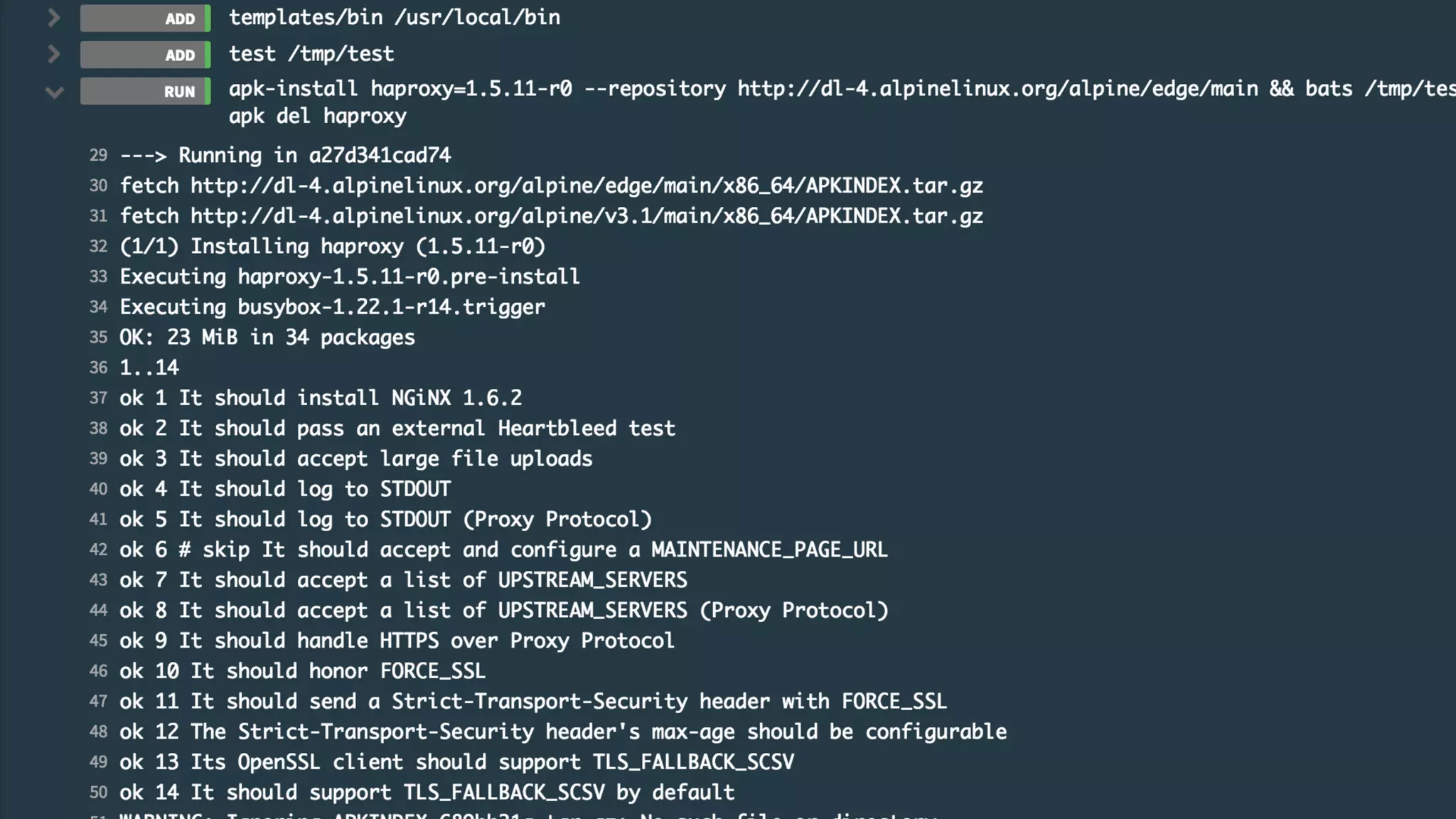

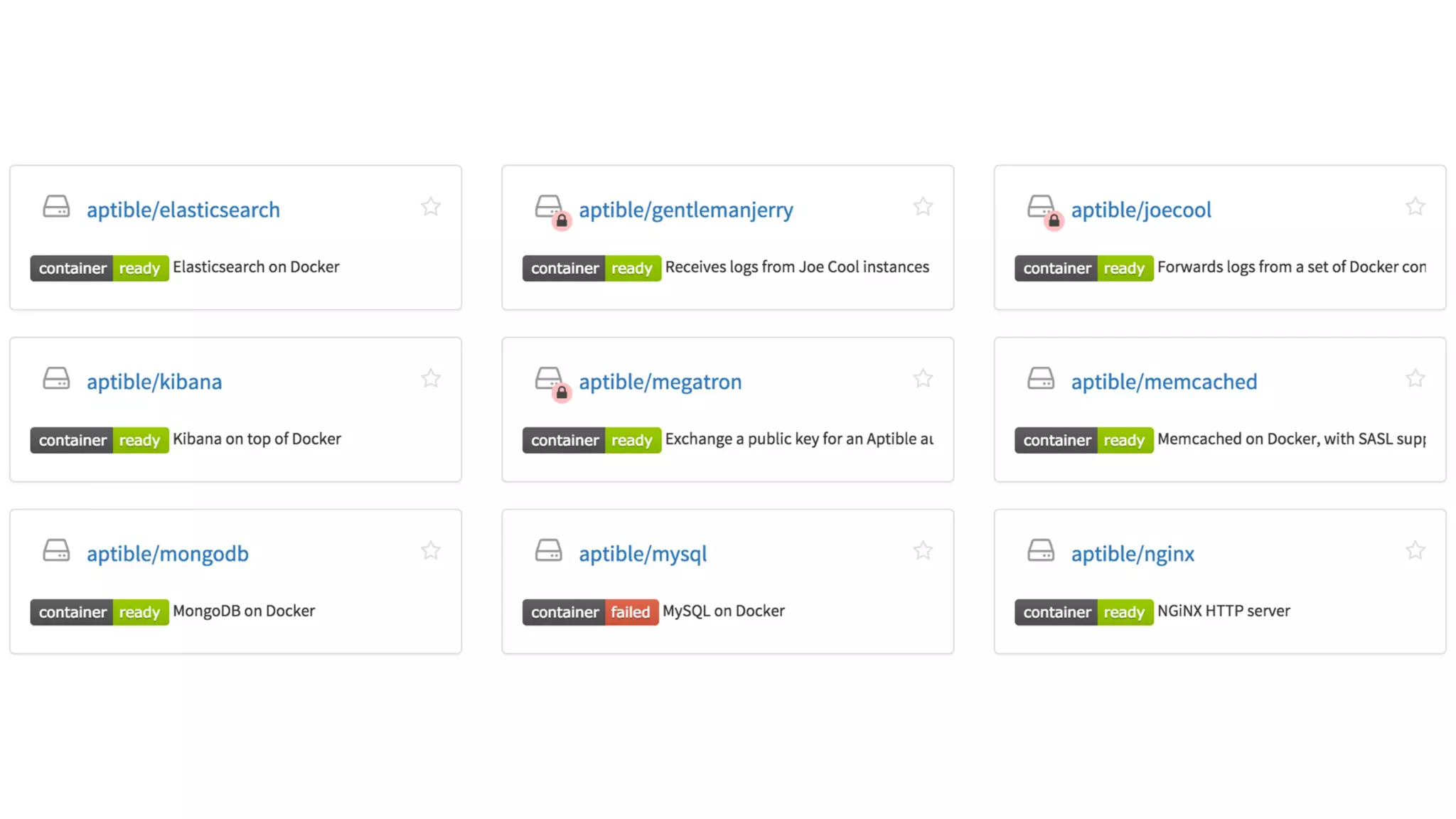

The document discusses the use of Docker for Ruby developers, highlighting its advantages over traditional virtual machines and emphasizing the importance of achieving development and production parity using Docker images and containers. It provides practical examples, including Docker commands for pulling images, running containers, and configuring services with Docker Compose, while also addressing deployment complexities in service-oriented architectures. Additionally, it touches on managing SSL infrastructure with Docker and integration testing during image builds to ensure security and reliability.
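
The summary mentions running integration tests as part of building an image. The following is a minimal sketch of that loop, not necessarily how the talk wires it up. It assumes the image ships a `bats` binary and keeps its tests under `/tmp/test`, the path in the `nginx.bats` header. The function name and the tag argument are hypothetical.

```bash
#!/bin/bash
# build_and_test (hypothetical): build an image, then run its bats suite in a
# throwaway container so that a failing test fails the whole build step.
build_and_test() {
  local tag="$1"           # image tag to build, e.g. "example/nginx:test"
  local context="${2:-.}"  # Docker build context (defaults to the current dir)

  docker build -t "$tag" "$context" || return 1

  # Assumption: bats is installed in the image and the tests live in /tmp/test.
  if ! docker run --rm "$tag" bats /tmp/test; then
    echo "integration tests failed for $tag" >&2
    return 1
  fi
  echo "integration tests passed for $tag"
}
```

A CI job could call `build_and_test example/nginx:test .` and only push the image when it returns 0.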

```bash
#!/usr/bin/env bats
# /tmp/test/nginx.bats

@test "It should accept a list of UPSTREAM_SERVERS" {
  # Start the fake upstream in the background: `nc -l` blocks until a client
  # connects. Closing fd 3 keeps bats from waiting on the background job.
  simulate_upstream 3>&- &
  # wait_for_nginx is a helper not shown here; the prefix assignment passes
  # the upstream list to it through the UPSTREAM_SERVERS environment variable.
  UPSTREAM_SERVERS=localhost:4000 wait_for_nginx
  run curl localhost 2>/dev/null
  [[ "$output" =~ "Hello World!" ]]
}

# Serve the canned response in upstream-response.txt on port 4000.
simulate_upstream() {
  nc -l -p 4000 127.0.0.1 < upstream-response.txt
}
```
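
The test hands the image a list of upstreams through `UPSTREAM_SERVERS` but does not show how nginx picks it up. One plausible approach, sketched here as an assumption rather than the image's actual code, is for the container's startup script to render the list into an nginx `upstream` block before launching nginx. The comma separator, the function name `render_upstream`, and the upstream name `app` are all hypothetical.

```bash
#!/bin/bash
# render_upstream (hypothetical): turn a comma-separated list such as
# "app1:4000,app2:4000" into an nginx upstream block on stdout.
render_upstream() {
  local servers="$1"
  local server
  local -a list

  if [ -z "$servers" ]; then
    echo "render_upstream: expected host:port[,host:port...]" >&2
    return 1
  fi

  # Split on commas only.
  IFS=',' read -r -a list <<< "$servers"

  echo "upstream app {"
  for server in "${list[@]}"; do
    server="${server// /}"     # drop stray spaces, e.g. "a:1, b:2"
    if [ -n "$server" ]; then  # skip empty entries such as "a:1,,b:2"
      echo "  server $server;"
    fi
  done
  echo "}"
}
```

The output could then be written somewhere nginx includes inside its `http` context (for example a file under `/etc/nginx/conf.d/`, an assumed path) and referenced from a `server` block with `proxy_pass http://app;`.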

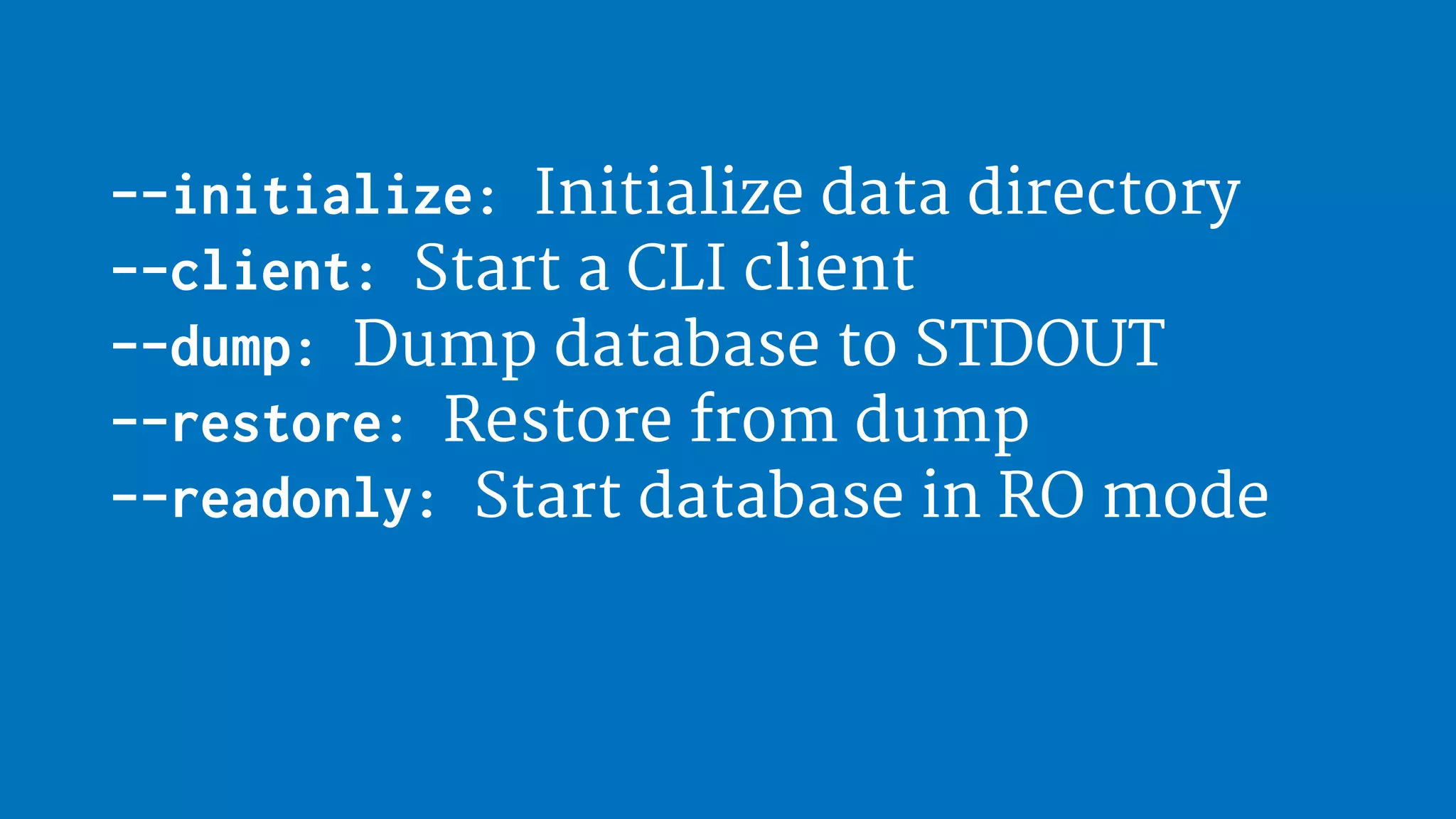

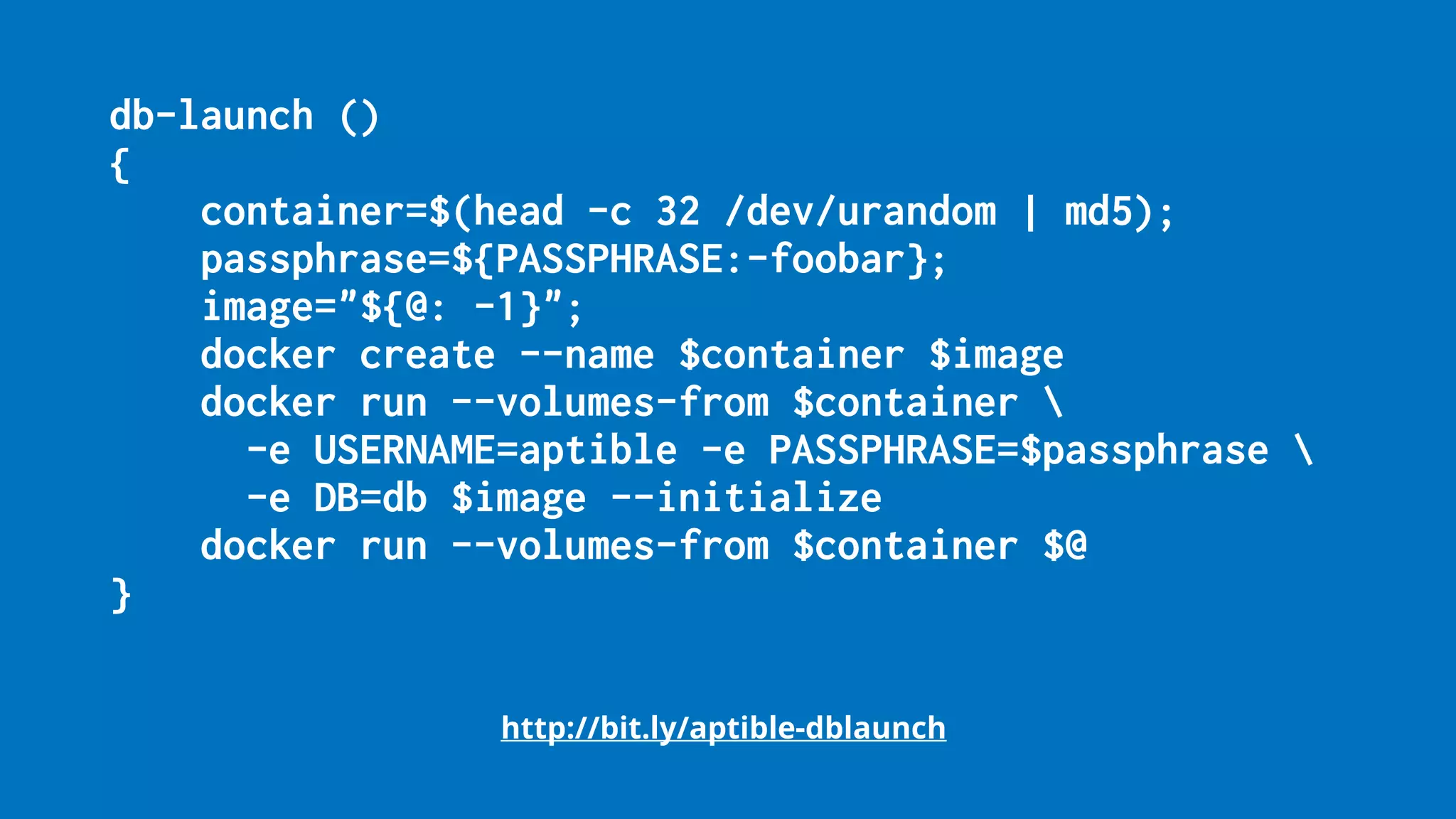

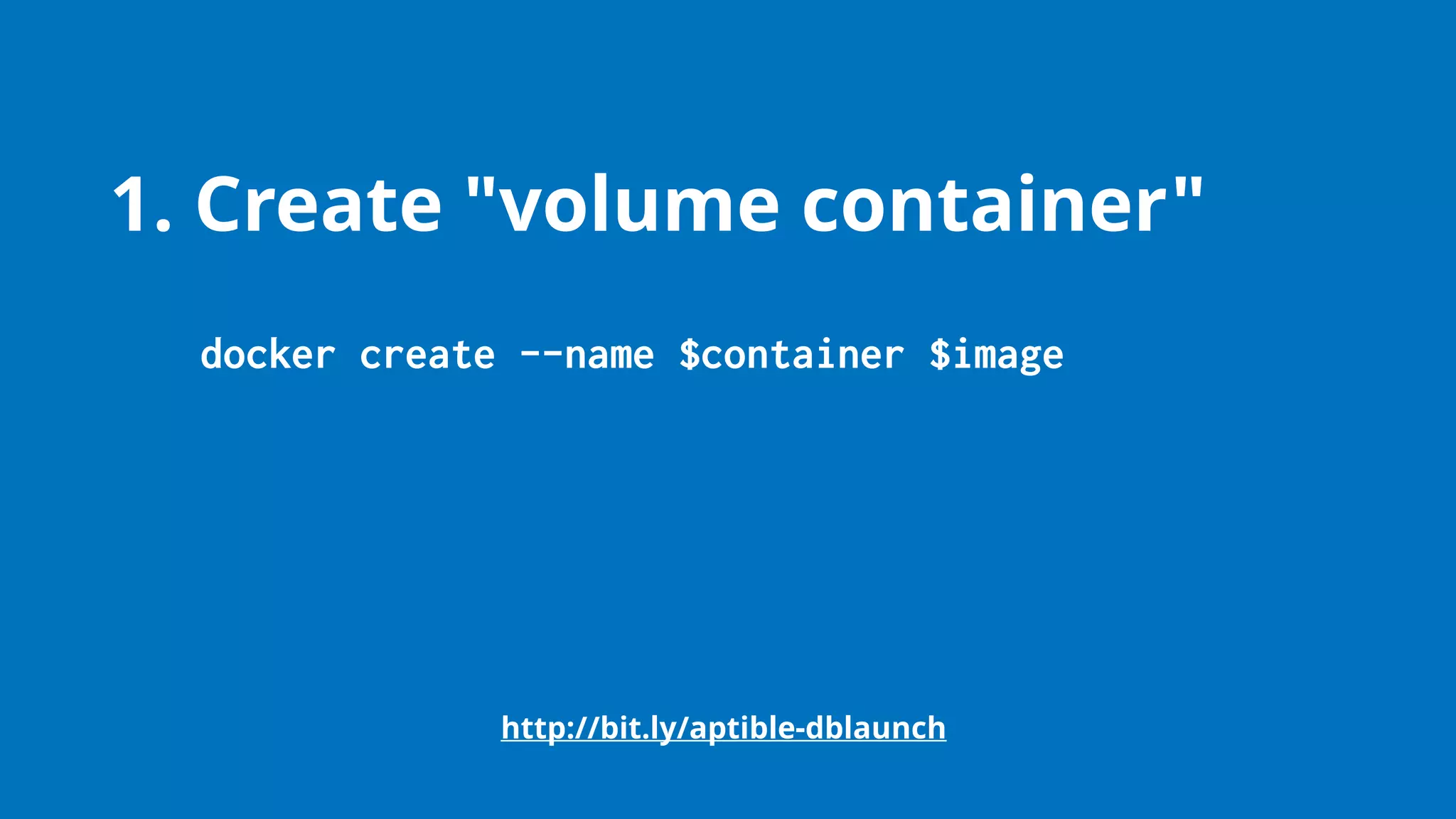

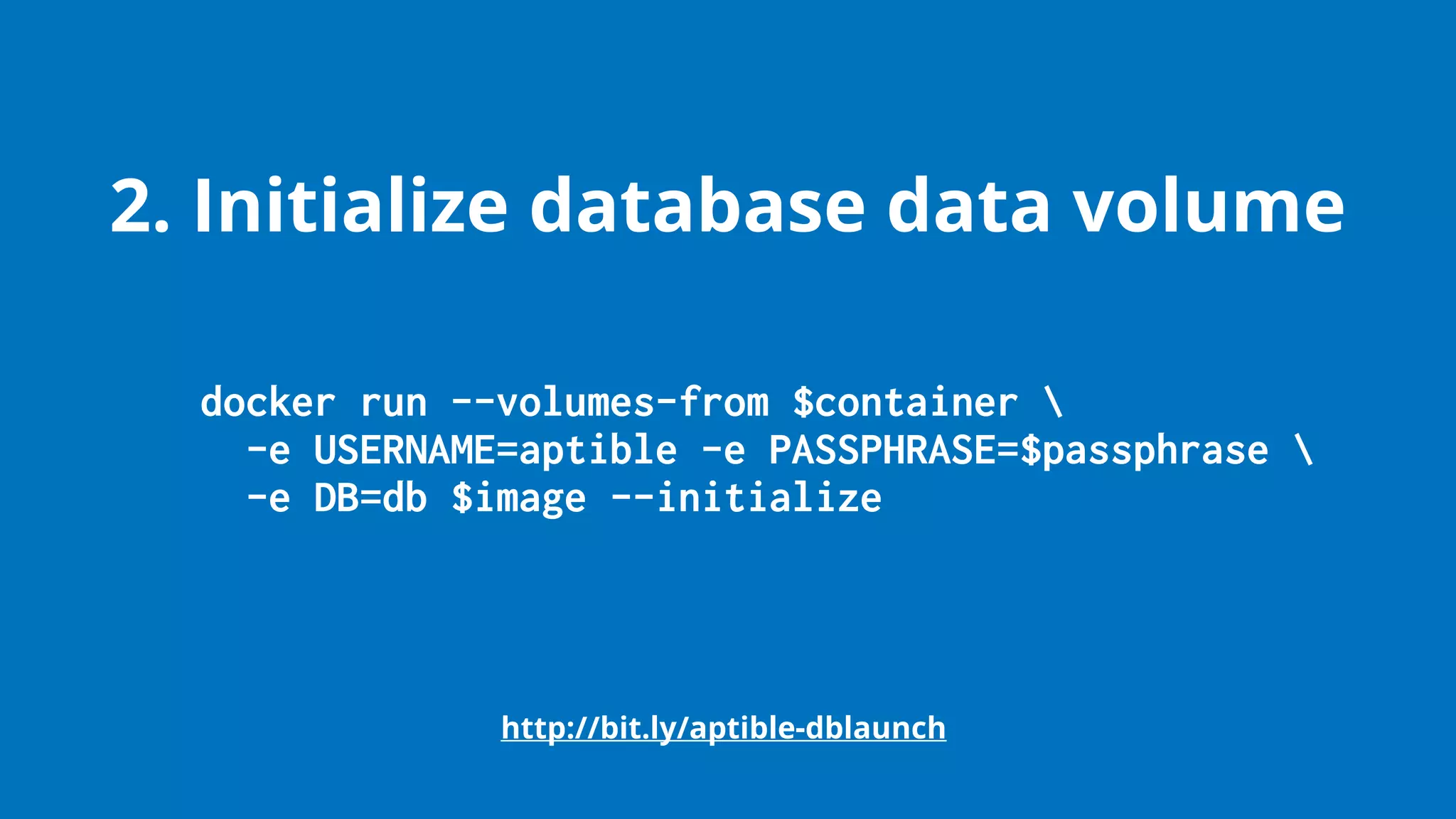

```bash
#!/bin/bash
# run-database.sh
# Wrapper for the aptible/postgresql image: the first argument selects a mode.

command="/usr/lib/postgresql/$PG_VERSION/bin/postgres -D "$DATA_DIRECTORY" -c config_file=/etc/postgresql/$PG_VERSION/main/postgresql.conf"

if [[ "$1" == "--initialize" ]]; then
  # Create the cluster, then a superuser and a database (defaults: aptible / db).
  chown -R postgres:postgres "$DATA_DIRECTORY"
  su postgres <<COMMANDS
/usr/lib/postgresql/$PG_VERSION/bin/initdb -D "$DATA_DIRECTORY"
/etc/init.d/postgresql start
psql --command "CREATE USER ${USERNAME:-aptible} WITH SUPERUSER PASSWORD '$PASSPHRASE'"
psql --command "CREATE DATABASE ${DATABASE:-db}"
/etc/init.d/postgresql stop
COMMANDS

elif [[ "$1" == "--client" ]]; then
  # Interactive psql session; prints usage and exits if no URL is given.
  [ -z "$2" ] && echo "docker run -it aptible/postgresql --client postgresql://..." && exit
  psql "$2"

elif [[ "$1" == "--dump" ]]; then
  # Write a dump of the database at the given URL to stdout.
  [ -z "$2" ] && echo "docker run aptible/postgresql --dump postgresql://... > dump.psql" && exit
  pg_dump "$2"

elif [[ "$1" == "--restore" ]]; then
  # Replay a dump read from stdin into the database at the given URL.
  [ -z "$2" ] && echo "docker run -i aptible/postgresql --restore postgresql://... < dump.psql" && exit
  psql "$2"

# ... (remaining branches not shown)
fi
```
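
The `--client`, `--dump` and `--restore` modes all take a `postgresql://` URL. A small helper could assemble that URL from the same variables `--initialize` uses, with the same defaults. This is a sketch under assumptions: the function name and its host and port arguments are hypothetical, and 5432 is simply PostgreSQL's default port.

```bash
#!/bin/bash
# postgres_url (hypothetical): build the postgresql:// URL expected by the
# --client, --dump and --restore modes, reusing the --initialize defaults.
postgres_url() {
  local host="${1:-localhost}"  # database host (assumption)
  local port="${2:-5432}"       # PostgreSQL's default port
  # PASSPHRASE is inserted verbatim: characters such as @, : or / would
  # need percent-encoding first.
  printf 'postgresql://%s:%s@%s:%s/%s\n' \
    "${USERNAME:-aptible}" "$PASSPHRASE" "$host" "$port" "${DATABASE:-db}"
}
```

For example, `docker run -it aptible/postgresql --client "$(postgres_url db.example.com)"`. Note that `localhost` inside a container refers to the container itself, so a real host name is usually needed.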
