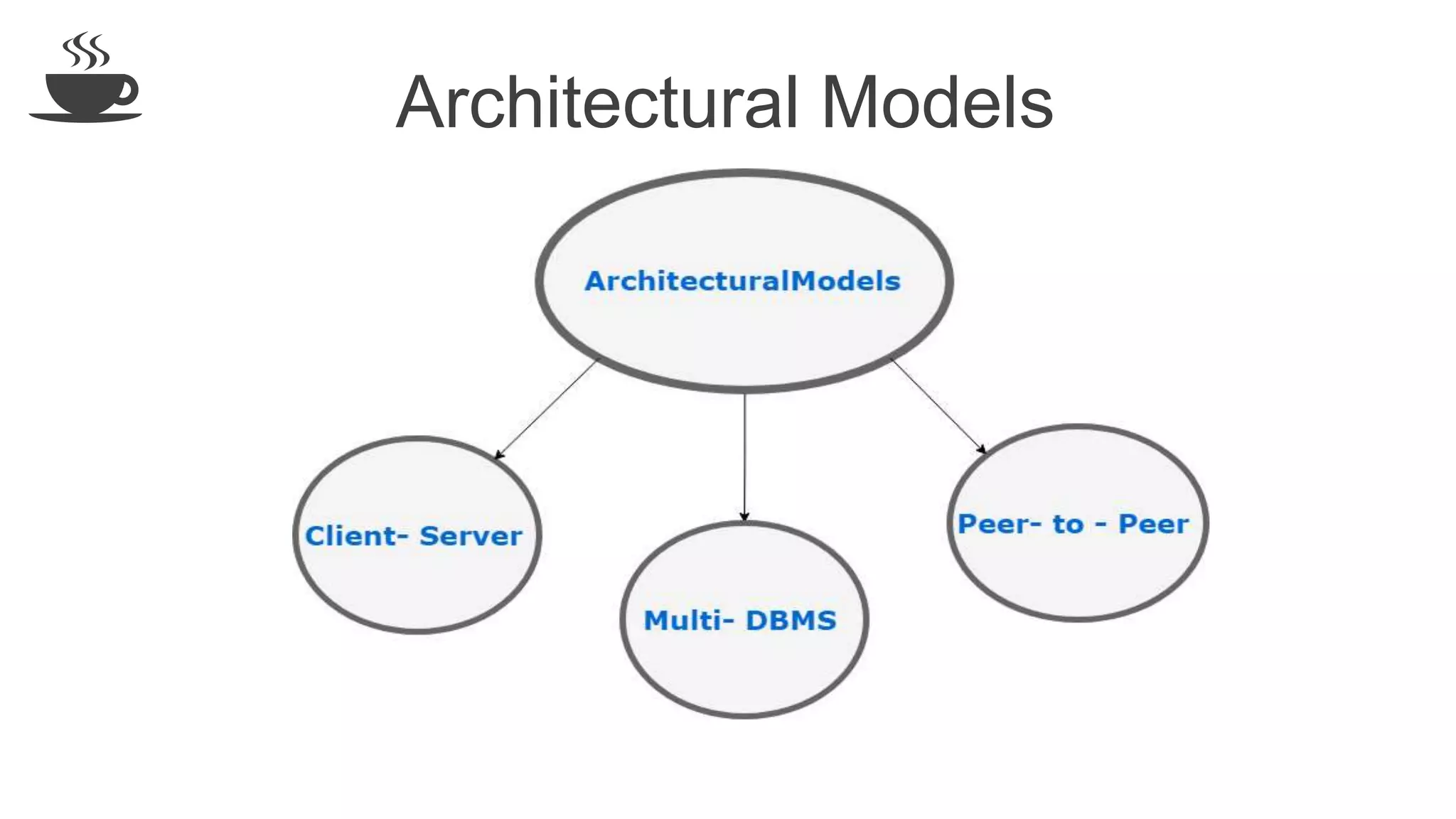

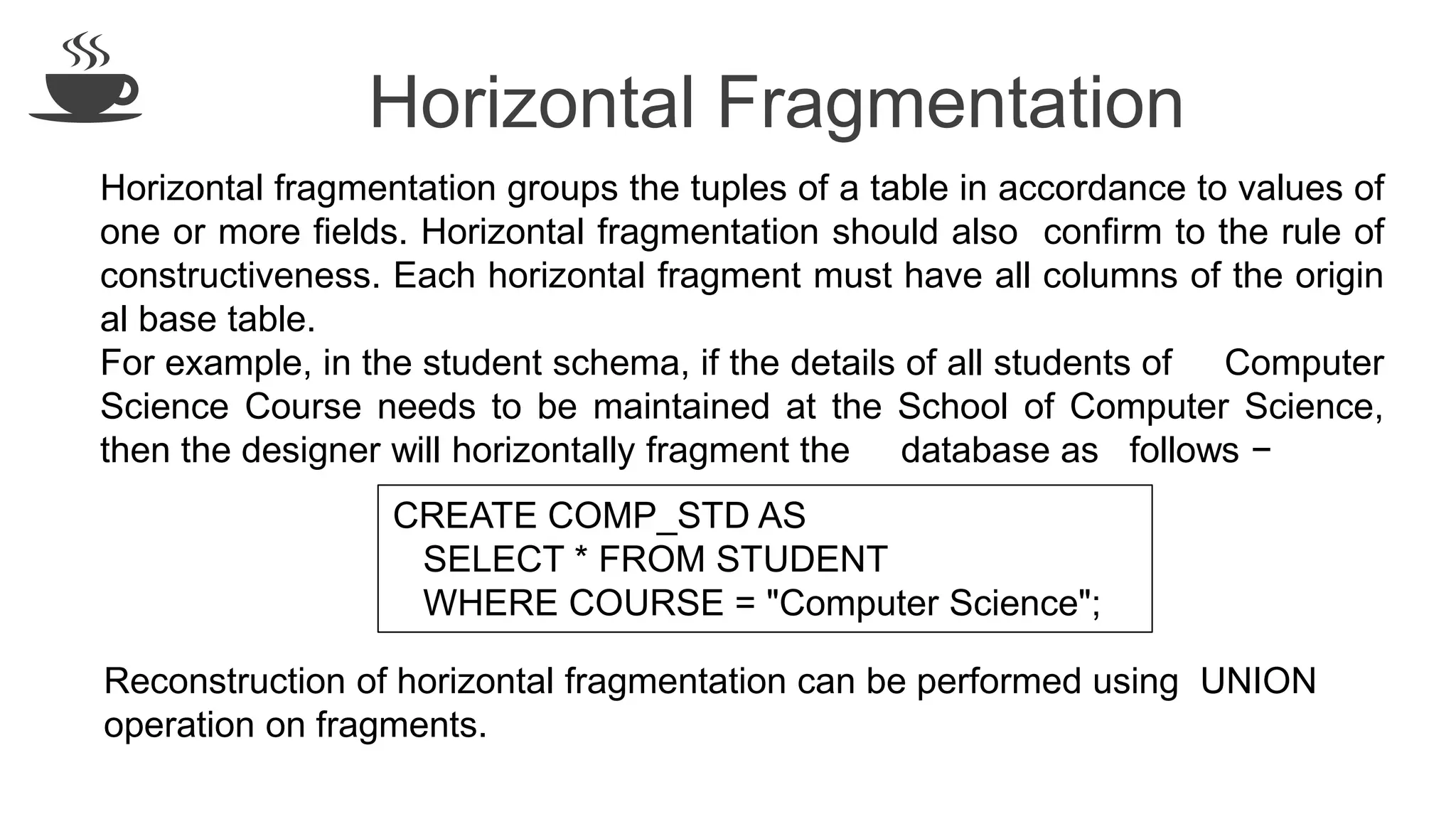

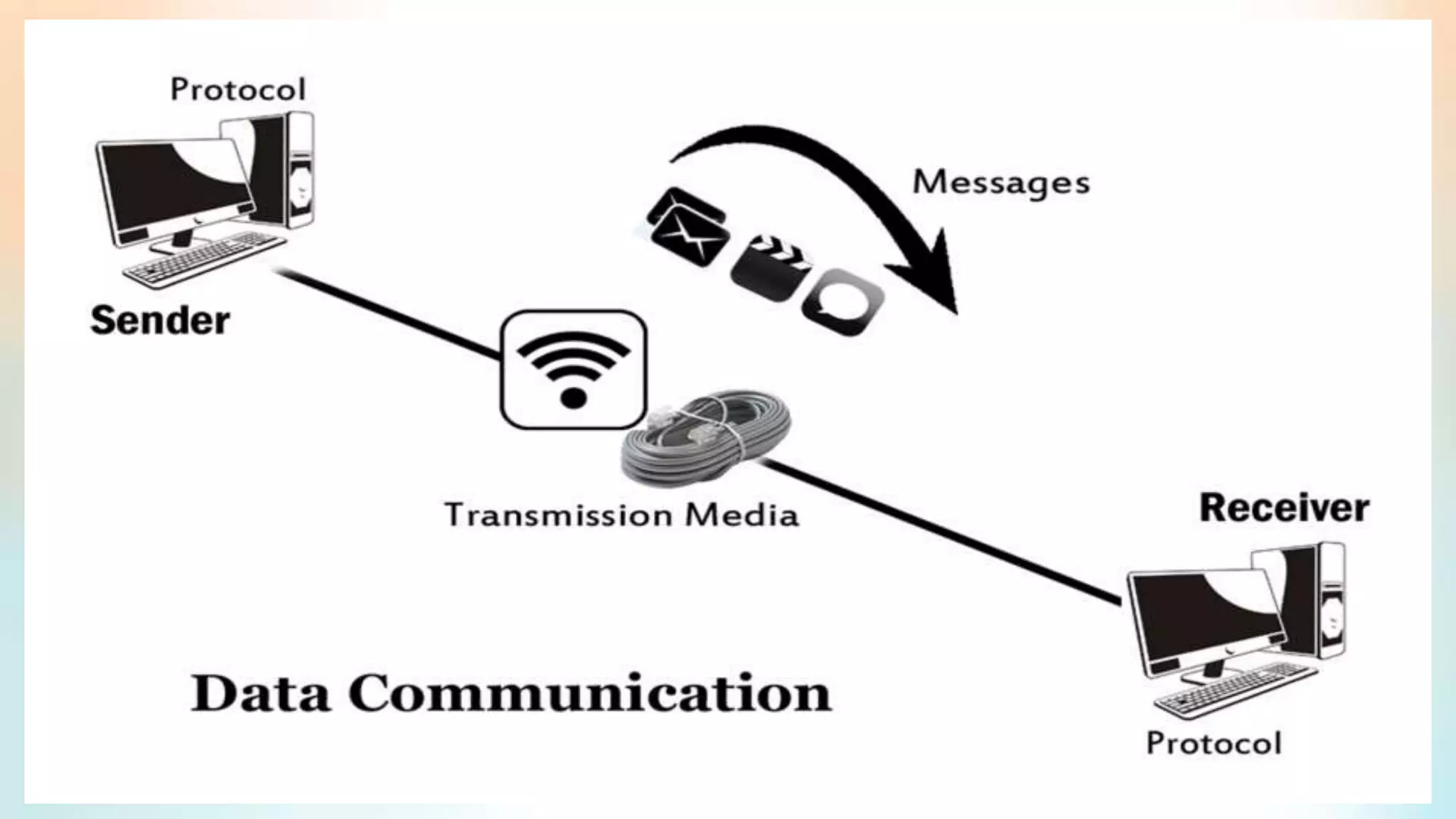

The document discusses distributed database design, covering architecture, fragmentation methods, and concurrency control mechanisms. It compares design alternatives for data distribution (non-replicated, fully replicated, partially replicated, and fragmented) and weighs the advantages and disadvantages of each. It also explains core concepts in data communication and concurrency control, emphasizing that distributed systems are more complex to manage than centralized databases.
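
To make the fragmented design alternative concrete, here is a minimal Python sketch of two common fragmentation methods, horizontal (splitting by rows) and vertical (splitting by columns). The summary does not name these methods, so treat the choice as an assumption. The `employees` relation, its column names, and the helper functions are hypothetical and not taken from the document.

```python
# Hypothetical EMPLOYEE relation; rows and column names are invented for illustration.
employees = [
    {"id": 1, "name": "Ana", "city": "Lisbon", "salary": 5200},
    {"id": 2, "name": "Raj", "city": "Pune", "salary": 4800},
    {"id": 3, "name": "Mei", "city": "Lisbon", "salary": 6100},
]


def horizontal_fragment(rows, predicate):
    """Keep whole rows that satisfy the predicate (a subset of tuples)."""
    return [dict(r) for r in rows if predicate(r)]


def vertical_fragment(rows, columns, key="id"):
    """Keep a subset of columns, always including the key so rows can be rejoined."""
    keep = [key] + [c for c in columns if c != key]
    return [{c: r[c] for c in keep} for r in rows]


def rejoin_vertical(left, right, key="id"):
    """Reconstruct full rows by joining two vertical fragments on the shared key."""
    right_by_key = {r[key]: r for r in right}
    return [{**l, **right_by_key[l[key]]} for l in left]


# Horizontal fragments: each site could store the rows for its own city.
lisbon = horizontal_fragment(employees, lambda r: r["city"] == "Lisbon")
others = horizontal_fragment(employees, lambda r: r["city"] != "Lisbon")

# Vertical fragments: payroll data kept apart from general employee data.
public = vertical_fragment(employees, ["name", "city"])
payroll = vertical_fragment(employees, ["salary"])

# The original relation is recoverable: union for horizontal, key join for vertical.
restored_h = sorted(lisbon + others, key=lambda r: r["id"])
restored_v = rejoin_vertical(public, payroll)
```

In this sketch the horizontal fragments are disjoint and their union restores the relation, while the vertical fragments share only the key and are restored by a join. A real distributed DBMS would also have to track which site holds which fragment.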