Deep Learning On Edge Computing Devices Design Challenges Of Algorithm And Architecture 1st Edition Xichuan Zhou

CHAPTER 1
Introduction

Deep Learning on Edge Computing Devices. https://doi.org/10.1016/B978-0-32-385783-3.00008-9
Copyright © 2022 Tsinghua University Press. Published by Elsevier Inc. All rights reserved.

1.1 Background

At present, human society is rapidly entering the era of the Internet of Everything. Applications of the Internet of Things based on smart embedded devices are growing explosively. The report “The mobile economy 2020”, released by the GSM Association (GSMA), shows that the total number of connected devices in the global Internet of Things reached 12 billion in 2019 [1]. It is estimated that by 2025 the total number of connected devices in the global Internet of Things will reach 24.6 billion. Applications such as smart terminals, smart voice assistants, and smart driving will dramatically improve the organizational efficiency of human society and change people’s lives. With the rapid development of artificial intelligence technology toward pervasive intelligence, smart terminal devices will penetrate human society even further.

Looking back at the development of artificial intelligence, a key moment came in 1936, when British mathematician Alan Turing proposed an ideal computer model, the general Turing machine, which provided a theoretical basis for the ENIAC (Electronic Numerical Integrator And Computer) born ten years later. During the same period, inspired by the behavior of the human brain, American scientist John von Neumann wrote the monograph “The Computer and the Brain” [2] and proposed an improved stored-program computer for ENIAC, i.e., the von Neumann architecture, which became a prototype for computers and even artificial intelligence systems. The earliest description of artificial intelligence can be traced back to the Turing test [3] in 1950. Turing pointed out that “if a machine talks with a person through a specific device without communication with the outside, and the person cannot reliably tell that the talk object is a machine or a person, this machine has humanoid intelligence”.

The term “artificial intelligence” first appeared at the Dartmouth symposium organized by John McCarthy in 1956 [4]. McCarthy, often called the “father of artificial intelligence”, defined it as “the science and engineering of manufacturing smart machines”. The proposal of artificial intelligence opened up a new field. Since then, academia has successively presented research results on artificial intelligence. After several historical cycles of development, artificial intelligence has now entered a new era of machine learning.

Figure 1.1 Relationship diagram of deep learning related research fields.

As shown in Fig. 1.1, machine learning is a subfield of theoretical research on artificial intelligence that has developed rapidly in recent years. Arthur Samuel proposed the concept of machine learning in 1959 and conceived of a theoretical method “to allow the computer to learn and work autonomously without relying on certain coded instructions” [5]. A representative method in the field of machine learning is the support vector machine (SVM) [6], proposed by Russian statistician Vladimir Vapnik in 1995. As a data-driven method, the statistics-based SVM has solid theoretical support and excellent model generalization ability, and it is widely used in scenarios such as face recognition.

The artificial neural network (ANN) is one of the methods used to realize machine learning. It uses the structural and functional features of the biological neural network to build mathematical models for estimating or approximating functions. ANNs are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. The concept of the artificial neural network can be traced back to the neuron model (MP model) [7] proposed by Warren McCulloch and Walter Pitts in 1943. In this model the multidimensional input data are multiplied by the corresponding weight parameters and accumulated, and the accumulated value is passed through a specific threshold function to output the prediction result. Later, in 1958, Frank Rosenblatt built a perceptron system [8] with two layers of neurons, but the perceptron model and its subsequent improvements had limitations in solving high-dimensional nonlinear problems. It was not until 1986 that Geoffrey Hinton, a professor in the Department of Computer Science at the University of Toronto, together with David Rumelhart and Ronald Williams, presented the back propagation algorithm [9] for parameter estimation of artificial neural networks and realized the training of multilayer neural networks.

As a branch of neural network technology, deep learning has been a great success in recent years. The algorithmic milestone appeared in 2006, when Hinton and colleagues proposed a fast learning algorithm for deep belief networks [10], which eased the problem of vanishing gradients in training multilayer neural networks. Since then, the artificial neural network has officially entered the “deep” era. In 2012, the convolutional neural network [11], pioneered by Professor Yann LeCun of New York University, and its variants greatly improved the classification accuracy of machine learning methods on large-scale image databases. In the following years they reached and then surpassed human-level image recognition, which laid the technical foundation for the large-scale industrial application of deep learning. At present, deep learning is still developing rapidly and has achieved great success in subfields such as machine vision [12] and voice processing [13]. In 2016 in particular, AlphaGo, the artificial intelligence built on deep learning by Demis Hassabis’s team, defeated Lee Sedol, an international Go champion, by 4:1, which marked the entry of artificial intelligence into a new era of rapid development.

1.2 Applications and trends

Internet of Things technology is considered to be one of the important forces leading the next wave of industrial change. The concept of the Internet of Things was first proposed by Kevin Ashton of MIT in 1999. In a 2009 article he pointed out that “the computer can observe and understand the world by RF transmission and sensor technology, i.e., empower computers with their own means of gathering information” [14]. Once the massive data collected by various sensors are connected to the network, the connection between human beings and everything is enhanced, thereby expanding the boundaries of the Internet and greatly increasing industrial production efficiency. In the new “wave of industrial technological change”, smart terminal devices will undoubtedly play an important role. As a carrier for Internet of Things connections, the smart perception terminal device not only collects data but also has front-end, local data processing capabilities, which can protect data privacy and extract and analyze perceived semantic information.

With the proposal of smart terminal technology, the fields of Artificial Intelligence (AI) and the Internet of Things (IoT) have gradually merged into the artificial intelligence Internet of Things (AI&IoT or AIoT). On one hand, the application scale of artificial intelligence has gradually expanded and penetrated into more fields by relying on the Internet of Things; on the other hand, Internet of Things devices require embedded smart algorithms to extract valuable information during front-end collection of sensor data. The concept of the AIoT was proposed by the industrial community around 2018 [15], with the aim of realizing the digitization and intelligence of all things based on edge computing at the Internet of Things terminal. AIoT-oriented smart terminal applications have entered a period of rapid development. According to a third-party report from iResearch, the total amount of AIoT financing in the Chinese market from 2015 to 2019 was approximately $29 billion, with an increase of 73%.

The first characteristic of AIoT smart terminal applications is high data volume, because the edge has a large number of devices and a large amount of data. Gartner’s report shows that there were approximately 340,000 autonomous vehicles in the world in 2019, and it is expected that in 2023 there will be more than 740,000 autonomous vehicles with data collection capabilities running in various application scenarios. Taking Tesla as an example, with eight external cameras and one powerful system on chip (SoC) [16], its autonomous vehicles can support end-to-end machine vision image processing to perceive road conditions, surrounding vehicles, and the environment. It is reported that a front camera with a resolution of 1280 × 960 in the Tesla Model 3 can generate about 473 GB of image data in one minute. According to the statistics, Tesla has so far collected more than 1 million videos and labeled the distance, acceleration, and speed of 6 billion objects in them. The data amount is as high as 1.5 PB, which provides a good data basis for improving the performance of the autonomous driving artificial intelligence model.

The second characteristic of AIoT smart terminal applications is high latency sensitivity. For example, the vehicle-mounted advanced driver assistance system (ADAS) of an autonomous vehicle has strict requirements on response time from image acquisition and processing to decision making. The average response time of the Tesla autopilot emergency brake system is 0.3 s (300 ms), whereas even a skilled driver needs approximately 0.5 s to 1.5 s. With data-driven machine learning algorithms, the vehicle-mounted system HW3 proposed by Tesla in 2019 processes 2300 frames per second (fps), which is about 21 times the 110 fps image processing capacity of HW2.5.

The third characteristic of AIoT smart terminal applications is high energy efficiency. Because wearable smart devices and smart speakers in embedded artificial intelligence applications [17] are mainly battery-driven, power consumption and endurance are particularly critical. Most smart speakers use a voice wake-up mechanism, which switches the device from the standby state to the working state when it recognizes a spoken keyword. Based on an embedded voice recognition artificial intelligence chip with high power efficiency, a novel smart speaker can achieve wake-on-voice at a standby power consumption of 0.05 W. In typical offline human–machine voice interaction scenarios, the power consumption of the chip can also be kept within 0.7 W, which allows battery-driven systems to work for a long time. For example, Amazon smart speakers can achieve 8 hours of battery endurance in the always-listening mode, and optimized smart speakers can achieve up to 3 months of endurance.

From the perspective of future development trends, the goal of the artificial intelligence Internet of Things is to achieve ubiquitous pervasive intelligence [18]. Pervasive intelligence technology aims to solve the core technical challenges of embedded smart devices, namely high data volume, high latency sensitivity, and high power efficiency, and finally to realize the digitization and intelligence of all things [19]. The basis of this development is to understand the legal and ethical relationship between the efficiency improvement brought by artificial intelligence technology and the protection of personal privacy, so as to improve the efficiency of social production and the convenience of people’s lives while guaranteeing personal privacy. We believe that pervasive intelligence computing for the artificial intelligence Internet of Things will become a key technology in promoting a new wave of industrial technological revolution.

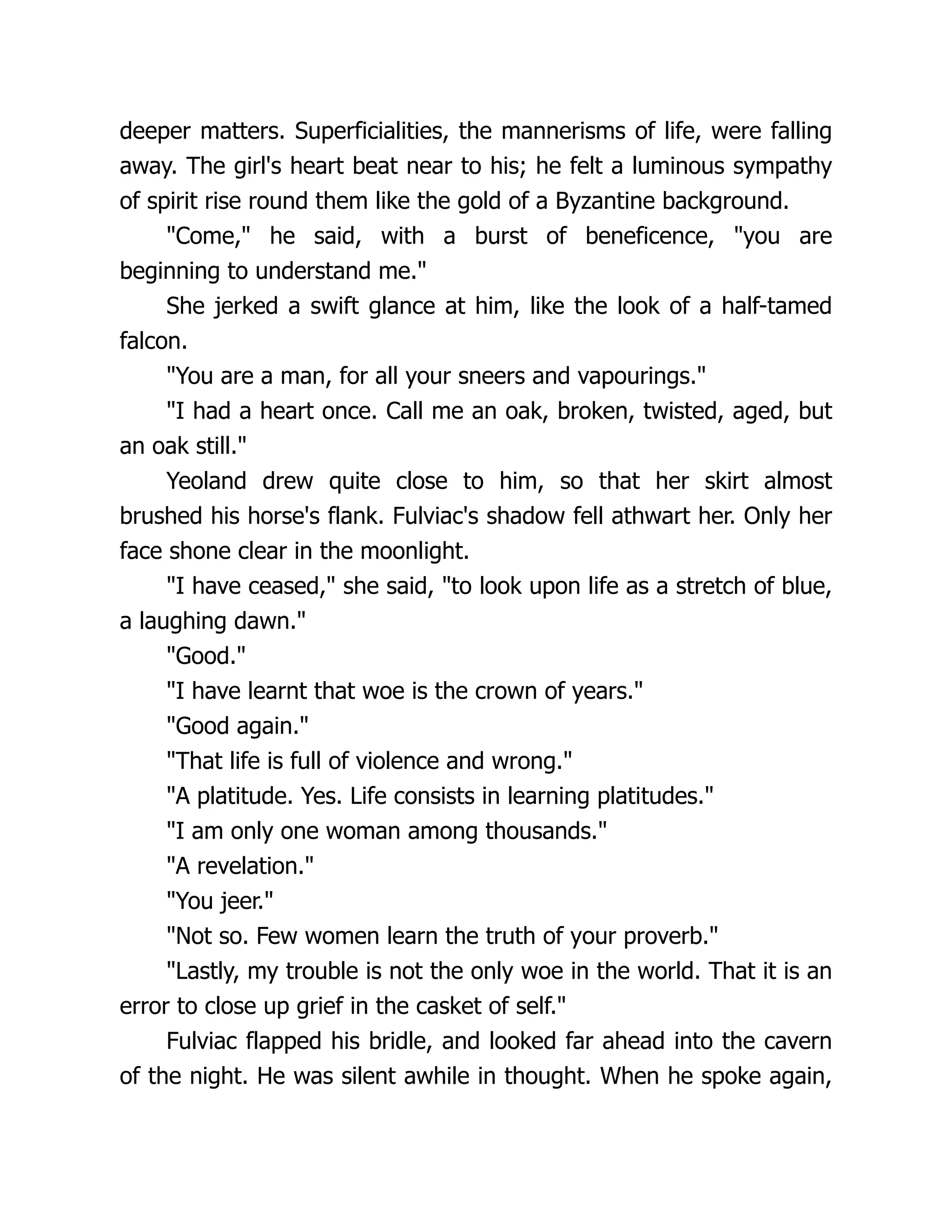

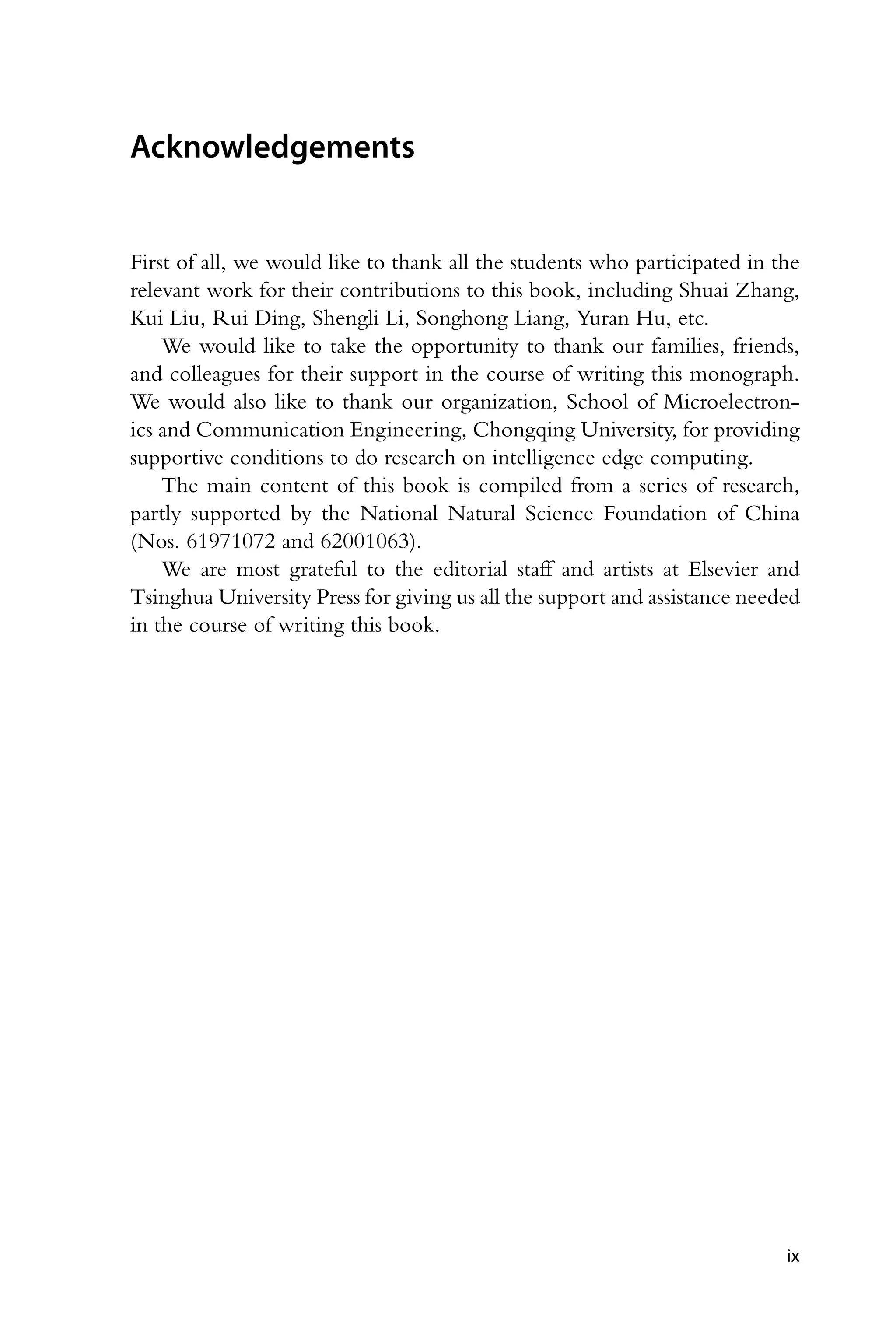

![8 Deep Learning on Edge Computing Devices Figure 1.2 Global data growth forecast. 1.3 Concepts and taxonomy 1.3.1 Preliminary concepts Data, computing power, and algorithms are regarded as three elements that promote the development of artificial intelligence, and the development of these three elements has become a booster for the explosion of the deep learning technology. First of all, the ability to acquire data, especially large- scale data with labels, is a prerequisite for the development of the deep learning technology. According to the statistics, the size of the global Inter- net data in 2020 has exceeded 30 ZB [20]. Without data optimization and compression, the estimated storage cost alone will exceed RMB 6 trillion, which is equivalent to the sum of GDP of Norway and Austria in 2020. With the further development of the Internet of Things and 5G technol- ogy, more data sources and capacity enhancements at the transmission level will be brought. It is foreseeable that the total amount of data will continue to develop rapidly at higher speed. It is estimated that the total amount of data will be 175 ZB by 2025, as shown in Fig. 1.2. The increase in data size provides a good foundation for the performance improvement of deep learning models. On the other hand, the rapidly growing data size also puts forward higher computing performance requirements for model training. Secondly, the second element of the development of artificial intel- ligence is the computing system. The computing system refers to the hardware computing devices required to achieve an artificial intelligence system. The computing system is sometimes described as the “engine” that supports the application of artificial intelligence. In the deep learning era of artificial intelligence, the computing system has become an infrastruc- ture resource. 
When Google’s artificial intelligence Alpha Go [21] defeated Korean chess player Shishi Li in 2016, people lamented the powerful artifi- cial intelligence, and the huge “payment” behind it was little known: 1202](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-23-2048.jpg)

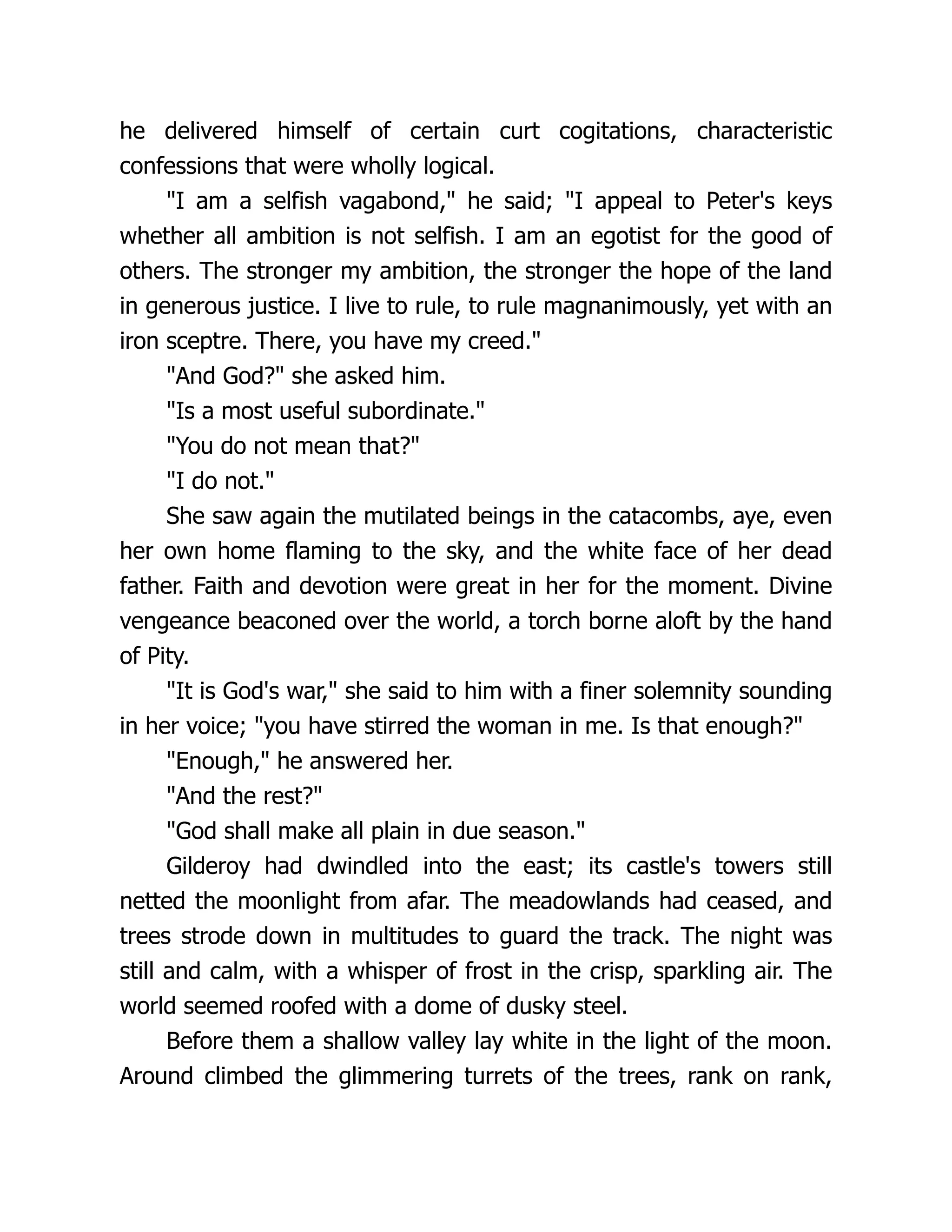

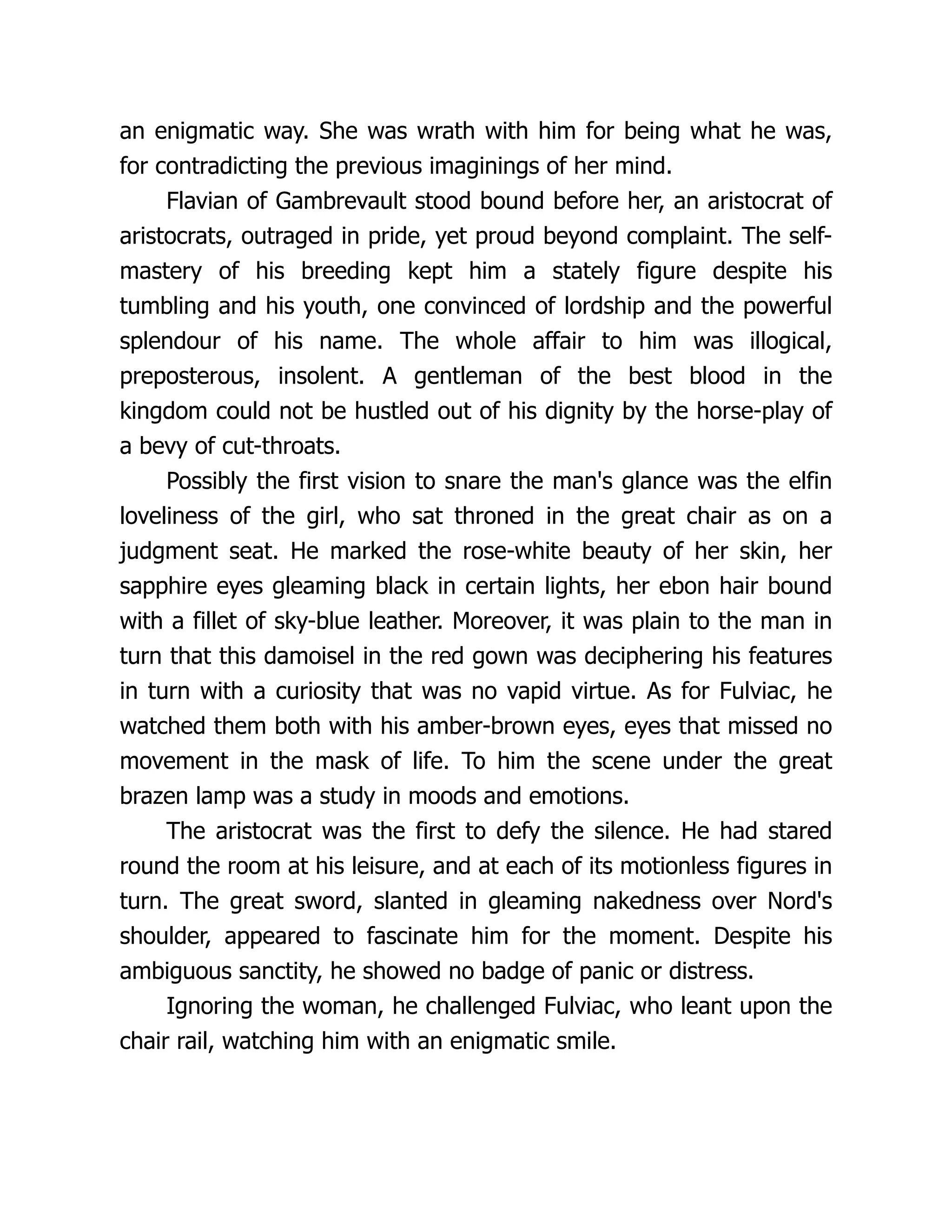

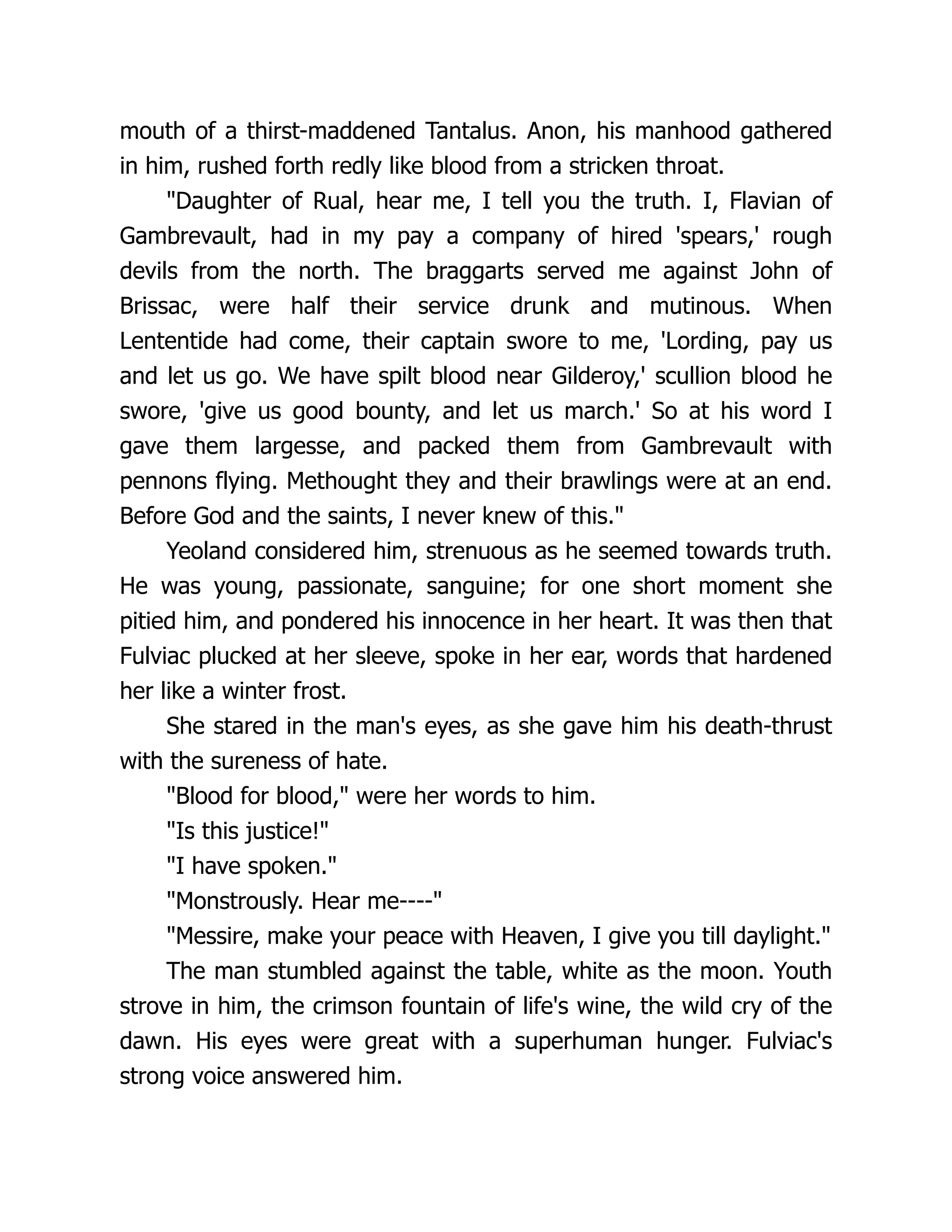

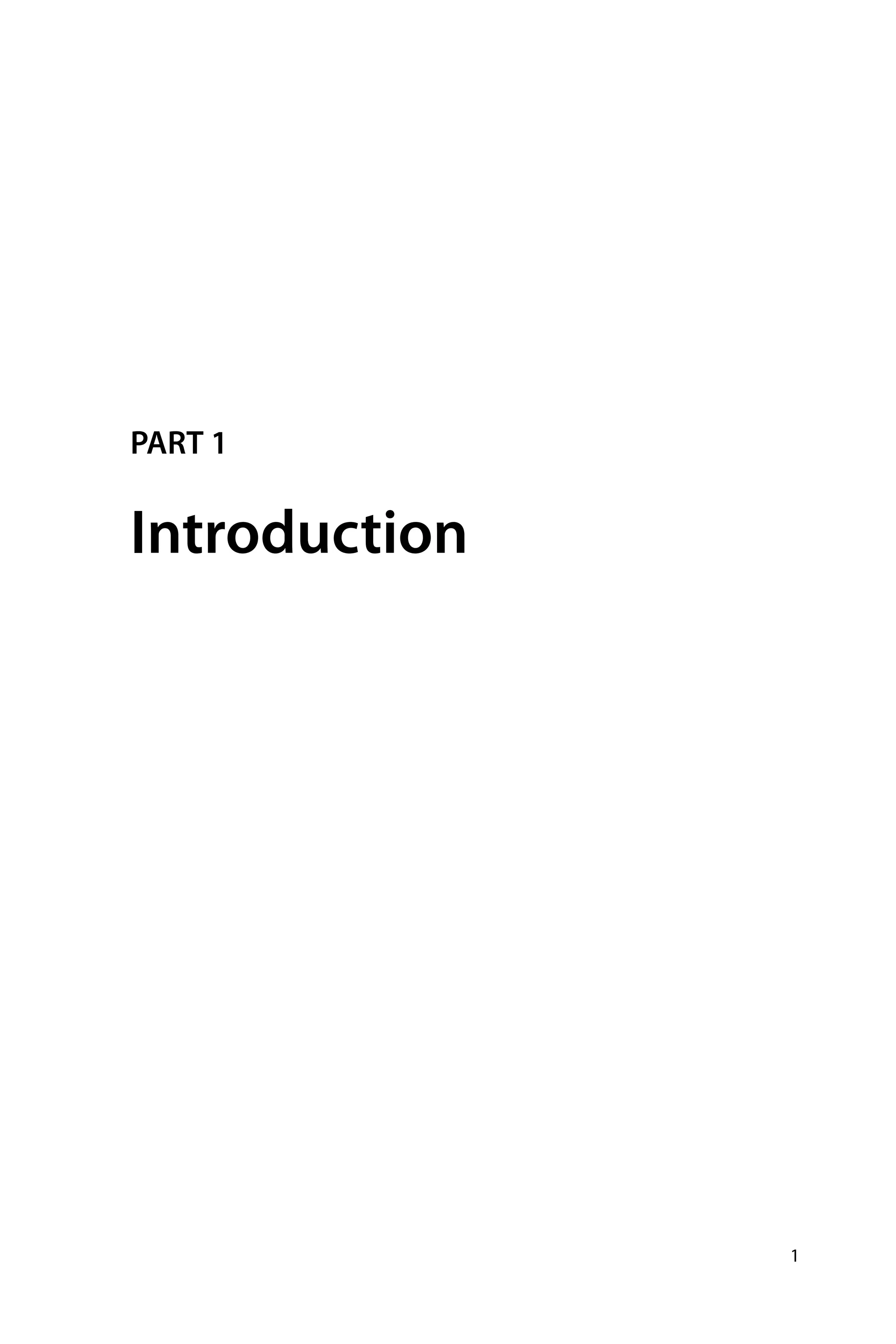

![Introduction 9 Figure 1.3 Development trend of transistor quantity. CPUs, 176 high-performance GPUs, and the astonishing power of 233 kW consumed in a game of chess. From the perspective of the development of the computing system, the development of VLSI chips is the fundamental power for the improve- ment of AI computing performance. The good news is that although the development of the semiconductor industry has periodic fluctuation, the well-known “Moore’s law” [22] in the semiconductor industry has expe- rienced the test for 50 years (Fig. 1.3). Moore’s law is still maintained in the field of VLSI chips, largely because the rapid development of GPU has made up for the slow development of CPU. We can see from the figure that in 2010 the number of GPU transistors has grown more than that of CPUs, CPU transistors have begun to lag behind Moore’s law, and the development of hardware technologies [23] such as special ASICs for deep learning and FPGA heterogeneous AI computing accelerators have injected new fuel for the increase in artificial intelligence computing power. Last but not least, the third element of artificial intelligence develop- ment is an algorithm. An algorithm is a finite sequence of well-defined, computer-implementable instructions, typically to solve a class of specific problems in finite time. Performance breakthrough in the algorithm and application based on deep learning in the past 10 years is an important rea- son for the milestone development of AI technology. So, what is the future development trend of deep learning algorithms in the era of Internet of Ev- erything? This problem is one of the core problems discussed in academia and industry. A general consensus is that the deep learning algorithms will develop toward high efficiency.](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-24-2048.jpg)

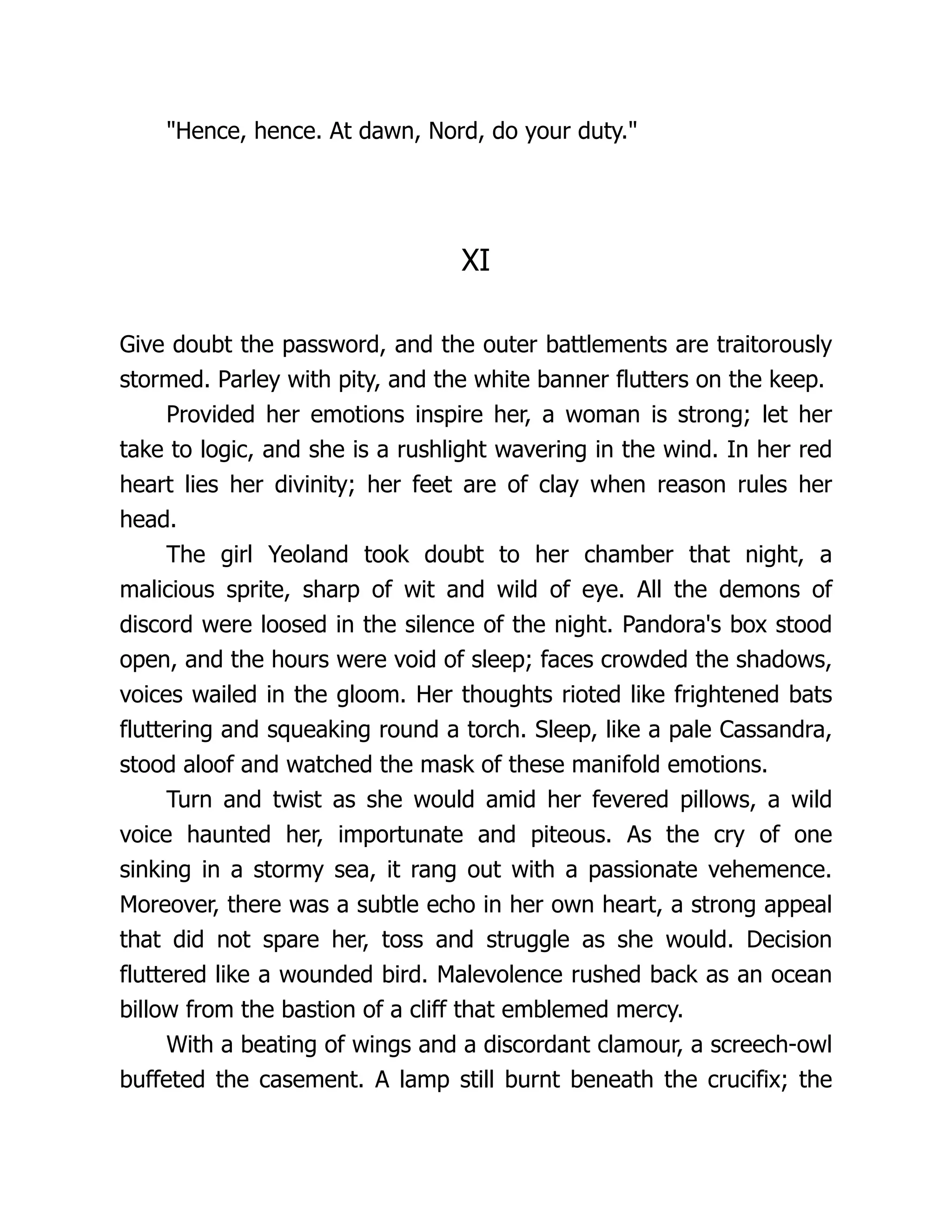

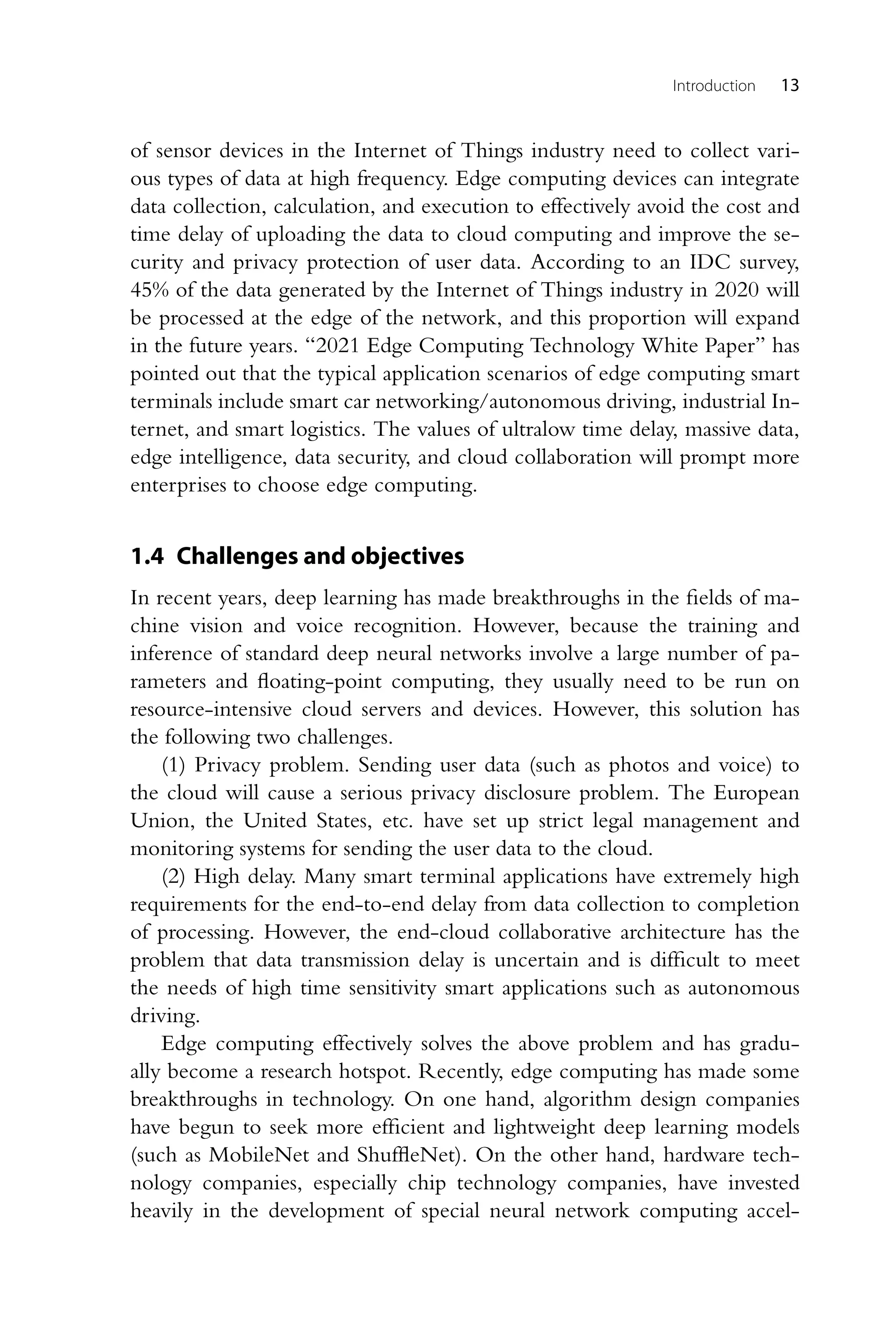

Figure 1.4 Comparison of computing power demands and algorithms for deep learning model.

OpenAI, an open artificial intelligence research organization, has pointed out that “the computing resource required by advanced artificial intelligence doubles approximately every three and a half months”. The computing resource needed to train a large AI model has increased by 300,000 times since 2012, an average annual increase of 11.5 times. Hardware computing performance, by contrast, has grown by only 1.4 times per year on average. On the other hand, improvements in the efficiency of deep learning algorithms save about 1.7 times the computing resource per year on average. This means that as we continue to pursue better algorithm performance, the growth in demand for computing resources potentially exceeds the development speed of hardware computing performance, as shown in Fig. 1.4. A practical example is GPT-3 [24], the deep learning model for natural language processing released in 2020. The cost of model training and computing resource deployment alone reached about 13 million dollars. If the computing resource cost keeps increasing exponentially, then sustainable development will be difficult to achieve. How to solve this problem is one of the key questions in the development of artificial intelligence toward pervasive intelligence.

1.3.2 Two stages of deep learning: training and inference

Deep learning is generally divided into two stages, training and inference. First, the process of estimating the parameters of the neural network model based on known data is called training. Training is sometimes also known as parameter learning. In this book, to avoid ambiguity, we use the word “training” to describe the parameter estimation process. The data required in the training process are called the training dataset. The training algorithm is usually described as an optimization task. The model parameters with the smallest prediction error on the labels of the training samples are estimated through gradient descent [25], and a neural network model with better generalization is obtained through regularization [26]. In the second stage, the trained neural network model is deployed in the system to predict, in real time, the labels of unknown data obtained by the sensor. This process is called inference. Training and inference are like two sides of the same coin: they belong to different stages but are closely related. The training quality of the model determines its inference accuracy.

To make the subsequent content of this book easier to follow, we summarize the main machine learning concepts involved in training and inference as follows.

Dataset. The dataset is a collection of known data with similar attributes or features, together with their labels. In deep learning, signals such as voices and images acquired by the sensor are usually converted into vectors, matrices, or tensors. The dataset is usually divided into a training dataset and a test dataset, which are used to estimate the parameters of the neural network model and to evaluate its inference performance, respectively.

Deep learning model. In this book, we call a function $f(x;\theta)$ from the known data $x$ to the label $y$ to be estimated the model, where $\theta$ is the collection of internal parameters of the neural network. It is worth mentioning that in deep learning the parameters and functional forms of the model are diverse and large in scale, so it is usually difficult to write the function in analytical form. Only a formal definition is provided here.

Objective function. The process of deep learning model training is defined as an optimization problem. The objective function of the optimization problem generally includes two parts, a loss function and a regularization term. The loss function describes the average error of the label predictions of the neural network model on the training samples. It is minimized to enhance the accuracy of the model on the training sample set. The regularization term is usually used to control the complexity of the model, which improves the accuracy of the model on the labels of unknown data, i.e., its generalization performance.
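The concepts above (training dataset, test dataset, model $f(x;\theta)$, loss function, regularization term, gradient descent, inference) can be made concrete with a minimal sketch. It is an illustration under stated assumptions, not the method used in the book: the model is a simple linear function rather than a neural network, the loss is the mean squared error, the regularization term is an L2 penalty, and the names `objective`, `gradient`, `train`, `infer`, `lam`, and `lr` are hypothetical.

```python
import numpy as np

def objective(theta, X, y, lam):
    """Objective = loss (mean squared error) + L2 regularization term."""
    residual = X @ theta - y
    loss = np.mean(residual ** 2)
    regularization = lam * np.sum(theta ** 2)
    return loss + regularization

def gradient(theta, X, y, lam):
    """Gradient of the objective above with respect to theta."""
    n = X.shape[0]
    return (2.0 / n) * (X.T @ (X @ theta - y)) + 2.0 * lam * theta

def train(X, y, lam=1e-3, lr=0.1, steps=500):
    """Training stage: estimate theta by plain gradient descent."""
    theta = np.zeros(X.shape[1])
    for _ in range(steps):
        theta -= lr * gradient(theta, X, y, lam)
    return theta

def infer(theta, X_new):
    """Inference stage: apply the trained model f(x; theta) to new data."""
    return X_new @ theta

# Synthetic data (an assumption made for this sketch only).
rng = np.random.default_rng(0)
true_theta = np.array([2.0, -1.0, 0.5])
X_train = rng.normal(size=(200, 3))
y_train = X_train @ true_theta + 0.01 * rng.normal(size=200)
X_test = rng.normal(size=(50, 3))
y_test = X_test @ true_theta

theta_hat = train(X_train, y_train)
test_error = np.mean((infer(theta_hat, X_test) - y_test) ** 2)
print("estimated parameters:", theta_hat)
print("mean squared error on the test dataset:", test_error)
```

The training dataset is used only inside `train`, and the test dataset only to evaluate `infer`, mirroring the split described above. Increasing `lam` shrinks the parameters toward zero, which is the sense in which the regularization term controls model complexity.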

Figure 1.5 Application scenarios of cloud and edge.

1.3.3 Cloud and edge devices

Edge computing [27] refers to a concept in which a distributed architecture decomposes the large-scale computing of the central node into smaller, easier-to-manage parts and disperses them to the edge nodes for processing. The edge nodes are closer to the terminal devices and therefore have higher transmission speed and lower latency. As shown in Fig. 1.5, the cloud refers to the central servers far away from users. Users can access these servers anytime and anywhere through the Internet to query and share information. The edge refers to the base station or server close to the user side. We can see from the figure that terminal devices [28] such as monitoring cameras, mobile phones, and smart watches are closer to the edge. For deep learning applications, if the inference stage can be completed at the edge, then the problem of transmission latency may be solved. Moreover, because edge computing provides services near data sources or users, it reduces the risk of privacy disclosure. Data show that cloud computing power will grow linearly in future years, with a compound annual growth rate of 4.6%, whereas demand at the edge is exponential, with a compound annual growth rate of 32.5%.

The edge computing terminal refers to smart devices that focus on real-time, secure, and efficient analysis of scenario-specific data on user terminals. The edge computing terminal has huge development prospects in the field of the artificial intelligence Internet of Things (AIoT). A large number

deep adaptive network method from the perspective of algorithm and hardware collaborative design to explore the sparsity between neural network connections. To make full use of the advantages of algorithm optimization, we propose an efficient hardware architecture based on a sparsely mapped memory. Unlike the traditional network architecture on chip, the deep adaptive network on chip (DANoC) closely combines communication and calculation to avoid massive power loss caused by parameter transmission between the onboard memory and the on-chip computing unit. The experimental results show that compared with the most advanced method, the system has higher precision and efficiency.

Chapter 8 (Hardware Architecture Optimization for Object Tracking) proposes a low-cost and high-speed VLSI system for object tracking from the perspective of algorithm and hardware collaborative design based on texture and dynamic compression perception features and ellipse matching algorithm. The system introduces a memory-centric architecture mode, multistage pipelines, and parallel processing circuits to achieve high frame rates while consuming minimal hardware resources. Based on the FPGA prototype system, at a clock frequency of 100 MHz, a processing speed of 600 frames per second is realized, and stable tracking results are maintained.

Chapter 9 (SensCamera: A Learning based Smart Camera Prototype) provides an example of edge computing terminals, a smart monitoring camera prototype system from the perspective of algorithm and hardware collaborative design, and integrates algorithm innovation and hardware architecture innovation. First, we propose a hardware-friendly algorithm, which is an efficient convolutional neural network for unifying object detection and image compression. The algorithm uses convolution computation to perform near-isometric compressed perception and invents a new noncoherent convolution method to learn the sampling matrix to realize the near-isometric characteristics of compressed perception. Finally, through hardware-oriented algorithm optimization, a smart camera prototype built with independent hardware can be used to perform object detection and image compression of 20 to 25 frames of video images per second with power consumption of 14 watts.

References

[1] Intelligence-GSMA, The mobile economy 2020, Tech. rep., GSM Association, London, 2020.
[2] J. Von Neumann, The Computer and the Brain, Yale University Press, 2012.
[3] B.G. Buchanan, A (very) brief history of artificial intelligence, AI Magazine 26 (2005) 53–60.
[4] J. McCarthy, M. Minsky, N. Rochester, C.E. Shannon, A proposal for the Dartmouth summer research project on artificial intelligence, August 31, 1955, AI Magazine 27 (2006) 12–14.
[5] A.L. Samuel, Some studies in machine learning using the game of checkers, IBM Journal of Research and Development 3 (1959) 210–229.
[6] C. Cortes, V. Vapnik, Support-vector networks, Machine Learning 20 (3) (1995) 273–297.
[7] W.S. McCulloch, W. Pitts, A logical calculus of the ideas immanent in nervous activity, The Bulletin of Mathematical Biophysics 5 (4) (1943) 115–133.
[8] F. Rosenblatt, The perceptron: a probabilistic model for information storage and organization in the brain, Psychological Review 65 (6) (1958) 386.
[9] D.E. Rumelhart, G.E. Hinton, R.J. Williams, Learning representations by back-propagating errors, Nature 323 (6088) (1986) 533–536.
[10] G.E. Hinton, S. Osindero, Y.-W. Teh, A fast learning algorithm for deep belief nets, Neural Computation 18 (7) (2006) 1527–1554.
[11] Y. LeCun, L. Bottou, Y. Bengio, P. Haffner, Gradient-based learning applied to document recognition, Proceedings of the IEEE 86 (11) (1998) 2278–2324.
[12] A. Voulodimos, N. Doulamis, A. Doulamis, E. Protopapadakis, Deep learning for computer vision: A brief review, Computational Intelligence and Neuroscience (2018).
[13] H. Purwins, B. Li, T. Virtanen, J. Schlüter, S.-y. Chang, T. Sainath, Deep learning for audio signal processing, IEEE Journal of Selected Topics in Signal Processing 13 (2019) 206–219.
[14] K. Ashton, et al., That ‘internet of things’ thing, RFID Journal 22 (7) (2009) 97–114.
[15] A. Ghosh, D. Chakraborty, A. Law, Artificial intelligence in internet of things, CAAI Transactions on Intelligence Technology 3 (4) (2018) 208–218.
[16] S. Ingle, M. Phute, Tesla autopilot: semi autonomous driving, an uptick for future autonomy, International Research Journal of Engineering and Technology 3 (9) (2016) 369–372.
[17] B. Sudharsan, S.P. Kumar, R. Dhakshinamurthy, AI vision: Smart speaker design and implementation with object detection custom skill and advanced voice interaction capability, in: Proceedings of International Conference on Advanced Computing, 2019, pp. 97–102.
[18] D. Saha, A. Mukherjee, Pervasive computing: a paradigm for the 21st century, Computer 36 (3) (2003) 25–31.
[19] M. Satyanarayanan, Pervasive computing: Vision and challenges, IEEE Personal Communications 8 (4) (2001) 10–17.
[20] D. Reinsel, J. Gantz, J. Rydning, Data age 2025: the evolution of data to life-critical. Don’t focus on big data; focus on the data that’s big, Tech. rep., IDC, Seagate, 2017.
[21] S.D. Holcomb, W.K. Porter, S.V. Ault, G. Mao, J. Wang, Overview on DeepMind and its AlphaGo Zero AI, in: Proceedings of the International Conference on Big Data and Education, 2018, pp. 67–71.
[22] R. Schaller, Moore’s law: past, present and future, IEEE Spectrum 34 (1997) 52–59.
[23] D. Han, S. Zhou, T. Zhi, Y. Chen, T. Chen, A survey of artificial intelligence chip, Journal of Computer Research and Development 56 (1) (2019) 7.
[24] T.B. Brown, B. Mann, N. Ryder, M. Subbiah, J. Kaplan, et al., Language models are few-shot learners, arXiv:2005.14165 [abs].
[25] J. Zhang, Gradient descent based optimization algorithms for deep learning models training, arXiv:1903.03614 [abs].
[26] J. Kukacka, V. Golkov, D. Cremers, Regularization for deep learning: A taxonomy, arXiv:1710.10686 [abs].
[27] W. Shi, J. Cao, Q. Zhang, Y. Li, L. Xu, Edge computing: Vision and challenges, IEEE Internet of Things Journal 3 (5) (2016) 637–646.
[28] J. Chen, X. Ran, Deep learning with edge computing: A review, Proceedings of the IEEE 107 (8) (2019) 1655–1674.

![CHAPTER 2 The basics of deep learning 2.1 Feedforward neural networks A feedforward neural network (or fully connected neural network) is one of the earliest neural network models invented in the field of artificial intel- ligence [1]. It is able to learn autonomously via the input data to complete specific tasks. Here we take image classification [2], one of the core prob- lems in the field of computer vision, as an example to illustrate the principle of a feedforward neural network. The so-called classification problem is allocating a label to each input data on the premise of a fixed set of classi- fication labels. The task of a feedforward neural network is predicting the classification label of a given image. The prediction is made by giving scores (prediction probabilities) of the image under each classification label in the form of a vector, which are also the output of the feedforward neural net- work. Apparently, the label with the highest score is the category to which the network predicts that the image belongs. As shown in Fig. 2.1(b), the process of prediction is a simple linear mapping combined with an activa- tion function σ, f (x;W,b) = σ(Wx + b), (2.1) where the image data x ∈ Rd, d is the number of pixel elements of the im- ages. The parameters of this linear function are the matrix W ∈ Rc×d and column vector b ∈ Rc, and c represents the number of categories. The pa- rameter W is called the weight, and b is called the bias vector. Obviously, the weight and bias affect the performance of the feedforward neural net- work, and the correct prediction is closely related to the values of these two matrix vectors. According to the operational rule of matrices, the output will be a column vector of size c × 1, i.e., the scores of the c categories mentioned earlier. The structure of the feedforward neural network is inspired by the neu- ronal system of human brain [3]. The basic unit of computation of the brain is neuron. 
There are 80 billion neurons in a human neuronal system, which are connected by approximately $10^{14}$ to $10^{15}$ synapses. Fig. 2.1(a) shows a biological neuron. As shown in the figure, each neuron receives input signals from its dendrites and then generates output signals along its Deep Learning on Edge Computing Devices https://doi.org/10.1016/B978-0-32-385783-3.00009-0 Copyright © 2022 Tsinghua University Press. Published by Elsevier Inc. All rights reserved.](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-34-2048.jpg)

![Figure 2.1 The correspondence between the neuronal structure of the human brain and the artificial neural network. unique axon. The axon branches off gradually at the end and is connected to dendrites of other neurons through synapses. In the computation model of an artificial neuron, signals propagating along the axon (equivalent to input $x$) interact with the dendrites of other neurons (equivalent to the matrix operation $Wx$) based on the synaptic strength of synapses (equivalent to weight $W$). The synaptic strength controls the strength of influence of one neuron on another, as well as the direction of influence: to excite (positive weight) or suppress (negative weight) that neuron. Dendrites transmit signals to the cell body, where the signals are added up. Up to this point, the human brain works in a way similar to the linear mapping we just mentioned, but then comes the crucial point. A neuron activates and outputs an electrical pulse along its axon only if the sum in the cell body is above a certain threshold. In neuronal dynamics, the Leaky Integrate-and-Fire (LIF) model [4] is commonly used to describe this process. The model describes the membrane potential of the neuron based on its synaptic input and the injected current it receives. Simply speaking, the communication between two neurons requires a spike as a mark. When the synapse of the previous neuron sends](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-35-2048.jpg)

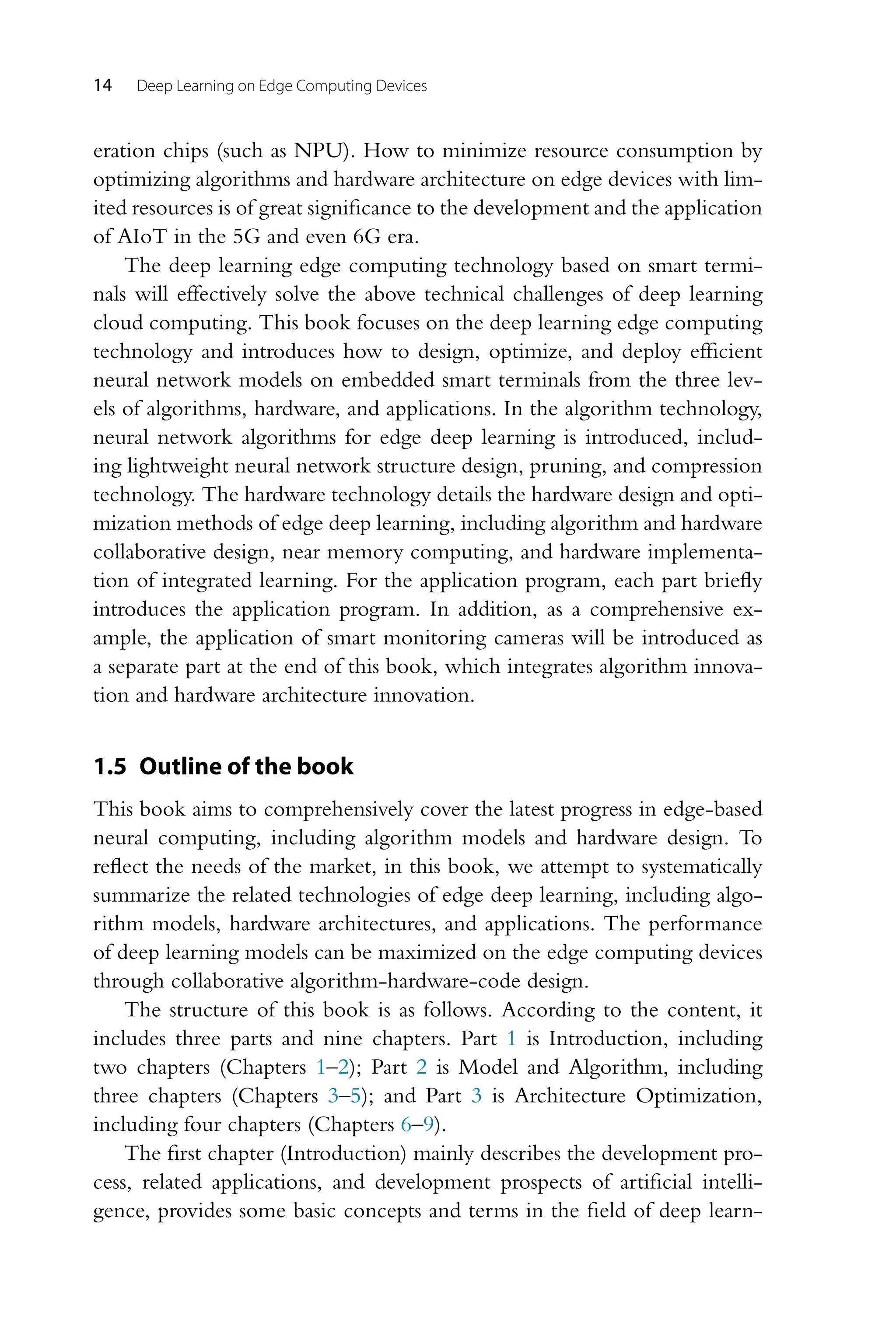

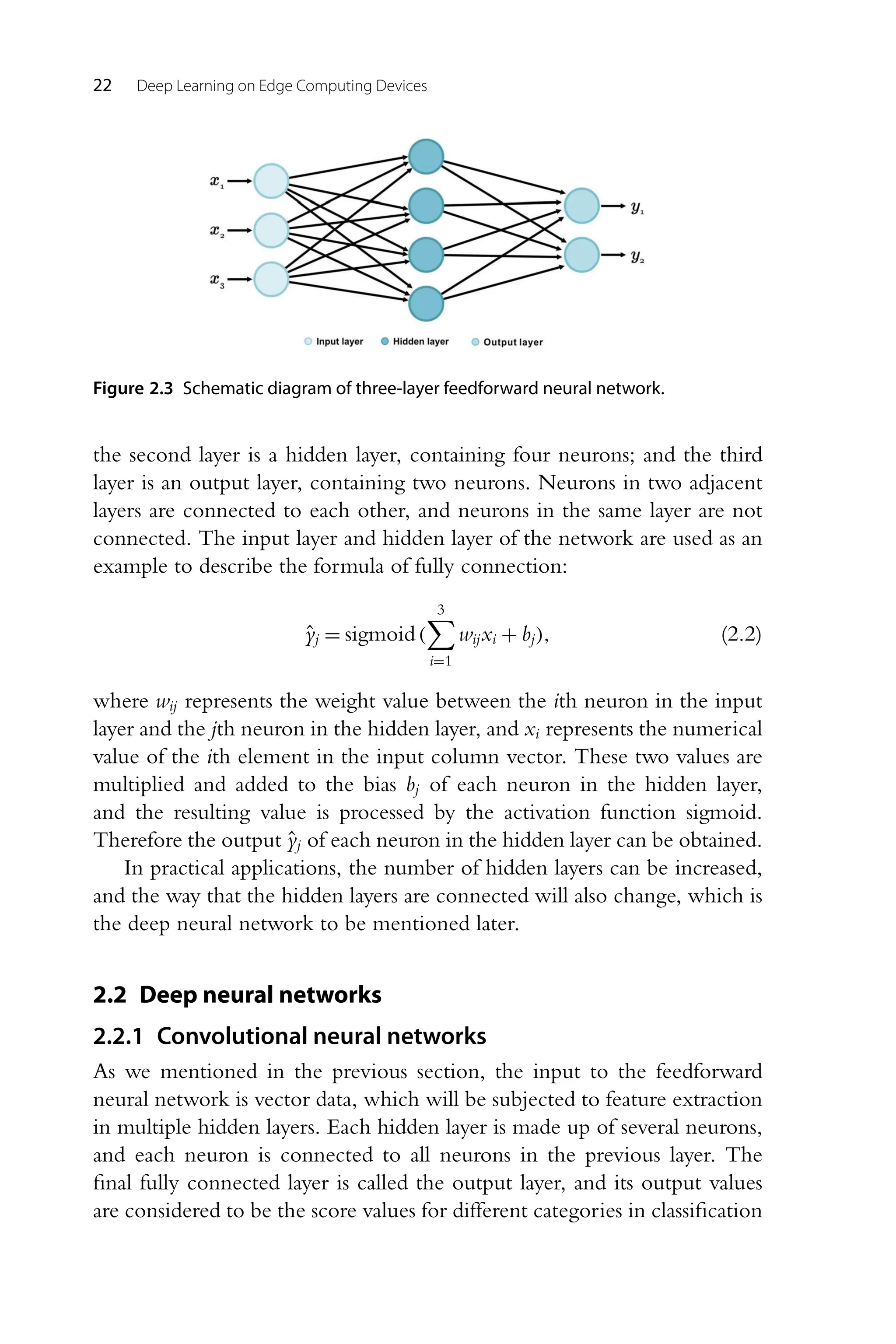

![Figure 2.2 The illustration of two activation functions, step and sigmoid functions. out a current, the membrane potential rises. Once the membrane potential exceeds a given threshold, a spike is generated, and the membrane potential is reset. This process of spike generation is similar to a threshold-based function: if the current is lower than the threshold, then there is no spike, and if the current is higher than the threshold, then there is a spike, which resembles a step function. The concept of activation function [5] was proposed in the light of this characteristic of human brain neurons. The activation function makes the neural network nonlinear, so that some problems that linear regression cannot handle can be solved. The step function just mentioned can handle the binary classification problem (outputting “yes” or “no”). For more categories, we need an intermediate activation value or an accurate description of the degree of activation, rather than a simple division into 100% or 0. In this context, traditional activation functions such as the sigmoid were proposed. The sigmoid normalizes the input to (0, 1), achieves nonlinearity, and has an intermediate activation value. The formulations and curves are shown in Fig. 2.2. Normally, a typical feedforward neural network has one or more additional layers of neurons between the input and output layers, which are called hidden layers. The hidden layers exist to identify and divide the features of the input data in greater detail [6], so as to make correct predictions. We divide a classification problem into multiple subproblems based on physical features, and each neuron in the hidden layers is responsible for dealing with one such subproblem. Fig. 2.3 shows a three-layer feedforward neural network. 
The first layer is an input layer, containing three neurons;](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-36-2048.jpg)
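The mapping of Eq. (2.1) and the two activation functions of Fig. 2.2 can be sketched in a few lines of plain Python. This is only an illustrative sketch: the layer sizes (3 inputs, 4 hidden units, 2 output scores), the weight values, and the helper names `step`, `sigmoid`, and `dense` are all hypothetical and are not taken from the book.

```python
import math


def step(z, threshold=0.0):
    """Step activation: 1 if the input exceeds the threshold, else 0."""
    return 1.0 if z > threshold else 0.0


def sigmoid(z):
    """Sigmoid activation: squashes any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(W, b, x, activation):
    """Compute activation(Wx + b), element by element, as in Eq. (2.1).

    W is a list of rows, b is the bias vector, x is the input vector.
    """
    return [
        activation(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
        for row, b_i in zip(W, b)
    ]


# Hypothetical parameters: 3 inputs -> 4 hidden units -> 2 output scores.
W1 = [[0.2, -0.5, 0.1],
      [0.7, 0.3, -0.2],
      [-0.4, 0.6, 0.5],
      [0.1, 0.1, 0.1]]
b1 = [0.0, 0.1, -0.1, 0.0]
W2 = [[0.5, -0.3, 0.8, 0.2],
      [-0.6, 0.4, 0.1, 0.9]]
b2 = [0.05, -0.05]

x = [1.0, 0.5, -1.0]                       # one input sample
hidden = dense(W1, b1, x, sigmoid)         # hidden-layer activations
scores = dense(W2, b2, hidden, sigmoid)    # one score per category
predicted = max(range(len(scores)), key=lambda k: scores[k])
```

The predicted category is simply the index of the highest score. Passing `step` instead of `sigmoid` to `dense` gives the hard yes/no behaviour described above, at the cost of losing the intermediate activation values.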

![Figure 2.4 Schematic diagram of convolutional operation receptive field. problems. This kind of network structure has obvious defects when facing large-size image input. The fully connected structure between hidden layers leads to a sharp increase in the number of network parameters, which not only greatly reduces the training speed, but may also lead to overfitting of the network and greatly damage the model performance. The fitting accuracy can be improved by increasing the number of network layers, but as the number of layers increases, problems such as vanishing gradients tend to appear, making it difficult for training to converge. Patterns of image recognition by the human brain have been found to be instructive for improving the structure of artificial neural networks. The human brain first perceives each local feature in the picture and then performs a higher level of integration to obtain global information. This makes use of the sparse connectivity of the observed objects in the image, that is, local pixels in the image are closely related, whereas the correlation between pixels that are further apart is weak. Like the human brain, we only need to perceive local features of an image at the hidden layers and then integrate the local information at a higher layer to recognize a complete image. In recent years, it has been found that the convolution operator, which is widely used in the field of signal processing, can complete such a process. For one-dimensional time series signals, convolution is a special integral operation. When extended to a two-dimensional image, a matrix called the convolutional kernel replaces one of the signals participating in the convolution in the one-dimensional case [7]. 
We have each convolutional kernel in the hidden layer connected to only one local area of the input data, and the spatial size of the connection is called the receptive field of the neuron.](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-38-2048.jpg)
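The local connectivity described above can be made concrete with a naive sliding-window implementation. The sketch below is an assumption-laden illustration, not the book's code: the function name `conv2d`, the 5 × 5 test image, and the vertical-edge kernel are hypothetical, the window moves with stride 1 and no padding, and, as is common in deep learning libraries, the kernel is not flipped.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding).

    Each output value depends only on the local patch of the image that
    the kernel currently covers, i.e., on its receptive field.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            out[r][c] = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
    return out


# Hypothetical 5 x 5 image: dark left part, bright right part.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hypothetical 3 x 3 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

feature_map = conv2d(image, kernel)   # 3 x 3 output, each row is [3, 3, 0]

# The same 9 kernel weights are reused at every position, whereas a fully
# connected layer mapping the 25 inputs to the 9 outputs needs 25 * 9 weights.
shared_weights = len(kernel) * len(kernel[0])
dense_weights = (5 * 5) * (3 * 3)
```

The response is large where the window straddles the edge and zero in the uniform region, and the weight count stays at 9 regardless of the image size.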

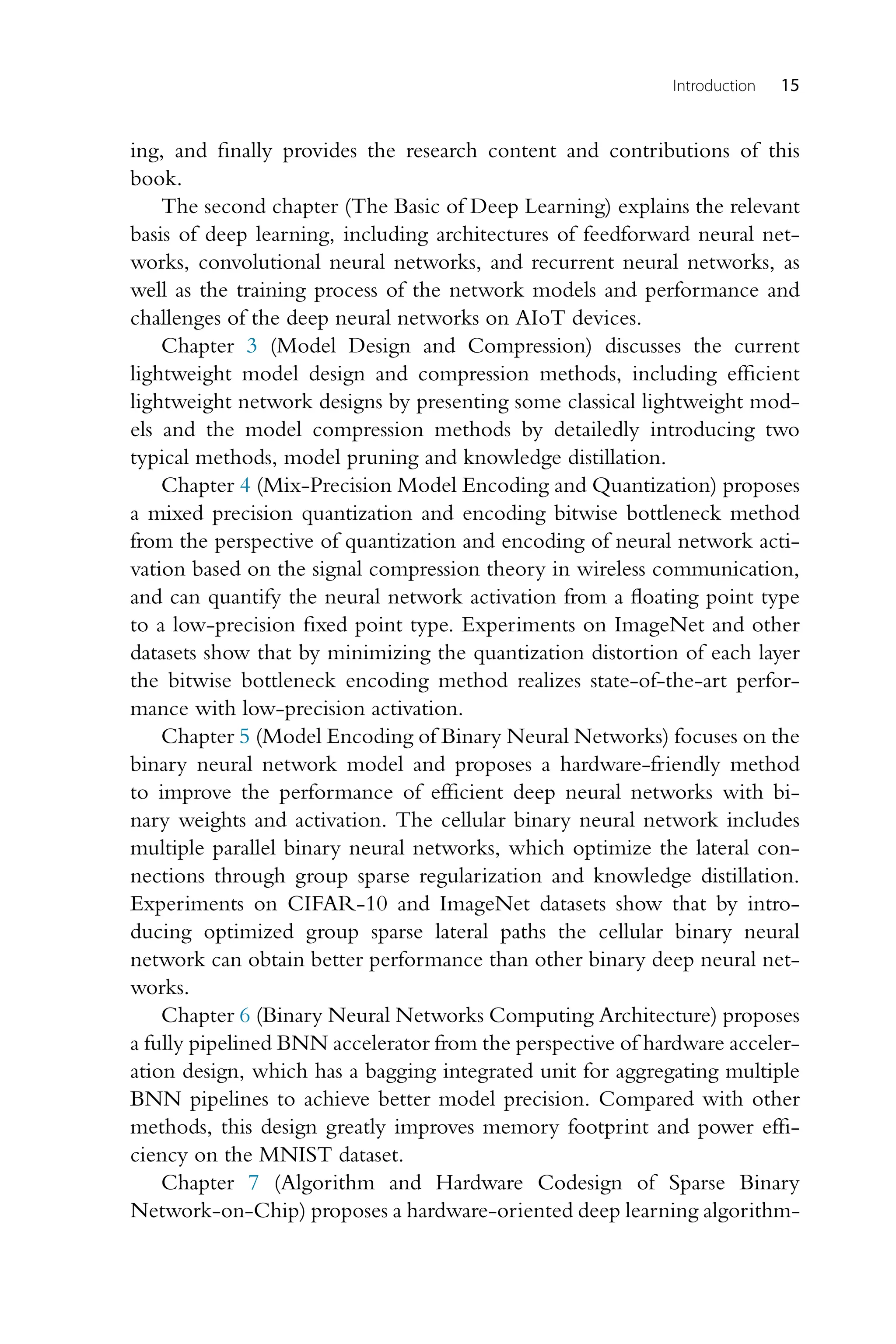

![Figure 2.5 The illustration of a typical convolutional neural network structure. The receptive field can be understood as the size of the area seen by a neuron [8]. The deeper the neuron, the larger the input area that the neuron can see. As shown in Fig. 2.4, the receptive field of each neuron in the first hidden layer is 3, the receptive field of each neuron in the second hidden layer is 5, and the receptive field of each neuron in the third hidden layer is 7. The further away the hidden layer is from the input layer, the more features can be obtained, progressing from local features to perception of the whole. The convolutional kernel is equivalent to a mapping rule in which the value of each original image pixel is multiplied by the value of the convolutional kernel at the corresponding location, and then the resulting products are summed. This process is similar to searching for a class of patterns in an image to extract the features of the image. A single such filter cannot extract all features, so a set of different filters is required. A convolutional neural network is a kind of feedforward neural network with convolution operations and a deep structure [9]. Its structure, as shown in Fig. 2.5, includes multiple convolutional layers for feature extraction, pooling layers for reducing the amount of computation, and a fully connected neural network layer for classification. We will elaborate on the principles of each layer below. A convolutional layer is a hidden layer that contains several convolution units in a convolutional neural network, which is used for feature extraction. As mentioned above, the convolution is characterized by sparse connection and parameter sharing. The structure of the convolutional layer is shown in Fig. 2.6. 
The square window on the left is the previously men-](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-39-2048.jpg)

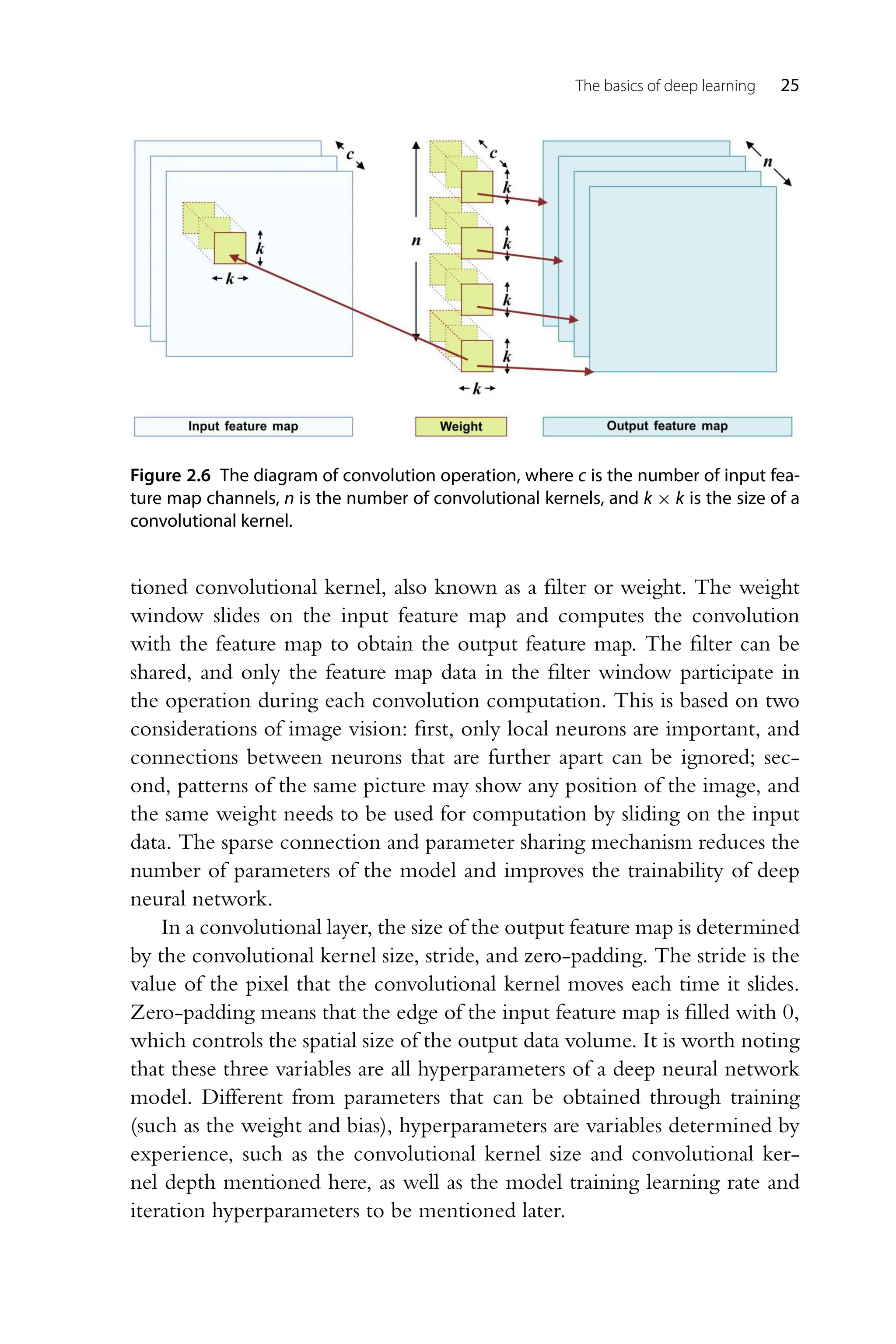

![Figure 2.7 The illustration of two different types of pooling, max pooling and average pooling. The pooling layer is a hidden layer used to abstract information in a deep neural network. Pooling is also a method to reduce the amount of computation of the model, which can increase the receptive field and reduce the difficulty of optimization and the number of parameters. Fig. 2.7 shows two common pooling operations [10]. For each feature map channel, a sliding-window operation performs max pooling (taking the maximum value) or average pooling (taking the average value) of the data in the window to reduce the amount of data, prevent overfitting, and improve the generalization ability of the model. Generally, the pooling stride is greater than 1, which reduces the scale of the feature map. As shown in Fig. 2.7, the 4 × 4 feature map passes through a 2 × 2 pooling layer with stride 2, and the output size is 2 × 2. A convolutional neural network, as an important supporting technology of deep learning, promotes the development of artificial intelligence. Convolution operators can effectively extract spatial information and are widely used in the field of visual images, including image recognition [11], image segmentation [12], target detection [13], etc. In the model inference stage, the image data is input into the network, multilevel feature extraction is carried out through the computation of multiple macromodules, and the prediction results of categories are output using a fully connected layer. In the model training stage, for a given input, the error between the predicted label and the real label is computed. Then the gradient of the error with respect to each parameter is computed by the back propagation algorithm. Finally, the parameters are updated by using the gradient descent algorithm. 
The above iterative steps are repeated many times to gradually reduce the neural network prediction error until it converges. Compared with traditional feedforward neural networks, the convolutional](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-41-2048.jpg)

![neural network generally has better prediction accuracy and is one of the most important deep neural network structures. 2.2.2 Recurrent neural networks Both the deep feedforward and convolutional neural networks mentioned above share the characteristic that their network structures are arranged in order: neurons in the $l$th layer receive signals only from neurons in the $(l-1)$th layer, and there is no feedback structure. However, in some tasks, to better capture the time-sequential features of the input vector, it is necessary to combine the sequential inputs. For example, in speech signal processing, if an exact translation of a sentence is required, then it is not enough to translate each word separately. Instead, the words need to be connected together to form a sequence, and then the entire sequence is processed. The recurrent neural network (RNN) [14] described in this section is a neural network structure that processes time-sequential data and has feedback. The RNN, which originated in the 1980s and 1990s [15], is a recursive neural network that takes sequence data as input, adds feedback in the evolution direction of the sequence, and links all nodes in a chain. It is difficult for a traditional feedforward neural network to establish a time-dependent model, whereas an RNN can integrate information from the current input and the previous time step, allowing information to persist across time steps. This means that the network has a memory function, which is very useful in natural language processing, translation, speech recognition, and video processing. Fig. 2.8 shows the basic structure of a standard RNN. On the left is the folded diagram, and on the right is the structure diagram unfolded in chronological order. We can see that the loop body structure of the RNN is located in the hidden layer. 
This network structure reveals the essence of the RNN: the network information of the previous moment acts on that of the next moment, that is, the historical information of the previous moment is connected to the neuron of the next moment through weights. As shown in the figure, in an RNN, $x$ represents an input, $h$ represents a hidden layer unit, $o$ represents an output, $y$ represents a training label, $t$ represents time, $U$ is the parameter from the input layer to the hidden layer, $V$ is the parameter from the hidden layer to the output layer, and $W$ is the recurrent layer parameter. As we can see from the previous description, the value of $h$ at time $t$ is not only determined by the](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-42-2048.jpg)

![Figure 2.8 The schematic diagram of RNN, and its corresponding unfolded form. input at that moment, but also influenced by the previous moment: $h^{(t)} = \tanh(U x^{(t)} + W h^{(t-1)} + b)$. (2.3) The output at time $t$ is $o^{(t)} = V h^{(t)} + c$. (2.4) The final output predicted by the model is $y = \sigma(o^{(t)})$. (2.5) It is worth noting that for excessively long speech sequences, an RNN only has short-term memory due to the problem of vanishing gradients during training by back propagation. Long short-term memory (LSTM) [16] and the gated recurrent unit (GRU) [17] are two solutions to the short-term memory of RNNs, which introduce a gating mechanism to regulate information flow. Take the LSTM structure as an example. It contains a forget gate, an input gate, and an output gate, which are used to keep or delete the incoming information and to record key information. LSTM performs well in long-term memory tasks, but the structure also leads to more parameters, making the training more difficult. Compared with LSTM, the GRU, which has a similar structure, uses a single gate to complete the forget and selection stages, reducing parameters while achieving performance comparable to LSTM, and it is widely used when computing resources and time are limited.](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-43-2048.jpg)
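Equations (2.3) to (2.5) can be unrolled over a short sequence as in the following plain-Python sketch. Several things here are assumptions for illustration only: the function names, the layer sizes (2 inputs, 3 hidden units, 2 outputs), the weight values, and in particular the choice of softmax for the output function $\sigma$ in Eq. (2.5), which the text does not specify.

```python
import math


def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def add(*vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(vals) for vals in zip(*vectors)]


def softmax(o):
    """Numerically stable softmax (assumed form of sigma in Eq. (2.5))."""
    peak = max(o)
    exps = [math.exp(v - peak) for v in o]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_forward(xs, U, W, V, b, c, h0):
    """Run a standard RNN over the input sequence xs.

    Returns the list of per-step predictions and the final hidden state.
    """
    h = h0
    predictions = []
    for x in xs:
        # Eq. (2.3): new hidden state from current input and previous state.
        h = [math.tanh(a) for a in add(matvec(U, x), matvec(W, h), b)]
        # Eq. (2.4): output of the current time step.
        o = add(matvec(V, h), c)
        # Eq. (2.5): predicted output.
        predictions.append(softmax(o))
    return predictions, h


# Hypothetical parameters: 2 inputs, 3 hidden units, 2 outputs.
U = [[0.5, -0.3], [0.1, 0.8], [-0.7, 0.2]]
W = [[0.1, 0.4, -0.2], [-0.3, 0.2, 0.5], [0.6, -0.1, 0.3]]
V = [[1.0, -1.0, 0.5], [-0.5, 0.7, 0.2]]
b = [0.0, 0.0, 0.0]
c = [0.0, 0.0]
h0 = [0.0, 0.0, 0.0]

# Two sequences that differ only in their first element.
preds_a, h_a = rnn_forward([[1.0, 0.0], [0.0, 1.0]], U, W, V, b, c, h0)
preds_b, h_b = rnn_forward([[0.0, 1.0], [0.0, 1.0]], U, W, V, b, c, h0)
```

Although both sequences end with the same input, `preds_a[-1]` and `preds_b[-1]` differ, because the hidden state carries information from the earlier time step through `W`. This is the memory function discussed in this section.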

![2.3 Learning objectives and training process 2.3.1 Loss function Deep learning model training and parameter estimation are generally based on the optimization of specific loss functions (or objective functions, collectively referred to as loss functions in this book). In model optimization theory, a loss function maps the values of one or more variables to the real numbers. For the training of a neural network model, we use the loss function, a nonnegative real-valued function, to measure the degree of inconsistency between the predicted value and the ground-truth label. The loss function over all samples of the training set is usually expressed as $J(\theta) = \mathbb{E}_{(x,y) \sim P_{\text{data}}} L(x, y; \theta) = \mathbb{E}_{(x,y) \sim P_{\text{data}}} L(f(x; \theta), y)$, (2.6) where $L$ is the loss function of each sample, $f(x; \theta)$ is the output predicted by the model when $x$ is the input, $P_{\text{data}}$ is the empirical distribution, $\mathbb{E}$ represents expectation, $y$ is the vector of all data labels, and $\theta$ represents all parameters of the neural network. The smaller the output of the loss function, the smaller the gap between the predicted value and the data label, and the better the performance of the model. Most importantly, the loss function is differentiable and can be used to solve optimization problems. In the model training stage, the predicted value is obtained through forward propagation after data is fed into the model, and then the loss function computes the difference between the predicted value and the data label, i.e., the loss value. The model updates the parameters by back propagation to reduce the loss value, so that the predicted value generated by the model approaches the ground-truth label of the data, thereby achieving the purpose of learning. In the following, we mainly introduce loss functions for two classical prediction tasks, classification and regression. 
The cross entropy loss function [18] is one of the most commonly used classification objective functions in current deep neural networks, and it belongs to the loss functions based on probability distribution measurements. In information theory, entropy is used to measure uncertainty. Cross entropy was originally used to estimate the average coding length, and in machine learning, it is used to evaluate the difference between the probability distribution obtained by current training and the real distribution. For a single sample, the cross entropy loss function takes the](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-44-2048.jpg)

![form $L = -\sum_{k=1}^{C} y_k \log \hat{y}_k$, (2.7) where $C$ represents the number of output categories, $\hat{y}_k$ represents the $k$th output element ($k = 1, 2, \dots, C$) of the neural network, and the corresponding data label is $y_k$. If $k$ is the true category, then $y_k$ is 1; otherwise, $y_k$ is 0. Unlike the discrete labels of a classification task, each dimension of the data labels in a regression task is a real number (continuous type). In regression tasks, the prediction error is frequently used to measure how close the predicted value of a model is to the data label. Assuming that the real label corresponding to the $i$th input feature $x_i$ in a regression problem is $y_i = [y_{i1}, \dots, y_{ik}, \dots, y_{iM}]^T$, where $M$ is the total dimension of the label vector, the prediction error between the network's regression prediction $\hat{y}_{ik}$ and its real label $y_{ik}$ in the $k$th dimension is $L_{ik} = y_{ik} - \hat{y}_{ik}$. (2.8) Loss functions frequently used in regression tasks are L1 [19] and L2 [20]. The L1 loss function for $N$ samples is defined as $L = \frac{1}{N} \sum_{i=1}^{N} \sum_{k=1}^{M} |L_{ik}|$. (2.9) There are many kinds of loss functions, including loss functions based on specific tasks, such as the classification and regression tasks mentioned above, and loss functions based on distance measurement and probability distribution. For example, the mean square error loss function [21] and the L1 and L2 loss functions are based on distance measurement, whereas the cross entropy loss function and Softmax loss function [22] are based on probability distribution. The selection of a loss function needs to consider data features, and, in some cases, regularization terms should be added to improve the performance of the model. 2.3.2 Regularization In model training, the loss value on the training samples can be continuously decreased by increasing the number of training iterations or adjusting hyperparameter settings. 
However, the prediction accuracy of the label of](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-45-2048.jpg)

![the training sample may keep improving, but the prediction accuracy of the label of the testing sample decreases instead of rising, which is called overfitting. Therefore a regularization method should be used to improve the generalization ability of the model and avoid overfitting. Regularization is a method designed to reduce generalization errors, i.e., the errors of the model on testing samples. In traditional machine learning algorithms, the generalization ability is mainly improved by limiting the complexity of the model. Generally speaking, the model complexity is linearly related to the number of weight parameters $W$: the more parameters in $W$, the more complex the model. Therefore, to limit the complexity of the model, it is quite natural to reduce the number of weight parameters $W$, that is, to make some elements in $W$ zero or limit the number of nonzero elements. Let the parameter $\theta$ of the neural network contain the weight coefficients of all layers of the network. The complexity of the model parameters can be limited by adding a parameter penalty $\Omega(\theta)$ to the loss function. The regularized loss function is denoted as $\tilde{L}(x, y; \theta) = L(x, y; \theta) + \alpha \Omega(\theta)$, (2.10) where $\alpha \geq 0$ is the hyperparameter weighing the relative contribution of the regularization term and the standard objective function $L(x, y; \theta)$. Setting $\alpha$ to 0 indicates that there is no regularization, and the larger the $\alpha$, the greater the corresponding regularization contribution. By introducing regularization terms we hope to limit the number of nonzero elements in $W$, so that the weight parameters are as small as possible and close to 0. The most frequently used regularization penalty is the L2 norm, which suppresses large weights by applying an element-by-element squared penalty to all parameters. 
The L2 parameter norm penalty is also known as weight decay [23], which is a regularization strategy that drives the weights closer to the origin by adding a regularization term $\Omega = \frac{1}{2} \lVert W \rVert_2^2$ to the objective function. 2.3.3 Gradient-based optimization method As mentioned earlier, model training is achieved by minimizing loss functions in machine learning. In general, the loss function is very complicated, and it is difficult to find an analytic expression for its minimum. Gradient descent [24] is designed to solve this kind of problem. For ease of understanding, let us take an example and regard](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-46-2048.jpg)

![the process of finding the minimum value of a loss function as “standing somewhere on a slope and looking for the lowest point”. We do not know the exact location of the lowest point, so the gradient descent strategy is to take a small step in the downhill direction, and after a long downward walk, there is a high probability of ending up near the lowest point. We choose the downhill direction to be the negative direction of the gradient, because the negative gradient at each point is the steepest descent direction of the function at that point. Deep neural networks usually use gradient descent to update parameters, and by introducing randomness, adjusting the learning rate, and other methods, it is hoped that the networks can avoid falling into poor local minima and converge to better points. This is the traditional idea of gradient descent. Stochastic gradient descent (SGD) is one of the most frequently used methods for updating parameters. In this method, the gradient of the loss function with respect to the parameters is computed by using a mini-batch of random samples from the whole data set. SGD typically divides the whole data set into several small batches of sample data, then iterates over the batches, computing losses and gradients, and finally updates the parameters. Given the neural network parameter $\theta$, collect a small batch $x_1, x_2, \dots, x_N$ containing $N$ samples from the training set, where $x_i$ corresponds to the label $y_i$. The following equations show the computation principle of gradient descent [25]. Gradient computation: $\hat{g} \leftarrow \frac{1}{N} \nabla_\theta \sum_{i=1}^{N} L(f(x_i; \theta), y_i)$. (2.11) Apply update: $\theta \leftarrow \theta - \varepsilon \hat{g}$, (2.12) where $\hat{g}$ represents the gradient of the loss function with respect to parameter $\theta$, and $\varepsilon$ is called the learning rate, which is a hyperparameter that controls the step size of the parameter update. 
With too large a learning rate, the parameters fluctuate near the minimum and fail to converge, whereas too small a learning rate makes convergence slow. The learning rate can be adjusted by experience or by algorithms. For example, the learning process may be slower when a flat or high-curvature area is encountered. A momentum algorithm can be added to SGD to improve](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-47-2048.jpg)

![the convergence speed. At present, there are also adaptive algorithms such as the Adaptive Moment Estimation (Adam) algorithm [26] and the RMSProp algorithm [27], which adapt both the gradient and the learning rate to achieve good results. In deep learning, gradient computation is complicated because of the large number of network layers and parameters. The back propagation algorithm [28] is widely used in the gradient computation of neural network parameters. The principle of the back propagation algorithm is to compute the gradient of the loss function with respect to each weight parameter layer by layer through the chain rule. Based on the chain rule, the backward iteration starts from the last layer, and the weight parameters of the model are updated at the end of each iteration. In the process of model training, weight parameters are constantly updated by inputting different batches of data until the loss function value converges, yielding a better parameter solution. 2.4 Computational complexity From the perspective of complexity, two considerations should be taken into account when designing a CNN. One is the amount of computation required by the network, and the other is the scale of the parameters of the model and the input and output features of each layer. The former determines the speed of network training or inference, usually measured by time complexity, and the latter determines how much memory a computing device needs, usually measured by space complexity. The time complexity of an algorithm is a function that describes the running time of the algorithm for an input of a given size. It describes the trend of change in code execution time as the data size increases. Generally speaking, the time complexity of the algorithm can be understood as the total time spent executing it. 
On a specific device, the time is determined by the total amount of computation required by the execution of the algorithm. The frequently used units for measuring the amount of computation of deep learning algorithms are the number of required floating-point operations (FLOPs) and FLOPS. Floating-point operations per second (FLOPS) is a measure of computer performance, useful in fields of scientific computation that require floating-point calculations. For such cases, it is a more accurate measure than instructions per second. At present, the total amount of computation of most convolutional neural networks can reach dozens or even hundreds of GigaFLOPs, such](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-48-2048.jpg)

![as the common convolutional neural network models MobileNet-V2 [29] and ResNet-50 [30], with a total amount of computation of 33.6 GFLOPs to 109.8 GFLOPs, which makes it difficult for neural networks deployed at the edge to complete real-time inference. The space complexity refers to the amount of memory space required to solve an instance of the computational problem as a function of the characteristics of the input, which is usually measured in units of computer memory. Inside a computer, information is stored, computed, and transmitted in binary form. The most basic units of storage are bits and bytes. In convolutional neural networks, the space complexity is mainly determined by the size of the parameters at all layers. The parameters of a convolutional layer are mainly determined by the size and number of convolutional kernels, whereas the parameters of a fully connected layer are determined by the number of input neurons and output neurons. Take AlexNet [31], the champion model of the 2012 ImageNet Image Classification Challenge, for example. It contains five convolutional layers with parameter sizes of 35 KB, 307 KB, 884 KB, 1.3 MB, and 442 KB, respectively, and three fully connected layers with parameter sizes of 37 MB, 16 MB, and 4 MB. The total size of parameters in AlexNet is about 60 MB, of which the fully connected layers contribute 57 MB. Some CNN models that emerged after AlexNet performed better, but they were difficult to deploy on edge computing terminals due to their high space complexity. References [1] D. Svozil, V. Kvasnicka, J. Pospichal, Introduction to multi-layer feed-forward neural networks, Chemometrics and Intelligent Laboratory Systems 39 (1) (1997) 43–62. [2] B.D. Ripley, Neural networks and related methods for classification, Journal of the Royal Statistical Society: Series B (Methodological) 56 (3) (1994) 409–437. 
[3] R. Sylwester, A Celebration of Neurons: An Educator’s Guide to the Human Brain, ERIC, 1995. [4] A.N. Burkitt, A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input, Biological Cybernetics 95 (1) (2006) 1–19. [5] F. Agostinelli, M. Hoffman, P. Sadowski, P. Baldi, Learning activation functions to improve deep neural networks, arXiv:1412.6830 [abs]. [6] G. Huang, Y. Chen, H.A. Babri, Classification ability of single hidden layer feedforward neural networks, IEEE Transactions on Neural Networks 11 (3) (2000) 799–801. [7] Y. Pang, M. Sun, X. Jiang, X. Li, Convolution in convolution for network in network, IEEE Transactions on Neural Networks and Learning Systems 29 (5) (2017) 1587–1597. [8] W. Luo, Y. Li, R. Urtasun, R. Zemel, Understanding the effective receptive field in deep convolutional neural networks, in: Proceedings of International Conference on Neural Information Processing Systems, 2016, pp. 4905–4913.](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-49-2048.jpg)

![[9] S. Albawi, T.A. Mohammed, S. Al-Zawi, Understanding of a convolutional neural network, in: Proceedings of International Conference on Engineering and Technology, 2017, pp. 1–6. [10] D. Yu, H. Wang, P. Chen, Z. Wei, Mixed pooling for convolutional neural networks, in: Proceedings of International Conference on Rough Sets and Knowledge Technology, 2014. [11] S. Hijazi, R. Kumar, C. Rowen, et al., Using convolutional neural networks for image recognition, Cadence Design Systems (2015) 1–12. [12] H. Ajmal, S. Rehman, U. Farooq, Q.U. Ain, F. Riaz, A. Hassan, Convolutional neural network based image segmentation: a review, in: Proceedings of Pattern Recognition and Tracking XXIX, 2018. [13] Z. Wang, J. Liu, A review of object detection based on convolutional neural network, in: Proceedings of Chinese Control Conference, 2017, pp. 11104–11109. [14] Z.C. Lipton, A critical review of recurrent neural networks for sequence learning, arXiv:1506.00019 [abs]. [15] J.J. Hopfield, Neural networks and physical systems with emergent collective computational abilities, Proceedings of the National Academy of Sciences 79 (8) (1982) 2554–2558. [16] S. Hochreiter, J. Schmidhuber, Long short-term memory, Neural Computation 9 (8) (1997) 1735–1780. [17] R. Dey, F.M. Salem, Gate-variants of gated recurrent unit (GRU) neural networks, in: Proceedings of IEEE International Midwest Symposium on Circuits and Systems, 2017, pp. 1597–1600. [18] D.M. Kline, V. Berardi, Revisiting squared-error and cross-entropy functions for training neural network classifiers, Neural Computing Applications 14 (2005) 310–318. [19] M.W. Schmidt, G. Fung, R. Rosales, Fast optimization methods for L1 regularization: A comparative study and two new approaches, in: Proceedings of European Conference on Machine Learning, 2007. [20] P. Bühlmann, B. 
Yu, Boosting with the L2 loss: regression and classification, Journal of the American Statistical Association 98 (462) (2003) 324–339. [21] S. Singh, D. Singh, S. Kumar, Modified mean square error algorithm with reduced cost of training and simulation time for character recognition in backpropagation neu- ral network, in: Proceedings of International Conference on Frontiers in Intelligent Computing: Theory and Applications, 2013. [22] W. Liu, Y. Wen, Z. Yu, M. Yang, Large-margin softmax loss for convolutional neural networks, arXiv:1612.02295 [abs]. [23] A. Krogh, J. Hertz, A simple weight decay can improve generalization, in: Proceedings of International Conference on Neural Information Processing Systems, 1991. [24] E. Dogo, O. Afolabi, N. Nwulu, B. Twala, C. Aigbavboa, A comparative analysis of gradient descent-based optimization algorithms on convolutional neural networks, in: Proceedings of International Conference on Computational Techniques, Electronics and Mechanical Systems, 2018, pp. 92–99. [25] Y. Bengio, I. Goodfellow, A. Courville, Deep Learning, vol. 1, MIT press, Mas- sachusetts, USA, 2017. [26] D.P. Kingma, J. Ba Adam, A method for stochastic optimization, arXiv:1412.6980 [abs]. [27] G. Hinton, N. Srivastava, K. Swersky, RMSProp: Divide the gradient by a running average of its recent magnitude, Neural Networks for Machine Learning, Coursera lecture 6e (2012) 13. [28] D.E. Rumelhart, G.E. Hinton, R.J. Williams, Learning representations by back- propagating errors, Nature 323 (6088) (1986) 533–536.](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-50-2048.jpg)

![[29] M. Sandler, A.G. Howard, M. Zhu, A. Zhmoginov, L.-C. Chen, MobileNetV2: Inverted residuals and linear bottlenecks, in: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2018. [30] K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, 2016. [31] A. Krizhevsky, I. Sutskever, G.E. Hinton, ImageNet classification with deep convolutional neural networks, Communications of the ACM 60 (2012) 84–90.](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-51-2048.jpg)

![CHAPTER 3 Model design and compression 3.1 Background and challenges Although convolutional neural networks have achieved good results in fields such as computer vision and natural language processing, their massive parameter counts make them daunting for many applications based on embedded devices. At present, deep learning models require large amounts of computing resources and memory, often accompanied by huge energy consumption. Large models become the biggest bottleneck when we need to deploy models on terminal devices with limited computing resources for real-time inference. The training and inference of deep neural networks usually rely heavily on GPUs with high computing capability. The huge scale of features and the deluge of model parameters also greatly increase the time required. Take AlexNet [1] as an example: the network contains 60 million parameters, and training the entire model on the ImageNet data set with an NVIDIA K40 takes two to three days. In fact, Denil et al. [9] have shown that deep neural networks are severely overparameterized, and that a small subset of the parameters can be used to reconstruct the remaining parameters. For example, ResNet-50 [10], which has 50 convolutional layers, requires more than 95 MB of storage memory and more than 3.8 billion floating-point multiplication operations to process an image. After some redundant weights are discarded, the network still works as usual, but more than 75% of the parameters and 50% of the computation time can be saved. This indicates that there is huge redundancy in the parameters of the model, which reveals the feasibility of model compression. In the field of deep neural network study, model compression and acceleration have received great attention from researchers, and great progress has been made in the past few years.
Significant advances in intelligent wearable devices and AIoT in recent years have created unprecedented opportunities for researchers to address the fundamental challenges of deploying deep learning systems to portable devices with limited resources, such as memory, CPU, energy, and bandwidth. A highly efficient deep learning method can have a significant impact on distributed systems, embedded devices, and FPGAs for artificial intelligence. For the design and compression of highly efficient deep neural network models, in this chapter, we will first analyze Deep Learning on Edge Computing Devices https://doi.org/10.1016/B978-0-32-385783-3.00011-9 Copyright © 2022 Tsinghua University Press. Published by Elsevier Inc. All rights reserved.](https://image.slidesharecdn.com/22247573-250617220800-3c585c34/75/Deep-Learning-On-Edge-Computing-Devices-Design-Challenges-Of-Algorithm-And-Architecture-1st-Edition-Xichuan-Zhou-54-2048.jpg)