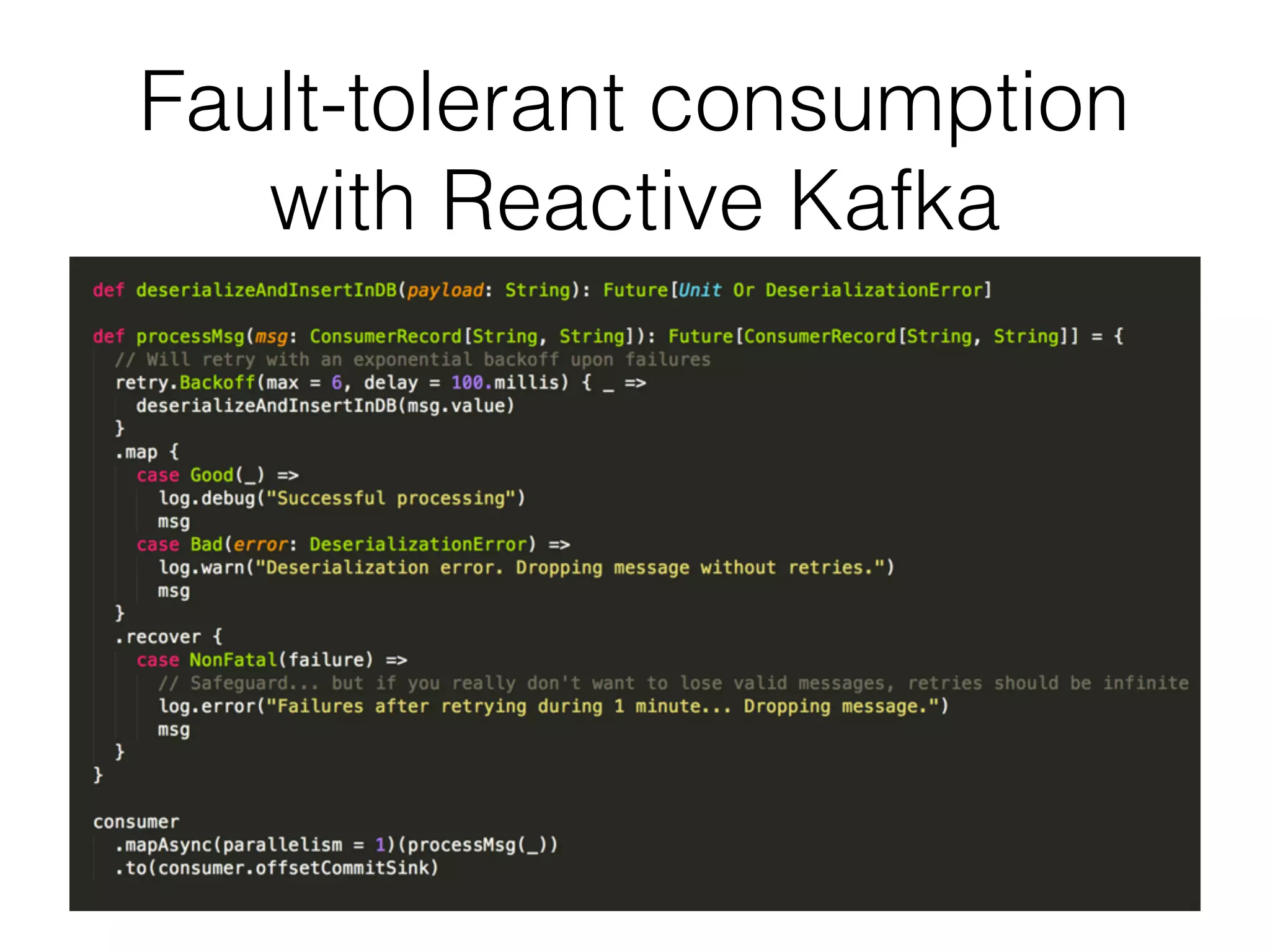

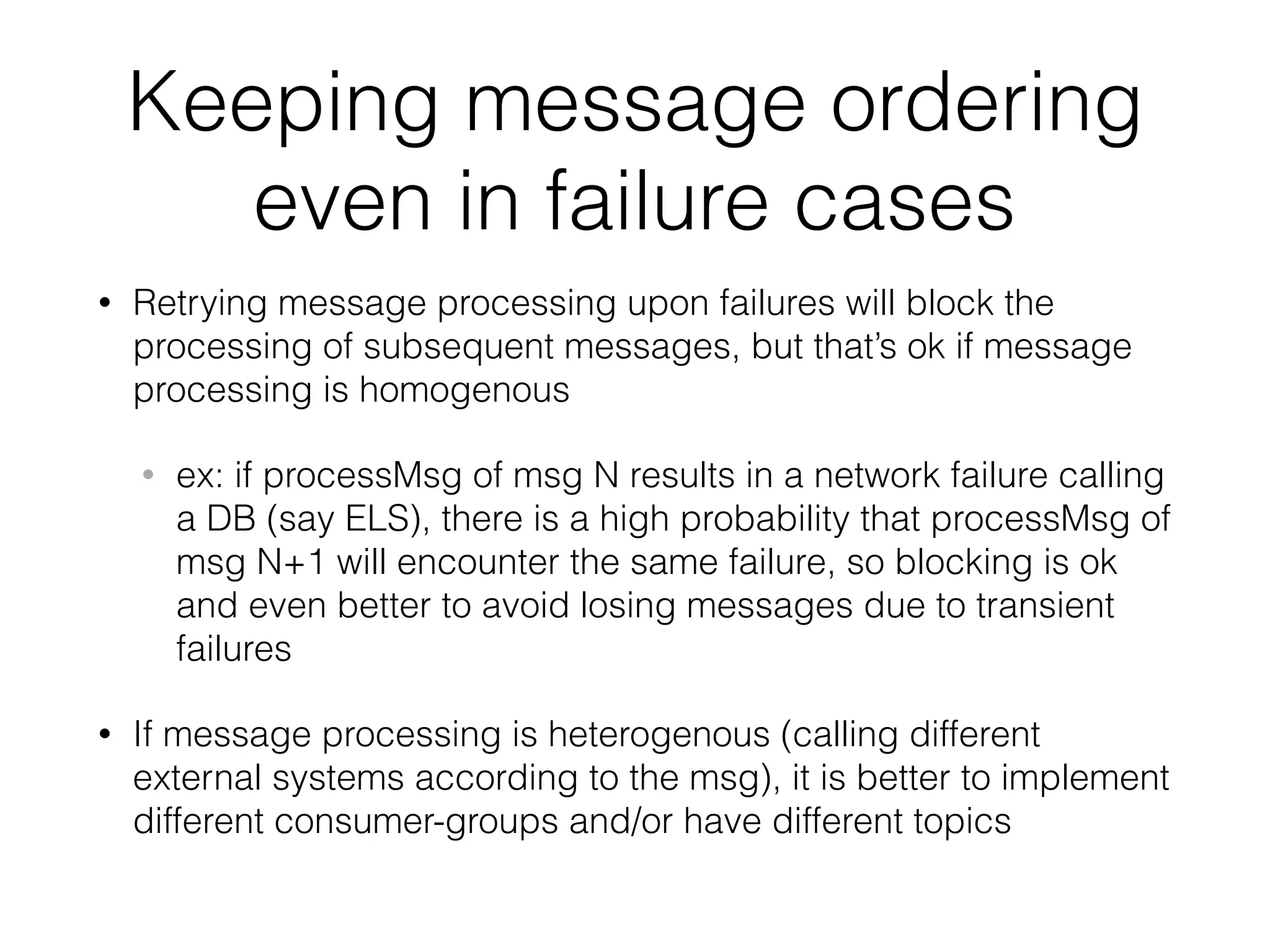

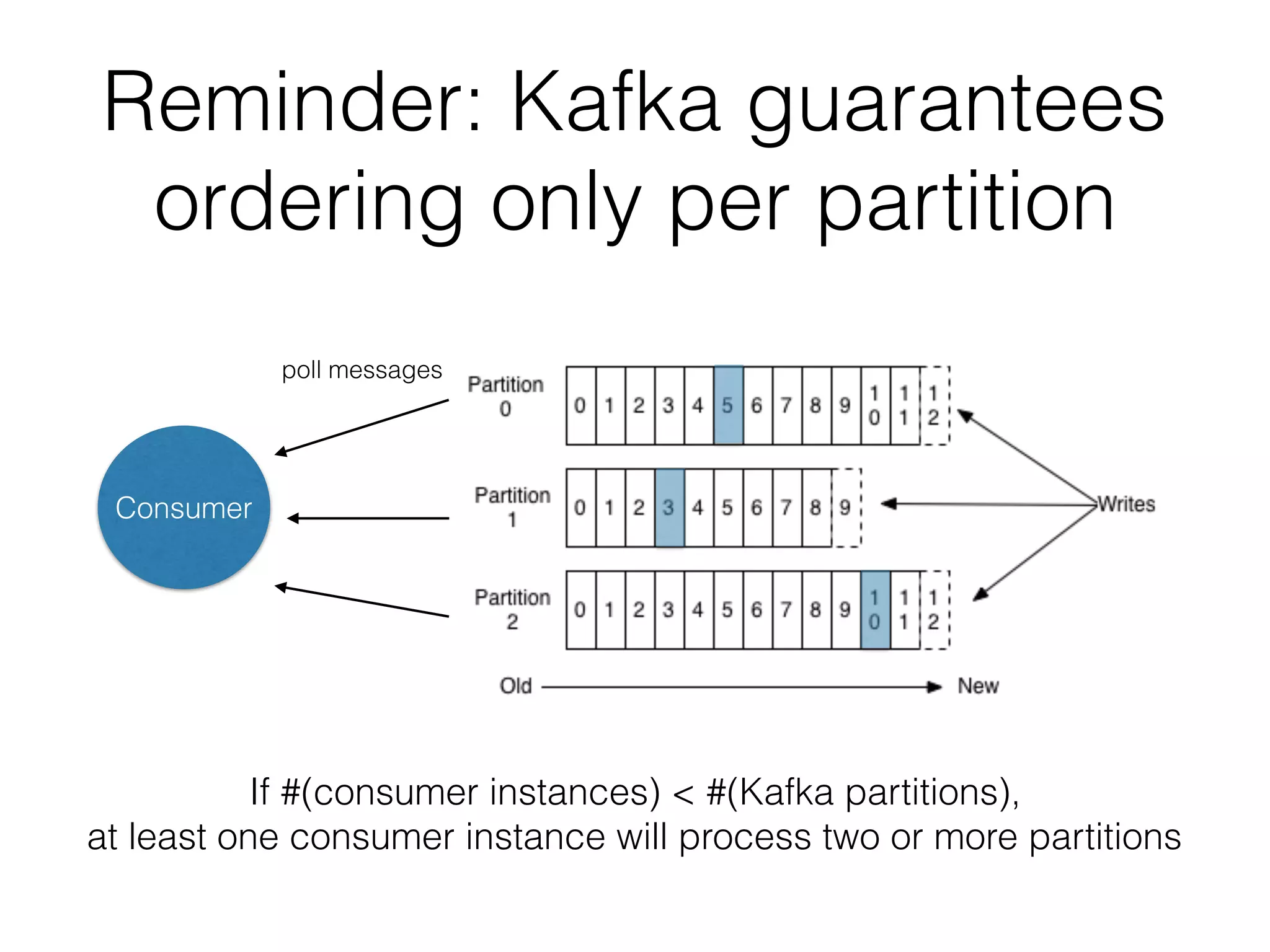

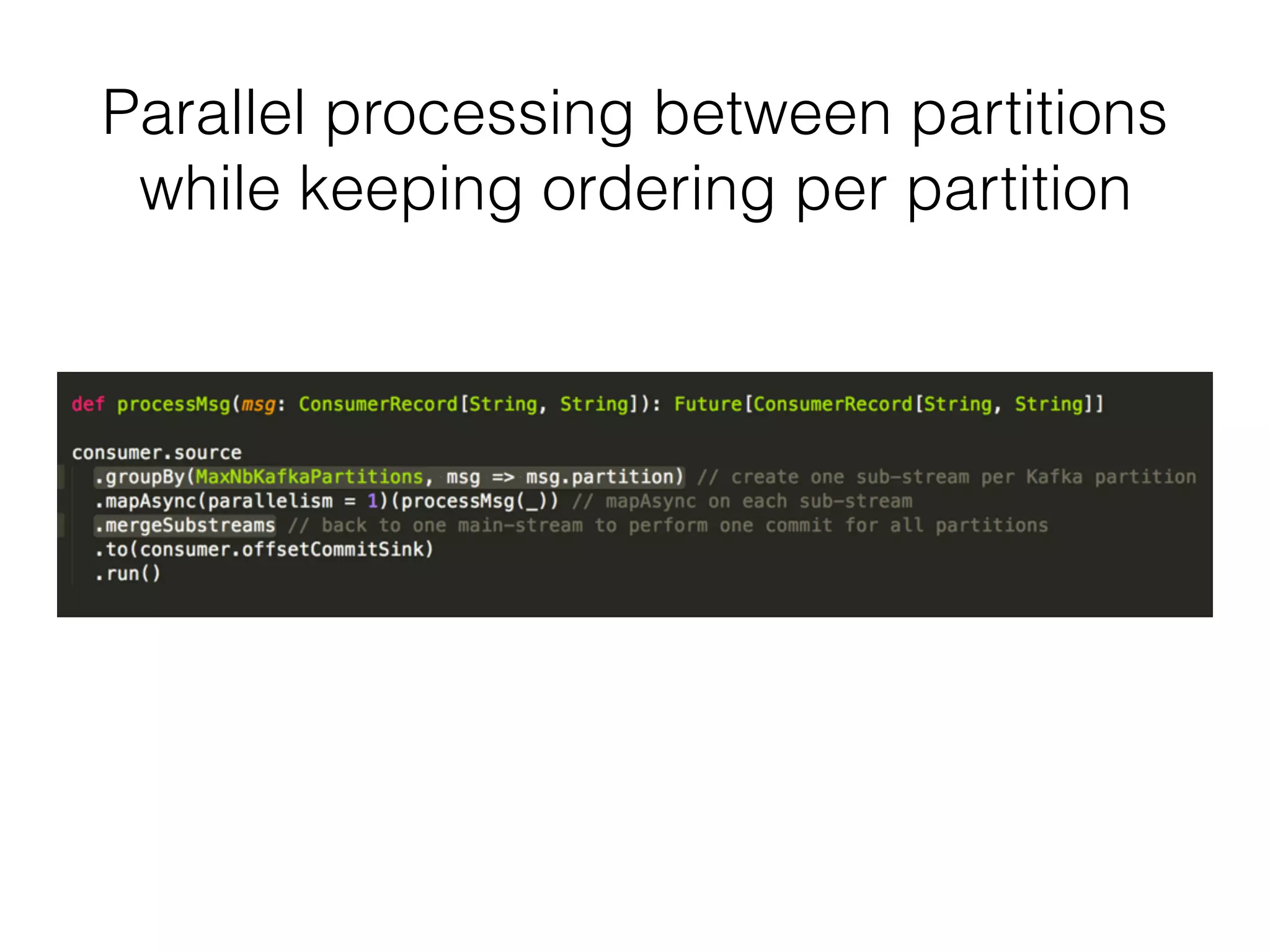

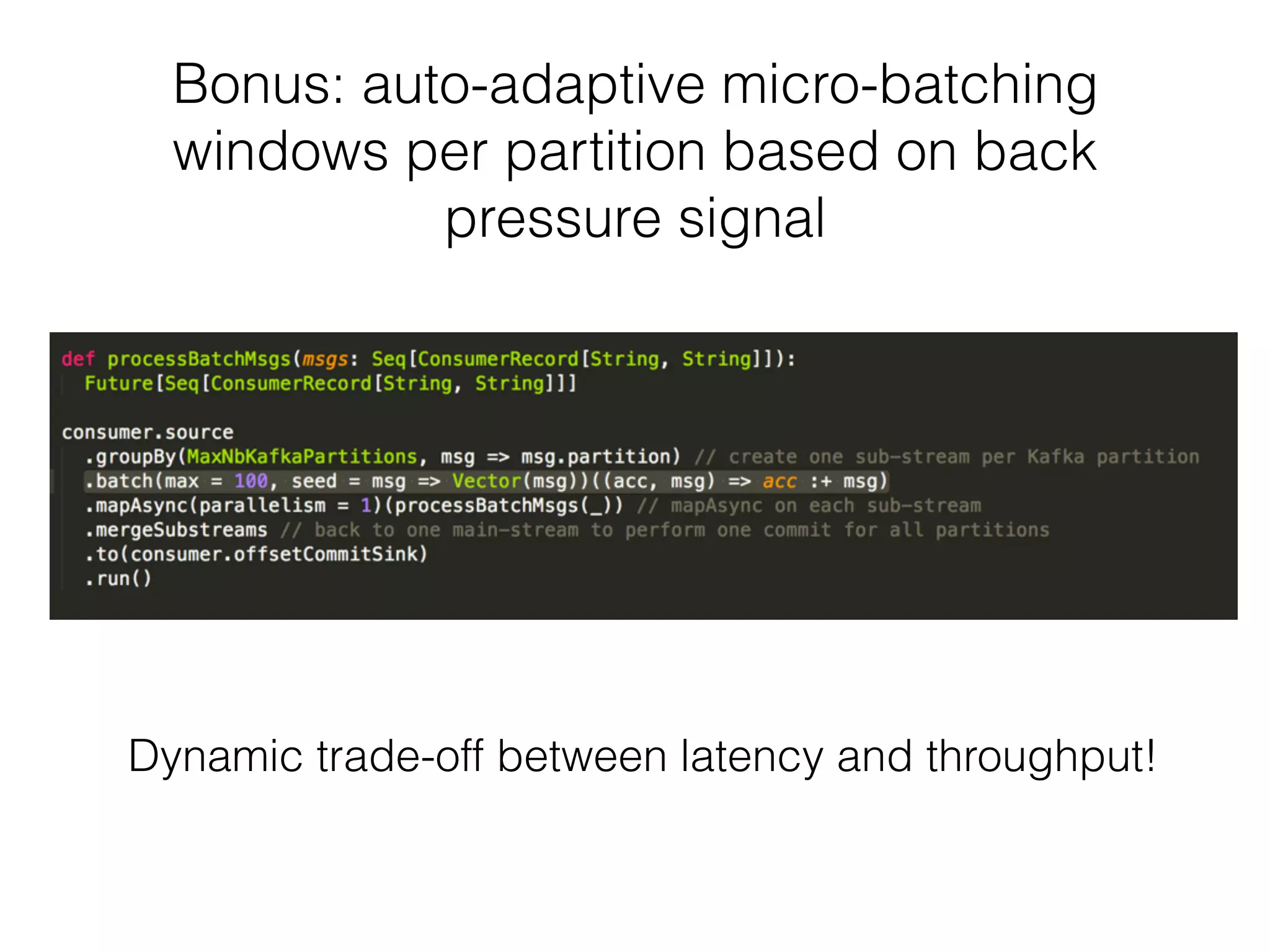

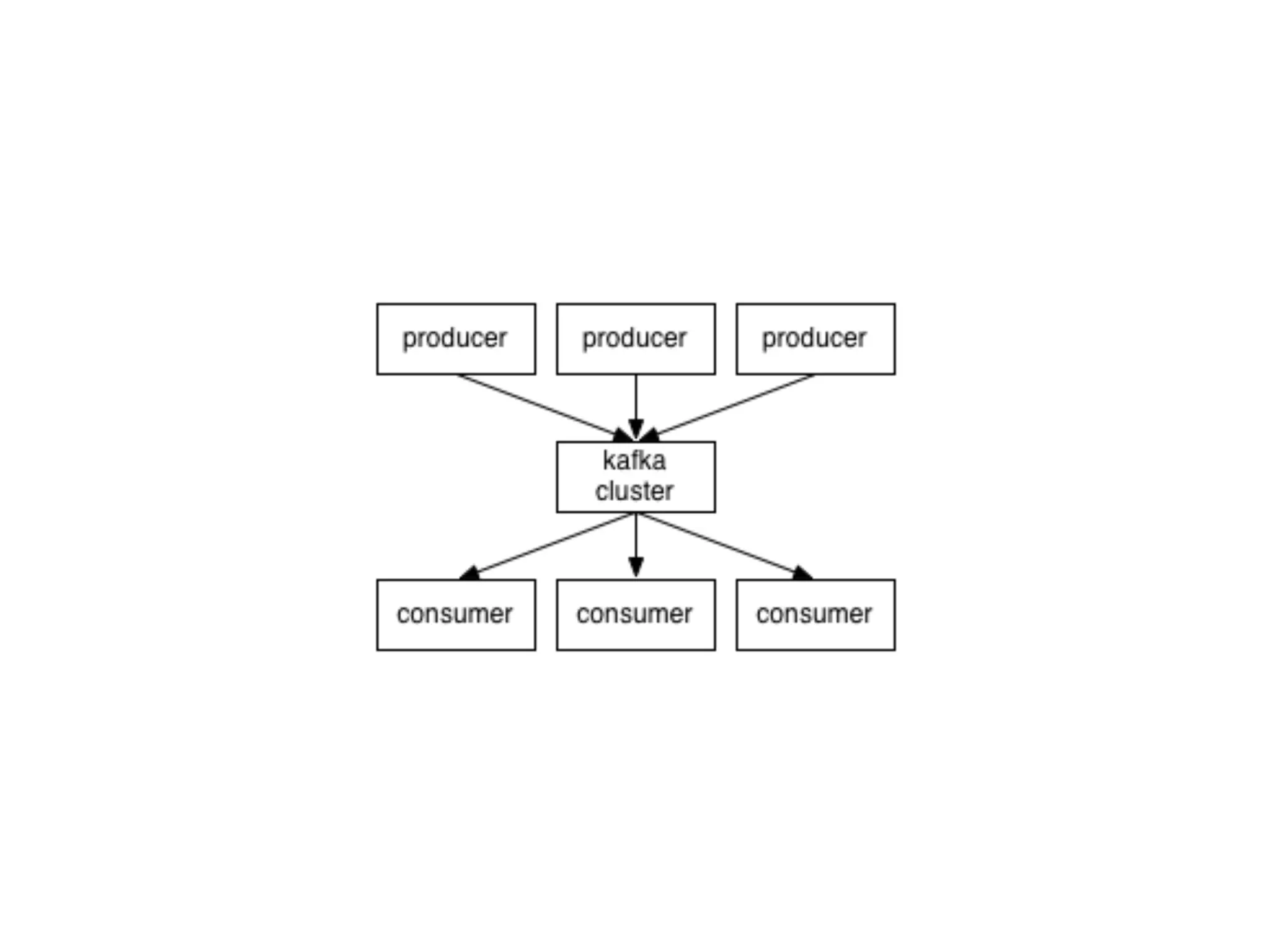

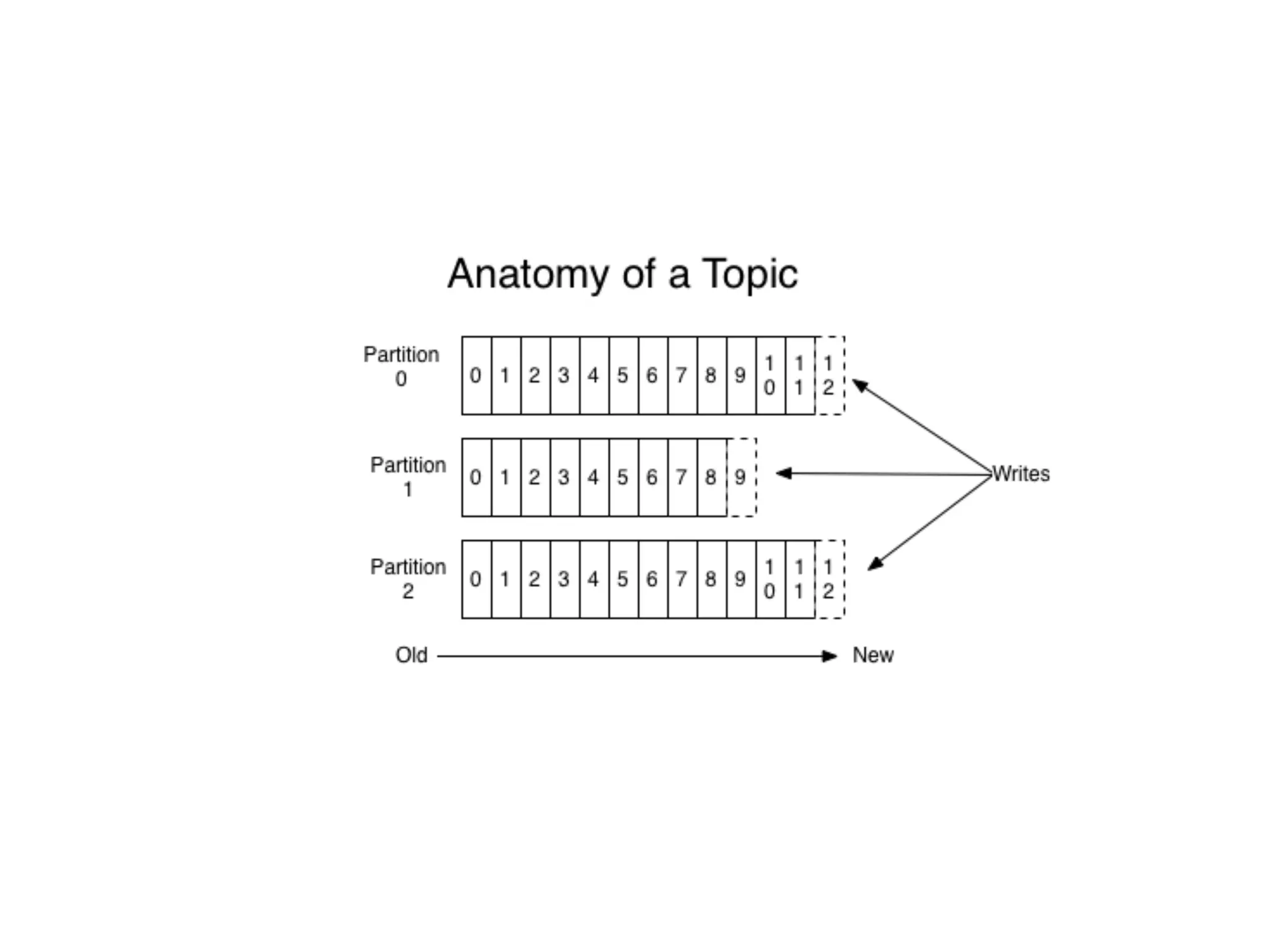

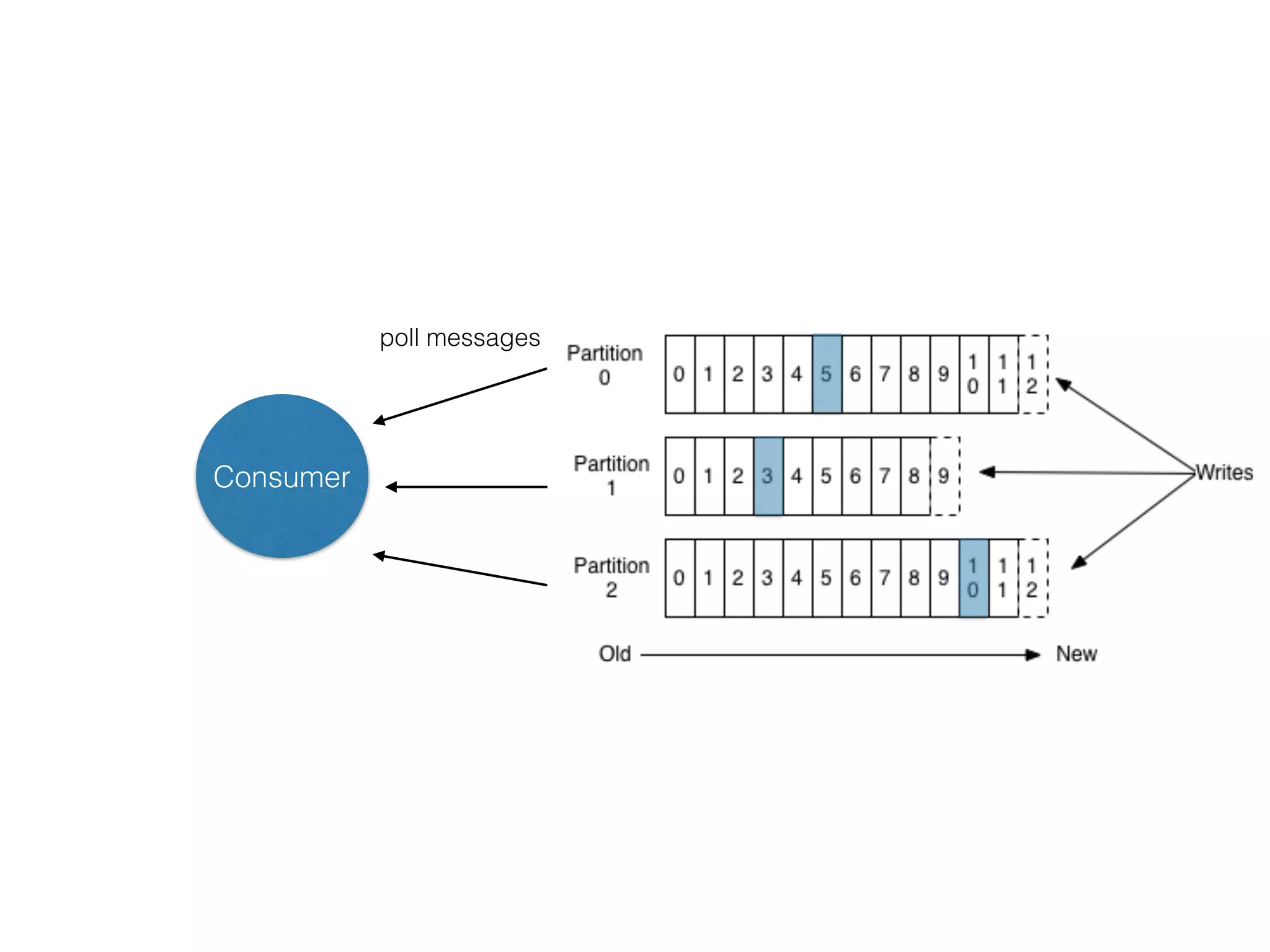

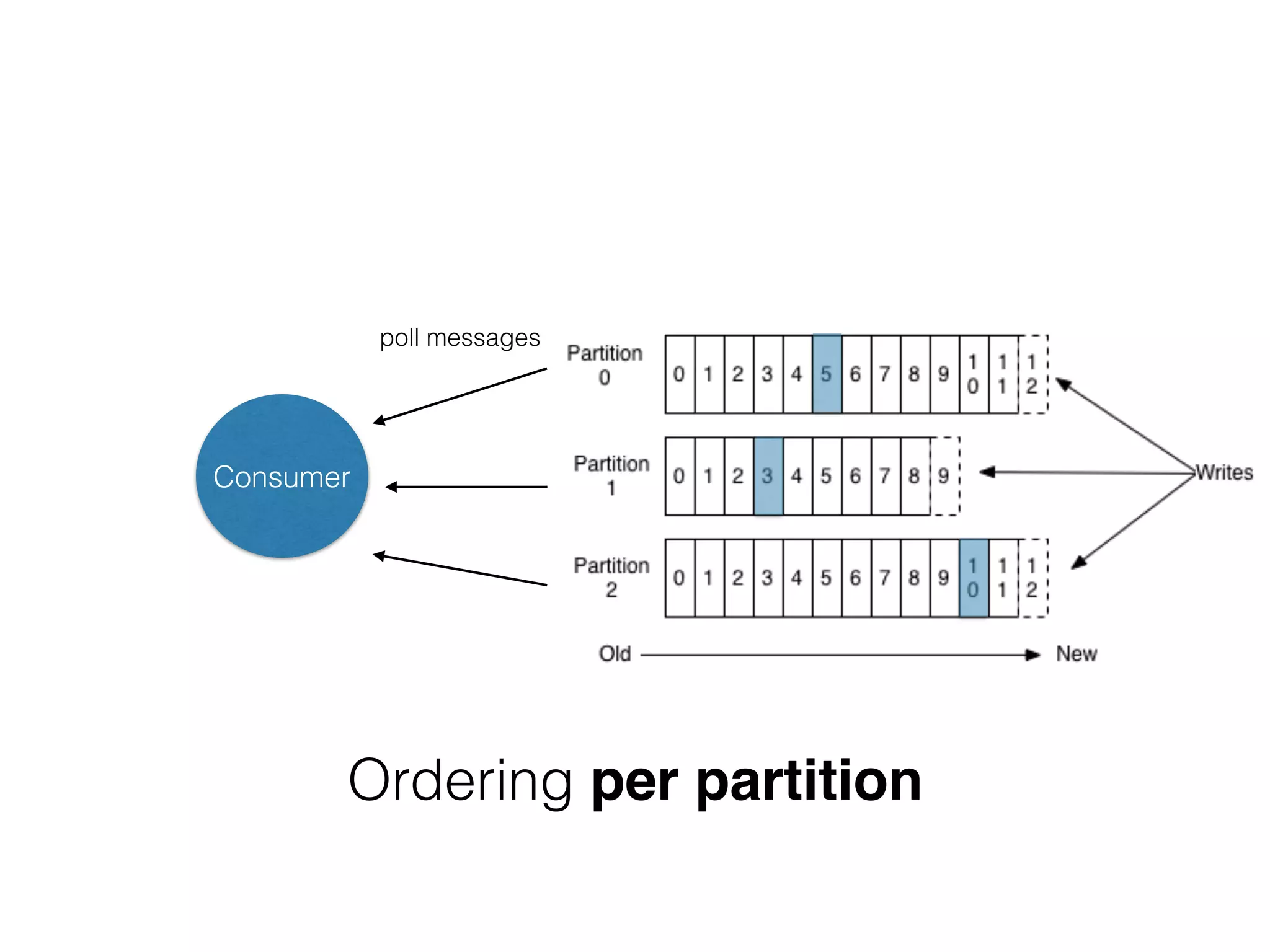

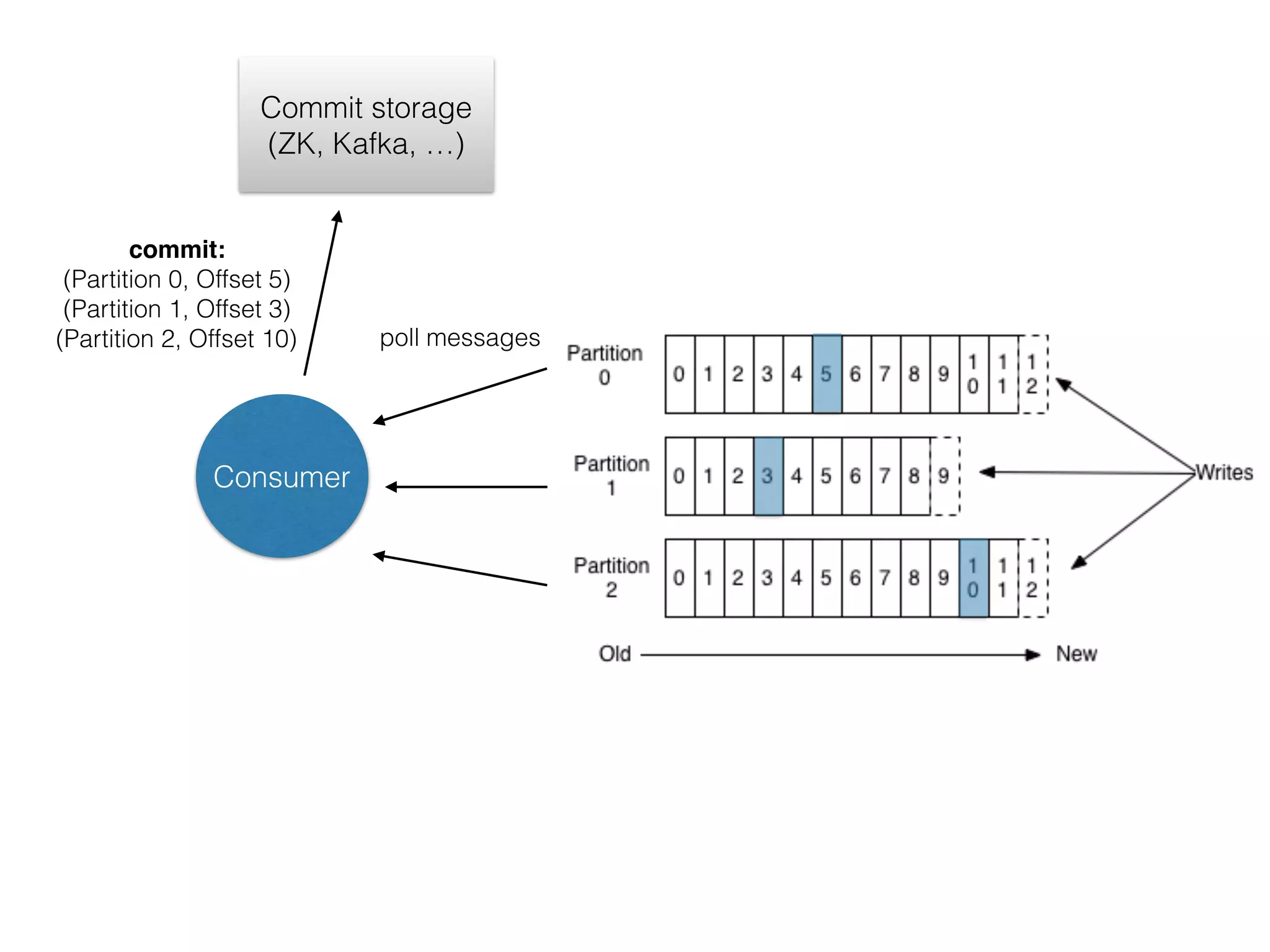

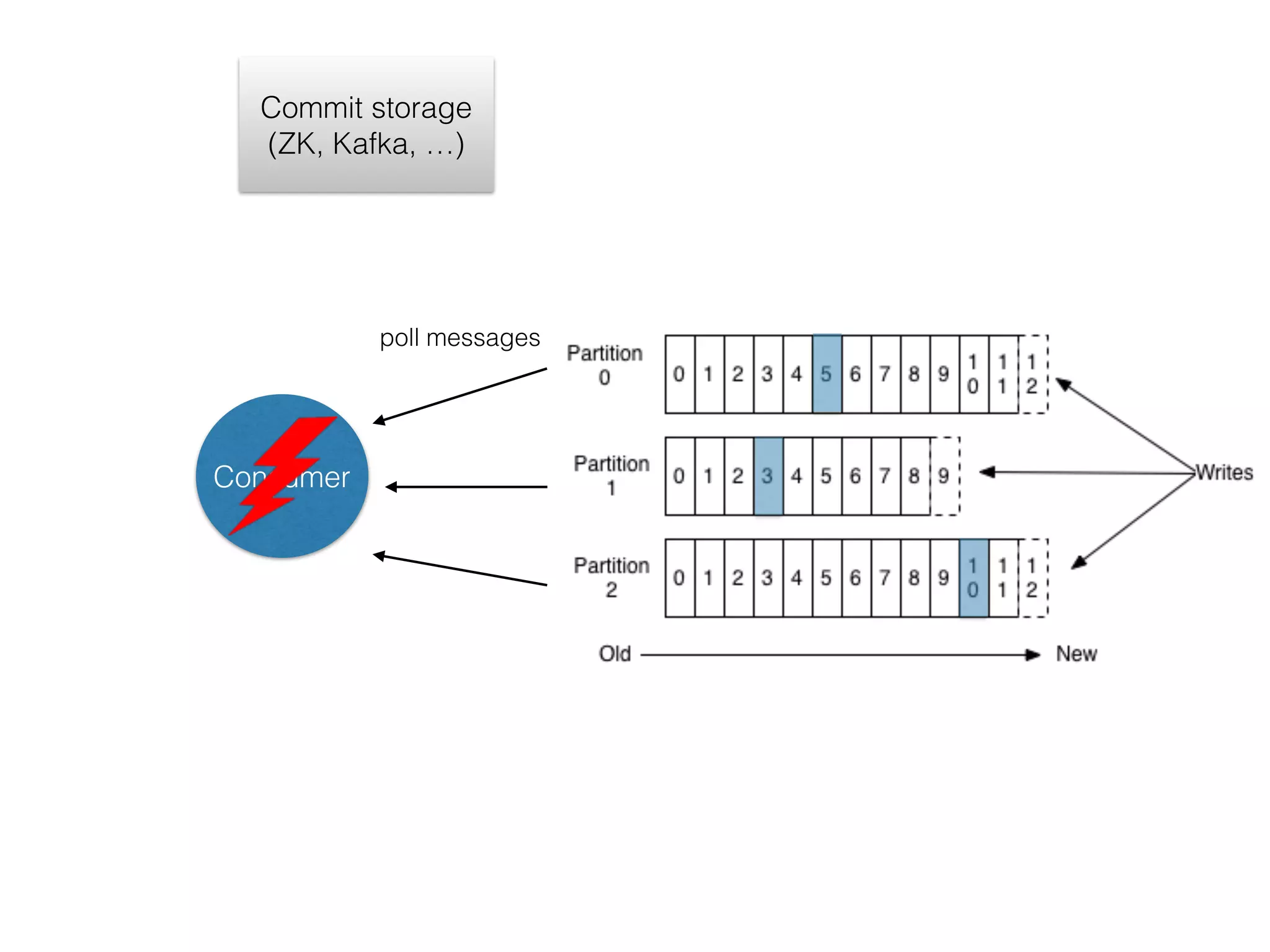

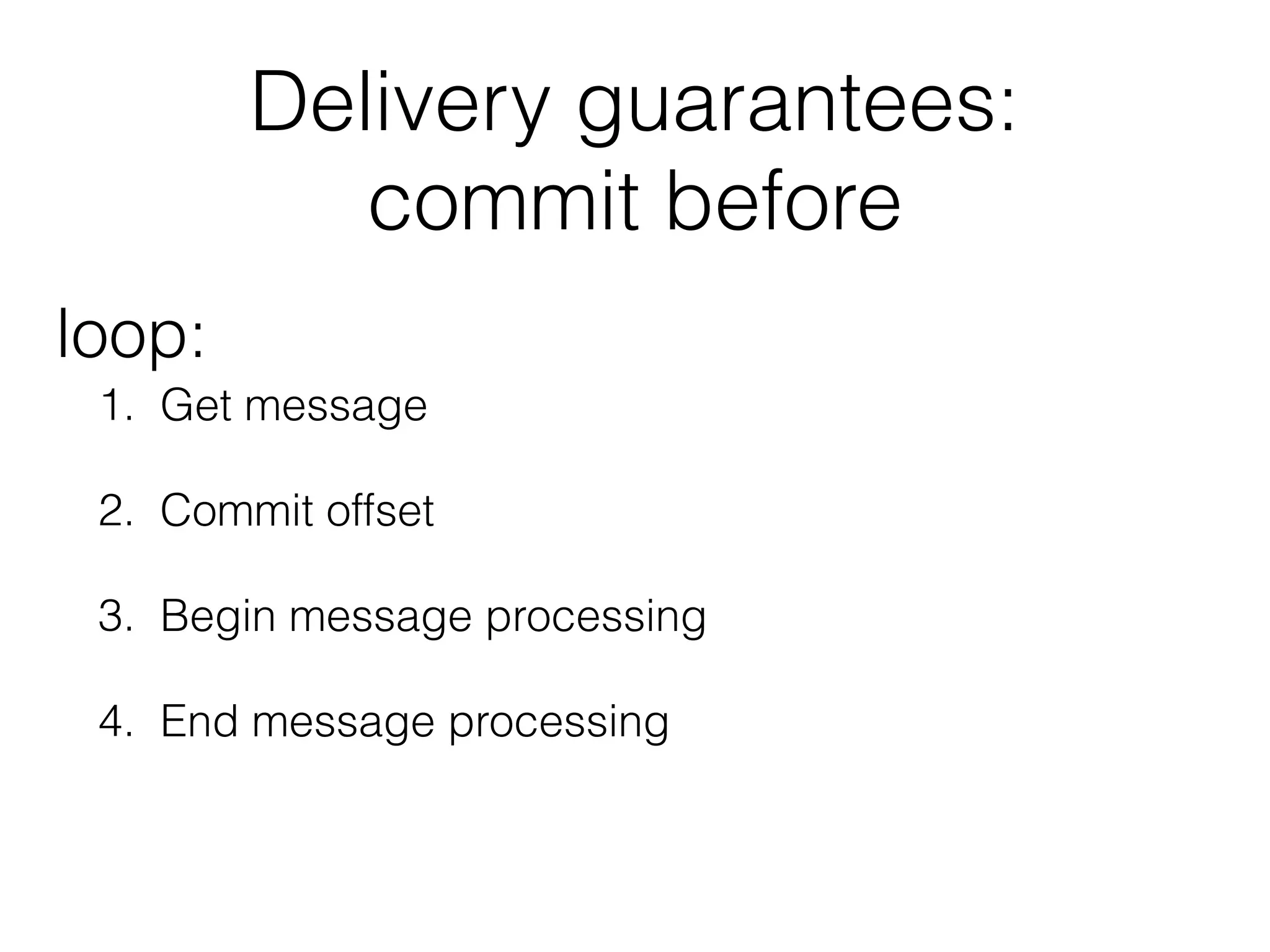

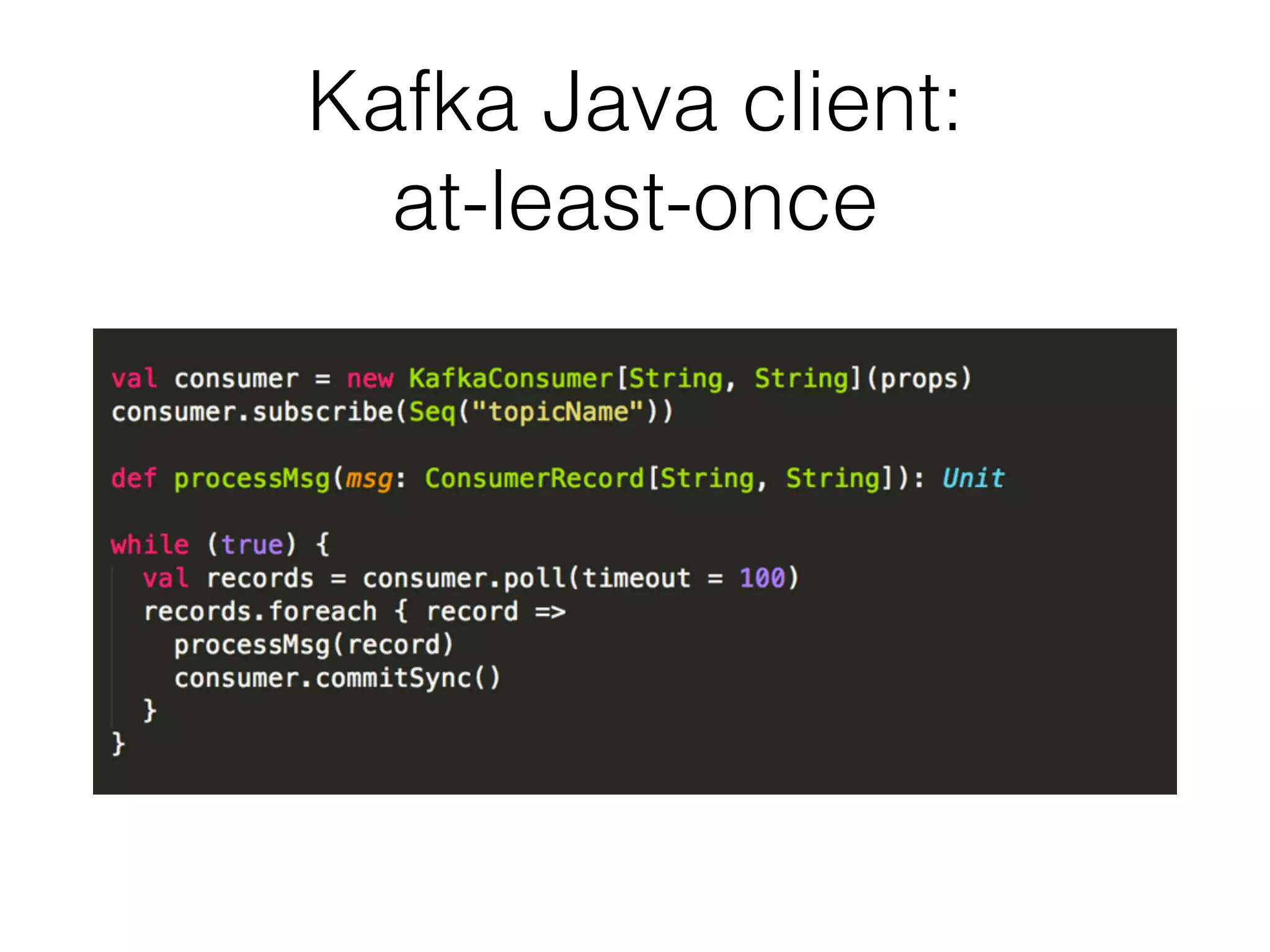

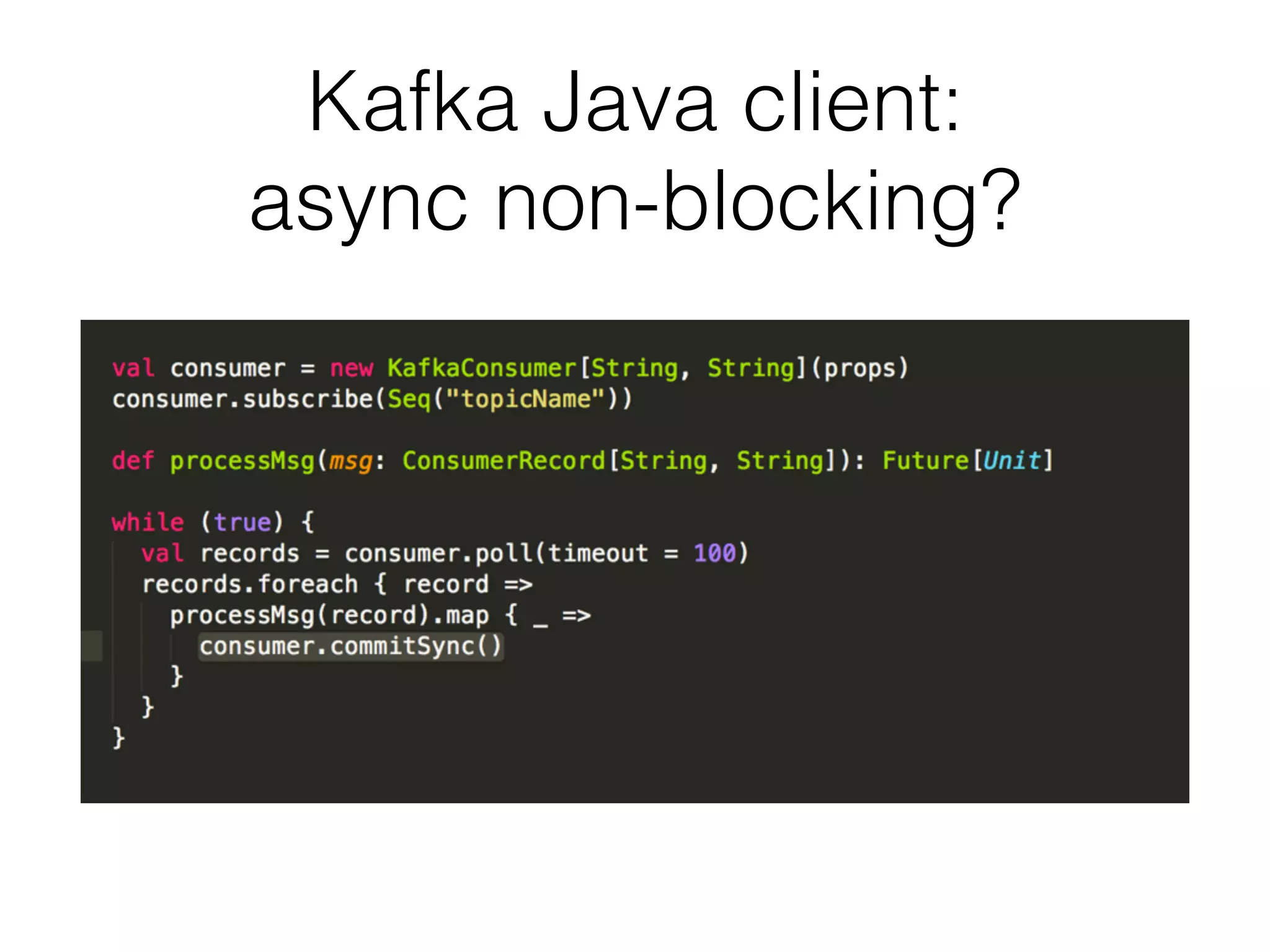

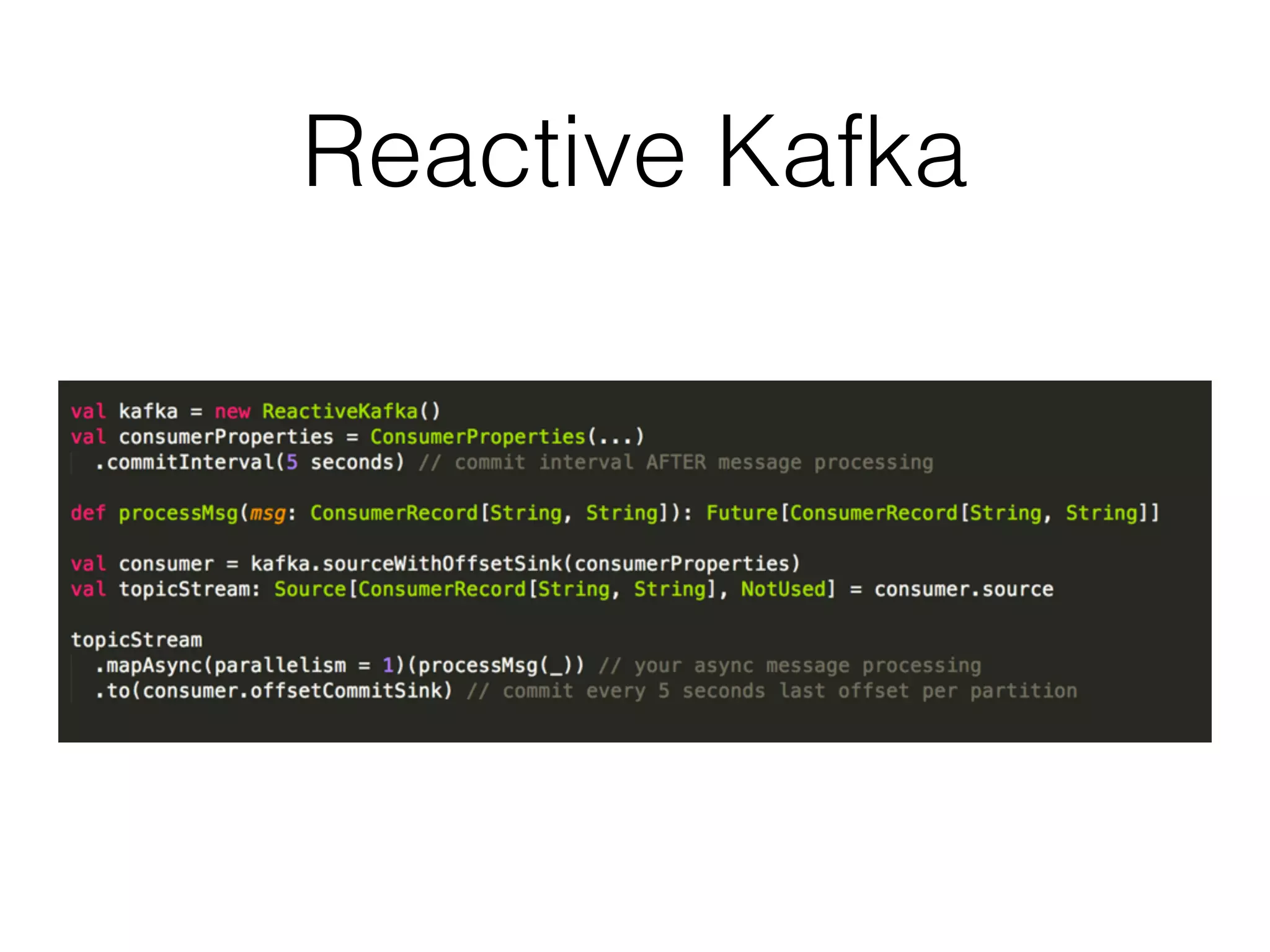

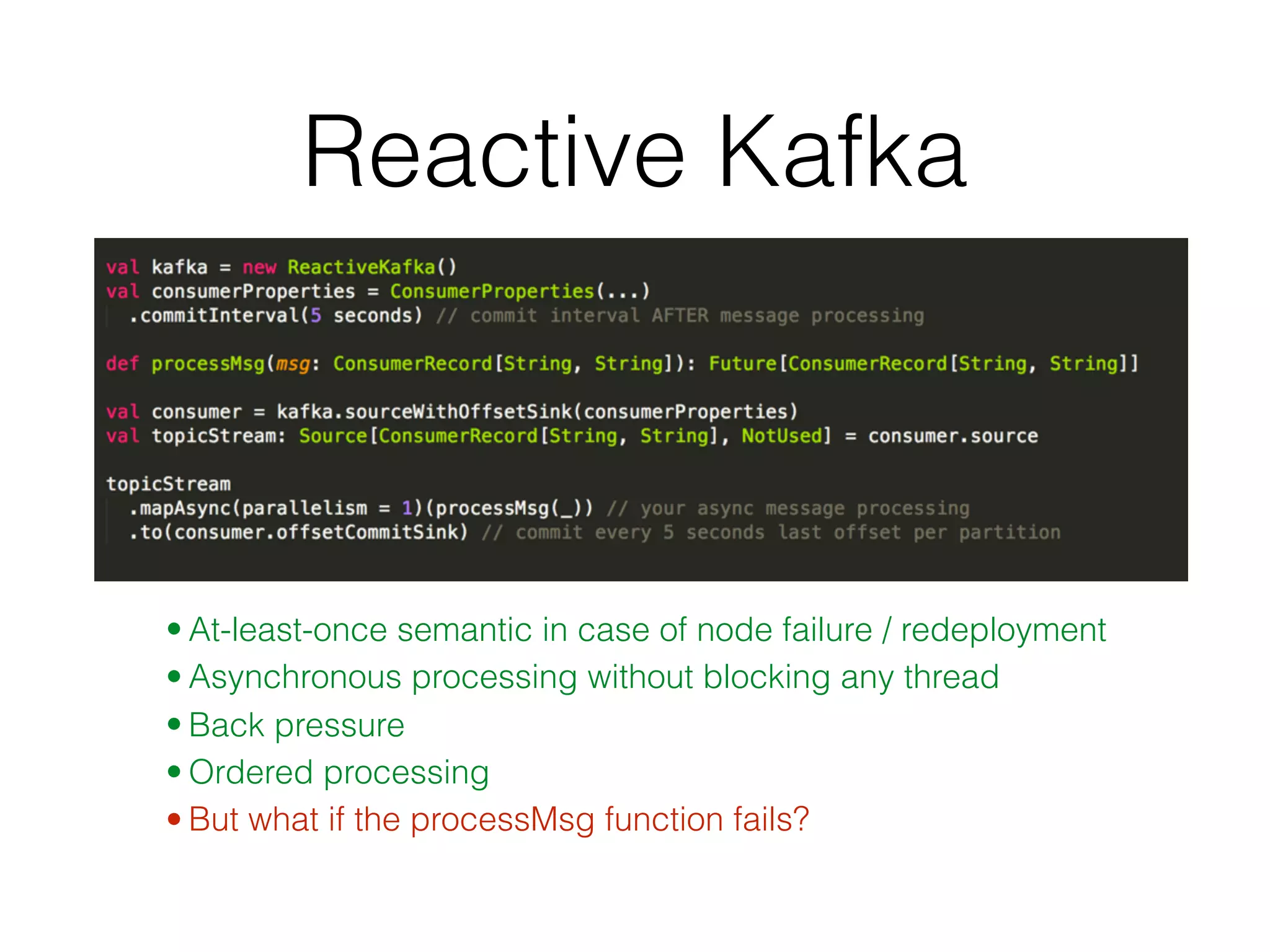

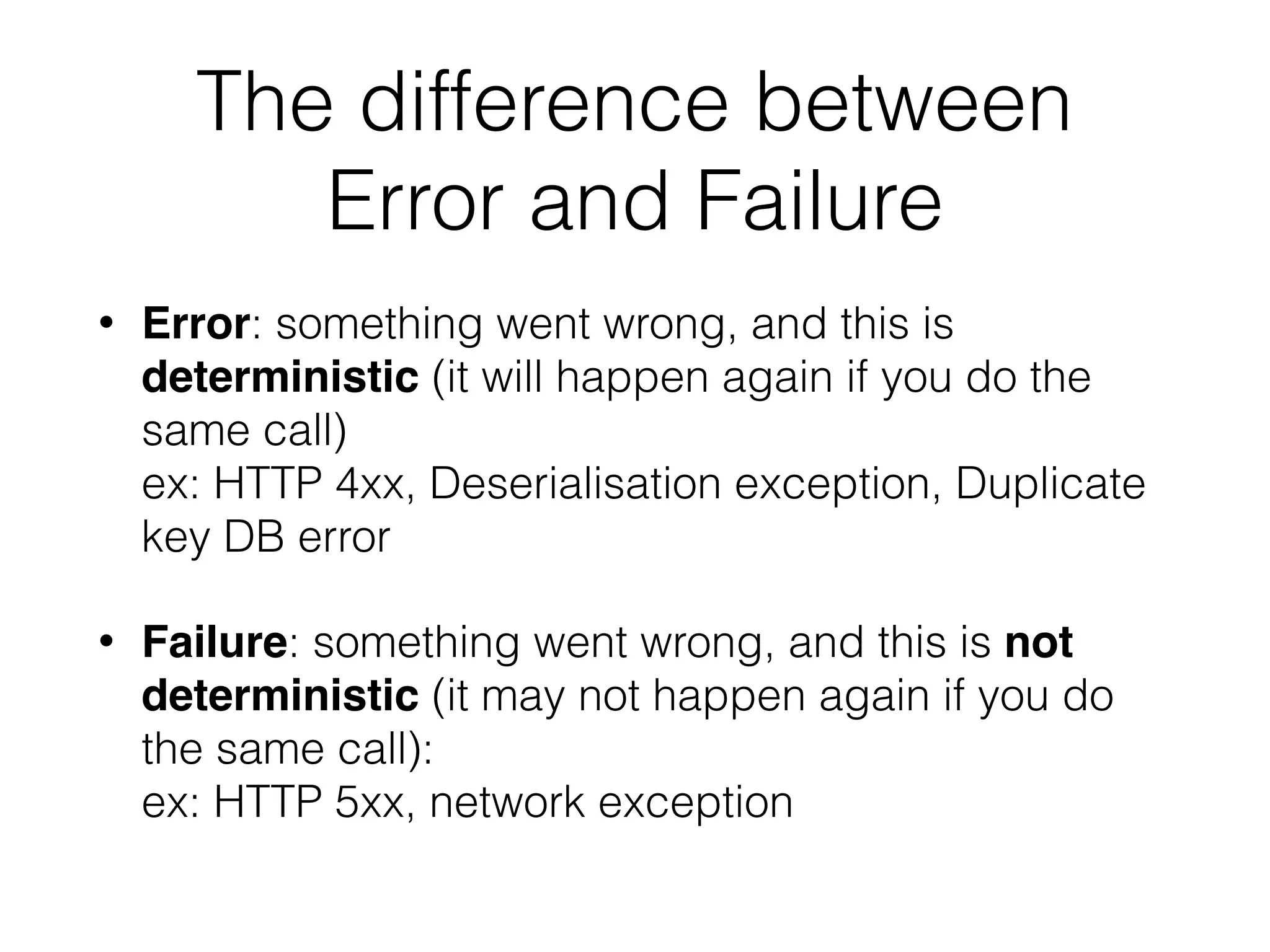

This document discusses fault-tolerant consumption from Apache Kafka using the Kafka Java client and Akka Streams. It describes how to achieve at-least-once processing by committing offsets only after messages have been processed, and how to preserve ordering when messages are processed asynchronously and in parallel across partitions, using reactive streams with back pressure. Dynamically micro-batching messages per partition offers a way to trade latency against throughput. With the right abstractions, fault-tolerant Kafka consumption takes only a few extra lines of code.
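To make the commit-after-processing idea concrete, here is a minimal sketch that uses only the Scala standard library. `PartitionLog`, `AtLeastOnceDemo`, and the batch size of 2 are hypothetical stand-ins for a single Kafka partition and its consumer; none of this is the Kafka client API. It only models the semantics: a crash before the commit causes redelivery, so a message may be processed twice but is never skipped.

```scala
import scala.collection.mutable.ArrayBuffer

// Hypothetical in-memory stand-in for one Kafka partition (not the Kafka client API).
final class PartitionLog(messages: Vector[String]) {
  private var committed: Long = 0L // next offset to read after a restart

  def committedOffset: Long = committed

  // Returns up to `max` (offset, message) pairs starting at offset `from`.
  def poll(from: Long, max: Int): Vector[(Long, String)] =
    messages.zipWithIndex
      .map { case (m, i) => (i.toLong, m) }
      .slice(from.toInt, from.toInt + max)

  def commit(nextOffset: Long): Unit = { committed = nextOffset }
}

object AtLeastOnceDemo {
  // Reads from the last committed offset and commits only after a whole batch
  // has been processed. `crashAtOffset` simulates a consumer crash mid-batch.
  def run(log: PartitionLog, processed: ArrayBuffer[String], crashAtOffset: Option[Long]): Unit = {
    var position = log.committedOffset
    var batch = log.poll(position, max = 2)
    while (batch.nonEmpty) {
      batch.foreach { case (offset, msg) =>
        if (crashAtOffset.contains(offset)) throw new RuntimeException(s"crash at offset $offset")
        processed += msg
      }
      position = batch.last._1 + 1
      log.commit(position) // commit AFTER processing, never before
      batch = log.poll(position, max = 2)
    }
  }

  // First run crashes at offset 3; the second run resumes from the committed offset.
  def demo(): (Vector[String], Long) = {
    val log = new PartitionLog(Vector("a", "b", "c", "d"))
    val processed = ArrayBuffer.empty[String]
    try run(log, processed, crashAtOffset = Some(3L))
    catch { case _: RuntimeException => () }
    run(log, processed, crashAtOffset = None)
    (processed.toVector, log.committedOffset)
  }
}
```

In this model, `AtLeastOnceDemo.demo()` processes `"c"` twice, because the crash happened after `"c"` was handled but before its batch was committed. That duplicate is the price of at-least-once delivery, so downstream processing should ideally be idempotent.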

![Async non-blocking? • In a Reactive/Scala world, message processing is usually asynchronous (non-blocking IO call to a DB, ask Akka actor, …): def processMsg(message: String): Future[Result] • How to process your Kafka messages while staying reactive (i.e. not blocking threads)?](https://image.slidesharecdn.com/kafkatalkpublic-160629133015/75/Deep-dive-into-Apache-Kafka-consumption-20-2048.jpg)
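One way to process messages asynchronously without losing their order is to chain the `Future`s of a single partition while letting different partitions run concurrently. The sketch below uses only `scala.concurrent`; the `Result` case class, the upper-casing body of `processMsg`, and the `Map[Int, List[String]]` input shape are assumptions made for illustration. The `Await.result` call is there only so the demo returns a value; real reactive code would keep composing the `Future`.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object OrderedAsyncDemo {
  implicit val ec: ExecutionContext = ExecutionContext.global

  // Hypothetical result type; the slide only names it.
  final case class Result(value: String)

  // Same signature as on the slide; the body is a placeholder for a non-blocking call.
  def processMsg(message: String): Future[Result] =
    Future(Result(message.toUpperCase))

  // Chains the futures of one partition: message n+1 starts only after message n completed.
  def processPartition(messages: List[String]): Future[List[Result]] =
    messages.foldLeft(Future.successful(List.empty[Result])) { (acc, msg) =>
      acc.flatMap(done => processMsg(msg).map(r => done :+ r))
    }

  // Partitions are independent of each other, so their chains run concurrently.
  def processAll(byPartition: Map[Int, List[String]]): Future[Map[Int, List[Result]]] =
    Future.traverse(byPartition.toList) { case (partition, msgs) =>
      processPartition(msgs).map(results => partition -> results)
    }.map(_.toMap)

  def demo(): Map[Int, List[String]] = {
    val input = Map(0 -> List("a", "b", "c"), 1 -> List("x", "y"))
    // Blocking here is for demonstration only.
    val results = Await.result(processAll(input), 5.seconds)
    results.map { case (partition, rs) => partition -> rs.map(_.value) }
  }
}
```

No thread is blocked while a message is in flight, yet results within each partition come back in the order the messages were read.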

![Akka Stream • Stream processing abstraction on top of Akka Actors • Types! Types are back! • Source[A] ~> Flow[A, B] ~> Sink[B] • Automatic back pressure](https://image.slidesharecdn.com/kafkatalkpublic-160629133015/75/Deep-dive-into-Apache-Kafka-consumption-26-2048.jpg)
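The `Source[A] ~> Flow[A, B] ~> Sink[B]` shape from the slide can be sketched with the Akka Streams Scala DSL as follows. This assumes Akka 2.6 or later on the classpath (where an implicit `ActorSystem` is enough to materialize a stream); the sample messages and the length-computing handler are made up for illustration and there is no Kafka source here. `mapAsync` runs up to `parallelism` futures at once, emits results in upstream order, and only pulls more elements when downstream demands them.

```scala
import akka.NotUsed
import akka.actor.ActorSystem
import akka.stream.scaladsl.{Flow, Sink, Source}
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object AkkaStreamDemo {
  def run(): Seq[Int] = {
    implicit val system: ActorSystem = ActorSystem("demo")
    import system.dispatcher // execution context for the futures below

    try {
      // Source[A]: a finite in-memory source standing in for a message stream.
      val source: Source[String, NotUsed] = Source(List("a", "bb", "ccc"))

      // Flow[A, B]: asynchronous, order-preserving processing of each element.
      val flow: Flow[String, Int, NotUsed] =
        Flow[String].mapAsync(parallelism = 2)(msg => Future(msg.length))

      // Sink[B]: collects all results once the stream completes.
      val done: Future[Seq[Int]] = source.via(flow).runWith(Sink.seq[Int])

      // Blocking here is for demonstration only.
      Await.result(done, 5.seconds)
    } finally {
      system.terminate()
    }
  }
}
```

The element types are checked at compile time: connecting a `Flow[String, Int, _]` to a `Sink` that expects `String` would not compile.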

![Error and Failure in Scala code using Scalactic • Future[Result Or Every[Error]] • Every[Error] can contain one or more Errors • Future can contain a Failure](https://image.slidesharecdn.com/kafkatalkpublic-160629133015/75/Deep-dive-into-Apache-Kafka-consumption-31-2048.jpg)

![Error and Failure in Scala code (non-async) • Try[Result Or Every[Error]] • Every[Error] can contain one or more Errors • Try can contain a Failure](https://image.slidesharecdn.com/kafkatalkpublic-160629133015/75/Deep-dive-into-Apache-Kafka-consumption-32-2048.jpg)
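The distinction between an Error (an expected, business-level problem) and a Failure (an unexpected, technical one) can be sketched with Scalactic's `Or` and `Every` wrapped in a `Try`. This assumes Scalactic is on the classpath; `MsgError`, the `"id:payload"` message format, and the validation rules are hypothetical. The asynchronous variant from the previous slide has the same shape with `Future` in place of `Try`.

```scala
import org.scalactic._
import org.scalactic.Accumulation._
import scala.util.Try

object ErrorVsFailureDemo {
  final case class Result(id: Int, payload: String)
  final case class MsgError(reason: String) // hypothetical business-error type

  def parseId(raw: String): Int Or Every[MsgError] =
    if (raw.nonEmpty && raw.forall(_.isDigit)) Good(raw.toInt)
    else Bad(One(MsgError(s"invalid id: '$raw'")))

  def parsePayload(raw: String): String Or Every[MsgError] =
    if (raw.nonEmpty) Good(raw) else Bad(One(MsgError("empty payload")))

  // Assumed message format: "id:payload".
  def processMsg(message: String): Try[Result Or Every[MsgError]] = Try {
    // An exception thrown in this block becomes a Failure (technical problem).
    if (message == null) throw new IllegalStateException("no message received")
    val (id, rest) = message.span(_ != ':')
    // withGood accumulates the Errors of both validations (business problems).
    withGood(parseId(id), parsePayload(rest.drop(1)))((i, p) => Result(i, p))
  }
}
```

With these definitions, `processMsg("42:hello")` yields a `Success` wrapping a `Good`, `processMsg(":")` yields a `Success` wrapping a `Bad` that carries both validation errors, and `processMsg(null)` yields a `Failure`. A consumer can then decide, for example, to skip or dead-letter messages with Errors while retrying on Failures.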