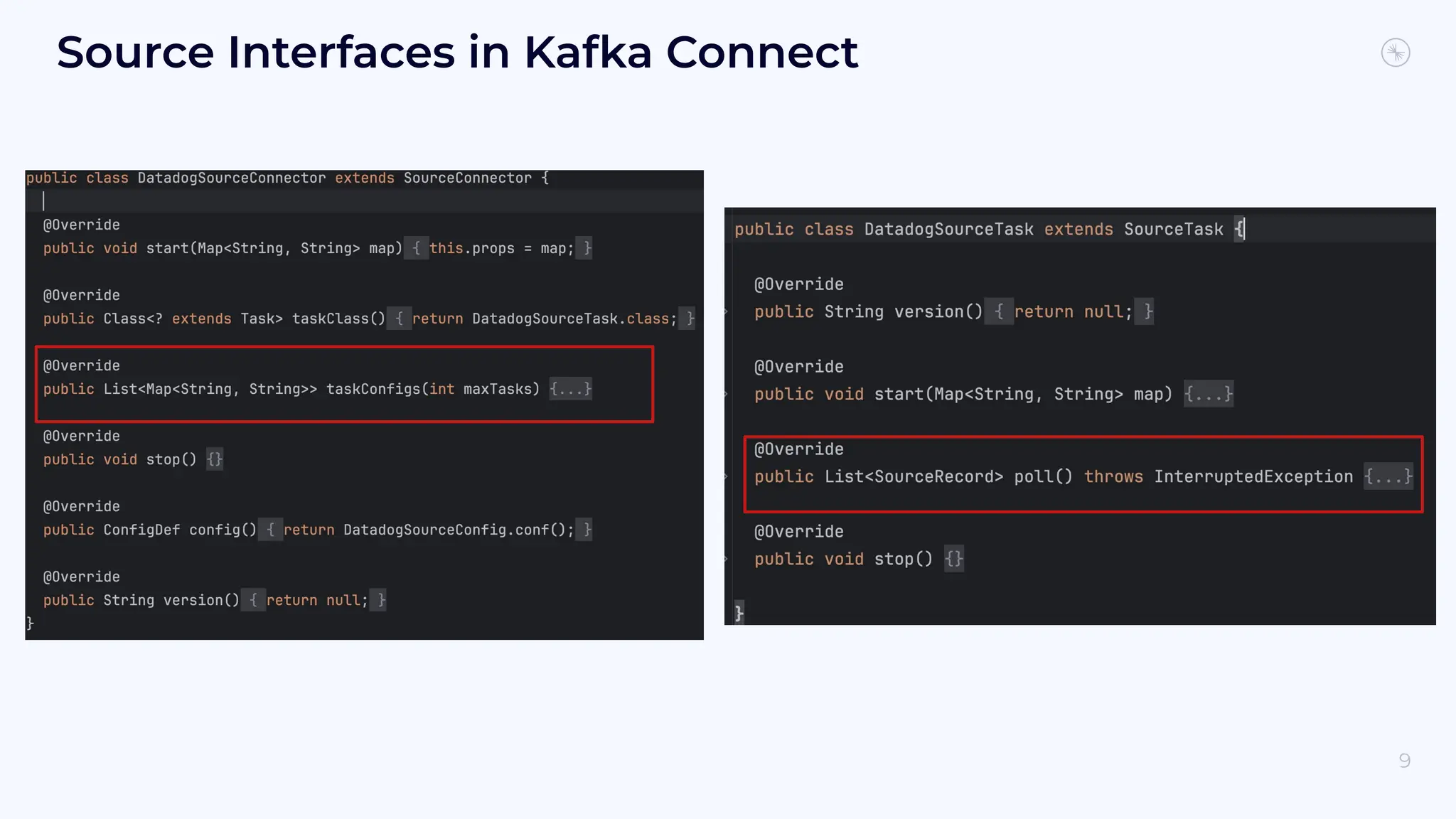

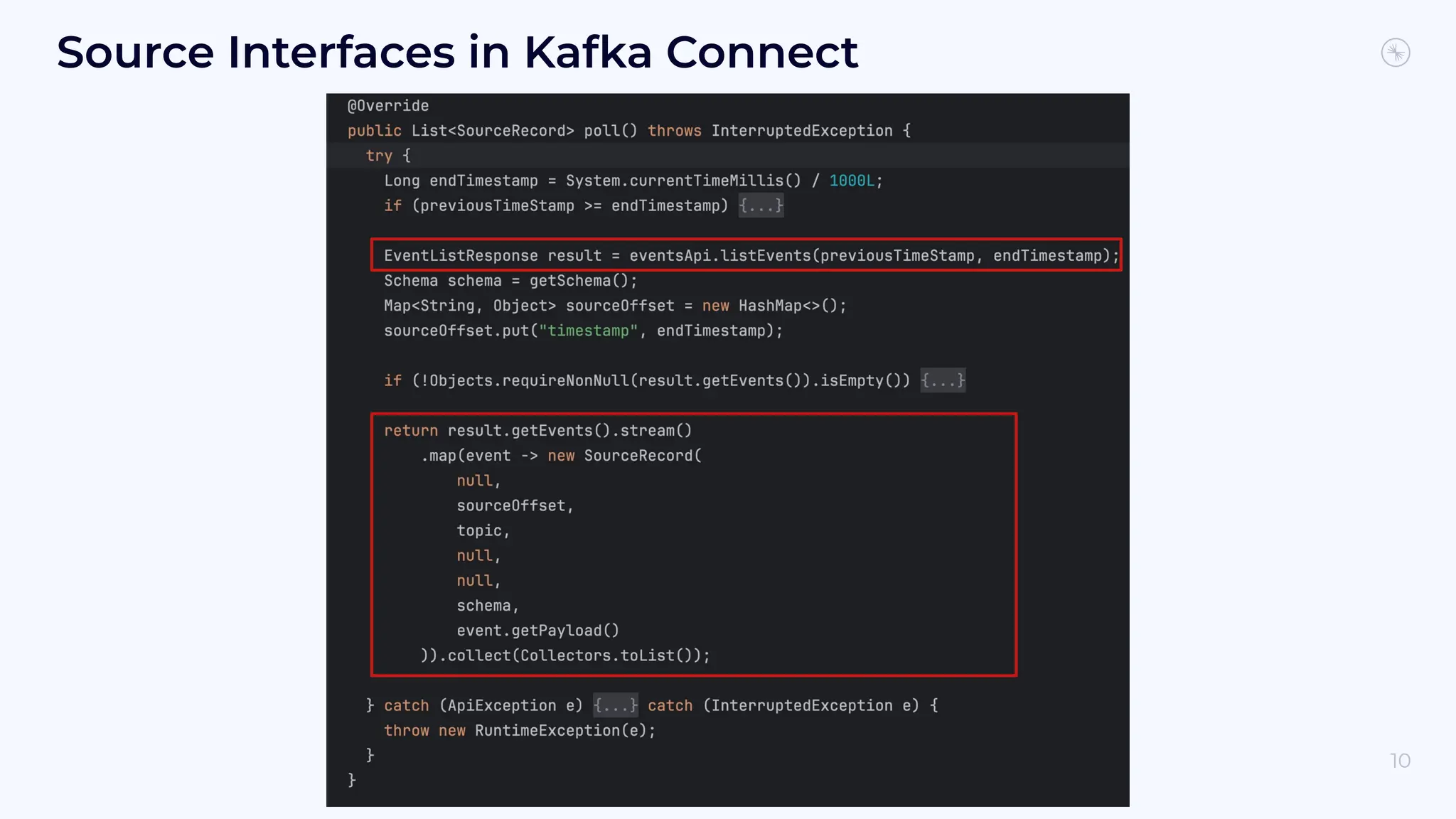

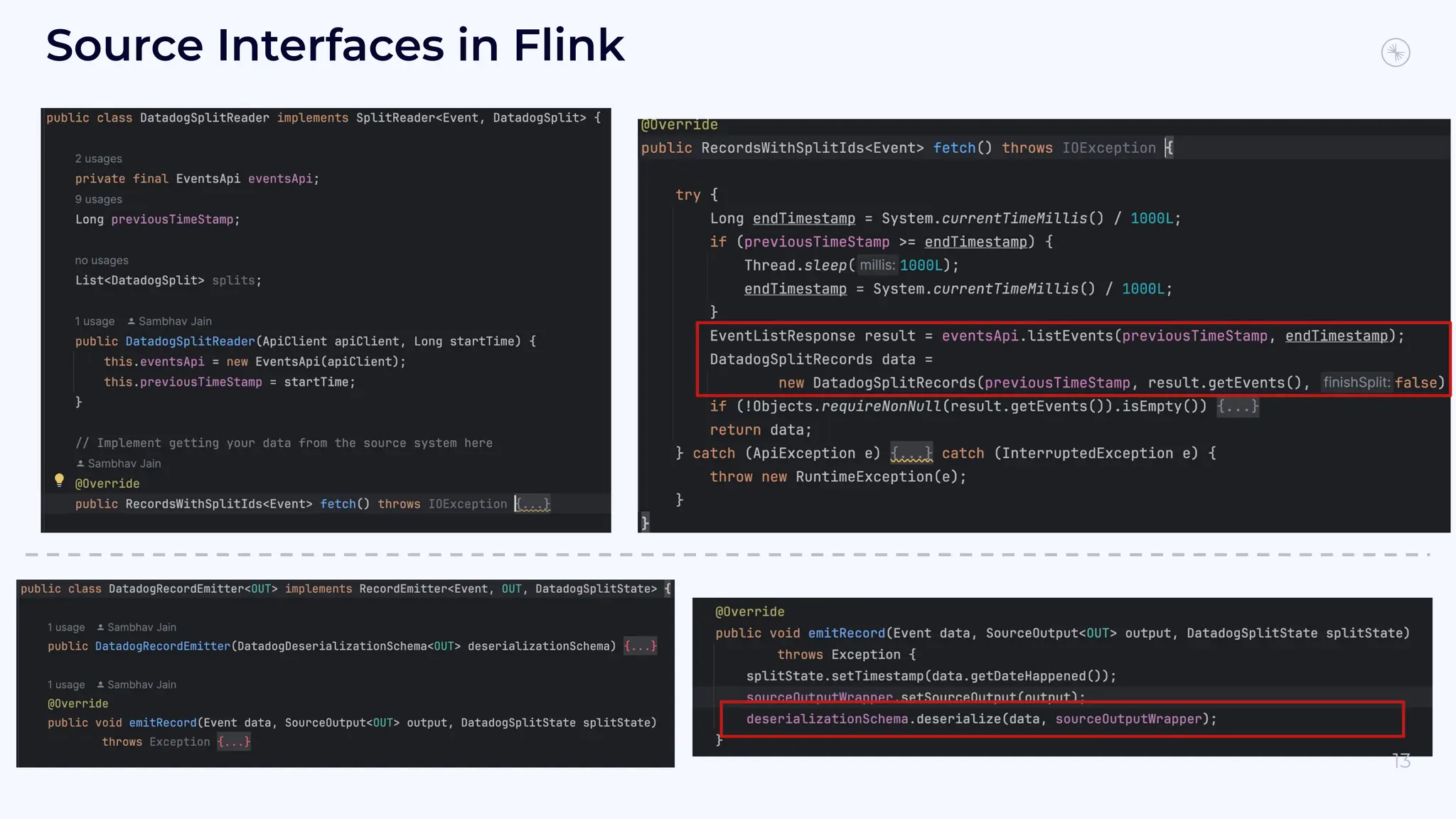

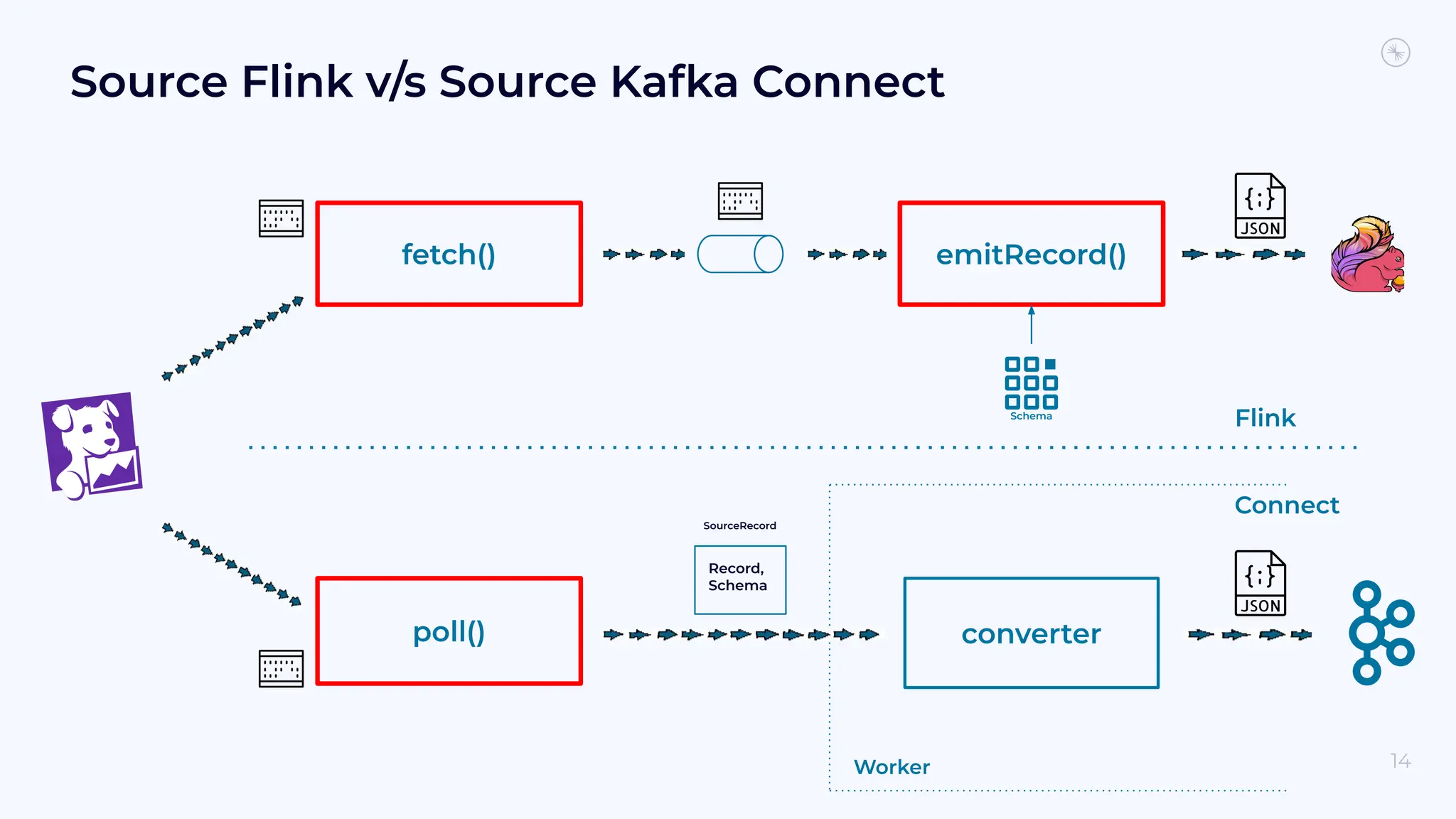

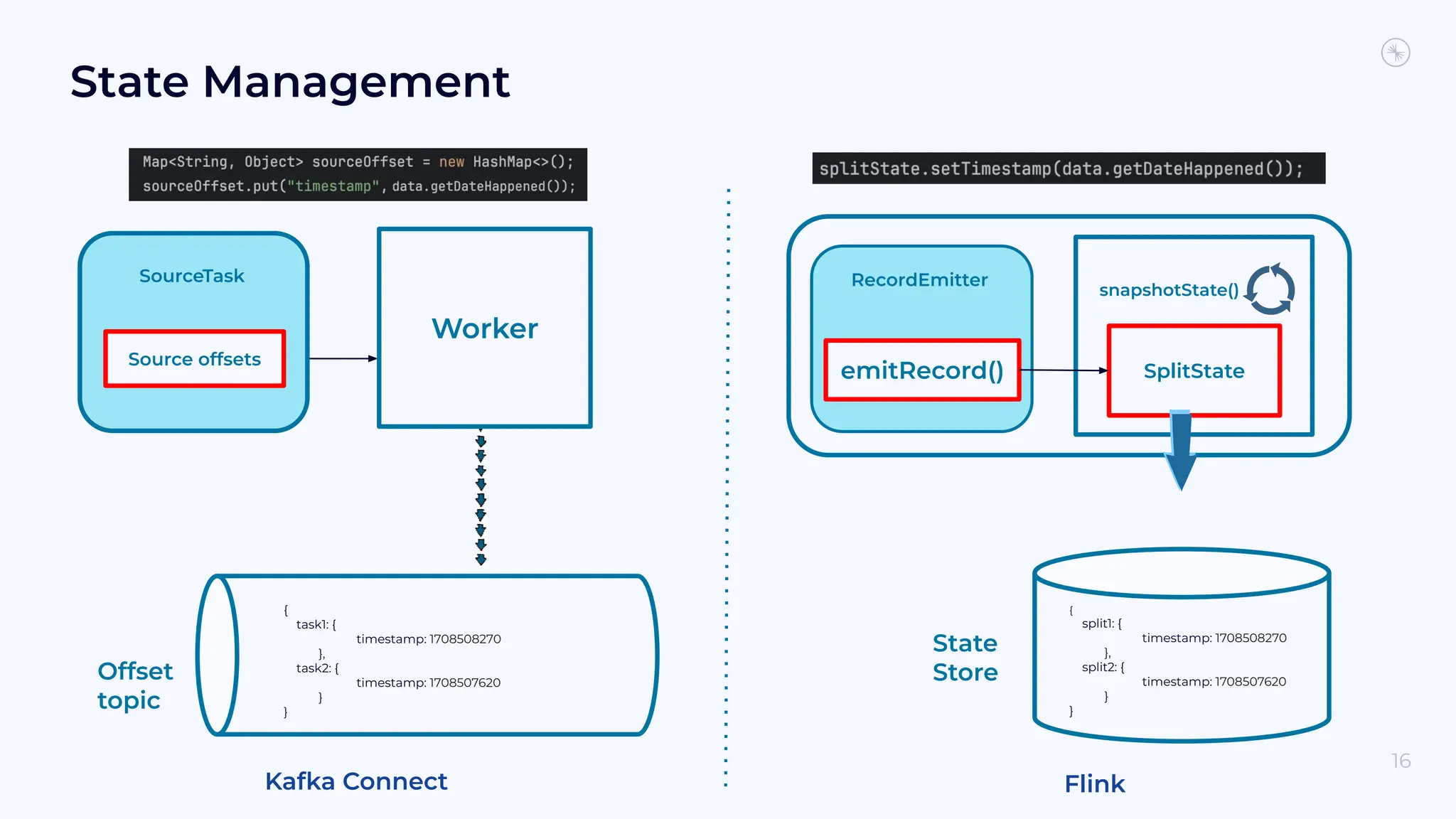

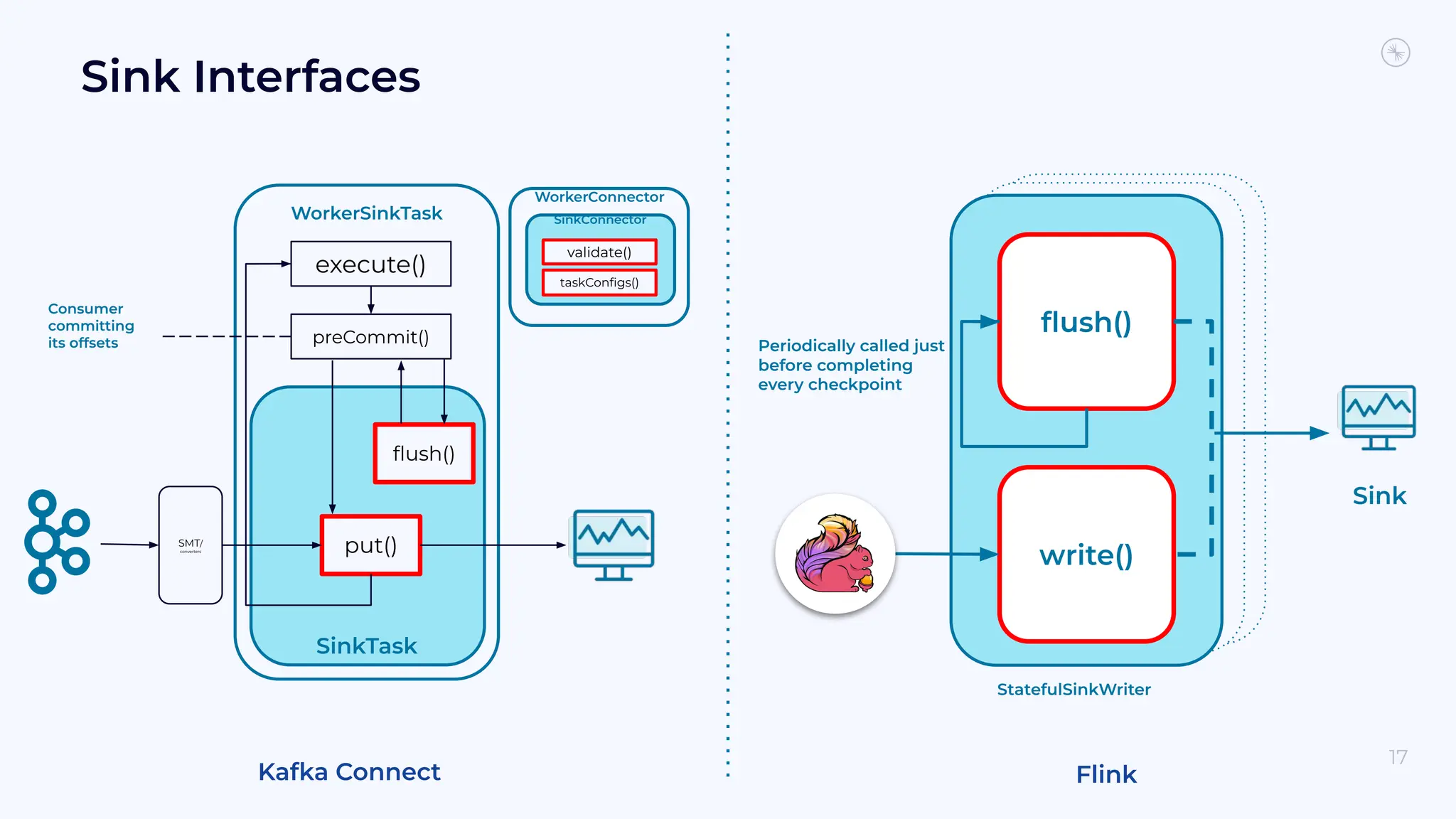

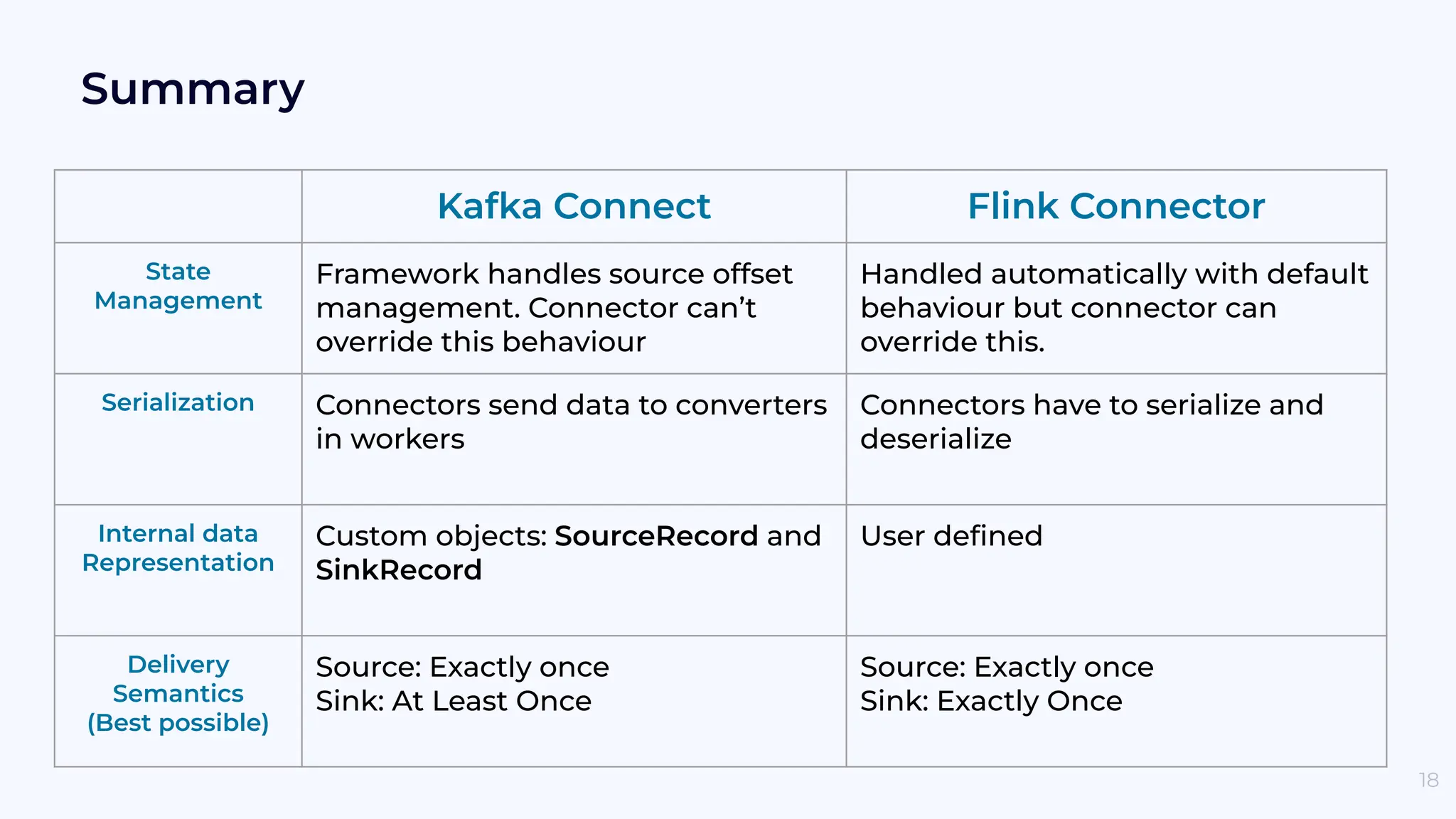

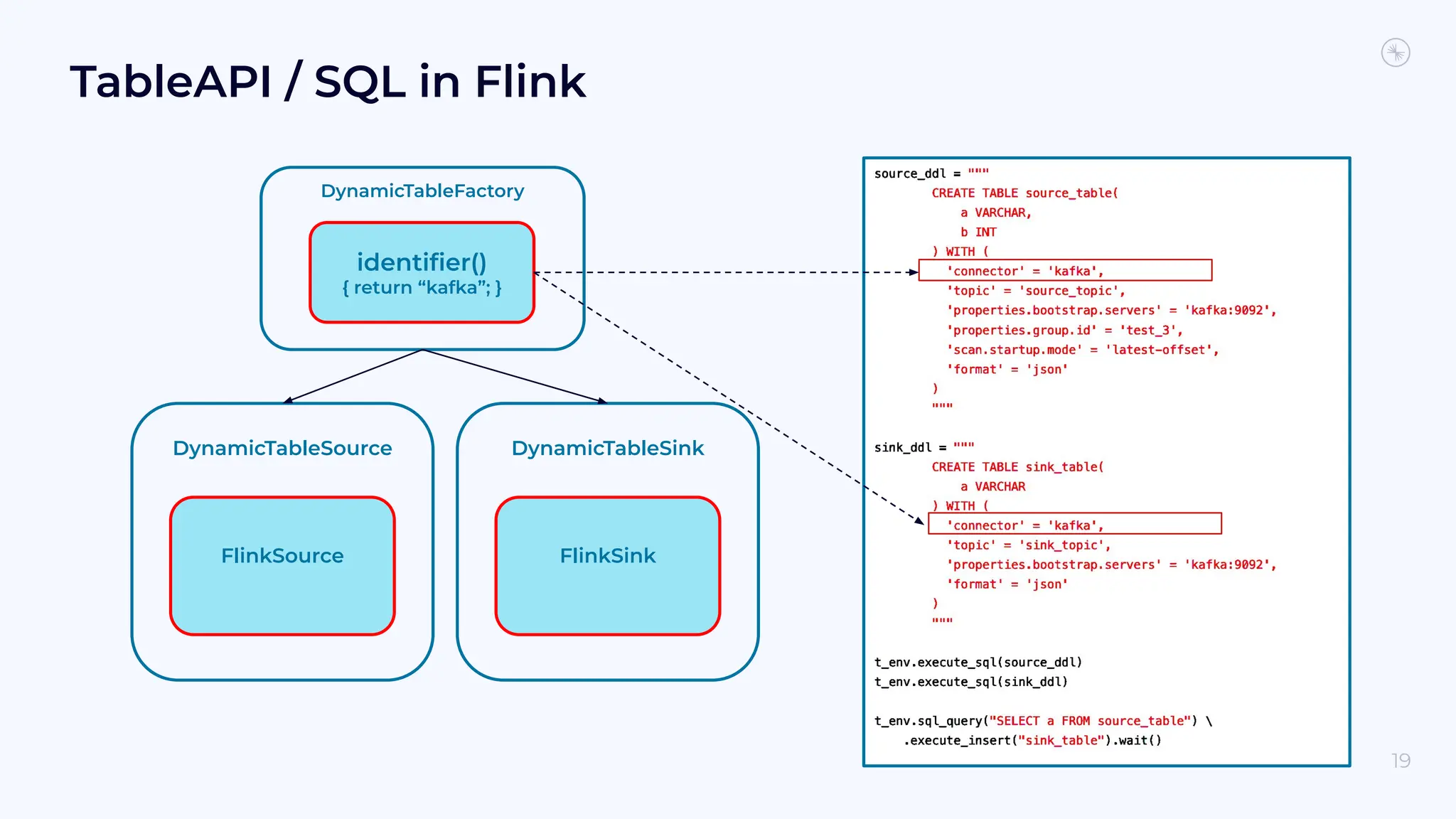

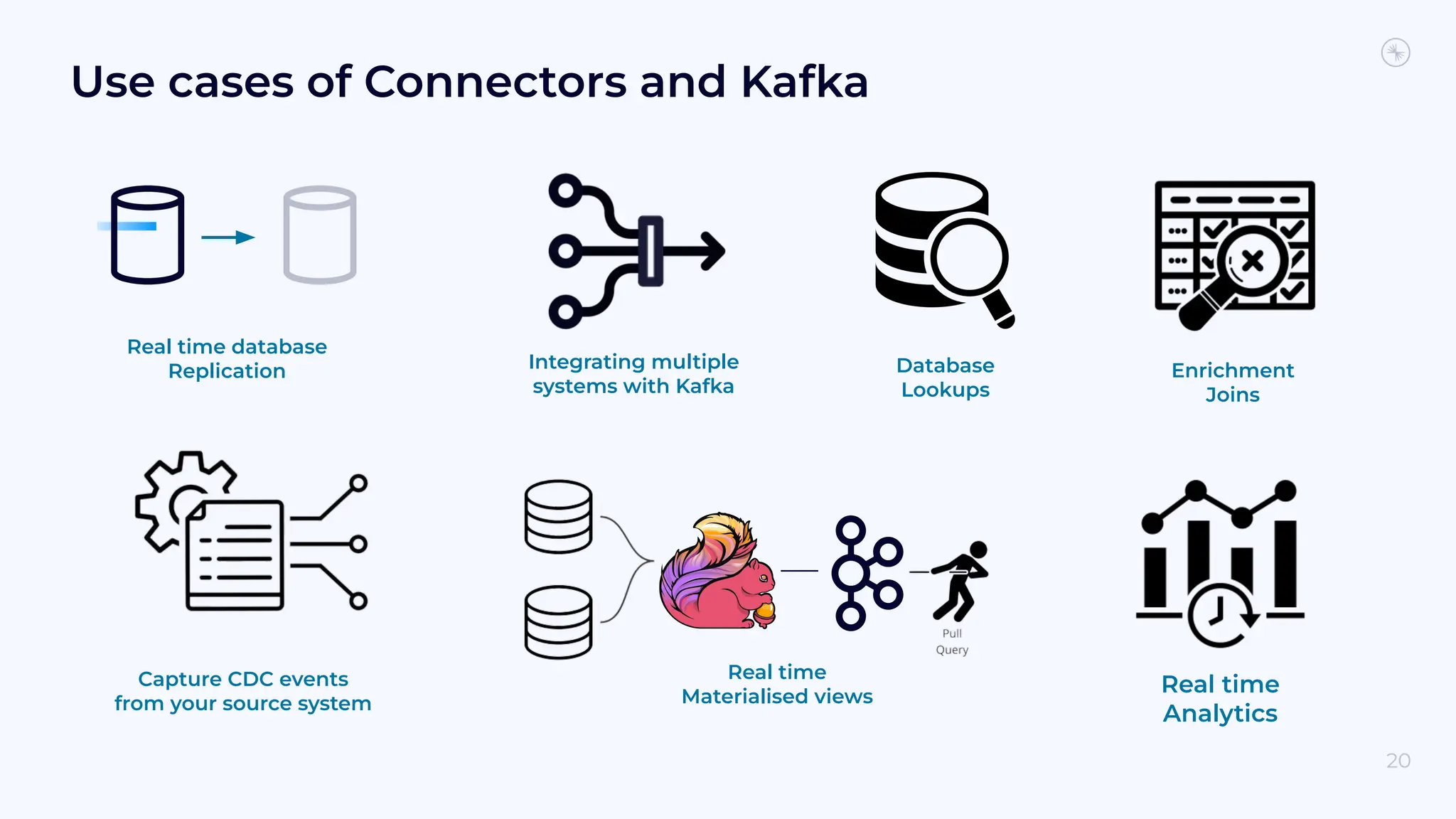

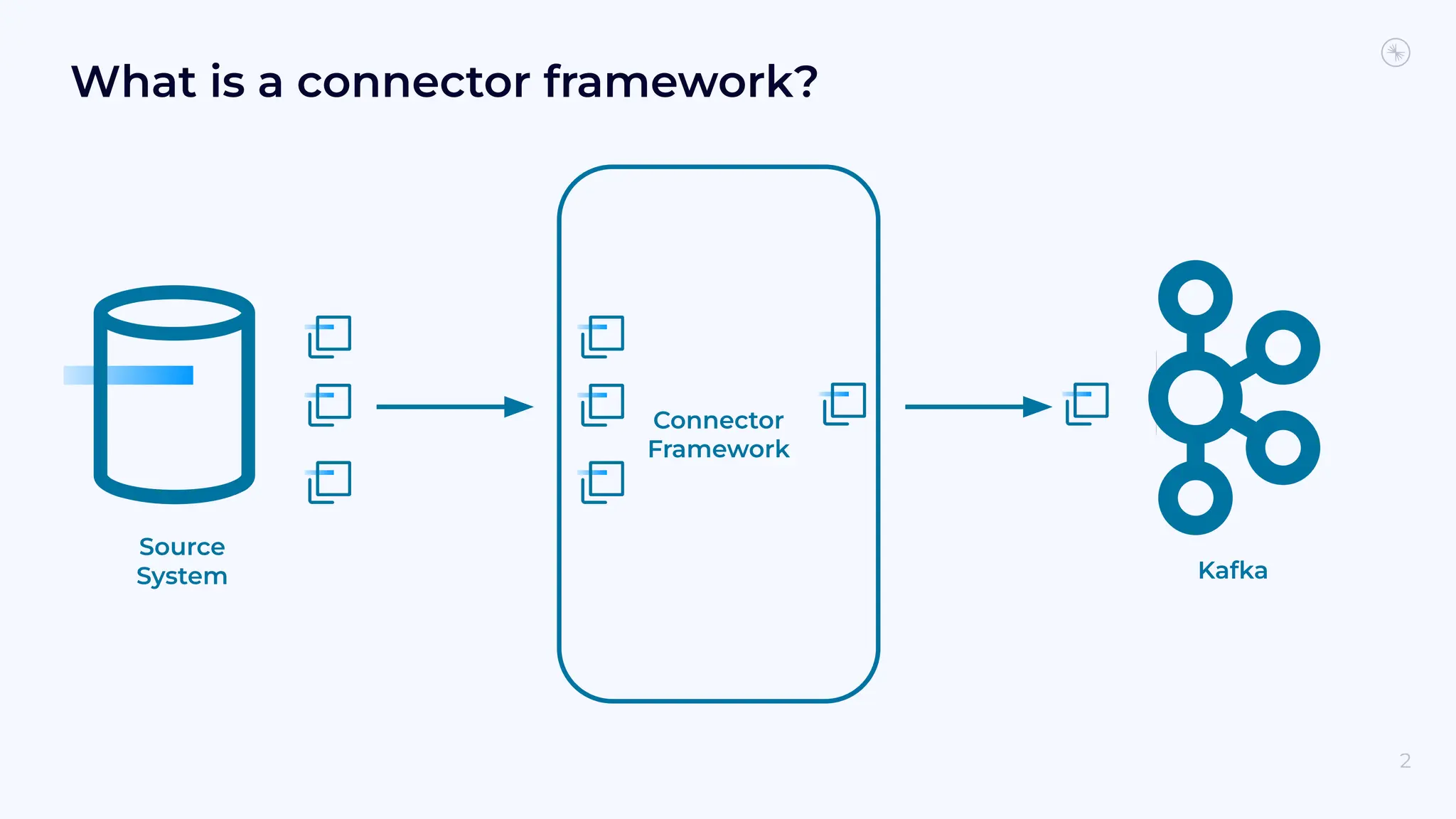

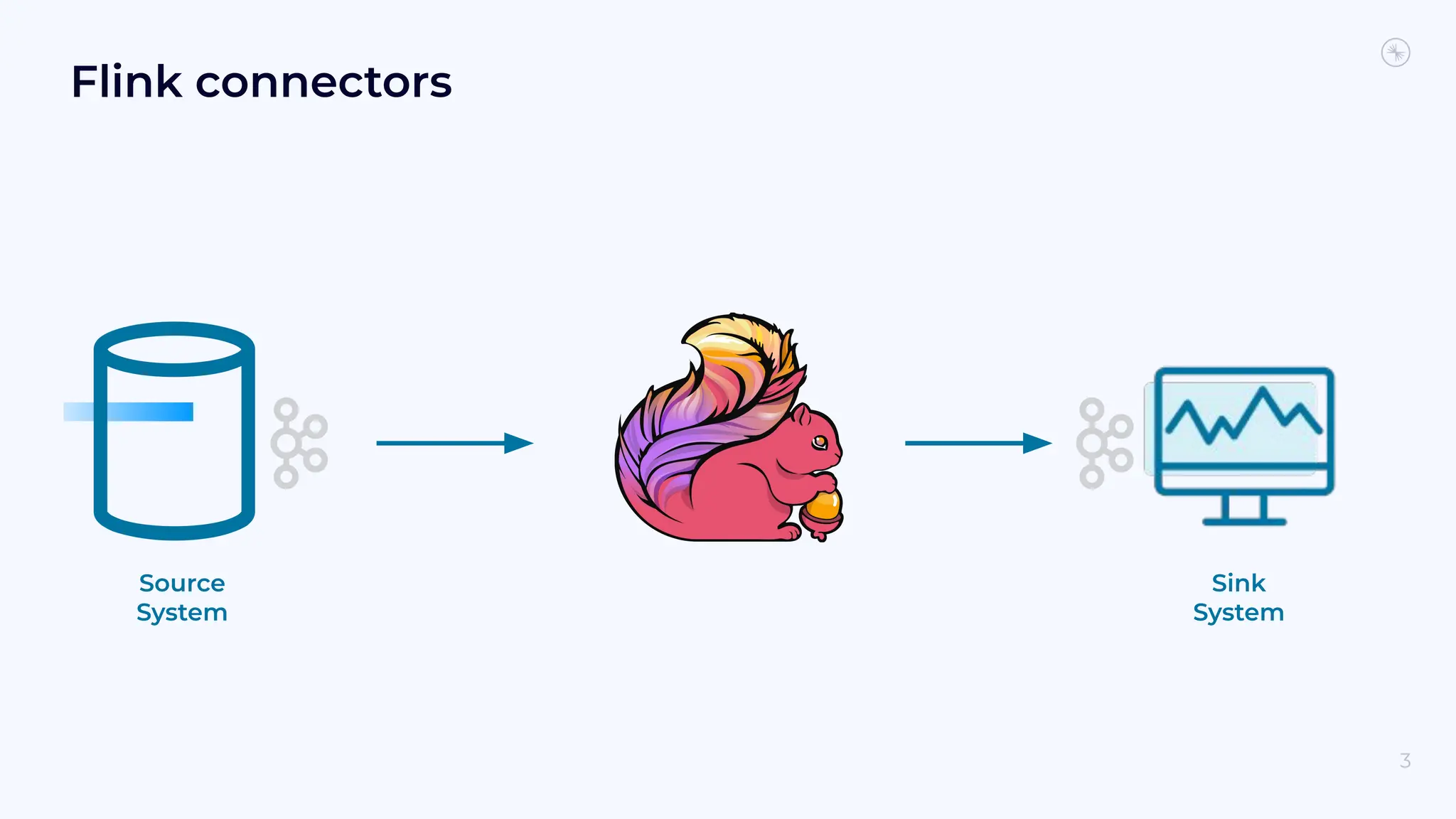

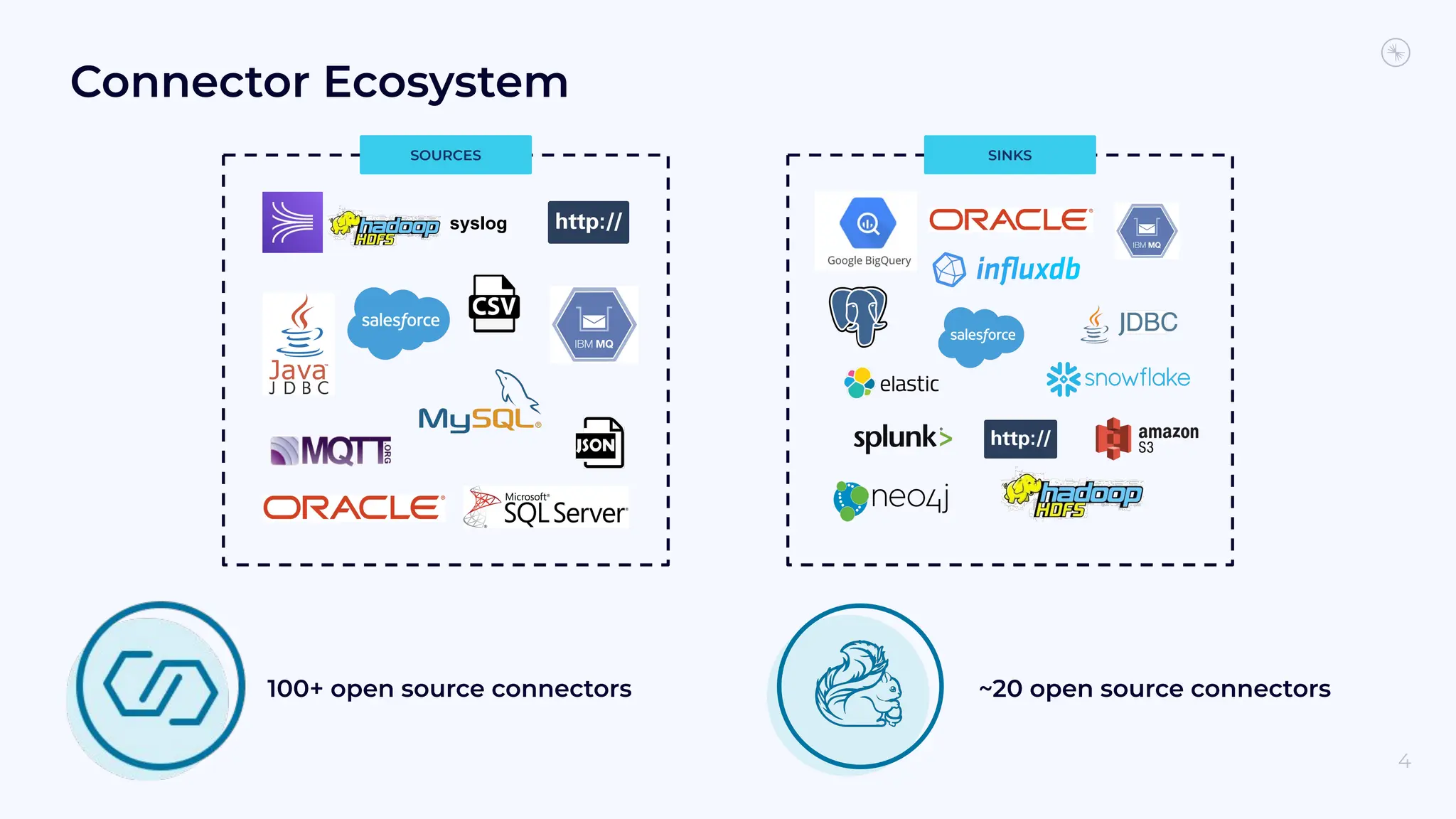

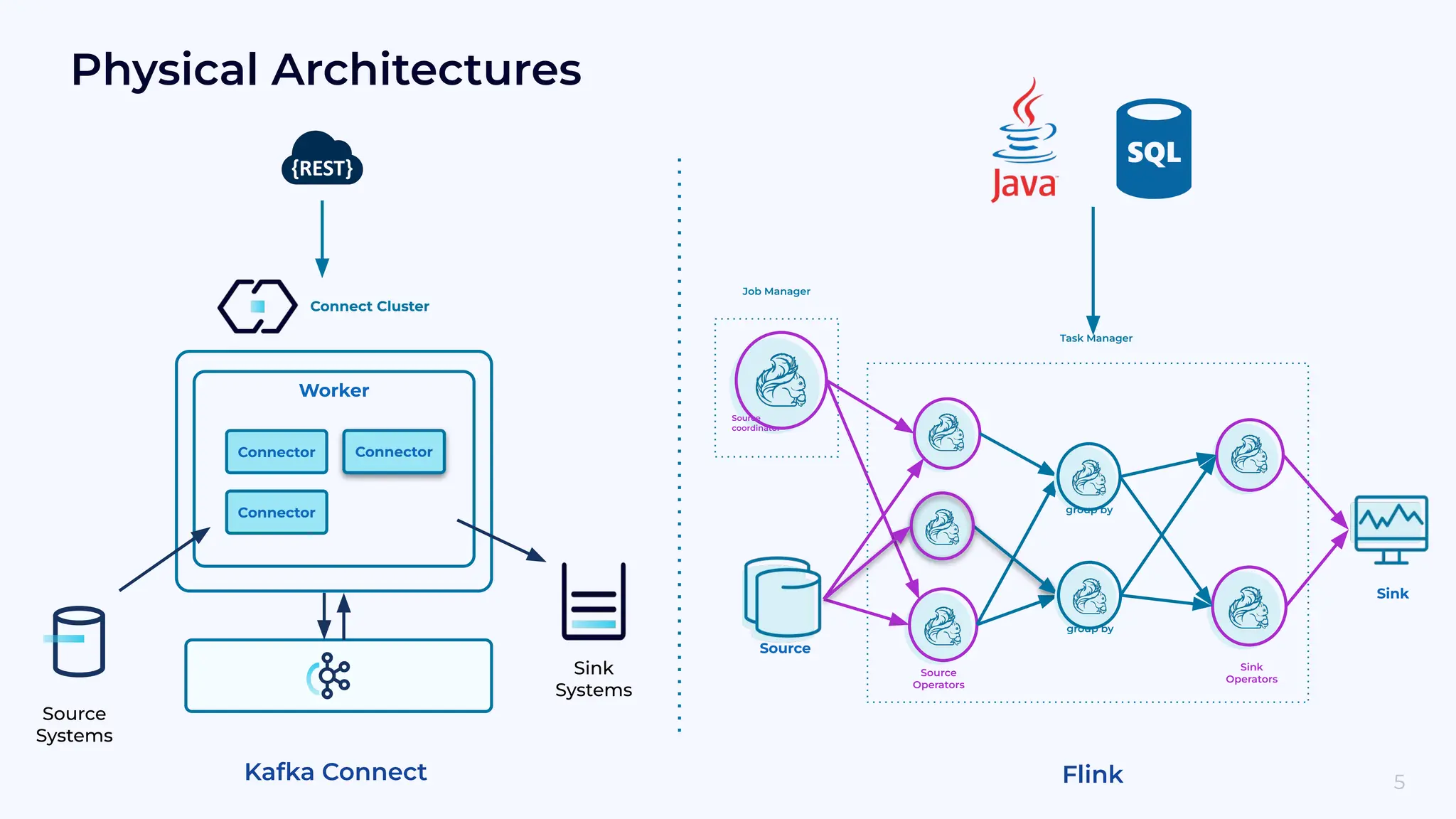

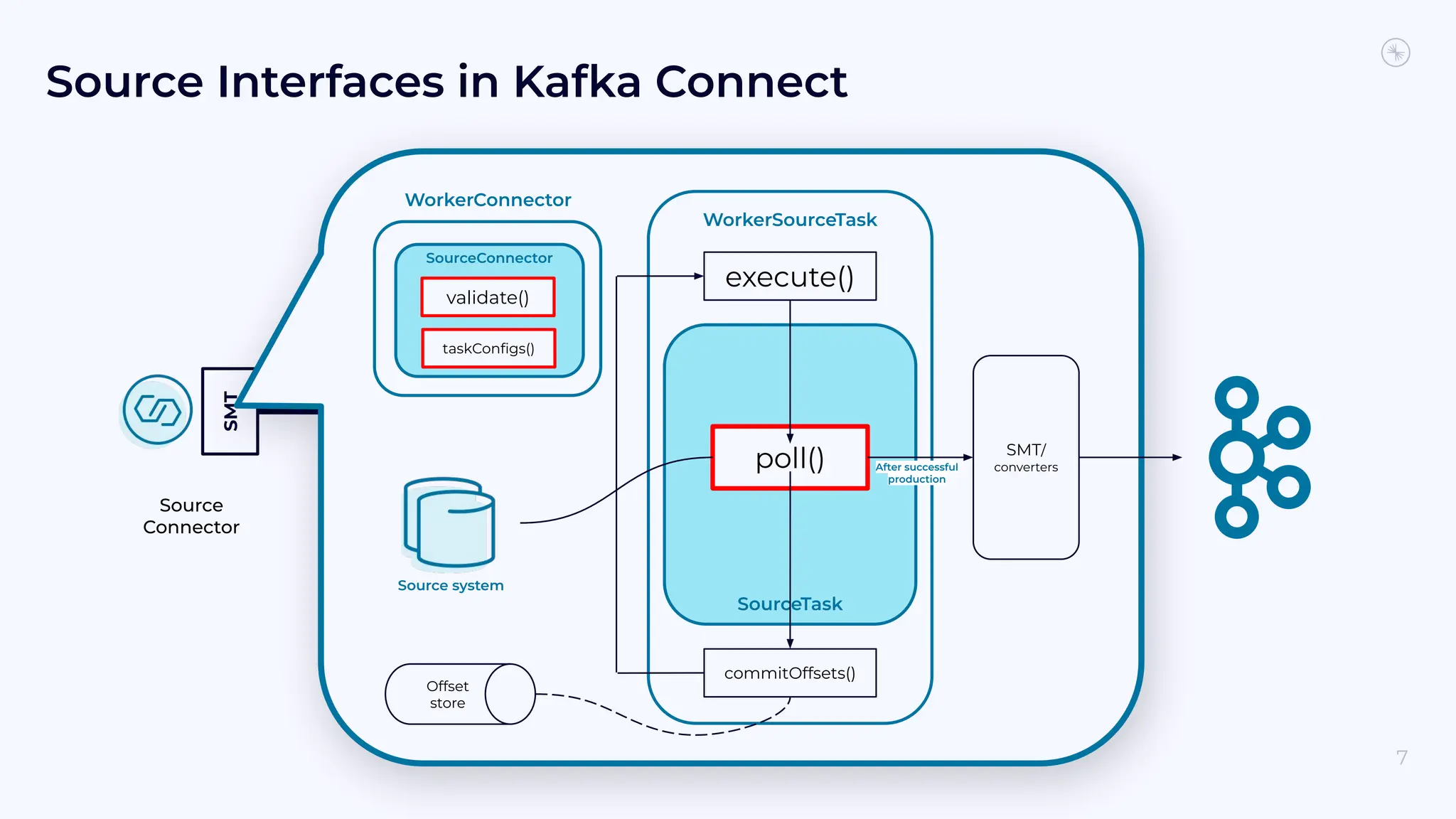

This deck covers data integration with Kafka Connect and Flink, focusing on connector architecture and development. It walks through source and sink connectors, their interfaces, and state management, including how connectors serialize and track offsets. It closes with use cases for real-time database replication and references for further reading.

![Slide 8: Let’s start with the Datadog connector. Slide labels: API Request, API Response. JSON shown: { "events": [ { "alert_type": "info", "date_happened": "integer", "device_name": "string", "host": "string", "id": "integer", "id_str": "string", "payload": "{}", "priority": "normal", "source_type_name": "string", "tags": [ "environment:test" ], "text": "Oh boy!", "title": "Did you hear the news today?", "url": "string" } ], "status": "string" }](https://image.slidesharecdn.com/bs40-20230320-ksl-confluent-jainsambhav-240402155216-3cb18e78/75/Decoding-the-Data-Integration-Matrix-Connectors-in-Kafka-Connect-and-Flink-8-2048.jpg)
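
To make the source-connector idea concrete, here is a minimal sketch of how a payload shaped like the one on the slide could be turned into records with an offset. It assumes the JSON on the slide is the response body and that `date_happened` is a usable position marker. The function name `to_records`, the record layout, and the sample values are hypothetical and are not taken from the deck or from the Kafka Connect or Flink APIs.

```python
import json

# Sample body shaped like the JSON on the slide; the concrete values are made up.
SAMPLE_RESPONSE = json.dumps({
    "events": [
        {"id": 1, "date_happened": 1700000000, "priority": "normal",
         "title": "Did you hear the news today?", "text": "Oh boy!",
         "tags": ["environment:test"]},
        {"id": 2, "date_happened": 1700000060, "priority": "normal",
         "title": "Second event", "text": "More news",
         "tags": ["environment:test"]},
    ],
    "status": "ok",
})


def to_records(response_body, last_offset=None):
    """Convert an events payload into records plus the next offset.

    Hypothetical helper: events at or before `last_offset` are skipped,
    so a restarted poller does not emit them again.
    """
    payload = json.loads(response_body)
    records = []
    new_offset = last_offset
    # Process in timestamp order so the offset only moves forward.
    for event in sorted(payload.get("events", []), key=lambda e: e["date_happened"]):
        ts = event["date_happened"]
        if last_offset is not None and ts <= last_offset:
            continue  # already delivered in an earlier poll
        records.append({
            "key": str(event["id"]),
            "value": event,
            "offset": {"date_happened": ts},
        })
        new_offset = ts
    return records, new_offset


if __name__ == "__main__":
    records, offset = to_records(SAMPLE_RESPONSE)
    print(len(records), offset)
    # Polling again with the stored offset yields nothing new.
    print(to_records(SAMPLE_RESPONSE, last_offset=offset)[0])
```

Using a timestamp as the offset is a simplification: two events sharing the same `date_happened` could be skipped after a restart, so a real connector would likely need a finer-grained position (for example, timestamp plus event id).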