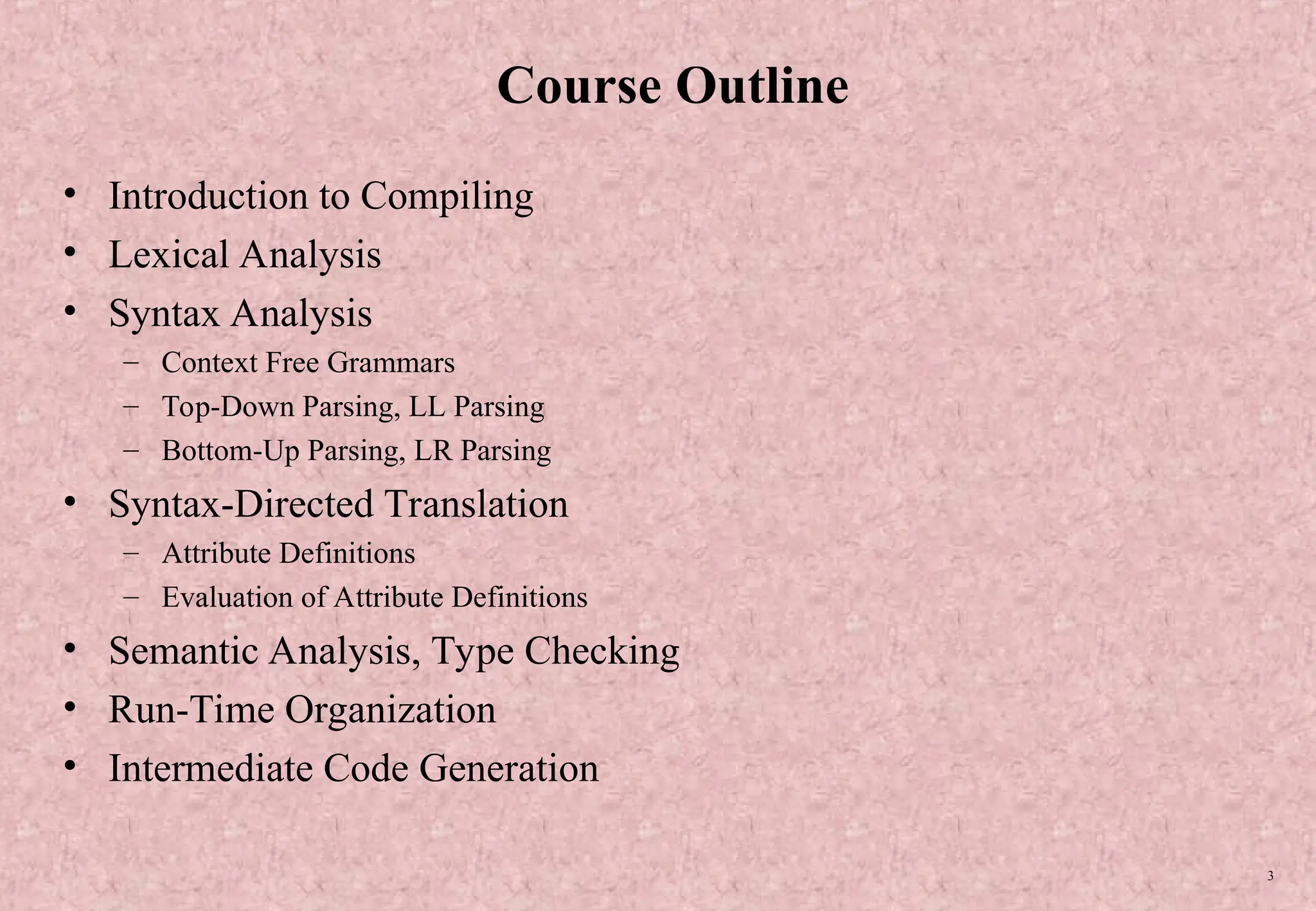

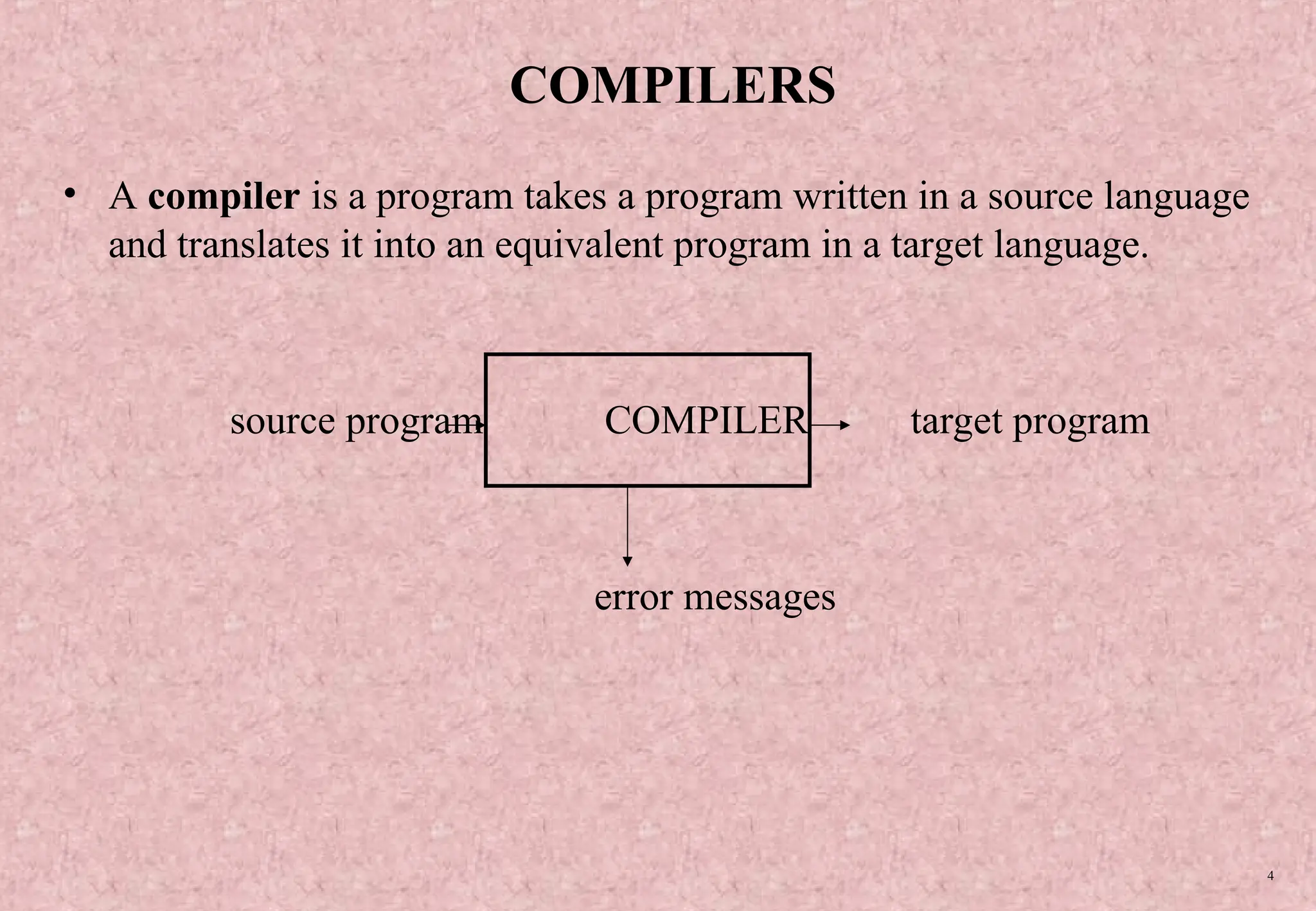

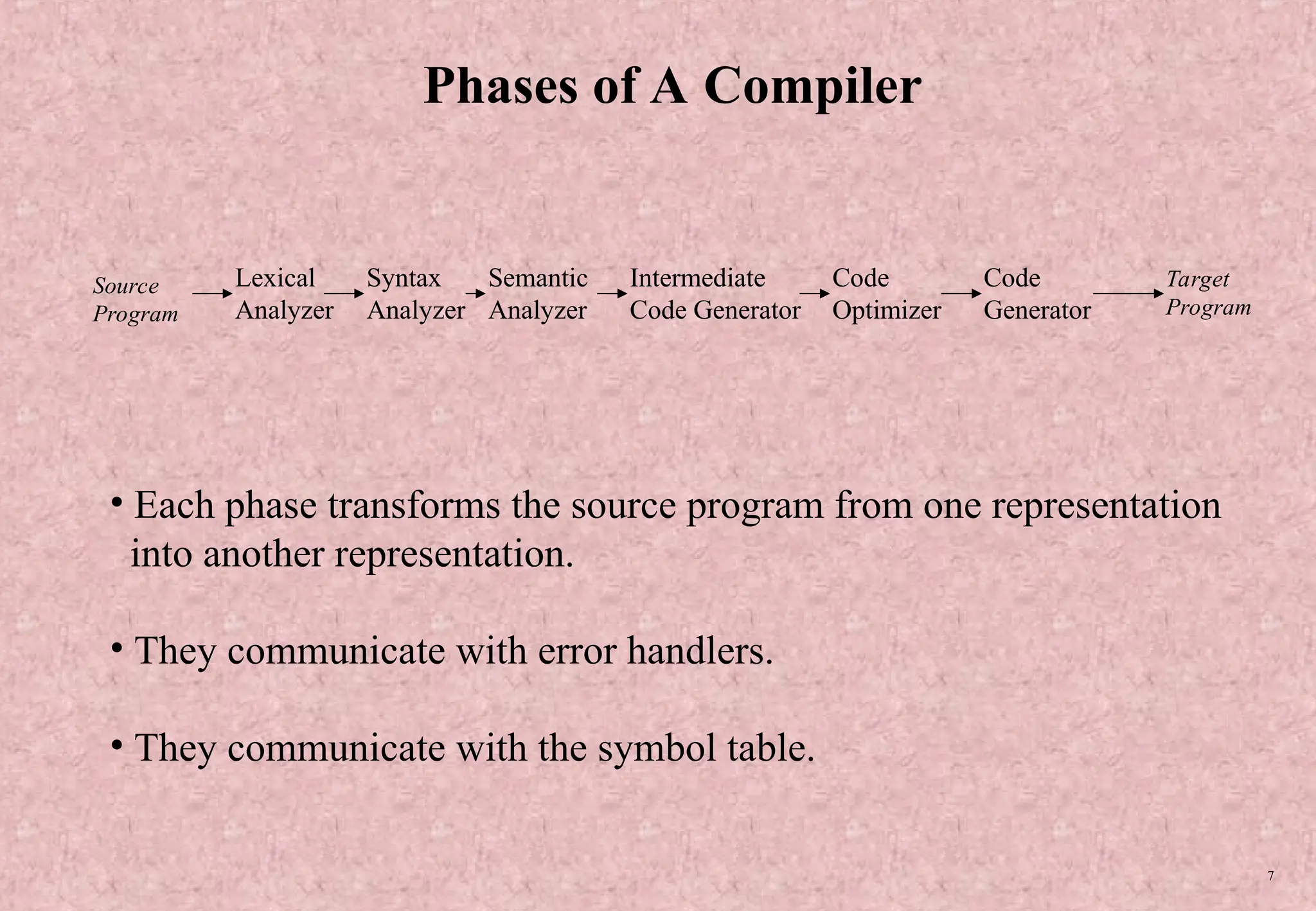

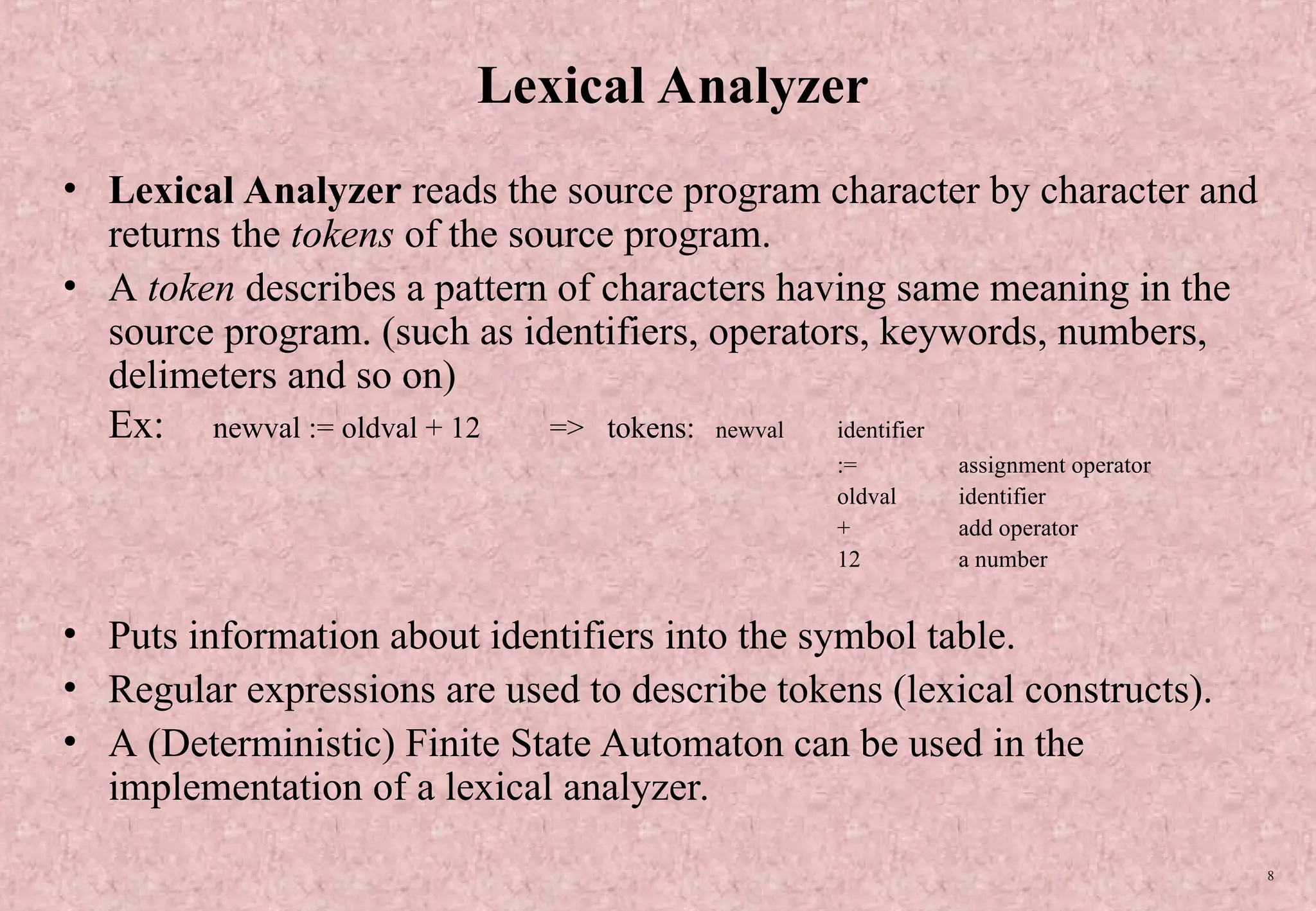

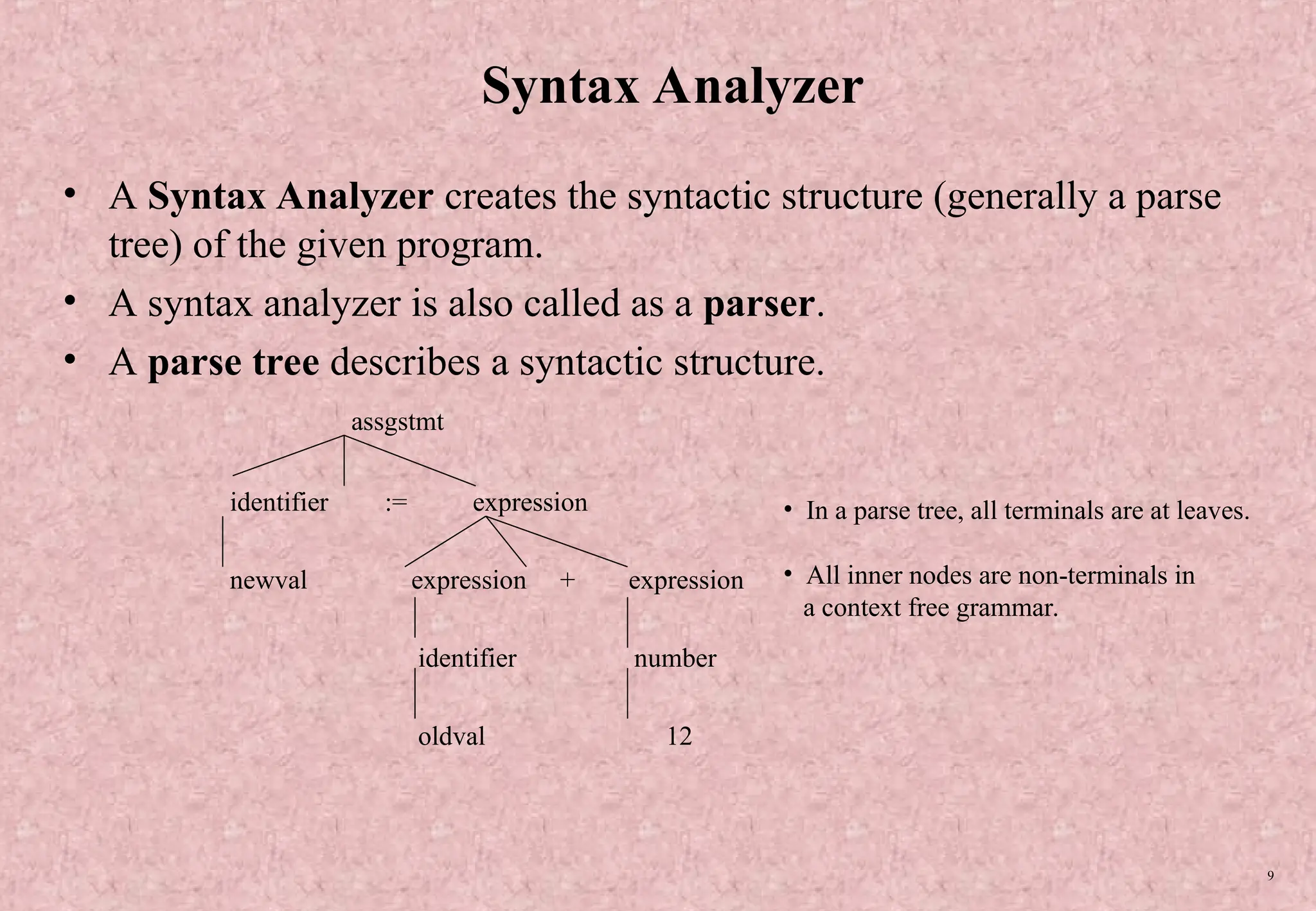

The document gives an overview of compiler design. It covers lexical analysis, syntax analysis, and semantic analysis, and it groups the phases of a compiler into two parts: analysis and synthesis. It emphasizes parsing techniques and the role of components such as the lexical analyzer and the syntax analyzer in translating a source program into a target program. It also notes that compiler techniques apply in other areas of computer science, including natural language processing and software with complex front-ends.
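
To make the analysis and synthesis split concrete, here is a minimal sketch in Python. It is not taken from the slides: the tiny arithmetic language (integers, `+`, `*`, parentheses), the function names `tokenize`, `parse`, and `generate`, and the `PUSH`/`ADD`/`MUL` stack-machine target are all assumptions chosen for illustration. The lexical analyzer turns characters into tokens, the syntax analyzer (a recursive-descent parser) turns tokens into a tree, and a small synthesis step turns the tree into target instructions.

```python
def tokenize(source):
    """Lexical analysis: group characters into (kind, text) tokens."""
    tokens = []
    i = 0
    while i < len(source):
        ch = source[i]
        if ch.isspace():
            i += 1
        elif ch in "0123456789":
            j = i
            while j < len(source) and source[j] in "0123456789":
                j += 1
            tokens.append(("NUM", source[i:j]))
            i = j
        elif ch in "+*()":
            tokens.append((ch, ch))
            i += 1
        else:
            raise SyntaxError(f"unexpected character {ch!r}")
    tokens.append(("EOF", ""))
    return tokens


def parse(tokens):
    """Syntax analysis: recursive descent over the assumed grammar
    expr -> term ('+' term)*, term -> factor ('*' factor)*,
    factor -> NUM | '(' expr ')'. Returns a nested-tuple syntax tree."""
    pos = 0

    def peek():
        return tokens[pos][0]

    def advance():
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        return tok

    def expr():
        node = term()
        while peek() == "+":
            advance()
            node = ("+", node, term())
        return node

    def term():
        node = factor()
        while peek() == "*":
            advance()
            node = ("*", node, factor())
        return node

    def factor():
        kind, text = advance()
        if kind == "NUM":
            return ("num", int(text))
        if kind == "(":
            node = expr()
            if advance()[0] != ")":
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {kind!r}")

    tree = expr()
    if peek() != "EOF":
        raise SyntaxError("trailing input after expression")
    return tree


def generate(node):
    """Synthesis: emit instructions for a hypothetical stack machine."""
    if node[0] == "num":
        return [f"PUSH {node[1]}"]
    op, left, right = node
    return generate(left) + generate(right) + ["ADD" if op == "+" else "MUL"]


if __name__ == "__main__":
    tree = parse(tokenize("1 + 2 * 3"))
    print(tree)
    print(generate(tree))
```

The parser gives `*` higher precedence than `+` simply by nesting `term` inside `expr`. A real compiler would add a semantic analysis pass (for example, type checking) between parsing and code generation, which this sketch omits.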