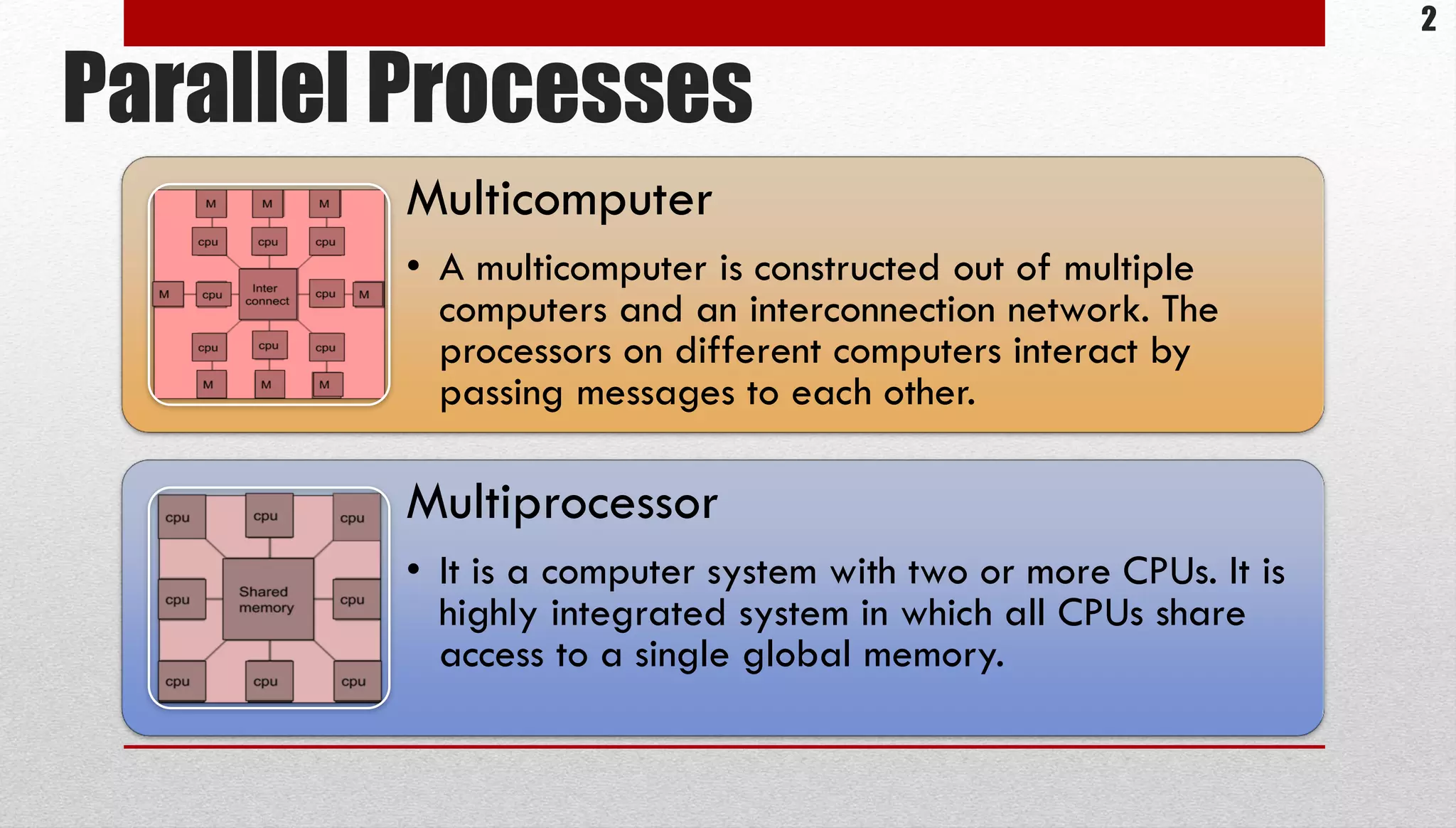

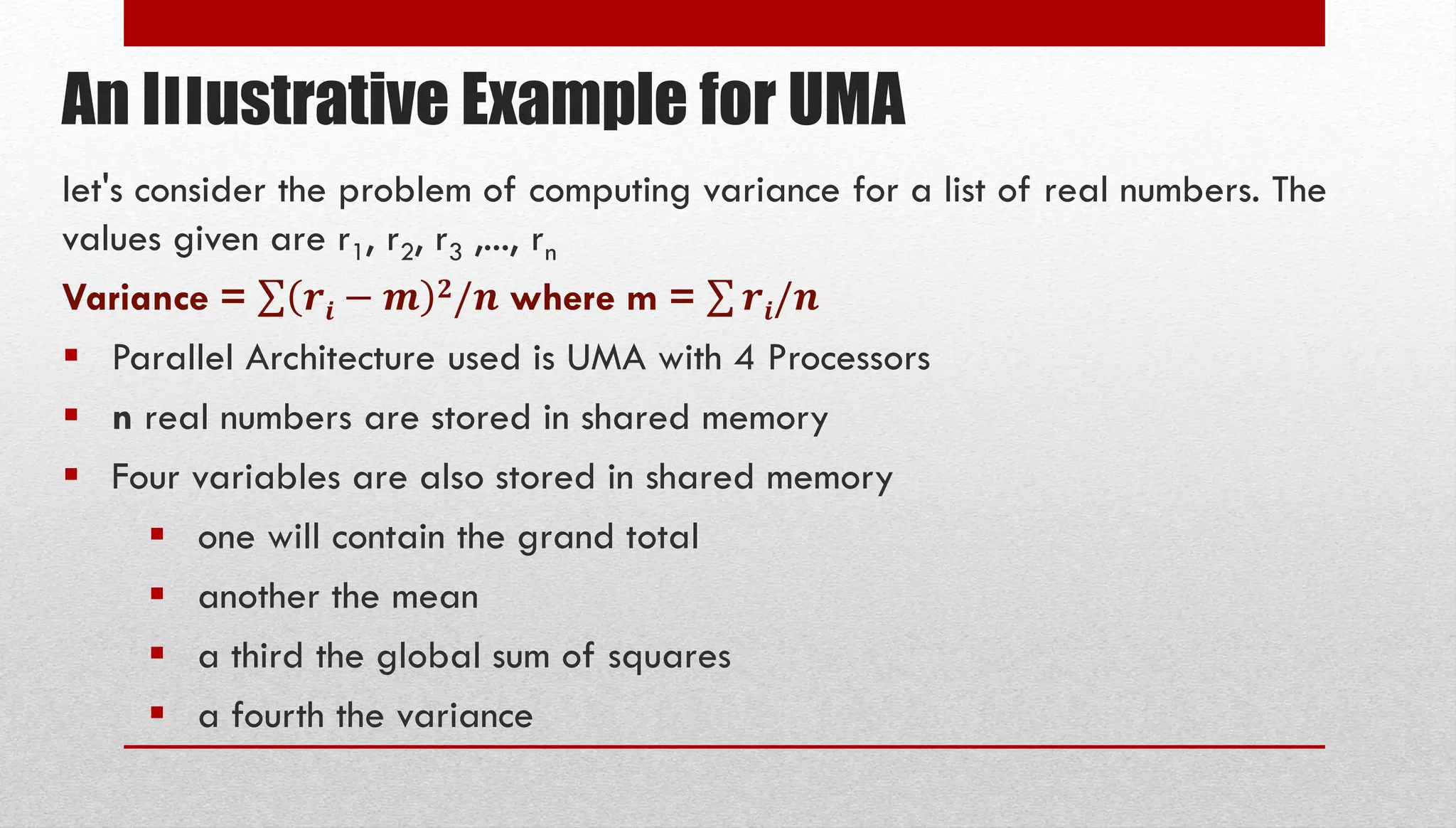

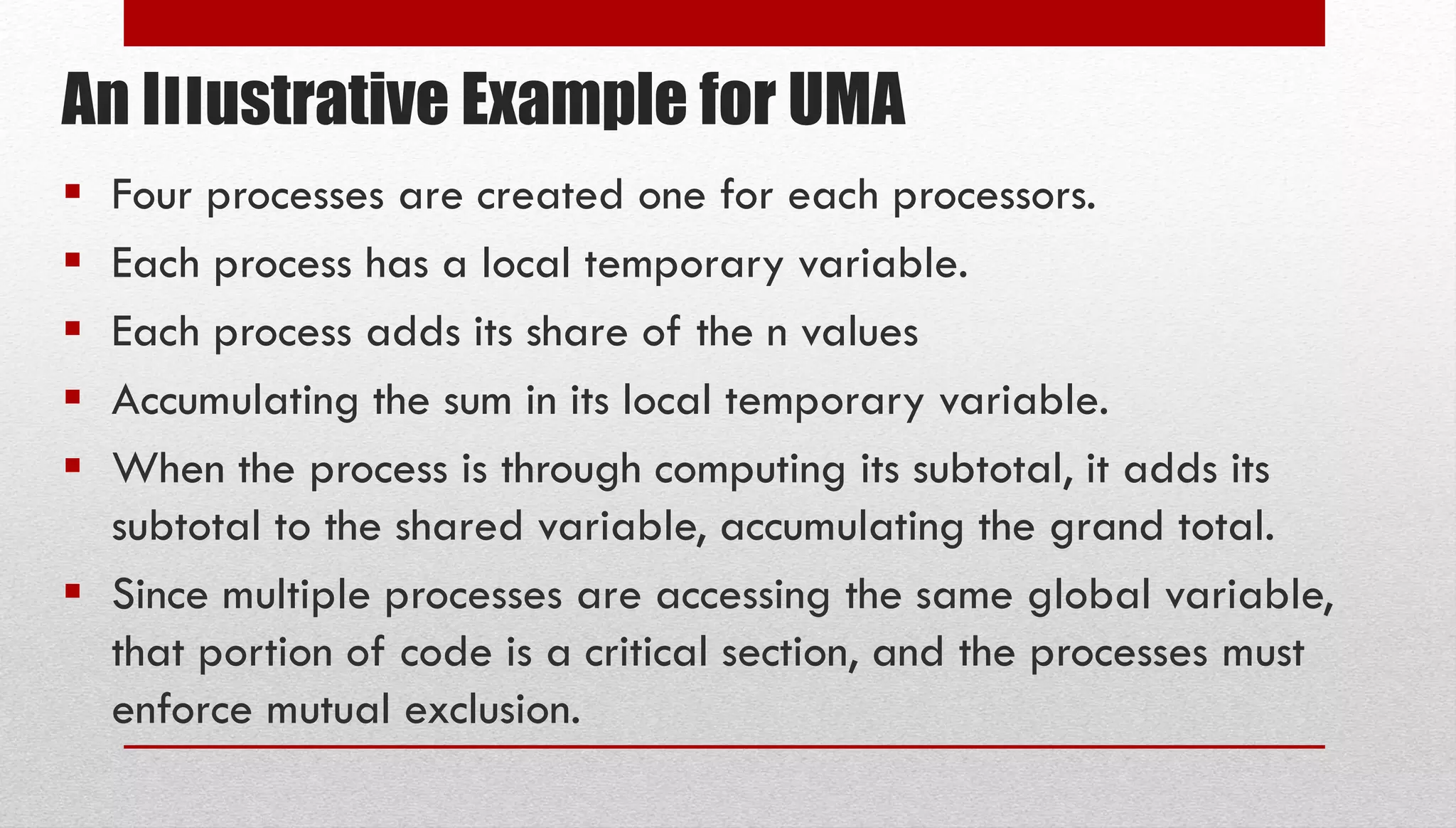

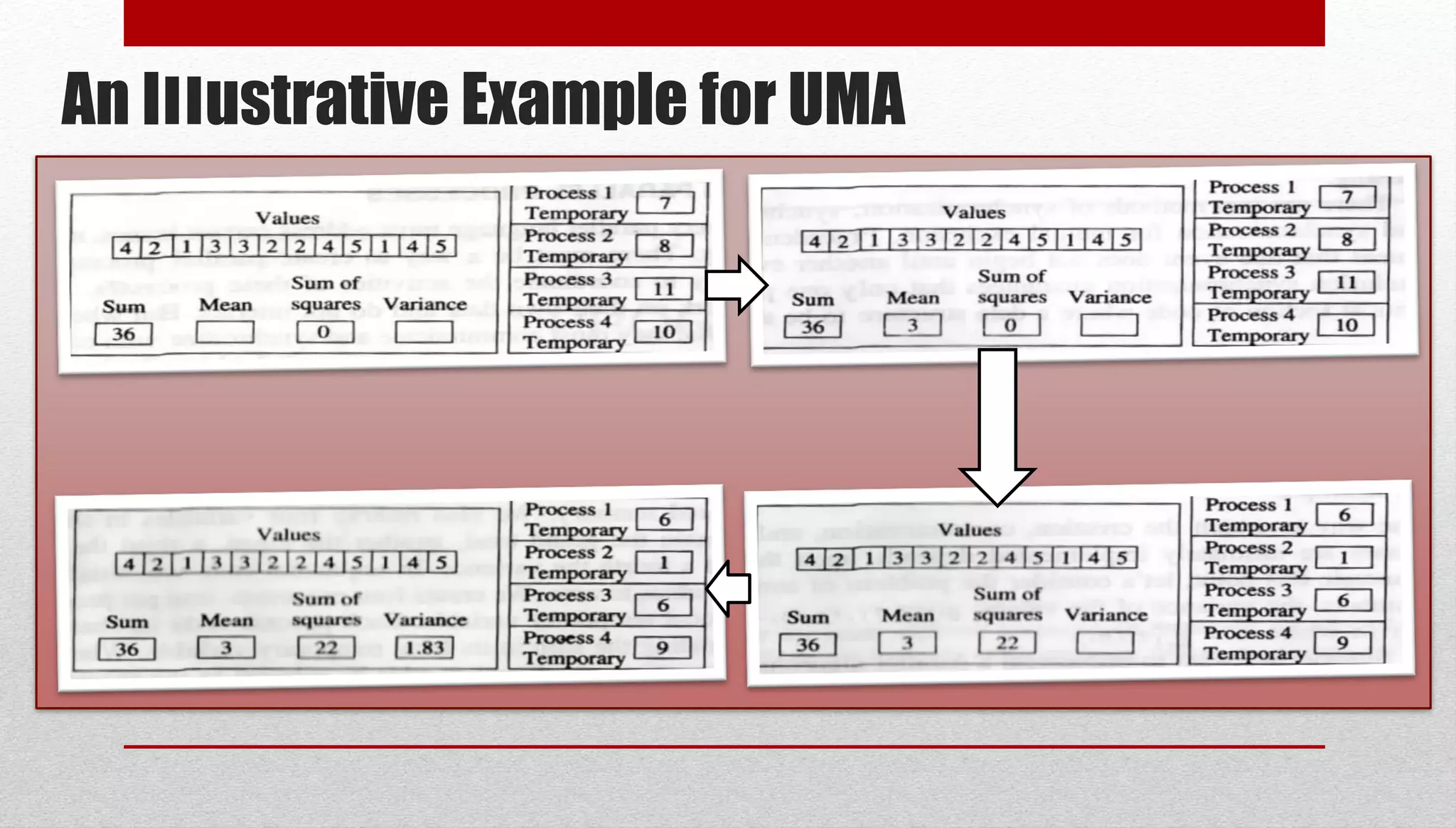

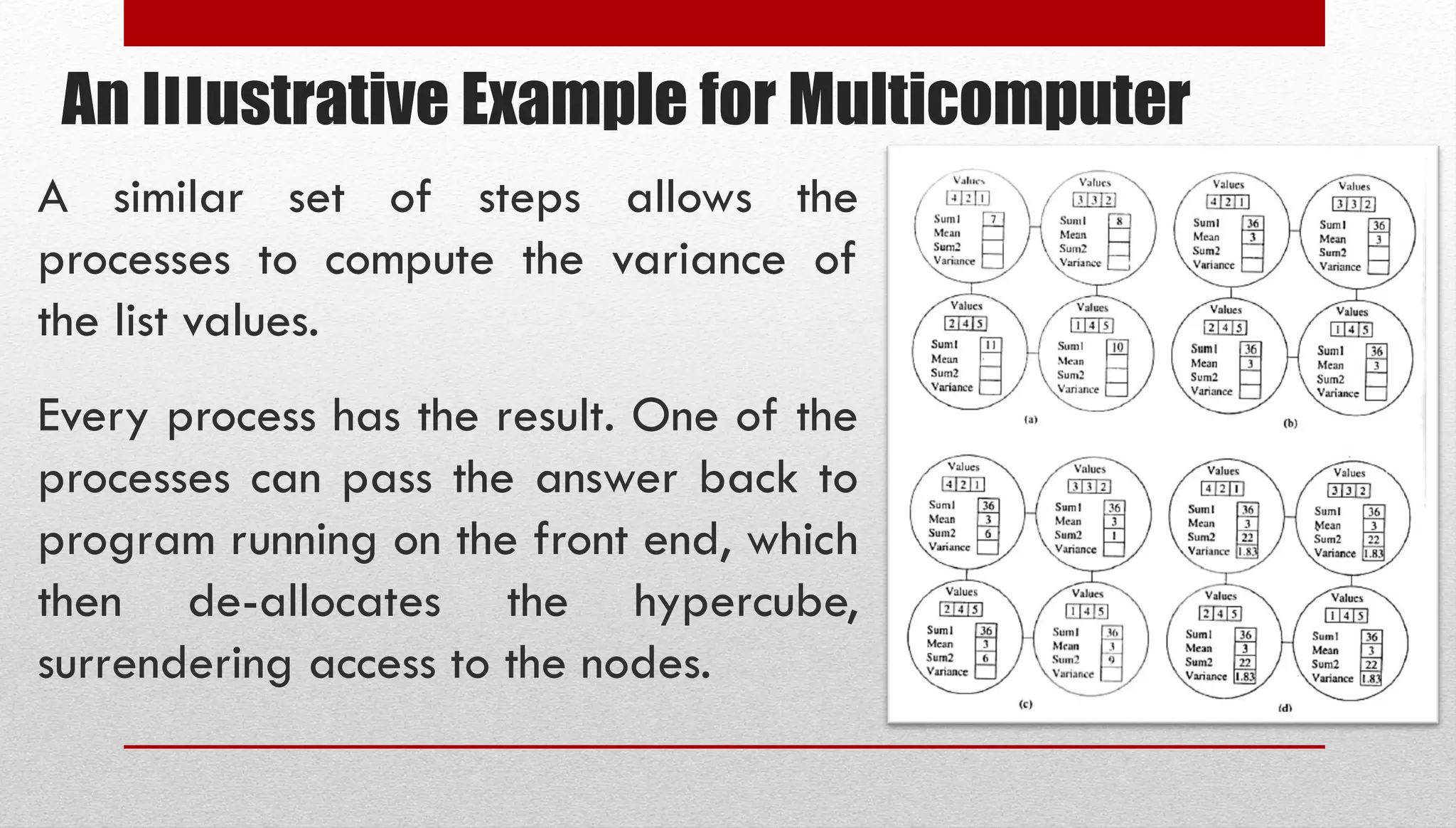

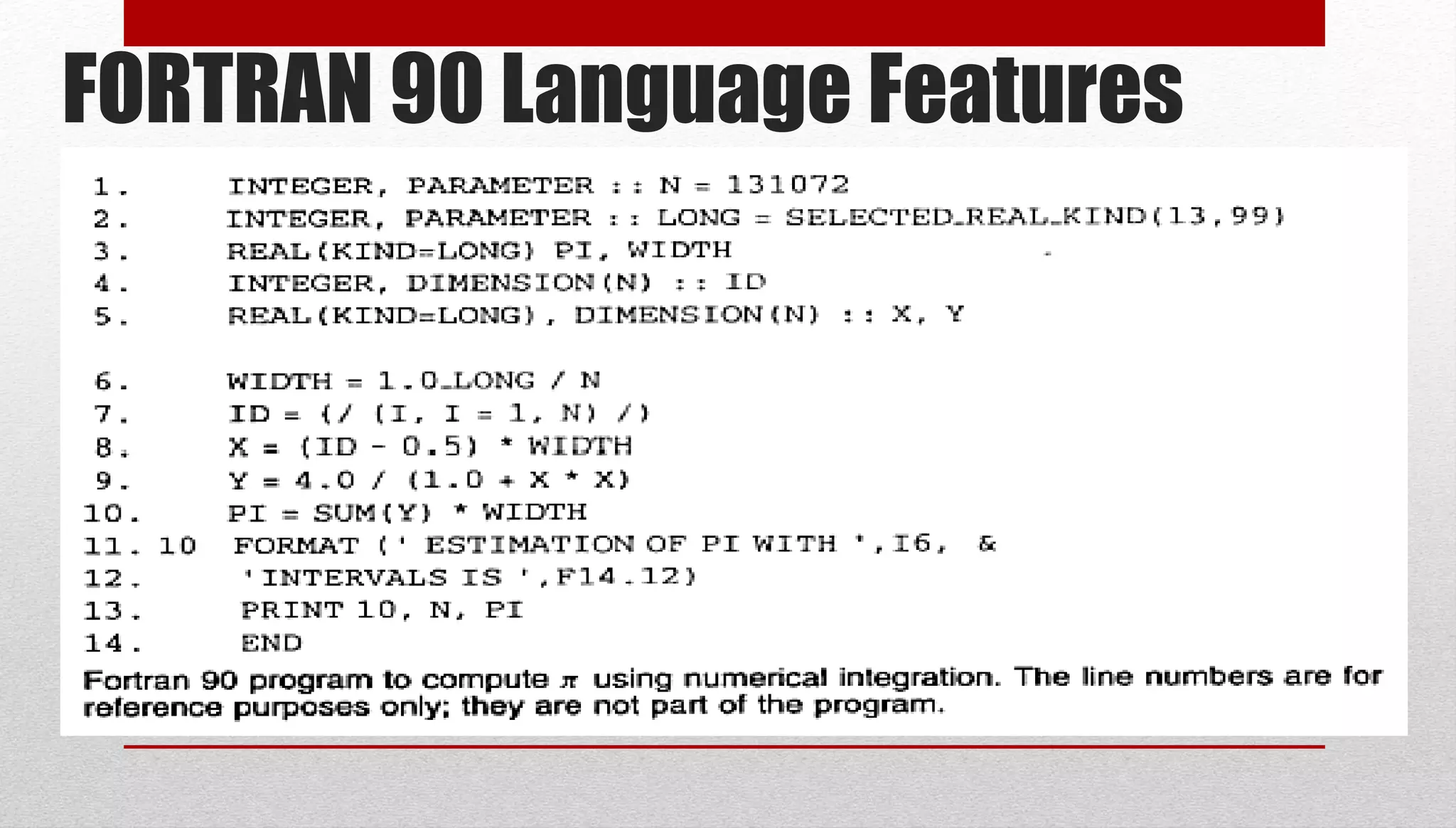

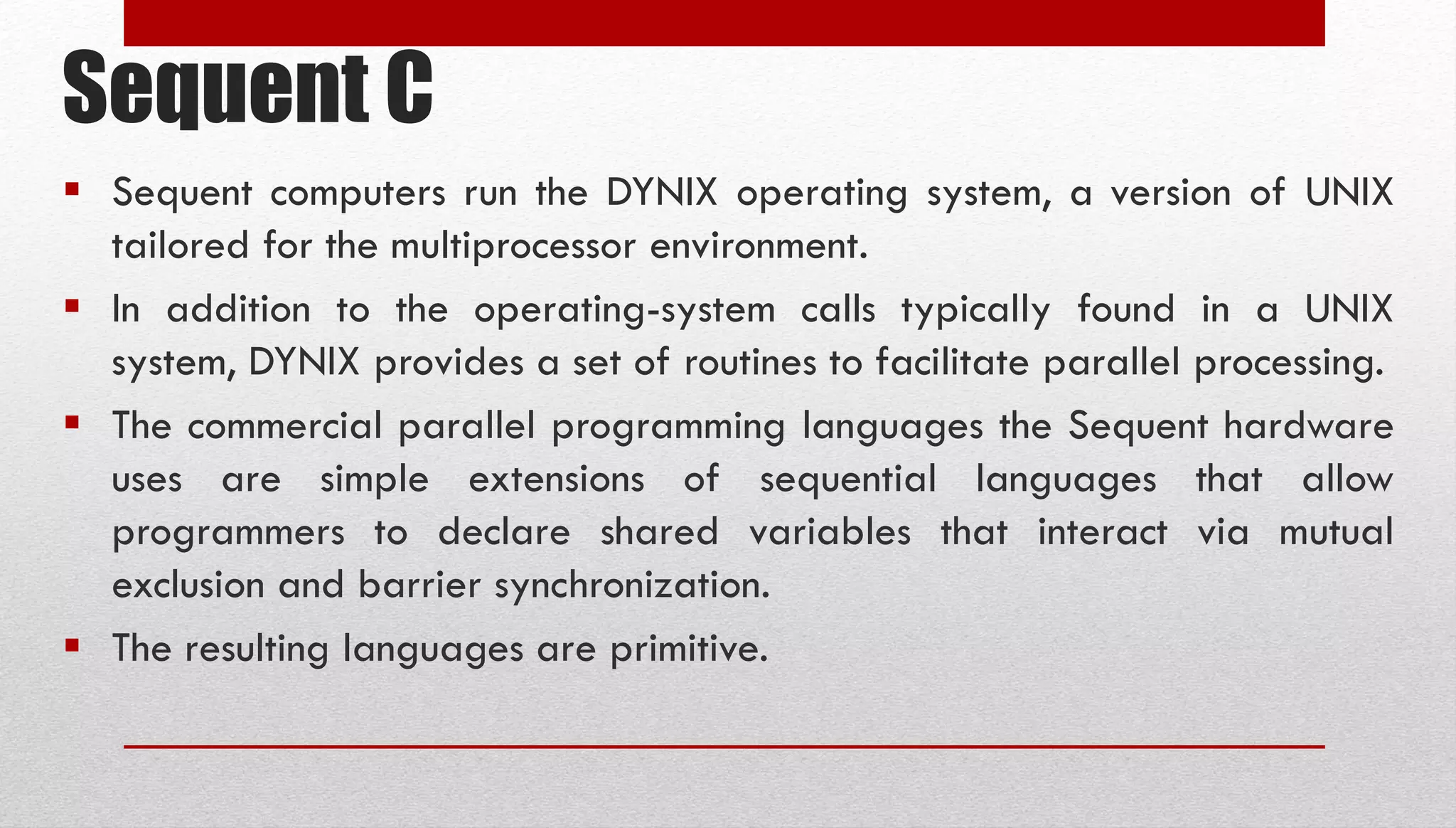

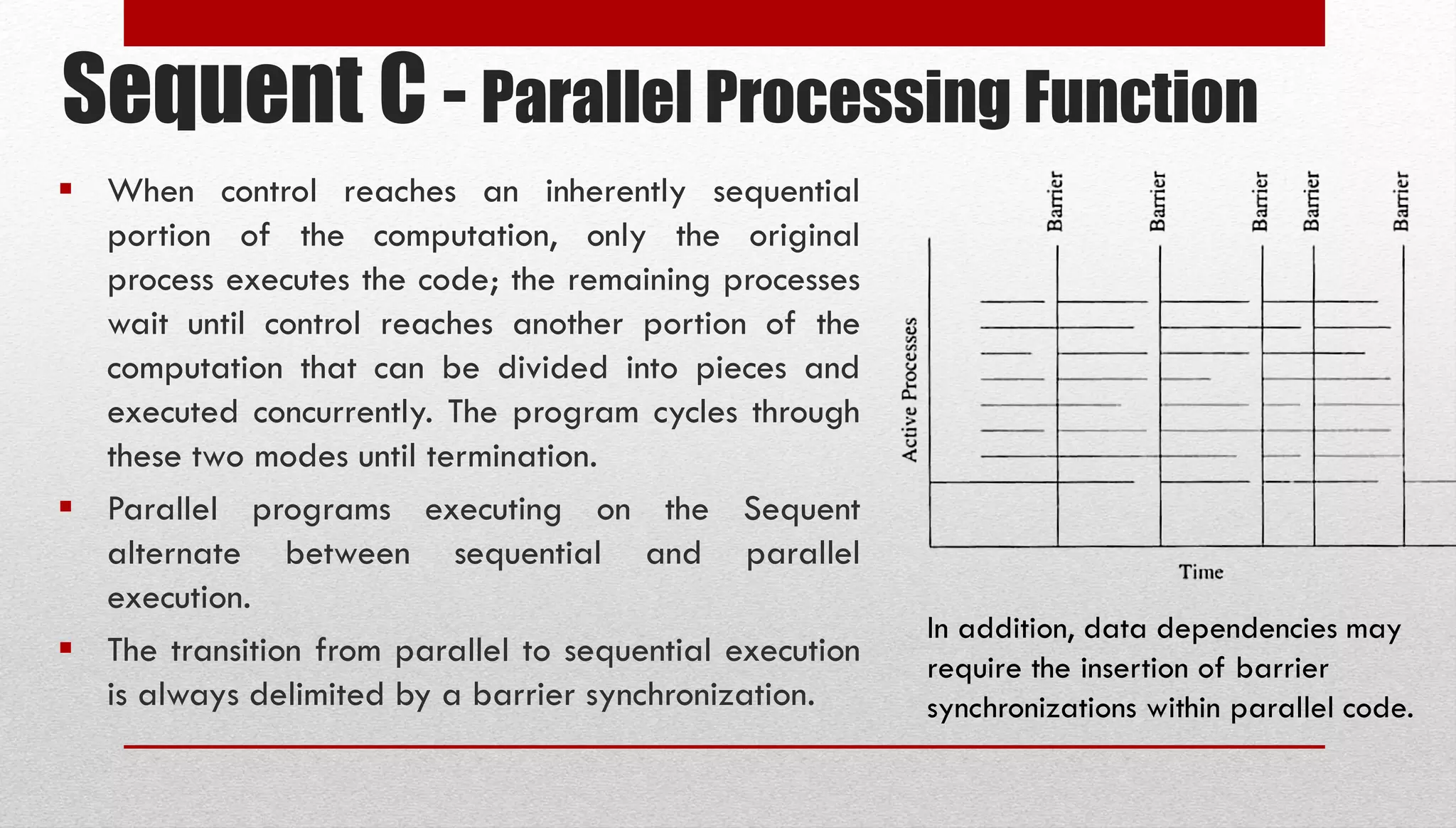

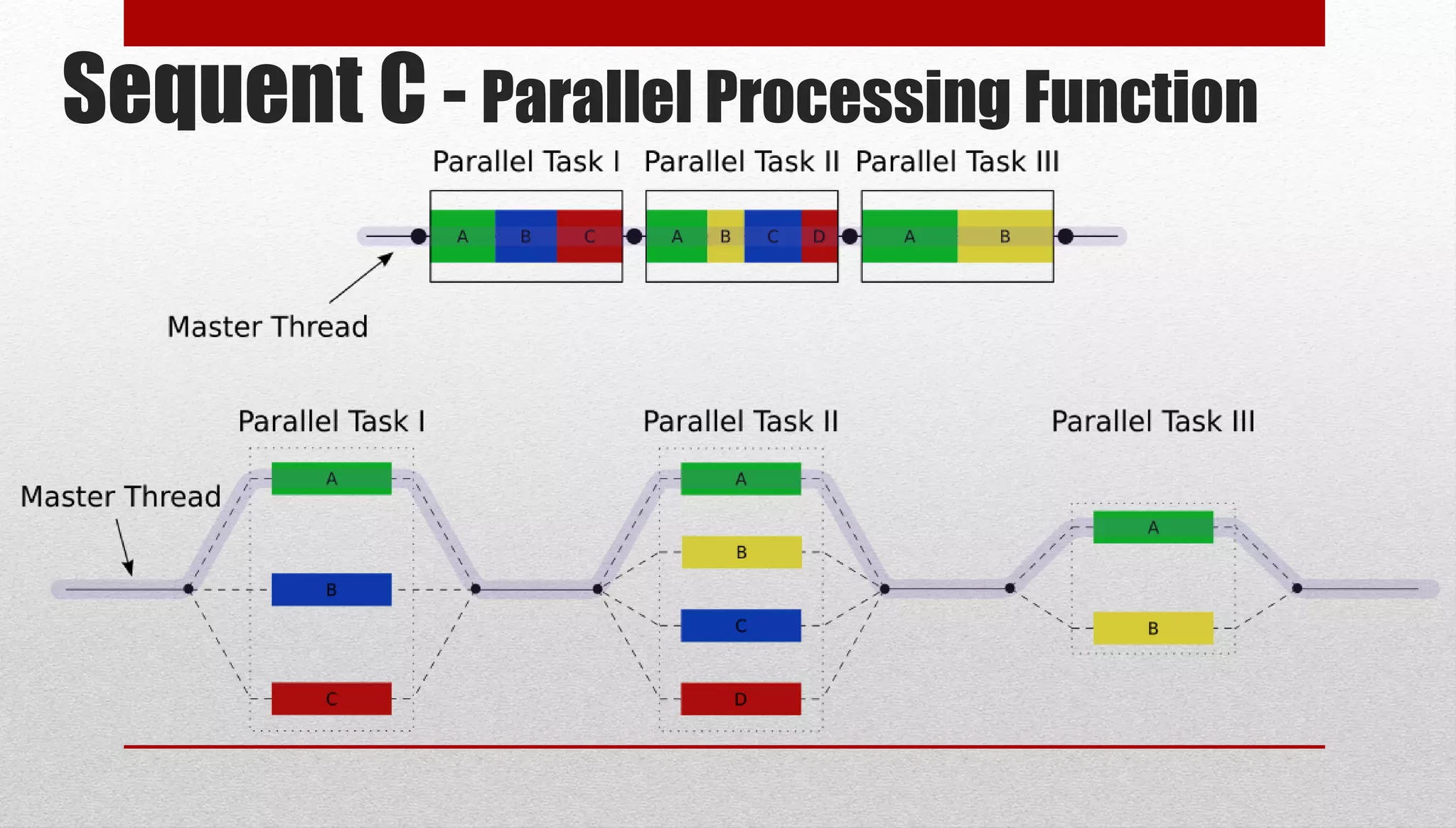

This document discusses parallel programming languages, including Fortran 90 and Sequent C. It provides an overview of parallel programming concepts like multicomputers, multiprocessors, and uniform memory access. It also gives examples of calculating variance and integration in parallel and describes language features for parallel programming like process creation, communication, and synchronization using barriers and mutual exclusion.

![A Sample Application. Integration: find the area under the curve 4/(1 + x^2) between 0 and 1. The interval [0, 1] is divided into n subintervals of width 1/n. For each of these intervals, the algorithm computes the area of a rectangle whose height is such that the curve intersects the top of the rectangle at its midpoint. The sum of the areas of the n rectangles approximates the area under the curve.](https://image.slidesharecdn.com/chapter-4-200902085019/75/Chapter-4-Parallel-Programming-Languages-16-2048.jpg)
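The rectangle rule described on this slide can be sketched sequentially in plain C. This is a minimal illustration rather than the code from the slides; the function name `integrate` is an assumption made here. Since the exact area under 4/(1 + x^2) on [0, 1] is π, the result should approach π as n grows.

```c
/* Midpoint-rule approximation of the area under 4/(1 + x^2) on [0, 1].
   The name `integrate` is hypothetical; n is the number of subintervals. */
double integrate(int n)
{
    double width = 1.0 / n;   /* each subinterval has width 1/n */
    double sum = 0.0;

    for (int i = 0; i < n; i++) {
        double x = (i + 0.5) * width;   /* midpoint of subinterval i */
        sum += 4.0 / (1.0 + x * x);     /* height of rectangle i */
    }
    return sum * width;       /* total area of the n rectangles */
}
```

Calling `integrate(1000)` from a `main` function and printing the result with `printf("%.10f\n", ...)` gives a value close to π. The iterations of the loop are independent of one another, which is what makes this computation a convenient candidate for parallel execution.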

![Sequent C - Shared Data: Parallel processes on the Sequent coordinate their activities by accessing shared data structures. The keyword shared, placed before a global variable declaration, indicates that all processes are to share a single instance of that variable. For example, if a 10-element global array is declared int a[10], then every active process has its own copy of the array; if one process modifies a value in its copy of a, no other process's value will change. On the other hand, if the array is declared shared int a[10], then all active processes share a single instance of the array, and changes made by one process can be detected by the other processes.](https://image.slidesharecdn.com/chapter-4-200902085019/75/Chapter-4-Parallel-Programming-Languages-31-2048.jpg)

![Sequent C - Parallel Processing Function 2. m_fork(name[,arg,...]): The parent process creates a number of child processes. The parent process and the child processes begin executing function name with the arguments (if any) specified by the call to m_fork. After all the processes (the parent and all the children) have completed execution of function name, the parent process resumes execution with the code after m_fork, while the child processes busy wait until the next call to m_fork.](https://image.slidesharecdn.com/chapter-4-200902085019/75/Chapter-4-Parallel-Programming-Languages-36-2048.jpg)

![Sequent C - Parallel Processing Function 2. m_fork(name[,arg,...]): The parent process creates p child processes. All processes begin executing function name. When they have all finished, the parent process resumes execution with the code after m_fork, and the child processes busy wait until the next call to m_fork. Therefore, the first call to m_fork is more expensive than subsequent calls, because only the first call entails process creation.](https://image.slidesharecdn.com/chapter-4-200902085019/75/Chapter-4-Parallel-Programming-Languages-37-2048.jpg)