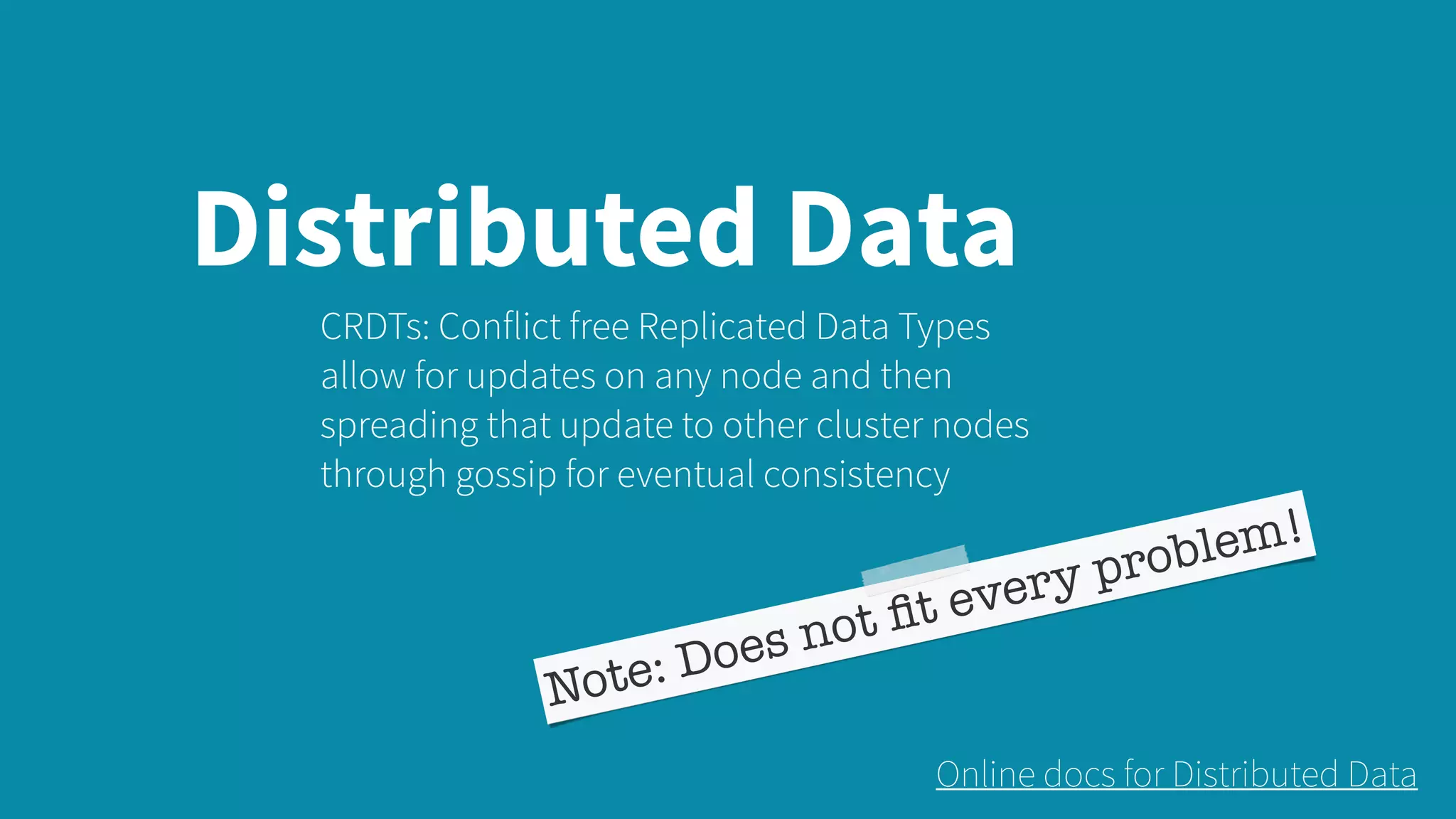

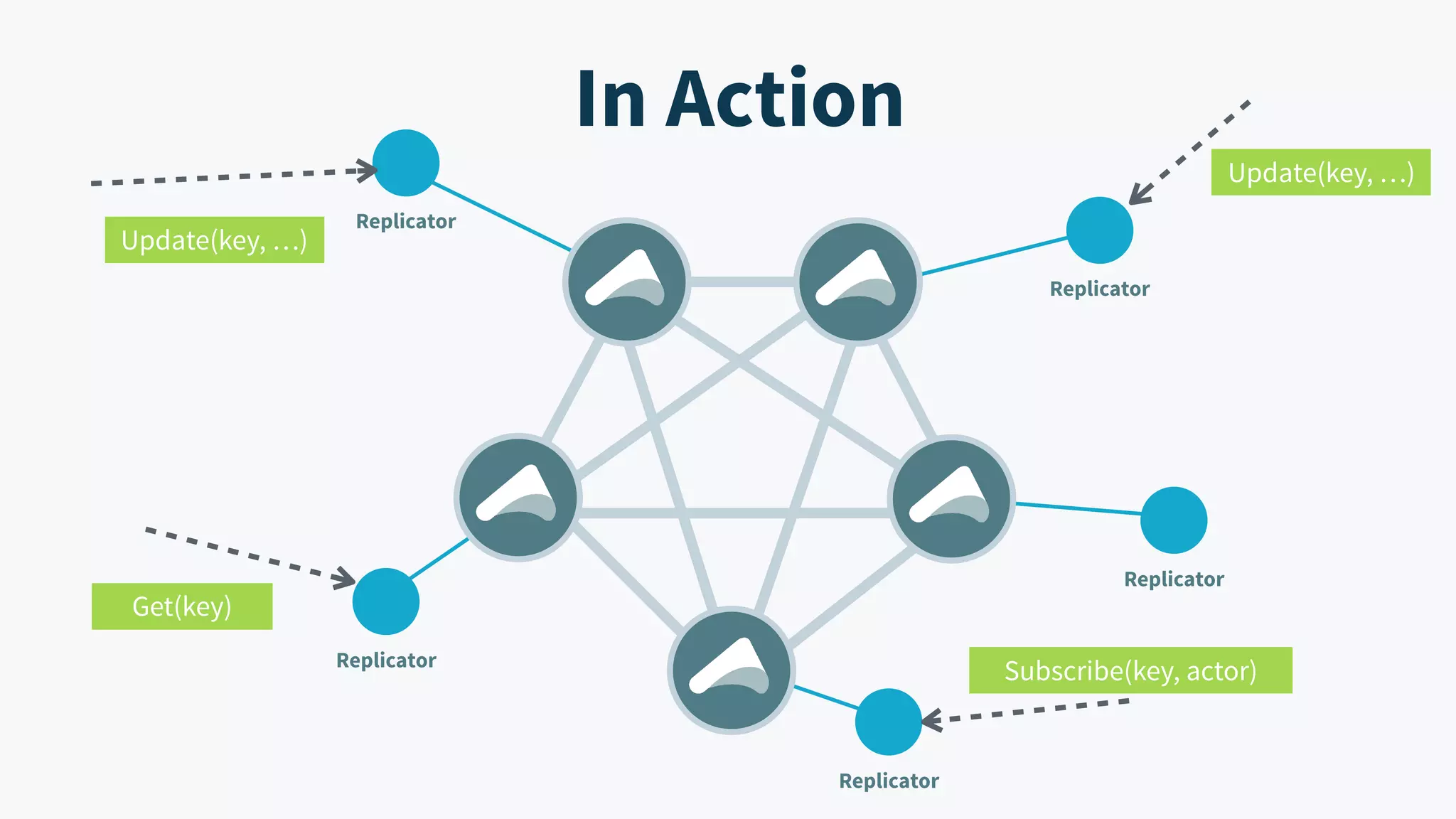

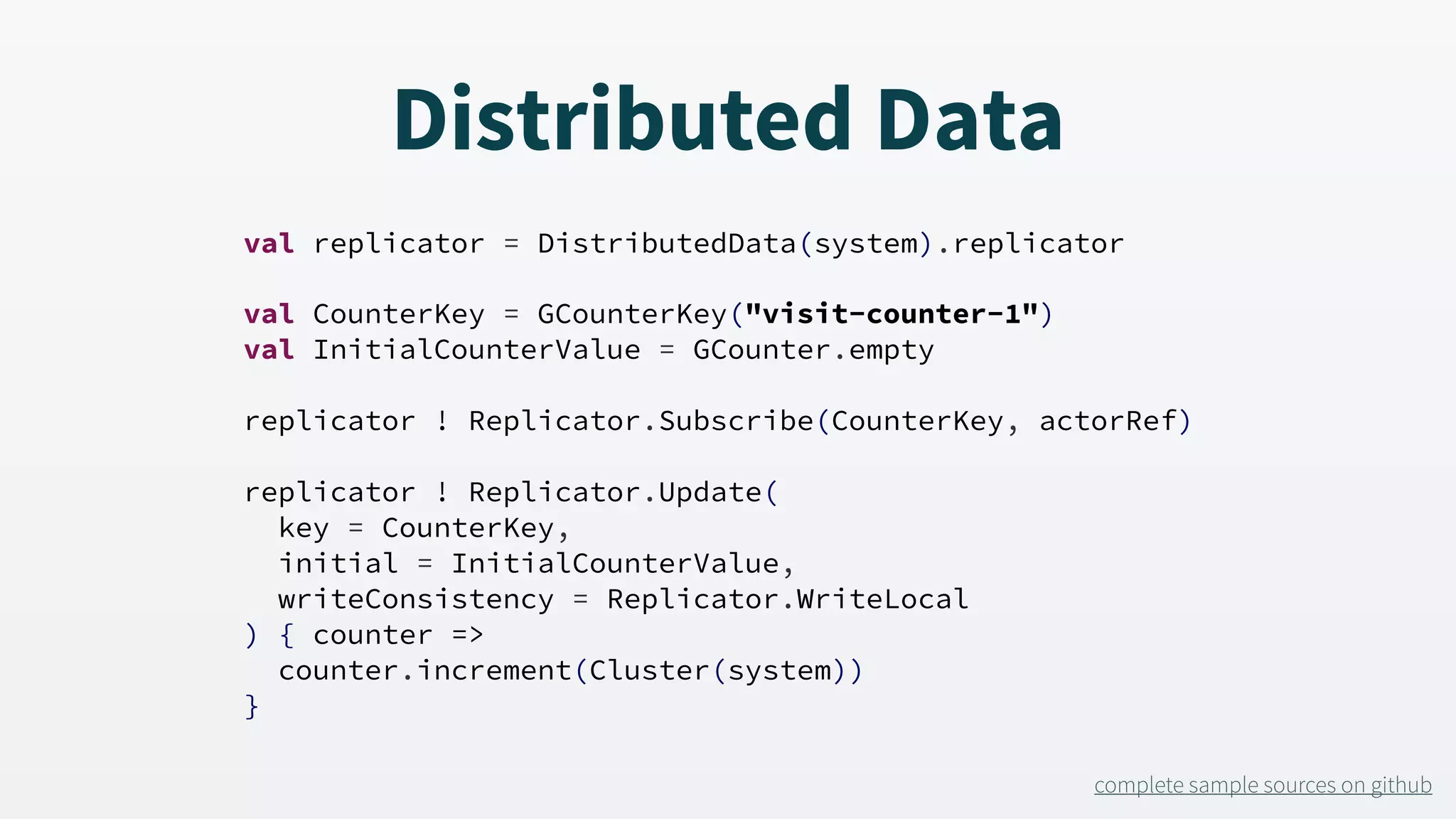

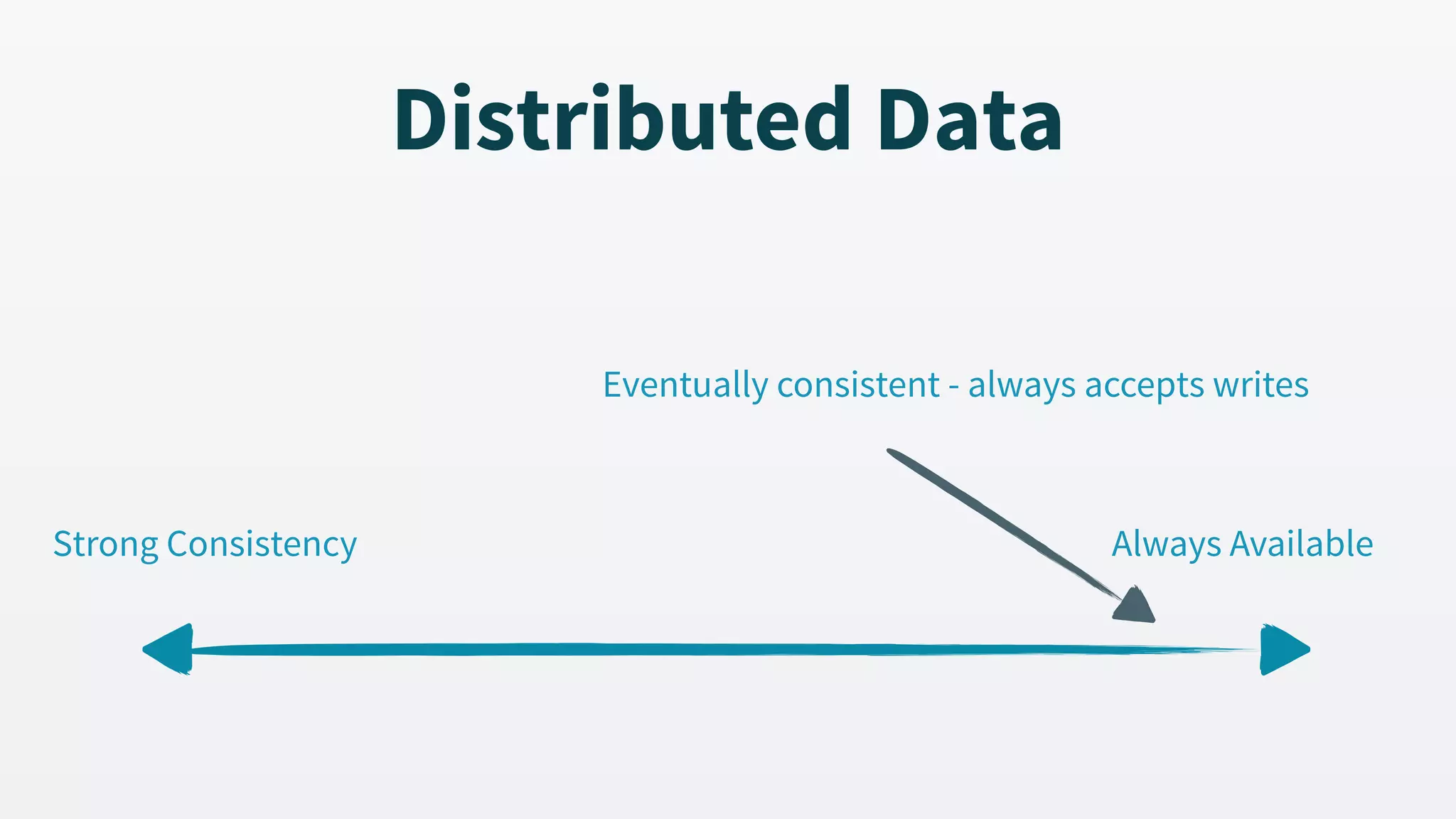

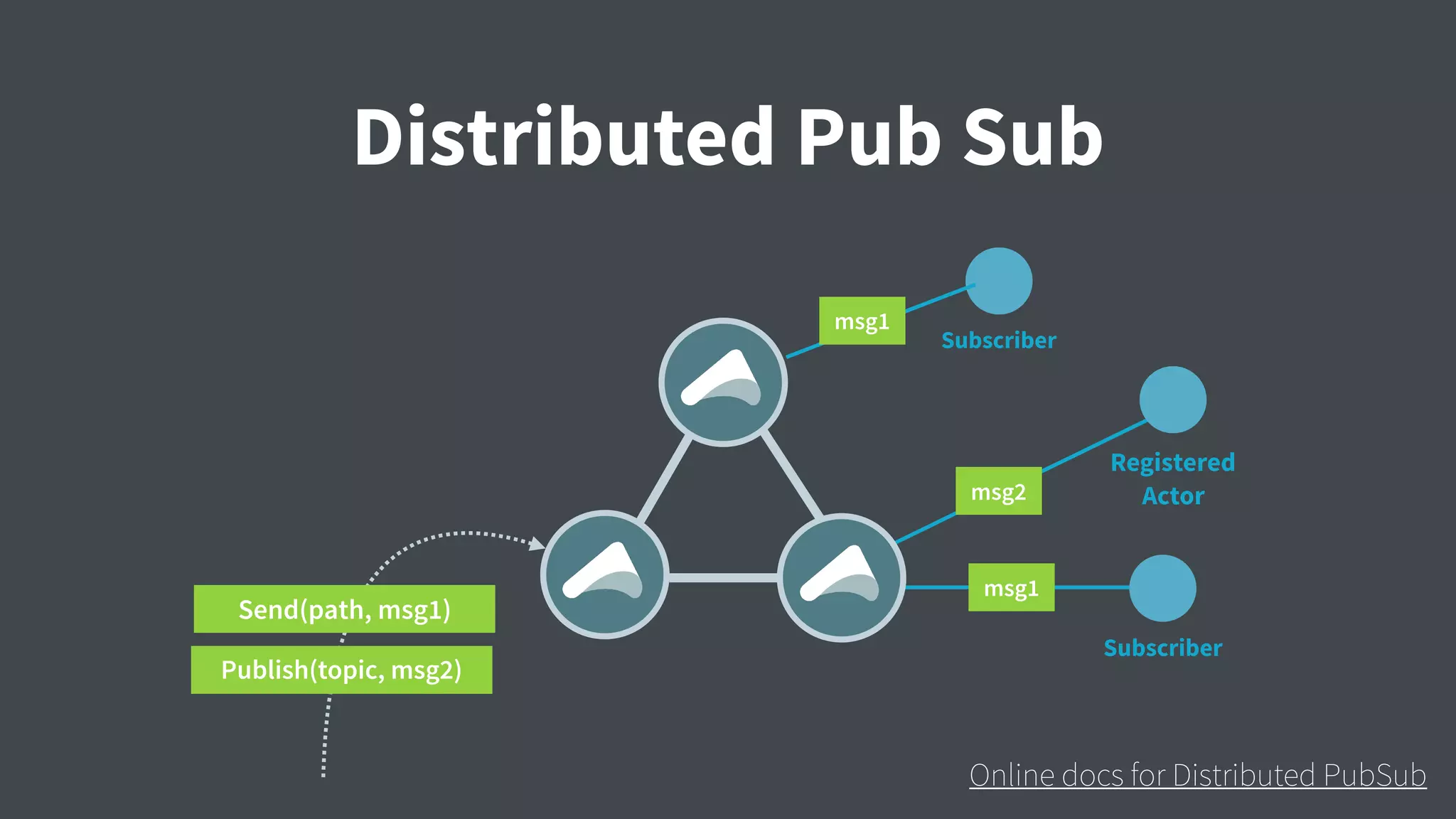

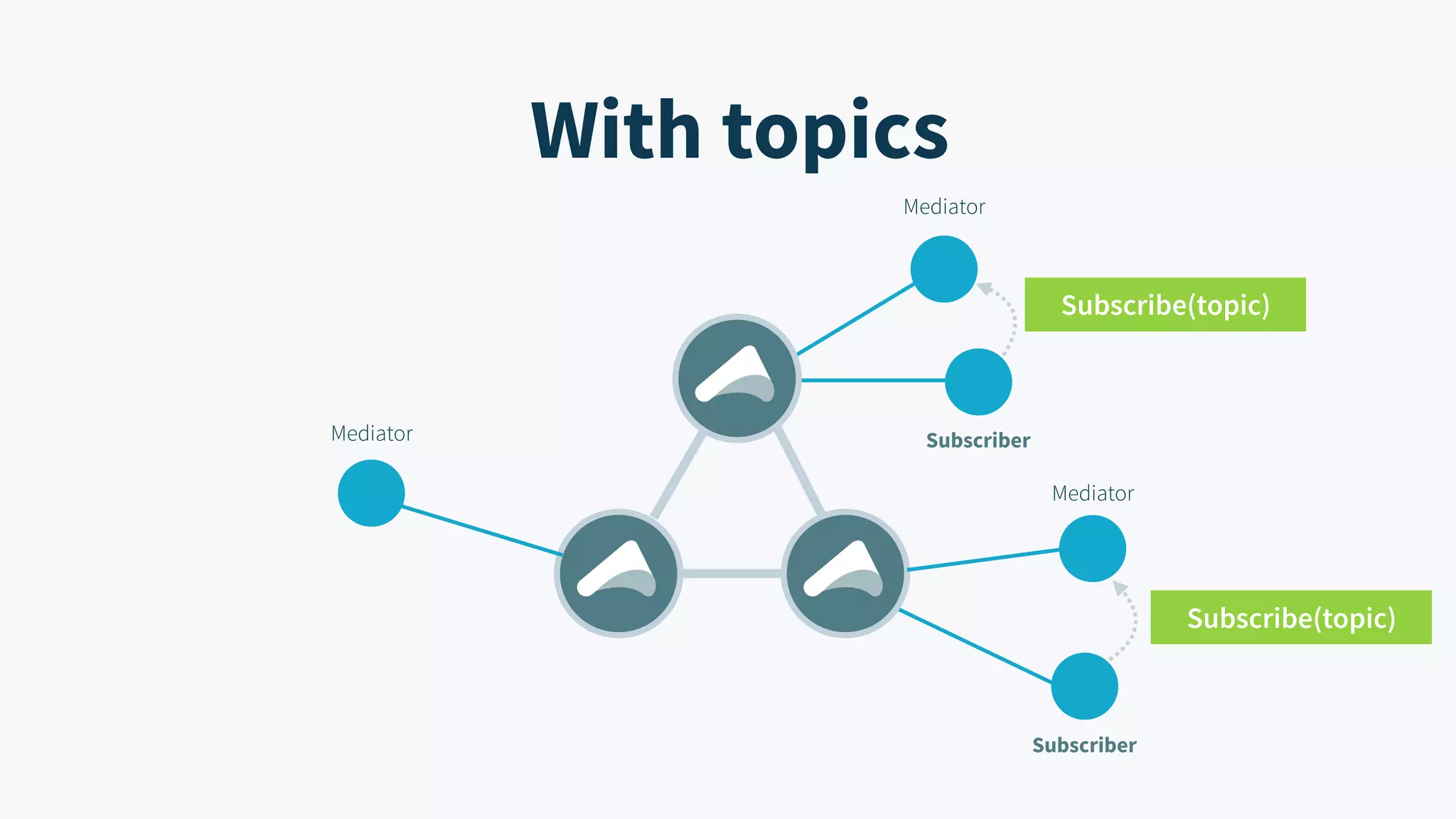

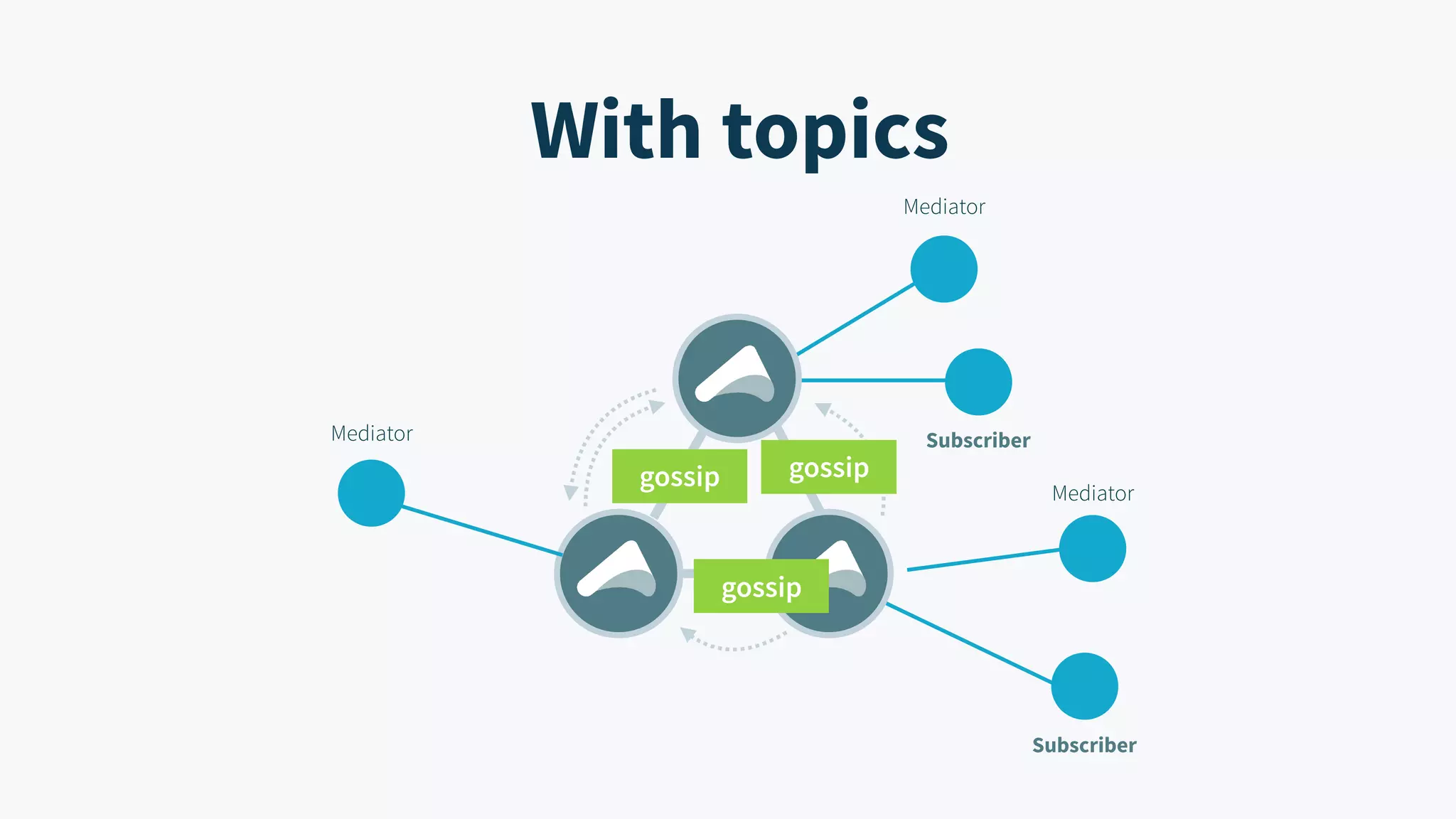

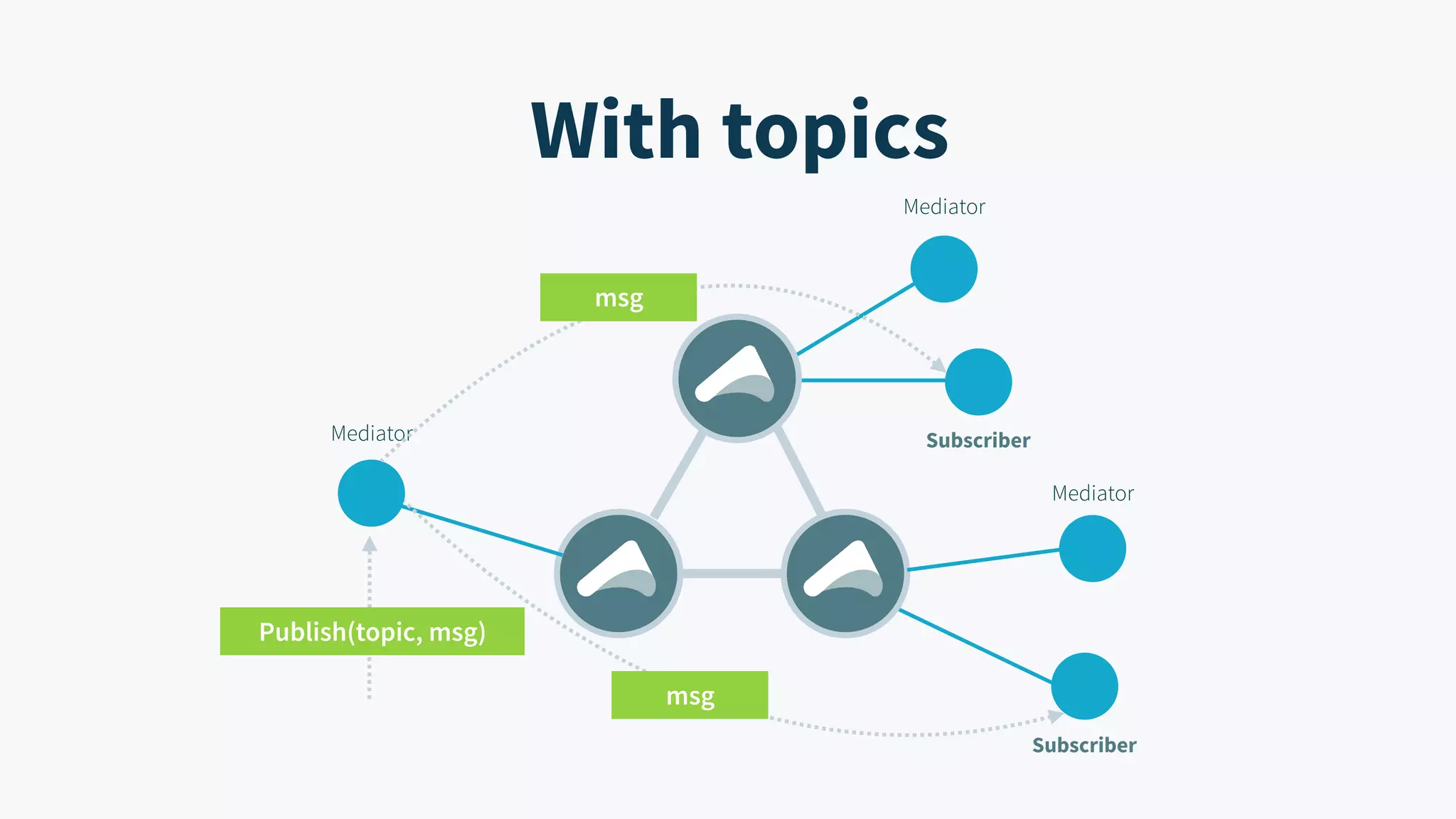

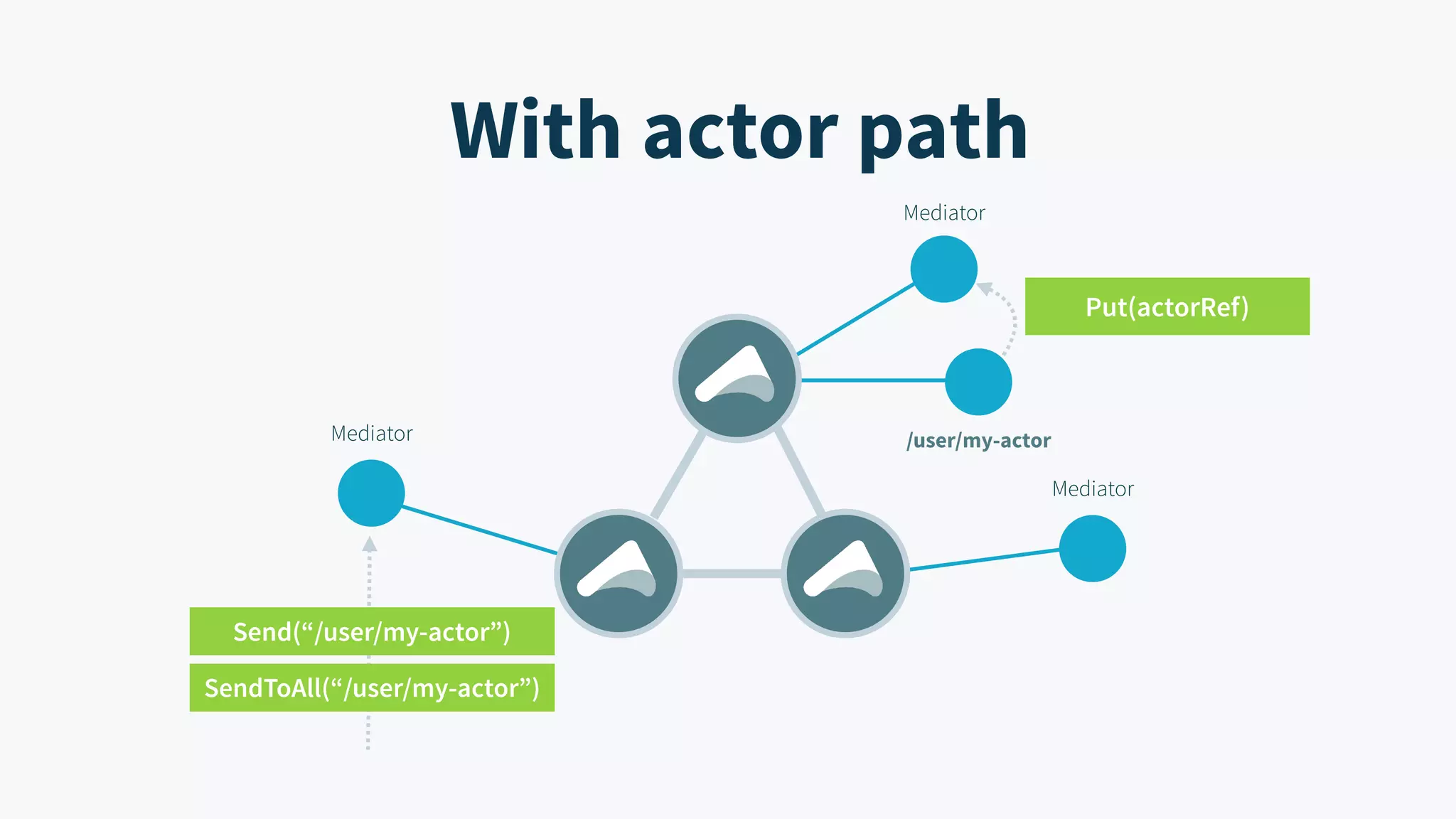

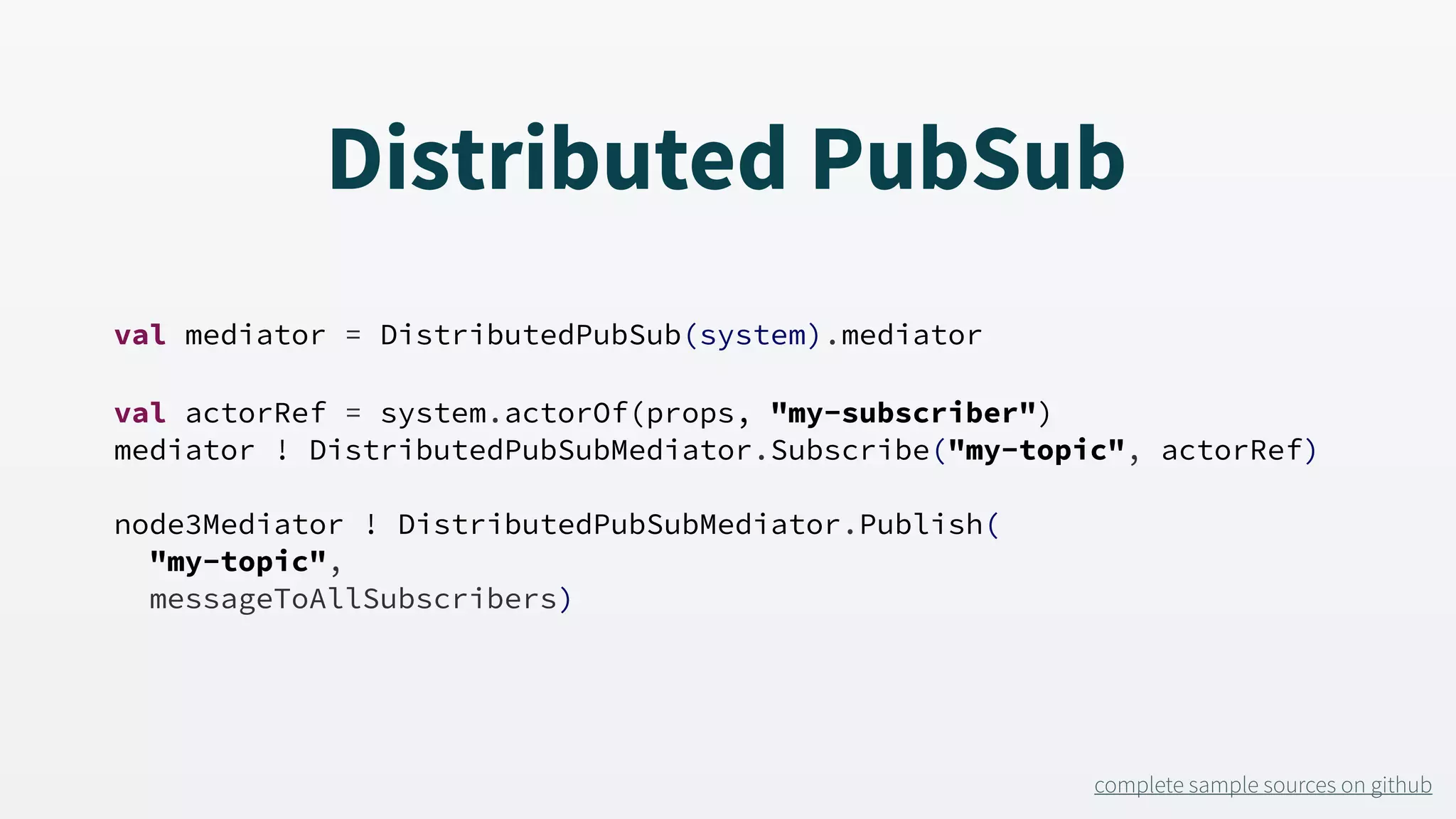

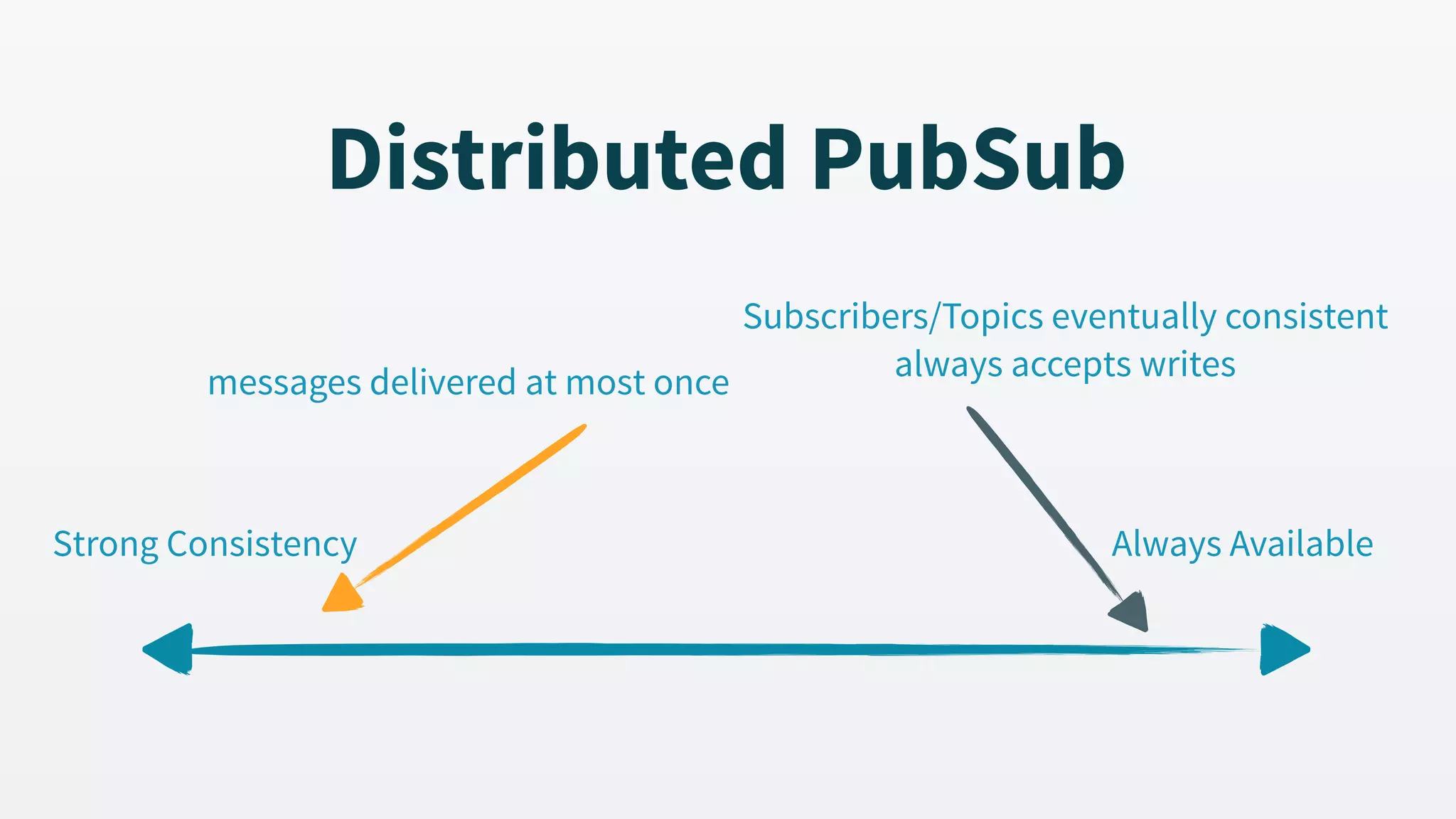

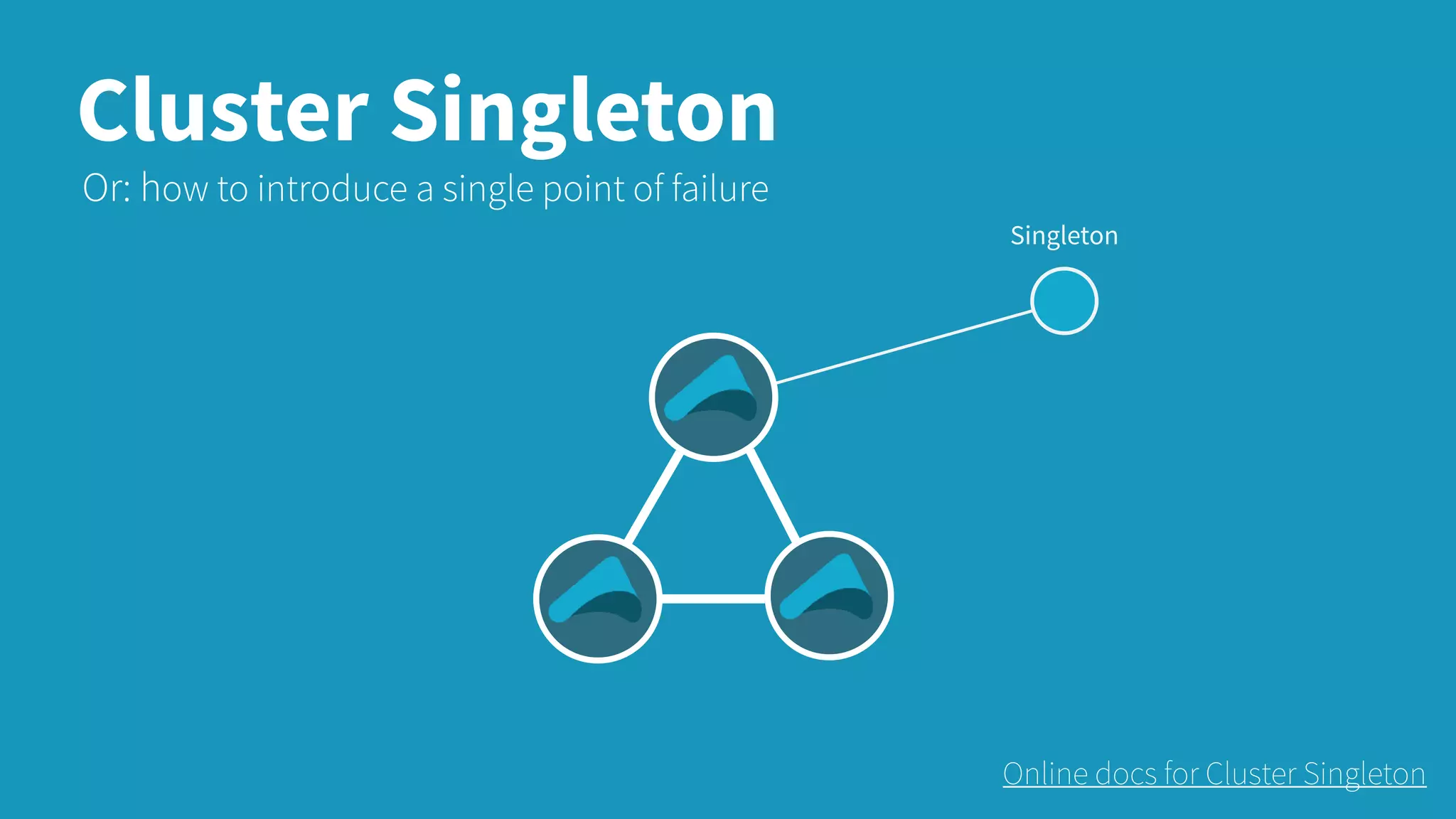

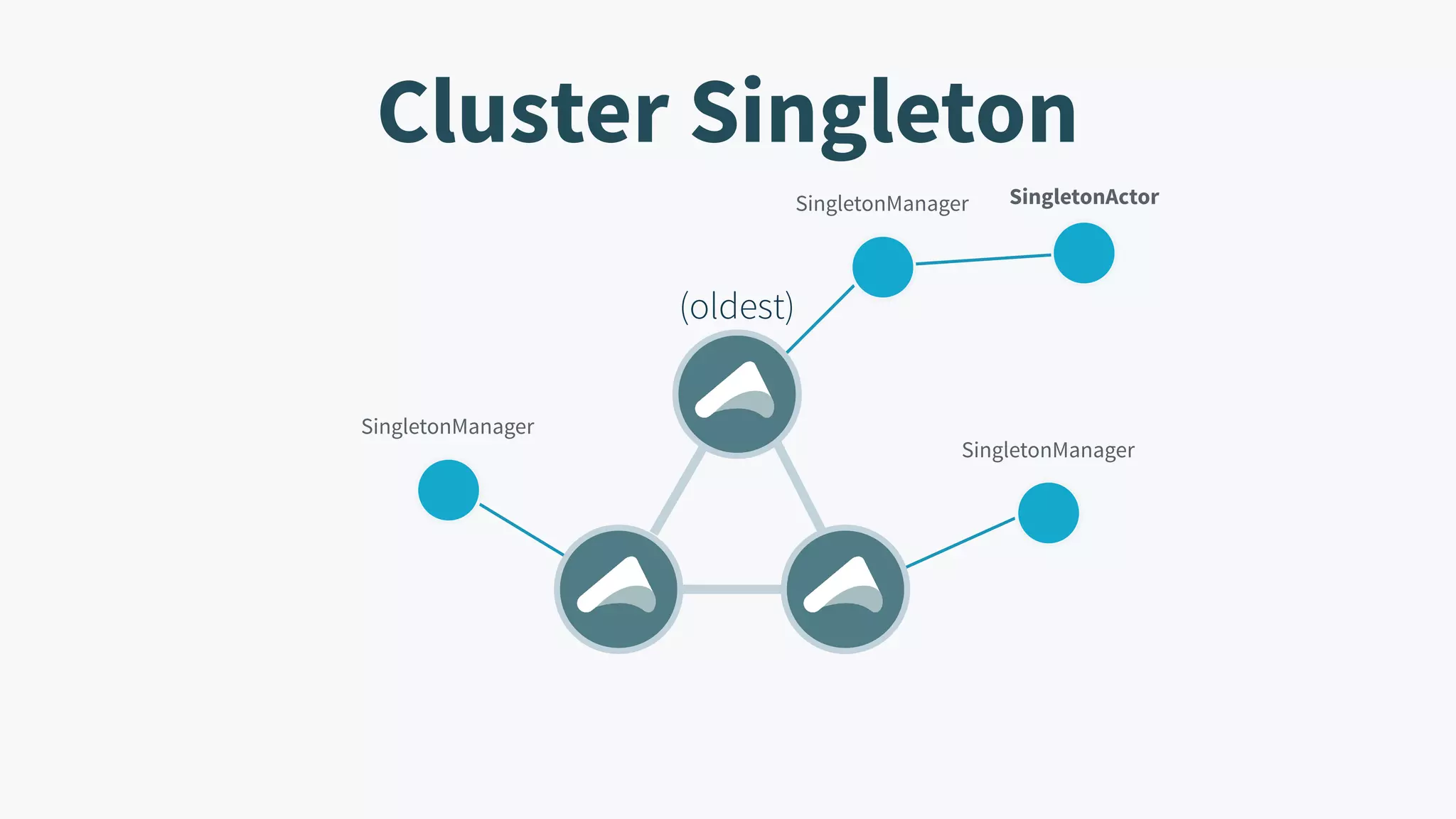

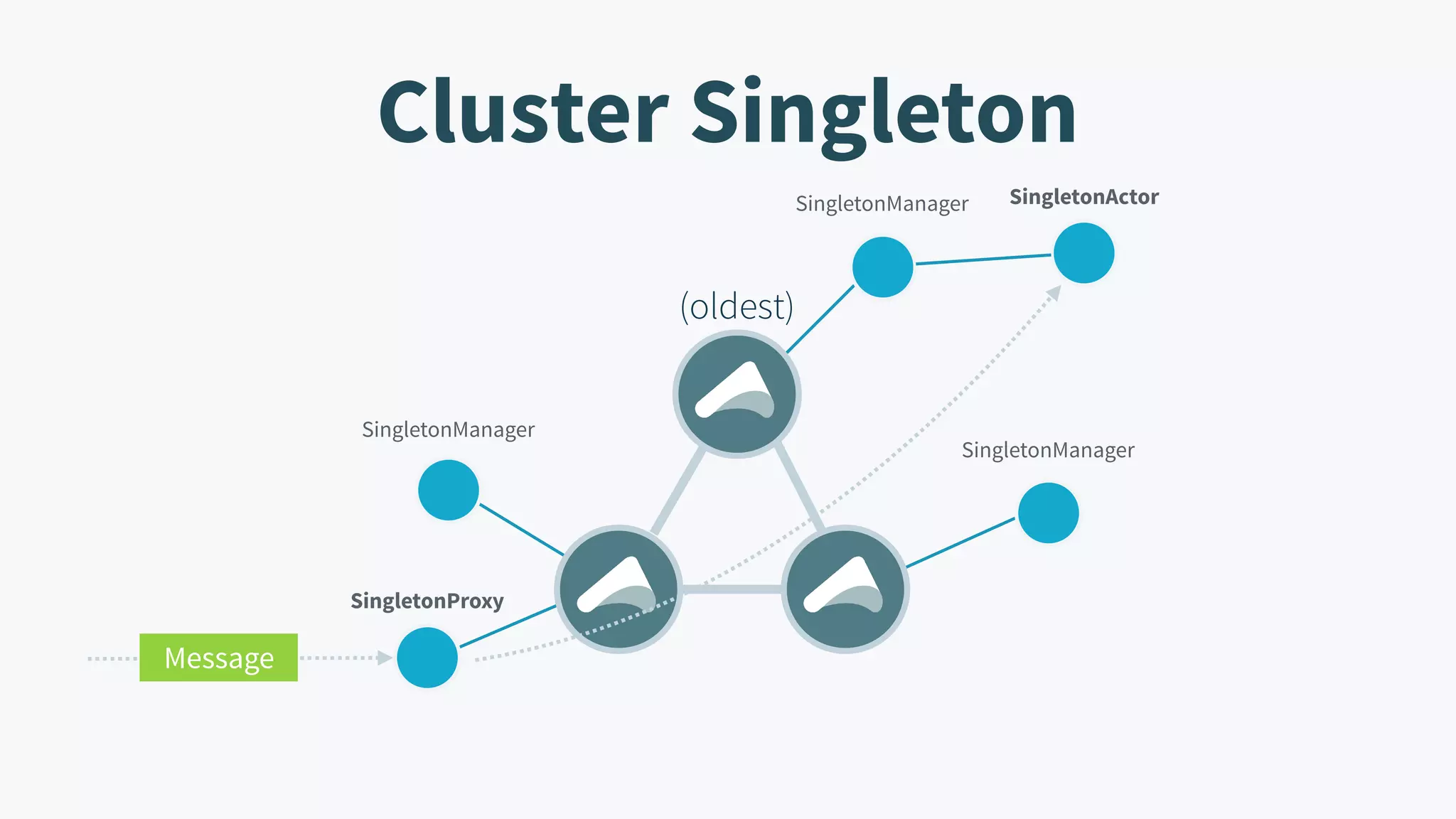

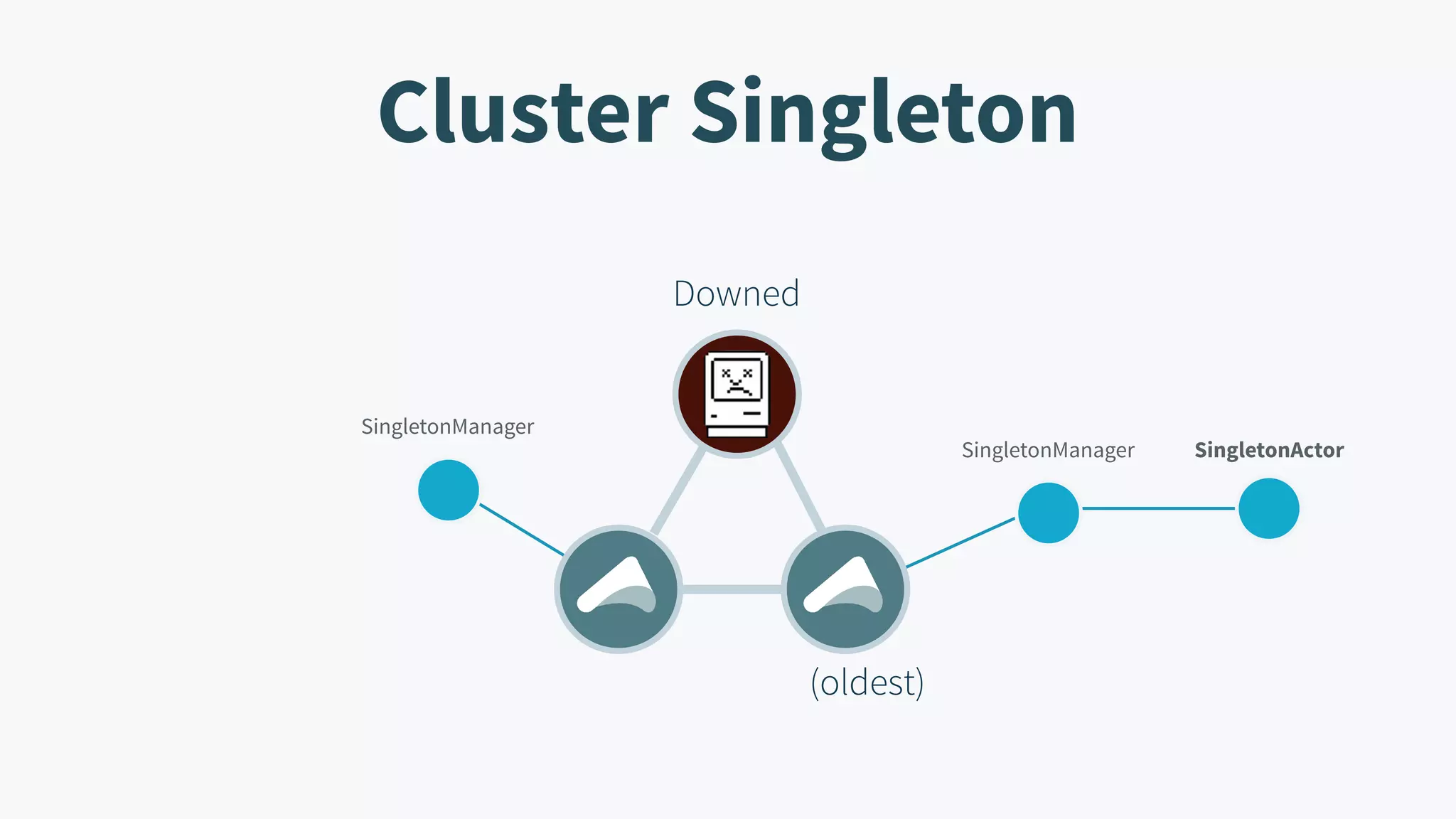

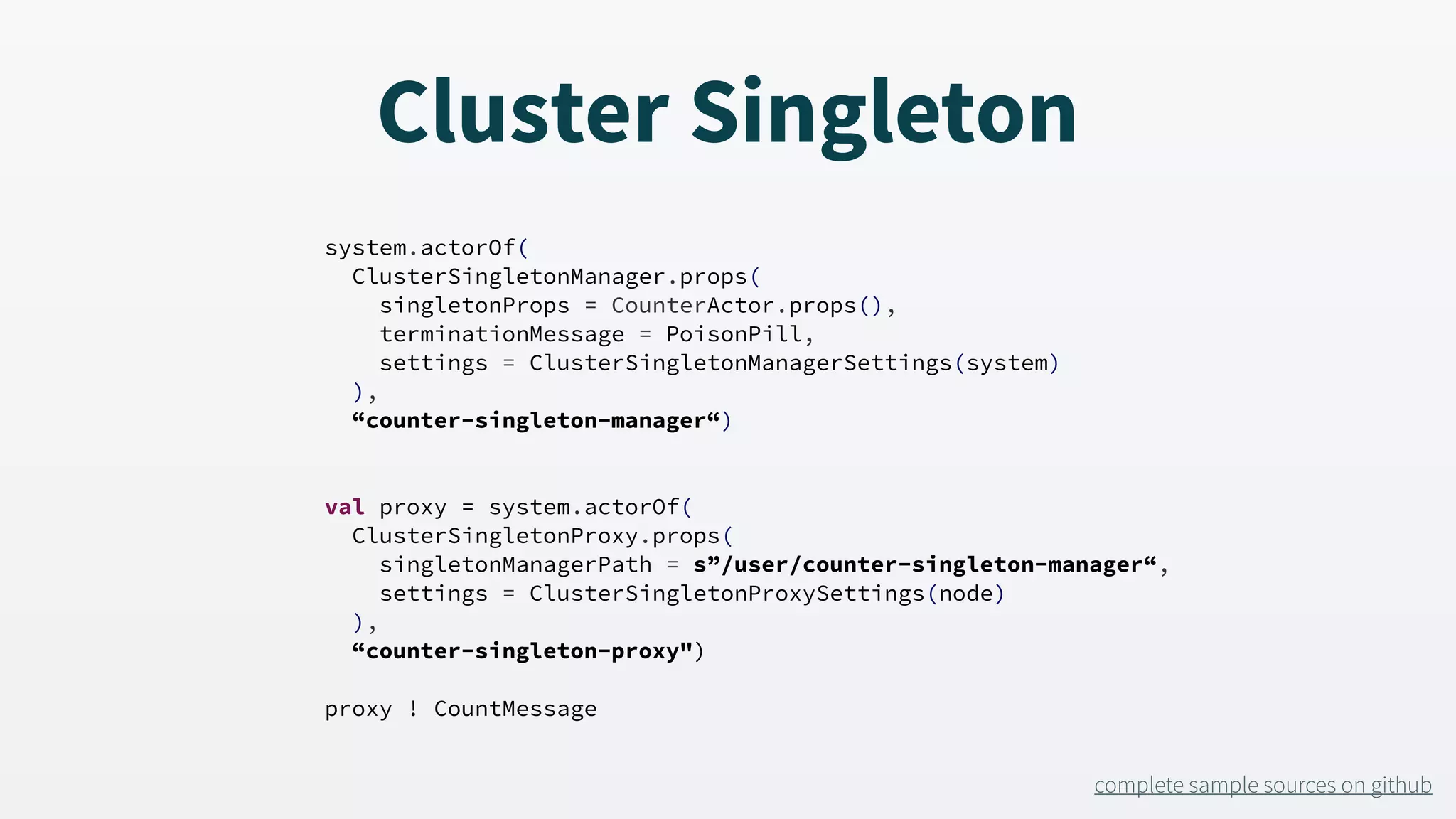

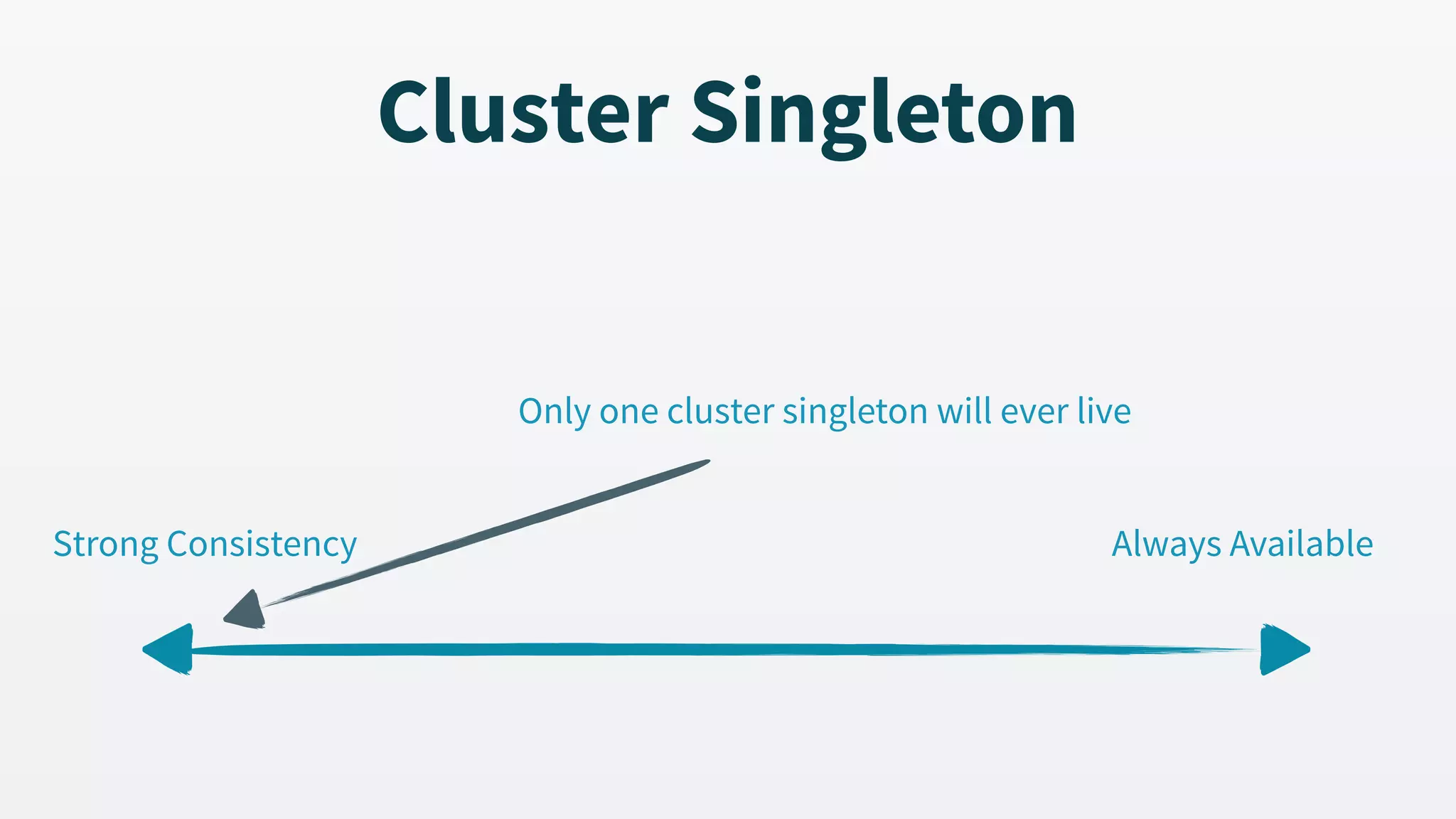

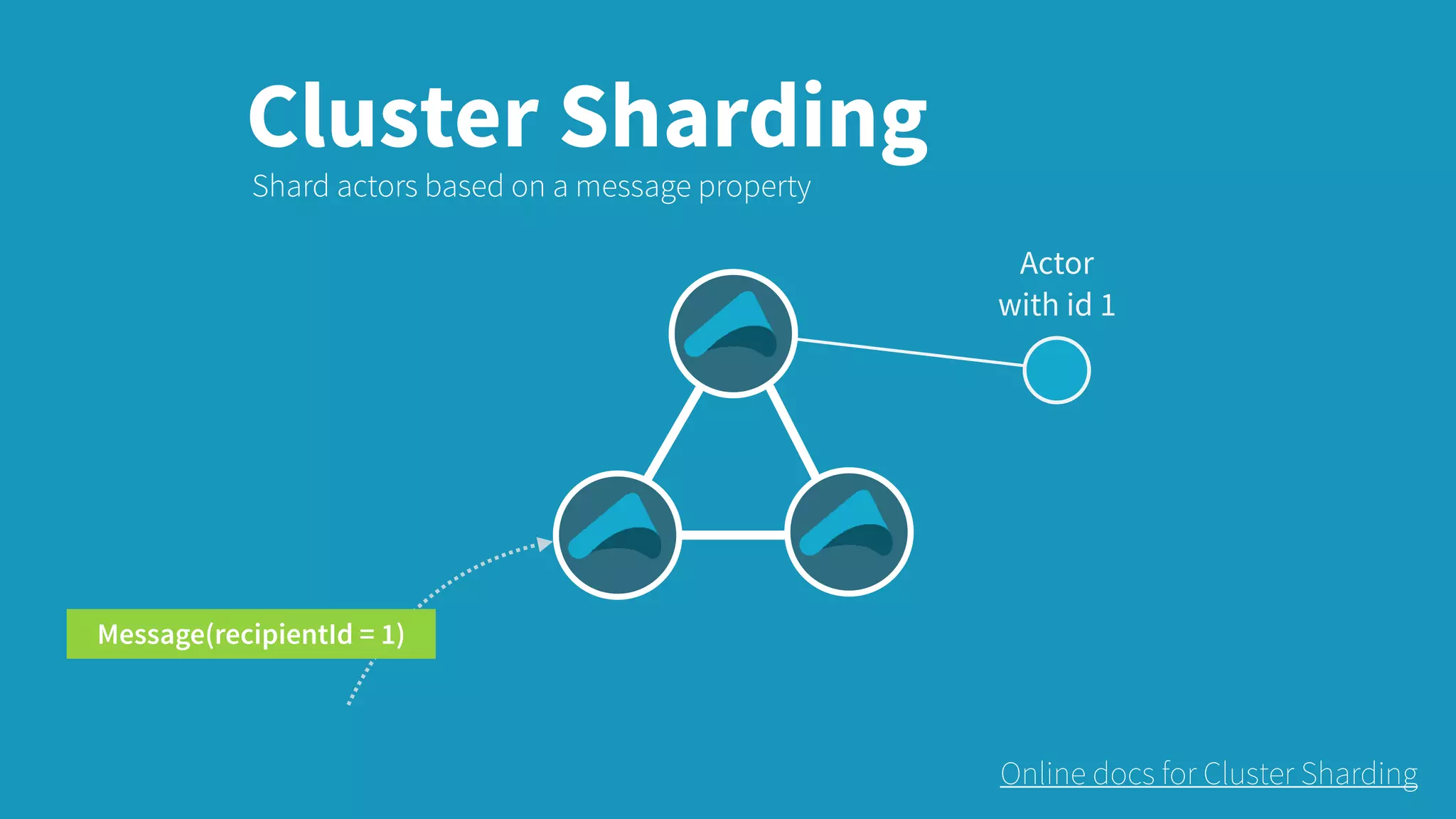

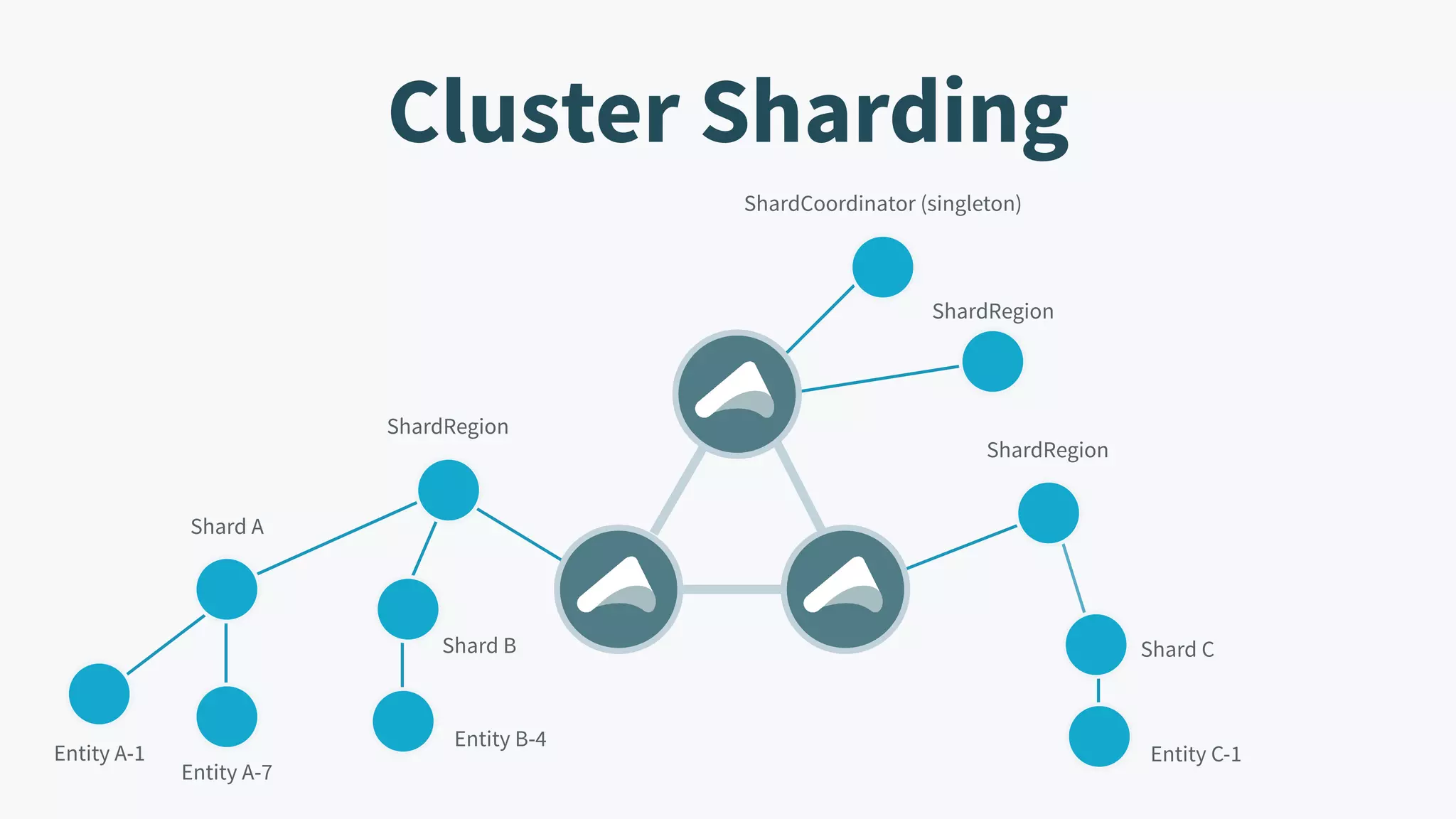

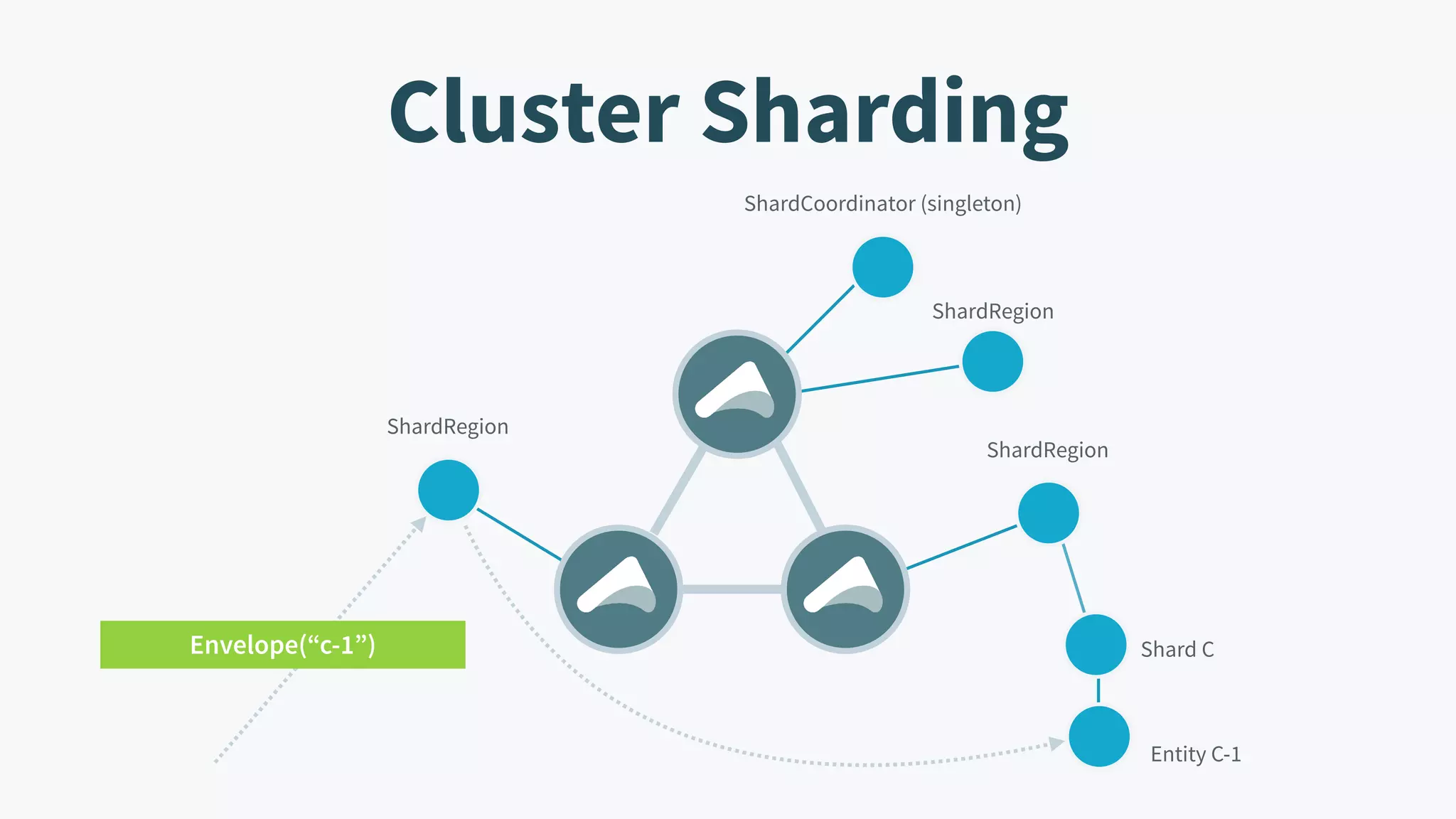

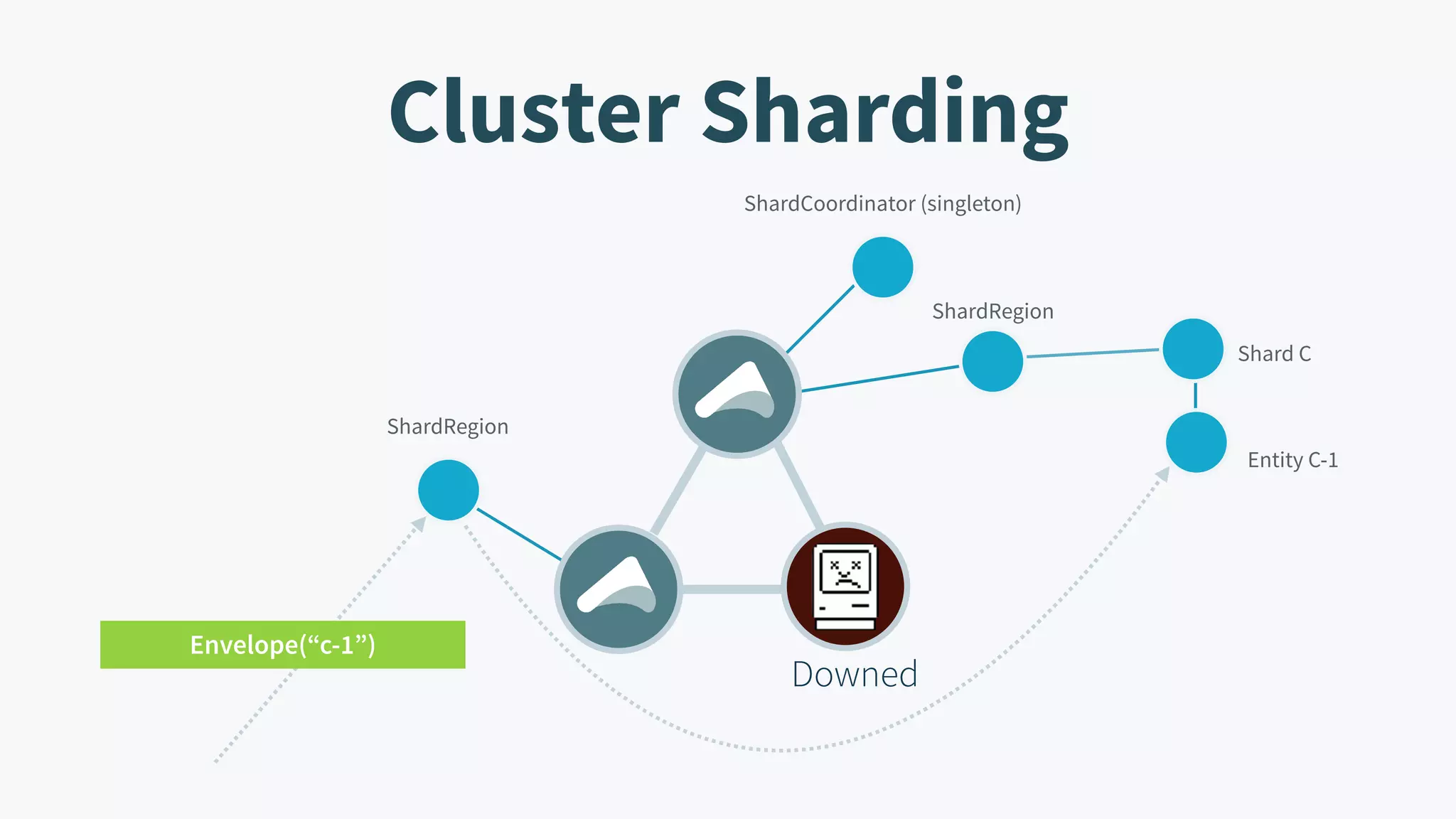

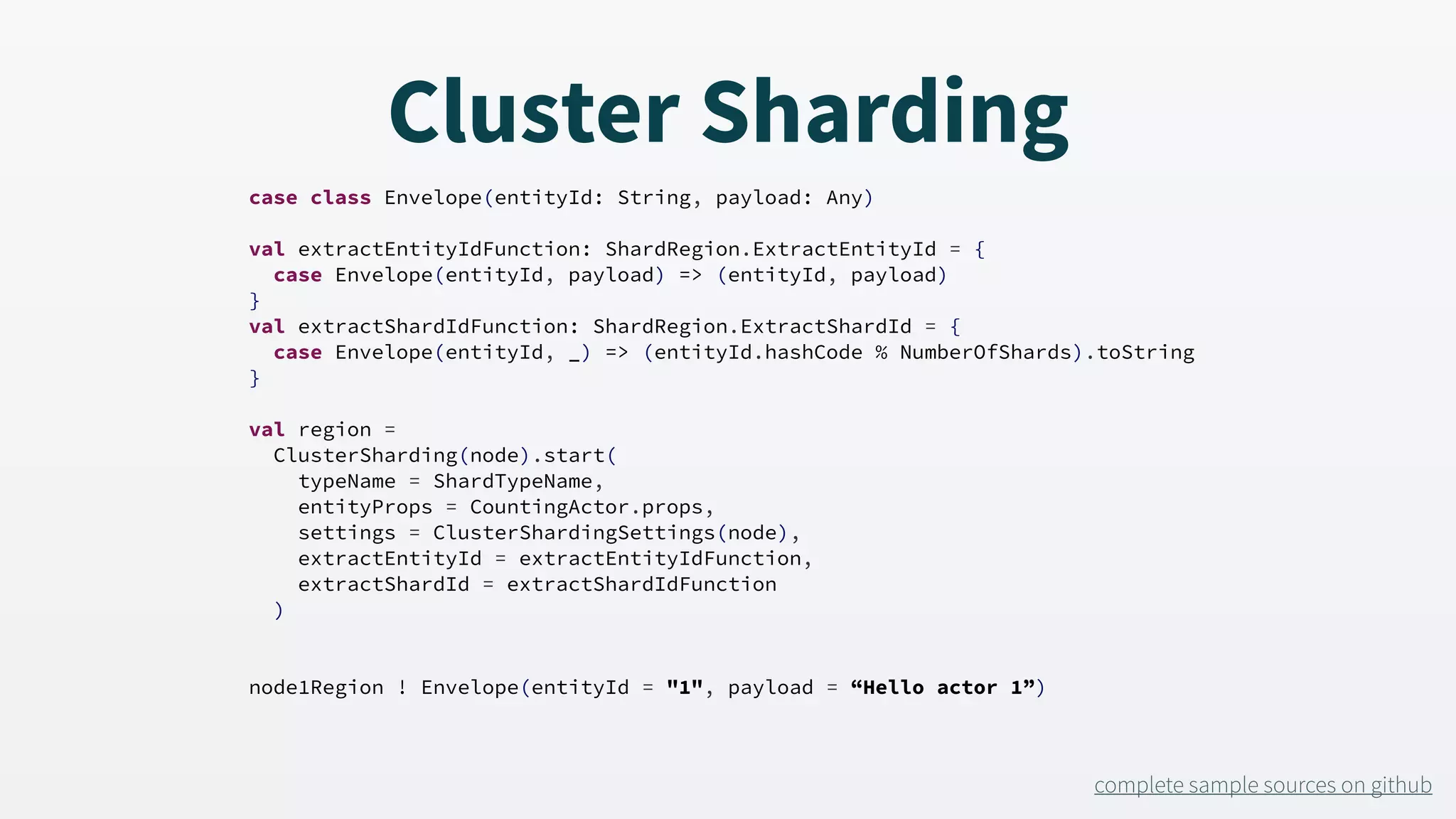

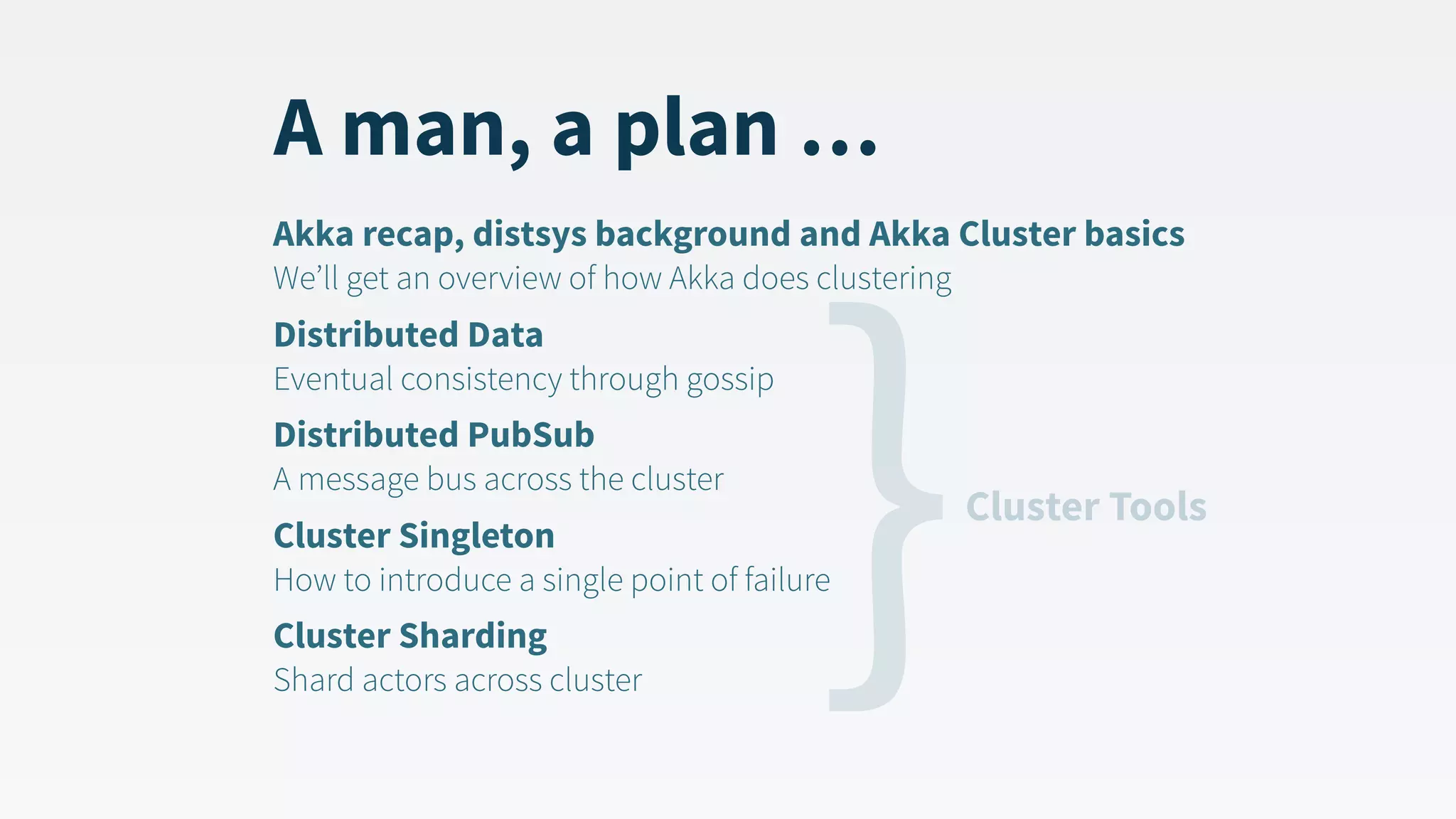

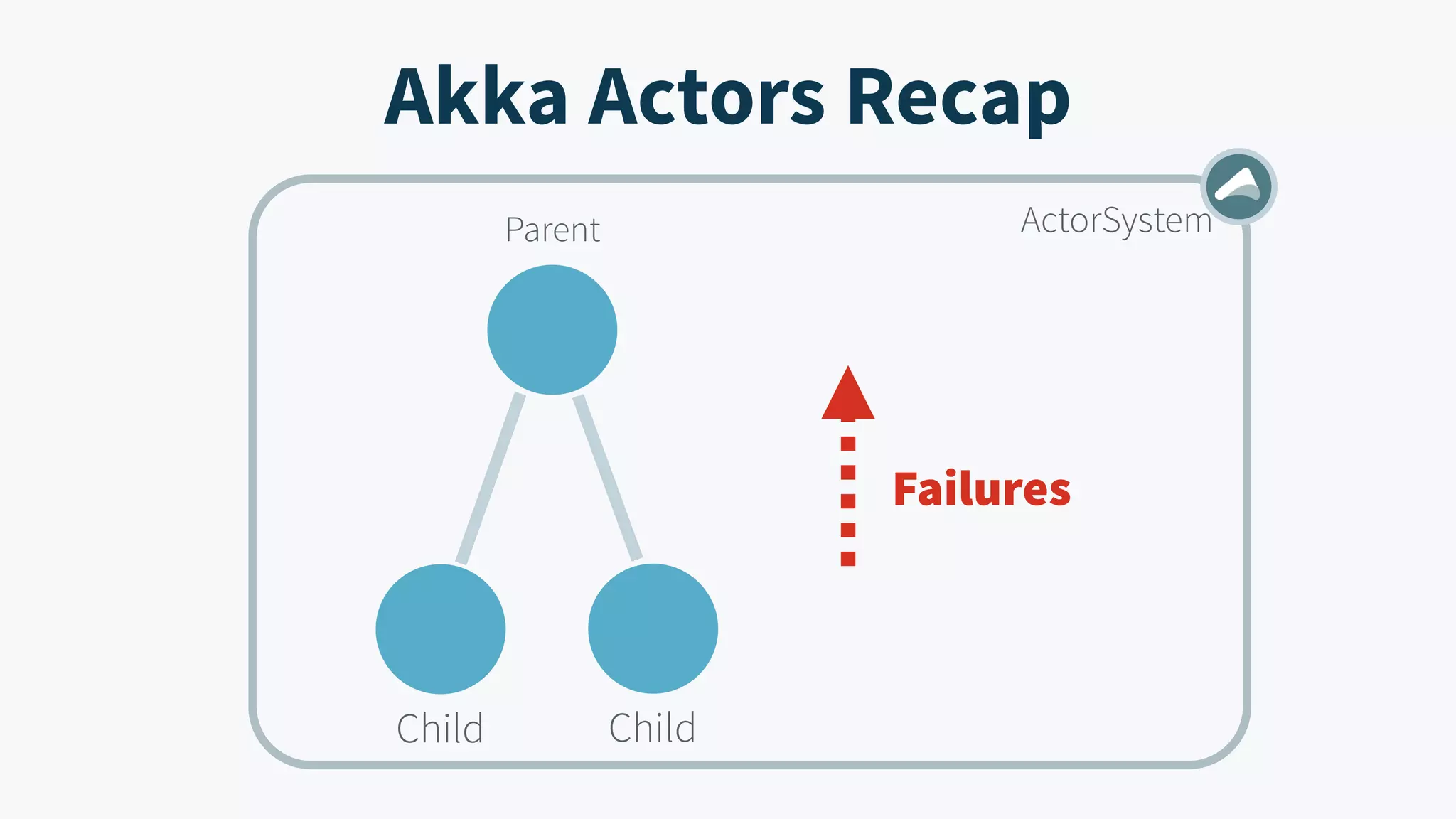

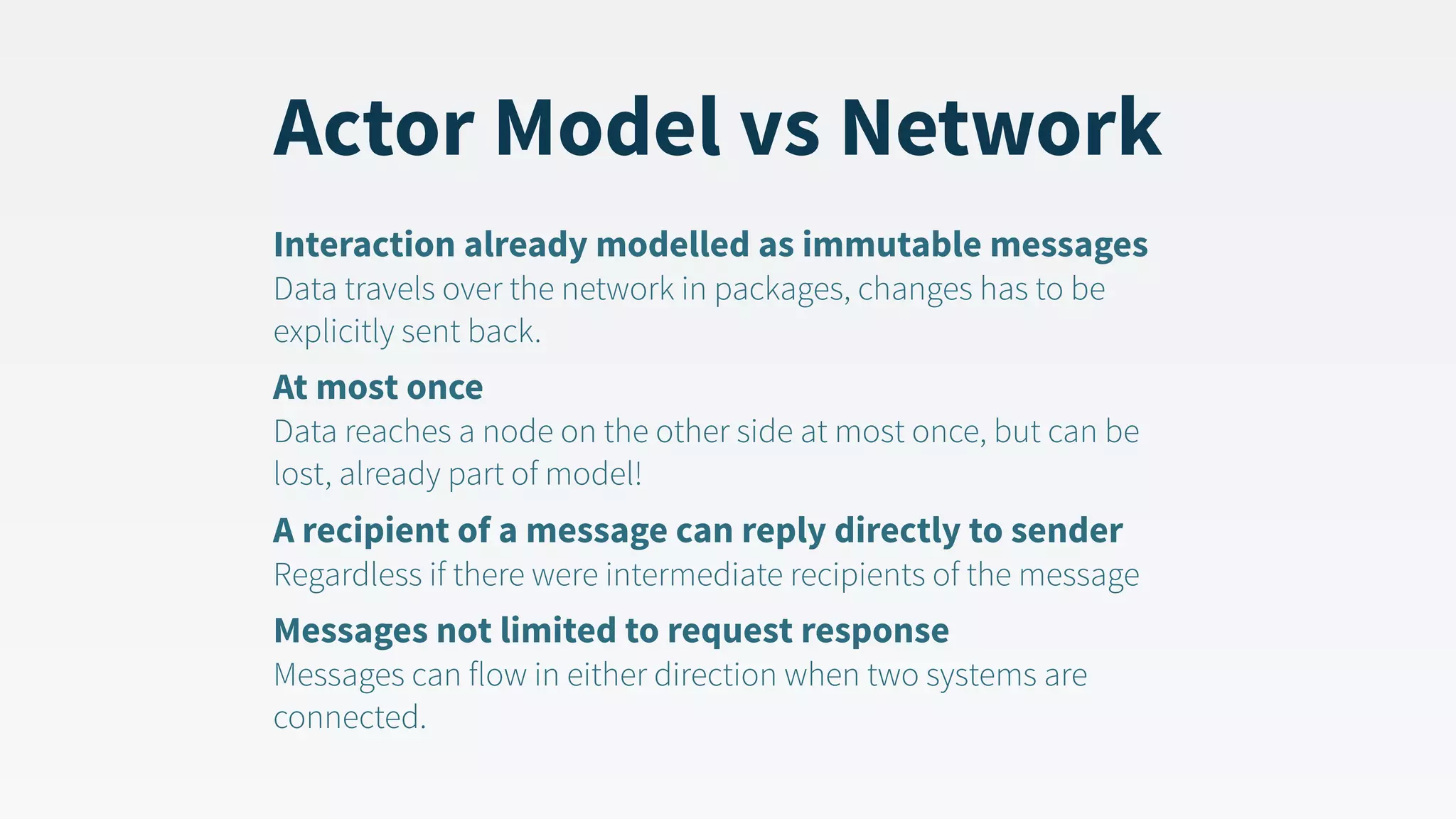

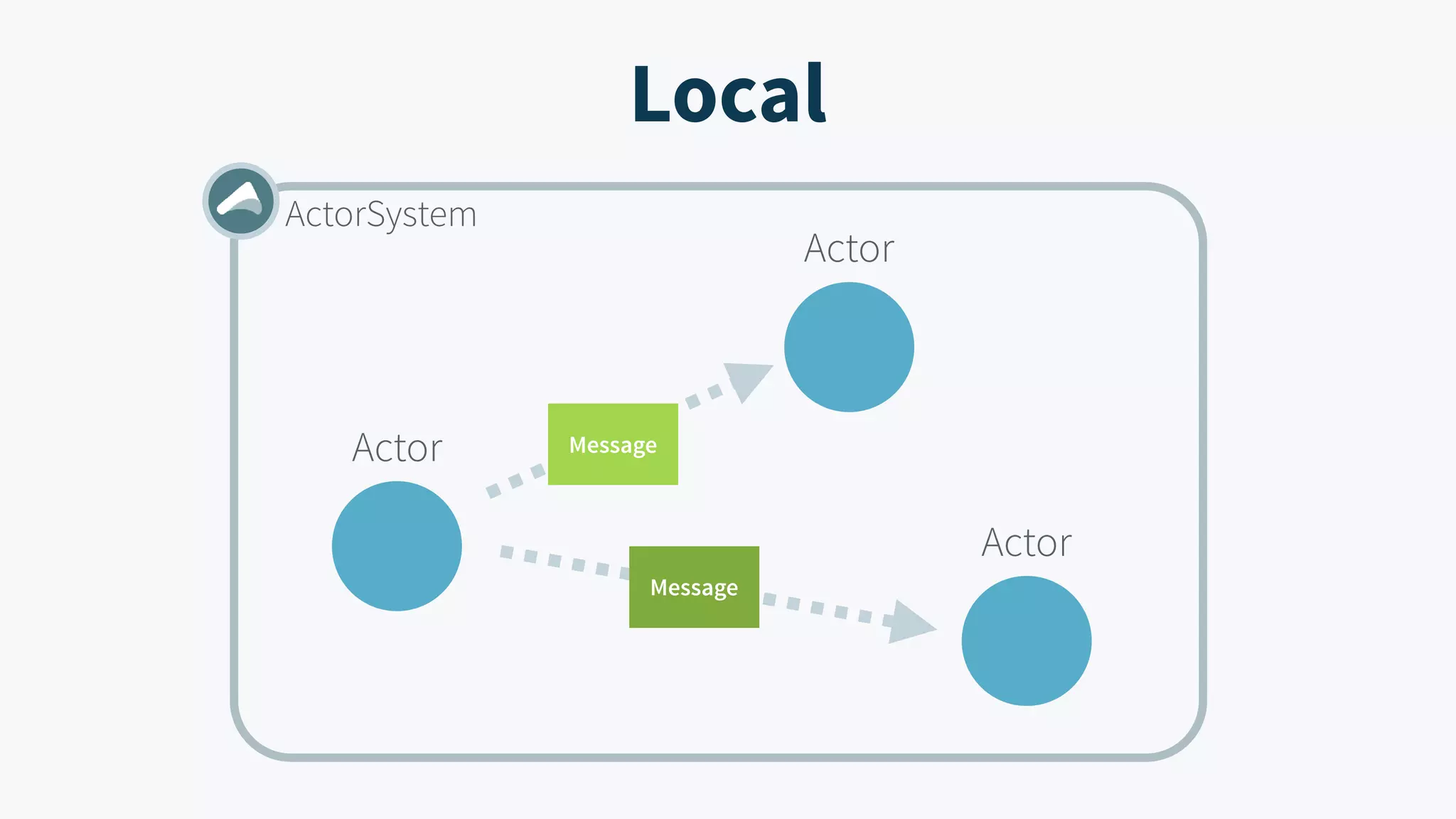

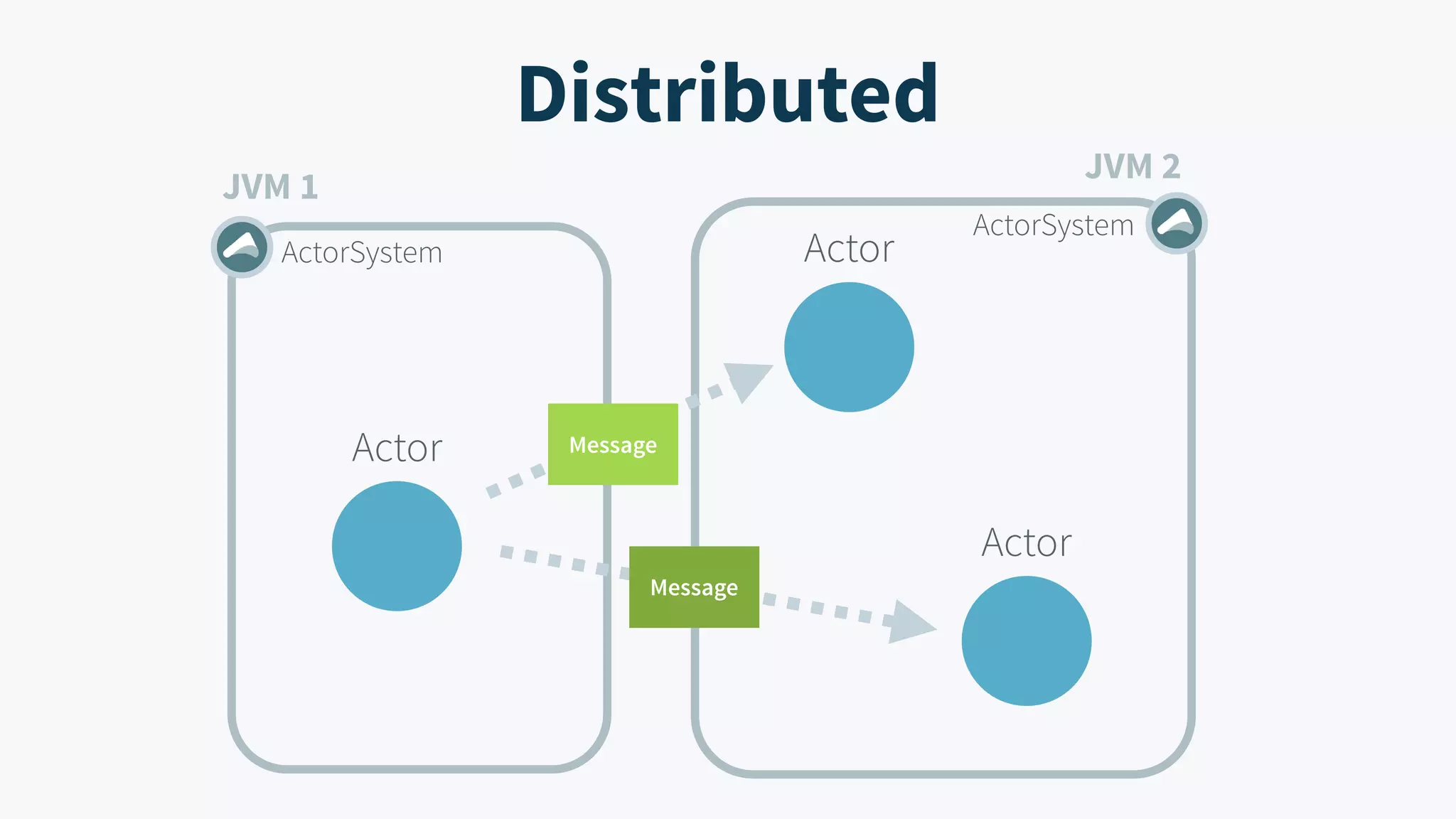

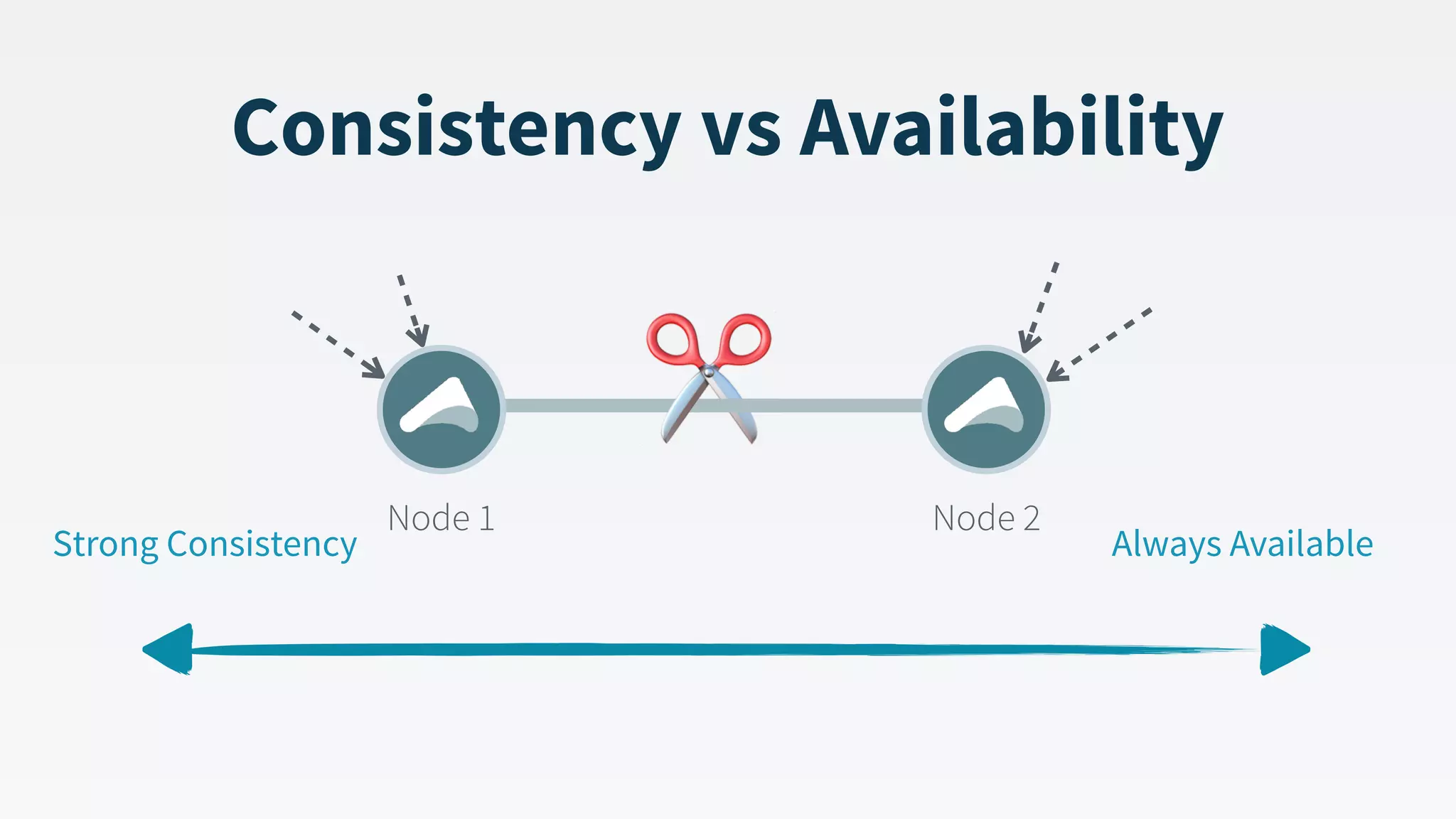

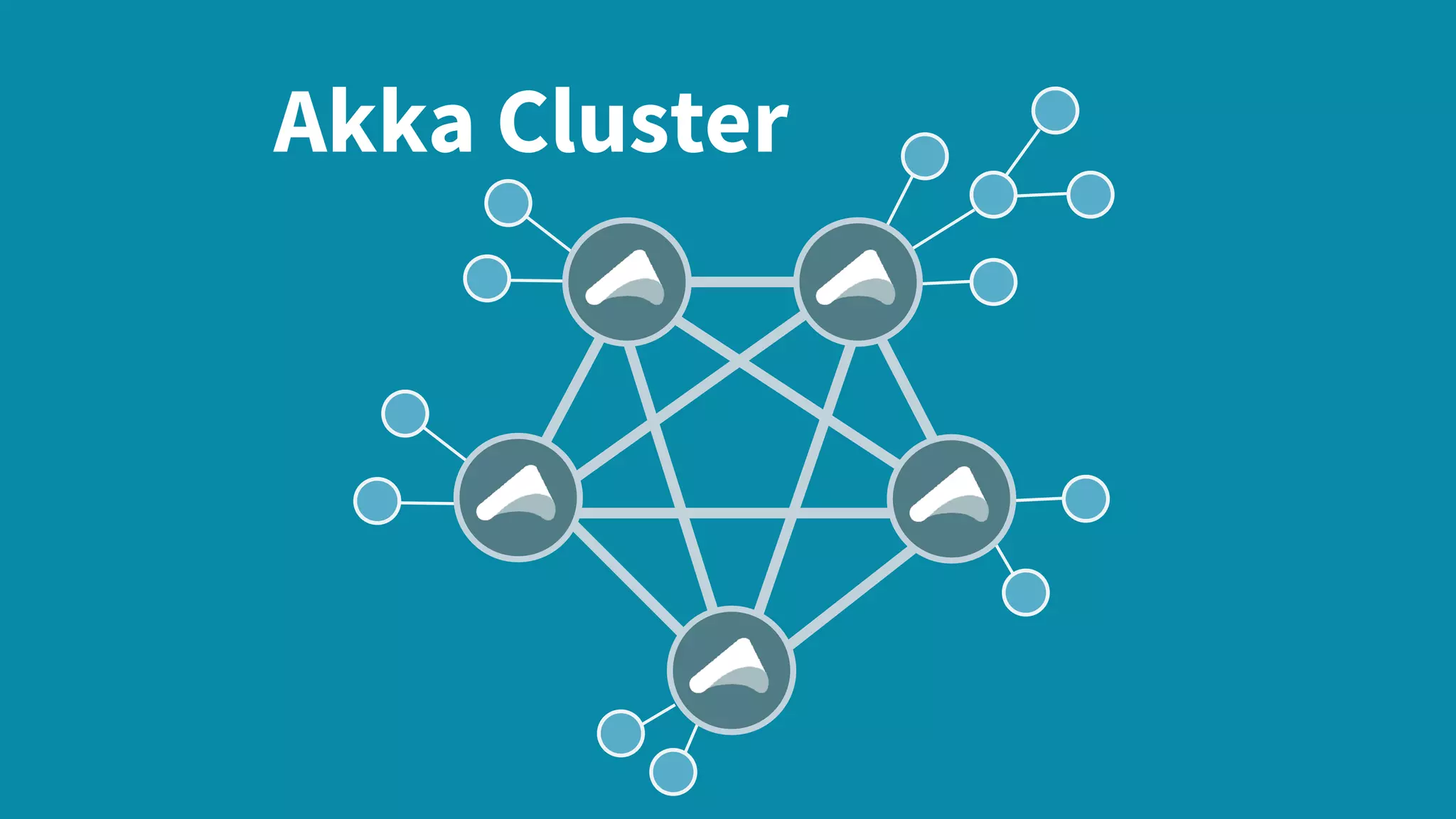

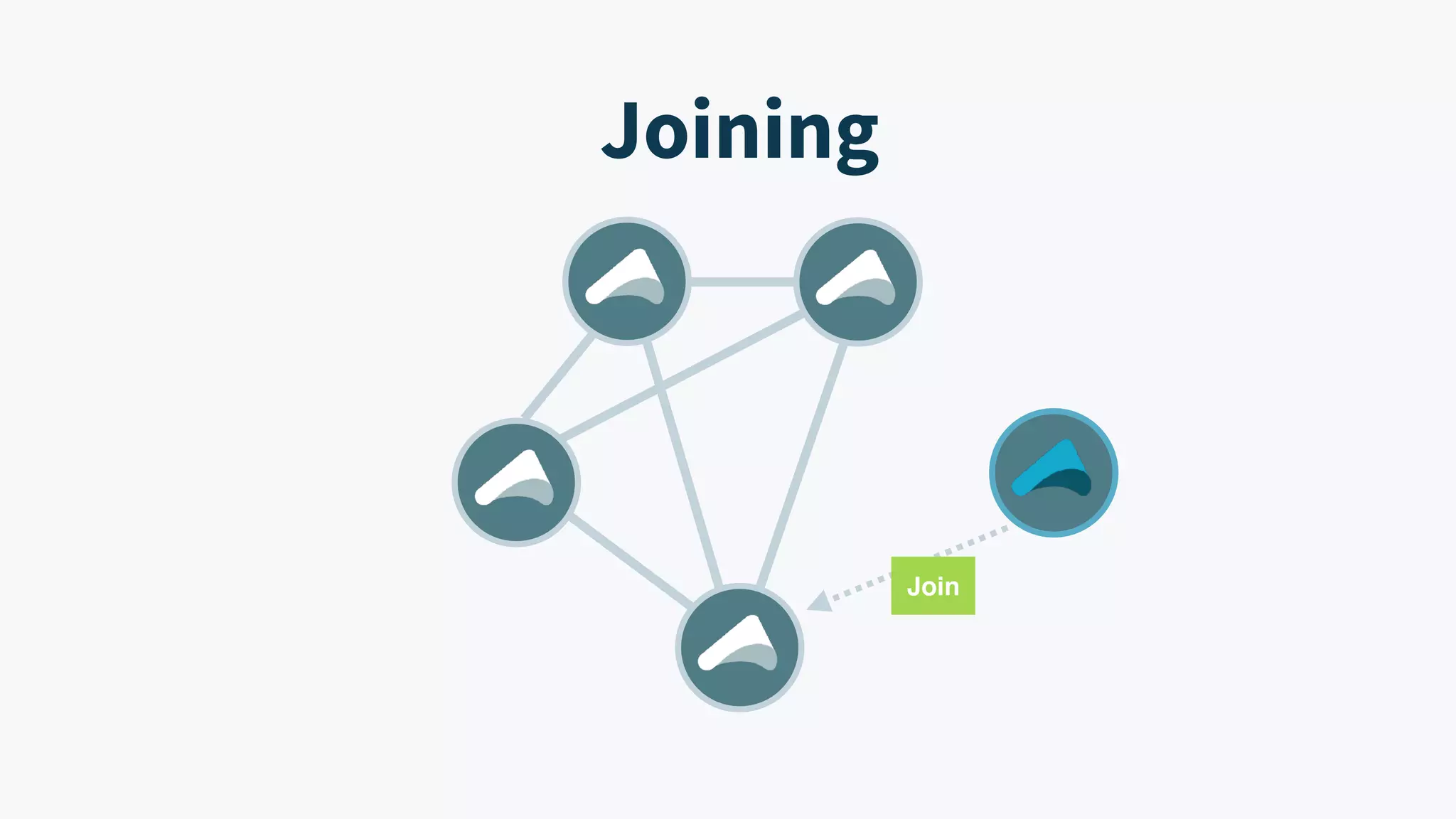

Johan Andrén presented on building reactive distributed systems with Akka. The talk gives an overview of Akka Cluster and Akka Distributed Data, explains how the actor model maps onto distributed systems, and shows how Akka uses gossip to reach eventual consistency. It also covers distributed pub/sub messaging, cluster singletons (which run a single instance of an actor in the cluster and so introduce a single point of failure), and cluster sharding, which distributes actors across the nodes of the cluster.

![Roles [api] [api] [worker, backend] [worker] [worker]](https://image.slidesharecdn.com/buiildingreactivedistributedsystemswithakka-scala-swarm2017-170623133003/75/Building-reactive-distributed-systems-with-Akka-23-2048.jpg)
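The slide above labels each node with one or more roles. As a rough sketch, roles can be assigned per node through the `akka.cluster.roles` setting; the helper `rolesConfig` and the object name `RolesSketch` are hypothetical names introduced here for illustration, and the five role sets simply mirror the labels on the slide. This assumes the Typesafe Config library is on the classpath.

```scala
import com.typesafe.config.{Config, ConfigFactory}

object RolesSketch {
  // Hypothetical helper (not from the talk): builds a config fragment
  // that sets akka.cluster.roles to the given role names.
  def rolesConfig(roles: String*): Config =
    ConfigFactory.parseString(
      roles.map(r => "\"" + r + "\"").mkString("akka.cluster.roles = [", ", ", "]"))

  // One config per node, mirroring the role labels shown on the slide.
  val nodeRoles: Seq[Config] = Seq(
    rolesConfig("api"),
    rolesConfig("api"),
    rolesConfig("worker", "backend"),
    rolesConfig("worker"),
    rolesConfig("worker")
  )
}
```

Each fragment would typically be layered over a node's base configuration with `withFallback` before the `ActorSystem` is created.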

![val commonConfig = ConfigFactory.parseString( """ akka { actor.provider = cluster remote.artery.enabled = true remote.artery.canonical.hostname = 127.0.0.1 cluster.seed-nodes = [ "akka://cluster@127.0.0.1:25520", "akka://cluster@127.0.0.1:25521" ] cluster.jmx.multi-mbeans-in-same-jvm = on } """) def portConfig(port: Int) = ConfigFactory.parseString(s"akka.remote.artery.canonical.port = $port") val node1 = ActorSystem("cluster", portConfig(25520).withFallback(commonConfig)) val node2 = ActorSystem("cluster", portConfig(25521).withFallback(commonConfig)) val node3 = ActorSystem("cluster", portConfig(25522).withFallback(commonConfig)) Three node cluster complete sample sources on github](https://image.slidesharecdn.com/buiildingreactivedistributedsystemswithakka-scala-swarm2017-170623133003/75/Building-reactive-distributed-systems-with-Akka-25-2048.jpg)
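The code on the slide above is only available as flattened alt text, so the following is a reconstruction of it as a readable Scala source, not the author's published sample. It assumes an Akka 2.5-era setup with `akka-cluster` and Artery remoting on the classpath. The wrapping object `ThreeNodeCluster`, the helper `nodeConfig`, and the `main` method are additions made here so the block compiles on its own; the hostname is quoted as a precaution.

```scala
import akka.actor.ActorSystem
import com.typesafe.config.{Config, ConfigFactory}

object ThreeNodeCluster {
  // Settings shared by every node: cluster actor provider, Artery remoting,
  // and two seed nodes that new nodes contact in order to join.
  val commonConfig: Config = ConfigFactory.parseString(
    """
    akka {
      actor.provider = cluster
      remote.artery.enabled = true
      remote.artery.canonical.hostname = "127.0.0.1"
      cluster.seed-nodes = [
        "akka://cluster@127.0.0.1:25520",
        "akka://cluster@127.0.0.1:25521"
      ]
      # Needed because several cluster nodes run inside one JVM here.
      cluster.jmx.multi-mbeans-in-same-jvm = on
    }
    """)

  // Per-node override: only the Artery port differs between nodes.
  def portConfig(port: Int): Config =
    ConfigFactory.parseString(s"akka.remote.artery.canonical.port = $port")

  // Added helper (not on the slide): port override layered over the shared settings.
  def nodeConfig(port: Int): Config =
    portConfig(port).withFallback(commonConfig)

  def main(args: Array[String]): Unit = {
    // All systems share the name "cluster", matching the seed-node addresses.
    val node1 = ActorSystem("cluster", nodeConfig(25520))
    val node2 = ActorSystem("cluster", nodeConfig(25521))
    val node3 = ActorSystem("cluster", nodeConfig(25522))
  }
}
```

The first two nodes are the configured seed nodes; the third, on port 25522, joins the cluster by contacting them.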