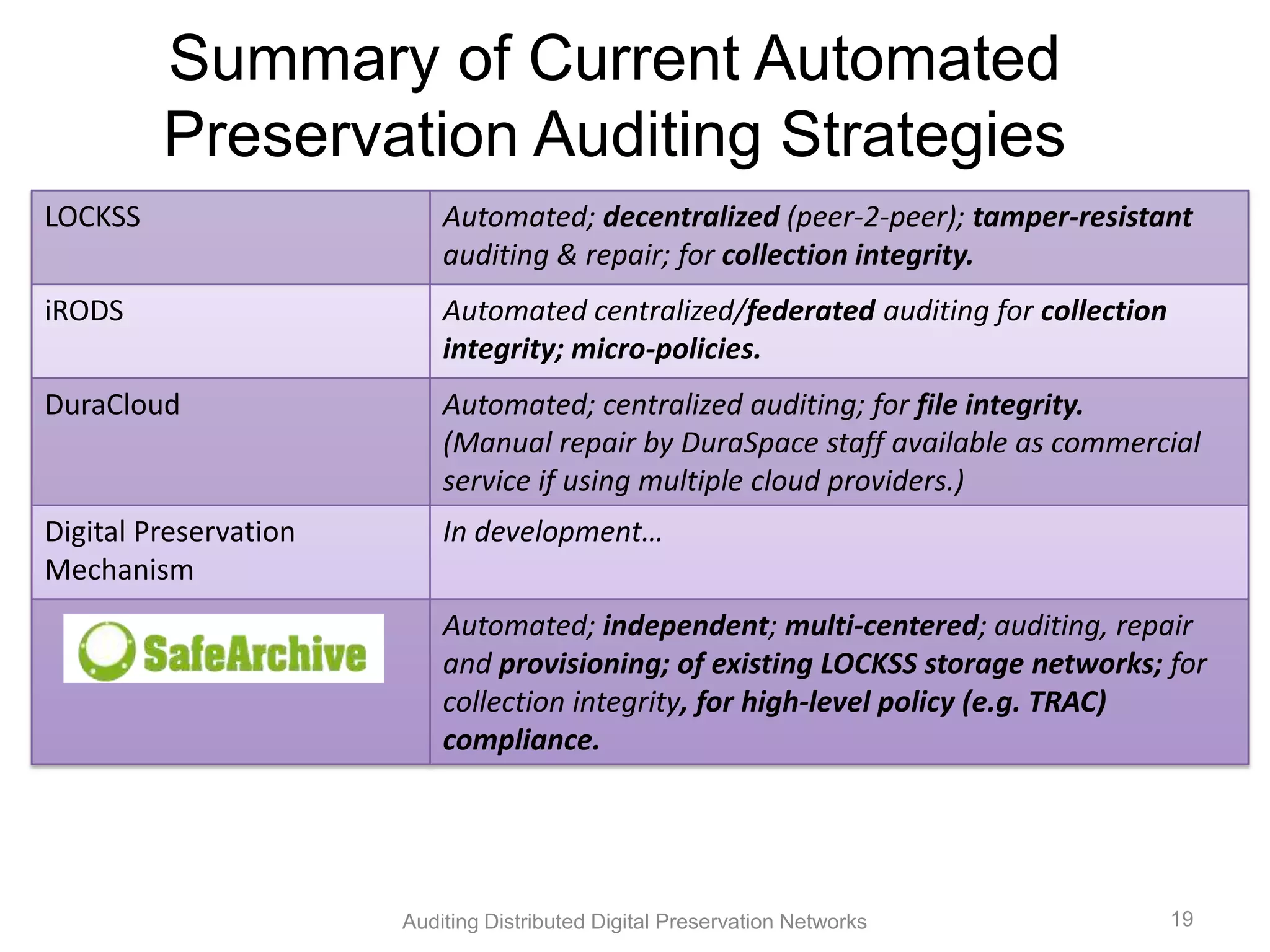

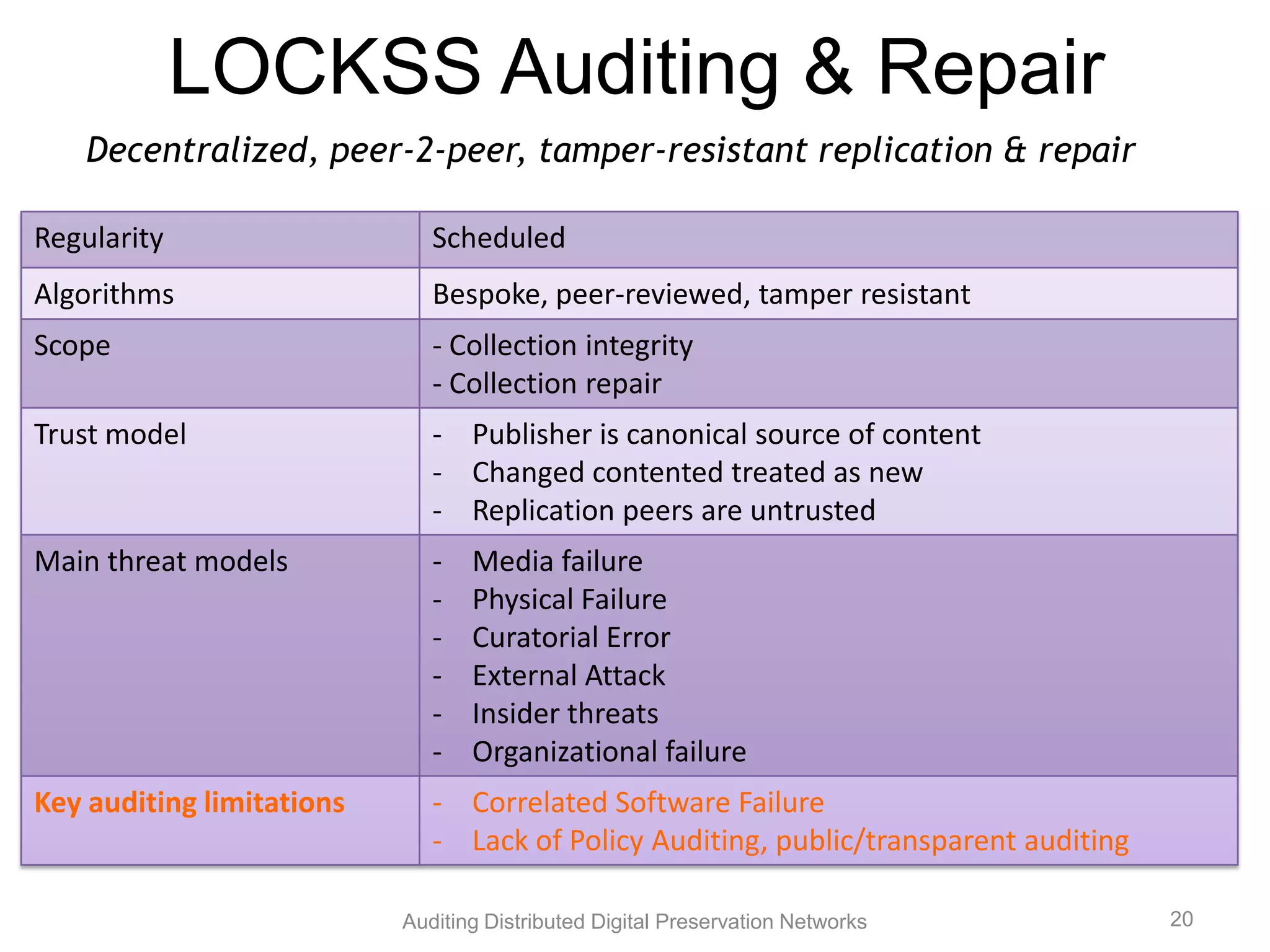

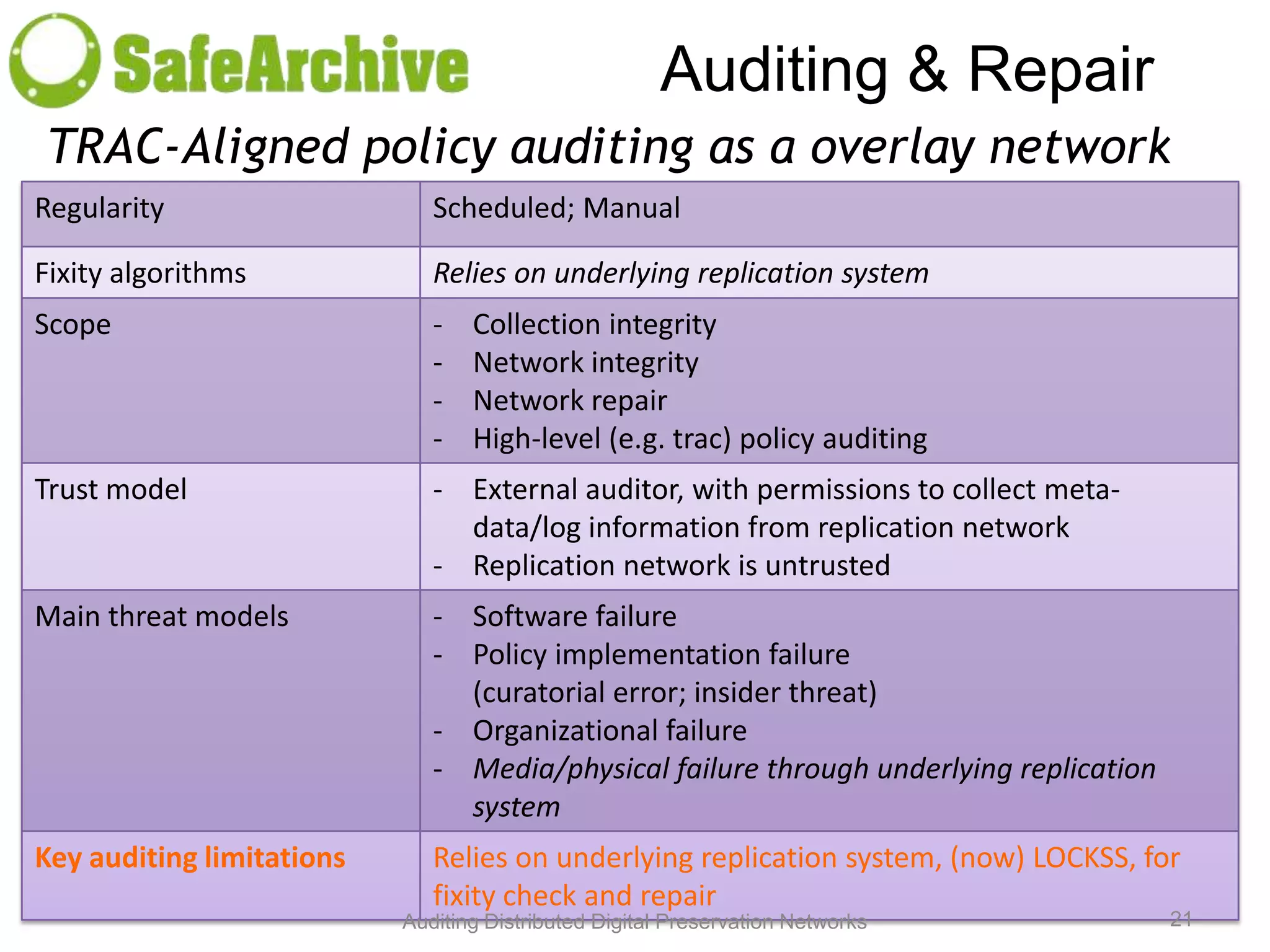

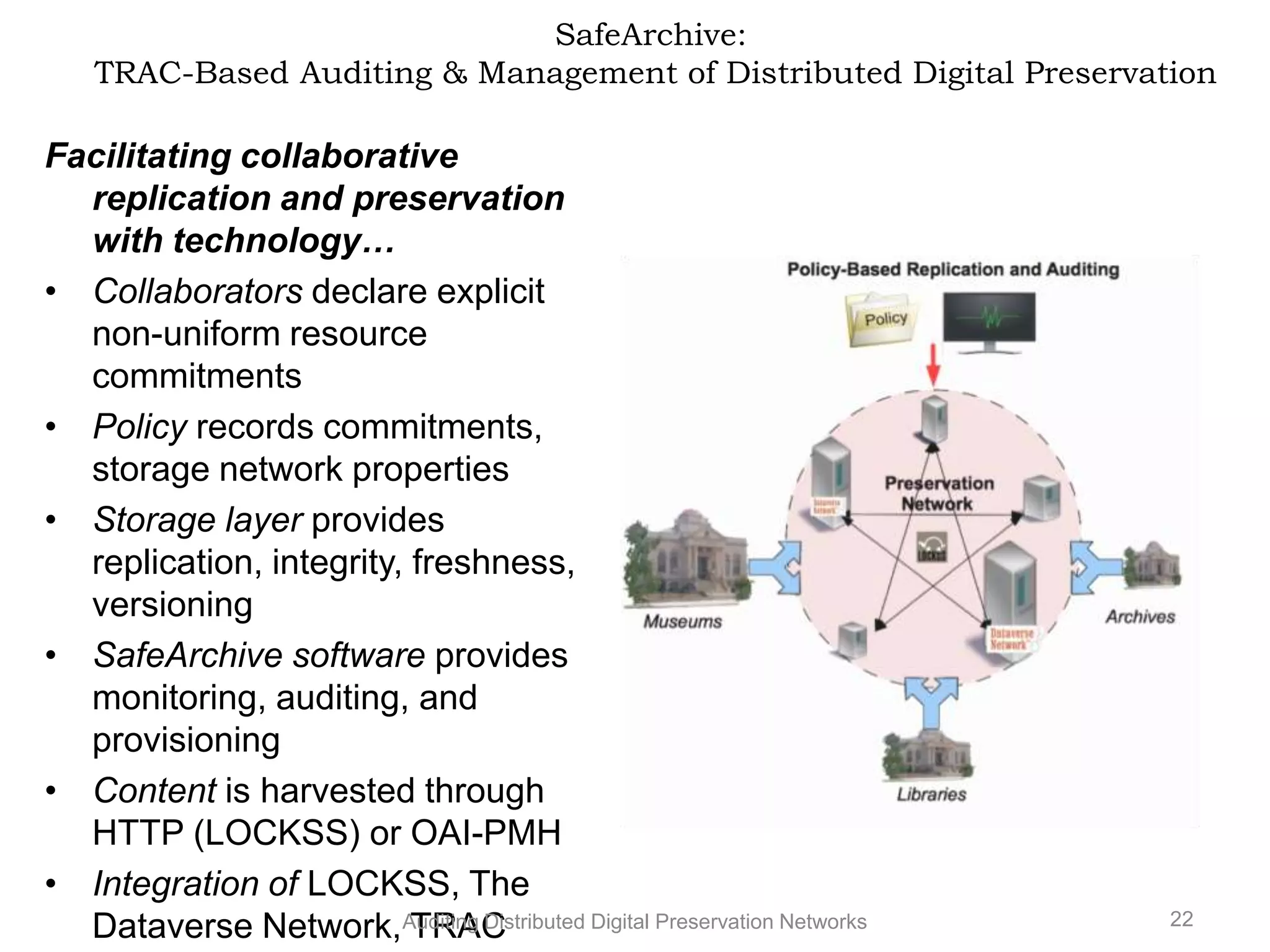

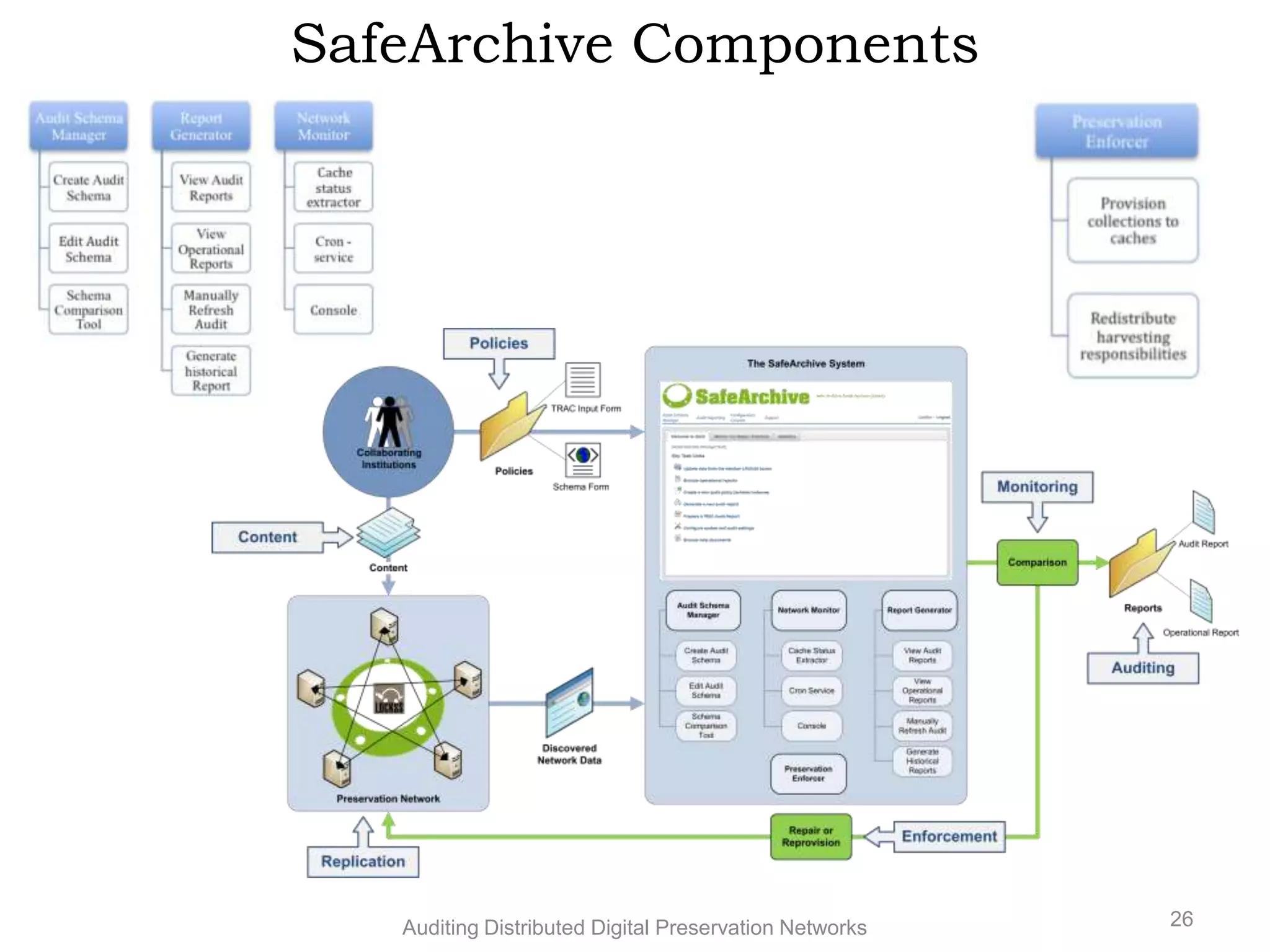

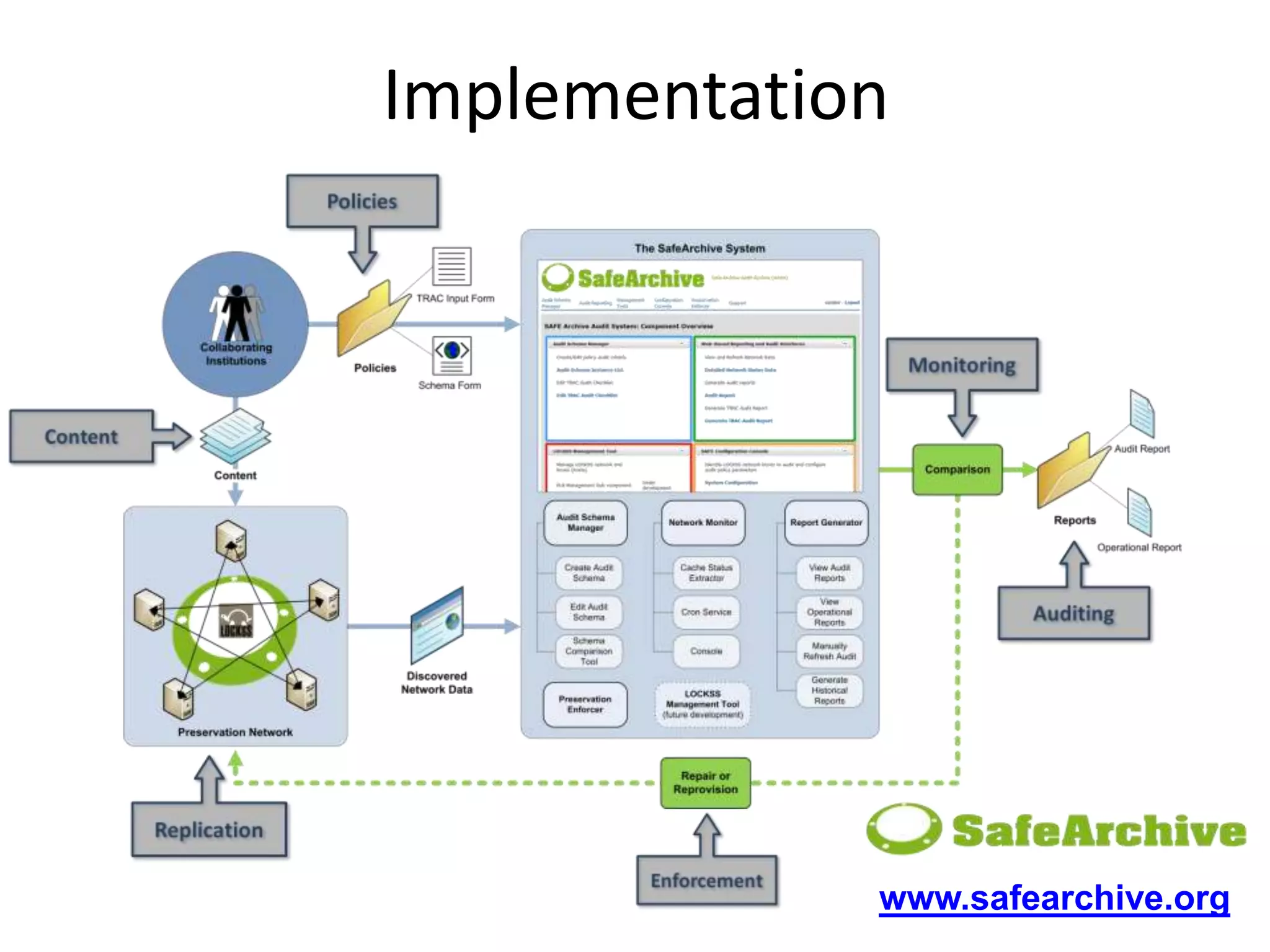

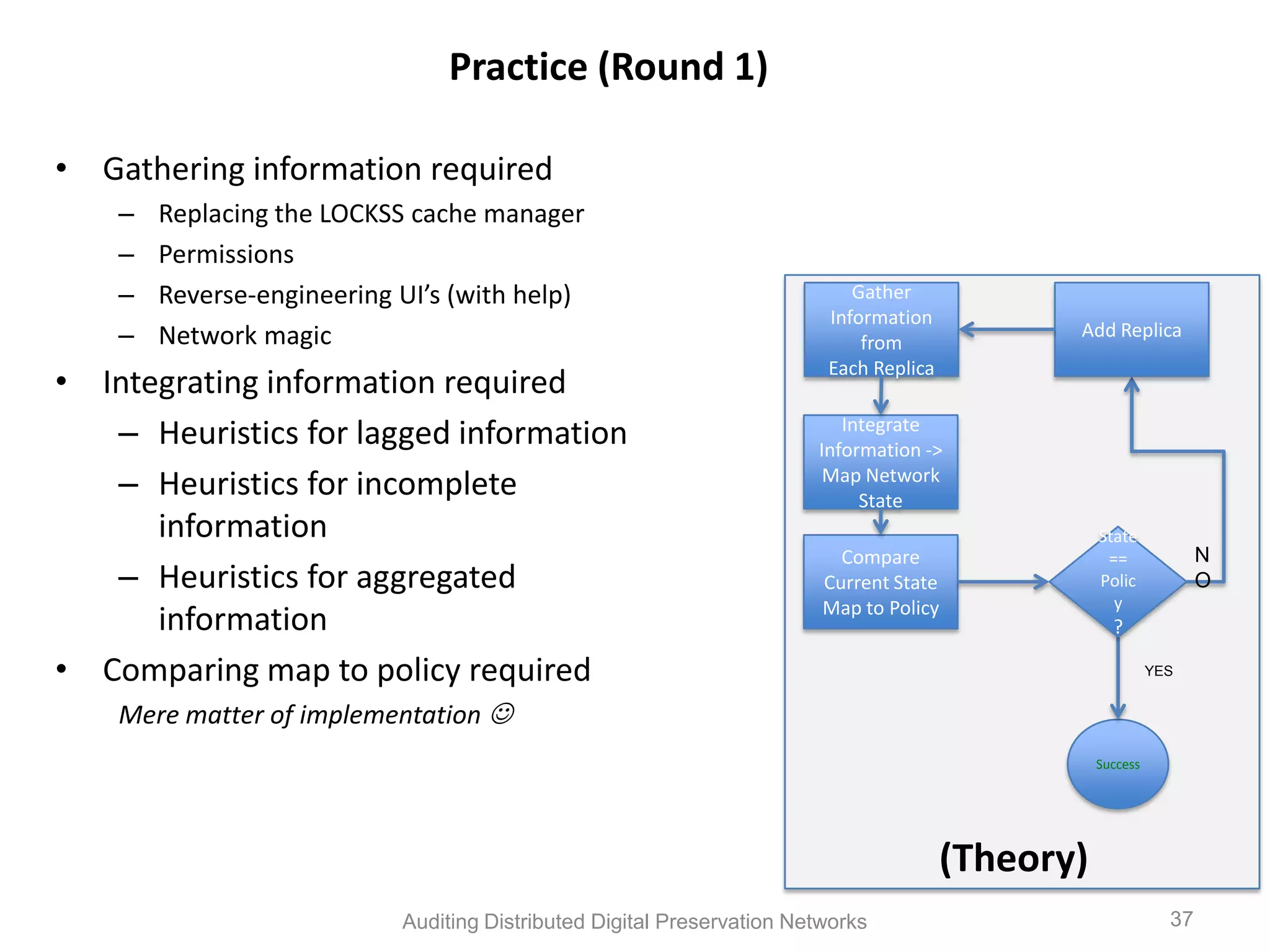

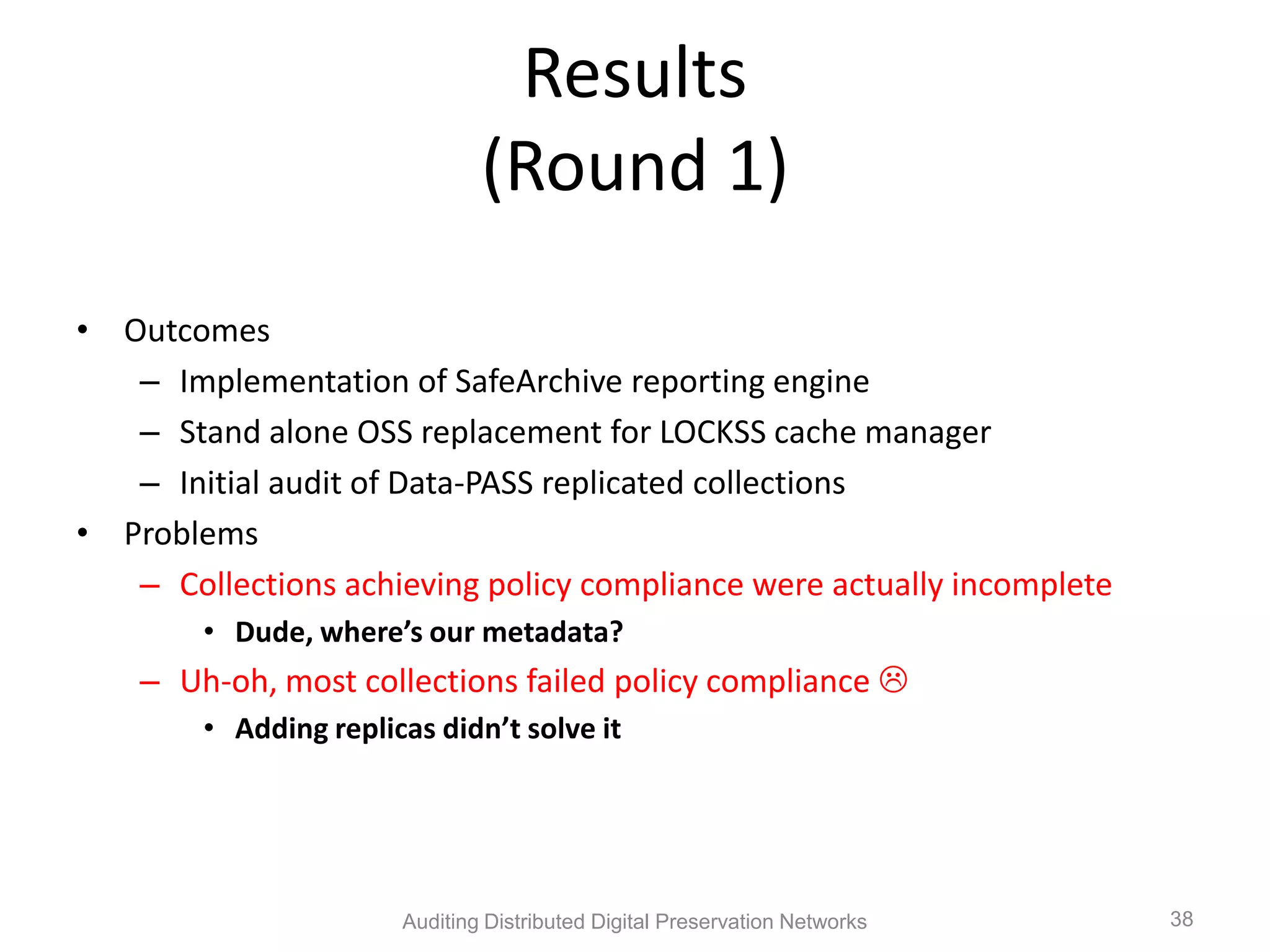

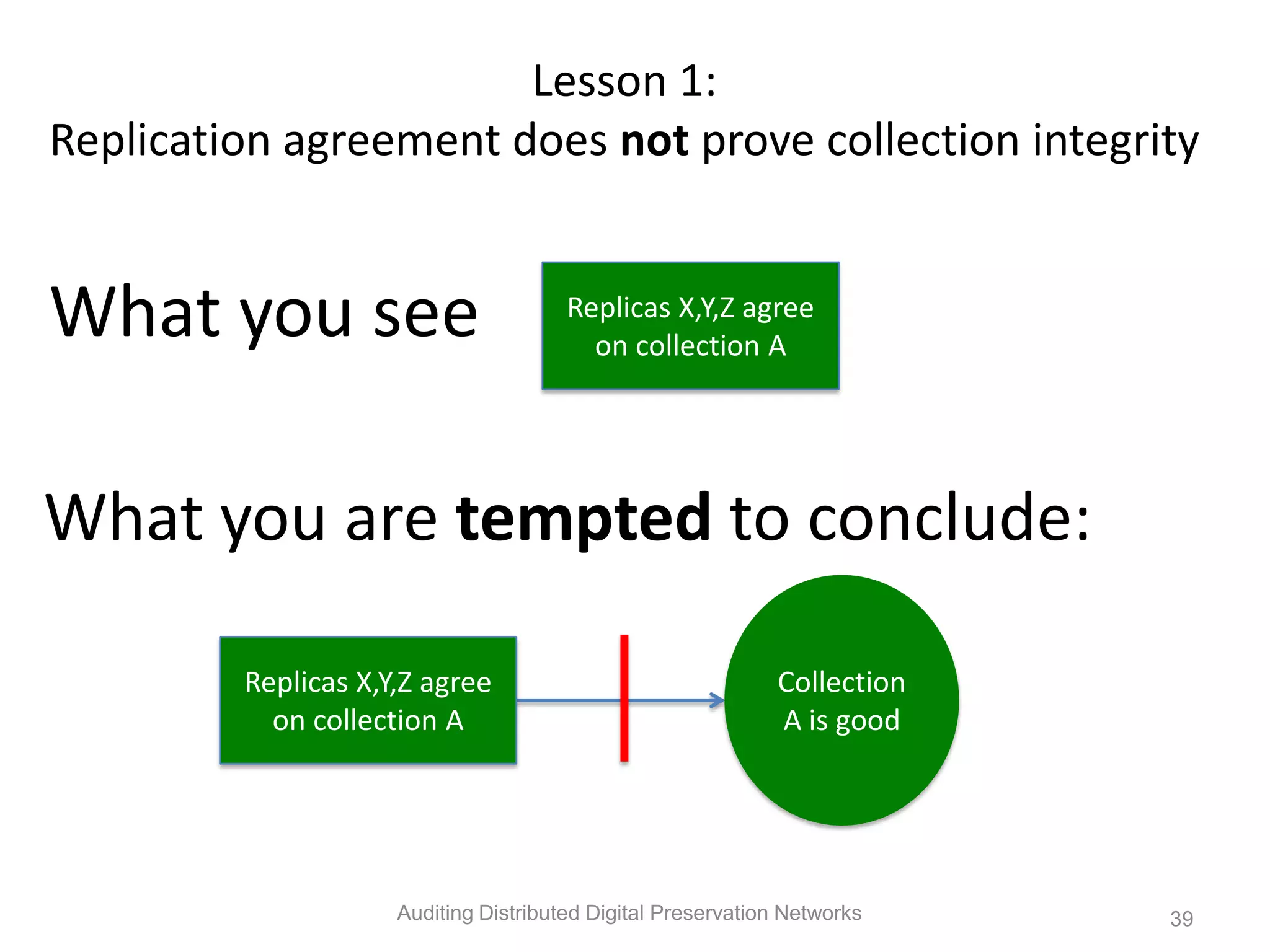

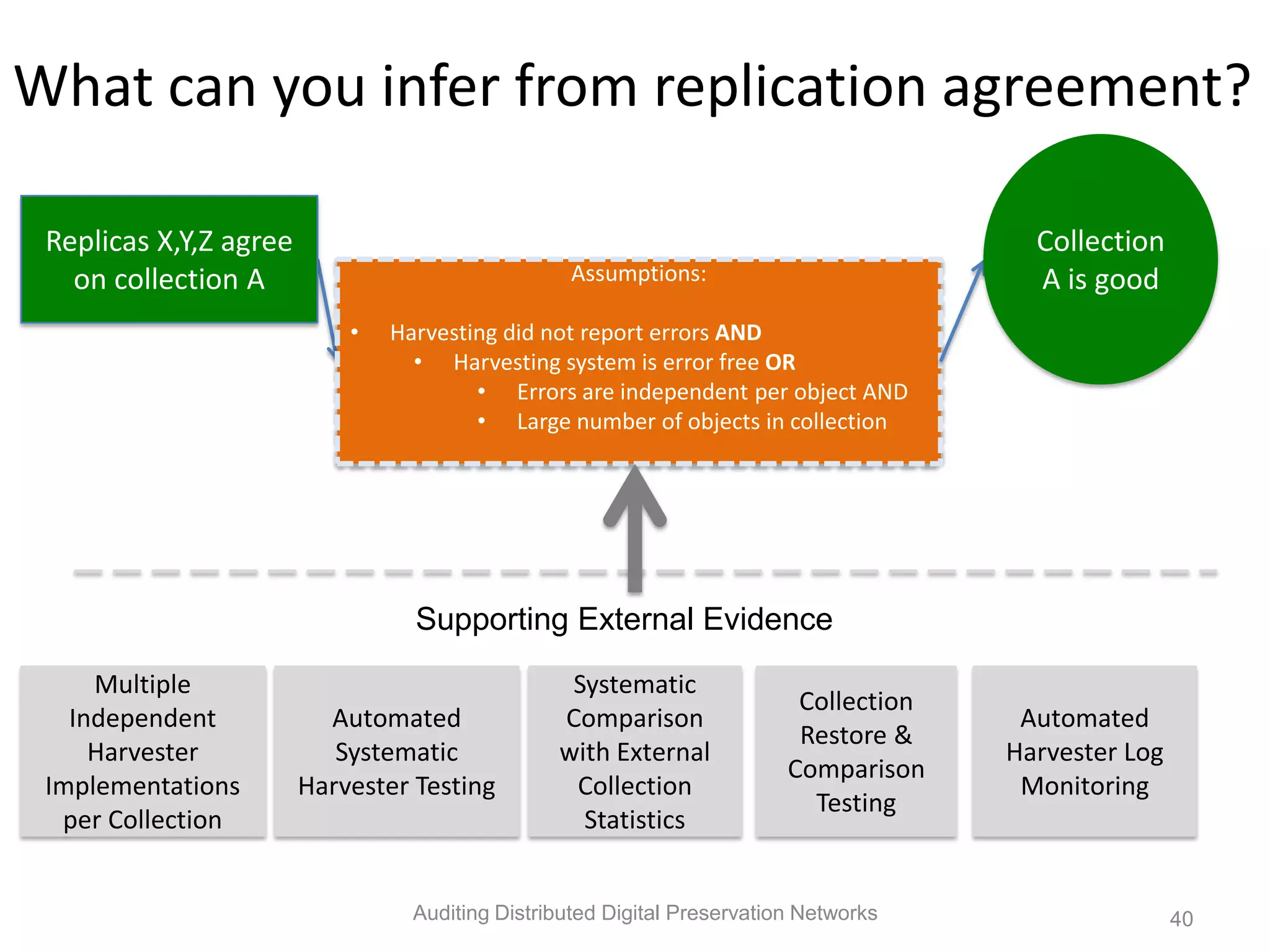

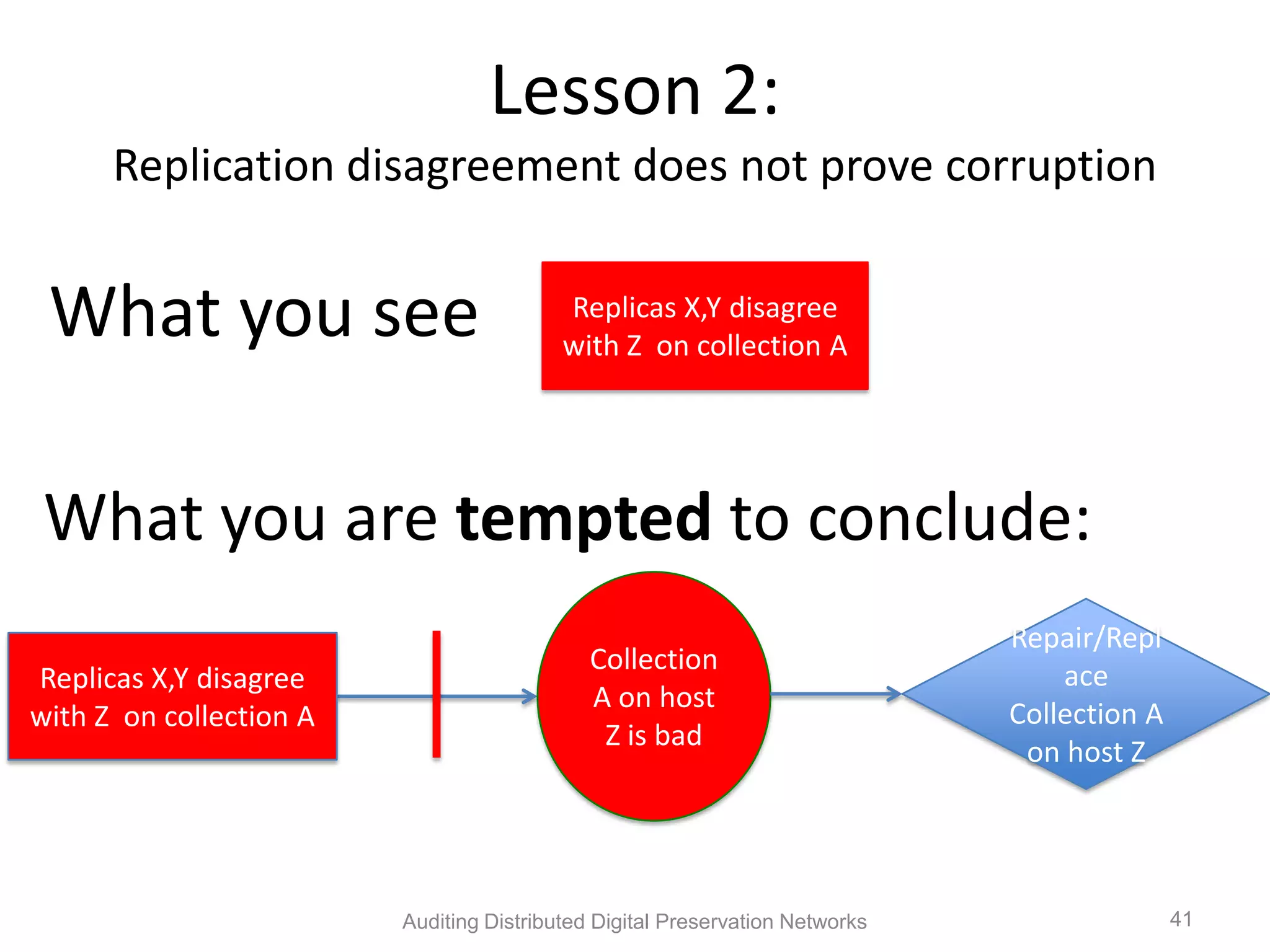

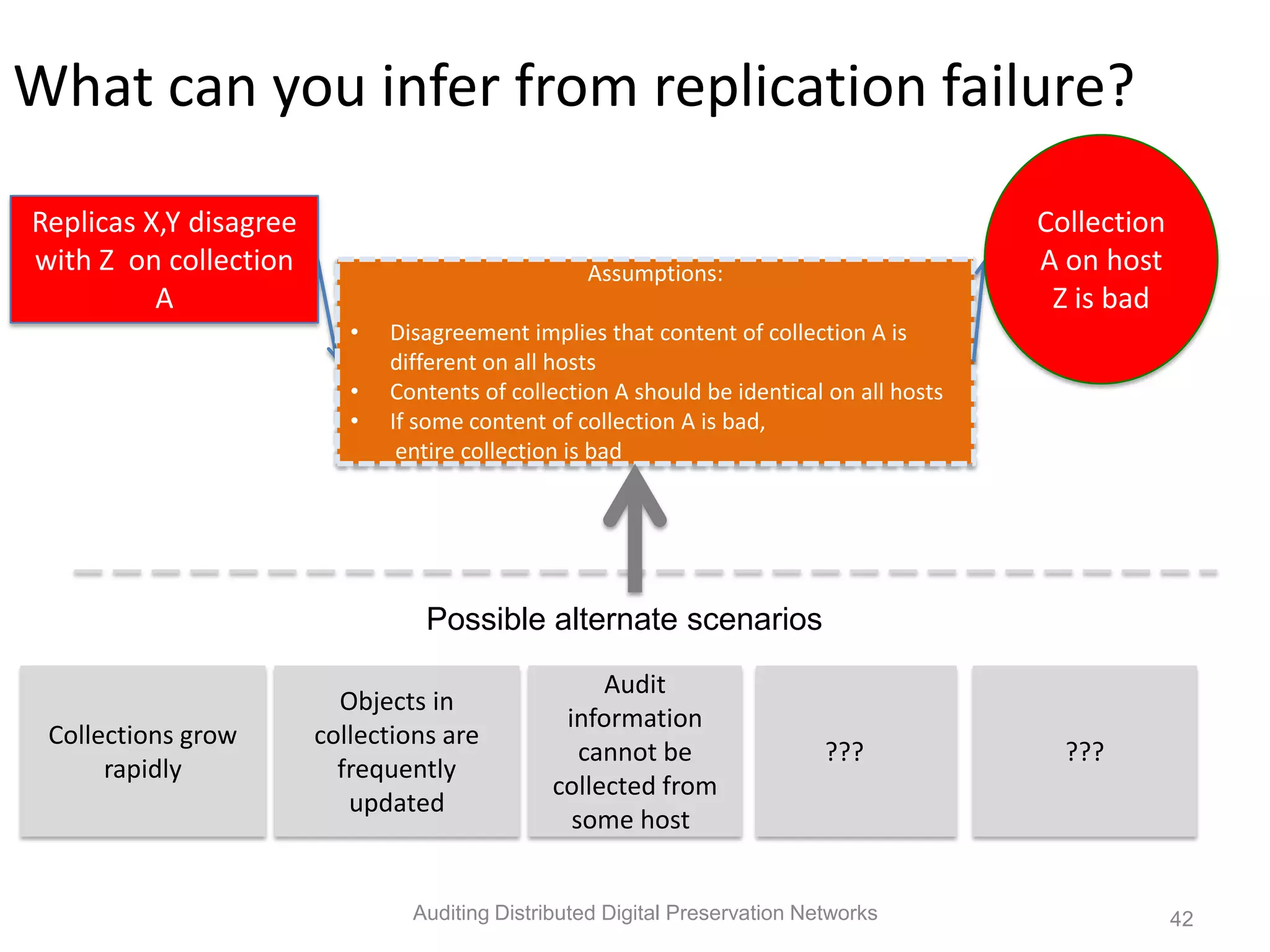

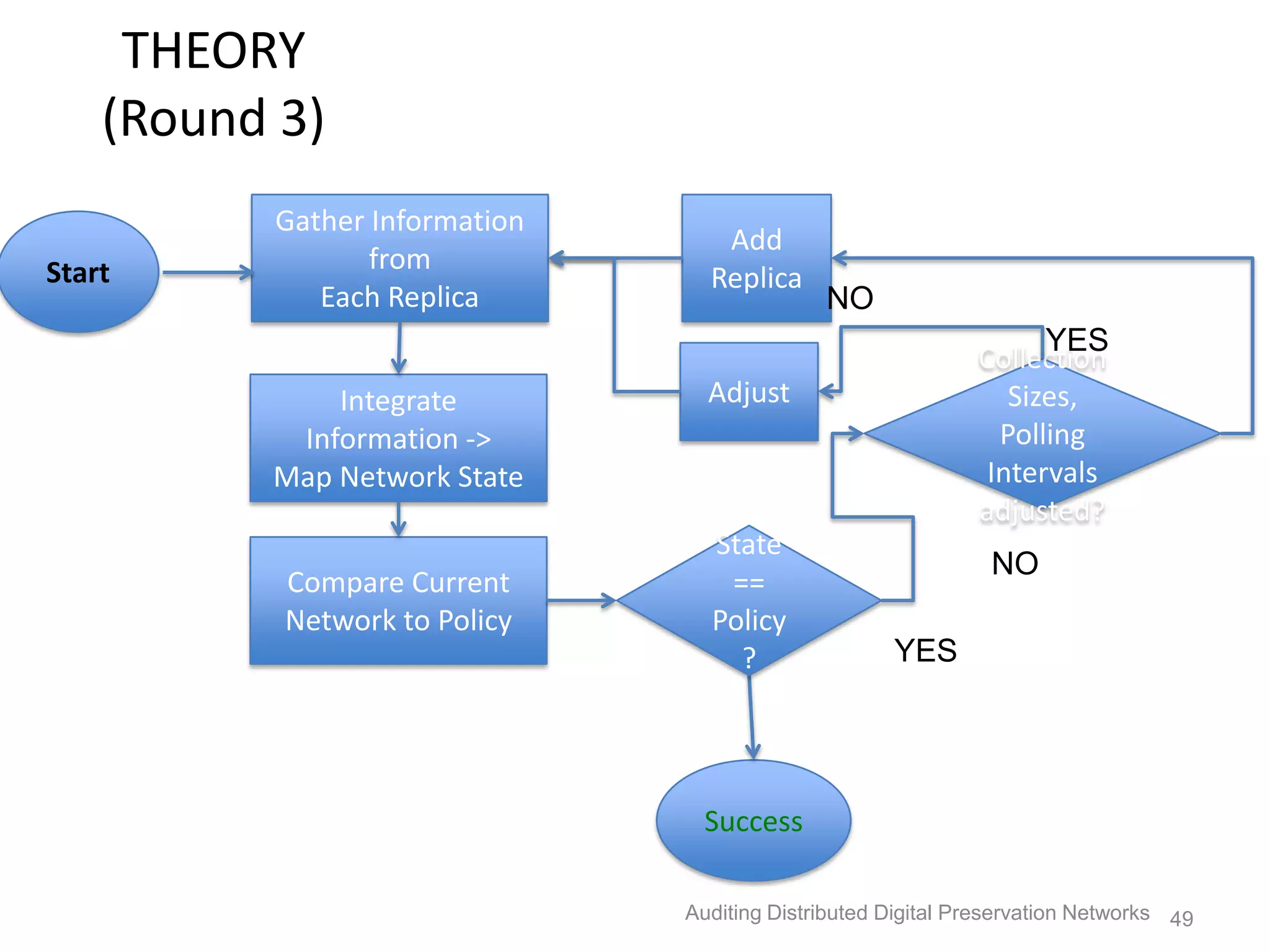

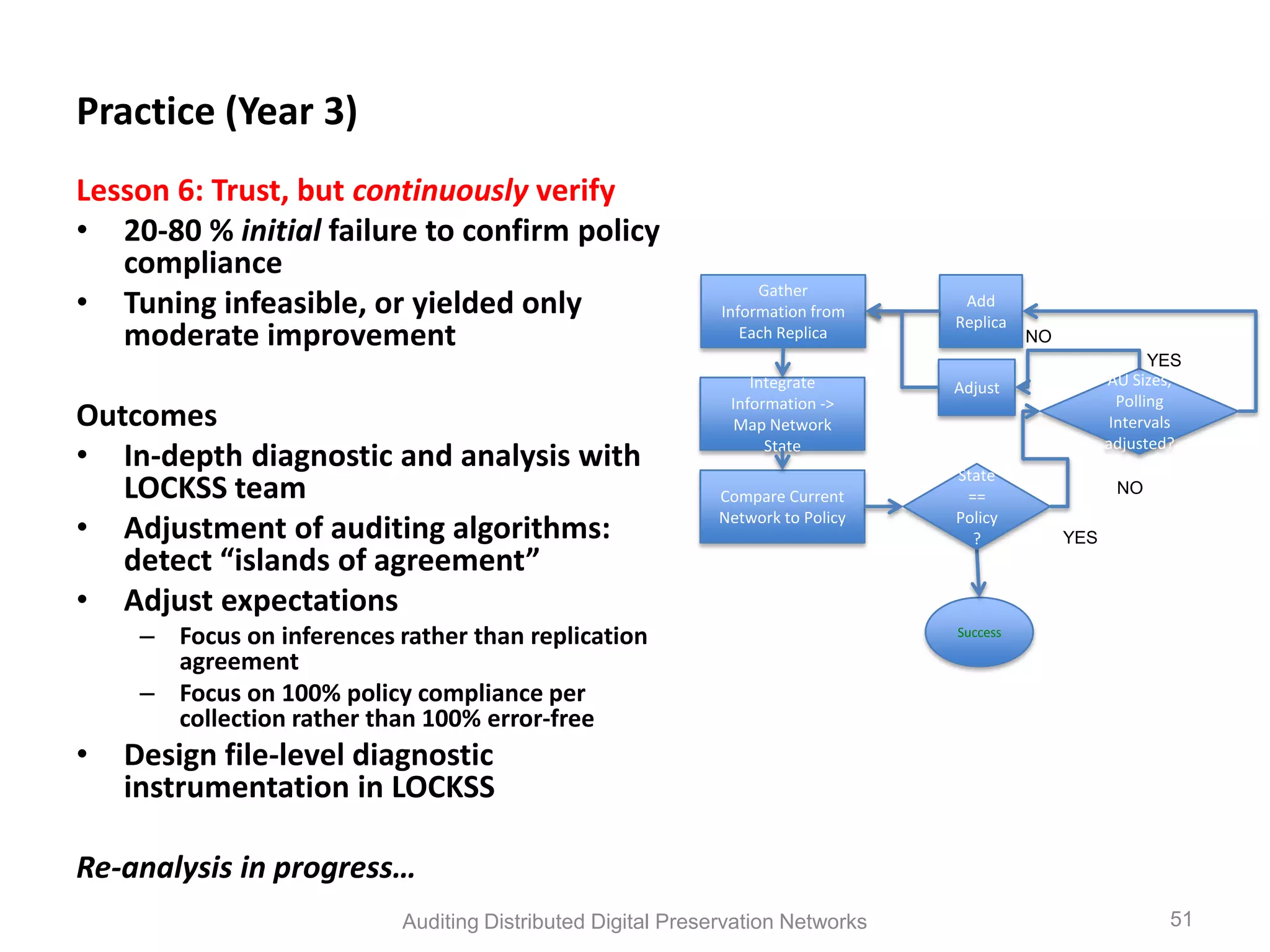

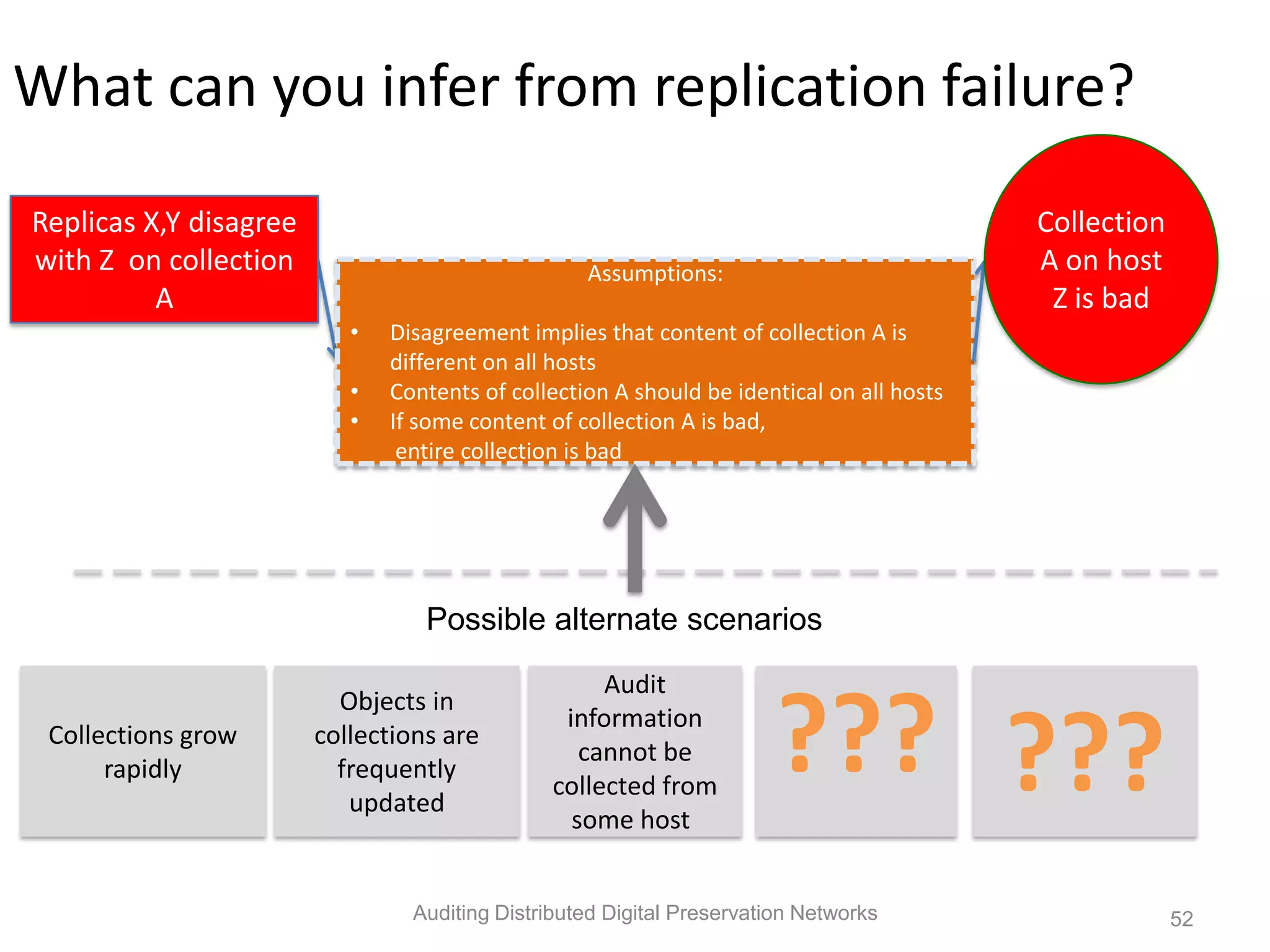

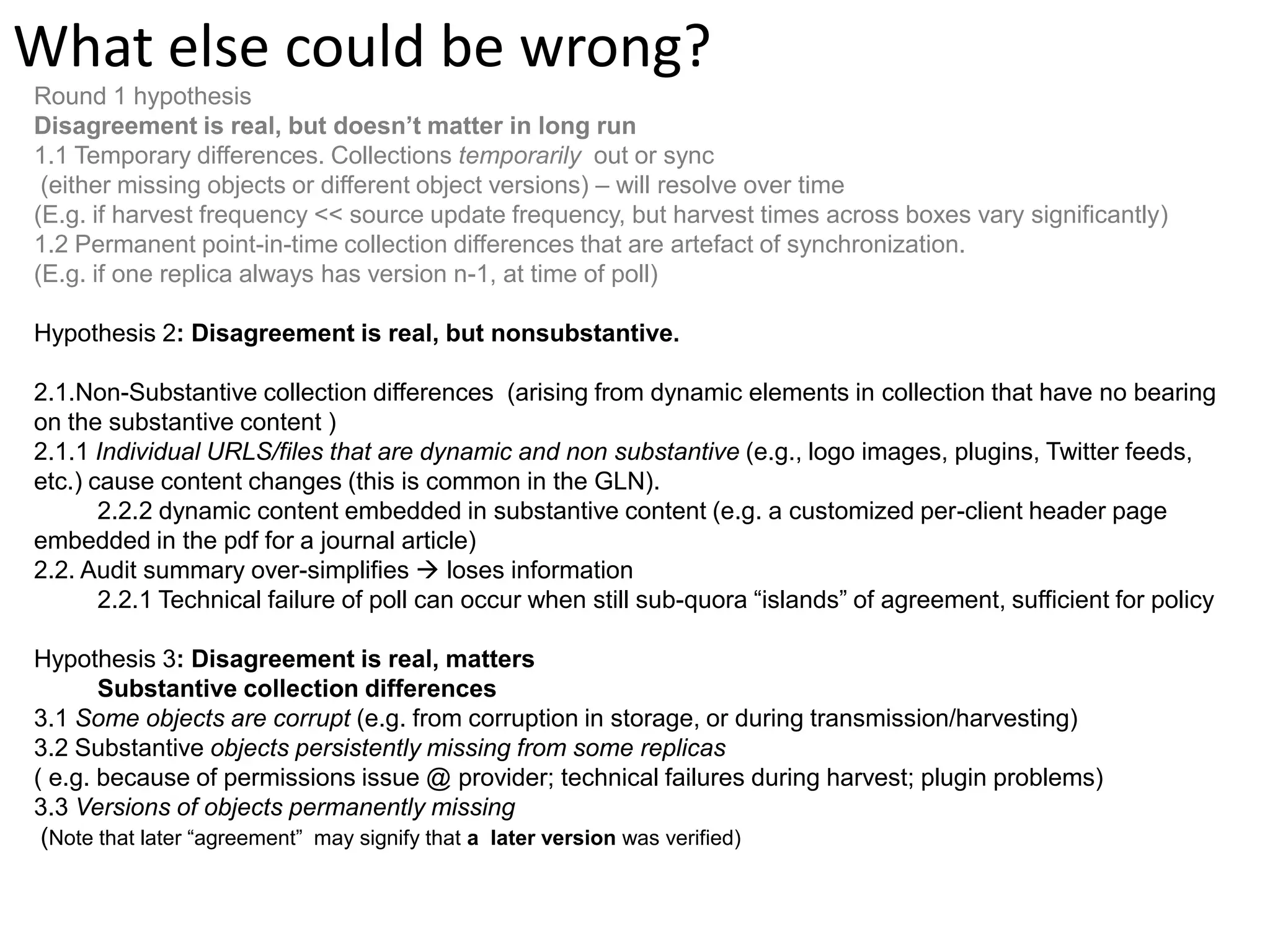

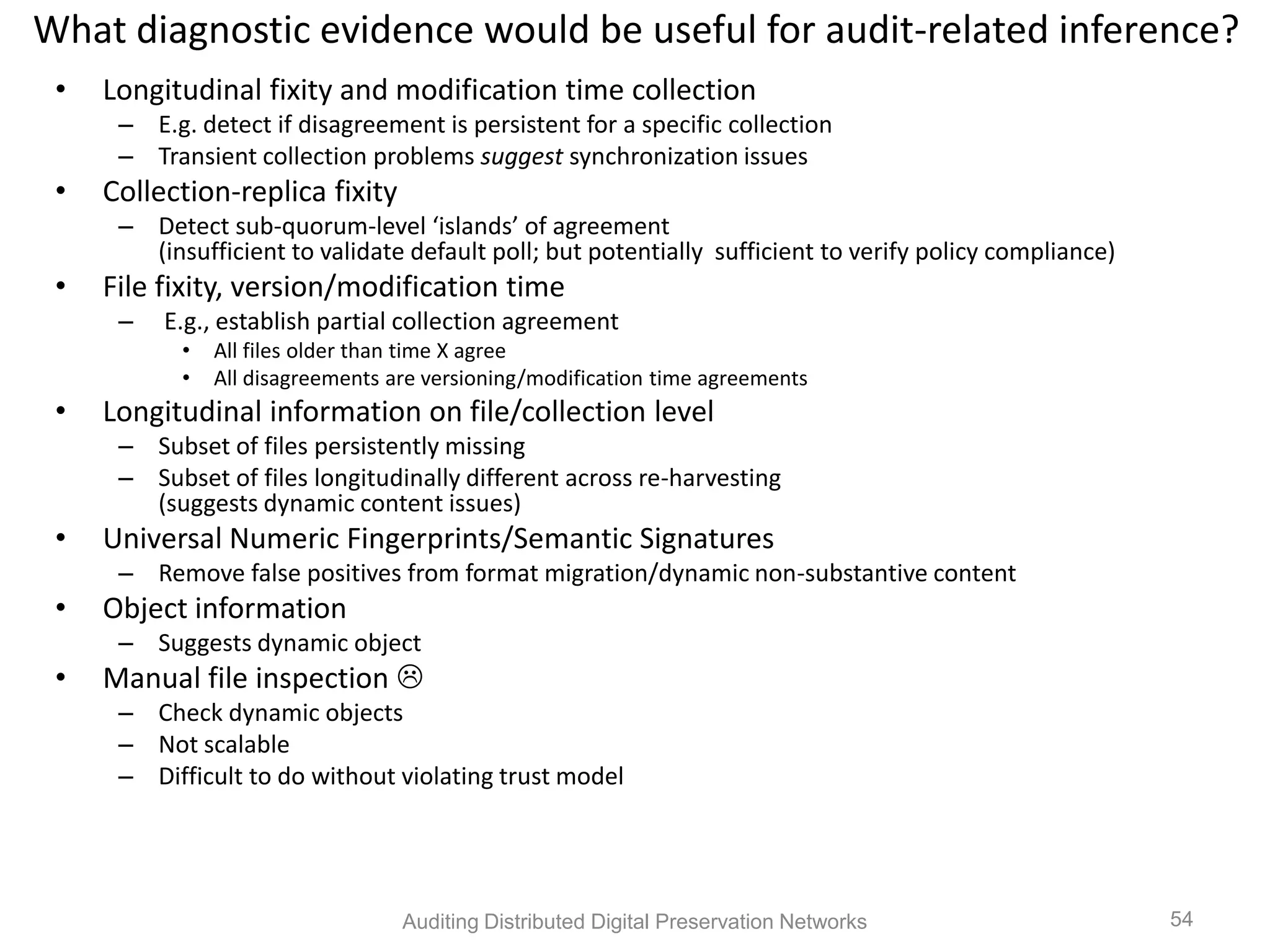

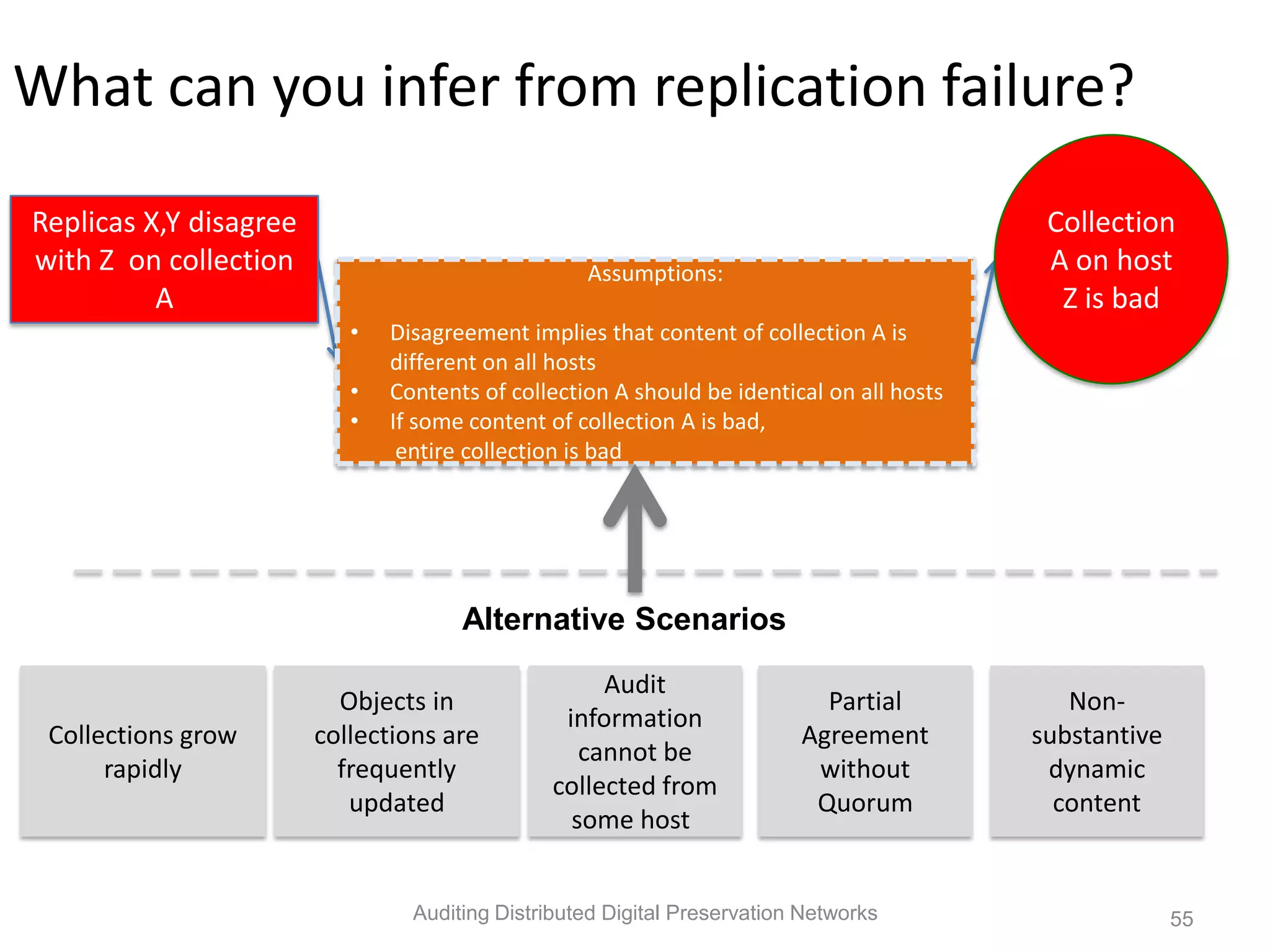

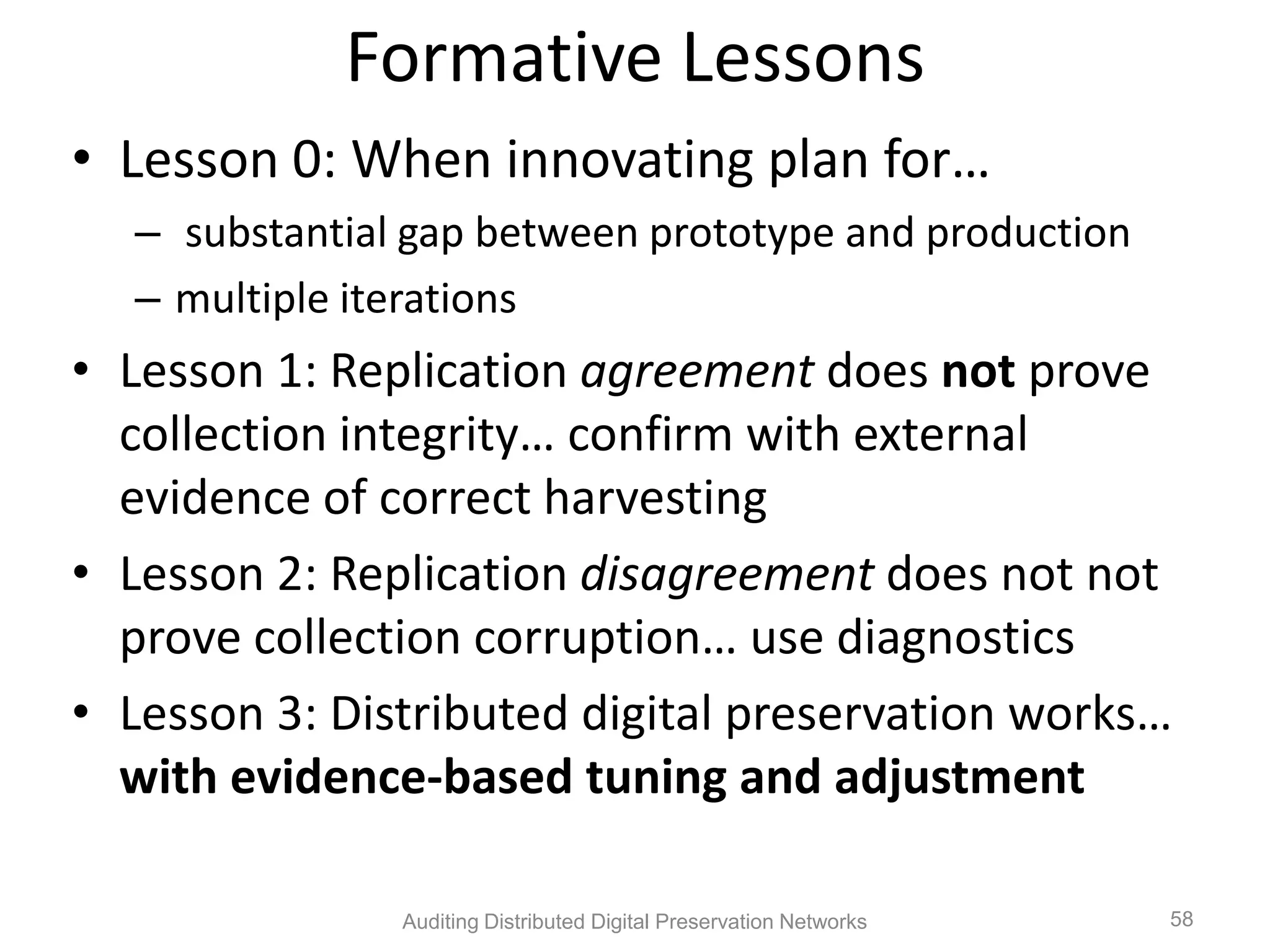

The document discusses auditing distributed digital preservation networks and stresses the importance of verifying the integrity of digital assets held by libraries and archives. It introduces the SafeArchive system, which automates auditing and addresses challenges in replication, compliance, and assurance that data is being preserved. Its key lessons are that preservation efforts are reliable only with continuous verification and evidence-based tuning.

![The Problem “Preservation was once an obscure backroom operation of interest chiefly to conservators and archivists: it is now widely recognized as one of the most important elements of a functional and enduring cyberinfrastructure.” – [Unsworth et al., 2006] • Libraries, archives and museums hold digital assets they wish to preserve, many unique • Many of these assets are not replicated at all • Even when institutions keep multiple backups offsite, many single points of failure remain Auditing Distributed Digital Preservation Networks 8](https://image.slidesharecdn.com/cni2012safeauditv11-121225101907-phpapp02/75/Auditing-Distributed-Preservation-Networks-8-2048.jpg)

![Audit [aw-dit]: An independent evaluation of records and activities to assess a system of controls. Fixity mitigates risk only if used for auditing.](https://image.slidesharecdn.com/cni2012safeauditv11-121225101907-phpapp02/75/Auditing-Distributed-Preservation-Networks-15-2048.jpg)

![What’s Next? “It’s tough to make predictions, especially about the future” – Attributed to Woody Allen, Yogi Berra, Niels Bohr, Vint Cerf, Winston Churchill, Confucius, Disreali [sic], Freeman Dyson, Cecil B. Demille, Albert Einstein, Enrico Fermi, Edgar R. Fiedler, Bob Fourer, Sam Goldwyn, Allan Lamport, Groucho Marx, Dan Quayle, George Bernard Shaw, Casey Stengel, Will Rogers, M. Taub, Mark Twain, Kerr L. White, and others](https://image.slidesharecdn.com/cni2012safeauditv11-121225101907-phpapp02/75/Auditing-Distributed-Preservation-Networks-60-2048.jpg)