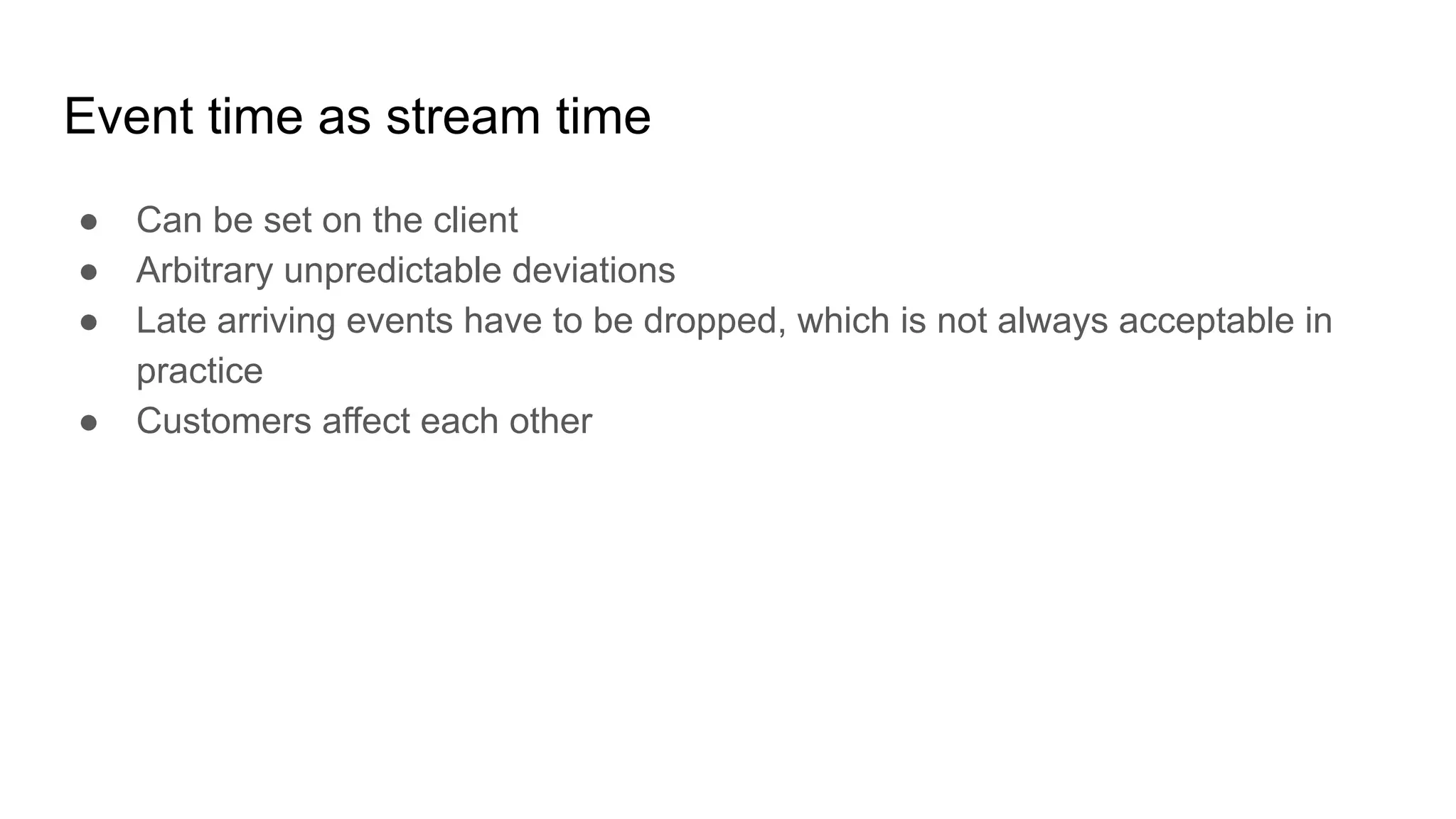

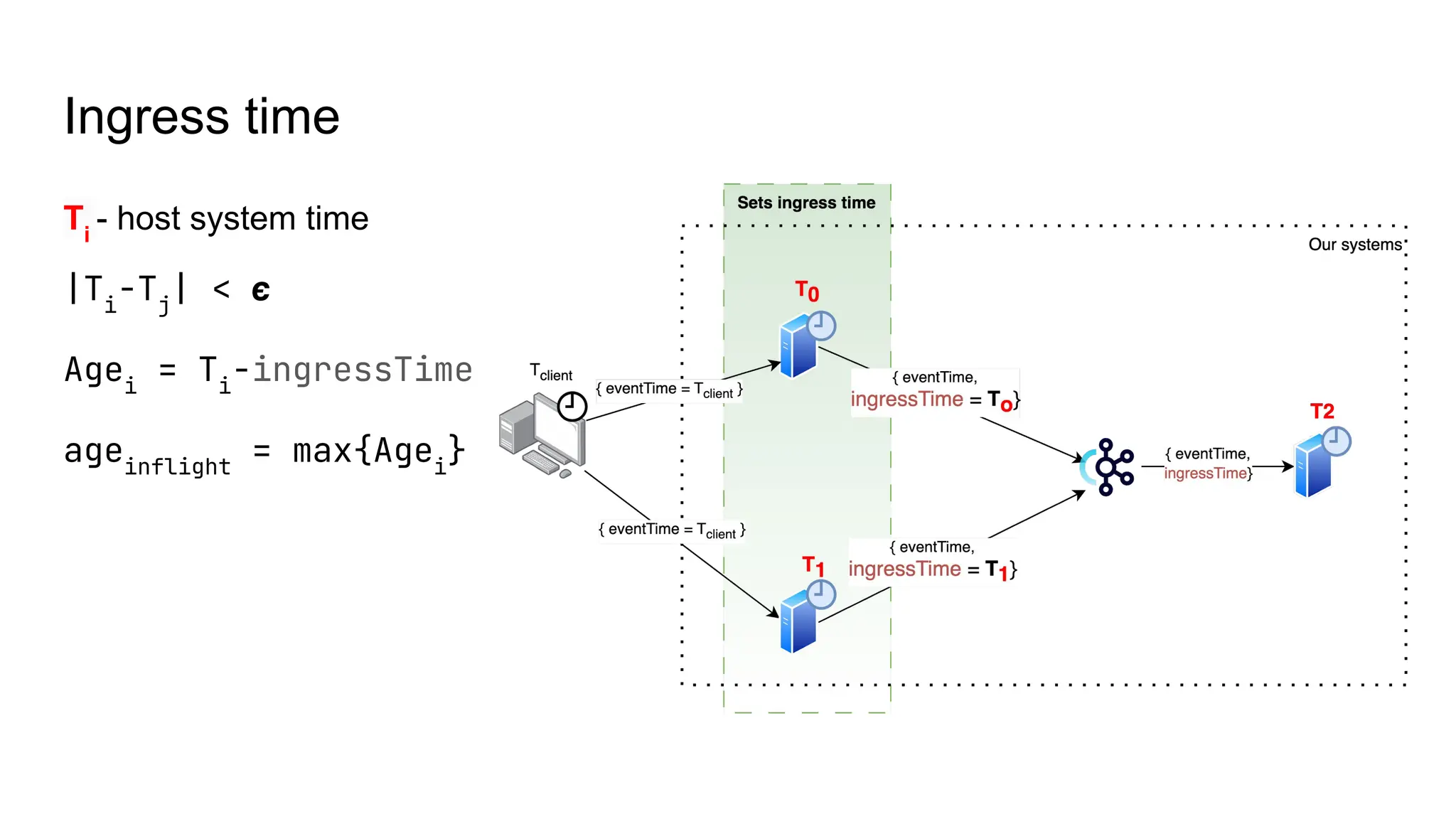

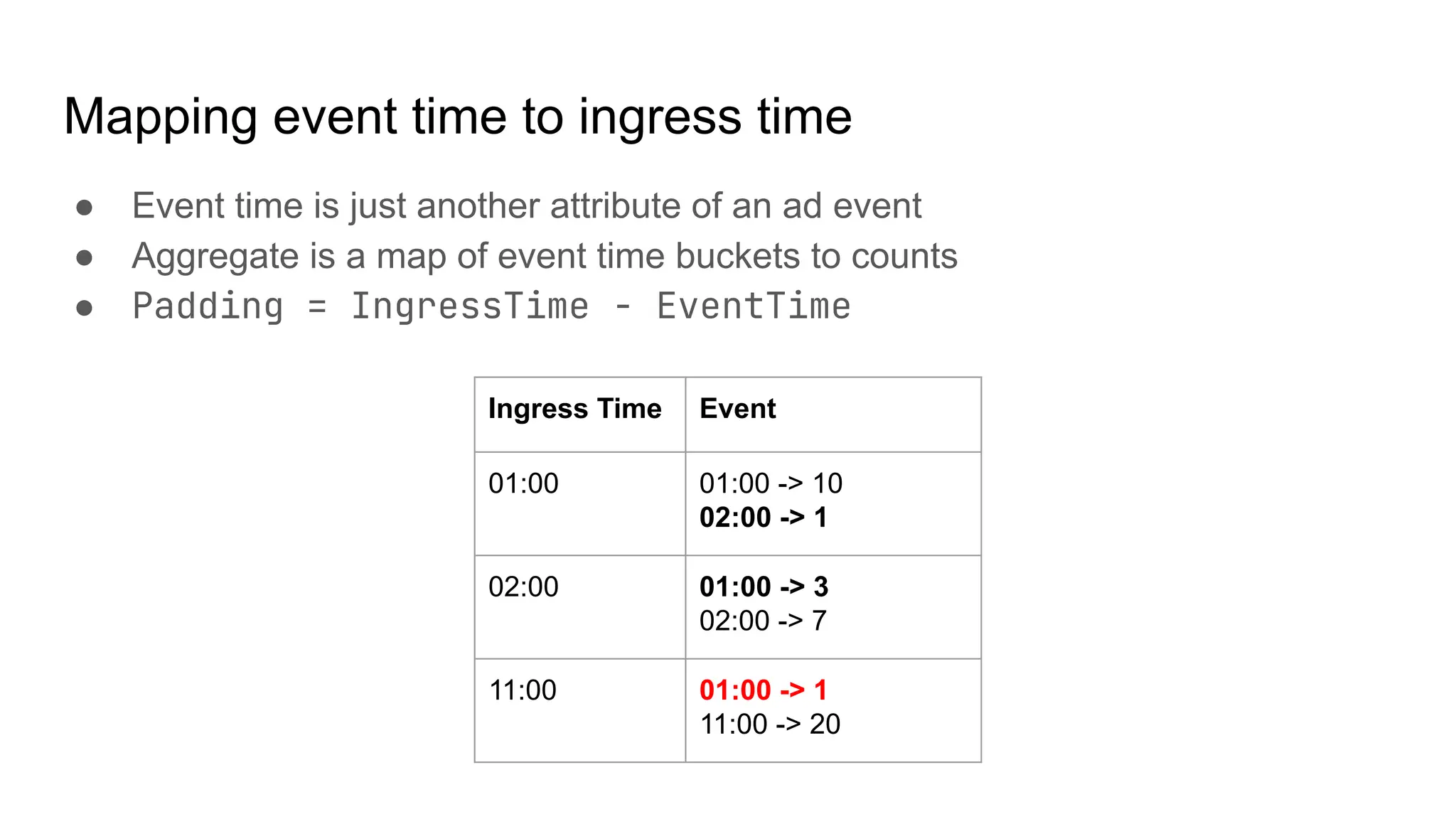

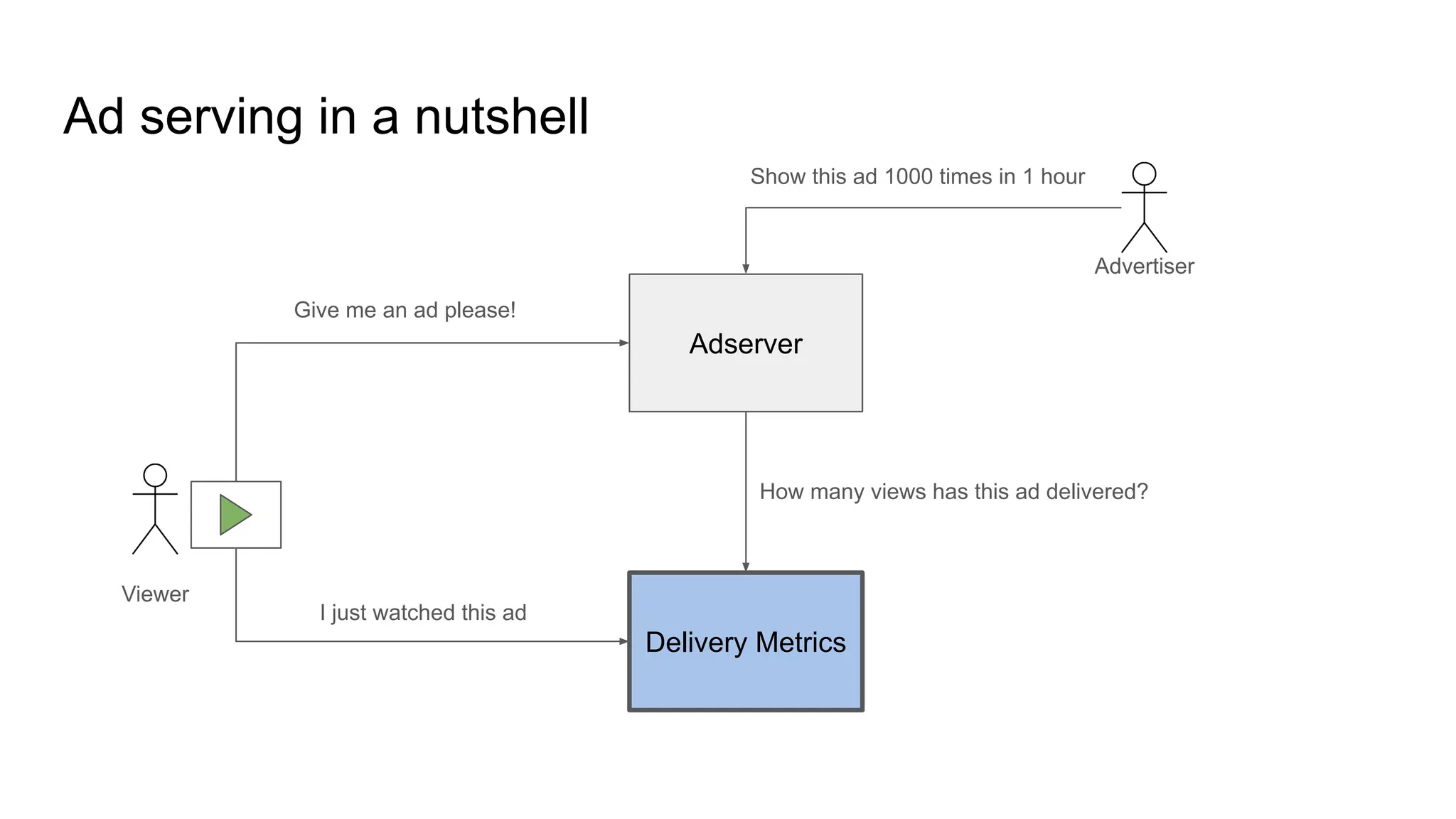

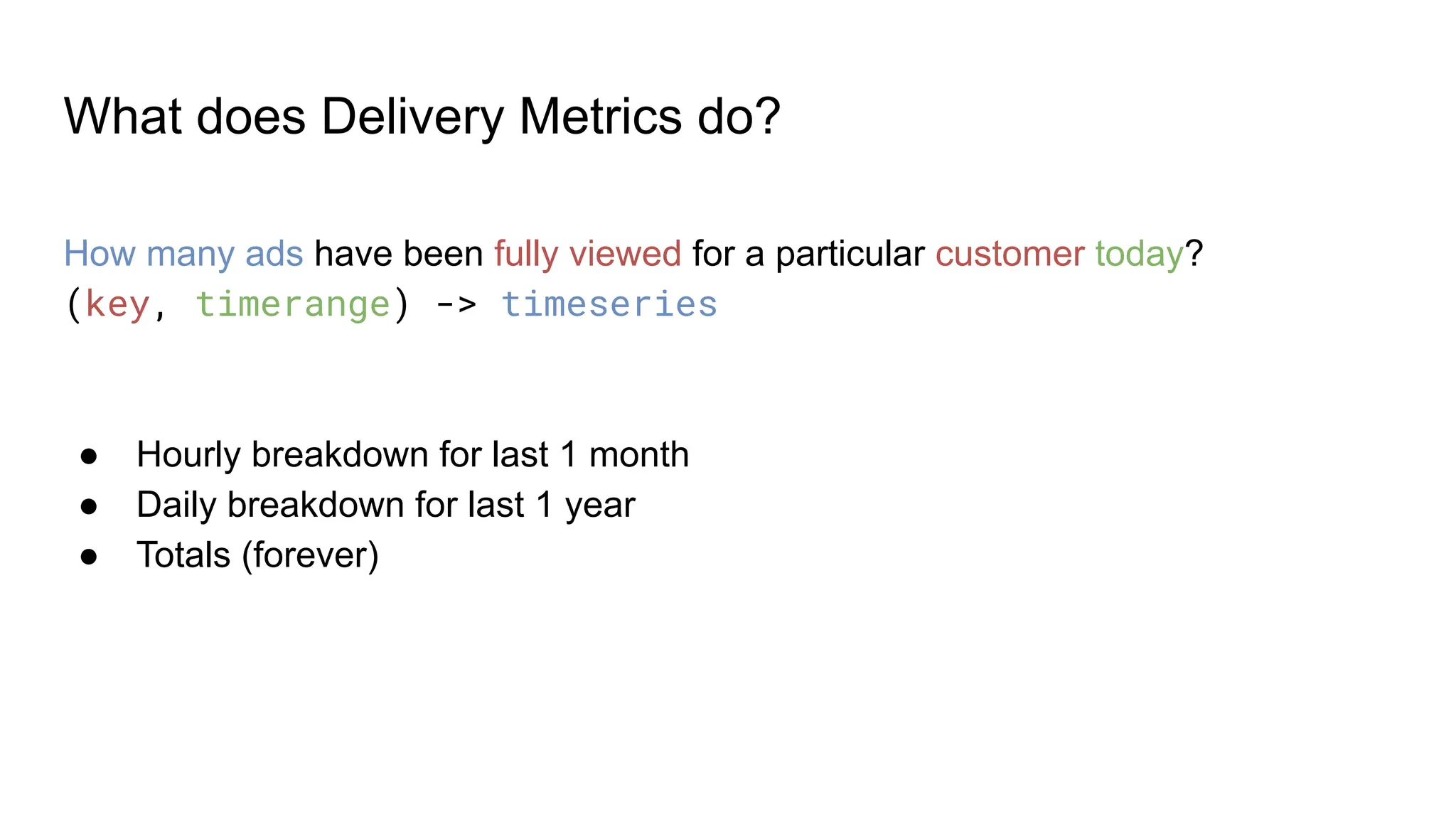

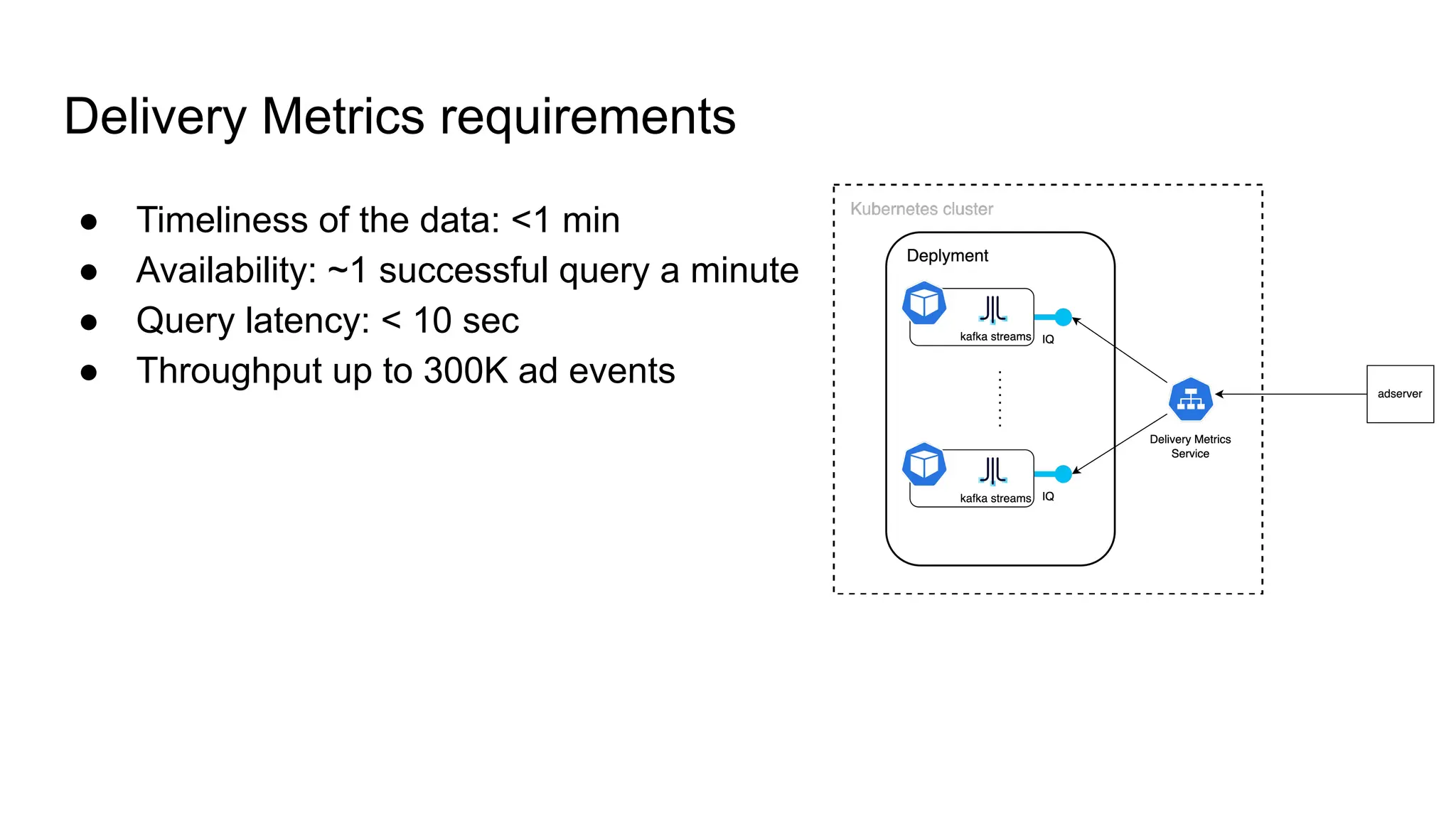

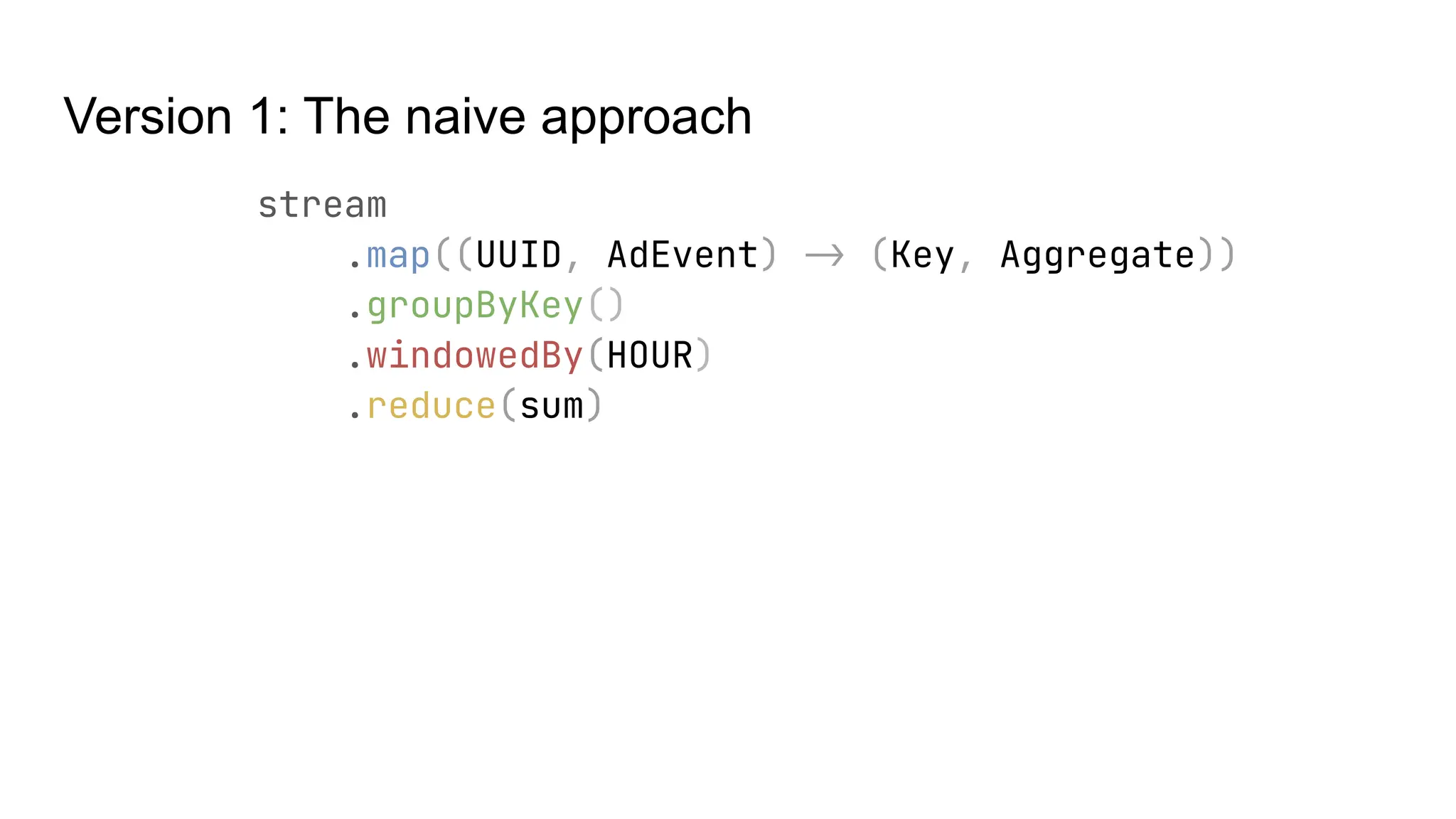

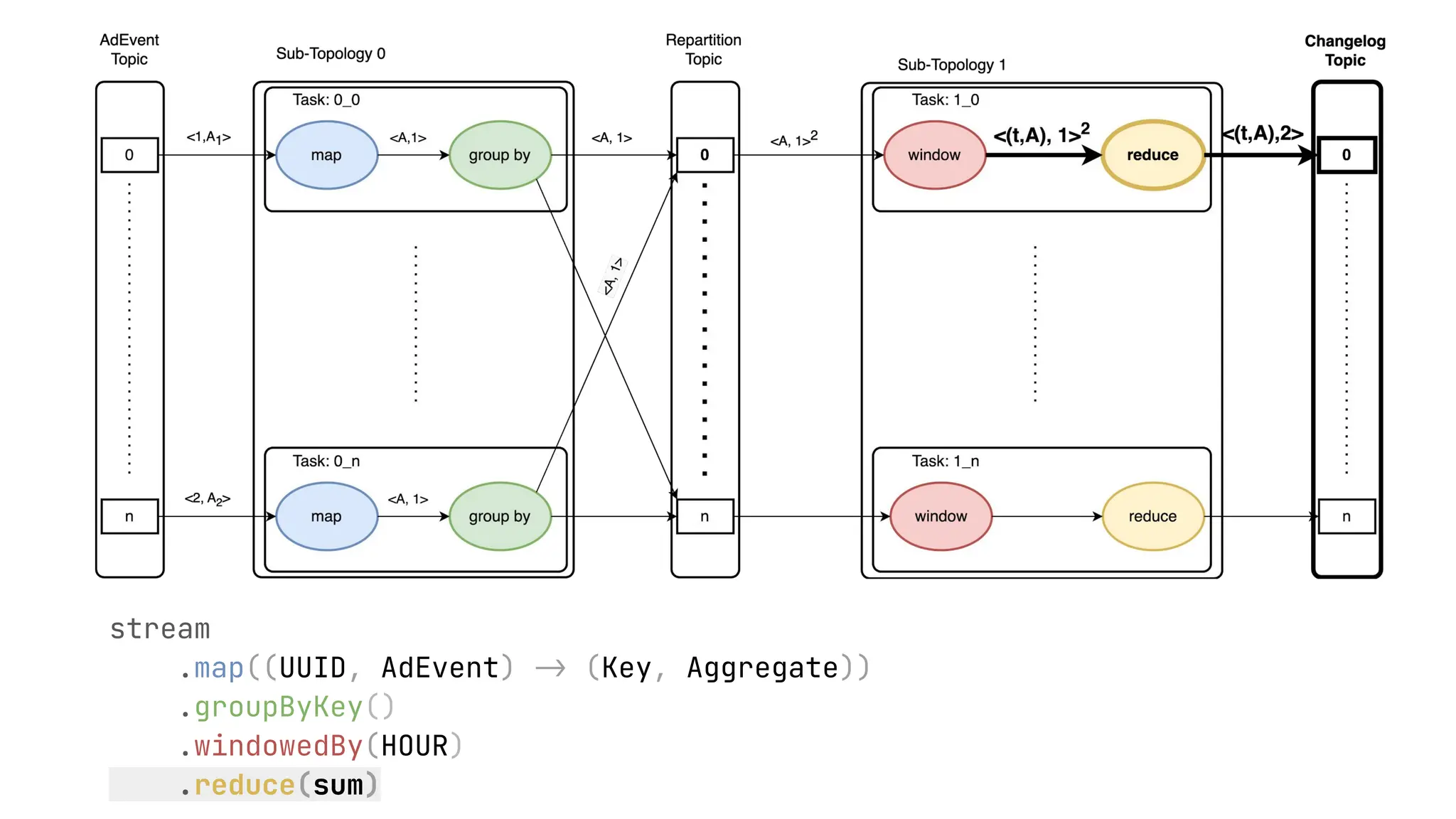

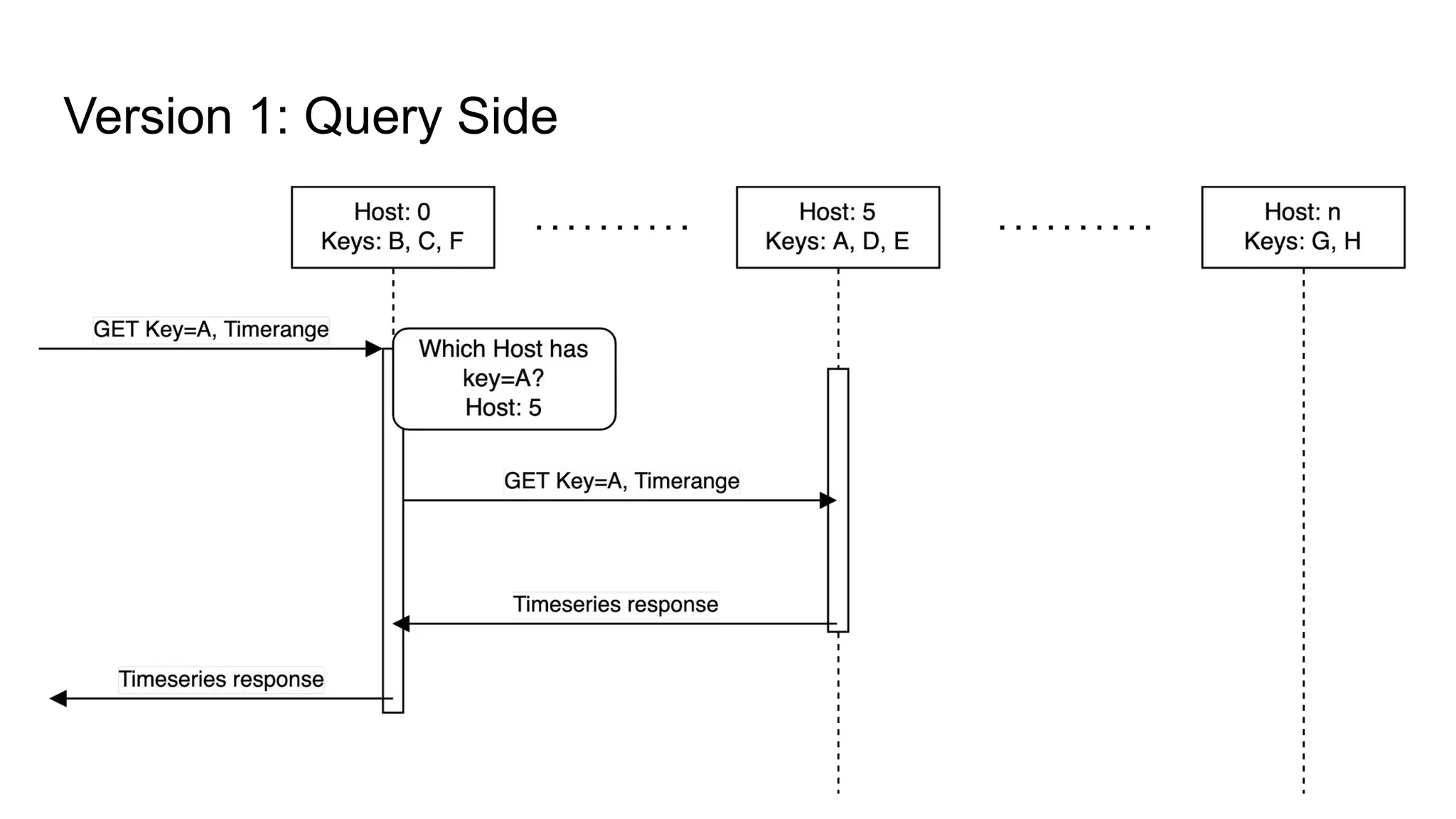

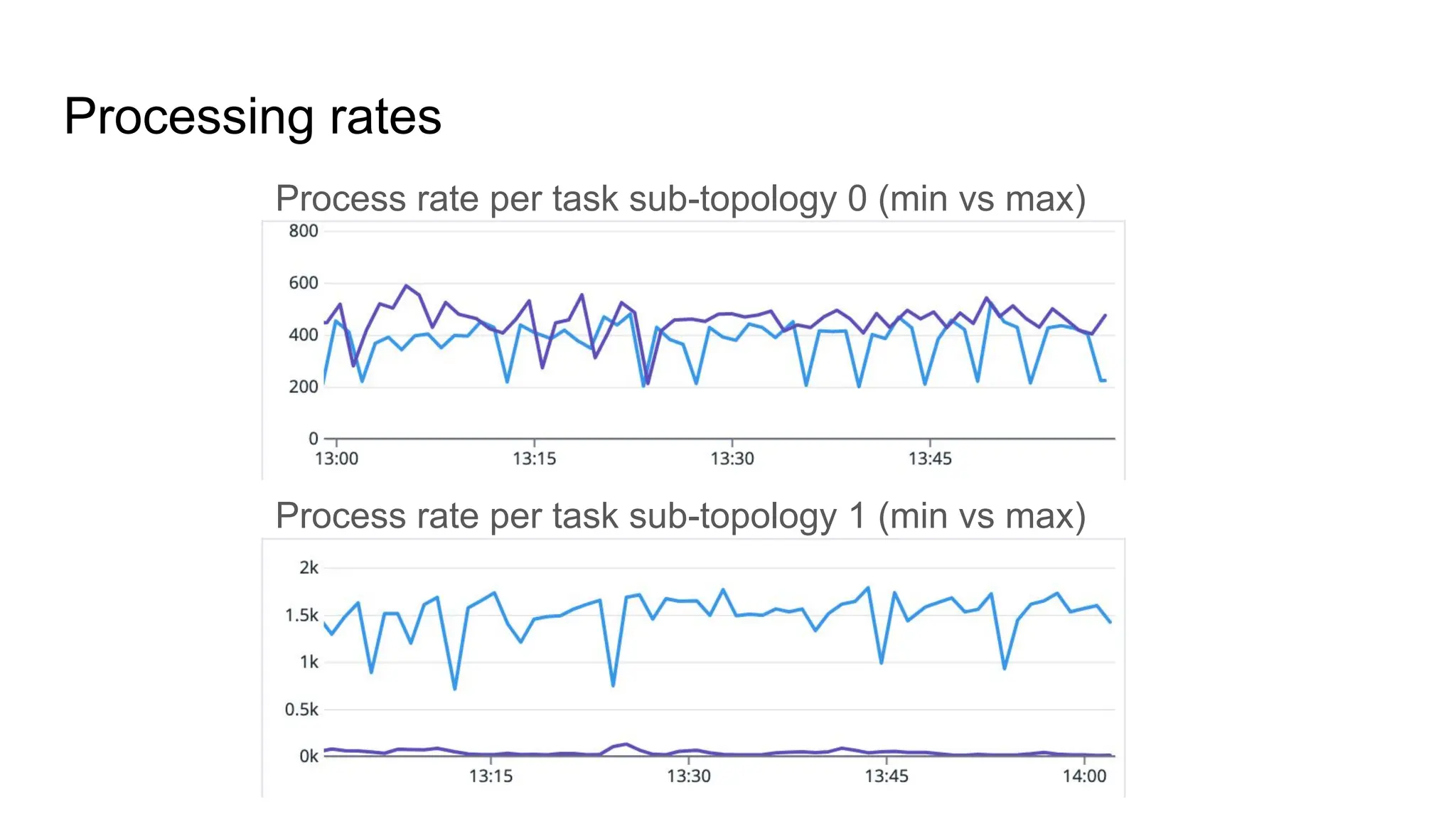

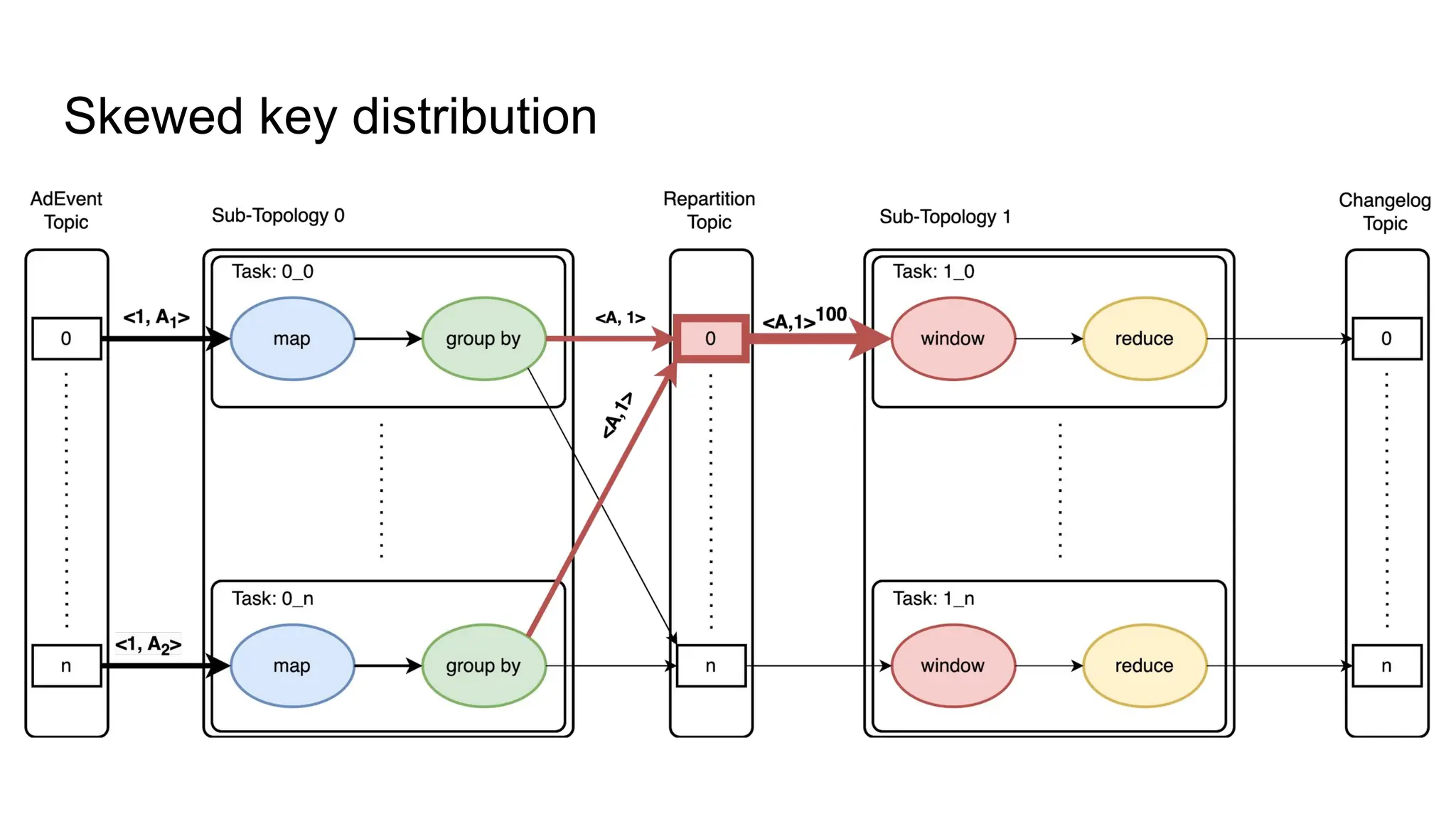

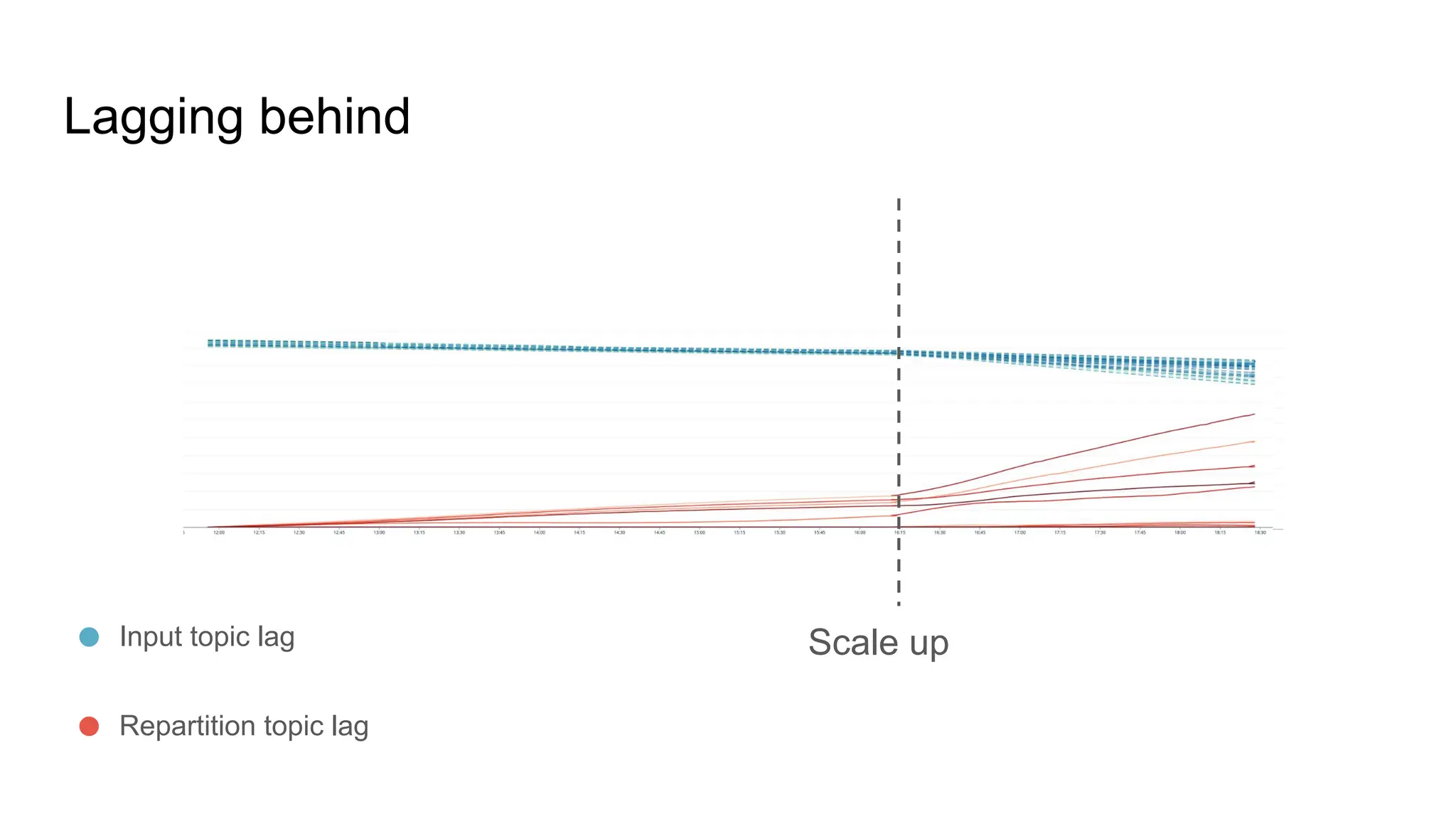

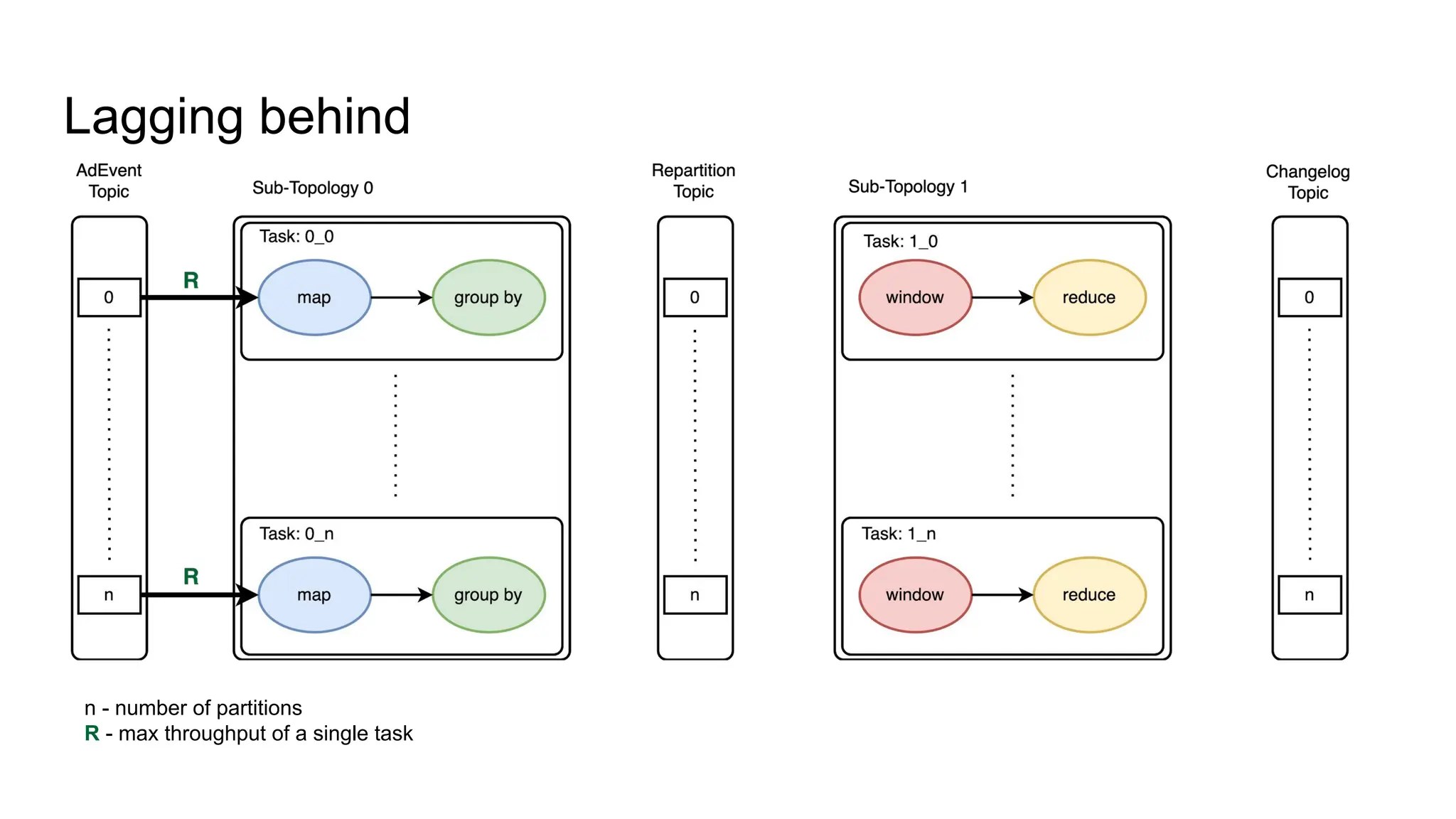

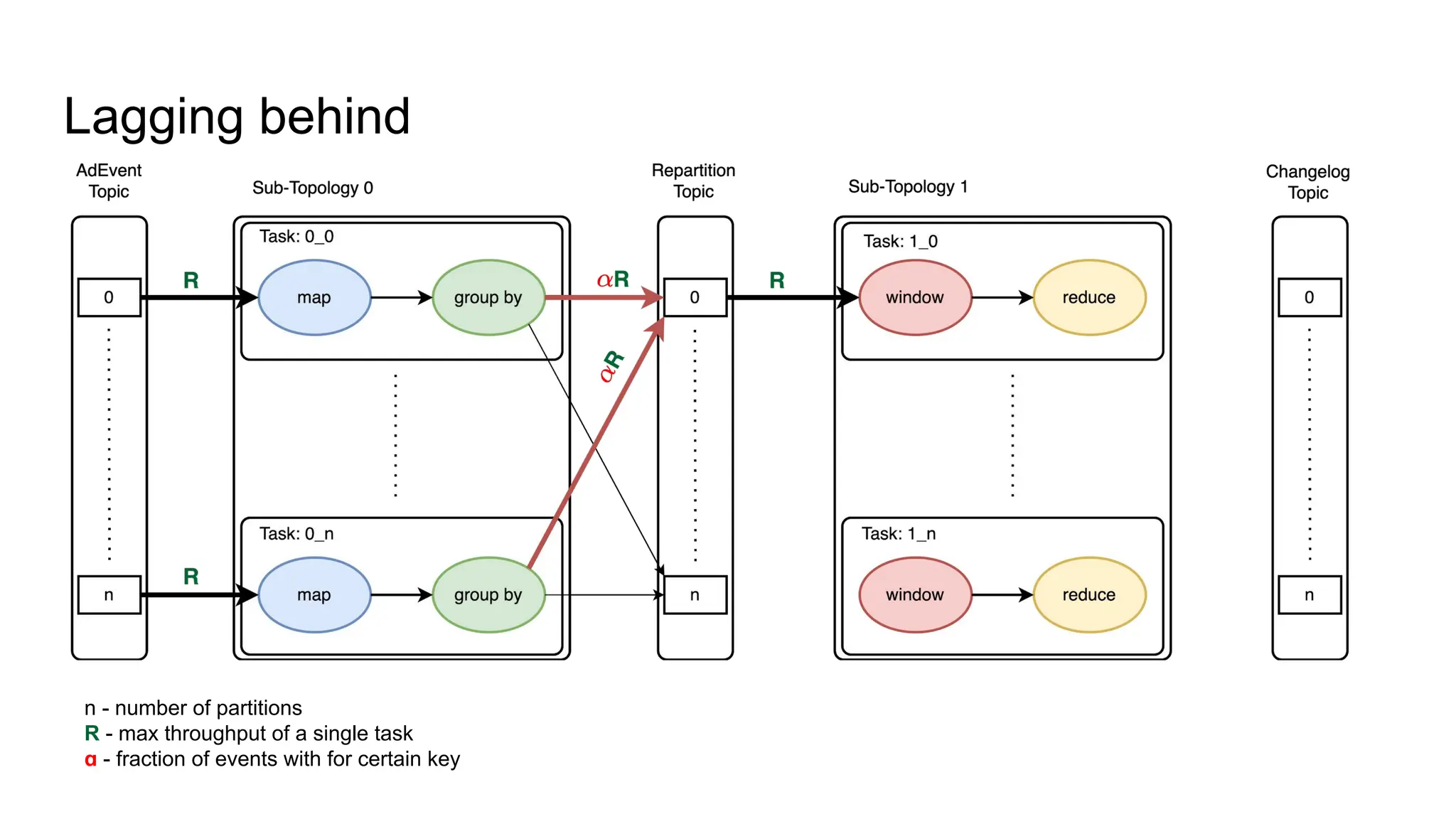

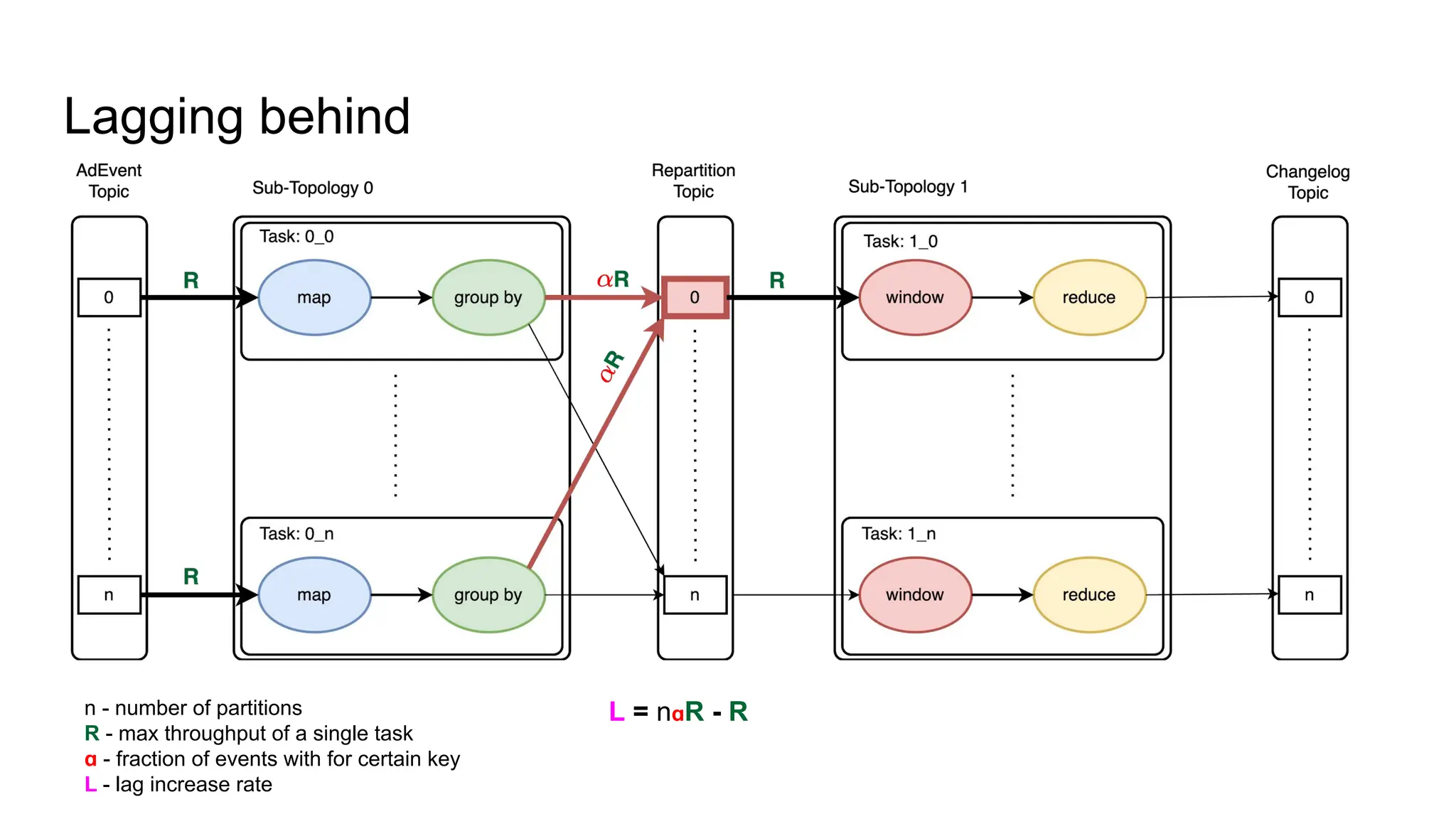

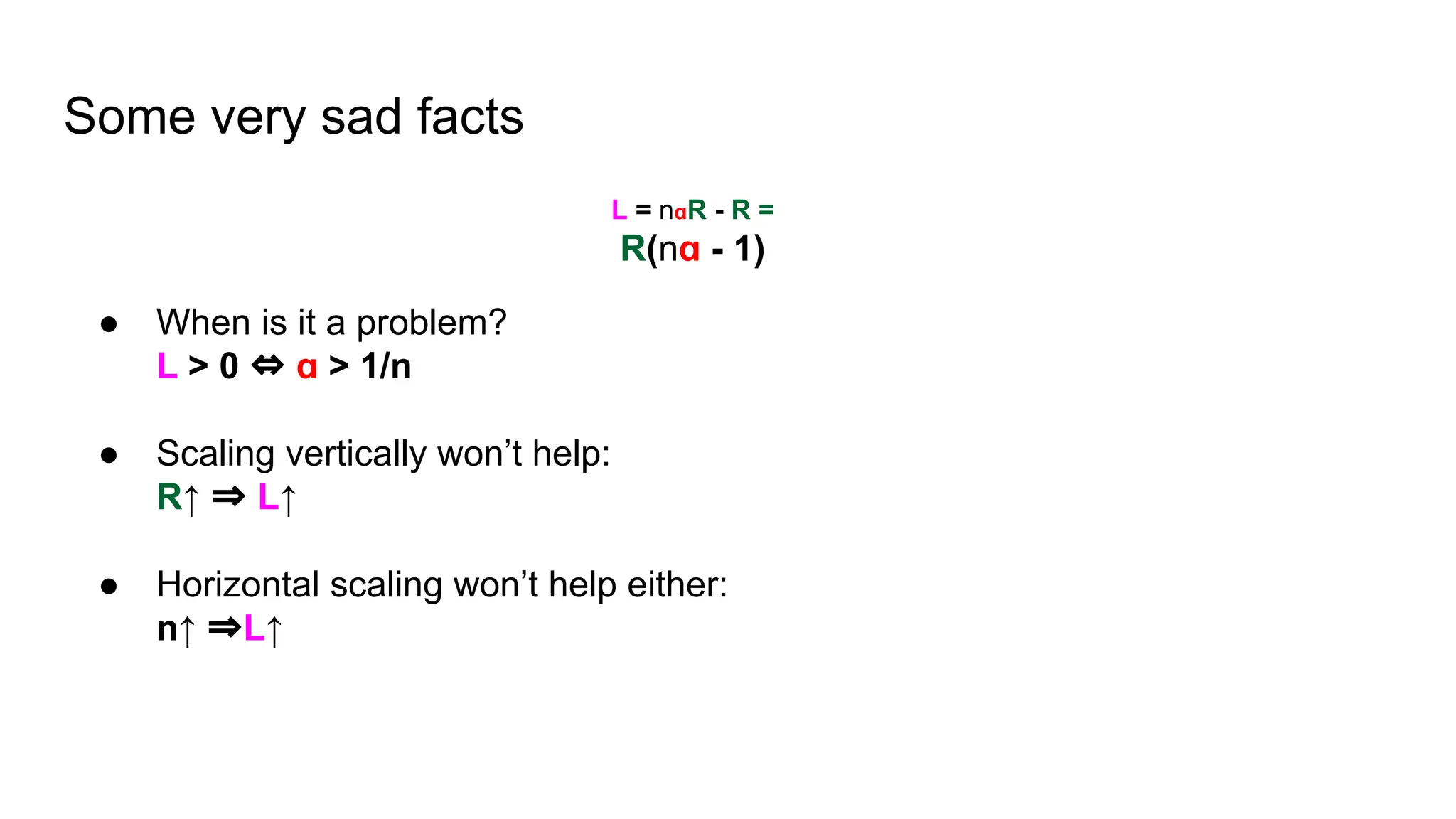

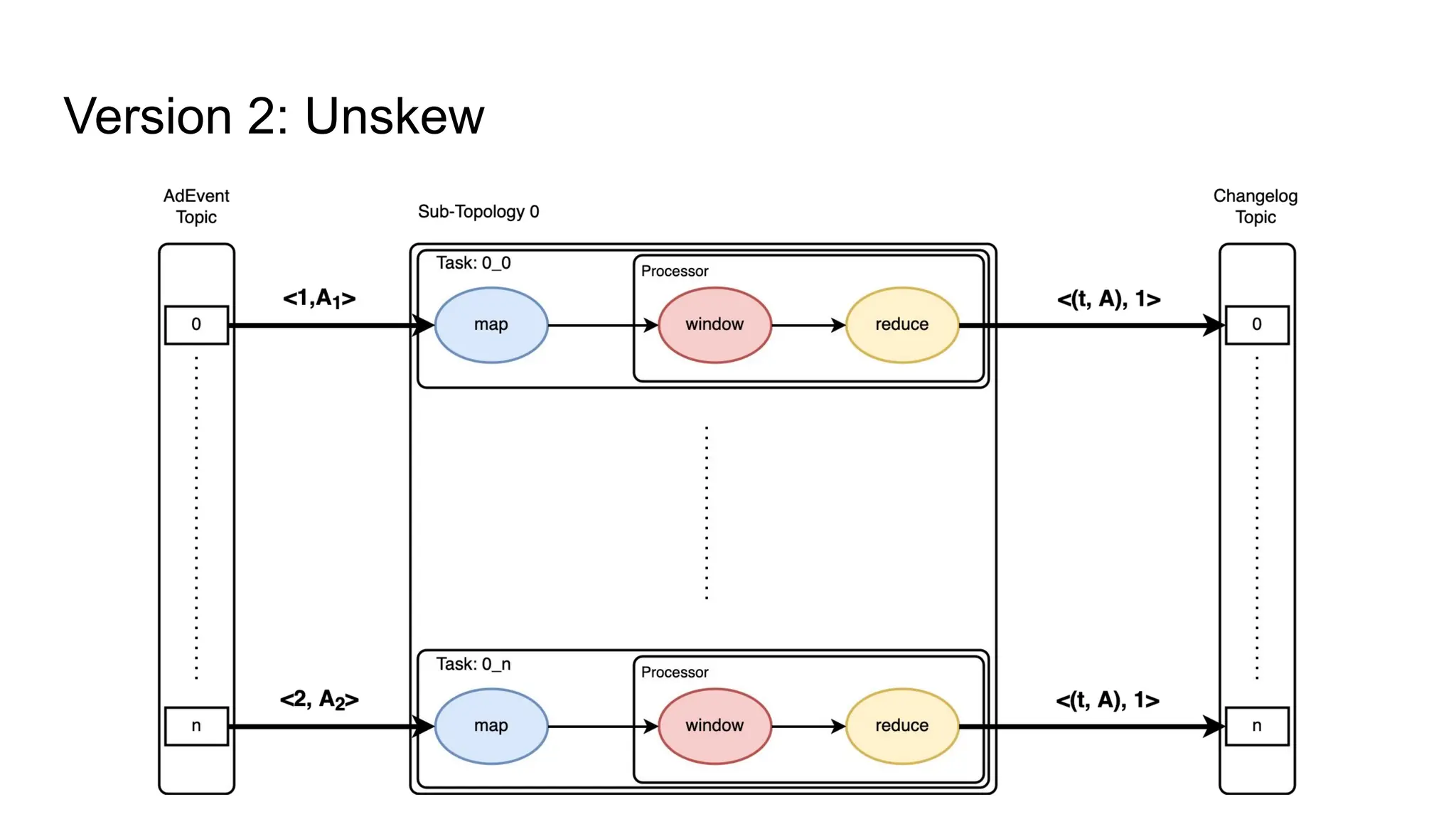

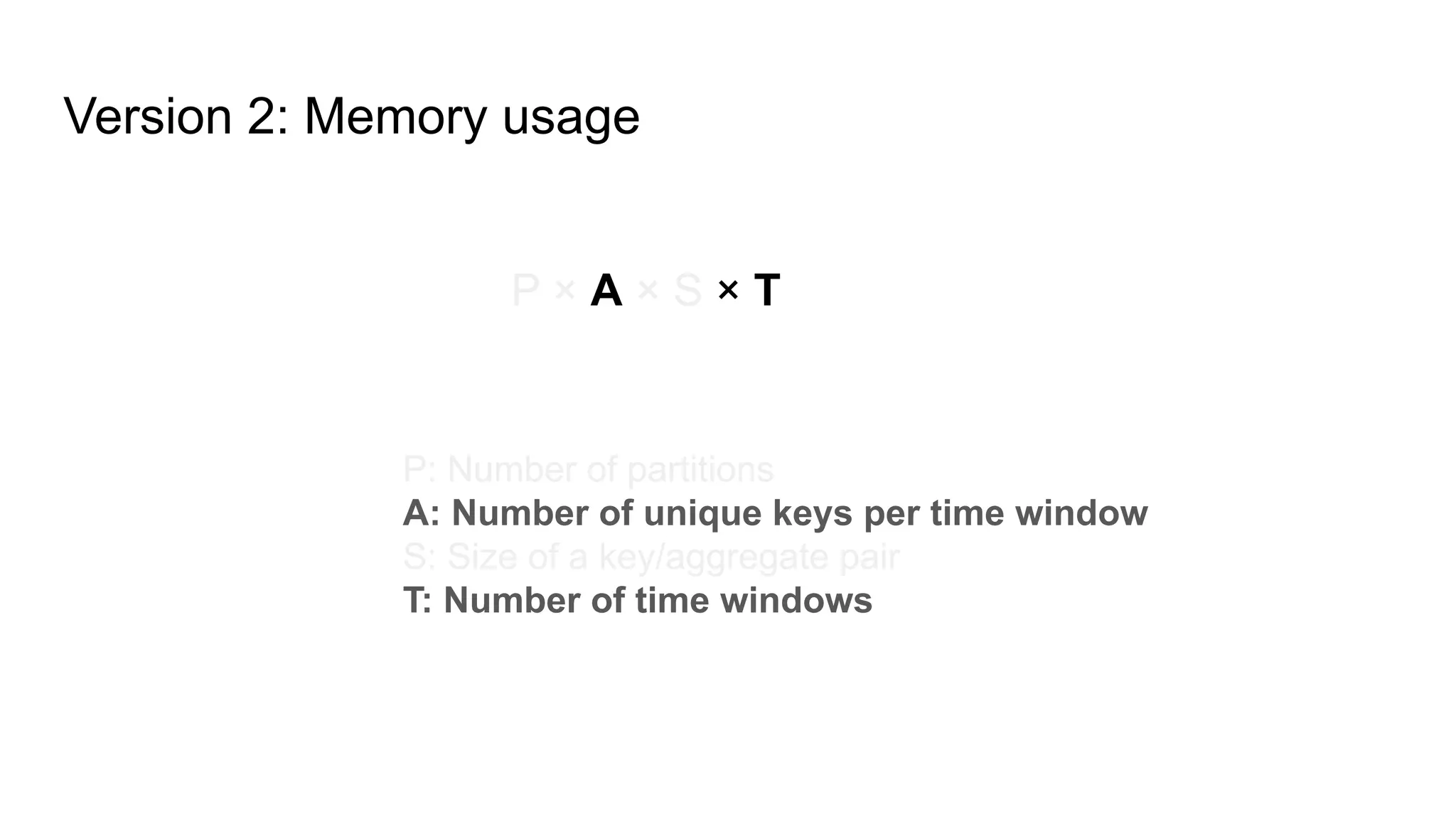

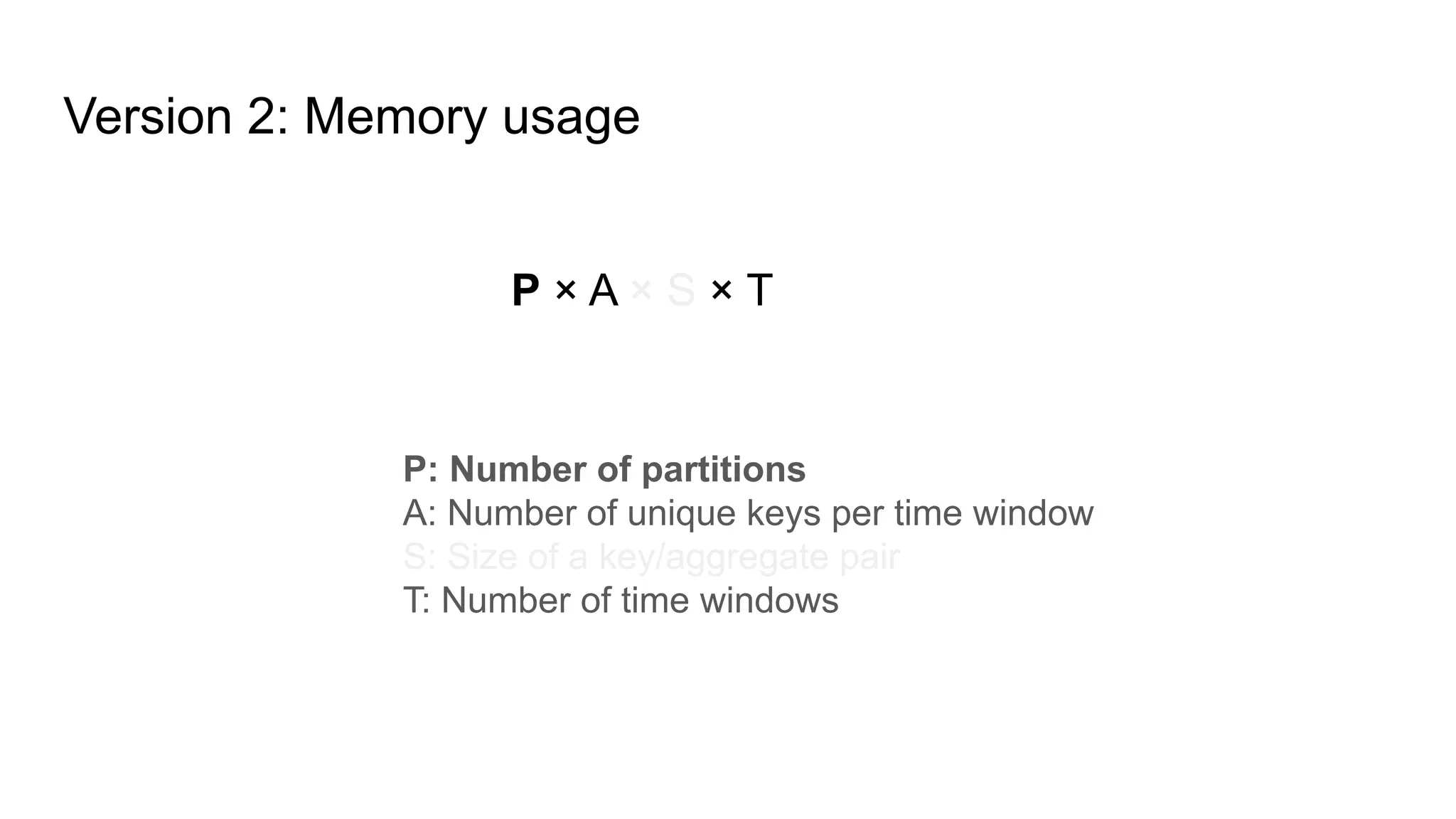

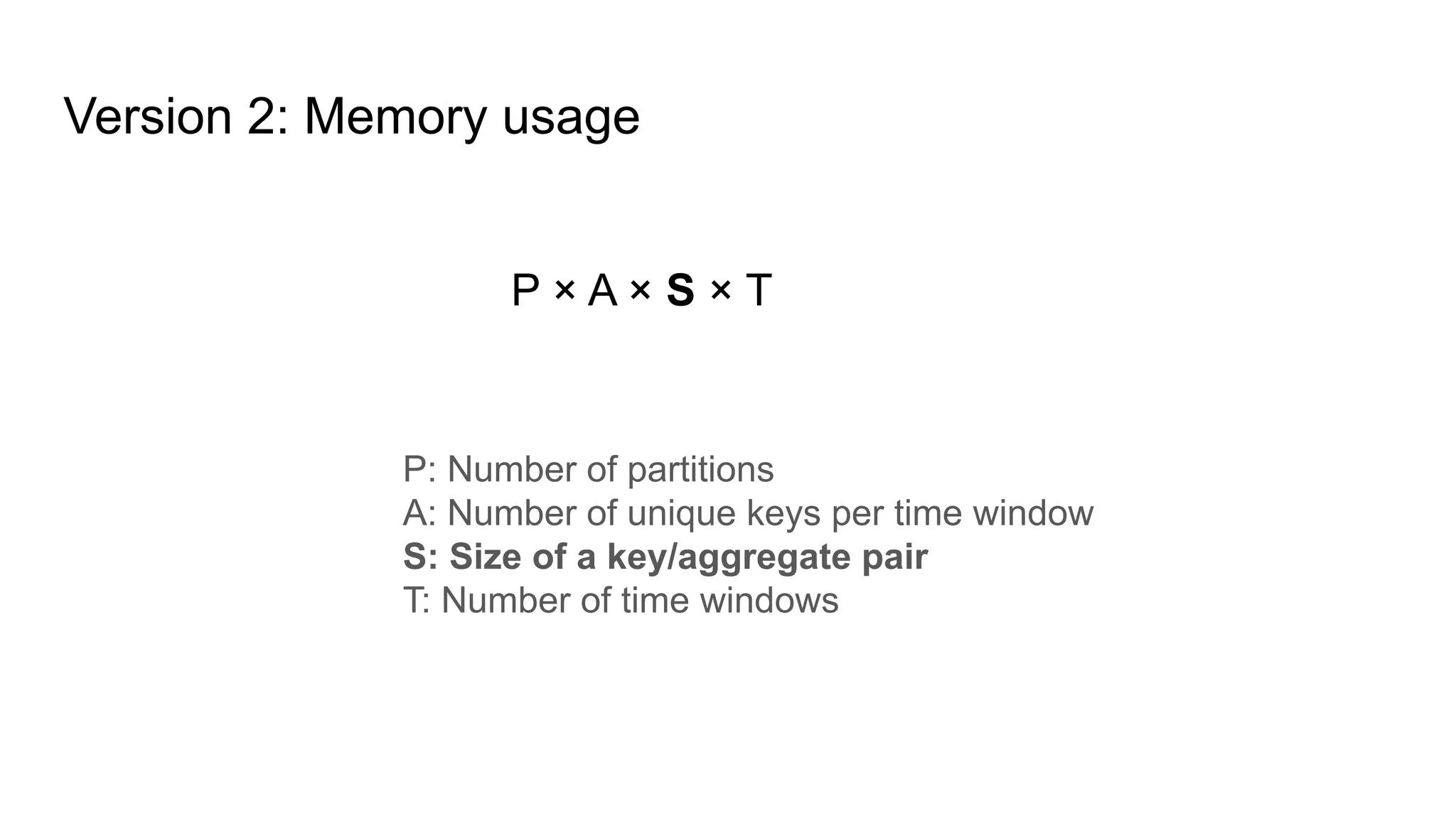

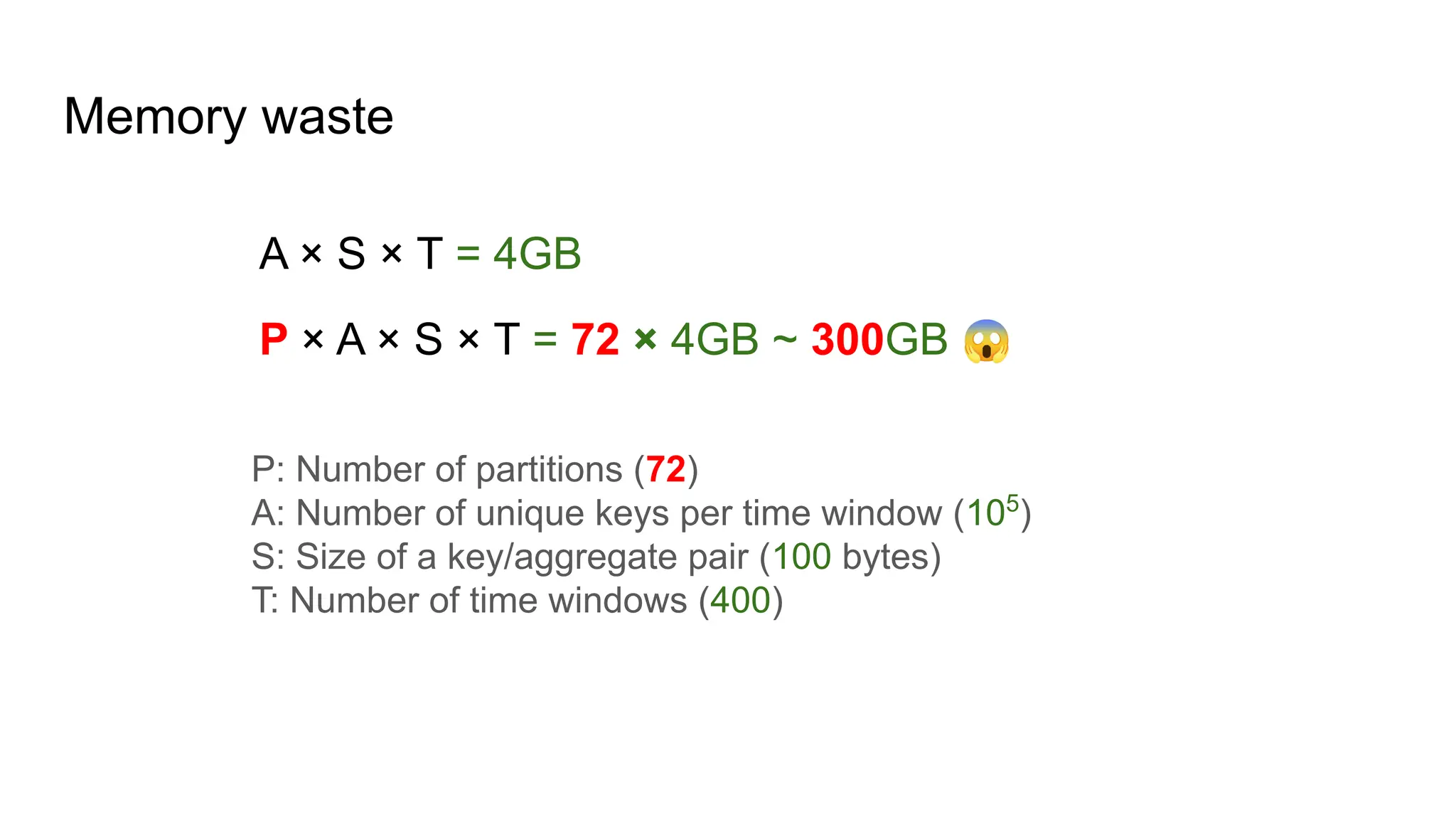

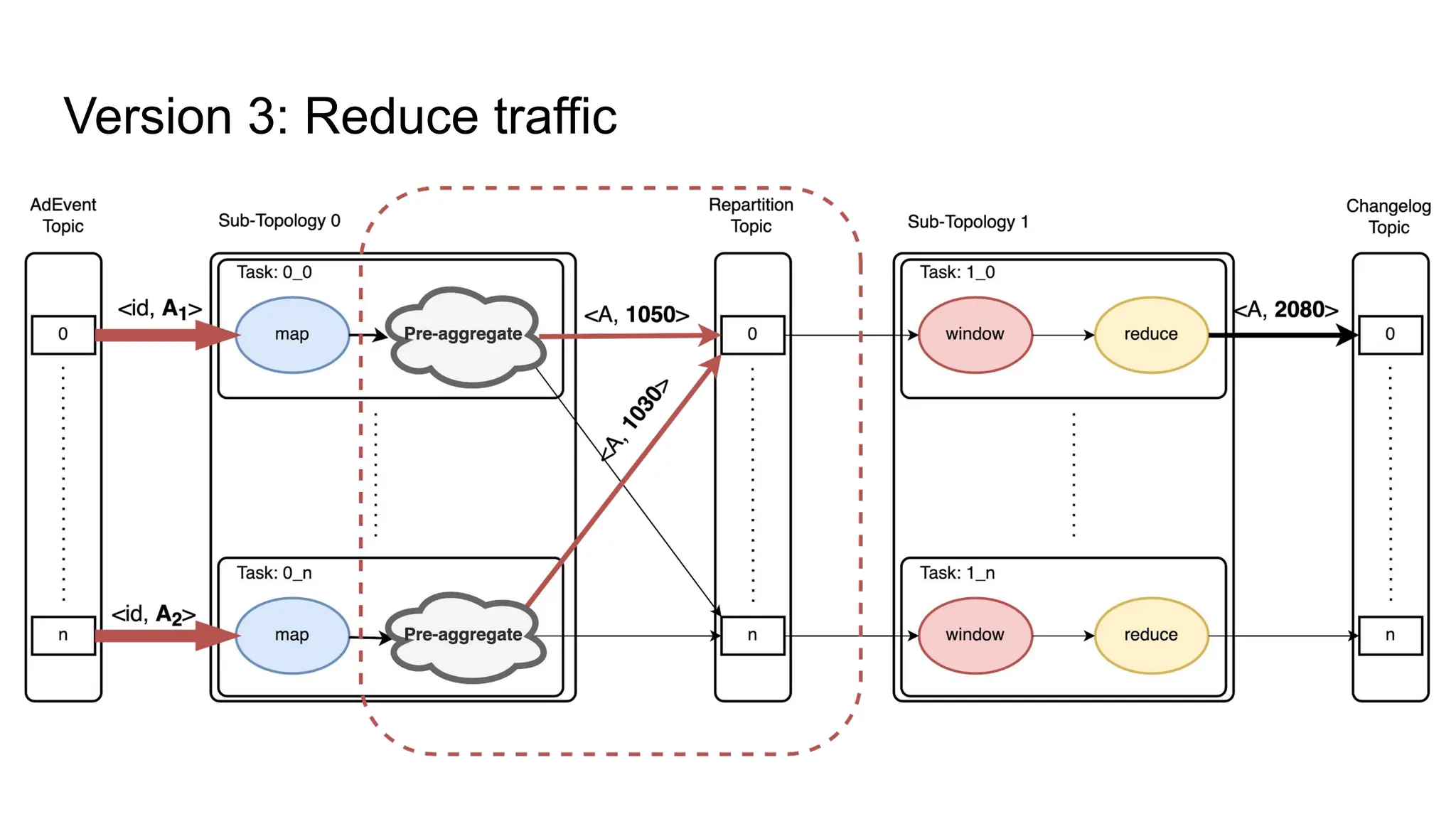

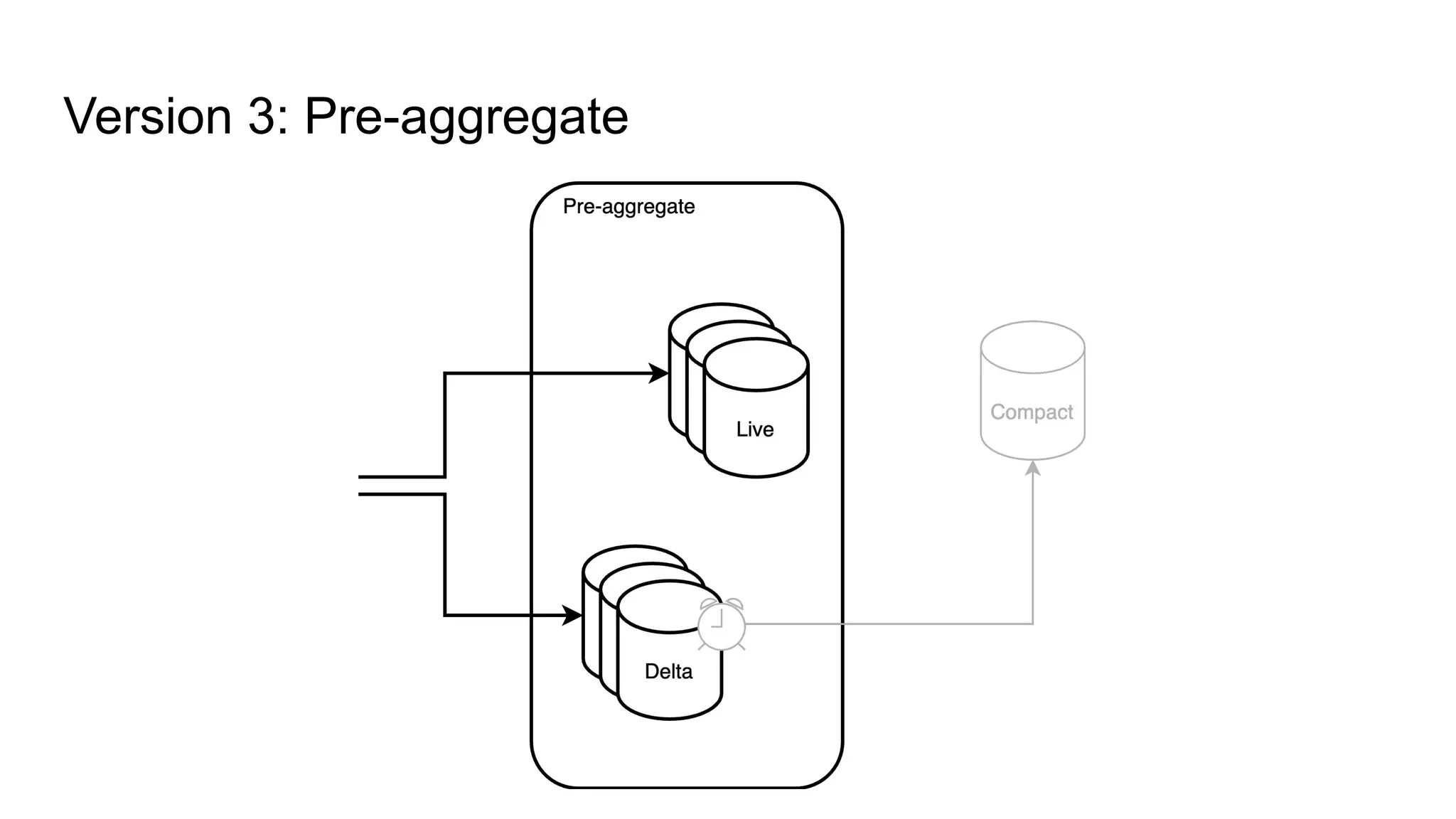

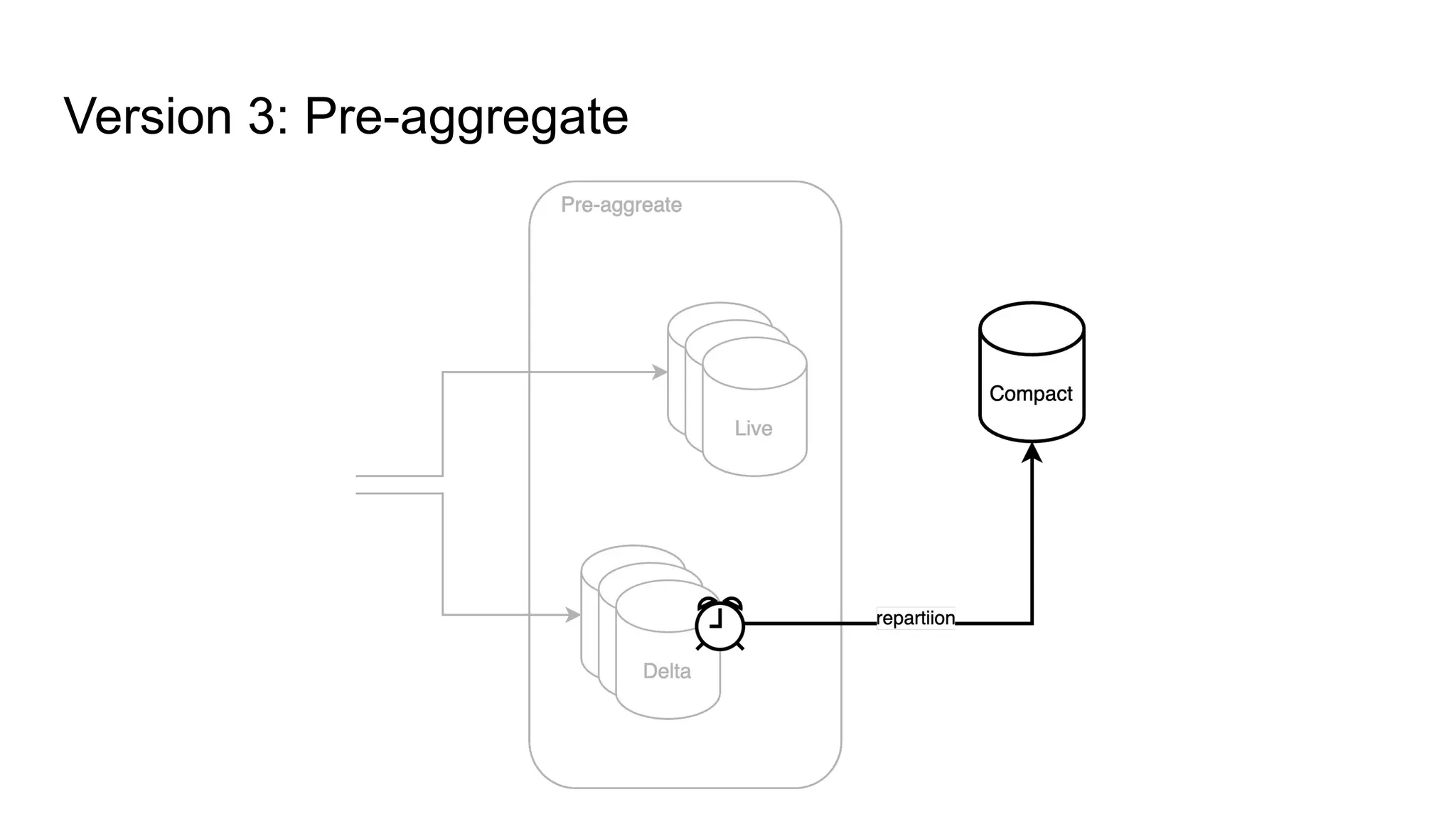

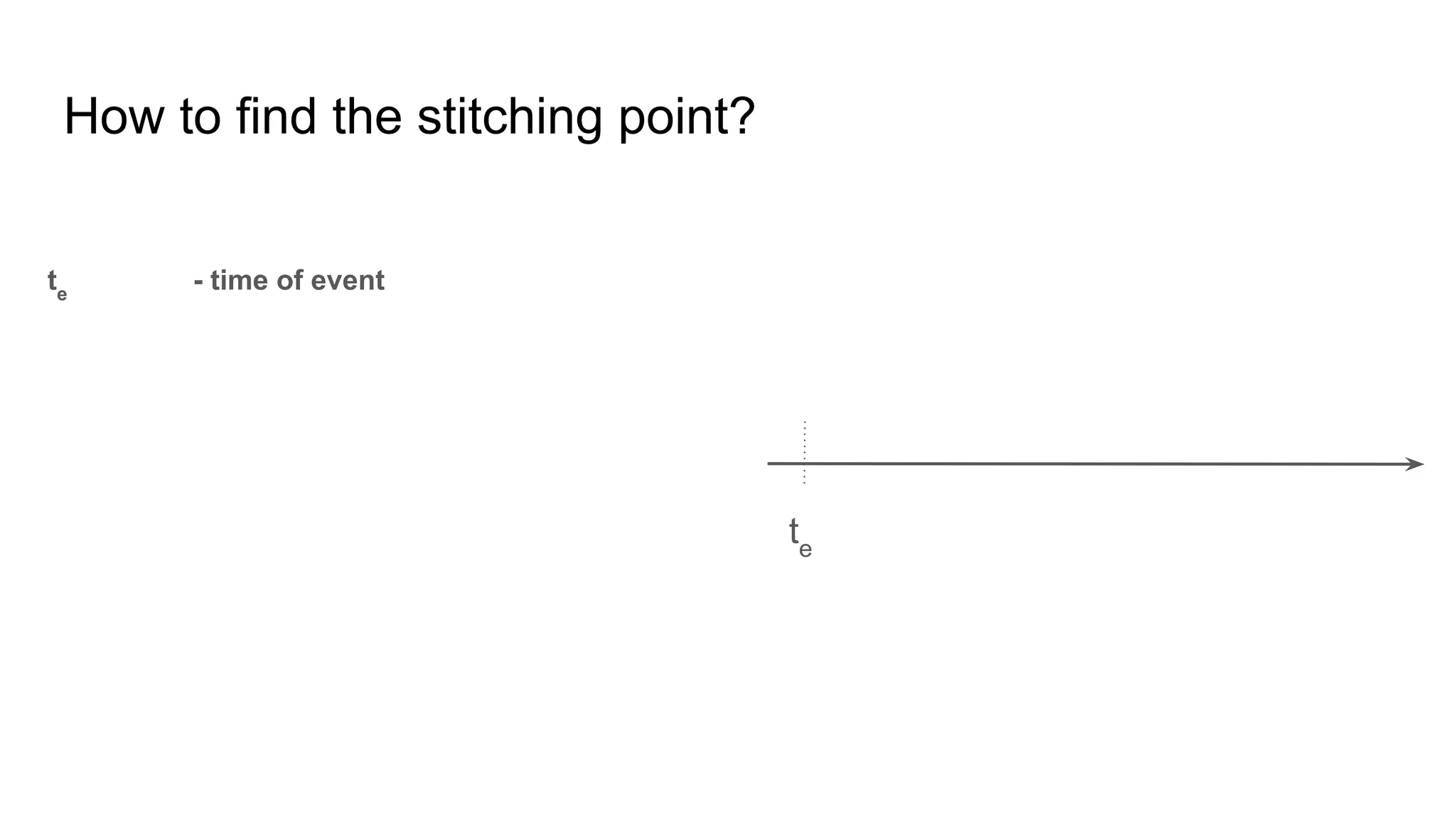

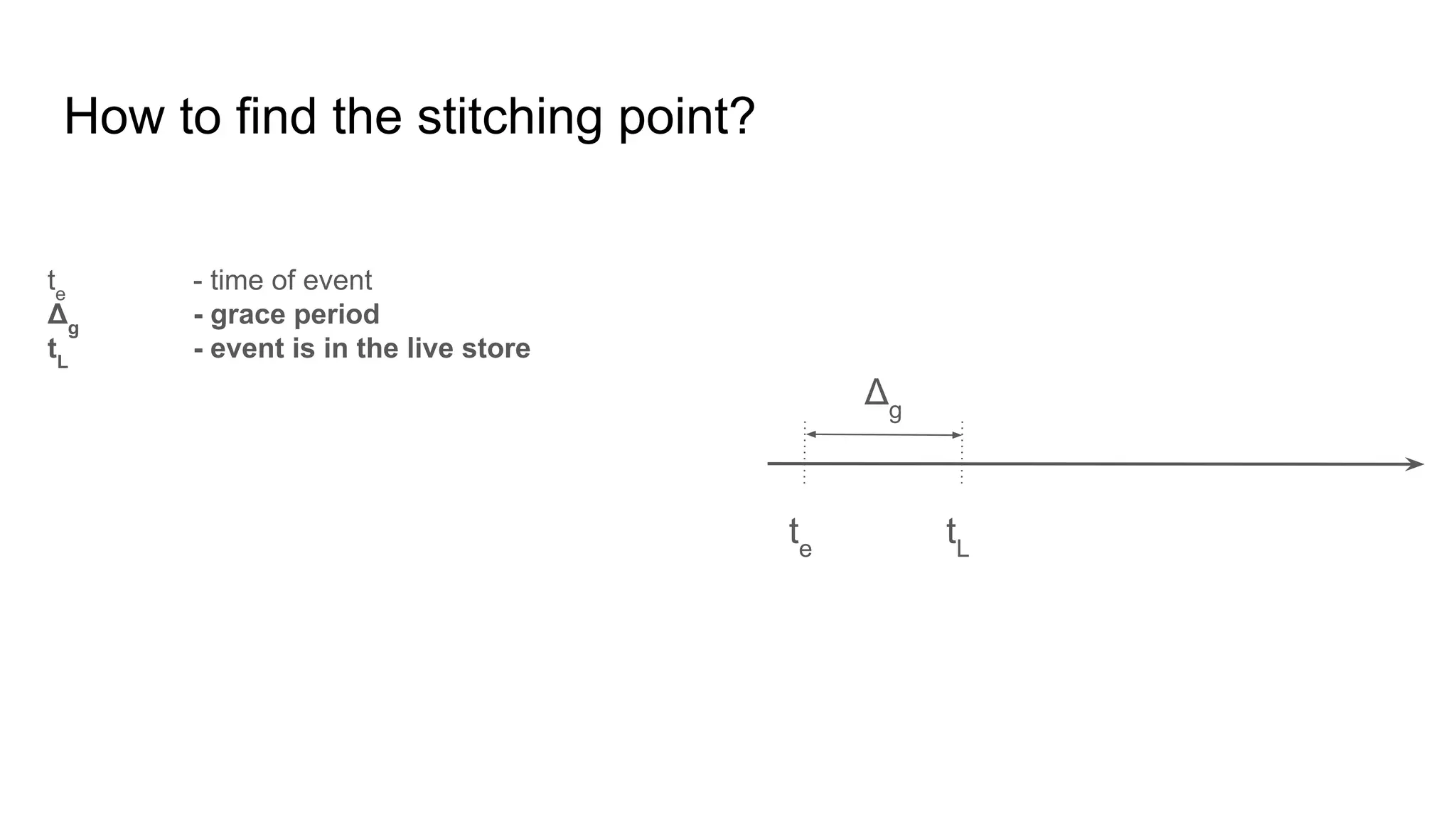

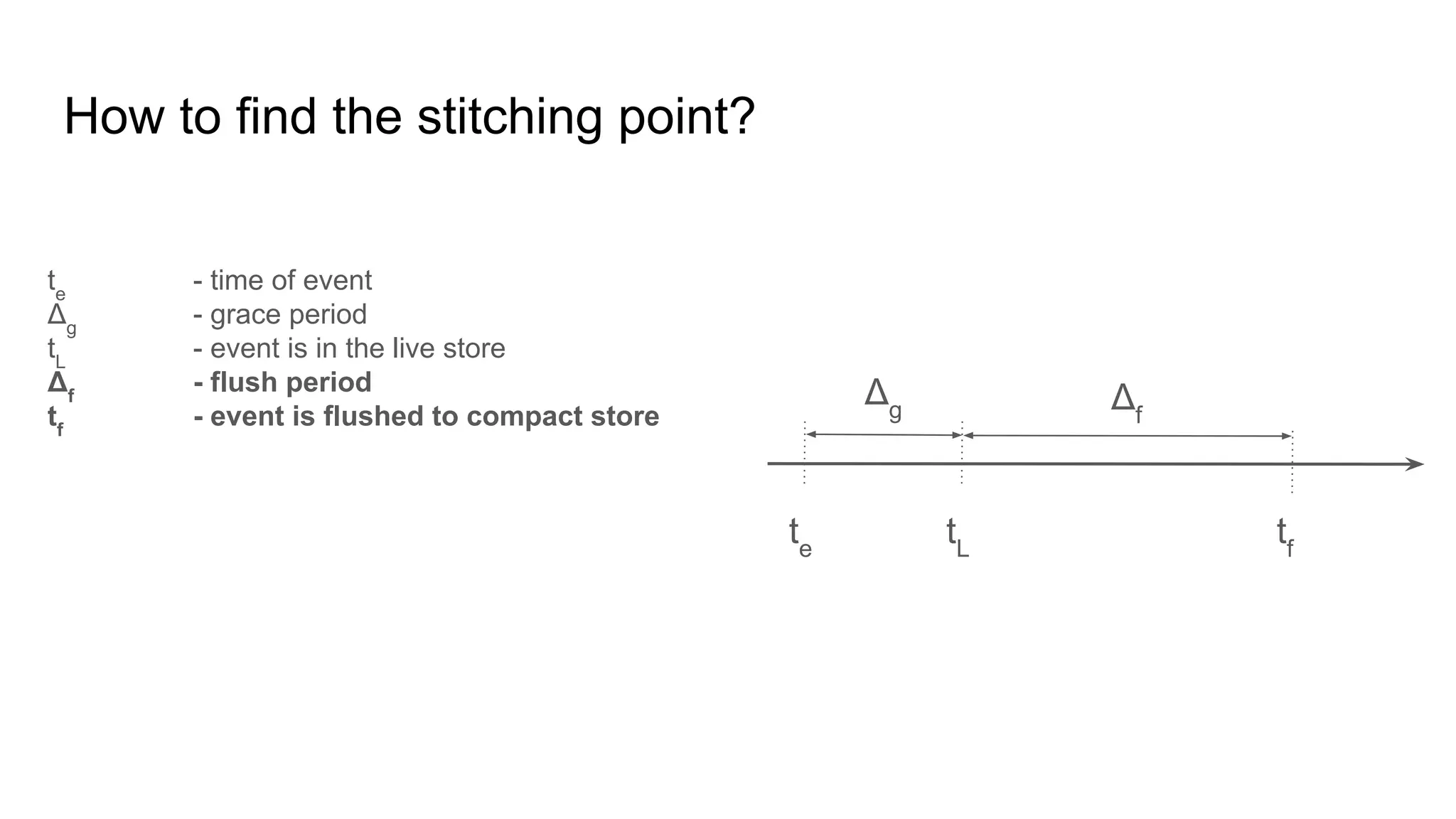

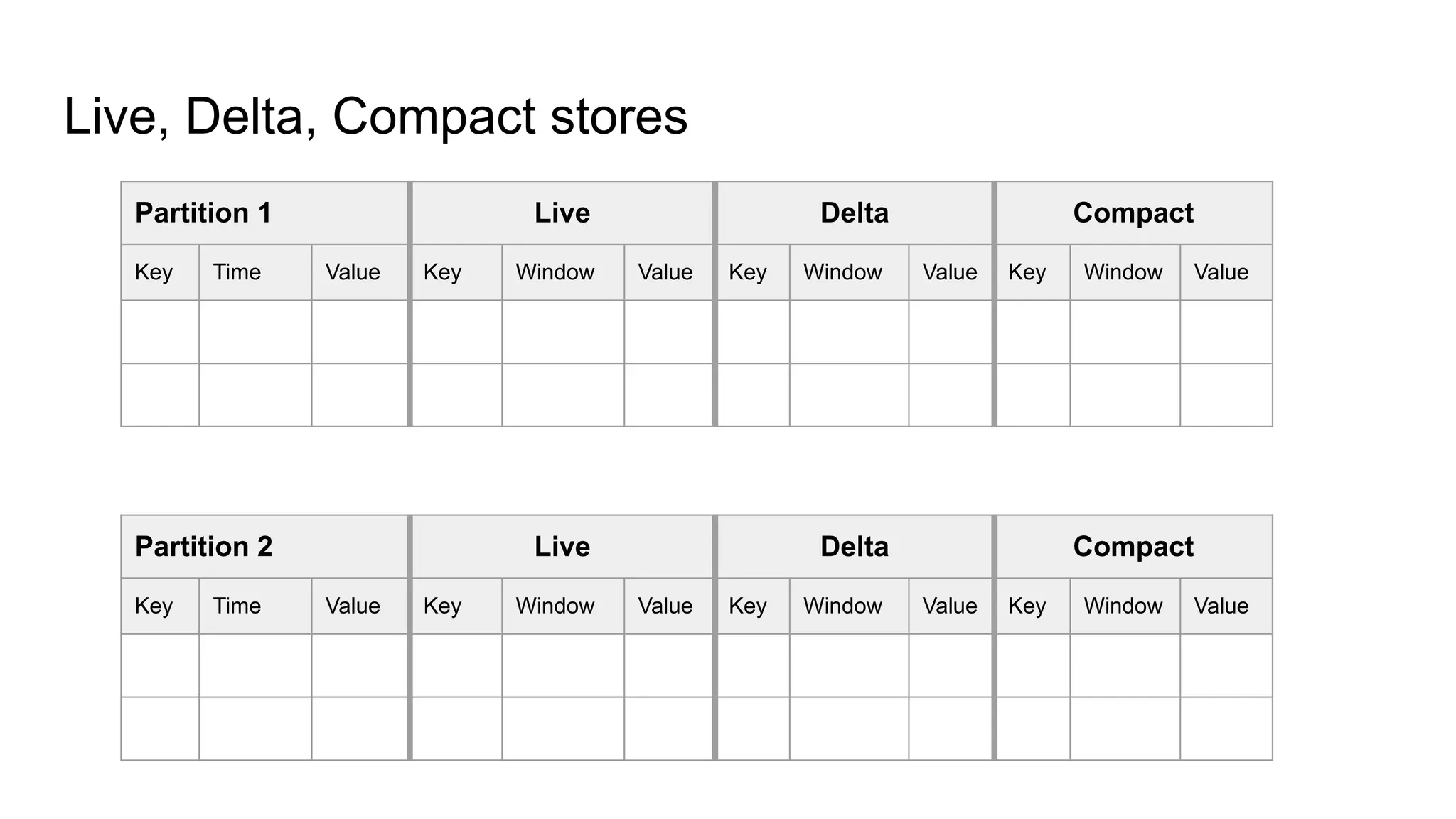

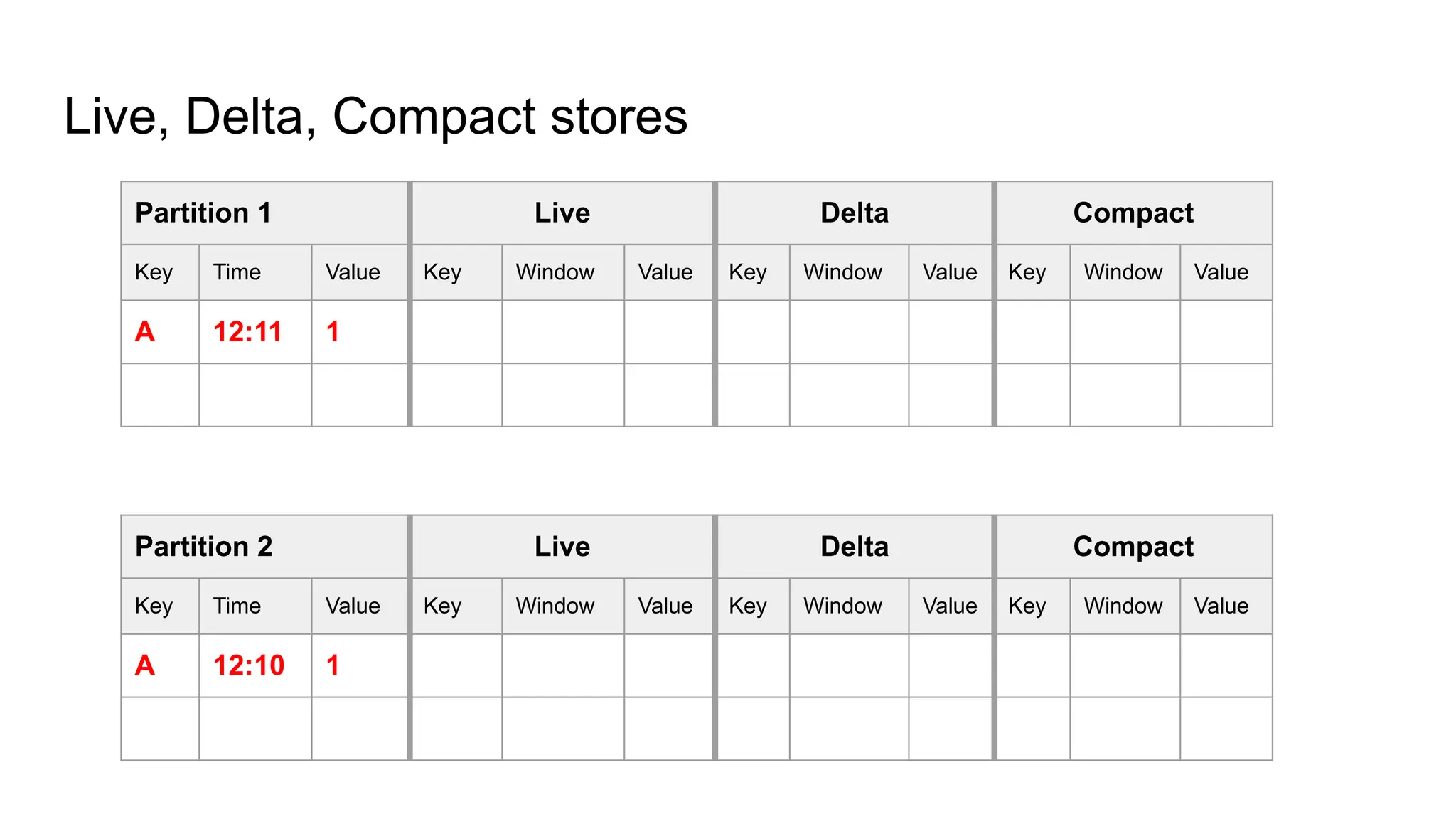

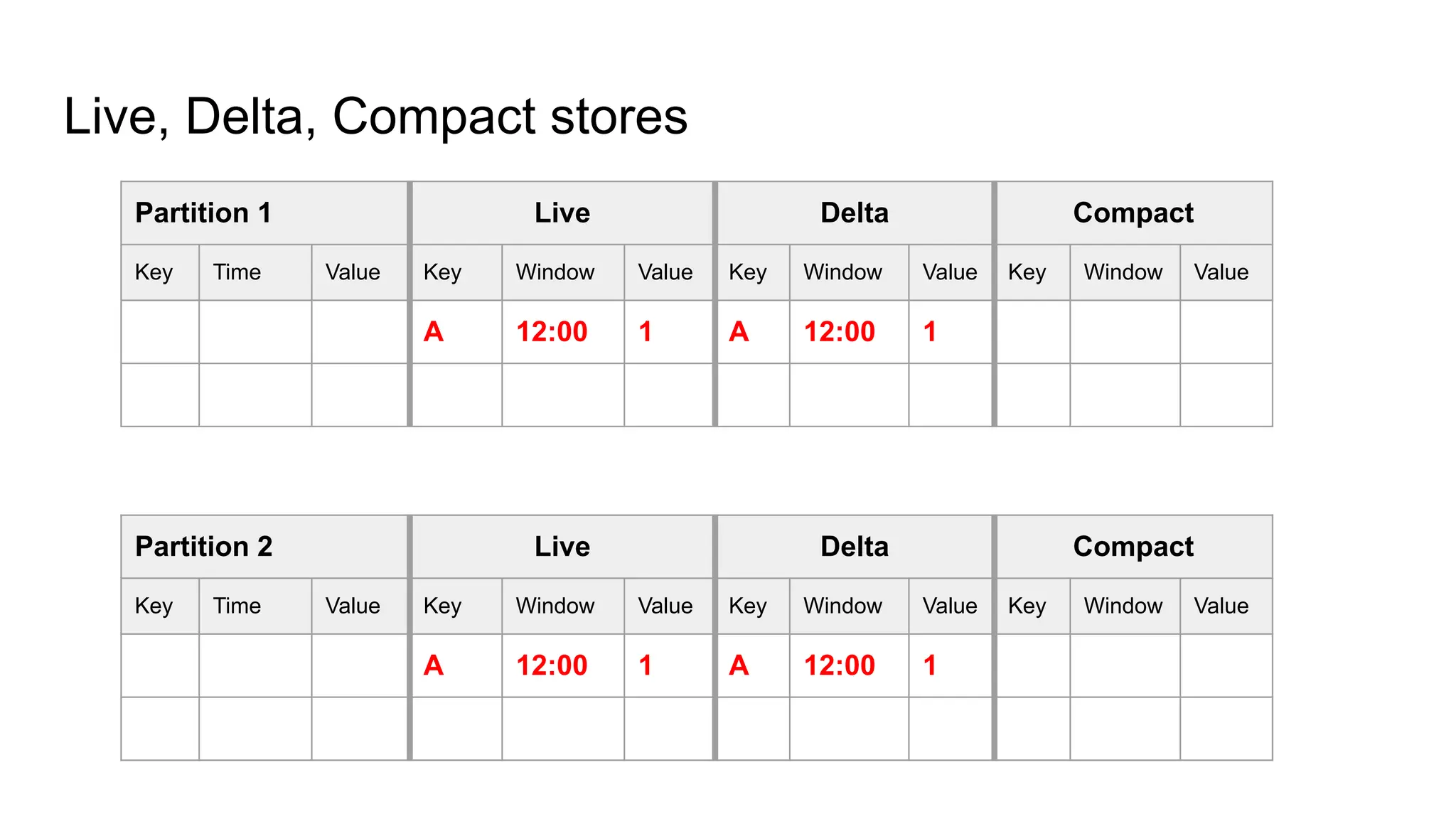

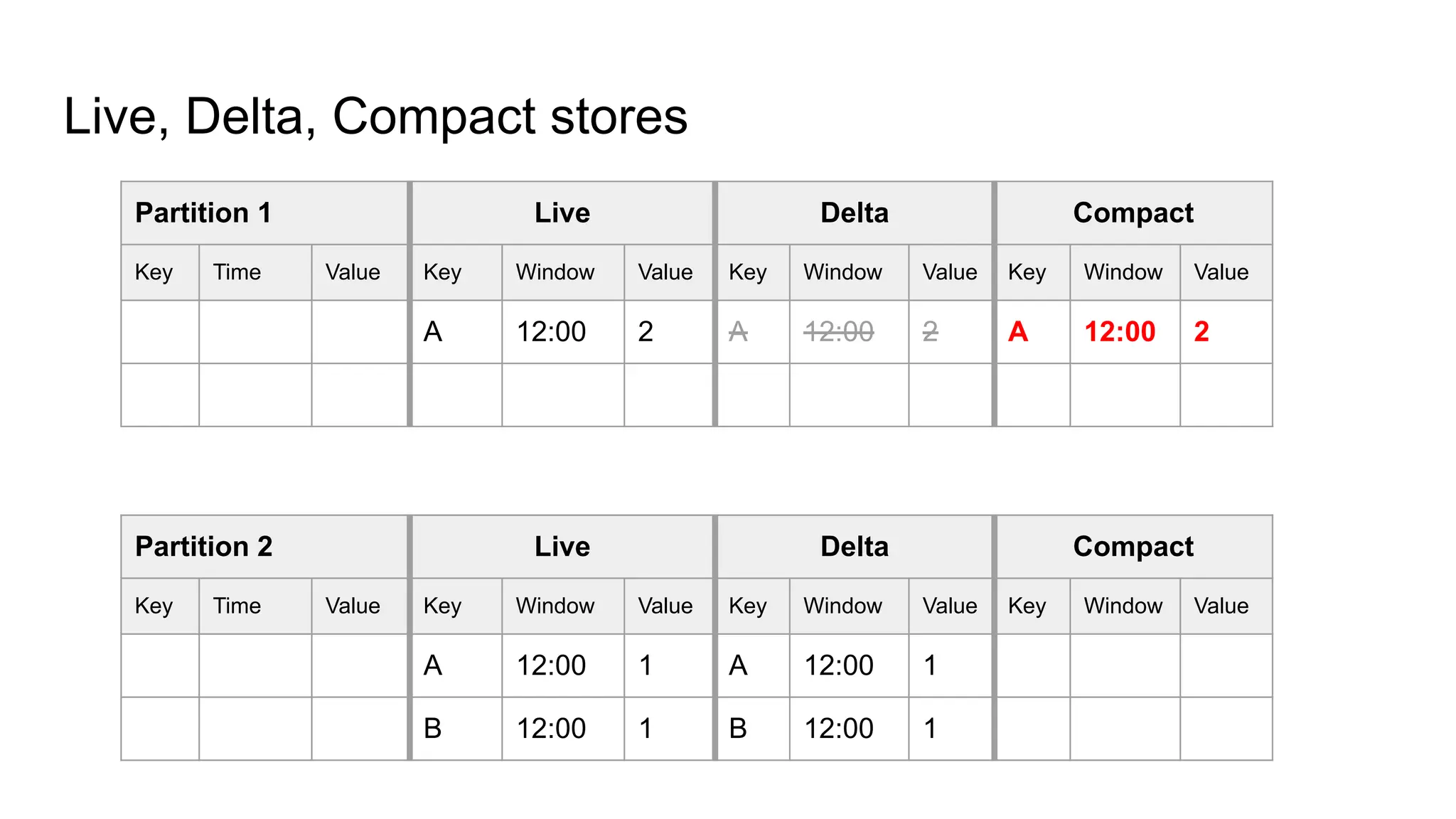

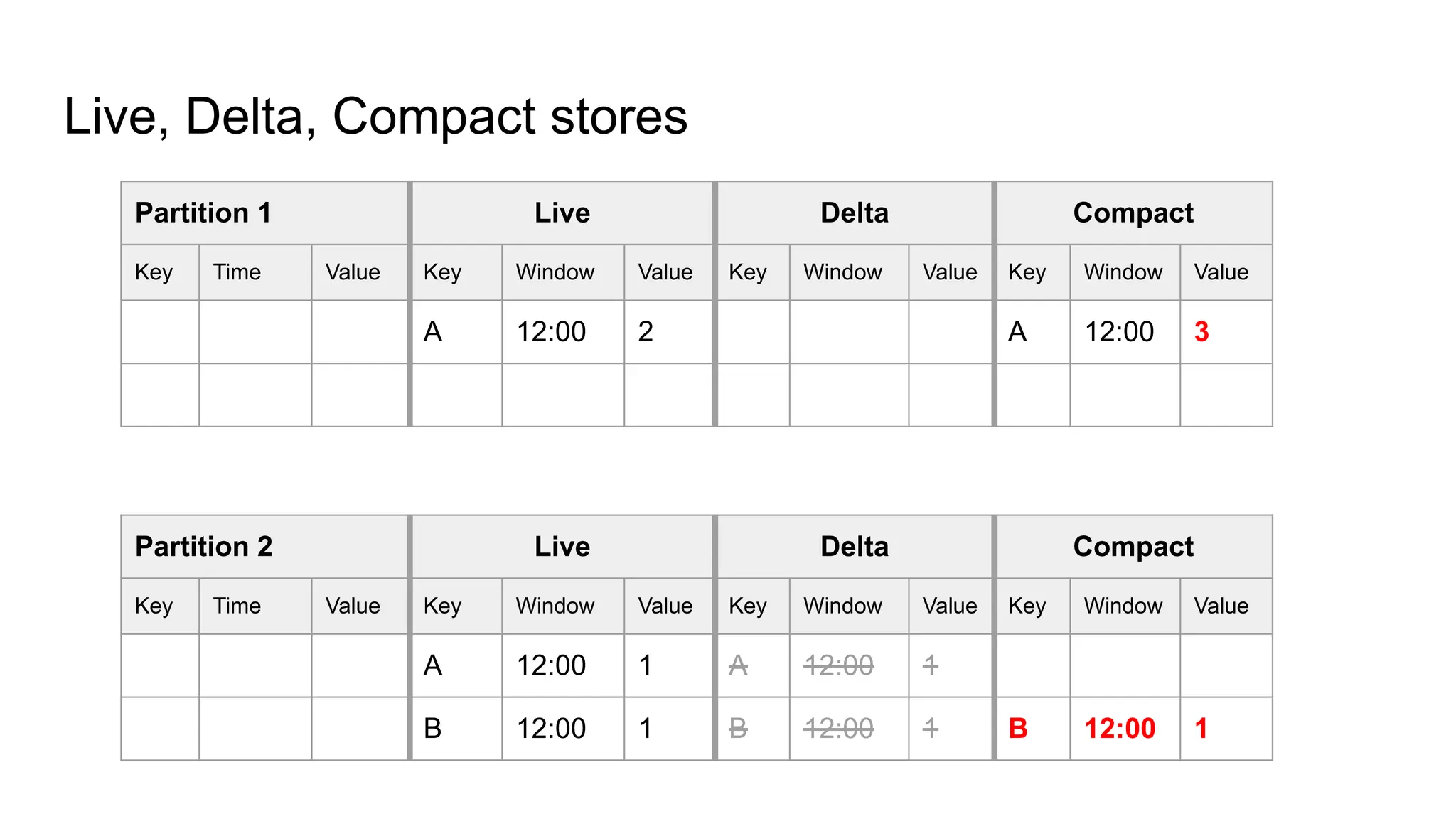

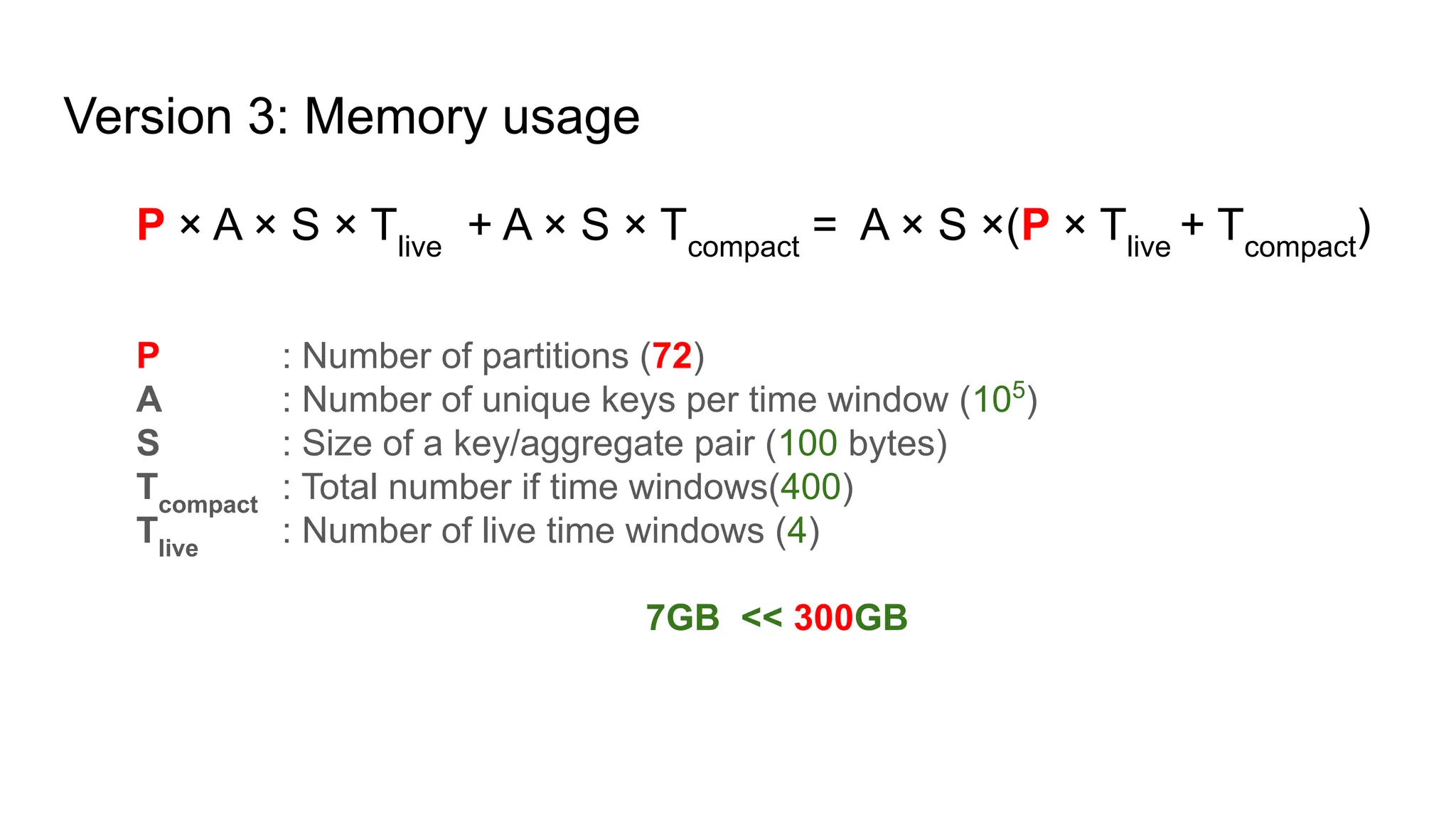

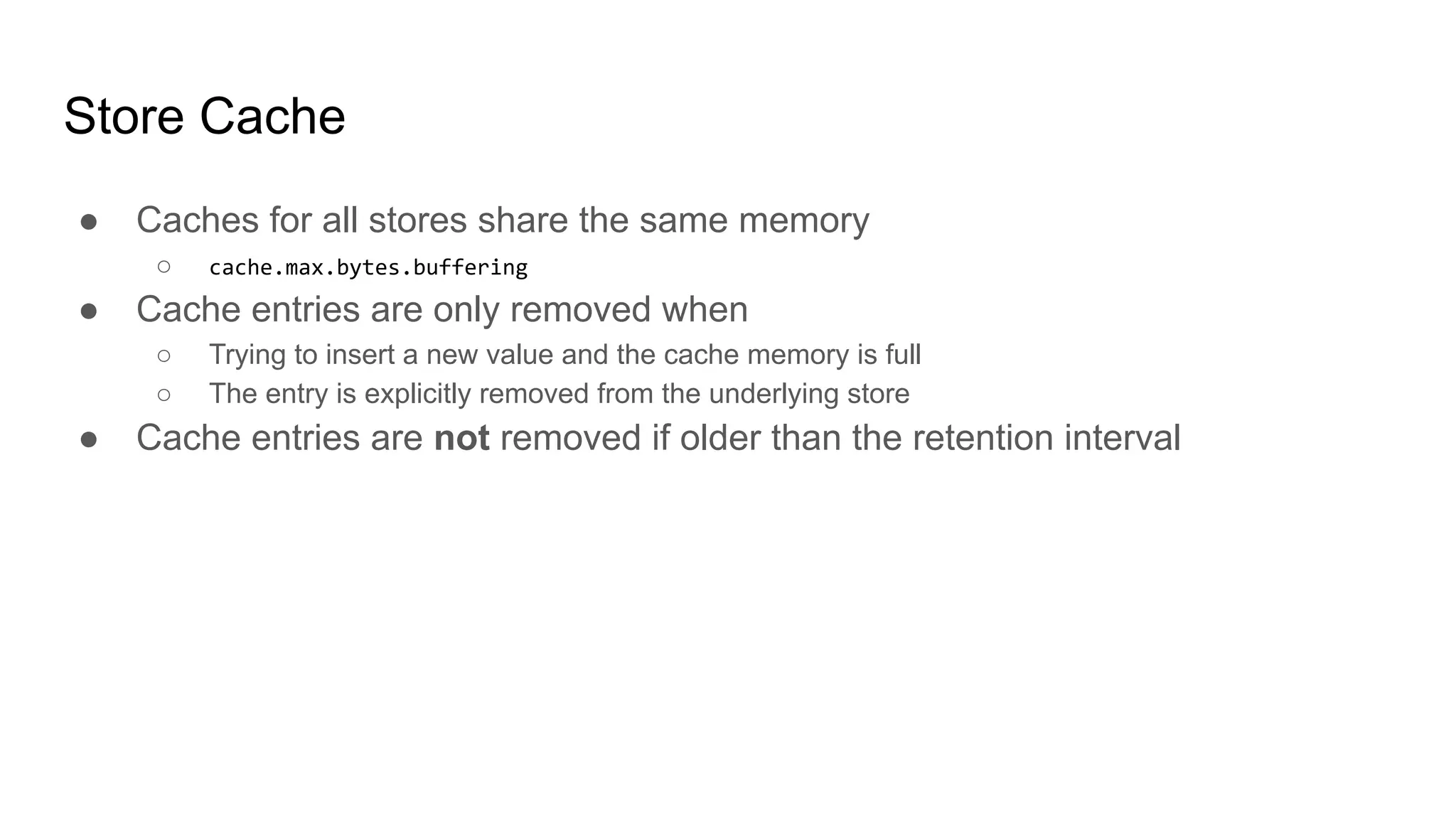

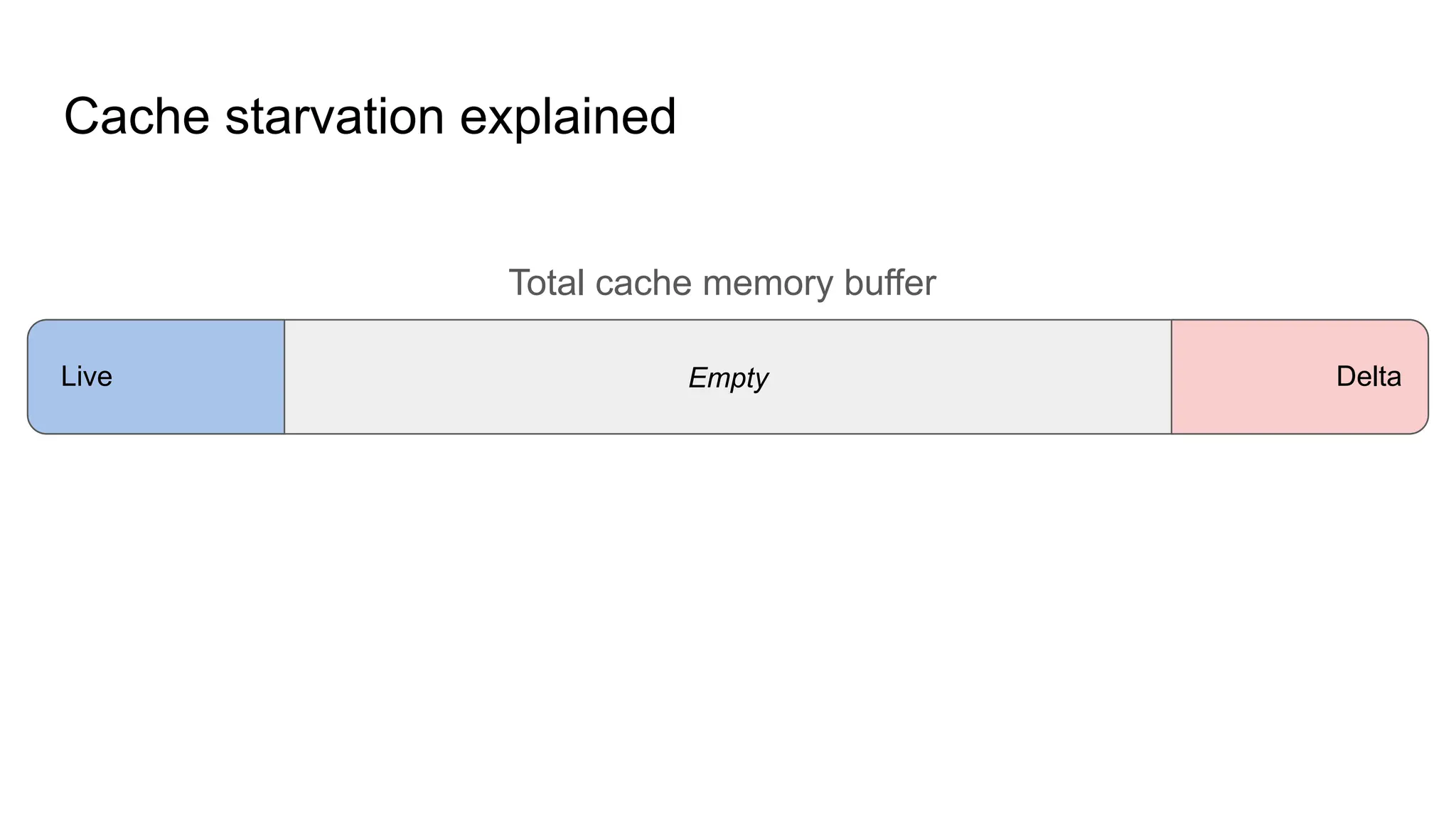

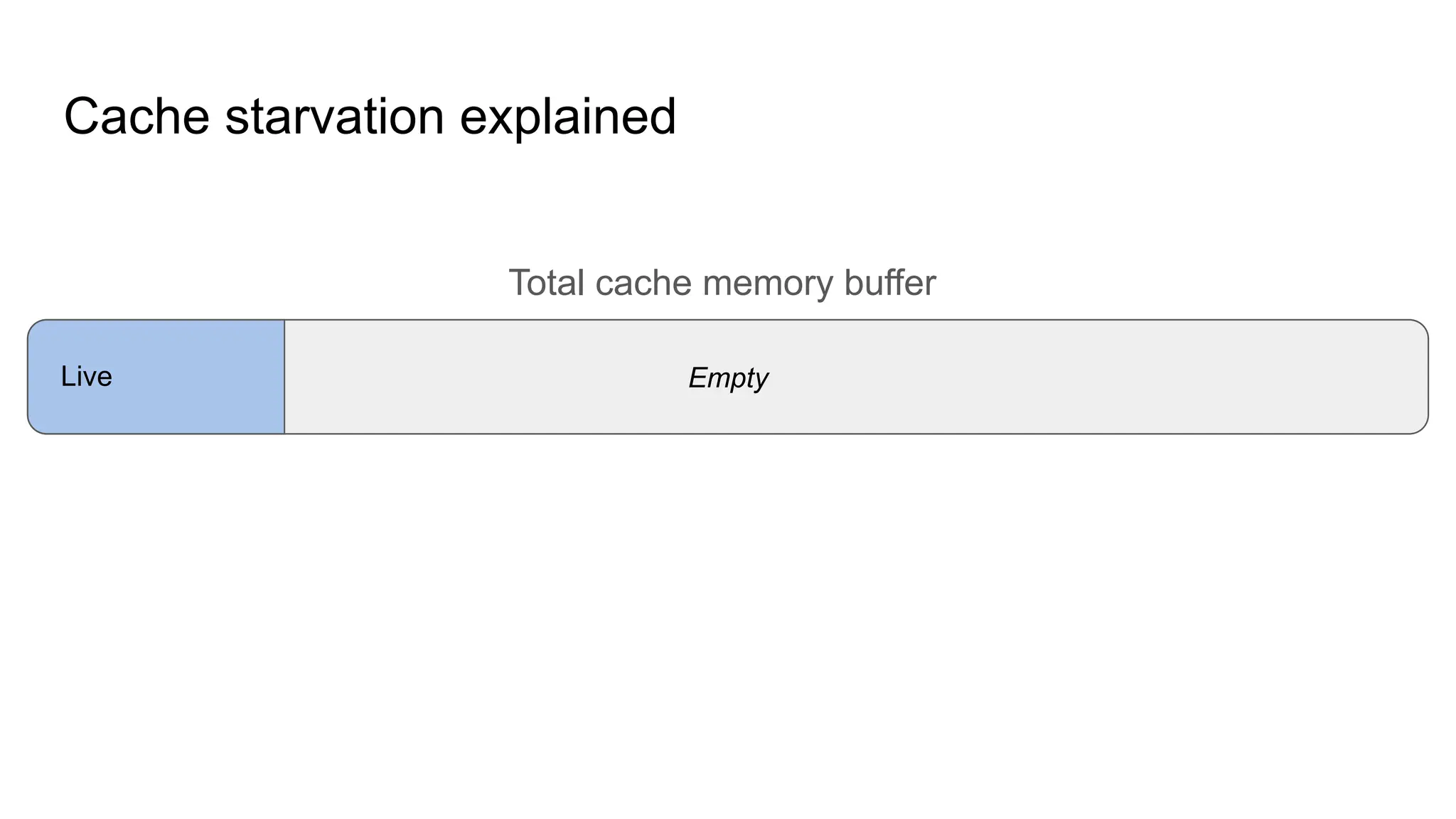

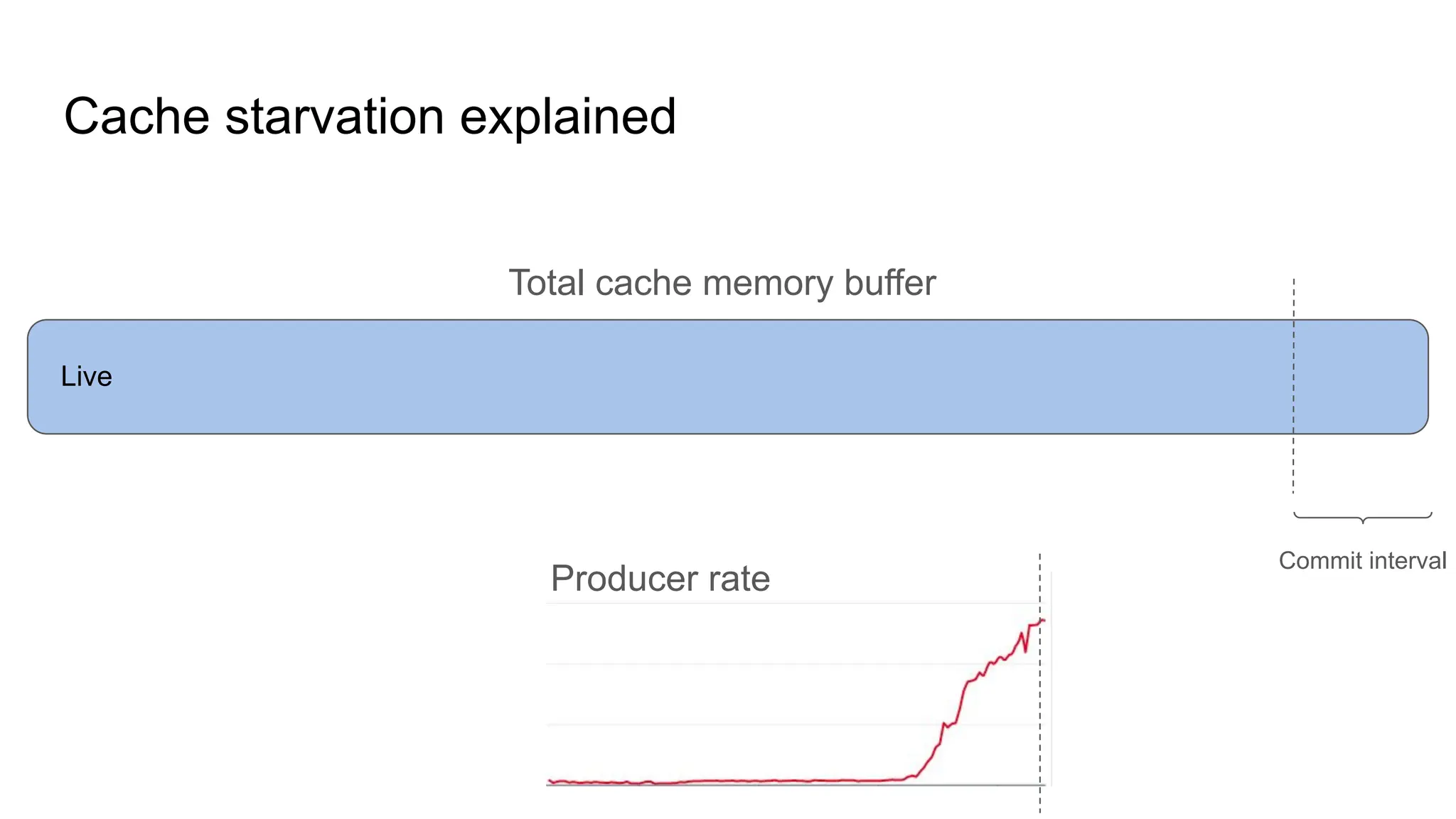

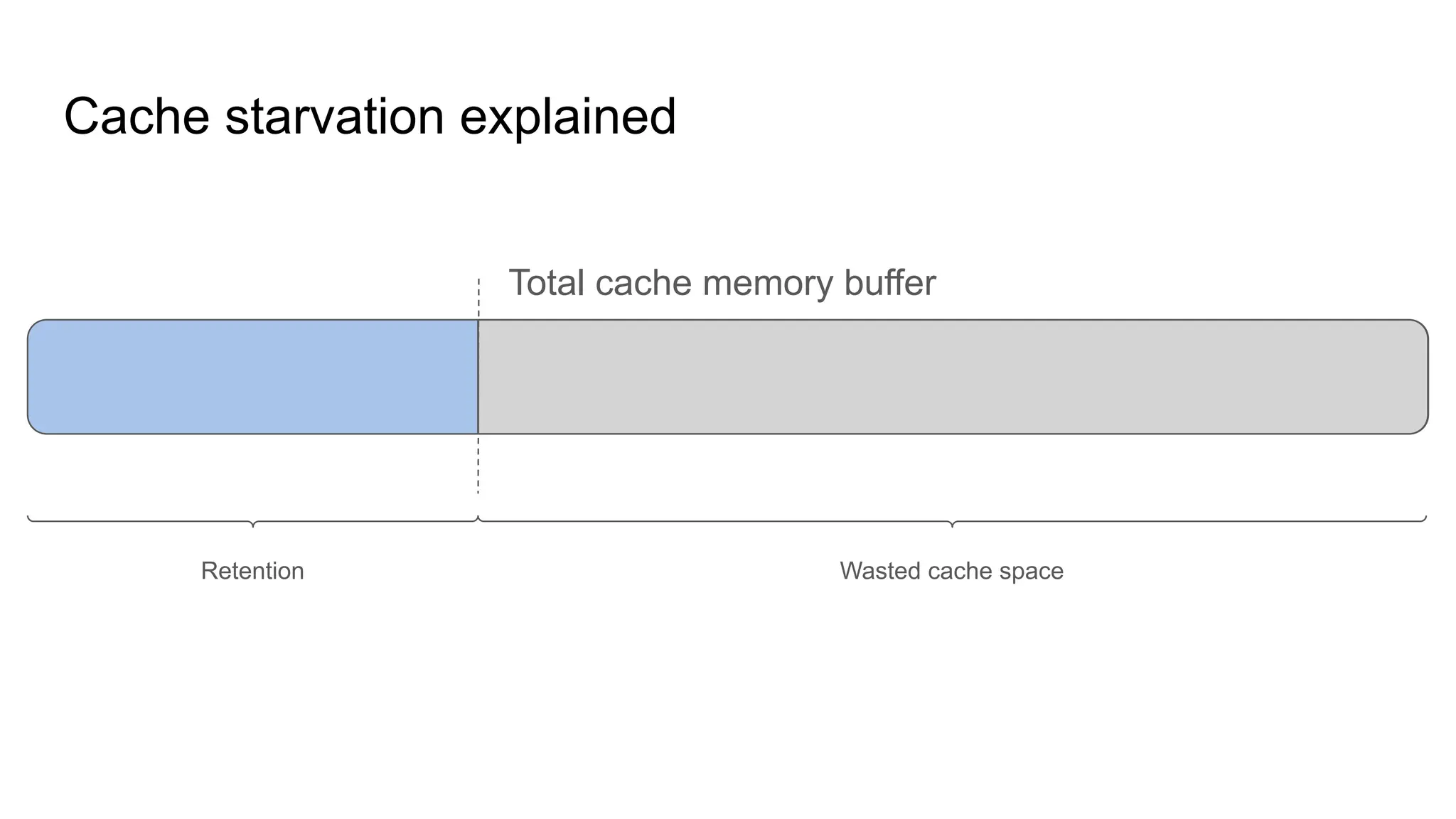

This presentation covers aggregating ad events with Kafka Streams and interactive queries at Invidi, including the delivery metrics to be served and the processing requirements for ad events. It outlines problems of data timeliness, query latency, and throughput, and presents strategies for handling skewed key distribution and memory usage. The authors stress that controlling skew and managing the store cache are central to optimizing ad event processing.

![Version 3: Query stitching. Tnow: wall-clock time; S: stitching time; [Ts, Te]: query time range](https://image.slidesharecdn.com/bs62-20240320-ksl24-invidi-chizhovbergenlid-240402160336-b859fc60/75/Aggregating-Ad-Events-with-Kafka-Streams-and-Interactive-Queries-at-Invidi-38-2048.jpg)

![Version 3: Query stitching. Tnow: wall-clock time; S: stitching time; [Ts, Te]: query time range; Δg: grace period; Δf: flush period; S = ⌊Tnow - Δg - Δf⌋](https://image.slidesharecdn.com/bs62-20240320-ksl24-invidi-chizhovbergenlid-240402160336-b859fc60/75/Aggregating-Ad-Events-with-Kafka-Streams-and-Interactive-Queries-at-Invidi-43-2048.jpg)
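
The stitching-time formula on the slide above, S = ⌊Tnow - Δg - Δf⌋, can be worked through with a small sketch. This is an illustration under stated assumptions, not the authors' implementation: the slide does not say what the floor rounds to, so the sketch assumes alignment to a hypothetical window size `window_ms`; it also assumes half-open query ranges [Ts, Te) in milliseconds. The slide does not state which store serves each side of S, so the two parts are simply labelled `before_s` and `from_s`.

```python
from typing import Optional, Tuple

Range = Tuple[int, int]  # half-open [start, end) in epoch milliseconds (assumption)


def stitching_time(t_now_ms: int, grace_ms: int, flush_ms: int, window_ms: int) -> int:
    """S = floor(Tnow - grace - flush), floored to a window boundary.

    window_ms is a hypothetical parameter; the slide only shows the floor brackets.
    """
    if window_ms <= 0:
        raise ValueError("window_ms must be positive")
    return ((t_now_ms - grace_ms - flush_ms) // window_ms) * window_ms


def split_query(ts_ms: int, te_ms: int, s_ms: int) -> Tuple[Optional[Range], Optional[Range]]:
    """Split the query range [Ts, Te) at the stitching time S.

    Returns (before_s, from_s); either part is None when the query
    range lies entirely on the other side of S.
    """
    if ts_ms >= te_ms:
        raise ValueError("query range must be non-empty")
    before_s = (ts_ms, min(te_ms, s_ms)) if ts_ms < s_ms else None
    from_s = (max(ts_ms, s_ms), te_ms) if te_ms > s_ms else None
    return before_s, from_s


if __name__ == "__main__":
    # Example values chosen only for illustration.
    s = stitching_time(t_now_ms=1_000_000, grace_ms=60_000, flush_ms=30_000, window_ms=60_000)
    print(s)
    print(split_query(800_000, 950_000, s))
```

A query range that straddles S yields two sub-ranges whose results are then combined; a range entirely on one side of S needs only a single lookup.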

![Store caching issue. Producer rate [messages/sec] for the Live store and the Delta store](https://image.slidesharecdn.com/bs62-20240320-ksl24-invidi-chizhovbergenlid-240402160336-b859fc60/75/Aggregating-Ad-Events-with-Kafka-Streams-and-Interactive-Queries-at-Invidi-54-2048.jpg)

![Finally. Producer rate [messages/sec] for the Live store and the Delta store](https://image.slidesharecdn.com/bs62-20240320-ksl24-invidi-chizhovbergenlid-240402160336-b859fc60/75/Aggregating-Ad-Events-with-Kafka-Streams-and-Interactive-Queries-at-Invidi-65-2048.jpg)