Reporting in Microservices. How to Optimize Performance?

Business intelligence solutions use microservice systems for better scalability and flexibility. Optimization of their performance is a challenge for a development team. So, I've decided to describe the improvement of microservice architecture with the help of a reporting module system. The article includes its technical scheme, estimates, pros and cons of suitable technologies. It will be useful both for tech professionals and business owners.

Microservice architecture: Pros and Cons

Since 2010, the popularity of microservice design grows with the rise of DevOps and Agile development. Nowadays, Airbnb, Netflix, Uber, LinkedIn and other big companies benefit from microservices.

A monolith system has a single processor for all the implemented logic. Unlike it, microservice architecture consists of several independent processors. Each of them usually includes the common parts of an enterprise application:

- user interface

- database

- server

Any change in the system leads to to new version of a new version deployment of the server part of the system. Let's consider the concept in detail.

What does microservices architecture really mean?

Microservice design is a set of services, but this definition is vague. I can single out 4 features that a microserver usually has:

- meeting a specific business need

- automatic deployment

- usage of endpoints

- the decentralized control of languages and data

In the image below, you can see microservice design compared to a monolith app.

What is scalability in microservices?

One of the main benefits of the microservice style development is its scalability. You can scale several services without changing the whole system, save resources, and keep the app less complex. Netflix is a prime example of this benefit. The company had to cope with the growing subscribers' database. The microservice design was an excellent solution for scaling it.

Microservice design speeds up app development and accelerates the product launch schedule. Each part can be rolled out separately, making the deployment of microservices quicker and easier.

However, each microservice needs a separate database. Otherwise, you can't use all the benefits of the modularization pattern. But the variety of databases leads to challenges in the reporting process. Keep reading to find out more about solving this problem.

What are the other advantages of microservices?

What are the disadvantages of using microservices?

Despite all these benefits, microservice architecture has drawbacks, including the necessity of operating many systems and completing various tasks in the distributed environment. I believe the main pitfalls of using microservices are:

BI project details: the issue of custom reports

A while back, the Freshcode team worked on a legacy EdTech project. The application consisted of over 10,000 files developed in Coldfusion. It was a 7-year old US-built app running on an MS SQL database. The system was overly complex and included many microservices. Its main parts were:

- sophisticated financial and billing system

- multi-organization structure for large group entities

- workflow management tool for business processes

- integrated bulk email, SMS, and live chat

- online system for surveys, quizzes, examination

- flexible assessment and learning management system

Freshcode worked on the project on the stage of migrating to a new interface. The product was preparing for the global launch. The microservice system was supposed to process great amounts of data. As for the app target audience, it was developed for

- large education networks that manage hundreds of campuses

- governments that have up to 200k schools, colleges and universities

Meanwhile, the EdTech app design was convenient both for large-scale education networks and small schools of 100 students.

The Freshcode development team faced the problem of managing and improving the performance of the complex microservice architecture. Our client wanted to build both SaaS and self-hosted systems, and we chose the technical solutions while keeping this fact in mind.

How to improve microservices performance?

Report generation relied on engaging different services and caused performance issues. That's why the Freshcode team decided to optimize the app's architecture by creating a separate reporting microservice. It received data from all the databases, saved it, and transformed it into custom reports.

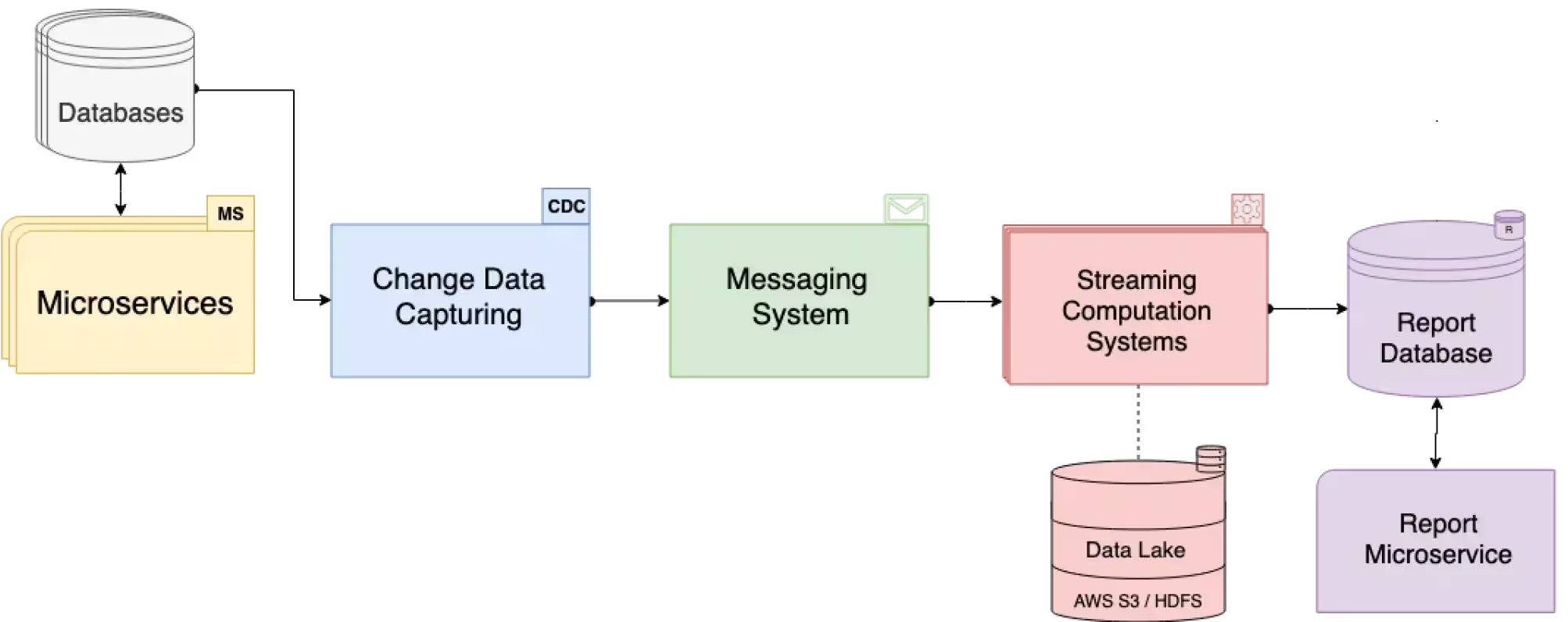

In the image below, you can see the scheme of reporting microservices system and the technologies used for its implementation.

Yellow color marks all microservices in the system, each with an individual database. The reporting module tracks all changes via a messaging system. Then, it stores the new data in a separate report database.4

Reporting module implementation in 6 steps

Let's look at the six chief components of the reporting system, the technologies that can be used, and the best solutions.

Step №1: Change Data Capturing (CDC)

CDC tracks every single change (insert, update, delete) and applies logic to them. There were three technologies suitable for the first step of the microservice reporting system's implementation.

Oracle was the central database of our microservice reporting system. Considering this fact, the Freshcode team chose StreamSets Data Collector that offered Oracle CDC support out of the box.

Step №2: Messaging System

It allows sending messages between computer systems, as well as setting publishing standards for them.

Although Apache Kafka required a bit more effort to deploy and setup, we used it as a cost-efficient on-premise solution.

Step №3: Streaming Computation Systems

The high-performance computer system analyzes multiple data streams from many sources. It helps to prepare data before ingestion, so it is possible to denormalize/join it and add any info if needed.

AWS provides a great set of tools for ETL and data procession. It's a good starting point, but there is no way to deploy it on custom servers. That's why it doesn't fit on-premise solutions. Apache Flink is the most feature-rich and high-performance solution. It allows storing large application state (multi-terabyte). But it requires a larger team for development.

Step №4: Data Lake

The central repository of integrated data from one or more disparate sources, it stores current and historical data in one place. We can use data lakes for creating analytical reports, machine learning solutions, and more.

We decided to start with AWS S3 as it had an open-source implementation, so we could integrate it with the on-premise microservice reporting system.

Step №5: Report Databases

All of these databases are amazing, but our client's goal was to create reports based on data from all microservices. The development team suggested AWS Aurora as the best choice for this task as it simplified the workflow a lot.

Step №6: Report Microservice

The report microservice was responsible for storing information about data objects and relations between them. It was also responsible for managing security and generating reports as they were based on the chosen data objects.

SaaS and self-hosted technological stacks

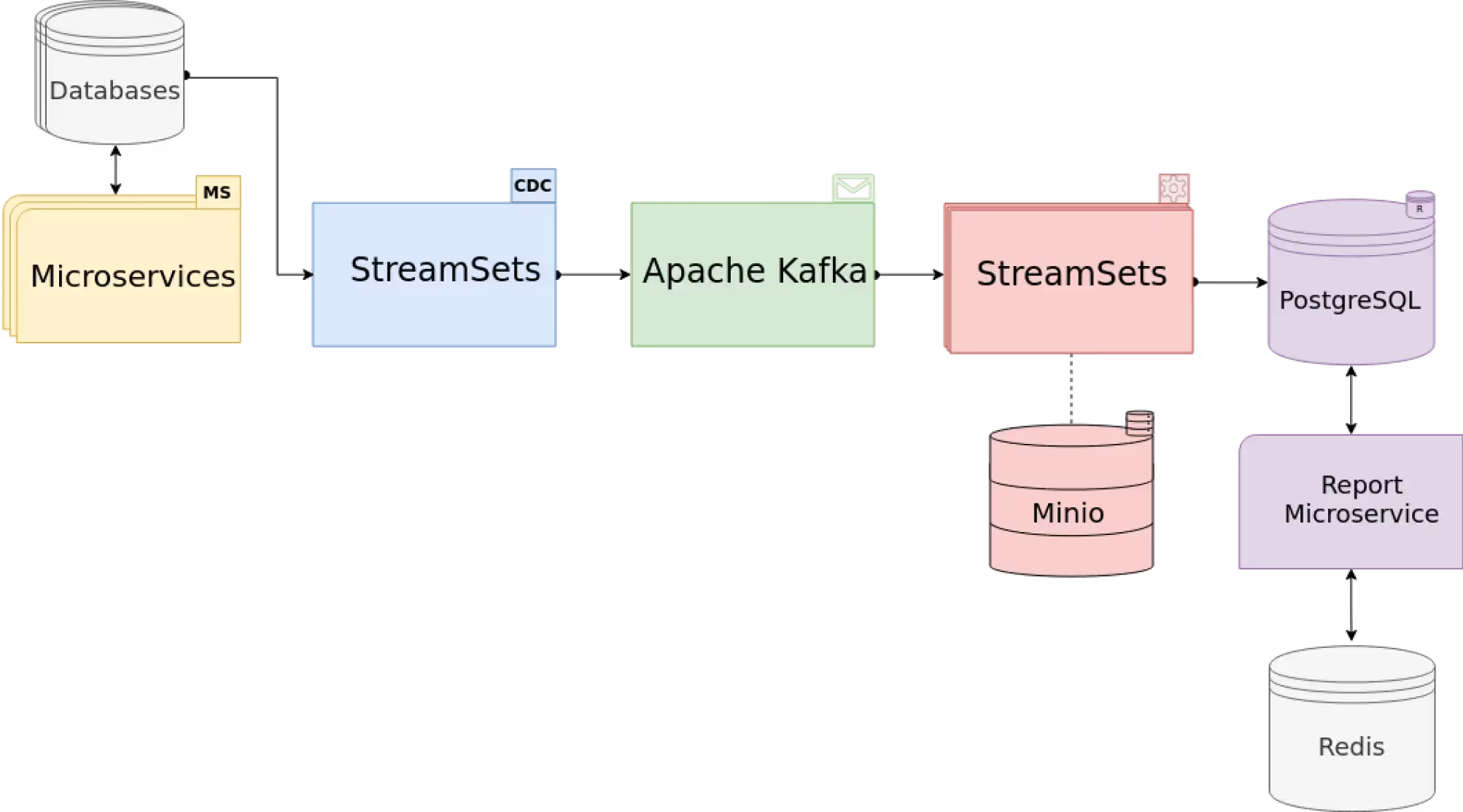

We prepared two technological stack options for the microservice reporting system. For the SaaS solution on AWS, we used:

- StreamSets for CDC

- Apache Kafka as a messaging system

- AWS S3 DataLake

- AWS Aurora as a report database

- AWS ElasticCache as an in-memory data store

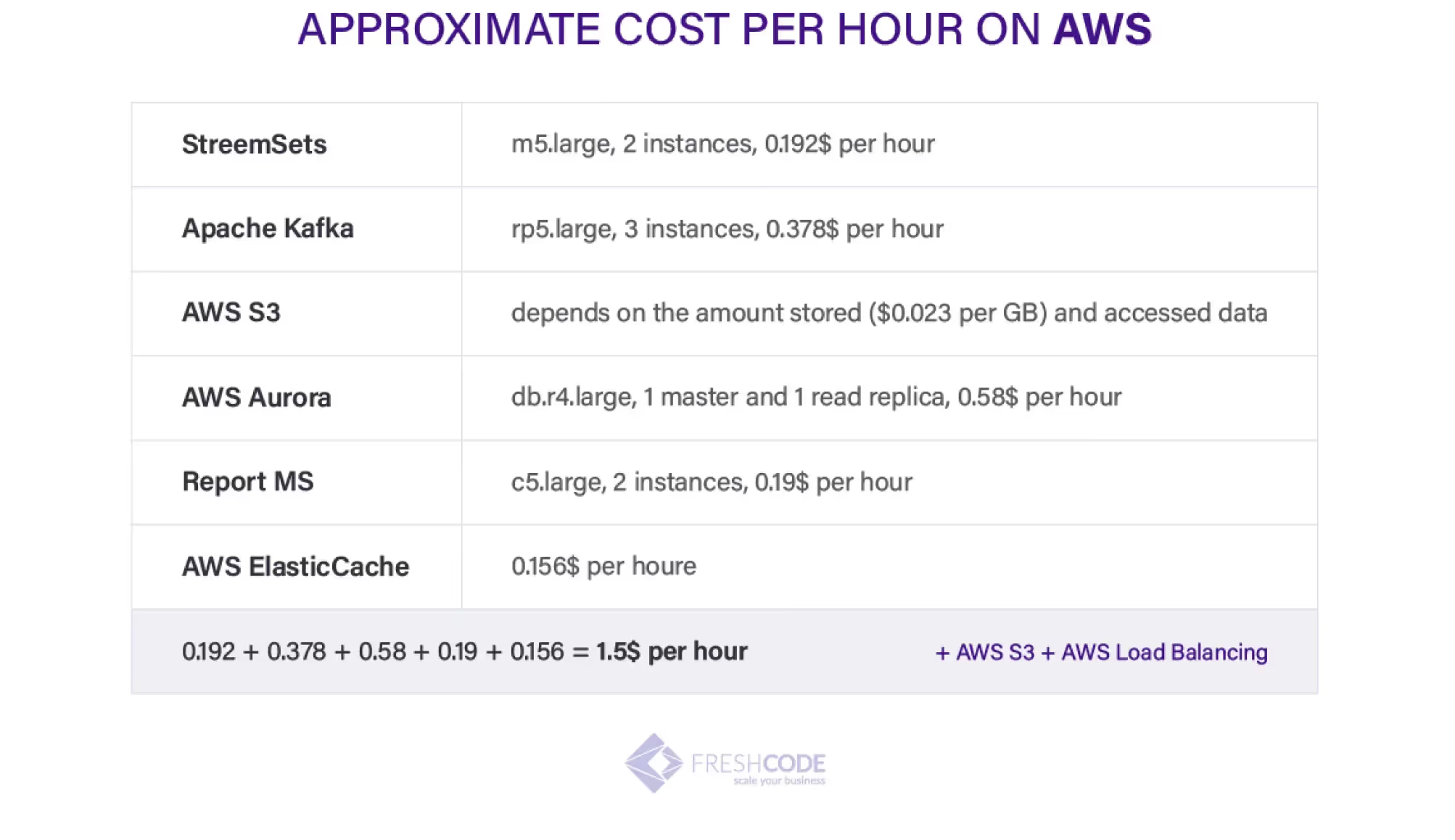

The reporting microservice was written in NodeJS. You can see the rough estimates for the SaaS solution in the table below.

This infrastructure was the most appropriate for the client's requirements. Its main advantage was the easy way to replace AWS services with self-hosted solutions. It allowed us to avoid code/logic duplication for different deployment schemas.

For the on-premise solution, we used Minio, PostgreSQL, and Redis accordingly. Their APIs were fully compatible, so we had no significant problems in the microservice reporting system.

The bottom line: custom reporting in microservices

Our client received the improved microservice reporting system and achieved these goals:

- to update the app's architecture and design

- to improve the product by adding new features

- to optimize performance, enhance flexibility and scalability

If you are interested in solving the same problem or face other technical challenges, contact our team. We provide free expert advice for startups, small business owners, and enterprises. Check out the Freshcode portfolio to learn about the other exciting projects.

with Freshcode