Log user feedback

Logging user feedback and scoring traces is a crucial aspect of evaluating and improving your agent. By systematically recording qualitative or quantitative feedback on specific interactions or entire conversation flows, you can:

- Track performance over time

- Identify areas for improvement

- Compare different model versions or prompts

- Gather data for fine-tuning or retraining

- Provide stakeholders with concrete metrics on system effectiveness

Logging user feedback using the SDK

You can use the SDKs to log user feedback and score traces:

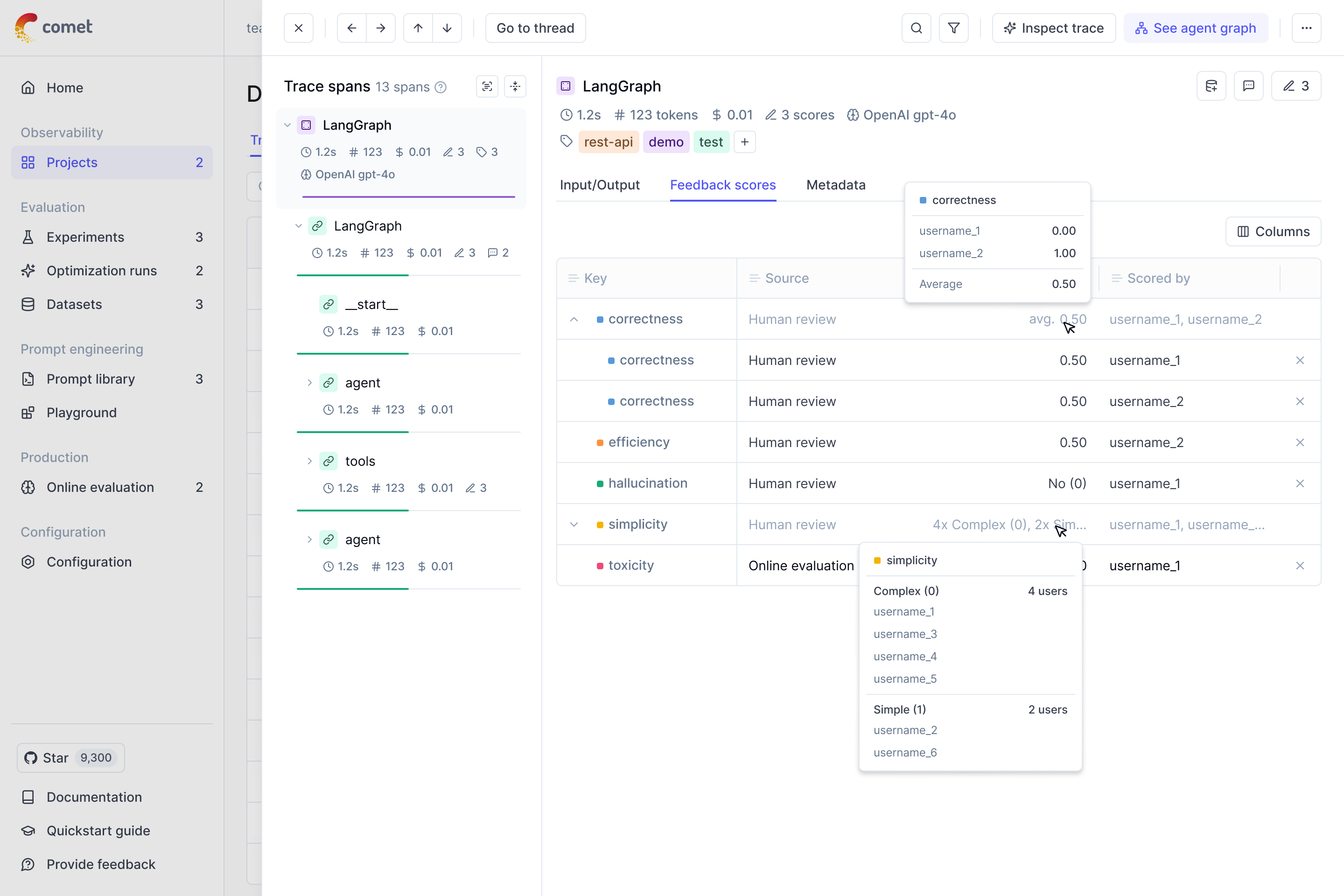
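Here is a minimal Python sketch of what the SDK call might look like. It builds feedback score entries and passes them to the Opik client. It assumes that the `opik` package exposes `opik.Opik().log_traces_feedback_scores(scores=...)` and that this method accepts dicts with `id`, `name`, `value` and an optional `reason`. Check the SDK reference for your version before relying on this. The helpers `make_feedback_score` and `log_feedback`, the metric name `user_rating` and the trace ID are hypothetical.

```python
from typing import Optional, TypedDict


class FeedbackScore(TypedDict, total=False):
    """Assumed shape of one feedback score entry (verify against the SDK reference)."""
    id: str       # ID of the trace being scored
    name: str     # name of the feedback metric, e.g. "user_rating" (hypothetical)
    value: float  # numeric score
    reason: str   # optional free-text justification for the score


def make_feedback_score(trace_id: str, name: str, value: float,
                        reason: Optional[str] = None) -> FeedbackScore:
    """Build a single feedback score payload, rejecting empty IDs and names."""
    if not trace_id:
        raise ValueError("trace_id must be non-empty")
    if not name:
        raise ValueError("name must be non-empty")
    score: FeedbackScore = {"id": trace_id, "name": name, "value": float(value)}
    if reason:
        score["reason"] = reason  # only include a reason when one was given
    return score


def log_feedback(scores: list) -> None:
    """Send scores to Opik. Assumes the `opik` package is installed and configured."""
    import opik  # imported lazily so the payload helper works without the SDK

    client = opik.Opik()
    client.log_traces_feedback_scores(scores=scores)  # assumed SDK method


if __name__ == "__main__":
    # Hypothetical trace ID; in practice use the ID of a trace you logged.
    scores = [make_feedback_score("trace-123", "user_rating", 1, "User clicked thumbs up")]
    print(scores)
```

Keeping the payload construction separate from the network call means the validation can be tested without an Opik connection.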

Annotating traces through the UI

To annotate a trace through the UI, open it from the Traces page and click the Annotate button. This opens a sidebar where you can add annotations to the trace.

You can annotate both traces and spans through the UI. Make sure you have selected the correct span in the sidebar.

Once a feedback score has been provided, you can also add a reason explaining why that score was given. This adds useful context to the score.

If multiple team members annotate the same trace, each member's annotations are shown in the Feedback scores section of the UI. The average score is displayed at both the trace and span level.
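The following small Python sketch makes the averaging concrete. It groups scores from several annotators by target (a trace or a span) and by score name, then takes the mean of each group. It only illustrates the arithmetic and is not how Opik computes these values internally. The `Annotation` record and the `average_scores` helper are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import NamedTuple, Optional


class Annotation(NamedTuple):
    annotator: str          # team member who left the score
    trace_id: str           # trace the score belongs to
    span_id: Optional[str]  # None when the score is on the trace itself
    name: str               # feedback score name, e.g. "correctness"
    value: float            # numeric score


def average_scores(annotations):
    """Average each feedback score across annotators.

    Keys are (target, name), where target is ("trace", trace_id) for
    trace-level scores and ("span", span_id) for span-level scores.
    """
    buckets = defaultdict(list)
    for a in annotations:
        target = ("span", a.span_id) if a.span_id else ("trace", a.trace_id)
        buckets[(target, a.name)].append(a.value)
    return {key: mean(values) for key, values in buckets.items()}


if __name__ == "__main__":
    annotations = [
        Annotation("alice", "t1", None, "correctness", 1.0),
        Annotation("bob", "t1", None, "correctness", 0.0),
        Annotation("alice", "t1", "s1", "relevance", 4.0),
        Annotation("bob", "t1", "s1", "relevance", 5.0),
    ]
    print(average_scores(annotations))
```

Here two annotators disagree on the trace's `correctness` (1.0 and 0.0) and on the span's `relevance` (4.0 and 5.0). Each disagreement collapses to one mean per target.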

If you want a more dedicated annotation interface, you can use the Annotation Queues feature.

Online evaluation

You don’t need to manually annotate each trace to measure the performance of your agents! By using Opik’s online evaluation feature, you can define LLM as a Judge metrics that will automatically score all, or a subset, of your production traces.
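Scoring only a subset of traces amounts to sampling them before the judge runs. The Python sketch below shows one way to do this: a deterministic, hash-based sampler combined with a pluggable judge function. It is a conceptual illustration and not Opik's implementation. The names `in_sample`, `score_traces` and `toy_judge` are hypothetical, as is the trace dict shape. `toy_judge` stands in for a real LLM call.

```python
import hashlib
from typing import Callable, Iterable


def in_sample(trace_id: str, sampling_rate: float) -> bool:
    """Decide deterministically whether a trace falls in the scored subset.

    Hashing the ID (instead of calling random()) gives the same trace the
    same decision every time it is checked.
    """
    if not 0.0 <= sampling_rate <= 1.0:
        raise ValueError("sampling_rate must be between 0 and 1")
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Keep 53 bits so the division is exact and the bucket is in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < sampling_rate


def score_traces(traces: Iterable[dict], judge: Callable[[str, str], float],
                 sampling_rate: float = 1.0) -> dict:
    """Apply the judge to sampled traces and return {trace_id: score}."""
    scores = {}
    for trace in traces:
        if in_sample(trace["id"], sampling_rate):
            scores[trace["id"]] = judge(trace["input"], trace["output"])
    return scores


def toy_judge(question: str, answer: str) -> float:
    """Placeholder for an LLM judge: returns 1.0 if the answer is non-empty."""
    return 1.0 if answer.strip() else 0.0


if __name__ == "__main__":
    traces = [{"id": f"t{i}", "input": "q", "output": "a" if i % 2 else ""}
              for i in range(10)]
    print(score_traces(traces, toy_judge, sampling_rate=0.5))
```

A sampling rate of 1.0 scores every trace, and 0.0 scores none. Because sampling is hash-based, rerunning with the same rate selects the same traces.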

Next steps

You can go one step further and:

- Create an offline evaluation to evaluate your agent before it is deployed to production

- Score your agent in production to track and catch specific issues

- Use annotation queues to organize your traces for review and labeling by your team of experts

- Check out our LLM as a Judge metrics