Simple Log Service can ship collected data to an Object Storage Service (OSS) bucket for storage and analysis. This topic describes how to create an OSS data shipping job (new version).

Prerequisites

A project and a Logstore have been created. For more information, see Create a project and a Logstore.

Data has been collected. For more information, see Data collection.

A bucket has been created in the same region as the Simple Log Service project. For more information, see Create a bucket in the console.

Supported regions

Simple Log Service ships data to an OSS bucket in the same region as the Simple Log Service project.

Data shipping to OSS is supported only in the following regions: China (Hangzhou), China (Shanghai), China (Qingdao), China (Beijing), China (Zhangjiakou), China (Hohhot), China (Ulanqab), China (Chengdu), China (Shenzhen), China (Heyuan), China (Guangzhou), China (Hong Kong), Singapore, Malaysia (Kuala Lumpur), Indonesia (Jakarta), Philippines (Manila), Thailand (Bangkok), Japan (Tokyo), US (Silicon Valley), and US (Virginia).

Create a data shipping job

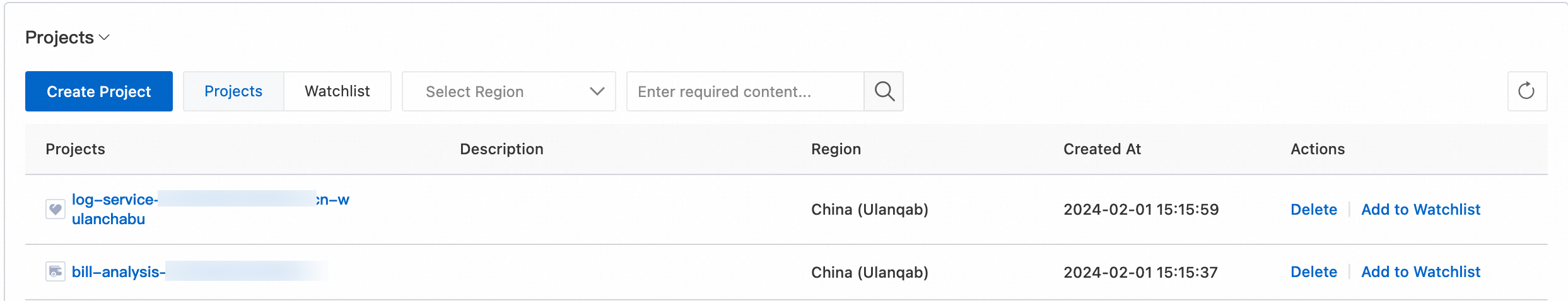

Log on to the Simple Log Service console.

In the Projects section, click the project that you want.

On the tab, click the > icon to the left of the destination Logstore and choose .

Hover the pointer over OSS (Object Storage Service) and click +.

In the OSS Data Shipping panel, configure the following parameters and click OK.

Set Shipping Version to New Version. The following table describes the key parameters.

Important: After you create an OSS data shipping job, the shipping frequency of each shard is determined by the Batch Size and Batch Interval parameters. A shipping task is triggered when either condition is met.

After you create the job, you can check its status and the data shipped to OSS to confirm that the job runs as expected.

Parameter

Description

Job Name

The unique name of the data shipping job.

Display Name

The display name of the data shipping job.

Job Description

The description of the data shipping job.

OSS Bucket

The name of the OSS bucket.

Important: The bucket must already exist, have the Write-Once-Read-Many (WORM) feature disabled, and be in the same region as the Simple Log Service project. For more information about WORM, see Retention Policy (WORM).

You can ship data to buckets of the Standard, Infrequent Access, Archive, Cold Archive, or Deep Cold Archive storage class. After shipping, the storage class of the generated OSS objects is the same as the bucket's storage class by default. For more information, see Storage classes.

Objects in non-Standard storage classes are subject to a minimum storage duration and a minimum billable size. Choose the storage class of the destination bucket based on your needs. For more information, see Storage class comparison.

File Shipping Directory

The directory in the OSS bucket. The directory name cannot start with a forward slash (/) or a backslash (\).

After you create the OSS data shipping job, data from the Logstore is shipped to this directory in the destination OSS bucket.

File Suffix

If you do not set a file suffix, Simple Log Service automatically generates a suffix based on the storage format and compression type. For example: .suffix.

Partition Format

The format for dynamically generating directories in the OSS bucket based on the shipping time. The format cannot start with a forward slash (/). The default value is %Y/%m/%d/%H/%M. For examples, see Partition format. For more information about the format specifiers, see the strptime API.

Write OSS RAM Role

The RAM role that authorizes the OSS data shipping job to write data to the OSS bucket.

Default Role: Authorizes the OSS data shipping job to use the AliyunLogDefaultRole system role to write data to the OSS bucket. This requires the Alibaba Cloud Resource Name (ARN) of AliyunLogDefaultRole. For information about how to obtain the ARN, see Access data using a default role.

Custom Role: Authorizes the OSS data shipping job to use a custom role to write data to the OSS bucket.

You must first grant the custom role the permission to write data to the OSS bucket. Then, in the Write OSS RAM Role field, enter the ARN of your custom role. For information about how to obtain the ARN, see one of the following topics:

If the Logstore and the OSS bucket belong to the same Alibaba Cloud account, see Step 2: Grant a RAM role the permission to write data to an OSS bucket.

If the Logstore and the OSS bucket belong to different Alibaba Cloud accounts, see Step 2: Grant the role-b RAM role under Alibaba Cloud Account B the permission to write data to an OSS bucket.

Read Logstore RAM Role

The RAM role that authorizes the OSS data shipping job to read data from the Logstore.

Default Role: Authorizes the OSS data shipping job to use the AliyunLogDefaultRole system role to read data from the Logstore. This requires the ARN of AliyunLogDefaultRole. For information about how to obtain the ARN, see Access data using a default role.

Custom Role: Authorizes the OSS data shipping job to use a custom role to read data from the Logstore.

You must first grant the custom role the permission to read data from the Logstore. Then, in the Read Logstore RAM Role field, enter the ARN of your custom role. For information about how to obtain the ARN, see one of the following topics:

If the Logstore and the OSS bucket belong to the same Alibaba Cloud account, see Step 1: Grant a RAM role the permission to read data from a Logstore.

If the Logstore and the OSS bucket belong to different Alibaba Cloud accounts, see Step 1: Grant the role-a RAM role under Alibaba Cloud Account A the permission to read data from a Logstore.

Storage Format

The file format in which shipped data is stored in OSS: CSV, JSON, Parquet, or ORC. For more information, see CSV format, JSON format, Parquet format, and ORC format.

Compress Data

The compression method for data stored in OSS.

None (no compression): Data is not compressed.

snappy: Compresses data using the snappy algorithm to reduce storage space in the OSS bucket. For more information, see snappy.

zstd: Compresses data using the zstd algorithm to reduce storage space in the OSS bucket.

gzip: Compresses data using the gzip algorithm to reduce storage space in the OSS bucket.

Delivery Tag

The tag field is a reserved field in Simple Log Service. For more information, see Reserved fields.

Batch Size

The job starts to ship data after the log size in a shard reaches this value. This parameter controls the size of each OSS object (uncompressed). Value range: 5 MB to 256 MB.

Note: Batch size refers to the size of the data after it is read, not the size of the data already written to Simple Log Service. Data is read and shipped only after the condition for the batch interval is met.

Batch Interval

The interval for shipping logs from a shard. The job starts to ship data when the time elapsed since the first log arrived is greater than or equal to this value. Default: 300 seconds. Value range: 300 seconds to 900 seconds.

Shipping Latency

The delay before data is shipped, in seconds. For example, if you set this parameter to 3600, data is shipped with a 1-hour delay: data from 10:00:00 on June 5, 2023, is not written to the specified OSS bucket before 11:00:00 on June 5, 2023. For information about the limits, see Configuration item limits.

Start Time Range

The time range of the data to ship. This range is based on the time when logs are received. The details are as follows:

All: The job ships data starting from the time the first log is received by the Logstore until the job is manually stopped.

From Specific Time: The job ships data starting from the specified time until the job is manually stopped.

Specific Time Range: The job runs within the specified start and end times and stops automatically after the end time.

Note: The time range is based on the __tag__:__receive_time__ field. For more information, see Reserved fields.

Time Zone

The time zone used to format the time.

If you set Time Zone and Partition Format, the system generates directories in the OSS bucket based on your settings.
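The documented limits and trigger rule in the parameter table above can be checked locally before you create a job. The following Python sketch is a hypothetical helper: the names ShippingConfig, validate, should_ship, and earliest_write_time are illustrative and are not part of any Simple Log Service SDK or API. It encodes only what the table states (directory and partition format prefixes, a 5 MB to 256 MB batch size, a 300 to 900 second batch interval, shipping latency in seconds) plus the rule that a task is triggered when either the size or the interval condition is met. It is a simplified model under those assumptions and does not capture the read-side details described in the Batch Size note.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Assumption: MB is treated as 1024 * 1024 bytes in this sketch.
MB = 1024 * 1024


@dataclass
class ShippingConfig:
    """Hypothetical local model of a few OSS data shipping parameters."""
    directory: str                            # File Shipping Directory
    batch_size_mb: int                        # Batch Size (5 to 256 MB)
    partition_format: str = "%Y/%m/%d/%H/%M"  # documented default Partition Format
    batch_interval_s: int = 300               # documented default Batch Interval
    latency_s: int = 0                        # Shipping Latency; 0 (no delay) is an illustrative default


def validate(cfg: ShippingConfig) -> list[str]:
    """Return the violated documented limits; an empty list means all checks pass."""
    errors = []
    if cfg.directory.startswith(("/", "\\")):
        errors.append("File Shipping Directory cannot start with / or \\")
    if cfg.partition_format.startswith("/"):
        errors.append("Partition Format cannot start with /")
    if not 5 <= cfg.batch_size_mb <= 256:
        errors.append("Batch Size must be between 5 MB and 256 MB")
    if not 300 <= cfg.batch_interval_s <= 900:
        errors.append("Batch Interval must be between 300 and 900 seconds")
    return errors


def should_ship(cfg: ShippingConfig, buffered_bytes: int,
                first_log_at: datetime, now: datetime) -> bool:
    """Simplified per-shard trigger: ship when EITHER condition is met."""
    size_reached = buffered_bytes >= cfg.batch_size_mb * MB
    interval_reached = (now - first_log_at).total_seconds() >= cfg.batch_interval_s
    return size_reached or interval_reached


def earliest_write_time(cfg: ShippingConfig, data_time: datetime) -> datetime:
    """With Shipping Latency set, data is not written to OSS before this time."""
    return data_time + timedelta(seconds=cfg.latency_s)


if __name__ == "__main__":
    cfg = ShippingConfig(directory="test-table", batch_size_mb=256, latency_s=3600)
    print(validate(cfg))  # []
    t0 = datetime(2023, 6, 5, 10, 0, 0)
    print(should_ship(cfg, 10 * MB, t0, t0 + timedelta(seconds=299)))  # False
    print(should_ship(cfg, 10 * MB, t0, t0 + timedelta(seconds=300)))  # True
    print(earliest_write_time(cfg, t0))  # 2023-06-05 11:00:00
```

The last call mirrors the Shipping Latency example: with a latency of 3600 seconds, data from 10:00:00 on June 5, 2023, is not written before 11:00:00.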

View OSS data

After data is successfully shipped to OSS, you can access the data using the OSS console, an API, an SDK, or other methods. For more information, see Manage files.

The OSS object path uses the following format:

oss://OSS-BUCKET/OSS-PREFIX/PARTITION-FORMAT_RANDOM-ID

In this format, OSS-BUCKET is the OSS bucket name, OSS-PREFIX is the directory prefix, PARTITION-FORMAT is the partition format calculated from the shipping time using the strptime API, and RANDOM-ID is the unique ID of a shipping task.

OSS data shipping is performed in batches. Each batch is written to a single file. The file path is determined by the earliest receive_time (the time the data arrived at Simple Log Service) within that batch. Note the following two situations:

When shipping real-time data (for example, every 5 minutes), a shipping task at 00:00:00 on January 22, 2022, ships data that was written to a shard after 23:55:00 on January 21, 2022. Therefore, to analyze all data for January 22, 2022, you must check all objects in the 2022/01/22 directory in the OSS bucket. You must also check if the last few objects in the 2022/01/21 directory contain data from January 22, 2022.

When shipping historical data, if the Logstore contains a small amount of data, a single shipping task might pull data that spans multiple days. As a result, a file in the 2022/01/22 directory might contain all the data for January 23, 2022, and the 2022/01/23 directory might be empty.
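Both situations follow from one rule: the path of a batch is derived from the earliest receive_time in that batch. The following Python sketch models that rule with hypothetical data. It assumes a batch can be represented as a list of receive timestamps (naive datetimes already in the job's time zone) and uses the default partition format %Y/%m/%d/%H/%M. The helper names batch_partition and day_directories_to_scan are illustrative only; they are not part of Simple Log Service or OSS.

```python
from datetime import date, datetime, timedelta

DEFAULT_PARTITION_FORMAT = "%Y/%m/%d/%H/%M"


def batch_partition(receive_times, partition_format=DEFAULT_PARTITION_FORMAT):
    """Partition directory of a batch: computed from its EARLIEST receive_time."""
    return min(receive_times).strftime(partition_format)


def day_directories_to_scan(day: date, day_format="%Y/%m/%d"):
    """Day directories to check when analyzing one day of data:
    the previous day (its last objects may hold early records) and the day itself."""
    previous = day - timedelta(days=1)
    return [previous.strftime(day_format), day.strftime(day_format)]


if __name__ == "__main__":
    # Hypothetical real-time batch shipped around midnight.
    batch = [datetime(2022, 1, 21, 23, 55, 0), datetime(2022, 1, 22, 0, 0, 0)]
    print(batch_partition(batch))  # 2022/01/21/23/55
    print(day_directories_to_scan(date(2022, 1, 22)))  # ['2022/01/21', '2022/01/22']
```

The record received at 00:00:00 on January 22 lands under 2022/01/21 because the batch started on January 21, which is why the last objects of the previous day's directory must also be checked.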

Partition format

Each shipping task corresponds to an OSS object path in the format oss://OSS-BUCKET/OSS-PREFIX/PARTITION-FORMAT_RANDOM-ID. The following table provides examples of partition formats for a shipping task created at 19:50:43 on January 20, 2022.

| OSS Bucket | OSS Prefix | Partition Format | File Suffix | OSS File Path |
| --- | --- | --- | --- | --- |
| test-bucket | test-table | %Y/%m/%d/%H/%M | .suffix | oss://test-bucket/test-table/2022/01/20/19/50_1484913043351525351_2850008.suffix |
| test-bucket | log_ship_oss_example | year=%Y/mon=%m/day=%d/log_%H%M | .suffix | oss://test-bucket/log_ship_oss_example/year=2022/mon=01/day=20/log_1950_1484913043351525351_2850008.suffix |
| test-bucket | log_ship_oss_example | ds=%Y%m%d/%H | .suffix | oss://test-bucket/log_ship_oss_example/ds=20220120/19_1484913043351525351_2850008.suffix |
| test-bucket | log_ship_oss_example | %Y%m%d/ | .suffix | oss://test-bucket/log_ship_oss_example/20220120/_1484913043351525351_2850008.suffix (Note: Platforms such as Hive cannot parse OSS content in this format. Do not use this format.) |
| test-bucket | log_ship_oss_example | %Y%m%d%H | .suffix | oss://test-bucket/log_ship_oss_example/2022012019_1484913043351525351_2850008.suffix |
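The paths in the table can be reproduced by formatting the task creation time with each partition format and then appending an underscore, the random ID, and the file suffix. The following Python sketch does this with datetime.strftime, whose %Y, %m, %d, %H, and %M directives produce the values shown in the table. The function name oss_object_path is hypothetical, and the random ID is copied from the table rather than generated, because the service assigns it.

```python
from datetime import datetime


def oss_object_path(bucket, prefix, partition_format, shipped_at, random_id, suffix=""):
    """Build oss://OSS-BUCKET/OSS-PREFIX/PARTITION-FORMAT_RANDOM-ID plus the file suffix."""
    partition = shipped_at.strftime(partition_format)
    return f"oss://{bucket}/{prefix}/{partition}_{random_id}{suffix}"


# Values taken from the example table: a task created at 19:50:43 on January 20, 2022.
shipped_at = datetime(2022, 1, 20, 19, 50, 43)
random_id = "1484913043351525351_2850008"

if __name__ == "__main__":
    for fmt in ["year=%Y/mon=%m/day=%d/log_%H%M", "ds=%Y%m%d/%H", "%Y%m%d/", "%Y%m%d%H"]:
        print(oss_object_path("test-bucket", "log_ship_oss_example", fmt,
                              shipped_at, random_id, ".suffix"))
```

The %Y%m%d/ format shows why that row is discouraged: the trailing slash leaves an object name that starts with an underscore.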

To use partition information when you analyze OSS data with big data platforms such as Hive and MaxCompute or with Alibaba Cloud Data Lake Analytics (DLA), set PARTITION-FORMAT in the key=value format. For example, in the path oss://test-bucket/log_ship_oss_example/year=2022/mon=01/day=20/log_195043_1484913043351525351_2850008.parquet, the partition keys are set to three levels: year, mon, and day.
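To illustrate how a key=value partition format maps to partition columns, the following Python sketch extracts the key=value directory levels from an OSS object path. It is a simplified illustration of Hive-style partition discovery under the assumption that only directory levels (not the object name) carry partitions; it is not the actual parsing logic of Hive, MaxCompute, or DLA. The function name partition_keys is hypothetical.

```python
from urllib.parse import urlparse


def partition_keys(oss_path):
    """Return {key: value} for every key=value directory level in an OSS object path."""
    parsed = urlparse(oss_path)  # scheme "oss", netloc = bucket, path = object key
    # Drop the object name (last segment); partitions are directory levels only.
    directories = parsed.path.strip("/").split("/")[:-1]
    keys = {}
    for level in directories:
        key, sep, value = level.partition("=")
        if sep:  # levels without "=" (for example, the prefix) are not partitions
            keys[key] = value
    return keys


if __name__ == "__main__":
    path = ("oss://test-bucket/log_ship_oss_example/year=2022/mon=01/day=20/"
            "log_195043_1484913043351525351_2850008.parquet")
    print(partition_keys(path))  # {'year': '2022', 'mon': '01', 'day': '20'}
```

A path generated with a plain format such as %Y/%m/%d/%H/%M yields no partition keys, which is why the key=value form is needed for partition-aware analysis.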