Retrieval-augmented generation (RAG) technology enhances the ability of a large language model (LLM) to answer questions about private domains. This technology retrieves relevant information from an external knowledge base and combines it with user input before sending the combined data to the LLM. Elastic Algorithm Service (EAS) provides a scenario-based deployment method that lets you flexibly select an LLM and a vector database to quickly build and deploy a RAG chatbot. This topic describes how to deploy a RAG chatbot service and test its model inference capabilities.

Step 1: Deploy the RAG service

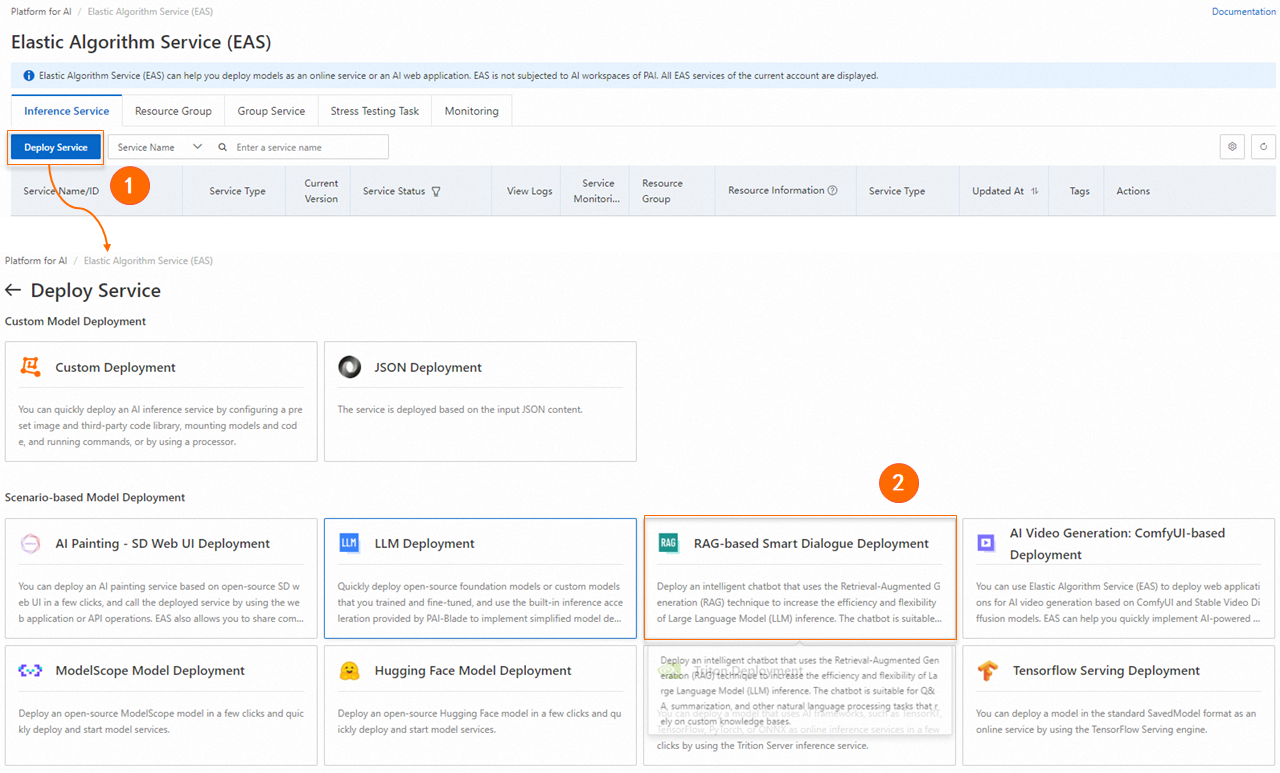

Log on to the PAI console. Select a region at the top of the page. Then, select the desired workspace and click Elastic Algorithm Service (EAS).

On the Elastic Algorithm Service (EAS) page, click Deploy Service. Under Scenario-based Model Deployment, click RAG-based Smart Dialogue Deployment.

On the RAG-based Smart Dialogue Deployment page, configure the parameters and click Deploy. The deployment is complete when the Service Status changes to Running. The deployment typically takes about 5 minutes, but the actual duration may vary depending on factors such as the number of model parameters. The following table describes the key parameters.

Basic Information

| Parameter | Description |
| --- | --- |
| Version Selection | Two deployment versions are available. LLM Integrated Deployment: Deploys the large language model (LLM) service and the retrieval-augmented generation (RAG) service in the same service. LLM-Separated Deployment: Deploys only the RAG service. This option offers greater flexibility, letting you freely replace and connect LLM services. |
| Model Category | When you select LLM Integrated Deployment as the version, select the large language model (LLM) to deploy. Choose an open-source model based on your specific scenario. |

Resource Information

| Parameter | Description |
| --- | --- |
| Deploy Resources | For the LLM Integrated Deployment version, the system automatically selects suitable resource specifications based on the chosen model category. Changing to a different resource specification may prevent the model service from starting correctly. For the LLM-Separated Deployment version, select a CPU with 8 or more cores and at least 16 GB of memory. Recommended instance types include ecs.g6.2xlarge and ecs.g6.4xlarge. |

Vector Database Settings

RAG supports using Faiss, Elasticsearch, Hologres, OpenSearch, or RDS PostgreSQL to build a vector database. Select one of these services as your vector database based on your requirements.

FAISS

Use Faiss to build a local vector database. This method is lightweight, user-friendly, and eliminates the need to purchase an online vector database product.

| Parameter | Description |
| --- | --- |
| Version Type | Select FAISS. |
| OSS Address | Select an existing OSS folder in the current region to store the uploaded knowledge base files. If no folder is available, see Console Quick Start to create one. Note: If you use a self-hosted fine-tuned model deployment service, ensure that the selected OSS path is different from the model's path to prevent conflicts. |

Elasticsearch

Configure the connection information for your Alibaba Cloud Elasticsearch instance. For more information about how to create an Elasticsearch instance and prepare the configuration items, see Prepare a vector database by using Elasticsearch.

| Parameter | Description |
| --- | --- |
| Version Type | Select Elasticsearch. |
| Private Endpoint/port | Configure the private endpoint and port for the Elasticsearch instance. The format is `http://<private_endpoint>:<private_port>`. To obtain the private endpoint and port, see View instance basic information. |
| Index Name | Enter a new or existing index name. For an existing index, the index schema must meet PAI-RAG requirements. For example, you can use an index that was automatically created when you deployed the RAG service using EAS. |
| Account | The logon name configured when creating the Elasticsearch instance. The default is elastic. |
| Password | Enter the logon password that you set when you created the Elasticsearch instance. If you forget the logon password, reset the instance access password. |
| OSS Address | Select an existing OSS folder in the current region. This folder is mounted as a path for knowledge base management. |
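The endpoint format described for Elasticsearch can be checked with a small helper before you paste the value into the console. This is a minimal sketch: the function name `build_es_url` is hypothetical, the default port 9200 is the common Elasticsearch default and is an assumption here, and the host in the usage note is a made-up placeholder.

```python
def build_es_url(private_endpoint: str, private_port: int = 9200) -> str:
    """Return a value in the form http://<private_endpoint>:<private_port>.

    The default port 9200 is an assumption; use the port shown for your instance.
    """
    host = private_endpoint.strip()
    # Tolerate a pasted scheme so the result never starts with "http://http://".
    for scheme in ("http://", "https://"):
        if host.lower().startswith(scheme):
            host = host[len(scheme):]
    host = host.rstrip("/")
    if not host or ":" in host or "/" in host:
        raise ValueError("private_endpoint must be a bare host name without port or path")
    if not 1 <= private_port <= 65535:
        raise ValueError("private_port must be between 1 and 65535")
    return f"http://{host}:{private_port}"
```

For example, `build_es_url("es-cn-example.elasticsearch.aliyuncs.com", 9200)` produces a string in the required format for that placeholder host.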

Hologres

Configure the connection information for your Hologres instance. If you have not activated a Hologres instance, see Purchase a Hologres instance.

| Parameter | Description |
| --- | --- |
| Version Type | Select Hologres. |
| Call Information | The host for the specified VPC. Go to the instance details page in the Hologres Management Console. In the Network Information area, click Copy next to Specified VPC to get the host. The host is the part of the domain name before `:80`. |
| Database Name | The name of the database for the Hologres instance. For more information, see Create a database. |
| Account | The custom account that you created. For more information, see Create a custom user. For Select Member Role, select Instance Super Administrator (SuperUser). |
| Password | The password for the existing custom user. |
| Table Name | Enter a new table name or an existing table name. The schema for an existing table must meet PAI-RAG requirements. For example, you can specify a Hologres table that was automatically created when you deployed a RAG service on EAS. |
| OSS Address | Select an existing OSS storage folder in the current region. This lets you manage the knowledge base by mounting an OSS path. |
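The Call Information value is the copied domain name with the trailing `:80` removed. A minimal sketch of that trimming step follows; the function name `hologres_host` is hypothetical and the domain in the usage note is a made-up placeholder.

```python
def hologres_host(copied_value: str) -> str:
    """Return the host part of a copied 'host:port' string.

    If the value has no numeric port suffix, it is returned unchanged.
    """
    value = copied_value.strip()
    host, sep, port = value.rpartition(":")
    # Only strip the suffix when it really is a port number such as "80".
    if sep and port.isdigit():
        return host
    return value
```

For example, `hologres_host("hgpost-example-vpc.hologres.aliyuncs.com:80")` returns the placeholder domain without the port.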

OpenSearch

Configure the connection information for your OpenSearch Vector Search Edition instance. For more information about how to create an OpenSearch instance and prepare the configuration items, see Prepare the OpenSearch vector database.

| Parameter | Description |
| --- | --- |
| Version Type | Select OpenSearch. |
| Endpoint | The Internet endpoint for the OpenSearch Vector Search Edition instance. Enable Internet access for the instance. For more information, see Set up an OpenSearch vector search database. |
| Instance ID | In the OpenSearch Vector Search Edition instance list, get the instance ID. |
| Username | The username that you entered when creating the OpenSearch Vector Search Edition instance. |
| Password | The password that you entered when creating the OpenSearch Vector Search Edition instance. |
| Table Name | The name of the index table that you created when you prepared the OpenSearch Vector Search Edition instance. For more information, see Prepare the OpenSearch vector search database. |
| OSS Path | Select an existing OSS storage directory in the current region. Manage the knowledge base by attaching an OSS path. |

ApsaraDB RDS for PostgreSQL

Configure the connection information for your ApsaraDB RDS for PostgreSQL instance. For more information about how to create an ApsaraDB RDS for PostgreSQL instance and prepare the configuration items, see Prepare the ApsaraDB RDS for PostgreSQL vector database.

| Parameter | Description |
| --- | --- |
| Version Type | Select RDS PostgreSQL. |
| Host Address | Configure the internal endpoint of the ApsaraDB RDS for PostgreSQL instance. You can find it on the Database Connection page of your ApsaraDB RDS for PostgreSQL instance in the ApsaraDB RDS for PostgreSQL console. |
| Port | The default is 5432. Change the value as needed. |
| Database | Configure the name of the database you created. For more information about how to create a database and an account, see Create a database and an account. When creating an account, set Account Type to Privileged Account. When creating a database, set Authorized Account to the privileged account you created. |
| Table Name | Enter a custom name for the database table. |
| Account | The username of the privileged account you created. For more information about how to create a privileged account, see Create a database and an account. Set Account Type to Privileged Account. |
| Password | The password of the privileged account you created. |
| OSS Address | Select an existing OSS storage directory in the current region. Manage the knowledge base by attaching an OSS path. |
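Before entering these values in the console, it can help to assemble them into a standard PostgreSQL connection URI and test the connection with a client of your choice. The sketch below only builds the string. The function name `build_pg_uri` and all values in the usage note are hypothetical, and the RAG service itself may assemble its connection differently.

```python
from urllib.parse import quote


def build_pg_uri(host: str, database: str, user: str, password: str, port: int = 5432) -> str:
    """Build a postgresql:// URI from the console fields.

    User, password, and database are percent-encoded so that characters
    such as '@' or '/' in a password do not break the URI.
    """
    if not 1 <= port <= 65535:
        raise ValueError("port must be between 1 and 65535")
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}"
    )
```

For example, `build_pg_uri("pgm-example.pg.rds.aliyuncs.com", "ragdb", "rag_admin", "p@ss/word")` encodes the password and uses the default port 5432.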

VPC

Configure Virtual Private Cloud (VPC), VSwitch, and Security Group Name according to the following network requirements.

When deploying the RAG service, if you select LLM-Separated Deployment, make sure the RAG service can access the LLM service. The network requirements are as follows:

To access the LLM service over the Internet, configure a VPC with Internet access here. For more information, see Scenario 1: Enable an EAS service to access the Internet.

To access the LLM service using an internal endpoint, the RAG service and the LLM service must use the same VPC.

To use an Alibaba Cloud Model Studio model or use web search for Q&A, configure a VPC with Internet access. For more information, see Scenario 1: Enable an EAS service to access the Internet.

Network requirements for the vector database:

A Faiss vector database does not require network access.

EAS can access Hologres, Elasticsearch, or RDS PostgreSQL over the Internet or a private network. We recommend that you use private network access. For private network access, the virtual private cloud (VPC) that is configured in EAS must be the same as the VPC of the vector search database. To create a VPC, vSwitch, or security group, see Create and manage a VPC and Create a security group.

EAS can only access OpenSearch over the Internet. For configuration instructions, see Step 2: Prepare configuration items.
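The vector database network rules above can be written down as a small lookup table and checked before deployment. This is an illustrative sketch only: the dictionary keys, the mode names `internet` and `private`, and the function `check_network_plan` are hypothetical and are not part of EAS.

```python
from typing import List, Optional

# Access modes per vector database, as stated in the network requirements.
ACCESS_MODES = {
    "faiss": set(),  # local database, no network access required
    "hologres": {"internet", "private"},
    "elasticsearch": {"internet", "private"},
    "rds_postgresql": {"internet", "private"},
    "opensearch": {"internet"},  # Internet access only
}


def check_network_plan(
    database: str,
    mode: str,
    eas_vpc: Optional[str] = None,
    db_vpc: Optional[str] = None,
) -> List[str]:
    """Return a list of problems; an empty list means the plan matches the rules."""
    if database not in ACCESS_MODES:
        raise ValueError(f"unknown vector database: {database}")
    allowed = ACCESS_MODES[database]
    if not allowed:
        return []  # Faiss: nothing to check
    problems = []
    if mode not in allowed:
        problems.append(f"{database} does not support {mode} access")
    elif mode == "private" and (eas_vpc is None or eas_vpc != db_vpc):
        # Private access requires EAS and the database to share one VPC.
        problems.append("EAS and the vector database must use the same VPC")
    return problems
```

For example, `check_network_plan("hologres", "private", "vpc-a", "vpc-b")` reports the VPC mismatch, while the same call with matching VPC IDs returns an empty list.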

Step 2: Call the API

This section describes how to call the API for common RAG features. For other operations, such as managing knowledge bases and updating RAG service configurations, see RAG API operations.

You can specify a knowledge base name in both the query and upload APIs to switch between knowledge bases. If you omit the knowledge base name parameter, the default knowledge base is used.

Obtain invocation information

On the Elastic Algorithm Service (EAS) page, click the RAG service name and then click View Endpoint Information in the Basic Information section.

Obtain the service endpoint and token from the Invocation Method dialog box.

Note: You can use either a public endpoint or a VPC endpoint:

To use a public endpoint, the client must have Internet access.

To use a VPC endpoint, the client must be in the same VPC as the RAG service.
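One way to keep the endpoint and token out of source code is to read them from environment variables. The sketch below assumes two variable names, `EAS_SERVICE_URL` and `EAS_TOKEN`, chosen here for illustration. It passes the token as the `Authorization` header value, which matches the curl examples in this topic.

```python
import os
from typing import Dict, Mapping


def load_rag_config(env: Mapping[str, str] = os.environ) -> Dict[str, object]:
    """Read the service endpoint and token from the environment.

    The variable names EAS_SERVICE_URL and EAS_TOKEN are assumptions.
    """
    url = env.get("EAS_SERVICE_URL", "").strip().rstrip("/")
    token = env.get("EAS_TOKEN", "").strip()
    if not url or not token:
        raise RuntimeError("Set EAS_SERVICE_URL and EAS_TOKEN before calling the API")
    # The token is sent as the raw Authorization header value.
    return {"base_url": url, "headers": {"Authorization": token}}
```

The returned `base_url` has any trailing slash removed, so API paths such as `/api/v1/...` can be appended directly.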

Upload knowledge base files

You can upload local knowledge base files using the API. You can query the status of the file upload task using the `task_id` returned by the upload interface.
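Because uploads are processed as tasks, a client usually polls the status until the task finishes. The following is a minimal polling sketch, not the service's own client. `fetch_status` is a hypothetical callable that you supply to query the status and return it as a string, and the state names `completed` and `failed` are assumptions, because this topic does not list the actual status values.

```python
import time
from typing import Callable, Iterable


def wait_for_upload(
    fetch_status: Callable[[], str],
    done_states: Iterable[str] = ("completed", "failed"),  # assumed state names
    interval_s: float = 2.0,
    timeout_s: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch_status until it returns a terminal state or the timeout expires."""
    terminal = set(done_states)
    deadline = time.monotonic() + timeout_s
    while True:
        status = fetch_status()
        if status in terminal:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"upload still in state {status!r} after {timeout_s} s")
        sleep(interval_s)
```

Replace the default `done_states` with the status values that your service actually returns.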

In the following examples, replace <EAS_SERVICE_URL> with the RAG service endpoint and <EAS_TOKEN> with the RAG service token. For more information about how to obtain them, see Obtain invocation information.

Upload a single file (using -F 'files=@path' to upload a file)

    # Replace <EAS_TOKEN> and <EAS_SERVICE_URL> with the service token and the service access address, respectively.
    # Replace <name> with the name of your knowledge base.
    # Replace the path after "-F 'files=@" with the path to your knowledge base file.
    curl -X 'POST' <EAS_SERVICE_URL>/api/v1/knowledgebases/<name>/files \
      -H 'Authorization: <EAS_TOKEN>' \
      -H 'Content-Type: multipart/form-data' \
      -F 'files=@example_data/paul_graham/paul_graham_essay.txt'

Upload multiple files

Use multiple -F 'files=@path' parameters, with each parameter corresponding to a file to be uploaded, as shown in the example:

    # Replace <EAS_TOKEN> and <EAS_SERVICE_URL> with the service token and the service access address, respectively.
    # Replace <name> with the name of your knowledge base.
    # Replace the path after "-F 'files=@" with the path to your knowledge base file.
    curl -X 'POST' <EAS_SERVICE_URL>/api/v1/knowledgebases/<name>/files \
      -H 'Authorization: <EAS_TOKEN>' \
      -H 'Content-Type: multipart/form-data' \
      -F 'files=@example_data/paul_graham/paul_graham_essay.txt' \
      -F 'files=@example_data/another_file1.md' \
      -F 'files=@example_data/another_file2.pdf'

Query the upload status

    # Replace <EAS_TOKEN> and <EAS_SERVICE_URL> with the service token and the service access address, respectively.
    # Replace <name> with the name of your knowledge base. Replace <file_name> with the name of your file.
    curl -X 'GET' <EAS_SERVICE_URL>/api/v1/knowledgebases/<name>/files/<file_name> -H 'Authorization: <EAS_TOKEN>'
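The same upload can be prepared from Python with only the standard library. The sketch below builds a multipart request that mirrors the curl examples, with one `files` part per file, the knowledge base name in the path, and the token as the `Authorization` header. The function name `build_upload_request` is hypothetical, and the request is only constructed here, not sent.

```python
import mimetypes
import uuid
from pathlib import Path
from typing import Iterable
from urllib.parse import quote
from urllib.request import Request


def build_upload_request(base_url: str, token: str, kb_name: str, paths: Iterable[str]) -> Request:
    """Build a multipart/form-data POST with one 'files' part per path."""
    boundary = uuid.uuid4().hex
    parts = []
    for path in map(Path, paths):
        content_type = mimetypes.guess_type(path.name)[0] or "application/octet-stream"
        head = (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="files"; filename="{path.name}"\r\n'
            f"Content-Type: {content_type}\r\n\r\n"
        ).encode("utf-8")
        parts.append(head + path.read_bytes() + b"\r\n")
    # The closing boundary marks the end of the multipart body.
    body = b"".join(parts) + f"--{boundary}--\r\n".encode("ascii")
    url = f"{base_url.rstrip('/')}/api/v1/knowledgebases/{quote(kb_name, safe='')}/files"
    headers = {
        "Authorization": token,
        "Content-Type": f"multipart/form-data; boundary={boundary}",
    }
    return Request(url, data=body, headers=headers, method="POST")
```

To send the request, pass the result to `urllib.request.urlopen`. The layout of the response body is not described in this topic, so parse it according to RAG API operations.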

Send a chat request

Call the service through its OpenAI-compatible API. Before calling the service, complete the corresponding configurations on the WebUI page of the RAG service based on your business requirements.

Supported features

Web search: You need to configure web search parameters on the WebUI page of the RAG service in advance.

Knowledge base query: You need to upload knowledge base files in advance.

LLM chat: Use large language model (LLM) services to provide answers. You need to configure LLM services in advance.

Agent chat: You need to complete agent-related code configurations on the WebUI page of the RAG service in advance.

Database or table query: You need to complete data analysis-related configurations on the WebUI page of the RAG service in advance.
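In the request examples in this topic, a feature is selected through a flag in `extra_body`: `search_web` for web search and `chat_db` for database query. The sketch below wraps that pattern and collects a streamed answer into one string. The feature labels `web_search` and `database_query` and the helper names are hypothetical, flags for the other features are not shown in this topic and are therefore not mapped, and `client` is expected to be an `openai.OpenAI` instance configured for the RAG service.

```python
from typing import Any, Dict, Iterable, List

# Flags taken from the request examples in this topic. The keys are
# illustrative labels, not names defined by the service.
FEATURE_FLAGS: Dict[str, Dict[str, Any]] = {
    "web_search": {"search_web": True},
    "database_query": {"chat_db": True},
}


def extra_body_for(feature: str) -> Dict[str, Any]:
    """Return a copy of the extra_body flags for a known feature label."""
    if feature not in FEATURE_FLAGS:
        raise ValueError(f"no flag mapping known for feature: {feature}")
    return dict(FEATURE_FLAGS[feature])


def collect_stream(chunks: Iterable[Any]) -> str:
    """Join the text deltas of a streamed chat completion."""
    pieces: List[str] = []
    for chunk in chunks:
        if not chunk.choices:
            continue  # some chunks carry no choices
        text = chunk.choices[0].delta.content
        if text:  # content can be None, for example on the final chunk
            pieces.append(text)
    return "".join(pieces)


def ask(client: Any, messages: List[Dict[str, str]], feature: str, model: str = "default") -> str:
    """Send a streamed chat request with the flags for the given feature."""
    stream = client.chat.completions.create(
        model=model,
        stream=True,
        messages=messages,
        extra_body=extra_body_for(feature),
    )
    return collect_stream(stream)
```

Collecting the stream into a string is a design choice for batch-style callers. An interactive client would print each delta as it arrives instead.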

Request examples

Web search

    from openai import OpenAI

    ##### API configuration #####
    # Replace <EAS_TOKEN> and <EAS_SERVICE_URL> with the service token and the service access address, respectively.
    openai_api_key = "<EAS_TOKEN>"
    openai_api_base = "<EAS_SERVICE_URL>/v1"

    client = OpenAI(
        api_key=openai_api_key,
        base_url=openai_api_base,
    )

    #### Chat ######
    def chat():
        stream = True
        chat_completion = client.chat.completions.create(
            model="default",
            stream=stream,
            messages=[
                {"role": "user", "content": "Hello"},
                {"role": "assistant", "content": "Hello, how can I help you?"},
                {"role": "user", "content": "What is the capital of Zhejiang province?"},
                {"role": "assistant", "content": "Hangzhou is the capital of Zhejiang province."},
                {"role": "user", "content": "What are some interesting places to visit?"},
            ],
            extra_body={
                "search_web": True,
            },
        )

        if stream:
            for chunk in chat_completion:
                # delta.content can be None, so fall back to an empty string.
                print(chunk.choices[0].delta.content or "", end="")
        else:
            result = chat_completion.choices[0].message.content
            print(result)

    chat()

Database query

    from openai import OpenAI

    ##### API configuration #####
    # Replace <EAS_TOKEN> and <EAS_SERVICE_URL> with the service token and the service access address, respectively.
    openai_api_key = "<EAS_TOKEN>"
    openai_api_base = "<EAS_SERVICE_URL>/v1"

    client = OpenAI(
        api_key=openai_api_key,
        base_url=openai_api_base,
    )

    #### Chat ######
    def chat():
        stream = True
        chat_completion = client.chat.completions.create(
            model="default",
            stream=stream,
            messages=[
                {"role": "user", "content": "How many cats are there?"},
                {"role": "assistant", "content": "There are 2 cats"},
                {"role": "user", "content": "What about dogs?"},
            ],
            extra_body={
                "chat_db": True,
            },
        )

        if stream:
            for chunk in chat_completion:
                # delta.content can be None, so fall back to an empty string.
                print(chunk.choices[0].delta.content or "", end="")
        else:
            result = chat_completion.choices[0].message.content
            print(result)

    chat()

Notes

This tutorial is limited by the server resource size and the default token limit of the LLM service, and can only support conversations of a limited length. The purpose of this tutorial is to help you experience the basic retrieval features of the RAG chatbot.
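Because conversation length is limited, a client can trim older turns before each request. The sketch below keeps the most recent messages that fit a character budget. The function name `trim_history` and the 4000-character default are assumptions, and a character count is only a rough stand-in for the token limit of the LLM service.

```python
from typing import Dict, List


def trim_history(messages: List[Dict[str, str]], max_chars: int = 4000) -> List[Dict[str, str]]:
    """Keep the newest messages whose combined content fits max_chars.

    The latest message is always kept, even if it alone exceeds the budget.
    """
    kept: List[Dict[str, str]] = []
    used = 0
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(messages):
        size = len(message.get("content") or "")
        if kept and used + size > max_chars:
            break
        kept.append(message)
        used += size
    kept.reverse()  # restore chronological order
    return kept
```

Pass the result as the `messages` argument of the chat request. Depending on the model, you may also want the trimmed history to start with a user message.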

FAQ

How am I charged?

Billing

When you deploy a RAG-based LLM chatbot, you are charged only for EAS resources. If you use other products, such as Alibaba Cloud Model Studio, vector databases (such as Elasticsearch, Hologres, OpenSearch, or RDS PostgreSQL), Object Storage Service (OSS), an Internet NAT gateway, or web search services (such as Bing), you are billed for each product separately according to its billing rules.

Stop billing

After you stop the EAS service, only the billing for EAS resources stops. To stop billing for other products, refer to the documentation for those products and follow the instructions to stop or delete the relevant instances.

Are knowledge base documents uploaded through the API stored permanently?

Knowledge base files uploaded using the RAG service API are not stored permanently. Their storage duration depends on the configuration of the selected vector database or storage service, such as Object Storage Service (OSS), Elasticsearch, or Hologres. To preserve data long term, check the storage policies in the documentation for the product you selected.

Why are the parameters I set through the API not taking effect?

Currently, only the parameters listed in the API Reference document can be configured using the PAI-RAG service API. You must configure all other parameters using the WebUI.

References

Using EAS, you can also complete the following scenario-based deployments:

EAS provides stress testing methods for LLM services and general services. This feature helps you easily create stress testing tasks and perform one-click stress tests to fully understand the performance of your EAS services. For more information, see Automatic stress testing.